Abstract

A new paradigm for data science has emerged, with quantum data, quantum models, and quantum computational devices. This field, called quantum machine learning (QML), aims to achieve a speedup over traditional machine learning for data analysis. However, its success usually hinges on efficiently training the parameters in quantum neural networks, and the field of QML is still lacking theoretical scaling results for their trainability. Some trainability results have been proven for a closely related field called variational quantum algorithms (VQAs). While both fields involve training a parametrized quantum circuit, there are crucial differences that make the results for one setting not readily applicable to the other. In this work, we bridge the two frameworks and show that gradient scaling results for VQAs can also be applied to study the gradient scaling of QML models. Our results indicate that features deemed detrimental for VQA trainability can also lead to issues such as barren plateaus in QML. Consequently, our work has implications for several QML proposals in the literature. In addition, we provide theoretical and numerical evidence that QML models exhibit further trainability issues not present in VQAs, arising from the use of a training dataset. We refer to these as dataset-induced barren plateaus. These results are most relevant when dealing with classical data, as here the choice of embedding scheme (i.e., the map between classical data and quantum states) can greatly affect the gradient scaling.

1 Introduction

The future of data analysis is incredibly exciting. The quantum revolution promises new kinds of data, new kinds of models, and new information processing devices. This is all made possible because small-scale quantum computers are currently available, while larger-scale ones are anticipated in the future (Preskill 2018). The mere fact that users will run jobs on these devices, preparing interesting quantum states, implies that new datasets will be generated. These are called quantum datasets (Perrier et al. 2021; Schatzki et al. 2021), as they exist on the quantum computer and hence must be analyzed on the quantum computer. Naturally this has led to the proposal of new models, the so-called quantum neural networks (QNNs) (Schuld et al. 2014), for analyzing (classifying, clustering, etc.) such data. Different architectures have been proposed for these models: dissipative QNNs (Beer et al. 2020), convolutional QNNs (Cong et al. 2019), recurrent QNNs (Bausch 2020), and others (Farhi and Neven 2018; Verdon et al. 2019).

Using quantum computers for data analysis is often called quantum machine learning (QML) (Biamonte et al. 2017; Schuld et al. 2015). This paradigm is general enough to also allow for analysis of classical data. One simply needs an embedding map that first maps the classical data to quantum states, and then such states can be analyzed by the QNN (Havlíček et al. 2019; LaRose and Coyle 2020; Lloyd et al. 2020). Here, the hope is that by accessing the exponentially large dimension of the Hilbert space and quantum effects like superposition and entanglement, QNNs can outperform their classical counterparts (i.e., neural networks) and achieve a coveted quantum advantage (Huang et al. 2021; Huang et al. 2021; Kübler et al. 2021; Liu et al. 2021; Aharonov et al. 2022).

Despite the tremendous promise of QML, the field is still in its infancy and rigorous results are needed to guarantee its success. Similar to classical machine learning, here one also wishes to achieve small training error (Larocca et al. 2021) and small generalization error (Banchi et al. 2021; Du et al. 2022), with the second usually hinging on the first. Thus, it is crucial to study how certain properties of a QML model can hinder or benefit its parameter trainability.

Such trainability analysis has become a staple in a closely related field called variational quantum algorithms (VQAs) (Cerezo et al. 2021). This field aims to optimize over sets of quantum circuits to discover efficient versions of quantum algorithms for accomplishing various tasks, such as finding ground states (Peruzzo et al. 2014), solving linear systems of equations (Bravo-Prieto et al. 2019; Huang et al. 2019; Xu et al. 2021), quantum compiling (Khatri et al. 2019; Sharma et al. 2020), factoring (Anschuetz et al. 2019), and dynamical simulation (Cirstoiu et al. 2020; Commeau et al. 2020; Endo et al. 2020; Li and Benjamin 2017). In this field, a great deal of effort has been put forward towards analyzing and avoiding the barren plateau phenomenon (McClean et al. 2018; Cerezo et al. 2021; Larocca et al. 2021; Marrero et al. 2020; Patti et al. 2021; Holmes et al. 2021; Holmes et al. 2021; Huembeli and Dauphin 2021; Zhao and Gao 2021; Wang et al. 2021). When a VQA exhibits a barren plateau, the cost function gradients vanish exponentially with the problem size, leading to an exponentially flat optimization landscape. Barren plateaus greatly impact the trainability of the parameters as it is impossible to navigate the flat landscape without expending an exponential amount of resources (Cerezo and Coles 2021; Arrasmith et al. 2021; Wang et al. 2021).

Given the large body of literature studying barren plateaus in VQAs, the natural question that arises is as follows: Are the gradient scaling and barren plateau results also applicable to QML? Making this connection is crucial, as it is not uncommon for QML proposals to employ certain features that have been shown to be detrimental for the trainability of VQAs, such as deep unstructured circuits (McClean et al. 2018; Cerezo et al. 2021) or global measurements (Cerezo et al. 2021). Unfortunately, it is not straightforward to directly employ VQA gradient scaling results in QML models. Moreover, one can expect that in addition to the trainability issues arising in variational algorithms, other problems can potentially appear in QML schemes. This is due to the fact that QML models are generally more complex. For instance, in QML, one needs to deal with datasets (Schatzki et al. 2021), which further require the use of an embedding scheme when dealing with classical data (Havlíček et al. 2019; Lloyd et al. 2020; Schuld et al. 2020; LaRose and Coyle 2020). Additionally, QML loss functions can be more complicated than VQA cost functions, as the latter are usually linear functions of expectation values of some set of operators.

In this work, we study the trainability and the existence of barren plateaus in QML models. Our work represents a general treatment that goes beyond previous analyses of gradient scaling and trainability in specific QML models (Pesah et al. 2021; Sharma et al. 2022; Liu et al. 2021; Abbas et al. 2021; Haug and Kim 2021; Kieferova et al. 2021; Kiani et al. 2021; Tangpanitanon et al. 2020). Our main results are twofold. First, we rigorously connect the scaling of gradients in VQA-type cost functions and QML loss functions, so that barren plateau results in the variational algorithms literature can be used to study the trainability of QML models. This implies that known sources of barren plateaus extend to the realm of training QNNs, and thus that many proposals in the literature need to be revised. Second, we present theoretical and numerical evidence that certain features of the dataset and the embedding can also greatly affect the model’s trainability. These results show that additional care must be taken when studying the trainability of QML models. Moreover, they constitute a novel source of untrainability: dataset-induced barren plateaus. Finally, we note that, while this work focuses on how barren plateaus affect the trainability of QNNs, a closely related phenomenon known as exponential concentration has also been studied in quantum kernel-based models, which are another popular approach to QML (Thanasilp et al. 2022).

2 Framework

2.1 Quantum machine learning

In this work, we consider supervised quantum machine learning (QML) tasks. First, as depicted in Fig. 1a, let us consider the case where one has classical data. Here, the dataset is of the form {xi,yi}, where xi ∈ X is some classical data (e.g., a real-valued vector), and yi ∈ Y are values or labels associated with each xi according to some (unknown) model \(h:X\rightarrow Y\). One generates a training set \(\mathcal {S}=\{\boldsymbol {x}_{i},y_{i}\}^{N}_{i=1}\) of size N by sampling from the dataset according to some probability distribution. Using \(\mathcal {S}\), one trains a QML model, i.e., a parametrized map \(\tilde {h}_{\boldsymbol {\theta }}:X\rightarrow Y\), such that its predictions agree with those of h with high probability on \(\mathcal {S}\) (low training error), and on previously unseen cases (low generalization error).

Fig. 1 Schematic diagram of a QML task. Consider a supervised learning task where the training dataset is either (a) classical or (b) quantum. The classical data points are of the form {xi,yi}, where xi ∈ X are input data (e.g., pictures) and yi ∈ Y are labels associated with each xi (e.g., cat/dog). An embedding channel \(\mathcal {E}_{\boldsymbol {x}_{i}}^{E}\) maps the classical data onto quantum states ρi. Alternatively, quantum data points are of the form {ρi,yi}, where ρi are quantum states in a Hilbert space \({\mathscr{H}}\), each with associated labels yi ∈ Y (e.g., ferromagnetic/paramagnetic phases). The quantum states (coming from classical or quantum datasets) are then sent through a parametrized quantum neural network (QNN), \(\mathcal {E}_{\boldsymbol {\theta }}^{QNN}\). By performing measurements on the output states, one estimates expectation values ℓi ≡ ℓi(𝜃;yi) which are then used to estimate the loss function \({\mathscr{L}}(\boldsymbol {\theta })\). Finally, a classical optimizer decides how to adjust the QNN parameters, and the loop repeats multiple times to minimize the loss function.

For the QML model to access the exponentially large dimension of the Hilbert space, one needs to encode the classical data into a quantum state. As shown in Fig. 1a, one initializes m qubits in a fiducial state such as |𝟎〉 = |0〉⊗m and sends it through a completely positive trace-preserving (CPTP) map \(\mathcal {E}_{\boldsymbol {x}_{i}}^{E}\), so that the outputs are of the form

\[ \rho_i = \mathcal{E}_{\boldsymbol{x}_i}^{E}\big(|\boldsymbol{0}\rangle\langle\boldsymbol{0}|\big). \tag{1} \]

For instance, \(\mathcal {E}_{\boldsymbol {x}_{i}}^{E}\) can be a unitary channel given by the action of a parametrized quantum circuit whose gate rotation angles depend on the values in xi. While in general the embedding can itself be trainable (Lloyd et al. 2020; Hubregtsen et al. 2021; Thumwanit et al. 2021), here we take the embedding to be fixed.

Next, consider the case when the dataset is composed of quantum data (Cong et al. 2019; Schatzki et al. 2021). As one can see from Fig. 1b, here the dataset is of the form {ρi,yi}, where ρi ∈ R, and where \(R\subseteq {\mathscr{H}}\) is a set of quantum states in the Hilbert space \({\mathscr{H}}\). Then, yi ∈ Y are values or labels associated with each quantum state according to some (unknown) model \(h:R\rightarrow Y\). In what follows we simply denote as ρi the states obtained from the dataset, and clarify when appropriate if they were produced using an embedding scheme or not.

The quantum states ρi are then sent through a QNN, i.e., a parametrized CPTP map \(\mathcal {E}_{\boldsymbol {\theta }}^{QNN}\). Here, the outputs of the QNN are n-qubit quantum states (with n ≤ m) of the form

\[ \rho_i(\boldsymbol{\theta}) = \mathcal{E}_{\boldsymbol{\theta}}^{QNN}(\rho_i). \tag{2} \]

We note that Eq. 2 encompasses most widely used QNN architectures. For instance, in many cases, \(\mathcal {E}_{\boldsymbol {\theta }}^{QNN}\) is simply a trainable parametrized quantum circuit (Farhi and Neven 2018; Cong et al. 2019; Beer et al. 2020; Bausch 2020). Here, 𝜃 is a vector of continuous variables (e.g., gate rotation angles). More generally, 𝜃 could also contain discrete variables (gate placements) (Grimsley et al. 2019; Du et al. 2020; Bilkis et al. 2021). However, for simplicity, here we consider the case when one only optimizes over continuous QNN parameters.

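The model predictions are obtained by measuring qubits of the QNN output states ρi(𝜃) in Eq. 2. That is, for a given data point (ρi,yi), one estimates the quantity

\[ \ell_i(\boldsymbol{\theta};y_i) = \text{Tr}\big[O_{y_i}\,\rho_i(\boldsymbol{\theta})\big], \tag{3} \]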

where \(O_{y_{i}}\) is a Hermitian operator. Here we recall that we use the term global measurement when \(O_{y_{i}}\) acts non-trivially on all n qubits. On the other hand, we say that the measurement is local when \(O_{y_{i}}\) acts non-trivially on a small constant number of qubits (e.g., one or two qubits). Finally, throughout training, the success of the QML model is quantified via a loss function \({\mathscr{L}}(\boldsymbol {\theta })\) that takes the form

\[ {\mathscr{L}}(\boldsymbol{\theta}) = \frac{1}{N}\sum_{i=1}^{N} f\big(\ell_i(\boldsymbol{\theta};y_i),\, y_i\big), \tag{4} \]

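where f(·) is first-order differentiable. By employing a classical optimizer, one trains the parameters in the QNN to solve the optimization task

\[ \boldsymbol{\theta}_{*} = \arg\min_{\boldsymbol{\theta}} {\mathscr{L}}(\boldsymbol{\theta}). \tag{5} \]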

Finally, one tests the generalization performance by assigning labels with the optimized model \(\tilde {h}_{\boldsymbol {\theta }_{*}}\).

For the purpose of clarity, let us exemplify the previous concepts. Consider a binary classification task where the labels are Y = {−1,1}. Here, one possible option to assign label 1 (−1) is to measure all qubits globally in the computational basis and estimate the fraction of output bitstrings with even (odd) parity. That is, the probability of assigning label yi is given by the expectation value

\[ p_i(y_i|\boldsymbol{\theta}) = \text{Tr}\big[O_{y_i}\,\rho_i(\boldsymbol{\theta})\big], \tag{6} \]

where \(O_{1}={\sum }_{\boldsymbol {z}:\text {even}}|\boldsymbol {z}\rangle \!\langle \boldsymbol {z}|\) and \(O_{-1}={\sum }_{\boldsymbol {z}:\text {odd}}|\boldsymbol {z}\rangle \!\langle \boldsymbol {z}|\). Alternatively, rather than computing the probability of assigning a given label, the measurement outcome can be a label prediction by itself. For instance, the latter can be achieved with the global measurement

\[ \tilde{y}_i(\boldsymbol{\theta}) = \text{Tr}\big[Z^{\otimes n}\,\rho_i(\boldsymbol{\theta})\big], \tag{7} \]

as this is a number in [− 1,1].

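Here, let us make several important remarks. First, we note that Eqs. 6 and 7 are precisely of the form in Eq. 3, where one computes the expectation value of an operator over the output state of the QNN. Then, we remark that both approaches are equivalent due to the fact that O1 − O−1 = Z⊗n and O1 + O−1 = 𝟙, and thus

\[ \tilde{y}_i(\boldsymbol{\theta}) = p_i(1|\boldsymbol{\theta}) - p_i(-1|\boldsymbol{\theta}), \tag{8} \]

and conversely

\[ p_i(y_i|\boldsymbol{\theta}) = \frac{1 + y_i\,\tilde{y}_i(\boldsymbol{\theta})}{2}. \tag{9} \]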

Finally, for the purpose of accuracy testing, one needs to map from the continuous set of outcomes of \(\tilde {y}_{i}(\boldsymbol {\theta })\) or pi(yi|𝜃) to the discrete set Y. Thus, the QML model \(\tilde {h}_{\boldsymbol {\theta }}\) assigns label yi if pi(yi|𝜃) ≥ pi(−yi|𝜃).
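The equivalence between the probability readout and the label readout, together with the final label assignment, can be checked numerically. The following NumPy sketch uses a random density matrix as a stand-in for the QNN output state ρi(𝜃); the helper names are hypothetical and only illustrate Eqs. 6 and 7 and the relation between them.

```python
import numpy as np

def parity_projectors(n):
    """Diagonal projectors O_1 (even parity) and O_-1 (odd parity) on n qubits."""
    parity = np.array([bin(z).count("1") % 2 for z in range(2 ** n)])
    O_plus = np.diag((parity == 0).astype(float))
    O_minus = np.diag((parity == 1).astype(float))
    return O_plus, O_minus

def z_string(n):
    """Dense matrix of Z tensored with itself n times."""
    Z = np.diag([1.0, -1.0])
    out = np.array([[1.0]])
    for _ in range(n):
        out = np.kron(out, Z)
    return out

def random_density_matrix(n, rng):
    """Random full-rank density matrix (hypothetical stand-in for rho_i(theta))."""
    dim = 2 ** n
    A = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = A @ A.conj().T
    return rho / np.trace(rho).real

n = 3
rng = np.random.default_rng(seed=0)
rho_out = random_density_matrix(n, rng)
O_plus, O_minus = parity_projectors(n)

p_plus = np.trace(O_plus @ rho_out).real        # p_i(1|theta)
p_minus = np.trace(O_minus @ rho_out).real      # p_i(-1|theta)
y_tilde = np.trace(z_string(n) @ rho_out).real  # label-valued readout in [-1, 1]

# Both readouts carry the same information because O_1 - O_-1 = Z^n and O_1 + O_-1 = I.
assert np.isclose(y_tilde, p_plus - p_minus)
assert np.isclose(p_plus, (1 + y_tilde) / 2)

# Discrete prediction: choose the label with the larger probability.
predicted_label = 1 if p_plus >= p_minus else -1
print(f"p(1)={p_plus:.3f}, p(-1)={p_minus:.3f}, y_tilde={y_tilde:.3f}, label={predicted_label}")
```

On hardware, the traces would be replaced by shot-based estimates, so the two readouts agree only up to sampling error.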

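Here it is also worth recalling two widely used loss functions in the literature. First, the mean squared error loss function is given by

\[ {\mathscr{L}}_{mse}(\boldsymbol{\theta}) = \frac{1}{N}\sum_{i=1}^{N}\big(\tilde{y}_i(\boldsymbol{\theta}) - y_i\big)^2, \tag{10} \]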

where \(\tilde {y}_{i}(\boldsymbol {\theta })\) is the model-predicted label for the data point ρi obtained through some expectation value as in Eq. 3 (see for instance the label of Eq. 7). Then, the negative log-likelihood loss function is defined as

\[ {\mathscr{L}}_{\log}(\boldsymbol{\theta}) = -\frac{1}{N}\sum_{i=1}^{N}\log p_i(y_i|\boldsymbol{\theta}), \tag{11} \]

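where pi(yi|𝜃) is the probability of assigning label yi to the data point ρi obtained through some expectation value as in Eq. 3 (see for instance the probability of Eq. 6). Moreover, we recall that the expectation of the negative log-likelihood Hessian is given by the Fisher Information (FI) matrix. In practice, one can estimate the FI matrix via the empirical FI matrix

\[ \tilde{F}(\boldsymbol{\theta}) = \frac{1}{N}\sum_{i=1}^{N} \nabla_{\boldsymbol{\theta}}\log p_i(y_i|\boldsymbol{\theta})\,\big[\nabla_{\boldsymbol{\theta}}\log p_i(y_i|\boldsymbol{\theta})\big]^{T}. \tag{12} \]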

The FI matrix plays a crucial role in natural gradient optimization methods (Amari 1998). Here, the FI measures the sensitivity of the output probability distributions to parameter updates. Hence, such optimization methods rely on the estimation of the FI matrix to optimize the QNN parameters. Below we discuss how such optimization methods are affected when the QML model exhibits a vanishing gradient.
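The two loss functions and the empirical FI matrix can be sketched in a few lines of NumPy. This is a minimal illustration under assumptions: the readouts ỹi(𝜃), pi(yi|𝜃) and the derivatives ∂pi/∂𝜃 are supplied as arrays (in practice they would be estimated on the quantum device), the helper names are hypothetical, and the clipping floor b mirrors the lower bound on probabilities assumed in Corollary 1.

```python
import numpy as np

def mse_loss(y_pred, y_true):
    """Mean squared error: (1/N) * sum_i (y_pred_i - y_i)^2."""
    return float(np.mean((y_pred - y_true) ** 2))

def nll_loss(p_true_label, b=1e-6):
    """Negative log-likelihood; probabilities are clipped to [b, 1] before the log."""
    return float(-np.mean(np.log(np.clip(p_true_label, b, 1.0))))

def empirical_fisher(grad_p, p_true_label, b=1e-6):
    """Empirical FI matrix (1/N) * sum_i s_i s_i^T with score s_i = grad_theta log p_i.

    grad_p has shape (N, M): row i holds d p_i(y_i|theta) / d theta.
    """
    p = np.clip(p_true_label, b, 1.0)
    scores = grad_p / p[:, None]  # chain rule: grad log p = grad p / p
    return scores.T @ scores / len(p)

# Toy numbers standing in for QNN readouts on N = 4 data points and M = 3 parameters.
rng = np.random.default_rng(seed=1)
y_true = np.array([1.0, -1.0, 1.0, -1.0])
y_pred = np.array([0.8, -0.6, 0.9, -0.2])
p_true_label = (1 + y_true * y_pred) / 2  # p_i(y_i|theta) = (1 + y_i * y_tilde_i) / 2
grad_p = 0.1 * rng.normal(size=(4, 3))

print(mse_loss(y_pred, y_true), nll_loss(p_true_label))
F = empirical_fisher(grad_p, p_true_label)
print(np.linalg.eigvalsh(F))
```

Because F̃ is a sum of outer products of score vectors, it is symmetric and positive semidefinite by construction, which is what natural-gradient methods rely on when inverting (a regularized version of) it.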

2.2 Quantum landscape theory and barren plateaus

Recently, there has been a tremendous effort in developing the so-called quantum landscape theory (Arrasmith et al. 2021; Larocca et al. 2021) to analyze properties of quantum loss landscapes, how they emerge, and how they affect the parameter optimization process. Here, one of the main topics of interest is that of barren plateaus (BPs). When a loss function exhibits a BP, the gradients vanish exponentially with the number of qubits, and thus an exponentially large number of shots is needed to navigate through the flat landscape.

Since BPs have been mainly studied in the context of variational quantum algorithms (VQAs) (Cerezo et al. 2021; McClean et al. 2018; Cerezo et al. 2021; Larocca et al. 2021; Marrero et al. 2020; Patti et al. 2021; Holmes et al. 2021; Holmes et al. 2021; Huembeli and Dauphin 2021; Zhao and Gao 2021; Wang et al. 2021), we here briefly recall that in a VQA implementation, the goal is to minimize a linear cost function that is usually of the form

\[ C(\boldsymbol{\theta}) = \text{Tr}\big[U(\boldsymbol{\theta})\,\rho\,U^{\dagger}(\boldsymbol{\theta})\,H\big]. \tag{13} \]

Here ρ is the initial state, U(𝜃) a trainable parametrized quantum circuit, and H a Hermitian operator. BPs for cost functions such as that in Eq. 13 have been shown to arise for global operators H (Cerezo et al. 2021), as well as due to certain properties of U(𝜃) such as its structure and depth (McClean et al. 2018; Larocca et al. 2021; Wang et al. 2021), its expressibility (Holmes et al. 2021; Holmes et al. 2021), and its entangling power (Sharma et al. 2022; Marrero et al. 2020; Patti et al. 2021).

There are several ways in which the BP phenomenon can be characterized. The most common is through the scaling of the variance of cost function partial derivatives, as in a BP one has

\[ \text{Var}[\partial_{\nu} C(\boldsymbol{\theta})] \in \mathcal{O}(1/\alpha^{n}), \tag{14} \]

where ∂νC(𝜃) = ∂C(𝜃)/∂𝜃ν, 𝜃ν ∈𝜃, and α > 1. Here, the variance is taken with respect to the set of parameters 𝜃. We recall that here one also has that \(\mathbb {E}[\partial _{\nu } C(\boldsymbol {\theta })]=0\), implying that gradients exponentially concentrate at zero. In addition, a BP can also be characterized through the concentration of cost function values, so that \(\text {Var}[ C(\boldsymbol {\theta }_{A})-C(\boldsymbol {\theta }_{B})] \in \mathcal {O}(1/\beta^{n})\), where 𝜃A and 𝜃B are two randomly sampled sets of parameters, and β > 1 (Arrasmith et al. 2021). Finally, the presence of a BP can be diagnosed by inspecting the eigenvalues of the Hessian and the FI matrix, as these become exponentially vanishing with the system size (Huembeli and Dauphin 2021; Abbas et al. 2021) (see also Section 3.3 below).
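The variance-based diagnostic of Eq. 14 is straightforward to estimate numerically for small systems. The sketch below is a hypothetical toy example, not taken from the cited works: a NumPy statevector simulator for an ansatz of RY layers followed by a CZ ladder (our assumption), acting on |0...0〉, with either the global observable Z⊗n or the local observable Z on the first qubit. Partial derivatives use the parameter-shift rule, which is exact here because each RY(𝜃) = exp(−i𝜃Y/2) is generated by a Pauli operator, and the variance is taken over parameters drawn uniformly from [0, 2π).

```python
import numpy as np

def apply_ry(state, theta, qubit, n):
    """Apply RY(theta) = exp(-i theta Y / 2) to one qubit of a real n-qubit statevector."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    gate = np.array([[c, -s], [s, c]])
    psi = np.moveaxis(state.reshape([2] * n), qubit, 0)
    psi = np.tensordot(gate, psi, axes=([1], [0]))
    return np.moveaxis(psi, 0, qubit).reshape(-1)

def apply_cz_ladder(state, n):
    """Apply CZ between neighbouring qubits (q, q + 1) for q = 0, ..., n - 2."""
    psi = state.reshape([2] * n).copy()
    for q in range(n - 1):
        idx = [slice(None)] * n
        idx[q], idx[q + 1] = 1, 1
        psi[tuple(idx)] *= -1.0
    return psi.reshape(-1)

def circuit_state(params, n):
    """Hypothetical ansatz: each layer is RY on every qubit followed by a CZ ladder."""
    state = np.zeros(2 ** n)
    state[0] = 1.0
    for layer in params:
        for q in range(n):
            state = apply_ry(state, layer[q], q, n)
        state = apply_cz_ladder(state, n)
    return state

def z_diagonals(n):
    """Diagonals of the global observable Z^n and of the local observable Z on qubit 0."""
    bits = (np.arange(2 ** n)[:, None] >> np.arange(n)[::-1]) & 1  # qubit 0 = most significant bit
    return (-1.0) ** bits.sum(axis=1), 1.0 - 2.0 * bits[:, 0]

def cost(params, n, diag):
    """Linear cost C(theta) = <psi(theta)| O |psi(theta)> for a diagonal observable O."""
    return float(np.dot(diag, circuit_state(params, n) ** 2))

def partial_derivative(params, n, diag, layer=0, qubit=0):
    """Parameter-shift rule: (C(theta + pi/2) - C(theta - pi/2)) / 2."""
    shift = np.zeros_like(params)
    shift[layer, qubit] = np.pi / 2
    return 0.5 * (cost(params + shift, n, diag) - cost(params - shift, n, diag))

def gradient_variance(n, layers, diag, samples=200, seed=0):
    """Estimate Var[d_nu C] over parameters sampled uniformly in [0, 2 pi)."""
    rng = np.random.default_rng(seed)
    grads = [partial_derivative(rng.uniform(0, 2 * np.pi, size=(layers, n)), n, diag)
             for _ in range(samples)]
    return float(np.var(grads))

for n in (2, 4, 6):
    global_z, local_z = z_diagonals(n)
    print(n, gradient_variance(n, n, global_z), gradient_variance(n, n, local_z))
```

Comparing the two printed columns for growing n gives a rough, small-scale view of how the observable and depth affect gradient variance. With a handful of qubits and a few hundred samples, such numbers are indicative only and do not by themselves establish exponential scaling.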

Despite tremendous progress in understanding BPs for VQAs, there are only a few results which analyze the existence of BPs in QML settings (Pesah et al. 2021; Sharma et al. 2022; Liu et al. 2021; Abbas et al. 2021; Haug and Kim 2021; Kieferova et al. 2021; Kiani et al. 2021). In fact, while in the VQA community there is a consensus that certain properties of an algorithm should be avoided (such as global measurements), these same properties are still widely used in the QML literature (e.g., global parity measurements such as those in Eqs. 6 and 7). Thus, bridging the VQA and QML communities would allow the use of trainability and gradient scaling results across both fields.

2.3 VQAs versus QML

Let us first recall that, as previously mentioned, training a VQA or the QNN in a QML model usually implies optimizing the parameters in a quantum circuit. However, despite this similarity, there are some key differences between the VQA and QML frameworks, which we summarize in Table 1. First, QML is based on training a QNN using data from a dataset. Moreover, the input quantum states to the QML model contain information that the model is trying to learn. On the other hand, in VQAs, there is usually no training data, but rather a single input state sent through a parametrized quantum circuit. Here, such an initial state is generally an easy-to-prepare state such as |0〉.

Then, while VQAs generally deal with quantum states, QML models are envisioned to work both on classical and quantum data. Thus, when using a QML model with classical data, there is an additional encoding step that depends on the choice of embedding channel \(\mathcal {E}_{\boldsymbol {x}_{i}}^{E}\). When the QML model deals with quantum data, the input states are usually non-trivial states.

Finally, we note that for most VQAs, the cost function of Eq. 13 is a linear function in the matrix elements of U(𝜃)ρU†(𝜃). For QML, however, the loss function of Eq. 4 can be a more complex non-linear function of the matrix elements of ρi(𝜃) (see for instance the negative log-likelihood in Eq. 11). Here, it is still worth noting that the expectation values ℓi(𝜃;yi) of Eq. 3 are exactly of the form of VQA cost functions, as both of these are linear functions of the parametrized output states.

3 Analytical results

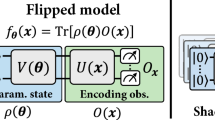

The differences between the VQA and QML frameworks discussed in the previous section mean that known gradient scaling results for VQAs do not automatically or trivially extend to the QML setting. Nevertheless, the goal of this section is to establish and formalize the connection between these two frameworks, as summarized in Fig. 2. Namely, in Section 3.1, we link the variance of the partial derivative of QML loss functions of the form of Eq. 4 to that of partial derivatives of linear expectation values, which are the quantities of study for VQAs (see Eq. 13). This allows us to show that, under standard assumptions, the landscapes of QML loss functions such as the mean-squared error loss in Eq. 10 and the negative log-likelihood loss in Eq. 11 have BPs in all the settings that standard VQAs do. In addition, as we discuss in Section 3.2, additional care should be taken to guarantee the trainability of a QML model, as here one has to deal with datasets and embeddings. This leads us to introduce a new mechanism for untrainability arising from the dataset itself. Finally, in Section 3.3, we demonstrate that under conditions which induce a BP for the negative log-likelihood loss function, the matrix elements of the empirical Fisher Information matrix also exponentially vanish.

Fig. 2 Summary of results. (a) In this work, we bridge the gap between trainability results for the VQA and QML settings. Namely, we show that the considered class of supervised QML models will exhibit a barren plateau (BP) in all settings that standard VQAs do. This means that features such as global measurements, deep circuits, or highly entangling circuits should be avoided in QML. (b) We present analytical and numerical evidence that aspects of the dataset can be an additional source of BPs. For instance, embedding schemes for classical data can lead to states that have highly mixed reduced states and thus display trainability issues. This is a novel source for BPs which we call dataset-induced BPs.

3.1 Connecting the gradient scaling of QML loss functions and linear cost functions

In the following theorem, we study the variance of the partial derivative for the generic loss function defined in Eq. 4. We upper bound this quantity by making a connection to the variance of partial derivatives of linear expectation values of the form of Eq. 3. As shown in the Appendix, the following theorem holds.

Theorem 1 (Variance of partial derivative of generic loss function)

Consider the partial derivative of the loss function \({\mathscr{L}}(\boldsymbol {\theta })\) in Eq. 4 taken with respect to variational parameter 𝜃ν ∈𝜃. We denote this quantity as \(\partial _{\nu } {\mathscr{L}}(\boldsymbol {\theta }) \equiv \partial {\mathscr{L}}(\boldsymbol {\theta })/\partial \theta _{\nu }\). The following inequality holds

where we used ℓi(𝜃) as a shorthand notation for ℓi(𝜃;yi) in Eq. 3, and where the expectation values are taken over the parameters 𝜃. Here we defined

\[ g_i = \max_{\ell_i}\left|\frac{\partial f}{\partial \ell_i}\right|, \tag{16} \]

where \(\frac {\partial f}{\partial \ell _{i}}\) is the i th entry of the Jacobian Jℓ with ℓ = (ℓ1,…,ℓN), and where we denote as \(\max \limits _{\ell _{i}}\left |\frac {\partial f}{\partial \ell _{i}}\right |\) the maximum absolute value of the partial derivative of f(ℓi,yi) with respect to ℓi.

Theorem 1 establishes a formal relationship between gradients of generic loss functions and linear expectation values, which includes linear cost functions in VQA frameworks. This result provides a bridge to bound the variance of partial derivatives of QML loss functions based on known behavior of linear cost functions. As shown next, this allows us to use gradient scaling results from the VQA literature in QML settings.

The direct implication of Theorem 1 can be understood as follows. Consider the case when gi is at most polynomially increasing in n for all ρi in the training set. Then, if a facet of the model causes linear cost functions to exhibit BPs, \({\mathscr{L}}(\boldsymbol {\theta })\) will also suffer from BPs. That is, if Var[∂νℓi(𝜃)] is exponentially vanishing, then so is \(\text {Var}[\partial _{\nu } {\mathscr{L}}(\boldsymbol {\theta })]\). Let us now explicitly demonstrate the implications of Theorem 1 for the mean squared error and the negative log-likelihood loss functions.
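To see where a bound of this type comes from, the following is a minimal derivation sketch. It assumes the averaged form \({\mathscr{L}}(\boldsymbol{\theta}) = \frac{1}{N}\sum_i f(\ell_i, y_i)\) and uses only \(|\partial f/\partial \ell_i| \le g_i\), the inequality Var[X] ≤ E[X²], and the Cauchy-Schwarz inequality; it is an illustration, not a transcription of the proof in the Appendix.

```latex
\begin{aligned}
\partial_\nu \mathscr{L}(\boldsymbol{\theta})
  &= \frac{1}{N}\sum_{i=1}^{N} \frac{\partial f}{\partial \ell_i}\,\partial_\nu \ell_i(\boldsymbol{\theta}), \\
\text{Var}[\partial_\nu \mathscr{L}(\boldsymbol{\theta})]
  &\le \mathbb{E}\big[(\partial_\nu \mathscr{L}(\boldsymbol{\theta}))^2\big]
   \le \frac{1}{N^2}\sum_{i,j=1}^{N} g_i g_j\,
       \mathbb{E}\big[|\partial_\nu \ell_i(\boldsymbol{\theta})|\,|\partial_\nu \ell_j(\boldsymbol{\theta})|\big] \\
  &\le \frac{1}{N^2}\sum_{i,j=1}^{N} g_i g_j
       \sqrt{\mathbb{E}\big[(\partial_\nu \ell_i(\boldsymbol{\theta}))^2\big]\,
             \mathbb{E}\big[(\partial_\nu \ell_j(\boldsymbol{\theta}))^2\big]}
   = \Big(\frac{1}{N}\sum_{i=1}^{N} g_i \sqrt{\mathbb{E}\big[(\partial_\nu \ell_i(\boldsymbol{\theta}))^2\big]}\Big)^{2}, \\
\mathbb{E}\big[(\partial_\nu \ell_i(\boldsymbol{\theta}))^2\big]
  &= \text{Var}[\partial_\nu \ell_i(\boldsymbol{\theta})] + \big(\mathbb{E}[\partial_\nu \ell_i(\boldsymbol{\theta})]\big)^2 .
\end{aligned}
```

In a BP, E[∂νℓi(𝜃)] = 0 and Var[∂νℓi(𝜃)] ∈ O(1/αⁿ), so the right-hand side is at most (maxᵢ gi)² · maxᵢ Var[∂νℓi(𝜃)], which stays exponentially small whenever every gi ∈ O(poly(n)).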

Corollary 1 (Barren plateaus in mean squared error and negative log-likelihood loss functions)

Consider the mean squared error loss function \({\mathscr{L}}_{mse}\) defined in Eq. 10 and the negative log-likelihood loss \({\mathscr{L}}_{\log }\) defined in Eq. 11, with respective model-predicted labels \(\tilde {y}_{i}(\boldsymbol {\theta })\) and model-predicted probabilities pi(yi|𝜃) both of the form of Eq. 3. Suppose that the QNN has a BP for the linear expectation values, i.e., Eq. 14 is satisfied for all \(\tilde {y}_{i}(\boldsymbol {\theta })\) and pi(yi|𝜃). Then, assuming that \(\tilde {y}_{i}(\boldsymbol {\theta }) \in \mathcal {O}(\text {poly}(n)) \forall i\), we have

\[ \text{Var}[\partial_{\nu} {\mathscr{L}}_{mse}(\boldsymbol{\theta})] \in \mathcal{O}(1/\alpha^{n}), \tag{17} \]

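for α > 1. Similarly, assuming that pi(yi|𝜃) ∈ [b,1]∀i,𝜃, where b ∈Ω(1/poly(n)), we have

\[ \text{Var}[\partial_{\nu} {\mathscr{L}}_{\log}(\boldsymbol{\theta})] \in \mathcal{O}(1/\alpha^{n}), \tag{18} \]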

for α > 1.

We note that the assumption on \(\tilde {y}_{i}(\boldsymbol {\theta })\) in Corollary 1 can generically be satisfied, as label values are usually bounded by a constant, and if not, they can always be normalized by classical post-processing. The assumption on the possible values of pi(yi|𝜃) is equivalent to clipping model-predicted probabilities so that they are bounded below by b > 0, which is a common practice when using the negative log-likelihood cost function (Pedregosa et al. 2011; Abadi et al. 2016).
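For the two losses in Corollary 1, the quantities gi can be worked out directly from the per-sample terms of Eqs. 10 and 11, with the 1/N prefactor carried by the average. The sketch below shows why the stated assumptions keep gi polynomially bounded; it additionally assumes that the true labels yi are bounded by a constant.

```latex
\begin{aligned}
f_{mse}(\tilde{y}_i, y_i) = (\tilde{y}_i - y_i)^2
  \;&\Rightarrow\; \Big|\frac{\partial f_{mse}}{\partial \tilde{y}_i}\Big| = 2\,|\tilde{y}_i - y_i| \in \mathcal{O}(\text{poly}(n))
  \quad \text{if } \tilde{y}_i \in \mathcal{O}(\text{poly}(n)), \\
f_{\log}(p_i, y_i) = -\log p_i(y_i|\boldsymbol{\theta})
  \;&\Rightarrow\; \Big|\frac{\partial f_{\log}}{\partial p_i}\Big| = \frac{1}{p_i(y_i|\boldsymbol{\theta})} \le \frac{1}{b} \in \mathcal{O}(\text{poly}(n))
  \quad \text{if } p_i \in [b, 1],\; b \in \Omega(1/\text{poly}(n)).
\end{aligned}
```

Without clipping, 1/pi is unbounded as pi → 0, which is why the lower bound b is needed for the negative log-likelihood.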

Corollary 1 explicitly implies that, under mild assumptions, previously established BP results for VQAs are applicable to QML models that utilize mean squared error and negative log-likelihood loss functions. Consequently, existing QML proposals with these BP-inducing features, such as those summarized in Fig. 2, need to be revised in order to avoid an exponentially flat loss function landscape.

We note that, as shown in Appendix D, the above results in Corollary 1 can also be extended to the generalized mean squared error loss function used in multivariate regression tasks.

3.2 Dataset-induced barren plateaus

In this section, we argue that the dataset can negatively impact QML trainability if the input states to the QNN have high levels of entanglement and if the QNN employs local gates (which is the standard assumption in most widely used QNNs; see Farhi and Neven 2018; Cong et al. 2019; Beer et al. 2020; Bausch 2020). This is due to the fact that reduced states of highly entangled states can be very close to being maximally mixed, and it is hard to train local gates acting on such states. Note that this phenomenon is not expected to arise in a standard VQA setting where the input state is considered to be a trivial tensor-product state.

To illustrate this issue, we present an example where a VQA does not exhibit a BP, but a QML model can still have a dataset-induced BP. Consider a model where the QNN is given by a single layer of the so-called alternating layered ansatz (Cerezo et al. 2021). Here, \(\mathcal {E}_{\boldsymbol {\theta }}^{QNN}\) is a circuit composed of a tensor product of ξ s-qubit unitaries such that n = sξ. That is, the QNN is of the form \(U(\boldsymbol {\theta })=\bigotimes _{k=1}^{\xi } U_{k}(\boldsymbol {\theta }_{k})\), where Uk(𝜃k) is a general unitary acting on s qubits. Furthermore, consider a linear loss function constructed from local expectation values of the form

where |0〉 〈0|j denotes the projector on the j th qubit, and where ρi(𝜃) is defined in Eq. 2. We now quote the following result from Ref. (Cerezo et al. 2021) on the variance of the partial derivative of quantities of the form in Eq. 19.

Proposition 1 (From Supplementary Note 5 of Ref. Cerezo et al. 2021)

Suppose that \(\mathcal {E}_{\boldsymbol {\theta }}^{QNN}\) is given by an application of a single layer of the alternating layered ansatz consisting of a tensor product of s-qubit unitaries. Consider the partial derivative of the local cost in Eq. 19 taken with respect to a parameter 𝜃ν appearing in the h th unitary. If each unitary forms a local 2-design on s qubits, we have

where \(r_{n,s} \in \Omega(1/\text{poly}(n))\), and where we denote \(D_{HS}(A,B) = \text{Tr}[(A - B)^2]\) as the Hilbert-Schmidt distance. Here, \(\mathbb{1}/2^{s}\) is the maximally mixed state on s qubits and \(\rho _{i}^{(h)}=\text {Tr}_{\overline {h}}[\rho _{i}]\) is the reduced input state on the s qubits acted upon by the h th unitary.

First, let us remark that Proposition 1 shows that a standard VQA that uses the cost in Eq. 19 (tensor product ansatz and local measurement) does not exhibit a BP. This is due to the fact that when the input state to the VQA is |0〉⊗n, then \(D_{HS}(\rho_{i}^{(h)}, \mathbb{1}/2^{s}) = D_{HS}(|0\rangle\langle 0|^{\otimes s}, \mathbb{1}/2^{s}) = 1 - 2^{-s}\), and hence Var[∂νℓi(𝜃)] ∈Ω(1/poly(n)) (assuming s does not scale with n).

However, for a QML setting, Proposition 1, combined with Theorem 1, implies that the QML model is susceptible to dataset-induced BPs even with simple QNNs and local measurements. Specifically, if the reduced input state is exponentially close to the maximally mixed state, then one has a BP. Examples of such datasets are Haar-random quantum states (Brandao and Horodecki 2010) or classical data encoded with a scrambling unitary (Holmes et al. 2021), as the reduced states can be shown to concentrate around the maximally mixed state through Levy’s lemma (Ledoux 2001).
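The mechanism behind dataset-induced BPs can be seen in the smallest possible instance. The NumPy sketch below is a simplification of the setting of Proposition 1, chosen only for illustration: two qubits, s = 1, and each Uk taken as RZ·RY instead of a local 2-design. With a product ansatz and a local measurement on the first qubit, the expectation value depends only on the reduced input state of that qubit. For the input |00〉 the partial derivative varies with 𝜃, while for a Bell-state input (whose reduced states are exactly maximally mixed, the extreme case of "exponentially close") the expectation value is constant and every gradient vanishes.

```python
import numpy as np

def ry(t):
    return np.array([[np.cos(t / 2), -np.sin(t / 2)], [np.sin(t / 2), np.cos(t / 2)]], dtype=complex)

def rz(t):
    return np.diag([np.exp(-0.5j * t), np.exp(0.5j * t)])

PROJ0_QUBIT1 = np.kron(np.diag([1.0, 0.0]), np.eye(2))  # |0><0| on the first qubit

def local_expectation(theta, rho):
    """Tr[|0><0|_1 U rho U^dagger] for the product ansatz U = (RZ RY) (x) (RZ RY)."""
    U = np.kron(rz(theta[1]) @ ry(theta[0]), rz(theta[3]) @ ry(theta[2]))
    return float(np.trace(PROJ0_QUBIT1 @ U @ rho @ U.conj().T).real)

def shift_derivative(theta, rho, k=0):
    """Parameter-shift derivative with respect to theta[k] (all gates are Pauli rotations)."""
    shift = np.zeros_like(theta)
    shift[k] = np.pi / 2
    return 0.5 * (local_expectation(theta + shift, rho) - local_expectation(theta - shift, rho))

def derivative_variance(rho, samples=500, seed=0):
    """Variance of d/d theta[0] over angles drawn uniformly in [0, 2 pi)."""
    rng = np.random.default_rng(seed)
    return float(np.var([shift_derivative(rng.uniform(0, 2 * np.pi, 4), rho) for _ in range(samples)]))

product_input = np.diag([1.0, 0.0, 0.0, 0.0]).astype(complex)  # |00><00|
bell = np.array([1.0, 0.0, 0.0, 1.0], dtype=complex) / np.sqrt(2)
bell_input = np.outer(bell, bell.conj())                         # reduced states are I/2

print(derivative_variance(product_input), derivative_variance(bell_input))
```

For |00〉 the expectation value is cos²(𝜃0/2), so the derivative variance is about 1/8; for the Bell input it is zero up to floating-point error, regardless of how the local gates are parametrized.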

We note that this phenomenon is similar to entanglement-induced BPs (Marrero et al. 2020; Sharma et al. 2022). However, in a typical entanglement-induced BP setting, it is the QNN that generates high levels of entanglement in its output states and thus leads to trainability issues. In contrast, here it is the quantum states obtained from the dataset that already contain large amounts of entanglement even before getting to the QNN.

It is important to make a distinction between the dataset-induced BPs for classical and quantum data. Specifically, special care must be taken when using classical datasets as here one can actually choose the embedding scheme, and this choice affects the amount of entanglement that the states ρi will have. Such a choice is typically not present for quantum datasets.
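Because the embedding is chosen by the user, its effect on the reduced input states can be checked before any training. The NumPy sketch below compares two hypothetical embeddings of the same classical data on n = 10 qubits: a product angle embedding, where qubit j is prepared as RY(xj)|0〉, and the same state followed by a fixed Haar-random unitary, used here as a stand-in for a scrambling embedding. It prints the Hilbert-Schmidt distance between the single-qubit reduced state and the maximally mixed state. The product embedding always gives 1 − 2⁻¹ = 1/2, whereas the scrambled embedding gives values close to zero, which is the regime where Proposition 1 predicts vanishing gradients for local gates.

```python
import numpy as np

def angle_embedding(x):
    """Product-state embedding: qubit j is prepared as cos(x_j/2)|0> + sin(x_j/2)|1>."""
    state = np.array([1.0 + 0j])
    for xj in x:
        state = np.kron(state, np.array([np.cos(xj / 2), np.sin(xj / 2)]))
    return state

def haar_unitary(dim, rng):
    """Haar-random unitary via QR of a complex Gaussian matrix, with phase correction."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases  # multiplies column j of q by phases[j]

def reduced_state(psi, n, s):
    """Reduced density matrix of the first s qubits of an n-qubit pure state."""
    m = psi.reshape(2 ** s, 2 ** (n - s))
    return m @ m.conj().T

def hs_distance_to_mixed(rho):
    """D_HS(rho, I/d) = Tr[(rho - I/d)^2]."""
    diff = rho - np.eye(rho.shape[0]) / rho.shape[0]
    return float(np.trace(diff @ diff).real)

n, s = 10, 1
rng = np.random.default_rng(seed=7)
scrambler = haar_unitary(2 ** n, rng)       # fixed "scrambling" layer shared by all data points
data = rng.uniform(0, np.pi, size=(3, n))   # three hypothetical classical data points

for x in data:
    psi_product = angle_embedding(x)
    psi_scrambled = scrambler @ psi_product
    print(hs_distance_to_mixed(reduced_state(psi_product, n, s)),
          hs_distance_to_mixed(reduced_state(psi_scrambled, n, s)))
```

Both embeddings are injective maps of the same data, so the difference lies entirely in how much entanglement they place in the input states, which is the quantity the trainability criterion below asks designers to control.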

For classical datasets, let us make the important remark that (in a practical scenario) the embedding is not able to prevent a BP which would otherwise exist in the model. As discussed in Section 2.1, the embedding process simply assigns a quantum state \(\rho _{i}=\mathcal {E}_{\boldsymbol {x}_{i}}^{E}(|{\boldsymbol {0}}\rangle \langle {\boldsymbol {0}}|)\) to each classical data point xi. Thus, previously established BP results that hold independently of the input state, such as those for global cost functions (Cerezo et al. 2021), deep circuits (McClean et al. 2018), expressibility (Holmes et al. 2021), and noise (Wang et al. 2021), will hold regardless of the embedding strategy.

Our previous results further illuminate that the choice of embedding strategy is a crucial aspect of QML models with classical data, as it can affect the model’s trainability. As argued in (Havlíček et al. 2019), a necessary condition for obtaining quantum advantage is that inner products of data-embedded states \(\rho _{i}=\mathcal {E}_{\boldsymbol {x}_{i}}^{E}(|{\boldsymbol {0}}\rangle \langle {\boldsymbol {0}}|)\) should be classically hard to estimate. Of course, this condition is not sufficient to guarantee that the embedding is useful. For instance, for a classification task, it does not guarantee that states are embedded in distinguishable regions of the Hilbert space (Lloyd et al. 2020). Thus, currently, one has the following criteria for designing encoders for QML:

1. Classically hard to simulate.
2. Practical usefulness.

From the results presented here, a third criterion should be carefully considered when designing embedding schemes:

3. Not inducing trainability issues.

This opens up a new research direction of trainability-aware quantum embedding schemes.

Here we briefly note that while Proposition 1 was presented for a tensor product ansatz, a similar result can be obtained for more general QNNs such as the hardware efficient ansatz (Cerezo et al. 2021) or the quantum convolutional neural network (Pesah et al. 2021). For these architectures, \(\text{Var}[\partial _{\nu }\ell _{i}(\boldsymbol {\theta })]\) is found to be upper bounded by quantities analogous to those in Proposition 1. Although the form of the upper bound is more complex and cumbersome to report, the dataset-induced BP also arises for these architectures.


3.3 Empirical FI matrix in the presence of a barren plateau

Recently, the eigenspectrum of the empirical FI matrix was shown to be related to gradient magnitudes of the QNN loss function (Abbas et al. 2021). Here we investigate this connection in more detail. Namely, we discuss how a BP in the QML model affects the empirical FI matrix and natural gradient-based optimizers (which employ the FI matrix).

In what follows, we show that under the conditions for which the negative log-likelihood loss gradients vanish exponentially, the matrix elements of the empirical FI matrix \(\tilde {F}(\boldsymbol {\theta })\), as defined in Eq. 12, also vanish exponentially. This result is complementary to and extends the results in (Abbas et al. 2021). First, consider the following proposition.

Proposition 2

Under the assumptions of Corollary 1 for which the negative log-likelihood loss function has a BP according to Eq. 14, and assuming that the number of trainable parameters in the QNN is in \( \mathcal {O}(\text {poly}(n))\), we have

\[\mathbb{E}_{\boldsymbol{\theta}}\big[\tilde{F}_{\mu\nu}(\boldsymbol{\theta})\big] \in \mathcal{O}(1/\alpha^{n}),\]

where \(\tilde {F}_{\mu \nu }(\boldsymbol {\theta })\) are the matrix entries of \(\tilde {F}(\boldsymbol {\theta })\) as defined in Eq. 12.

While Proposition 2 shows that the expectation values of the FI matrix elements vanish exponentially, this result is not enough to guarantee that (with high probability) the matrix elements will concentrate around their expected values. Next we present a stronger concentration result, which is valid for QNNs where the parameter shift rule holds (Mitarai et al. 2018; Schuld et al. 2019).
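For intuition about the parameter shift rule invoked here, the following Python sketch (a toy illustration, not the code used for our numerics) checks it on a single qubit prepared as R_y(θ)R_x(x)|0⟩ and measured in Z. It assumes rotations of the form R_a(t) = exp(−i t P_a/2) with P_a a Pauli operator, for which the exact derivative is [f(θ + π/2) − f(θ − π/2)]/2; the function names are hypothetical.

```python
import numpy as np

def expval_z(theta, x):
    """<Z> for the single-qubit circuit R_y(theta) R_x(x)|0>, by statevector simulation."""
    rx = np.array([[np.cos(x / 2), -1j * np.sin(x / 2)],
                   [-1j * np.sin(x / 2), np.cos(x / 2)]])    # exp(-i x X / 2)
    ry = np.array([[np.cos(theta / 2), -np.sin(theta / 2)],
                   [np.sin(theta / 2), np.cos(theta / 2)]])  # exp(-i theta Y / 2)
    psi = ry @ rx @ np.array([1.0, 0.0], dtype=complex)
    return float(np.real(np.conj(psi) @ np.diag([1.0, -1.0]) @ psi))

def parameter_shift(f, theta, *args):
    """Exact derivative of f in theta for a gate exp(-i theta P / 2), P a Pauli operator."""
    return (f(theta + np.pi / 2, *args) - f(theta - np.pi / 2, *args)) / 2

theta, x = 0.7, -1.3
# Compare the shifted-evaluation estimate with the analytic derivative of cos(theta) cos(x)
print(parameter_shift(expval_z, theta, x), -np.sin(theta) * np.cos(x))
```

For this circuit ⟨Z⟩ = cos θ cos x, so the two printed numbers agree up to floating-point error; note that the rule uses two circuit evaluations rather than a finite-difference approximation.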

Corollary 2

Under the assumptions of Corollary 1 for which the negative log-likelihood loss function has a BP according to Eq. 14, and assuming that the QNN structure allows for the use of the parameter shift rule, we have

where \(\mathbb {E}[\tilde {F}_{\mu \nu }]\leq H(n)\) and \(Q(n),H(n)\in \mathcal {O}(1/\alpha ^{n})\).

We note that these two results imply that when the linear expectation values exhibit a BP, then one requires an exponential number of shots to estimate the entries of the FI matrix, and concomitantly its eigenvalues. This also implies that optimization methods that use the FI, such as natural gradient methods, cannot navigate the flat landscape of the BP (without spending exponential resources).
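A back-of-the-envelope Python sketch of this shot cost, under simplifying assumptions: each single-shot outcome has standard deviation at most one (as for a ±1 measurement outcome), so M shots give a standard error of at most 1/√M, and resolving a quantity of magnitude ε from zero with a margin of k standard errors needs about (k/ε)² shots. The decay base α = 2 and the margin k = 3 are illustrative choices, not values from our analysis.

```python
import math

def shots_to_resolve(magnitude, k=3.0, max_std=1.0):
    """Smallest number of shots M with k * max_std / sqrt(M) <= magnitude."""
    return math.ceil((k * max_std / magnitude) ** 2)

alpha = 2.0  # illustrative base of an exponential decay 1/alpha**n
for n in (10, 20, 30):
    print(n, shots_to_resolve(alpha ** (-n)))
```

With α = 2, each increase of n by 10 multiplies the required number of shots by α²⁰ ≈ 10⁶.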

4 Numerical results

In this section, we present numerical results studying the trainability of QNNs in supervised binary classification tasks with classical data. Specifically, we analyze the effect of the dataset, the embedding, and the locality of the measurement operator on the trainability of QML models. In what follows, we first describe the dataset, embedding, QNN, measurement operators, and loss functions used in our numerics.

We consider two different types of datasets, one composed of structured data and the other one of unstructured random data. This allows us to further analyze how the data structure can affect the trainability of the QML model. First, the structured dataset is formed from handwritten digits from the MNIST dataset (LeCun 1998). Here, greyscale images of “0” and “1” digits are converted to length-n real-valued vectors x using a principal component analysis method (Jolliffe 2005) (see also Appendix F for a detailed description). Then, for the unstructured dataset, we randomly generate vectors x of length n by uniformly sampling each of their components from [−π,π]. In addition, each random data point is randomly assigned a label.
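Both data pipelines can be sketched in a few lines of Python. This is a minimal sketch, assuming plain PCA via a singular value decomposition of the centred image matrix and a rescaling of each component into [−π, π]; the rescaling step, the ±1 label encoding, the function names, and the synthetic stand-in images are illustrative assumptions (Appendix F describes the procedure actually used).

```python
import numpy as np

def pca_features(images, n):
    """Project flattened images onto their top-n principal components."""
    X = images.reshape(len(images), -1).astype(float)
    X -= X.mean(axis=0)                          # centre each pixel
    # Rows of vt are principal directions, ordered by singular value
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    Z = X @ vt[:n].T                             # length-n feature vectors
    # Hypothetical rescaling of each component into [-pi, pi] for use as rotation angles
    zmin, zmax = Z.min(axis=0), Z.max(axis=0)
    return -np.pi + 2 * np.pi * (Z - zmin) / (zmax - zmin)

def random_dataset(num_points, n, rng):
    """Unstructured data: components uniform in [-pi, pi] and random +/-1 labels."""
    X = rng.uniform(-np.pi, np.pi, size=(num_points, n))
    y = rng.choice([-1, 1], size=num_points)
    return X, y

rng = np.random.default_rng(0)
fake_images = rng.random((50, 28, 28))           # stand-in for MNIST "0"/"1" images
X_pca = pca_features(fake_images, n=8)
X_rand, y_rand = random_dataset(10 * 8, 8, rng)  # N = 10n points, as in our numerics
```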

In all numerical settings that we study, the embedding \(\mathcal {E}_{\boldsymbol {x}_{i}}^{E}\) is a unitary acting on n qubits, which we denote as V(xi). Thus, the output state of the embedding is a pure state of the form \(|\psi (\boldsymbol {x}_{i})\rangle = V(\boldsymbol {x}_{i})|\boldsymbol {0}\rangle\). As shown in Fig. 3, we use three different embedding schemes for V(xi). The first is the tensor product embedding (TPE), composed of single-qubit rotations around the x-axis, so that |ψ(xi)⟩ is obtained by applying to the jth qubit a rotation whose angle is the jth component of the vector xi. The second embedding scheme, presented in Fig. 3b, is called the hardware efficient embedding (HEE). In a single layer of the HEE, one applies rotations around the x-axis followed by entangling gates acting on adjacent pairs of qubits. Finally, we refer to the third scheme as the classically hard embedding (CHE). The CHE was proposed in Havlíček et al. (2019), and is based on the conjecture that the inner products between output states of V(xi) are hard to estimate classically as the depth and width of the embedding circuit increase. The unitaries W(xi) in each layer of the CHE are composed of single- and two-qubit gates that are diagonal in the computational basis. We refer the reader to Appendix F.2 for a description of the unitaries W(xi).
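The layer structure of the TPE and HEE can be made concrete with a small NumPy statevector sketch. It assumes R_x(t) = exp(−i t X/2) and, as a hypothetical choice for the unspecified entangling gates, controlled-Z gates on the adjacent pairs (j, j+1) of an open chain; the CHE is omitted because its gates W(xi) are specified in Appendix F.2. All function names are illustrative.

```python
import numpy as np

def rx(t):
    """Single-qubit rotation R_x(t) = exp(-i t X / 2)."""
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def apply_1q(psi, gate, j):
    """Apply a 2x2 gate to qubit j of a state stored as an n-index tensor."""
    psi = np.tensordot(gate, psi, axes=([1], [j]))   # qubit j is now axis 0
    return np.moveaxis(psi, 0, j)

def apply_cz(psi, a, b):
    """Controlled-Z on qubits a and b: negate amplitudes where both are |1>."""
    psi = psi.copy()
    idx = [slice(None)] * psi.ndim
    idx[a], idx[b] = 1, 1
    psi[tuple(idx)] *= -1
    return psi

def embed(x, layers=1, entangle=True):
    """TPE (layers=1, entangle=False) or an HEE-style circuit with assumed CZ entanglers."""
    n = len(x)
    psi = np.zeros((2,) * n, dtype=complex)
    psi[(0,) * n] = 1.0                              # start in |0...0>
    for _ in range(layers):
        for j in range(n):
            psi = apply_1q(psi, rx(x[j]), j)         # angle = jth data component
        if entangle:
            for j in range(n - 1):
                psi = apply_cz(psi, j, j + 1)        # adjacent pairs (j, j+1)
    return psi.reshape(-1)                           # qubit 0 is the most significant bit

x = np.array([0.3, -1.2, 2.0, 0.8])
psi_tpe = embed(x, layers=1, entangle=False)         # product state
psi_hee = embed(x, layers=3)                         # three HEE-style layers
```

Storing the state as an n-index tensor keeps each gate application a single contraction along one axis, which is convenient for the small system sizes of a sketch.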

Circuits for embedding unitaries used in our numerics. (a) Tensor product embedding (TPE), composed of single-qubit rotations around the x-axis. Here, the encoded state ρi is obtained by applying a rotation Rx on the jth qubit whose angle is the jth component of the vector xi. (b) Hardware efficient embedding (HEE). A layer is composed of single-qubit rotations around the x-axis whose rotation angles are assigned in the same way as in the TPE. After each layer of rotations, one applies entangling gates acting on adjacent pairs of qubits. (c) Classically hard embedding (CHE). Each unitary W(xi) is composed of single- and two-qubit gates that are diagonal in the computational basis

For the QNN in the model, we consider two different ansatzes. The first is composed of a single layer of parametrized single-qubit rotations Ry about the y-axis. Here, the output of the QNN is an n-qubit state. The second QNN we consider is the quantum convolutional neural network (QCNN) introduced in Cong et al. (2019). The QCNN is composed of a series of convolutional and pooling layers that reduce the dimension of the input state while preserving the relevant information. In this case, the output of the QNN is a 2-qubit state. We refer the reader to Appendix F.3 for a more detailed description of the QCNN we used.

When using the QNN composed of Ry rotations, we apply a global measurement on all qubits to compute the expectation value of the global operator Z⊗n. Thus, the predicted label and label probabilities are given by Eqs. 7–9. Since global measurements are expected to lead to trainability issues, we also propose a local measurement where one computes the expectation value of Z⊗2 over the reduced state of the middle two qubits. Note that this is equivalent to computing the parity of the length-2 output bitstrings. On the other hand, when using the QCNN, one naturally has to perform a local measurement as here the output is a 2-qubit state. Thus, here we also compute the expectation value of Z⊗2.
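The parity equivalence can be illustrated by estimating ⟨Z⊗n⟩ and ⟨Z⊗2⟩ on the middle two qubits directly from sampled bitstrings: the eigenvalue of a Z string on a computational basis state is (−1) raised to the number of 1s on the measured qubits. This Python sketch assumes, as a toy setting, a product output state; for TPE followed by a tensor product Ry QNN, and with rotations exp(−i t P/2), one can check that each qubit has ⟨Z_j⟩ = cos θ_j cos x_j. The function names are hypothetical.

```python
import numpy as np

def sample_product_bitstrings(z, shots, rng):
    """Sample bitstrings from a product state whose qubits have <Z_j> = z[j]."""
    p_one = (1 - np.asarray(z)) / 2                    # P(bit_j = 1), since <Z> = P0 - P1
    return (rng.random((shots, len(z))) < p_one).astype(int)

def parity_expectation(bits, qubits):
    """Estimate <Z...Z> on the chosen qubits as the mean of (-1)^(number of 1s)."""
    return float(np.mean((-1) ** bits[:, qubits].sum(axis=1)))

rng = np.random.default_rng(1)
n = 6
x = rng.uniform(-np.pi, np.pi, n)           # one data point (TPE angles)
theta = rng.uniform(-np.pi, np.pi, n)       # tensor product R_y parameters
z = np.cos(theta) * np.cos(x)               # single-qubit <Z_j> of the toy output state
bits = sample_product_bitstrings(z, 200_000, rng)

middle = [n // 2 - 1, n // 2]
global_est = parity_expectation(bits, list(range(n)))   # global parity on all qubits
local_est = parity_expectation(bits, middle)            # parity of the middle pair
print(global_est, np.prod(z), local_est, np.prod(z[middle]))
```

Both estimates come from the same samples; only the set of bits entering the parity changes between the global and the local measurement.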

Finally, we note that in our numerics, we study the scaling of the gradient of both the mean-squared error and log-likelihood loss functions of Eqs. 10 and 11, respectively. Moreover, we also consider the scaling of the gradients of the linear expectation value in Eq. 3.

4.1 Global measurement

Here we first study the effect of performing global measurements on the output states of the QNN. As shown in Cerezo et al. (2021), we expect that linear functions of global measurements will have BPs. For this purpose, we consider both structured (MNIST) and unstructured (random) datasets encoded through the TPE and CHE schemes (see Fig. 3). The QNN is taken to be the tensor product of single qubit rotations and we measure the expectation value of Z⊗n.

Figure 4 presents results where we numerically compute the variance of partial derivatives of the linear expectation values in Eq. 3, the mean squared error loss function in Eq. 10, and the negative log-likelihood loss function in Eq. 11 versus the number of qubits n. The variance is evaluated over 200 random sets of QNN parameters and the dataset is composed of N = 10n data points.

Variance of the partial derivative versus number of qubits. Here we consider linear expectation, log-likelihood, and mean squared error loss functions with global measurement. In (a) and (b), we consider an unstructured (random) dataset, while in (c) and (d), a structured (MNIST) dataset. The classical data is encoded via the TPE (a, c) and the CHE (b, d) schemes. We plot the variance of the partial derivative versus number of qubits for all loss functions. The partial derivative is taken with respect to the first parameter of the tensor product QNN

Figure 4 shows that, as expected from Cerezo et al. (2021), the variance of the partial derivative of the linear loss function vanishes exponentially with the system size, indicating the presence of a BP according to Eq. 14. Moreover, for all datasets and embedding schemes considered, the variances of the partial derivatives of the log-likelihood and mean squared error loss functions also vanish exponentially with the system size. This scaling is in accordance with the results of Corollary 1.
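The exponential decay for global measurements can be seen in closed form in the simplest setting, TPE followed by a tensor product Ry QNN. Under the rotation convention exp(−i t P/2), the output is a product state with ⟨Z_j⟩ = cos θ_j cos x_j, so for a single data point ∂_{θ_1}⟨Z⊗n⟩ = −sin θ_1 cos x_1 ∏_{j>1} cos θ_j cos x_j. For θ_j uniform in [−π, π] this has zero mean and variance 2^{−n} ∏_j cos² x_j, whereas the derivative of the local ⟨Z⊗2⟩ on the middle pair (a, b), taken with respect to θ_a, has variance (1/4) cos² x_a cos² x_b, independent of n. The Python sketch below checks both formulas by Monte Carlo; it is a toy computation for the linear expectation value of one data point, not the full losses of Eqs. 10–11, and the function names are hypothetical.

```python
import numpy as np

def grad_global(theta, x):
    """d/d theta_0 of the global <Z...Z> = prod_j cos(theta_j) cos(x_j)."""
    rest = np.prod(np.cos(theta[..., 1:]) * np.cos(x[1:]), axis=-1)
    return -np.sin(theta[..., 0]) * np.cos(x[0]) * rest

def grad_local(theta, x, a, b):
    """d/d theta_a of <Z_a Z_b> = cos(theta_a) cos(x_a) cos(theta_b) cos(x_b)."""
    return (-np.sin(theta[..., a]) * np.cos(x[a])
            * np.cos(theta[..., b]) * np.cos(x[b]))

rng = np.random.default_rng(2)
for n in (2, 4, 8, 12):
    x = rng.uniform(-np.pi, np.pi, n)                  # one random data point
    theta = rng.uniform(-np.pi, np.pi, (200_000, n))   # random QNN parameters
    a, b = n // 2 - 1, n // 2                          # middle pair of qubits
    pred_global = 0.5 ** n * np.prod(np.cos(x) ** 2)
    pred_local = 0.25 * np.cos(x[a]) ** 2 * np.cos(x[b]) ** 2
    print(n, grad_global(theta, x).var(), pred_global,
          grad_local(theta, x, a, b).var(), pred_local)
```

For n = 2 the middle pair is the whole register, so the two formulas coincide; for larger n only the global variance acquires the factor 2^{−n}.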

Here we note that the presence of BPs can be further characterized through the spectrum of the empirical FI matrix (Abbas et al. 2021). As mentioned in Section 3.3, in a BP, the magnitudes of the eigenvalues of the empirical FI matrix will decrease exponentially as the number of qubits increases. In Fig. 5a and c, we plot the trace of the empirical FI matrix versus the number of qubits for the same structured and unstructured datasets of Fig. 4. As expected, the trace decreases exponentially with the problem size when using a global measurement due to the loss function exhibiting a BP. While the trace of the empirical FI provides a coarse-grained study of the eigenvalues, we also show in Fig. 5b and d representative results for the eigenvalue spectrum distributions of the empirical FI matrix. One can see here that all eigenvalues become exponentially vanishing with increasing system size.
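The decay of the FI trace can be reproduced in the same product-state toy model. This Python sketch rests on two assumptions: the empirical FI matrix is taken in the standard form (1/N) Σ_i ∇ log p_i ∇ log p_iᵀ (Eq. 12 may differ in details), and the probability of the true label y_i ∈ {±1} is modelled as p_i = (1 + y_i⟨Z⊗n⟩)/2, a hypothetical stand-in for Eqs. 7–9. The trace is then (1/N) Σ_i ‖∇ log p_i‖².

```python
import numpy as np

def fisher_trace(theta, X, y):
    """Trace of (1/N) sum_i grad log p_i grad log p_i^T in the product-state toy model."""
    C = np.cos(theta) * np.cos(X)          # <Z_j> for each data point, shape (N, n)
    S = -np.sin(theta) * np.cos(X)         # d<Z_j>/d theta_j
    f = np.prod(C, axis=1)                 # global <Z...Z> for each data point
    grad_f = np.stack([S[:, nu] * np.prod(np.delete(C, nu, axis=1), axis=1)
                       for nu in range(X.shape[1])], axis=1)
    p = (1 + y * f) / 2                    # hypothetical probability of the true label
    grad_logp = (y / 2)[:, None] * grad_f / p[:, None]
    return np.mean(np.sum(grad_logp ** 2, axis=1))

rng = np.random.default_rng(3)
traces = {}
for n in (2, 4, 6, 8, 10):
    X = rng.uniform(-np.pi, np.pi, (10 * n, n))        # N = 10n random data points
    y = rng.choice([-1, 1], size=10 * n)               # random +/-1 labels
    traces[n] = np.mean([fisher_trace(rng.uniform(-np.pi, np.pi, n), X, y)
                         for _ in range(200)])         # average over 200 parameter sets
    print(n, traces[n])
```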

Trace and spectrum of the Fisher Information matrix in a BP. The top panels correspond to an unstructured (random) dataset, with the bottom to a structured (MNIST) dataset. In both cases, the data is encoded using the CHE scheme and then sent through the tensor product QNN with a global measurement. In (a) and (c), we plot the variance of the partial derivatives of the log-likelihood loss function, and the expectation value of the trace of the empirical FI matrix versus the number of qubits n. Here, the expectation values are taken over 200 different sets of QNN parameters. In (b) and (d), we show the eigenvalue distribution of the empirical FI matrix for increasing numbers of qubits

Our results here show that even for a trivial QNN, and independently of the dataset and embedding scheme, global measurements in the loss function lead to exponentially small gradients, and thus to BPs in QML models. Moreover, we have also verified that the eigenvalues of the empirical FI matrix are, as expected from Corollary 2, exponentially small in a BP, showing that an exponential number of shots are needed to accurately estimate the matrix elements, eigenvalues, and trace of the empirical FI matrix. This precludes the possibility of efficiently estimating quantities such as the normalized empirical FI matrix \(\tilde {F}(\boldsymbol {\theta })/\text {Tr}[\tilde {F}(\boldsymbol {\theta })]\) (Abbas et al. 2021).

4.2 Dataset and embedding-induced barren plateaus

Here we numerically study how the embedding scheme and the dataset can potentially lead to trainability issues. Specifically, we recall from Section 3.2 that highly entangling embedding schemes can lead to reduced states being concentrated around the maximally mixed state, which makes local gates harder to train. To check how close the reduced states at the output of the CHE and HEE schemes are to the maximally mixed state, we compute the Hilbert-Schmidt distance \(D_{\text{HS}}(\rho _{i}^{(2)},\mathbb{1}/4)\) between the maximally mixed state \(\mathbb{1}/4\) and the reduced state \(\rho _{i}^{(2)}\) of the central two qubits, and we average it over 2000 data points from a structured (MNIST) and an unstructured (random) dataset.
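The diagnostic can be sketched in Python. Two assumptions are made: the distance is taken as D_HS(ρ, σ) = Tr[(ρ − σ)²], and Haar-random n-qubit states serve as a stand-in for the outputs of a highly entangling embedding (they are not CHE or HEE states). For a normalised two-qubit ρ, D_HS(ρ, 𝟙/4) = Tr[ρ²] − 1/4, so a pure product state such as a TPE output gives 3/4, while the average purity (d_A + d_B)/(d_A d_B + 1) of a Haar-random marginal, with d_A = 4 and d_B = 2^{n−2}, gives an average distance of 15/(4(2^n + 1)), which vanishes exponentially. Function names are hypothetical.

```python
import numpy as np

def reduced_central_two(psi, n):
    """Reduced density matrix of qubits n//2 - 1 and n//2 of an n-qubit pure state."""
    a, b = n // 2 - 1, n // 2
    t = np.moveaxis(psi.reshape((2,) * n), [a, b], [0, 1]).reshape(4, -1)
    return t @ t.conj().T                        # trace out the other n - 2 qubits

def hs_distance_to_mixed(rho):
    """D_HS(rho, 1/d) = Tr[(rho - 1/d)^2]."""
    d = rho.shape[0]
    delta = rho - np.eye(d) / d
    return float(np.real(np.trace(delta @ delta)))

def haar_state(n, rng):
    """Haar-random pure state: normalised complex Gaussian vector."""
    v = rng.normal(size=2 ** n) + 1j * rng.normal(size=2 ** n)
    return v / np.linalg.norm(v)

def tpe_state(x):
    """Product state prod_j R_x(x_j)|0> with R_x(t) = exp(-i t X / 2)."""
    psi = np.array([1.0 + 0j])
    for xj in x:
        psi = np.kron(psi, np.array([np.cos(xj / 2), -1j * np.sin(xj / 2)]))
    return psi

rng = np.random.default_rng(4)
for n in (4, 6, 8, 10):
    d_tpe = hs_distance_to_mixed(reduced_central_two(tpe_state(rng.uniform(-np.pi, np.pi, n)), n))
    d_haar = np.mean([hs_distance_to_mixed(reduced_central_two(haar_state(n, rng), n))
                      for _ in range(200)])
    print(n, d_tpe, d_haar, 15 / (4 * (2 ** n + 1)))
```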


Results are shown in Fig. 6a and d, where we plot the average Hilbert-Schmidt distance \(D_{\text{HS}}(\rho _{i}^{(2)},\mathbb{1}/4)\) versus the number of qubits for the CHE scheme with different numbers of layers. As expected, increasing the number of layers in the embedding leads to higher entanglement in the encoded states ρi, and thus to reduced states that are closer to the maximally mixed state. Moreover, the structure of the dataset also plays a role in the mixedness of the reduced states, as the Hilbert-Schmidt distances for the unstructured random dataset can be up to one order of magnitude smaller than those for the structured dataset.

Effect of CHE scheme with local measurement on trainability. The top panels correspond to an unstructured (random) dataset, with the bottom to a structured (MNIST) dataset. In all cases, we used the CHE scheme with different numbers of layers. Panels (a) and (d) show the Hilbert-Schmidt distance \(D_{\text{HS}}(\rho _{i}^{(2)},\mathbb{1}/4)\) versus the number of qubits. Here, \(\rho ^{(2)}_{i}\) is the reduced state of the central two qubits. Panels (b) and (e) show the variances of the partial derivative of the log-likelihood loss function versus the number of qubits n for the tensor product QNN, with panels (c) and (f) for a QCNN. We also plot as reference the variances using the non-entangling TPE scheme when the loss is evaluated over the dataset and over a single data point

In Fig. 6, we also show the variance of the log-likelihood loss function partial derivative as a function of the number of qubits and the number of CHE layers. Here we use both the tensor product QNN (panels b and e) and the QCNN (panels c and f), and we compute local expectation values of Z⊗2. Moreover, here the dataset is composed of N = 10n points, and the variance is taken by averaging over 200 sets of random QNN parameters. Since both the tensor product QNN with local cost and the QCNN are not expected to exhibit BPs with no training data and separable input states (see Cerezo et al. 2021 and Pesah et al. 2021, respectively), any unfavorable scaling arising here will be due to the structure of the data or the embedding scheme. To ensure this is the case, we plot two additional quantities as references. The first is obtained for the case when the embedding scheme is simply replaced with the TPE, representing the scenario of a non-entangling encoder. In the second, we also use the TPE encoder but rather than computing the loss function over the whole dataset, we only compute it over a single data point, i.e., \({\mathscr{L}}_{\log }(\boldsymbol {\theta })=-\log p_{i}\). Then, for this single-data point loss function, we study the scaling of the partial derivative variance and we finally average over the dataset, i.e., \({\sum }_{i} \text {Var}[\partial _{\nu } \log p_{i}]/N\). This allows us to characterize the effect of the size and the randomness associated with the dataset.

For the unstructured random dataset, \(\text {Var}[\partial _{\nu } {\mathscr{L}}(\boldsymbol {\theta })]\) appears to vanish exponentially for both QNNs and for all considered numbers of CHE layers. This suggests that the randomness in the dataset ultimately translates into randomness in the loss function, and hence into a BP. For the structured dataset, \(\text {Var}[\partial _{\nu } {\mathscr{L}}(\boldsymbol {\theta })]\) does not exhibit an exponentially vanishing behavior for a small number of CHE layers. However, as the depth of the embedding increases, the variances become smaller. In particular, when using a QCNN, increasing the number of CHE layers appears to push the scaling of \(\text {Var}[\partial _{\nu } {\mathscr{L}}(\boldsymbol {\theta })]\) towards an exponentially vanishing behavior. Finally, we observe that the variance of the loss function constructed from a single data point is always larger than that of the loss constructed from N data points. This indicates that the larger the dataset, the smaller the variance.

To further study this phenomenon, in Fig. 7, we repeat the calculations of Fig. 6 using the HEE scheme instead. That is, we show the scaling of \(D_{\text{HS}}(\rho _{i}^{(2)},\mathbb{1}/4)\) and \(\text {Var}[\partial _{\nu } {\mathscr{L}}(\boldsymbol {\theta })]\) versus the number of qubits for the HEE scheme with different numbers of layers and for structured (MNIST) and unstructured (random) datasets.

Effect of HEE scheme with local measurement on trainability. The top panels correspond to an unstructured (random) dataset, with the bottom to a structured (MNIST) dataset. In all cases, we use the HEE scheme with increasing number of layers. Panels (a) and (d) show the Hilbert-Schmidt distance \(D_{\text{HS}}(\rho _{i}^{(2)},\mathbb{1}/4)\) versus the number of qubits. Here, \(\rho ^{(2)}_{i}\) is the reduced state of the central two qubits. Panels (b) and (e) show the variances of the partial derivative of the log-likelihood loss function versus the number of qubits n for the tensor product QNN, with panels (c) and (f) for a QCNN. We also plot as reference the variances using the non-entangling TPE scheme when the loss is evaluated over the dataset and over a single data point

Here, the effect of the entangling power of the embedding on the Hilbert-Schmidt distance \(D_{\text{HS}}(\rho _{i}^{(2)},\mathbb{1}/4)\) can be seen in panels a and d of Fig. 7. As the number of HEE layers increases (and thus also its entangling power; Holmes et al. 2021), the distance to the maximally mixed state vanishes exponentially with the system size. Independently of the structure of the dataset, the large entangling power of the embedding scheme leads to states that are essentially maximally mixed on any reduced pair of qubits. As seen in Fig. 7b, c, e, and f, this then translates into an exponentially vanishing \(\text {Var}[\partial _{\nu } {\mathscr{L}}(\boldsymbol {\theta })]\) and thus a BP.

These results indicate that the choice of dataset and embedding method can have a significant effect on the trainability of the QML model. Specifically, QNNs that have no BPs when trained on trivial input states can have exponentially vanishing gradients arising from either the structure of the dataset, or the large entangling power of the embedding scheme. Moreover, these results show that the Hilbert-Schmidt distance can be used as an indicator of how much the embedding can potentially worsen the trainability of the model.

4.3 Practical usefulness of the embedding scheme and local measurements

As discussed in Section 3.2, a good embedding satisfies (at least) the following three criteria: classically hard to simulate, practical usefulness, not inducing trainability issues. In the previous sections, we have studied how the trainability of the model can be affected by the embedding choice and dataset. Here we point out another subtlety, namely, that “classically-hard-to-simulate” and “practical usefulness” do not always coincide. In particular, we show here that the CHE scheme can lead to poor performance on a standard benchmarking task.

For this purpose, we choose the QCNN architecture, which uses a local measurement and is known to not exhibit a BP (Pesah et al. 2021), to solve the task of classifying handwritten digits “0” and “1” from the MNIST dataset (see also Hur et al. 2021 for a similar study using QCNNs for MNIST classification). Then, we compare two choices for the embedding scheme. The first is a classically simulable scheme given by a single layer of the HEE, whilst the other is the (conjectured) classically hard to simulate two-layered CHE.

To make this comparison fair, both encoders are used in an identical setting: a QCNN implemented on n = 8 qubits, measurement of the expectation value of Z⊗2, the log-likelihood loss function, and training and testing datasets of sizes 400 and 40, respectively. In all cases, the classical optimizer used is ADAM (Kingma and Ba 2015) with a 0.02 learning rate. For each training iteration, the expectation value measured from the QML model is fed into an additional classical node with a \(\tanh \) activation function.
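The optimizer settings above can be sketched in Python. The sketch implements the standard ADAM update of Kingma and Ba (2015) with the quoted 0.02 learning rate; the decay rates β1 = 0.9, β2 = 0.999 and ε = 10⁻⁸ are the usual defaults and are an assumption here, as is the hypothetical form tanh(w⟨O⟩ + b) of the classical node. The toy fit of a single expectation value stands in for the full QCNN training loop.

```python
import numpy as np

class Adam:
    """Minimal ADAM optimizer (Kingma and Ba 2015); default decay rates are assumed."""
    def __init__(self, lr=0.02, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def step(self, params, grad):
        if self.m is None:
            self.m, self.v = np.zeros_like(params), np.zeros_like(params)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad       # first moment
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2  # second moment
        m_hat = self.m / (1 - self.beta1 ** self.t)                  # bias corrections
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

def tanh_node(expval, w, b):
    """Hypothetical classical node applied to the measured expectation value."""
    return np.tanh(w * expval + b)

opt = Adam(lr=0.02)                  # learning rate quoted in the text
params = np.array([1.0, 0.0])        # hypothetical node weight w and bias b
expval, target = 0.5, 0.6            # toy expectation value and target output
for _ in range(2000):
    out = tanh_node(expval, *params)
    common = 2 * (out - target) * (1 - out ** 2)   # d(out - target)^2 / d(pre-activation)
    grad = np.array([common * expval, common])     # gradients for w and b
    params = opt.step(params, grad)
```

In the full model, the gradient of the loss with respect to the QNN parameters would additionally flow through the expectation value, for instance via the parameter shift rule.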

In Fig. 8, we show the training loss function and test accuracy versus the number of iterations for 10 different optimization runs using each encoder. We observe that, despite being classically simulable, the model with HEE performs significantly better (above 90% test accuracy) than the model with CHE (around 65% accuracy) in both training and testing for this specific task. Hence, we see a particular embedding example where hard-to-simulate does not translate into practical usefulness.

Loss function (a) and test accuracy (b) versus number of iterations for MNIST classification using a QCNN. We train the QML model with n = 8 qubits using two different embedding schemes (1 HEE layer and 2 CHE layers) for the task of binary classification between digits “0” and “1” from the MNIST dataset. Solid lines represent the average over 10 instances of different initial parameters, and shaded areas represent the range over these instances

We emphasize that this result should not be interpreted as the CHE scheme being generally unfavorable for practical purposes. Rather, it indicates that additional care should be taken when choosing encoders to suit specific tasks, and it highlights the challenge of designing encoders that satisfy all necessary criteria for achieving a quantum advantage.

5 Implications for the literature

Here we briefly summarize the implications of our results for the QML literature, specifically the literature on training quantum neural networks.

First, we have shown that features deemed detrimental to training linear cost functions in the VQA framework will also lead to trainability issues in QML models. This is particularly relevant to the use of global observables such as measuring the parity of the output bitstrings on all qubits, which have been employed in the QML literature. We remark that there is no a priori reason to consider the global parity. One could instead just measure a subset of qubits and assign labels via local parities, or even average the local parities across subsets of qubits. As shown in our numerics section, local parity measurements are practically useful and one can use them to optimize the model and achieve small training and generalization errors.

Second, our results indicate that QML models can exhibit scaling issues due to the dataset. Specifically, when the input states to the QNN have large amounts of entanglement, then the QNN’s parameters can become harder to train as local states will be concentrated around the maximally mixed state. This is particularly relevant when dealing with classical data, as here one has the freedom to choose the embedding. This points to the fact that the choice of embedding needs to be carefully considered and that trainability-aware encoders should be prioritized and developed.

Unfortunately for the field of QML, data embeddings cannot solve BPs by themselves. In other words, as proven here, the choice of embedding cannot in practice mitigate the effect of BPs or prevent a BP that would otherwise exist for the QNN. For instance, the embedding cannot prevent a BP arising from the use of a global measurement, or from the use of a QNN that forms a 2-design. Hence, while embeddings can lead to a novel source of BPs, they cannot cure a BP that a particular QNN suffers from.

Finally, we show that optimizers relying on the FI matrix, such as natural gradient descent, require an exponential number of measurement shots to be useful in a BP. This is due to the fact that the matrix elements of the empirical FI matrix are exponentially small in a BP. Hence, quantities such as the normalized empirical FI matrix, which has been employed in the literature, are also inaccessible without incurring an exponential cost.

6 Discussion

Quantum machine learning (QML) has received significant attention due to its potential for accelerating data analysis using quantum computers. A near-term approach to QML is to train the parameters of a quantum neural network (QNN), which consists of a parametrized quantum circuit, in order to minimize a loss function associated with some data set. The data can either be quantum or classical (as shown in Fig. 1), with classical data requiring a quantum embedding map. While this novel, general paradigm for data analysis is exciting, there are still very few theoretical results studying the scalability of QML. The lack of theoretical results motivates our work, in which we focused on the trainability and gradient scaling of QNNs.

In the context of trainability, most of the previous results have been derived for the field of variational quantum algorithms (VQAs). While VQAs and QML models share some similarities in that both train parametrized quantum circuits, there are some key differences that make it difficult to directly apply VQA trainability results to the QML setting. In this work, we bridged the gap between the VQA and QML frameworks by rigorously showing that gradient scaling results from VQAs will hold in a QML setting. This involved connecting the gradients of linear cost functions to those of mean squared error and log-likelihood cost functions.

In light of our results, many QML proposals in the literature would need to be revised, if they aim to be scalable. For instance, we rigorously proved that features deemed detrimental for VQAs, such as global measurements or deep unstructured (and thus highly entangling) ansatzes, should also be avoided in QML settings. These results hold regardless of the data embedding, and hence one cannot expect the data embedding to solve a barren plateau issue associated with a QNN.

Moreover, due to the use of datasets, we discovered a novel source for barren plateaus in QML loss functions. We refer to this as a dataset-induced barren plateau (DIBP). DIBPs are particularly relevant when dealing with classical data, as here additional care must be taken when choosing an embedding scheme. A poor embedding choice could lead to a DIBP. Until now, a “good” embedding was one that is classically hard to simulate and practically useful. However, our results show that a third criterion must be added for the encoder: not inducing gradient scaling issues. This paves the way towards the development of trainability-aware embedding schemes.

Our numerical simulations verify the DIBP phenomenon, as therein we show how the gradient scaling can be greatly affected both by the structure of the dataset, as well as by the choice of the embedding scheme. Furthermore, our results illustrate another subtlety that arises when using classical data, as the classically-hard-to-simulate embedding of Havlíček et al. (2019) leads to large generalization error on a standard MNIST classification task. Thus, “classically-hard-to-simulate” and “practical usefulness” of an encoder do not always coincide.

Taken together, our results illuminate some subtleties in training QNNs in QML models, and show that more work needs to be done to guarantee that QML schemes will be trainable, and thus useful for practical applications. Finally, although it is critically important to study and ensure the trainability of QNNs, since an untrained model renders the rest of the pipeline useless, other fundamental aspects such as generalization (Caro et al. 2022a, 2022b; Jerbi et al. 2023) and local minima (Anschuetz et al. 2022) must also be explored to achieve a quantum advantage.

Data availability

Codes and numerical data used in this work are available upon further request.

References

Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M et al (2016) Tensorflow: a system for large-scale machine learning. In: 12th USENIX symposium on operating systems design and implementation (OSDI 16), pp 265–283

Abbas A, Sutter D, Zoufal C, Lucchi A, Figalli A, Woerner S (2021) The power of quantum neural networks. Nat Comput Sci 1(6):403–409

Aharonov D, Cotler J, Qi X-L (2022) Quantum algorithmic measurement. Nat Commun 13(1):1–9

Amari S-I (1998) Natural gradient works efficiently in learning. Neural Comput 10(2):251–276

Anschuetz E, Olson J, Aspuru-Guzik A, Cao Y (2019) Variational quantum factoring. In: International Workshop on Quantum Technology and Optimization Problems. Springer, pp 74–85

Anschuetz ER et al (2022) Quantum variational algorithms are swamped with traps. Nat Commun 13:7760

Arrasmith A, Cerezo M, Czarnik P, Cincio L, Coles P J (2021) Effect of barren plateaus on gradient-free optimization. Quantum 5:558

Arrasmith A, Holmes Z, Cerezo M, Coles P J (2021) Equivalence of quantum barren plateaus to cost concentration and narrow gorges. arXiv:2104.05868

Banchi L, Pereira J, Pirandola S (2021) Generalization in quantum machine learning: a quantum information perspective. PRX Quantum 2(4):040321

Bausch J (2020) Recurrent quantum neural networks. arXiv:2006.14619

Beer K, Bondarenko D, Farrelly T, Osborne T J, Salzmann R, Scheiermann D, Wolf R (2020) Training deep quantum neural networks. Nat Commun 11(1):808

Biamonte J, Wittek P, Pancotti N, Rebentrost P, Wiebe N, Lloyd S (2017) Quantum machine learning. Nature 549(7671):195–202

Bilkis M, Cerezo M, Verdon G, Coles P J, Cincio L (2021) A semi-agnostic ansatz with variable structure for quantum machine learning. arXiv:2103.06712

Brandao FGSL, Horodecki M (2010) On Hastings’ counterexamples to the minimum output entropy additivity conjecture. Open Syst Inform Dyn 17(01):31–52

Bravo-Prieto C, LaRose R, Cerezo M, Subasi Y, Cincio L, Coles P (2019) Variational quantum linear solver. arXiv:1909.05820

Bremner M J, Jozsa R, Shepherd D J (2011) Classical simulation of commuting quantum computations implies collapse of the polynomial hierarchy. Proc R Soc A: Math Phys Eng Sci 467(2126):459–472

Caro MC et al (2022a) Out-of-distribution generalization for learning quantum dynamics. arXiv:2204.10268

Caro MC et al (2022b) Generalization in quantum machine learning from few training data. Nat Commun 13:4919

Cerezo M, Arrasmith A, Babbush R, Benjamin S C, Endo S, Fujii K, McClean J R, Mitarai K, Yuan X, Cincio L, Coles P J (2021) Variational quantum algorithms. Nat Rev Phys 3(9):625–644

Cerezo M, Coles P J (2021) Higher order derivatives of quantum neural networks with barren plateaus. Quant Sci Technol 6(2):035006

Cerezo M, Sone A, Volkoff T, Cincio L, Coles P J (2021) Cost function dependent barren plateaus in shallow parametrized quantum circuits. Nat Commun 12(1):1–12

Cirstoiu C, Holmes Z, Iosue J, Cincio L, Coles P J, Sornborger A (2020) Variational fast forwarding for quantum simulation beyond the coherence time. npj Quant Inform 6(1):1–10

Commeau B, Cerezo M, Holmes Z, Cincio L, Coles P J, Sornborger A (2020) Variational Hamiltonian diagonalization for dynamical quantum simulation. arXiv:2009.02559

Cong I, Choi S, Lukin M D (2019) Quantum convolutional neural networks. Nat Phys 15(12):1273–1278

Du Y, Huang T, You S, Hsieh M-H, Tao D (2020) Quantum circuit architecture search: error mitigation and trainability enhancement for variational quantum solvers. arXiv:2010.10217

Du Y, Tu Z, Yuan X, Tao D (2022) An efficient measure for the expressivity of variational quantum algorithms. Phys Rev Lett 128(8):080506

Endo S, Sun J, Li Y, Benjamin S C, Yuan X (2020) Variational quantum simulation of general processes. Phys Rev Lett 125(1):010501

Farhi E, Neven H (2018) Classification with quantum neural networks on near term processors. arXiv:1802.06002

Grimsley H R, Economou S E, Barnes E, Mayhall N J (2019) An adaptive variational algorithm for exact molecular simulations on a quantum computer. Nat Commun 10(1):1–9

Haug T, Kim MS (2021) Optimal training of variational quantum algorithms without barren plateaus. arXiv:2104.14543

Havlíček V, Córcoles A D, Temme K, Harrow A W, Kandala A, Chow J M, Gambetta J M (2019) Supervised learning with quantum-enhanced feature spaces. Nature 567(7747):209–212

Holmes Z, Arrasmith A, Yan B, Coles P J, Albrecht A, Sornborger A T (2021) Barren plateaus preclude learning scramblers. Phys Rev Lett 126(19):190501

Holmes Z, Sharma K, Cerezo M, Coles P J (2021) Connecting ansatz expressibility to gradient magnitudes and barren plateaus. PRX Quant 3:010313

Huang H-Y, Bharti K, Rebentrost P (2019) Near-term quantum algorithms for linear systems of equations. arXiv:1909.07344

Huang H-Y, Broughton M, Mohseni M, Babbush R, Boixo S, Neven H, McClean J R (2021) Power of data in quantum machine learning. Nat Commun 12(1):1–9

Huang H-Y, Kueng R, Preskill J (2021) Information-theoretic bounds on quantum advantage in machine learning. Phys Rev Lett 126:190505

Hubregtsen T, Wierichs D, Gil-Fuster E, Derks P-JHS, Faehrmann P K, Meyer J J (2021) Training quantum embedding kernels on near-term quantum computers. arXiv:2105.02276

Huembeli P, Dauphin A (2021) Characterizing the loss landscape of variational quantum circuits. Quant Sci Technol 6(2):025011

Hur T, Kim L, Park D K (2021) Quantum convolutional neural network for classical data classification. arXiv:2108.00661

Jerbi S et al (2023) The power and limitations of learning quantum dynamics incoherently. arXiv:2303.12834

Jolliffe I (2005) Principal component analysis. In: Encyclopedia of Statistics in Behavioral Science

Khatri S, LaRose R, Poremba A, Cincio L, Sornborger A T, Coles P J (2019) Quantum-assisted quantum compiling. Quantum 3:140

Kiani B T, De Palma G, Marvian M, Liu Z-W, Lloyd S (2021) Quantum earth mover’s distance: a new approach to learning quantum data. arXiv:2101.03037

Kieferova M, Carlos O M, Wiebe N (2021) Quantum generative training using Rényi divergences. arXiv:2106.09567

Kingma D P, Ba J (2015) Adam: a method for stochastic optimization. In: Proceedings of the 3rd International Conference on Learning Representations (ICLR)

Kübler J M, Buchholz S, Schölkopf B (2021) The inductive bias of quantum kernels. Adv Neur Inform Process Syst 34:12661–12673

Larocca M, Czarnik P, Sharma K, Muraleedharan G, Coles P J, Cerezo M (2021) Diagnosing barren plateaus with tools from quantum optimal control. arXiv:2105.14377

Larocca M, Ju N, García-Martín D, Coles P J, Cerezo M (2021) Theory of overparametrization in quantum neural networks. arXiv:2109.11676

LaRose R, Coyle B (2020) Robust data encodings for quantum classifiers. Phys Rev A 102(3):032420

LeCun Y (1998) The MNIST database of handwritten digits. http://yann.lecun.com/exdb/mnist/

Ledoux M (2001) The concentration of measure phenomenon. Mathematical Surveys and Monographs, vol 89. American Mathematical Society

Li Y, Benjamin S C (2017) Efficient variational quantum simulator incorporating active error minimization. Phys Rev X 7:021050

Liu Y, Arunachalam S, Temme K (2021) A rigorous and robust quantum speed-up in supervised machine learning. Nat Phys, 1–5

Liu Z, Yu L-W, Duan L-M, Deng D-L (2021) The presence and absence of barren plateaus in tensor-network based machine learning. arXiv:2108.08312

Lloyd S, Schuld M, Ijaz A, Izaac J, Killoran N (2020) Quantum embeddings for machine learning. arXiv:2001.03622

Marrero C O, Kieferová M, Wiebe N (2020) Entanglement induced barren plateaus. PRX Quant 2(4):040316

McClean J R, Boixo S, Smelyanskiy V N, Babbush R, Neven H (2018) Barren plateaus in quantum neural network training landscapes. Nat Commun 9(1):1–6

Mitarai K, Negoro M, Kitagawa M, Fujii K (2018) Quantum circuit learning. Phys Rev A 98(3):032309

Patti T L, Najafi K, Gao X, Yelin S F (2021) Entanglement devised barren plateau mitigation. Phys Rev Res 3(3):033090

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Perrier E, Youssry A, Ferrie C (2021) QDataset: quantum datasets for machine learning. arXiv:2108.06661

Peruzzo A, McClean J, Shadbolt P, Yung M-H, Zhou X-Q, Love P J, Aspuru-Guzik A, O’Brien J L (2014) A variational eigenvalue solver on a photonic quantum processor. Nat Commun 5(1):1–7

Pesah A, Cerezo M, Wang S, Volkoff T, Sornborger A T, Coles P J (2021) Absence of barren plateaus in quantum convolutional neural networks. Phys Rev X 11(4):041011

Preskill J (2018) Quantum computing in the NISQ era and beyond. Quantum 2:79

Schatzki L, Arrasmith A, Coles P J, Cerezo M (2021) Entangled datasets for quantum machine learning. arXiv:2109.03400

Schuld M, Bergholm V, Gogolin C, Izaac J, Killoran N (2019) Evaluating analytic gradients on quantum hardware. Phys Rev A 99(3):032331

Schuld M, Bocharov A, Svore K M, Wiebe N (2020) Circuit-centric quantum classifiers. Phys Rev A 101(3):032308

Schuld M, Sinayskiy I, Petruccione F (2014) The quest for a quantum neural network. Quantum Inf Process 13(11):2567–2586

Schuld M, Sinayskiy I, Petruccione F (2015) An introduction to quantum machine learning. Contemp Phys 56(2):172–185

Sharma K, Cerezo M, Cincio L, Coles P J (2022) Trainability of dissipative perceptron-based quantum neural networks. Phys Rev Lett 128(18):180505

Sharma K, Khatri S, Cerezo M, Coles P J (2020) Noise resilience of variational quantum compiling. New J Phys 22(4):043006

Tangpanitanon J, Thanasilp S, Dangniam N, Lemonde M-A, Angelakis D G (2020) Expressibility and trainability of parametrized analog quantum systems for machine learning applications. Phys Rev Res 2(4):043364

Thanasilp S et al (2022) Exponential concentration and untrainability in quantum kernel methods. arXiv:2208.11060

Thumwanit N, Lortararprasert C, Yano H, Raymond R (2021) Trainable discrete feature embeddings for variational quantum classifier. arXiv:2106.09415

Verdon G, Marks J, Nanda S, Leichenauer S, Hidary J (2019) Quantum Hamiltonian-based models and the variational quantum thermalizer algorithm. arXiv:1910.02071

Wang S, Czarnik P, Arrasmith A, Cerezo M, Cincio L, Coles P J (2021) Can error mitigation improve trainability of noisy variational quantum algorithms? arXiv:2109.01051

Wang S, Fontana E, Cerezo M, Sharma K, Sone A, Cincio L, Coles P J (2021) Noise-induced barren plateaus in variational quantum algorithms. Nat Commun 12(1):1–11

Xu X, Sun J, Endo S, Li Y, Benjamin S C, Yuan X (2021) Variational algorithms for linear algebra. Sci Bull 66(21):2181–2188

Zhao C, Gao X-S (2021) Analyzing the barren plateau phenomenon in training quantum neural network with the ZX-calculus. arXiv:2102.01828

Acknowledgements

We thank Michael Grosskopf, Julia Nakhleh, Amira Abbas, Christa Zoufal, and David Sutter for helpful discussions. ST and NAG were supported by the US DOE through a quantum computing program sponsored by the Los Alamos National Laboratory (LANL) Information Science & Technology Institute. ST was also supported by the National Research Foundation, Prime Minister’s Office, Singapore and the Ministry of Education, Singapore under the Research Centres of Excellence programme, as well as by the Sandoz Family Foundation-Monique de Meuron program for Academic Promotion. SW was supported by the UKRI EPSRC grant no. EP/T001062/1 and the Samsung GRP grant. PJC acknowledges initial support from the Laboratory Directed Research and Development (LDRD) program of LANL under project number 20190065DR, as well as support from the U.S. DOE, Office of Science, Office of Advanced Scientific Computing Research, under the Accelerated Research in Quantum Computing (ARQC) program. MC acknowledges initial support from the Center for Nonlinear Studies at LANL, as well as support from the LDRD program of LANL under project number 20210116DR. Research presented in this article was supported by the NNSA’s Advanced Simulation and Computing Beyond Moore’s Law Program at LANL.

Funding

Open access funding provided by EPFL Lausanne

Author information

Contributions

The project was conceived by ST, SW, and MC. Analytical results were derived by ST, SW, and MC. Numerical results were obtained by ST and NAH. All authors wrote and reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix: A

In these appendices, we present further details on the results of the main text. In Appendix A, we present some supplemental lemmas. In Appendix B, we prove our main result, Theorem 1. In Appendix C, we prove Corollary 1, which discusses the implications of our main result for the mean squared error and negative log-likelihood loss functions, and in Appendix D we discuss extensions of the corollary to other machine learning settings. Finally, in Appendix F, we provide additional details on our numerical simulations not presented in the main text.

A. Supplemental lemmas

In this section, we present some supplemental lemmas that will be useful in deriving our results.

Supplemental Lemma 1 (Variance of sum of random variables)

Given a set of correlated random variables \(\{X_i\}_i\), we have

\[ \mathrm{Var}\Big[\sum_i X_i\Big] \leq \Big(\sum_i \sqrt{\mathrm{Var}[X_i]}\Big)^{2}. \]

Proof

The variance of the sum of two correlated random variables is given by

\[ \mathrm{Var}[X_1 + X_2] = \mathrm{Var}[X_1] + \mathrm{Var}[X_2] + 2\,\mathrm{Cov}(X_1, X_2). \]

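Using induction along with the fact that \(\mathrm{Cov}(X_1 + X_2, X_3) = \mathrm{Cov}(X_1, X_3) + \mathrm{Cov}(X_2, X_3)\), the variance of the full sum can be bounded as

\[ \begin{aligned} \mathrm{Var}\Big[\sum_i X_i\Big] &= \sum_i \mathrm{Var}[X_i] + \sum_{i \neq j} \mathrm{Cov}(X_i, X_j) \\ &\leq \sum_i \mathrm{Var}[X_i] + \sum_{i \neq j} \sqrt{\mathrm{Var}[X_i]\,\mathrm{Var}[X_j]} \\ &= \Big(\sum_i \sqrt{\mathrm{Var}[X_i]}\Big)^{2}, \end{aligned} \]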

where the inequality in the second line comes from the Cauchy-Schwarz inequality. □
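The bound that the pairwise Cauchy–Schwarz step yields, \(\mathrm{Var}[\sum_i X_i] \leq (\sum_i \sqrt{\mathrm{Var}[X_i]})^2\), can be sanity-checked numerically. The following minimal NumPy sketch is illustrative only: the latent-variable model that generates correlated \(X_i\) and the helper name are hypothetical choices. With empirical moments (ddof = 0) the empirical covariance is an inner product of centered samples, so the inequality holds exactly on the samples, and it is saturated when all \(X_i\) are perfectly positively correlated.

```python
import numpy as np

def variance_of_sum_and_bound(samples):
    """Return (Var[sum_i X_i], (sum_i sqrt(Var[X_i]))**2) from samples.

    samples has shape (n_draws, N); column i holds draws of X_i.
    Empirical moments use ddof=0, so Cauchy-Schwarz applies exactly.
    """
    var_of_sum = np.var(samples.sum(axis=1))
    bound = np.sqrt(np.var(samples, axis=0)).sum() ** 2
    return var_of_sum, bound

rng = np.random.default_rng(1)
n_draws, N = 10_000, 5
latent = rng.normal(size=(n_draws, 1))   # shared source of correlation
scales = np.arange(1, N + 1)             # X_i has weight i on the latent variable
correlated = latent * scales + rng.normal(size=(n_draws, N))
fully_correlated = latent * scales       # perfectly positively correlated X_i

v, b = variance_of_sum_and_bound(correlated)
v_tight, b_tight = variance_of_sum_and_bound(fully_correlated)
print(f"correlated:       Var[sum] = {v:.3f} <= bound = {b:.3f}")
print(f"fully correlated: Var[sum] = {v_tight:.3f}, bound = {b_tight:.3f}")
```

The gap between the two sides in the first case comes from the independent noise terms, which reduce the pairwise correlations below one.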

Supplemental Lemma 2 (Variance of product)

Given two correlated random variables X and Y, we have

where \(|Y^{2}|_{\max}\) is the maximum possible value of \(Y^{2}\), i.e., \(|Z|_{\max} = \max \{ |Z| : \mathrm{Pr}(Z) > 0 \}\).

Proof

We have

where in the first inequality we have used Cauchy-Schwarz, and the second inequality comes from the rearrangement inequality. Now consider

where in the first inequality we have used Eq. A10, in the second inequality we have used the definition of the variance, and in the third inequality we have simply taken the maximum value of \(Y^{2}\). □
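The quantity \(|Y^2|_{\max}\) is a maximum over outcomes of nonzero probability, not over all conceivable values. The minimal sketch below, for a hypothetical discrete joint distribution of \((X, Y)\), computes it and checks the elementary inequality \(\mathrm{Var}[XY] \leq \mathbb{E}[X^2Y^2] \leq |Y^2|_{\max}\,\mathbb{E}[X^2]\), which uses the same step of replacing \(Y^2\) by its maximum as the last inequality of the proof. This is not the statement of Supplemental Lemma 2; it only illustrates that step, and the distribution and helper names are illustrative assumptions.

```python
import numpy as np

def max_abs_over_support(values, probs):
    """|Z|_max = max{|Z| : Pr(Z) > 0} for a discrete random variable Z."""
    values = np.asarray(values, dtype=float)
    probs = np.asarray(probs, dtype=float)
    return np.abs(values[probs > 0]).max()

def expectation(values, probs):
    """E[Z] for a discrete random variable with the given outcome probabilities."""
    return float(np.sum(np.asarray(probs) * np.asarray(values)))

# Hypothetical joint distribution: row k is an outcome (x_k, y_k) with probability probs[k].
outcomes = np.array([[1.0, 0.5], [-2.0, 1.0], [0.5, -0.8], [3.0, 2.0]])
probs = np.array([0.4, 0.3, 0.3, 0.0])  # the last outcome never occurs
x, y = outcomes[:, 0], outcomes[:, 1]

var_xy = expectation((x * y) ** 2, probs) - expectation(x * y, probs) ** 2
y2_max = max_abs_over_support(y ** 2, probs)  # 1.0: the zero-probability y = 2 is excluded
bound = y2_max * expectation(x ** 2, probs)

print(f"Var[XY] = {var_xy:.4f} <= |Y^2|_max E[X^2] = {bound:.4f}")
```

Including the zero-probability outcome in the maximum would inflate \(|Y^2|_{\max}\) from 1 to 4 and loosen the bound for no reason, which is why the definition restricts to \(\mathrm{Pr}(Z) > 0\).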

Appendix: B. Proof of Theorem 1: generic loss function