Abstract

This paper narrows the gap between previous literature on quantum linear algebra and practical data analysis on a quantum computer, formalizing quantum procedures that speed-up the solution of eigenproblems for data representations in machine learning. The power and practical use of these subroutines is shown through new quantum algorithms, sublinear in the input matrix’s size, for principal component analysis, correspondence analysis, and latent semantic analysis. We provide a theoretical analysis of the run-time and prove tight bounds on the randomized algorithms’ error. We run experiments on multiple datasets, simulating PCA’s dimensionality reduction for image classification with the novel routines. The results show that the run-time parameters that do not depend on the input’s size are reasonable and that the error on the computed model is small, allowing for competitive classification performances.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Quantum computation is a computing paradigm that promises substantial speed-ups in a plethora of tasks that are computationally hard for classical computers. In 2009, Harrow, Hassidim, and Lloyd Harrow et al. (2009) presented quantum procedures to create a quantum state proportional to the solution of a linear system of equations \(\mathbf {A}\mathbf {x}=\mathbf {b}\) in time logarithmic in the size of \(\mathbf {A}\). This result has promoted further research on optimization, linear algebra, and machine learning problems, leading to faster quantum algorithms for linear regressions (Chakraborty et al. 2019), support vector machines (Rebentrost et al. 2014a), k-means (Kerenidis et al. 2019a), and many others (Biamonte et al. 2017). Following this research line, in this work, we focus on quantum algorithms for singular value based data analysis and representation. When handling big data, it is crucial to learn effective representations that reduce the data’s noise and help the learner perform better on the task. Many data representation methods for machine learning, such as principal component analysis (Partridge and Calvo 1997), correspondence analysis (Greenacre 1984), slow feature analysis (Kerenidis and Luongo 2020), or latent semantic analysis (Deerwester et al. 1990), heavily rely on singular value decomposition and are impractical to compute on classical computers for extensive datasets.

We have gathered and combined state-of-the-art quantum techniques to present a useful and easy-to-use framework for solving eigenvalue problems at large scale. While we focus on machine learning problems, these subroutines can be used for other problems that are classically solved via an SVD of a suitable matrix. More specifically, we formalize novel quantum procedures to compute classical estimates of the most relevant singular values, factor scores, factor score ratios of an \(n\times m\) matrix in time poly-logarithmic in nm, and the most relevant singular vectors sub-linearly in nm. We show how to use these procedures to obtain a classical description of the models of three machine learning algorithms: principal component analysis, correspondence analysis, and latent semantic analysis. We also discuss how to represent the data in the new feature space with a quantum computer. We provide a thorough theoretical analysis for all these algorithms bounding the run-time, the error, and the failure probability.

The remainder of the paper is organized as follows. Section 2 introduces our notation and discusses the relevant quantum preliminaries. Section 3 presents the novel quantum algorithms. In Section 4, we show applications of the algorithms to principal component analysis, correspondence analysis, and latent semantic analysis. Section 5 presents numerical experiments assessing the run-time parameters. Finally, we provide detailed information on the experiments and extensively discuss related work in quantum and classical literature in the appendix.

2 Quantum preliminaries and notation

2.1 Notation

Given a matrix \(\mathbf {A}\), we write \(\mathbf {a}_{i,\cdot }\) to denote its \(i^{th}\) row, \(\mathbf {a}_{\cdot , j}\) for its \(j^{th}\) column, and \(a_{ij}\) for the element at row i, column j. We write its singular value decomposition as \(\mathbf {A}=\mathbf {U}{{{\varvec{\Sigma }}}} \mathbf {V}^T\). \(\mathbf {U}\) and \(\mathbf {V}\) are orthogonal matrices, whose column vectors \(\mathbf {u}_i\) and \(\mathbf {v}_i\) are respectively the left and right singular vectors of \(\mathbf {A}\). \({{{\varvec{\Sigma }}}}\) is a diagonal matrix with positive, non-negative, entries \(\sigma _i\): the singular values. The row/column size of \({{{\varvec{\Sigma }}}}\) is the rank of \(\mathbf {A}\) and is denoted as r. We use \(\lambda _i\) to denote the \(i^{th}\) eigenvalue of the covariance matrix \(\mathbf {A}^T\mathbf {A}=\mathbf {V}{{{\varvec{\Sigma }}}}^2\mathbf {V}^T\), and \(\lambda ^{(i)}=\frac{\lambda _i}{\sum _j^r\lambda _j}\) to denote the relative magnitude of each eigenvalue. Using the notation of Hsu et al. (2019) for correspondence analysis, we refer to \(\lambda _i\) as factor scores and to \(\lambda ^{(i)}\) as factor score ratios. Note that \(\lambda _i=\sigma _i^2\) and \(\lambda ^{(i)}=\frac{\sigma _i^2}{\sum _j^r\sigma _j^2}\). We denote the number of non-zero elements of a matrix/vector with nnz(). Given a scalar a, |a| is its absolute value. The \(\ell _\infty\) and \(\ell _0\) norm of a vector \(\mathbf {a}\) are defined as \(\Vert {\mathbf {a}}\Vert _\infty = \max _i (\Vert {a_i}\Vert )\), \(\Vert {\mathbf {a}}\Vert _0 = nnz(\mathbf {a})\). If the vector norm is not specified, we refer to the \(\ell _2\) norm. The Frobenius norm of a matrix is \(\Vert {\mathbf {A}}\Vert _F = \sqrt{\sum _i^r \sigma _i^2}\), its spectral norm is \(\Vert {\mathbf {A}}\Vert = \max _{\mathbf {x} \in \mathbb {R}^m}\frac{\Vert {\mathbf {A}\mathbf {x}}\Vert }{\Vert {\mathbf {x}}\Vert } = \sigma _{max}\), and finally \(\Vert {\mathbf {A}}\Vert _\infty = \max _i (\Vert {\mathbf {a}_{i,\cdot }}\Vert _1)\). A contingency table is a matrix that represents categorical variables in terms of the observed frequency counts. Finally, when stating the complexity of an algorithm, we use \(\widetilde{O}\) instead of O to omit the poly-logarithmic terms on the size of the input data (i.e., \(O(\text {polylog}(nm)) = \widetilde{O}(1)\)), on the error, and the failure probability.

2.2 Quantum preliminaries

We represent scalars as states of the computational basis of \(\mathcal {H}_n\), where n is the number of bits required for binary encoding. The quantum state corresponding to a vector \(\mathbf {v} \in \mathbb {R}^m\) is defined as a state-vector \(|t{\mathbf {v}}\rangle =\frac{1}{\Vert {v}\Vert }\sum _j^m v_j |{j}\rangle\). Note that to build \(|t{\mathbf {v}}\rangle\) we need \(\lceil \log m\rceil\) qubits.

Data access

To access data in the form of state-vectors, we use the following definition of quantum access.

Definition 1

(Quantum access to a matrix) We have quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\), if there exists a data structure that allows performing the mappings \(|{i}\rangle |{0}\rangle \mapsto |{i}\rangle |{\mathbf {a}_{i, \cdot }}\rangle = |{i}\rangle \frac{1}{\Vert {\mathbf {a}_{i,\cdot }}\Vert }\sum _j^m a_{ij}|{j}\rangle\), for all i, and \(|{0}\rangle \mapsto \frac{1}{\Vert {\mathbf {A}}\Vert _F}\sum _i^n \Vert {\mathbf {a}_{i,\cdot }}\Vert |{i}\rangle\) in time \(\widetilde{O}(1)\).

By combining the two mappings we can create the state \(|{\mathbf {A}}\rangle = \frac{1}{\Vert {\mathbf {a}}\Vert _F}\sum _i^n\sum _j^m a_{ij}|{i}\rangle |{j}\rangle\) in time \(\widetilde{O}(1)\).

Kerenidis and Prakash (2017, 2020a) have described one implementation of such quantum data access. Their implementation is based on a classical data structure such that the cost of updating/deleting/inserting one element of the matrix is poly-logarithmic in the number of its entries. In addition, their structure gives access to the Frobenius norm of the matrix and the norm of its rows in time O(1). The cost of creating this data structure is \(\widetilde{O}(\text {nnz}(\mathbf {A}))\). This input model requires the existence of a QRAM (Giovannetti et al. 2008). While there has been some skepticism on the possibility of error-correcting such a complex device, recent results show that bucket-brigade QRAMs are highly resilient to generic noise (Hann et al. 2021).

Sometimes it is desirable to normalize the input matrix to have a spectral norm smaller than one. Kerenidis and Prakash (2020a) provide an efficient routine to estimate the spectral norm.

Theorem 1

(Spectral norm estimation (Kerenidis and Prakash 2020a)) Let there be quantum access to the matrix \(\mathbf {A} \in \mathbb {R} ^{n\times m}\), and let \(\epsilon >0\) be a precision parameter. There exists a quantum algorithm that estimates \(\Vert {\mathbf {A}}\Vert\) to additive error \(\epsilon \Vert {\mathbf {A}}\Vert _F\) in time \(\widetilde{O}\left( \frac{\log (1/\epsilon )}{\epsilon }\frac{\Vert {\mathbf {a}}\Vert _F}{\Vert {\mathbf {a}}\Vert }\right)\).

If we have \(\Vert {\mathbf {a}}\Vert\), we can create quantum access to \(\mathbf {A}'=\frac{\mathbf {A}}{\Vert {\mathbf {A}}\Vert } = \mathbf {U}\frac{{{{\varvec{\Sigma }}}}}{\sigma _{max}}\mathbf {V}^T\) in time \(\widetilde{O}\left( \text {nnz}(\mathbf {A})\right)\) by dividing each entry of the data structure. Once we have quantum access to a dataset, it is possible to apply a pipeline of quantum machine learning algorithms for data representation, analysis, clustering, and classification (Rebentrost et al. 2014b; Kerenidis et al. 2020a; Wang 2017; Allcock et al. 2020; Kerenidis et al. 2019a; Kerenidis and Luongo 2020). Since the cost of each step of the pipeline should be evaluated independently, we consider \(\widetilde{O}(nnz(\mathbf {A}))\) to be a pre-processing cost and do not include it in our run-times.

We conclude this section by stating a useful claim that connects errors on classical vectors with errors on quantum states.

Claim 2

(Closeness of state-vectors (Kerenidis and Prakash 2020a)) Let \(\theta\) be the angle between vectors \(\mathbf {x}, \overline{\mathbf {x}}\) and assume that \(\theta < \pi /2\). Then, \(\Vert {\mathbf {x} - \overline{\mathbf {x}}}\Vert \le \epsilon\) implies \(\Vert {|{\mathbf {x}}\rangle - |{\overline{\mathbf {x}}\rangle }}\Vert \le \sqrt{2}\frac{\epsilon }{\Vert {\mathbf {x}}\Vert }\).

Useful subroutines

We state two relevant quantum linear algebra results: quantum singular value estimation (SVE) and quantum matrix-vector multiplication.

Theorem 3

(Singular value estimation (Kerenidis and Prakash 2020a)) Let there be quantum access to \(\mathbf {A} \in \mathbb {R} ^{n\times m}\), with singular value decomposition \(\mathbf {A}=\sum _i^r\sigma _i \mathbf {u}_i\mathbf {v}_i^T\) and \(r=min(n,m)\). Let \(\epsilon >0\) be a precision parameter. It is possible to perform the mapping \(|{b}\rangle =\sum _i\alpha _i|{\mathbf {v}\rangle _i} \mapsto \sum _i\alpha _i|{\mathbf {v}\rangle _i}|{\overline{\sigma }_i}\rangle\), such that \(|{\frac{\sigma _i}{\mu (\mathbf {A})}-\overline{\sigma }_i}| \le \epsilon\) with probability at least \(1 - 1/poly(m)\), in time \(\widetilde{O}(\frac{1}{\epsilon })\) where \(\mu (\mathbf {A}) = \min \limits _{p \in [0,1]}(\Vert {\mathbf {A}}\Vert _F, \sqrt{s_{2p}(\mathbf {A})s_{2(1-p)}(\mathbf {A}^T)})\) and \(s_p(\mathbf {A}) = \max \limits _{i}\Vert {\mathbf {a}_{i,\cdot }}\Vert _p^p\). Similarly, we can have \(|{\sigma _i-\overline{\sigma }_i}|\le \epsilon\) in time \(\widetilde{O}(\frac{\mu (\mathbf {A})}{\epsilon })\).

Unlike previous results with Hamiltonian simulations (Rebentrost et al. 2014b), this algorithm enables performing conditional rotations using the singular values of a matrix without any special requirement (e.g., sparsity, being square, Hermitian, etc.). By choosing the same matrix \(|{\mathbf {A}}\rangle = \frac{1}{\Vert {\mathbf {A}}\Vert _F}\sum _i^n\sum _j^m a_{ij}|{i}\rangle |{j}\rangle = \frac{1}{\Vert {\mathbf {A}}\Vert _F}\sum _i^k\sigma _i|{\mathbf {u}\rangle _i}|{\mathbf {v}\rangle _i}\) as starting state \(|{b}\rangle\), we obtain a superposition of all the singular values entangled with the respective left and right singular vectors \(\frac{1}{\Vert {\mathbf {A}}\Vert _F}\sum _i^r \sigma _i |{\mathbf {u}\rangle _i}|{\mathbf {v}\rangle _i}|{\overline{\sigma }_i}\rangle\). In this case, the requirement \(r=\min (n,m)\) is not needed anymore as \(|{b}\rangle = |{\mathbf {A}}\rangle\) can be fully decomposed in terms of \(\mathbf {A}\)’s right singular vectors.

This algorithm uses phase estimation. In this work, we consider this algorithm to use a consistent version of phase estimation, so that the errors in the estimates of the singular values are consistent across multiple runs (Ta-Shma 2013; Kerenidis and Prakash 2020a).

Theorem 4

(Matrix-vector multiplication (Chakraborty et al. 2019) (Lemma 24, 25)) Let there be quantum access to the matrix \(\mathbf {A} \in \mathbb {R} ^{n\times n}\), with \(\sigma _{max} \le 1\), and to a vector \(\mathbf {x} \in \mathbb {R}^{n}\). Let \(\Vert {\mathbf {A}\mathbf {x}}\Vert \ge \gamma\). There exists a quantum algorithm that creates a state \(|{\mathbf {z}}\rangle\) such that \(\Vert {|{\mathbf {z}}\rangle - |{\mathbf {A}\mathbf {x}}\rangle }\Vert \le \epsilon\) in time \(\widetilde{O}(\frac{1}{\gamma }\mu (\mathbf {A})\log (1/\epsilon ))\), with probability at least \(1-1/poly(n)\). Increasing the run-time by a multiplicative factor \(\widetilde{O}\left( \frac{1}{\eta }\right)\) one can retrieve an estimate of \(\Vert {\mathbf {A}\mathbf {x}}\Vert\) to relative error \(\eta\).

Data output

Finally, to read out the quantum states, we state one version of amplitude amplification and estimation, and two state-vector tomographies.

Theorem 5

(Amplitude amplification and estimation (Brassard et al. 2002; Kerenidis et al. 2019c)) Let there be a unitary that performs the mapping \(U_x: |{0}\rangle \mapsto sin(\theta )|{\mathbf {x},0}\rangle + cos(\theta )|{\mathbf {G},0^\perp }\rangle\), where \(|{\mathbf {G}}\rangle\) is a garbage state, in time \(T(U_x)\). Then, \(sin(\theta )^2\) can be estimated to multiplicative error \(\eta\) in time \(O(\frac{T(U_x)}{\eta sin(\theta )})\) or to additive error \(\eta\) in time \(O(\frac{T(U_x)}{\eta })\), and \(|{\mathbf {x}}\rangle\) can be generated in expected time \(O(\frac{T(U_x)}{sin(\theta )})\).

Theorem 6

(\(\ell _2\) state-vector tomography (Kerenidis and Prakash 2020b; Kerenidis et al. 2019c)) Given a unitary mapping \(U_x: |{0}\rangle \mapsto |{\mathbf {x}}\rangle\) in time \(T(U_x)\) and \(\delta >0\), there is an algorithm that produces an estimate \(\overline{\mathbf {x}} \in \mathbb {R}^m\) with \(\Vert {\overline{\mathbf {x}}}\Vert = 1\) such that \(\Vert {\mathbf {x} - \overline{\mathbf {x}}}\Vert \le \delta\) with probability at least \(1 - 1/poly(m)\) in time \(O(T(U_x)\frac{m \log m}{\delta ^2})\).

Theorem 7

(\(\ell _\infty\) state-vector tomography (Kerenidis et al. 2019b)) Given access to a unitary mapping \(U_x: |{0}\rangle \mapsto |{\mathbf {x}}\rangle\) and its controlled version in time \(T(U_x)\), and \(\delta >0\), there is an algorithm that produces an estimate \(\overline{\mathbf {x}} \in \mathbb {R}^m\) with \(\Vert {\overline{\mathbf {x}}}\Vert =1\) such that \(\Vert {\mathbf {x} - \overline{\mathbf {x}}}\Vert _\infty \le \delta\) with probability at least \(1 - 1/poly(m)\) in time \(O(T(U_x)\frac{\log m}{\delta ^2})\).

3 Novel quantum methods

Building from the previous section’s techniques, we formalize a series of quantum algorithms that allow us to retrieve a classical description of the singular value decomposition of a matrix to which we have quantum access.

3.1 Estimating the quality of the representation

Algorithms such as principal component analysis and correspondence analysis are often used for visualization or dimensionality reduction purposes. These applications work better when a small subset of factor scores have high factor score ratios. We provide a fast procedure that allows verifying if this is the case: given efficient quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\), it retrieves the most relevant singular values, factor scores, and factor score ratios in time poly-logarithmic in the number of elements of \(\mathbf {A}\), with no strict dependencies on its rank.

The main intuition behind this algorithm is that it is possible to create the state \(\sum _{i}^{r} \sqrt{\lambda ^{(i)}} |{\mathbf {u}\rangle _i}|{\mathbf {v}\rangle _i}|{\overline{\sigma }_i}\rangle\). The third register, when measured in the computational basis, outputs the estimate \(\overline{\sigma }_i\) of a singular value with probability equal to its factor score ratio \(\lambda ^{(i)}\). This enables sampling the singular values of \(\mathbf {A}\) directly from the factor score ratios’ distribution. When a matrix has a huge number of small singular values and only a few of them that are very big, the ones with the greatest factor score ratios will appear many times during the measurements. In contrast, the negligible ones are not likely to be measured. This intuition has already appeared in literature (Gyurik et al. 2020; Cade and Montanaro 2018). Nevertheless, the analysis and the problem solved in these works are different from ours. In the context of data representation and analysis, this intuition has only been sketched for sparse or low rank square symmetric matrices by Lloyd et al. (2014), without a precise formalization. We thoroughly formalize it for any real matrix.

Theorem 8

(Quantum factor score ratio estimation) Let there be quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\), with singular value decomposition \(\mathbf {A} = \sum _i\sigma _i\mathbf {u}_i\mathbf {v}^T_i\). Let \(\gamma , \epsilon\) be precision parameters. There exists a quantum algorithm that runs in time \(\widetilde{O}\left( \frac{1}{\gamma ^2}\frac{\mu (\mathbf {A})}{\epsilon }\right)\) and estimates:

-

all the factor score ratios \(\lambda ^{(i)} > \gamma\), with probability at least \(1-1/\text {poly}(r)\), such that \(|{\lambda ^{(i)} - \overline{\lambda^{(i)}}}| \le 2\epsilon \frac{\sigma _i}{\Vert {A}\Vert ^2_F}\), with probability at least \(1-1/\text {poly}(n)\);

-

the corresponding singular values \(\sigma _i\), such that \(|{\sigma _i - \overline{\sigma }_i}| \le \epsilon\) with probability at least \(1-1/\text {poly}(n)\);

-

the corresponding factor scores \(\lambda _i\), such that \(|{\lambda _i - \overline{\lambda }_i}| \le 2\epsilon \sqrt{\lambda _i}\) with probability at least \(1-1/\text {poly}(n)\).

The proof consists in bounding the run-time, the error, and the probability of failure of Algorithm 1.

Proof

By the definition of quantum access, the cost of step 2 is \(\widetilde{O}(1)\). The singular value estimation in step 3 can be performed using Theorem 3 in time \(\widetilde{O}\left( \frac{\mu (\mathbf {A})}{\tau }\right)\), such that \(\Vert {\sigma _i - \overline{\sigma }_i}\Vert \le \epsilon\) with probability at least \(1-1/\text {poly}(n)\). A measurement of the third register at step 4 can output any \(\overline{\sigma }_i\) with probability \(\lambda ^{(i)} = \frac{\sigma _i^2}{\sum _j^r\sigma _j^2}\).

Theorem 7 guarantees that with \(O(1/\gamma ^2)\) measurements we can get estimates \(|{\lambda ^{(i)} - \overline{\lambda _i}}|\le \gamma\). In particular, Kerenidis et al. (2019b) estimate that \(N=36\log (r)/\gamma ^2\) measures should suffice for our goal.

Alternatively, we could consider the measurement process as performing r Bernoulli trials: one for each \(\overline{\lambda }^{(i)}\), so that if we measure \(\overline{\sigma }_i\) it is a success for the \(i^{th}\) Bernoulli trial and a failure for all the others. Given a confidence level z, it is possible to use the Wald confidence interval to determine a value for N such that \(|{\lambda ^{(i)} - \frac{\zeta _{\overline{\sigma }_i}}{N}}| \le \gamma\) with confidence level z, where \(\zeta _{\overline{\sigma }_i}\) is the number of times that \(\overline{\sigma }_i\) has appeared in the measurements. In this case, it suffice to choose \(N=\frac{z^2}{4\gamma ^2}\) [Schuld and Petruccione (2018), Section 5.1.3]. Having \(|{\lambda ^{(i)} - \overline{\lambda ^{(i)}}}|\le \gamma\) means measuring all the \(\overline{\sigma }_i\) whose factor score ratio is greater than \(\lambda\).

We now proceed with the error analysis. We can compute \(\overline{\lambda }_i=\overline{\sigma }_i^2\).

If we keep the error analysis at the first order and consider that \(\sigma _i = \sqrt{\lambda _i}\), we can conclude the bound as \(|{\lambda _i - \overline{\sigma }_i^2}| \le 2\epsilon \sqrt{\lambda _i}\). Similarly, we can compute \(\overline{\lambda^{(i)}}=\frac{\overline{\sigma }_i^2}{\Vert {A}\Vert _F^2}\).

The parameter \(\gamma\) is the one that controls how big a factor score ratio should be for the singular value/factor score to be measured. If we choose \(\gamma\) bigger than the least factor scores ratio of interest, the estimate for the smaller ones is likely to be 0, as \(|{\lambda ^{(i)}-0}|\le \gamma\) would be a plausible estimation.

Often in data representations, the cumulative sum of the factor score ratios is a measure of the quality of the representation. By slightly modifying Algorithm 1 to use Theorem 7, it is possible to estimate this sum such that \(|{\sum _i^k \lambda ^{(i)} - \sum _i^k \overline{\lambda^{(i)}}}| \le k\epsilon\) with probability \(1-1/\text {poly}(r)\). However, a slight variation of Algorithm IV.3 for spectral norm estimation in Kerenidis and Prakash (2020a) provides a more accurate estimation in less time, given a threshold \(\theta\) for the smallest singular value to retain.

Theorem 9

(Quantum check on the factor score ratios’ sum) Let there be quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\), with singular value decomposition \(\mathbf {A} = \sum _i\sigma _i\mathbf {u}_i\mathbf {v}^T_i\). Let \(\eta , \epsilon\) be precision parameters, and \(\theta\) be a threshold for the smallest singular value to consider. There exists a quantum algorithm that estimates \(p = \sum _{i: \overline{\sigma }_i \ge \theta } \lambda ^{(i)}\), where \(|{\sigma _i - \overline{\sigma }_i}| \le \epsilon\), to relative error \(\eta\) in time \(\widetilde{O}\left( \frac{\mu (\mathbf {A})}{\epsilon }\frac{1}{\eta \sqrt{p}}\right)\).

Proof

As discussed in the previous proof, the cost of preparing the state at step 2 is \(\widetilde{O}\left( \frac{\mu (\mathbf {A})}{\epsilon }\right)\). The complexity of step 3 is \(\widetilde{O}(1)\), as it is an arithmetic operation that only depends on the encoding of \(|{\overline{\sigma }_i}\rangle\). Step 4 consists in uncomputing step 2 and has its same cost. Finally, the cost of amplitude estimation, with relative precision \(\eta\), on the last register being \(|{0}\rangle\) is equal to \(O\left( T(U_{4})\frac{1}{\eta \sqrt{p}}\right)\), where \(p = \frac{\sum _{i: \overline{\sigma }_i \ge \theta } \sigma _i^2}{\sum _j^r \sigma _j^2}\) is the probability of measuring \(|{0}\rangle\) (Theorem 5). The overall complexity is proven: \(\widetilde{O}\left( \frac{\mu (\mathbf {A})}{\epsilon }\frac{1}{\eta \sqrt{p}}\right) .\)

Since the sum of factor score ratios p is a measure of the representation quality, in problems such as PCA, CA, and LSA, this is usually a constant number bigger than 0 (i.e., often in practice, \(p \in [0.3, 1]\)). This makes the term \(\sqrt{p}\) negligible in most of the practical applications. Moreover, we further modify Algorithm IV.3 to perform a binary search of \(\theta\) given the desired sum of factor score ratios.

Theorem 10

(Quantum binary search for the singular value threshold) Let there be quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\). Let \(\eta , \epsilon\) be precision parameters, and \(\theta\) be a threshold for the smallest singular value to consider. Let \(p \in [0,1]\) be the factor score ratios sum to retain. There exists a quantum algorithm that runs in time \(\widetilde{O}\left( \frac{\mu (\mathbf {A})\log (\mu (\mathbf {A})/\epsilon )}{\epsilon \eta }\right)\) and outputs an estimate \(\theta\) such that \(|{p - \sum _{i: \overline{\sigma }_i \ge \theta } \lambda ^{(i)}}| \le \eta\), where \(|{\overline{\sigma }_i - \sigma _i}| \le \epsilon\), or detects whether such \(\theta\) does not exists.

The proof consists in proving the correctness and the run-time of Algorithm 3.

Proof

The algorithm searches for \(\theta\) using \(\tau\) as an estimate between 0 and 1. The search is performed using \(\text {sign}(p_\tau - p)\) as an oracle that tells us whether to update the lower or upper bound for \(\tau\).

The algorithm terminates when \(|{\overline{p}_\tau - p}| \le \eta /2\) or when it is not possible to update \(\tau\) anymore (i.e., there are not enough qubits to express the next \(\tau\)). In this last case, there is no \(\theta\) that satisfies the requisites and the algorithm returns \(-1\).

In the first case, instead, we need to guarantee that \(|{p - \sum _{i: \overline{\sigma }_i \ge \theta } \lambda ^{(i)} }| = |{p - p_\tau }| \le \eta\). Since we run amplitude estimation with additive error \(\eta /2\) we have \(|{\overline{p}_\tau - {p}_\tau }| \le \eta /2\), and we require \(|{\overline{p}_\tau - p}| \le \eta /2\) to stop. This two conditions entail

If we want \(\theta\) to be comparable with the singular values of \(\mathbf {A}\) and use \(\tau\) for the binary search, we have to use Theorem 3 with error \(\frac{\mu (\mathbf {A})}{\epsilon }\), meaning that Step 6 can be done in time \(O(\mu (\mathbf {A})/\epsilon )\). The total cost of the inner loop has to be evaluated at the end of Step 9, which runs in time \(O(\frac{\mu (\mathbf {A})}{\epsilon \eta })\).

The maximum number of updates of \(\tau\) is bounded by the number of qubits that we use to store the singular values \(\hat{\sigma }_i\). This is given by the logarithm of the error used in Step 6, and is \(O\left( \log \left( \frac{\mu {(\mathbf{A})}}{\epsilon }\right) \right)\).

The run-time of this algorithm is bounded by \(\widetilde{O}\left( \frac{\mu (\mathbf {A})\log (\mu (\mathbf {A})/\epsilon )}{\epsilon \eta }\right)\).

Using the quantum counting algorithms of Brassard et al. (2002) after step 3 of Algorithm 2, it is possible to count the number of singular values retained by a certain threshold \(\theta\).

Corollary 11

(Quantum reduced rank estimation) Let there be quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\), with singular value decomposition \(\mathbf {A} = \sum _i^r\sigma _i\mathbf {u}_i\mathbf {v}^T_i\) and rank r. Let \(\epsilon\) be a precision parameter, and \(\theta\) be a threshold for the smallest singular value to consider. There exists a quantum algorithm that estimates the exact number k of singular values such that \(\overline{\sigma }_i \ge \theta\), where \(|{\sigma _i - \overline{\sigma }_i}| \le \epsilon\), in time \(\widetilde{O}\left( \frac{\mu (\mathbf {A})}{\epsilon }\sqrt{(k+1)(r-k+1)}\right)\) with probability at least \(\frac{2}{3}\).

Similarly, given a parameter \(\eta\), it is possible to produce an estimate \(\overline{k}\) such that \(|{\overline{k}-k}| \le \eta k\) in time \(\widetilde{O}\left( \frac{\mu (\mathbf {A})}{\epsilon \eta }\sqrt{\frac{r}{k}}\right)\) with probability at least \(\frac{2}{3}\).

Estimating the number of singular values retained by \(\theta\) is helpful. When the singular values are dense around \(\theta\), this Corollary, together with Theorem 9, can help the analyst evaluate trade-offs between big p and small k. On the one hand, the bigger p is, the more information on the dataset one can retain. On the other hand, the bigger k is, the slower will the algorithms in the next section be.

3.2 Extracting the SVD representation

After introducing the procedures to test for the most relevant singular values, factor scores and factor score ratios of \(\mathbf {A}\), we present a routine to extract the corresponding right/left singular vectors. The inputs of this algorithm, other than the matrix, are a parameter \(\delta\) for the precision of the singular vectors, a parameter \(\epsilon\) for the precision of the singular value estimation, and a threshold \(\theta\) to discard the non interesting singular values/vectors. The output guarantees a unit estimate \(\overline{\mathbf {x}}_i\) of each singular vector such that \(\Vert {\mathbf {x}_i -\overline{\mathbf {x}}_i}\Vert \le \delta\), ensuring that the estimate has a similar orientation to the original vector. Additionally, this subroutine can provide an estimation of the singular values greater than \(\theta\), to absolute error \(\epsilon\).

Theorem 12

(Top-k singular vectors extraction) Let there be efficient quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\), with singular value decomposition \(\mathbf {A} = \sum _i^r \sigma _i \mathbf {u}_i \mathbf {v}_i^T\). Let \(\delta > 0\) be a precision parameter for the singular vectors, \(\epsilon >0\) a precision parameter for the singular values, and \(\theta >0\) be a threshold such that \(\mathbf {A}\) has k singular values greater than \(\theta\). Define \(p=\frac{\sum _{i: \overline{\sigma }_i \ge \theta } \sigma _i^2}{\sum _j^r \sigma _j^2}\). There exist quantum algorithms that estimate:

-

The top k left singular vectors \(\mathbf {u}_i\) of \(\mathbf {A}\) with unit vectors \(\overline{\mathbf {u}}_i\) such that \(\Vert {\mathbf {u}_i-\overline{\mathbf {u}}_i}\Vert _2 \le \delta\) with probability at least \(1-1/poly(n)\), in time \(\widetilde{O}\left( \frac{\Vert {\mathbf{A}}\Vert }{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})}{\epsilon }\frac{kn}{\delta ^2}\right)\);

-

The top k right singular vectors \(\mathbf {v}_i\) of \(\mathbf {A}\) with unit vectors \(\overline{\mathbf {v}}_i\) such that \(\Vert {\mathbf {v}_i-\overline{\mathbf {v}}_i}\Vert _2 \le \delta\) with probability at least \(1-1/poly(m)\), in time \(\widetilde{O}\left( \frac{\Vert {\mathbf{A}}\Vert }{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})}{\epsilon }\frac{km}{\delta ^2}\right)\).

-

The top k singular values \(\sigma _i\), factor scores \(\lambda _i\), and factor score ratios \(\lambda ^{(i)}\) of \(\mathbf {A}\) to precision \(\epsilon\), \(2\epsilon \sqrt{\lambda _i}\), and \(\epsilon \frac{\sigma _i}{\Vert \mathbf{A}\Vert ^2_F}\) respectively, with probability at least \(1 - 1/\text {poly}(m)\), in time \(\widetilde{O}\left( \frac{\Vert {\mathbf{A}}\Vert }{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})k}{\epsilon }\right)\) or during any of the two procedures above.

The proof consists in proving the time complexity and the error of Algorithm 4.

Proof

Like in the previous proofs, the cost of preparing the state at step 4, is \(\widetilde{O}\left( \frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})}{\epsilon }\right)\), where \(\widetilde{O}\left( \frac{\mu (\mathbf {A})}{\epsilon }\right)\) is the cost of singular value estimation and \(\widetilde{O}\left( \frac{1}{\sqrt{p}}\right)\) is the one of amplitude amplification. Step 5 is a conditional rotation and similarly to step 3 it has a negligible cost. The next step is to analyze the amplitude amplification at 6. The constant C is a normalization factor in the order of \(\widetilde{O}(1/\kappa (\mathbf {A}^{(k)}))\) where \(\kappa (\mathbf {A}^{(k)}) = \frac{\sigma _{max}}{\sigma _{min}}\) is the condition number of the low-rank matrix \(\mathbf {A}^{(k)}\). Since for construction \(\sigma _{min} \ge \theta\), we can bound the condition number \(\kappa (\mathbf {A}^{(k)}) \le \frac{\Vert {\mathbf {A}}\Vert }{\theta }\). From the famous work of Harrow, Hassidim and Lloyd Harrow et al. (2009) we know that applying amplitude amplification on the state above, with the the third register being \(|{0}\rangle\), would cost \(T(U_{6}) \sim \widetilde{O}(\kappa (\mathbf {A}^{(k)}) T(U_{5})) \sim \widetilde{O}\left( \frac{\Vert {\mathbf {A}}\Vert }{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf{A})}{\epsilon }\right)\).

This last amplitude amplification leaves the registers in the state

where \(\overline{\sigma }_i \in [\sigma _i - \epsilon , \sigma _i + \epsilon ]\) and \(\frac{\sigma _i}{\sigma _i \pm \epsilon } \rightarrow 1\) for \(\epsilon \rightarrow 0\).

When measuring the last register of state 6 in the computational basis, we measure \(|{\overline{\sigma }_i}\rangle\) and the first two registers collapse in the state \(|{\mathbf {u}\rangle _i}|{\mathbf {v}\rangle _i}\). It is possible to perform vector-state tomography on \(|{\mathbf {u}\rangle _i}|{\mathbf {v}\rangle _i}\), using Theorem 6 on the first register to retrieve \(\overline{\mathbf {u}}_i\), or on the second one to retrieve \(\overline{\mathbf {v}}_i\). The costs are \(O(\frac{n\log {n}}{\delta ^2})\) and \(O(\frac{m\log {m}}{\delta ^2})\), respectively. Using a coupon collector’s argument (Erdős and Rényi 1961), if the k states \(|{\overline{\sigma }_i}\rangle\) are uniformly distributed, to get all the k possible couples \(|{\mathbf {u}\rangle _i}|{\mathbf {v}\rangle _i}\) at least once, we would need \(k\log k\) measurements on average. This proves that it is possible to estimate all the singular values, factor scores and factor score ratios with the guarantees of Theorem 3 in time \(\widetilde{O}(\frac{\Vert {\mathbf{A}}\Vert }{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})k}{\epsilon })\).

To perform tomography on each state-vector, one should satisfy the coupon collector the same number of times as the measurements needed by the tomography procedure. The costs of the tomography for all the vectors \(\{\overline{\mathbf {u}}_i\}_i^k\) and \(\{\overline{\mathbf {v}}_i\}_i^k\) are \(O\left( T(U_{(6)})\frac{k\log {k}\cdot n\log {n}}{\delta ^2}\right)\), and \(O\left( T(U_{(6)})\frac{k\log {k}\cdot m\log {m}}{\delta ^2}\right) .\) Therefore, the following complexities are proven: \(\widetilde{O}\left( \frac{||{\mathbf {A}}||}{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})}{\epsilon }\frac{kn}{\delta ^2}\right) , \widetilde{O}\left( \frac{||{\mathbf {A}}||}{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})}{\epsilon }\frac{km}{\delta ^2}\right) .\)

In the appendix, Section 6.3., we provide experiments that show that the coupon collector’s argument of Eq. 4 is accurate for practical \(\epsilon\). Besides \(1/\sqrt{p}\) being negligible, it is interesting to note that the parameter \(\theta\) can be computed using: 1. the procedures of Theorems 8, 9; 2. the binary search of Theorem 10; 3. the available literature on the type of data stored in the input matrix \(\mathbf {A}\).

About the latter, the original paper of latent semantic indexing (Deerwester et al. 1990) states that the first \(k=100\) singular values are enough for a good representation. We believe that, in the same way, fixed thresholds \(\theta\) can be defined for different machine learning applications. The experiments of Kerenidis and Luongo (2020) on the run-time parameters of the polynomial expansions of the MNIST dataset support this expectation: even though in qSFA they keep the k smallest singular values and refer to \(\theta\) as the biggest singular value to retain, this value does not vary much when the the dimensionality of their dataset grows. In our experiments, we observe that different datasets for image classification have similar \(\theta\)s. For completeness, we also state a different version of Theorem 12, with \(\ell _\infty\) guarantees on the vectors.

Corollary 13

(Fast top-k singular vectors extraction) The run-times of 12 can be improved to \(\widetilde{O}\left( \frac{\Vert {\mathbf {a}}\Vert }{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})}{\epsilon }\frac{k}{\delta ^2}\right)\) with estimation guarantees on the \(\ell _\infty\) norms.

Proof

The proof consists in using \(\ell _\infty\) tomography (Theorem 7) at step 7 of Algorithm 4.

Note that, given a vector with d non-zero entries, performing \(\ell _\infty\) tomography with error \(\frac{\delta }{\sqrt{d}}\) provides the same guarantees of \(\ell _2\) tomography with error \(\delta\). This implies that the extraction of the singular vectors with \(\ell _2\) guarantees can be faster if we can make assumptions on their sparseness: \(\widetilde{O}\left( \frac{\Vert {\mathbf {A}}\Vert }{\theta }\frac{1}{\sqrt{p}}\frac{\mu (\mathbf {A})}{\epsilon }\frac{kd}{\delta ^2}\right)\).

4 Applications to machine learning

The new quantum procedures can be used for principal component analysis, correspondence analysis, and latent semantic analysis. Besides extracting the orthogonal factors and measuring their importance, we provide a procedure to represent the data in PCA’s reduced feature space on a quantum computer. In a similar way, it is possible to compute the representations of CA and LSA.

4.1 Principal component analysis

Principal component analysis is a widely-used multivariate statistical method for continuous variables with applications in machine learning. Its uses range from outlier detection to dimensionality reduction and data visualization. Given a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\) storing information about n data points with m coordinates, its principal components are the set of orthogonal vectors along which the variance of the data points is maximized. The goal of PCA is to compute the principal components with the amount of variance they capture and rotate the data points to express their coordinates along the principal components. It is possible to represent the data using only the k coordinates that express the most variance for dimensionality reduction.

Model

The model of PCA is closely related to the singular value decomposition of the data matrix \(\mathbf {A}\), shifted to row mean 0. The model consists of the principal components and the amount of variance they explain. The principal components coincide with the right singular vectors \(\mathbf {v}_i\), the factor scores \(\lambda _i = \sigma _i^2\) represent the amount of variance along each of them, and the factor score ratios \(\lambda ^{(i)}=\frac{\lambda _i}{\sum _j^r\lambda _j}\) express the percentage of retained variance. For datasets with 0 mean, the transformation consists in a rotation along the principal components: \(\mathbf {Y} = \mathbf {A}\mathbf {V} = \mathbf {U}{{{\varvec{\Sigma }}}}\mathbf {V}^T\mathbf {V} = \mathbf {U}{{{\varvec{\Sigma }}}} \in \mathbb {R}^{n \times m}\). When performing dimensionality reduction, it suffice to use the top k singular values and vectors.

Using the procedures from Section 3 it is possible to extract the model for principal component analysis. In particular, Theorems 8, 9, and 10 allow to retrieve information on the factor scores and on the factor score ratios, while Theorem 12 allows extracting the principal components. The run-time of the model extraction is the sum of the run-times of the theorems: \(\widetilde{O}\left( \left( \frac{1}{\gamma ^2} + \frac{km}{\theta \delta ^2}\right) \frac{\mu (\mathbf {A})}{\epsilon }\right)\). The model comes with the following guarantees: \(|{\sigma _i - \overline{\sigma }_i}| \le \frac{\epsilon }{2}\); \(|{\lambda _i - \overline{\lambda }_i}| \le \epsilon \sqrt{\lambda _i}\); \(|{\lambda ^{(i)} - \overline{\lambda^{(i)}}}| \le \epsilon \frac{\sigma _i}{\Vert {\mathbf {A}}\Vert _f}\); \(\Vert {\mathbf {v}_i - \overline{\mathbf {v}}_i}\Vert \le \delta\) for \(i \in \{0, \dots ,k-1\}\). This run-time is generally smaller than the number of elements of the input data matrix, providing polynomial speed-ups on the best classical routines for non-sparse matrices. In writing the time complexity of the routines, we have omitted the term \(\frac{1}{\sqrt{p}}\) because usually p is chosen to be a number greater than 0.5 (generally in the order of 0.8/0.9).

When performing dimensionality reduction, the goal is to obtain the matrix \(\mathbf {Y}=\mathbf {U}{{{\varvec{\Sigma }}}} \in \mathbb {R}^{n\times k}\), where \(\mathbf {U} \in \mathbb {R}^{n \times k}\) and \({{{\varvec{\Sigma }}}} \in \mathbb {R}^{k \times k}\) are composed respectively of the top k left singular vectors and singular values. In Lemma 14, we provide a theoretical error bound for \(\mathbf {Y}\), using the estimated entries of \(\mathbf {U}\) and \({{{\varvec{\Sigma }}}}\). For sake of completeness, the error bound is also stated for \(\mathbf {V}{{{\varvec{\Sigma }}}}\). These bounds stand regardless of how the singular values and vectors are extracted and hold when the multiplication is done with a classical computer.

Lemma 14

(Accuracy of \(\overline{\mathbf {U}{{{\varvec{\Sigma }}}}}\) and \(\overline{\mathbf {V}{{{\varvec{\Sigma }}}}}\)) Let \(\mathbf {A} \in \mathbb {R}^{n \times m}\) be a matrix. Given some approximate procedures to retrieve estimates \(\overline{\sigma }_i\) of the singular values \(\sigma _i\) such that \(|{\sigma _i - \overline{\sigma }_i}| \le \epsilon\) and unit estimates \(\overline{\mathbf {u}}_i\) of the left singular vectors \(\mathbf {u}_i\) such that \(\Vert {\overline{\mathbf {u}}_i - \mathbf {u}_i}\Vert _2 \le \delta\), the error on \(\mathbf {U}{{{\varvec{\Sigma }}}}\) can be bounded as \(\Vert {\mathbf {U}{{{\varvec{\Sigma }}}} - \overline{\mathbf {U}}\overline{{{{\varvec{\Sigma }}}}}}\Vert _F \le \sqrt{\sum _j^k \left( \epsilon + \delta \sigma _j \right) ^2}\). Similarly, \(\Vert {\mathbf {V}{{{\varvec{\Sigma }}}} - \overline{\mathbf {V}}\overline{{{{\varvec{\Sigma }}}}}}\Vert _F \le \sqrt{\sum _j^k \left( \epsilon + \delta \sigma _j \right) ^2}\). Both are bounded by \(\sqrt{k}(\epsilon +\delta \Vert {\mathbf {a}}\Vert )\).

We prove this result for \(\Vert {\mathbf {U}{{{\varvec{\Sigma }}}} - \overline{\mathbf {U}}\overline{{{{\varvec{\Sigma }}}}}}\Vert _F\). The proof for \(\Vert {\mathbf {V}{{{\varvec{\Sigma }}}}-\overline{\mathbf {V}}\overline{{{{\varvec{\Sigma }}}}}}\Vert _F\) is analogous.

Proof

We first bound the error on the columns:

Because of the triangular inequality, \(\Vert {\sigma _i (\overline{\mathbf {u}}_i - \mathbf {u}_i) \pm \epsilon \overline{\mathbf {u}}_i }\Vert \le \sigma _i\Vert {\overline{\mathbf {u}}_i - \mathbf {u}_i}\Vert + \epsilon \Vert {\overline{\mathbf {u}}_i }\Vert\). Also by hypothesis, \(\Vert {(\overline{\mathbf {u}}_i - \mathbf {u}_i)}\Vert \le \delta\) and \(\Vert {{\mathbf {u}}_i}\Vert = 1\) . Thus, \(\sigma _i\Vert { \overline{\mathbf {u}}_i - \mathbf {u}_i}\Vert + \epsilon \Vert {\overline{\mathbf {u}}_i }\Vert \le \sigma _i \delta + \epsilon\). Since \(f(x) = \sqrt{x}\) is an increasing monotone function, it is possible to prove:

Using matrix-multiplication from Theorem 4, we can have algorithms to produce quantum states proportional to the data representation in the new feature space. Having access to \(\mathbf {V}^{(k)} \in \mathbb {R}^{m\times k}\), these routines create the new data points in almost constant time and are helpful when chained to other quantum machine learning algorithms that need to be executed multiple times.

Corollary 15

(Quantum PCA: vector dimensionality reduction) Let \(\xi\) be a precision parameter. Let there be efficient quantum access to the top k right singular vectors \(\overline{\mathbf {V}}^{(k)} \in \mathbb {R}^{m \times k}\) of a matrix \(\mathbf {A}= \mathbf {U}{{{\varvec{\Sigma }}}}\mathbf {V}^T \in \mathbb {R}^{n \times m}\), such that \(\Vert {\mathbf {V}^{(k)} - \overline{\mathbf {V}}^{(k)}}\Vert \le \frac{\xi }{\sqrt{2}}\). Given quantum access to a row \(\mathbf {a}_i\) of \(\mathbf {A}\), the quantum state \(|{\overline{\mathbf {y}}_{i}}\rangle = \frac{1}{\Vert {\overline{\mathbf {y}}_i}\Vert }\sum _i^k \overline{y}_k |{i}\rangle\), proportional to its projection onto the PCA space, can be created in time \(\widetilde{O}\left( \mu (\mathbf {V}^{(k)})\frac{\Vert {\mathbf {a}_i}\Vert }{\Vert {\overline{\mathbf {y}}_i}\Vert }\right)\) with probability at least \(1-1/\text {poly}(m)\) and precision \(\Vert {|{\mathbf {y}_i}\rangle - |{\overline{\mathbf {y}}_i}\rangle }\Vert \le \frac{\Vert {\mathbf {a}_i}\Vert }{\Vert {\overline{\mathbf {y}}_i}\Vert }\xi\). An estimate of \(\Vert {\overline{\mathbf {y}}_i}\Vert\), to relative error \(\eta\), can be computed in \(\widetilde{O}(1/\eta )\).

Proof

Here with \(\mathbf {V}\) we denote \(\mathbf {V}^{(k)} \in \mathbb {R}^{m\times k}\). Given a vector \(\mathbf {a}_i\), its projection onto the k-dimensional PCA space of \(\mathbf {A}\) is \(\mathbf {y}_i^T = \mathbf {a}_i^T \mathbf {V}\), or equivalently \(\mathbf {y}_i = \mathbf {V}^T\mathbf {a}_i\). Note that \(\Vert {\mathbf {y}_i}\Vert = \Vert {\mathbf {V}^T\mathbf {a}_i}\Vert\).

It is possible to use Theorem 4 to multiply the quantum state \(|{\mathbf {a}_i}\rangle\) by \(\mathbf {V}^T\), appropriately padded with 0s to be a square \(\mathbb {R}^{m\times m}\) matrix. In this way, we can create an approximation \(|{\overline{\mathbf {y}}_i}\rangle\) of the state \(|{\mathbf {y}_i}\rangle = |{\mathbf {v}\rangle ^T\mathbf {a}_i}\) in time \(\widetilde{O}\left( \frac{\mu (\mathbf {V}^T)\log (1/\epsilon )}{\gamma }\right)\) with probability \(1-1/\text {poly}(m)\), such that \(\Vert {|{\mathbf {y}_i}\rangle - |{\overline{\mathbf {y}}_i}\rangle }\Vert \le \epsilon\). Since \(\mathbf {V}^T\) has rows with unit \(\ell _2\) norm, we can prepare efficient quantum access to it by creating access to its rows [Kerenidis and Prakash (2020a), Theorem IV.1]. Having \(\gamma = \Vert {\mathbf {V}^T\mathbf {a}_i}\Vert /\Vert {\mathbf {a}_i}\Vert\), we get a run-time of \(\widetilde{O}\left( \mu (\mathbf {V})\frac{\Vert {\mathbf {a}_i}\Vert }{\Vert {\mathbf {y}_i}\Vert }\log (1/\epsilon )\right)\). The term \(\log (1/\epsilon )\) can be considered negligible. We conclude that the state \(|{\mathbf {y}_i}\rangle\) can be created in time \(\widetilde{O}\left( \mu (\mathbf {V})\frac{\Vert {\mathbf {a}_i}\Vert }{\Vert {\mathbf {y}_i}\Vert }\right)\) with probability \(1-1/\text {poly}(m)\) and that its norm can be estimated to relative error \(\eta\) in time \(\widetilde{O}\left( \mu (\mathbf {V})\frac{\Vert {\mathbf {a}_i}\Vert }{\Vert {\mathbf {y}_i}\Vert }\frac{1}{\eta }\right)\).

For what concerns the error, we start by bounding \(\Vert {\mathbf {y}_i - \overline{\mathbf {y}}_i}\Vert\) and then use Claim 2 to bound the error on the quantum states. Assume to have estimates \(\overline{\mathbf {v}}_i\) of the columns of \(\mathbf {V}\) such that \(\Vert {\mathbf {v}_i - \overline{\mathbf {v}}_i}\Vert \le \delta\).

Considering that \(\Vert {\mathbf {y}_i - \overline{\mathbf {y}}_i}\Vert = {\mathbf {a}_i^T\mathbf {V}^{(k)} - \mathbf {a}_i^T\overline{\mathbf {V}}^{(k)}}\Vert \le \Vert {\mathbf {a}_i}\Vert \sqrt{k}\delta\), we can use Claim 2 to state

Setting \(\delta = \frac{\xi }{\sqrt{2k}}\) leads to the requirement \(\Vert { \mathbf {V} - \overline{\mathbf {V}} }\Vert _F \le \frac{\xi }{\sqrt{2}}\).

This result also holds when \(\mathbf {a}_i\) is a previously unseen data point, not necessarily stored in \(\mathbf {A}\). Note that from the row orthogonality of \(\mathbf {V}^{(k)}\) it follows that \(\mu (\mathbf {V}^{(k)}) \le \Vert {\mathbf {V}^{(k)}}\Vert _F = \sqrt{k}\). Furthermore, \(\frac{\Vert {\mathbf {y}_i}\Vert }{\Vert {\mathbf {a}_i}\Vert }\) is expected to be close to 1, as it is the percentage of support of \(\mathbf {a}_i\) on the new feature space spanned by \(\mathbf {V}^{(k)}\). We formalize this better using Definition 2 below.

Definition 2

(PCA-representable data) A set of n data points described by m coordinates, represented through a matrix \(\mathbf {A}=\sum _i^r\sigma _i \mathbf {u}_i\mathbf {v}_i^T \in \mathbb {R}^{n \times m}\) is said to be PCA-representable if there exists \(p \in [\frac{1}{2},1], \varepsilon \in [0, 1/2], \beta \in [p-\varepsilon , p+\varepsilon ], \alpha \in [0,1]\) such that:

-

\(\exists k \in O(1)\) such that \(\frac{\sum _i^k \sigma ^2_i}{\sum _i^m \sigma ^2_i}= p\)

-

for at least \(\alpha n\) points \(\mathbf {a}_i\) it holds \(\frac{\Vert {\mathbf {y}_i}\Vert }{\Vert {\mathbf {a}_i}\Vert } \ge \beta\), where \(\Vert {\mathbf {y}_i}\Vert = \sqrt{\sum _i^k |{\langle {\mathbf {a}_i}|{\mathbf {v}_j}}\rangle|^2} \Vert {\mathbf {a}_i}\Vert\).

Claim 16

(Quantum PCA on PCA-representable datasets) Let \(\mathbf {a}_i\) be a row of \(\mathbf {A} \in \mathbb {R}^{n\times d}\). Then, for \(p \in [1/2, 1]\), the run-time of Corollary 15 is \(\mu (\mathbf {V})\frac{\Vert {\mathbf {a}_i}\Vert }{\Vert {\overline{\mathbf {y}}_i}\Vert } = \mu (\mathbf {V})\frac{1}{\beta } = O(\mu (\mathbf {V}))\) with probability greater than \(\alpha\).

It is known that, in practical machine learning datasets, \(\alpha\) is a number fairly close to one. We have tested the value of \(\alpha\) for the MNIST, Fashion MNIST and CIFAR-10 datasets, finding values over 0.85 for any \(p \in (0,1]\).

The next corollary shows how to perform perform dimensionality reduction on the whole matrix, enabling quantum access to the data matrix in the reduced feature space.

Corollary 17

(Quantum PCA: matrix dimensionality reduction) Let \(\xi\) be a precision parameter and p be the amount of variance retained after the dimensionality reduction. Let there be efficient quantum access to \(\mathbf {A}= \mathbf {U}{{{\varvec{\Sigma }}}}\mathbf {V}^T \in \mathbb {R}^{n \times m}\) and to its top k right singular vectors \(\overline{\mathbf {V}}^{(k)} \in \mathbb {R}^{m \times k}\), such that \(\Vert {\mathbf {V}^{(k)} - \overline{\mathbf {V}}^{(k)}}\Vert \le \frac{\xi \sqrt{p}}{\sqrt{2}}\). There exists a quantum algorithm that, with probability at least \(1-1/\text {poly}(m)\), creates the state \(|{\overline{\mathbf {Y}}}\rangle = \frac{1}{\Vert {\mathbf {Y}}\Vert _F}\sum _i^n \Vert {\mathbf {y}_{i,\cdot }}\Vert |{i}\rangle |{\mathbf {y}_{i,\cdot }}\rangle\), proportional to the projection of \(\mathbf {A}\) in the PCA subspace, with error \(\Vert {|{\mathbf {Y}}\rangle - |{\overline{\mathbf {Y}}}\rangle }\Vert \le \xi\) in time \(\widetilde{O}(\mu (\mathbf {V})/\sqrt{p})\). An estimate of \(\Vert {\overline{\mathbf {Y}}}\Vert _F\), to relative error \(\eta\), can be computed in \(\widetilde{O}(\frac{\mu (\mathbf {V})}{\sqrt{p}\eta })\).

Proof

Here with \(\mathbf {V}\) we denote \(\mathbf {V}^{(k)} \in \mathbb {R}^{m\times k}\). Using the same reasoning as the proof above and giving a closer look at the proof of Theorem 4 (Lemma 24 (Chakraborty et al. 2019)), we see that it is possible to create the state \(|{0}\rangle (\frac{\overline{\mathbf {V}}^T}{\mu (\mathbf {V})}|{\mathbf {a}_i}\rangle ) + |{0_\perp }\rangle\) in time \(\widetilde{O}(1)\) and that the term \(\frac{\mu (\mathbf {V})}{\gamma }\) is introduced to boost the probability of getting the right state. Indeed, if we apply Theorem 4 without the amplitude amplification step to the superposition of the rows of \(\mathbf {A}\), we obtain the following mapping in time \(\widetilde{O}(1)\):

where \(\Vert {\mathbf {y}_{i,\cdot \perp }}\Vert\) are normalization factors. Keeping in mind that \(\Vert {\mathbf {A}}\Vert _F = \sqrt{\sum _i^r \sigma _i^2}\) and \(\Vert {\mathbf {Y}}\Vert _F = \sqrt{\sum _i^n \Vert {\mathbf {y}_{i,\cdot }}\Vert ^2} = \sqrt{\sum _i^k \sigma _i^2}\), we see that the amount of explained variance is \(p = \frac{\sum _i^k \sigma _i^2}{\sum _j^r \sigma _j^2}=\left( \frac{\Vert {\mathbf {Y}}\Vert _F}{\Vert {\mathbf {A}}\Vert _F}\right) ^2\). The probability of obtaining \(|{Y}\rangle = \frac{1}{\Vert {\mathbf {Y}}\Vert _F} \sum _i^n \Vert {\mathbf {y}_{i,\cdot }}\Vert |{i}\rangle |{\mathbf {y}_{i,\cdot }}\rangle\) is \(\frac{p}{\mu (\mathbf {V})^2} = \frac{\Vert {\mathbf {Y}}\Vert _F^2}{\Vert {\mathbf {A}}\Vert _F^2}\frac{1}{\mu (\mathbf {V})^2} = \frac{\sum _i^n \Vert {\mathbf {y}_{i,\cdot }}\Vert ^2}{\Vert {\mathbf {A}}\Vert _F^2\mu (\mathbf {V})^2}\). We conclude that, using \(\widetilde{O}(\mu (\mathbf {V})/\sqrt{p})\) rounds of amplitude amplification, we obtain \(|{\mathbf {Y}}\rangle\) with probability \(1-1/\text {poly}(m)\) (Theorem 5).

For the error, consider that \(\Vert {\mathbf {Y} - \overline{\mathbf {Y}}}\Vert = \Vert {\mathbf {A}\mathbf {V}^{(k)} - \mathbf {A}\overline{\mathbf {V}}^{(k)}}\Vert \le \Vert {\mathbf {A}}\Vert \sqrt{k}\delta\), so we can use Claim 2 to state

We can set \(\delta = \frac{\xi }{\sqrt{2k}}\frac{\Vert {\mathbf {Y}}\Vert _F}{\Vert {\mathbf {a}}\Vert _F} = \frac{\xi \sqrt{p}}{\sqrt{2k}}\), so we require \(\Vert { \mathbf {V} - \overline{\mathbf {V}} }\Vert _F \le \frac{\xi \sqrt{p}}{\sqrt{2}}\).

The error requirements of the two corollaries propagate to the run-time of the model extraction in the following way.

Corollary 18

(Quantum PCA: fitting time) Let \(\epsilon\) be a precision parameter and \(p=\frac{\sum _{i: \overline{\sigma }_i \ge \theta } \sigma _i^2}{\sum _j^r \sigma _j^2}\) the amount of variance to retain, where \(|{\sigma _i - \overline{\sigma }_i}|\le \epsilon\). Given efficient quantum access to a matrix \(\mathbf {A} \in \mathbb {R}^{n \times m}\), the run-time to extract \(\mathbf {V}^{(k)} \in \mathbb {R}^{m \times k}\) for corollaries 15, 17 is \(\widetilde{O}\left( \frac{\mu (\mathbf {A})k^2m}{\theta \epsilon \xi ^2}\right)\).

Proof

The procedure to train the model consists in using Theorem 10 or 8 to extract the threshold \(\theta\), given the amount of variance to retain p, and to leverage Theorem 12 to extract the k right singular vectors that compose \(\mathbf {V} \in \mathbb {R}^{m \times k}\). The run-time of Theorem 10 and 8 are smaller than the one of Theorem 12, so we can focus on the last one. To have \(\Vert {\mathbf {V} - \overline{\mathbf {V}} }\Vert _F \le \frac{\xi \sqrt{p}}{\sqrt{2}}\) we need \(\Vert {\mathbf {v}_i - \overline{\mathbf {v}}_i}\Vert \le \frac{\xi \sqrt{p}}{\sqrt{2k}}\). Substituting \(\delta = \frac{\xi \sqrt{p}}{\sqrt{2k}}\) in the run-time of Theorem 12, we get \(\widetilde{O}(\frac{\mu (\mathbf {A})k^2m}{p^{3/2}\theta \epsilon \xi ^2})\). If we consider that p to be a reasonable number (e.g., at least grater than 0.05), we can consider it a constant factor that is independent from the input’s size. The asymptotic run-time is proven to be \(\widetilde{O}(\frac{\mu (\mathbf {A})k^2m}{\theta \epsilon \xi ^2})\).

When training the model for Corollary 17, the run-time has a dependency on \(1/p^{3/2}\). However, this term is constant and independent from the size of the input dataset.With this additional \(1/p^{3/2}\) cost, the error of Corollary 15 drops to \(\xi\) for every row of the matrix and generally decreases in case of new data points.

Using the same framework and proof techniques, it is possible to produce similar results for the representations of CA and LSA.

Remark: Note that [Yu et al. (2019), Theorem 1] propose a lower bound for a quantity similar to our \(\alpha\). However, their result seems to be a loose bound: using their notation and setting \(\eta =1, \theta =1\) they bound this quantity with 0, while a tight bound should give 1.

4.2 Correspondence analysis

Correspondence analysis is a multivariate statistical tool from the family of factor analysis methods. It is used to explore relationships among categorical variables. Given two random variables, X and Y, with possible outcomes in \(\{x_1, \cdots , x_n\}\) and \(\{y_1, \cdots , y_m\}\), the model of Correspondence Analysis enables representing the outcomes as vectors in two related Euclidean spaces. These vectors can be used for data visualization, exploration, and other unsupervised machine learning tasks.

Model

Given a contingency table for X and Y (see Section 2), it is possible to compute the matrix \(\mathbf {A} = \mathbf {D}_X^{-1/2}(\hat{\mathbf {P}}_{X,Y} - \hat{\mathbf {p}}_X\hat{\mathbf {p}}_Y^T)\mathbf {D}_Y^{-1/2} \in \mathbb {R} ^{n \times m}\), where \(\hat{\mathbf {P}}_{X,Y} \in \mathbb {R}^{n \times m}\) is the estimated matrix of joint probabilities, \(\hat{\mathbf {p}}_X \in R^n\) and \(\hat{\mathbf {p}}_X \in \mathbb {R}^m\) are the vectors of marginal probabilities, and \(\mathbf {D}_X^{-1/2} = diag(\hat{\mathbf {p}}_X)\), \(\mathbf {D}_Y^{-1/2} = diag(\hat{\mathbf {p}}_Y)\). The computation of \(\mathbf {A}\) requires linear time in the non-zero entries of the contingency table. The singular value decomposition of \(\mathbf {A}\) is strictly related to the model of correspondence analysis (Greenacre 1984; Hsu et al. 2019). The new coordinates of X’s outcomes are given by the rows of \(\mathbf {D}_X^{-1/2}\mathbf {U} \in \mathbb {R}^{n\times k}\), while the ones of Y by the rows of \(\mathbf {D}_Y^{-1/2}\mathbf {V}\in \mathbb {R}^{m\times k}\). Like in PCA, it is possible to choose only a subset of the orthogonal factors as coordinates for the representation. Factor scores and factor score ratios measure of how much “correspondence” is captured by the respective orthogonal factor, giving an estimate of the quality of the representation.

Similarly to what we have already discussed, it is possible to extract the model for CA by creating quantum access to the matrix \(\mathbf {A}\) and using Theorems 8, 9, and 12 to extract the orthogonal factors, the factor scores and the factor score ratios in time \(\widetilde{O}\left( \left( \frac{1}{\gamma ^2} + \frac{k(n+m)}{\theta \delta ^2}\right) \frac{\mu (\mathbf {A})}{\epsilon }\right)\). We provide a theoretical bound for the data representations in Lemma 19.

Lemma 19

(Accuracy of \(\mathbf {D}_{X}^{-1/2}\mathbf {U}\) and \(\mathbf {D}_{Y}^{-1/2}\mathbf {V}\)) Let \(\mathbf {A} \in \mathbb {R}^{n \times m}\) be a matrix. Given some approximate procedures to retrieve unit estimates \(\overline{\mathbf {u}}_i\) of the left singular vectors \(\mathbf {u}_i\) such that \(\Vert {\overline{\mathbf {u}}_i - \mathbf {u}_i}\Vert \le \delta\), the error on \(\mathbf {D}_{X}^{-1/2}\mathbf {U}\) can be bounded as \(\Vert { \mathbf {D}_{X}^{-1/2}\mathbf {U}- \mathbf {D}_{X}^{-1/2}\overline{\mathbf {U}}}\Vert _F \le \Vert {\mathbf {D}_{X}^{-1/2}}\Vert _F\sqrt{k}\delta\). Similarly, \(\Vert {\mathbf {D}_{Y}^{-1/2}\mathbf {V} - \mathbf {D}_{Y}^{-1/2}\overline{\mathbf {V}}}\Vert _F \le \Vert {\mathbf {D}_{Y}^{-1/2}}\Vert _F\sqrt{k}\delta .\)

Proof

It suffices to note that \(\Vert {\mathbf {D}_X^{-1/2}\overline{\mathbf {U}} - \mathbf {D}_X^{-1/2}\mathbf {U}}\Vert _\mathbf{F} \le \Vert {\mathbf {D}_X^{-1/2}}\Vert _F\Vert {\overline{\mathbf {U}} - \mathbf {U}}\Vert _F \le \Vert {\mathbf {D}_X^{-1/2}}\Vert _F\sqrt{k}\delta\). Similar conclusions can be drawn for \(\Vert {\mathbf {D}_{Y}^{-1/2}\mathbf {V} - \mathbf {D}_{Y}^{-1/2}\overline{\mathbf {V}}}\Vert _F\).

4.3 Latent semantic analysis

Latent semantic analysis is a data representation method used to represent words and text documents as vectors in Euclidean spaces. Using these vector spaces, it is possible to compare terms, documents, and terms and documents. LSA spaces automatically model synonymy and polysemy (Deerwester et al. 1990), and their applications in machine learning range from topic modeling to document clustering and retrieval.

Model The input of LSA is a contingency table of n words and m documents \(\mathbf {A} \in \mathbb {R}^{n \times m}\). Inner products of rows are a measure of words similarity, and can be computed at once as \(\mathbf {A}\mathbf {A}^T=\mathbf {U}{{{\varvec{\Sigma }}}}^2\mathbf {U}^T\). Inner products of columns \(\mathbf {A}^T\mathbf {A}=\mathbf {V}{{\varvec{\Sigma }}}^2\mathbf {V}^T\) are a measure of documents similarity, and the \(a_{ij}\) entry of \(\mathbf {A}=\mathbf {U}{{\varvec{\Sigma }}}\mathbf {V}^T\) is a measure of similarity between word i and document j. We can use SVD to express words and documents in new spaces where we can compare them with respect to this similarity measure. In particular, we can compute: 1. a representation for word comparisons \(\mathbf {U}{{\varvec{\Sigma }}} \in {{\mathbb {R}}}^{n\times k}\); 2. a representation for document comparisons \(\mathbf {V}{{\varvec{\Sigma }}} \in {{\mathbb {R}}}^{m\times k}\); 3. two representations for word and document comparisons \(\mathbf {U}{{\varvec{\Sigma }}}^{1/2} \in \mathbb {R}^{n\times k}\) and \(\mathbf {V}{{\varvec{\Sigma }}}^{1/2} \in {{\mathbb {R}}}^{m\times k}\).

When using LSA for document indexing, like in a search engine, we need to represent the query as a vector in the document space. In this case, instead of increasing \(\mathbf {A}\)’s size and recomputing the document space, the new vector can be expressed as \(\mathbf {v}_q^T = \mathbf {x}_q^T\mathbf {U}{{\varvec{\Sigma }}}^{-1}\), where \(\mathbf {x}_q \in {{\mathbb {R}}}^{n}\) is obtained using the same criteria used to store a document in \(\mathbf {A}\). The representation of the query can then be used to compare the query to the other documents in the document representation space. Finally, factor score ratios play an important role in LSA too. For instance, the columns of V can be seen as latent topics of the corpus. The importance of each topic is proportional to the corresponding factor score ratio. This paragraph only stresses how computing the SVD of \(\mathbf {A}\) is connected to LSA. For a better introduction to LSA and indexing, we invite the reader to consult the original paper (Deerwester et al. 1990).

Even in this case, the cost of extracting the orthogonal factors and the factor scores is bounded by \(\widetilde{O}\left( \left( \frac{1}{\gamma ^2} + \frac{k(n+m)}{\theta \delta ^2}\right) \frac{\mu (\mathbf {A})}{\epsilon }\right)\). In some applications, the data analyst might use a fixed number of singular values and vectors, regardless of the factor score ratios. In Deerwester et al. (1990), \(k=100\) is found to be a good number for document indexing. Similarly, we believe that if we scale the singular values by the spectral norm, it is possible to empirically determine a threshold \(\theta\) to use in practice. Determining such threshold would reduce the complexity of model computation to the one of Theorem 12: \(\widetilde{O}\left( \frac{k(n+m)}{\theta \delta ^2}\frac{\mu (\mathbf {A})}{\epsilon }\right)\).

For what concerns the error bounds, we already know that it is possible to retrieve an approximation \(\overline{\mathbf {U}{{\varvec{\Sigma }}}}\) and \(\overline{\mathbf {V}{{\varvec{\Sigma }}}}\) with precision \(\sqrt{k}(\epsilon +\delta \Vert {\mathbf {a}}\Vert )\) (Lemma 14), where \(\delta\) is the precision on the singular vectors and \(\epsilon\) the precision on the singular values. To provide bounds on the estimations of \(\mathbf {U}{{\varvec{\Sigma }}}^{1/2}\), \(\mathbf {V}{{\varvec{\Sigma }}}^{1/2}\), and \(\mathbf {U}{{\varvec{\Sigma }}}^{-1}\) we introduce Lemmas 20 and Lemma 21.

Lemma 20

(Accuracy of \(\overline{\mathbf {U}{{\varvec{\Sigma }}}}^{1/2}\) and \(\overline{\mathbf {V}{{\varvec{\Sigma }}}}^{1/2}\)) Let \(\mathbf {A} \in {{\mathbb {R}}}^{n \times m}\) be a matrix. Given some approximate procedures to retrieve estimates \(\overline{\sigma }_i\) of the singular values \(\sigma _i\) such that \(|{\overline{\sigma }_i - \sigma _i}| \le \epsilon\) and unitary estimates \(\overline{\mathbf {u}}_i\) of the left singular vectors \(\mathbf {u}_i\) such that \(\Vert {\overline{\mathbf {u}}_i - \mathbf {u}_i}\Vert \le \delta\), the error on \(\mathbf {U}{{\varvec{\Sigma }}}^{1/2}\) can be bounded as \(\Vert {\mathbf {U}{{\varvec{\Sigma }}}^{1/2} - \overline{\mathbf {U}}\overline{{{\varvec{\Sigma }}}}^{1/2}}\Vert _F \le \sqrt{\sum _j^k \left( \delta \sqrt{\sigma _j} + \frac{\epsilon }{2\sqrt{\theta }}\right) ^2}\).

Similarly, \(\Vert {\mathbf {V}{{\varvec{\Sigma }}}^{1/2} - \overline{\mathbf {V}}\overline{{{\varvec{\Sigma }}}}^{1/2} }\Vert _F \le \sqrt{\sum _j^k \left( \delta \sqrt{\sigma _j} + \frac{\epsilon }{2\sqrt{\theta }}\right) ^2}\). Both are bounded by \(\sqrt{k}\left( \delta \sqrt{\Vert {\mathbf {A}}\Vert } + \frac{\epsilon }{2\sqrt{\theta }}\right)\)

We prove this result for \(\Vert {\overline{\mathbf {U}}\overline{{{\varvec{\Sigma }}}}^{1/2} - \mathbf {U}{{\varvec{\Sigma }}}^{1/2}}\Vert _F\).

Proof

We start by bounding \(|{\sqrt{\overline{\sigma }_i} - \sqrt{\sigma _i}}|\). Let’s define \(\epsilon = \gamma \sigma _i\) as a relative error:

By definition \(\gamma = \frac{\epsilon }{\sigma _i}\) and we know that \(\sigma _{min} \ge \theta\):

Using the bound on the square roots, we can bound the columns of \(\overline{\mathbf {U}}\overline{{{\varvec{\Sigma }}}}^{1/2}\):

From the error bound on the columns we derive the bound on the matrices:

Lemma 21

(Accuracy of \(\overline{\mathbf {U}{{\varvec{\Sigma }}}}^{-1}\) and \(\overline{\mathbf {V}{{\varvec{\Sigma }}}}^{-1}\)) Let \(\mathbf {A} \in {{\mathbb {R}}}^{n \times m}\) be a matrix. Given some approximate procedures to retrieve estimates \(\overline{\sigma }_i\) of the singular values \(\sigma _i\) such that \(|{\overline{\sigma }_i - \sigma _i}| \le \epsilon\) and unitary estimates \(\overline{\mathbf {u}}_i\) of the left singular vectors \(\mathbf {u}_i\) such that \(\Vert {\overline{\mathbf {u}}_i - \mathbf {u}_i}\Vert \le \delta\), the error on \(\mathbf {U}{{\varvec{\Sigma }}}^{-1}\) can be bounded as \(\Vert { \mathbf {U}{{\varvec{\Sigma }}}^{-1} - \overline{\mathbf {U}}\overline{{{\varvec{\Sigma }}}}^{-1} }\Vert _F \le \sqrt{k}\left( \frac{\delta }{\theta } + \frac{\epsilon }{\theta ^2 - \theta \epsilon }\right)\). Similarly, \(\Vert { \mathbf {V}{{\varvec{\Sigma }}}^{-1} - \overline{\mathbf {V}}\overline{{{\varvec{\Sigma }}}}^{-1} }\Vert _F \le \sqrt{k}(\frac{\delta }{\theta } + \frac{\epsilon }{\theta ^2 - \theta \epsilon })\).

We prove this result for \(\Vert {\overline{\mathbf {U}}\overline{{{\varvec{\Sigma }}}}^{-1} - \mathbf {U}{{\varvec{\Sigma }}}^{-1}}\Vert _F\).

Proof

We start by bounding \(|{ \frac{1}{\overline{\sigma }_i} - \frac{1}{\sigma _i}}|\). Knowing that \(\sigma _{min} \ge \theta\) and \(\epsilon < \theta\):

From the bound on the inverses, we can obtain the bound on the columns of \(\overline{\mathbf {U}}\overline{{{\varvec{\Sigma }}}}^{-1}\):

To complete the proof, we compute the bound on the matrices:

5 Experiments

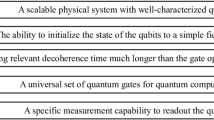

All of our experiments are numerical and can be carried out on classical computers.Footnote 1 We have analysed the distribution of the factor score ratios in the MNIST, Fashion MNIST, CIFAR-10, Tiny Imagenet and Research Papers datasets. They decrease exponentially fast (figures in the appendix), confirming the low rank nature of the data. Focusing on MNIST, Fashion-MNIST, and CIFAR-10, we have simulated PCA’s dimensionality reduction for image classification. The datasets have been shifted to row mean 0 and normalized so that \(\sigma _{max}=1\). We have simulated Algorithm 1 by sampling \(1/\gamma ^2 = 1000\) times from the state \(\sum _i^r\lambda _i |{\mathbf {u}\rangle _i} |{\mathbf {v}\rangle _i} |{\overline{\sigma }_i}\rangle\) to search the first k principal components that account for a factor score ratios sum \(p=0.85\). The simulation occurs by sampling with replacement from the discrete probability distribution given by the \(\lambda _i\). We then estimated the measured \(\lambda _i\) using the Wald estimator (see the proof of Theorem 8) and searched for the most important k.Footnote 2 In all cases, sampling the singular values has been enough to decide how many to keep. However, as p increases, the gap between the factor score ratios decreases and the quality of the estimation of k or \(\theta\) decreases. As discussed in Section 3.1, it is possible to detect this problem using Theorem 9 and solve it with a binary search for \(\theta\) (Theorem 10). We have tested the quality of the representation by observing the accuracy of 10-fold cross-validation k-nearest neighbors with \(k=7\) as we introduce error in the representation’s Frobenius norm (see Fig. 1). To introduce the error, we have added truncated Gaussian noise to each element of \(\mathbf {U}{{\varvec{\Sigma }}}\) to have \(\Vert {\mathbf {U}{{\varvec{\Sigma }}} - \overline{\mathbf {U}}\overline{{{\varvec{\Sigma }}}}}\Vert \le \xi = \sqrt{k}(\epsilon +\delta )\) (Lemma 14). The parameter \(\delta\) has been estimated using the bound above, choosing the error so that the accuracy drops no more than 0.01 and fixing \(\epsilon\) to a number that allows for correct thresholding. Table 1 summarizes the run-time parameters. The results show that Theorems 8, 9, 10 are already advantageous on small datasets, while Theorem 12 requires bigger datasets to express its speed-up. We have also simulated the creation of the state at step 6 of Algorithm 4 to test the average number of measurements needed to collect all the singular values as \(\epsilon\) increases. The analysis has confirmed the run-time’s expectations. To end with, we have tested the value of \(\alpha\) (Definition 2, Claim 16) for the MNIST dataset, fixing \(\varepsilon =0\) and trying \(p \in \{0.1,0.2,0.3,0.4,0.5,0.6,0.7,0.8,0.9\}\). We have observed that \(\alpha = 0.97 \pm 0.03\), confirming that the run-time of Corollary 15 can be assumed \(\widetilde{O}(\mu (\mathbf {V})^{(k)})\) for the majority of the data points of a PCA-representable dataset.

We point out that more experiments on the run-time parameters have been extensively discussed in other works that rely on the same parameters (Kerenidis and Luongo 2020; Kerenidis et al. 2020b). These works study the scaling of the parameters as the dataset size increases, both in features and samples, and conclude that the parameters of interest are almost constant. In addition to the existing experiments, we have studied the trend of the run-time parameters on the Tiny Imagenet dataset as the number of samples scales. While the spectral norm increases, the other run-time parameters become constant after a certain number of samples. Figure 2 shows that the algorithms discussed in Section 3.1 are already of practical use for small datasets, while the singular vector extraction routines of Section 3.2 require larger datasets to be convenient over their classical counterparts. We refer the interested reader to the appendix for more details about the experiments.

Accuracy of 10-fold cross-validation using K-Nearest-Neighbors, with 7 neighbors, on the MNIST dataset after PCA’s dimensionality reduction (0.8580% of variance retained). The benchmark accuracy was computed with an exact PCA. The experiment line shows how the classification accuracy decreases as error is introduced in the Frobenius norm of the representation

6 Conclusions

In this paper, we formulate many eigenvalue problems in machine learning within a useful framework, filling the gap left open by previous literature with new algorithms. Our new procedures fill the gap by estimating the quality of a representation and extracting a classical description of the top-k singular values and vectors. We have shown how to use the new tools to extract the information needed by SVD-based data representation algorithms, computing theoretical error bounds for three machine learning applications. Besides identifying the proper quantum tools and formalizing the novel quantum algorithms, the main technical difficulty was analyzing how the error propagates to bound the algorithms’ run-time properly.

We do not expect run-time improvements that exceed poly-logarithmic factors or constant factors, using similar techniques. For non-zero singular values and dense singular vectors, the run-time of the extraction can not be smaller than kz, as one needs to read vectors of size kz. The \(\delta ^2\) parameter is a tight bound for the \(\ell _2\) norm of the vectors, as it is a result of Chernoff’s bound. The parameter \(\epsilon\) is a tight error bound from phase estimation, which is necessary to distinguish the singular vectors. \(\theta\) is the condition number of the low-rank approximation of the matrix, and it is necessary to amplify the amplitudes of the smallest singular values.

As future work, we deem it interesting to explore quantum algorithms for incremental SVD or for datasets whose points are available as a data streaming. It might be possible to reduce the overhead due to tomography and achieve greater speed-ups in these settings. It also remains an open question whether there are particular applications and dataset distributions for which the singular vector extraction algorithms offer a practical advantage over their classical counterparts. Finally, an appropriate resource estimation that takes into consideration different quantum hardware architectures, noise models, and error correction codes is out of the scope of this paper and is left for future work.

Notes

The code of the experiments is available at https://github.com/ikiga1/qadra.

Note that, in practice, one could also estimate the factor score ratios as \(\overline{\lambda }_i = \frac{\overline{\sigma }_i}{\Vert {\mathbf A}\Vert _F}\). This method should require less measurements: a bound on the necessary number of measurements can be obtained via the coupon collector’s problem with non-uniform probabilities.

References

Allcock J, Hsieh CY, Kerenidis I et al (2020) Quantum algorithms for feedforward neural networks. ACM Transactions on Quantum Computing 1(1):1–24

Arrazola JM, Delgado A, Bardhan BR et al (2020) Quantum-inspired algorithms in practice. Quantum 4:307. https://doi.org/10.22331/q-2020-08-13-307

Biamonte J, Wittek P, Pancotti N et al (2017) Quantum machine learning. Nature 549(7671):195–202. https://doi.org/10.1038/nature23474

Brassard G, Hoyer P, Mosca M et al (2002) Quantum amplitude amplification and estimation. Contemporary Mathematics 305:53–74. https://doi.org/10.1090/conm/305/052152

Bravo-Prieto C, García-Martín D, Latorre JI (2020) Quantum singular value decomposer. Physical Review A 101(6):062,310. https://doi.org/10.1103/PhysRevA.101.062310

Cade C, Montanaro A (2018) The Quantum Complexity of Computing Schatten \(p\)-norms. In: 13th Conference on the Theory of Quantum Computation, Communication and Cryptography. 2018

Cavanagh JM, Potok TE, Cui X (2009) Parallel latent semantic analysis using a graphics processing unit. In: Proceedings of the 11th Annual Conference Companion on Genetic and Evolutionary Computation Conference: Late Breaking Papers, pp 2505–2510 https://doi.org/10.1145/1570256.1570352

Chakraborty S, Gilyén A, Jeffery S (2019) The Power of Block-Encoded Matrix Powers: Improved Regression Techniques via Faster Hamiltonian Simulation. In: 46th International Colloquium on Automata, Languages, and Programming (ICALP 2019), Leibniz International Proceedings in Informatics (LIPIcs), vol 132. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik, pp 33:1–33:14 https://doi.org/10.4230/LIPIcs.ICALP.2019.33

Chepurko N, Clarkson KL, Horesh L, et al (2020) Quantum-inspired algorithms from randomized numerical linear algebra. arXiv preprint arXiv:2011.04125

Chia NH, Gilyén A, Li T, et al (2020) Sampling-based sublinear low-rank matrix arithmetic framework for dequantizing quantum machine learning. In: Proceedings of the 52nd Annual ACM SIGACT Symposium on Theory of Computing, pp 387–400 https://doi.org/10.1145/3357713.3384314

Clausen SE (1998) Applied correspondence analysis: An introduction, vol 121. Sage. https://doi.org/10.4135/9781412983426

Deerwester S, Dumais ST, Furnas GW et al (1990) Indexing by latent semantic analysis. Journal of the American society for information science 41(6):391–407. https://doi.org/10.1002/(SICI)1097-4571(199009)41:6<3c391::AID-ASI1>3e3.0.CO;2-9

Erdős P, Rényi A (1961) On a classical problem of probability theory. Magyar Tud Akad Mat Kutató Int Közl 6(1–2):215–220

Frieze A, Kannan R, Vempala S (2004) Fast monte-carlo algorithms for finding low-rank approximations. Journal of the ACM (JACM) 51(6):1025–1041. https://doi.org/10.1145/1039488.1039494

Giovannetti V, Lloyd S, Maccone L (2008) Quantum random access memory. Physical review letters 100(16):160,501

González FA, Caicedo JC (2011) Quantum latent semantic analysis. In: Amati G, Crestani F (eds) Advances in Information Retrieval Theory. Springer Berlin Heidelberg, Berlin, Heidelberg, pp 52–63 https://doi.org/10.1007/978-3-642-23318-0_7

Greenacre M (2017) Correspondence analysis in practice. CRC Press. https://doi.org/10.1201/9781315369983

Greenacre MJ (1984) Theory and applications of correspondence analysis. London (UK) Academic Press

Gu L, Wang X, Zhang G (2019) Quantum higher order singular value decomposition. In: 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), IEEE, pp 1166–1171 https://doi.org/10.1109/SMC.2019.8914525

Gyurik C, Cade C, Dunjko V (2020) Towards quantum advantage for topological data analysis. arXiv preprint arXiv:2005.02607

Halko N, Martinsson PG, Shkolnisky Y et al (2011) An algorithm for the principal component analysis of large data sets. SIAM Journal on Scientific computing 33(5):2580–2594. https://doi.org/10.1016/S0169-7439(01)00130-7

Hann CT, Lee G, Girvin S, et al (2021) Resilience of quantum random access memory to generic noise. PRX Quantum 2(2):020,311

Harrow AW, Hassidim A, Lloyd S (2009) Quantum algorithm for linear systems of equations. Physical review letters 103(15):150,502. https://doi.org/10.1103/PhysRevLett.103.150502

Harun-Ur-Rashid (2018) Research paper dataset. https://www.kaggle.com/harunshimanto/research-paper

He C, Li J, Liu W (2020) An exact quantum principal component analysis algorithm based on quantum singular value threshold. arXiv preprint arXiv:2010.00831

Hsu H, Salamatian S, Calmon FP (2019) Correspondence analysis using neural networks. In: The 22nd International Conference on Artificial Intelligence and Statistics, pp 2671–2680

Jolliffe IT, Cadima J (2016) Principal component analysis: a review and recent developments. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 374(2065):20150,202. https://doi.org/10.1098/rsta.2015.0202

Kerenidis I, Luongo A (2020) Classification of the mnist data set with quantum slow feature analysis. Physical Review A 101(6):062,327. https://doi.org/10.1103/PhysRevA.101.062327

Kerenidis I, Prakash A (2017) Quantum recommendation systems. In: 8th Innovations in Theoretical Computer Science Conference (ITCS 2017), Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik https://doi.org/10.4230/LIPIcs.ITCS.2017.49

Kerenidis I, Prakash A (2020a) Quantum gradient descent for linear systems and least squares. Physical Review A 101(2):022,316. https://doi.org/10.1103/PhysRevA.101.022316

Kerenidis I, Prakash A (2020) A quantum interior point method for lps and sdps. ACM Transactions on Quantum Computing 1(1):1–32. https://doi.org/10.1145/3406306