Abstract

Despite the advances in intelligent systems, there is no guarantee that those systems will always behave normally. Machine abnormalities, such as unusual responses to controls or false alarms, are still common; therefore, a better understanding of how humans learn about and respond to abnormal machine behaviour is essential. Human cognition has been researched in many domains. Numerous theories, such as utility theory, three-level situation awareness and the theory of dual cognition, suggest how human cognition behaves. These theories present the varieties of human cognition, including deliberate and naturalistic thinking. However, studies have not taken into consideration the varieties of human cognition employed when responding to abnormal machine behaviour. This study reviews theories of cognition, along with empirical work on the significance of human cognition, including several case studies. The different propositions of human cognition concerning abnormal machine behaviour are compared to dual cognition theories. Our results show that situation awareness is a suitable framework for modelling human cognition of abnormal machine behaviour. We also propose a continuum representing the varieties of cognition, lying between explicit and implicit cognition. Finally, we suggest a theoretical approach to learning how human cognition functions when responding to abnormal machine behaviour during a specific event. In conclusion, we posit that the model has implications for emerging waves of human-intelligent system collaboration.

1 Introduction

Recent advancements in the development of intelligent systems have led to an increased interest in understanding how humans learn about and respond to abnormal situations. Responding to this wave of interest, in this paper we model how humans learn about and respond to abnormal machine behaviour, thus linking studies of human cognition with studies of intelligent systems. We define abnormal machine behaviour as any circumstance in which a machine deviates from normal behaviour; this includes, but is not limited to, an abnormal response to controls, an abnormal combination of alarms and/or alerts, or an unusual emission of noise or heat. Intelligent systems offer a plethora of information; in the maintenance field, this includes equipment health status (thus replacing the use of human sensory systems), diagnosis through cause analysis and prognosis through mathematical and physical models (Botelho et al. 2014). In contrast, humans acquire knowledge and understanding through thought, experience and the senses when they interact with machines and systems. In fact, the extent to which intelligent systems are involved has a significant effect on the level of human engagement, and maintaining that engagement helps to ameliorate out-of-the-loop performance problems (Endsley 2015). Out-of-the-loop problems bring surprises, leading humans to experience even more behaviours as not “normal”. Recent developments in the field of synthetic psychology (Dawson 2004) have triggered an interest in modelling human cognition. These studies strive to explain cognitive processes, and some now attempt to link them with intelligent systems; for example, the cognitive, interactive training environment (Crowder and Carbone 2014) allows each actor to learn from other actors and to operate in modes that use the strengths of all actors.

In the past three decades, researchers have sought to pinpoint the varieties of human cognition involved in decision-making. A critical mainstay in such research has been cognitive continuum theory (Hammond 1981), with researchers noting its implications in multiple domains, including management, economics, war and healthcare. Whilst “types” represent dichotomous poles on the cognitive continuum, “modes” can vary continuously along it. In this article, we adopt these distinctions when we use the words “types” and “modes”. Some authors (e.g. Rasmussen 1983; Wickens 1984; Shappell and Wiegmann 2000; Reason and Hobbs 2003) focus on the limitations of cognitive capacities in making critical judgements under higher cognitive loads.

Although the advancements in intelligent systems have obvious implications for human cognitive involvement during abnormal machine behaviour, to date few researchers have applied cognitive continuum theory in this context. Despite the availability of extensive empirical research on different cognitive modes, previous studies on human-machine interaction fail to discuss a wide range of cognitive modes or to consider their involvement in responses to abnormal machine behaviour. This article fills the gap by summarising the various cognitive modes and applying cognitive continuum theory to the human response to abnormal machine behaviour.

2 Competing accounts of cognition

Until the early 1970s, humans were assumed to make logical inferences from evidence following subjective expected utility theory, or to make uncertain judgements following statistical decision theory or Bayes' theorem (Reason 1990). Various authors (e.g. Tversky and Kahneman 1974) criticised the rationalist bias of this view, with several suggesting human cognition is unable to engage in the laborious yet computationally powerful processes involved in analytic reasoning (e.g. Lichtenstein et al. 1978; Reason 1990). New theories and models began to suggest a wider range of cognitive modes, including naturalistic decision-making (e.g. Endsley 1995; Reason and Hobbs 2003; Klein 2008), and several attempts have been made to identify the capacities of less well-understood cognitive phenomena, such as pre-cognition (Snodgrass et al. 2004; Marwaha and May 2015).

Accounts of the working modes of the mind have gradually accepted the idea of two permanently operating information-processing systems: intuitive and analytic. Dual cognition is not a new idea, however; it can be traced to William James’ Principles of Psychology (1890). The dual processing account of cognition has been applied in many areas, including learning, attention, reasoning, decision-making and social cognition (Evans 2006). Rather confusingly, studies on dual processing have developed their own terms to distinguish the two cognition types: heuristic/analytic (Evans 1984), experiential/rational (Epstein 1994), autonomous/algorithmic (Dennett 1996), associative/rule-based (Sloman 1996), implicit/explicit (Evans and Over 1996), heuristic/systematic (Chen and Chaiken 1999), autonomous/algorithmic and reflective mind (Stanovich 1999), holistic/analytic (Nisbett et al. 2001), type 1/type 2 (Evans 2006) and C-system/X-system (Lieberman 2007), among others. Although these propositions use different terminologies, Kahneman (2011) says there is consensus among them, and he defines the two systems as follows:

- System 1: Cognitive process working associatively, intuitively and tacitly;
- System 2: Cognitive process operating deliberately, rationally and analytically.

A large volume of work explores the features of both systems.

2.1 Key characteristics of system 1: intuitive cognition

Although a general definition of intuition is “knowing without being able to explain how we know” (Vaughan 1979), many continue to debate the nature of intuition. In the management domain, intuition is defined as “a capacity for attaining direct knowledge or understanding without the apparent intrusion of rational thoughts or logical inference” (Sadler-Smith and Shefy 2004) and as “affectively-charged judgments that arise through rapid, non-conscious, and holistic associations” (Dane and Pratt 2007). Despite the number of definitions, recent studies (Evans and Stanovich 2013; Patterson 2017) agree on certain features of intuitive cognition:

- It is prominent in tasks solved from experience (e.g. Klein 1993);
- It is used when a secondary task draws away working-memory resources (e.g. De Neys 2006);
- It appears under increased time pressure (e.g. Evans and Curtis-Holmes 2005);
- It focuses on perceptual cues (e.g. Hammond et al. 1997);
- It is applicable in situations with meaning (e.g. Reyna 2012);
- It has autonomous processing capabilities (e.g. Evans and Stanovich 2013; Patterson 2017);
- It has the ability to process multiple cues simultaneously (e.g. Evans and Stanovich 2013);
- It has a higher propensity to make biased responses (e.g. Kahneman 2000).

2.2 Key characteristics of system 2: analytic cognition

System 2 refers to the deliberate type of thinking involved in focus, reasoning or analysis. In this thinking, the distinct features of individual capacity and task characteristics work together to prescribe a specific procedure for selecting a course of action. For the most part, researchers agree system 2 has the following major characteristics (Evans and Stanovich 2013; Patterson 2017):

- It involves reflective rather than impulsive thinking (e.g. Evans 2008);
- It verbalises thoughts, providing the foundation for decision-making (e.g. Hayes and Broadbent 1988);
- It spends a long time on deliberation (e.g. Hammond et al. 1997);
- It uses symbols to support precise analysis (e.g. Hammond et al. 1997);
- It searches for rules in events (e.g. Hayes and Broadbent 1988);
- It is capable of serial processing (Evans and Stanovich 2013);
- It usually derives normative responses (Evans and Stanovich 2013).

2.3 System 1 vs system 2

Presently, there is a lack of consensus about the relative effectiveness of the two systems. Many researchers argue intuitive cognition has an advantage over analytical cognition, particularly as it has been shown to be immune to time pressures and workload (e.g. Evans and Stanovich 2013; Klein 2008; Patterson 2017). Referring to experiments at the University of Tulsa, Myers (2002) claims non-conscious learning can create patterns too complex and too confusing to notice consciously. A meta-analysis of research on intuition in the managerial context (Dane and Pratt 2007) suggests intuitive decisions are, in many situations, of higher quality than analytic ones. Kahneman (2000) suggests the existence of a kind of “intuitive intelligence”, arguing that some people make better judgments based on representativeness than others and, consequently, achieve greater predictive accuracy.

However, Kahneman (2000) recognises that intelligence is not only the ability to reason; it is also the ability to find relevant material in memory and to deploy attention when needed, and failing to do so makes errors of judgement more likely. Although the heuristics of intuition (Tversky and Kahneman 1974) are highly economical and usually effective, they can lead to systematic and predictable errors (Gigerenzer and Todd 1999; Gladwell 2005). Intuition has also been considered limited, constrained, poor in predictive ability and biased (Buckwalter 2016; Amadi-Echendu and Smidt 2015). Whilst analytical cognitive processes lead to slow decision performance, a purely intuitive cognitive process may be too risky (Klein 2008). Taking a more balanced view, the SRK model (Rasmussen 1983) provides a foundation for exploring errors at different levels of cognitive processing: skill-based, rule-based and knowledge-based.

Evans and Stanovich (2013) argue that only the dichotomous demand for working memory (does not require working memory versus requires working memory) actually defines the two systems; the other characteristics are merely correlates. Nevertheless, researchers concur that there are two systems. What is missing is an understanding of their possible interactions; the dual cognitive system is criticised for its limited explanation of how the two systems may interact (e.g. Evans 2006; Harteis and Billett 2013). These criticisms call for an account of how the two systems work in conjunction.

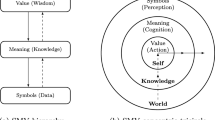

2.4 Continuum not dichotomy

Hammond (1981) suggests a cognitive continuum, placing intuitive cognition (system 1) at the unconscious end, analytical cognition (system 2) at the conscious end and quasi-rationality in the middle. Distinctions between system 1 and system 2 types vary within this continuum (see Fig. 1). This theory rejects a dichotomous view of system 1 and system 2 cognition as pure intuition versus pure analysis. Effective decision-making may require flexibility on the cognitive continuum, oscillating between analysis and intuition (Hamm 1988). Moreover, intuitive decisions can be partly conscious, and rational decisions can be partly unconscious.

In support of this view, Langsford and McKenzie (1995) and Creswell et al. (2013) suggest decision-making can be influenced by both implicit and explicit processes. Reason (1990) envisages a continuum of dual-task situations: at one extreme, tasks are wholly automatic and thus make little or no claim on attention; at the opposite extreme, the demands imposed by each task are so high that they cannot be performed adequately, even in isolation. Hammond et al. (1997) present a framework to analyse how the characteristics of various tasks can induce the corresponding cognitive modes of decision-making and reasoning. These characteristics include the number of cues, the measurement of cues, redundancy among cues, the degree of task certainty, the display of cues and the time period. Gibson (1977), in his affordance theory, identifies further determinants of the opportunities for action provided by a particular object or environment. Vicente and Rasmussen (1992) identify and apply a number of determinants of cognition in the design of technology that works for people in complex sociotechnical systems (ecological design).
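To illustrate the idea that task characteristics induce a cognitive mode, the following Python sketch averages ratings of the six characteristics attributed to Hammond et al. (1997) into a position on the continuum. It is a hypothetical toy, not Hammond's own index: each rating is assumed to be already oriented (0 for the intuition-inducing end, 1 for the analysis-inducing end), the direction of each characteristic would have to be taken from Hammond et al. (1997) rather than from this sketch, and both the equal weighting and the one-third cut-offs are assumptions.

```python
from statistics import mean

# The six task characteristics attributed to Hammond et al. (1997) in the text.
CHARACTERISTICS = (
    "number_of_cues",
    "measurement_of_cues",
    "redundancy_among_cues",
    "task_certainty",
    "display_of_cues",
    "time_period",
)


def continuum_position(ratings: dict[str, float]) -> float:
    """Average oriented ratings into a position on [0, 1].

    Assumption: every rating is pre-oriented so that 0 marks the
    intuition-inducing end and 1 the analysis-inducing end.
    Equal weighting of the six characteristics is also an assumption.
    """
    missing = set(CHARACTERISTICS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    for name in CHARACTERISTICS:
        if not 0.0 <= ratings[name] <= 1.0:
            raise ValueError(f"rating for {name} must lie in [0, 1]")
    return mean(ratings[name] for name in CHARACTERISTICS)


def induced_mode(position: float, low: float = 1 / 3, high: float = 2 / 3) -> str:
    """Map a continuum position to a coarse mode (hypothetical cut-offs)."""
    if position < low:
        return "intuitive"
    if position > high:
        return "analytic"
    return "quasi-rational"
```

For example, rating five characteristics at 0.2 and task certainty at 0.5 gives a position of about 0.25, which the sketch labels "intuitive"; uniform ratings of 0.5 land in the quasi-rational middle. Keeping the orientation outside the code makes the sketch neutral about which end each characteristic favours.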

3 Limitations of existing models and the need for a new model

Across a number of domains, there is an ongoing discussion of the significance of the various modes of human cognition (e.g. Kahneman 2011). Two domains where cognitive continuum theory is especially prominent are management and healthcare (e.g. Cader et al. 2005; Dhami and Thomson 2012; Custers 2013; Parker-Tomlin et al. 2017). Even though human cognition has been extensively researched in multiple domains, there are gaps in the understanding of human-machine interaction. To the best of our knowledge, no research to date has focused on human cognition of abnormal machine behaviour using the cognitive continuum approach. Therefore, in this study, we suggest a conceptual model describing how humans use different cognition modes to respond to abnormal machine behaviours. A conceptual model can describe a system based on qualitative assumptions about its elements, their interrelationships and system boundaries (businessdictionary.com). As such, it can help the researcher know, understand or simulate the subject represented by the model. This study touches on three important areas, making an original contribution to the field of human-machine interaction.

Firstly, we critically examine the potential of different cognition modes in the context of human-machine interaction. Although extensive research has considered their limitations and their influence on safety during human-machine interactions (e.g. Dhillon 2014), previous studies lack critical emphasis on their potential. Although we acknowledge the limitations, our intention is not to reiterate them; instead, we explore the effectiveness of different cognition modes in responding to abnormal machine behaviour. To support our position, we offer empirical evidence showing human handling of abnormal machine behaviour.

Secondly, we seek a label that can demarcate the cognition modes in our domain of interest. We have already shown the general consensus on the aspects of system 1 and system 2, despite the proliferation of dual-process theories with confusing labels (autonomous/algorithmic, associative/rule-based, implicit/explicit etc.). Distinctions are not always clear cut (Evans and Stanovich 2013), and if we rely on such distinctions or general labels (i.e. system 1/system 2) for the two types of processing, our domain-specific understanding might be limited. Therefore, we define labels suitable for mapping cognition when humans respond to abnormal machine behaviour.

Thirdly, we take advantage of continuum theory to explain how the two cognitive systems are separated yet interrelated. The literature makes a variety of propositions on the degree of separation and tendencies for dominance by one particular system. For instance:

- Studies in the management context propose people rely on intuitive and rational processes at the same time (e.g. Hodgkinson and Clarke 2007);
- Default-interventionist theories assume fast, intuitive processing generates intuitive default responses into which subsequent reflective analytical processing may or may not intervene (e.g. Evans 2007; Kahneman 2011);
- Intuitive cognition is the basis for all human reasoning and decision-making, with analytical cognition controlling the search for information (Betsch and Glöckner 2010);
- Experience leads to decision-making supported partly by unconscious processes and partly by mental simulations (Klein 2003);
- The cognition phenomenon of sudden insights is intuitive, but it appears after a period of deliberate but unsuccessful thinking activities (e.g. Myers 2002; Bastick 2003);
- Conscious learning is argued by some to be the default case for human learning, but the theory has not been satisfactorily established (Shanks and St John 1994);
- Differences between individuals’ cognitive abilities and thoughts may show the differences between more analytical minds and more intuitive minds (e.g. Dennett 1996; Epstein et al. 1996);
- Various task characteristics induce the corresponding cognitive mode of decision-making and reasoning (Hammond et al. 1997).
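Of the propositions above, the default-interventionist account is the most procedural, so it lends itself to a short sketch. The Python below is a hypothetical illustration, not a model published by Evans (2007) or Kahneman (2011): the two processing routes are passed in as plain functions, and the condition under which system 2 intervenes (a detected conflict plus available working-memory capacity) is an assumption chosen to echo the working-memory criterion of Evans and Stanovich (2013).

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Response:
    action: str
    source: str  # "system 1" for the default, "system 2" for an intervention


def respond(
    cue: str,
    intuitive: Callable[[str], str],
    analytic: Callable[[str], Optional[str]],
    conflict_detected: bool,
    capacity_available: bool,
) -> Response:
    # Fast, intuitive processing always supplies a default response first.
    default = intuitive(cue)
    # Assumed trigger: reflective processing intervenes only when the default
    # is flagged as doubtful AND working-memory capacity is free.
    if conflict_detected and capacity_available:
        revised = analytic(cue)
        if revised is not None:  # analysis may still fail to produce an answer
            return Response(revised, "system 2")
    # Otherwise the intuitive default stands.
    return Response(default, "system 1")


# Hypothetical example: a busy operator facing a familiar alarm.
def habitual(cue: str) -> str:
    return "acknowledge alarm"


def deliberate(cue: str) -> Optional[str]:
    return "cross-check " + cue


print(respond("pressure alarm", habitual, deliberate,
              conflict_detected=True, capacity_available=False))
# prints Response(action='acknowledge alarm', source='system 1')
```

With capacity_available=True, the same call returns the analytic "cross-check pressure alarm" response instead; the sketch only captures that intervention is conditional, not guaranteed.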

To sum up, research has not applied the understanding of human cognition as existing on a continuum to human-machine interactions. Therefore, the objectives of this work are the following:

1. To critically assess the literature on the different cognition concepts and examine empirical evidence to show how cognitive involvement during abnormal machine behaviour points to a domain-specific cognitive continuum.
2. To classify and evaluate different concepts to suggest a suitable classification of a continuum representing how humans respond to abnormal machine behaviour.
3. To recommend a theoretical approach to represent cognition concepts related to abnormal machine behaviour on the continuum by taking into account the degree of cognitive separateness.

4 Research design

In the introductory sections, we referred to a range of concepts representing different types of cognition and explained our intention to explore cognition modes along a continuum. The development of a new theoretical model requires an examination of the literature, summarising and synthesising its findings. We do not intend to provide a comprehensive list of the published and unpublished studies, as this would be the focus of a systematic literature review (Cronin et al. 2008). Rather, we summarise a body of literature and draw conclusions on the discussions of our topic. However, we borrow some features of a systematic literature review by using explicit criteria to identify the literature and making the criteria apparent to the reader. The key search engine used was Google Scholar; it subsequently directed us to the respective scientific publisher webpages. The literature review consisted of two phases; each had different criteria for its selection of literature.

4.1 Research design phase 1: selection of the framework for the new model

During the first phase of the literature review, we searched for a suitable cognitive concept for this study. We identified three qualifying criteria for the framework.

- Firstly, when working with machines, certain physical requirements are related to the operation of the system: system safety and reliability, detection of abnormalities, correct diagnosis (determining root causes) and prognosis (predicting consequences); all play an important role in how an individual should respond to abnormal machine behaviour. Therefore, we define cognition as including the ability to detect abnormalities, give a diagnosis and perform prognosis.
- Secondly, a framework must accommodate multiple, broader concepts of human cognition that can be placed on a continuum. The selected theoretical perspectives must be able to model wider modes of cognition.
- Thirdly, the theoretical perspective should refer to the key literature in this field, including empirical studies addressing cognition and abnormal machine behaviour.

Following the literature review in phase 1, we hypothesise that the three-level situation awareness (SA) model meets the selection criteria. The reasoning behind this hypothesis is explained at greater length in the following sections.

4.2 Research design phase 2: development of the theoretical model

The second phase of the literature review examines empirical work on the subject area to gain a better theoretical understanding of the underlying concepts. For this phase, we developed the following criteria:

- Firstly, propositions of SA in empirical studies. These will suggest modes on the continuum.
- Secondly, empirical studies (of SA) on human response to abnormal machine behaviour. These frame the different cognitive demands of responding to abnormal machine behaviour and the cognition modes that cater to these demands.
- Thirdly, in-depth exploration of any concepts requiring better understanding for our argumentation.

In our search for empirical studies on cognition modes, we prioritise real-life events of human-machine interaction, although we also consider a few laboratory studies. Yin (2003) states “the distinctive need for case studies arises out of the desire to understand complex phenomena because the case study method allows investigators to retain the holistic and meaningful characteristics of real-life events”. Therefore, we use case studies to provide empirical evidence of how different SA propositions apply to human cognition of abnormal machine behaviour.

The keywords used in the Google Scholar search are “situation awareness”, “human-machine interaction”, “machine abnormality”, “diagnostic”, “accidents” and “case study”.

So far, the focus has been on the first objective: to critically assess the literature on cognition concepts and empirical evidence. To reach the second objective, we classify the cognitive concepts reviewed in the literature. Because we want to develop a continuum, we classify them under two themes: significance for learning about abnormal machine behaviour and dominance of system 1 or 2. The former classification guides us to disregard any concept that appears less significant for the domain of study. We qualitatively assess a concept’s significance through a critical review. Given our inclusion criteria, we do not expect to disregard many concepts. The latter classification, the dominance of system 1 or 2, enables us to reach the objective of labelling the poles on the cognition continuum. Our critical qualitative assessment of the dominance (of system 1/2) also suggests positioning on the continuum. To emphasise an important point: once the classification is completed, the “concepts” should be positioned on the continuum. At this point, they can be called “modes” (on the continuum).
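The two-step classification described above can be pictured as a small data pipeline. In this hypothetical Python sketch, the qualitative judgements are assumed to have already been made and are recorded as a significance flag and a dominance score on an invented 0 to 1 scale; the code only filters and orders them. The concept names and numbers are placeholders, not results of this review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    name: str
    significant: bool  # outcome of the qualitative significance review
    dominance: float   # assumed scale: 0.0 = system 1 dominant, 1.0 = system 2 dominant


@dataclass(frozen=True)
class Mode:
    name: str
    position: float  # position on the continuum


def to_modes(concepts: list[Concept]) -> list[Mode]:
    """Apply both classifications and return the surviving concepts as modes."""
    # Theme 1: disregard concepts judged less significant for the domain.
    kept = [c for c in concepts if c.significant]
    for c in kept:
        if not 0.0 <= c.dominance <= 1.0:
            raise ValueError(f"dominance of {c.name!r} must lie in [0, 1]")
    # Theme 2: order by dominance, from the system 1 pole to the system 2 pole.
    ordered = sorted(kept, key=lambda c: c.dominance)
    # Once positioned, each concept is referred to as a "mode".
    return [Mode(c.name, c.dominance) for c in ordered]


# Placeholder inputs only; these are not assessments from this study.
example = [
    Concept("concept A", significant=True, dominance=0.8),
    Concept("concept B", significant=False, dominance=0.1),
    Concept("concept C", significant=True, dominance=0.2),
]
print([m.name for m in to_modes(example)])  # prints ['concept C', 'concept A']
```

Keeping the judgement (the two fields) separate from the ordering step mirrors the point that positioning follows classification rather than replacing it.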

Finally, we critically compare the positions of the different cognition concepts based on their key characteristics, determined through the literature review. This allows us to build relations between different cognitive demands and to determine the effectiveness of different modes in fulfilling those demands. This takes us to our final objective, recommending a theoretical approach to the degree of separateness and the positioning of a particular cognition mode on the continuum. Figure 2 illustrates the research design.

5 Review of theories and empirical work: development of the new model

The theoretical and empirical work reviewed in this section provides the architecture for the poles and modes of the continuum. We first justify the selection of SA as the framework for the new model. Then we review the various propositions of SA and the empirical evidence on the cognition of abnormal machine behaviour.

Various theories and models show how human cognition behaves with respect to awareness of the environment and awareness of abnormalities: signal detection theory (Wickens 2002), the SEEV (Salience, Effort, Expectancy, Value) model (Wickens et al. 2003), the perception/action cycle model (Smith and Hancock 1995), the predictive account of awareness (Hourizi and Johnson 2003), the theory of activity model (Bedny and Meister 1999) and the three-level situation awareness model (Endsley 1995). However, the first two are concerned with, and limited to, the detection of cues; they cannot theorise how a diagnosis or prognosis is performed. Of the remaining models, we are mostly concerned with the last, the three-level SA model, which has been the most widely referenced (Uhlarik and Comerford 2002). There is consensus within the cognitive paradigm that situation awareness (SA) includes the perception of the elements in the environment within a certain time and space, the comprehension of their meaning and the projection of their status in the near future (Endsley 1995). This consensus suggests the development of SA through the perception of cues (level 1), the integration of multiple pieces of information and the determination of their relevance to certain goals (level 2) and the ability to forecast future situation events and dynamics (level 3). The three-level SA model is illustrated in Fig. 3.

Fig. 3 The three-level model of situation awareness (Endsley 1995)

Three main aspects of the three-level SA model motivate us to consider it as the framework for modelling human cognition of abnormal machine behaviour:

- Handling of machine abnormalities and the three levels of SA are analogous;
- SA interpretation and interventions are applied in similar domains; and
- Different SA propositions enable a discussion along the continuum.

Each of these motivators is discussed below.

5.1 Motivations for considering three-level SA model

5.1.1 Motivation 1: the levels of SA and the handling of machine abnormalities are analogous

The three levels of the SA model, i.e. perception, comprehension and projection, are analogous to the detection, diagnosis and prognosis of abnormal machine behaviour. Figure 4 shows this comparison. This analogous structure suggests that SA interventions could be applied to assist human cognition of abnormal machine behaviour.
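To make the analogy concrete, the following hypothetical Python sketch treats each SA level as one step in handling an abnormal machine: level 1 (perception, detection) flags cues outside a normal band, level 2 (comprehension, diagnosis) matches the set of abnormal cues against known fault signatures, and level 3 (projection, prognosis) linearly extrapolates the temperature trend to a limit. The sensor names, normal bands, fault signatures, limit and linear extrapolation are all invented for illustration and are far simpler than real diagnostic or prognostic models.

```python
from dataclasses import dataclass
from typing import Optional

NORMAL_BANDS = {"temperature": (20.0, 80.0), "vibration": (0.0, 5.0)}  # hypothetical
FAULT_SIGNATURES = {  # hypothetical cue combinations -> candidate root cause
    frozenset({"temperature", "vibration"}): "bearing wear",
    frozenset({"temperature"}): "cooling fault",
}


@dataclass
class Assessment:
    abnormal_cues: set[str]          # level 1 / detection
    diagnosis: Optional[str]         # level 2 / diagnosis
    steps_to_limit: Optional[float]  # level 3 / prognosis, in sampling intervals


def perceive(latest: dict[str, float]) -> set[str]:
    """Level 1 (detection): which cues lie outside their normal band?"""
    return {
        name for name, value in latest.items()
        if name in NORMAL_BANDS
        and not NORMAL_BANDS[name][0] <= value <= NORMAL_BANDS[name][1]
    }


def comprehend(cues: set[str]) -> Optional[str]:
    """Level 2 (diagnosis): integrate the abnormal cues into a known pattern."""
    return FAULT_SIGNATURES.get(frozenset(cues))


def project(history: list[float], limit: float) -> Optional[float]:
    """Level 3 (prognosis): extrapolate the last step of the trend to a limit."""
    if len(history) < 2:
        return None
    slope = history[-1] - history[-2]
    if slope <= 0:
        return None  # not trending towards the limit
    return max(0.0, (limit - history[-1]) / slope)


def assess(temperature_history: list[float], vibration: float,
           temp_limit: float = 100.0) -> Assessment:
    """Run the three levels in order; expects at least one temperature reading."""
    cues = perceive({"temperature": temperature_history[-1], "vibration": vibration})
    diagnosis = comprehend(cues) if cues else None
    steps = project(temperature_history, temp_limit) if "temperature" in cues else None
    return Assessment(cues, diagnosis, steps)
```

For instance, assess([70.0, 78.0, 86.0], vibration=6.0) flags both cues, returns the hypothetical "bearing wear" diagnosis and projects the limit 1.75 sampling intervals ahead. The strict ordering of the three functions is a simplification; the SA literature does not require the levels to be completed one after another.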

5.1.2 Motivation 2: SA intervention applies in similar domains

The SA community has shown that although the capacity to collect sensory data is increasing, data overload can threaten the ability of people to operate (Endsley and Jones 2011). Systems need to allow people to manage information in order to understand what is currently happening and project what will happen next. Certain methodologies and design principles have been proposed for engineers and designers (Endsley 2004). A wide range of relevant issues in a variety of safety-critical domains (such as aircraft, air traffic control, power plants, construction, offshore drilling, maritime operations, advanced manufacturing systems and collaborative and isolative work) can benefit from the application of these principles (e.g. Endsley and Garland 2000; Sneddon et al. 2006; Grech and Horberry 2002; Salmon et al. 2008). Recent studies (e.g. Brady et al. 2013; Wassef et al. 2014) focus on the use of three-level SA models in healthcare interventions to perform detection, diagnosis and prognosis. These applications aim to proactively identify patients at risk and thereby mitigate risk. The use of an SA framework helps researchers to analyse and understand diagnostic errors in primary medical care settings (e.g. Singh et al. 2011). The applicability of SA to aircraft maintenance has also been supported (Endsley and Robertson 2000). Other recent studies use an SA approach to improve effectiveness in the maintenance domain (e.g. Golightly et al. 2013; Oliveira et al. 2014), focusing on information that changes from situation to situation.

5.1.3 Motivation 3: SA covers a wide range of cognitive concepts

SA has been shown to be affected by, and to follow, a chain of psychological constructs such as stress, mental workload and trust (Stanton and Young 2000; Heikoop et al. 2015). Notably, none of these relationships was found to be reversible, which suggests that SA is a central construct.

The three-level SA model can cover a wide range of cognitive concepts. Whilst novices combine and interpret information and strive to make projections with a limited working memory, Endsley (1995) argues that for experts, long-term memory stored as schemas and mental models can largely circumvent these limits, even when information is incomplete. Schemas are patterns of thought or behaviour that organise categories of information and the relationships among them (DiMaggio 1997). Mental models allow humans to “generate descriptions of the system purpose and form, explanations of system functioning and observed system status, and predictions of the future status” (Rouse and Morris 1986). The three-level SA model captures conscious analysis as well as additional processes such as deliberate sense-making processes, story building, recognition-primed decisions, heuristics and implicit cues associated with socio-technical systems (Endsley 1995, 2015). It has been identified as contributing to the development of intuitive or naturalistic theories of decision-making (Klein 2000). Therefore, in the context of the cognition of abnormal machine behaviour, we hypothesise that the three-level SA model will be able to explain deliberate attempts at detection, diagnosis and prognosis, as well as the less deliberate, more naturalistic formation of understanding. In the next sections, we discuss theoretical perspectives and empirical evidence of how SA relates to the cognition of abnormal machine behaviour. Within the machine abnormality domain, we analyse the three-level SA model for its ability to explain multiple propositions claimed to be compatible with it, such as sense-making, recognition-primed decision-making, intuition, the handling of implicit cues and heuristics. To test our hypothesis, we also look for models offering alternative explanations to SA in the domain of machine abnormality.

5.2 Sense-making

Sense-making is a concept explaining how individuals understand the environment by assigning meaning to experiences (Kramer 2010; Ibarra and Barbulescu 2010). On this view, sense-making is not about information, but about values, priorities and preferences (e.g. Weick 1993, 1995; Woods and Sarter 1993). Sense-making rests on a reasonable and memorable story that holds disparate elements together long enough to energise and guide action (Weick 1995). The literature points to four major attempts to make connections and draw distinctions between SA and sense-making.

Firstly, sense-making is vulnerable to a sense of predetermination. How sense-making differs from SA is shown in the case study of the grounding of the ship Royal Majesty on a sandbank close to the Massachusetts shore instead of arriving in Boston (Dekker and Lutzhöft 2004). The retrospective analysis of events uncovers a series of indications that the crew should have noticed and understood regarding the abnormality of the GPS unit. That the very audience who most needed the repeated warnings about the real nature of the situation (i.e. the crew) did not register them raises serious questions. In answering these questions, the study points to the phenomenon of hindsight. Weick (1995) notes: “People who know the outcome of a complex prior history of tangled, indeterminate events, remember that history as being much more determinant, leading inevitably to the outcome they already knew”. Kahneman (2011) holds the same position: “a compelling narrative fosters an illusion of inevitability”. As a result, we do not build (deliberately or not) a schema or a correct mental model, merely because the event is now seen as having been inevitable. Even though the notion of an “errant mental model” (Endsley 2011) is closely related, this vulnerability is not adequately addressed in the SA literature.

Secondly, efforts to understand the environment, effortful processes and retrospection provide evidence of the explicit nature of the information in sense-making. In a meta-analysis of sense-making research, Mills et al. (2010) propose a "critical sense-making" approach and demonstrate how it considers power and context. A recent sense-making study (Rankin et al. 2016) focuses on the difficulties pilots face during abnormal aircraft behaviour. These difficulties include identifying subtle cues, managing the uncertainties of automated systems, coping with multiple goals and narrow timeframes, and identifying appropriate actions. Although many approaches use three-level SA to model such situations (e.g. Endsley 1995, 1999; Bolstad et al. 2010), Rankin et al. approach them through sense-making, which they recognise as a complex process. Their model of the cognition of abnormal behaviour considers the abilities to identify cues, diagnose problems and take action by questioning, preserving, elaborating, comparing, switching and abandoning the search for a frame, and by rapid frame-switching. Whilst we see a similarity between a "frame" (a structure into which data are fitted, linking them to other elements: Klein et al. 2007) and the SA "schema", we do not pursue it here.

Thirdly, Endsley (2004) argues that sense-making is essentially the process of forming level 2 SA from level 1 data. This means sense-making is an effortful process of gathering and synthesising information, using story building and mental models to find a representation that accounts for and explains disparate data. Several studies from fire-fighting (Klein et al. 2007, 2010) and air traffic control (Malakis and Kontogiannis 2013) treat sense-making as a process used to achieve the knowledge state described by the three-level SA model (Endsley and Garland 2000; Endsley 2015). Golightly et al. (2013), working in the railway maintenance domain, report that sense-making combines experience, expectations and interpretations in understanding a critical situation. The takeaway for the cognition of abnormal machine behaviour is that the drive for sense-making can direct attention towards seeking out the additional information (Chater and Loewenstein 2016) required for a diagnosis in a critical situation.

Finally, sense-making involves retrospection. Endsley (2015) distinguishes sense-making from SA, considering the former to be a backward-looking process. Put another way, as Sonenshein (2007) suggests, post hoc attributions of sense-making can reflect individuals' engagement, whereby individuals infer their own behaviour after the fact through self-perception. On this understanding, sense-making fails to develop a model linked to goals, plans, expectations, task/system effects and dynamic processing. Weick (1995) defends sense-making, arguing that "although sense making can be constructed retrospectively, it can also be used prospectively and even resonates with other people". For the cognition of abnormal machine behaviour, the important part of sense-making is understanding, or making sense of, the difference between a developing setting and the old one (Louis 1980), and thus acknowledging the abnormality itself. Sense-making is not solely the activity of perceiving and interpreting input from the environment after the fact, but a continuous process of fitting what is observed with what is expected, an active process guided by our current understanding (Klein et al. 2007). Likewise, in SA, a person's goals and expectations influence how attention is directed and how information is perceived and interpreted (Endsley 1995).

These studies suggest that people do not produce an internalised simile of a situation but construct a plausible story of what is going on, accounting for sensory experiences in a way that supports actions and goal achievement. This plausibility allows people to make retrospective sense of whatever happens. In short, as Weick (1995) says, sense-making is not about accuracy but about plausibility. This view is influenced by the Gestalt tradition, whereby humans perceive meaningful wholes, not elements, and meanings come effortlessly and pre-rationally (Dekker and Lutzhöft 2004).

5.3 Recognition-primed decisions

Several authors (e.g. Cannon-Bowers et al. 1996) support the suitability of the recognition-primed decision model (RPDM) for studying decisions in naturalistic environments, characterised by time pressure, changing conditions, unclear goals, degraded information and team interactions. Accordingly, the model is prominent in studies of fire commanders, tank platoon leaders, critical care nurses, chess tournament players and design engineers (Klein 1993, 2008, 2015). In selecting cases for study, Klein (2008) prefers typical events, believing that a selection of rare critical incidents would over-emphasise deliberate, analytical decisions. There is a growing demand to adapt RPDM to intelligent systems. Demir and McNeese (2015) suggest that modelling interactions between humans and synthetic teammates needs to build on RPDM to support human-agent collaboration and expectancy-based decisions. Along the same lines, Dorton et al. (2016) call for a decision support system that can emulate the recognition-primed decisions of experts. We see opportunities for applying RPDM in such systems, not merely to deal with the external environment, but to understand and predict the behaviours (and abnormalities) of both human and synthetic teammates.

Although “perception” (of the three-level SA model) is not obvious in RPDM, Klein (2000) identifies perception as the totality of the events in the experienced environment. Klein’s definition suggests that level 2 SA will map more directly as the recognition of the situation itself, particularly as it leads to a determination of the most important cues, the relevant goals and the reasonable actions. Klein (2000) also identifies “projection” within the RPDM model as the expectancies generated once a situation is recognised as typical. However, as expectancies fall into the four aspects of the “recognition” of RPDM (see Fig. 5), the above consideration implies “projection” is part of the recognition of the totality of the situation. In other words, recognition brings the knowledge about the most critical cues, the relevant goals, the events to expect next and the actions commonly taken.

Fig. 5 A recognition-primed decision-making model (Klein 1993)

Despite the four distinct aspects of “recognition”, i.e. plausible goals, expectancies, relevant cues and actions, RPDM does not claim that a decision maker determines each of the four aspects and then infers the nature of the situation; in fact, Klein (2000) suggests the contrary is true. Using their intuition, i.e. the patterns they have acquired, experts usually identify an effective option as the first one they consider (Klein et al. 2010). Moreover, a “familiar situation” in the model does not exclusively refer to “patterns”. Instead, it refers to the four aspects of recognition. This emphasises the dominance of “recognition” in this model. When applied to the domain, we are interested in, RPDM suggests, “recognition” as the totality of detection of a machine abnormality, diagnosis and the prognosis, instead of recognising them as distinct steps.

The outcome of pattern matching is an action queue of plausible responses, ordered from the most plausible ("actions 1…n" in the model). RPDM can therefore model how a good-enough solution is found for a novel situation, to be mentally simulated and/or acted upon. This suggests that RPDM can model how a novel, abnormal machine behaviour can become familiar through sufficing expectancies. However, RPDM holds that experts do not consciously examine the options against one another; notably, Klein believes they do not subconsciously examine them either. Their challenge is not choosing between alternative options but making sense of the events and conditions, which leads them to choose the first option as the most plausible (Klein 2015). RPDM recognises that mental simulation is followed by action cues; the option that comes first does not require deliberation, and subsequent options may or may not be considered. The model presupposes familiar situations. We argue that merely seeking more information and reassessing the situation (as shown in the model) cannot guarantee that a situation will become familiar. Therefore, we support the proposition that RPDM is better suited to modelling familiar machine abnormalities handled by human experts.
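To make the contrast with deliberate option comparison concrete, the serial evaluation that RPDM describes can be sketched in Python. This is a hypothetical illustration only, not Klein's formalisation. The cue sets, actions and the `simulate` callback are invented for the example. Recognition is reduced to an exact lookup, and the model's "modify the option" branch is omitted. The sketch shows that options arrive already ordered by plausibility, and that the first one surviving mental simulation is taken without any scoring of the others.

```python
from typing import Callable, Dict, FrozenSet, List, Optional

# Hypothetical experience base: a familiar cue pattern is recognised as a
# situation type that already carries an action queue, ordered from the
# most to the least plausible option ("actions 1...n").
EXPERIENCE: Dict[FrozenSet[str], List[str]] = {
    frozenset({"noise", "vibration"}): ["reduce_load", "check_bearing", "stop_machine"],
    frozenset({"high_temp", "alarm"}): ["check_coolant", "stop_machine"],
}


def rpd_choose(cues: FrozenSet[str],
               simulate: Callable[[str], bool]) -> Optional[str]:
    """Return the first action that passes mental simulation, or None.

    Options are evaluated one at a time (satisficing); they are never
    scored or ranked against each other.
    """
    actions = EXPERIENCE.get(cues)
    if actions is None:
        # Situation not recognised as typical: in RPDM the decision maker
        # would seek more information and reassess the situation.
        return None
    for action in actions:
        if simulate(action):  # "Will it work?" imagined in context
            return action     # first workable option is implemented
    return None


# Usage: the imagined outcome rules out only the first option.
workable = lambda a: a != "reduce_load"
print(rpd_choose(frozenset({"noise", "vibration"}), workable))  # check_bearing
print(rpd_choose(frozenset({"smell"}), workable))               # None
```

The `None` result for an unrecognised cue set mirrors the argument above: the sketch has nothing to offer until the situation is familiar, and further information gathering alone does not make it so.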

5.4 Skilled intuition

Intuition is usually defined as the capability to act or decide appropriately without deliberately and consciously balancing alternatives, without following a certain rule or routine and, possibly, without awareness (Kahneman and Klein 2009). There are two types of intuitive ability: the first enables people to jump to a decision on action based on domain expertise (skilled intuition); the second is heuristic bias (e.g. Kahneman and Klein 2009; Mishra 2018). The central goal of studies on naturalistic decision-making has been to explicate intuition by recognising the role of the cues experts use to make their judgements, whilst acknowledging that those cues are difficult for the expert to articulate. Despite the long debate, Kahneman and Klein (2009) "fail to disagree" that skilled intuition will only develop in an environment of sufficient regularity. We discuss heuristic bias in the next section; here, we focus on skilled intuition.

Pirsig (1974) notes that "each machine has its own, unique personality which probably could be defined as the intuitive sum total of everything you know and feel about it". The concept of machine personality reflects humans' natural ability to apply their senses concurrently to identify, track and interpret different signals and so provide useful descriptions of machine behaviour. A person affected by the operating environment can often "feel" the condition of a machine in a manner that may remain tacit to the individual (Amadi-Echendu and Smidt 2015). A methodology developed to assess the significance of human sensors shows that sight, touch and sound can assist abnormality detection (Illankoon et al. 2016); because these abilities are mostly subtle, the authors use cognitive task analysis techniques to uncover them. The existence of intuitive cognition has important implications for how human cognition and its interaction with computers are conceptualised, characterised and understood (Patterson et al. 2010). It can provide a foundation for the concept of an intuitive user interface (Baerentsen 2000), one that is immediately understandable to all users without the need for special knowledge or special educational measures. There is also increasing interest in modelling human-like intuition (e.g. Tao and He 2009; Dundas and Chik 2011; Mishra 2018).

Eraut (2000) identifies intuition not only as pattern recognition but also as rapid responses to developing situations based on the tacit application of tacit rules. These rules may not be explicit or justifiable; their distinctive feature is that they are tacit at the moment of use. Polanyi developed the concept of tacit knowledge as part of a problematic directed at a philosophical conception of science and scientific theorising (Mooradian 2005). Polanyi (1962) distinguishes subsidiary and focal awareness, noting: "Our subsidiary awareness of tools and probes can be regarded now as the act of making them form a part of our own body. We pour ourselves out into them and assimilate them as parts of our own existence. We accept them existentially by dwelling in them" (see Polanyi 1962 for the classic examples of skilfully using a hammer and playing the piano). Several authors (e.g. Brown and Duguid 1998; McAdam et al. 2007; Wellman 2009) describe tacit knowledge as hard to communicate and define, and as subconsciously understood and applied; they recognise it as a valuable source of knowledge for an organisation (Nonaka 1994).

Although information derived from sensors is commonly accepted in condition assessment, the characterisation such information provides can be suboptimal (see, e.g. Amadi-Echendu and Smidt 2015). Given the high levels of uncertainty prevailing in industries such as underground mining, operators' experiential knowledge of specific conditions remains highly relevant. Human operators can prevent disastrous outcomes during non-routine critical events (Overgard et al. 2015). In a study of critical events in maritime operations, event trees of three-level SA show that operators were certain of the cues in only a few incidents, yet they still projected the incident before it happened. The operators said they neither identified a base event nor understood the problem, yet they were able to sense that something was wrong and successfully resolved the situation (Overgard et al. 2015). The notion of using an individual's experience, feelings or intuition is often treated with suspicion and sometimes rejected (Amadi-Echendu and Smidt 2015). Some studies suggest augmenting tacit knowledge with information derived from physical parameters to enhance the cognition of an abnormal condition (e.g. Griffiths 2009; Botelho et al. 2014; Dudek and Patalas-Maliszewska 2016). However, these studies also point out that the intuitive cognition of an abnormal condition involves a measure of personal interpretation and understanding relative to the operating environment. Implicit learning involves the largely unconscious learning of dynamic statistical patterns and features, which leads to the development of tacit knowledge (Patterson et al. 2010). Cognitive psychologists discuss the similarities between the implicit processing of tacit knowledge and intuition (e.g. Hodgkinson et al. 2008). We explore these relations in the following sections.

5.5 Implicit learning and retrieval

Whilst appreciating the notions of SA, situation assessment and sense-making, some authors (Croft et al. 2004; Durso and Sethumadhavan 2008) believe that understanding the implicit components of SA can help describe performance. Much of the literature associates implicit awareness with two major concepts. Firstly, learning is implicit when we acquire new information without intending to do so, and in such a way that the resulting knowledge is difficult to express (Berry and Dienes 1993). It can be characterised as what naturally occurs when an organism attends to a structured stimulus in the absence of conscious attempts to learn, with the resulting new knowledge held unconsciously (Reber 1993). Conversely, explicit learning is a conscious, hypothesis-driven process employed when learning how to solve a problem (Cleeremans et al. 1998; Stadler and Frensch 1998). Secondly, implicit memory is the non-intentional, non-conscious retrieval of previously acquired information, demonstrated by performance on tasks that do not require conscious recollection of past experience (Schacter 1987). This form of memory differs from explicit memory, the conscious, intentional recollection of past experience. Studies of artificial grammar learning and sequence learning find that participants can perform tasks above chance level but are subsequently unable to report the underlying rules (e.g. Darryl et al. 2004). The literature on implicit memory engagement during human-machine interaction includes both real-life events and laboratory experiments with novice subjects. These studies highlight the relevance of implicit learning and memory in many domains, increasing confidence in implicit knowledge as a generalisable construct for learning about and responding to machine abnormalities.

A recent study (Lo et al. 2016) finds inconsistent correlations between three-level SA, measured using the situation awareness global assessment technique (SAGAT) (Endsley 1988), and the performance of expert operators in unplanned train stoppages. The results suggest that expert operators can have high-level SA even when they do not have complete or accurate level 1 SA. Endsley (2004) suggests that higher levels of SA (level 2 and level 3) can drive the search for and acquisition of level 1 SA, implying a preference for being explicitly aware of the cues perceived, their meanings and the immediate future status (all three levels of SA). This claim, and the related SAGAT method, are challenged by the inconsistent correlations between SAGAT scores and actual performance. Final performance can be affected by factors other than SA (Endsley 1995). However, improved performance despite weak awareness (as indicated by the SAGAT score) cannot be explained by this claim. Endsley (2015) justifies measuring the various elements separately with SAGAT as the more pragmatic approach, without trying to assess the linkages between them, because those linkages are generally determined by deeply embedded mental models and schemas (Nisbett and Wilson 1977) and are therefore very difficult to articulate.

A laboratory study (Gardner et al. 2010) finds that implicit fault-diagnostic knowledge, as reflected in actual task performance, exceeds the knowledge displayed in responses to a questionnaire. Although the participating operators usually have a good understanding of the faults they encounter, they seem unable to articulate that knowledge. Studies on the type of knowledge acquired by operators of complex systems point to "mental models" of the systems' behaviour. Operators' knowledge of the components and their interrelationships may be organised on different levels of detail, called "lattices", and Moray (1990) suggests that at certain levels these lattices may differ considerably from the actual system and may even constitute wrong knowledge. In a recent study by Gugliotta et al. (2017), drivers are shown video clips ending with a hazardous situation. Drivers are more accurate in answering a question about what they would do in a similar situation than in answering questions about their awareness of what the hazard is, where it is located and what happens next. As Wickens et al. (2015) say, SA "need not be based on conscious awareness". However, a car accident is a life-threatening event; in the participants' responses, we therefore see some influence of the evolved capacities of the human brain (rather than of implicit learning), which we discuss in the next section. In a study using a distinctive method involving factor analysis, Gutzwiller and Clegg (2012) find no relationship between working memory and recognition, but they do find a clearer relationship between working memory and higher levels of SA (e.g. projection).

Fraher’s (2011) case study of US airways flight 1549 is inspired by the research methods used in leadership, organisation and management studies of disaster. The pilot who landed the aircraft in the Hudson River says, “Everything I had done in my career had in some way been a preparation for that moment”. The case has been widely studied for the challenges of responding to a sudden crisis involving intense pressure and significant uncertainty, focusing on implicit cognition as a central concept (e.g. Farley 2016). It provides insight into a decision-making process that necessitated considerable improvisation but that also relied on experience and preparedness (Weeks 2012). A number of implicit cues were heeded by the captain; for example, he felt vibrations, could smell a “cooked bird”, felt “a dramatic loss of thrust” (Fraher’s 2011) and was aware of the silence of both aircraft engines. The “design for intuition” will not only make interfaces intuitive but will provide opportunities for humans to implicitly learn how systems behave (Illankoon 2018). In sum, evidence of these studies shows an implicit awareness of abnormal machine behaviours.

5.6 Fast and frugal heuristics

A heuristic is considered a simple procedure for finding an adequate, though often imperfect, answer to a difficult question (Simon 1987; Gigerenzer and Todd 1999; Gladwell 2005; Kahneman 2011). Skilled intuition and heuristics are both automatic, arising effortlessly and often coming to mind without immediate justification. However, they have distinct features. Sources of heuristics include individual learning, social learning and phylogenetic learning (Gigerenzer et al. 2008), which are in turn based on evolved capacities of the brain and on environmental structures (Gigerenzer 2007; Järvilehto 2015). Skilled intuition, unlike heuristics, is driven by ontogenetic processes linked to previous experience and expertise (Klein 2000; Gladwell 2005; Gigerenzer 2007; Dane and Pratt 2007; Kahneman and Klein 2009; Kahneman 2011). Intuitive judgements arising from experience and skill are the province of naturalistic decision-making (NDM), whereas heuristic bias is mainly concerned with intuitive judgements based on simplified heuristics rather than specific experience (Kahneman and Klein 2009). Heuristic bias comes into play when the heuristics match the underlying statistical features of the situation (Klein 2015). Gigerenzer (2007) assumes that gut feelings produced by heuristics or rules of thumb are common to all humans and shaped by our environment, whereas Klein states that recognition-priming picks up complex strategies of action based on prior experience and expertise. Generally, heuristic bias is considered less accurate and more prone to systematic errors, but researchers working on fast and frugal heuristics (FFH) see heuristics as having both positive and negative aspects (Gigerenzer and Todd 1999). Some researchers claim that simple heuristics might even outperform complex, deliberate algorithms, as they can enable both living organisms and intelligent systems to make smart choices quickly with minimal information.

Three heuristics employed in judgements under uncertainty (Tversky and Kahneman 1974) are frequently mentioned in studies on human error. Firstly, "representativeness" is usually employed when people attempt to judge the probability of an object or event. Secondly, the "availability" of instances or scenarios is employed when people attempt to assess the frequency of a class or the plausibility of a particular development. Thirdly, "adjustment from an anchor" is employed in numerical prediction when a relevant value is available. Somewhat differently, Murata et al. (2015) classify heuristics as representativeness, availability and confirmation (affect). Acknowledging the rather confusing terminology, Peon et al. (2017) classify heuristics and judgemental biases into five main categories: availability, representativeness, affect, familiarity, and excessive optimism and overconfidence.

Closer inspection of human errors during abnormal machine behaviours suggests an association with heuristics. Murata et al. (2015) demonstrate how heuristic-based biases contribute to distorted decision-making and eventually become the main cause of disasters. Their analysis of the Three Mile Island nuclear power plant disaster concludes that the incident was possibly related to confirmation bias. Operators believed that the closed valve of an auxiliary feedwater pump would not cause any critical disaster, even though information contradicting this expectation was available. Overall, their study suggests that we must identify where and under what conditions these heuristic, overconfidence and framing-based cognitive biases are likely to come into play in specific man-machine interactions.

Many studies find human judgemental errors in response to machine abnormalities, particularly in the aviation sector (Shappell and Wiegmann 2000; Schmidt et al. 2003; Krulak 2004; Rashid et al. 2013). However, because they cannot access the cognitive mechanisms behind the judgements, these studies do not go on to uncover the heuristics behind the errors. Even though skilled intuition and heuristic bias are different, many individuals cannot identify which system comes into play when they employ automatic thinking (Kahneman and Klein 2009). The problem with gut feelings is that many other things appear suddenly in our minds with similar clarity, and we feel like acting on them as well (Gigerenzer 2007). For the same reason, few studies attempt to uncover the potential of human judgements with respect to machine abnormalities. This challenge opens important avenues for future research.

On the other hand, there is increasing interest in heuristics-based intelligent systems for machine abnormality detection, even though computational models of heuristics often have explicit search rules (e.g. Marewski et al. 2010; Parpart 2017). However, these heuristics raise an important question: an abnormality detected by a heuristics-based intelligent system is not necessarily associated with a fault. A data instance might be a contextual anomaly in a given context, while an identical data instance could be regarded as normal in a different context (Galar et al. 2015). This can lead humans (driven by their own heuristics) to misinterpret an abnormal data instance as necessarily indicating a fault. Conversely, the cry wolf syndrome (Wickens et al. 2015) can lead humans to neglect an alarm that has previously proved false, even when it signals a critical fault.
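The notion of a contextual anomaly can be illustrated with a small Python sketch. It assumes a simple per-context z-score rule, which is one elementary technique chosen here for illustration and not the method of Galar et al. (2015). The readings, context names and threshold `k` are all hypothetical. The same value of 80 is flagged when the machine is idle but is unremarkable at full load. A flag also says nothing about whether a fault is present.

```python
from statistics import mean, stdev
from typing import Dict, List

# Hypothetical historical bearing temperatures (deg C) per operating
# context; the numbers are invented for illustration only.
HISTORY: Dict[str, List[float]] = {
    "idle": [41.0, 43.0, 42.0, 40.0, 44.0],
    "full_load": [78.0, 82.0, 80.0, 79.0, 81.0],
}


def is_contextual_anomaly(value: float, context: str, k: float = 3.0) -> bool:
    """Flag a reading that lies more than k sample standard deviations
    from the mean of readings previously seen in the same context.

    A flag marks an unusual reading for that context; whether it reflects
    a fault still has to be judged by a human or a diagnostic model.
    """
    reference = HISTORY[context]
    mu, sigma = mean(reference), stdev(reference)
    return abs(value - mu) > k * sigma


# Usage: an identical data instance, judged in two contexts.
print(is_contextual_anomaly(80.0, "idle"))       # True
print(is_contextual_anomaly(80.0, "full_load"))  # False
```

Conditioning the statistics on context is what separates this rule from a single global threshold. A global threshold would treat the reading identically in both operating states.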

Authors hold different positions on the significance of heuristics for developing SA. In developing the situated SA model, Chiappe et al. (2012) suggest that individuals incorporate external representations into their operations in a way that increases the likelihood of successful performance (by imposing less demand on working memory). Whilst accepting that such heuristics and reminders can help operators keep up with information, Endsley (2015) argues that such cues neither constitute SA nor substitute for it. Furthermore, Endsley does not believe that cueing techniques such as heuristics can adequately explain the sheer volume of information and the deeper mental processing associated with higher levels of SA.

5.7 System approach to situation awareness

Distributed cognition (Norman 1993; Salomon 1993; Hutchins 1995) is a system view of cognition. The focus is on an ensemble of individuals and artefacts, not on an individual (Hutchins 1995). In other words, it examines how information is represented, transformed and distributed across individuals and representational media. Distributed situation awareness (DSA) (Stanton et al. 2010) is inspired by distributed cognition. It focuses on "transactions" (exchanges of situation awareness between agents, not merely the communication of information) between humans and artefacts, to understand how a system undertakes tasks and what each agent is aware of at any given point (Neville et al. 2015). DSA thus takes a socio-technical perspective. Stanton et al. (2010) define DSA as "activated knowledge for a specific task within a system at a specific time by specific agents". DSA assumes that in distributed teamwork, cognitive processes occur at the system level rather than the individual level.

DSA research has entered many domains, including infrastructure development, driver training, supervisory control, aviation accident investigation, command and control tasks and health care. The DSA view can model collaborative processes (between agents) and dynamic situations. Stanton (2016) reviews several studies that apply the DSA approach in dynamic domains, spanning laboratory experiments, accident analysis, health care, and command and control. These studies provide empirical support for the view that people dynamically use information from many environmental relationships "beyond monitoring" in fast-changing situations.

Relating DSA to the cognition of abnormal machine behaviour, Salmon et al. (2015, 2016) focus on "transactions" between system agents in the 2009 crash of an Air France Airbus A330. The authors argue that the sociotechnical system (i.e. aircrew, cockpit and aeroplane systems), not individual operators, "lost" situation awareness. They develop situation awareness networks for different phases of the incident, showing the dynamic connections between many actors and highlighting the essential connections that were missing. They identify four classes of transaction failure that directly contributed to the accident: absent transactions (e.g. not discussing the risk of losing speed information because of high-density ice crystals), inappropriate transactions (e.g. inappropriate airspeed information passed through the affected pitot tubes and cockpit displays), incomplete transactions (e.g. discussing the sequence of events) and misunderstood transactions (e.g. the misunderstood stall warning). The main point is that both human and non-human agents were involved in all forms of failed transaction. We see an important implication of the concept of failed transactions for the cognition of machine abnormalities: "failed transactions" enable DSA to model an "abnormality" beyond its technicalities, at a level comprising the interactions between system agents.

Sandhåland et al. (2015) study accidents during bridge operations on platform supply vessels. They consider why crew members did not coordinate the situation-related information that would have helped them prevent an accident. Their use of DSA leads them to conclude that contingency planning is largely an individual activity. They note limited use of standardised communication during transfers of command in real-life incidents, and they identify non-essential conversations as distractions and interruptions. They view the world as socially constructed, but not entirely so, since in reality social structures can be dominated by individual activities. They recommend promoting planning as a team activity, setting up closed-loop communications and managing interruptions to avoid impaired attention. Whilst accommodating the notion of distributed awareness, this study invites further inquiry into the reality of social connections.

SA (three-level) and DSA seem to be in competition. Firstly, DSA claims that it does not matter if individual human agents do not know everything, provided that the system has the information that enables it to perform effectively (Hutchins 1995). On this understanding, humans (and other actors) simply receive information, which makes them somewhat passive participants. In contrast, three-level SA stresses the active involvement of operators and their need to seek information (Endsley 1995). Endsley (2015) argues that it is not enough for information to be out there somewhere if the person who needs it is not aware of it; in many cases, when the information is not known by the person who needs it, a significant performance problem or an accident results. Secondly, DSA holds that by focusing solely on the individual mind or solely on the environment, SA misses much in socio-technical systems. However, Endsley (2015) does not recognise the data residing in a report, a display or an electronic system as "situation awareness", as inanimate objects do not have "awareness", and she points to studies of social aspects that fall within the scope of the three-level SA model. Finally, SA can address a broader spectrum of cognition, and Endsley (2015) identifies the importance of the implicit nature of cues associated with socio-technical aspects: "DSA does not account for much of SA that occurs silently—viewing, listening, and perceiving within the workspace without observable or explicit communications or interactions".

Stanton et al. (2010) propose a resolution to the discussion: "The resolution that is proposed is that viewing SA 'in-mind', 'in-world' or 'in-interaction' is a declaration of the boundaries that are applied to the analysis; this is not to say that one position is necessarily right or wrong, rather those boundaries are declared openly in the analysis". In our view, SA and DSA take rather extreme positions at times, but their core ideas converge. Their claims can be reconciled once it is accepted that information can be held and processed by non-human actors; what matters is that humans realise what is happening during critical situations when intervention is needed.

However, we find the contrast between the SA and DSA concepts more convincing with respect to the acknowledgement of implicit cues. Whilst SA attempts to appreciate a wide range of human cognition concepts between deliberation and NDM, the prime focus of DSA is to model awareness at the system level, beyond human actors. We therefore see a future research opportunity to model the cognition of abnormal machine behaviour using a synergised approach along two dimensions: multiple cognition concepts across multiple levels of agents.

Along somewhat similar lines to DSA, Masys (2005) uses Actor Network Theory (ANT) (Latour 1987) in a case study of the mid-air collision of two planes over Uberlingen, Germany, in 2002. This study interprets SA as “a systemic attribute, a construct resident within a network of heterogeneous elements”. However, we exclude ANT from this study for two reasons. Firstly, the available studies based on ANT (e.g. Masys 2005; Somerville 1997; Cresswell et al. 2010; Nguyen et al. 2015) do not specifically address the cognition of machine abnormalities. Secondly, ANT is challenged by critics who say the approach is too descriptive and fails to provide detailed suggestions of how actors should be seen and how their actions should be analysed and interpreted (Cresswell et al. 2010).

6 The cognitive continuum of abnormal machine behaviour

In the preceding sections, we critically assessed the literature on cognition concepts and the empirical evidence of abnormal machine behaviour, comparing the various views to those of the three-level SA model, our selected theoretical framework. We now focus on how these concepts apply to a continuum specific to the cognition of abnormal machine behaviour. To this end, we apply our insights to four main themes. First, we use the theme “position on rationality” to compare different SA propositions with experiential/rational dual processing (Epstein 1994). Second, we use the theme “position on autonomous processing of typical events” to compare different SA propositions with autonomous/algorithmic mind theory (Dennett 1996). Third, we use the theme “position on holistic environment” to compare different SA propositions with holistic/analytic thinking (Nisbett et al. 2001). Fourth and finally, we use the theme “position on type of cues perceived and use of memory” to compare different SA propositions with implicit/explicit dual processing (Evans and Over 1996).

We do not use the themes to compare different SA propositions with heuristic/analytic (Evans 1984) or heuristic/systematic (Chen and Chaiken 1999) theory, because we treat heuristics itself as one of the propositions under comparison, which would make such a discussion self-evident. Nor do we compare SA propositions with general dual processing dichotomies such as system 1/system 2, C-system/X-system and type 1/type 2. As we want to suggest a specific labelling for types of cognition of abnormal machine behaviour, we purposefully avoid a discussion of general classifications.

6.1 Positions on rationality

In his experiential/rational dual processing theory, Epstein (1994) refers to the contents of the rational system as beliefs and to those of the experiential system as implicit beliefs or, alternatively, as schemata. He assumes the experiential system to be intimately associated with the experience of affect, including vibes, which refer to subtle feelings of which people are often unaware. Some authors (e.g. Dekker and Lützhöft 2004) say three-level SA carries a hidden assumption, namely that “people can be perfectly rational in considering what is important to look at and can be perfectly aware of what is going on”. They argue that SA theories aim to understand the world by referring to ideals or normative standards that describe optimal strategies. They favour a radical empirical view and reject the notion of separate mental and material worlds (i.e. a mental model vs the real world). As Lo et al. (2016) point out, many studies support the notion that SA in practice is subject to bounded rationality (Simon 1957). These studies acknowledge the cognitive limitations imposed by sensory inputs, computational power and situational circumstances.

The sociotechnical and systems views can accommodate a more pragmatic stance, taking the cognitive limitations of humans into account by considering distributed cognition abilities. The cognition maps used in DSA, and their dynamics, suggest a systematic approach to tracing the transactions between these distributed elements. Sense-making, by definition, accepts pre-rational meanings. Likewise, RPDM's preference for the first option generated, and its argument that experts do not even subconsciously consider alternatives, raise questions about rationality, despite their apparent effectiveness. Personal knowledge (Polanyi 1962) that is embedded in tacit knowing can hardly be considered rational. Heuristics, whose underlying cognitive mechanisms remain poorly understood, are closely related to implicit beliefs and may be the least rational of the concepts discussed.

To sum up, different SA propositions about abnormal machine behaviour vary in how they approach rationality. On the one hand, three-level SA has been criticised for assuming perfect rationality; on the other hand, heuristics appear to be the least rational. With respect to the rational/experiential dichotomy, each proposition can be placed close to one of the poles. DSA traces clear evidence of transactions among actors, suggesting that rationality is present in them. Three-level SA can accommodate deliberate detection, diagnosis and prognosis in novel, experimental situations; although it refers to schemata, it treats their implicit nature with caution. RPDM's mental simulations can, to a certain degree, accommodate experimental reasoning, which pulls RPDM towards the rational end. The other propositions are not associated with the rational pole of dual cognition and sit closer to the experiential pole.

6.2 Positions on the autonomous processing of typical events

This section compares different SA propositions about machine abnormalities with autonomous/algorithmic mind theory (Dennett 1996). The algorithmic mind provides the essential computational machinery, particularly the ability to decouple suppositions from beliefs and engage in hypothetical thinking (Evans and Stanovich 2013). The three-level SA model accommodates both typical and novel situations (and novices), and it considers both deliberate and automatic pattern matching (Endsley 2015).

The three-level SA model covers automaticity. With automaticity, a person can perform highly practised procedures without deliberate attention and handle dynamic situations such as driving a car (Anderson 1982). Automaticity may reduce demands on limited attentional resources; however, it can also reduce responsiveness to novel stimuli (Endsley and Garland 2000). Because the three-level SA model warns against relying on automaticity, it can hardly be positioned at or close to the autonomous pole. The definition of the algorithmic mind as the “ability to decouple suppositions from beliefs and engage in hypothetical thinking” points to the algorithmic pole as the more suitable one for three-level SA. We therefore suggest that three-level SA can accommodate the algorithmic mind in novel situations, or for novices.

Some authors (e.g. Hogarth 2001; Kahneman and Klein 2009) argue that intuition can only be trusted if it reflects repeated experience in environments with reliable feedback. However, they also note that intuition does not require the kind of precise repetition needed for automaticity; it simply requires sufficient repetition to synthesise patterns and prototypes. We therefore agree with Harteis and Billett (2013) when they say, “Understanding what constitutes intuition goes beyond the accounts of highly practised routine procedures applied automatically”. Polanyi's examples are instructive here: whilst the skilful use of a hammer or a piano requires the “subsidiary awareness” of skilled intuition, an action such as riding a bicycle belongs in the automaticity group. Nevertheless, all of them can be seen as tacit actions. The point is that although the three-level SA model covers “automaticity”, it does not explicitly address “intuition”.

Based on the empirical evidence, we recognise the ability of implicit learning and retrieval to dominate time-critical events, even less typical incidents. For example, the pilot who landed on the Hudson River (NTSB/AAR-10/03 Accident Report) had not been exposed to multiple instances of the experiences for which he implicitly developed effective responses, so we distinguish implicit learning and retrieval from automaticity. RPDM is tied to suppositions of typical events, so it does not carry features of the algorithmic mind. Heuristics autonomously handle less typical events, at least events that are atypical within the lifetime of an individual. Although the deliberation associated with sense-making moves it away from the autonomous mind, no evidence places it towards the algorithmic mind; we therefore exclude sense-making from this comparison. The empirical evidence suggests that DSA is able to study atypical events such as accidents. Although awareness may be distributed to autonomous systems, DSA favours transactions that are decoupled from suppositions. This places DSA much closer to the algorithmic mind.

In sum, the SA propositions about machine abnormalities are associated with the autonomous quality to different degrees, depending on the typicality or non-typicality of the events. However, apart from DSA and three-level SA, none of the concepts shows a connection to the algorithmic mind. We do not find suitable claims for sense-making to compare against the notion of autonomous and algorithmic mind.

6.3 Position on the holistic environment

This section compares different SA propositions about machine abnormalities with the holistic/analytic notion of Nisbett et al. (2001), who define holistic thought as “involving an orientation to the context or field as a whole, including attention to relationships between a focal object and the field, and a preference for explaining and predicting events on the basis of such relationships”. To different degrees, all the concepts discussed support a holistic picture. Three-level SA holds that comprehending the significance of objects and events forms a holistic picture of the environment. This is followed by perceiving those elements in the environment within the holistic form itself. We acknowledge the value of SA for remote systems, as these often require the perception of individual elements in the environment (alarms, gauges), not just a holistic perception. The same applies to the awareness of novices, or to awareness under novel conditions. Therefore, the deliberate awareness covered by the three-level SA model can accommodate analytic thinking to a certain degree, although its roots go back to NDM. However, the levels are often tightly integrated (Endsley 2015), and three-level SA can perceive the larger holistic environment. In addition, various sociotechnical factors are associated with SA (Endsley 2015).

“Sense-making” offers the opportunity to perceive meaningful wholes instead of elements. A plausible story has value, priority and clarity, implying the realisation of a holistic environment. RPDM is holistic, as it identifies “recognition” as the totality of relevant cues, plausible goals, expectancies and action cues. Skilled intuition is also associated with the use of patterns without the need to decompose them into components. However, the concept of subsidiary awareness (related to skilled intuition) seems to include a limited number of cues, although those cues can be implicit. Meanwhile, implicit learning and retrieval focus on the relevant cues, but the concept demands extensive experience in the discipline, not necessarily experience of a specific act. This can be seen as connected with holistic experience in the discipline, not just automaticity. Despite their connections with evolved capacities of the brain, heuristics appear to involve a narrow focus (e.g. the representativeness bias). We therefore suggest that heuristics belong neither in the holistic camp nor in the analytic one. Finally, the sociotechnical and systems views underlying DSA mark it as holistic.

In short, the three-level SA proposition can accommodate a wide range of cognition of machine abnormalities, between analytic and holistic. We do not find enough claims for heuristics to compare them with the holistic/analytic notion. The remaining propositions carry the features of holistic thinking.

6.4 Position on the type of information perceived and the use of memory for processing it

This section compares SA propositions with the implicit/explicit dual processing notion (Evans and Over 1996). The preceding review of theoretical perspectives and empirical evidence shows the ability of the SA concept to include many modes of cognition along an explicit-implicit continuum. Endsley and Garland (2000) note the role of subtle cues that are registered subconsciously and suggest that new technologies should consider possible interference with them. SA interventions also recommend exploring the degree to which multiple modalities can be used to increase throughput (Endsley and Jones 2011). With the move towards remote operations, a major challenge will be to compensate for the loss of these cues (Endsley et al. 1996).

Endsley (2015) does not suggest that people need to incorporate all information into their situation representation; rather, they should incorporate the information relevant to their model of what is happening. The three-level SA model states that higher levels of SA can drive the search for and acquisition of level 1 SA when these levels are deficient (Endsley 2004). Endsley (2015) stresses that experts should accommodate various meanings of the environment when using SA. The Goal Directed Task Analysis (GDTA) technique (Endsley and Jones 2011) often reveals a comprehensive list of elements in the environment that need attention. Whilst such information is of enormous value, it is questionable whether even experts can articulate all the elements they use, unless the technique accepts and enables a deeper analysis of all information, whether it arrives explicitly or implicitly.