Abstract

In this paper, we propose a method for estimating the algebraic Riccati equation (ARE) with respect to an unknown discrete-time system from the system state and input observation. The inverse optimal control (IOC) problem asks, “What objective function is optimized by a given control system?” The inverse linear quadratic regulator (ILQR) problem is an IOC problem that assumes a linear system and quadratic objective function. The ILQR problem can be solved by solving a linear matrix inequality that contains the ARE. However, the system model is required to obtain the ARE, and it is often unknown in fields in which the IOC problem occurs, for example, biological system analysis. Our method directly estimates the ARE from the observation data without identifying the system. This feature enables us to economize the observation data using prior information about the objective function. We provide a data condition that is sufficient for our method to estimate the ARE. We conducted a numerical experiment to demonstrate that our method can estimate the ARE with less data than system identification if the prior information is sufficient.

Article Highlights

-

Proposal of a data-driven method to form the algebraic Riccati equation.

-

Getting rid of the necessity of the knowledge of the system model in inverse linear quadratic problems.

-

Giving an example of data economization compared with the method using system identification.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The inverse optimal control (IOC) problem is a framework used to determine the objective function optimized by a given control system. By optimizing the objective function obtained by IOC, a different system can imitate the given control system’s behavior. For example, several studies have been conducted on robots imitating human or animal movements [1,2,3]. To apply IOC, in these studies, the researchers assumed that human and animal movements are optimal for unknown criteria. Such an optimality assumption holds in some cases [4].

The first IOC problem, which assumes a single-input linear time-invariant system and quadratic objective function, was proposed by Kalman [5]. Anderson [6] generalized the IOC problem in [5] to the multi-input case. For this type of IOC problem, called the inverse linear quadratic regulator (ILQR) problem, Molinari [7] proposed a necessary and sufficient condition for a linear feedback input to optimize some objective functions. Moylan et al. [8] proposed and solved the IOC problem for nonlinear systems. IOC in [5,6,7,8] is based on control theory, whereas Ng et al. [9] proposed inverse reinforcement learning (IRL), which is IOC based on machine learning. Recently, IRL has become an important IOC framework, along with control theory methods [10].

There are many variations of data-driven ILQR problems depending on available information. For example, Zhang and Ringh [11] considered the case where the system is known, and the states can be observed, but the input cannot. In this paper, we consider the discrete-time case where the system is unknown, but the states and inputs can be observed. Such a case often occurs in biological system analysis, which is the main application target of IOC. The control theory approach solves the ILQR problem by solving a linear matrix inequality (LMI) that contains the algebraic Riccati equation (ARE) [12]. In [12], they discussed the method to numerically solve the ARE, assuming that the full knowledge of the system model is available. However, the system model is often unknown beforehand. In [12], they solved this problem by inserting the step of system identification. Suppose the system identification step can be bypassed. In that case, it will be beneficial in some cases, where we are not interested in the system itself, but only in the criterion for control. Recent studies [13,14,15] also consider the IOC problem with linear quadratic control over a finite-horizon. However, all the above-mentioned studies also assume that the system model is known. The IRL approach also has difficulty solving our problem. There is IRL with unknown systems [16], and IRL with continuous states and input spaces [17]; however, to the best of our knowledge, no IRL exists with both.

In the continuous-time case, we proposed a method to estimate the ARE from the observation data of the system state and input directly [18]. In the present paper, we estimate the ARE for a discrete-time system using a method that extends the result in [18]. Similarly to [18], our method transforms the ARE by multiplying the observed state and input on both sides. However, this technique alone cannot calculate ARE without the system model because the form of the ARE differs between continuous and discrete-time. We solve this problem using inputs from the system’s controller. Also, the use of such inputs enables us to estimate the ARE without knowing the system’s control gain. We prove that the equation obtained from this transformation is equivalent to the ARE if the dataset is appropriate. The advantage of our method is the economization of the observation data using prior information about the objective function. We conducted a numerical experiment to demonstrate that our method can estimate the ARE with fewer data than system identification if the prior information is sufficient.

The structure of the remainder of this paper is as follows: In Sect 2, we formulate the problem considered in this paper. In Sect 3, we propose our estimation method and prove that the estimated equation is equivalent to the ARE. In Sect 4, we describe numerical experiments that confirm our statement in Sect 3. Sect 5 concludes the paper.

2 Problem formulation

We consider the following discrete-time linear system:

with an arbitrary initial state x(0), where \(A\in {{\mathbb {R}}}^{n\times n}\) and \(B\in {{\mathbb {R}}}^{n\times m}\) are constant matrices, and \(x{\left( k\right) }\in {{\mathbb {R}}}^n\) and \(u{\left( k\right) }\in {{\mathbb {R}}}^m\) are the state and the input at time \(k\in {\mathbb {Z}}\), respectively.

Let \({\mathbb {Z}}_+=\left\{ 0,1,2,\cdots \right\}\). We write the set of real-valued symmetric \(n\times n\) matrices as \({{\mathbb {R}}}^{n\times n}_{\textrm{sym}}\). In the LQR problem [19], we determine the input sequence \(u{\left( k\right) }\) \(\left( k\in {\mathbb {Z}}_+\right)\) that minimizes the following objective function:

where \(Q\in {{\mathbb {R}}}^{n\times n}_{\textrm{sym}}\) and \(R\in {{\mathbb {R}}}^{m\times m}_{\textrm{sym}}\).

We assume that the system (1) is controllable and matrices Q and R are positive definite. Then, for any \(x{\left( 0\right) }\), the LQR problem has a unique solution written as the following linear state feedback law [19]:

where \(P\in {{\mathbb {R}}}^{n\times n}_{\textrm{sym}}\) is a unique positive definite solution of the ARE:

For u defined in (3), \(J{\left( u\right) }=x{\left( 0\right) }^\top Px{\left( 0\right) }\) holds.

In the ILQR problem, we find positive definite matrices \(Q\in {{\mathbb {R}}}^{n\times n}_{\textrm{sym}}\) and \(R\in {{\mathbb {R}}}^{m\times m}_{\textrm{sym}}\) such that an input \(u{\left( k\right) }=-Kx{\left( k\right) }\) with the given gain \(K\in {{\mathbb {R}}}^{m\times n}\) is a solution of the LQR problem, that is, u minimizes (2).

We can solve the ILQR problem by solving an LMI. Let the system (1) be controllable. Then, \(u{\left( k\right) }=-Kx{\left( k\right) }\) minimizes (2) if and only if a positive definite matrix \(P\in {{\mathbb {R}}}^{n\times n}_{\textrm{sym}}\) exists that satisfies (4) and the following:

By transforming (4) and (5), we obtain the following pair of equations equivalent to (4) and (5):

Hence, we can solve the ILQR problem by determining P, \(Q\in {{\mathbb {R}}}^{n\times n}_{\textrm{sym}}\), and \(R\in {{\mathbb {R}}}^{m\times m}_{\textrm{sym}}\) that satisfy the following LMI:

In biological system analysis or reverse engineering, the system model and control gain is often unknown, and the ARE (6) is not readily available. Hence, we consider the following ARE estimation problem where we determine a linear equation equivalent to (6) using the system state and input observation:

Problem 1

Consider controllable system (1) and controller \(u{\left( k\right) }=-Kx{\left( k\right) }\) with unknown A, B, and K. Suppose \(N_d\) observation data \(\left( x_i{\left( 0\right) },u_i,x_i{\left( 1\right) }\right)\) \(\left( i\in \left\{ 1,\ldots ,N_d\right\} \right)\) of the system state and input are given, where

Let \(N'_d\le N_d\). \(N'_d\) inputs in the data are obtained from the unknown controller as follows:

Determine a linear equation of P, \(Q\in {{\mathbb {R}}}^{n\times n}_{\textrm{sym}}\), and \(R\in {{\mathbb {R}}}^{m\times m}_{\textrm{sym}}\) with the same solution space as (6).

We discuss without assuming that the data is sequential, that is, always satisfies \(x_{i+1}{\left( 0\right) }=x_i{\left( 1\right) }\). However, in an experiment, we show that our result can also be applied to one sequential data. The ARE has multiple solutions and we call the set of these solutions, which is a linear subspace, a solution space.

3 Data-driven estimation of ARE

The simplest solution to Problem 1 is to identify the control system, that is, matrices A, B and K. We define matrices \(X\in {\mathbb {R}}^{n\times N_d}\), \(X'\in {\mathbb {R}}^{n\times N'_d}\), \(U\in {\mathbb {R}}^{m\times N_d}\), \(U'\in {\mathbb {R}}^{m\times N'_d}\), and \(D\in {\mathbb {R}}^{(n+m)\times N_d}\) as follows:

Let the matrices D and \(X'{\left( 0\right) }\) have row full rank. Then, matrices A, B, and K are identified using the least square method as follows:

In ILQR problems, prior information about matrices Q and R may be obtained. However, such information cannot be used for system identification. We propose a novel method that uses the prior information and estimates the ARE using less observation data than system identification.

The following theorem provides an estimation of the ARE:

Theorem 1

Consider Problem 1. Let F be an \(N'_d\times N_d\) matrix whose ith row jth column element \(f_{i,j}\) is defined as

Then, the following condition is necessary for the ARE (6) to hold.

Proof

We define \(G_1\in {\mathbb {R}}^{n\times n}\) and \(G_2\in {\mathbb {R}}^{m\times n}\) as

The ARE (6) is equivalent to the combination of \(G_1=0\) and \(G_2=0\). Let \(i\in \left\{ 1,\ldots ,N'_d\right\}\) and \(j\in \left\{ 1,\ldots ,N_d\right\}\) be arbitrary natural numbers. From the ARE, we obtain

Using the assumption (8) and (9), we can transform the left-hand side of (15) into the following:

Therefore, we obtain \(f_{i,j}=0\) from (15) and (16), which proves Theorem 1. \(\square\)

Equation (13) is the estimated linear equation that we propose in this paper and it can be obtained directly from the observation data without system identification. Our method obtains one scalar linear equation of P, Q, and R from a pair of observation data. However, at least one of the paired data must be a transition by a linear feedback input with the given gain K. Equation (13) consists of \(N_dN'_d\) scalar equations obtained in such a manner. Therefore, it is expected that if \(N_d\) and \(N'_d\) are sufficiently large, (13) is equivalent to (6), which is also linear for the matrices P, Q, and R.

Equation (12) is similar to the Bellman equation [20]. Suppose that the ARE (6) holds, that is, \(u{\left( k\right) }=-Kx{\left( k\right) }\) minimizes \(J{\left( u\right) }\). Then, the following Bellman equation holds:

The equation \(f_{i,j}=0\) is equivalent to the Bellman equation if \(x_i{\left( 0\right) }=x_j{\left( 0\right) }\) and \(u_i=u_j=-Kx_i{\left( 0\right) }\). The novelty of Theorem 1 is that \(f_{i,j}=0\) holds under the weaker condition, that is, only \(u_i=-Kx_i{\left( 0\right) }\). As shown in Appendix, Theorem 1 is also proved from the Bellman equation (17).

The following theorem demonstrates that if the data satisfies the condition required for system identification, our estimation is equivalent to the ARE:

Theorem 2

Consider Problem 1. Suppose that matrices D and \(X'{\left( 0\right) }\) defined in (10) have row full rank. Then, the estimation (13) is equivalent to the ARE (6).

Proof

From Theorem 1, the ARE (6) implies the estimation (13), that is, \(F=0\). As shown in the proof of Theorem 1, matrix F is expressed as follows using the matrices defined in (10) and (14):

Because the matrix D has row full rank, \(F=0\) implies

Because \(X'{\left( 0\right) }\) has row full rank, we obtain \(G_1=0\) and \(G_2=0\) from (19), which proves Theorem 2. \(\square\)

The advantage of our method is the data economization that results from having prior information about Q and R. Suppose that some elements of Q and R are known to be zero in advance, and independent ones are fewer than the scalar equations in the ARE (6). Then, there is a possibility that our method can estimate the ARE with fewer data than \(m+n\) required for system identification.

For fixed \(N_d\), our method can provide the largest number of scalar equations when \(N'_d=\min \left\{ n,N_d\right\}\). We use the following lemma to explain the reason:

Lemma 1

Suppose that there exist \(k\in \left\{ 1,\ldots ,N'_d\right\}\) and \(c_i\in {\mathbb {R}}\) \(\left( i\in \left\{ 1,\ldots ,N'_d\right\} \backslash \left\{ k\right\} \right)\) that satisfy

where \(d_i=\left[ x_i{\left( 0\right) }^\top \;u_i^\top \right] ^\top\) for any \(i\in \left\{ 1,\ldots ,N_d\right\}\). Then, the following holds for any \(j\in \left\{ 1,\ldots ,N_d\right\}\):

Proof

We can readily prove Lemma 1 using the following derived from (18)

\(\square\)

Lemma 1 means that if data \(d_k\) is the linear combination of other data, the equations obtained from \(d_k\) are also linear combinations of other equations not using \(d_k\). Hence, without noise, such a data as \(d_k\) is meaningless. If \(N'_d>n\), at least one of \(d_1,\ldots ,d_{N'_d}\) is the linear combination of other data because the inputs in those data are obtained from the same gain K. Therefore, \(N'_d\) larger than n reduces data efficiency. From the above and \(f_{i,j}=f_{j,i}\), our method can provide \(\frac{n\left( n+1\right) }{2}+n\left( N_d-n\right)\) equations if \(N_d\ge n\).

For an example of prior information, we consider diagonal Q and R. In this case, the number of the independent elements of P, Q, and R is \(\frac{n\left( n+1\right) }{2}+n+m\). Hence, if \(N_d\) is \(N_{d\mathrm min}{\left( n,m\right) }=n+1+\lceil \frac{m}{n}\rceil\) or larger, we have more equations than the independent elements. Figure 1 compares the numbers \(N_{d\mathrm min}\) and \(n+m\) of data required for our method and system identification, respectively, if Q and R are diagonal. From Fig. 1, our method may estimate the ARE with less data than system identification. Additionally, this tendency becomes stronger as the number m of inputs becomes larger than the number n of states.

Remark 1

If the number of data \(N_d\) satisfies \(N_d < n + m\), but the matrix D in (10) has column full rank, we have

where \(W \in {\mathbb {R}}^{n \times (n+m)}\) is any matrix that satisfies \(WD = 0\). Because our ARE estimation relies solely on the data D, this suggests that many systems result in the same ARE estimation, if there is some prior knowledge of R and Q. However, in Problem 1, we assume that \(1,\ldots ,N'_d\)th trajectories are optimal, but a system satisfying (23) does not always satisfy this assumption.

In the case of noisy data, we discuss the unbiasedness of the coefficients in (13). The coefficient of kth row lth column element \(q_{k,l}\) of Q in (12) is expressed as follows:

where \(x_{i,k}{\left( 0\right) }\) is the kth element of \(x_i{\left( 0\right) }\). Suppose that the data is a sum of the true value and zero-mean noise distributed independently for each data. Then, we can readily confirm that if \(i\ne j\), the coefficient (24) of \(q_{k,l}\) in (12) is unbiased, and otherwise, it is biased. This result is also the same for the coefficients of the elements of P and R.

4 Numerical experiment

4.1 Distance between solution spaces

In our experiments, we evaluated the ARE estimation using a distance between the solution spaces of the ARE and estimation. Let \(s\in {\mathbb {R}}^{N_v}\) be a vector generated by independent elements in the matrices P, Q, and R in any fixed order, where \(N_v\) is defined according to the prior information. Because the ARE (6) is linear for s, we can transform (6) into the following equivalent form:

where \(\Theta \in {\mathbb {R}}^{N_{\textrm{ARE}}\times N_v}\) is the coefficient matrix and \(N_{\textrm{ARE}}=\frac{n\left( n+1\right) }{2}+mn\). Similarly, the estimation (13) is transformed into the following equivalent form:

where \(\hat{\Theta} \in \mathbb R^{N_\textrm{est}\times N_v}\) is the coefficient matrix and \(N_{\textrm{est}}=N_dN'_d-N'_d\left( N'_d-1\right) /2\). We define the solution spaces S and \({\hat{S}}\) of the ARE (25) and estimation (26) as follows:

We define the distance between the solution spaces using the approach provided in [21]. Assume that \(\Theta\) and \({\hat{\Theta }}\) have the same rank. Let \(\Pi\), \(\hat{\Pi }\in \mathbb R^{N_v\times N_v}\) be the orthogonal projections to S and \({\hat{S}}\), respectively. The distance between S and \({\hat{S}}\) is defined as follows:

where \(\left\| \cdot \right\| _2\) is the matrix norm induced by the 2-norm of vectors. Distance \(d{\left( S,\hat{S}\right) }\) is the maximum Euclidian distance between \(\hat{s}\in \hat{S}\) and \(\Pi \hat{s}\in S\) when \(\left\| \hat{s}\right\| _2=1\), as explained by the following equation:

Hence, the distance satisfies \(0\le d{\left( S,{\hat{S}}\right) }\le 1\).

The orthogonal projections \(\Pi\) and \({\hat{\Pi }}\) are obtained from the orthogonal bases of S and \({\hat{S}}\). To obtain these orthogonal bases, we use singular value decompositions of \(\Theta\) and \({\hat{\Theta }}\). In our experiments, we performed the computation using MATLAB 2023a.

4.2 Experiment 1

In this experiment, we confirmed Theorem 2 by solving Problem 1. We set the controllable pair \(\left( A,B\right)\) as follows:

The ground truth values of Q and R are defined as

The optimal gain \(K\in {\mathbb {R}}^{2\times 3}\) is the solution of the LQR problem for the above Q and R. We considered the following \(N_d=n+m\) observation data with \(N'_d=n\) that satisfy the condition of Theorem 2:

Using this setting, each of the ARE (6) and our estimation (13) contained 12 scalar equations for \(N_v=15\) variables, that is, independent elements in the symmetric matrices P, Q, and R.

Figure 2 shows the singular values of \(\Theta\) and \({\hat{\Theta }}\), in descending order, that we computed using the default significant digits. As shown in Fig. 2, there is no zero singular value of \(\Theta\), \(\hat{\Theta }\in \mathbb R^{12\times 15}\). Hence, the ranks of \(\Theta\) and \(\hat{\Theta }\) are 12, and the solution spaces S, \(\hat{S}\subset \mathbb R^{15}\) are three-dimensional subspaces. To investigate the influence of the computational error, we performed the same experiment with various numbers of significant digits. Figure 3 shows the relationship between the distance \(d{\left( S,{\hat{S}}\right) }\) and the number of significant digits. As shown in Fig. 3, the logarithm of the distance decreased in proportion to the number of significant digits. Therefore, we concluded that non-zero \(d{\left( S,{\hat{S}}\right) }\) was caused by the computational error and that our method correctly estimated the ARE.

4.3 Experiment 2

In this experiment, we confirmed that, if Q and R are diagonal, Theorem 1 can estimate the ARE with fewer observation data than system identification. We randomly set the matrices \(A\in {\mathbb {R}}^{100\times 100}\) and \(B\in {\mathbb {R}}^{100\times 50}\) by generating each element from the uniform distribution in \(\left[ -1,1\right]\). After the generation, we confirmed that \(\left( A,B\right)\) is controllable. The given \(K\in {\mathbb {R}}^{50\times 100}\) is the solution of the LQR problem for the diagonal matrices Q and R whose elements were generated from the uniform distribution in \(\left[ 0.01,1\right]\). We used \(N_d=N_{d\mathrm min}=102\) observation data that satisfy (9) for \(N'_d=n=100\). We generated the elements of \(x_{1}{\left( 0\right) },\ldots ,x_{102}{\left( 0\right) }\in {\mathbb {R}}^{100}\) and \(u_{101}\), \(u_{102}\in {\mathbb {R}}^{50}\) from the uniform distribution in \(\left[ -1,1\right]\). The number of used data, 102, is less than \(n+m=150\) required for system identification (11).

Using this setting, the ARE (6) and our estimation (13) contained \(N_{\textrm{ARE}}=\frac{n\left( n+1\right) }{2}+mn=10050\) and \(N_{\textrm{est}}=N_dN'_d-N'_d\left( N'_d-1\right) /2=5250\) scalar equations, respectively. The variables of these scalar equations were \(N_v=\frac{n\left( n+1\right) }{2}+n+m=5200\) independent elements in the symmetric matrix P and diagonal matrices Q and R.

Because the arbitrary-precision arithmetic was too slow to perform for the scale of this experiment, we performed the computation using the default significant digits. We indexed the singular values of \(\Theta \in \mathbb R^{10050\times 5200}\) and \(\hat{\Theta }\in \mathbb R^{5250\times 5200}\) in descending order; Fig. 4 shows the 5180–5200th singular values. From Fig. 4, we assumed that, without computational error, \(\Theta\) and \({\hat{\Theta }}\) each had a zero singular value. Then, the solution spaces S, \({\hat{S}}\subset {\mathbb {R}}^{5200}\) were one-dimensional subspaces, and the distance \(d{\left( S,{\hat{S}}\right) }\) was \(4.3\times 10^{-10}\).

We compared our method with system identification. For system identification, we generated additional data \(x_{103},\ldots ,x_{150}\) and \(u_{103},\ldots ,u_{150}\) in the same manner as \(x_{101}\) and \(u_{101}\). Using the same approach as that for S, we defined the solution space \({\hat{S}}_{\textrm{SI}}\subset {\mathbb {R}}^{N_v}\) of the ARE (6) using A, B, and K identified by (11). Then, the distance \(d{\left( S,{\hat{S}}_{\textrm{SI}}\right) }\) was \(2.8\times 10^{-10}\). Therefore, our method estimated the ARE with the same order of error as system identification using fewer observation data than system identification.

4.4 Experiment 3

In this experiment, we compared our method and system identification under practical conditions: a single noisy and sequential data and sparse but not diagonal Q and R. We set the matrices \(A\in {\mathbb {R}}^{40\times 40}\) and \(B\in {\mathbb {R}}^{40\times 20}\) in the same manner as Experiment 2. The control gain \(K\in {\mathbb {R}}^{20\times 40}\) is the solution of the LQR problem for the sparse matrices Q and R. We performed three operations to generate Q and R. First, we generated matrices \(M_Q\in {\mathbb {R}}^{40\times 40}\) and \(M_R\in {\mathbb {R}}^{20\times 20}\) in the same manner as A and set \(Q:=M_Q^\top M_Q\) and \(R:=M_R^\top M_R\). Second, we randomly set 800 non-diagonal elements of Q to 0 to make it sparse while maintaining symmetry. Similarly, we set 200 elements of R to 0. Finally, add the product of a constant and the identity matrix to Q and R so that the maximum eigenvalues are 10 times as large as the minimum ones, respectively.

To generate data, we used the following system:

where \(x^*{\left( k\right) }\in {{\mathbb {R}}}^n\) and \(u^*{\left( k\right) }\in {{\mathbb {R}}}^m\) are the state and input at time \(k\in {\mathbb {Z}}_+\), respectively. If \(\left\| x^*{\left( k\right) }\right\| _2\le 1\), the vector \(v{\left( k\right) }\in {{\mathbb {R}}}^n\) with norm 0.2 is the product of a scalar constant and a random vector whose elements follow a uniform distribution in \(\left[ -1,1\right]\). Otherwise, \(v{\left( k\right) }=0\). Note that v(k) is not a noise: it is an input signal to be added intentionally to make the trajectory data rich in information by making the input different from the optimal feedback input. We ran the system (34) through \(N_d=200\) time steps with a random initial state \(x^*{\left( 0\right) }\) with norm 1 and obtained data as

where \(\varepsilon _{\textrm{state}}\in {{\mathbb {R}}}^n\) and \(\varepsilon _{\textrm{input}}\in {{\mathbb {R}}}^m\) are the observation noises whose elements follow a normal distribution with a mean 0 and variance \(\sigma ^2\). We explain the value of \(\sigma ^2\) later. Note that, although \(N_d\) can be arbitrary as long as \(N_d \ge n+m = 60\), we used a larger \(N_d\) because the data is contaminated with noise. Throughout the simulation, \(v{\left( k\right) }=0\) holds 121 times. Hence, \(N'_d=121\). We sorted the data \(\left( x_i{\left( 0\right) },u_i,x_i{\left( 1\right) }\right)\) \(\left( i\in \left\{ 1,\ldots ,N_d\right\} \right)\) so that (9) holds if noise does not exist.

Under the above condition, the ARE (6) and our estimation (13) contained \(N_{\textrm{ARE}}=\frac{n\left( n+1\right) }{2}+mn=1620\) and \(N_{\textrm{est}}=N_dN'_d-N'_d\left( N'_d-1\right) /2=16940\) scalar equations, respectively. The variables of these equations were \(N_v=n\left( n+1\right) +\frac{m\left( m+1\right) }{2}-500=1350\) independent elements in the symmetric matrix P and sparse matrices Q and R.

We indexed the singular values of \(\Theta \in \mathbb R^{1620\times 1350}\) and \(\hat{\Theta }\in \mathbb R^{16940\times 1350}\) in descending order. Figure 5 shows the 1330–1350th singular values when \(\sigma ^2=10^{-8}\). Although there is no zero singular value due to noise, the last singular value is noticeably small as shown in Fig. 5. Hence, we can conclude that the solution spaces S, \({\hat{S}}\subset {\mathbb {R}}^{1350}\) were one-dimensional subspaces.

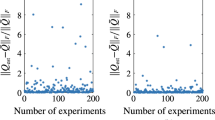

We conducted experiments with different noise variance \(\sigma ^2\) from \(10^{-6}\) to \(10^{-16}\). Also, the noise seed differs for each experiment, but the other seeds used to determine (A, B), the initial state, and (Q, R) are fixed. Figure 6 shows the relationship between the noise variance \(\sigma ^2\) and distances \(d{\left( S,{\hat{S}}\right) }\) and \(d{\left( S,{\hat{S}}_{\textrm{SI}}\right) }\). As shown in Fig. 6, our method outperformed system identification in almost all experiments. In the experiments of variances from \(10^{-8}\) to \(10^{-16}\), the ratio of \(d{\left( S,{\hat{S}}\right) }\) to \(d{\left( S,{\hat{S}}_{\textrm{SI}}\right) }\) is approximately constant, and the average ratio is 0.17. Because the distance is bounded from above by 1, as is clear from (29), we conclude that the estimations using both methods failed with a larger noise variance than \(10^{-8}\), which have distance values close to 1.

4.5 Experiment 4

In the case where the discrete-time system is a discretization of a continuous-time system, our previous method [18] can also be used to solve the problem. In this experiment, we compare the performance of the proposed method and our previous method.

We set the controllable pair (A, B) as follows:

which is obtained by discretizing the following continuous-time system with a sampling period of \(10^{-3}\) s:

The given gain \(K\in {\mathbb {R}}^{2\times 3}\) is the solution to the LQR problem for the following Q and R:

The data was prepared in the same way as in Sect. 4.2 using the following \(N_d=n + m\) observation data with \(N'_d=n\) that satisfies the condition of Theorem 2:

Some integration needed to use the previous method [18] was performed by using the trapezoidal rule.

Let the solution space \(\hat{S}\) for the previous method be \(\hat{S}_{\text {prev}}\). The distances \(d\left( S, \hat{S} \right)\) and \(d \left( S, \hat{S}_{\text {previous}} \right)\) were \(1.36 \times 10^{-12}\) and \(4.85 \times 10^{-4}\), respectively. This result shows the superiority of the proposed method in the case where the data is discretized.

5 Discussion

The purpose of our method is to obtain the ARE from the state and the input trajectories without identifying the system model. The ARE is important to solve ILQR problems [5, 7, 11, 12]. From the result of Experiment 1, it is clear that this purpose is achieved. Moreover, Experiment 2 shows that, if there is some additional prior information other than the trajectory data, the estimation of ARE can be obtained with less data than is required for system identification. Also, Experiment 3 suggests that the estimate of the ARE by our method can be more accurate than that using the identified system model. Although most existing studies on ILQR assume that the system model is known in advance by system identification [5, 7, 11,12,13,14,15], our results suggest that the system identification is not essential and will better be avoided.

The present study is, in some sense, a discrete version of our previous study [18], in which we assume a continuous time system. In most cases where continuous systems are discretized because of the discrete observation, the method in [18] is also applicable by using the trapezoidal rule to approximate the integral. However, Experiment 4 clearly illustrates the superiority of the proposed method in the case where only the discrete-time observation is available. Because almost all systems only admit discrete-time observation, this result is essential in practice.

Although Experiment 3 shows that the proposed method is less sensitive to noise than the method using system identification, the effect of noise is still to be discussed. Especially, the question of whether it is unbiased and consistent is an important question to be discussed.

6 Conclusions

In this paper, we proposed a method to estimate the ARE with respect to an unknown discrete-time system from the input and state observation data. Our method transforms the ARE into a form calculated without the system model by multiplying the observation data on both sides. We proved that our estimated equation is equivalent to the ARE if the data are sufficient for system identification. The main feature of our method is the direct estimation of the ARE without identifying the system. This feature enables us to economize the observation data using prior information about the objective function. We conducted a numerical experiment that demonstrated that our method requires less data than system identification if the prior information is sufficient.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

Code availability

The code used during the current study available from the corresponding author on reasonable request.

References

Mombaur K, Truong A, Laumond JP. From human to humanoid locomotion—an inverse optimal control approach. Autonomous Robots. 2010;28:369–83.

Li W, Todorov E, Liu D. Inverse optimality design for biological movement systems. IFAC Proc Vol. 2011;44(1):9662–7.

El-Hussieny H, Abouelsoud AA, Assal SFM, Megahed SM. Adaptive learning of human motor behaviors: an evolving inverse optimal control approach. Eng Appl Artif Intell. 2016;50:115–24.

Alexander RM. The gaits of bipedal and quadrupedal animals. Int J Robot Res. 1984;3(2):49–59.

Kalman RE. When is a linear control system optimal? J Basic Eng. 1964;86(1):51–60.

Anderson BDO. the inverse problem of optimal control, technical report: Stanford University. Stanford University; 1966. 6560(3).

Molinari B. The stable regulator problem and its inverse. IEEE Trans Autom Control. 1973;18(5):454–9.

Moylan P, Anderson B. Nonlinear regulator theory and an inverse optimal control problem. IEEE Trans Autom Control. 1973;18(5):460–5.

Ng AY, Russell S. Algorithms for inverse reinforcement learning. In: Proceedings of the seventeenth international conference on machine learning; 2000. p. 663–70.

Ab Azar N, Shahmansoorian A, Davoudi M. From inverse optimal control to inverse reinforcement learning: a historical review. Annu Rev Control. 2020;50:119–38.

Zhang H, Ringh A. Inverse optimal control for averaged cost per stage linear quadratic regulators. Syst Control Lett. 2024;183:105658.

Priess MC, Conway R, Choi J, Popovich JM, Radcliffe C. Solutions to the inverse LQR problem with application to biological systems analysis. IEEE Trans Control Syst Technol. 2015;23(2):770–7.

Zhang H, Li Y, Hu X. Discrete-time inverse quadratic optimal control over finite time-horizon under noisy output measurements. Control Theory Technol. 2021;19:563–72.

Yu C, Li Y, Fang H, Chen J. System identification approach for inverse optimal control of finite-horizon linear quadratic regulators. Automatica. 2021;129: 109636.

Wu H-N, Li W-H, Wang M. A finite-horizon inverse linear quadratic optimal control method for human-in-the-loop behavior learning. IEEE Trans Syst Man Cybernetics Syst. 2024. https://doi.org/10.1109/TSMC.2024.3357973.

Herman M, Gindele T, Wagner J, Schmitt F, Burgard W. Inverse reinforcement learning with simultaneous estimation of rewards and dynamics. Artificial intelligence and statistics. In: PMLR. 2016. p. 102–10.

Aghasadeghi N, Bretl T. Maximum entropy inverse reinforcement learning in continuous state spaces with path integrals. In: IEEE/RSJ international conference on intelligent robots and systems. 2011. p. 1561–6.

Sugiura S, Ariizumi R, Tanemura M, Asai T, Azuma S. Data-driven estimation of algebraic riccati equation for inverse linear quadratic regulator problem. In: SICE annual conference; 2023.

Antsaklis PJ, Michel AN. Linear systems. Birkhäuser Boston; 2005.

Bardi M. Optimal control and viscosity solutions of Hamilton-Jacobi-Bellman equations. Birkhäuser; 1997.

Golub GH, Van Loan CF. Matrix computations. JHU Press; 1997.

Acknowledgements

We thank Edanz (https://jp.edanz.com/ac) for editing a draft of this manuscript.

Funding

This work was supported in part by the Japan Society for the Promotion of Science KAKENHI under Grant JP22K04027 and in part by JST FOREST Program JPMJFR2123.

Author information

Authors and Affiliations

Contributions

Conceptualization: S.S.; Methodology: S.S.; Writing—original draft preparation: S.S.; Writing—review and editing: All authors; Funding acquisition: R.A., S.A.; Supervision: R.A., M.T., T.A., S.A.

Corresponding author

Ethics declarations

Competing interests

There is no competing financial interest or personal relationship that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

We give another proof of Theorem 1 using the Bellman equation (17). To this end, we prove the following theorem equivalent to Theorem 1.

Theorem 1’

Consider Problem 1. Let i, \(j\in \left\{ 1,\ldots ,N_d\right\}\) be arbitrary natural numbers. Suppose that the ARE (6) holds. Then, we have \(f_{i,j}=0\) if \(u_i=-Kx_i{\left( 0\right) }\) holds, where \(f_{i,j}\) is defined as (12).

Proof

From the Bellman Eq. (17), we can readily obtain \(f_{i,j}=0\) if \(i=j\). Next, suppose \(i \ne j\) and that \(u_j=-Kx_j{\left( 0\right) }\) also holds. Then, we have \(\left[ x_i{\left( 1\right) }+x_j{\left( 1\right) }\right] =A\left[ x_i{\left( 0\right) }+x_j{\left( 0\right) }\right] +B\left( u_i+u_j\right)\) and \(u_i+u_j=-K\left[ x_i{\left( 0\right) }+x_j{\left( 0\right) }\right]\). Hence, from the Bellman Eq. (17), we obtain

Because \(f_{i,i}=f_{j,j}=0\) and \(f_{i,j}=f_{j,i}\), we obtain

Finally, we consider the case without the assumption \(u_j=-Kx_j{\left( 0\right) }\). Let \(\Delta u_j=u_j+Kx_j{\left( 0\right) }\). Substituting \(x_j{\left( 1\right) }=Ax_j{\left( 0\right) }+Bu_j\) and \(u_j=\Delta u_j-Kx_j{\left( 0\right) }\) to (12), we have

We can consider \(\left( x_j{\left( 0\right) },-Kx_j{\left( 0\right) },\left( A-BK\right) x_j{\left( 0\right) }\right)\) as an extra data \(\left( x'_j{\left( 0\right) },u'_j,x'_j{\left( 1\right) }\right)\) satisfying \(u'_j=-Kx'_j{\left( 0\right) }\). Hence, from (42), the sum of the first, third, and fourth terms on the right-hand side of (43) is zero. Hence, substituting \(x_i{\left( 1\right) }=Ax_i{\left( 0\right) }+Bu_i\) and \(u_i=-Kx_i{\left( 0\right) }\) to (43), we have

From \(K=\left( R+B^\top PB\right) ^{-1}B^\top PA\), we obtain \(f_{i,j}=0\), which proves Theorem 1’. \(\square\)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sugiura, S., Ariizumi, R., Tanemura, M. et al. Data-driven estimation of the algebraic Riccati equation for the discrete-time inverse linear quadratic regulator problem. Discov Appl Sci 6, 284 (2024). https://doi.org/10.1007/s42452-024-05931-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-024-05931-0