Abstract

Audio is one of the most used ways of human communication, but at the same time it can be easily misused to trick people. With the revolution of AI, the related technologies are now accessible to almost everyone, thus making it simple for the criminals to commit crimes and forgeries. In this work, we introduce a neural network method to develop a classifier that will blindly classify an input audio as real or mimicked; the word ‘blindly’ refers to the ability to detect mimicked audio without references or real sources. We propose a deep neural network following a sequential model that comprises three hidden layers, with alternating dense and drop out layers. The proposed model was trained on a set of 26 important features extracted from a large dataset of audios to get a classifier that was tested on the same set of features from different audios. The data was extracted from two raw datasets, especially composed for this work; an all English dataset and a mixed dataset (Arabic plus English). For the purpose of comparison, the audios were also classified through human inspection with the subjects being the native speakers. The ensued results were interesting and exhibited formidable accuracy, as we were able to get at least \(94\%\) correct classification of the test cases, as against the \(85\%\) accuracy in the case of human observers.

Article Highlights

-

A neural network method for blindly classifying audio inputs as genuine or mimicked, without prior references or information.

-

Deals a scenario wherein exactly only one speech sample, either fake or real but not both, of the purported speaker is available.

-

On datasets composed of both English and its combination with Arabic speech samples, the method achieves a remarkable accuracy of at least 94% in differentiating between real and spoofed voices, surpassing human observer accuracy of 85%.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In today’s digital age, falsehoods propagate faster than truths, fueled by the proliferation of social media platforms. The rise of ‘deep fakes’, enabled by advancements in deep learning algorithms, has further exacerbated the spread of misinformation. Fake multimedia content, spanning texts, audios, images, and videos, has become increasingly sophisticated, with ‘deep fakes’ posing a significant threat to individuals’ reputations and livelihoods. High quality fake audios/videos are commonplace and, sometimes, threatening to ruin lives. Add to it statistics, and you would find keen recipients everywhere in the world, in this post-truth era, from almost every age group and with every educational background. In the words of Mark Twain [1], quoting former British PM Benjamin Disraeli, “There are three kinds of lies: lies, damned lies, and statistics.”

However, it is essential to acknowledge the crucial role of authentic multimedia content in documenting global events and holding perpetrators accountable. Audiovisual media is one of the most important instruments to highlight not only atrocities/genocides across the globe, but also fixing the responsibility of a given crime on the guilty. Its importance in a court of law, as well as the court of the people, cannot be underestimated. Hence, we cannot undermine altogether important means of evidence merely on the suspicion of being fake. Consequently, there is a pressing need for robust methods to verify the authenticity of multimedia content, including audios. In this context, one important aspect of non-machine voice impersonation is mimicking someone’s voice to attribute to him/her something which was never said in one go in a context that may be uncomfortable.

The detection of human audio mimicry, performed by skilled voice actors, remains challenging, particularly in cases of blind mimicry, where there is either partial or complete absence of the authentic recorded voice of the attributed person. This difficulty lies in identifying mimicked audio without any reference-be it original, mimicked, or impersonated. This challenge forms the crux of the research problem addressed in this study. Specifically, we seek to determine whether a given audio is human-mimicked or authentic, without relying on prior knowledge of the purported speaker. Leveraging recent advances in machine learning, particularly neural networks and deep learning, we aim to extract meaningful features from audio data to train models capable of discerning genuine recordings from forgeries. Additionally, we may explore the influence of spoken language in our study. Our underlying assumption is that the system has never encountered the purported speaker before.

The recent advances in Machine Learning (ML)—especially neural networks and deep learning—can be exploited in forgery detection in audiovisual data. With the potential amount of available data being huge, deep learning can be the best way to classify it. The idea is to extract important features from a lot of audios and feed it to a neural/deep network that will help the model to learn how to identify the real audios from faked audios.

The rest of the paper is arranged as follows. Section 2 briefly describes the background speech processing concepts needed for the comprehension of this article. The related work from literature is outlined in Sect. 3 which is followed by Sect. 4 to introduce our dataset for this work. Section 5 explains our methodology which is then trained and tested on the dataset presented in Sect. 6 that analyses all the ensued results. Section 7 outlines the benchmarking results. Finally, Sect. 8 concludes the paper.

2 Speech pocessing

It is important to know the difference between audio and speech. While an audio is a waveform data in which the amplitude changes with respect to time, speech is the oral communication [2] and pertains to the act of speaking and expressing thoughts and emotions by sounds and gestures. The human brain processes and analyses everything around to help the body with the right response or reaction, and it does the same for the voices [3]. To be able to hear and process a voice, the inside of a human ear is equipped with small hair of various sizes; some are short and respond to resonate with low frequency voices while others are long that resonate with the high frequency voices. Each of these hairs is connected to a nerve that carries a signal to the brain for processing [4].

An audio signal is a representation of sound in function to the vibration of sound that is audible to the human ear [5]. Audio frequency [6] is the periodic variation of sound, with the human audible frequency being 20 Hz to 20 kHz [7]. For a machine, the processing of an audio is different from humans. In order for the machine to get a sound it should have the needed devices that are able to record and save the audio in machine processable formats like the well-known mp3, WMA, WAV, or others.

Features from speech signals can be broadly classified as temporal and spectral features. The temporal features are time domain features having simple physical interpretation and easy to compute. Examples are signal’s energy, maximum amplitude, zero crossing rate, minimum energy etc [8]. The spectral features, on the other hand, are frequency-based features that are extracted after passing the time domain signal to the frequency domain using Fourier or other similar transforms. Examples are frequency components, fundamental frequency, centroid, spectral flux, spectral density, roll-off etc [8]. In the context of audio signals such features may be helpful in the identification of pitch, notes, rhythm and melody etc.

Spectrum and cepstrum are two important frequency-based concepts in audio processing. A spectrum is mathematically a Fourier transform of a signal which converts a time-domain signal into frequency domain [9], i.e. spectrum is the audio signal in frequency domain. A cepstrum is the log of the magnitude of the spectrum followed by an inverse Fourier transform. That’s why its domain is neither frequency nor the time; its domain is called quefrency [9]. Cepstrum can be said of as a sequence of numbers that characterize a frame of speech [10]. Since the Fourier transform is a linear operation, so is consequently the cepstrum; the spectrums of the wavelet and reflectivity series are additively combined in the cepstrum [11].

Following are some important features [12] exploited in speech processing:

-

Zero crossing rate: It indicates the number of times the value of the signal changes between positive and negative and vise versa. It is also used to measure the noise in a signal, and it usually gives high value in case of a noisy signal [13].

-

Spectral centroid: It is a feature based on frequency which indicates the location of the center of mass of the spectrum. In audios, it is known as a good predictor of “ brightness” of a sound [14].

-

Spectral roll off: This feature is used to differentiate between the harmonic sound (below roll off) and the noise sound (above roll off). It is known as the energy spectrum under a specific percentage that is defined by the used (85% by default) [15].

-

Spectral bandwidth: The difference between the higher and lower frequencies in a group of continuous frequencies.

-

Chroma: This representation for audio where the spectrum is divided onto 12 bins representing the 12 distinct semitones (or chroma) of the musical octave.

-

Root Mean Square Energy (RMSE): RMSE represents the energy of the signal, and shows how loud the signal is [16].

-

Spectral flux: It measures how quick the power spectrum of a signal is changing, and it is calculated by comparing the changes of the power spectrum between one frame and the frame before it[17].

-

Spectral density: It is the measure of signal’s power content against frequency [18].

-

Cepstral Features: These are, as stated above, quefrency domain features with the following being considered important:

-

Mel Frequency Cepstral Coefficients (MFCCs [19, 20]): MFCCs are widely used features for speech recognition. The Mel-frequency scale represents subjective or perceived pitch as its construction is based on pairwise comparisons of sinusoidal tones. The conversion between Hertz (f) and Mel frequencies (m) can be generalized as:

$$\begin{aligned} m= & {} 2595 \cdot \log \left( 1+\frac{f}{700}\right) , \end{aligned}$$(1)$$\begin{aligned} f= & {} 700 \cdot (10^{m/2595} - 1). \end{aligned}$$(2)MFCCs are obtained by applying a short time Fourier transform to window-based slices from the audio signal, followed by calculating the power spectrum and consequently filter banks (triangular in shape). The filter bank coefficients are highly correlated and one way to de-correlate them is by applying a Discrete Cosine Transform DCT to get a compressed representation in the form of MFCC. Typically, MFCC 2–13 (i.e. 12 coefficients) are kept, and the rest are discarded [21].

-

Gammatone Frequency Cepstral Coefficients (GFCCs) [22]: used in a number of speech processing applications, such as speaker identification. A Gammatone filter bank approximates the impulse response of the auditory nerve fiber thus emulating human hearing and its shape can be likened to a Gamma function (\(e^{-2\pi (f_c) b t}\)) modulating the tone (\(\cos (2\pi f_{c}t+\phi )\)) [23]:

$$\begin{aligned} g(t) = at^{n-1}e^{-2\pi (f_c) b t}\cos (2\pi f_{c}t+\phi ) \end{aligned}$$(3)Where a is peak value, n the order of the filter, b the bandwidth, \(f_c\) the characteristic frequency and \(\phi\) is initial phase. \(f_c\) and b can be derived from Equivalent Rectangular Bandwidth (ERB) scale, using the following equation [24]: =

$$\begin{aligned} \text {ERB}(f_c)= & {} 24.7 \cdot \left( 4.37 \cdot \frac{f_c}{1000} + 1\right) \end{aligned}$$(4)$$\begin{aligned} b= & {} 1.019 \times \text {ERB}(f_c) \end{aligned}$$(5)For GFCC, FFT treated speech signal is multiplied by the Gammatone filter bank, reverted by IFFT, noise is suppressed by decimating it to \(100\) Hz and rectified using a non-linear process. The rectification is carried out by applying a cubic root operation to the absolute valued input [24]. Approximately, the first 22 features are called GFCC and these may be very useful in speaker identification. For a concise comparison on MFCC and GFCC, the reader can further consult [22].

Linear Prediction Cepstral Coefficients (LPCCs) and Linear Prediction Coefficients (LPCs) were the main features used in automatic speech recognition before MFCC specially with Hidden Markov Model (HMM) classifiers [10]. Some other important Cepstral features are Bark Frequency Cepstral Coefficients (BFCCs) and Power-Normalized Cepstral Coefficients (PNCCs).

-

3 Related work

Digital Multimedia forensics has garnered significant attention in recent years, primarily focusing on image forgery detection [25], particularly in the realm of copy/move forgery detection [26]. However, digital audio forgery detection has not received commensurate attention. It is crucial in multimedia forensics to ensure the authenticity and integrity of the data before it is used as evidence. The primary objective of audio forensics is to ascertain the integrity of the audio, distinguishing between real and fake recordings and identifying the individuals speaking. The applications of audio forensics vary, from legal proceedings to preempting social media or paparazzi rumors.

3.1 The spectrum of voice impersonation

Digital impersonation involves creating speech that can deceive people or machines into attributing it to a legitimate source, potentially leading to social or economic harm. An important aspect of audio impersonation involves mimicking someone’s voice to falsely attribute statements to them in uncomfortable contexts. The output of voice impersonation must be convincing, both to humans and machines, in being naturally uttered by the target speaker. This requires mimicking the signal qualities, like pitch, as well as the speaking style of the target [27]. In this age of deep fakes, seamless machine-based impersonation is a reality. The method in [27] relies on using a neural network-based framework that uses Griffin-Lim method [28] which can learn to mimic a person’s voice and style and then produce a voice that mimics the persons’ voice.

3.1.1 Voice cloning

Cloning technologies can learn the characteristics of the target speaker and utilize prepared models to mimic a person’s voice from only a few sound samples. The developments in cloned speech generation technologies can create a fake machine speech that is similar to the real voice of the target speaker [29]. There have been research efforts focusing on how to detect this kind of audio and how to enable systems to recognize them, such as the anti-Spoofing works [30,31,32,33]. In this context, the ASVspoof series of challenges are an important mention in dealing with spoofing in automatic speaker verification systems. ASVspoof baseline systems are based in modern deep learning architectures and having deep- and handcrafted features. although related, the problem we are dealing here is yet to be taken up in these challenges, to the best of our knowledge at least.

3.1.2 Voice disguise

Voice disguise refers to altering one’s voice deliberately to conceal one’s identity. Impersonation is concerned with a voice disguise aimed at sounding like another person who exists [34]. While impersonation may be easily detectable, it is a hard task to trace back a disguised voice, of presumably a person who never existed, to the original speaker. A study in [35] reaffirms the importance of phonetically trained specialists in subjective voice disguise identification after an untrained audience failed to identify known speakers in case of falsetto disguise. Another study along similar lines [34], reports that naive listeners can better distinguish between an impersonator and a target rather than identifying voice disguise. Readers are recommended a review of similar studies [36]. The system proposed in [37] relies on the magnetic field produced by loudspeakers to detect machine-based voice impersonation attacks. The reported results in combination with a contemporary system against human impersonation attacks, are incredible, viz. \(100\%\) accuracy and \(0\%\) Equal Error Rate (EER) [38].

3.1.3 Human mimicry

Human attempted voice impersonation (or voice imitation) is mimicry of another speaker’s voice characteristics and speech behavior [39] without relying on computer related spoofing; in fact, ruling out the quest for technical artifacts in the suspected audio. The focus is mainly on voice timbre and prosody of the target [40]. Being a “technically valid speech”, mimic attacks may not be detectable, especially in Automatic Speaker verification (ASV) environments. A professional impersonator is likely to target all lexical, prosodic [41] and idiosyncratic aspects of the subject speaker; exaggeration may be inevitable [39]. The study reported in [42] states that speech patterns, pitch contours, formant contours, and spectrograms etc. from speech signals of maternal twins are at least almost identical, if not exactly the same. Hence, even a mere verification may be a difficult task in the case of identical twins. Therefore, more exploration of discriminating speech features is needed, as suggested about half a century ago [43]. Even a paternal twin may be hand, as in a recent incident related to phone banking [44, 45], a non-identical twin mimic the voice of his brother, a BBC reporter, to deceive the system [46]. The literature contains many such incident of fraud [47].

3.2 Detecting human mimicry

Even extensive works, like [48, 49], do little to touch the subject of impersonation, especially the human mimicry, i.e., mimicking someone’s voice to attribute to him/her something which was neither ever said nor uttered in one go. Here, we are talking of an audio that has never been tampered; other than the usual pre-processing, filtering and compression etc. As of datasets, there exist quite a few forensic databases [50], but even these don’t touch the aspect of impersonation in the form of human mimicking.

The problem of human mimicry may be the earliest one addressed in the literature and can be traced back to as far as 1970 s. For example, an old study [51] employed four professional experts to identify voice disguises from the spectrogram of two sentences uttered by a sample of 30 subjects (15 reference + 15 matching) in undisguised as well as five disguised modes. Even without any disguise, the experts could go as far as \(56\%\) accuracy in matching the speakers. To classify speakers, another early days’ simulation in [52] uses such parameters as fundamental frequency, word duration, vowel/nasal consonant spectra, voice onset time and glottal source spectrum slope. The parameters were estimated at manually identified locations from speech events within utterances. A later study [53], on a professional impersonator and one of his voice impersonations, showed that the impersonator not only focused on the voice of the target, but also matched the speech style and intonation pattern as well as the accent and pronunciation peculiar to the target.

A voice impersonator may be identified by finding the features a typical impersonator chooses to exploit and what he ignores in the targeted voice, as had been tried in [54] whereby two professional and one amateur impersonators were asked to mimic the same target in order to observe whether they have chosen the same features to change with the same degree of success.

The work described in [55] used professional impersonatorsFootnote 1 to mimic a person’s voice to identify the acoustic characteristics that each impersonator attempts to change to match the target. A comparison of the impersonated voices and the actual voice of the impersonator affirmed the importance of the pitch frequencies and vocal/glottal acoustics of the target speaker and impersonator. A similar work in [56], involving three voice impersonators with nine distinct voice identities, recorded synchronous speech and Electro Glotto Graphic (EGG) signals. An analysis based on the EGG and the vocal traces—including speech rate, vowel formant frequencies, and timing characteristics of the vocal folds—led to the conclusion that each impersonator modulated every parameter during imitation. In addition, vowel pronunciations were observed to have a high dependency on the vowel category.

More recently, the method in [57] uses a Support Vector Machine (SVM) to create speaker models based on the prosodic features (intonation, loudness, pitch dependent rhythm, intensity and mimic duration in addition to jitter, shimmer, energy change, and various duration measures) from the original speech of celebrities and professional mimicry artists; as well as the original speech of the latter. A related effort [58] uses Bayesian interpretation in combination with SVM. A similar prosodic features-based method [59], analyzes the ability of impersonators to estimate the prosody of their target voices while using both intra-gender and cross-gender speeches.

4 The dataset

It seems that a standard dedicated speech impersonation database may not be publicly available, e.g., the study reported in [60] used the YOHO database that was designed for ASV systems. The best one can get is to collect from online sources, like YouTube, audios of celebrities and their mimicked versions by various professionals. Alternatively, one may exploit the public datasets, like voxceleb [61] that contain the original voices of celebrities; one may still vie for human mimicked version voices of these celebrities on YouTube. A related dataset is [62].

To collect the raw data, we went through a number of social media apps and sites and downloaded the audios which were then edited to conform to the proposed model by limiting it to a maximum duration of 20 s in WAV format. One part of the dataset consists of all English audios (both real and mimicked). The second part of the dataset contains a mix of both English and Arabic audios. The audio files are named so that the first four characters are digits to represent the index and the fifth character is either ‘r’ or ‘f’ to label the voice as real or faked, respectively.

Our goal is to blindly identify whether a given voice is mimicked or otherwise. Hence, for our experiments, a set of independent real and faked audios was required to create the dataset; real and faked voices uttered independently of what is being said and who said it and independent of the language. We employed 933 distinct English spoken audio samples, divided into 746 for training (including \(20\%\) for cross validation) and 187 for testing. To ensure language versatility, an additional 194 Arabic medium samples were included, resulting in a total of 1127 samples (901 for training and cross validation, and 226 for testing).

5 The proposed method

The use case of our method concerns the scenario of a complete blindness wherein no prior or side information is available about the speaker. The idea is to accept/reject an input voice, right at the outset, without any recourse to the already available record. Our emphasis is on classifying the speech, as faked, or otherwise, under the assumption that no other recorded voice of the speaker is available; whether genuine or disguised. The purported speaker is only represented once in the training data; that too, either as real or mimicked, but not both. Potentially, our method may be very useful in improving efficiency of many audio processing methods, especially, when applied at the pre-processing stage.

The method we used is loosely based on the one described in [63] for recognizing the spoken digits (0–9) from the audio samples of six people.

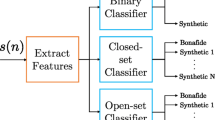

The proposed model is outlined in Fig. 1 that follows the steps given below.

5.1 Input

For the training set, it is essential that:

-

1.

Exactly one sample pertains to a purported speaker.

-

2.

Either the real or the faked voice of a given speaker is part of the dataset; in fact, they should be mutually exclusive.

-

3.

The spoken words are not required to be identical.

Keeping the above in view, We extracted our dataset from the raw dataset to contain 933 English-only audio samples and 1127 samples in both English and Arabic.

The input is constituted by the WAV files corresponding to the above that are stored in a suitable data structure. The model works in labeling our data based on the last letter (‘f’ or ‘r’) in the name of the file before storing it in a separate array of labels. The array is mapped to a separate perspective array of features obtained after the subsequent two steps.

5.2 Feature extraction

The model works on extracting the needed features using the Python librosa [64] package. The main features we relied on were 26 in total:

-

RMSE (E for Energy),

-

Zero crossing rate,

-

Spectral centroid,

-

Spectral bandwidth,

-

Roll off,

-

Chroma,

-

20 MFCCs.

These features are already described in Sect. 2 with some details. All these 26 features would be normalized and concatenated, before being fed to the neural network, as input, during the training phase.

5.3 Pre-processing the dataset

Using sklearn.model_selection, the feature set is first partitioned to training and testing sets. During the training phase, the training set is dynamically partitioned to training and validation parts, as is the case with cross validation. By employing the sklearn.preprocessing.StandardScaler class, the data is normalized in order to better structure it for visualization and analyses. The data is standardized, which means that they will have a mean of 0 and a standard deviation of 1.

5.4 The neural network

The next step is the deep neural network outlined in Fig. 1 that follows a sequential model that has three hidden layers. The reason to choose the sequential model is its simplicity and the ability to add up more layers easily.

The model has alternating dense and dropout layers. The dropout layer is used for toning down too many feature associations during training in order to avoid over-fitting; a phenomenon called regularization. The relevant hyperparameter p, called ‘dropout rate’, is set to 0.5. For hidden layers, in general, the Rectified Linear Unit function (ReLU) is the activation function of choice. Being a binary classification, for the output layer, we are relying on the sigmoid activation function. We chose the sigmoid activation function for binary classification due to its ability to provide clear class probabilities between 0 and 1. While softmax is typically used for multi-class classification, sigmoid is well-suited for binary outcomes. Note that:

Fig. 2 gives a snapshot of the layers involved in an example execution in the form of model summary.

After preparing the model, we pass on the data for training the model via the usual fit() function. We use the adaptive moment estimation (adam) as optimizer [65] for its efficiency, manageable memory requirements and its amenability for larger data/parameters. As we have only two possible labels (real or faked), for better accuracy, the loss function is based on the sparse categorical cross entropy. The number of epochs were set to 140 which is the number of times the model will train before it completes the training process. The batch-size was set to 128 and corresponds to the number of samples processed before the model is updated. Finally, the testing phase involved the usual prediction function (predict()) with subsequent comparison of the predicted labels with the actual labels from the dataset. The learning rate was optimized to 0.0003.

6 Experimental results

It must be noted that although the size of our dataset is almost double the sample size we are reporting (every voice had a faked and real versions), for the sake of blindness either the real or fake version was included in the dataset. In other words, our sample has either a real or mimicked version of an individual’s voice, but not both.

-

1.

The model was first trained and tested with all-English audios already described in the previous section. It consisted of 933 samples which were partitioned into 746 training (\(20\%\) cross validation) and 187 test cases. The resultant confusion matrix corresponding to the training of the model on 933 samples was:

$$\begin{aligned} Train\ (all\ English)\ \ \begin{pmatrix} TP &{} FP \\ FN &{} TN \end{pmatrix} = \begin{pmatrix} 372 &{} 13 \\ 4 &{} 357 \end{pmatrix}, \end{aligned}$$(8)where TP is the True positives, TN is the True Negatives, FP is the False Positives, FN is the False Negatives.

Over the same dataset, the resultant confusion matrix par rapport the testing, based on 187 samples, was observed to be:

$$\begin{aligned} Test\ (all\ English) = \begin{pmatrix} 85 &{} 9 \\ 2 &{} 91 \end{pmatrix}. \end{aligned}$$(9)Based on the above confusion matrices, for all English dataset, the resultant training accuracy was found to be \(0.977 (97.7\%)\) as against the testing accuracy of \(94.1\%\). These results are interesting in the face of the fact that the method is blind and no additional information is made available to our method.

-

2.

To check whether the spoken language has any bearing upon the results, we chose a test case of 194 Arabic language audios from the mixed set of data taken from the raw dataset. When tested on the model trained on the English-only datset, we got a classification accuracy of \(59.9\%\). This implied that the spoken language also seems to be a deciding factor. We therefore ought to revisit the training part using a mix of English and Arabic audios. In fact we combined the three sets to get our mixed set to retrain our network on. It must be noted that our use case has one and only one sample per purported speaker; ‘real’ or ‘fake’ as our use of the terms blind and zero prior knowledge dictates. That is to say, if there’s a ‘fake’ speech sample of a given purported speaker, then there’s no ‘real’ speech sample, and vice versa. Neither has the proposed system ever encountered the purported speaker, whether fake or real. With that in mind, we must have no spoken language barrier in training our network. That is why we combined the English and Arabic speech samples in the mixed data set to nullify language dependence in our use case.

-

3.

The mixed set of 1127 audios was partitioned into 901 training samples, including \(20\%\) for cross validation, and the rest 226 constituted the test set. The resultant confusion matrix was:

$$\begin{aligned} Train\ (Mixed) = \begin{pmatrix} 450 &{} 13 \\ 9 &{} 429 \end{pmatrix}. \end{aligned}$$(10)The computed training accuracy was thus found to be \(97.6\%\) in classifying the real and faked audios. The test accuracy for the mixed part was however \(94.2\%\) as can be deduced from the following confusion matrix:

$$\begin{aligned} Test\ (Mixed) = \begin{pmatrix} 108 &{} 10 \\ 3 &{} 105 \end{pmatrix}. \end{aligned}$$(11)

Figure 3 indicates that our model has a good learning curve, albeit noisy at later part. Note that we optimized based on the mixed data; hence the better curve (Fig. 3.b). Although we fixed the number of epochs to 140, most of the convergence is realized well before 60 epochs; only some refining need further epochs. The curves of loss decrease to a point of stability, although one can observe a small gap of validation loss with the training loss.

Table 1 sums up all the results par rapport both the all English and mixed parts of the dataset. For a better idea about the obtained results, the Receiver Operating Characteristic (ROC) curves for both the parts are illustrated in Fig. 4. The model has enviable efficiency as demonstrated by the high ROC AUC scores of 0.974 and 0.963 with respect to All-English and Mixed samples, respectively. A very important ROC based metric is the equal error rate (EER) which is the location on a ROC curve where the false acceptance rate and false rejection rate are equal. In general, a lower EER indicates highly accurate classification. The EERFootnote 2 in our case was 0.053 for English-only and 0.057 for the mixed part.

7 Benchmarking results

Even after a thorough search, we were not able to find a reference method that could benchmark the research problem we are after. There are methods, like [66], but they are non-blind, i.e., to say these methods require a recording of the original voice. One may argue in favor of spoofing detection literature, especially the one-class methods, like OC-Softmax [67] and its variants [68,69,70], but the main method concerns machine generated speech. Hence, it was decided to come up with reference data by inspection through human subjects/volunteers. We gathered a group of 10 native Arabic speakers and 20 native English speakers. Each volunteer would have to listen the audios from our dataset and tabulate it as real or faked as per his/her observation. Due to the scarcity of native English speakers, we had to contact the volunteers through social media and carry out the process live online.

By conducting the tests on two groups of people, the English native speakers were able to blindly identify 85% of the English audios given as real or fake correctly. In contrast, the proposed model got \(94\%\) accuracy in classifying the real and faked audios using the all-English dataset. With the native Arab speakers, 89% of the Arabic audios were identified correctly, however.

After conducting the human subject test and the results of the model test we found that there were some audios on which both tests agreed being faked audios, where in fact those audios were real, see Table 2. The probable cause, of the failure of the test participants in identifying those audios, may be the background noise that may have made them think that those audios were real.

8 Conclusion

The results reveal that, assuming zero prior knowledge about the speaker and his speech, our system can classify a given speech as faked or otherwise, on the fly. We had at our disposal only English and Arabic audios, but still we were able to deduce that the nature of spoken language may be important, as we got less than \(60\%\) accuracy in classifying Arabic audios using a network trained solely on English data. Following the incorporation of Arabic audio data, approximately one-fifth the size of the original English training dataset, and subsequent retraining of the network, a significant improvement in classification results was observed. This suggests that while spoken language may influence classification, its impact can be mitigated by introducing a small number of additional samples. Thus, maintaining a strict 1 : 1 ratio between languages in the training dataset may not be essential for addressing the language factor. However, further investigation into this aspect may be warranted, potentially by incorporating audio data from additional languages. In precise terms, the performance of our model is evidenced by its accuracy, which consistently exceeded \(94\%\) (specifically, achieving \(94.1\%\) for the English dataset and \(94.2\%\) for the mixed dataset, as outlined in Table 1). A Comparison with results by inspection from human subjects proves that our model can identify real and faked audios with a better accuracy.

As a future improvement, we first aim to collect more data to improve the Arabic dataset and make it available for researchers. Secondly, there is a need of diversity in the form of the inclusion of audios in other languages too. This may improve the classification capability of the model. Last but not least, deploying the model in mobile based software may help against impersonation offenses. Fundamentally, for an audio clip to be classified with high accuracy as being fake, without any references, original, mimicked, or impersonated target even exist, a much deeper analysis will be needed to provide a plausible feature-set upon which such a decision is being made by the model.

Data availability

Can be made available by writing an email to the first author.

Code availability

Can be made available by writing an email to the first author.

Notes

One interesting aspect of mimicry detection, in addition to employing professionals, could be to employ twins [43], at least of reference.

https://stackoverflow.com/questions/28339746/equal-error-rate-in-python. Accessed 07 June 2023.

References

Twain M. EBook of chapters from my autobiography. The project Gutenberg. Chap. XX. Published as EBook No. 19987; 1907. https://www.gutenberg.org/files/19987/19987-h/19987-h.htm#CHAPTERS_FROM_MY_AUTOBIOGRAPHY_I1. Accessed 1 Dec 2006.

https://www.dictionary.com/browse/speech. Accessed 26 Sept 2022.

https://www.computerhope.com/jargon/a/audio.htm. Accessed 26 Sept 2022.

Selig J. What is machine learning? A definition. https://expertsystem.com/machine-learning-definition/. Accessed 26 Sept 2022.

https://www.sciencedirect.com/topics/engineering/audio-signal. Accessed 26 Sept 2022.

https://www2.ling.su.se/staff/hartmut/bark.htm. Accessed 30 May 2020.

https://www.teachmeaudio.com/mixing/techniques/audio-spectrum/. Accessed 26 Sept 2022.

Hossain N. What are the spectral and temporal features in speech signal? https://www.researchgate.net/post/What-are-the-Spectral-and-Temporal-Features-in-Speech-signal. Accessed 26 Sept 2022.

Singh J. An introduction to audio processing and machine learning using Python. https://opensource.com/article/19/9/audio-processing-machine-learning-python. Accessed 26 Sept 2022.

A Tutorial on Cepstrum and LPCCs. http://www.practicalcryptography.com/miscellaneous/machine-learning/tutorial-cepstrum-and-lpccs/. Accessed 26 Sept 2022.

Hall M. The spectrum of the spectrum. https://agilescientific.com/blog/2012/3/23/the-spectrum-of-the-spectrum.html. Accessed 26 Sept 2022.

Kotha SP, Nallagari S, Fiaidhi J. Deep learning for audio; 2020. https://doi.org/10.36227/techrxiv.12089682.v1

Kulkarni N, Bairagi V. EEG-based diagnosis of Alzheimer disease: a review and novel approaches for feature extraction and classification techniques. Amsterdam: Elsevier Science; 2018. (ISBN: 9780128153925).

Spectral centroid. https://en.wikipedia.org/wiki/Spectral_centroid. Accessed 26 Sept 2022.

RollOff. https://essentia.upf.edu/reference/streaming_RollOff.html. Accessed 26 Sept 2022.

Notes on music information retrieval. https://github.com/stevetjoa/musicinformationretrieval.com/. Accessed 26 Sept 2022.

https://www.sciencedirect.com/topics/engineering/spectral-flux. Accessed 26 Sept 2022.

What is a Power Spectral Density (PSD)? https://community.sw.siemens.com/s/article/what-is-a-power-spectral-density-psd. Accessed 26 Sept 2022.

Davis S, Mermelstein P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Trans Acoust Speech Signal Process. 1980;28(4):357–66. https://doi.org/10.1109/TASSP.1980.1163420.

Mermelstein P. Distance measures for speech recognition, psychological and instrumental. Pattern Recogn Artif Intell. 1976;116:374–88.

Mel Frequency Cepstral Coefficient (MFCC) tutorial. http://practicalcryptography.com/miscellaneous/machine-learning/guide-mel-frequency-cepstral-coefficients-mfccs/. Accessed 26 Sept 2022.

Zhao X, Wang D. Analyzing noise robustness of mfcc and gfcc features in speaker identification. In: 2013 IEEE international conference on acoustics, speech and signal processing. 2013. p. 7204–8. https://api.semanticscholar.org/CorpusID:15100309

http://www.cs.tut.fi/~sgn14006/PDF2015/S04-MFCC.pdf. Accessed 28 May 2020.

Jeevan M, Dhingra A, Hanmandlu M, Panigrahi BK. Robust speaker verification using gfcc based i-vectors. In: Lobiyal DK, Mohapatra DP, Nagar A, Sahoo MN, editors. Proceedings of the international conference on signal, networks, computing, and systems. Springer, India. 2017. p. 85–91. https://api.semanticscholar.org/CorpusID:63438840

Qazi T, Hayat K, Khan SU, Madani SA, Khan IA, Kołodziej J, Li H, Lin W, Yow KC, Xu C, et al. Survey on blind image forgery detection. IET Image Process. 2013;7(7):660–70. https://doi.org/10.1049/iet-ipr.2012.0388.

Hayat K, Qazi T. Forgery detection in digital images via discrete wavelet and discrete cosine transforms. Comput Electr Eng. 2017;62:448–58. https://doi.org/10.1016/j.compeleceng.2017.03.013.

Gao Y, Singh R, Raj B. Voice impersonation using generative adversarial networks. In: 2018 IEEE international conference on acoustics, speech and signal processing (ICASSP); 2018. https://doi.org/10.1109/icassp.2018.8462018

Griffin D, Lim J. Signal estimation from modified short-time Fourier transform. IEEE Trans Acoust Speech Signal Process. 1984;32(2):236–43. https://doi.org/10.1109/TASSP.1984.1164317.

Malik H. Securing voice–driven interfaces against fake (cloned) audio attacks. In: 2019 IEEE conference on multimedia information processing and retrieval (MIPR); 2019. https://doi.org/10.1109/mipr.2019.00104

Gomez-Alanis A, Peinado AM, Gonzalez JA, Gomez AM. A gated recurrent convolutional neural network for robust spoofing detection. IEEE/ACM Trans Audio Speech Lang Process. 2019;27(12):1985–99. https://doi.org/10.1109/TASLP.2019.2937413.

Yamagishi J, Wang X, Todisco M, Sahidullah M, Patino J, Nautsch A, Liu X, LEE KA, Kinnunen TH, Evans NWD, Delgado H. Asvspoof 2021: accelerating progress in spoofed and deepfake speech detection. 2021. ArXiv abs/2109.00537 https://api.semanticscholar.org/CorpusID:237385791

Gomez-Alanis A, Peinado AM, Gonzalez JA, Gomez AM. A light convolutional GRU-RNN deep feature extractor for ASV spoofing detection. In: Proceedings Interspeech 2019. 2019. p. 1068–72. https://doi.org/10.21437/Interspeech.2019-2212.

Tak H, Patino J, Todisco M, Nautsch A, Evans NWD, Larcher A. End-to-end anti-spoofing with rawnet2. In: ICASSP 2021-2021 IEEE international conference on acoustics, speech and signal processing (ICASSP). 2020. p. 6369–73. https://api.semanticscholar.org/CorpusID:226236862

Delvaux V, Caucheteux L, Huet K, Piccaluga M, Harmegnies B. Voice disguise vs. impersonation: acoustic and perceptual measurements of vocal flexibility in non experts. In: Proceedings of the Interspeech 2017. 2017. p. 3777–81. https://doi.org/10.21437/Interspeech.2017-1080.

Wagner I, Köster O. Perceptual recognition of familiar voices using falsetto as a type of voice disguise. In: Proceedings of the 14th international congress of phonetic sciences (ICPhS 99). 1999. https://www.internationalphoneticassociation.org/icphs-proceedings/ICPhS1999/papers/p14_1381.pdf

Perrot P, Aversano G, Chollet G. Voice disguise and automatic detection: review and perspectives. Lecture Notes Comput Sci Progr Nonlinear Speech Process. 2007. https://doi.org/10.1007/978-3-540-71505-4_7.

Chen S, Ren K, Piao S, Wang C, Wang Q, Weng J, Su L, Mohaisen A. You can hear but you cannot steal: Defending against voice impersonation attacks on smartphones. In: 2017 IEEE 37th international conference on distributed computing systems (ICDCS); 2017. https://doi.org/10.1109/icdcs.2017.133

Furui S. Chapter 7—speaker recognition in smart environments. In: Aghajan H, Delgado RLC, Augusto JC, editors. Human-centric interfaces for ambient intelligence. Oxford: Academic Press; 2010. p. 163–84. https://doi.org/10.1016/B978-0-12-374708-2.00007-3. (ISBN: 978-0-12-374708-2).

Hautamäki RG, Kinnunen TH, Hautamäki V, Leino T, Laukkanen A-M. I-vectors meet imitators: on vulnerability of speaker verification systems against voice mimicry. In: Proceedings of InterSpeech, the 14th annual conference of the international speech communication association. Interspeech; 2013. p. 930–934. https://api.semanticscholar.org/CorpusID:14330856

Hao B, Hei X. Voice liveness detection for medical devices. In: Design and implementation of healthcare biometric systems. IGI Global; 2019. p. 109–36. https://doi.org/10.4018/978-1-5225-7525-2.ch005.

Farrús M, Wagner M, Anguita J, Hernando J. How vulnerable are prosodic features to professional imitators? In: The speaker and language recognition workshop. 2008. https://api.semanticscholar.org/CorpusID:241776

Patil HA, Parhi KK. Variable length Teager energy based MEL cepstral features for identification of twins. In: Chaudhury S, Mitra S, Murthy CA, Sastry PS, Pal SK, editors. Pattern recognition and machine intelligence. Berlin: Springer; 2009. p. 525–30. https://doi.org/10.1007/978-3-642-11164-8_85.

Rosenberg AE. Automatic speaker verification: a review. Proc IEEE. 1976;64(4):475–87. https://doi.org/10.1109/PROC.1976.10156.

HSBC reports high trust levels in biometric tech as twins spoof its voice id system. Biometric Technol Today 2017;2017(6):12. https://doi.org/10.1016/S0969-4765(17)30119-4

Simmons D. BBC fools HSBC voice recognition security system. https://www.bbc.com/news/technology-39965545. Accessed 26 Sept 2022.

Twins fool HSBC voice biometrics—BBC. https://www.finextra.com/newsarticle/30594/twins-fool-hsbc-voice-biometrics--bbc. Accessed 26 Sept 2022.

Jain AK, Prabhakar S, Pankanti S. On the similarity of identical twin fingerprints. Pattern Recogn. 2002;35(11):2653–63. https://doi.org/10.1016/S0031-3203(01)00218-7.

Zakariah M, Khan MK, Malik H. Digital multimedia audio forensics: past, present and future. Multimedia Tools Appl. 2018;77(1):1009–40. https://doi.org/10.1007/s11042-016-4277-2.

Masood M, Nawaz M, Malik KM, Javed A, Irtaza A, Malik H. Deepfakes generation and detection: state-of-the-art, open challenges, countermeasures, and way forward. Appl Intell. 2022;53(4):3974–4026. https://doi.org/10.1007/s10489-022-03766-z.

Kraetzer C, Oermann A, Dittmann J, Lang A. Digital Audio Forensics: A First Practical Evaluation on Microphone and Environment Classification. In: Proceedings of the 9th workshop on multimedia & security. Association for Computing Machinery, New York, NY, USA; 2007. p. 63–74. https://doi.org/10.1145/1288869.1288879.

Reich AR. Effects of selected vocal disguises upon spectrographic speaker identification. J Acoust Soc Am. 1976. https://doi.org/10.1121/1.2002461.

Wolf JJ. Efficient acoustic parameters for speaker recognition. J Acoust Soc Am. 1972;51(6B):2044–56. https://doi.org/10.1121/1.1913065.

Zetterholm E. Impersonation—reproduction of speech. Linguistics working papers, 49. 2001. p. 176–179. https://api.semanticscholar.org/CorpusID:16452236

Zetterholm E. Detection of speaker characteristics using voice imitation. In: Müller C, editor. Speaker classification II: selected projects. Berlin: Springer; 2007. p. 192–205. https://doi.org/10.1007/978-3-540-74122-0_16.

Kitamura T. Acoustic analysis of imitated voice produced by a professional impersonator. In: Proceedings of the annual conference of the international speech communication association (INTERSPEECH). 2008. p. 813–6. https://api.semanticscholar.org/CorpusID:13374900

Amin TB, Marziliano P, German JS. Glottal and vocal tract characteristics of voice impersonators. IEEE Trans Multimedia. 2014;16(3):668–78. https://doi.org/10.1109/TMM.2014.2300071.

Mary L, Babu KKA, Joseph A. Analysis and detection of mimicked speech based on prosodic features. Int J Speech Technol. 2012;15(3):407–17. https://doi.org/10.1007/s10772-012-9163-3.

S, R, Mary L, KK, AB, Joseph A, George GM. Prosody based voice forgery detection using svm. In: 2013 International conference on control communication and computing (ICCC); 2013. p. 527–30. https://doi.org/10.1109/ICCC.2013.6731711

Farrus M, Wagner M, Erro D, Hernando J. Automatic speaker recognition as a measurement of voice imitation and conversion. Int J Speech Lang Law. 2010. https://doi.org/10.1558/ijsll.v17i1.119.

Campbell JP. Speaker recognition: a tutorial. Proc IEEE. 1997;85(9):1437–62. https://doi.org/10.1109/5.628714.

Nagrani A, Chung JS, Xie W, Zisserman A. Voxceleb: large-scale speaker verification in the wild. Comput Speech Lang. 2020;60: 101027. https://doi.org/10.1016/j.csl.2019.101027.

Mandalapu H, Ramachandra R, Busch C. Multilingual voice impersonation dataset and evaluation. In: Yildirim Yayilgan S, Bajwa IS, Sanfilippo F, editors. Intelligent technologies and applications. Cham: Springer; 2021. p. 179–88. https://doi.org/10.1007/978-3-030-71711-7_15.

Vasconcelos R. Speaker recognition. 2022. https://github.com/ravasconcelos/spoken-digits-recognition/blob/master/src/speaker-recognition.ipynb. Accessed 15 Nov 2019.

McFee B, Raffel C, Liang D, Ellis DP, McVicar M, Battenberg E, Nieto O. librosa: Audio and music signal analysis in python. In: Proceedings of the 14th python in science conference; 2015. p. 8. https://doi.org/10.5281/zenodo.6759664.

Géron A. Hands-on machine learning with scikit-learn and tensorFlow : concepts, tools, and techniques to build intelligent systems. 2nd ed. Sebastopol: O’Reilly Media Inc; 2019.

Rodríguez-Ortega Y, Ballesteros DM, Renza D. A machine learning model to detect fake voice. In: Florez H, Misra S, editors. Applied informatics. Cham: Springer; 2020. p. 3–13. https://doi.org/10.1007/978-3-030-61702-8_1.

Zhang Y, Jiang F, Duan Z. One-class learning towards synthetic voice spoofing detection. IEEE Signal Process Lett. 2021;28:937–41. https://doi.org/10.1109/LSP.2021.3076358.

Li L, Xue X, Peng H, Ren Y, Zhao M. Improved one-class learning for voice spoofing detection. In: 2023 Asia pacific signal and information processing association annual summit and conference (APSIPA ASC); 2023. p. 1978–1983 . https://doi.org/10.1109/APSIPAASC58517.2023.10317117

Ding S, Zhang Y, Duan Z Samo: Speaker attractor multi-center one-class learning for voice anti-spoofing. In: ICASSP 2023-2023 IEEE international conference on acoustics, speech and signal processing (ICASSP); 2023. p. 1–5 . https://doi.org/10.1109/ICASSP49357.2023.10094704

Lin G, Luo W, Luo D, Huang J. One-class neural network with directed statistics pooling for spoofing speech detection. IEEE Trans Inf For Secur. 2024;19:2581–93. https://doi.org/10.1109/TIFS.2024.3352429.

Funding

Not applicable.

Author information

Authors and Affiliations

Contributions

S.A.A., K.H. and A.M.O. prepared the manuscript. S.A.A. and B.M. prepared the figures. K.H., A.M.O., N.K. , and M.S.N. reviewed the manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

All the authors have consented to participate as per all the subsequent bullet items.

Consent for publication

All the authors consented to publish the article.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Al Ajmi, S.A., Hayat, K., Al Obaidi, A.M. et al. Faked speech detection with zero prior knowledge. Discov Appl Sci 6, 288 (2024). https://doi.org/10.1007/s42452-024-05893-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-024-05893-3