Abstract

Insulated gate bipolar transistor (IGBT) is a power semiconductor module .Voids may arise in its solder process when a contaminant or gas is absorbed into the solder joint. They heavily influence the heat exchange efficiency of IGBT, so void inspection is very important. The segmentation of solder region is a crucial step for automated defect detection of IGBT based on x-ray computed laminography (CL) system. In recent years, deep learning has made remarkable process in semantic segmentation and has been used for the segmentation of solder joint between the direct bonded copper (DBC) substrate and baseplate, which has been proved to be accurate and efficient. However, deep learning architectures exhibit a critical drop of performance due to catastrophic forgetting when new IGBT samples encountered. Hence, this paper proposes to use online learning techniques to continuously improve the learned model by feeding new IGBT samples without losing previously learned knowledge.

Article Highlights

-

Acknowledges the inherent limitation of deep learning models, such as catastrophic forgetting, which can hinder performance when encountering new IGBT samples.

-

Introduces the use of online learning techniques as a solution to continuously improve the model by incorporating new IGBT samples without forgetting previously learned knowledge.

-

Aims to enhance model robustness and adaptability over time, ensuring consistent and accurate defect detection in IGBT soldering processes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The Insulated Gate Bipolar Transistor (IGBT) is a power semiconductor device utilized as an electronic switch, offering advantages such as reduced control circuitry size and complexity, resulting in cost savings [1]. IGBTs find diverse applications in electric cars, trains, variable speed refrigerators, and air-conditioners. During the die-attached DBC process, which involves joining chips and Direct Bonded Copper (DBC) substrates, the presence of contaminants or gases can lead to voids within the solder joint. Additionally, voids may occur when soldering the DBC substrate to the baseplate. These voids pose a significant problem as they impede heat dissipation and can cause electrical failures. Hence, it is crucial to segment the DBC for effective void inspection and resolution [2]. Figure 1 presents a cross-sectional view of an IGBT module.

Ultrasonic testing (UT) can be used to examine the voids in solder joint. However, it cannot examine the IGBT modules with pin-fin baseplate. Furthermore, IGBT module is easily contaminated by the liquor medium used in UT.

X-ray testing, utilizing digital radiography (DR) and computed tomography (CT), is also employed for identifying internal defects. DR generates two-dimensional images that capture the object’s structure along the X-ray direction [3]. It has been effectively used for void inspection in solder balls [4, 5] and solder joints [6, 7]. Conversely, CT generates three-dimensional images by reconstructing object slices from projection data collected at different angles, providing depth information [8]. IGBT modules are carefully placed on the planar stage, situated between the X-ray source and the planar detector. The planar stage allows for precise adjustment of the modules’ position, while the computer image processing system utilizes advanced algorithms to generate reconstructed slices of the modules. These reconstructed slices provide valuable insights into the internal structure of the IGBT modules, facilitating comprehensive analysis and evaluation.

Indeed, deep convolutional neural networks (CNNs) have achieved remarkable advancements in the field of semantic segmentation. These networks have demonstrated the ability to accurately assign semantic labels to individual pixels in images, enabling precise delineation of object boundaries and semantic understanding of the visual scene. By leveraging the hierarchical nature of deep CNN architectures, these models [9,10,11] are capable of learning complex patterns and representations from image data. The layers of the network capture increasingly abstract features, allowing for the extraction of fine-grained details and contextual information necessary for accurate semantic segmentation.

However, these networks encounter a major obstacle called catastrophic forgetting, which impedes their ability to learn new tasks while retaining performance on previously learned ones [12,13,14]. Traditional learning models, like Joint Training [15], require access to all samples related to old and new tasks during the entire training process. In contrast, real-world systems should be capable of updating their knowledge with minimal training steps, seamlessly incorporating new tasks while preserving previously acquired knowledge without any modification.

The online learning problem encompasses the capability of deep learning architectures to continuously improve the learned model by integrating new data while retaining previously acquired knowledge. Various methods [16,17,18,19] employ network architectures that expand during the training process. Another approach involves freezing or slowing down the learning process in specific parts of the network [20, 21]. Additionally, knowledge distillation has been proposed as a method to maintain high performance on old tasks. The concept was initially introduced in [22] and has since been adapted in several studies [23] to ensure the network’s responses remain stable for old tasks while incorporating new training samples.

While the problem of incremental learning has traditionally been addressed in image classification and object detection [24, 25], semantic segmentation has received relatively less attention. In our study, the semantic segmentation network DeepLabv3+-Lite (DL3) was employed to accurately and efficiently segment the DBC area [26]. However, two main challenges arise. Firstly, the original model performs poorly on unseen new IGBT samples of the same type. Secondly, it also exhibits subpar performance on unseen new types of IGBT samples. To address these challenges, online learning techniques is utilized as a solution.

This paper proposes online learning for semantic segmentation to effectively segment the solder region in new IGBT samples. We also compare our approach with other methods, including Feature Extraction, Fine-tuning, Independent Training, and Joint Training. The structure of this paper is organized as follows: Sect. 2 presents a novel framework for conducting online learning in semantic segmentation. In Sect. 3, we evaluate the performance of online learning for DBC segmentation, providing a comprehensive and intuitive comparison with related methods. Section 4 includes a discussion on the findings and implications of online learning. Finally, Sect. 5 concludes the paper, summarizing the key contributions and suggesting future research directions.

2 Methodology

This study introduces two methods based on DL3 for the continual addition and integration of new tasks in DBC segmentation, without utilizing data from previous tasks. The first method involves knowledge distillation applied to the output layer of the DL3 network. The second method focuses on knowledge distillation in the intermediate feature space of the DL3 network. These approaches enable the incorporation of new tasks while preserving the performance and knowledge acquired by the network.

DL3 is a semantic segmentation model that adopts an encoder-decoder architecture [27, 28]. This architecture enables precise object boundary delineation in the segmentation task. The encoder component of DL3 consists of two key neural network modules: the Xception module [29] and the atrous spatial pyramid pooling (ASPP) module [30].

The Xception module is employed as the backbone of the encoder. It utilizes depthwise separable convolutions, which decompose the standard convolution operation into depthwise convolution and pointwise convolution. This approach helps to reduce the computational complexity of the model while maintaining expressive power and capturing relevant features.

The ASPP module, on the other hand, is responsible for capturing contextual information. It performs atrous (dilated) convolutions at multiple dilation rates, effectively enlarging the receptive field of each neuron in the network. By pooling features at different resolutions, the ASPP module enables the model to gather contextually rich information and capture fine-grained details, which contributes to more accurate segmentation results.

By combining the Xception module and the ASPP module within the encoder-decoder architecture, DL3 achieves both efficiency and accuracy in semantic segmentation. The model efficiently captures and processes relevant spatial and contextual information, enabling accurate delineation of object boundaries in the segmentation task.

Conversely, the decoder module merges the encoder features with corresponding low-level features [31] of the same spatial resolution. It then applies simple bilinear up-sampling, followed by an additional round of simple bilinear up-sampling. Nonetheless, the adoption of large-scale models like DL3 requires significant computational and memory resources. To address this challenge, several techniques have been explored to reduce computational costs, including tensor factorization [32], channel/network pruning [33], and sparse connections [34]. These strategies aim to simplify the deep network structure and alleviate computational and memory requirements.

The DBC segmentation approach categorizes each pixel in an image as either DBC or non-DBC, making it a binary classification problem. Proper network pruning can be applied to optimize the system. Chen et al. [32] made modifications to the Xception model and achieved promising outcomes in semantic image segmentation. Notably, we introduced specific alterations to enhance computational speed and memory efficiency: (1) Reduced the number of channels for separable convolutions. (2) In the middle flow, each block does not require sixteen repetitions. The flow diagram and complete architecture of DL3 is shown in Fig. 2.

To initiate the training process, we utilize the DL3 network architecture to recognize the existing classes. Initially, the network is trained in a supervised manner using a standard cross-entropy loss. Once training is completed, we save the resulting model as \({M}_{0}\).

Subsequently, we proceed with an incremental step to enable the model to learn a new set of classes each time. During this incremental step, we load and update the previous model \({M}_{0}\), employing a linear combination of two losses: the cross-entropy loss \({L}_{ce}\), which facilitates learning the new classes, and the distillation loss \({L}_{d}\), which assists in retaining the knowledge of previously encountered classes. Further details about the distillation loss will be provided below.

Following the incremental step, we save the updated model as \(\text{M}\), and this procedure is repeated whenever a new set of classes needs to be learned. The total loss L utilized to train the model is determined by a combination of the cross-entropy loss and the distillation loss, as follows:

The parameter λ plays a crucial role in balancing the two terms of the loss function. When λ is set to 0, it indicates that no knowledge distillation is applied, resulting in the simplest scenario of fine-tuning.

The choice of λ allows for flexibility in balancing the importance of preserving previous knowledge (through distillation) and adapting to new data. Higher values of λ give more weight to the distillation loss, emphasizing the retention of previous knowledge, while lower values or setting λ to 0 prioritize the new data and fine-tuning process over retaining the previously learned knowledge.

The objective function used to train the network is the sparse softmax cross-entropy loss. This loss function is specifically chosen for its ability to improve classification accuracy, especially in multiclass problems.

The sparse softmax cross-entropy loss [35] involves applying the softmax function to the outputs of the network’s final layer, which generates a probability distribution across the different classes. Mathematically, the softmax function for an input vector x, with j indexing the output units and M representing the number of classification categories, is defined as: \(\left[{S}_{j}\left(x\right)=\frac{{e}^{xj}}{{\sum }_{k=1}^{N}{e}^{xk}}\right]\). The cross-entropy loss, denoted as \({L}_{ce}\), is then calculated by comparing the predicted probabilities \({p}_{k}\) from the softmax layer with the actual labels \({y}_{k}\) of the input image. The cross-entropy loss equation is given as:

In this equation, N represents the number of classes, \({y}_{k}\) represents the actual label of the input image, and \({p}_{k}\) represents the predicted probability for the image belonging to class k. The loss is averaged over each loss element in the batch.

By utilizing the sparse softmax cross-entropy loss, the network aims to optimize its parameters through backpropagation and gradient descent, thereby improving its ability to accurately classify inputs across multiple classes. The loss function guides the learning process by quantifying the discrepancy between the predicted probabilities and the true labels, allowing the network to update its weights and biases accordingly.

2.1 Distillation on the output layer ( \({\varvec{L}}_{\varvec{d}}^{\varvec{{\prime }}}\) )

In our approach, we aim to ensure that the output probabilities of the current model closely resemble the recorded outputs from the original network for each original task. To achieve this, we employ the Knowledge Distillation loss, which was initially proposed by Hinton et al. [26] and has been shown to be effective in encouraging the outputs of the current model to approximate those of the old model.

The modified cross-entropy loss, denoted as \({L}_{d }^{{\prime }}\), is utilized for this purpose. It measures the discrepancy between the predicted labels, \({y}_{k}^{{\prime }}\), generated by the output of the old model \({M}_{0}\), and the output probabilities, \({p}_{k}^{{\prime }}\), produced by the softmax layer in the current model \(\text{M}\). Importantly, the cross-entropy loss is masked to consider only the already seen classes, as our objective is to guide the learning process to retain the knowledge of these classes.

By incorporating the Knowledge Distillation loss into the training process, we enable the current model to learn from the outputs of the old model, effectively transferring the previously learned knowledge to the updated model. This facilitates the preservation of information related to the already seen classes, contributing to the continuous improvement and retention of the learned knowledge during the incremental learning process, i.e.:

2.2 Distillation on intermediate feature space ( \({\varvec{L}}_{\varvec{d}}^{\varvec{{\prime }}\varvec{{\prime }}}\) )

In our approach, we have introduced a different strategy to preserve previously learned knowledge by maintaining the similarity between the encoder of the current model and the previously learned model. This is achieved by applying a knowledge distillation function to the intermediate level of the feature space, specifically before the decoding stage.

In this case, the distillation function used for the features space is no longer the cross-entropy loss but instead the L2 loss. The reason behind this choice is that the considered layer is not a classification layer anymore, but rather an internal stage where the output needs to be kept close to the previous one, for example, using the L2-norm. Through empirical evaluation, we have found that using cross-entropy or L1 loss in this context yields inferior results.

By employing the L2 loss as the distillation function for the intermediate feature space, we are able to effectively transfer knowledge from the previously learned model to the current one. This enables the preservation of learned representations and facilitates the continuous improvement of the model’s performance over time. Considering that model \(\text{M}\) can be decomposed into an encoder and a decoder, the distillation term would become:

where \({E}_{0}\) and \(\text{E}\) denotes the features computed by the encoder of the old model \({M}_{0}\) and the current model \(\text{M}\) when new data is fed as input.

3 Experimental results

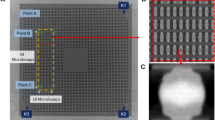

In order to validate the effectiveness of our proposed method, an experiment was conducted using a dataset of reconstructed slices. These slices were generated by the x-ray CL system mentioned earlier. The dataset consists of four types of IGBTs (P6, B, P3, C6) obtained from a semiconductor company, shown as Fig. 3. Each IGBT type is associated with a set of corresponding reconstructed slices. In total, 1536 reconstructed slices were obtained for each sample. However, for the purpose of our study, we focused only on slices that exhibit clear DBC edges, as these are crucial for accurate segmentation. From the entire set of slices, we selected ten slices above and below the welding layers, resulting in a total of 20 slices for each IGBT type. All the selected slices have a size of 2048 × 2048 pixels. These slices serve as the input for the segmentation task and are instrumental in evaluating the performance of our proposed method.

In the segmentation task, we focused solely on labeling the welding layer within each image slice using two classes: background and DBC region. To accomplish this, we utilized professional labeling software called Labelme. The labeled images served as the ground truth for both the segmentation training phase and the final evaluation of segmentation performance. This ensured that the network was trained and evaluated based on accurate and reliable annotations provided by Labelme.

Due to computational power constraints, we resized the input images to a resolution of 256 × 256. This down sampling step was performed to reduce the computational load and facilitate the training process.

To initialize the weights of the network, we employed the technique described in the paper by He et al. [36]. This initialization method, known as He initialization which is commonly used in deep learning architectures. It helps in setting the initial weights of the network in a way that promotes efficient training and prevents the saturation or vanishing gradient problems often encountered in deep neural networks. By utilizing He initialization, we ensured a solid starting point for the network’s weight parameters and facilitated more effective training.

To train all the variants of the network, we employed the stochastic gradient descent (SGD) optimization algorithm with a momentum of 0.9. SGD with momentum is a popular optimization method in deep learning, which helps accelerate convergence and overcome local minima. By utilizing a momentum value of 0.9, we incorporated a factor that retained a memory of the previous weight updates, allowing the optimizer to maintain a more consistent direction and speed during the training process. This contributed to more efficient training and improved the overall convergence of the network variants. The specific reference for the choice of momentum value can be found in the paper [37].

In our training setup, we adopted the “poly” learning rate policy to adjust the learning rate during training. The initial learning rate was set to 0.05 and then multiplied by \({\left(1-\frac{iter}{\text{m}\text{a}\text{x}\_iter}\right)}^{power}\), where iter represents the current iteration and max_iter represents the maximum number of iterations. The power value was set to 0.9 [38]. This policy allows the learning rate to gradually decrease over time, aiding in fine-tuning the model.

To prevent overfitting and encourage regularization, we included weight decay regularization with a coefficient of 4·10−5. Weight decay helps to prevent the model from assigning excessively large weights to certain parameters, thus promoting more robust generalization. During training, we employed a batch size of 16 images. The maximum number of epochs was set to 300, allowing the model to iterate through the entire training dataset multiple times. For the incremental training steps, a lower learning rate of 0.01 was used to better preserve the previously learned weights. The maximum number of epochs for the incremental training phase was set to 200.

To evaluate the performance of the trained models, we utilized the Mean Intersection over Union (mIoU) metric, which is widely used for semantic segmentation. The mIoU is calculated by considering true negatives (TN), false negatives (FN), false positives (FP), and true positives (TP) for all classes, and it is defined as:

This metric provides an overall measure of the segmentation accuracy by considering both class-wise intersection over union and balancing the contributions of true negatives and true positives.

3.1 Addition of the same type

We consider one type of IGBT as the original sample and analyze the addition of new IGBT samples belonging to the same type to DL3. We indicate \({M}_{0}\) as the first standard training of DL3 using the original sample as training dataset. The network is then updated exploiting the dataset of new IGBT samples. The resulting model is referred to as \(\text{M}\).

The original images of the first row in Fig. 4 show reconstructed of three groups of IGBT modules. In every group, they belong to the same type of IGBT with different characteristic. The images of the second and the third row show the output predicted by the old model \({M}_{0}\) and the current model \(\text{M}\). The DBC regions were marked with white color. The quantitative comparison of DL3 predicted for DBC segmentation is shown in Table 1. From the odd rows of Table 1 we can appreciate that feature extraction performs poor for new IGBT samples with final mIoU of 92.6%, 97.7%, 90.1% which are lower than 99.4%. From the third columns, we can appreciate that online learning performs well on all datasets.

Table 2 provides a summary of the comparisons between the proposed methodologies for DBC segmentation and other commonly used methods. In the second row of Table 2, it is evident that fine-tuning the network results in a performance degradation, with a mIoU of 98.2%. This observation confirms the presence of the catastrophic forgetting phenomenon in semantic segmentation, even when incorporating new samples of the same type of IGBT.

However, the overall comparison reveals that online learning techniques are capable of enhancing the segmentation performance. By continuously incorporating new IGBT samples without losing previously learned knowledge, the online learning approach improves the segmentation results compared to other methods.

This demonstrates the effectiveness of the proposed methodology in addressing the challenge of catastrophic forgetting and continuously improving the segmentation model’s performance. The online learning approach allows for the accumulation of knowledge while adapting to new data, leading to improved segmentation accuracy and avoiding the loss of previously acquired information.

3.2 Addition of different types

In this section we tackle a more challenging scenario where the initial learning stage is followed by one step of online learning with a different type of IGBT. We consider P6 as the original sample and analyze the addition of new type B to DL3.

The leftmost column in Fig. 5 displays the original images, which represent reconstructed slices of two types of IGBT modules. In this case, we denote the initial training of DL3 using the P6 sample dataset as \({M}_{0}\). Subsequently, the network is updated by incorporating the B dataset, resulting in an updated model referred to as \(\text{M}\). The right columns of the figure illustrate the qualitative comparisons of DL3’s predictions for DBC segmentation using the proposed methods.

Table 3 provides a summary of the comparisons between the proposed methods for DBC segmentation and other commonly used methods. From the first row of Table 3, it is evident that fine-tuning the network leads to a significant degradation in performance, with a final mIoU of 94.7%. This reaffirms the presence of the catastrophic forgetting phenomenon in semantic segmentation when adding a new type of IGBT samples.

Overall, the approaches based on\({L}_{d}^{{\prime }}\) (modified cross-entropy loss) outperform the approaches based on \({L}_{d}^{{\prime }{\prime }}\) (L2 loss). This is particularly evident due to the differences between the P6 and B types of IGBT modules. Since their encoders do not have a similar intermediate feature space, the \({L}_{d}^{{\prime }}\) approach, which aims to retain knowledge by aligning the outputs with the old model, proves to be more effective.

These results demonstrate the challenge of adapting deep learning models to new data while preserving knowledge learned from previous samples. The proposed approaches based on \({L}_{d}^{{\prime }}\) mitigate the detrimental effects of catastrophic forgetting and achieve improved segmentation performance, even when dealing with different types of IGBT modules.

4 Discussion

In this work, we wish to add new IGBT samples to DL3 neural network without require access to the training data for original tasks. Table 4 shows relative advantages of our method compared to commonly used methods. It is not appropriate to train all kinds of models for the same type of IGBT like independent training. When new IGBT samples encountered, we can use online learning techniques to continuously improve the learned model by feeding new IGBT samples without losing previously learned knowledge. In this way, we can ensure the best original and new task performance while being high efficiency without require previous task data.

Moreover, online learning and joint learning can be combined. Joint learning aims to trained one model for every type of IGBT like. In this way, the training model could be smaller, and the training speed was faster. For the same type of IGBT, when new IGBT samples encountered, we should use online learning techniques to improve the learned model by feeding new IGBT samples without using old dataset. We have developed an online detection system, which is shown in Fig. 6. Using this system, various types of IGBT welding defects can be detected online quickly.

5 Conclusion

This paper introduces an online learning method for segmenting IGBT solder joints using the DL3 neural network. The presence of voids in IGBT solder joints significantly impacts the heat exchange efficiency of the IGBT. In this study, two distillation loss functions are proposed, which are combined with a cross-entropy loss for the segmentation task. Notably, our method does not require storing images from previous datasets, thereby reducing memory consumption. Instead, only the previous model is used to update the current one. By leveraging tomogram data reconstructed from our x-ray CL system, we demonstrate the accuracy and efficiency of our method in segmentation compared to commonly used techniques. The proposed approach allows for continuous improvement of the learned model by incorporating new IGBT samples without compromising the previously acquired knowledge. This contributes to both accurate and efficient segmentation, addressing the challenges associated with IGBT solder joint analysis.

Data availability

The datasets analysed during the current study are not publicly available due to privacy but are available from the corresponding author on reasonable request.

References

Khanna VK. Insulated gate bipolar transistor IGBT theory and design. Hoboken: Wiley-IEEE; 2004.

Xu L, Zhou Y, Zhang Z, Chen M, Liu S. Influence of solder void to thermal distribution of IGBT module. J CAEIT. 2014;9(2):125–9.

Buzug T. Computed tomography: from photon statistics to modern cone-beam CT. Medical Physics. 2009;36(8):3858.

Said AF, Bennett BL, Karam LJ, Pettinato J. Automated void detection in solder balls in the presence of vias and other artifacts. In: IEEE transactions on components, packaging and manufacturing technology. 2012;2(11):1890-1901.

van Veenhuizen M. Void detection in solder bumps with deep learning. Microelectron Reliab. 2018;88:315–20.

Liu BD, Wei CF, Wei L, Hu SH. Computed laminography system for IGBT testing. In: 15th Asia Pacifc conference for non-destructive testing. 2017.

Su L, Yu X, Li K, Pecht MJMR. Defect inspection of flip chip solder joints based on non-destructive methods: a review. Microelectronics Reliability. 2020;110:113677.

Wei ZH, Yuan LL, Liu BD, Wei CF, Sun CL, Yin PF, et al. A micro-CL system and its applications. Rev Sci Instrum. 2017;88:11.

Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. Commun ACM. 2017;60(6):84–90.

Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. 2015. https://doi.org/10.48550/arXiv.1409.1556.

Szegedy C, Liu W, Jia YQ, et al. Going Deeper with Convolutions. Comput Vision Pattern Recognit (CVPR). 2015. https://doi.org/10.48550/arXiv.1409.4842.

French RM. Catastrophic forgetting in connectionist networks. Trends Cognit Sci. 1999;3(4):128–35.

Goodfellow IJ, Mirza M, Xiao D, Courville A, Bengio Y. An empirical investigation of Catastrophic forgetting in gradient-based neural networks. arXiv. 2013. https://doi.org/10.48550/arXiv.1312.6211.

McCloskey M, Cohen NJ. Catastrophic interference in connectionist networks: the sequential learning problem. Psychol Learn Motiv–Adv Res Theory. 1989;24:109–65.

Caruana R. Multitask learning. Mach Learn. 1997;28:41–75.

Istrate R, Malossi ACI, Bekas C, Nikolopoulos D. Incremental training of deep convolutional neural networks. arXiv. 2018. https://doi.org/10.48550/arXiv.1803.10232.

Roy D, Panda P, Roy K. Tree-CNN: a hierarchical deep convolutional neural network for incremental learning. Neural Netw. 2020;121:148–60.

Sarwar SS, Ankit A, Roy K. Incremental learning in deep convolutional neural networks using partial network sharing. Ieee Access. 2020;8:4615–28.

Xiao T, Zhang J, Yang K, Peng Y, Zhang Z. Error-driven incremental learning in deep convolutional neural network for large-scale image classification. In: Proceedings of the 22nd ACM international conference on multimedia. 2014;177–86.

Kirkpatricka J, et al. Overcoming catastrophic forgetting in neural networks. Proc Natl Acad Sci USA. 2017;114(13):3521–26.

Li ZZ, Hoiem D. Learning without forgetting. IEEE Trans Pattern Anal Mach Intell. 2018;40(12):2935–47.

Hinton G, Vinyals O, Dean J. Distilling the knowledge in a neural network. Neural Inform Proc Syst (NIPS) Deep Learn Represent Learn Workshop. 2015. https://doi.org/10.48550/arXiv.1503.02531.

Castro FM, Marín-Jiménez MJ, Guil N, Schmid C, Alahari K. End-to-end incremental learning. In: Proceedings of European conference on computer vision (ECCV); 2018. p. 233–48.

Shmelkov CSK, Alahari K. Incremental learning of object detectors without catastrophic forgetting. In: Proceedings of international conference on computer vision (ICCV); 2017. p. 3400–9.

Rebuffi SA, Kolesnikov A, Sperl G, Lampert CH. icarl: Incremental classifier and representation learning. In: Proceedings of IEEE conference on computer vision and pattern recognition (CVPR); 2017. p. 2001–10.

Li Y, Liu SQ, Li CM, Zheng YS, Wei CF, Liu BD, et al.. Automated defect detection of insulated gate bipolar transistor based on computed laminography imaging. Microelectronics Reliability. 2020;115:113966.

Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. Med Image Comput Comput-Assisted Interven. 2015;9351(Pt Iii):234–41.

Badrinarayanan V, Kendall A, Cipolla R. Segnet: A deep convolutional encoder-decoder architecture for scene segmentation. IEEE Trans Pattern Anal Mach Intell. 2017;39(12):2481–95.

Chollet F. Xception: Deep learning with depthwise separable convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2017. p. 1251–8.

He KM, Zhang XY, Ren SQ, Sun J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans Pattern Anal Mach Intell. 2015;37(9):1904–16.

Hariharan B, Arbelaez P, Girshick R, Malik J. Hypercolumns for object segmentation and fine-grained localization. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR); 2015. p. 447–56.

Kim YD, Park E, Yoo S, Choi T, Yang L, Shin DJCS. Compression of deep convolutional neural networks for fast and low power mobile applications. arXiv. 2015. https://doi.org/10.48550/arXiv.1511.06530.

Wen W, Wu CP, Wang YD, Chen YR, Li H. Learning structured sparsity in deep neural networks. In: Advances in neural information processing systems; 2016. p. 29.

Han S, Liu X, Mao H, Pu J, Pedram A, Horowitz MA, et al. EIE: efficient inference engine on compressed deep neural network. ACM SIGARCH Comput Architect News. 2016;44(3):243–54.

Chen LC, Zhu Y, Papandreou G, Schroff F, Adam H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European conference on computer vision (ECCV). 2018; p. 801–18.

He K, Zhang X, Ren S, Sun J. Delving deep into rectifiers: surpassing human-level performance on imagenet classification. In: Proceedings of the IEEE international conference on computer vision (ICCV); 2016. p. 1026–34

Bottou L. Large-scale machine learning with stochastic gradient descent. In: Proceedings of COMPSTAT’2010: 19th International conference on computational statistics; 2010. p. 177–86.

Chen LC, Papandreou G, Schroff F, Adam H. Rethinking Atrous convolution for semantic image segmentation. 2017. https://doi.org/10.48550/arXiv.1706.05587.

Acknowledgements

This research was supported by the National Key R&D Program of China (2017YFF0107201); the National Natural Science Foundation of China (62006072); the Key Technologies Research and Development Program of Henan Province (222102210108); the Innovative Funds Plan of Henan University of Technology (2022ZKCJ11); the Research Foundation for Advanced Talents of Henan University of Technology (2022BS034).

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Contributions

YL: conceptualization, methodology, software, validation, formal analysis, investigation, data curation, writing - original draft, writing - review & editing, visualization. ML: software, resources, writing - review & editing. XF: supervision, project administration, conceptualization, methodology, funding acquisition. SL: project administration, conceptualization, methodology, validation, software, resources, writing - review & editing, funding acquisition. CW: supervision, project administration, conceptualization, methodology, funding acquisition. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Y., Luo, M., Fei, X. et al. Online learning for DBC segmentation of new IGBT samples based on computed laminography imaging. Discov Appl Sci 6, 145 (2024). https://doi.org/10.1007/s42452-024-05807-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-024-05807-3