Abstract

Conventional linearized deterministic inversions of transient electromagnetic (TEM) data inherently simplify the non-uniqueness and ill-posed nature of the problem. While Monte-Carlo-type approaches allow for a comprehensive search of the solution space, gaining the ensemble of inferred solutions as comprehensive as possible may be limited utility in high-dimensional problems. To overcome these limitations, we utilize a Markov Chain Monte Carlo (MCMC) inversion approach for surface-based TEM data, which incorporates Bayesian concepts into Monte-Carlo-type global search strategies and can infer the posterior distribution of the models satisfying the observed data. The proposed methodology is first tested on synthetic data for a range of canonical earth models and then applied to a pertinent field dataset. The results are consistent with those obtained by standard linearized inversion approaches, but, as opposed to the latter, allow us to estimate the associated non-linear, non-Gaussian uncertainty.

Article Highlights

-

1.

We propose a Bayesian MCMC procedure for surface-based TEM data inversion and apply it successfully both in synthetic data and field data.

-

2.

We use the modified Gaussian proposal distribution to improve the sampling efficiency and deep resistivity resolution.

-

3.

The results of Bayesian can provide uncertainty information on parameters and judge the reliability of inversion results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Deterministic inversion approaches have a long and successful history in geophysics in general [1] and for transient electromagnetic (TEM) data in particular [2]. Studies based on linearized deterministic inversion methods, including the conventional least-squares-type [3,4,5], least-squares-type with specific regulation method (e.g. the Occam method, Tikhonov regulation method, Levenberg–Marquardt algorithm, etc.) [6,7,8,9,10,11], have contributed significantly to advance the interpretation of TEM data. These methods are computationally efficient but strongly dependent on the initial model and, thus, prone to getting stuck in the local minima of the solution space. This problem can be alleviated through the use of Monte-Carlo-type global search techniques, such as simulated annealing (SA) [12] and genetic algorithms (GA) [13, 14]. These approaches do, however, generally do not allow for comprehensive uncertainty analysis, which is biased to the optimum rather than analyzing and assessing all plausible models. From a mathematical point of view, the inversion of TEM data is an ill-posed problem, which means that a number of models can in general fit the observed data within their inherent uncertainties. Hence, a vital element in the solution of the TEM inverse problem is the quantification of non-uniqueness [15]. Probabilistic inversion approaches in general and Bayesian methods, in particular, have significant potential for addressing this issue (e.g., Mosegaard [16]). A common way to combine the advantages of Monte-Carlo-type global search strategies with those of Bayesian inference is through the so-called Markov Chain Monte Carlo approaches [17,18,19]. Initially, Tarantola and Valette combined Bayes’ rule with MCMC sampling to describe the uncertainty of non-linear inversion problems [20]. Subsequently, Grandis et al. [21] and Schott et al. [22] showed that the posterior uncertainties in the resistivity of a finely layered medium obtained by inverting surface electromagnetic measurements are huge unless the solution is smoothed or made parsimonious, or both. Malinverno applied parsimonious trans-dimensional Bayesian MCMC inversion to the analysis of resistivity soundings. Gunning and Glinsky introduced a new open-source toolkit named “Delivery” for model-based Bayesian MCMC seismic inversion [23]. Yang and Hu illustrate how Bayesian combined with Metropolis sampler can be used to invert noisy airborne frequency-domain EM data [24]. Minsley developed a trans-dimensional Bayesian MCMC algorithm to determine the model from frequency-domain EM data [25]. Yin et al. executed the trans-dimensional Bayesian MCMC inversion of frequency-domain airborne EM data [26]. Blatter et al. also employed Bayesian MCMC inversion for airborne TEM data [27]. Macchioli-Grande et al. implemented Bayesian MCMC inversion for the joint interpretation of seismic and seismo-electric data [28].

Previous research based on Bayesian MCMC inversion of surface-based EM data mostly focused on frequency-domain surface-based or airborne data. Surface-based TEM is sensitive to low-resistivity anomalies in the shallow subsurface, which is also a popular, efficient, and convenient method to carry out groundwater investigation and mineral exploration, etc. Besides, surface-based TEM is widely used due to its advantages such as high signal-to-noise ratio and resolution. Here, we adapt, validate, and apply Bayesian MCMC inversion to the surface-based TEM data. Given the computational cost of MCMC approaches as well as the inherent ambiguity which plagues the inversion of TEM data, we employ an appropriate proposal distribution to ensure robust and rapid convergence. In the following, we first outline the underlying methodology, which we then test and validate on several pertinent synthetic datasets, before applying it to an observed case study.

2 Methodology

2.1 Bayesian inference in TEM inversion

In the Bayesian inference for TEM data, the posterior probability density function (pdf) measures how well a given layered model agrees with the prior information and the observed data [29, 30]. The posterior pdf can be written as

where \(x|y\) means the probability of having x when for a given value of y. \({\mathbf{m}} = \left[ {m_{1} , m_{2} ,m_{3} \ldots m_{k} } \right]\) is the model parameter vector, \({\mathbf{d}} = \left[ {d_{1} , d_{2} ,d_{3} \ldots d_{N} } \right]\) denotes the data vector, \(k\) is the number of parameters, N is the number of observations, \(p\left( {\mathbf{m}} \right)\) denotes the prior distribution, \(p({\mathbf{d}}|{\mathbf{m}})\) denotes the likelihood function, and \(p\left( {\mathbf{d}} \right)\) denotes the marginal likelihood. In the given context, m and d denote the resistivity structure of the probed subsurface region and the observed TEM data, respectively. Following common practice, we assume a Gaussian form of the prior distribution \(p\left( {\mathbf{m}} \right)\), which represents our known a priori knowledge concerning the model parameters m.

where the prior mean resistivity \({\overline{\mathbf{m}}}\) is assumed to correspond to the resistivity structure inferred from a standard deterministic inversion of the data. The prior variance \(\overline{\user2{C}}_{{{\mathbf{m}}0}}\) can be written as \(\overline{\user2{C}}_{{{\mathbf{m}}0}} = {\text{diag}}\left( {\lg \left( {1 + p_{r} } \right)^{2} } \right)\), where \(p_{r}\) is the factor related to the resistivity range values. The factor is the assumed maximum resistivity value minus the minimum resistivity value [15].

The likelihood function \(p({\mathbf{d}}|{\mathbf{m}})\) relies on the magnitude of the measurement error vector, defined as the difference between the observed data d and modeled data g(m)

where the diagonal of the covariance matrix of the measurement error vector \(\overline{\user2{C}}_{{\mathbf{d}}}\) contains the variances of the expected errors of the observed data d. The likelihood thus increases with decreasing the difference between the observed d and synthetic g(m) data. The marginal likelihood \(p\left( {\mathbf{d}} \right)\), which is commonly referred to as the normalizing constant, is independent of the model parameters and only depends on the observed data [31, 32]. It can be written as

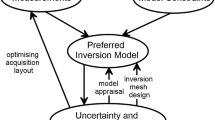

where \({\Omega }\) denotes the outer limits of the parameter space. For discrete inverse problems, p(d) is notoriously difficult to estimate. This problem can be circumvented by combining Bayesian inference with MCMC sampling, the density of which is proportional to the posterior pdf and is constant for a fixed number of parameters. This approach, which is pursued in the work, is schematically outlined in Fig. 1 and described in the following.

2.2 Bayesian MCMC sampling

Our MCMC inversion approach for TEM data involves a two-step procedure. The first step, referred to as the burn-in period, can be viewed as a global optimization that moves from a random starting model to a high-probability region of the model space. In this period, we randomly generate new models from the proposal distribution \({ }q({\mathbf{m}}_{{{\text{new}}}} |{\mathbf{m}})\) of the model parameters, which only depends on the current values of the model parameters

where m denotes the current model parameters and \(\overline{\user2{C}}_{{\mathbf{m}}}\) the proposal parameter covariance matrix. The proposal covariance \(\overline{\user2{C}}_{{\mathbf{m}}}\) provides a smaller search range for parameters with high sensitivity and a wide search range for those with low sensitivity. Adequate determination of \(\overline{\user2{C}}_{{\mathbf{m}}}\) is very important as it determines the sampling step size and, thus, affects the accuracy and efficiency of the MCMC search process [33]. Malinverno suggested an effective way to define \(\overline{\user2{C}}_{{\mathbf{m}}}\) based on a linearized estimate of the posterior model covariance [14]

where \({ }{\mathbf{J}} = \frac{{\partial {\mathbf{d}}}}{{\partial {\mathbf{m}}}}\) is the linearized sensitivity concerning a current model \({\mathbf{m}}\), which is the partial derivative of predicted data with respect to each element of \({\mathbf{m}}\)[6]. \(\lambda\) denotes the weight controlling the trade-off between the data fitting term \({\mathbf{J}}^{{\text{T}}} \overline{C}_{{\mathbf{d}}}^{ - 1} {\mathbf{J}}\) and the model constraint term \(\overline{C}_{{{\mathbf{m}}0}}^{ - 1}\). This factor can increase the effect of model constraint on inversion results rather than only depending on the data fitting error. Reasonable selection of \(\lambda\) can improve the resolution of deep resistivity [32]. \(\overline{\user2{C}}_{{{\mathbf{m}}0}}\) is the model covariance matrix associated with the prior information, while \(\overline{C}_{{\mathbf{d}}}\) denotes the data covariance matrix, which quantifies the propagation of observational uncertainty into the forward modeling space \({\mathbf{g}}\left( {\mathbf{m}} \right)\). The sensitivity matrix \({\mathbf{J}}\) reflects the contribution of model changes to observation data changes. Taking advantage of \(\lambda\) in improving the solution resolution of the model, we apply \(\lambda\) to the prior distribution. Equation (2) is transformed as:

During the MCMC sampling process, it is almost impossible to obtain the full conditional distribution of the posterior probability and, hence, it is difficult to use the Gibbs algorithm [34] to sample it directly. Therefore, we apply the Metropolis–Hastings (M-H) algorithm [18, 19] to construct the acceptance probability function by integrating the likelihood, proposal distribution, and prior distribution, and then guide the model updating. Once a candidate model is generated from proposal distribution, we exploit the M-H algorithm with the acceptance probability α to determine whether to accept or reject the candidate model. The acceptance probability α is defined as

where \(q\left( {{\mathbf{m}}_{{{\text{new}}}} {|}{\mathbf{m}}} \right)\) is the proposal distribution that defines the new model \({\mathbf{m}}_{{{\text{new}}}}\) as a random deviate from a probability distribution conditioned only on the current model m.\(p\left( {{\mathbf{d}}{|}{\mathbf{m}}_{{{\text{new}}}} } \right)\) and \(p\left( {{\mathbf{d}}{|}{\mathbf{m}}} \right)\) are the likelihood function measuring the probability of fit between the forward modeling response and the observed data, respectively.\(p\left( {\mathbf{m}} \right)\) and \({\varvec{p}}({\mathbf{m}}_{{{\text{new}}}} )\) are the prior distributions related to m and \({\mathbf{m}}_{{{\text{new}}}}\), separately. When \({\mathbf{m}}_{{{\text{new}}}}\) is generated from the proposal distribution, we use the acceptance probability \({ }\alpha\) to determine whether it would be accepted or rejected. To this end, we randomly generate a value 0 ≤ u ≤ 1 from a uniform distribution and compare it to α. If \(u < \alpha\), the candidate model is accepted with probability \(\alpha\), otherwise, it is rejected. This allows for the acceptance of candidate models, whose likelihood is lower than that of the current model, which, in turn, avoids that the parameter search gets stuck in the local minima of the solution space. With an increasing number of iterations, the selected candidate models will thus move to higher posterior probability regions of the model space.

The second step, following the initial burn-in period, is generally referred to as the sampling stage, for which the data errors of models descend to a legitimate value, and the MCMC samples gradually approach a stationary regime. Meanwhile, the output models are adopted into the posterior distribution [35]. Finally, based on the accepted models after the burn-in period, we numerically compute the posterior pdf of the parameters and uncertainty analysis for the model.

2.3 Convergence judgment

In general, the MCMC algorithm starts from an arbitrary initial state, and the model sampling reaches a stable state only when the Markov chain forgets where it started and achieves stationarity. Model sampling before the Markov chain reaches a stable state is usually discarded, and this initial stage is called the "burn-in period". The convergence judgment of this study is to determine when the burn-in period ends when to start to output posterior models, and whether to output all posterior models.

Firstly, judging the end of the burn-in period, one of the methods commonly used in geophysical data inversion is to set an expected error threshold. When the data fitting error of the sampling model reaches the preset error threshold, it indicates that the burn-in period has ended. As the average error level of continuously accepted M models fitting observed data meets this threshold, we consider the burn-in stage to be over. The error threshold can be set according to the actual inversion requirements, whether synthetic data or field data.

After the end of the burn-in period, once an MCMC chain achieves stationarity, (i.e., there are no trends visible in the misfit or negative log-likelihood), the posterior distribution does not change appreciably with the addition of more samples we can assume convergence. However, in the process of posterior statistics, we still find some models in our posterior series that deviated greatly from the real observation data, which can mix and contaminate the posterior distribution. To eliminate these unreasonable models, we add a supplementary condition to the output. We use R to auxiliary access the qualification of the output posterior model:

-

(1)

The current model fitting error of a single observation point can be expressed as

$$e_{i} = {\mathbf{d}}_{{\text{i}}} - g_{i} \left( {\mathbf{m}} \right),$$(9) -

(2)

The observed data error at a single point can be expressed as \({\text{E}}^{{{\text{obs}}}}_{i}\).

Assuming there are N observation points, the R condition can be expressed by the following formula:

R is user-designed by actual demand. In this study, we usually choose R = 1.5–2. Only models complying with this value are eligible to be output, otherwise, they will not be included in the posterior distribution. Finally, we give enough total number of sampling N to end the posterior sampling, and users can choose according to the requirements of inversion precision and computational efficiency.

3 Results

3.1 Tests on synthetic data

In the following, we apply Bayesian MCMC sampling approach to the inversion of synthetic TEM data for a range of pertinent models. We execute a digital linear filter algorithm on forward modeling [36], which will be used for the field data subsequently. The synthetic TEM data are simulated for a 100 m × 100 m square transmission loop, 1 A source current, and a central loop recording system. Considering the realistic TEM observation situation, at early times the signal-to-noise ratio is superb whereas at late times the signal is lost in the noise. Referring to the EM noise literature of Munkholm and Auken [37], we use the synthetic data contaminated with 5% Gaussian noise. Synthetic data with the noise of Model A is shown as an example in Fig. 2.

We first consider four classic three-layer models, referred to as H-, K-, A-, and Q-type, which are the typical model types in TEM inversion algorithms [38]. Models A and Q correspond to successive increases and decreases of the layer resistivity, respectively. Conversely, models H and K contain a layer of high and low resistivity, respectively, embedded by layers of intermediate resistivity. We then analyze the effects of the Gaussian proposal distribution with \(\lambda\) on the convergence MCMC process and deep resistivity resolution for a complex six-layer model.

For the inversion procedure, we follow the basic idea of smoothness, which implies that the resistivity structure of the subsurface tends to vary continuously rather than discontinuously. To achieve this, we use as many 1D grid nodes as practically possible for the Bayesian MCMC inversion process. For the considered synthetic data, we use 10 nodes with a constant thickness of 20 m and invert for their resistivity. Based on the synthetic data and the apparent resistivity inferred from them, we can determine the approximate distribution depth of high and low resistivity layers., thus avoiding unnecessary and costly searches in regions of low acceptance probability.

We offer the posterior distributions of the four models (in Fig. 3) and the data fitting errors curves (in Fig. 4). Figure 3 shows the inversions of the canonical three-layer models, for which we used the Gaussian proposal distribution with \(\lambda = 20\). For all the synthetic models considered here, the prior distribution of the resistivities is expressed as Eq. (7). Once the average error level of continuously accepted 3000 models fitting observed data meets 40%, we consider that is time to end the burn-in period. We set the total accepted sample number N as 1,600,000. The results are expressed in terms of the posterior pdf in the final stage, described through a posterior marginal distribution estimated as the maximum probability when we regard the statistics of the posterior distribution are satisfactory. In Fig. 3, it is illustrated that the inferred posterior distributions of the resistivities are in good agreement with the underlying models, while at the same time allowing for a quantitative assessment of the uncertainty associated with the inversion process. Although they consume a large number of samples, the results can reflect the changes in the uncertainty of model parameters. The probability distribution in the upper layers (< 100 m) is narrow than bottom layers. We can determine the beat parameter intervals from the upper layers’ posterior distribution, rather than gain a single optimal value. From the data error curves, after the burn-in period, the data error curves gradually shrink and converge quickly. The resistivity resolution of the bottom layers is lower than that of the upper layer, but it is enough to distinguish the best parameter intervals.

Posterior distributions of the estimated resistivity for synthetic data contaminated by 5% white noise for four types of canonical three-layer models. Model H: low-resistivity layer in the middle; model K: high-resistivity layer in the middle; model A: resistivity increases with depth; model Q: resistivity decreases with depth. Also shown are the prior models inferred from least-squares inversion (green lines). Maximum probability models (black lines) and the real models used to generate the underlying synthetic TEM data (red lines). The orange dotted lines are the 5% and 95% confidence boundary of the posterior distribution in each layer

To assess the importance of adding \(\lambda\) in the Gaussian proposal distribution, we then proceed to invert the more complex six-layer model shown in Fig. 5 with \(\lambda = 20\) and without \(\lambda\) in the Gaussian proposal distribution. The optimal model inferred through a damped least-square inversion serves as the prior distribution for the subsequent Bayesian MCMC sampling of the model space. In nature, Gaussian proposal distribution is commonly used (as illustrated by [14]) to improve the algorithm efficiency in sampling the PPD. We adopted modified Gaussian proposal distribution with \(\lambda\) to improve the deep resistivity resolution and avoid useless sampling. In Fig. 5, we output the posterior models sampled with two proposal distributions separately. This modified proposal distribution avoids useless samples and can fully sample in the high probability space after the burn-in period. The deeper resistivity has a relatively narrower parameter interval. It is indicated that proposal distribution with λ can raise deeper resistivity resolution. In Fig. 6, the proposal distribution without λ, we consumed approximately 700,000 samples before ending the burn-in period. However, adopting modified Gaussian proposal distribution, we only need about approximately 300,000 samples to end the burn-in period (~ 50% of the former). Consequently, the modified Gaussian proposal distribution is suitable for the expensive computation of MCMC sampling.

Comparison of MCMC inversion results of synthetic data contaminated with 5% white noise for a six-layer model with Gaussian proposal distribution: a \(\lambda = 1\), b \(\lambda = 20\). Also shown are the underlying real model used to generate the synthetic TEM data (red line), the prior model based on least-squares inversion (green line), and the maximum probability model (black line). The orange dotted lines are the 5% and 95% confidence boundary of the posterior distribution in each layer. The MCMC sample using \(\lambda = 20\) in Gaussian proposal distribution can improve deeper resistivity resolution

Date error curves of MCMC inversion results of a six-layer model with Gaussian proposal distribution: a \(\lambda = 1\), b \(\lambda = 20\). The horizontal black dotted line is data error 50%, which is the threshold of the end burn-in period. The total sample number is 1600000. Sampling with \(\lambda = 20\) only costs > 50% sample times (~ 30,0000) of that with \(\lambda = 1\) (~ 700,000) to end the burn-in period

The above tests on synthetic data clearly illustrate the viability of the Bayesian MCMC inversion of TEM data, as the underlying probabilistic nature of this approach addresses the inherently ill-posed nature of the problem. Our results also point to the importance of the modified Gaussian proposal distribution for reducing the computational cost to achieve convergence. Although Bayesian inversion consumes a large number of samples, the results obtained can reflect the changes in the uncertainty of model parameters. Owing to the nature of MCMC sampling, the model perturbation is freer than linear inversion, without many restricted strategies. We just added the factor \(\lambda\) to improve the deep resolution. The convergence characteristics are obvious, while the error level posterior sample in anaphase is a little high, which also is acceptable to us in this expensive computation. What we want is to extract the dynamic uncertainty in the continuous process of model updating and data fitting, rather than just to obtain a single optimal model. This is of profound significance for us to analyze the inversion problem.

3.2 Application to field data

In the following, we apply the Bayesian MCMC inversion procedure outlined above to observed TEM data recorded on the site of the Yuanfu coal mine-out area near the city of Jinan, China (in Fig. 7). The TEM data have been acquired along with a profile with a length of 700 m, which is referred to as Line2. We used a TEM central loop configuration to acquire the data with a 100 m × 100 m square transmitter loop, a receiver loop with an equivalent area of 3400 m2, and a bipolar rectangular pulse sequence with a base frequency of 25 Hz and an amplitude of 7 A. The cut-off time for this setup is 10 μs. We set 24 measurement points and observed field data. During the field data observation, there were various noise sources in the field environment, including human noise such as high-tension wire and vehicles. It needs to be considered in parameter setting and convergence judgment of inversion.

For each measurement point, the prior model is based on inferred apparent resistivity results and the least-squares inversion of the TEM data. We apply the proposal distribution to update the current model resistivity, which is a multi-dimensional normal distribution function, regarding the current model resistivity as the mean resistivity (Eq. (5)). The Bayesian MCMC inversion procedure then follows that outlined for the synthetic data (Fig. 1). For the proposal distribution, we set a smaller \(\lambda\) \(\left( {\lambda = 10} \right)\) in proposal covariance, due to the lack of accurate and abundant prior information. The total sample number N is 4,000,000. For the convergence judgment, when the average error level of continuously accepted 2000 models fitting observed data meets 45%, we consider that is the end of the burn-in period. After the burn-in period, we start the posterior sampling. To compare deterministic inversion with probabilistic inversion, we also implement the Occam inversion and compare the results with that of MCMC. Combined with the results of the two methods, we want to display the advantages of probabilistic inversion like the Bayesian method. Figure 8 shows the results of the sample points 2, 6, 8, 15, 21, and 24, while Fig. 9 compares pseudo-2D resistivity profiles derived from the lateral assembly of the 1D Occam’s and Bayesian MCMC inversions with a corresponding geological profile based on the local survey report [39].

Results of the Bayesian MCMC inversion of surface-based TEM field data compared to those obtained with an Occam-type inversion approach (dashed green lines) for points a 2, b 6, c 8, d 15, e 21, and f 24. The orange dotted lines are the 5% and 95% confidence boundary of the posterior distribution in each layer. It also shows the prior models (red lines) based on the local survey report [39] and the maximum probability models (black lines)

Comparison of a maximum-probability results of Bayesian MCMC and b Occam’s inversion along the survey line with a c schematic geological profile based on local geology survey report [39]

The resistivity models inferred through both Occam’s and Bayesian MCMC inversion are broadly characterized by a three-layer structure. The uncertainty of the associated parameter estimation revealed by the Bayesian MCMC inversion is particularly obvious at the interface between the low- and high-resistivity regions around 200–250 m depth. Based on the overall results along the survey line, it is apparent that the Bayesian MCMC inversion has a stronger ability to characterize transverse continuity of stratified anomalous regions, while Occam’s inversion provides noisier and/or less consistent results. In this context, it is important to note that, in the deeper regions of the profile, the results of Occam’s inversion are particularly variable, which we attribute to the manner, in which this inversion approach deals with the elevated noise levels in the corresponding parts of the data. You need additional smoothing measures and constraints to achieve the desired inversion results. The ultimate objective of Occam’s inversion approaches is to find the best and smoothest model that satisfies the residual error. Its results are easily biased by noise or other disturbances. Conversely, Bayesian MCMC inversion inherently accounts for the effects of noise and data errors and, instead of looking for a single best-fitting model, seeks a series of models that agree with the observed data given their underlying uncertainties. The smoothing and roughness are unnecessary in MCMC inversion. A reasonable sampling strategy is enough to achieve the max-likelihood model and uncertainty analysis. The uncertainty analysis of parameters is more important for complex field data inversion. Users can also evaluate the reliability of a single model based on accurate uncertainty analysis, which provides an available verification standard for deterministic inversion. This makes the proposed Bayesian MCMC inversion particularly attractive to the noisy datasets in general and TEM data in particular.

To assess the realism and validity of the inversion results, we compare them to a schematic geological profile, which has been compiled from a local geology survey [39]. The resulting broadly layered resistivity structure correlates well with some of the most prominent geological discontinuities. Notably, the transition from the surficial alluvial layer to the underlying consolidated sediments around 40 m depth is evident in the results of both the Bayesian MCMC and Occam’s inversion approaches. Conversely, the transition from formations of low to intermediate resistivity into the resistive coal-bearing strata at around 300 m depth is much more clearly and consistently revealed by the results of the Bayesian MCMC inversion, which again underlines the potential of this inversion approach for handling inherently complex and uncertain surface-based TEM data.

Moreover, this posterior distribution can help us to determine the reliability of different parameter values in different depths. It can be seen that the resistivity probability distribution of upper layers in MCMC results is more concentrated, indicating that the max-likelihood value predicted is more reliable. However, the resistivity probability distribution of bottom layers has a wider range, indicating that the max-likelihood value predicted has lower reliability. And we need more geophysical information to recover the structure of deeper strata. Deterministic inversion such as Occam cannot provide the above analyses of reliability and uncertainty of results. Uncertainty information is a key for us to explore the distribution of strata in practical application, where the interpretation of a single model is weak.

4 Conclusions

We have explored the application of a MCMC search strategy in Bayesian framework for the inversion of inherently noisy surface-based TEM data with the underlying motivation to not only obtain a single optimal inverse model, but also quantitative information with regard to its uncertainty. We can extract the optimal distribution interval of parameters through a great number of samples and offer the reliability of parameter values. The method has been tested and validated on pertinent synthetic data and is then applied to field data. Our results demonstrate that method is largely insensitive to the starting model and exhibits stable convergence characteristics. And the use of a proposal distribution with \(\lambda\) effectively supports a stable and efficient convergence of the inversion process and improves the resolution of deeper parameters. A limitation of the current approach is the need to use relatively simple forward models, which is essentially mandated by the inherently high computational cost of Bayesian MCMC procedures. An obvious way to alleviate this problem is through the use of multiple parallel Markov chains. Furthermore, considering the unknown of the predicted model’s layer number of field data, we also need to introduce the idea of trans-dimension into TEM inversion, which we intend to explore in the future.

Data availability

All data generated or analyzed during this study are available upon request to the corresponding author.

References

Menke W (2012) Geophysical data analysis: discrete inverse theory, 3rd edn. Academic Press, Cambridge

Zhdanov MS (2010) Electromagnetic geophysics: notes from the past and the road ahead. Geophysics 75:49A-75A. https://doi.org/10.1190/1.3483901

Huang H, Palacky GJ (1991) Damped least-squares inversion of time-domain airborne EM data based on sigular value decomposition. Geophys Prospect 39:827–844

Lines L, Treitel S (1984) A_Self-parameterising_partition_model_approach_to_. Geophys Prospect 32:159–186. https://doi.org/10.1111/j.1365-2478.1984.tb00726.x

Yang C, Mao L, Mao X, Wang C, Guo M (2020) Study on the semi-aerospace transient electromagnetic adaptive regularization-damped least squares algorithm. Geol Explor 56:137–146

Constable SC, Parker RL, Constable CG (1987) Occam’s inversion; a practical algorithm for generating smooth models from electromagnetic sounding data. Geophysics 52:289–300. https://doi.org/10.1190/1.1442303

Farquharson CG, Oldenburg DW (2004) A comparison of automatic techniques for estimating the regularization parameter in non-linear inverse problems. Geophys J Int 156:411–425. https://doi.org/10.1111/j.1365-246X.2004.02190.x

Marquardt DW (1963) An algorithm for least-squares estimation of nonlinear parameters. J Soc Ind Appl Math 11:431–441. https://doi.org/10.1137/0111030

Chen XB, Zhao GZ, Tang J, Zhan Y, Wang JJ (2005) An adaptive regularized inversion algorithm for magnetotelluric data. Chin J Geophys 48:937–946. https://doi.org/10.3321/j.issn:0001-5733.2005.04.029

Tikhonov A, Arsenin V (1977) Solutions of Ill-posed problems. V.H. Winston & Sons, Washington DC

Oristaglio ML, Blok H (2004) Wavefield Imaging and Inversion in Electromagnetics and Acoustics. Cambridge University Press

Wang R, Yin CC, Wang MY, Wang GJ (2012) Simulated annealing for controlled-source audio-frequency magnetotelluric data inversion. Geophysics 77:E127–E133. https://doi.org/10.1190/geo2011-0106.1

Akça I, Basokur AT (2010) Extraction of structure-based geoelectric models by hybrid genetic algorithms. Geophysics 75:F15–F22. https://doi.org/10.1190/1.3273851

Sen M, Stoffa P (1995) Global optimization methods in Geophysical inversion. Cambridge University Press. https://doi.org/10.1017/CBO9780511997570

Malinverno A (2002) Parsimonious Bayesian Markov chain Monte Carlo inversion in a nonlinear geophysical problem. Geophys J Int 151:675–688. https://doi.org/10.1046/j.1365-246X.2002.01847.x

Mosegaard K, Tarantola A (1995) Monte Carlo sampling of solutions to inverse problems. J Geophys Res 100:12431–12447. https://doi.org/10.1029/94JB03097

Sambridge M, Mosegaard K (2002) Monte Carlo methods in geophysical inverse problems. Rev Geophys 40:1003–1009. https://doi.org/10.1029/2000RG000089

Hastings WK (1970) Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57:97–109. https://doi.org/10.1093/biomet/57.1.97

Metropolis N, Rosenbluth AW, Rosenbluth MN, Teller AH, Teller E (1953) Equation of state calculations by fast computing machines. J Chem Phys 21:1087–1092. https://doi.org/10.1063/1.1699114

Tarantola A, Valette B (1982) Inverse problems = Quest for information. J Geophys 50:159–170

Grandis H, Menvielle M, Roussignol M (1999) Bayesian inversion with Markov chains- I The magnetotelluric one-dimensional case. Geophys J Int. https://doi.org/10.1046/j.1365-246x.1999.00904.x

Schott JJ, Roussignol M, Menvielle M, Nomenjahanary FR (1999) Bayesian inversion with Markov chains—II. The one-dimensional DC multilayer case. Geophys J Int 138:769–783. https://doi.org/10.1046/j.1365-246x.1999.00905.x

Gunning J, Glinsky ME (2004) Delivery: an open-source model-based Bayesian seismic inversion program. Comput. Geosci. UK 30:619–636. https://doi.org/10.1016/j.cageo.2003.10.013

Yang DK, Hu XY (2008) Inversion of noisy data by probabilistic methodology. Chin J Geophys 51:901–907

Minsley BJ (2011) A trans-dimensional Bayesian Markov chain Monte Carlo algorithm for model assessment using frequency-domain electromagnetic data. Geophys J Int 187:252–272. https://doi.org/10.1111/j.1365-246X.2011.05165.x

Yin CC, Qi YF, Liu YH, Cai J (2014) Trans-dimensional Bayesian inversion of frequency-domain airborne EM data. Chin J Geophys 57:2971–2980. https://doi.org/10.6038/cjg20140922

Blatter D, Key K, Ray A, Foley N, Tulaczyk S, Auken E (2018) Trans-dimensional Bayesian inversion of airborne transient EM data from Taylor Glacier. Antarctica Geophys J Int 214:1919–1936. https://doi.org/10.1093/gji/ggy255

Macchioli Grande F, Zyserman F, Monachesi L, Jouniaux L, Rosas Carbajal M (2020) Bayesian inversion of joint SH seismic and seismoelectric data to infer glacier system properties. Geophys Prospect. https://doi.org/10.1111/1365-2478.12940

Jackson DD, Matsu’Ura M (1985) A Bayesian approach to nonlinear inversion. J Geophys Res 90:581–591. https://doi.org/10.1029/JB090iB01p00581

Backus GE (1988) Bayesian inference in geomagnetism. Geophys J Int 92:125–142. https://doi.org/10.1111/j.1365-246X.1988.tb01127.x

Dettmer J, Dosso SE, Holland CW (2010) Trans-dimensional geoacoustic inversion. J Acoust Soc Am 128:3393–3405. https://doi.org/10.1121/1.3500674

Yin CC, Qi YF, Liu YH (2014) Trans-dimensional Bayesian inversion of frequency-domain airborne EM data. Chinese J Geoohys (in Chinese) 57:2971–2980. https://doi.org/10.6038/cjg20140922

Bodin T, Sambridge M (2009) Seismic tomography with the reversible jump algorithm. Geophys J Int 178:1411–1436. https://doi.org/10.1111/j.1365-246X.2009.04226.x

Gelfand AE, Smith AFM (1990) Sampling-based approaches to calculating marginal densities. J Am Stat Assoc 85:398–409. https://doi.org/10.2307/2289776

Aleardi M, Salusti A (2019) Markov chain Monte Carlo algorithms for target-oriented and interval-oriented amplitude versus angle inversions with non-parametric priors and non-linear forward modellings. Geophys Prospect 68:735–760. https://doi.org/10.1111/1365-2478.12876

Lee KH, Liu G, Morrison HF (1989) A new approach to modeling the electromagnetic response of conductive media. Geophysics 54:1180–1192. https://doi.org/10.1190/1.1442753

Munkholm MS, Auken E (1996) Electromagnetic noise contamination on transient electromagnetic sounding in culturally disturbed environments. J Environ Eng Geophys 1:119–127. https://doi.org/10.4133/JEEG1.2.119

Sun H, Li X, Li S, Qi Z, Su M, Xue Y (2012) Multi-component and multi-array TEM detection in karst tunnels. J Geophys Eng 9:359–373. https://doi.org/10.1088/1742-2132/9/4/359

Shandong Provincial Geo-mineral Engineering Exploration Institute (2018) Report on the geological stability evaluation in Shengjing area. Shandong Provincial Geo-mineral Engineering Exploration Institute

Funding

This research is supported by Natural Science Foundation, Shandong Province (Grant No. ZR2019MD019), and National Natural Science Foundation of China (Grand No. 42074145).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Deng, S., Zhang, N., Kuang, B. et al. Bayesian Markov Chain Monte Carlo inversion of surface-based transient electromagnetic data. SN Appl. Sci. 4, 254 (2022). https://doi.org/10.1007/s42452-022-05134-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-022-05134-5