Abstract

This study addresses the vulnerability of digital documents and presents an effective way to protect ownership and detect unauthorized modification of multimedia data. Watermarking is an effective way to protect vulnerable data in a digital environment. In this paper, a watermarking algorithm based on a lossy compression algorithm is proposed to ensure authentication and forgery detection. In the proposed method, the CDF 9/7 biorthogonal wavelet is used to transform the watermark image, and the wavelet coefficients are encoded using the Set Partitioning in Hierarchical Trees (SPIHT) algorithm. The encoded bits are then shuffled and encrypted using symmetric keys. After that, the encrypted bits are inserted into the Least Significant Bit (LSB) positions of the cover image. In addition, two tamper detection bits are generated based on texture information and pixel location and inserted in the watermarked image. The proposed algorithm reconstructs the watermark and the tampered region efficiently and achieves 56.5463 dB PSNR on the STARE database. Experimental results show that the proposed algorithm effectively counters different attacks and ensures the integrity of the watermark bits within the watermarked image. It also locates the tampered region more efficiently than existing state-of-the-art algorithms.

1 Introduction

Due to extensive development in internet and digital communication technologies, data generation processes are changing rapidly in contemporary society. Online digital communication systems now make it easy to store and spread multimedia files such as images, audio, and video. However, during transmission and storage, multimedia data may be altered by intruders for illegal use. As a result, data integrity is not maintained, which creates problems for copyright protection, ownership identification, forgery detection, and image authentication [1]. In many human-centered applications, such as medical imaging, military communication, remote sensing, and geographic data systems, this illegal modification is a serious issue. Digital watermarking systems can be integrated to address these problems. Digital image watermarking is a technology that provides protection from an opponent by implanting an imperceptible or perceptible watermark in a digital image.

In this paper, a fragile watermarking algorithm is proposed for image authentication, tamper identification, tamper localization, and watermark reconstruction. Many watermarking systems have been proposed to prevent unlawful modification of digital data [2,3,4], and numerous researchers have contributed to this field. The authors of [5, 6] proposed a Discrete Wavelet Transform (DWT) based blind image watermarking algorithm coupled with a second-level Singular Value Decomposition (SVD) to improve both imperceptibility and robustness. The authors used image blocking to find the optimum image sub-block size, and a two-level authentication is performed to ensure security. Liu et al. [7] proposed a chaos-based watermarking algorithm. The watermark bits are generated by mapping the differential binary image from the original chaotic image, and are then embedded into the LSB bit-plane of the original image. Rawat [8] proposed a chaotic pattern-based fragile watermarking algorithm that uses an XoR operation between a binary watermark image and a chaotic logistic mapping image. All these strategies are effective against some common attacks, but cannot resist content-only attacks. To address this issue, Teng et al. [9] proposed a fragile watermarking algorithm based on [8]. The Local Binary Pattern (LBP) was introduced into the watermarking area in [10, 11]. Zhang and Shih proposed a semi-fragile spatial watermarking scheme based on LBP operators [10]. A binary watermark is embedded in the host image by changing the pixel values of the neighborhood in each block according to its LBP pattern. Experimental results have shown that this algorithm has some degree of robustness to common image processing operations, such as contrast changes and JPEG compression. The main disadvantage of these watermarking systems is that the detection process is not blind. 
When the detection process is applied on the receiver side, the original watermark or image is required. This is often impractical because it is quite difficult to provide the original watermark or image at the receiver. Therefore, semi-blind and blind watermarking methods with high detection precision have become a subject of study. Benrhouma et al. [12] proposed a watermarking algorithm for blind forgery detection in which a local pixel contrast is established between the pixel values of the neighborhood and the average pixel value of the respective block. Preda [13] proposed a semi-fragile wavelet-based watermarking scheme. The wavelet coefficients are first permuted using a secret key and then divided into various groups. The watermark is a binary random sequence generated from the secret key, and the watermark bits are generated by quantizing the coefficients. Despite low watermark payloads, this approach achieves better image quality. Nevertheless, several noise dots are scattered in the image during tamper detection, which decreases the detection accuracy. Filtering and morphological operations are performed to remove the noise points. However, this is hard to achieve for different images, because the post-processing operations must differ from image to image. The literature survey shows that any watermarking scheme requires a subset of the following properties. Imperceptibility: The fundamental requirement for invisible watermarking. In other words, it is vital to maintain good visual quality after the watermark is incorporated. Robustness: The watermark should be constructed so that attacks do not affect the system performance. Reversibility: Watermarking is one of the finest authentication and manipulation detection methods. However, the watermark may damage significant data in the initial cover image after the insertion phase, so an exact copy of the cover image is hard to obtain at the receiver. 
However, in applications such as military and medical imaging, it is important to recover the initial cover media. Reversible watermarking systems are used in such applications instead of standard watermarking. Payload: The number of watermark bits is the payload. Security: Security is evaluated by assessing the system's strength against known attacks. Existing research has shown that some security loopholes exist in practical applications of watermarking technology. Tamper detection: Manipulation is a deliberate change of files to harm consumers. It is therefore important that the watermark and the cover image are revealed during the extraction phase. Authentication: Authentication verifies the claimed entity.

However, few methods address tamper detection, authentication, and restoration in a single model. Moreover, most of these studies focused on gray images. Few studies have addressed the enhancement of the visual appearance of the image, and many watermarking systems have focused only on the effectiveness of detecting the tampered region. It is therefore essential to develop a watermarking system that can detect manipulation and also check authenticity in order to fully retrieve the information. Some researchers have taken error recovery into account in LBP-based watermarking systems. The contributions of this paper are as follows: a fragile watermarking algorithm is proposed, based on pixel-by-pixel processing, for image authentication, tamper detection, and watermark restoration. Here the cover image is transformed into the wavelet domain using the CDF 9/7 bi-orthogonal wavelet, which has been highly successful in image compression. The transform coefficients are encoded using the SPIHT algorithm. The watermark bits are then shuffled and encrypted to secure the watermark. The embedding process is done pixel by pixel in the LSB layer of the cover image. In addition, two tamper detection bits are embedded into the LSBs of each pixel sub-block to detect the tampered region. Self-embedding watermarking is used to reconstruct the watermark and the host image. The authentication watermark generation process is the reverse of the encoding process. The remainder of the paper is structured as follows. A brief literature review is presented in Sect. 2. The proposed watermarking algorithm, with a block diagram, is presented in Sect. 3. The experiments and the performance of different methods are reported in Sect. 4, followed by the concluding remarks at the end of the paper.

2 Literature review

This section provides a brief overview of the development and application of watermark authentication and recovery processes. The performance of a watermarking process is generally described by the recovered watermark, the recovered cover image, and the condition of the restoration process. The quality of the recovered image is compared with the original image and is represented by the Peak Signal to Noise Ratio (PSNR), the Structural Similarity Index (SSIM), etc. The quality of the watermark and of the restored image depends strongly on the tampering rate. A higher tampering rate causes more restoration data to be lost, so a lower-quality image is recovered. A large number of algorithms exist to recover tampered content [14,15,16]. In image authentication techniques, the watermarked image is generally generated by embedding the watermark bits in the LSB positions of the cover image. Any modification of the watermarked image therefore changes the LSB bit plane, and the watermark bits are lost. The authors of [17] proposed a non-blind digital watermarking technique to preserve the ownership of color images. In this algorithm, four similar watermarks are extracted from the watermarked image and combined to generate sub-watermark images; among these images, the appropriate watermark is selected using the correlation coefficient (CC). A lossless compression-based image watermarking scheme has been proposed in [18]. Here an adaptive prediction technique is used to compress medical images to produce the watermark bits, which are embedded in the LSBs of the original image. An adaptive image watermarking algorithm for color images has been proposed in [19] using the features of the Discrete Cosine Transform (DCT), DWT, and Arnold transformation. DCT-based watermark generation is described in [19, 20]. 
In these methods, the watermark and the original image are divided into image sub-blocks, and the DCT is applied independently to each block. The DCT coefficients of the watermark block are partially added to the DCT coefficients of the original image, and the inverse DCT is performed to generate the watermarked image.

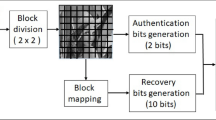

In many watermarking algorithms, the authentication bits and recovery bits of a block are embedded into another block of the original image. If that block is also tampered, it is not possible to recover the watermark bits. This is called the coincidence problem. The algorithms described in [21,22,23] do not deal with this problem. A hierarchical watermarking algorithm has been proposed in [24]. In this algorithm, the authors used a four-level tamper detection process and 2 authentication watermark bits in each 2 \(\times \) 2 image sub-block. Due to the block independence of the authentication, this algorithm is vulnerable to Vector Quantization (VQ) and collage attacks. In the reconstruction phase, the bits are recovered by averaging the 6 MSB bit planes of the sub-block.

The authors of [14, 25] used a reference sharing mechanism to propose self-embedding watermarking methods. By embedding redundant information in the cover image, both methods improve the quality of the recovered cover image. However, these algorithms are vulnerable to the VQ attack, and the accuracy of tamper localization is reduced by the use of large blocks.

In [26], the authors proposed a self-embedding watermarking algorithm to avoid the coincidence problem. In this method, the watermark bits are inserted into the whole image. First, the watermark image pixels are permuted using a secret private key, and the permuted image is divided into a series of pixel pairs. The recovery bit is generated by XoR-ing the pixel pair of the 5th MSB layer. The generated authentication bits and recovery bit are embedded into the 3rd LSB bit plane of the cover image. In this method, the reference data is used to recover the 5th MSB bit plane. The percentage of recovery bits that can actually be extracted depends on the tampering rate.

Recently, deep learning-based image watermarking has become popular as a way to achieve high capacity and robustness [27,28,29]. Digital image watermarking based on synergetic neural networks has been proposed in [27] to ensure the security and robustness of the watermarking system. The authors embedded the watermark bits into the block DCT components, and a cooperative neural network is used to detect and extract the watermark. In [28], the host image is divided into equal-size sub-blocks, and each sub-block is transformed using the slantlet transformation. Three copies of the watermark information are embedded into the cover image. Optimal block selection logic is used together with a multilayer deep neural network. A robust zero-watermarking algorithm based on convolutional neural networks and deep neural networks has been proposed in [29]. The watermarked image is generated using a Convolutional Neural Network (CNN) and an XoR operation between the cover image and the watermark image.

3 Proposed method

The proposed watermarking algorithm is described in this section. In the proposed method, the watermark bits are generated in the transform domain, whereas the embedding is done in the spatial domain. The overall image watermarking process is divided into five steps: the bi-orthogonal CDF 9/7 wavelet transform; encoding of the wavelet coefficients using the SPIHT algorithm; permutation and encryption of the watermark bits using private keys; generation of two tamper detection bits; and finally embedding in the cover image. Additionally, error correction coding is used to make the algorithm more robust against different attacks. The block diagram of the proposed watermarking algorithm is shown in Fig. 1.

3.1 Wavelet transformation

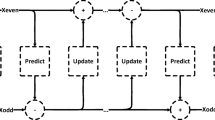

The wavelet transform produces floating-point coefficients, which helps to compress the image significantly [30]. Although these coefficients are sufficient to reconstruct the original image, quantizing them with finite arithmetic precision makes the process lossy. In the proposed algorithm, a bi-orthogonal wavelet is used to decompose the image. The bi-orthogonal wavelet is invertible and supports the symmetry property. The symmetry of the filter coefficients is required for a linear-phase transfer function. In addition, the bi-orthogonal wavelet transform has two scaling functions, which efficiently generate multi-resolution coefficients. The CDF 9/7 bi-orthogonal wavelet transform produces a greater number of zero coefficients, and the image energy is concentrated within fewer bits. The wavelet filter pair can be factored into a sequence of primal and dual lifting steps, which allows a lifting implementation. Figure 2 shows the 2-level wavelet transform of the Bird image. The poly-phase matrix of the 9/7 filter used for efficient implementation is as follows:

where a, b, c, d are the four lifting parameters and K is the scaling parameter.
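The lifting structure can be illustrated with a minimal one-dimensional sketch. It assumes that a, b, c, d and K correspond to the widely published CDF 9/7 lifting constants, and it uses periodic boundary extension for brevity, which is an assumption rather than the boundary handling of the proposed method. The function names are illustrative only.

```python
import numpy as np

# Widely published CDF 9/7 lifting constants; assumed here to play the
# role of the lifting parameters a, b, c, d and the scaling parameter K.
A, B, C, D = -1.586134342, -0.05298011854, 0.8829110762, 0.4435068522
K = 1.149604398

def cdf97_forward(x):
    """One decomposition level of an even-length 1-D signal (periodic extension)."""
    x = np.asarray(x, dtype=float)
    s, d = x[0::2].copy(), x[1::2].copy()   # even and odd samples
    d += A * (s + np.roll(s, -1))           # first predict step
    s += B * (d + np.roll(d, 1))            # first update step
    d += C * (s + np.roll(s, -1))           # second predict step
    s += D * (d + np.roll(d, 1))            # second update step
    return K * s, d / K                     # approximation, detail

def cdf97_inverse(approx, detail):
    """Undo cdf97_forward by running the lifting steps in reverse order."""
    s, d = approx / K, detail * K
    s = s - D * (d + np.roll(d, 1))
    d = d - C * (s + np.roll(s, -1))
    s = s - B * (d + np.roll(d, 1))
    d = d - A * (s + np.roll(s, -1))
    x = np.empty(s.size + d.size)
    x[0::2], x[1::2] = s, d
    return x
```

Because every lifting step is undone exactly by its mirror step, the transform is invertible regardless of the constants chosen; a 2-D transform would apply the same routine along rows and then columns.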

3.2 Encoding with SPIHT algorithm

Set Partitioning in Hierarchical Trees (SPIHT) is an advanced image encoding technique. Its performance compares favorably with well-known methods such as JPEG-2000 and EZW. It is a progressive coding method in which each wavelet coefficient is considered significant or insignificant with respect to a threshold [31]. If the magnitude of a coefficient in a subband reaches the threshold, it is considered significant; otherwise it is insignificant. In this way, a large group of coefficients can be encoded using few bits. SPIHT saves a large number of bits by exploiting this relationship to flag whole sets of insignificant coefficients. SPIHT works in two steps: a sorting pass and a refinement pass.

The block diagram of the SPIHT algorithm is presented in Fig. 3. At the beginning of the encoding process, the largest coefficient value is used to calculate the maximum number of iterations. The wavelet coefficients are then passed to the sorting pass, which searches for all significant coefficients. The sign of each significant coefficient is encoded as 0 for a negative (−) or 1 for a positive (+) coefficient. All significant coefficients are then passed from the sorting pass to the refinement pass, where each coefficient is encoded. Two bits are therefore required to reconstruct a coefficient and approach its real value. The above process is repeated iteratively, and the threshold \(T_n\) decreases in each step. The threshold value is \(T_n=2^n\), where n is the iteration index starting from the highest value. The reconstruction process is simply the reverse, and the reconstruction value is considered as \((R_n-R_n-1/2)\).
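The threshold schedule and the significance test can be sketched as follows. This is only the bit-plane bookkeeping, not a full SPIHT coder (the set-partitioning lists are omitted), and the function names are illustrative assumptions.

```python
import numpy as np

def initial_bitplane(coeffs):
    """Largest n with 2**n <= max|c|; assumes at least one nonzero coefficient."""
    return int(np.floor(np.log2(np.max(np.abs(coeffs)))))

def significance_map(coeffs, n):
    """True where a coefficient is significant against the threshold T_n = 2**n."""
    return np.abs(coeffs) >= 2 ** n

def sign_bits(coeffs, significant):
    """Sign bit per significant coefficient: 0 for negative, 1 for positive."""
    return (coeffs[significant] >= 0).astype(int)
```

For example, with coefficients 34, −20, 3 and 9 the first threshold is 2^5 = 32, so only 34 is significant in the first pass; after the threshold is halved to 16, −20 also becomes significant and its sign bit is 0.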

3.3 Error correction coding

Error correction codes are widely used to correct bit errors. In the proposed watermarking algorithm, a convolutional encoder is used to protect against bit errors, and a Viterbi decoder is used to decode the encoded bit sequences. SPIHT coding is very vulnerable to bit errors when the watermark is reconstructed. To reduce the bit errors and enhance the reconstructed watermark image, a rate-1/2 convolutional error correction code is used. The SPIHT algorithm encodes the most significant coefficients first and then the less significant coefficients, so the most significant bits alone can reconstruct the original image approximately. In this work, the first 15,000 bits are considered the most significant bits and are encoded using a rate-1/2 convolutional encoder, while the other 5536 bits are considered less important and are left unencoded. Finally, 35,536 bits (equivalent to 0.25 bpp) are embedded into the cover image. Figure 4 shows the block diagram of the rate-1/2 convolutional encoder. In the reconstruction phase, the Viterbi algorithm is used, which is an efficient maximum likelihood decoding algorithm. The algorithm calculates the distance between the received signal and the trellis paths entering each state [32]. At each stage, the Viterbi algorithm drops the least likely trellis paths, which decreases decoding complexity and concentrates the search on the surviving paths of the trellis.
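A minimal sketch of rate-1/2 convolutional encoding with hard-decision Viterbi decoding is given below. The generator polynomials are not stated in the text, so the common constraint-length-3 pair (7, 5 in octal) is assumed purely for illustration; the actual encoder of Fig. 4 may differ.

```python
# Assumed generators (7, 5 in octal), constraint length 3; illustrative only.
G = (0b111, 0b101)

PROTECTED_BITS, UNPROTECTED_BITS = 15000, 5536
EMBEDDED_BITS = 2 * PROTECTED_BITS + UNPROTECTED_BITS   # 35536 bits in total

def conv_encode(bits):
    """Rate-1/2 encoder: two output bits per input bit, starting in state 0."""
    state, out = 0, []
    for b in bits:
        reg = (b << 2) | state                       # current bit + two past bits
        out.extend(bin(reg & g).count("1") % 2 for g in G)
        state = reg >> 1
    return out

def viterbi_decode(received):
    """Hard-decision Viterbi decoder using the Hamming distance as path metric."""
    inf = float("inf")
    metric = [0, inf, inf, inf]                      # encoder starts in state 0
    paths = [[], [], [], []]
    for i in range(0, len(received), 2):
        pair = received[i:i + 2]
        new_metric, new_paths = [inf] * 4, [None] * 4
        for state in range(4):
            if metric[state] == inf:
                continue
            for b in (0, 1):
                reg = (b << 2) | state
                expected = [bin(reg & g).count("1") % 2 for g in G]
                cost = metric[state] + sum(x != y for x, y in zip(pair, expected))
                nxt = reg >> 1
                if cost < new_metric[nxt]:           # keep only the survivor path
                    new_metric[nxt], new_paths[nxt] = cost, paths[state] + [b]
        metric, paths = new_metric, new_paths
    best = min(range(4), key=lambda s: metric[s])
    return paths[best]
```

The constant `EMBEDDED_BITS` reproduces the bit budget stated above: the 15,000 protected bits double in length under the rate-1/2 code, and the 5536 unprotected bits are appended unchanged.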

3.4 Encryption

Encryption converts data into a form that is difficult for an intruder to understand. In a watermarking system, the encryption process hides the watermark information from the intruder. The same approach can also be used in data steganography applications. Moreover, data encryption ensures that no one except the owner can reconstruct the watermark image. To keep the watermark confidential and difficult to understand, data permutation and three symmetric keys are used. The permutation process randomizes the data sequence, and the keys are used to encrypt the watermark bits. Figure 5 represents the data encryption process. First, the data stream is converted into an 8 \(\times \) n block in a zigzag manner, as shown in Fig. 5. Then, every odd row is XoR-ed with the secret symmetric key, while every even row is kept unchanged. After that, the elements of each row are shifted by a different amount, and the shuffling process is done as:

The initial value of ’m’ is 13, and it decreases by one for each consecutive row. To make the data more random, column-wise and row-wise shuffling is performed so that all bits are mixed effectively. The whole process is repeated several times; in this experiment, the encryption process is performed three times.
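One round of the row-wise part of this process can be sketched as follows. Several details are assumptions made only for illustration: the stream is filled row by row rather than in the zigzag order of Fig. 5, a single key is used instead of three, the per-row shift is taken to be a cyclic shift by m positions, and the additional column-wise and row-wise shuffling is omitted.

```python
import numpy as np

def encrypt_bits(bits, key, m0=13):
    """One simplified round: XoR the odd rows with the key, then shift each row."""
    n = len(bits) // 8
    block = np.asarray(bits[:8 * n], dtype=np.uint8).reshape(8, n).copy()
    block[0::2] ^= key                       # rows 1, 3, 5, 7 in 1-based counting
    for r in range(8):
        block[r] = np.roll(block[r], m0 - r) # m starts at 13, decreases per row
    return block

def decrypt_bits(block, key, m0=13):
    """Invert encrypt_bits: undo the shifts, then undo the XoR."""
    out = np.empty_like(block)
    for r in range(8):
        out[r] = np.roll(block[r], -(m0 - r))
    out[0::2] ^= key
    return out.ravel()
```

Both operations are exactly invertible with the same key, so repeating the round several times on the sender side only requires running the inverse the same number of times at the receiver.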

3.5 Tamper detection bits generation and embedding process

The bits are embedded in the LSBs of the cover image. The cover image is divided into 8 \(\times \) 8 non-overlapping blocks. Sixteen watermark bits and 2 tamper detection bits are embedded in each block, with a specific distance kept between the embedded bit positions. The watermark embedding process is shown with an example in Fig. 7. The two tamper detection bits are generated using the LBP information, the pixel coordinates, the MSB value, and a secret key. Figure 6 represents the key generation process for tamper detection in the proposed watermarking algorithm. LBP is a well-suited technique for capturing the texture information of the cover image. The tamper detection key generation process is as follows:

1. The cover image is divided into 8 \(\times \) 8 non-overlapping blocks.
2. The LBP of each non-overlapping image sub-block is calculated. The LBP value is 1 when the center pixel’s value is greater than the average value of its neighboring pixels; otherwise, it is 0.
3. The coordinates (i, j) of each pixel in the block are summed and reduced mod 2, and the result is XoR-ed with the LBP.
4. Each column of the result of step 3 is summed and reduced mod 2 to create a binary row matrix.
5. The row matrix is encrypted by XoR with the secret key \(k_i\).
6. The row matrix is converted into a decimal number, and this number is reduced mod 2 to obtain the first tamper detection bit \(a_1\).
7. To generate the second tamper detection bit \(a_2\), the MSB value is XoR-ed with the LSB value of each pixel block.
8. Steps 5 and 6 are repeated to obtain the second tamper detection bit \(a_2\).
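Steps 2 to 6 can be sketched for a single block as follows. The sketch makes several assumptions that are not stated in the text: the LBP is computed with the LSB plane cleared so that the embedded bits do not disturb it, border pixels reuse their nearest neighbors, and the key \(k_i\) is an 8-bit binary row. The function name and argument names are illustrative.

```python
import numpy as np

def tamper_bit_a1(block, key_row, top, left):
    """First tamper detection bit of one 8x8 block located at (top, left)."""
    b = (block.astype(np.int64) >> 1) << 1           # ignore the LSB (assumption)
    h, w = b.shape
    p = np.pad(b, 1, mode="edge")                    # replicate borders (assumption)
    neigh_sum = sum(p[r:r + h, c:c + w] for r in range(3) for c in range(3)) - b
    lbp = (8 * b > neigh_sum).astype(np.uint8)       # center > mean of 8 neighbors
    ii, jj = np.meshgrid(np.arange(top, top + h),
                         np.arange(left, left + w), indexing="ij")
    mixed = lbp ^ ((ii + jj) % 2).astype(np.uint8)   # step 3: coordinates XoR LBP
    row = mixed.sum(axis=0) % 2                      # step 4: column sums mod 2
    row = row ^ key_row                              # step 5: XoR with the key
    value = int("".join(str(int(v)) for v in row), 2)
    return value % 2                                 # step 6: decimal value mod 2
```

The second bit \(a_2\) would follow the same pattern, starting from the MSB and LSB values of the block instead of the LBP map.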

3.6 Watermark extraction

The watermark extraction process consists of watermark reconstruction, tamper detection, and tamper localization. The extraction steps are as follows:

1. The watermarked image, or any suspicious image, is divided into 8 \(\times \) 8 non-overlapping image blocks.
2. All bits are extracted from the specified pixel positions. These bits form the encrypted watermark.
3. The watermark bits are obtained by decrypting the extracted watermark.
4. The SPIHT decoding algorithm is applied to the watermark bits to generate the approximate wavelet coefficients.
5. After the inverse wavelet transform, the approximate watermark image is obtained.
6. For tamper detection and localization, the two tamper detection bits \(Ga_1\), \(Ga_2\) are calculated for the received watermarked image as described earlier.
7. The tamper detection bits \(a_1\), \(a_2\) are extracted from the watermarked image. If \(Ga_1=a_1\) and \(Ga_2=a_2\), the block is valid; otherwise, the block is marked as a tampered block.
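The per-block embedding, extraction, and comparison can be sketched as follows. The embedding positions inside the block are not specified in the text, so the sketch assumes, purely for illustration, that the 18 bits (16 watermark bits followed by 2 tamper detection bits) occupy every third pixel of the flattened 8 \(\times \) 8 block.

```python
import numpy as np

# Hypothetical positions: every third pixel of the flattened 8x8 block.
POSITIONS = np.arange(18) * 3

def embed_block(block, bits):
    """Write 18 bits into the LSBs of the chosen pixels of a uint8 block."""
    flat = block.flatten()                            # flatten() returns a copy
    flat[POSITIONS] = (flat[POSITIONS] & 0xFE) | bits
    return flat.reshape(block.shape)

def extract_block(block):
    """Read the 18 embedded bits back from the LSBs."""
    return block.flatten()[POSITIONS] & 1

def is_tampered(extracted_ab, generated_ab):
    """A block is valid only if both tamper detection bits match."""
    return bool(np.any(np.asarray(extracted_ab) != np.asarray(generated_ab)))
```

In use, the last two extracted bits play the role of \(a_1\), \(a_2\) and are compared with the regenerated \(Ga_1\), \(Ga_2\); blocks for which `is_tampered` returns `True` form the tamper localization map.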

4 Result and discussion

This section evaluates the performance of the proposed watermarking system. A set of images of size 512 \(\times \) 512 is used to test the proposed scheme. The experiments were performed on an HP 1000 series laptop with an Intel Core i3-3110M CPU at 2.40 GHz, 4 GB of RAM, and a 64-bit Windows operating system. More specifically, four different image datasets, USC-SIPI [33], UCID [34], STARE [35], and HDR [36], are used for performance testing, together with some well-known standard images. The USC-SIPI image dataset contains digitized images of 256 \(\times \) 256, 512 \(\times \) 512, and 1024 \(\times \) 1024 pixels. The gray images have 8 bits/pixel and the color images have 24 bits/pixel. The dataset contains texture, aerial, miscellaneous, and sequence images. UCID is an uncompressed color image dataset with 1338 images. The STARE dataset contains 20 retinal fundus images of 700 \(\times \) 605 pixels. The dataset has two parts: one part is used for training and testing, and the other part acts as a baseline. The HDR image dataset has 105 images captured using a Nikon D700 digital still camera. The raw images are 14-bit images of size 4284 \(\times \) 2844. Three different types of watermark image are considered: a bird image, a logo image, and the self-embedded image. All watermark images are 128 \(\times \) 128 in dimension. Figure 8 shows 8 standard images along with the watermark image, the embedded watermarked images, and the corresponding recovered watermark images. Figure 7 shows that there is no visual distortion in the watermarked image and that the recovered watermark approximations have very good visual quality. 
To evaluate the performance and effectiveness of the proposed method, the Peak Signal to Noise Ratio (PSNR), Structural Similarity Index Measurement (SSIM), Mean Square Error (MSE), Quality Index (Q-index), and Normalized Correlation Coefficient (NCC) are calculated. The PSNR, MSE, SSIM, NCC, and Q-index of the standard 512 \(\times \) 512 images are shown in Table 1. The table shows that the average PSNR of the watermarked images is 56.21 dB. The average MSE, SSIM, NCC, and Q-index are 0.1158, 0.9988, 0.9999, and 0.9954, respectively. The proposed algorithm was also tested on four different datasets, and the resulting performance parameters are shown in Table 2. Table 2 shows the variation of the PSNR, MSE, SSIM, NCC, and Q-index values for the SIPI, UCID, STARE, and HDR image datasets. For the SIPI dataset, 64 texture images and 38 aerial images were used, achieving average PSNRs above 56.1374 dB and 56.3774 dB, respectively. For the UCID and HDR datasets, 100 images each were used, giving averages of 56.5815 dB and 56.615 dB, respectively. An average PSNR of 56.5463 dB was found for 397 images in the STARE dataset. Table 3 presents the evaluation results for four individual images from the four datasets. It shows that the HDR, STARE, and UCID images have PSNR greater than 56.5 dB, while the SIPI images have PSNR below 56.5 dB. Table 4 compares the PSNR of the proposed algorithm with that of various existing watermarking algorithms, including the existing LBP scheme, for the Lena, Pepper, Barbara, and Goldhill images. The comparison shows that, for the Lena image, the proposed algorithm provides a PSNR of 56.6702 dB, which is better than the 46.7 dB to 53.6 dB reported in [1, 37,38,39,40,41,42, 44,45,46, 47] and comparable with the 57.31 dB of [43]. 
The results show that the proposed scheme has high visual quality (56 dB PSNR), which is very important in medical, military, and e-governance applications. Table 3 shows the performance in terms of PSNR, MSE, NCC, SSIM, and Q-index for images from the four different datasets.
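The MSE and PSNR values reported above follow the standard definitions for 8-bit images, which can be sketched as follows; the function names are illustrative.

```python
import numpy as np

def mse(original, watermarked):
    """Mean square error between two images of equal size."""
    diff = original.astype(np.float64) - watermarked.astype(np.float64)
    return float(np.mean(diff ** 2))

def psnr(original, watermarked, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(original, watermarked)
    return float("inf") if err == 0 else float(10 * np.log10(peak ** 2 / err))
```

Since LSB embedding changes each pixel by at most 1, the MSE cannot exceed 1, so the PSNR of an LSB-watermarked 8-bit image is at least about 48.13 dB. Modifying only a fraction of the pixels, as in the proposed block-wise embedding, lowers the MSE further and raises the PSNR accordingly.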

The robustness of the proposed algorithm is analyzed by measuring evaluation parameters such as PSNR, SSIM, Q-index, NCC, and BER in the presence of different types of attack, namely salt and pepper noise, cropping, and copy-move forgery. The effect of salt and pepper noise on the Lena image is shown in Fig. 9, while Figs. 10 and 11 show the effect of cropping and copy-move forgery on the Lena and Boat images, respectively. These experiments show that the quality of the reconstructed watermark image changes slightly, whereas the tampered location in the watermarked image is identified efficiently.

Figure 12 represents three different phases. Each phase is defined as follows:

Phase 1: The watermark image has 128 \(\times \) 128 pixels and the reconstructed image has the same size (128 \(\times \) 128).

Phase 2: The watermark image has 128 \(\times \) 128 pixels and the reconstructed image has 512 \(\times \) 512 pixels.

Phase 3: The watermark image has 512 \(\times \) 512 pixels and the reconstructed image has the same size (512 \(\times \) 512).

In the proposed self-fragile watermarking algorithm, the 128 \(\times \) 128 watermark image is a resized version of the cover image (512 \(\times \) 512). After reconstruction, the watermark image (128 \(\times \) 128) is converted into a 512 \(\times \) 512 image, which is marked as Phase 2 in Table 5. This reconstructed image (Phase 2) is used to reconstruct the tampered region of the watermarked image. At a low bit rate, the Phase 2 approach performs better than using a 512 \(\times \) 512 image as the watermark image (Phase 3). Figure 12 compares the variation of PSNR with the number of bits. The experimental results show that at a low bit rate the Phase 2 watermark image provides better PSNR than the reconstruction done in Phase 3. At a higher bit rate, however, the reconstruction done in Phase 3 achieves higher PSNR than the reconstruction done in Phase 2. Table 5 presents the experimental results for reconstructing the original 512 \(\times \) 512 watermark images from the 128 \(\times \) 128 and 512 \(\times \) 512 watermark images. It shows that at a lower bit rate the reconstruction from the 128 \(\times \) 128 image (Phase 2) provides better PSNR (23.2976, 25.8606, 27.7552, 28.0593) than the reconstruction from the 512 \(\times \) 512 image (Phase 3) (8.9894, 11.8017, 14.184, 24.4337). Also, at a low bit rate Phase 2 provides better SSIM and MSE than Phase 3. The proposed watermarking algorithm uses 35,536 watermark bits to provide authentication of an image.

The self-embedding watermarking and reconstruction results are shown in Fig. 13. The experiments were done on different cover images (Lena, Boat, and Barbara) and different attacks (cropping and copy-move forgery). They show that at a low noise level the reconstructed watermark and the reconstructed cover image have good visual quality, around 21 dB and 30 dB, respectively. Under heavier cropping attacks, the proposed algorithm shows some vulnerability. At a 40% cropping attack, many significant bits of the watermark image are corrupted, which destroys the reconstructed watermark image. To improve the visual quality of the watermark image, an error correction algorithm is incorporated to correct the corrupted bits of the watermark image. The proposed algorithm uses a rate-1/2 convolutional encoder to encode the watermark bits and a Viterbi decoder to decode them. This approach improves the visual quality of the watermark image and improves image quality metrics such as PSNR, SSIM, Q-index, BER, and NCC. Figure 14 shows the results of the error correction approach, which clearly improve the measured values significantly. The proposed algorithm has also been tested on different color images, with good results. Table 6 compares the PSNR, MSE, SSIM, NCC, and Q-index for different color images. The results show that the PSNR, MSE, SSIM, NCC, and Q-index are around 56 dB, 0.14, 0.999, 1, and 0.99, respectively.

5 Conclusion

In this paper, a SPIHT-based fragile image watermarking scheme is presented. The CDF 9/7 wavelet transform is used to convert the watermark image into the wavelet domain, and the wavelet coefficients are then encoded using the SPIHT algorithm. The algorithm can localize the tampered region successfully and has restoration capability. The scheme can also detect copy-move forgery successfully, even when only a single bit of the image is modified. Owing to the adoption of error correction coding, the scheme can correct bit errors created by tampering with the watermarked image and improves the quality of the reconstructed watermark image under different types of attack. The proposed algorithm has been tested on different standard benchmark images. Experimental results indicate that both the watermarked images and the watermarks are highly sensitive. The average PSNR of the proposed scheme is around 56 dB, which is higher than that of the existing LBP-based scheme and provides better visual quality. Also, the security of the proposed scheme against deliberate attacks is strengthened because each block of data is encoded using separate secret keys. This makes the proposed system a better alternative for authentication and copyright protection than similar watermarking schemes. The algorithm can be applied in many applications where image authentication and tamper detection are essential. The proposed algorithm is a fragile watermarking scheme, so the watermark information may be destroyed by basic image processing operations such as blurring, contrast enhancement, and JPEG compression. Therefore, in the future, the proposed scheme will be extended to a semi-fragile watermarking scheme coupled with a deep learning algorithm.

References

Jung KH (2018) Authenticable reversible data hiding scheme with less distortion in dual stego-images. Multimed Tools Appl 77(5):6225–6241

Verma VS, Jha RK, Ojha A (2015) Significant region based robust watermarking scheme in lifting wavelet transform domain. Expert Syst Appl 42(21):8184–8197

Pal P, Chowdhuri P, Jana B (2018) Weighted matrix based reversible watermarking scheme using color image. Multimed Tools Appl 77(21):23073–23098

Su Q, Chen B (2018) Robust color image watermarking technique in the spatial domain. Soft Comput 22(1):91–106

Araghi TK, Manaf AA (2019) An enhanced hybrid image watermarking scheme for security of medical and non-medical images based on DWT and 2-D SVD. Future Gener Comput Syst 101:1223–1246

Araghi TK, Manaf AA, Araghi SK (2018) A secure blind discrete wavelet transform based watermarking scheme using two-level singular value decomposition. Expert Syst Appl 112:208–228

Liu SH, Yao HX, Gao W, Liu YL (2007) An image fragile watermark scheme based on chaotic image pattern and pixel-pairs. Appl Math Comput 185(2):869–882

Rawat S, Raman B (2011) A chaotic system based fragile watermarking scheme for image tamper detection. AEU - Int J Electron Commun 65(10):840–847

Teng L, Wang XY, Wang XK (2013) Cryptanalysis and improvement of a chaotic system based fragile watermarking scheme. AEU - Int J Electron Commun 67(6):540–547

Zhang WY, Shih FY (2011) Semi-fragile spatial watermarking based on local binary pattern operators. Opt Commun 284(16–17):3904–3912

Chang JD, Chen BH, Tsai CS (2013) LBP-based fragile watermarking scheme for image tamper detection and recovery. In: Proceedings of the IEEE international symposium on next-generation electronics. Taiwan, Kaohsiung, pp 173–176

Benrhouma O, Hermassi H, El-Latif AAA, Belghith S (2016) Chaotic watermark for blind forgery detection in images. Multimed Tools Appl 75(14):8695–8718

Preda RO (2013) Semi-fragile watermarking for image authentication with sensitive tamper localization in the wavelet domain. Measurement 46(1):367–373

Korus P, Dziech A (2013) Efficient method for content reconstruction with self-embedding. IEEE Trans Image Process 22:1134–1147

Korus P, Dziech A (2014) Adaptive self-embedding scheme with controlled reconstruction performance. IEEE Trans Inf Forensics Secur 9:169–181

Singh D, Singh SK (2015) DCT based efficient fragile watermarking scheme for image authentication and restoration. Multimed Tools Appl 1–25

Nasir I, Weng Y, Jiang J, Ipson S (2010) Multiple spatial watermarking techniques in color images. Signal Image Video Process 4(2):145–154

Castiglione A, Pizzolante R, Santis AD, Carpentieri B, Castiglione A, Palmieri F (2015) Cloud-based adaptive compression and secure management services for 3D healthcare data. Future Gener Comput Syst 43:120–134

Kalra GS, Talwar R, Sadawarti H (2015) Adaptive digital image watermarking for color images in frequency domain. Multimed Tools Appl 74(17):6849–6869

Das C, Panigrahi S, Sharma VK, Mahapatra KK (2014) A novel blind robust image watermarking in DCT domain using inter-block coefficient correlation. AEU - Int J Electron Commun 68(3):244–253

Li C, Wang Y, Ma B, Zhang Z (2011) A novel self-recovery fragile watermarking scheme based on dual-redundant-ring structure. Comput Electr Eng 37:927–940

Shivani S, Singh D, Agarwal S (2013) DCT based approach for tampered image detection and recovery using block wise fragile watermarking scheme. In: Pattern recognition and image analysis. Springer, pp 640–647

Singh D, Shivani S, Agarwal S (2013) Quantization-based fragile watermarking using block-wise authentication and pixel-wise recovery scheme for tampered image. Int J Image Graph 13

Lin PL, Hsieh CK, Huang PW (2005) A hierarchical digital watermarking method for image tamper detection and recovery. Pattern Recogn 38:2519–2529

Zhang X, Qian Z, Ren Y, Feng G (2011) Watermarking with flexible self-recovery quality based on compressive sensing and compositive reconstruction. IEEE Trans Inf Forensics Secur 6:1223–1232

Zhang X, Wang S, Qian Z, Feng G (2011) Self-embedding watermark with flexible restoration quality. Multimed Tools Appl 54:385–395

Li D, Deng L, Gupta BB, Wang H, Choi C (2019) A novel CNN-based security guaranteed image watermarking generation scenario for smart city applications. Inf Sci 479:432–447

Sinhal R, Ansari IA, Jain DK (2020) Realtime watermark reconstruction for the identification of source information based on deep neural network. J Real-Time Image Process 17:2077–2095

Fierro-Radilla A, Nakano-Miyatake M, Cedillo-Hernandez M, Cleofas-Sanchez L, Perez-Meana H (2019) A robust image zero-watermarking using convolutional neural networks. In: 7th international workshop on biometrics and forensics (IWBF), Cancun, Mexico, pp 1–5

Kabir MA, Khan MAM, Islam MT, Hossain ML, Mitul AF (2013) Image compression using lifting based wavelet transform coupled with SPIHT algorithm. In: 2013 international conference on informatics, electronics and vision (ICIEV), Dhaka, pp 1–4

Kabir MA, Mondal MRH (2018) Edge-based and prediction-based transformations for lossless image compression. J Imaging 4:64

Kulkarni A, Mantri D, Prasad NR, Prasad R (2013) Convolutional encoder and Viterbi decoder using SOPC for variable constraint length. In: 2013 3rd IEEE international advance computing conference (IACC), Ghaziabad, pp 1651–1655

University of Southern California. The USC-SIPI Image Database. http://sipi.usc.edu/database/database.php. Accessed 30 Sept 2019

Nottingham Trent University, UCID Image Database. http://jasoncantarella.com/downloads/ucid.v2.tar.gz. Accessed 30 Sept 2019

University of California, San Diego. STARE Image Database. https://cecas.clemson.edu/ahoover/stare/. Accessed 30 Sept 2019

Funt B, Shi L (2019) HDR Dataset. Computational Vision Lab, Computing Science, Simon Fraser University, Burnaby, BC, Canada. http://www.cs.sfu.ca/colour/data/funthdr/. Accessed 30 Sept

Pal P, Jana B, Bhaumik J (2019) Watermarking scheme using local binary pattern for image authentication and tamper detection through dual image. Secur Privacy 2:e59

Jana B, Giri D, Mondal SK (2018) Dual image based reversible data hiding scheme using (7, 4) hamming code. Multimed Tools Appl 77(1):763–785

Jafar IF, Darabkh KA, Al-Zubi RT, Saifan RR (2016) An efficient reversible data hiding algorithm using two steganographic images. Signal Process 128:98–109

Lu TC, Tseng CY, Wu JH (2015) Dual imaging-based reversible hiding technique using LSB matching. Signal Process 108:77–89

Qin C, Chang CC, Hsu TJ (2015) Reversible data hiding scheme based on exploiting modification direction with two steganographic images. Multimed Tools Appl 74(15):5861–5872

Lee CF, Huang YL (2013) Reversible data hiding scheme based on dual stegano-images using orientation combinations. Telecommun Syst 52(4):2237–2247

Wang C, Zhang H, Zhou X (2018) LBP and DWT based fragile watermarking for image authentication. J Inf Process Syst 14:666–679

Chang CC, Chou YC, Kieu TD (2009) Information hiding in dual images with reversibility. In: Third international conference on multimedia and ubiquitous engineering, Qingdao, China

Cao F, An B, Wang J, Ye D, Wang H (2017) Hierarchical recovery for tampered images based on watermark self-embedding. Displays 46:52–60

Peng Y, Niu X, Fu L, Yin Z (2018) Image authentication scheme based on reversible fragile watermarking with two images. J Inf Secur Appl 40:236–246

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Ethics declarations

Conflict of interest

The author declares that they have no conflict of interest.

About this article

Cite this article

Kabir, M.A. An efficient low bit rate image watermarking and tamper detection for image authentication. SN Appl. Sci. 3, 400 (2021). https://doi.org/10.1007/s42452-021-04387-w
