Abstract

In recent years, growing concern about public safety and security has made the analysis of human movements and action sequences highly valuable when dealing with suspicious and criminal activities. Estimating the position and orientation associated with human movements requires depth information, which is usually obtained by fusing data from multiple cameras at different viewpoints. In practice, whenever occlusion occurs in a surveillance environment, the pixel-to-pixel correspondence between the images captured by two cameras may be lost and, as a result, the depth information may not be accurate. Moreover, using more than one camera adds burden to the surveillance infrastructure. In this study, we present a mathematical model for acquiring object depth information with a single camera by capturing the in-focus portion of an object in a single image. With the camera in focus and the focal length fixed, the object distance, measured from the lens center, is varied for each aperture setting. For each aperture reading, the object distance (or depth) is estimated by relating three parameters, namely lens aperture radius, object distance and object size in the image plane. The results show that the distance computed from this relationship approximates the actual distance with a standard error of estimate of 2.39 to 2.54 when tested on Nikon and Canon cameras, with an accuracy of 98.1% at the 95% confidence level.


1 Introduction

1.1 Motivation

Depth recovery is a prerequisite for identifying human actions in surveillance, and this information can be obtained from sequences of images taken from multiple views using more than one camera. The real challenge, however, lies in conventional photography, which captures only a two-dimensional projection of a 3D scene. As conventional cameras [1] are capable of capturing in-focus portions of object(s) that are away from the plane of focus, focusing is considered to be one of the contributing factors for retrieving coarse depth information. When the image is in focus, knowledge of the camera parameters helps us estimate the range of an object with a typical experimental set-up as shown in Fig. 4. When an object is near the camera, its image grows larger, and the image diminishes gradually as the object moves away from the camera. This inverse relationship is studied in detail by relating three parameters: lens aperture radius, object size in the image plane and object distance along the camera axial line.

1.2 Research contributions

- A relation is established between the object distance (or depth), object size and lens aperture radius, with observations taken in auto-focus mode.

- A multiplying factor is introduced to compensate for the effect of object lighting on camera exposure settings while estimating the depth of an object.

- A curve-estimation regression analysis is carried out to validate the relation between camera-to-object distance (or depth) and object size.

The literature survey is presented in Section 2. The proposed work is described in Section 3, with subsections on prerequisites, experimental set-up and camera calibration, and database creation for depth estimation. Results and their interpretation are given in Section 4, with subsections on the influence of object lighting and camera exposure settings during depth estimation, finding real height from the estimated depth, method validation, and model accuracy with reference to existing works. The paper is concluded in Section 5.

2 Literature survey

Depth estimation or extraction refers to a set of algorithms or techniques that aim to reconstruct the spatial structure of a 3D scene. Several approaches exist for obtaining the depth of an object in a 3D scene, and they can be broadly categorized as active or passive. Among the active methods proposed so far, light was the first kind of energy used to measure distance. Incandescent light, produced by a coil at high temperature, was used for distance measurement, but such a system is sensitive to the color of the illuminated object and hence fails.

Time-of-flight (ToF) depth sensing [2,3,4], which uses the phase delay of reflected IR light, estimates depth directly without the help of conventional computer vision algorithms. But because the principle is based on phase-shift calculation, only distances within one unambiguous range, corresponding to a phase in \([0, 2\pi]\), can be retrieved. Calculations made from the phase shift \(\phi\) are also subject to measurement errors. The pulse-modulation approach is an alternative ToF technique in which the depth of an object is associated not only with the duration of the light pulse but also with the duration of the camera shutter. This solves the range ambiguity but suffers from calibration issues.
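The range ambiguity of phase-based ToF can be illustrated with the standard continuous-wave relation \(d = c\,\phi / (4\pi f_{mod})\), which wraps at \(c / (2 f_{mod})\). The following is a minimal sketch under that textbook relation; the modulation frequency symbol \(f_{mod}\) and the function names are assumptions introduced for illustration and do not come from the cited works.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def unambiguous_range(f_mod_hz):
    """Largest distance that can be measured before the phase wraps past 2*pi."""
    return C / (2.0 * f_mod_hz)


def distance_from_phase(phase_rad, f_mod_hz):
    """Distance implied by the phase delay of the reflected signal.

    The phase is wrapped into [0, 2*pi), so targets beyond the
    unambiguous range alias back onto nearer distances.
    """
    wrapped = phase_rad % (2.0 * math.pi)
    return C * wrapped / (4.0 * math.pi * f_mod_hz)


if __name__ == "__main__":
    f_mod = 20e6  # hypothetical 20 MHz modulation frequency
    print(unambiguous_range(f_mod))
    print(distance_from_phase(math.pi, f_mod))
    # A phase of 3*pi is indistinguishable from a phase of pi.
    print(distance_from_phase(3.0 * math.pi, f_mod))
```

Lowering the modulation frequency extends the unambiguous range, at the cost of coarser distance resolution for a given phase error.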

Nonsystematic depth errors [5], caused by light scattering and multipath propagation effects in 3D scene reconstruction, make ToF cameras inefficient for practical use. Another critical problem in ToF depth images is motion blur [6], which occurs when the camera or the target is in motion. The error in phase measurements induces overshoot or undershoot in depth transition regions within the bounded integration time, limiting the depth accuracy and frame rate.

Triangulation methods offer a better solution by estimating depth from a scene captured from multiple views. Here we restrict our discussion to binocular vision (left and right views). As discussed in [7], the differences between the two images give depth information, and these differences are known as disparities. In other words, the shift between stereo-corresponding points is termed disparity.
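For a rectified binocular pair, the link between disparity and depth is usually written as \(Z = f B / d\), with focal length \(f\) in pixels, baseline \(B\) and disparity \(d\) in pixels. A minimal sketch of that standard relation is given below; the function name, parameter names and sample values are illustrative assumptions.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth (in metres) of a point seen by a rectified stereo pair.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres in metres
    disparity_px:    horizontal shift of the point between the two views
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 800 px focal length, 10 cm baseline.
    for d in (4, 8, 16, 32):
        print(d, depth_from_disparity(800.0, 0.1, d))
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for near ones.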

However, estimation of the disparity map is considered a fundamental problem in computer vision. As mentioned in [8], stereo correspondence points are determined with maximum correlation, minimum sum of squared differences (SSD) or minimum absolute intensity differences (AID), and the disparity map is constructed from them. Efforts to increase the accuracy of depth estimation continued, and depth-map merging approaches [9] for multi-view stereo came into existence. In this process, a depth map is computed for each viewpoint using binocular stereo, synthesized according to a patch-based normalized cross-correlation metric, and the maps are merged to produce 3D models. However, disparity map construction needs knowledge of the camera configuration under the epipolar geometry constraint. In addition, occlusions in the monitored environment create mismatches in pixel-to-pixel correspondence, which in turn lead to ambiguity in the disparity map computation.
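The window-matching idea behind such correspondence search can be sketched on a single scanline using a sum-of-squared-differences cost. This is an illustrative toy, not the algorithm of [8] or [9]; the function names, the window parameters and the synthetic intensity rows are assumptions made for the example.

```python
def ssd(a, b):
    """Sum of squared differences between two equal-length windows."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_disparity(left_row, right_row, x, half_window, max_disparity):
    """Disparity that minimises the SSD cost for the pixel at column x.

    The window centred at x in the left row is compared with windows
    centred at x - d in the right row, for d = 0 .. max_disparity.
    Returns (disparity, cost).
    """
    if len(left_row) != len(right_row):
        raise ValueError("rows must have the same length")
    lo, hi = x - half_window, x + half_window + 1
    if lo < 0 or hi > len(left_row):
        raise ValueError("window does not fit inside the row")
    reference = left_row[lo:hi]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if lo - d < 0:  # candidate window would leave the image
            break
        cost = ssd(reference, right_row[lo - d:hi - d])
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d, best_cost


if __name__ == "__main__":
    # Synthetic rows: the same intensity bump, shifted by 3 pixels.
    right = [0, 0, 10, 50, 90, 50, 10, 0, 0, 0, 0, 0]
    left = [0, 0, 0, 0, 0, 10, 50, 90, 50, 10, 0, 0]
    print(best_disparity(left, right, x=7, half_window=2, max_disparity=5))
```

When part of the window is occluded in one view, no candidate reaches a low cost, which is the ambiguity the paragraph above refers to.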

Power consumption, camera mounting space [10] and memory stack space for the computation process [11] are further factors that prompted researchers to adopt monocular vision (i.e. information from a single image) in order to obtain depth information.

A single-viewpoint ToF depth sensor [12, 13] offers a significant benefit in providing accurate depth measurements without being affected by ambient lighting, shadows and occlusions. Here the design itself provides the illumination, and the phase measurement, not the intensity, is taken as the criterion for depth measurement.

As of today, CW-ToF (continuous-wave ToF) sensors dominate the consumer electronics and low-end robotics space, with certain limitations. As stated in [14], the design suffers from three fundamental issues. The first is range, which is limited by power consumption and eye-safety considerations. The second is accuracy, which is adversely affected by illumination effects. The third is interference, which arises when many such sensors operate together in indoor and outdoor environments. Further, in order to evaluate depth range accuracy, plenoptic camera designs [15,16,17] came into existence. Such a camera uses not only the intensity of light in the scene but also the directional information of the light distribution to retrieve the depth of a surface. However, with poor depth reconstruction quality and large storage requirements, human action recognition in surveillance systems becomes impractical, and hence the design is not recommended.

Considering the economic and technical issues in all of the above approaches, we propose a theory relating three fundamental parameters: object size, lens aperture radius and object depth. This theory can be applied in any conventional surveillance camera without incorporating additional hardware or software, and it works in both indoor and outdoor environments.

3 Proposed work

3.1 Prerequisites

3.1.1 Focal length

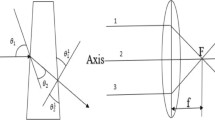

Consider an object 'O' at infinity focus, photographed by a rectilinear convex lens with focal length '\(f\)' and aperture radius '\(a_{R}\)', forming an image at a distance equal to '\(f\)' behind the lens.

As shown in Fig. 1, a lens with aperture radius '\(a_{R}\)' projects incoming rays onto the image plane, and hence the image distance for an object at infinity defines the focal point 'F'. In this case, an object at infinity focus appears sharp, with an image distance \(I_{inf} = f\).

As shown in Fig. 2, the point 'F' is focused at a distance '\(I_{of}\)' behind the lens but in front of the image plane. As the receiving plane is not at the image focus, blurriness appears in the image.

'\(O_{d}\)': object distance from the lens

'\(I_{inf}\)': distance between the lens and the image plane when the image is in perfect focus

'\(I_{inf} - I_{of}\)': distance between the lens and the image plane when the image is defocused

As shown in Fig. 2, light rays emanating from an object 'O' fall on the lens and converge at a distance '\(I_{of}\)' on the sensor side of the lens.

For a thin lens [18] with focal length '\(f\)', the relation between '\(O_{d}\)' and '\(I_{of}\)' is stated as
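In its standard textbook form, the thin-lens relation reads \(1/f = 1/O_{d} + 1/I_{of}\). The sketch below evaluates that form numerically; it assumes this standard convention (all distances positive, object beyond the focal length) rather than any particular notation of the authors, and the function name and sample distances are illustrative.

```python
def image_distance(focal_length_mm, object_distance_mm):
    """Image distance I_of from the thin-lens relation 1/f = 1/O_d + 1/I_of.

    Rearranged as I_of = f * O_d / (O_d - f); valid for O_d > f.
    """
    if object_distance_mm <= focal_length_mm:
        raise ValueError("object must lie beyond the focal length")
    return (focal_length_mm * object_distance_mm
            / (object_distance_mm - focal_length_mm))


if __name__ == "__main__":
    f = 50.0  # mm, matching the 50 mm prime lens used in the experiments
    for o_d in (500.0, 1100.0, 10_000.0, 1_000_000.0):
        print(o_d, image_distance(f, o_d))
```

As the object distance grows, the image distance approaches the focal length, which is the behaviour at infinity focus described for Fig. 1.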

We now explore what happens when an object at a distance greater than or less than \(O_{d}\) is imaged. As shown in Fig. 2, an object at infinity focus would be focused at a distance \(I_{of}\) behind the lens but in front of the sensor, so the image formed on the sensor is blurred.

From the Geometry of Fig. 3, we obtain

From the Geometry of Fig. 3, we also obtain

Substituting Eq. (8) in Eq. (2), we obtain

where

Knowing the object distance from camera \(O_{d}\), object real height can be determined from the relation

In many imaging applications, the object distance from the lens is considerably greater than the image distance. Hence Eq. (1) can be approximated as

By substituting Eq. (12) in Eq. (11), we obtain

3.2 Experimental set-up and camera calibration

Our research aims at measuring the depth of a stationary object using a single RGB camera by establishing a relationship among focal length (\(f\)), lens aperture (\(a_{R}\)), object size (\(O_{s}\)) and camera-to-object distance (or depth) (\(O_{d}\)). To this end, a 50 mm Nikkor prime lens (a conventional lens with a fixed angle of view) is used to focus objects at different working distances starting from 10 cm, successively altering the opening \(f_{stop}\) for repeated collection of readings. The ISO and shutter speed are tuned in the required ratios for the sensor to render a sharp image. The device and its exposure settings are listed in Tables 1 and 2.

The experiment is carried out with the camera in auto-focus mode. When testing the auto-focus accuracy of a camera or lens, it is essential to keep the exposure consistent. On our Nikon D5300 with the Nikkor 50 mm prime lens, auto-focus consistency is maintained by setting the camera to AF-S (Single Servo) mode. Internally, the camera relies on contrast detection to acquire focus. With this focus method, the camera drives the lens back and forth, adjusting the focal point 'F' anywhere in the frame until a sharp image is obtained. This focus confirmation happens electronically through the camera sensor and is therefore generally accurate. The camera is placed on a stable tripod, and an object of interest, say an aluminum leveling staff, is placed in front of the camera at a distance of 10 cm with an \(f_{stop}\) of 1.8 mm, ISO 1000 and an exposure setting of 1/100 s, as shown in Fig. 4.
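The contrast-detect idea described above, stepping the lens and keeping the position that yields the sharpest image, can be sketched as follows. This is an illustrative toy rather than the camera's firmware: the squared-difference contrast score, the function names and the dictionary of 1-D intensity profiles keyed by lens position are all assumptions made for the example.

```python
def contrast_score(profile):
    """Sum of squared differences between neighbouring intensity samples.

    Sharper edges give larger jumps between neighbours and a higher score.
    """
    return sum((b - a) ** 2 for a, b in zip(profile, profile[1:]))


def sharpest_position(profiles_by_position):
    """Lens position whose intensity profile has the highest contrast."""
    return max(profiles_by_position,
               key=lambda pos: contrast_score(profiles_by_position[pos]))


if __name__ == "__main__":
    # Hypothetical profiles across the same edge at three lens positions.
    profiles = {
        0: [10, 12, 14, 16],    # strongly blurred edge
        1: [0, 0, 100, 100],    # crisp edge
        2: [5, 20, 60, 90],     # slightly blurred edge
    }
    print(sharpest_position(profiles))
```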

3.3 Creating a database for depth estimation

A total of 300 sample pictures were taken in real time using two camera models, the Canon EOS 760D and the Nikon D5300, under the guidance of SICA (Southern Indian Association of Cinematographers) to validate the suggested concept. The images were captured in indoor environments. For depth calculation, the images acquired from the camera are scaled and cropped. The procedure is as follows.

The photographs are taken by moving the object in 10 cm steps toward and away from the camera within a range of 50 cm to 110 cm. In this experiment, the object appears to grow whenever it approaches the camera lens and to shrink while moving away from the lens, as shown in Fig. 5.

This successive variation in object size is taken as the criterion for distance measurement. The object is cropped from the background of each photograph by specifying its size and position, and the cropped image is enclosed by a rectangle. As the rectangle width is acquired in pixels, it is multiplied by the pixel size to obtain a measurement in SI units. This value is denoted \(O_{s}\). The same experiment is repeated with the constant exposure settings listed in Table 2 for different aperture settings from 1.8 to 16 mm.
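The conversion from a bounding-rectangle width in pixels to the metric object size \(O_{s}\) can be sketched as below. The pixel pitch of 0.0039 mm is an assumed, approximate value for a 24-megapixel APS-C sensor and should be replaced with the figure from the camera's datasheet; the function name and the sample widths are likewise illustrative.

```python
ASSUMED_PIXEL_PITCH_MM = 0.0039  # assumption: roughly 3.9 micrometres per pixel


def object_size_mm(width_px, pixel_pitch_mm=ASSUMED_PIXEL_PITCH_MM):
    """Object size on the image plane: rectangle width in pixels times pixel pitch."""
    if width_px < 0:
        raise ValueError("width in pixels cannot be negative")
    return width_px * pixel_pitch_mm


if __name__ == "__main__":
    # Hypothetical rectangle widths measured as the object moves away.
    for width in (1200, 900, 600):
        print(width, object_size_mm(width))
```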

4 Results and discussions

For each aperture reading (i.e. \(f_{stop}\) = 1.8 mm to 16 mm), object depth (\(O_{d}\)) is estimated from the relation obtained in Eq. (11) and the results are tabulated in Tables 3, 4, 5, 6 and 7.

From the readings in Tables 3, 4, 5, 6 and 7, we observe that the focusing distance (i.e. the depth of an object) is influenced by the change in aperture setting whenever the object is moved toward or away from the camera, and the relation follows.

[From Eq. (10)].

4.1 Influence of object lighting on camera exposure settings during depth estimation

It is evident from [19] that each pixel value \(N_{d}\) in the image is proportional to the scene luminance \(l_{s}\) and also depends on camera settings such as film speed (ISO), exposure time (\(t\)) and aperture number \(f_{stop}\), where

(\(R\), \(G\) and \(B\) are the red, green and blue values of the \(i^{th}\) pixel.)
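The dependence of exposure on ISO, exposure time and aperture number can be illustrated with the standard reflected-light meter equation \(N^{2}/t = L\,S/K\), where \(N\) is the aperture number, \(t\) the exposure time in seconds, \(S\) the ISO speed, \(L\) the scene luminance in cd/m² and \(K\) the meter calibration constant. The sketch below uses that textbook relation only; it is not a reconstruction of the expression in [19], and the function name and the default \(K = 12.5\) are illustrative choices.

```python
def metered_luminance(f_number, exposure_time_s, iso, k_const=12.5):
    """Scene luminance (cd/m^2) that yields a standard exposure.

    Rearranges N^2 / t = L * S / K into L = K * N^2 / (t * S).
    """
    if exposure_time_s <= 0 or iso <= 0:
        raise ValueError("exposure time and ISO must be positive")
    return k_const * f_number ** 2 / (exposure_time_s * iso)


if __name__ == "__main__":
    # Settings quoted for the experimental set-up: 1.8, 1/100 s, ISO 1000.
    print(metered_luminance(1.8, 1 / 100, 1000))
    # Doubling the aperture number requires four times the luminance.
    print(metered_luminance(3.6, 1 / 100, 1000))
```

With shutter speed and ISO held fixed, the required luminance scales with the square of the aperture number, which is why the ratio \(l_{s}/f_{stop}^{2}\) changes whenever the aperture is altered.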

The range of \(K_{c}\) recommended by ANSI/ISO 2720-1974 [20] is 10.6 to 13.4. In practice, a value of 12.5 is recommended for Canon and Nikon cameras. Our observations show that changes in aperture setting affect the ratio \(\frac{{l_{s} }}{{f_{stop}^{2} }}\). This in turn affects the brightness and also the subject distance from the lens. In order to compensate for the subject lighting effects induced by the exposure settings, a multiplying factor 'k' is included in Eq. (10). The modified equation, accounting for the effect of object lighting on film exposure settings, is written as

It is observed that in most cases the value of 'k' varies with the aperture reading, except for \(f_{stop} =\) 1.8 to 2 mm, 2.2 to 2.5 mm, 4 to 4.5 mm and 8 to 9 mm. Table 8 discloses the following information.

4.1.1 Finding optimum value of 'k'

Our objective, however, is to find the optimum 'k' value for which the estimated camera-to-object depth approximates the actual value. Applying the generalized reduced gradient method to our observations, we find that at k = 0.666 we attain a minimum MSE of 2.566 within the defined constraints for k.

\(0.45 \le k \le 3.2\)
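The bounded search for 'k' can be sketched as follows. Because 'k' enters as a multiplying factor, the MSE is a convex quadratic in 'k', so this sketch substitutes a closed-form least-squares solution clipped to the bounds for the generalized reduced gradient solver; for this one-parameter problem both reach the same constrained minimum. The function names and the sample distances are hypothetical and are not the paper's measurements.

```python
def mse(actual, estimated):
    """Mean squared error between actual and estimated distances."""
    return sum((a - e) ** 2 for a, e in zip(actual, estimated)) / len(actual)


def best_scale_factor(actual, unscaled, k_min=0.45, k_max=3.2):
    """k in [k_min, k_max] minimising the MSE between actual and k * unscaled.

    The unconstrained minimiser is sum(a*u) / sum(u*u); since the MSE is
    a convex quadratic in k, clipping it to the bounds gives the
    constrained optimum.
    """
    denominator = sum(u * u for u in unscaled)
    if denominator == 0:
        raise ValueError("unscaled estimates must not all be zero")
    k = sum(a * u for a, u in zip(actual, unscaled)) / denominator
    return min(max(k, k_min), k_max)


if __name__ == "__main__":
    actual_cm = [50.0, 60.0, 70.0]      # hypothetical ground-truth distances
    unscaled_cm = [62.5, 75.0, 87.5]    # hypothetical estimates before scaling
    k = best_scale_factor(actual_cm, unscaled_cm)
    print(k, mse(actual_cm, [k * u for u in unscaled_cm]))
```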

We conclude from our observations that, to achieve the closest approximation of camera-to-object depth, \(f_{{{\text{stop}}}} \; = \;2.8\;{\text{mm}}\) and k = 0.666 should be chosen in surveillance camera design.

In a similar fashion, the proposed Eq. (16) was also tested on Canon cameras for \(f_{stop}\) ranging from 5 to 32 mm, and the camera-to-object depth was found to approximate the actual value for \(f_{{{\text{stop}}}} \; = \;7.1\;{\text{mm}}\) with an optimum 'k' value of 1.44.

4.2 Finding real height of an object from depth (\({\mathbf{O}}_{{\mathbf{d}}} )\) estimated

The real height of an object centered at distinct operating distances can be obtained provided that the object distance (\(O_{d}\)) from the lens, the image height and the focal length are known. The object depth is calculated from Eq. (16), and the image height is computed by cropping the object of interest to a specified size in each image. As the height is obtained in pixels, it is multiplied by the pixel size of the Nikon D5300 to obtain a measurement in SI units.

On substituting the image height, object depth and focal length in Eq. (16), the real height of the object is obtained. For all \(f_{stop}\) readings from 1.8 to 16 mm, the object is placed at different working distances from 50 to 110 cm and photographs are taken. The real height is extracted from the object in each photograph, and the results are tabulated in Tables 9 and 10.
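The height recovery can be sketched with the usual similar-triangles relation \(H / O_{d} = h_{i} / I\), taking the image distance \(I \approx f\) when the object is far from the lens compared with the focal length. This is the standard pinhole-style approximation and is assumed here for illustration; it may differ in detail from the authors' equation, and the function name and sample values are hypothetical.

```python
def real_height_mm(image_height_mm, object_distance_mm, focal_length_mm):
    """Real object height from similar triangles, with image distance ~ f.

    H = h_i * O_d / f, valid when O_d is much larger than f.
    """
    if focal_length_mm <= 0:
        raise ValueError("focal length must be positive")
    return image_height_mm * object_distance_mm / focal_length_mm


if __name__ == "__main__":
    # Hypothetical reading: 5 mm tall on the sensor, 1 m away, 50 mm lens.
    print(real_height_mm(5.0, 1000.0, 50.0))
```

The same object photographed at a greater distance produces a proportionally smaller image height, so the recovered real height should stay constant across working distances if the depth estimate is accurate.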

4.3 Method validation

The independent variable is object size (\(O_{s}\))

Table 11 provides the R, \(R^{2}\), adjusted \(R^{2}\) and standard error of estimate, which determine how well an inverse model fits the data.

The R column represents the multiple correlation coefficient, which is a measure of the quality of prediction of the dependent variable. A value of 0.990 indicates a good level of prediction. The \(R^{2}\) column represents the proportion of variance in the dependent variable that is explained by the independent variable. From our value of 0.990, we can see that 99% of the variation of our dependent variable is explained by our independent variable, object size.

The F-ratio in Table 12 tests whether the inverse model fits the data well. Table 12 shows that the independent variable predicts the dependent variable with statistical significance, F(1, 74) = 2294.695, p \(<\) 0.0005 (i.e. the inverse model is a good fit for the data).

As shown in the 'Sig' column of Table 13, all independent variable coefficients are statistically significantly different from zero (p \(<\) 0.05). Hence the general form of the equation that predicts the estimated distance from the object size is expressed as:
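An inverse curve-estimation model has the general form \(O_{d} = b_{0} + b_{1}/O_{s}\), which is linear in \(1/O_{s}\) and can be fitted by ordinary least squares. The sketch below shows such a fit; the coefficient names \(b_{0}\) and \(b_{1}\), the function names and the sample data are assumptions made for illustration and are not the fitted values reported in Table 13.

```python
def fit_inverse_model(sizes, distances):
    """Least-squares fit of distance = b0 + b1 / size. Returns (b0, b1)."""
    xs = [1.0 / s for s in sizes]  # the model is linear in 1/size
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(distances) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, distances))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1


def r_squared(sizes, distances, b0, b1):
    """Proportion of variance in distance explained by the fitted model."""
    mean_y = sum(distances) / len(distances)
    ss_res = sum((y - (b0 + b1 / s)) ** 2 for s, y in zip(sizes, distances))
    ss_tot = sum((y - mean_y) ** 2 for y in distances)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical data generated from distance = 2 + 30 / size.
    sizes = [1.0, 2.0, 4.0, 5.0]
    distances = [32.0, 17.0, 9.5, 8.0]
    b0, b1 = fit_inverse_model(sizes, distances)
    print(b0, b1, r_squared(sizes, distances, b0, b1))
```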

4.4 Comparison of our work with existing works

Table 14 illustrates the fitting process of the model in one of the validation folds. Our observations show that the model is competitive with the depth estimation technique of Yujiao Chen [21], showing high consistency between the predicted (estimated) and reference (actual) depth values with a Pearson's correlation coefficient of r = 0.99213. The variables estimated distance and object size are also highly consistent, with a Pearson's correlation coefficient of r1 = 0.86 (95% confidence level), as shown in Table 14. The coefficient is negative, indicating that every increase in distance is accompanied by a decrease of fixed proportion in object size; hence the absolute value is taken. Our depth estimation technique also outperforms the stereo vision system based distance measurement of Mylene C. Q. Farias [22] while using a single camera system, giving a good quality of curve fit with an RMSE of 0.041356 on the Nikon data set and 0.056232 on the Canon data set. The details are given in Table 15.
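The two agreement measures used in this comparison, Pearson's correlation coefficient and RMSE, can be computed as in the following sketch. The function names and the sample values are illustrative and do not reproduce the data behind Tables 14 and 15.

```python
import math


def pearson_r(xs, ys):
    """Pearson's correlation coefficient between two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def rmse(actual, estimated):
    """Root mean squared error between actual and estimated values."""
    n = len(actual)
    return math.sqrt(sum((a - e) ** 2 for a, e in zip(actual, estimated)) / n)


if __name__ == "__main__":
    actual_cm = [50.0, 60.0, 70.0, 80.0]       # hypothetical reference depths
    estimated_cm = [51.0, 59.0, 71.5, 79.0]    # hypothetical model outputs
    object_size = [4.0, 3.3, 2.9, 2.5]         # hypothetical sizes, shrinking with distance
    print(pearson_r(actual_cm, estimated_cm))
    print(pearson_r(estimated_cm, object_size))  # negative: size falls as distance rises
    print(rmse(actual_cm, estimated_cm))
```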

5 Conclusions

This work presented a theory relating object size, lens aperture radius and object depth. Near-range photography was used to explore the impact of object size and lens aperture radius on depth (or distance) in auto-focus mode. It was also found that a change in aperture setting induces a variation in the luminance of an object, which must be calculated. This variation is taken into account when estimating an object's depth from the center of the lens. For experimentation, a Nikon D5300 with a 50 mm prime lens was used. With the lens placed in infinite focus, the distance measurements were calculated for different apertures.

This research created an inverse model for object distances with a permissible standard error of estimate of 3.012, R = 0.990 and \(R^{2} =\) 0.981, driven by the object size values measured with the camera. It is noted that when the lens aperture changes, object lighting also affects the camera-to-object distance (or depth). This change in exposure settings affects the ratio \(\frac{{l_{s} }}{{f_{stop}^{2} }}\). We considered this ratio as 'k'. We are able to achieve an optimum 'k' value of 0.45 for the Nikon model with \(f_{stop}\) = 2.8 mm and k = 1.44 for the Canon model with \(f_{stop}\) = 7.1 mm. In contrast to the stereo vision set-ups of Mylene C. Q. Farias [22] and Yujiao Chen [21] and the light field imaging set-ups of Said Pertuz et al. [17] and Palmieri et al. [23], the proposed technique uses a single lens arrangement for finding depth estimates. The depth estimates obtained from the proposed model approximate the ground truth with an RMSE of 0.05 when compared with recent works on stereo and light field imaging, exhibiting around 98.1% correlation.

In the current study we are confined to finding the depth estimate only when the object is on the camera axial line. In future, the work is likely to be extended so that the depth of a person can be estimated when the person is in a slanting position. Finding the depth of a person at long range, say up to 40 m, is also a task currently under way.

References

Chaudhuri S, Rajagopalan AN (2012) Depth from defocus: a real aperture imaging approach. Springer, Germany

Hansard M, Lee S, Choi O, Horaud RP (2012) Time-of-flight cameras: principles, methods and applications. Springer, Germany

Lefloch D, Nair R, Lenzen F, Schäfer H, Streeter L, Cree MJ, Kolb A (2013) Technical foundation and calibration methods for time-of-flight cameras. In Grzegorzek M, Theobalt C, Koch R, Kolb A (eds) Time-of-flight and depth imaging. Sensors, algorithms, and applications. Springer, Berlin, Heidelberg, pp 3–24

Li L (2014) Time-of-flight camera–an introduction. Technical white paper (SLOA190B)

Fuchs S (2010) Multipath interference compensation in time-of-flight camera images. In 2010 20th International Conference on Pattern Recognition (pp. 3583-3586). IEEE

Whyte O, Sivic J, Zisserman A, Ponce J (2012) Non-uniform deblurring for shaken images. Int J Comput Vision 98(2):168–186

Cabezas I, Padilla V, Trujillo M (2011) A measure for accuracy disparity maps evaluation. In San Martin C, Kim S-W (eds) Iberoamerican congress on pattern recognition. Springer, Berlin, Heidelberg, pp 223–231

Scharstein D, Szeliski R (2002) A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int J Comput Vision 47(1–3):7–42

Liu Y, Cao X, Dai Q, Xu W (2009) Continuous depth estimation for multi-view stereo. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (pp. 2121-2128). IEEE

Wahab MNA, Sivadev N, Sundaraj K (2011) Development of monocular vision system for depth estimation in mobile robot—Robot soccer. In 2011 IEEE Conference on Sustainable Utilization and Development in Engineering and Technology (STUDENT) (pp. 36-41).IEEE

Shan-shan C, Wu-heng Z, Zhi-lin F (2011) Depth estimation via stereo vision using Birchfield's algorithm. In 2011 IEEE 3rd International Conference on Communication Software and Networks (pp. 403-407). IEEE

Langmann B (2014) Depth Camera Assessment. In Wide Area 2D/3D Imaging (pp. 5-19) Springer Vieweg, Wiesbaden

Sarbolandi H, Lefloch D, Kolb A (2015) Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput Vision Image Underst 139:1–20

Achar S, Bartels JR, Whittaker WLR, Kutulakos KN, Narasimhan SG (2017) Epipolar time-of-flight imaging. ACM Transactions Gr (ToG) 36(4):1–8

Wang TC, Efros AA, Ramamoorthi R (2016) Depth estimation with occlusion modeling using light-field cameras. IEEE Transactions Pattern Anal Mach Intell 38(11):2170–2181

Monteiro NB, Marto S, Barreto JP, Gaspar J (2018) Depth range accuracy for plenoptic cameras. Comput Vision Image Underst 168:104–117

Pertuz S, Pulido-Herrera E, Kamarainen JK (2018) Focus model for metric depth estimation in standard plenoptic cameras. ISPRS J Photogramm Remote Sens 144:38–47

Kingslake R, Johnson RB (2009) Lens design fundamentals. Academic press, Cambridge

Hiscocks PD, Eng P (2011) Measuring luminance with a digital camera. Syscomp electronic design limited, 686

Conrad J (2007) Exposure metering relating subject lighting to film exposure

Chen Y, Wang X, Zhang Q (2016) Depth extraction method based on the regional feature points in integral imaging. Optik 127(2):763–768

Sánchez-Ferreira C, Mori JY, Farias MC, Llanos CH (2016) A real-time stereo vision system for distance measurement and underwater image restoration. J Braz Soc Mech Sci Eng 38(7):2039–2049

Palmieri L, Scrofani G, Incardona N, Saavedra G, Martínez-Corral M, Koch R (2019) Robust depth estimation for light field microscopy. Sensors 19(3):500

Acknowledgement

The research is supported by the Visvesvaraya Ph.D. scheme for Electronics & IT [Order No: PhD-MLA/4(16)/2014], a division of the Ministry of Electronics & IT, Govt of India. We gratefully acknowledge the association of SICA (Southern Indian Cinematographers), Chennai, for creating the dataset to validate the proposed theory. The authors would also like to thank the Student society, Department of Computer Applications, who have been with us and supported us in dataset creation.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alphonse, P.J.A., Sriharsha, K.V. Depth perception in single rgb camera system using lens aperture and object size: a geometrical approach for depth estimation. SN Appl. Sci. 3, 595 (2021). https://doi.org/10.1007/s42452-021-04212-4

DOI: https://doi.org/10.1007/s42452-021-04212-4