Abstract

The present work focuses on the use of low-cost equipment to monitor static and dynamic tests as an alternative to expensive commercial devices. First, we describe a procedure for camera calibration and image processing in order to establish a motion capture method that is less invasive than other common instrumentation, such as strain gauges, and much cheaper than commercial tracking systems. Next, two cameras and one personal computer (with an image acquisition board) are used as the monitoring apparatus for tracking the movements of a flexible cable under harmonic excitation at its top end. The experimental results are then compared with numerical simulations, showing fairly good agreement and the same level of precision as that obtained with one of the most widely used motion capture systems in laboratories. The proposed experimental methodology correctly identifies the displacements, frequencies, and dynamic geometric behavior of the flexible model in all directions. Although commercial solutions are faster, since data processing and camera calibration take place in real time, the suggested equipment is far more affordable for small industries, educational institutions and projects with restricted budgets in kinesiology, physiology, dentistry, facial recognition and mechanics, among others.



1 Introduction

In recent decades, many areas of knowledge have developed in a way unprecedented in the history of science, partly due to technological advances and the increased processing power of computers. One of these areas is Digital Image Processing (DIP).

In the DIP field, several algorithms have been developed to extract information from images (or frames) obtained by digital cameras (or camcorders), as discussed by Gonzalez and Woods in [1], Ekstrom in [2], and Ravi and Ashokkumar in [3], to name just a few important works.

In addition to DIP, camera calibration is a mandatory procedure, performed to determine a mathematical relation between the coordinate systems of the image and of the object of interest. There are many calibration techniques, some of which are presented and compared by Zollner and Sablatnig in [4], Kwon in [5], Hieronymus in [6], and Abdel-Aziz et al in [7], for example.

Among camera calibration methods, Zollner and Sablatnig [4] note that the Direct Linear Transformation (DLT), a technique proposed by Abdel-Aziz and Karara (refer to [7]), is efficient, converges well for multi-view purposes and provides high accuracy in 3D reconstruction; for these reasons, it is the method used in the present work.

Optical monitoring systems have the advantage of being minimally invasive and serve as an alternative to classic instrumentation in numerous areas of knowledge, such as Robotics, as presented by Kim and Kweon in [8]; industrial applications, as cited by D’Emilia and Gasbarro in [9]; and traffic applications, as studied by Guiducci in [10], Tummala in [11], and Feng et al in [12].

Cattaneo et al [13] emphasize the importance of camera calibration and distortion correction when using cameras for surveillance. Optical monitoring is also extensively used in Biomechanics, e.g., for performance improvement and injury prevention, as studied by Spörri in [14].

In addition to traditional applications in monitoring experiments, digital cameras have proved useful in Education, mainly to measure the attention and participation of students in pedagogical activities, as investigated by Neves et al in [15] and Anh et al in [16].

More recently, camera calibration has also been addressed with Machine Learning and Deep Learning techniques, as in Nichols [17] and Bogdan et al [18], respectively.

Underwater applications are also feasible, using either commercial or in-house solutions, as studied by Silvatti et al in [19] and Amarante in [20], respectively.

All of these numerous and varied applications form a non-exhaustive list of the possible uses of optical monitoring, based on DIP.

However, although optical instrumentation applications have increased considerably in the last two decades, their relatively high acquisition cost makes them unaffordable for many sectors, such as schools, small and medium-sized companies, and independent professionals and researchers.

For comparison purposes, the equipment used in this work cost less than US$ 400 and a commercial optical monitoring system, with the same number of cameras and a built-in software, costs up to US$ 60,000.

In this paper, we propose the use of digital image processing and camera calibration techniques to track targets attached to an object of interest, using low-cost equipment and a numerical routine developed specifically for this work. To validate the method, experimental tests are performed to determine the static geometric configuration and the dynamic response of a flexible tube hanging under its own weight.

The dynamics of flexible cables has several practical motivations, such as electrical wiring under environmental loads or marine risers, which are tubes specialized in offshore oil and gas production. This application also has some particularities, such as the cable's elasticity, the importance of bending stiffness at both ends, and the different levels of motion along the suspended length, which challenge the accuracy of the proposed methodology.

Using low-cost devices together with proper numerical procedures to monitor tests has some advantages over commercial solutions: the equipment is plug and play, customizable, and easy to transport and store; it can also be used as a teaching resource and, above all, it makes high-precision monitoring systems more accessible for various purposes.

To support the present work, the next section introduces the theoretical background and the methodology used in the experiments and analyses. Section 3 presents the materials, methods and equipment used in the tests. Section 4 then discusses the experimental results and compares them with those obtained with a commercial optical monitoring system. Finally, Section 5 draws the conclusions based on the test results.

2 Theoretical background and methodology

Digital Image Processing (DIP) is a set of interconnected procedures whose inputs and outputs are images, acquired with a physical device sensitive to a particular band of the electromagnetic spectrum.

The amount of light captured by the device’s sensor is controlled by the camera’s aperture and shutter speed. Other important factors are the choice of lens and its focal length, which determine the magnification or reduction factor that can be obtained.

However, lenses may cause distortions in the image, especially near its borders, where straight lines appear bowed (Fig. 1a). To eliminate these deformations, the non-linear automatic correction method developed by Ojanen in [21] is applied, in which the perspective in the images is compensated by modeling its effects through a pinhole camera and varying the parameters of the latter. Next, two mappings are applied, translating and rotating the image pixels, which results in an undistorted image (Fig. 1b).
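Ojanen's procedure [21] is not reproduced here. As a minimal sketch of what an undistortion step does, the snippet below swaps in a different, widely used technique: the two-coefficient radial (Brown) distortion model, \(x_d = x_u (1 + k_1 r^2 + k_2 r^4)\), applied to pixel coordinates centered on an assumed distortion center and normalized by a hypothetical scale. The coefficients k1 and k2 are illustrative values, not calibrated ones, and the function names are hypothetical.

```python
import numpy as np

def distort(points, center, scale, k1, k2):
    """Apply the assumed radial model to undistorted pixel coordinates (N x 2)."""
    p = (np.asarray(points, dtype=float) - center) / scale  # normalized coordinates
    r2 = np.sum(p ** 2, axis=1, keepdims=True)
    return center + scale * p * (1.0 + k1 * r2 + k2 * r2 ** 2)

def undistort(points, center, scale, k1, k2, iterations=50):
    """Invert the radial model by fixed-point iteration.

    Starting from x_u = x_d, repeat x_u <- x_d / (1 + k1 r_u^2 + k2 r_u^4);
    for moderate distortion, as in the example below, the map is a contraction.
    """
    pd = (np.asarray(points, dtype=float) - center) / scale
    pu = pd.copy()
    for _ in range(iterations):
        r2 = np.sum(pu ** 2, axis=1, keepdims=True)
        pu = pd / (1.0 + k1 * r2 + k2 * r2 ** 2)
    return center + scale * pu

# Hypothetical 640 x 480 frame with barrel distortion (k1 < 0).
center = np.array([320.0, 240.0])
pts = np.array([[320.0, 240.0], [500.0, 300.0], [100.0, 50.0]])
bent = distort(pts, center, 320.0, k1=-0.2, k2=0.05)
straight = undistort(bent, center, 320.0, k1=-0.2, k2=0.05)
```

Points far from the center move the most, which is why bowing is most visible near the image borders.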

Next, the image must be segmented, that is, divided so as to distinguish two or more objects (or regions), based on discontinuities (edges and borders) and similarities between gray levels (regions).

The main objective of image segmentation here is pattern recognition, separating the foreground from the rest of the picture (background).

In a video, the background is a collection of pixels that does not change significantly throughout the frame sequence. Subjective segmentation methods, based on ad hoc choices made by an observer, lack generality; automatic techniques, in turn, are appropriate only for images whose gray-scale histograms have particular characteristics.

In the present work, reflective targets are used to make foreground and background easy to differentiate, since sharp changes in the image histogram may indicate object boundaries or patterns, as illustrated in the example of Fig. 2b.

A common and efficient thresholding procedure is Otsu’s method, which treats the histogram of a grayscale image as a discrete probability density function and determines the threshold k that maximizes the between-class variance, see [1].
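Otsu’s criterion can be sketched in a few lines of NumPy, assuming an 8-bit grayscale frame stored as a 2-D array; the function names are illustrative, not part of the authors’ routine. With \(\omega(k)\) the probability of the class of gray levels up to k, \(\mu(k)\) its cumulative first moment and \(\mu_T\) the global mean, the between-class variance is \(\sigma_B^2(k) = [\mu_T\,\omega(k) - \mu(k)]^2 / \{\omega(k)[1-\omega(k)]\}\), as in [1].

```python
import numpy as np

def otsu_threshold(gray):
    """Return the Otsu threshold k of an 8-bit grayscale image."""
    hist = np.bincount(np.asarray(gray, dtype=np.uint8).ravel(), minlength=256)
    p = hist / hist.sum()                   # discrete probability density
    omega = np.cumsum(p)                    # probability of class {0..k}
    mu = np.cumsum(p * np.arange(256))      # cumulative first moment
    mu_t = mu[-1]                           # global mean gray level
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = np.where(denom > 0, (mu_t * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b2))

def binarize(gray, k):
    """Pixels brighter than k become foreground (1); the rest background (0)."""
    return (np.asarray(gray) > k).astype(np.uint8)
```

For a dark background with bright reflective targets, any threshold between the two gray-level populations separates them; Otsu’s method selects one automatically from the histogram alone.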

Next, the image is binarized; however, noise may still remain, especially the so-called “salt and pepper” (impulsive) noise, characterized by small clusters of pixels with inverted colors, evident in Fig. 2c.

To attenuate impulsive noise, the median filter is recommended, since it does not blur the edges of objects. For each pixel p(u, v) of the image, the median of the values of its C-connected neighbors, med(u, v, C), is calculated; a new image is then built by replacing p(u, v) with med(u, v, C), as shown in Fig. 3. According to [1], C is usually equal to 4, 6 or 8, depending on the positions and amount of noise in the original image.
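A minimal NumPy sketch of the median filter follows. It assumes a 2-D image array, edge replication at the borders and a neighborhood that includes the pixel itself, so that 4- and 8-connectivity give 5 and 9 values, respectively; the 6-connected case is omitted, and the names are hypothetical.

```python
import numpy as np

# Offsets (du, dv) of the C-connected neighbors on a square pixel grid.
NEIGHBORS = {
    4: [(-1, 0), (1, 0), (0, -1), (0, 1)],
    8: [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)],
}

def median_filter(img, connectivity=8):
    """Replace each p(u, v) by the median of itself and its C-connected neighbors."""
    img = np.asarray(img)
    padded = np.pad(img, 1, mode="edge")  # replicate borders (assumption)
    rows, cols = img.shape
    # Stack the image with one shifted copy per neighbor offset.
    layers = [img]
    for du, dv in NEIGHBORS[connectivity]:
        layers.append(padded[1 + du:1 + du + rows, 1 + dv:1 + dv + cols])
    return np.median(np.stack(layers), axis=0).astype(img.dtype)
```

An isolated “salt” pixel has at most one bright value among its 5 or 9 samples, so the median discards it, while pixels inside a target keep their value.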

After a denoised image is obtained, such as the one shown in Fig. 2d, the next step consists of calibrating the cameras to obtain their intrinsic and extrinsic parameters, as well as the transformation between the image and object coordinate systems. Zollner and Sablatnig in [4] compare and discuss the main camera calibration methods. In the present paper, the Direct Linear Transformation (DLT) developed in [7] is used.

The DLT method assumes the collinearity of the optical center of the camera, N = \((u_{0}, v_{0})\), a point of interest \(O=(x, y, z)\) on the object, and its corresponding position \(I = (u, v)\) in the image (refer to Fig. 4). This relation may be expressed by an adequate combination of translation and rotation of (x, y, z), given by Eq. 1, as formulated by Kwon in [5].

Equations 1a and 1b contain 11 unknown parameters (\(L_{1},\ldots,L_{11}\)). Since each control point, with known coordinates in both systems, provides two equations, at least 6 control points are required to calculate these DLT parameters. Generally, a rigid reference structure is used for this purpose (Fig. 5, at left).

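These equations are then solved numerically, often by the Least Squares Method (LSM): once multiplied by their common denominator, Eqs. 1a and 1b become linear in \(L_{1},\ldots,L_{11}\), so that the control points yield an overdetermined linear system. Thus, the greater the number of control points, the better the calibration accuracy.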

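\[ u = \frac{L_{1}x + L_{2}y + L_{3}z + L_{4}}{L_{9}x + L_{10}y + L_{11}z + 1} \tag{1a} \]

\[ v = \frac{L_{5}x + L_{6}y + L_{7}z + L_{8}}{L_{9}x + L_{10}y + L_{11}z + 1} \tag{1b} \]

where \(L_{1},\ldots ,L_{11}\) are the so-called DLT calibration parameters, distinct for each camera.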

Image and object coordinate systems. Source: adapted from [5]

Determining the DLT parameters is the last step of camera calibration. After that, the calibrated cameras can be used to track the motion of a body, as long as they are not repositioned or moved in any way.
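The calibration step can be sketched as a linear least-squares problem. Multiplying Eqs. 1a and 1b by their denominator gives, for each control point, two equations linear in \(L_{1},\ldots,L_{11}\); stacking them yields an overdetermined system solved here with `numpy.linalg.lstsq`. This is a minimal sketch: the function names are hypothetical, and the control points are assumed not to be coplanar, since a planar set does not determine all 11 parameters.

```python
import numpy as np

def dlt_calibrate(object_pts, image_pts):
    """Least-squares estimate of the 11 DLT parameters of one camera.

    object_pts: (n, 3) control-point coordinates (x, y, z), n >= 6, not coplanar.
    image_pts:  (n, 2) matching image coordinates (u, v), in pixels.
    """
    X = np.asarray(object_pts, dtype=float)
    U = np.asarray(image_pts, dtype=float)
    n = X.shape[0]
    if n < 6:
        raise ValueError("at least 6 control points are required")
    A = np.zeros((2 * n, 11))
    b = np.zeros(2 * n)
    for i, ((x, y, z), (u, v)) in enumerate(zip(X, U)):
        # Eq. 1a times its denominator: L1 x + L2 y + L3 z + L4 - u (L9 x + L10 y + L11 z) = u
        A[2 * i] = [x, y, z, 1, 0, 0, 0, 0, -u * x, -u * y, -u * z]
        # Eq. 1b times its denominator: L5 x + L6 y + L7 z + L8 - v (L9 x + L10 y + L11 z) = v
        A[2 * i + 1] = [0, 0, 0, 0, x, y, z, 1, -v * x, -v * y, -v * z]
        b[2 * i], b[2 * i + 1] = u, v
    L, *_ = np.linalg.lstsq(A, b, rcond=None)
    return L  # L[0] is L1, ..., L[10] is L11

def dlt_project(L, object_pts):
    """Evaluate Eqs. 1a and 1b: object coordinates -> image coordinates."""
    X = np.atleast_2d(np.asarray(object_pts, dtype=float))
    den = X @ L[8:11] + 1.0
    u = (X @ L[0:3] + L[3]) / den
    v = (X @ L[4:7] + L[7]) / den
    return np.column_stack([u, v])
```

Reprojecting the control points with `dlt_project` and comparing against the measured (u, v) gives a simple residual check of the calibration quality.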

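Determining the object coordinates from the image coordinates requires isolating x, y and z in Eqs. 1a and 1b. Since each camera provides only two equations (refer to Eq. 2) and there are three unknowns, using more than one camera is mandatory.

\[
\begin{bmatrix}
L_{1}^{(1)} - L_{9}^{(1)} u^{(1)} & L_{2}^{(1)} - L_{10}^{(1)} u^{(1)} & L_{3}^{(1)} - L_{11}^{(1)} u^{(1)} \\
L_{5}^{(1)} - L_{9}^{(1)} v^{(1)} & L_{6}^{(1)} - L_{10}^{(1)} v^{(1)} & L_{7}^{(1)} - L_{11}^{(1)} v^{(1)} \\
\vdots & \vdots & \vdots \\
L_{1}^{(m)} - L_{9}^{(m)} u^{(m)} & L_{2}^{(m)} - L_{10}^{(m)} u^{(m)} & L_{3}^{(m)} - L_{11}^{(m)} u^{(m)} \\
L_{5}^{(m)} - L_{9}^{(m)} v^{(m)} & L_{6}^{(m)} - L_{10}^{(m)} v^{(m)} & L_{7}^{(m)} - L_{11}^{(m)} v^{(m)}
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \end{bmatrix}
=
\begin{bmatrix}
u^{(1)} - L_{4}^{(1)} \\ v^{(1)} - L_{8}^{(1)} \\ \vdots \\ u^{(m)} - L_{4}^{(m)} \\ v^{(m)} - L_{8}^{(m)}
\end{bmatrix}
\tag{2}
\]

In Eq. 2, superscript indices in brackets refer to each individual camera, and m is the number of available cameras. Written compactly as \(\mathbf{A}\,\mathbf{X} = \mathbf{B}\), with \(\mathbf{X} = (x, y, z)^{T}\), this system of 2m equations in three unknowns is solved by least squares, \(\mathbf{X} = (\mathbf{A}^{T}\mathbf{A})^{-1}\mathbf{A}^{T}\mathbf{B}\).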

As with the number of control points, the greater the number of cameras, the more accurate the three-dimensional reconstruction.
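The reconstruction of Eq. 2 for a single target can be sketched in the same way: each calibrated camera contributes two linear equations in (x, y, z), and the stacked 2m x 3 system is solved by least squares. Function names and the argument layout are illustrative assumptions.

```python
import numpy as np

def dlt_reconstruct(L_list, uv_list):
    """Recover (x, y, z) of one target from m >= 2 calibrated cameras (Eq. 2).

    L_list:  sequence of m arrays holding the 11 DLT parameters of each camera.
    uv_list: sequence of m (u, v) image coordinates of the same target.
    """
    if len(L_list) < 2:
        raise ValueError("at least two cameras are needed for three unknowns")
    rows, rhs = [], []
    for L, (u, v) in zip(L_list, uv_list):
        L1, L2, L3, L4, L5, L6, L7, L8, L9, L10, L11 = L
        # Two rows of matrix A and two entries of vector B per camera.
        rows.append([L1 - L9 * u, L2 - L10 * u, L3 - L11 * u])
        rows.append([L5 - L9 * v, L6 - L10 * v, L7 - L11 * v])
        rhs.extend([u - L4, v - L8])
    xyz, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return xyz
```

Adding a camera only appends two rows to the system, which is why extra views tend to average out digitization errors.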

3 Materials and methods

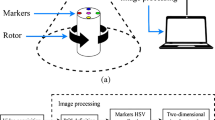

Based on the theoretical background introduced in the previous section, Fig. 6 illustrates the sequence of procedures used in this work to optically track moving objects.

The reference frame shown in Fig. 5, at left, was built for the camera calibration procedures. A fixed XYZ coordinate system was positioned so that its origin coincided with the center of the leftmost black circle located on the ground, as shown on the right of that figure, where the image is already undistorted, binarized and filtered. Note that the coordinates (X, Y, Z) of each black circle are known.

For both the calibration procedures and the static assessment, videos approximately 1s long were recorded by two digital CCTV (closed-circuit television) cameras with 600-line resolution in black-and-white mode, automatic gain control, a 1/3” color CCD (charge-coupled device) sensor, and adjustable focus (2.8mm to 12.0mm).

These cameras were mounted on fixed rigid tripods and connected to a PC through a 16-channel video board with a maximum acquisition rate of 120 frames per second (fps), as shown on the right of Fig. 7. From the processed calibration images, the coordinates (u, v) of the center of each white cluster were determined.
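Locating the center of each white cluster can be sketched as connected-component labeling followed by a centroid computation. The snippet below assumes a binary mask (after thresholding and median filtering), 8-connectivity, u as the column index and v as the row index, and a hypothetical `min_pixels` parameter that discards leftover noise; it is illustrative, not the routine used in this work.

```python
from collections import deque

import numpy as np

def cluster_centroids(mask, min_pixels=1):
    """Centroids (u, v) of the 8-connected foreground clusters of a binary image."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros_like(mask)
    rows, cols = mask.shape
    centroids = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        # Breadth-first flood fill collecting every pixel of one cluster.
        queue, pixels = deque([(r0, c0)]), []
        seen[r0, c0] = True
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
        if len(pixels) >= min_pixels:
            pr, pc = np.array(pixels, dtype=float).T
            centroids.append((pc.mean(), pr.mean()))  # (u, v) = (column, row)
    return centroids
```

Averaging over all pixels of a cluster gives sub-pixel centers, which matters when target displacements are only a few pixels wide.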

At this point, the coordinates (X, Y, Z), in meters, and (u, v), in pixels, are known, and Equations 1a and 1b may be used to determine the DLT parameters by the method of least squares, which completes the camera calibration.

Next, each frame of the videos of the flexible cable is processed by the DIP procedures described in the previous section, and the coordinates (u, v) of each object of interest are determined. Applying Equation 2 then provides the corresponding (x, y, z) coordinates (3D reconstruction). This procedure is repeated for each target, frame by frame.

A commercial motion capture system (QTM, for short) with two cameras (Fig. 7, at left) was also used for comparison purposes. Each camera of the QTM system has the following features: adjustable threshold, infrared spectrum range, a complementary metal-oxide semiconductor (CMOS) sensor (640 x 320 pixels), a maximum acquisition rate of 250fps, and adjustable aperture and focus.

The commercial system requires reflective targets (small, lightweight spheres, shown in Fig. 7, at right) for both calibration and motion tracking. The reflective properties of these targets naturally contrast with dark backgrounds, so that the CCTV cameras are also able to identify them once the captured images are digitally processed.

In the experimental tests, seven reflective targets were attached to the flexible model in the vicinity of its touchdown point, the region most challenging to track due to its interaction with the rigid ground.

To improve contrast, the flexible tube model (filled with fine sand to increase its weight) and the background were painted black. For identification purposes, the leftmost target, closest to the ground, is “target #1”, the adjacent one to its right is “target #2”, and so on, so that the highest one, on the right, is “target #7”; refer to Fig. 7, at right.

It is good practice to use a calibration structure large enough to ensure that all tracked motion lies inside the calibrated control volume (in this work, 0.80m x 0.12m x 0.50m; see dimensions in Fig. 7, at left). A valid alternative is to use a smaller movable structure and take calibration frames at different positions, so that the control volume condition still holds. Moreover, [5] also recommends not positioning the cameras facing each other (or not using such a combination in the reconstruction), to avoid errors in the digitization process.

After camera calibration, the top end of the flexible tube was lifted and attached to an excitation device, so that the vertical and horizontal projections of its suspended length were 4.85m and 2.30m, respectively, as illustrated in Fig. 7, at left. In this configuration, the top angle is about \(10^{\circ }\) with respect to the vertical. For better results, the vertical plane of the cable was aligned with the x-axis, which is also recommended in [5] as a DLT best practice.
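As a rough consistency check of the quoted top angle, the sketch below assumes an ideal inextensible catenary \(z = a[\cosh(x/a) - 1]\) with a horizontal tangent at the touchdown point, ignoring bending stiffness, and solves for the catenary parameter a from the 2.30m horizontal and 4.85m vertical projections by bisection. The model and function names are assumptions of this sketch, not the analysis performed in this work.

```python
import math

def catenary_parameter(span, height, lo=0.1, hi=100.0, tol=1e-12):
    """Solve a * (cosh(span / a) - 1) = height for the catenary parameter a.

    The left-hand side decreases monotonically as a grows, so bisection on
    [lo, hi] converges; lo is kept away from zero to avoid overflow in cosh.
    """
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid * (math.cosh(span / mid) - 1.0) > height:
            lo = mid  # curve rises too much over the span: increase a
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)

def top_angle_from_vertical(span, height):
    """Catenary parameter and top-end angle (degrees, measured from the vertical)."""
    a = catenary_parameter(span, height)
    slope = math.sinh(span / a)  # dz/dx at the top end
    return a, math.degrees(math.atan(1.0 / slope))

# Projections of the suspended length quoted in the text (meters).
a, theta = top_angle_from_vertical(span=2.30, height=4.85)
print(f"a = {a:.3f} m, top angle = {theta:.1f} deg from the vertical")
```

Under these assumptions the top angle comes out at roughly 9°, of the same order as the reported value of about 10°; the ideal model neglects the bending stiffness and extensibility of the real tube.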

At this point, it is worth mentioning some practical applications of this launching geometry: in offshore production, it corresponds to a usual static configuration of a steel catenary riser (SCR), often used by semi-submersible, tension leg and spar platforms; it also describes electric supply cables and other hanging cables, tunnels and arch structures, among others.

Furthermore, this shape provides stability and flexibility, distributes forces and tensions well, and gives strength to structures. Such examples are often used by teachers as a motivation to study the catenary.

The experiments were conducted at the Numerical Offshore Tank facilities (University of São Paulo) using a silicone tube filled with sand, whose main properties are listed in Table 1.

The top end of the model was connected by bearings to a servo-controlled mechanism capable of imposing displacements to the connection point in three different directions.

Circular motions were imposed on the top end of the model, in the vertical plane (Fig. 7), for pairs of amplitude (20mm, 50mm and 100mm) and period (0.8s, 1.0s and 1.25s). Each condition was repeated 3 times.

Since the test with the shortest period and the lowest amplitude is the most challenging for camera-based monitoring, for the sake of brevity only this set of static and dynamic results is presented in the next section.

4 Experimental results and discussions

The results are divided into static and dynamic ones. After camera calibration, the experiments were carried out, always with the same launch condition, defined by the horizontal (xy-plane) and vertical (z-direction) projections in the local coordinate system of Fig. 7, at left.

4.1 Static evaluation

As a first test, static positions were determined from a 1s video recorded by both camera systems at 30fps. The results are shown in Fig. 8, in which asterisks correspond to the targets identified by the QTM system and circles to those recognized by the DIP techniques presented herein. In addition, Fig. 8 shows second-degree polynomial approximations for each set of identified targets, as well as a catenary fit, all obtained by LSM.

Static evaluation, flexible model at \(\theta _{t} \approx 10^{\circ }\) with respect to the vertical, launched from 4.85m height, only under the action of its own weight. “2nd poly” refers to the second-degree polynomial approximation, DIP and QTM represent the results of the Digital Image Processing techniques and of the commercial system, respectively, and “catenary” is the theoretical curve

In an academic context, for high school or undergraduate students, second-degree polynomial approximations may be used to visually demonstrate the inadequacy of this geometry as the solution to the hanging cable problem, since it is very similar in appearance to the actual answer: a catenary (see [22], for example).
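Both curves of Fig. 8 can be fitted by least squares, as sketched below. The parabola uses `numpy.polyfit`; the catenary \(z = z_0 + a[\cosh((x - x_0)/a) - 1]\), whose parametrization by its lowest point \((x_0, z_0)\) and parameter a is an assumption of this sketch, is fitted by Gauss-Newton iterations started from the parabola, whose vertex and curvature approximate the catenary near its lowest point. The solver actually used in this work is not specified, so this is only one possible choice.

```python
import numpy as np

def fit_parabola(x, z):
    """Second-degree polynomial fit z = c2 x^2 + c1 x + c0 (returns [c2, c1, c0])."""
    return np.polyfit(x, z, 2)

def fit_catenary(x, z, iterations=50, tol=1e-12):
    """Least-squares fit of z = z0 + a (cosh((x - x0) / a) - 1) by Gauss-Newton."""
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    c2, c1, c0 = fit_parabola(x, z)
    x0 = -c1 / (2.0 * c2)            # parabola vertex as initial lowest point
    z0 = c0 - c1 ** 2 / (4.0 * c2)
    a = 1.0 / (2.0 * c2)             # curvature 2 c2 ~ 1 / a near the vertex
    for _ in range(iterations):
        s = (x - x0) / a
        residual = z - (z0 + a * (np.cosh(s) - 1.0))
        # Jacobian columns: derivatives of the model w.r.t. z0, x0 and a.
        J = np.column_stack([np.ones_like(s), -np.sinh(s), np.cosh(s) - 1.0 - s * np.sinh(s)])
        step, *_ = np.linalg.lstsq(J, residual, rcond=None)
        z0, x0, a = z0 + step[0], x0 + step[1], a + step[2]
        if np.max(np.abs(step)) < tol:
            break
    return a, x0, z0
```

With seven targets, as in the tests, the catenary fit has three unknowns and four redundant observations, which leaves room to judge the quality of the fit through its residuals.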

Moreover, as investigated by Irvine and Caughey in [23], parabolic profiles may accurately describe the geometry of suspended cables as long as the sag-to-span ratio is about 1:8 or less, which can be verified near the origin in Fig. 8.
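To put the 1:8 criterion in numbers, the sketch below compares, for a symmetric cable with span l and sag d, the catenary \(z = a[\cosh(x/a) - 1]\) and the parabola \(z = 4 d x^2 / l^2\) through the same lowest point and supports, and reports the largest vertical gap as a fraction of the sag. This is an illustrative geometric comparison under idealized assumptions (level supports, inextensible cable), not the analysis of [23].

```python
import math

def catenary_for_sag(span, sag, tol=1e-14):
    """Catenary parameter a such that a * (cosh(span / (2 a)) - 1) = sag (bisection)."""
    lo, hi = span / 100.0, 1000.0 * span  # sag is too large at lo and too small at hi
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid * (math.cosh(span / (2.0 * mid)) - 1.0) > sag:
            lo = mid  # too much sag: increase a
        else:
            hi = mid
        if hi - lo < tol * hi:
            break
    return 0.5 * (lo + hi)

def max_parabola_gap(sag_to_span, samples=2001):
    """Largest catenary-parabola vertical gap, as a fraction of the sag."""
    span, sag = 1.0, sag_to_span
    a = catenary_for_sag(span, sag)
    gap = 0.0
    for i in range(samples):
        x = -span / 2.0 + span * i / (samples - 1)
        z_cat = a * (math.cosh(x / a) - 1.0)
        z_par = 4.0 * sag * x ** 2 / span ** 2
        gap = max(gap, abs(z_cat - z_par))
    return gap / sag

for ratio in (1 / 20, 1 / 8, 1 / 4):
    print(f"sag/span = {ratio:.3f}: max gap = {100 * max_parabola_gap(ratio):.2f}% of the sag")
```

For a 1:8 ratio the gap is about 0.5% of the sag and it grows quickly for deeper sags, which is consistent with the parabola being adequate only near the flat region around the touchdown point.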

More broadly, the results from the proposed methodology and from the QTM system show quite small differences, which can be attributed to lighting variations, device sensitivity or calibration uncertainties. The greatest difference measured in target positions is less than 1.0% horizontally (relative to the model projection in the xy-plane) and about 1.3% vertically (flexible tube projection in the yz-plane).

4.2 Dynamic results

Figure 9 compiles the maximum and minimum displacement amplitudes of targets #1, #4 and #7 for all trials of the experiment excited with an amplitude of 20mm and a period of 0.8s, corresponding to a frequency of 1.25Hz. Solid lines represent the calibration uncertainties added to the corresponding amplitudes measured by the proposed methodology (dotted lines).

The figure shows fairly good agreement between the results of both monitoring systems, even considering calibration and tracking uncertainties. Moreover, the greater the displacement amplitude, the smaller the relative errors and the greater the precision of the proposed method.

Still referring to Fig. 9, note that the greatest uncertainties in the 3D reconstruction are associated with the y-direction, in which the displacement amplitudes are smaller, since the flexible model was excited only in the xz-plane. Thus, considering both solid and dashed lines, one may conclude that the two camera systems are in fairly good agreement with each other.

Results are also presented as displacement time series of the targets (in the x-, y- and z-directions), on the left of Figs. 10 to 12, together with the respective power spectral densities (PSD), on the right of the same figures, allowing analysis in both the time and frequency domains.
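The PSD estimator and sampling rate behind Figs. 10 to 12 are not specified here. As a minimal sketch under stated assumptions (uniform sampling at a hypothetical rate fs, a Hann-windowed periodogram rather than, e.g., Welch averaging), the dominant frequency of a displacement series can be located as follows; for the 0.8s excitation period, the main peak is expected at 1.25Hz.

```python
import numpy as np

def one_sided_psd(signal, fs):
    """Hann-windowed one-sided periodogram of a uniformly sampled real signal.

    fs is the sampling rate in Hz; returns frequencies (Hz) and the PSD in
    (signal units)^2 / Hz.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove the static offset
    w = np.hanning(len(x))
    spectrum = np.fft.rfft(x * w)
    psd = np.abs(spectrum) ** 2 / (fs * np.sum(w ** 2))
    # Double every bin except DC (and Nyquist, which exists only for even lengths).
    if len(x) % 2 == 0:
        psd[1:-1] *= 2.0
    else:
        psd[1:] *= 2.0
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs, psd

def dominant_frequency(signal, fs):
    """Frequency (Hz) of the highest PSD peak."""
    freqs, psd = one_sided_psd(signal, fs)
    return freqs[np.argmax(psd)]
```

The frequency resolution is fs/N, the inverse of the record length, so records spanning many excitation cycles are needed to separate the excitation peak from nearby modal peaks.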

For target #1, both the proposed methodology and the QTM system provided consistent results, especially in the z-direction, due to its larger displacement amplitudes (Fig. 10). Motions along the x- and y-directions are negligible, since their values are close to the calibration uncertainties (0.2mm for the QTM system, and as shown in Fig. 9 for the methodology of this work). Although this is the most difficult target to track, the results obtained are considered quite good.

Regarding Fig. 10, at right, note that there are three peaks in the power spectral density: the most prominent is related to the excitation frequency, while the others correspond to the 1st and 3rd vibration modes of the model (associated with relatively low energy levels).

Table 2 presents the first five natural frequencies of the model, calculated using the analytical formulation developed by Pesce et al in [24]. In that work, the authors determined a closed-form solution for the eigenvalue problem of a catenary cable, obtained by a classical perturbation technique (the WKB method). Note that the first three vibration modes were excited, especially in the x- and z-directions, for all targets (refer to the power spectral density charts on the right of Figs. 10 to 12).

For targets #4 and #7 (Figs. 11 and 12, respectively), the displacement time series and the respective PSD from both systems are even more consistent. The farther a target is from the ground, the greater its displacements and, therefore, the greater the accuracy of the measurements, both qualitatively and quantitatively, as is especially evident for the displacements of target #7 in the x- and z-directions.

As the harmonic excitation was imposed only in the xz-plane, the motion amplitudes along the y-direction are much smaller, so that the respective differences between the two monitoring systems are not of practical concern. Furthermore, targets closer to the ground showed displacements of the same order as the calibration uncertainties in the direction perpendicular to the vertical plane that statically contains the model.

5 Conclusions

Digital Image Processing and camera calibration have been increasingly used to monitor experiments, a task usually performed by commercial systems or conventional instrumentation. This work proposed easily implementable equipment for target tracking, favoring accuracy and low cost, for situations in which cost is an important constraint.

Tests were monitored both by a commercial system with built-in software and by an in-house system of two digital cameras connected to a computer. Unlike in the commercial system, camera calibration is performed offline in the proposed method, which takes longer to obtain the data for a single test; however, serial or repeated experiments under the same camera calibration dilute this time difference, so that this initial disadvantage becomes insignificant.

Regarding the experimental tests, the in-house system was able to adequately monitor the statics and dynamics of the flexible tube. The results of both systems show fairly good agreement with each other, particularly in the x- and z-directions, since the model was launched in this plane and the circular harmonic motions at the top were restricted to those directions. This agreement and the good accuracy of the acquired results were verified for both displacements and frequencies.

The proposed method and equipment proved effective for monitoring a flexible structure under relatively severe conditions (small amplitude and high frequency), both qualitatively and quantitatively.

Although the methods employed in this work are relatively well known and commercial systems are already widely used in academia for monitoring experiments, it is also the role of scientists to develop, disseminate and make available techniques that are important both for industry and for teachers seeking to engage young students in STEM courses. In this sense, this article presents a low-cost and highly accurate solution for experimental monitoring, whose practical applicability extends beyond academia and may assist a large number of projects in the most diverse areas of knowledge.

References

Gonzalez RC, Woods RE (2002) Digital Image Processing. Prentice Hall, Upper Saddle River, NJ

Ekstrom MP (2012) Digital image processing techniques (V.2). Academic Press, Cambridge

Ravi P, Ashokkumar A (2017) Analysis of Various Image Processing Techniques. Int J Adv Netw Appl 8(5):86–89

Zollner H, Sablatnig R (2012) Comparison of methods for geometric camera calibration using planar calibration targets. Int Arch Photogramm Remote Sens Spat Inf Sci. https://doi.org/10.5194/isprsarchives-XXXIX-B5-595-2012

Kwon YH (2008) Measurement for deriving kinematic parameters: numerical methods. In: Hong Y, Bartlett R (eds) Handbook of Biomechanics and Human Movement Science. Routledge, Abingdon, pp 156–181

Hieronymus J (2012) Comparison of methods for geometric camera calibration. In: Proceedings of the 22nd International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 39(B5), 595-599

Abdel-Aziz YI, Karara HM, Hauck M (2015) Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Photogramm Eng Remote Sens. https://doi.org/10.14358/PERS.81.2.103

Kim JS, Kweon IS (2001) A new camera calibration method for robotic applications. In: Proceedings of the International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the Next Millennium, vol 2, pp 778-783. IEEE. https://doi.org/10.1109/IROS.2001.976263

D’Emilia G, Di Gasbarro D (2017) Review of techniques for 2D camera calibration suitable for industrial vision systems. In Journal of Physics: Conference Series (Vol. 841, No. 1, p. 012030). IOP Publishing

Guiducci A (2000) Camera calibration for road applications. Comput Vis Image Underst 79(2):250–266

Tummala GK (2019) Automatic Camera Calibration Techniques for Collaborative Vehicular Applications. PhD Thesis, The Ohio State University

Feng W, Zhang S, Liu H, Yu Q, Wu S, Zhang D (2020) Unmanned aerial vehicle-aided stereo camera calibration for outdoor applications. Opt Eng 59(1):014110

Cattaneo C, Mainetti G, Sala R (2015) The importance of camera calibration and distortion correction to obtain measurements with video surveillance systems. Journal of Physics: Conference Series (Vol. 658, No. 1, p. 012009). IOP Publishing, https://doi.org/10.1088/1742-6596/658/1/012009

Spörri J (2012) Biomechanical aspects of performance enhancement and injury prevention in alpine ski racing. PhD Thesis. University of Zurich

Neves AJR, Canedo D, Trifan A (2018) Monitoring Students’ Attention in a Classroom Through Computer Vision. International Conference on Practical Applications of Agents and Multi-Agent Systems, Springer, Cham 371–378. https://doi.org/10.1007/978-3-319-94779-2

Ngoc Anh B, Tung Son N, Truong Lam P, Le Chi P, Huu Tuan N, Cong Dat N, Trung NH, Aftab MU, Van Dinh T (2019) A Computer-Vision Based Application for Student Behavior Monitoring in Classroom. Appl Sci 9(22):4729

Nichols SA (2001) Improvement of the Camera Calibration Through the Use of Machine Learning Techniques. Master Dissertation. University of Florida

Bogdan O, Eckstein V, Rameau F, Bazin JC (2018) DeepCalib: a deep learning approach for automatic intrinsic calibration of wide field-of-view cameras. In: Proceedings of the 15th ACM SIGGRAPH European Conference, https://doi.org/10.1145/3278471.3278479

Silvatti A, Dias F, Cerveri P, Barros R (2012) Camera Calibration for Underwater Applications: Effects of Object Position on the 3D Accuracy. In: Proceedings of the 30th International Conference on Biomechanics in Sports, (191), 127-130

Amarante RM (2015) Dynamic compression of risers. PhD Thesis, University of São Paulo

Ojanen H (1999) Automatic correction of lens distortion by using digital image processing. Rutgers University, Department of Mathematics technical report

Bernitsas MM (1981) Static analysis of marine risers. PhD Thesis, University of Michigan

Irvine HM, Caughey TK (1974) The linear theory of free vibrations of a suspended cable. Proceedings of the Royal Society of London. A. Mathematical and Physical Sciences, 341(1626), 299-315

Pesce CP, Fujarra ALC, Simos AN, Tannuri EA (1999) Analytical and closed form solutions for deep water riser-like eigenvalue problem. In: The Ninth International Offshore and Polar Engineering Conference, ISOPE-I-99-154, 10p

Acknowledgements

The lead author thanks Dr. Eng. Rafael A. Watai for his invaluable support in the experimental tests and for his friendship. The authors acknowledge the National Council for Scientific and Technological Development (CNPq) for the scholarships granted (process numbers 153174/2010-2 and 304600/2016-4). The authors also express their gratitude to Prof. Dr. Paula S.A. Michima, Federal University of Pernambuco, for her assistance in reviewing this text, and to the Numerical Offshore Tank team, notably Prof. Dr. Kazuo Nishimoto, TPN-USP coordinator.


Ethics declarations

Conflict of interest

On behalf of the authors, Prof. Dr. André Luís Condino Fujarra states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Amarante, R.M., Fujarra, A.L.C. Low-cost experimental apparatus for motion tracking based on image processing and camera calibration techniques. SN Appl. Sci. 2, 1511 (2020). https://doi.org/10.1007/s42452-020-03268-y


DOI: https://doi.org/10.1007/s42452-020-03268-y