Abstract

The computer vision community has extensively researched human motion analysis, which primarily focuses on pose estimation, activity recognition, pose or gesture recognition and so on. However, for many applications, such as monitoring the functional rehabilitation of patients with musculo-skeletal or physical impairments, the requirement is to comparatively evaluate human motion. In this survey, we capture important literature from the past two decades on vision-based monitoring and physical rehabilitation that focuses on comparative evaluation of human motion, and discuss the state of current research in this area. Unlike other reviews in this area, which are written from a clinical objective, this article presents research from a computer vision application perspective. We propose our own taxonomy of computer vision-based rehabilitation and assessment research, which is further divided into sub-categories to capture the novelties of each work. The review discusses the challenges of this domain arising from the wide range of human motion abnormalities and the difficulty of automatically assessing those abnormalities. Finally, suggestions on the future direction of research are offered.

1 Introduction

Computer vision (CV)-based human motion modelling and analysis has been extensively researched by the community. However, most of this research can be categorised into pose estimation [160], human-object interaction [63, 98], activity/gesture recognition [31, 65, 113] or human-human interaction [53]. Comparative analysis of human motion has received relatively little attention. Such analysis is necessary for application areas like automated rehabilitation and/or assessment of patients with stroke, Spinal Cord Injury (SCI), Parkinson’s Disease (PD) or other physical impairments. Patients recovering from such impairments undergo extensive physical rehabilitation and are assessed by clinicians (physicians, physiotherapists or occupational therapists), which requires patients to spend time with their carer(s). The process is expensive, labour-intensive, time-consuming and subject to human error. Statistics show that informal care for rehabilitation accounts for 27% of the total treatment cost. In the case of stroke patients, this amounted to around 2.42 billion pounds a year in the UK in 2016 [130]. Moreover, such assessments may suffer from inaccuracies because visual progress reporting is prone to inconsistent perception. Inaccuracies may also arise from the subjectivity of these behavioral and clinical assessments [96]. In addition, integrating assessment based on kinematic parameters can be more robust and accurate than visual assessment by clinicians alone [17]. Body-worn sensors or marker-based systems are expensive and can be very intrusive to a patient’s day-to-day activities. Marker-less vision-based human motion modelling and subsequent comparison has the potential to provide home-based, inexpensive and unobtrusive monitoring. It also has potential applications in sports including, but not limited to, diving and figure skating.

1.1 Scope of this review

This review includes relevant articles from the last 20 years that are representative of research in the domain of vision-based physical rehabilitation and assessment. We have focused on articles where the data captured using CV methods has been used for comparative analysis, i.e., where intelligent processing is involved. The review also includes articles on virtual rehabilitation and serious games involving vision-based sensors. Although the role of CV in virtual rehabilitation is largely limited to tracking, we have focused on articles with a secondary ‘learning’ objective. Activity recognition methods specific to rehabilitation exercises have also been included. Research not set in a clinical scenario but aimed at the assessment of physical impairments has also been covered. Existing research suggests that accurate body joint position estimation is vital for vision-based rehabilitation and assessment. However, human pose estimation has been extensively researched and covered in several surveys and reviews [118, 160]. Similarly, human activity recognition has been widely explored by the CV community and covered in several surveys [65, 113]. Thus, this review does not aim to cover joint position estimation or human activity recognition methods. It also does not include inertial or other non-vision sensor-based research.

2 Domain characteristics

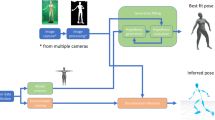

There are many aspects to vision-based research including, but not limited to, raw data, feature extraction, feature representation, feature comparison, and statistical and stochastic modelling (DL). However, the general flow of research in the domain of vision-based rehabilitation and monitoring can be broadly illustrated by Fig. 1. The illustration highlights important characteristics of this domain: a vision-based sensor, such as a monocular RGB or depth camera, for sensing the data; a low-level feature, such as human joint positions; and a feature encoding and representation method, such as a group of joint positions or a combination of human kinematic parameters. The encoded features are then compared through simple graphical and statistical techniques or through intelligent algorithms. Finally, assessment is done in the form of kinematic parameter comparisons, pose recognition, automated clinical scoring, impairment classification and others. Rehabilitation systems usually have an exercise program and provide feedback. These characteristics can be broadly described in three major parts: primary data, feature extraction and representation, and feature comparison. For the application of CV to rehabilitation and assessment of physically impaired persons, we focus on these three aspects. The domain characteristics with respect to each of them are discussed next.

2.1 Physical impairment data

In many other vision-based human motion modelling applications including, but not limited to, human pose estimation and activity recognition, large-scale datasets are publicly available. Thus, collecting data is often outside the scope of research. For research in the physical impairment domain, however, authors have often collected their own data. Human movements are multidimensional and so are their abnormalities. Musculo-skeletal impairments are exhibited differently in different patients over a period of time. A multitude of factors, such as the impairment involved, extent of injury, area affected, physiological characteristics and care provided, lead to hugely varying manifestations of impairments across patients. This is in addition to the wide range of motion capabilities of human beings. Clinicians have specific tests and exercises designed for rehabilitation and assessment of different types of motor abnormalities. Therefore, researchers are also required to run specific experiments to capture data for the assessment of specific musculo-skeletal impairments, and most authors have captured data catering to the specific situations corresponding to their objective. Owing to the difficulty of accessing patients, ethical issues and other such constraints, data is difficult to acquire and the datasets are often small. Researchers have used alternative strategies such as healthy persons acting like patients, the use of noise to create varied data and others. In this domain, there are very few publicly available datasets (Table 7), and even these are very small when compared to datasets available for other CV application areas (e.g., image recognition, human activity recognition). In this article, we highlight the target abnormality, the area of the body affected and the corresponding data collected for each article reviewed.

2.2 Feature extraction and representation

The ultimate goal of musculo-skeletal patient monitoring is to provide an automated assessment. To assess the progress of physically impaired persons in physical rehabilitation, clinicians rely on physical characteristics such as the extent of elbow flexion, shoulder abduction, speed of motion and others. To determine such characteristics, researchers have almost exclusively relied on the estimation of human body joint positions as primary low-level features. For automated assessment, it is often required to compare a patient’s execution of Activities of Daily Living (ADL) or rehabilitation exercises with a regular healthy execution. Here also, the objective is to compare sequences of joint positions. For normal activity recognition, researchers use image-based features including VLAD [58], Bag of Visual Words (BoVW) [109], Dense trajectories [148] and others. These features incorporate valuable information such as context, optical flow and so on, which is not available when only joint positions are used as low-level features. So far, researchers have mostly used Kinect [161] for obtaining 3D joint positions, which has its limitations as explained in [152]. Deep Convolutional Neural Networks (DCNN) have been very successful in 2D human pose estimation [20, 50] and, more recently, these networks have been used for 3D pose estimation with much higher accuracy [107, 157]. However, research in this domain is yet to fully explore DCNN-based pose estimation.

Various kinematic features such as joint angle trajectory, relative joint position, speed and acceleration are used for establishing the clinical condition of patients. Thus, joint position estimates have been encoded in various forms for feature representation. Such encoding often consists of simple human body kinematic features such as relative angles, velocities, body-centric coordinates and others [127, 128]. This is useful when a specific type of impairment is under consideration. For example, for discriminating pathological gait, knee angle, step distance and other such parameters are considered. Another approach is to quantify the difference between patient activity and a perfect template consisting of regular healthy activity. For this, researchers have used statistical representations such as Hidden Markov Model (HMM) [138] or Dynamic Time Warping (DTW) [11, 121]. The main aim of feature representation is to select and encode joint positions in a manner that improves the discriminatory power of comparative algorithms with regard to the given clinical condition.
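As an illustration of the kinematic encoding described above, the following minimal sketch computes the angle at a joint from three 3D joint positions and applies it to every frame of a sequence. The frame layout (a dictionary mapping a joint name to an (x, y, z) tuple) and the joint names are assumptions made for this example; they are not a format prescribed by any of the reviewed works.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at joint b between segments b->a and b->c."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("adjacent joints coincide; the angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point round-off.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def angle_trajectory(frames, proximal, joint, distal):
    """Joint angle per frame; each frame maps a joint name to (x, y, z)."""
    return [joint_angle(f[proximal], f[joint], f[distal]) for f in frames]
```

For an elbow, for instance, one might call `angle_trajectory(frames, "shoulder", "elbow", "wrist")` to obtain a flexion trajectory that can then be plotted or compared against a healthy reference.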

2.3 Feature comparison

One of the major goals of research in vision-based physical rehabilitation and monitoring is to provide an automated clinical assessment of a musculo-skeletal patient’s physical condition. For many CV applications, such as object detection or activity recognition, the objective is well-defined (e.g., classification). However, for the assessment of patients the goal varies widely and often depends on the clinical requirements. The requirements vary from statistical analysis to methods for automatically establishing clinical scores such as Fugl-Meyer Assessment (FMA) [59], Unified PD Rating Scale (UPDRS) [103] and others. For some cases, simple presentation and comparison of joint angle trajectories is enough, but for other cases, such as automated clinical scoring, advanced comparison algorithms are often required. It needs to be emphasized that researchers have mostly relied only on joint positions as low-level features. Thus, rich vision-based feature representations (e.g., BoVW, MBH) that can provide contextual information are not available. Therefore, it is essential to develop techniques for comparative analysis of features based only on joint positions. Such comparisons can be done in many ways including, but not limited to, simple graphical analysis, statistical analysis, sequence comparison, classification and regression. Methods such as graphical comparison are often simple and may not require large datasets. On the other hand, automated clinical scoring requires advanced algorithms and large datasets to work reliably. As explained earlier, obtaining a large-scale dataset for each type of abnormal motion is difficult. Therefore, the main challenge in this area is to maximize the applicability of advanced algorithms with limited data.

3 Surveys and taxonomies

3.1 Surveys

Table 1 lists surveys and reviews aimed at vision-based physical rehabilitation and assessment. Zhou et al. [164] surveyed human motion tracking for rehabilitation, focusing mainly on various vision- and sensor-based tracking systems. The survey further discusses home-based and robot-aided rehabilitation systems, but does not describe algorithms used for comparative evaluation or abnormal activity detection.

Webster and Celik [152] reviewed Kinect-based research and focused on the formulation of rehabilitation exercises for monitoring. The authors discuss elderly care and stroke rehabilitation methods. Within elderly care, fall detection, fall risk reduction and Kinect-based gaming are discussed. Articles under stroke rehabilitation are categorised into evaluation of Kinect, rehabilitation methods and Kinect gaming. Similarly, Da Gama et al. [40] also reviewed Kinect-based research. The focus of their review is on the formulation of rehabilitation experiments, subsequent monitoring of progress and analysis of various comparison techniques. Most of these techniques rely on basic methods including average angle flexion, Euclidean distance, mean error, correlation coefficient and others. The authors present a taxonomy in terms of ‘Evaluative’, ‘Applicability’, ‘Validation’ and ‘Improvement’ categories, which is based on a clinical perspective. Both Webster and Celik [152] and Da Gama et al. [40] review articles from a clinical perspective, where the clinical progress made by patients is a major focus. Sathyanarayana et al. [119] reviewed articles from a CV perspective and highlighted vision algorithms. Their taxonomy is based on clinical application, and the articles include areas such as ADL recognition or fall detection, which do not always involve abnormal or impaired physical motion. Moreover, the review does not include articles after 2014.

In the current study, existing research has been reviewed from a CV application perspective. We highlight the musculo-skeletal impairment, visual sensor, feature extraction and comparison algorithms for each reviewed article. The discussion focuses on algorithms used for discriminating and assessing physically impaired activity in comparison to regular healthy activity.

3.2 Taxonomy

In this article we develop our own taxonomy, which is necessitated by the lack of reviews in this area from a CV application perspective. The review both categorises and tabulates the articles to highlight different aspects. As discussed in Sect. 2, it focuses on the following three characteristics: (1) data collection, (2) feature extraction and representation, and (3) feature comparison. Thus, the articles reviewed are tabulated to address these aspects. The columns headed Target and Dataset highlight the kind of impairment and the area of the body affected, and briefly summarise the data collected. The columns headed Sensor/Data and Feature summarise the type of sensor data, the features extracted from the sensor and the feature representation or encoding algorithm. We have also listed any non-vision hardware used along with vision sensors. The last column, headed Objective, summarises the comparison method and the objective from the application perspective. Most reviews of other areas of vision-based research have categorised the discussion in terms of the algorithms or techniques used. The articles they review often share common goals, such as activity recognition or pose estimation, and use common datasets. Thus, a readily available and fair comparison between the methods used can be drawn. However, due to the wide-ranging goals of research in the vision-based rehabilitation domain, authors have used very different data, features and comparison methods. It is therefore very difficult to categorise each work in terms of the methods or algorithms used and to compare them. Instead, we propose a taxonomy based on end-user application. The discussion of each application type is further broken into paragraphs based on the similarity of the methods used. The Author column in each table also indicates the sub-category in which an article is placed. Applications are primarily placed into two major categories: rehabilitation and assessment.
These can be further sub-categorised as listed below:

1. Rehabilitation: Automated rehabilitation system
   (a) Virtual rehabilitation
   (b) Direct rehabilitation
2. Assessment: Point in time assessment
   (a) Comparison
   (b) Categorisation
   (c) Scoring

3.2.1 Rehabilitation

In rehabilitation systems, the primary goal is to provide an automated home-based or in-clinic system for patients to undergo physical therapy, gesture therapy or other rehabilitation exercises. Such a system guides patients in performing their rehabilitation tasks. Rehabilitation may be fully automated and/or clinician mediated. Research in this category normally aims to improve the patient’s physical condition. Most of the research in this category is of the type Virtual Rehabilitation. In virtual rehabilitation, a patient’s performance in a virtual world is assessed rather than the patient’s physical performance directly. This includes an avatar performing tasks in a virtual world and the use of serious games for rehabilitation. Here, subjects are required to perform activities in a virtual world through real-world movements. In Direct Rehabilitation systems, users are guided by a web-based interface to perform rehabilitation exercises, while their movements are directly tracked through a vision-based sensor. In this case, the physical performance of the patient is measured instead of their avatar’s performance or their ability to complete tasks in a virtual world. Patient assessment may be inbuilt or may require clinicians.

3.2.2 Assessment

In assessment applications, the goal is to provide a point-in-time assessment of a patient’s quality of motion linked to one or more body parts. There is no rehabilitation system involved. Assessment may be carried out in a clinical or non-clinical setting. Assessment applications can be further categorised into three types based on the way a user would receive the end output. The first type is Comparison, where a patient’s data (e.g., kinematic parameters) are extracted for comparison but there is no decisive automated scoring system available. In such applications, there may be a statistical comparison such as Analysis of Variance (ANOVA) or a simple graphical comparison of kinematics, represented by the trajectories of an ideal versus a patient’s joint angle or position over time. The second type is Categorisation, which is more decisive and where the main goal is classification. Movements may be classified as correct or incorrect, or may be classified into a few types of abnormalities. This includes both gesture/posture and activity recognition. In the third type, Scoring, a decisive score is attached to patient movements to assess their quality of motion. This can be a clinical score such as FMA [59] or an author-proposed score. The score may assess the quality of movement or quantify the differences from an ideal motion. Next, we review various articles published in the domain of monitoring and rehabilitation of musculo-skeletal patients according to the taxonomy developed. We present all the articles in tabular format and discuss the more relevant articles in detail.

4 Virtual rehabilitation

The objective in virtual reality and serious games-based rehabilitation applications is to provide a set of virtual tasks that require the user to perform therapeutic gestures or rehabilitative or cognitive exercises (Table 2). The movement of the user in the real world is tracked through devices like Kinect, or other sensors that can accurately reproduce a user’s movement in the virtual world, often through an avatar. In virtual rehabilitation systems the role of CV is largely limited to tracking. In this survey, we have focused on works with secondary objectives related to CV such as gesture or pose recognition, or simple graphical comparison of trajectories of the concerned body joint angle. The discussion is split into non-skeleton based, skeleton-based and automated assessment systems.

4.1 Non-skeleton based

Fig. 2 An example of virtual rehabilitation where performance in the virtual world is considered for assessment. Here, the hand is tracked indirectly through the green ball [133]

Virtual rehabilitation existed before skeleton tracking became feasible. Early research in this area used indirect methods for tracking human limb movements, such as colour detection, object detection and others. In 2008, Sucar et al. [132] used skin colour to track hands for gesture therapy. Colour marker-based skeleton tracking has been used as a cheap alternative to inertial sensor tracking. Sucar et al. [133] developed a rehabilitation system for the hand movement of stroke patients. A total of 42 patients went through the rehabilitation program. A green ball attached to a hand gripper is used for tracking, as shown in Fig. 2. Participants are required to move their arm through a simulated environment. Stroke patients often compensate for reduced hand movement through the trunk. This trunk compensation is observed through face tracking. Face detection and tracking are implemented using Haar Cascade classifiers [147]. Authors have also attempted to use their own skeleton tracking algorithms for rehabilitation in virtual reality [101].

Non-skeleton based methods are inherently limited in ability due to the lack of joint positions. Mostly, such methods are able to track a single body part such as an arm [132]. This can sometimes be compensated for by using vision-based feature extraction methods such as body tracking from silhouette [83, 100]. In Natarajan et al. [100], depth information has been used in a RANSAC-based plane fitting method to discriminate the subject plane from the background. This, combined with morphological operations, enabled the users to select the human silhouette. In virtual rehabilitation, since most of the assessment is done to achieve the objective of completing the game, there is little scope for further statistical or other algorithmic comparison. However, to tackle complex decision processes, algorithms such as the Partially Observable Markov Decision Process (POMDP) can be applied, as in Aviles et al. [7].
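To make the idea of RANSAC-based plane fitting on depth data concrete, the sketch below fits a dominant plane to a set of 3D points and returns the indices of the points lying on it. It is a generic textbook RANSAC, not the pipeline of Natarajan et al. [100]; the function names, the distance threshold, the iteration count and the fixed random seed are all assumptions chosen for illustration.

```python
import math
import random


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_through(p, q, r):
    """Return (unit normal n, offset d) with n.x + d = 0, or None if collinear."""
    n = _cross(_sub(q, p), _sub(r, p))
    length = math.sqrt(_dot(n, n))
    if length < 1e-12:
        return None
    n = (n[0] / length, n[1] / length, n[2] / length)
    return n, -_dot(n, p)


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """Fit the plane supported by the most points; return (plane, inlier indices)."""
    if len(points) < 3:
        raise ValueError("at least three points are required")
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_through(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate sample, try again
        n, d = plane
        # A point is an inlier if its distance to the plane is within threshold.
        inliers = [i for i, pt in enumerate(points)
                   if abs(_dot(n, pt) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Points that are not inliers of the dominant plane can then be passed on to later stages such as morphological filtering; how the fitted plane is used to isolate the subject is specific to each system.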

4.2 Skeleton-based

With the introduction of Microsoft Kinect in 2010, skeleton tracking became feasible and readily accessible. Chang et al. [25] used Kinect to measure joint position and angle trajectories in their proposed game for shoulder rehabilitation. Each participant was required to perform 6 different shoulder exercises which were quantified as correct or wrong by OpenNI middleware. The authors also compared Kinect skeletal data with OptiTrack for establishing the ground truth. Fern et al. [52] used several common exercises of hip, knees and shoulders in the form of a serious game called rehabtimals. Joint rotation data over time was used to calculate kinematic metrics such as Range of Motion (ROM), Mean Error (ME) and Mean Error Relative (MER) in ROM. Da Gama et al. [39] used joint angles calculated from Kinect skeleton data to detect correct exercise posture. A total of 3 physiotherapists, 4 adults and 3 elderly subjects were used to evaluate the prototype. The system was able to recognize the correct movements 100% of the time under controlled conditions.
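The kinematic metrics mentioned above can be sketched as follows. The definitions used here are assumptions for illustration, since the text does not spell them out: ROM is taken as the difference between the largest and smallest joint angle in a trajectory, ME as the mean absolute difference between measured and reference values, and MER as that difference divided by the reference value. The cited works may define these metrics differently.

```python
def range_of_motion(angles):
    """ROM of one joint angle trajectory (assumed: max minus min, in degrees)."""
    if not angles:
        raise ValueError("empty trajectory")
    return max(angles) - min(angles)


def mean_error(measured, reference):
    """Mean absolute difference between paired measured and reference values."""
    if not measured or len(measured) != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(m - r) for m, r in zip(measured, reference)) / len(measured)


def mean_error_relative(measured, reference):
    """Mean absolute difference expressed as a fraction of each reference value."""
    if not measured or len(measured) != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")
    if any(r == 0 for r in reference):
        raise ValueError("reference values must be non-zero")
    return sum(abs(m - r) / abs(r)
               for m, r in zip(measured, reference)) / len(measured)
```

In such a setting, `measured` might hold the ROM achieved in each repetition of an exercise and `reference` the ROM prescribed by the therapist.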

Here, most authors have used their own small datasets and thus it is difficult to ascertain the generalisability of their methods. Owing to the availability of skeleton positions, kinematic parameters have been used for performing statistical comparisons such as ANOVA. Small datasets are not sufficient for the application of Deep Learning (DL) algorithms, but other algorithms such as HMM or DTW could have been used for comparing temporal sequences. Joint angle comparison is good for posture recognition. However, time sequence comparison algorithms are essential for comparing joint angle and/or joint position trajectories.
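The kind of time sequence comparison suggested here can be sketched with the classic DTW recurrence. The example compares two one-dimensional joint angle trajectories using the absolute difference as the local cost; the function name and the choice of local cost are assumptions, and no windowing or normalisation is applied.

```python
def dtw_distance(x, y):
    """Classic DTW distance between two sequences of scalar joint angles."""
    n, m = len(x), len(y)
    if n == 0 or m == 0:
        raise ValueError("both sequences must be non-empty")
    inf = float("inf")
    # cost[i][j] = best accumulated cost aligning x[:i] with y[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(x[i - 1] - y[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # x advances
                                     cost[i][j - 1],      # y advances
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]
```

Because the alignment may stretch or compress time, a patient who performs the same movement more slowly than the reference yields a small distance, whereas a frame-by-frame difference would penalise the change in speed.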

4.3 Automated assessment

Some virtual rehabilitation systems also have an integrated automated assessment. Adams et al. [1] proposed to assess upper limb motor function through the practice of ADL in virtual reality. Motor function metrics, such as duration, normalized speed and Movement Arrest Period Ratio (MAPR), obtained from skeletal tracking via Kinect were used to calculate the Wolf Motor Function Test (WMFT) [154] score. This score was correlated with the proposed Virtual Occupational Therapy Assistant (VOTA) metrics, and it was found that the proposed metrics can be used to assess a patient’s ability to perform ADL. Fourteen hemiparetic stroke patients were asked to participate in a virtual meal preparation activity with their affected arm. The results indicated satisfactory correlation between the proposed VOTA metrics and the standard WMFT metrics. VRehab [8] used Long Short-Term Memory (LSTM) networks for estimating the degree of patient impairment. For evaluation, 20 healthy subjects were filmed using Kinect and a Leap Motion Controller (LMC). Kinect was used to provide joint positions, angles and speeds as features, while the LMC provided pinch strength and average speed of fingertips. Three different LSTM networks were trained for regressing impairment scores for three different exercises. The trial included five patients who were scored by the system and by five physiotherapists. The score provided by the proposed system was shown to be very close to the average score given by the physiotherapists.

5 Direct rehabilitation systems

In direct rehabilitation systems, there is usually an exercise regimen prescribed for patients and the purpose is to demonstrate their functional improvement. Patients may be guided through a web-based interface for performing tasks similar to those in virtual rehabilitation applications. However, unlike virtual rehabilitation, a subject’s physical performance in the physical world is considered for further assessment or feedback. The discussion is split into two parts: first, systems where a CV sensor is used exclusively to obtain primary data and second, systems where non-vision components such as assistive robots are also used (Table 3).

5.1 Pure vision-based

Ghali et al. [57] used object detection techniques for tracking hand movement. A camera was placed above a kitchen platform, and the movement and orientation of objects were used as a measure to track the hand movement. The sequence of hand movements was used to determine whether an activity such as ‘making coffee’ was successfully completed. Kinect does not track finger joint positions. Zariffa et al. [159] used Hu invariants and contour signatures extracted from background subtraction as features for the classification of hand grip variations. Two cameras, one for the top view and one for the side view, were used to film 10 subjects against a standard background. Several types of grips fundamental to ADL, such as the lateral key grip, were filmed. A k-nearest neighbours (KNN) classifier was employed for classification.
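The classification step described above can be illustrated with a minimal k-nearest neighbours sketch operating on fixed-length feature vectors. The feature values, the label names and the choice of Euclidean distance are assumptions for this example; in a real system the vectors would hold shape descriptors such as Hu invariants computed from the segmented hand.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest samples."""
    if len(train_x) != len(train_y):
        raise ValueError("train_x and train_y must have the same length")
    if not 1 <= k <= len(train_x):
        raise ValueError("k must be between 1 and the number of training samples")
    # Sort training samples by Euclidean distance to the query vector.
    ranked = sorted((math.dist(x, query), label)
                    for x, label in zip(train_x, train_y))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]
```

A call such as `knn_predict(features, grips, new_feature, k=3)` would return the grip label most common among the three closest training examples.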

Fig. 3 An instance of a direct rehabilitation system where a patient’s performance is directly assessed through joint position tracking. In [89], a Tai-Chi exercise pose is compared to a standard pose and feedback is provided

The Kinect SDK provides advanced information such as kinematics and gesture recognition. Authors have used this to count the number of times the correct posture was attained as a measure of rehabilitation progress [26, 27, 67]. Lin et al. [89] used 10 standing and 18 seated Tai-Chi regimens as rehabilitation exercises. The rehabilitation poses of two patients were compared to a perfect execution of Tai-Chi to measure progress over time. Patients were rehabilitated and monitored in two phases: first with physiotherapists, and then with video and Kinect. Postures attained by patients were compared with the target posture through ME and subsequently graded. Feedback was provided to the user as shown in Fig. 3. Each time, the system’s assessment was compared to that of a physiotherapist for validation.

Su et al. [131] proposed a fully independent Kinect Enabled Home Rehabilitation (KEHR) system. The system provided four functions: (1) a rehabilitation management software system, (2) reference exercises, (3) recording of exercises performed at home using Kinect and (4) evaluation of performance. Performance was compared through DTW and Fuzzy Logic. Four different subjects were asked to perform different types of shoulder rehabilitation exercises in a controlled environment. Assessment was provided in the form of messages such as “right hand: good”, “left hand: bad”, “too slow” etc. Physicians and the KEHR system agreed 80% of the time.

5.2 Multi-modal

In multi-modal applications, CV sensors (e.g., Kinect) are combined with other assistive technologies including, but not limited to, assistive robots and electrical stimulation. Normally, the patients using the rehabilitation systems are guided via visual animation or by clinicians. Galeano [56] used Functional Electro-Stimulation (FES) for assistance while providing visual feedback through posturography on skeletal data. Frisoli et al. [55] introduced a gaze-independent, wearable Brain-Computer Interface (BCI) driven robotic exo-skeleton for upper limb rehabilitation in stroke patients. The first objective was to select real-world objects by estimating eye gaze through a vision-based eye tracking system. Speeded Up Robust Features (SURF) [14] was used for object matching and the Lucas-Kanade tracking algorithm [91] was applied to track objects using depth data from Kinect. The second objective was to assist patient arm movement for moving real-world objects. To achieve this, a signal from the BCI was fed to a Support Vector Machine (SVM) classifier to ascertain whether the subject intended to move his or her arm. Then, the signal was used to actuate the robotic arm. Devanne et al. [42] proposed a humanoid robot guidance system for rehabilitation from lower back pain. A Gaussian Process Based Latent Variable Model (GP-LVM) has been used to model exercise movements from a clinician. The system then adapts the clinician’s activity to the patient’s morphology to guide the rehabilitating patient.

In [26, 27, 67], the goal is to count correct postures by calculating the joint angles. This fails to tell us how close the patient is to getting the posture correct. A slightly better way is to compare joint angle trajectories as in Excell et al. [49] or to grade the error through ME as done by Lin et al. [88]. To judge whether an exercise is executed correctly, it is also essential to qualify the starting posture as correct [15], which is not the case in the approaches mentioned above. These approaches are mostly primitive and lack analysis of the whole temporal sequence. Later approaches have taken advantage of time-sequence comparison algorithms such as DTW or variants of it like Open-ended DTW (OE-DTW) [121]. These have been combined with various grading methods for a better understanding of a patient’s state. Clinicians mostly use their experience to judge a patient’s state without taking kinematic parameters into account. Therefore, it may be beneficial to use kinematic parameters as training data and clinicians’ scores as labels to build a model that is truly representative of clinicians’ judgement. In automated rehabilitation, it is not always feasible for the patient to be guided via a screen interface. In such scenarios, other assistive technologies like BCI and human motion imitating robots are very useful [51]. For assistive robots, it is important to work according to a patient’s morphology, as demonstrated by Devanne et al. [42]. The authors also present a very good implementation of the latent model needed to map a low-dimensional latent space to the high-dimensional robot space through the probabilistic model GP-LVM.
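The open-ended variant of DTW mentioned above can be sketched by relaxing the end-point constraint on the reference: a partial execution is aligned in full against whichever prefix of the reference matches it best. This is a minimal illustration of the idea, assuming scalar joint angles and an absolute-difference local cost; it is not the implementation used in [121], and the function name is hypothetical.

```python
def open_ended_dtw(partial, reference):
    """Align all of `partial` with the best-matching prefix of `reference`.

    Returns (distance, prefix_length), where prefix_length is the number of
    reference samples covered by the best alignment.
    """
    n, m = len(partial), len(reference)
    if n == 0 or m == 0:
        raise ValueError("both sequences must be non-empty")
    inf = float("inf")
    # cost[i][j] = best accumulated cost aligning partial[:i] with reference[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(partial[i - 1] - reference[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],
                                     cost[i][j - 1],
                                     cost[i - 1][j - 1])
    # Open end: the alignment may stop at any reference index, not only the last.
    best_j = min(range(1, m + 1), key=lambda j: cost[n][j])
    return cost[n][best_j], best_j
```

The ratio of `prefix_length` to the reference length gives a rough indication of how far through the exercise the patient has progressed, which is one way such an alignment could feed a grading method.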

6 Comparison

Table 4 summarises articles presenting comparative analysis of kinematic data obtained from vision sensors. In such systems, there is no rehabilitation program designed for patients. These articles are more important with regard to CV than to clinical objectives. Authors have drawn comparisons ranging from simple graphical visualisation and statistical techniques to more advanced Machine Learning (ML) algorithms. This discussion is split into three parts. The first part discusses articles where kinematic data is directly used for comparison; graphical and statistical comparisons highlight differences between patient and healthy subjects’ parameters. The second part covers applications where ML algorithms have been used for modelling kinematic data. The third part discusses the use of DL algorithms for comparative analysis of patient motion.

6.1 Kinematics-based modelling

In this type of application, researchers directly use kinematic data for comparison. Before the introduction of Kinect, authors used other computer vision algorithms to extract the skeleton. Leu et al. [83] used two cameras for filming 20 subjects against a standard background. The human silhouette was extracted through background subtraction and image segmentation. This data was compared to a standard stick figure model to extract the skeleton. For accuracy, the algorithm was tested against a standard sensor-based marker system. Simple graphical comparison showed a visible difference between knee angle trajectories of regular and irregular gait. Natarajan et al. [100] also used their own tracking algorithm while introducing Reha@Home. The authors argued that detection of lower extremity joints in Kinect is not accurate enough. Reha@Home used depth information in combination with morphological operations to extract the human silhouette. Four different subjects with varying conditions, such as multiple sclerosis, were tested in a hospital setting both before and after treatment. The parameters for gait analysis were hip angle, knee angle, left and right foot step length and stride length. Performance of the system was evaluated through comparison with data from an electrogoniometer. Graphical trajectories of gait parameters showed a visible difference between the healthy subjects and patients.

The Toronto Rehab Stroke Pose Dataset (TRSP) [44] presents 3D joint positions consisting of upper arm movements for both stroke patients and healthy subjects. Kinect was used for tracking joint positions of 10 healthy subjects and 10 stroke survivors having restricted arm movements. Two experts were recruited to annotate the dataset. The dataset was labelled into 3 different compensatory movements and one normal movement. Area Under Curve (AUC) values obtained from joint angle trajectory showed substantial measurable difference between regular healthy and physically impaired patients’ examples.

Graphical comparison of patients and healthy subjects through kinematic parameters and joint angle trajectories [127]

Graphical and/or statistical comparison has also been used in situations where patients lack any specific impairment. Spasojevic et al. [128] used four different body movements and measurements for discriminating PD patients from healthy subjects. Gait, Shoulder Abduction Adduction (SAA), Shoulder Flexion Extension (SFE) and Hand Boundary Movements (HBM) were considered for body movements. Speed, rigidity, ROM and symmetry ratio were used as measurement criteria. These were combined to create a Movement Performance Indicator (MPI) vector of size 9. For example, only speed and rigidity were considered for gait movement. Experiments were conducted on 12 PD patients of stage 1, 2 and 3. Subjects were filmed from the front at a distance of 1.5 m. Ground truth was provided by a physiotherapist. Graphical and statistical comparison based on kinematic parameters showed visible differences between patients and healthy subjects. Also, four ML classifiers, including SVM, K-Nearest Neighbour (KNN) and Multi-Layer Perceptron (MLP), were used for classification, among which SVM and MLP performed better. In 2017, Spasojevic et al. [127] added 16 more MPIs to the system described above. Data from finger tracking through a sensory glove was used for 15 MPIs representing finger flexion, extension, tapping and hand rotation. For gait, another MPI was added, making a vector of 25 MPIs in total. Graphical comparison as illustrated in Fig. 4 showed visible differences between PD and healthy subjects. SVM, MLP and KNN were used to classify PD patient stages and healthy subjects. In this article, although ML algorithms have been used for classification, the research presents elaborate statistical comparison directly based on kinematic parameters.

6.2 Statistical modelling

Instead of directly comparing kinematic data, authors have also used ML algorithms for modelling human movement, which is subsequently compared statistically or graphically. Tao et al. [138] used HMM modelling for online quality of motion assessment of gait on stairs, walking on a flat surface, sitting and standing. For discriminating skeleton sequences using HMM, entire sequences have to be fed to a model. This was not possible in the case of online assessment and thus, a variable window approach [99] was adopted to address the problem. Four different HMM models were used to extract features from skeleton data to classify abnormalities using SVM.

Wang et al. [150] devised a series of exercises for musculo-skeletal patients, targeting PD patients. Activities include walking, walking with counting and sit to stand. Again, skeleton information was obtained through Kinect placed in front of the patient and on top of a table. Step size, postural swing level, arm swing level and stepping time were used as criteria to assess a patient’s mobility level. The paper proposed a Temporal Alignment Spatial Summarisation (TASS) algorithm to isolate repetitive skeletal movements from the video stream through a Skeletal Action Unit (SAU). The SAU extracted clinically important kinematic parameters like arm swing level and stepping time for evaluation. This method was evaluated against the standard MSR-Action3D [86] action recognition dataset. For clinical validation, a single PD patient and a healthy subject were asked to perform walking and sit-to-stand experiments. Data from both experiments showed a difference between the PD patient and the healthy subject.

Antunes et al. [6] framed the assessment problem as feedback to be provided to a skeleton sequence to better match a standard execution sequence template. The system has been evaluated on three publicly available datasets. The first, ModifyAction used pairs of actions from UTKinect [156] and MSR-Action3D [86] dataset. The second dataset was SPHERE-Walking2015 [104] which contained normal walking and simulated stroke patient walking. The third dataset, called Weight&Balance was introduced in this paper and it presented simulated data of stroke affected arm mobility. Data normalization was used for spatial alignment and DTW was used for temporal alignment. The importance of this research resides in the feedback mechanism that was provided at each instant for better execution of human action.
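The combination of normalisation and DTW described above can be sketched for a single one-dimensional joint-angle signal. This is an illustrative sketch only, not the authors' implementation: z-normalisation stands in for the (unspecified here) spatial normalisation, and the function names are hypothetical.

```python
import math

def z_normalise(seq):
    """Scale a 1D sequence to zero mean and unit variance.

    Used here as an assumed stand-in for spatial alignment.
    """
    mean = sum(seq) / len(seq)
    var = sum((x - mean) ** 2 for x in seq) / len(seq)
    std = math.sqrt(var) or 1.0  # avoid division by zero for flat signals
    return [(x - mean) / std for x in seq]

def dtw_distance(a, b):
    """Classic dynamic time warping cost between two 1D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: insertion, deletion, match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]
```

Because DTW warps the time axis, a slower but otherwise identical repetition yields a cost near zero, while a movement with reduced range still produces a positive cost after normalisation if its shape differs from the template.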

Baptista et al. [11] also saw the problem as essentially finding the difference between two skeleton sequences. This allowed them to use the publicly available UTKinect [156] dataset to address the problem without specifically using patient or simulated patient data. The authors used Sub-Sequence DTW (SS-DTW) [97] and TCD [33] algorithms to match user action to a specific template and provide feedback highlighting deviations from normal execution.
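Matching a short template inside a longer stream of user motion is the idea behind subsequence DTW. The sketch below shows the textbook formulation for 1D signals, assuming a free start and end point in the stream; it is not the authors' code, the function name is hypothetical, and the TCD step is omitted.

```python
def subsequence_dtw(template, stream):
    """Find where `template` best matches inside the longer `stream`.

    Returns (cost, end_index), where end_index is the 0-based position in
    `stream` at which the best match ends.
    """
    n, m = len(template), len(stream)
    inf = float("inf")
    # Row 0 is all zeros, so a match may start anywhere in the stream.
    cost = [[0.0] * (m + 1)] + [[inf] * (m + 1) for _ in range(n)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(template[i - 1] - stream[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # The match may also end anywhere: take the cheapest cell in the last row.
    end = min(range(1, m + 1), key=lambda j: cost[n][j])
    return cost[n][end], end - 1
```

The only changes relative to ordinary DTW are the zero-initialised first row and the minimum over the last row, which together let the template align with any contiguous part of the stream.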

6.3 Introduction of stochastic methods

The area of vision-based rehabilitation and monitoring has not seen extensive application of DL methods. This is mainly due to a lack of large scale datasets needed to train DL networks. In 2015, Leightley et al. [82] presented the Kinect 3D Active (K3D) dataset which captured motions based on common clinical assessments used to determine altered patient movements. Fifty-four subjects aged 18 to 81 were asked to perform 13 clinical tests including balance, open and closed eyes, jump, chair stand and others. Owing to the diverse age related conditions, the subjects’ movements varied widely for any given activity. Several algorithms were used for action classification, out of which SVM and Artificial Neural Network (ANN) achieved the best accuracy. To assess clinical condition, the activities were further analysed in terms of average time taken to complete an action.

In the absence of a large-scale publicly available dataset, simulating or generating data has also been considered. Vakanski et al. [144] trained their Mixture Density Neural Network (MDNN) on the standard action recognition UTD-MHAD dataset [28] to model human movement for each action. Mean log-likelihoods of observed sequences were used as the performance metric for evaluating the consistency of a subject’s performance. Then, random noise was imparted to generate deviations from the standard action and these deviations were measured. The proposed model was programmed to be usable with skeleton data captured through Kinect.
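The idea of scoring a movement by its mean log-likelihood under a learned model can be shown with a deliberately simplified sketch. A single Gaussian per frame is swapped in for the mixture density network, so this is not the MDNN of [144]; the function names and the standard deviation floor are assumptions made for illustration.

```python
import math

def fit_gaussians(repetitions):
    """Per-frame (mean, std) across equally long reference repetitions."""
    params = []
    for frame in zip(*repetitions):
        mean = sum(frame) / len(frame)
        var = sum((x - mean) ** 2 for x in frame) / len(frame)
        # Floor the std so that identical reference frames do not give zero variance.
        params.append((mean, max(math.sqrt(var), 1e-3)))
    return params

def mean_log_likelihood(seq, params):
    """Average Gaussian log-likelihood of an observed sequence."""
    total = 0.0
    for x, (mu, sigma) in zip(seq, params):
        total += -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)
    return total / len(seq)
```

A sequence close to the reference repetitions receives a higher mean log-likelihood than one that deviates from them, which is the property that makes the quantity usable as a consistency metric.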

The articles presented above propose assessment-only applications and often do not include any rehabilitation method. They take a more robust approach to assessment in the sense that authors have compared more kinematic parameters, used more advanced statistical analysis and used bigger datasets. For example, in rehabilitation type applications, many authors have chosen simple joint angle or joint angle trajectory comparison [49, 67]. By contrast, the articles reviewed here have generally used better statistical comparison, including Linear Discriminant Analysis (LDA) [127, 128], TCD [11], likelihood [104, 144] and ANOVA [77]. In comparison type applications, we also see the implementation of more robust kinematic parameters such as 25 different MPIs in [127, 128], normalized sequences [64] and temporally aligned sequences [6]. As a result, such applications are able to carry out more complex comparison including gait analysis and compensatory movements, as opposed to simple gesture or posture recognition of a single or few joints. We also observe the introduction of publicly available datasets, which paves the way for competitive evaluation of the proposed models [11, 104, 138]. However, statistical comparison does not provide a decisive scoring or classification of a patient’s condition. The next two sections discuss applications that can classify or grade a patient’s quality of motion.

7 Categorisation

In this section, we review articles where the primary goal of the research is to categorise a patient activity into discrete categories including, but not limited to, correct/incorrect posture and good/bad movement. In contrast to comparative analysis, articles reviewed in this section are more decisive in terms of providing patient assessment. Technically, most of the articles in this section have the goal of posture or action recognition where discrimination is done between improper and proper execution of activities. But, it also includes disease severity classifications, determination of a patient’s cognitive abilities and so on. The discussion is split into two parts: (1) hand-crafted or rule-based and (2) statistical algorithms based (Table 5).

7.1 Rule-based

In some cases, final posture is important and simple hand-crafted algorithms are sufficient for correct posture recognition. Metcalf et al. [95] used depth frames for measuring hand (finger) kinematics. A Kinect device was placed 80 cm above a table where subjects were filmed. A binary image of the palm was extracted from depth and RGB data. The palm contour was then fitted to a geometrical kinematic model to determine joint angles. Based on the sequence of joint key-points (finger tip, finger spaces), a grip classification algorithm was developed. Gonzalez-Ortega et al. [62] used computer vision for assessment of patients’ cognitive motor abilities. Here, the goal was to see whether a patient can understand verbal instructions and perform simple motor tasks. A group of 10 subjects was used to provide a healthy reference while three subjects with frontal lobe injury and two with dementia were used for rehabilitation using the proposed system. The subjects were asked to perform 14 different types of movements such as “touch right eye with right hand” in a controlled environment. Facial expression was detected by combining skeleton data and depth image from Kinect with an AdaBoost-based face detector. Eyes and nose were detected using HK classification [16], which is based on curvature obtained from the depth image. In psycho-motor exercises, the final posture is important to judge whether the subject understood the instructions. The proximity of the 3D hand position to eyes, ear and nose helped in determining successful exercise execution. The result provided by the system was compared with that of physicians and the overall successful monitoring rate was 96.2%. Leightley et al. [80] used the K3D dataset [82] for automated human mobility analysis. K-means clustering was used to create clinically relevant joint groups for each action. The joint groups containing relevant joint trajectories were classified for recognizing the action. Discrimination between well-performed and poorly performed action was done on the basis of the standard deviation method proposed in Baumgartner et al. [13].

7.2 Statistical and stochastic algorithms-based

An example of categorisation type system. Group of joints are used as encoded features for SVM. Patients are classified as mobile or immobile [80]

Researchers have extensively used advanced ML algorithms for categorisation type systems. Taati et al. [136] developed an interactive system where subjects interacted with robots for posture correction. Again, skeleton data was obtained from a Kinect device placed 90 cm behind, and 60 cm above, the subject. Seven healthy subjects were asked to simulate a series of compensated mobility movements. Such movements include shoulder hike, trunk rotation compensation, lean forward and slouch postures. For posture classification, a combined HMM and SVM-based algorithm was used. An active learning strategy, which used a combination of manual and automatic labelling, was employed to label the data for classification. The overall accuracy was 86%. Palma et al. [105] presented a method for detecting deviations from normal movements using HMM and Multiple-Dimension DTW (MD-DTW) [140]. The authors created a dataset of 10 different upper and lower limb movements, such as hip abduction and elbow flexion, with 14 healthy subjects. Then, a cohort of 10 subjects was asked to perform the same movements incorrectly with specified errors. For analysis, the activities were divided into two parts: (1) the limb moved away from the body and (2) the limb moved towards the body. HMM was found to be more accurate for detecting errors in movements when compared to MD-DTW.

In recent times, Generative Adversarial Networks (GANs) have been used to generate synthetic data including, but not limited to, human faces and human poses. Li et al. [84] used the UI-PRMD dataset [145] to generate a synthetic dataset of incorrect human activities. Four different GAN models were trained, which included two Deep Convolutional GANs (DCGAN), a Wasserstein GAN and a Recurrent GAN. A 1D Convolutional Neural Network (CNN) was trained as the discriminator with the GANs and a soft-metric based on absolute differences was used for evaluating the performance of the GANs. Modelling or replicating kinematic data through GANs is a major contribution of this article, although it aims to classify physical movements.

In categorisation type applications, authors have used techniques ranging from very basic rule-based classification to state-of-the-art GANs. The authors of [62, 95] used hand-crafted algorithms to classify a patient’s state for very specific conditions, whereas in Leightley et al. [80], simple standard deviation was used to classify a patient’s state. It is very difficult to ascertain the generalisability of these applications in the absence of comparison through publicly available datasets. Authors have extensively used ML algorithms such as SVM in categorisation type applications [136, 163]. As primary data, authors have mostly used only skeleton data, with the exception of Metcalf et al. [95], who used depth data only. Some authors have relied on kinematic parameters as extracted features [70, 71, 81, 105]. However, others have introduced statistical techniques for feature extraction. For example, Jun et al. [69] used PCA (Principal Component Analysis) reduced kinematics and Zhi et al. [163] used noise reduced kinematics. These features have then been used for classification through standard algorithms such as SVM, CNN and LSTM. The use of classification algorithms has enabled authors to grade a patient’s state rather than presenting a simple visual, graphical or statistical comparison.

8 Scoring

In this section, we review articles which aim to provide automated assessment of a patient’s state. This includes both clinical (e.g., FMA, UPDRS) and author proposed (non-clinical) scoring. For musculo-skeletal diseases, there are often a multitude of factors that describe a patient’s state or condition. Simple movements such as hip abduction or individual exercises may be classified into correct or incorrect. But, to describe a patient’s state, clinicians often use standard scoring systems including FMA, UPDRS and others. Scoring may be discrete or continuous. In technical terms, authors have used both classification and regression for scoring. This discussion can be split into two parts: (1) author proposed and (2) clinical scoring (Table 6).

8.1 Author proposed scoring

PReSenS, developed by Cuellar et al. [38], is a rehabilitation exercise system where physiotherapists can remotely upload exercise templates to be followed by patients at home. A complete exercise program was developed consisting of two major types of exercises: posture holding and motion. Posture was compared to a single exercise template whereas motion was compared using the time series matching algorithm DTW. Features such as joint angle and joint rotation were used with DTW for action comparison. For the experiment, data from 10 healthy participants were collected. They were asked to do diagnostic exercises, such as arms up, arm extension and flexion, leg-up, flamenco and cross arm. These exercises are widely used in physical therapy. All motion signals were summarised using Piece-Wise Aggregation Approximation for scoring the performance.
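Piece-Wise Aggregation Approximation (often written Piecewise Aggregate Approximation, PAA) reduces a signal to a fixed number of segment means. The sketch below shows the general technique on a 1D signal; how PReSenS turns the summarised signal into a score is not described here, so no scoring step is included, and the function name is hypothetical.

```python
def paa(series, segments):
    """Summarise `series` as `segments` values, each the mean of one chunk.

    Chunks are as equal in length as integer boundaries allow.
    """
    n = len(series)
    if not 1 <= segments <= n:
        raise ValueError("segments must be between 1 and len(series)")
    summary = []
    for k in range(segments):
        start = k * n // segments
        end = (k + 1) * n // segments
        chunk = series[start:end]
        summary.append(sum(chunk) / len(chunk))
    return summary
```

Summarising every motion signal to the same number of values makes recordings of different durations directly comparable, at the cost of smoothing out short-lived deviations.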

Khan et al. [73] used a rapid finger tapping test for clinical evaluation of PD patients. A total of 387 video recordings of patients with advanced PD were used. Severity was rated by a physiotherapist on a scale of 0 to 3. A group of 84 healthy subjects was clinically evaluated in the same way. Subjects were asked to tap their hands beside their face and above their shoulders. For assessment, first the region of interest was selected as rectangles beside the face. Face detection was achieved by a Haar Cascade classifier [147] and a motion-template gradient algorithm [18] was used to detect hand movements. Kinematic parameters for calculating UPDRS features were extracted and classified using SVM.

A major limitation of many assessment systems is that they require users to sit in front of a camera and perform exercises. It may be difficult for musculo-skeletal patients to operate the system and perform exercises in a highly constrained setting. Compliance may be poor in such cases for actual patients. Venugopalan et al. [146] proposed a rehabilitation system for traumatic brain injury where patients can be monitored in real time. In the experiments, two Kinect cameras and a near infra-red motion sensor were used to film patients at home. Real-time patient data from the system was compared with data from observation in clinical setting to compute similarity scores. The score was calculated through template matching based on DTW. For evaluation, 16 videos were captured which covered six different movements that were performed by four different volunteers.

Liao et al. [87] proposed a log-likelihood based performance metric to train their DL framework for assessment of rehabilitation exercises. Low level skeleton data was represented through a deep Auto Encoder (AE) network to initially train a Gaussian mixture model for calculating log-likelihood. Using the UI-PRMD dataset [145], the authors then trained and compared the performances of CNN, RNN and Hierarchical Neural Network (HNN) [45], where the log-likelihood based performance metric was used as a label to regress the network for predicting deviations from normal actions (Fig. 5).

8.2 Clinical scoring

An illustration of scoring type systems. Extracted features from a patient are compared to a pre-trained HSMM for automated clinical scoring [22]. Total score reflects the overall score of the whole body whereas local score includes features that assist clinicians to localise movement errors. PatA: Patient A

The ultimate goal of any assessment system is to assess the state of a patient in terms of clinical scoring. This is a very difficult task considering the multitude of factors involved in assessment. Eichler et al. [47] proposed a two Kinect camera based system for automated FMA. The two cameras were placed at a 45 degree angle with respect to the subject. Temporal synchronization was done through a network time protocol server. From Kinect skeleton data, kinematic features relevant to FMA were calculated. The features included sequence time length, minimum and maximum of each measure, average variance of each measure, difference between start and end values of each sequence, variation of average speed and acceleration of each measure. SVM was used for classification. A cohort of 22 participants took part, including 12 stroke and 10 healthy subjects. The proposed system is able to successfully predict scores for the two standard motions “Salute” and “Hand lift”. An ideal automated FMA system would be able to assess the full range of impairments in both upper and lower extremity (Fig. 6).
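The kind of per-measure feature vector listed above can be sketched as follows. This is an illustration under assumptions, not the feature set of [47]: the exact definitions of the variance and of the speed and acceleration terms are not given here, so simple finite-difference means are used, and the function name, dictionary keys and default frame rate are hypothetical.

```python
def kinematic_features(values, fps=30.0):
    """Summary features for one kinematic measure sampled at `fps` Hz.

    `values` must contain at least three samples.
    """
    dt = 1.0 / fps
    # Finite-difference speed and acceleration (assumed definitions).
    speed = [(b - a) / dt for a, b in zip(values, values[1:])]
    accel = [(b - a) / dt for a, b in zip(speed, speed[1:])]
    mean = sum(values) / len(values)
    return {
        "duration_s": (len(values) - 1) * dt,
        "min": min(values),
        "max": max(values),
        "variance": sum((v - mean) ** 2 for v in values) / len(values),
        "start_end_diff": values[-1] - values[0],
        "mean_abs_speed": sum(abs(s) for s in speed) / len(speed),
        "mean_abs_accel": sum(abs(a) for a in accel) / len(accel),
    }
```

A vector of this form, computed for each joint angle or distance of interest, could then be passed to a classifier such as an SVM.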

Performance of patients also varies from time to time during the day. Following the Abnormal Involuntary Movement Scale (AIMS) protocol, Dyshel et al. [46] recorded 9 PD patients with varying severity of Levodopa-Induced Dyskinesia (LID). The subjects performed two motor tasks normally used for UPDRS assessment. After motion segmentation and noise reduction, discriminative features were extracted. For each joint motion, chunks were extracted and put into distributions represented by two 30-bin histograms. One histogram represented the normal state and the other the dyskinetic state. Earth Mover’s Distance (EMD) was calculated and the 10 motion chunks representing the highest discrimination were selected. Each 10 dimensional vector was then reduced to a single number using one of three methods: average motion length, average motion speed or distribution of quantized motion lengths. A soft-margin SVM-based algorithm was used to calculate the AIMS score.
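The histogram comparison step can be illustrated with a small sketch of the Earth Mover's Distance between two normalised 1D histograms that share the same bins. It is not the authors' code: the bin range, the function names and the choice to express distance in units of bins are assumptions.

```python
def histogram(values, bins, lo, hi):
    """Normalised histogram of `values` over [lo, hi] with `bins` equal bins."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        # Clamp out-of-range values into the first or last bin.
        idx = max(0, min(int((v - lo) / width), bins - 1))
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts]

def emd_1d(p, q):
    """Earth Mover's Distance between two normalised histograms, in bins.

    For 1D histograms on identical bins this equals the summed absolute
    difference of the cumulative distributions.
    """
    carried = 0.0
    distance = 0.0
    for a, b in zip(p, q):
        carried += a - b
        distance += abs(carried)
    return distance
```

A motion chunk whose normal and dyskinetic histograms are far apart under this distance separates the two states well, which is the sense in which the most discriminative chunks would be ranked.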

Since the introduction of DeepPose [142] in 2014, CNN-based human pose estimation has achieved very high accuracy. Li et al. [85] used the well-known Convolutional Pose Machines (CPM) [153] for extracting skeleton data for analysing LID. Levodopa is used to treat PD but its prolonged use causes motor complications (Dyskinesia). The study involved creating a publicly available dataset involving 9 participants having LID. The skeleton data extracted using CPM was used to generate 15 kinematic features. These features helped to score the participants based on the UPDRS.

In the above-mentioned articles, authors have relied mostly on skeleton data obtained from Kinect, with the exception of Li et al. [85], who used a CNN for pose extraction from RGB data. Authors have not combined RGB and skeleton data, which may result in improved accuracy. Mostly, authors have used kinematic parameters directly as primary features. In Cuellar et al. [38] and Ciabattoni et al. [36], quaternion-based pose distances have been used as primary features. Quaternions can help in capturing rotation in 3D and are better suited to this than the normally used Euclidean distance. Liao et al. [87] used auto-encoders for dimensionality reduction of skeleton sequences. In most applications, we have seen little use of dimensionality reduction techniques applied to kinematic data. In applications requiring a continuous score, Support Vector Regression (SVR) and LSTM have been used. In order to measure a similarity score, temporal sequence matching algorithms such as HMM and DTW have been used. In applications requiring discrete scoring such as UPDRS, SVM has been mainly used. It remains to be seen how modern DL algorithms would perform classification in such cases where large-scale datasets are not available.
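A quaternion-based pose distance can be sketched as the mean rotation angle between corresponding joint orientations. This is a generic formulation, not necessarily the one used in [36, 38]; it assumes unit quaternions in (w, x, y, z) order, and the function names are hypothetical.

```python
import math

def quaternion_angle(q1, q2):
    """Rotation angle in radians between two unit quaternions (w, x, y, z)."""
    # The absolute value makes q and -q, which encode the same rotation, equal.
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return 2.0 * math.acos(min(1.0, dot))

def pose_distance(pose_a, pose_b):
    """Mean per-joint rotation angle between two poses (lists of quaternions)."""
    angles = [quaternion_angle(a, b) for a, b in zip(pose_a, pose_b)]
    return sum(angles) / len(angles)
```

Unlike a Euclidean distance on joint positions, this measure depends only on how far each joint has rotated, so it is unaffected by limb length.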

9 Datasets

Table 7 summarises publicly available datasets that are captured through vision-based methods. SPHERE is a series of datasets that presents normal and physically impaired movements for walking, walking-up stairs and sit to stand movements. Vakanski et al. [145] introduced the UI-PRMD dataset consisting of 10 different physical activities commonly performed in physical rehabilitation or therapy scenarios. The dataset provides skeleton data obtained through Kinect along with joint angles. Mean Square Error (MSE) on joint angles has been used by authors to calculate variability between each subject, which has also provided a benchmark for establishing incorrect movements. Unlike other areas of CV, most of the research is based on relatively small datasets which are not available publicly.

10 Discussion

In this section, the methods used in articles reviewed are discussed in terms of their usage, drawbacks and disadvantages. Research in this area is very different from objectives like activity recognition where the common goal is to explore machine learning and pattern recognition techniques to recognise various activities. Also, often the datasets used to evaluate the models are the same and thus a direct comparison between the various methods employed by authors is useful. However, due to the widely varying goals, datasets used and types of physical impairments, such comparison in this domain is difficult. Instead, for the benefit of readers, we chose to compare the general techniques and algorithms employed to achieve the goals. Following Sect. 2, we split the discussion into data, feature encoding and feature comparison.

10.1 Physical impairment data

In articles discussed in this review, authors have mostly used Kinect-based skeleton data. The main advantage is that Kinect provides RGB videos, depth videos and 3D joint positions as well as posture through a very cheap and easy to use hardware/software system. Thus, authors from domains other than CV can take advantage of it. However, the Kinect system is not very accurate [152] and today’s DL-based solutions outperform the Kinect system both in terms of 2D [20, 50] and 3D pose estimation [107, 157]. Due to the lack of direct comparison, it is difficult to gauge the scope of improvement in the articles reviewed with DL-based methods instead of Kinect. Unlike other areas of CV application such as activity recognition, authors have not used RGB or depth data in combination with skeleton information. RGB data lacks the precise joint positions whereas skeleton data lacks information such as optical flow, curves, edges and others. Modern neural networks are very good at learning such information. Combining skeleton data with RGB information guides the DL model to focus on RGB features on the human body. This has led to increased accuracy in activity recognition models [12, 143] and thus, research in this domain can also benefit from the same. Authors have also used colour-based tracking, including tracking the hand while holding a coloured ball and skin colour tracking. These methods were in use before the introduction of Kinect, but some of them are still in use today. They have several limitations, such as tracking only one part of the body and being subject to noise and background interference. It also needs to be noted that Kinect is no longer in production and researchers will need to switch to other devices such as the Orbbec Astra [37]. The authors of [37] discuss the interchangeability and accuracy of the Orbbec Astra and the Kinect device. So it is worth taking the time and effort to switch to new devices and techniques. Authors have also used other non-vision based devices such as BCI and LMC which, when used with vision-based devices, expand the domain of physical rehabilitation to other areas such as BCI to support physical rehabilitation [55].

10.2 Feature encoding

Table 8 highlights the various feature encoding methods used by authors. It also outlines their drawbacks and suggests alternatives. Many authors have used skeleton trajectories or kinematic parameters derived from these trajectories directly as features for comparison. While such parameters are useful for purposes such as posture recognition and joint mobility determination, these are highly specific to the physical impairment and thus are not generalisable and may suffer from over-fitting. Instead of encoding kinematic parameters, the relationship between parameters such as performance metrics, distances, pairwise relations and others, can be used for encoding. Although these methods can produce better results, they also suffer from the same drawbacks such as an inability to learn, over-fitting and so on. A better alternative would be to learn from the data instead of comparing kinematic parameters numerically or graphically. Thus, more recently authors have used techniques including, but not limited to, DTW, HMM and TASS to build temporal models that can help to discriminate differences between patient and ideal pose sequences. Authors have also attempted to encode features from RGB videos for goals such as activity recognition. Feature encoding techniques include Hu moments, colour-based segmentation, motion template gradients and so on. Mostly, these are pixel-based techniques which suffer from noise interference and do not work in the case of blurry images. Modern alternatives include the use of generalised local feature descriptors such as Scale-Invariant Feature Transform (SIFT), SURF, Oriented FAST and rotated BRIEF (ORB) or image descriptors such as Bag of Words (BoW), Histogram of Oriented Gradients (HoG) and others. Modern techniques also involve DL-based algorithms for semantic segmentation [9] which have produced state-of-the-art results but, again, these require large-scale datasets. In the absence of large datasets, using GANs for modelling artificial patient data can be very useful as shown by Li et al. [84]. There are many variants of GANs, each of which has its own domain of applicability and limitations. In Im et al. [68], the authors present a quantitative comparison of various GAN types. Instead of manually selecting joints for recognizing abnormal motions [80], one can use an attention mechanism [94] to learn the importance of joints for a particular impairment.

10.3 Feature comparison

In Table 9, various feature comparison methods used by authors are highlighted along with drawbacks and possible alternatives. The most basic methods used by researchers are simple numerical and graphical comparisons of skeleton trajectories, joint angles or other kinematic parameters. The results are hard to generalise beyond the examples presented and may lack statistical significance. A better alternative would be to use statistical tests such as ANOVA, Chi-squared tests etc. Graph trajectories can be compared with methods such as KL-divergence, which could provide statistically significant results. In general, authors have used temporal sequence comparison algorithms like HMM, DTW, LSTM and their variants such as HSMM (Hidden Semi-Markov Model), MD-DTW, Incremental DTW (I-DTW), SS-DTW and others. Note that these algorithms can be used for sequence encoding as well as sequence comparison. Some authors have used algorithms such as K-means and SVM to compare encoded sequences generated by HMM or DTW. The same could also be done with techniques such as Conditional Random Fields (CRF) and Bayesian networks. Other techniques involve the use of generative models such as Restricted Boltzmann Machine (RBM), Gaussian RBM (GRBM) and Semi-Naive Bayes (SNB) for classification. These have largely been replaced by DL-based algorithms such as DCNN, LSTM and TCN. To compensate for a lack of datasets, one can also look at learning from single images [155]. When comparing sequential data with DL, LSTM is the most popular type of architecture that has been used. But, recently, Temporal Convolutional Networks (TCN) [78] and Ordinary Differential Equation (ODE) networks [29] have shown very competitive results and these two architectures are being actively pursued by researchers. DCNNs have been almost exclusively used for processing image and video data but researchers are now exploring the use of capsule networks [117] for the same.

11 Conclusion

In this review, we have collected, summarised and analysed major computer vision-based research in the area of rehabilitation and assessment of patients having physical impairments. In this article, we present our own taxonomy. To the best of our knowledge, this is the only article to date that has covered the latest advances in this application area and presented them from a CV application point of view. It particularly focuses on comparison and assessment of abnormal human motions. This is especially significant due to the wide-ranging and hugely varying manifestations of abnormal or impaired human movements. We have seen approaches ranging from simple graphical comparison of joint angle trajectories to the application of complex algorithms such as GANs. The absence of image- and video-based DL algorithms contrasts with other areas such as pose estimation and action/activity recognition, where DL algorithms have been almost exclusively used. This could be down to the unavailability of large-scale datasets. Also, in this domain, most articles are exclusively focused on the use of skeletal information as raw data. This means low-level image/video features and high-level contextual cues (e.g. body-object interaction) are not a part of the intelligent processing. Movement information deduced from skeleton information is sparse in nature, whilst image-based dense optical flow from video is richer in contextual information. Thus, research in this domain may benefit from a meaningful combination of skeleton and spatio-temporal information linked to video data. In the case of scoring type applications, DL-based scoring may not be easy to adapt, as it is often more complicated for patients and clinicians to understand. More recently, researchers have attempted to fit existing scoring methods while training DL-based models. CV will play a significant role in rehabilitation and assessment, which is a sub-field of health and social care. However, owing to several factors such as difficulty in obtaining patient data, ethical issues and so on, this area is yet to be extensively explored by the CV community. We conclude the discussion with recommendations for future research:

Datasets The lack of DL-based methods compared to other applications of CV could be due to the unavailability of large-scale publicly available datasets demonstrating physically impaired patients’ activities. The publicly available datasets mentioned in Table 7 are relatively small compared to modern datasets targeting DL. For example, the NTU-RGB dataset [123], targeted towards DL-based activity recognition, contains 60K samples and is much larger than the datasets presented in Table 7. Therefore, research in this domain needs publicly available large-scale datasets to take advantage of modern DL methods. Although datasets are indispensable, the problem can be mitigated to a certain extent by using GANs. Data Augmentation GAN (DAGAN) has been purpose-built for augmenting data [4]. It is based on conditional GAN and is capable of generating unseen within-class data samples. This is different to traditional data augmentation techniques, where images/videos are rotated or translated to augment the data. Likewise, balancing GANs can be used to mitigate class imbalance problems [93]. In the absence of real data, authors have recently created fully synthetic data from GANs. For example, Li et al. [84] generated synthetic data for incorrect human activity from four different types of GANs. Frid-Adar et al. [54] used GANs to create synthetic data for liver-lesion classification. Besides GANs, single-shot or few-shot learning has the potential to learn from a small amount of data [135]. These methods are often presented as Siamese networks that discriminate or measure deviations from a reference sample [34]. In Chung et al. [34], the authors used a two-stream convolutional Siamese network for person re-identification. A similar approach could be adapted for assessing physically impaired persons, where deviation from regular healthy activity could be measured through single-shot or few-shot learning.
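To make the contrast with GAN-based augmentation concrete, the following is a minimal sketch of the traditional rotate-and-translate augmentation mentioned above. The text refers to images/videos; applying the same idea to 3D skeleton joints is our assumption for illustration, and the function names and joint coordinates are hypothetical.

```python
import math


def rotate_translate(skeleton, angle_deg, offset=(0.0, 0.0, 0.0)):
    """Rotate (x, y, z) joints about the vertical y-axis, then translate them."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    ox, oy, oz = offset
    return [(c * x + s * z + ox, y + oy, -s * x + c * z + oz)
            for x, y, z in skeleton]


def bone_length(p, q):
    """Euclidean distance between two joints."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# Hypothetical shoulder, elbow and wrist positions in metres.
arm = [(0.20, 1.40, 2.00), (0.25, 1.10, 2.05), (0.30, 0.85, 1.90)]

# Each (angle, offset) pair yields one extra training sample of the same pose.
augmented = [rotate_translate(arm, angle, (0.1, 0.0, -0.2))
             for angle in (-15, 0, 15)]
```

Such rigid transformations only change the viewpoint: bone lengths and joint angles are preserved, so no new movement pattern is created. This is the limitation that generative approaches such as DAGAN aim to address by producing unseen within-class samples.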

Statistically significant results Simple graphical or numerical comparison of skeleton trajectories is not suitable for producing statistically significant results. In such cases, authors can use statistical tests including, but not limited to, ANOVA and Chi-Square. However, such statistical approaches are model-less. These approaches lack generalisation and are not scalable. Moreover, they are often inferior to the model-based techniques. Venugopalan et al. (2013) and Liao et al. (2019) show that model-based approaches such as DTW and Gaussian Mixture Model (GMM) log-likelihood work better than non-model approaches such as Euclidean distance, Mahalanobis distance and Cross-Correlation. Thus, instead of directly comparing kinematic parameters, we recommend modelling the data with algorithms including, but not limited to, HMM, DTW and TASS. Moreover, combining such models with classification/regression algorithms such as SVM or Random Forest (RF) is even better than modelling the data alone. Taati et al. [136] model the data using HMM and use SVM to classify the data, and show that the combination of HMM and SVM works better than using SVM alone.
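As a pointer to what such a statistical test involves, the following is a minimal sketch of the one-way ANOVA F statistic computed over a kinematic parameter measured in several groups. The group names and the peak knee-flexion values are hypothetical. Turning F into a p-value additionally requires the F distribution, which is left to a statistics library.

```python
def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of sample groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation explained by group membership.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Residual variation inside each group.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = n_total - k
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical peak knee-flexion angles in degrees for three groups.
healthy = [61.0, 62.0, 63.0]
mild = [62.0, 63.0, 64.0]
severe = [65.0, 66.0, 67.0]

f_stat = one_way_anova_f([healthy, mild, severe])
print("F =", f_stat)
```

A large F indicates that differences between group means are large relative to the spread within groups. As noted above, such a test says nothing about how the movement unfolds over time, which is where model-based techniques come in.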

Modern DL techniques Researchers in this domain have begun to use DL-based techniques such as CNNs and LSTMs. However, authors have used very basic and obsolete architectures that fail to demonstrate the true potential of these algorithms. The CNNs used by Zhi et al. [163] and Leightley et al. [81] are very basic in nature. Our recommendation is to use modern pre-trained CNN architectures including, but not limited to, EfficientNets [137] and NasNet [110]. Authors have introduced TCN [74, 78] as an effective and faster alternative to LSTMs. TCN-based networks are suitable for human activity recognition, as demonstrated by Kim et al. [74]. Similarly, ODENets [29] are being extensively researched for processing of temporal information. DL is a rapidly evolving field and the introduction of better and more efficient techniques is a regular occurrence. For example, Graph Neural Networks, popularised in their convolutional form by Kipf et al. [75], have been extensively used by authors for recent state-of-the-art activity recognition models [124], and authors in this domain can potentially benefit from the same.
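To indicate why TCNs can serve as an alternative to LSTMs for temporal data, the following is a minimal sketch of the causal dilated 1-D convolution that TCNs are commonly built from. It is not the architecture of [74, 78]; the function names, the fixed weights and the input values are hypothetical, and a real TCN would learn the weights and add residual connections and non-linearities.

```python
def causal_dilated_conv1d(x, weights, dilation=1):
    """Compute y[t] = sum_k weights[k] * x[t - k * dilation] with left zero padding."""
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:  # samples before the start of the sequence count as zero
                acc += w * x[idx]
        y.append(acc)
    return y


def receptive_field(kernel_size, dilations):
    """Number of past frames seen by a stack of causal dilated layers."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Hypothetical joint-angle signal passed through three stacked layers.
signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
hidden = signal
for d in (1, 2, 4):
    hidden = causal_dilated_conv1d(hidden, [0.5, 0.5], dilation=d)

print(hidden)
print(receptive_field(2, [1, 2, 4]))
```

Every output frame depends only on current and past inputs, and all frames of a layer can be computed in parallel, unlike the step-by-step recurrence of an LSTM. Doubling the dilation at each layer makes the receptive field grow rapidly with depth.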

References

Adams, R.J., Lichter, M.D., Krepkovich, E.T., Ellington, A., White, M., Diamond, P.T.: Assessing upper extremity motor function in practice of virtual activities of daily living. IEEE Trans. Neural Syst. Rehabil. Eng. 23(2), 287–296 (2015)

Ahad, M.A.R., Antar, A.D., Shahid, O.: Vision-based action understanding for assistive healthcare: A short review. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2019, pp. 1–11 (2019)

Antón, D., Goñi, A., Illarramendi, A., Torres-Unda, J.J., Seco, J.: Kires: A kinect-based telerehabilitation system. In: e-Health Networking, Applications & Services (Healthcom), 2013 IEEE 15th International Conference on, pp. 444–448. IEEE (2013)

Antoniou, A., Storkey, A., Edwards, H.: Data augmentation generative adversarial networks. arXiv:1711.04340 (2017)

Antunes, J., Bernardino, A., Smailagic, A., Siewiorek, D.P.: Aha-3d: A labelled dataset for senior fitness exercise recognition and segmentation from 3d skeletal data. In: BMVC, pp. 332 (2018)

Antunes, M., Baptista, R., Demisse, G., Aouada, D., Ottersten, B.: Visual and human-interpretable feedback for assisting physical activity. In: ECCV, pp. 115–129. Springer (2016)

Avilés, H., Luis, R., Oropeza, J., Orihuela-Espina, F., Leder, R., Hernández-Franco, J., Sucar, E.: Gesture therapy 2.0: Adapting the rehabilitation therapy to the patient progress. Probabilistic Problem Solving in BioMedicine, pp. 3 (2011)

Avola, D., Cinque, L., Foresti, G. L., Marini, M.R., Pannone, D.: Vrheab: a fully immersive motor rehabilitation system based on recurrent neural network. Multimedia Tools and Applications, pp. 1–28 (2018)

Badrinarayanan, V., Kendall, A., Cipolla, R.: Segnet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2481–2495 (2017)

Baptista, R., Ghorbel, E., Shabayek, A.E.R., Moissenet, F., Aouada, D., Douchet, A., André, M., Pager, J., Bouilland, S.: Home self-training: visual feedback for assisting physical activity for stroke survivors. Comput. Methods Programs Biomed. 176, 111–120 (2019)

Baptista, R., Antunes, M.G.A., Aouada, D., Ottersten, B.: Video-based feedback for assisting physical activity. In: VISAPP (2017)

Baradel, F., Wolf, C., Mille, J.: Human activity recognition with pose-driven attention to rgb. In: BMVC 2018-29th British Machine Vision Conference, pp. 1–14 (2018)

Baumgartner, R.N., Koehler, K.M., Gallagher, D., Romero, L., Heymsfield, S.B., Ross, R.R., Garry, P.J., Lindeman, R.D.: Epidemiology of sarcopenia among the elderly in new mexico. Am. J. Epidemiol. 147(8), 755–763 (1998)

Bay, H., Tuytelaars, T., Van Gool, L.: Speeded up robust features. In: ECCV, pp. 404–417. Springer, New York (2006)

Benettazzo, F., Iarlori, S., Ferracuti, F., Giantomassi, A., Ortenzi, D., Freddi, A., Monteriù, A., Innocenzi, S., Capecci, M., Ceravolo, M.G. et al.: Low cost rgb-d vision based system to support motor disabilities rehabilitation at home. In: Ambient Assisted Living, pp. 449–461. Springer, New York (2015)

Besl, P.J., Jain, R.C.: Invariant surface characteristics for 3-d object recognition in range images. Comput. Vis. Graph. Image Process. 31(3), 400 (1985)

Bigoni, M., Baudo, S., Cimolin, V., Cau, N., Galli, M., Pianta, L., Tacchini, E., Capodaglio, P., Mauro, A.: Does kinematics add meaningful information to clinical assessment in post-stroke upper limb rehabilitation? A case report. J. Phys. Therap. Sci. 28(8), 2408–2413 (2016)

Bradski, G.R., Davis, J.W.: Motion segmentation and pose recognition with motion history gradients. Mach. Vis. Appl. 13(3), 174–184 (2002)

Cameirão, M.S., Badia, S.B., Oller, E.D., Verschure, P.F.M.J.: Neurorehabilitation using the virtual reality based rehabilitation gaming system: methodology, design, psychometrics, usability and validation. J. Neuroeng. Rehabil. 7(1), 48 (2010)

Cao, Z., Simon, T., Wei, S.-E., Sheikh, Y.: Realtime multi-person 2d pose estimation using part affinity fields. (2016)

Capecci, M., Ceravolo, M.G., Ferracuti, F., Iarlori, S., Kyrki, V., Longhi, S., Romeo, L., Verdini, F.: Physical rehabilitation exercises assessment based on hidden semi-markov model by kinect v2. In: 2016 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), pp. 256–259. IEEE (2016)

Capecci, M., Ceravolo, M.G., Ferracuti, F., Iarlori, S., Kyrki, V., Monteriù, A., Romeo, L., Verdini, F.: A hidden semi-markov model based approach for rehabilitation exercise assessment. J. Biomed. Inform. 78, 1–11 (2018)

Capecci, M., Ceravolo, M.G., Ferracuti, F., Iarlori, S., Monteriù, A., Romeo, L., Verdini, F.: The kimore dataset: kinematic assessment of movement and clinical scores for remote monitoring of physical rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 27(7), 1436–1448 (2019)

Cary, F., Postolache, O., Girao, P.S.: Kinect based system and artificial neural networks classifiers for physiotherapy assessment. In: Medical Measurements and Applications (MeMeA), 2014 IEEE International Symposium on, pp. 1–6. IEEE (2014)

Chang, C.-Y., Lange, B., Zhang, M., Koenig, S., Requejo, P., Somboon, N., Sawchuk, A.A., Rizzo, A.A., et al.: Towards pervasive physical rehabilitation using microsoft kinect. In: PervasiveHealth, pp. 159–162 (2012)

Chang, Y.-J., Chen, S.-F., Huang, J.-D.: A kinect-based system for physical rehabilitation: A pilot study for young adults with motor disabilities. Res. Dev. Disabil. 32(6), 2566–2570 (2011)

Chang, Y.-J., Han, W.-Y., Tsai, Y.-C.: A kinect-based upper limb rehabilitation system to assist people with cerebral palsy. Res. Dev. Disabil. 34(11), 3654–3659 (2013)

Chen, C., Jafari, R., Kehtarnavaz, N.: Utd-mhad: A multimodal dataset for human action recognition utilizing a depth camera and a wearable inertial sensor. In: ICIP, pp. 168–172. IEEE (2015)

Chen, T.Q., Rubanova, Y., Bettencourt, J., Duvenaud, D.K.: Neural ordinary differential equations. In: Advances in neural information processing systems, pp. 6571–6583 (2018)

Chen, Y.-L., Liu, C.-H., Yu, C.-W., Lee, P., Kuo, Y.-W.: An upper extremity rehabilitation system using efficient vision-based action identification techniques. Appl. Sci. 8(7), 1161 (2018)

Chen, Y., Huang, S., Yuan, T., Qi, S., Zhu, Y., Zhu, S.-C.: Holistic++ scene understanding: Single-view 3d holistic scene parsing and human pose estimation with human-object interaction and physical commonsense. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 8648–8657 (2019)

Cho, C.-W., Chao, W.-H., Lin, S.-H., Chen, Y.-Y.: A vision-based analysis system for gait recognition in patients with Parkinson’s disease. Expert Syst. Appl. 36(3), 7033–7039 (2009)

Chu, W.-S., Zhou, F., De la Torre, F.: Unsupervised temporal commonality discovery. In: ECCV, pp. 373–387. Springer, New York (2012)

Chung, D., Tahboub, K., Delp, E.J.: A two stream siamese convolutional neural network for person re-identification. In: Proceedings of the IEEE international conference on computer vision, pp. 1983–1991 (2017)

Ciabattoni, L., Ferracuti, F., Iarlori, S., Longhi, S., Romeo, L.: A novel computer vision based e-rehabilitation system: From gaming to therapy support. In: Consumer Electronics (ICCE), 2016 IEEE International Conference on, pp. 43–44. IEEE (2016)