Abstract

The present paper models the complex relationship between CO2 laser–MIG hybrid welding parameters and joint strength using different artificial neural network (ANN) algorithms. Laser power, welding speed and wire feed rate are the input parameters, and the tensile strength of the joint is the output. A full factorial experimental dataset is used for the purpose. Variants of backpropagation neural networks (BPNN) and radial basis function networks (RBFN) have been used as training algorithms. Altogether 65 different ANN architectures have been trained and tested using 6 different training algorithms to find the ANN with the best prediction capability. The 3-11-1 ANN architecture trained using BPNN with Bayesian regularisation shows the best prediction capability (mean square error 3.24E − 04) and is considered the best ANN. This ANN can be used to determine the values of the welding process parameters required to yield a specific welding strength and is suitable for online process monitoring and control. Finally, a sensitivity analysis has been conducted, and it is found that maximum welding strength can be obtained with low wire feed rate (4 m/min), low welding speed (2 m/min) and high laser power (3 kW).

1 Introduction

Conventional laser beam welding offers certain advantages, such as high welding speed, minimal thermal distortion and deep weld penetration. When it is combined with conventional metal inert gas (MIG) welding, the process can bridge large gaps and weld reflective materials with ease. This method, in which a laser beam and a MIG arc act simultaneously in the same weld zone, is known as hybrid laser–MIG welding. The automotive and shipbuilding industries are increasingly employing it [1].

Hybrid laser welding is influenced by a large number of controllable process parameters with complex interrelationships. Initially, researchers studied the influence of these process parameters on weld bead characteristics [2, 3], microstructure and mechanical properties [2, 4,5,6,7,8], welding strength [9] and welding defects [10]. Subsequently, weld penetration depth [11] and arc stability [12] have been optimised using design of experiments (DOE)-based techniques. Zhan et al. [13] determined the optimal combination of welding parameters for fiber laser welding of 3-mm- and 5-mm-thick TA15 alloy plates. Laser power, welding velocity and defocus distance were considered as input parameters, while heat input, fusion depth and weld width were the measured output parameters. Wang et al. [14] employed disk laser beam welding to join 2-mm-thick 2A14-T6 aluminium alloy plates and found that the finest microstructure, minimum porosity ratio, highest hardness and maximum tensile strength were obtained with a laser power of 2500 W, a welding velocity of 2.0 m/min and a heat input of 75 kJ/m.

For modelling such a multivariable, complex process, artificial neural networks (ANN) can be an effective tool. ANN is known as a ‘universal function approximator’ [15] because it can model the function underlying a dataset to any arbitrary degree of accuracy. It has been employed for process modelling in electric discharge machining [16], laser cutting [17, 18], laser welding [19] and conventional welding [20]. All of the works mentioned above, however, employed the single hidden layer backpropagation neural network (BPNN) technique [21], which is the most popular among feedforward ANN algorithms. Dong et al. [22] optimised the connection weights of a backpropagation neural network using a genetic algorithm to improve its prediction capability and applied it to predict the mechanical properties of welded joints. A prediction error of less than 5% indicated the efficacy of the model developed.

The literature reports the application of BPNN with gradient descent and momentum, and of BPNN with the Levenberg–Marquardt (LM) algorithm, for ANN modelling. BPNN with LM is an order of magnitude faster [23] than traditional BPNN, but it sometimes yields poor prediction capability when modelling noisy datasets. In contrast, a network trained through BPNN with Bayesian regularisation (BR) [24] can perform exceptionally well during testing or prediction with small and noisy datasets. Very few applications of it have been found in the literature. Recently, Chaki and Ghosal [25, 26] employed it for evolutionary computation-based optimisation of the hybrid laser welding process. Chaki and Ghosal [25] optimised the depth of penetration during CO2 laser–MIG hybrid welding of 5005 Al–Mg alloy using an ANN-GA hybrid model, obtaining a maximum penetration depth of 3.84 mm with a mean absolute % error of 0.7198%. Chaki et al. [26] further conducted a comparative study of ANN-GA, ANN-SA and ANN-Quasi Newton models for optimisation of the hybrid CO2 laser–MIG process, in which the ANN-GA model showed the best optimisation performance with an absolute % error of only 0.0503% during experimental validation. In both works, BPNN with BR was used for computing the objective function during optimisation.

The radial basis function network (RBFN) [27, 28] is another feedforward network suitable for process modelling and has already been applied in several engineering applications [29, 30]. However, no application of it to laser welding processes has been reported so far.

The literature survey indicates very few works on the application of ANN for modelling hybrid laser welding process parameters. Only BPNN with BR [25, 26] has been used for prediction of process parameters, as part of evolutionary computation-based optimisation of the hybrid laser welding process. The efficacy of no other ANN training algorithm has been tested so far for prediction in hybrid laser welding. Selecting a suitable ANN for a process requires a detailed comparative study of the performance of different ANN training algorithms with various network architectures, but no such study has been reported for the hybrid laser welding process.

In the present work, altogether 65 different networks have been trained and tested with six different ANN training algorithms, namely gradient descent BPNN with momentum, gradient descent BPNN with momentum and variable learning rate, BFGS quasi-Newton BPNN, BPNN with the LM algorithm, BPNN with BR, and RBFN, for CO2 laser–MIG hybrid welding of aluminium alloy sheet. Apart from BPNN with BR, none of these ANN algorithms has previously been trained and tested for the hybrid laser welding process. Moreover, to facilitate training and testing of such a large number of ANN architectures, a single program has been developed in the MATLAB R2017a environment that trains and tests all 55 BPNN architectures using 5 different ANN training algorithms in a single run. The training and testing performance of each network architecture is stored in a file for further comparative study. The literature does not show the application of such programs for continuous evaluation of different ANN models and architectures, and the program can also be used for similar ANN-based process parameter prediction in other manufacturing processes. The 10 RBFN architectures have been computed separately. The dataset used in the modelling has been obtained from DOE-based experimentation on CO2 laser–MIG hybrid welding of aluminium alloy sheet [26], in which the tensile strength of the welded joint was considered as the output quality characteristic. The best ANN has been determined based on the accuracy achieved in predicting the output characteristic. Finally, a sensitivity analysis has been carried out to determine the effect of the process parameters on the output. The selected ANN model is capable of determining the process parameters required to achieve a specific welding strength and can be used for online process monitoring and control.

2 Prediction modelling of hybrid laser welding process

In the present work, ANN has been employed for prediction modelling of the hybrid laser welding process. The modelling has been done using the following steps:

(1) Generation of experimental dataset

(2) Training with backpropagation neural networks

(3) Training with radial basis neural networks

(4) Testing of trained ANN

2.1 Generation of experimental dataset

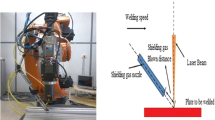

The experimental dataset used for the present work has been taken from the published work of Chaki et al. [26]. A three-level, three-factor full factorial experiment was conducted for hybrid laser welding of AA8011 aluminium alloy using a hybrid setup combining a 3.5 kW CO2 laser welding system (Rofin Slab CO2 laser) with a MIG welding machine, as shown in Fig. 1.

The controllable input parameters during experimentation were (1) laser power (P) in kW, (2) welding speed (Vw) in m/min and (3) wire feed rate (FR) in m/min. The other controllable parameters were kept fixed. The distance between the laser beam and the arc was 2 mm, and the focal plane of the laser was 1 mm below the job surface. The stand-off distance of the welding torch was 12 mm from the workpiece, and the torch angle was maintained at 53° to the job surface. A mixture of helium and argon in equal proportion was used as shielding gas at an operating pressure of 2.5 bar.

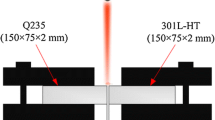

Cold-rolled AA8011 grade aluminium alloy plate made by Hindalco (India) (0.6–1.00% Fe, 0.50–0.90% Si, 0.05% Mg, 0.20% Mn, 0.10% Cu, 0.20% Zn, 0.08% Ti, 0.05% Cr and balance Al) of 3 mm thickness has been used as the job specimen. For the butt-welding experiments, 100 mm × 75 mm specimens were cut from the aluminium alloy sheet by machining, and a square butt joint configuration was prepared. The filler material used for MIG welding was ER4043, which contains 5.5% Si, around 0.4% other alloying elements (0.007% Mg, 0.17% Fe, 0.08% Cu, 0.005% Mn, 0.04% Ti, 0.0016% Be, 0.02% Sr) and balance Al. The feed wire diameter is 3.15 mm.

An exhaustive set of pilot experiments was conducted, varying one process parameter at a time while keeping the rest constant, to determine the feasible range of each input parameter that gives a weld seam with a smooth appearance and no visible defects. The feasible operating ranges selected in this way are 2–3 kW for laser power (P), 2–4 m/min for welding speed (Vw) and 2–8 m/min for wire feed rate (FR). Three levels of each input process parameter were selected for the experiment; the ranges of the controllable factors and their levels are given in Table 1. Altogether 27 experiments were designed and conducted based on a three-factor, three-level full factorial design without replication.
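The 27-run design described above can be enumerated mechanically. The sketch below is an illustrative Python version (the study itself used MATLAB); it works in coded levels (-1, 0, +1) because the physical level values belong to Table 1, and the names `LEVELS`, `FACTORS` and `full_factorial` are introduced here only for illustration.

```python
from itertools import product

# Coded levels for a three-level design: -1 = low, 0 = middle, +1 = high.
# The physical values behind each code are those listed in Table 1.
LEVELS = (-1, 0, 1)
FACTORS = ("P", "Vw", "FR")


def full_factorial(levels=LEVELS, n_factors=len(FACTORS)):
    """Return one run per combination of factor levels (no replication)."""
    return [tuple(run) for run in product(levels, repeat=n_factors)]


design = full_factorial()
print(len(design))  # 27 runs = 3 levels ** 3 factors
for run in design[:3]:
    print(dict(zip(FACTORS, run)))
```

Each tuple is one welding run; mapping the codes to the Table 1 values gives the physical settings.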

The welding strength (WS) of the joint is considered as the output parameter; it was measured from tensile tests carried out on an INSTRON 8801 microprocessor-controlled 10-ton universal testing machine with an accuracy of ± 0.4%.

The parameters laser power (P), welding speed (Vw) and wire feed rate (FR) are the inputs to the ANN and constitute its input layer, while welding strength (WS) in MPa constitutes the output layer. Values of welding strength for the different combinations of input parameters are given in Fig. 2. Replication was deliberately avoided during experimentation, as it may lead to erroneous ANN training. 80% of the experimental dataset was randomly selected as training data, and the remaining 20% was used as testing data. The input vector (X) and output vector (D) of an ANN architecture are therefore \(\boldsymbol{X} = \left[ P \quad V_{w} \quad \text{FR} \right]\) and \(\boldsymbol{D} = \left[ \text{WS} \right]\). To improve training performance, the experimental input and output data were normalised between 0 and 1 (denoted Xnor and Dnor) before being used for ANN training and testing. ANN training and testing were programmed using the Neural Network Toolbox of MATLAB R2017a on a PC with an Intel Core i3-6006U (2 GHz) processor and 8 GB of memory.
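A minimal sketch of the preprocessing described above, assuming min-max scaling per variable and a seeded random 80/20 split of the 27 runs (rounding 0.8 × 27 = 21.6 to 22 training runs, which matches the 22 training data mentioned for the RBFN). The seed, the helper names and the rounding rule are assumptions; the paper does not state how the random split was drawn.

```python
import random


def min_max_normalise(column):
    """Scale values linearly to [0, 1]; also return (lo, hi) for de-normalisation."""
    lo, hi = min(column), max(column)
    span = hi - lo
    if span == 0:
        raise ValueError("a constant column cannot be min-max normalised")
    return [(v - lo) / span for v in column], (lo, hi)


def denormalise(values, bounds):
    """Invert min_max_normalise using the stored (lo, hi) bounds."""
    lo, hi = bounds
    return [lo + v * (hi - lo) for v in values]


def train_test_split(n_samples, train_fraction=0.8, seed=0):
    """Randomly split sample indices into training and testing sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(train_fraction * n_samples)  # round(21.6) = 22 for 27 runs
    return sorted(indices[:n_train]), sorted(indices[n_train:])


train_idx, test_idx = train_test_split(27)
print(len(train_idx), len(test_idx))  # 22 5
```

Keeping the (lo, hi) bounds of WS is what later allows predicted outputs to be converted back to MPa.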

2.2 Training with backpropagation neural networks

Backpropagation is an ANN training method that approximates the functional relationship between input and output vectors during the training phase by adjusting the weights of a multilayered feedforward network whose units have differentiable activation functions. The single hidden layer backpropagation neural network (BPNN) used in the present work has three nodes in the input layer, representing P, Vw and FR respectively, while the output layer has one neuron representing WS. The number of hidden layer neurons is treated as a variable parameter and varied between 5 and 15. The activation functions of the hidden layer and output layer neurons are sigmoidal and linear, respectively. The neurons of adjacent layers are fully interconnected by network weights, whose initial values are generated randomly and updated during iterations. In every layer, a bias acts exactly as a weight on a connection from a unit whose activation is always 1. A schematic diagram of the backpropagation neural network architecture is given in Fig. 3. The BPNN passes the weighted sum of the input signal (X) through the activation function to obtain the hidden layer output, which is converted in a similar manner into the output (O) of the output layer. The difference between the computed output (O) and the experimental output (D) is the error. Being a gradient descent method, backpropagation minimises the squared error computed by the network, expressed as the mean square error

\[ \text{MSE} = \frac{1}{Q}\sum_{i=1}^{Q}\left(D_{i} - O_{i}\right)^{2} \qquad (1) \]

where Q is the total number of training data.
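The forward pass and the training objective of Eq. (1) can be sketched in a few lines of NumPy. This illustrates the 3-h-1 structure only and is not the MATLAB toolbox implementation: the logistic sigmoid is assumed for the hidden layer (the text says only "sigmoidal"; MATLAB's default hidden transfer function is the hyperbolic tangent sigmoid, tansig), and the initial weight range is an arbitrary choice.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid (assumed form of the 'sigmoidal' hidden activation)."""
    return 1.0 / (1.0 + np.exp(-z))


def init_network(n_in=3, n_hidden=11, n_out=1, seed=0):
    """Random initial weights and biases for an n_in-n_hidden-n_out network."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.uniform(-0.5, 0.5, (n_hidden, n_in)),
        "b1": rng.uniform(-0.5, 0.5, n_hidden),
        "W2": rng.uniform(-0.5, 0.5, (n_out, n_hidden)),
        "b2": rng.uniform(-0.5, 0.5, n_out),
    }


def forward(net, X):
    """X has shape (Q, n_in); returns outputs O of shape (Q, n_out)."""
    hidden = sigmoid(X @ net["W1"].T + net["b1"])  # sigmoidal hidden layer
    return hidden @ net["W2"].T + net["b2"]        # linear output layer


def mse(D, O):
    """Eq. (1): mean of squared differences between targets D and outputs O."""
    D, O = np.asarray(D, dtype=float), np.asarray(O, dtype=float)
    return float(np.mean((D - O) ** 2))


net = init_network()
X = np.random.default_rng(1).random((22, 3))  # stand-in for normalised inputs
print(forward(net, X).shape)                  # (22, 1)
```

Training then consists of adjusting `W1`, `b1`, `W2` and `b2` to reduce `mse`; the algorithms below differ only in how that adjustment is computed.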

This MSE is minimised during training by successively updating the weights at each iteration; training stops when the convergence criteria are satisfied. Different weight-updating methodologies have been proposed to improve prediction accuracy and computational speed. In the present work, five backpropagation training algorithms have been used: gradient descent backpropagation with momentum (traingdm), gradient descent backpropagation with momentum and adaptive learning rate (traingdx), BFGS quasi-Newton backpropagation (trainbfg), backpropagation with the Levenberg–Marquardt algorithm (trainlm) and backpropagation with Bayesian regularisation (trainbr).

To facilitate training and testing of a large number of ANN architectures with different training algorithms, a separate program has been developed in MATLAB R2017a that completes training and testing of all 55 BPNN architectures in a single run and stores the results of each network for comparative study. The main program calls a separate subroutine for each ANN training algorithm. First, the subroutine containing a specific training algorithm is called, and different ANN architectures are generated sequentially by incrementing the number of hidden layer neurons by 1 for subsequent training and testing. When the maximum number of hidden layer neurons is reached for that algorithm, the main program calls the next subroutine and repeats the process with another training algorithm. The program terminates once all subroutines (training algorithms) have been executed. A schematic diagram of the program is given in Fig. 4.
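The structure of the sweep program described above (an outer loop over training algorithms, an inner loop over 5 to 15 hidden neurons, results written to a file) can be sketched as follows. The `trainers` mapping and its callables are hypothetical stand-ins for the MATLAB subroutines; each is assumed to take the hidden-layer size and return the training and testing MSE.

```python
import csv
from typing import Callable, Dict, List, Tuple

# Hypothetical trainer: hidden-layer size -> (training MSE, testing MSE).
Trainer = Callable[[int], Tuple[float, float]]
FIELDS = ["algorithm", "architecture", "train_mse", "test_mse"]


def sweep(trainers: Dict[str, Trainer], h_min: int = 5, h_max: int = 15) -> List[dict]:
    """Train and test every (algorithm, hidden size) pair and collect the results."""
    results = []
    for name, train_and_test in trainers.items():  # one "subroutine" per algorithm
        for h in range(h_min, h_max + 1):           # 3-h-1 architectures, h += 1
            train_mse, test_mse = train_and_test(h)
            results.append({"algorithm": name, "architecture": f"3-{h}-1",
                            "train_mse": train_mse, "test_mse": test_mse})
    return results


def save_results(results: List[dict], path: str) -> None:
    """Store one row per network for the later comparative study."""
    with open(path, "w", newline="") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(results)
```

With five trainers (traingdm, traingdx, trainbfg, trainlm, trainbr) the sweep yields 5 × 11 = 55 records, one per BPNN architecture.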

In gradient descent backpropagation with momentum (traingdm), the momentum term accelerates convergence by letting each weight correction depend not only on the local error gradient but also on the direction of the previous weight correction. The performance of gradient descent backpropagation is highly sensitive to the choice of learning rate. Gradient descent backpropagation with momentum and adaptive learning rate (traingdx) therefore does not keep the learning rate constant throughout training: it increases the learning rate when the error in the current iteration is lower than in the previous one and decreases it when the error increases, which improves the rate of convergence.
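The two update rules can be illustrated on a simple quadratic error surface. This sketch uses the textbook heavy-ball form Δw(t) = α Δw(t−1) − η ∇E; toolbox implementations may scale the terms differently. The adaptive rule (increase η when the error falls, reject the step and decrease η when the error grows by more than a tolerance) follows the description above, while the factors 1.05, 0.7 and 1.04 and the momentum reset after a rejected step are illustrative assumptions.

```python
import numpy as np


def gd_momentum(grad, loss, w0, lr=0.01, momentum=0.9, epochs=500,
                adaptive=False, lr_inc=1.05, lr_dec=0.7, max_perf_inc=1.04):
    """Gradient descent with momentum, optionally with an adaptive learning rate.

    Update rule: dw = momentum * dw_prev - lr * grad(w).
    adaptive=True: if the new error exceeds max_perf_inc * old error, the step
    is discarded and lr shrinks; if the new error is lower, lr grows.
    """
    w = np.asarray(w0, dtype=float)
    dw = np.zeros_like(w)
    err = loss(w)
    for _ in range(epochs):
        dw_new = momentum * dw - lr * grad(w)
        w_new = w + dw_new
        err_new = loss(w_new)
        if adaptive and err_new > max_perf_inc * err:
            lr *= lr_dec           # step too large: reject it and slow down
            dw = np.zeros_like(w)  # assumption: drop accumulated momentum
            continue
        if adaptive and err_new < err:
            lr *= lr_inc           # error fell: try a larger step next time
        w, dw, err = w_new, dw_new, err_new
    return w, err


# Illustrative error surface E(w) = ||w - t||^2 with its minimum at t.
t = np.array([3.0, -1.0])


def loss(w):
    return float(np.sum((w - t) ** 2))


def grad(w):
    return 2.0 * (w - t)


print(gd_momentum(grad, loss, [0.0, 0.0]))                 # traingdm-like
print(gd_momentum(grad, loss, [0.0, 0.0], adaptive=True))  # traingdx-like
```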

Quasi-Newton methods avoid computing the exact Hessian matrix (second-order derivatives) required by Newton's method of optimisation and instead use an approximation of it. BFGS quasi-Newton backpropagation (trainbfg) updates this approximation using the Broyden, Fletcher, Goldfarb and Shanno (BFGS) formula, which results in faster convergence, i.e. faster minimisation of the training error. Backpropagation with the Levenberg–Marquardt algorithm (trainlm) is a particularly fast training algorithm with high training accuracy because its weight update uses second-order information about the training error; it remains fast because it approximates the Hessian matrix from first derivatives (the Jacobian of the errors) instead of computing second derivatives explicitly. Backpropagation with Bayesian regularisation (trainbr) minimises a linear combination of the sum of squared errors (SSE) and the sum of squared weights (SSW) instead of the errors alone. This reduces the effective size of the network and improves accuracy, particularly when modelling small datasets.
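The core of the Levenberg–Marquardt update and the Bayesian-regularisation objective can be sketched as below. The LM step solves (JᵀJ + μI)Δp = −Jᵀe, where JᵀJ approximates the Hessian from first derivatives only; the damping schedule (×0.1 on success, ×10 on failure) is a common convention assumed here. In the BR objective F = β·SSE + α·SSW, α and β are passed in as fixed numbers for illustration, whereas Bayesian regularisation proper re-estimates them during training. The curve-fitting example is hypothetical and only demonstrates the update.

```python
import numpy as np


def levenberg_marquardt(residual, jacobian, p0, mu=1e-2, iters=100):
    """Minimise sum(residual(p)**2) with Levenberg-Marquardt steps.

    Each step solves (J^T J + mu*I) dp = -J^T e. J^T J is the Gauss-Newton
    approximation of the Hessian, so no second derivatives are evaluated.
    """
    p = np.asarray(p0, dtype=float)
    e = residual(p)
    sse = float(e @ e)
    for _ in range(iters):
        J = jacobian(p)
        dp = np.linalg.solve(J.T @ J + mu * np.eye(p.size), -J.T @ e)
        e_new = residual(p + dp)
        sse_new = float(e_new @ e_new)
        if sse_new < sse:  # accepted: move towards Gauss-Newton behaviour
            p, e, sse = p + dp, e_new, sse_new
            mu *= 0.1
        else:              # rejected: move towards small gradient-descent steps
            mu *= 10.0
    return p, sse


def bayesian_reg_objective(errors, weights, alpha, beta):
    """F = beta * SSE + alpha * SSW (alpha, beta fixed here for illustration)."""
    errors = np.asarray(errors, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return beta * float(errors @ errors) + alpha * float(weights @ weights)


# Hypothetical example: recover a = 2, b = 0.5 in y = a * exp(b * x).
x = np.linspace(0.0, 1.0, 10)
y = 2.0 * np.exp(0.5 * x)


def residual(p):
    return p[0] * np.exp(p[1] * x) - y


def jacobian(p):
    return np.column_stack([np.exp(p[1] * x), p[0] * x * np.exp(p[1] * x)])


p_fit, sse = levenberg_marquardt(residual, jacobian, [1.0, 0.0])
print(p_fit, sse)
```

In a network, `p` would be the vector of all weights and biases and `residual` the vector of training errors.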

2.3 Training with radial basis neural networks

The radial basis function network (RBFN) has no weighted connections between the input and hidden layers. It computes hidden neuron activations using a Gaussian basis function, an exponential of the Euclidean distance between the input vector and a prototype vector that characterises the signal function at the hidden neuron. It can be given by

\[ \varphi\left(\boldsymbol{x}\right) = \exp\left(-\frac{\left\lVert \boldsymbol{x} - \boldsymbol{\mu} \right\rVert^{2}}{2\sigma^{2}}\right) \qquad (2) \]

where μ is the centre of the basis function (here, a training data point) and σ is the spread factor, which directly affects the smoothness of the interpolating function. In an RBFN used as an exact interpolator, the number of hidden layer neurons equals the number of training data. As the present problem has 22 training data (80% of the experimental data), the hidden layer has 22 neurons with Gaussian activation functions. The linearly activated output layer has weighted connections to the hidden layer and produces its output as the scalar product of the hidden layer output and the weight vector. In the present study, the dataset is fed to the network after normalisation, and the spread factor is varied from 0.1 to 1.0. The mean square error (MSE) is used as the performance criterion. A schematic diagram of the RBFN architecture is given in Fig. 5.
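An exact-interpolation RBFN as described above reduces to solving one linear system: with one Gaussian neuron centred on each training point, the output weights satisfy Φw = d. The sketch below uses the Gaussian basis with spread σ directly and sweeps σ over 0.1, 0.2, ..., 1.0, selecting by test MSE. Note that MATLAB's `newrbe` parameterises the width through its `spread` argument (a radial basis of exp(−(0.8326·‖x−μ‖/spread)²)), so σ here is not numerically identical to the toolbox spread; the function names are illustrative.

```python
import numpy as np


def gaussian_matrix(X, centres, sigma):
    """Phi[i, j] = exp(-||x_i - mu_j||^2 / (2 sigma^2))."""
    d2 = ((X[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2.0 * sigma ** 2))


def fit_exact_rbfn(X_train, d_train, sigma):
    """One hidden neuron per training point; linear output weights solve Phi w = d."""
    Phi = gaussian_matrix(X_train, X_train, sigma)
    w = np.linalg.solve(Phi, d_train)
    return {"centres": X_train, "sigma": sigma, "w": w}


def predict_rbfn(model, X):
    """Scalar product of hidden-layer outputs and output weights."""
    return gaussian_matrix(X, model["centres"], model["sigma"]) @ model["w"]


def sweep_spread(X_tr, d_tr, X_te, d_te, sigmas=np.linspace(0.1, 1.0, 10)):
    """Train one exact RBFN per sigma; return (best, all) as (sigma, test MSE) pairs."""
    results = []
    for s in sigmas:
        model = fit_exact_rbfn(X_tr, d_tr, s)
        test_mse = float(np.mean((d_te - predict_rbfn(model, X_te)) ** 2))
        results.append((float(s), test_mse))
    return min(results, key=lambda r: r[1]), results
```

Because exact interpolation drives the training error to zero for every σ, only the test error discriminates between spreads, which is why the spread is chosen on test MSE.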

2.4 Testing of trained ANN

Training performance indicates how easily a network can reproduce a known dataset, but the efficacy of a trained ANN depends on its behaviour on unseen data. This is assessed in the testing phase by determining the prediction capability of the trained ANN on a set of unseen data (test data). In the present work, the normalised test input dataset (\(\boldsymbol{X}_{\text{nor}}^{\text{Test}}\)) is fed through every network trained with the BPNN and RBFN training algorithms. The resulting ANN-predicted output (\(O^{\text{Test}}\)) is compared with the corresponding experimental test output (\(\boldsymbol{D}_{\text{nor}}^{\text{Test}}\)) to determine the prediction error, measured as the testing MSE:

\[ \text{MSE}_{\text{Test}} = \frac{1}{N}\sum_{i=1}^{N}\left(D_{\text{nor},i}^{\text{Test}} - O_{i}^{\text{Test}}\right)^{2} \qquad (3) \]

where N is the total number of test data.

3 Performance analysis of ANN prediction modelling

In the present work, 65 ANN architectures have been tested using five different BPNN training algorithms and radial basis function networks. The MATLAB R2017a training functions corresponding to these algorithms are traingdm (gradient descent BPNN with momentum), traingdx (gradient descent BPNN with momentum and adaptive learning rate), trainbfg (BFGS quasi-Newton BPNN), trainlm (BPNN with LM algorithm), trainbr (BPNN with BR) and newrbe (radial basis function network as exact interpolator). During BPNN training, different ANN architectures were obtained by varying the number of hidden layer neurons from 5 to 15. Each BPNN training algorithm has therefore trained 11 ANN architectures, and altogether 55 BPNN architectures have been trained and tested. The best BPNN model has been selected based on the prediction performance during testing of the different architectures trained with traingdm, traingdx, trainbfg, trainlm and trainbr. The prediction performance of all 55 BPNN networks is presented in Fig. 6 in terms of testing MSE [Eq. (3)]. Fig. 6 clearly shows that the overall prediction capability of BPNN with BR is the best among the training algorithms. Table 2 compares the networks with the best prediction performance obtained from each training algorithm. Table 2 and Fig. 6 show that the best-performing network for each algorithm is the 3-6-1 network for gradient descent BPNN with momentum, the 3-12-1 network for gradient descent BPNN with momentum and variable learning rate, the 3-7-1 network for BFGS quasi-Newton BPNN, the 3-6-1 network for BPNN with LM algorithm and the 3-11-1 network for BPNN with BR. The first term of the network architecture notation (e.g. 3-6-1, 3-11-1) indicates the number of neurons in the input layer; the three input layer neurons represent the three input variables P, Vw and FR respectively. The middle term indicates the number of hidden layer neurons, and the third term represents the single output layer neuron, WS.
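Selecting the best architecture per algorithm, and overall, from the stored sweep results is a simple minimisation over testing MSE. The sketch assumes each stored record holds `algorithm`, `architecture` and `test_mse` fields (hypothetical names); it also restates the architecture count: 5 algorithms × 11 hidden-layer sizes + 10 RBFN spreads = 65.

```python
N_BPNN_ALGORITHMS = 5
HIDDEN_SIZES = range(5, 16)  # 5, 6, ..., 15 hidden neurons
N_RBFN = 10                  # spread values 0.1, 0.2, ..., 1.0
N_TOTAL = N_BPNN_ALGORITHMS * len(HIDDEN_SIZES) + N_RBFN  # 55 + 10 = 65


def best_per_algorithm(records):
    """Keep, for each algorithm, the record with the lowest testing MSE."""
    best = {}
    for rec in records:
        current = best.get(rec["algorithm"])
        if current is None or rec["test_mse"] < current["test_mse"]:
            best[rec["algorithm"]] = rec
    return best


def overall_best(records):
    """Record with the lowest testing MSE across all algorithms."""
    return min(records, key=lambda rec: rec["test_mse"])


print(N_TOTAL)  # 65
```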

Figure 7 shows the mean absolute % error in prediction, along with the maximum and minimum errors, for the best-performing network of each BPNN algorithm. The mean absolute % error is the mean of the absolute % prediction errors over all test data after de-normalisation:

\[ \text{Mean absolute \% error} = \frac{1}{N}\sum_{i=1}^{N}\left|\frac{D_{i}^{\text{Test}} - O_{i}^{\text{Test}}}{D_{i}^{\text{Test}}}\right| \times 100 \qquad (4) \]

where \(O_{i}^{\text{Test}}\) is the output predicted by the ANN and \(D_{i}^{\text{Test}}\) is the corresponding experimental output.
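The mean absolute % error needs the predictions back in MPa before percentage errors are taken. A minimal sketch, assuming the min-max bounds of WS used for normalisation are known (`ws_min` and `ws_max` are hypothetical parameter names):

```python
def denormalise(values, lo, hi):
    """Invert min-max scaling: v_nor in [0, 1] -> lo + v_nor * (hi - lo)."""
    return [lo + v * (hi - lo) for v in values]


def absolute_percent_errors(pred_nor, actual_nor, ws_min, ws_max):
    """Absolute % error of each test prediction after de-normalisation (MPa).

    Assumes every experimental WS value is non-zero, which holds for tensile data.
    """
    pred = denormalise(pred_nor, ws_min, ws_max)
    actual = denormalise(actual_nor, ws_min, ws_max)
    return [abs(d - o) / abs(d) * 100.0 for o, d in zip(pred, actual)]


def error_summary(errors):
    """Mean, maximum and minimum absolute % error over the test set."""
    return {"mean": sum(errors) / len(errors), "max": max(errors), "min": min(errors)}
```

By construction the mean always lies between the minimum and maximum, which is a quick sanity check on reported triples of these values.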

Figure 7 clearly shows that the mean absolute % error, maximum % error and minimum % error obtained by the 3-11-1 network trained using BPNN with BR are the lowest, with values of 1.7%, 3.2% and 0.3% respectively. Figure 6 and Table 2 also indicate that the 3-11-1 network trained using BPNN with BR gives the best prediction capability, with a minimum testing MSE of 3.24E − 04.

Further, 10 RBFN architectures (exact interpolators) have been trained and tested with the spread factor as the variable; their prediction performance is presented in Fig. 8. The RBFN with a spread factor of 0.5 shows the best prediction performance, with a minimum test MSE of 9.56E − 04, as indicated in Fig. 8 and Table 2. The mean absolute % error, maximum % error and minimum % error of the best RBFN are 3.0%, 6.1% and 2.1% respectively, which is clearly inferior to the best BPNN, the 3-11-1 network trained using BPNN with BR. Therefore, BPNN with BR is considered the algorithm with the best prediction capability for a small training dataset such as the one used in the present study, and the 3-11-1 network trained using BPNN with BR is considered the best ANN architecture for predicting the welding strength of laser–MIG hybrid welding. The detailed prediction performance of the 3-11-1 network trained using BPNN with BR on all testing data is presented in Table 3. The BPNN with BR training algorithm is also likely to be useful for prediction modelling of other small experimental datasets where generating a large number of experiments is costly or technically infeasible.

4 Regression model of welding strength

A multivariable regression model has been developed from the experimental dataset (Fig. 2) to relate the three input process variables, laser power (P), welding speed (Vw) and wire feed rate (FR), to welding strength (WS) as the output parameter. A linear model was developed first but was rejected based on the analysis of variance (ANOVA) results. Finally, a second-order regression model was developed for WS, given below:

It is important to check the adequacy of the proposed model. Model adequacy has been tested using the coefficient of determination (R²) of the regression equation and an ANOVA test. The ANOVA results for welding strength are given in Table 4. R² and adjusted R² for the welding strength regression model are 0.9796 and 0.9688 respectively, so the model is acceptable as far as R² and adjusted R² are concerned. In the ANOVA test, the F-value for regression is 90.72, which is much higher than the corresponding tabulated value F0.05(9, 17) = 2.49. Moreover, as the P value of the regression model is less than 0.05 (significance level α = 0.05, i.e. 95% confidence), the null hypothesis is rejected. The regression model for welding strength is therefore considered significant.
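The reported adequacy statistics are mutually consistent, which can be checked by hand: with n = 27 runs and k = 9 regressors (a full second-order model in P, Vw and FR, matching F(9, 17)), adjusted R² = 1 − (1 − 0.9796) × 26/17 ≈ 0.9688 and F = (0.9796/9)/(0.0204/17) ≈ 90.7. The sketch below fits such a full quadratic model by least squares and computes these statistics; the column ordering is an assumption, and the actual fitted coefficients of Eq. (5) are not reproduced.

```python
import numpy as np


def quadratic_design(X):
    """Columns: 1, P, Vw, FR, P^2, Vw^2, FR^2, P*Vw, P*FR, Vw*FR (9 regressors + intercept)."""
    X = np.asarray(X, dtype=float)
    p, v, f = X[:, 0], X[:, 1], X[:, 2]
    return np.column_stack([np.ones(len(X)), p, v, f,
                            p**2, v**2, f**2, p*v, p*f, v*f])


def fit_quadratic(X, y):
    """Ordinary least-squares fit; returns coefficients and fitted values."""
    A = quadratic_design(X)
    coef, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return coef, A @ coef


def stats_from_r2(r2, n, k):
    """Adjusted R^2 and regression F statistic for n runs and k regressors."""
    df_res = n - k - 1
    adj = 1.0 - (1.0 - r2) * (n - 1) / df_res
    F = float("inf") if r2 >= 1.0 else (r2 / k) / ((1.0 - r2) / df_res)
    return adj, F


def adequacy(y, y_hat, k):
    """R^2 from residuals, followed by adjusted R^2 and F via stats_from_r2."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    ss_res = float(np.sum((y - y_hat) ** 2))
    ss_tot = float(np.sum((y - y.mean()) ** 2))
    r2 = 1.0 - ss_res / ss_tot
    return (r2, *stats_from_r2(r2, y.size, k))


print(stats_from_r2(0.9796, n=27, k=9))  # approx (0.9688, 90.70)
```

The F value is then compared with the tabulated critical value F0.05(9, 17) = 2.49 quoted above.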

5 Sensitivity analysis

Sensitivity analysis identifies the critical input parameters that exert the most influence on model outputs. Mathematically, the sensitivity of a design objective function with respect to a design variable is the partial derivative of that function with respect to that variable. The present study aims to predict the variation in welding strength due to a small change in the process parameters (laser power, welding speed and wire feed rate) for the hybrid laser–MIG welding process. The welding strength model can be interpreted as the design objective function and its variables as design parameters. To this end, the sensitivity equations [Eqs. (6)–(8)] have been derived by partially differentiating the regression equation in Eq. (5) with respect to each process parameter (laser power, welding speed and wire feed rate) and are given below:

\(\frac{\partial (WS)}{\partial P}\), \(\frac{\partial (WS)}{\partial V_{w}}\) and \(\frac{\partial (WS)}{\partial FR}\) have been computed for the whole experimental dataset, and their mean values at the different levels of the process parameters are given in Table 5 and Fig. 9. Here, the means of \(\frac{\partial (WS)}{\partial P}\), \(\frac{\partial (WS)}{\partial V_{w}}\) and \(\frac{\partial (WS)}{\partial FR}\) indicate the sensitivity of welding strength (WS) to P, Vw and FR respectively.
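Assuming Eq. (5) has the full quadratic form with coefficients b0 to b9 in the order shown in the docstring (an assumption, since Eq. (5) is not reproduced here), the sensitivities are linear in the process parameters, and their level-wise means can be computed as sketched below. The coefficient values must come from the fitted model; none are supplied here.

```python
def quadratic_gradient(coef, P, Vw, FR):
    """Partial derivatives of the assumed model
    WS = b0 + b1 P + b2 Vw + b3 FR + b4 P^2 + b5 Vw^2 + b6 FR^2
         + b7 P Vw + b8 P FR + b9 Vw FR
    returned as (dWS/dP, dWS/dVw, dWS/dFR).
    """
    b0, b1, b2, b3, b4, b5, b6, b7, b8, b9 = coef
    d_p = b1 + 2 * b4 * P + b7 * Vw + b8 * FR
    d_vw = b2 + 2 * b5 * Vw + b7 * P + b9 * FR
    d_fr = b3 + 2 * b6 * FR + b8 * P + b9 * Vw
    return d_p, d_vw, d_fr


def mean_sensitivity_by_level(coef, runs, factor_index):
    """Average one partial derivative over all runs sharing a level of that factor.

    runs: iterable of (P, Vw, FR) tuples; factor_index: 0 = P, 1 = Vw, 2 = FR.
    """
    groups = {}
    for run in runs:
        value = quadratic_gradient(coef, *run)[factor_index]
        groups.setdefault(run[factor_index], []).append(value)
    return {level: sum(vals) / len(vals) for level, vals in sorted(groups.items())}
```

A negative mean at a given level indicates that WS falls as that parameter increases around that level, which is how the trends in Fig. 9 are read.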

Figure 9a shows high negative sensitivity in the low-power region, implying that WS decreases with a small increase in laser power in that region, while it shows high positive sensitivity in the high-power region. Maximum welding strength is therefore observed at high laser power, and overall WS increases with laser power. In Fig. 9b, the high negative sensitivity indicates a significant decrease in WS with increase in welding speed, while the low positive sensitivity at lower speed indicates a slight rise in WS. In general, WS decreases with increase in welding speed. Figure 9c shows the sensitivity of WS to wire feed rate, and the trend is negative. As WS is more sensitive at higher wire feed rates, WS decreases as the feed rate increases; the feed rate should therefore be kept as low as possible to obtain better WS. Figure 9a–c show that WS is least sensitive to wire feed rate compared with laser power and welding speed. Variation of wire feed rate will therefore cause little change in WS, while welding speed and laser power are the most influential factors. The above analysis indicates that maximum WS will be obtained when welding is carried out with high laser power, low welding speed and low wire feed rate.

6 Conclusion

In the present work, 65 different artificial neural network architectures have been employed to estimate the tensile strength of hybrid CO2 laser–MIG welded aluminium alloy plates. Five backpropagation neural network (BPNN) training algorithms, namely (1) gradient descent BPNN with momentum, (2) gradient descent BPNN with momentum and adaptive learning rate, (3) BFGS quasi-Newton BPNN, (4) BPNN with LM algorithm and (5) BPNN with BR, and one radial basis function network (RBFN) training algorithm, RBFN as exact interpolator, have been employed for training and testing the 65 ANN architectures. Further, a sensitivity analysis has been carried out to study the influence of the process parameters on tensile strength. The following conclusions can be drawn from the results obtained:

(1) The 3-11-1 network trained and tested using BPNN with BR gives the best prediction performance among the different architectures, with an MSE of 3.24E − 04 in predicting welding strength, and is considered the best ANN. Its superiority is further established by the lowest mean absolute % error, maximum % error and minimum % error, of 1.7%, 3.2% and 0.3% respectively.

(2) Sensitivity analysis indicates that welding strength increases with increase in laser power and decreases with increase in welding speed. Wire feed rate should be kept as low as possible to obtain better joint strength; however, variation of wire feed rate causes little change in welding strength, while welding speed and laser power are the most influential factors. Maximum welding strength will therefore be obtained when welding is carried out with high laser power, low welding speed and low wire feed rate.

(3) A program has been developed in the present work for continuous performance evaluation of a large number of ANN architectures during training and testing with different ANN training algorithms in a single run. It has been used to determine the ANN with the best prediction capability for estimating welding strength in the laser–MIG hybrid welding process. The same program can also be used for estimating output quality in other processes and for online process monitoring and control.

References

Steen WM, Eboo M (1979) Arc augmented laser welding. Met Constr 11:332–335

Muneharua C, Li K, Takao S, Kouji H (2009) Fiber laser-GMA hybrid welding of commercially pure titanium. Mater Des 30(1):109–114

Cao X, Wanjara P, Huang J, Munro C, Nolting A (2011) Hybrid fiber laser—arc welding of thick section high strength low alloy steel. Mater Des 32(6):3399–3413

Liming L, Jifeng W, Gang S (2004) Hybrid laser–TIG welding, laser beam welding and gas tungsten arc welding of AZ31B magnesium alloy. Mater Sci Eng, A 381(1–2):129–133

Yan J, Zeng X, Gao M, Lai J, Lin T (2009) Effect of welding wires on microstructure and mechanical properties of 2A12 aluminum alloy in CO2 laser-MIG hybrid welding. Appl Surf Sci 255(16):7307–7313

Yan J, Gao M, Zeng X (2010) Study on micro structure and mechanical properties of 304 stainless steel joints by TIG, laser and laser-TIG hybrid welding. Opt Lasers Eng 48(4):512–517

Zhao YB, Lei ZL, Chen YB, Tao W (2011) A comparative study of laser-arc double-sided welding and double-sided arc welding of 6 mm 5A06 aluminium alloy. Mater Des 32(4):2165–2171

Li R, Li Z, Zhu Y, Rong L (2011) A comparative study of laser beam welding and laser–MIG hybrid welding of Ti–Al–Zr–Fe titanium alloy. Mater Sci Eng, A 528(3):1138–1142

Qi X-d, Liu L-m (2011) Fusion welding of Fe-added lap joints between AZ31B magnesium alloy to 6061 aluminum alloy by hybrid laser–tungsten inert gas welding technique. Mater Des 33:436–443

Li C, Liu L (2013) Investigation on weldability of magnesium alloy thin sheet T-joints: arc welding, laser welding, and laser-arc hybrid welding. Int J Adv Manuf Technol 65(1–4):27–34

Casalino G (2007) Statistical analysis of MIG-laser CO2 hybrid welding of Al–Mg alloy. J Mater Process Technol 191(1–3):106–110

Campana G, Fortunato A, Ascari A, Tani G, Tomesani L (2007) The influence of arc transfer mode in hybrid laser-MIG welding. J Mater Process Technol 191(1–3):111–113

Zhan X, Wang Y, Liu Y, Zhang Q, Li Y, Wei Y (2016) Investigation on parameter optimization for laser welded butt joint of TA15 alloy. Int J Adv Manuf Technol 84(9–12):2697–2706

Wang L, Wei Y, Zhao W, Zhan X, She L (2018) Effects of welding parameters on microstructures and mechanical properties of disk laser beam welded 2A14-T6 aluminum alloy joint. J Manuf Process 31:240–246

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2(5):359–366

Vishnu P, Manohar M (2018) Performance prediction of electric discharge machining of inconel-718 using artificial neural network. Mater Today: Proc 5(2):3770–3780

Kuo C-FJ, Tsai W-L, Su T-L, Chen J-L (2011) Application of an LM-neural network for establishing a prediction system of quality characteristics for the LGP manufactured by CO2 laser. Opt Laser Technol 43(3):529–536

Patel P, Sheth S, Patel T (2016) Experimental analysis and ANN modelling of HAZ in laser cutting of glass fibre reinforced plastic composites. Procedia Technol 23:406–413

Sivagurumanikandan N, Saravanan S, Shanthos Kumar G, Raju S, Raghukandan K (2018) Prediction and optimization of process parameters to enhance the tensile strength of Nd:YAG laser welded super duplex stainless steel. Optik 157:833–840

Muthu Krishnan M, Maniraj J, Deepak R, Anganan K (2018) Prediction of optimum welding parameters for FSW of aluminium alloys AA6063 and A319 using RSM and ANN. Mater Today: Proc 5(1):716–772

Haykin S (2006) Neural networks: a comprehensive foundation, 2nd edn. Pearson Education Inc, Bangalore

Dong Z, Wei Y, Zhan X, Wei Y-Q (2007) Optimization of mechanical properties prediction models of welded joints combined neural network with genetic algorithm. Trans China Weld Inst 28:69–72

Hagan MT, Menhaj MB (1994) Training feedforward networks with the Marquardt algorithm. IEEE Trans Neural Netw 5(6):989–993

MacKay DJC (1992) A practical Bayesian framework for backpropagation networks. Neural Comput 4:448–472

Chaki S, Ghosal S (2015) A GA–ANN hybrid model for prediction and optimization of CO2 laser-MIG hybrid welding process. Int J Autom Mech Eng 11:2458–2470

Chaki S, Shanmugarajan B, Ghosal S, Padmanabham G (2015) Application of integrated soft computing techniques for optimization of hybrid CO2 laser–MIG welding process. Appl Soft Comput 30:365–374

Broomhead DS, Lowe D (1988) Multivariable functional interpolation and adaptive networks. Complex Syst 2:321–355

Wu Y, Wang H, Zhang B, Du K-L (2012) Using radial basis function networks for function approximation and classification. ISRN Appl Math, Article ID 324194

Halali MA, Azari V, Arabloo M, Mohammadi AH, Bahadori A (2016) Application of a radial basis function neural network to estimate pressure gradient in water–oil pipelines. J Taiwan Inst Chem Eng 58:189–202

Praga-Alejo RJ, Torres-Treviño LM, González-González DS, Acevedo-Dávila J, Cepeda-Rodríguez F (2012) Analysis and evaluation in a welding process applying a redesigned radial basis function. Expert Syst Appl 39:9669–9675

Acknowledgements

The author would like to express sincere thanks to Dr. G. Padmanabham, Director, International Advanced Research Centre for Powder Metallurgy and New Materials, Hyderabad, Andhra Pradesh, India, for providing the facilities needed to conduct the experiments for the present research.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chaki, S. Neural networks based prediction modelling of hybrid laser beam welding process parameters with sensitivity analysis. SN Appl. Sci. 1, 1285 (2019). https://doi.org/10.1007/s42452-019-1264-z

DOI: https://doi.org/10.1007/s42452-019-1264-z