Abstract

This paper explores the relationship between social media and political rhetoric. Social media platforms are frequently discussed in relation to ‘post-truth’ politics, but their exact role in these developments is less clear. Specifically, this paper takes Twitter as a case, exploring the kinds of rhetoric encouraged or discouraged on this platform. To do this, I will draw on work from infrastructure studies, an area of Science and Technology Studies, and in particular on Ford and Wajcman’s analysis of the relationships between infrastructure, knowledge claims and politics on Wikipedia. This theoretical analysis will be supplemented with evidence from previous studies and from the public domain to illustrate the points made. The analysis echoes wider doubts about the credibility of technologically deterministic accounts of technology’s relationship with society, but suggests that, while Twitter may not be the cause of shifts in public discourse, it is implicated in them: it both creates new norms for discourse and enables new forms of power and inequality to operate.


Introduction

The purpose of this paper is to investigate the links among epistemology, contemporary concerns about ‘post-truth’ society and ‘fake news’, and the infrastructures and platforms of social media. In doing so, it draws on and contributes to work exploring the relationships between technology and knowledge production in contemporary society.

It starts with a discussion of the ways in which recent political discourse has questioned the status of academic expertise and scientific knowledge. As part of this, the rhetorical strategies of populist movements working with social media will be reviewed. Next, drawing on work in Science and Technology Studies, and in particular from infrastructure studies, the ways in which technologies relate to political discussions and debates will be considered, focusing on two widely used technology platforms and services: Wikipedia and Twitter. The paper concludes with a discussion of ways in which the relationships between such digital platforms, post-truth rhetoric and expertise can be engaged with.

Post-Truth and the Political Demise of Academic Expertise

The observation that societies have grown sceptical about ‘grand narratives’ was made well over three decades ago (Lyotard 1984). Recently, however, this broad scepticism has found a keener form in the arena of contemporary politics, which have been described as taking place in a ‘post-truth’ era. This idea of post-truth has spread rapidly; Oxford Dictionaries famously declared it their word of the year for 2016, for example. Within research discussions, the phrase ‘post-truth’ is used to describe a mode of politics that operates more through appeals to emotion and personal belief than to verifiable facts (Lynch 2017). As Lynch went on to argue, however, although the term itself may be relatively new, this kind of political rhetoric is not: similar rhetoric can be traced back at least a quarter of a century to the Reagan administration, and to the tactics it used to ensure plausible deniability for the president in relation to the Iran-Contra scandal.

However, the widespread effects of post-truth rhetoric on contemporary politics have been profound, seeming to free politicians from the burden of being well-informed, accurate or even honest. Sismondo (2017) has argued that it no longer seems to matter whether politicians are lying, so long as the positions that they espouse are consistent with those of their audience. It has even been suggested that raising the question of whether political claims are ‘true’ simply misses the point:

An ascendant view among Trump’s critics is that charges of ‘bullshit’ might be more apt than of lies and lying, since the latter charges presume specific intent and awareness rather than a more constant tendency to exaggerate and deceive, sometimes for no apparent reason. (Lynch 2017: 594)

From this perspective, it becomes relatively easy to see why ‘fact checking’ has begun to look like a partisan act, one that is particularly mistrusted by Republicans in the USA (Shin and Thorson 2017). When discussions are oriented towards out-manoeuvring the opposition, demanding evidence for or against a statement no longer seems like a legitimate move. Instead, questioning the evidence base for a statement looks more like an attempt to undermine the rules of a political ‘game’ that is utterly disengaged from questions of truth or knowledge. However, even though there may be no way to establish what the precise motives for or against particular political claims might have been, it is still possible and productive to look at the effects of political rhetoric in terms of power, to reveal how it can be persuasive, coercive and constitutive of political reality, and how it intersects with established power relations (Krebs and Jackson 2007).

These shifting political realities and power plays are not only visible in US politics, but can also be seen in places like Europe and Australia. In Britain, for example, during a television interview in 2016, Michael Gove famously avoided answering questions about whether any economists backed the idea of ‘Brexit’ (Britain’s exit from the European Union) by asserting that ‘people in this country have had enough of experts’. Here, Gove was specifically undermining the power of what he described as ‘people from organizations with acronyms saying that they know what is best and getting it consistently wrong’.

Just as post-truth politics are not new, this scepticism about expertise also has a long history. A century earlier, for example, Henry Ford made clear his position on employing experts:

None of our men are ‘experts.’ We have most unfortunately found it necessary to get rid of a man as soon as he thinks himself an expert because no one ever considers himself expert if he really knows his job. […] The moment one gets into the ‘expert’ state of mind a great number of things become impossible.

However, the contemporary effect of this has been a politically aligned shift in what Kelkar (2019) has described as the media ecosystem: in the USA, for example, the journalists and academics who might conventionally describe themselves as ‘experts’ on the basis of their commitment to objectivity are predominantly left-of-centre in their own politics; the conservative response to this systemic bias has been to create an alternative array of think tanks and media to provide other kinds of information that address their preferred public. In the context of educational policy, in the UK, USA and Australia, think tanks and ‘edu-business’ have had a growing influence, while that of academic expertise and evidence has declined (Thompson, Savage and Lingard 2016).

When analysed in terms of power dynamics, the desire of vested interests to ignore ‘inconvenient truths’ (Gore 2006) is hardly surprising. However, this is not just a simple binary setting power against knowledge; the very idea of ‘expertise’ is itself political, creating inequalities of power that render equal debates between those identified as experts and others impossible (Forss and Magro 2016). Forss and Magro go on to argue that expertise is typically linked to state or industrial funding, usually involves dissent and debate, and is fallible. These structural asymmetries, together with failures to predict important events such as the global financial crisis (as Gove alluded to), illustrate why blame has increasingly been directed towards experts themselves. Doing this, however, presupposes that they ever had the ability to do anything more or other than give the advice they did; it ignores, for example, the ways in which their expertise was made available to, misrepresented for or hidden from policy-makers and the wider public, as well as the ways in which others choose to interpret that evidence—or ignore it.

These more nuanced accounts of expertise help to bring perspective to this analysis. If expertise is, as Forss and Magro suggest, fallible and debatable, then it is only prudent to have a degree of scepticism about the claims of academics. Indeed, caution and prudence are vital to the reflexive, critical position used to justify the idea of academic freedom (Altbach 2001). However, acknowledging this does not justify the further move from prudent scepticism to wholesale rejection. Rather than being a cautious project of constructing knowledge with care, Sismondo (2017: 5) describes the rejection of expertise as a ‘project of creating ignorance, where any disagreement is amplified to try to create a picture of complete dissensus’. In other words, rather than recognising academic challenge and critical doubt as a form of intellectual vigilance, there to test and ultimately strengthen public confidence in knowledge, it is instead portrayed as grounds for radical doubt and suspicion. Rhetorically, this allows anything that is still subject to academic debate to be dismissed if it is politically inconvenient.

This deliberate rhetorical strategy to discredit any area where critique and debate still operate provides a compelling, if disturbing, focus for analysis. It is this debate, as explored in the field of Science and Technology Studies, that will be reviewed next.

Studies of Society, Technology and Knowledge Production

These debates about the constitution of knowledge, the status of experts and claims to expertise have been of particular interest to researchers in the field of Science and Technology Studies (STS), a field with a long tradition of exploring the sociomaterial enactment of facts, assertions and beliefs: as, for example, in the classic studies of laboratory life, which showed how the production of claims that appeared in research papers was supported by complex social, political and material processes (Latour and Woolgar 1979). Moreover, questions about the status of expertise have been argued to be central to what Collins et al. (2017) have described as the ‘third wave’ of science studies. A central tenet of this programme of research is that explorations into questions about the status of knowledge should be carried out according to the principle of symmetry: the same analysis should apply irrespective of whether particular assertions are currently held to be true, rational or successful (Lynch 2017). In this case, what this means is that the political rhetoric that dismisses academic expertise should be analysed in exactly the same way as the academic rhetoric that claims to produce knowledge in the first place.

A classic example of applying this analysis to explore what might now be described as post-truth politics is Latour’s analysis of debates around global warming, conspiracy theories and artificially maintained controversies (Latour 2004). Here, he describes how the analytic techniques of STS—originally developed to show that science was not ‘neutral’ but was inevitably shaped by society and so political—had been co-opted by political interests to undermine areas of science that were inconvenient to them. As a consequence, Latour questioned whether deconstructive critique alone was sufficient, or whether new forms of social action and analysis in STS were needed.

While we spent years trying to detect the real prejudices hidden behind the appearance of objective statements, do we now have to reveal the real objective and incontrovertible facts hidden behind the illusion of prejudices? (Latour 2004: 227)

Latour’s proposed new action involved the development of ‘matters of concern’, a phrase deliberately framed to stand in contrast to the more conventional phrase, ‘matters of fact’. The proposal behind this new idea was that the techniques that had previously been used to deconstruct scientific facts could also be deployed as a way of strengthening fragile, cherished ‘things’, as well as weakening dominant ones; this would be achieved by building new associations rather than undermining existing ones. From this perspective, matters of concern were reconceived not as self-contained objects but as gatherings (Latour 2005: 114).

Undertaking this work of strengthening associations involves a series of steps:

- (1) Recognising that the things that are of concern are fabricated.
- (2) Following the social traces of their translation.
- (3) Recognising the implications of these things for scientific practice.
- (4) Observing how different groups and institutions (or perhaps, in Warner’s (2002) terms, different publics) debate them.

In contrast to the dynamics of deconstructing matters of fact, pursuing matters of concern does not involve denigrating the claims of experts in order to level the power asymmetries of a debate. This is because doing so would not eradicate inequality but simply shift the balance of power in new directions—ones that may just end up serving different vested interests, rather than being ‘better’ in some absolute and abstract sense. Instead, studying matters of concern in science and technology involves laying out the myriad stakes, grounds and inequalities of all stakeholders, so that inequalities can be recognised and engaged with. Doing so, Latour proposes, would help foreground otherwise marginal positions so that they may be developed or sustained.

Embracing epistemic democratization does not mean a wholesale cheapening of technoscientific knowledge in the process. STS’s detailed accounts of the construction of knowledge show that it requires infrastructure, effort, ingenuity and validation structures. (Sismondo 2017: 3)

This active commitment to strengthening the claims of the weak or marginalised has been taken up by feminist scholars, who extended the concept of ‘matters of concern’ into ‘matters of care’. In this reformulation, a fifth and explicitly interventionist step was added to Latour’s process of analysing matters of concern.

The purpose of showing how things are constructed is not to dismantle things, nor undermine the reality of matters of fact with critical suspicion about the powerful (human) interests they might reflect and convey. Instead, to exhibit the concerns that attach and hold together matters of fact is to enrich and affirm their reality by adding further articulations. (Puig de la Bellacasa 2011: 89)

This step draws attention back to the researcher and their actions, demanding a reflexive element in research. The practices of researching something inevitably add new articulations to the objects of study, for example through the creation of publications that connect ‘things’ or gatherings to scholarly audiences, or through the generation of data that create new representations of the study objects that can circulate to new publics (Warner 2002).

With this fifth, feminist engagement in STS, research itself is therefore understood as political, always changing the structures of power, and in doing so, connecting the object of study to new audiences with new stakes.

Acts of care are always embroiled in complex politics. Care is a selective mode of attention: it circumscribes and cherishes some things, lives, or phenomena as its objects. In the process, it excludes others. Practices of care are always shot through with asymmetrical power relations: who has the power to care? Who has the power to define what counts as care and how it should be administered? Care can render a receiver powerless or otherwise limit their power. It can set up conditions of indebtedness or obligation. […] Care organizes, classifies, and disciplines bodies. Colonial regimes show us precisely how care can become a means of governance. (Martin et al. 2015: 627)

Social researchers, then, are called to intervene in such a way as to be generative of better knowledge and politics, supporting the survival of cherished phenomena (Martin et al. 2015: 628). In the context of current political debates, a challenge for researchers is therefore to generate better sociomaterial relationships between technology, action and knowledge. Examples of such relationships, instantiated in studies of digital platforms and services, are considered in the next section.

Infrastructures as ‘Things’

In the previous sections, I have argued first that ‘expertise’ has been undermined in political debates, and secondly that the construction of knowledge is achieved and sustained through the work of fabricating gatherings that circulate through different publics. In this section, I will look at a third element: the infrastructures that form part of the gathering together of things.

A frequent observation made about the political debates around truth and expertise is that specific digital technology platforms and services seem to contribute to the erosion of confidence in expert knowledge. For example, Sismondo (2017: 4), observing the tenor of recent political debates, has lamented that ‘a Twitter account alone does not make what we have been calling knowledge’. Social media sites have also been described as operating as ‘epistemic bubbles’ or ‘echo chambers’: systems in which relevant voices have been omitted, either inadvertently (in the case of bubbles) or through active manipulation (in the case of chambers) (Nguyen 2018). These systems supply users with content that reinforces rather than challenges their beliefs (Pettman 2016), ensuring that the things that they care about are sustained through the constant addition of new supporting associations, rather than being tested by being brought into contact with alternative positions that might undermine them. Similarly, when everyone is able to produce and share new content, there are new concerns about scale and quality: in an era in which the volume of information available has undermined trust, and social media has changed the ways people encounter information, the growing prevalence of lies, deception and ‘bullshit’ seems an unavoidable consequence (MacKenzie and Bhatt 2018).

These observations raise a question about the kind of relationship that exists between knowledge, society and technology. There is a long tradition of new forms of media being associated with ‘moral panics’ in society (Bennett et al. 2008), despite a lack of evidence that such media actually serve as the defining feature of generations. This echoes wider doubts about the credibility of technologically deterministic accounts of technology’s relationship with society (Oliver 2011). Nevertheless, it seems obvious that there is some kind of connection between the rise in these platforms and the spread of post-truth politics.

There are several different ways of approaching this issue, two of which are infrastructure studies and platform studies (Plantin et al. 2018)—neither of which is able to explain fully the characteristics of something as complex as Facebook, Google or Twitter, but each of which allows distinct insights to be drawn. Infrastructure studies have grown from accounts of the history of technological systems to include the phenomenology and sociology of infrastructures, including ideas such as dependency, ubiquity and exclusion. Platform studies are more concerned with media practices, growing from questions about programmability, through discussions of affordances, to questions about agency, structure and power in the creation and circulation of media texts. Plantin et al. propose that these perspectives can converge, however; for example, in situations where infrastructures have ‘splintered’ (for example, in the way that competing streaming services have supplanted monolithic broadcast television), or where platforms operate as monopolies (such as the way that Facebook’s application programming interface ‘locks in’ developers and in doing so supplants the openness of the Internet, so that users end up relying on Facebook without realising).

In this paper, I have focused on infrastructure studies, given that the scope of its analysis includes users’ experiences, whereas platform studies have so far focused more on the materiality of the platforms and the intentions of their designers (Apperley and Parikka 2018). Focusing on infrastructure, I suggest, fits consistently with the analytic framework laid out earlier that includes different stakeholders—particularly when the fifth reflexive and interventionist step is introduced, so as to engage with questions of power. Although both of these bodies of research resist technological determinism (see, e.g. Bogost and Montfort 2009), infrastructure studies’ focus on different enactments of the technology is helpful in keeping its fluidity (de Laet and Mol 2000) as well as its structures in view—a perspective that has already proved useful in analysing the relationships between technologies such as virtual learning environments and knowledge practices (Enriquez 2009).

Specifically, I will draw on the definition of infrastructure developed by Bowker et al. (2010), which positions infrastructures as technologies that typically exist in the background, are commonly taken for granted and are often maintained by undervalued or invisible workers. This definition therefore includes the people who work to design and maintain these technologies, as well as their material existence and the practices associated with their social and organisational operation. From this perspective, infrastructure can be analysed as a ‘thing’, rich with associations and contested by various audiences, which can be both questioned and cared for.

Infrastructure Studies and Ethnography of the Postdigital

Having laid out the three elements that I want to consider (post-truth political rhetoric, the social and political analysis of knowledge claims, and digital infrastructures), I will now turn to a methodological question that has limited how empirical data can be used to study the relationship between these elements.

A conventional analysis of political debates in terms of matters of care might involve following the five steps outlined above: (1) recognising that claims were fabricated, (2) tracing their translation, (3) seeing implications for practices, (4) observing debates and (5) strengthening associations. However, whilst some of these steps are relatively well understood and documented, the second step—the tracing of translations—poses particular problems when digital infrastructure is involved.

Of course, this is only one step in a wider and more complex process. Nevertheless, it is a particularly important one in terms of understanding how contemporary digital technologies have contributed to or worked against post-truth political rhetoric and the associated epistemic slippage. It also has implications for how the subsequent steps can follow through, limiting what is possible.

However, Ford and Wajcman have developed an approach to analysing the relationship between infrastructure, knowledge and politics in digital environments that addresses this issue. In their analysis of Wikipedia (Ford and Wajcman 2017), they distinguish between three infrastructural layers: the logics embedded in Wikipedia’s installed base (referring to the systems from which Wikipedia draws, such as Western encyclopedias and the free software movement); the software infrastructure; and the policy infrastructure.

In their analysis, they explore how the first of these layers, the installed base, is partly material, but also partly conceptual:

Wikipedia epistemology is a foundational aspect of its infrastructure and is materialized in the policies and principles that guide work on the encyclopedia. Such logics determine what Wikipedia accepts and what it rejects. Logics about how knowledge is defined, who are the appropriate authors and experts, and which subjects are suitable for inclusion. This directly influences how knowledge is produced. (Ford and Wajcman 2017: 517)

The software layer consists both of the MediaWiki architecture, which is used to author content, and of the automated agents and bots that perform routine editing tasks and which are responsible for tagging passages as ‘citation needed’. The pervasive presence of these software systems means that ‘the editing process is said to look more like a computer programme than a draft of an encyclopaedia entry’ (Ford and Wajcman 2017: 520).

The policy infrastructure is described as being highly legalistic, giving rise to the term ‘wiki lawyering’ to describe the way in which Wikipedians argue for the inclusion of content and sources. Ford and Wajcman describe how the enactment of these policies is confrontational, resulting in hundreds of articles being deleted each week, many of them on the unilateral decision of members of a small cadre of longstanding users who decide that the material is not important enough to include.

Ford and Wajcman’s approach is ideally suited to a postdigital analysis of Wikipedia. Although the term ‘postdigital’ has been used in diverse, sometimes contradictory ways (Taffel 2016), it is typically understood as questioning a series of binaries (zero/one, material/immaterial, human/nonhuman, nature/culture), as well as the fixation on the novelty of the digital, turning attention back instead to questions of embodiment, the analogue, and the permeation of the digital throughout day-to-day life. Here, following Jandrić et al. (2018), I use the term ‘postdigital’ in particular to highlight continuities in practices: the entanglements between technology, human and social life; the persistence of the industrial and biological alongside the apparently immaterial; and the growing sense that the digital is increasingly mundane. In this sense, the relevance of Ford and Wajcman’s approach is that it traces the associations among material infrastructure (such as hardware), digital infrastructure (such as software and algorithms), and social action (coding, classifying, writing, editing and reading), highlighting points of continuity and inheritance, as well as the consequences of all this for public knowledge.

In revealing these traces in the case of Wikipedia, Ford and Wajcman were able to show how deeply this infrastructure is gendered (as well as noting other structural inequalities). Some aspects of this are relatively visible—for example, the ratio of male to female biographies is more skewed on Wikipedia than in the Encyclopedia Britannica. Other elements require closer investigation, such as the low proportion of female engineers involved in developing the software infrastructure, the way that biographies are required to be classified as either male or female (echoing earlier analyses of the social effects of classification systems by Bowker and Star 2000), the work required to ensure that the terms of address for editors are gender-neutral, or the ways in which the power plays of ‘deletion debates’ are characterised by normalised values associated with masculinity.

However, Ford and Wajcman’s analysis also reveals more subtle consequences, and it is these in particular that underscore the value of their approach in tracing associations that might otherwise be missed. For example, they show how the logics of the installed base serve to reinforce existing inequalities. Wikipedia’s logic is that claims should reflect three core principles: neutral point of view (NPOV), no original research (NOR), and verifiability. Any original research undertaken by editors is seen as a conflict of interest, because editors are positioned as passive aggregators of work undertaken elsewhere. The unintended consequence of this is that topics that are poorly documented—such as subjects from India, Malaysia and South Africa, or the history of women scientists—are excluded on the grounds that they are not independently verifiable.

Although it is not the point of Ford and Wajcman’s paper, it is relatively easy to show that their analysis addresses not only the challenge of tracing associations identified earlier but also the subsequent steps in analysing Wikipedia as a matter of concern. Their analysis (1) recognises that not only the claims made on Wikipedia but also the infrastructure itself are fabricated; (2) traces the ways in which hardware, software, principles and people are associated, through both mutual support and conflict; and (3) shows that this leads to the production of particular kinds of Wikipedia page at the expense of others. This sets the groundwork for (4) observing debates, such as whether the exclusion of marginalised groups can be justified in order to preserve core values, and potentially (5) creating interventions that strengthen the ways in which those marginalised groups can associate with the creation of future content.

So, having established that there are ongoing questions about the relationships among the rhetoric of post-truth politics, social media and expertise, and having identified an approach to the analysis of digital infrastructures as a matter of concern, I will explore in the next section how the infrastructure of Twitter supports the troubling forms of political debate discussed earlier.

The Infrastructure of Knowledge Production in Twitter Debates

Although contemporary political discussions are played out across a range of media, those that take place on social media have become increasingly important, particularly in the wake of the 2016 US presidential election. Twitter has been chosen as the focus for analysis in this paper as an example that illustrates the relationships between infrastructure and public knowledge, and also because of the way that it has already been singled out as in need of attention by scholars working in STS.

Twitter may be part of the dissolution of the modern fact. Even if not, we in STS should be part of the analysis of how this and other social media platforms can easily be used as tools of very ugly kinds of politics. (Sismondo 2017: 4)

In order to undertake this kind of analysis from the point of view of infrastructure studies, this section will consider Twitter in relation to the three elements outlined above: the installed base, the software infrastructure and the policy infrastructure, each of which is discussed separately below.

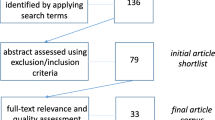

Rather than generating a new dataset for this, the analysis will work with evidence that is already available from research or is in the public domain; there is more than enough such evidence available to provide warrant for the arguments made here, and it is the analysis undertaken rather than a process of data collection that constitutes the original contribution that I seek to make. As this paper is a conceptual rather than empirical analysis, it was exempt from the conventions established for research with human participants and there were no sponsors or clients, but the obligations of professional responsibility in terms of transparency and reporting were followed (BERA 2018).

The Installed Base

Ford and Wajcman’s analysis presumes that the idea of the installed base is self-evident; however, for the sake of clarity, here I will adopt the specification provided by Igira and Aanestad (2009: 214):

The installed base is understood not just as installed technical systems, but as the interconnected practices and technologies that are institutionalized in the organization. Thus, work routines, organizational structures, and social institutions are very significant elements of the installed base.

Their analysis shows how the installed base can ‘lock in’ subsequent developments, where switching costs and sunk investments outweigh the achievable benefits of change.

Twitter’s installed base draws on its sociotechnical precedents. It was created to provide an online social networking platform that supports ‘micro-blogging’ by sharing posts of up to 140 characters, which were initially envisaged as status updates (Rogers 2013). In doing so, it built on several established technologies. The length of posts was determined by the SMS (short message service) originally used to distribute ‘tweets’. SMS involved sending messages on the signalling paths that controlled telephone traffic when they were unused, to minimise costs. However, the formats used for signalling restricted the length of the message; at the point when Twitter started to use this technology, this meant a maximum length of 140 characters for the message, with a further 20 reserved for a username.
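The arithmetic of this constraint can be made concrete with a short sketch. It assumes only the figures given above (a 160-character SMS, of which 20 characters were reserved for a username) and ignores the character-encoding details of real SMS; the function names and the padded-field layout are hypothetical illustrations, not Twitter’s actual message format.

```python
# Hypothetical sketch of the character budget described above: the constants
# follow the figures in the text, while the padded-field layout is an assumption.
SMS_LIMIT = 160                              # characters available in one SMS
USERNAME_RESERVE = 20                        # characters reserved for the username
TWEET_LIMIT = SMS_LIMIT - USERNAME_RESERVE   # 140 characters left for the post


def fits_in_sms(username: str, message: str) -> bool:
    """Return True if the username and the message each fit their share of the SMS."""
    return len(username) <= USERNAME_RESERVE and len(message) <= TWEET_LIMIT


def sms_payload(username: str, message: str) -> str:
    """Pad the username into its reserved field and append the message.

    The padding layout is illustrative only, not Twitter's actual wire format.
    """
    if not fits_in_sms(username, message):
        raise ValueError("username or message exceeds the assumed SMS budget")
    return username.ljust(USERNAME_RESERVE) + message


print(TWEET_LIMIT)                               # → 140
print(len(sms_payload("alice", "x" * 140)))      # → 160
print(fits_in_sms("alice", "x" * 141))           # → False
```

The point of the sketch is that the 140-character limit is not an independent design choice but a remainder: whatever the carrier format allowed, minus what the platform reserved for identifying the author.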

This distribution format specified the ‘micro’; the remainder of the specification was drawn from the earlier technology of ‘blogging’. This involved creating a ‘web log’ (shortened to ‘blog’): a series of posts in chronological order, serving as a kind of public diary or a record of thoughts on contemporary topics.

Additional functionality was added that changed the way in which people could interact with tweets. Any tweet can be read by anyone and can be searched for using hashtags. Additionally, users can direct posts to other users by using the ‘@’ symbol as a prefix to their username and can declare themselves ‘followers’ of other users in order to receive notifications when that person posts. This functionality was established by users of Twitter, and so could be seen as a matter of practice, not infrastructure; however, arguably, it should be understood as being part of the installed base because these conventions were adopted from previously established practices that took place on Internet Relay Chat (IRC) platforms (Rogers 2013).

The value of hashtags, @ addresses and ‘following’ remains a topic for debate; although Twitter clearly supports new ways to author short texts and connect to other people, analysis has also suggested that ‘most of the links declared within Twitter were meaningless from an interaction point of view’ (Huberman et al. 2009). Other functionality included the ability to ‘like’ a tweet or to forward it on (‘retweet’) so that it received further attention.

There are two further features of the installed base that are relevant to this paper. The first concerns the assumptions made about the purpose of tweets. Drawing from blogging, tweets were originally assumed to be status updates; they were subjective reports about individuals’ experiences and feelings. As such, it was assumed that anyone could declare anything; posts were not expected to be formally reviewed, checked or substantiated. (In 2009 the prompt for submissions changed from ‘what are you doing?’ to ‘what’s happening?’, in an attempt to shift the emphasis away from individual users and towards current events, although this did not change the nature of the majority of posts made (Rogers 2013).)

This freedom to post is reflected in the assertion on Twitter’s ‘values’ page (Note 1):

We believe in free expression and think every voice has the power to impact the world.

If post-truth political rhetoric is characterised by appeals to emotion and belief rather than fact (Lynch 2017), then it should hardly be surprising that Twitter has become such an important political arena. Tweets are assumed to be personal opinions and are there to be liked and shared; there is no provision structured into the logic of Twitter for ‘fact checking’, verification, review or marshalling evidence. Twitter was designed for the very rhetorical features that characterise post-truth politics, foregrounding popularity whilst hiding any lack of substance by omitting any indication of whether there is evidence for the claim or not.

The second feature is the material asymmetry with which Twitter operates. Although Twitter’s stated values centre on free expression and an apparent democratisation of knowledge, not even Twitter as a company is able to bring the data it hosts together in one place; instead, it relies on a complex material foundation of databases and caches, the company network, intersections with over 3000 other networks, and so on (Hashemi 2017). Even if individuals can search tweets, they cannot store and review them all; there is an unavoidable material overhead in terms of storage and processing power (Starosielski 2014). This means that already-privileged groups with access to resources are able to make categorically different claims about trends and developments on Twitter than less affluent groups or individuals can. Consequently, on Twitter, everyone is able to speak, but rich corporations can speak for others in a way that disenfranchised groups or individuals cannot afford to.

Software Layer

The installed base provides the foundations of Twitter, materially and socially; the software layer is what makes Twitter ‘programmable’. Bogost and Montfort (2009: 3) place considerable emphasis on this idea as a defining feature of platform studies, which they see as ‘the study of computational or computing systems that allow developers to work creatively on them’. Being programmable allows the system to be customised, developed and adapted to uses that were unanticipated by the original platform developers.

Twitter’s software layer has been designed to share information, but it does so in ways that prioritise certain messages over others. In order to analyse the influences of this prioritisation, I will draw on the concept of attention.

Attention, Zhang et al. argue (2018), forms a basis for power: it creates an audience, allows a speaker to change or mobilise opinions, and enables this mobilisation to be used to achieve other forms of power (such as political power, through civic engagement, or economic power, through marketing). Citton (2017) has discussed various ways in which attention can be understood as an economy that shapes society, building on ideas such as the principle of valorization through attention (‘the simple fact of looking at an object represents a labour that increases the value of that object’ (47)) and the self-reinforcing circular dynamic that ‘attention attracts attention’ (48). He illustrates how these ideas, taken together, neatly explain the emergence of celebrities, the ways in which people court attention through outrageous comments, and so on.

These ideas can also explain how the software infrastructure of Twitter promotes certain messages at the expense of others. Twitter’s software layer has created a very particular economy of attention, using algorithms to rank accounts in terms of followers, and tweets in terms of their exposure, including ‘re-tweeting’ where a user forwards someone else’s posting on to their own followers.

There are two consequences of this. Firstly, these algorithms create an environment in which it is possible to ‘amplify’ particular messages. In this context, Zhang et al. (2018: 3166) define amplification as intentional or unintentional actions that increase the measures (such as ‘likes’) that mark a message or person as worthy of further attention, or of further action such as the redistribution of content.

Secondly, these algorithms help resource-rich elites to measure—and through this classificatory act, produce (Bowker and Star 2000)—social groups, so that predictive analytics can be used to influence what they attend to (Harsin 2015). This is a political economy, in multiple senses: it is about the creation of power through the definition and consolidation of publics, but it is also about making money out of political issues. For example, the producers and curators of ‘fake news’ (fictions never intended to be verifiable) generate advertising revenue by supplying content that they believe will appeal to groups of users defined through algorithmic analysis (Spohr 2017).

However, as Pettman points out (2016: 39–40), ‘it would be a waste of breath to condemn any one group as ‘manipulators’, unless we gesture in the vague direction of ‘the owners of the means of production’. The problem is, in the age of the prosumer, we are all complicit.’ On Twitter, following people, re-tweeting posts, liking one thing but not another, all contribute to algorithmic classifications, and to the creation of personalised ‘echo chambers’ in which people see only posts that reinforce the views they already hold. Illustrating this in relation to opinion polls, Pettman observes:

Rather than simply being reported […] these new polls are customizable, searchable, reconfigurable, filterable and otherwise ‘operable’. Which of course makes them all the more fascinating to us, as the permanent feedback loop of real-time data provides a four-dimensional mirror in which to catch our reflection: a mass selfie of four billion people at once. (Pettman 2016: 34)

This selective exposure—the predisposition to consume media that already aligns with a user’s views and beliefs—is an important contributor to the polarisation of people’s views (Spohr 2017). It helps to explain why social media users on Facebook were surprised by the outcome of the UK’s vote on Brexit: users polarised into two well-separated communities, each acting as its own echo chamber and reinforcing its members’ belief that the majority of the population agreed with them, beliefs that could not both be true (Del Vicario et al. 2017).

However, Pettman’s proposal that we are all complicit in the creation of this system is slightly undermined when the two consequences of Twitter’s software layer are brought together. Twitter does in fact allow individuals and groups to act as ‘manipulators’, not just unintentionally (through the aggregation of ‘likes’ and ‘follows’) but also in more direct ways. Users are able to re-programme Twitter in ways that amplify some posts at the expense of others. As a platform, Twitter uses open APIs: application programming interfaces that allow other software services to build on top of the platform. This makes it possible to create ‘bots’, software applications that post or re-post tweets automatically. Citton (2017: 61) proposes that such infrastructures allow an over-economy of scale, which means in this case that specific messages benefit from ‘the dead attention of machines’ to multiply their effects. Those with the computational or economic resources to create such bots are therefore able to manipulate Twitter (and specifically, its ranking algorithms) to create a disproportionate influence for posts they favour.

A motivated attacker can easily orchestrate a distributed effort to mimic or initiate this kind of organic spreading behavior, and with the right choice of inflammatory wording, influence a public well beyond the confines of his or her own social network. (Ratkiewicz et al. 2011: 297)

Woolley and Guilbeault, for example, analysed Twitter posts made during the 2016 US election, demonstrating how bots were used to manufacture apparent consensus where there was none. They concluded that ‘armies of bots built to follow, retweet, or like a candidate’s content make that candidate seem more legitimate, more widely supported, than they actually are’ (Woolley and Guilbeault 2017: 8). This particularly suited Trump’s agenda, which a digital strategist who worked on the campaign described to them as follows:

Trump’s goal from the beginning of his candidacy has been to set the agenda of the media. His strategy is to keep things moving so fast, to talk so loudly—literally and metaphorically—that the media, and the people, can’t keep up. (Cassidy, reported in Woolley and Guilbeault 2017: 4)

However, these bots were not necessarily owned by the Trump campaign team; Woolley and Guilbeault suggest (2017: 8) that citizen-built bots probably accounted for the largest spread of propaganda, false information and political attacks during the election. Both pro-Clinton and pro-Trump bot accounts existed, but their analysis is that pro-Trump bots were five times more active. This not only affected the kinds of coverage each candidate received within Twitter but also shaped what was reported on other news sites: Allcott and Gentzkow (2017) argue that this pro-Trump skew remained visible on sites that drew from social media, including mainstream and ‘fake news’ sites, and in the stories that were shared on Facebook.

Citton argues that this kind of system creates a particularly effective and insidious form of censorship: ‘everything is allowed, everything is available, but only a very small minority is visible’ (2017: 72). Consequently, individual attention is aligned with the dominant directions of collective attention, guided by the priorities structured into the system’s algorithms (and manipulated by those with the resources to ‘re-programme’ the system), without the need to actually ban or exclude anything. This illustrates how the post-truth rhetoric of recent politics was able to flourish on Twitter: attention can be directed towards specific opinions, so that questions about credibility or veracity can be drowned out.

Policy Infrastructure

Ford and Wajcman (2017) refer to the policies and laws that operate on Wikipedia, and specifically, the way in which these are invoked in order to establish authority. These are used to delimit what is sayable, enabling users with a greater understanding of policies to engineer a ‘consensus’ with other users that favours their preferred position.

Twitter’s explicit commitment to free expression means that it has few policies about what is or is not sayable. For example, Twitter does not provide a statement of policy on dispute resolution; arguably, such a policy would be at odds with the logics of the installed base that underlie the service.

As a consequence, Twitter has been described as operating like the ‘Wild West’ (Woolley and Guilbeault 2017), and reports of bullying and harassment are widespread. High profile cases of misogyny, including rape and death threats, together with media coverage of suicides following cyberbullying, have drawn attention to the systematic inequalities and abuse that take place on Twitter (Agate and Ledward 2013).

Twitter is aware of these issues and has responded by identifying behaviours that are considered unacceptable, which might be understood as part of the policy infrastructure in that they can be invoked as rules or laws. For example, Twitter’s ‘safety’ page (Note 2) asserts that ‘we are committed to creating a culture of trust, safety, and respect’; this is followed by statements of the company’s commitment not to tolerate threats or violence against a person or group. The incidents described by Agate and Ledward (2013) also led to the company introducing a ‘report abuse’ button, creating a software layer enactment of the policy.

However, not everyone believes that these commitments constitute policy infrastructure in a particularly meaningful way. As a company, Twitter has funded public organisations interested in online safety, and has set up a policy action committee that donates money to US candidates and committees committed to principles such as Internet freedom and net neutrality. Nevertheless, it has been criticised for failing to enact these values within its own platform. There is certainly a perception that Twitter’s own safety policies are not systematically applied; this undermines their credibility, and consequently, the authority that can be gained by invoking them.

Well-organized prompting encourages digital brownshirts to pile abuse (including offline abuse, after ‘doxxing’) on vocal opponents of white supremacist and similar groups, and on the politicians with which they ally. Given that the company Twitter has made no significant efforts to deter abuse, the platform has stabilized as a site for actions that would be illegal in many places. (Sismondo 2017: 4)

Actual evidence about the enactment of these policies is mixed. For example, Matias et al. (2015) reported that in 55% of cases where reports of harassment were escalated to Twitter, action was taken to delete, suspend or warn the alleged harassing account. These actions mainly concerned reports of hate speech, threats of violence and nonconsensual photography.

However, this same report describes how Twitter’s policies created inequalities that favour abusers over those who report the abuse. For example, the company requires evidence of harassment before it will act, but has refused to accept screenshots as evidence. This has made it difficult to act on ‘tweet and delete’ harassment, in which the poster deletes the offensive message so that it becomes inaccessible, making the claim unverifiable. Although this demand for evidence may be understandable, given the legal risks of action against the company, it is ironic, given that no evidence is required to post in the first place. This underscores the way in which conversations on Twitter operate according to their own distinctive logic, rather than to the kinds of standard that might be expected in other contexts.

The effects of such ‘trolling’ (a term used to cover various forms of harassment through social media) were explored with a survey of 338 Twitter users following the 2016 US election. Olson and LaPoe (2017) described a ‘spiral of silence’, in which groups that were already marginal (including women, people with disabilities and people who identify as LGBTQIA) were attacked disproportionately often, and as a consequence, chose to self-censor their views online. This is far from an isolated case: as Owen et al. (2017) argue, the levels of abuse that are permitted by policies intended to encourage free speech have silenced women around the world who were previously willing to speak out on political issues. In a system driven by measures of attention, this consolidates the power of already-dominant groups; in other words, Twitter’s laissez-faire policies have exacerbated rather than mitigated existing inequalities and prejudices.

Discussion

The analysis above helps to explain the association between post-truth political rhetoric and Twitter. From its underlying logic and material substrate, through the ways in which its programmable interface has been taken up to marshal and manage attention, to the ways that values of ‘free speech’ have allowed powerful groups to silence others, Twitter seems an ideal platform for supporting political positions advanced through emotion, force and repetition.

However, treating this as a simple, deterministic position would be a mistake, ignoring the ways in which technologies can be enrolled by different social groups (Oliver 2005). This situation is historically contingent; from the outset, things could have been otherwise. As Rogers’ history illustrates (2013), elements of the installed base such as hashtags were user-initiated developments, albeit ones inherited from practices on other platforms; the installed base would have been different, had users unfamiliar with IRC conventions shaped its early stages of development. This contingency illustrates how the infrastructure of Twitter is itself a social effect, as well as an influence.

Even within the historically contingent infrastructure currently constituting Twitter, the installed base does not determine users’ practices; there are always counter-examples that show the fluidity of the system, and how things could be otherwise. For example, Twitter is an important part of many scholars’ professional practice (Veletsianos 2011), supporting academic engagement and debate, and allowing scholars to link discussions to relevant peer-reviewed work, reflecting their academic expertise. Moreover, even confrontational postings can play socially constructive roles, such as when social media became a platform for the development of citizenship, politics and coordination during the Egyptian uprising and the Arab Spring (Lim 2012). Twitter can also support diversity, allowing cultural groups to communicate and coordinate. For example, Clark (2014) has shown that, although African-Americans have been positioned as an ‘out-group’ on Twitter, Black Twitter hashtags have created a strong presence through the repetition and manipulation of culturally significant phrases, images and wordplay associated with offline African-American experiences. Lee (2017) has further described how these hashtags have been used to redefine media images, to highlight counter-narrative testimonials, and to organise and build communities that have supported education, healing and positive action.

It would be a mistake to universalise the analysis of any of the layers of Twitter’s infrastructure. For example, although bots on Twitter have been used to overwhelm expert opinion and the views of marginalised groups by amplifying powerful messages, bots on Wikipedia have been used in a very different way. There, their role was to flag areas as ‘citation needed’, effectively slowing down, interrupting or challenging authors rather than accelerating the discussion (Ford and Wajcman 2017: 520).

It would also be unwise to overplay the novelty of Twitter in supporting particular forms of political rhetoric. Allcott and Gentzkow (2017) make the point that concerns about the influence of technology on political debates predate discussions of ‘filter bubbles’, and can be traced back through the influence of television in promoting ‘telegenic’ politicians, the way radio was believed to reduce debate to soundbites, and to the rise of cheap printing in the nineteenth century, which allowed partisan newspapers to expand their reach dramatically. Kelkar (2019), developing this last point, draws attention to the historical specificity of the current belief that news should be anything other than partisan: newspapers developed from being mouthpieces for particular political interests to addressing broader audiences only after the mid-nineteenth century, and the journalistic ideal of unbiased reporting only gained traction as a response to widespread concern about propaganda during World War I. Twitter does not represent a ‘singularity’ or even a radical break from previous media but is just the latest development in a long history. Infrastructure has always had a role to play in political debate.

Conclusions

The growth of post-truth political rhetoric can be seen as part of a wider social scepticism, and the valuing of affective response over evidence. This has epistemic consequences, allowing populism to gain ground over expertise. This development has proved hard for academics to resist: reflexive self-critique is an important part of academic expertise, and reasonable doubt is too easily recast as equivocation or uncertainty, making it easy to undermine.

It is widely asserted that social media has played a role in this, but exactly what this role consists of has been hard to specify. By analysing Twitter from a postdigital perspective—one which explores connections between the material, digital and social elements of the platform, illustrating how specific tweets sit alongside questions about server farms, bots and personal safety—two main conclusions can be drawn.

Firstly, post-truth political rhetoric exemplifies the kind of perverse critique that Latour lamented (2004), undermining scientific knowledge through and for personal or political power.

However, reframing both post-truth claims and expert knowledge alike as matters of concern draws attention to the ways in which both of these use rhetoric to enrol publics in particular views of the world. This case may therefore make a modest contribution to feminist STS scholarship and ‘third wave’ science studies, which have worked to reveal and intervene in divisive binaries such as these.

Secondly, the analysis explains why post-truth politics have been so strongly associated with Twitter. Closely related logics have been instantiated at every level of Twitter’s infrastructure. Twitter was designed to let anyone say anything, and to concentrate attention on what is already popular; in contrast to Wikipedia, Twitter’s design skews discussions away from careful debate grounded in evidence, and towards the extreme, favouring sensation, repetition and force. This analysis has therefore offered a new explanation of the structuring, normalising influence of Twitter on post-truth discussions.

It is important to add the caveat that this should not be read as a deterministic position; subversive or counter-cultural practices show that it could have been otherwise, and still could be. However, even though any technology can be understood as ‘fluid’, being configured, enacted and experienced in different ways by its users (e.g. Enriquez 2009), the material and social infrastructure of Twitter does encourage particular kinds of practice and discourage others. It may not be to blame for causing Trump’s victory—although Trump has said himself that he may not have been elected without it (Musil 2017)—but it did provide a comfortable environment in which his favourite kinds of political rhetoric were able to flourish. Lanier (2018) has described social media as a mass behaviour modification machine, rented out to politicians and brands to make money, and suggests idealistically that a new business model is needed. Sadly, whether or not this diagnosis is correct, such a change is impractical when Twitter is already embedded in millions of users’ social practices; nor would it remove the possibility that any migration of users might recreate the logics of Twitter as part of the installed base of a replacement platform (Leigh Star 1999).

Finally, I wish to return to the fifth step that differentiates matters of care from matters of concern: the need for reflexivity and the development of new articulations that strengthen cherished ‘things’. Post-truth rhetoric substitutes emotive, populist appeals for reasoned debate and academic expertise, and it would be disingenuous to suggest that a paper such as this is entirely neutral in responding to that situation.

Indeed, Kelkar concludes (2019: 102) that whilst it is possible to pursue a neutral, symmetrical analysis of the polarisation of publics around different ‘fact-making’ institutions, ultimately, ‘disagreements over facts are often just symptoms of a wider disagreement over values, and […] these disagreements are materially, institutionally, and historically structured’. Consequently, any appeal to expertise (understood as objective, rational empiricism) is already politically aligned; work such as this paper is both produced by, and strengthens, a liberally aligned demarcation of people and institutions. (And likewise, any rejection of expertise is politically aligned.)

Given that there is no way out of the politics of expertise, it is important to clarify what work this paper might do. The analytic purpose of this paper is to delineate questions about the relationship between media and expertise more clearly, in order to identify the conditions under which engagement could be developed between the polarised publics that have been constituted around different kinds of fact-making institutions. If the production of different kinds of fact is partly a question of rhetoric, one potentially generative way forward would be for all parties to broaden the repertoire of rhetorics that they currently deploy: for example, liberally aligned institutions could pursue more emotive, populist arguments, and conservatively aligned institutions could engage more in reasoned, evidence-based debate. The irony of calling for this in an academic publication is clear; this argument works within, rather than challenging, the preferences of liberally aligned institutions such as the public university that employs me. However, although this paper is unlikely to change the world, the cherished ‘thing’ that I may be able to strengthen with this is the institution of academic work. Thus, my intention here is to achieve two things: to reveal the workings of post-truth rhetoric on Twitter, which may make it easier for those working in academia to see, to name and to resist; and to suggest ways in which academics could take action against these developments.

Citton (2017) described how:

Attention is first of all structured (and spellbound) by collective enthralments, which are inextricably architectural and magnetic, and which are induced by media apparatuses circulating certain forms (rather than others) among and within us. (Citton 2017: 31)

Although Twitter has made post-truth political rhetoric easier, I suggest it may yet be possible to find ways either to resist enthralment by learning to play this game better or even by engaging with the fundamental logic that favours these specific forms in the first place. Rather than feeling limited to either opting out or remaining silent, it may instead be possible to change the debate through, for example, coopting the software layer by marshalling bots in more effective ways, developing laws that protect groups from violence and aggression (on any platform, not just Twitter), or simply by turning the sceptical gaze back onto the political moves made to discredit expertise. Action as well as analysis may be required, perhaps working along the lines suggested by the Manifesto for Teaching Online (Bayne et al. 2016). The challenge of infrastructure is that the logics that favour post-truth rhetoric have already been locked into Twitter’s design; as a result, ultimately, the effort needed to change it may prove too great to marshal. However, careful analysis may be able to create opportunities worth trying.

Notes

https://about.twitter.com/en_us/values.html; accessed 15/10/17.

https://about.twitter.com/en_us/safety.html; accessed 15/10/17.

References

Agate, J., & Ledward, J. (2013). Social media: how the net is closing in on cyber bullies. Entertainment Law Review, 24(8), 263–268.

Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236. https://doi.org/10.1257/jep.31.2.211.

Altbach, P. G. (2001). Academic freedom: international realities and challenges. Higher Education, 41(1–2), 205–219. https://doi.org/10.1023/A:1026791518365.

Apperley, T., & Parikka, J. (2018). Platform studies’ epistemic threshold. Games and Culture, 13(4), 349–369. https://doi.org/10.1177/1555412015616509.

Bayne, S., Evans, P., Ewins, R., Knox, J., Lamb, J., Macleod, H., O’Shea, C., Ross, J., Sheail, P., & Sinclair, C. (2016). Manifesto for teaching online 2016. https://blogs.ed.ac.uk/manifestoteachingonline/.

Bennett, S., Maton, K., & Kervin, L. (2008). The ‘digital natives’ debate: a critical review of the evidence. British Journal of Educational Technology, 39(5), 775–786. https://doi.org/10.1111/j.1467-8535.2007.00793.x.

BERA – British Educational Research Association. (2018). Ethical guidelines for educational research (4th ed.). https://www.bera.ac.uk/researchers-resources/publications/ethical-guidelines-for-educational-research-2018. Accessed 16 September 2019.

Bogost, I., & Montfort, N. (2009). Platform studies: frequently questioned answers, Digital Arts and Culture 2009. http://bogost.com/downloads/bogost_montfort_dac_2009.pdf. Accessed 16 September 2019.

Bowker, G., & Star, S. (2000). Sorting things out: classification and its consequences. Cambridge, MA: MIT Press. https://www.ics.uci.edu/~gbowker/classification/. Accessed 16 September 2019.

Bowker, G., Baker, K., Millerand, F., & Ribes, D. (2010). Toward information infrastructure studies: ways of knowing in a networked environment. In J. Hunsinger, L. Klastrup, & M. Allen (Eds.), International handbook of internet research (pp. 97–117). Netherlands: Springer. http://interoperability.ucsd.edu/docs/07BowkerBaker_InfraStudies.pdf. Accessed 16 September 2019.

Citton, Y. (2017). The ecology of attention (trans. Barnaby Norman). Cambridge: Polity Press.

Clark, M. (2014). To tweet our own cause: a mixed-methods study of the online phenomenon ‘Black Twitter’. Doctoral dissertation, University of North Carolina at Chapel Hill. https://search.proquest.com/docview/1648168732. Accessed 16 September 2019.

Collins, H., Evans, R., & Weinel, M. (2017). STS as science or politics? Social Studies of Science, 47(4), 580–586. https://doi.org/10.1177/0306312717710131.

de Laet, M., & Mol, A. (2000). The Zimbabwe bush pump: mechanics of a fluid technology. Social Studies of Science, 30(2), 225–263. https://doi.org/10.1177/030631200030002002.

Del Vicario, M., Zollo, F., Caldarelli, G., Scala, A., & Quattrociocchi, W. (2017). Mapping social dynamics on Facebook: the Brexit debate. Social Networks, 50, 6–16. https://doi.org/10.1016/j.socnet.2017.02.002.

Enriquez, J. G. (2009). From bush pump to blackboard: the fluid workings of a virtual environment. E-learning and Digital Media, 6(4), 385–399. https://doi.org/10.2304/elea.2009.6.4.385.

Ford, H., & Wajcman, J. (2017). ‘Anyone can edit’, not everyone does: Wikipedia’s infrastructure and the gender gap. Social Studies of Science, 47(4), 511–527. https://doi.org/10.1177/0306312717692172.

Forss, K., & Magro, L. (2016). Facts or feelings, facts and feelings? The post-democracy narrative in the Brexit debate. European Policy Analysis, 2, 12–17. https://doi.org/10.18278/epa.2.2.2.

Gore, A. (2006). An inconvenient truth: the planetary emergency of global warming and what we can do about it. New York: Rodale.

Harsin, J. (2015). Regimes of posttruth, postpolitics, and attention economies. Communication, Culture & Critique, 8(2), 327–333. https://doi.org/10.1111/cccr.12097.

Hashemi, M. (2017). The infrastructure behind Twitter: scale. Twitter, 19 January. https://blog.twitter.com/engineering/en_us/topics/infrastructure/2017/the-infrastructure-behind-twitter-scale.html. Accessed 16 September 2019.

Huberman, B., Romero, D., & Wu, F. (2009). Social networks that matter: Twitter under the microscope. First Monday, 14(1). http://firstmonday.org/ojs/index.php/fm/article/view/2317/2063. Accessed 16 September 2019.

Igira, F., & Aanestad, M. (2009). Living with contradictions: complementing activity theory with the notion of “installed base” to address the historical dimension of transformation. Mind, Culture, and Activity, 16(3), 209–233. https://doi.org/10.1080/10749030802546269.

Jandrić, P., Knox, J., Besley, T., Ryberg, T., Suoranta, J., & Hayes, S. (2018). Postdigital science and education. Educational Philosophy and Theory, 50(10), 893–899. https://doi.org/10.1080/00131857.2018.1454000.

Kelkar, S. (2019). Post-truth and the search for objectivity: political polarization and the remaking of knowledge production. Engaging Science, Technology, and Society, 5, 86–106. https://doi.org/10.17351/ests2019.268.

Krebs, R. R., & Jackson, P. T. (2007). Twisting tongues and twisting arms: the power of political rhetoric. European Journal of International Relations, 13(1), 35–66. https://doi.org/10.1177/1354066107074284.

Lanier, J. (2018). Ten arguments for deleting your social media accounts right now. New York: Random House.

Latour, B. (2004). Why has critique run out of steam? From matters of fact to matters of concern. Critical Inquiry, 30(2), 225–248. https://doi.org/10.1086/421123.

Latour, B. (2005). Reassembling the social: an introduction to actor-network-theory. Oxford: Oxford University Press.

Latour, B., & Woolgar, S. (1979). Laboratory life: the construction of scientific facts. Princeton, NJ: Princeton University Press.

Lim, M. (2012). Clicks, cabs, and coffee houses: social media and oppositional movements in Egypt, 2004–2011. Journal of Communication, 62(2), 231–248. https://doi.org/10.1111/j.1460-2466.2012.01628.x.

Lynch, M. (2017). STS, symmetry and post-truth. Social Studies of Science, 47(4), 593–599. https://doi.org/10.1177/0306312717720308.

Lyotard, J. F. (1984). The postmodern condition: a report on knowledge. Minneapolis: University of Minnesota Press.

MacKenzie, A., & Bhatt, I. (2018). Lies, bullshit and fake news: some epistemological concerns. Postdigital Science and Education. https://doi.org/10.1007/s42438-018-0025-4.

Martin, A., Myers, N., & Viseu, A. (2015). The politics of care in technoscience. Social Studies of Science, 45(5), 625–641. https://doi.org/10.1177/0306312715602073.

Matias, J. N., Johnson, A., Boesel, W. E., Keegan, B., Friedman, J., & DeTar, C. (2015). Reporting, reviewing, and responding to harassment on Twitter. Women, Action, and the Media, 13 May. https://arxiv.org/pdf/1505.03359.pdf. Accessed 16 September 2019.

Musil, S. (2017). Twitter’s co-founder is sorry if the site helped elect Trump. CNET. https://www.cnet.com/news/twitter-cofounder-sorry-if-site-helped-elect-trump-evan-williams/. Accessed 16 September 2019.

Nguyen, C. (2018). Echo chambers and epistemic bubbles. Episteme, 1–21. https://doi.org/10.1017/epi.2018.32.

Oliver, M. (2005). The problem with affordance. The E-Learning Journal, 2(4), 402–413. https://doi.org/10.2304/elea.2005.2.4.402.

Oliver, M. (2011). Technological determinism in educational technology research: some alternative ways of thinking about the relationship between learning and technology. Journal of Computer Assisted Learning, 27(5), 373–384. https://doi.org/10.1111/j.1365-2729.2011.00406.x.

Olson, C., & LaPoe, V. (2017). “Feminazis,” “libtards,” “snowflakes,” and “racists”: trolling and the Spiral of Silence effect in women, LGBTQIA communities, and disability populations before and after the 2016 election. The Journal of Public Interest Communications, 1(2), 116. https://doi.org/10.32473/jpic.v1.i2.p116.

Owen, T., Noble, W., & Speed, F. (2017). Silenced by Free Speech: how cyberabuse affects debate and democracy. In T. Owen, W. Noble, & F. Speed (Eds.), New perspectives on cybercrime (pp. 159–174). Cham, Switzerland: Palgrave Macmillan.

Pettman, D. (2016). Infinite distraction. London: Polity.

Plantin, J. C., Lagoze, C., Edwards, P. N., & Sandvig, C. (2018). Infrastructure studies meet platform studies in the age of Google and Facebook. New Media & Society, 20(1), 293–310. https://doi.org/10.1177/1461444816661553.

Puig de la Bellacasa, M. (2011). Matters of care in technoscience: assembling neglected things. Social Studies of Science, 41(1), 85–106. https://doi.org/10.1177/0306312710380301.

Ratkiewicz, J., Conover, M., Meiss, M. R., Gonçalves, B., Flammini, A., & Menczer, F. (2011). Detecting and tracking political abuse in social media. In Proceedings of the Fifth International AAAI Conference on Weblogs and Social Media (pp. 297–304). Menlo Park, CA: Association for the Advancement of Artificial Intelligence. https://www.aaai.org/ocs/index.php/ICWSM/ICWSM11/paper/viewFile/2850/3274/. Accessed 16 September 2019.

Rogers, R. (2013). Debanalizing Twitter: the transformation of an object of study. In Proceedings of the 5th annual ACM web science conference (pp. 356–365). New York: ACM press.

Shin, J., & Thorson, K. (2017). Partisan selective sharing: the biased diffusion of fact-checking messages on social media. Journal of Communication. https://doi.org/10.1111/jcom.12284.

Sismondo, S. (2017). Post-truth? Social Studies of Science, 47(1), 3–6. https://doi.org/10.1177/0306312717692076.

Spohr, D. (2017). Fake news and ideological polarization: filter bubbles and selective exposure on social media. Business Information Review, 34(3), 150–160. https://doi.org/10.1177/0266382117722446.

Star, S. L. (1999). The ethnography of infrastructure. American Behavioral Scientist, 43(3), 377–391. https://doi.org/10.1177/00027649921955326.

Starosielski, N. (2014). Media, hot & cold! The materiality of media heat. International Journal of Communication, 8(5), 2504–2508. https://ijoc.org/index.php/ijoc/article/view/3298/1268. Accessed 16 September 2019.

Taffel, S. (2016). Perspectives on the postdigital: beyond rhetorics of progress and novelty. Convergence: The International Journal of Research into New Media Technologies, 22(3), 324–338. https://doi.org/10.1177/1354856514567827.

Thompson, G., Savage, G. C., & Lingard, B. (2016). Think tanks, edu-businesses and education policy: issues of evidence, expertise and influence. Australian Educational Researcher, 43, 1–13. https://doi.org/10.1007/s13384-015-0195-y.

Veletsianos, G. (2011). Higher education scholars’ participation and practices on Twitter. Journal of Computer Assisted Learning, 28(4), 336–349. https://doi.org/10.1111/j.1365-2729.2011.00449.x.

Warner, M. (2002). Publics and counterpublics. Public Culture, 14(1), 49–90. https://muse.jhu.edu/article/26277. Accessed 16 September 2019.

Woolley, S., & Guilbeault, D. (2017). Computational propaganda in the United States of America: manufacturing consensus online. Working Paper 2017.5. Oxford, UK: Oxford Internet Institute. http://comprop.oii.ox.ac.uk/wp-content/uploads/sites/89/2017/06/Comprop-USA.pdf. Accessed 16 September 2019.

Zhang, Y., Wells, C., Wang, S., & Rohe, K. (2018). Attention and amplification in the hybrid media system: the composition and activity of Donald Trump’s Twitter following during the 2016 presidential election. New Media & Society, 20(9), 3161–3182. https://doi.org/10.1177/1461444817744390.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Oliver, M. Infrastructure and the Post-Truth Era: is Trump Twitter’s Fault? Postdigit Sci Educ 2, 17–38 (2020). https://doi.org/10.1007/s42438-019-00073-8


DOI: https://doi.org/10.1007/s42438-019-00073-8