Abstract

Purpose

Structural damage can significantly alter a system's local flexibility, leading to undesirable displacements and vibrations. Statistical analysis of the structure's dynamic features makes it possible to discriminate its current condition and to predict its short- or long-term lifespan. Because cracks and discontinuities directly affect the system's vibration, they can be detected, and their severity quantified, with a damage index (DI). Two DIs, computed from the beam's natural frequencies and frequency response function (FRF) under undamaged and damaged conditions, are used to build the dataset, and numerical and experimental tests provided the data for this data-driven approach.

Methods

In this paper, we present a methodology based on machine learning (ML) to monitor the structural integrity of a beam-like structure. The performance of five ML algorithms, namely k-nearest neighbors (kNN), Support Vector Machine (SVM), Decision Tree (DT), Random Forest (RF), and Naive Bayes (NB), is investigated.

Results

The paper discusses the challenges of implementing each technique and assesses their performance in accurately classifying the dataset and indicating the beam's integrity.

Conclusion

The structural monitoring performed with the ML algorithms achieved excellent metrics: up to 100% with the simulation-generated dataset and up to 95% with the dataset obtained from experimental tests. This demonstrates that the ML algorithms can correctly classify the health condition of the structure.

Introduction

Aerospace, civil, and mechanical systems commonly use beam-like structures that, under adverse conditions, can accumulate damage and develop cracks. Crack damage can be considered any change in the local flexibility of a structure that creates undesirable displacements and vibrations [1]. Damage identification has traditionally been performed through periodic inspection, non-destructive testing/non-destructive evaluation, or visual observation. Structural health monitoring (SHM) has emerged to move from offline damage identification to near real-time, online damage assessment. Statistical analysis of information from such structures allows us to determine their current condition and to predict it over short or long periods [2, 3]. Techniques that correlate the signal response of a system with damage must adopt a reference feature and a starting point for the monitoring stage. Therefore, the system condition at which the reference signature is recorded must be considered healthy, i.e., without fault. The first step of SHM is damage detection, followed by assessment and monitoring. Machine learning (ML) techniques have been used to develop feasible algorithms for predicting potential damage.

ML algorithms provide the tools needed to enhance the capabilities of SHM systems and offer intelligent solutions to past challenges. They offer efficient ways to build models that map input patterns in measured sensor data to output targets for damage assessment at different levels [4]. Machine learning fits into the paradigm of feature selection and statistical modeling for feature discrimination described in [5, 6]. Features can be extracted with computer vision-based techniques, using cameras or digital images, or with sensor-based techniques, which capture information on the system's vibration, e.g., modal parameters. Sun et al. [7] encoded the original train weigh-in-motion time series as images to identify bridge damage. They used AdaBoost, support vector machine (SVM), k-nearest neighbors (kNN), and a linear classification model (LC) and found that SVM gave higher prediction accuracy and shorter computation time than the other models.

Iyer et al. [8] implemented a multi-robot, image-encoded system capable of monitoring and detecting surface defects in railroad tracks, including fractures, squats, undulations, and rust. Four machine learning algorithms were compared: convolutional neural networks (CNN), artificial neural networks (ANN), Random Forest (RF), and SVM, and the CNN model outperformed the others. The proposed system helped eliminate the need for visual inspection, as its automated alert mechanism allowed real-time visual and location-based tracking of faults in railway lines. Farrar and Worden [9] applied neural networks, SVM, and genetic algorithms (GA) to SHM. Later, Nick et al. [10] extended ML applications in SHM using Gaussian classifiers, SVM, RF, and AdaBoost. Kurian and Liyanapathirana [6] used three ML algorithms, kNN, SVM, and RF, together with sensor technology to predict structural damage in concrete structures. The RF classifier predicted both damaged and undamaged conditions more accurately than the other algorithms.

Pratico et al. [11] used eight ML classifiers (e.g., multilayer perceptron (MLP), CNN, RF, and support vector classifier (SVC)) to classify the vibro-acoustic signatures of road pavements with different cracks, and the SVC classifier achieved the highest accuracy among the methods. Daneshvar and Sarmadi [12] proposed a new damage detection method that multiplies each feature's local density by its minimum distance value in the training samples. They also considered the nearest neighbor rule of a class, calculated through a new probabilistic method based on the theory of semi-parametric extreme values (SEV). Comparative studies on two bridges revealed that the SEV method outperforms well-known anomaly detectors, namely k-means clustering (KMC), the Gaussian mixture model (GMM), and ANN, delivering lower error rates and better damage detectability. Particle swarm optimization combined with a support vector machine (PSO-SVM), studied by Coung-Le et al. [13], proved to be a good technique for identifying damage in truss and frame structures using modal parameters. He et al. [14] combined deep convolutional neural networks (DCNN) and the fast Fourier transform (FFT) for damage detection in a three-story building. The experimental results showed that the proposed method achieves higher precision than classic machine learning algorithms such as SVM, RF, kNN, and extreme gradient boosting (XGBoost).

Damage identification in beams using ML has been explored by Ghadimi and Kourehli [15]. The authors implemented a modified extreme learning machine for a supported beam, a cantilevered beam, and a fixed-simply supported beam, with and without noise, and for frames, using natural frequencies and mode shapes. The results indicated that the proposed method is fast and accurate in detecting and estimating cracks in beam and frame structures. Gillich et al. [16] sought to identify the location and severity of a crack in a cantilevered beam from its natural frequencies. The beam had different fixation levels (ideal and non-ideal fixation). The errors in estimating crack location and severity were smaller with the ANN method than with the RF method. Different statistical classifiers, such as Bayesian, kNN, RF, and SVM, were used in [17] to identify damage on a plate from sensor signals from which the baseline had been subtracted. The authors found that the SVM and RF classifiers worked best on the constructed dataset, as they had the lowest error rates. Liu and Meng [18] found SVM to be a promising method for damage diagnosis and applied it to identify and locate damage in cantilevered beams, with and without noise. Ashigbi et al. [19] implemented a fuzzy inference system to predict crack depth and location along fifteen beams, only one of which was intact. The inputs to the fuzzy system were the first natural frequency and the kurtosis of the vibration response signal. A set of fuzzy rules was learned from the experimental datasets and applied with triangular, Gaussian, trapezoidal, and bell-shaped membership functions. This method offers a practical solution for continuous damage assessment and decision-making, both critical components of an SHM strategy.

In SHM, a typical approach is to monitor, locate, categorize, and estimate the severity of structural damage together. It therefore remains a great challenge to establish a machine learning monitoring algorithm with strong generalization ability for multi-task monitoring based on vibration signals. This paper addresses the integration of physics-based models with machine learning algorithms for structural health monitoring. We propose a methodology that combines SHM damage indexes with ML algorithms to improve the accuracy and efficiency of damage assessment for dynamic structures. The methodology involves pre-processing and feature selection of the input data, training and validation of the ML model, and evaluation of the results with appropriate metrics. Five supervised machine learning algorithms for structural damage detection are described and tested in the context of SHM. The dataset is built from random samples of the cracked beam's dynamic response, in which the parameters of the crack model are treated as random variables. The kNN, SVM, Decision Tree (DT), RF, and Naive Bayes (NB) algorithms are relevant when the features extracted from the structural responses are affected both by operational and environmental variability, such as noise, and by changes caused by damage. The natural frequencies and the receptance frequency response function (FRF) are used to calculate damage indexes (DIs), which serve as inputs to the ML algorithms. Because a crack directly influences the system's vibration, the DI can detect damage and quantify its severity. Our findings demonstrate that the proposed methodology can assess damage accurately. We discuss the implications and limitations of the study and suggest future research directions for advancing the field of SHM.

Machine Learning for Health Monitoring in Beam-Like Structure

Machine learning algorithms provide the necessary tools to expand the capabilities of SHM systems [20]. They offer efficient ways to build models that map input patterns in measured sensor data to output targets for damage assessment at different levels [4]. ML fits into the paradigm of feature selection and statistical modeling for feature discrimination described in [2, 5]. The most common learning approaches in ML for the SHM framework are supervised, unsupervised, and semi-supervised methods [21]. Both supervised and unsupervised learning are commonly used to detect the existence and location of damage in a structure and to measure its severity. Supervised learning is the most suitable approach in the relatively rare scenarios where data from both damaged and undamaged states of an engineering structure are available. In this case, group classification and regression analysis are the primary supervised learning methods. Hence, numerous machine learning algorithms have been implemented to perform tasks ranging from simple to complex.

In the literature published between 1996 and 2022, approximately 92 articles related to this study were found. The articles were selected with the Proknow-C process [22, 23], a robust method for selecting theoretical references. Between 2018 and 2021, the number of related studies increased sharply, from 13 publications in 2018 to over 26 in 2021. Figure 1 shows this upward trend in the use of ML techniques for monitoring beam-like structures.

A bibliometric network built with VOSviewer shows the links between SHM and the most used ML algorithms. VOSviewer is a tool for mapping networks of topics, in which the distance between two nodes indicates the strength of their relationship and the node size represents the number of occurrences. Figure 2 gives a broad view of the most discussed topics in the literature, providing insight into research trends in the SHM and machine learning field. SHM, damage identification, and damage detection have the strongest links with the ML methods. The most used supervised algorithms shown in Fig. 2 are SVM, decision tree, random forest, artificial neural networks, and kNN; the unsupervised algorithms are k-means clustering and auto-encoders.

According to Sun et al. [24], the most used supervised learning algorithms in structural design and construction and in structural performance evaluation are linear regression, kernel regression, tree-based algorithms, logistic regression, SVM, kNN, and neural networks. Vitola et al. [25] and Tibaduza et al. [26] used different kNN algorithms, with a piezoelectric sensor network to obtain the database. In Vitola's work, the authors inspected and evaluated damage on a rectangular aluminum profile, an aluminum plate, and a composite plate, and fine kNN and weighted kNN performed best among the algorithms studied. In Tibaduza's paper, the authors analyzed a sandwich structure composed of carbon fiber reinforced polymer (CFRP) and a CFRP composite plate, obtaining results for kNN similar to Vitola's. Lautour and Omenzetter [27] used supervised nearest neighbor classification and learning vector quantization to study a 3-story laboratory rack structure in an undamaged and several damaged states; both classification techniques were able to classify the damage. Salehi et al. [28] studied three supervised algorithms (SVM, kNN, and ANN) to evaluate a damage detection approach on an aircraft wing stabilizer subjected to dynamic loading. The results demonstrate that the ML-based SHM methodology efficiently detects damage with a new self-powered sensor network, even with noisy and incomplete binary data. Coelho et al. [29] presented a methodology for mining sensor signal data in an SHM framework for damage classification using SVM. A hierarchical decision tree structure was built for damage classification, and experiments were conducted on metallic and composite structures. They also demonstrated that a binary tree structure reduces the computational intensity of each successive classifier and improves the algorithm's efficiency. The results show that this type of architecture works well for large datasets because fewer comparisons are required.

Abdeljaber et al. [30] used CNN to identify damage in a four-story structure with data from both damaged and undamaged cases, and found the 1D-CNN successful. Ebrahimkhanlou and Salamone [31] implemented auto-encoders and a CNN to locate sources of acoustic emission (AE). They placed sensors on metal plates with reinforcements connected by rivets to locate damage and fatigue cracks, experimentally simulated by Hsu-Nielsen pencil-lead break tests. The results show that both deep learning networks can learn to map AE signals to their sources. Islam and Kim [32] proposed crack detection based on a deep convolutional neural network (DCNN) consisting of a fully convolutional network (FCN) with an encoding and decoding framework for semantic classification that detects cracks precisely. The experimental results indicate that the proposed method is highly effective for classifying cracks. Nejad et al. [33] combined ANN with empirical mode decomposition (EMD) and the discrete wavelet transform (DWT) to process acceleration responses measured on an offshore jacket-like platform. The results indicated that DWT is a more reliable signal processing method than EMD for detecting damage, owing to its better noise reduction. Nunes et al. [34] implemented a methodology that combines supervised (ANN) and unsupervised (k-means clustering) classification methods into a hybrid classifier. The robustness of the approach was evaluated with data from numerical simulations and from experimental tests carried out in the laboratory and in situ. The hybrid classifier performed well, identifying previously known behaviors and detecting new structural conditions. Hung et al. [35] applied four prominent deep learning algorithms, multilayer perceptron, long short-term memory network, 1D-CNN, and 2D-CNN, to detect structural damage from raw data. A 1D continuous beam under random excitation, a 2D steel structure subjected to earthquake ground motion, and a 3D cable-stayed bridge under vehicular loads were investigated. The results highlight the high reliability of 2D-CNN and the good balance between accuracy and complexity of long short-term memory and 1D-CNN. Yang et al. [20] presented preliminary results of dynamic modeling of beam structures using physics-informed ANN. The authors also incorporated domain knowledge of NDI/SHM (visual inspection, impact) into the machine learning pipeline to evaluate the damage detection paradigm for non-contact full-field measurements.

New algorithms have been tested in the search for effective damage identification. Bull et al. [36] applied an online classification algorithm to the Z24 bridge data, a machining dataset from AE, and ground vibration test measurements, and found that active learning improves online classification performance in damage detection and classification experiments. Najib and Nobari [37] studied a new procedure based on a nonlinear autoregressive model with exogenous inputs (NARXN). They investigated the method using a finite element model of a beam connected to a rigid support through a flexible adhesive layer. The proposed method is advantageous for building the damage pattern database and for the identification process, both of which rely on a real-world model and pattern recognition. Zanatta et al. [38] detected infrastructure damage on a road bridge and proposed a new SHM approach using spiking neural networks (SNN) applied to microelectromechanical systems (MEMS) data. The SNN can discriminate whether a structure is healthy or damaged with accuracy similar to that of the ANN. Perry, Guo, and Mahmoud [39] used a Gaussian process model to predict stress intensity factors in an automated workflow that assesses the fracture of steel structures from inspection images. A U-Net was designed to find the pixel locations of a crack. The proposed workflow allows easy inspection of steel cracks and predicts crack propagation from raw images. This paper focuses mainly on the kNN, SVM, NB, RF, and DT supervised multiclass classification algorithms, as implemented in the open-source library scikit-learn [40] (sklearn).

Machine Learning Applied in SHM

Structural monitoring is performed for two types of damage. The first is generated numerically by changing the crack depth in a cantilevered beam. The second is mass loss in a beam reinforced with added mass. The assessment uses machine learning (ML) algorithms with a multiclass approach, namely kNN, SVM, Decision Tree, RF, and Naive Bayes. Figure 3 shows the flowchart of the ML algorithm used to identify and quantify the damage. The process starts with dataset extraction, followed by splitting the data into training and testing sets (80% and 20%, respectively) and applying the ML algorithm to classify the data and provide information for damage identification and quantification. Cross-validation was performed on the 80% of the data samples used for training. All ML damage-detection implementations use scikit-learn [40], a machine learning library for Python.
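
The split-and-validate workflow described above can be sketched in a few lines of scikit-learn. This is a minimal illustration, not the authors' code: it assumes a synthetic multiclass dataset from scikit-learn's make_classification as a stand-in for the DI dataset, and the number of features and classes, the 5-fold setting, and the random seeds are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the DI dataset: 4 features (e.g., DI1..DI4), 5 severity classes.
X, y = make_classification(n_samples=800, n_features=4, n_informative=4,
                           n_redundant=0, n_classes=5, n_clusters_per_class=1,
                           random_state=0)

# 80% training / 20% testing, stratified so every class appears in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

models = {
    "kNN": KNeighborsClassifier(n_neighbors=3),
    "SVM": SVC(kernel="linear", C=100),
    "DT": DecisionTreeClassifier(random_state=0),
    "RF": RandomForestClassifier(n_estimators=100, max_depth=3, random_state=0),
    "NB": GaussianNB(),
}

results = {}
for name, model in models.items():
    # Cross-validation is run on the 80% training portion only.
    cv_acc = cross_val_score(model, X_train, y_train, cv=5).mean()
    # Refit on the full training portion, then score on the held-out 20%.
    test_acc = model.fit(X_train, y_train).score(X_test, y_test)
    results[name] = (cv_acc, test_acc)
    print(f"{name}: CV accuracy {cv_acc:.3f}, test accuracy {test_acc:.3f}")
```

Because cross_val_score only sees the training portion, the 20% test split stays untouched until the final evaluation.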

The algorithms available in the scikit-learn package provide binary or multiclass classification. To use this tool properly, it is essential to select the model correctly and to decide which kind of algorithm, supervised or unsupervised, best addresses the problem. Then, a subset of variables is chosen to train these models without any hyper-parameter adjustment, looking for the best metric, usually with less training time [48]. In this context, performance metrics are essential at several stages of the modeling process, e.g., selecting the model type, evaluating the final model, and monitoring. The available metrics are accuracy, precision, recall, and F1 score, which evaluate the performance of a classification model and are defined by

\[ \mathrm{accuracy}(y, \hat{y}) = \frac{1}{n} \sum_{i=1}^{n} \mathbf{1}\left(\hat{y}_i = y_i\right) \tag{1} \]

\[ \mathrm{precision} = \frac{TP}{TP + FP} \tag{2} \]

\[ \mathrm{recall} = \frac{TP}{TP + FN} \tag{3} \]

\[ F_1 = 2\,\frac{\mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}} \tag{4} \]

where y and \(\hat{y}\) represent the true and predicted labels, n is the number of samples, \(\mathbf{1}(\cdot)\) is the indicator function, and TP, FP, TN, and FN denote true positives, false positives, true negatives, and false negatives. scikit-learn computes precision, recall, and F1 score with the precision_recall_fscore_support function, whose average parameter was set to micro, macro, and None. With micro, TP, FP, and FN are counted globally over all classes, without distinguishing between them. With macro, each metric is computed for every class and the unweighted arithmetic mean of the class values is taken, and with None, the metric is returned for each class separately, without averaging [40].
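
A small worked example shows how the averaging options behave. The labels below are hypothetical, for three severity classes; the function names are scikit-learn's. It also illustrates that, in a single-label multiclass problem, micro-averaged precision equals accuracy, because every false positive for one class is a false negative for another.

```python
import numpy as np
from sklearn.metrics import accuracy_score, precision_recall_fscore_support

# Hypothetical true and predicted labels for three severity classes.
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])

acc = accuracy_score(y_true, y_pred)  # 4 correct out of 6

# average=None: one value per class (no averaging).
p_cls, r_cls, f_cls, support = precision_recall_fscore_support(y_true, y_pred, average=None)
# average="macro": unweighted arithmetic mean of the per-class values.
p_macro, r_macro, f_macro, _ = precision_recall_fscore_support(y_true, y_pred, average="macro")
# average="micro": TP, FP and FN are pooled over all classes first.
p_micro, r_micro, f_micro, _ = precision_recall_fscore_support(y_true, y_pred, average="micro")

# The same micro precision computed by hand from pooled counts.
classes = np.unique(y_true)
tp = sum(np.sum((y_pred == c) & (y_true == c)) for c in classes)
fp = sum(np.sum((y_pred == c) & (y_true != c)) for c in classes)
micro_precision = tp / (tp + fp)

print(acc, p_micro, micro_precision)   # all equal 4/6
print(p_cls, r_cls)                    # about [0.5, 0.667, 1.0] and [0.5, 1.0, 0.5]
```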

The overall performance of each algorithm is evaluated with these four metrics, which quantify how well the healthy and damaged conditions are classified. In addition, the confusion matrix is used to track the classification of the dataset. Its rows and columns correspond to the damage-severity classes (in scikit-learn, rows are the true classes and columns the predicted classes). This matrix reveals the damage detection patterns and classification errors of the ML techniques. The matrix elements give the counts from which TP, TN, FP, and FN are obtained for each class. The diagonal of the confusion matrix holds the correctly classified samples, i.e., the correct damage detection rate. Thus, the ideal model has high values on the diagonal and minimal values elsewhere. In this work, the ML algorithms are compared in terms of damage detection capability through their accuracy, precision, recall, F1 score, and confusion matrix.
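
As an illustration with hypothetical labels (0 = undamaged, 1 and 2 = increasing severity), scikit-learn's confusion_matrix places true classes on the rows and predicted classes on the columns. Dividing the diagonal by the row sums turns it into the per-class correct detection rate, which equals per-class recall.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical labels: 0 = undamaged, 1 and 2 = increasing crack severity.
y_true = [0, 0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 2, 2, 0]

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_true, y_pred)
print(cm)
# [[2 1 0]
#  [0 2 0]
#  [1 0 2]]

# Diagonal over row sums: fraction of each true class detected correctly.
per_class_rate = np.diag(cm) / cm.sum(axis=1)
print(per_class_rate)   # about [0.667, 1.0, 0.667]
```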

K-Nearest-Neighbor Classifier

K-nearest neighbors is one of the simplest supervised learning methods [21, 41] and is widely used for pattern recognition [6]. kNN can be used for classification, for data with discrete labels, and for regression, for data with continuous labels. The classification is computed from a simple majority vote of the nearest neighbors of each point: a query point is assigned the class with the most representatives among its nearest neighbors. This requires a distance metric between points in the feature space [41].

The most common kNN variant classifies based on the Euclidean distance, because of its ease of use, productivity, and efficiency. The Euclidean distance between two vectors xi and xj is calculated as shown in Eq. (5) [42]:

\[ \mathrm{dist}(\mathbf{x}_i, \mathbf{x}_j) = \sqrt{\sum_{l=1}^{d} \left( x_{il} - x_{jl} \right)^2} \tag{5} \]

where xi and xj are objects represented by vectors in ℜd space, and xil and xjl are elements of the vectors, which correspond to the values of the coordinate (attributes).

The kNN algorithm, in its simplest version, considers exactly one nearest neighbor, the closest training data point to the point we want to predict, and the prediction is the known output for this training point. More generally, depending on the value of the hyper-parameter k, each sample is compared with its k closest samples. For example, when k = 3, each sample is compared with its three nearest samples, and the unknown sample is classified accordingly (see Fig. 4) [41]. The optimal choice of k is highly data-dependent: in general, a larger k suppresses the effects of noise but makes the classification boundaries less distinct. In this work, k = 3 and the weight function is uniform, meaning that all points in each neighborhood are weighted equally. The leaf size, which affects the speed of tree construction and queries, is set to 30.
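
The majority-vote rule and the Euclidean distance of Eq. (5) can be sketched from scratch and checked against scikit-learn's KNeighborsClassifier configured as described (k = 3, uniform weights, leaf size 30). The two-dimensional DI pairs are hypothetical toy data, and knn_predict is an illustrative helper, not part of any library.

```python
from collections import Counter

import numpy as np
from sklearn.neighbors import KNeighborsClassifier


def euclidean(xi, xj):
    """Eq. (5): Euclidean distance between two feature vectors."""
    xi, xj = np.asarray(xi, dtype=float), np.asarray(xj, dtype=float)
    return float(np.sqrt(np.sum((xi - xj) ** 2)))


def knn_predict(X_train, y_train, x_query, k=3):
    """Majority vote among the k nearest training points (uniform weights)."""
    distances = np.array([euclidean(x, x_query) for x in X_train])
    nearest = np.argsort(distances)[:k]
    label, _ = Counter(y_train[nearest].tolist()).most_common(1)[0]
    return label


# Hypothetical DI pairs: class 0 (healthy) near (1, 1), class 1 (damaged) near (0.9, 0.9).
X_train = np.array([[1.00, 0.99], [0.99, 1.00], [0.995, 0.995],
                    [0.90, 0.91], [0.91, 0.90], [0.905, 0.905]])
y_train = np.array([0, 0, 0, 1, 1, 1])
queries = np.array([[0.98, 0.985], [0.92, 0.915]])

ours = [knn_predict(X_train, y_train, q, k=3) for q in queries]

# Equivalent scikit-learn configuration: k = 3, uniform weights, leaf size 30.
clf = KNeighborsClassifier(n_neighbors=3, weights="uniform", leaf_size=30)
theirs = clf.fit(X_train, y_train).predict(queries).tolist()
print(ours, theirs)   # [0, 1] [0, 1]
```

The brute-force loop is only for clarity; scikit-learn uses tree structures (whose leaf size is the parameter above) to avoid computing every distance.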

Decision Tree and Random Forest

The decision tree supervised algorithm can target categorical variables, as in classifying a damaged or undamaged state, and continuous variables, as in a regression that compares the signal with the healthy state of the system [21]. Learning a decision tree means learning the sequence of if/else questions that leads to the true answer most quickly. A tree contains a root node, where the first test on the input feature(s) is made, and internal nodes, each asking a further question that splits the data. Each leaf, or terminal node, contains the answer. The process is repeated recursively until the leaf nodes are pure, i.e., contain samples of a single class [41].

In our application, the data take the form of binary features, and a classification procedure is performed. However, selecting the root node and the internal nodes is not trivial for multiple damage severity cases. The quality of the decision tree results depends on the attribute selection criterion, which is based on statistical measures of how relevant each attribute is for the classification. This work uses the Gini index [21], a well-known statistical dispersion index that measures the heterogeneity of the data. For a dataset S containing n records, each belonging to a class \(C_i\), the Gini index is given by Eq. (6):

\[ \mathrm{Gini}(S) = 1 - \sum_{i=1}^{K} p_i^2 \tag{6} \]

If the set S is partitioned into two or more subsets \(S_i\), the Gini index of the partitioned data is defined by

\[ \mathrm{Gini}_{\mathrm{split}}(S) = \sum_{i} \frac{n_i}{n}\, \mathrm{Gini}(S_i) \tag{7} \]

where \(p_i\) is the relative frequency of class \(C_i\) in S, n is the number of records in S, K is the number of classes, and \(n_i\) is the number of records in subset \(S_i\).
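
A direct transcription of the Gini index and of its size-weighted version for a partition, applied to a hypothetical node with four healthy (0) and four damaged (1) samples. The helper names gini and gini_split are illustrative.

```python
import numpy as np


def gini(labels):
    """Gini(S) = 1 - sum_i p_i^2, with p_i the relative frequency of class C_i in S."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - np.sum(p ** 2))


def gini_split(subsets):
    """Gini index of a partition: sum_i (n_i / n) * Gini(S_i)."""
    n = sum(len(s) for s in subsets)
    return sum(len(s) / n * gini(s) for s in subsets)


# Hypothetical node: four healthy (0) and four damaged (1) samples.
S = np.array([0, 0, 0, 0, 1, 1, 1, 1])
print(gini(S))                                         # 0.5, maximal for two balanced classes
print(gini_split([S[:4], S[4:]]))                      # 0.0, both children are pure
print(gini_split([S[[0, 1, 4]], S[[2, 3, 5, 6, 7]]]))  # about 0.467, a mixed split
```

A tree grown with scikit-learn's DecisionTreeClassifier, whose default criterion is "gini", picks at each node the candidate split with the lowest weighted index, so the pure split above would be preferred over the mixed one.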

The random forest ML algorithm is an ensemble classifier consisting of many decision trees, whose output class is the mode of the classes predicted by the individual trees. RF offers high prediction accuracy, robust stability, and good tolerance of noisy data, and, by the law of large numbers, it does not overfit as more trees are added. It has been used for structural damage detection and has performed well [43]. In this work, the forest contains one hundred trees and the maximum depth is three. The minimum sample split, i.e., the minimum number of samples required to split an internal node, is two. The minimum sample leaf, i.e., the minimum number of training samples required in each of the left and right branches after a split, is one. The maximum features parameter, which sets the number of features considered when looking for the best split, is set to 'auto' (for classifiers, the square root of the number of features).
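
The random forest settings listed above map onto scikit-learn's RandomForestClassifier as sketched below, on a synthetic stand-in dataset. Assuming a recent scikit-learn release, max_features='auto' is no longer accepted (it was deprecated and then removed); for classifiers it meant 'sqrt', so the sketch passes 'sqrt'. Note also that scikit-learn combines the trees by averaging their predicted class probabilities rather than by a strict majority vote.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data (4 features, 3 classes).
X, y = make_classification(n_samples=400, n_features=4, n_informative=3,
                           n_redundant=0, n_classes=3, random_state=1)

rf = RandomForestClassifier(
    n_estimators=100,      # number of trees in the forest
    max_depth=3,           # maximum depth of each tree
    min_samples_split=2,   # minimum samples needed to split an internal node
    min_samples_leaf=1,    # minimum samples required in each branch after a split
    max_features="sqrt",   # what 'auto' meant for classifiers
    random_state=0,
)
rf.fit(X, y)

# Prediction averages the class probabilities of the 100 fitted trees.
print(len(rf.estimators_), rf.predict(X[:5]))
```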

Support Vector Machine

Support vector machines are supervised machine learning techniques, developed from statistical learning theory, that can be used for classification and regression. In the case of linear classification with two classes, let \(\{(\mathbf{x}_1, y_1), \ldots, (\mathbf{x}_n, y_n)\}\) be a training dataset with n observations, where \(\mathbf{x}_i\) is an input vector and \(y_i \in \{+1, -1\}\) is the class label of \(\mathbf{x}_i\). The separating hyperplane (a straight line in two dimensions) divides the two classes with a margin (as seen in Fig. 5). The purpose of an SVM is to construct the hyperplane with the largest margin, defined as the distance between the hyperplane and the nearest points, termed support vectors, which lie on the marginal lines [45].

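The hyperplane can be defined by Eq. (8), the dot product between x and w plus the term b:

\[ \mathbf{w} \cdot \mathbf{x} + b = c \tag{8} \]

where x represents the points on the hyperplane, w is the weight vector that determines the orientation of the hyperplane, and b is the bias, or offset, of the hyperplane. With c = 0, the separating hyperplane lies midway between the two marginal hyperplanes with c = +1 and c = −1. The distance between these marginal hyperplanes is \(2/\lVert \mathbf{w} \rVert\), so the SVM maximizes the separation margin by minimizing \(\lVert \mathbf{w} \rVert\). This optimization problem can be written as the quadratic programming problem

\[ \min_{\mathbf{w},\,b} \; \frac{1}{2} \lVert \mathbf{w} \rVert^2 \quad \text{subject to} \quad y_i \left( \mathbf{w} \cdot \mathbf{x}_i + b \right) \ge 1, \quad i = 1, \ldots, n \tag{9} \]

where \(\lVert \mathbf{w} \rVert\) is the Euclidean norm of w.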
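
A quick numerical check of the margin relation, assuming a hypothetical, linearly separable two-class toy set: with a very large penalty C, scikit-learn's linear SVC approximates the hard-margin problem, so every training point should satisfy y_i(w·x_i + b) ≥ 1 up to solver tolerance, and the margin width is 2/‖w‖. For these symmetric points the optimum separates (2, 1.5) and (1.5, 2) from (4, 4) along the (1, 1) direction, giving a margin of 4.5/√2 ≈ 3.18.

```python
import numpy as np
from sklearn.svm import SVC

# Hypothetical linearly separable data with labels y_i in {+1, -1}.
X = np.array([[1.0, 1.0], [2.0, 1.5], [1.5, 2.0],
              [4.0, 4.0], [5.0, 4.5], [4.5, 5.0]])
y = np.array([-1, -1, -1, 1, 1, 1])

# A very large C approximates the hard-margin quadratic program.
svm = SVC(kernel="linear", C=1e3).fit(X, y)
w, b = svm.coef_[0], svm.intercept_[0]

margin = 2.0 / np.linalg.norm(w)   # distance between w.x + b = +1 and w.x + b = -1
functional = y * (X @ w + b)       # y_i (w.x_i + b), should be >= 1 for every point
print(margin, functional.min())
```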

The algorithm uses a linear kernel, and a grid search determined the penalty parameter C = 100. The multiclass strategy is one-versus-one.
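
The hyper-parameter setup can be sketched with scikit-learn's GridSearchCV on a synthetic four-class stand-in dataset. The candidate grid for C is an assumption, since the text reports only the selected value. scikit-learn's SVC always trains one-versus-one binary classifiers for multiclass problems; decision_function_shape="ovo" only exposes their raw scores, one column per class pair.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in data with four classes.
X, y = make_classification(n_samples=300, n_features=4, n_informative=3,
                           n_redundant=0, n_classes=4, random_state=2)

# Hypothetical candidate grid for the penalty parameter C; linear kernel as in the text.
grid = GridSearchCV(
    SVC(kernel="linear", decision_function_shape="ovo"),
    param_grid={"C": [0.1, 1, 10, 100]},
    cv=5,
)
grid.fit(X, y)
print(grid.best_params_)

# One-versus-one with 4 classes: 4 * 3 / 2 = 6 pairwise decision scores per sample.
print(grid.best_estimator_.decision_function(X[:2]).shape)   # (2, 6)
```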

Naive Bayes

Naive Bayes methods are a set of probabilistic classification algorithms based on Bayes' theorem, with the assumption of independence between attributes. It is a simple technique for building classifiers: the models assign class labels, drawn from a finite set, to problem instances represented as vectors of attribute values. Naive Bayes classifiers work very well in many real situations and require only a small amount of training data to estimate their parameters. This work adopts the Gaussian variant, which implements the Gaussian Naive Bayes algorithm for classification with the feature likelihood [46]

\[ P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_y^2}} \exp\left( -\frac{(x_i - \mu_y)^2}{2\sigma_y^2} \right) \tag{10} \]

where \(\sigma_y\) and \(\mu_y\) are estimated by maximum likelihood. Naive Bayes classifiers are highly scalable, requiring a number of parameters linear in the number of variables (features) of a learning problem. Maximum-likelihood training can be done by evaluating a closed-form expression [47].

Damage Assessment in a Numerical Simulated Beam

Generating a reliable dataset to feed the machine learning algorithms starts with defining and identifying the problem; in our case, monitoring a cantilevered beam. The data are based on the modal parameters of the structure (natural frequency and frequency response), normalized into damage indices. Figure 6 outlines the methodology of this work step by step. It starts with acquiring the dynamic response, in this analysis through a simulation interface, followed by estimating the damage indices to generate the database for the ML algorithms. The next step is training and testing the algorithms to identify and quantify the structural damage. The last step evaluates the structural monitoring performed by the ML algorithms.

Dataset Based on Damage Index

Damage detection methods have been applied to locate and quantify structural damage through changes in the system's feature signature, e.g., changes in its dynamic characteristics. When a crack propagates in a structure, it modifies the local stiffness, damping, and mass, altering the system's dynamic response and modal parameters. These changes in dynamic characteristics can therefore be used as damage indicators when compared with the original (reference) signal. Hence, damage indices based on the beam's natural frequencies and FRF are used for damage detection.

The simulated system is a cantilever beam modeled by the spectral element method, briefly described in Appendix A. The beam is excited by a unit force applied at the free end, and the inertance response function is obtained at the same point, as shown in Fig. 7. The beam has a length L = 1 m, a width of 0.01 m, and a height of 0.03 m. The crack is located at L1 = 0.5L, and its depth varies from 5 to 35% of the beam's cross section. The material properties are a Young's modulus of 210 GPa and a mass density of 7800 kg/m3. A structural crack reduces the system's stiffness, shifting the resonance frequencies, and can affect different mode shapes depending on its location. Figure 7 (left-hand side) shows the effect of cracks of different severity on the beam's dynamic response; in this case, the fourth, fifth, and sixth modes were the most affected by the damage. The FRFs and the natural frequencies estimated from the dynamic response are then used to calculate the DIs.
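
As a sanity check on the beam parameters, the intact cantilever's natural frequencies can be computed with Euler-Bernoulli theory, assumed here in place of the spectral element model. With E = 210 GPa, ρ = 7800 kg/m3, a 0.01 m × 0.03 m section bending about its width axis, and L = 1 m, the fourth to sixth frequencies come out at about 865, 1429, and 2135 Hz, within about 1 Hz of the undamaged values quoted for Dataset 1; a modulus of 2.1 GPa would give frequencies ten times lower. The helper functions are illustrative.

```python
import math


def bisect(f, a, b, tol=1e-12):
    """Simple bisection for a root of f bracketed by [a, b]."""
    fa = f(a)
    while b - a > tol:
        m = 0.5 * (a + b)
        fm = f(m)
        if (fa < 0) == (fm < 0):
            a, fa = m, fm
        else:
            b = m
    return 0.5 * (a + b)


def cantilever_frequencies(E, rho, L, width, height, n_modes):
    """Euler-Bernoulli natural frequencies (Hz) of an intact cantilever beam."""
    I = width * height ** 3 / 12.0   # second moment of area (bending about the width axis)
    A = width * height               # cross-sectional area
    char_eq = lambda x: 1.0 + math.cos(x) * math.cosh(x)   # 1 + cos(bL) cosh(bL) = 0
    freqs = []
    for n in range(1, n_modes + 1):
        guess = (2 * n - 1) * math.pi / 2   # the n-th root lies close to this value
        beta_L = bisect(char_eq, guess - 0.5, guess + 0.5)
        freqs.append(beta_L ** 2 / (2 * math.pi * L ** 2) * math.sqrt(E * I / (rho * A)))
    return freqs


f = cantilever_frequencies(E=2.1e11, rho=7800.0, L=1.0, width=0.01, height=0.03, n_modes=6)
print([round(fi) for fi in f[3:6]])   # fourth, fifth, and sixth modes
```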

The damage index (DI) is formulated by comparing a reference signal, usually obtained from the system considered undamaged (its healthy signature), with the signal from the system in the presence of discontinuities or damage [49, 50]. Various DI approaches have been developed to extract signal features in different domains for structural damage identification, based on an indicator that describes the damage. DIs are associated with estimation techniques for damage quantification and reveal important information about the structural health condition. Here, the DI takes values between zero and one, where one indicates no damage; lower values, down to zero, indicate the presence of a crack and its severity within the analysis scenario. This work uses the DI as structural information for the training and testing data of the multiclass ML algorithms.

Dataset 1: DI Estimated from Natural Frequency

Numerous methods use natural frequency changes to detect structural anomalies and damage. Structural damage reduces the local stiffness and induces a natural frequency shift [51]. A DI based on natural frequency, described in [52], relates the natural frequencies of the undamaged system to those of the damaged state. It is used here as an indicator to classify the structure's integrity.

Equation (11) compares the natural frequencies of the damaged (\({\omega }_{i}^{d}\)) and undamaged (\({\omega }_{i}^{u}\)) beam. The dataset was built using three natural frequencies of the beam, which for the undamaged state are ω4 = 865 Hz, ω5 = 1430 Hz, and ω6 = 2136 Hz, corresponding to the fourth, fifth, and sixth modes. Random DI values were generated to train and test the ML algorithms, with 160 samples for each crack severity. The crack flexibility used to model the crack was treated as a random variable with a normal distribution and a 10% coefficient of variation. Table 1 lists the damaged beam's natural frequencies for crack depths of 5, 10, 15, 20, 25, 30, and 35% and the respective DIs.

The dataset consists of three natural frequencies (ω4, ω5, and ω6) for the undamaged and damaged beams covering all crack depths, four groups of damage index samples (DI1,2,3,4), and the multiclass labels, which relate to the damage severity. Figure 8 shows scatter plots correlating the DIs for crack depths of 5, 10, 15, and 20%. Specifically, Fig. 8a and d show the correlation between DI1 and DI2, Fig. 8b and e between DI3 and DI2, and Fig. 8c and f between DI4 and DI2, all calculated for the fourth and fifth modes. From the point clouds, the crack severity cannot be clearly distinguished up to 10%, while for crack depths of 15 and 20% the points spread over the DI range. Therefore, it is not clear a priori whether the ML algorithms can classify the damage severity correctly. A DI between 0.98 and 1 is considered a healthy state of the structure, and lower DI values indicate a damaged condition.

Figure 9 shows scatter plots of the correlated dataset obtained for the damaged beam with crack depths of 25, 30, and 35%. Figure 9a displays the correlation between DI2 and DI1 calculated with the fourth natural frequency, Fig. 9d with the fifth, and Fig. 9g with the sixth. For crack depths of 25, 30, and 35%, the DIs tend to gather around 0.97, 0.95, and 0.92, respectively. Still, false-positive estimates can occur in the prognosis because the point clouds are dense and overlap for all natural frequencies. Hence, following the DI values, the dataset was labeled into four classes: health, 25-Damage, 30-Damage, and 35-Damage. DIs higher than 0.98, corresponding to crack severities between 1 and 20%, were assumed to represent the healthy condition.
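The labeling rule for Dataset 1 can be written as a small function. This is a sketch: the sample layout (an undamaged group plus the seven crack depths, each with 160 samples) is a hypothetical reading of the dataset description, and `label_from_depth` is an illustrative name.

```python
from collections import Counter

HEALTHY_LIMIT_PCT = 20  # crack depths up to this value (DI above ~0.98) are labelled healthy


def label_from_depth(depth_pct: int) -> str:
    """Class label for Dataset 1 following the rule stated in the text."""
    return "health" if depth_pct <= HEALTHY_LIMIT_PCT else f"{depth_pct}-Damage"


# Hypothetical sample layout: undamaged (0%) plus the seven crack depths, 160 samples each.
depths = [0, 5, 10, 15, 20, 25, 30, 35]
labels = [label_from_depth(d) for d in depths for _ in range(160)]
print(Counter(labels))
```

Under this assumed layout, the health class holds five times as many samples as each damaged class.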

Dataset 2: DI Estimated from the FRF

The frequency response function has also been used to detect structural damage and calculate damage indices. This work uses the Frequency Response Assurance Criterion (FRAC) [53]. FRAC is a damage index that correlates FRF signals: unity indicates a strong correlation and thus no damage, while lower values, down to zero, indicate damage and its severity. Equation (12) defines the FRAC, which compares the FRF of the cracked beam (\(H_{ij}^{d}\)) with that of the healthy beam (\(H_{ij}^{u}\)). Because a crack directly influences the system's vibration, the DI can detect and quantify the damage.

\[ \mathrm{FRAC}_{ij} = \frac{\left| \sum_{\omega=\omega_1}^{\omega_N} H_{ij}^{d}(\omega)\, H_{ij}^{u*}(\omega) \right|^{2}}{\sum_{\omega=\omega_1}^{\omega_N} H_{ij}^{d}(\omega)\, H_{ij}^{d*}(\omega) \; \sum_{\omega=\omega_1}^{\omega_N} H_{ij}^{u}(\omega)\, H_{ij}^{u*}(\omega)} \tag{12} \]

where * denotes the complex conjugate, the excitation is applied at the jth coordinate, and the response is measured at the ith coordinate. The index compares the FRFs of the cracked and pristine beams over the whole frequency band and therefore uses the energy information of the entire spectrum.
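A direct NumPy transcription of the FRAC can help when reproducing the DIs. The function name `frac` and the single-degree-of-freedom inertance used to exercise it are illustrative assumptions, not the beam model of the paper. Identical FRFs give a FRAC of 1, and a stiffness reduction lowers it, consistent with the interpretation of the index given above.

```python
import numpy as np


def frac(h_d: np.ndarray, h_u: np.ndarray) -> float:
    """FRAC: h_d (damaged) and h_u (reference) are complex FRFs sampled on the
    same frequency lines for the same (i, j) coordinate pair."""
    cross = np.sum(h_d * np.conj(h_u))
    energy_d = np.sum(h_d * np.conj(h_d)).real
    energy_u = np.sum(h_u * np.conj(h_u)).real
    return float(np.abs(cross) ** 2 / (energy_d * energy_u))


# Hypothetical single-degree-of-freedom inertance, used only to exercise frac().
freq = np.linspace(1.0, 200.0, 2000)            # [Hz]
w = 2.0 * np.pi * freq                          # [rad/s]
m, c, k = 1.0, 5.0, (2.0 * np.pi * 100.0) ** 2  # resonance near 100 Hz


def inertance(stiffness: float) -> np.ndarray:
    """Acceleration/force FRF of a mass-spring-damper."""
    return -w**2 / (stiffness - m * w**2 + 1j * c * w)


h_u = inertance(k)
h_d = inertance(0.95 * k)  # 5% stiffness loss standing in for a crack
print(frac(h_u, h_u), frac(h_d, h_u))
```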

The FRAC DIs were calculated using the beam's FRFs under undamaged and damaged conditions for cracks of 10, 15, 20, 25, and 30% of the beam cross section. In this simulation, 3% white noise was added to the FRFs to investigate the robustness of the ML algorithms in damage detection. Figure 10a–f shows the correlation between FRAC DI2 and DI1,3,4 obtained with the dataset containing 240 samples. The FRAC DIs separate the point clouds by crack severity better than the natural-frequency DIs: each crack depth clusters around its own DI range. Therefore, the ML algorithms are expected to classify the damage severity correctly. A DI between 0.98 and 1 is considered a healthy state of the structure, and crack depths of 10, 15, 20, 25, and 30% are associated with DIs of about 0.95, 0.8, 0.7, 0.6, and 0.5, respectively. Following the DI values, the multiclass dataset was labeled health, 10-Damage, 15-Damage, 20-Damage, 25-Damage, and 30-Damage.
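The text does not say how the 3% white noise was scaled. The sketch below assumes, hypothetically, zero-mean Gaussian noise on the real and imaginary parts with a standard deviation of 3% of |H| at each frequency line, and shows how repeated noisy realizations of a damaged FRF turn into a cloud of FRAC samples (240, matching the dataset size quoted above). The mass-spring-damper FRFs are stand-ins for the beam FRFs, and `add_noise` is an illustrative helper.

```python
import numpy as np

rng = np.random.default_rng(0)


def frac(h_d: np.ndarray, h_u: np.ndarray) -> float:
    """FRAC of two complex FRFs; np.vdot(a, b) computes sum(conj(a) * b)."""
    return float(np.abs(np.vdot(h_u, h_d)) ** 2
                 / (np.vdot(h_d, h_d).real * np.vdot(h_u, h_u).real))


def add_noise(h: np.ndarray, level: float = 0.03) -> np.ndarray:
    """Hypothetical noise model: zero-mean Gaussian noise on the real and imaginary
    parts, with standard deviation `level` times |H| at each frequency line."""
    noise = rng.standard_normal(h.shape) + 1j * rng.standard_normal(h.shape)
    return h + level * np.abs(h) * noise


# Hypothetical mass-spring-damper inertance (m = 1) standing in for the beam FRF.
w = 2.0 * np.pi * np.linspace(1.0, 200.0, 2000)
k = (2.0 * np.pi * 100.0) ** 2
h_u = -w**2 / (k - w**2 + 5j * w)            # reference (undamaged)
h_d = -w**2 / (0.95 * k - w**2 + 5j * w)     # damaged: 5% stiffness loss

# 240 noisy realizations of the damaged FRF give a cloud of FRAC DI samples.
di_clean = frac(h_d, h_u)
di_noisy = np.array([frac(add_noise(h_d), h_u) for _ in range(240)])
print(f"noise-free DI: {di_clean:.3f}; noisy DI: {di_noisy.mean():.3f} +/- {di_noisy.std():.3f}")
```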

Beam Damage Assessment

The structural monitoring is performed on cantilever beam datasets 1 and 2, described in "Dataset based on damage index", using kNN, SVM, Decision Tree, RF, and Naive Bayes. Each algorithm has hyper-parameters that must be set and tested to achieve good performance in the application at hand. In [59], the best hyper-parameters of each algorithm for damage assessment were investigated. For the SVM algorithm, linear, rbf, and poly kernels were tested. For kNN, the Euclidean, Bray–Curtis, Manhattan, and cosine metrics were tested. The Gini and entropy criteria were used in the RF and DT algorithms. For Naive Bayes, the Gaussian, Bernoulli, and multinomial variants were tested. According to that study, the linear SVM, Euclidean kNN, Gini-index RF and DT, and Gaussian Naive Bayes gave the best evaluation metrics.
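The hyper-parameter screening described above can be sketched with scikit-learn's `GridSearchCV` and `cross_val_score`. The data are synthetic DI-like clusters, not the paper's datasets, and the scaler in the SVM pipeline is an addition of this sketch; the candidate kernels, metrics, criteria, and Naive Bayes variants are those listed in the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(42)

# Hypothetical DI-like data: 4 classes x 160 samples x 2 features in [0, 1].
levels = [0.99, 0.97, 0.95, 0.92]
X = np.vstack([np.clip(rng.normal(lv, 0.01, size=(160, 2)), 0.0, 1.0) for lv in levels])
y = np.repeat(np.arange(len(levels)), 160)

searches = {
    # Scaling keeps the poly/rbf kernels well conditioned on narrow-range DIs.
    "SVM": GridSearchCV(make_pipeline(StandardScaler(), SVC()),
                        {"svc__kernel": ["linear", "rbf", "poly"]}, cv=5),
    "kNN": GridSearchCV(KNeighborsClassifier(algorithm="brute"),
                        {"metric": ["euclidean", "braycurtis", "manhattan", "cosine"]}, cv=5),
    "DT": GridSearchCV(DecisionTreeClassifier(random_state=0),
                       {"criterion": ["gini", "entropy"]}, cv=5),
    "RF": GridSearchCV(RandomForestClassifier(random_state=0),
                       {"criterion": ["gini", "entropy"]}, cv=5),
}
for name, search in searches.items():
    search.fit(X, y)
    print(name, search.best_params_, f"{search.best_score_:.3f}")

# The Naive Bayes variants are separate estimators, so compare them directly.
# BernoulliNB binarizes features at 0.0 by default, which discards the DI information here.
for nb in (GaussianNB(), BernoulliNB(), MultinomialNB()):
    print(type(nb).__name__, f"{cross_val_score(nb, X, y, cv=5).mean():.3f}")
```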

Table 2 summarizes the hyper-parameters and features of each ML algorithm. For the SVM with linear kernel, a grid search was used to set the penalty parameter to C = 100. The multiclass strategy is one-against-one. The tolerance for the stopping criterion is 1e−3, which is sufficient to satisfy the error criterion. For kNN, the number of neighbors is k = 3 and the metric is Euclidean. The weight function is uniform, meaning that all points in each neighborhood are weighted equally, and the leaf size, which affects the speed of tree construction and queries, is 30. For RF, the number of trees in the forest is 100; for both RF and DT, the maximum depth is 3. The minimum samples split is 2, the minimum number of samples required to split an internal node. The minimum samples leaf, the minimum number of training samples required at each leaf (i.e., in each of the left and right branches after a split), is 1. The max features parameter, the number of features to consider when searching for the best split, is set to 'auto', and the Gini index is used as the split criterion. For Naive Bayes, the Gaussian variant is used.
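The Table 2 settings map onto scikit-learn estimators roughly as follows. This is a sketch under two assumptions: `max_features="sqrt"` replaces `"auto"`, which was deprecated in scikit-learn 1.1 and is not accepted by newer releases (for classifiers the two were equivalent), and the tree settings shared by DT and RF are taken from the paragraph above. `build_classifiers` is an illustrative helper name.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def build_classifiers(random_state: int = 0) -> dict:
    """Classifiers configured with the hyper-parameters described for Table 2."""
    tree_params = dict(criterion="gini", max_depth=3, min_samples_split=2,
                       min_samples_leaf=1,
                       # 'auto' meant 'sqrt' for classifiers and is no longer accepted.
                       max_features="sqrt", random_state=random_state)
    return {
        # SVC always trains one-vs-one internally; 'ovo' also exposes OvO decision values.
        "SVM": SVC(kernel="linear", C=100, tol=1e-3, decision_function_shape="ovo"),
        "kNN": KNeighborsClassifier(n_neighbors=3, metric="euclidean",
                                    weights="uniform", leaf_size=30),
        "DT": DecisionTreeClassifier(**tree_params),
        "RF": RandomForestClassifier(n_estimators=100, **tree_params),
        "NB": GaussianNB(),
    }


classifiers = build_classifiers()
for name, clf in classifiers.items():
    print(name, clf)
```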

Figure 3 shows the flowchart of the ML algorithm used to identify and quantify the damage. The process starts with dataset extraction, presented in "Dataset based on damage index", followed by splitting the data into training (80%) and testing (20%) sets and applying the ML algorithm to classify the data and provide information on damage identification and quantification. All ML damage-detection implementations use scikit-learn, a machine learning library for Python. Damage prediction is performed with the DIs extracted from the natural frequencies and with the FRAC, to compare the efficiency of the ML algorithms in each approach. After the ML algorithm completes its estimation, the stability and accuracy of the model must be assessed. This validation confirms the quantified relationships between variables and is carried out by examining metrics such as accuracy, precision, recall, and F1 score. However, these metrics alone reflect the model's performance on one particular split of the available data. Cross-validation on separate subsets of the data is therefore necessary to ensure that the model captures the underlying patterns in the data; consistent validation results indicate a model with low bias and variance. In the damage assessment, both validation and cross-validation of the ML algorithms are explored. The damage detection capability of the ML algorithms is compared through their accuracy and confusion matrices, with accuracy close to 100% considered good performance. In all cases presented in this paper, cross-validation over five data folds gave a standard deviation of about 5%, indicating stable model performance.
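The train/test/cross-validation workflow described above can be sketched as follows. The dataset is synthetic, only two of the five classifiers are shown for brevity, and stratified splitting is an assumption not stated in the text.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(1)

# Hypothetical DI dataset: four classes, 160 samples each, two DI features.
classes = ["health", "25-Damage", "30-Damage", "35-Damage"]
levels = [0.99, 0.97, 0.95, 0.92]
X = np.vstack([rng.normal(lv, 0.01, size=(160, 2)) for lv in levels])
y = np.repeat(classes, 160)

# 80% training / 20% testing; stratification is an assumption of this sketch.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

results = {}
for name, model in {"kNN": KNeighborsClassifier(n_neighbors=3), "NB": GaussianNB()}.items():
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    cm = confusion_matrix(y_test, y_pred, labels=classes)  # rows: true, cols: predicted
    cv_scores = cross_val_score(model, X, y, cv=5)         # five-fold cross-validation
    results[name] = (accuracy_score(y_test, y_pred), cm, cv_scores)
    print(f"{name}: test accuracy {results[name][0]:.2f}, "
          f"CV {cv_scores.mean():.2f} +/- {cv_scores.std():.2f}")
    print(cm)
```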

Beam Damage Assessment Using DI Natural Frequency

Damage quantification using the natural-frequency DI considered the undamaged beam and the damaged beam with crack severities of 25, 30, and 35%, giving four classes for damage identification. The classification results on the test data are evaluated with accuracy, precision, recall, and F1 score, shown in Table 3. Precision is the fraction of samples predicted as a given class that truly belong to it; recall is the fraction of samples of a given class that the model correctly identifies; and the F1 score is the harmonic mean of precision and recall. The damage detection accuracy varies between 59 and 94% among the ML algorithms for the three natural-frequency DIs, ω4, ω5, and ω6. The NB algorithm had the highest metrics in all cases, reaching 90, 81, and 91% for DI(ω4), DI(ω5), and DI(ω6), respectively. SVM, kNN, RF, and Decision Tree present good metric values in detecting the beam structural condition using DI(ω4) and DI(ω6). Using DI(ω5), the SVM performed worst, with an error of around 40%. Precision, recall, and F1 score followed the accuracy results, validating the algorithms' damage estimation. The cross-validation accuracy varies from 56 to 88% and follows the trend of the validation metrics.
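To make the metric definitions concrete, the sketch below computes per-class precision, recall, F1 score, and accuracy from a confusion matrix built from hypothetical predictions, and cross-checks them against scikit-learn's `precision_recall_fscore_support`.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_recall_fscore_support

# Hypothetical predictions for three classes, five test samples each.
labels = ["health", "25-Damage", "30-Damage"]
y_true = ["health"] * 5 + ["25-Damage"] * 5 + ["30-Damage"] * 5
y_pred = (["health"] * 4 + ["25-Damage"]        # one healthy sample flagged as damaged
          + ["25-Damage"] * 5
          + ["30-Damage"] * 4 + ["health"])     # one damaged sample missed

cm = confusion_matrix(y_true, y_pred, labels=labels)  # rows: true, cols: predicted
tp = np.diag(cm).astype(float)
precision = tp / cm.sum(axis=0)   # correct / all predicted as that class
recall = tp / cm.sum(axis=1)      # correct / all truly in that class
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of P and R
accuracy = tp.sum() / cm.sum()    # trace / total

# Cross-check against scikit-learn's per-class values.
p_sk, r_sk, f_sk, _ = precision_recall_fscore_support(y_true, y_pred, labels=labels)
assert np.allclose(precision, p_sk) and np.allclose(recall, r_sk) and np.allclose(f1, f_sk)
print(cm)
print(f"accuracy = {accuracy:.3f}")   # 13 of 15 correct
```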

Figure 11a–o shows the confusion matrices containing the counts and percentages predicted by the ML techniques: Fig. 11a–c for SVM, Fig. 11d–f for kNN, Fig. 11g–i for Naive Bayes, Fig. 11j–l for RF, and Fig. 11m–o for Decision Tree. The accuracy of the SVM algorithm for DI(ω4) (Fig. 11a) reached 84% because of misclassifications in the 30-damage condition, including a sample assigned to 35-damage, and four undamaged samples rated as 25-damage. For kNN with DI(ω6) (Fig. 11f), the accuracy was 88% because of the following misclassifications: one 25-damage sample assigned to the undamaged class, one 35-damage sample classified as 30-damage, and two 35-damage samples classified as undamaged.

In the confusion matrix of Fig. 11g, the NB algorithm reached 91% accuracy for DI(ω4); the errors were one 25-damage sample classified as undamaged and two undamaged samples rated as 25-damage. The best accuracy was therefore achieved with the DI(ω4) data, and NB was the most robust algorithm in this case study.

Beam Damage Assessment Using FRAC DI

Damage assessment using the FRAC DI considered the undamaged beam and the damaged beam with crack severities of 10, 15, 20, 25, and 30%, giving six classes for damage identification. All ML techniques achieved good damage detection and quantification according to the accuracy, precision, recall, and F1 score metrics. However, SVM and kNN detected the damage and its severity most efficiently compared with the other methods (Table 4): SVM reached 100% accuracy with and without noise, and kNN reached 100% for the noise-free DI and 94% for the DI with 3% noise.

The confusion matrix gives detailed information about how the ML classifiers label the beam's structural condition. Figure 12 shows the multiclass confusion matrices for the beam dataset without noise and with 3% noise: Fig. 12a and b for SVM, Fig. 12c and d for kNN, Fig. 12e and f for Naive Bayes, Fig. 12g and h for RF, and Fig. 12i and j for Decision Tree. The diagonal of each matrix holds the correctly classified samples; accordingly, the accuracy of SVM and kNN for the noise-free FRAC DI is 100%. The Decision Tree reached 92% accuracy because of misclassifications in the 25-damage condition, with one sample assigned to 20-damage and two samples assigned to 30-damage. Overall, all algorithms are considered robust for the datasets with and without noise. For all algorithms, the cross-validation standard deviation is 5%.

Damage Assessment in a Physical Beam Reinforced with Masses

The physical structure is a beam with attached masses that act as reinforcement along its length. The experimental setup, shown in Fig. 13(top), consists of a steel cantilever beam of length L = 0.38 m, width 0.0254 m, and height 0.00475 m. The material properties are Young's modulus 210 GPa and density 7800 kg/m3. The reinforcement consists of six neodymium magnets, which make up 10.41% of the total mass of the reinforced beam (429.37 g). The beam is excited near the clamped end with an impact hammer (PCB 086C03), and the acceleration response is acquired at the free end by an accelerometer (PCB 353B03). The acquisition system is PolytecSoft, which provides the inertance FRFs.

Damage in the structure is represented by the loss of reinforcement masses. Two hundred and eighty measurements were performed on the beam, covering healthy and damaged conditions. In each measurement, the six masses are placed at different positions along the beam, as shown in Fig. 13 (bottom). The mass positions are treated as random variables following a uniform distribution, whose mean is the deterministic position of each mass shown in Fig. 14a and whose coefficient of variation is 10%.
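One reading of the position randomization is that each mass position is uniformly distributed about its deterministic value with a 10% coefficient of variation. Since a uniform variable on [μ − a, μ + a] has standard deviation a/√3, this gives a half-width a = √3 · 0.1 · μ. The sketch below samples positions under that assumption; `sample_positions` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(7)

nominal = np.array([5.0, 10.0, 15.0, 20.0, 25.0, 30.0])  # deterministic positions L1..L6 [cm]
cov = 0.10                                               # coefficient of variation

# U(mu - a, mu + a) has standard deviation a / sqrt(3), so CoV = 0.1 needs a = sqrt(3) * 0.1 * mu.
half_width = np.sqrt(3.0) * cov * nominal


def sample_positions(n_samples: int) -> np.ndarray:
    """Draw n_samples sets of six mass positions [cm], one row per measurement."""
    return rng.uniform(nominal - half_width, nominal + half_width,
                       size=(n_samples, nominal.size))


positions = sample_positions(10_000)
print(positions.mean(axis=0).round(2))              # close to the nominal positions
print((positions.std(axis=0) / nominal).round(3))   # close to 0.10
```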

Figure 14 shows the undamaged structure and the three damaged states of the reinforced beam, defined by the loss of reinforcement masses. Figure 14a shows a schematic of the experiment with the beam in the healthy condition. The six masses are spaced 5 cm apart starting from the clamped end: masses 1, 2, 3, 4, 5, and 6 are at the deterministic positions L1 = 5 cm, L2 = 10 cm, L3 = 15 cm, L4 = 20 cm, L5 = 25 cm, and L6 = 30 cm, respectively. Figure 14b shows the first damaged condition, with a mass loss of 2.96% of the total mass, where the mass at position 1 was removed. Figure 14c shows the second damaged condition, with a mass loss of 5.92% of the total mass, where the masses at positions 1 and 3 were removed. Figure 14d shows the third damaged condition, with a mass loss of 8.84% of the total mass, where the masses at positions 1, 3, and 5 were removed. A total of 280 FRF samples were measured across all beam conditions.

Loss of reinforcement mass reduces the beam's stiffness and mass, changing the resonance frequencies and the dynamic response. Figure 15 shows the effect of gradual mass loss on the inertance of the beam with the masses at random positions: Fig. 15a for the undamaged beam, Fig. 15b for the damaged beam with a mass loss of 2.96% of the total mass, Fig. 15c with 5.92%, and Fig. 15d with 8.84%. As in the numerical case, damage mainly influences the dynamic response of the physical beam at higher frequencies. Hence, the DI calculation uses a frequency band containing the second, third, and fourth modes, which are the most affected by the structural damage. The dataset used in this paper is available in [60].

Fig. 15 Experimentally measured inertance FRFs of the beam with reinforcement masses. The blue curve corresponds to the deterministic mass positions and the gray curves include randomness in the mass positions. a Undamaged beam FRFs; b damaged beam FRFs with mass loss of 2.96% of total mass; c damaged beam FRFs with mass loss of 5.92% of total mass; d damaged beam FRFs with mass loss of 8.84% of total mass

DI Estimated from Experimental Dataset

The changes in the dynamic characteristics of the beam reinforced with masses can be used as damage indicators. The numerical study showed that the FRAC (Eq. 12) is the better damage indicator because it uses the spectral energy of the whole response signal. Hence, only the FRAC is used to calculate the DIs from the experimental dataset. The DIs are estimated by correlating the FRFs of the undamaged and damaged beam, with removal of 2.96, 5.92, and 8.84% of the total mass of the beam. The FRF of the undamaged beam with the masses at their deterministic positions is taken as the reference signal and correlated with the FRFs of the undamaged and damaged beams with random mass locations.

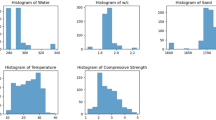

In the experimental case, the challenge was to assess damage levels ranging from 2.96 to 8.84%. Monitoring damage of this magnitude with vibration-based responses is difficult because the perturbation of the mode shapes is minimal. The dataset pre-processing and DI clustering were performed with the k-means algorithm combined with data balancing. Figure 16 shows a scatter plot of the correlation between FRAC DI1 and FRAC DI2 obtained with the dataset containing 280 samples. The DI values cluster around 0.1 and 0.9, showing that the DI values are highly correlated with each other, which can lead to false-positive estimates by the ML algorithms. The DI values of the multiclass dataset were labeled undamaged, 2.96-damage, 5.92-damage, and 8.84-damage, and these form the input data of the ML algorithms.
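The k-means clustering and data balancing step is not described in detail, so the sketch below is an assumption-laden illustration: scikit-learn's `KMeans` groups hypothetical (DI1, DI2) pairs, and balancing is done by random undersampling to the smallest class, which is only one possible interpretation of "data balancing". The 280-sample total mirrors the experimental dataset size; the DI values themselves are illustrative.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(3)

# Hypothetical (DI1, DI2) pairs for four beam conditions with unequal class sizes.
centres = np.array([[0.95, 0.95], [0.80, 0.85], [0.60, 0.70], [0.40, 0.55]])
counts = [100, 60, 60, 60]                       # 280 samples in total
X = np.vstack([rng.normal(c, 0.03, size=(n, 2)) for c, n in zip(centres, counts)])
y = np.repeat(np.arange(len(counts)), counts)    # 0 = undamaged, 1-3 = damage levels

# Unsupervised grouping of the DI cloud into four clusters.
km = KMeans(n_clusters=4, n_init=10, random_state=0).fit(X)
print(np.round(km.cluster_centers_, 2))

# Balancing by random undersampling every class to the size of the smallest one
# (an assumed strategy; the text does not say how the data were balanced).
n_min = np.bincount(y).min()
keep = np.concatenate([rng.choice(np.flatnonzero(y == k), n_min, replace=False)
                       for k in range(len(counts))])
X_bal, y_bal = X[keep], y[keep]
print(np.bincount(y_bal))                        # equal counts per class
```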

Beam with Reinforced Mass Damage Evaluation

Once the FRFs are acquired, the DIs are estimated and assembled into the database used as input for the ML algorithms that monitor the structure. Figure 17 illustrates the structure monitoring methodology via machine learning. The process starts with the acquisition of the structure's dynamic response, which in this section comes from experimental tests, followed by the estimation of the DIs, verification of their normalization, and generation of the database that feeds the ML algorithms. The last step is training and testing the algorithms and using the test data to identify and quantify damage in the structure.

The quantification of the experimental damage using the FRAC DI considered the undamaged beam and the damaged beam with mass losses of 2.96, 5.92, and 8.84% of the total mass. Each beam condition was linked to one of four classes for damage identification. Table 5 compares the damage detection validation metrics, which range from 79 to 95% across the ML algorithms, with a cross-validation standard deviation of 5%. The kNN and SVM algorithms performed better than the others, reaching an accuracy of 93%, precision of 95%, recall of 91%, and F1 score of 93%. All algorithms reached more than 79% accuracy, which is considered a good value for an experimental dataset.

Figure 18 shows the confusion matrices containing the counts and percentages predicted by the ML techniques: Fig. 18a–e correspond to SVM, kNN, NB, RF, and DT. Most samples were correctly classified, except with the DT algorithm. kNN and SVM present the highest accuracy among the algorithms, 95%, with the following misclassifications: for the 8.84-damage condition, two samples assigned to 5.92-damage; for the 2.96-damage condition, four samples assigned to 5.92-damage; and for the undamaged condition, one sample assigned to 5.92-damage and one to 8.84-damage. The confusion matrices of the other algorithms followed the classification trend of kNN. The ML techniques could identify the damaged condition of the structure. The hyper-parameters of each algorithm could be further tuned to reduce the classification error and improve the metrics. In this experimental case, in addition to the hyper-parameter study, the k-means algorithm was used to cluster the DIs.

Conclusion

This study investigated the effectiveness of five supervised ML techniques (kNN, SVM, NB, RF, and DT) in detecting damage in a cantilever beam using vibration-based signatures. The study evaluated the metrics of each ML algorithm using two vibration-based signatures, natural frequency and FRF, for both numerical simulation and experimental evaluation. The results showed that the FRAC DI outperformed the natural-frequency DI in the numerical simulation, and that NB accurately detected and quantified damage when applied to the natural-frequency DI. With the FRAC DI, SVM detected and quantified damage with and without noise. For the experimental tests, the accuracy reached 95%, although this value may be affected by the noise in the experimental data samples. Cross-validation indicated that the ML algorithms capture the data pattern with up to 95% accuracy. Overall, the findings suggest that combining ML techniques with vibration-based signatures can effectively detect damage in the structure analyzed. Future studies with larger sample sizes, along with optimization of the algorithms' hyper-parameters, could help improve the ML metrics on experimental data.

Data availability

The dataset used in this paper is available in [60].

References

Léonard F, Lanteigne J, Lalonde S et al (2001) Free-vibration behavior of a cracked cantilever beam and crack detection. Mech Syst Signal Process 15:529–548

Farrar CR, Worden K (2013) Structural health monitoring: a machine learning perspective. Wiley, Hoboken

da Silva MFM (2017) Machine learning algorithms for damage detection in structures under changing normal conditions. Dissertation (Masters), Federal University of Pará, Institute of Technology, Postgraduate Program in Electrical Engineering, Belém, Brazil

Rytter A (1993) Vibrational based inspection of civil engineering structures. Doctoral dissertation, Dept. of Building Technology and Structural Engineering, Aalborg University

Machado MR, Adhikari S, Dos Santos JMC (2017) A spectral approach for damage quantification in stochastic dynamic systems. Mech Syst Signal Process 88:253–273. https://doi.org/10.1016/j.ymssp.2016.11.018

Kurian B, Liyanapathirana R (2020) Machine learning techniques for structural health monitoring. Lecture notes in mechanical engineering. Springer, Singapore. https://doi.org/10.1007/978-981-13-8331-1_1

Sun Z, Santos J, Caetano E (2022) Vision and support vector machine-based train classification using weigh-in-motion data. J Bridg Eng 27(6):1–8. https://doi.org/10.1061/(asce)be.1943-5592.0001878

Iyer S, Velmurugan T, Gandomi AH, Noor Mohammed V, Saravanan K, Nandakumar S (2021) Structural health monitoring of railway tracks using IoT-based multi-robot system. Neural Comput Appl 33(11):5897–5915. https://doi.org/10.1007/s00521-020-05366-9

Farrar CR, Worden K (2007) An introduction to structural health monitoring. Philos Trans R Soc A Math Phys Eng Sci 365(1851):303–315

Nick W, Asamene K, Bullock G, Esterline A, Sundaresan M (2015) A study of machine learning techniques for detecting and classifying structural damage. Int J Mach Learn Comput 5(4):313–338

Praticò FG, Fedele R, Naumov V, Sauer T (2020) Detection and monitoring of bottom-up cracks in road pavement using a machine-learning approach. Algorithms 13(4):1–16. https://doi.org/10.3390/a13040081

Hassan Daneshvar M, Sarmadi H (2022) Unsupervised learning-based damage assessment of full-scale civil structures under long-term and short-term monitoring. Eng Struct. https://doi.org/10.1016/j.engstruct.2022.114059

Cuong-Le T, Nghia-Nguyen T, Khatir S, Trong-Nguyen P, Mirjalili S, Nguyen KD (2021) An efficient approach for damage identification based on improved machine learning using PSO-SVM. Eng Comput. https://doi.org/10.1007/s00366-021-01299-6

He Y, Chen H, Liu D, Zhang L (2021) A framework of structural damage detection for civil structures using fast Fourier transform and deep convolutional neural networks. Appl Sci (Switzerland). https://doi.org/10.3390/app11199345

Ghadimi S, Kourehli SS (2017) Crack detection of structures using modified extreme learning machine (MELM). Inverse Probl Sci Eng 25(7):995–1013. https://doi.org/10.1080/17415977.2016.1212026

Gillich N, Tufisi C, Sacarea C, Rusu CV, Gillich G-R, Praisach Z-I, Ardeljan M (2022) Beam damage assessment using natural frequency shift and machine learning. Sensors 22:1118. https://doi.org/10.3390/s22031118

Rathod VT, Mukherjee S, Deng Y (2020) Machine learning enabled damage classification in composite laminated beams using mode conversion quantification. May 2020, 11. https://doi.org/10.1117/12.2559677

Liu L, Meng G (2005) Localization of damage in beam-like structures by using support vector machine. IEEE Xplore, pp 919–924

Ashigbi DM, Sackey MN, Fiagbe YAK, Quaye-Ballard J (2021) Vibration response-based crack diagnosis in beam-like structures using fuzzy inference system. Sci Afr. https://doi.org/10.1016/j.sciaf.2021.e01051

Yuan F-G, Zargar SA, Chen Q, Wang S (2020) Machine learning for structural health monitoring: challenges and opportunities. 1137903(April 2020), 2. https://doi.org/10.1117/12.2561610

Malekloo A, Ozer E, AlHamaydeh M, Girolami M (2021) Machine learning and structural health monitoring overview with emerging technology and high-dimensional data source highlights. Struct Health Monit. https://doi.org/10.1177/14759217211036880

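Ensslin L, Ensslin SR, Pacheco GC (2012) Um estudo sobre segurança em estádios de futebol baseado na análise bibliométrica da literatura internacional [A study on safety in football stadiums based on a bibliometric analysis of the international literature]. Perspectivas em Ciência da Informação 17(2):71–91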

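Ensslin SR, Ensslin L, Moreira ACS, Pereira VLDP (2014) Evidenciação do estado da arte da avaliação da segurança do trabalho em empreendimentos da construção civil [Disclosing the state of the art in the assessment of occupational safety in civil construction projects]. Interciencia 39(1):16–23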

Sun H, Burton HV, Huang H (2021) Machine learning applications for building structural design and performance assessment: state-of-the-art review. J Build Eng 33:101816. https://doi.org/10.1016/j.jobe.2020.101816

Vitola J, Pozo F, Tibaduiza DA, Anaya M (2017) A sensor data fusion system based on k-nearest neighbor pattern classification for structural health monitoring applications. Sensors (Switzerland) 17(2):417. https://doi.org/10.3390/s17020417

Tibaduiza D, Torres-Arredondo MA, Vitola J, Anaya M, Pozo F (2018) A damage classification approach for structural health monitoring using machine learning. Complexity. https://doi.org/10.1155/2018/5081283

De Lautour OR, Omenzetter P (2010) Nearest neighbor and learning vector quantization classification for damage detection using time series analysis. Struct Control Health Monit 17(6):614–631. https://doi.org/10.1002/stc.335

Salehi H, Das S, Chakrabartty S, Biswas S, Burgueno R (2018) A methodology for structural health diagnosis and assessment using machine learning with noisy and incomplete data from self-powered wireless sensors. In: Proceedings of SPIE 10598, sensors and smart structures technologies for civil, mechanical, and aerospace systems 2018. https://doi.org/10.1117/12.2295990

Coelho CK, Das S, Chattopadhyay A (2009) A hierarchical classification scheme for computationally efficient damage classification. Proc Inst Mech Eng Part G J Aerosp Eng 223(5):497–505. https://doi.org/10.1243/09544100JAERO428

Abdeljaber O, Avci O, Kiranyaz MS, Boashash B, Sodano H, Inman DJ (2018) 1-D CNNs for structural damage detection: verification on a structural health monitoring benchmark data. Neurocomputing 275:1308–1317. https://doi.org/10.1016/j.neucom.2017.09.069

Ebrahimkhanlou A, Salamone S (2018) Single-sensor acoustic emission source localization in plate-like structures using deep learning. Aerospace. https://doi.org/10.3390/aerospace5020050

Manjurul Islam MM, Kim JM (2019) Vision-based autonomous crack detection of concrete structures using a fully convolutional encoder–decoder network. Sensors (Switzerland) 19(19):1–12. https://doi.org/10.3390/s19194251

Mansouri Nejad N, Beheshti Aval SB, Maldar M, Asgarian B (2021) A damage detection procedure using two major signal processing techniques with the artificial neural network on a scaled jacket offshore platform. Adv Struct Eng 24(8):1655–1667. https://doi.org/10.1177/1369433220981663

Andrade Nunes L, Piazzaroli Finotti Amaral R, Souza Barbosa FD, Abrahão Cury A (2021) A hybrid learning strategy for structural damage detection. Struct Health Monit 20(4):2143–2160. https://doi.org/10.1177/1475921720966943

Dang HV, Raza M, Nguyen TV, Bui-Tien T, Nguyen HX (2021) Deep learning-based detection of structural damage using time-series data. Struct Infrastruct Eng 17(11):1474–1493. https://doi.org/10.1080/15732479.2020.1815225

Bull LA, Rogers TJ, Wickramarachchi C, Cross EJ, Worden K, Dervilis N (2019) Probabilistic active learning: an online framework for structural health monitoring. Mech Syst Signal Process 134:106294. https://doi.org/10.1016/j.ymssp.2019.106294

Fathalizadeh Najib M, Salehzadeh Nobari A (2021) An efficient technique for extraction of nonlinear dynamic features in a model-based feature extraction scheme for machine learning-based structural health monitoring. JVC J Vib Control 27(7–8):865–878. https://doi.org/10.1177/1077546320933744

Zanatta L, Barchi F, Burrello A, Bartolini A, Brunelli D, Acquaviva A (2021) Damage detection in structural health monitoring with spiking neural networks. In: 2021 IEEE international workshop on metrology for industry 4.0 IoT (MetroInd4.0 IoT), pp 105–110. https://doi.org/10.1109/MetroInd4.0IoT51437.2021.9488476

Perry BJ, Guo Y, Mahmoud HN (2022) Automated site-specific assessment of steel structures through integrating machine learning and fracture mechanics. Autom Constr 133:104022. https://doi.org/10.1016/j.autcon.2021.104022

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Cutler J, Dickenson M (2020) Introduction to machine learning with Python. O’Reilly Media, Inc., Sebastopol. https://doi.org/10.1007/978-3-030-36826-5_10

Kataria A, Singh MD (2013) A review of data classification using K-nearest neighbour algorithm. Int J Emerg Technol Adv Eng 3(6):354–360

Zhou Q, Ning Y, Zhou Q, Luo L, Lei J (2013) Structural damage detection method based on random forests and data fusion. Struct Health Monit 12(1):48–58. https://doi.org/10.1177/1475921712464572

Machado MR, Dos Santos JMC (2021) Effect and identification of parametric distributed uncertainties in longitudinal wave propagation. Appl Math Model. https://doi.org/10.1016/j.apm.2

Otchere DA, Arbi Ganat TO, Gholami R, Ridha S (2021) Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: comparative analysis of ANN and SVM models. J Pet Sci Eng. https://doi.org/10.1016/j.petrol.2020.108182

Flach PA, Lachiche N (2004) Naive Bayesian classification of structured data. Mach Learn 57(3):233–269. https://doi.org/10.1023/B:MACH.0000039778.69032.ab

Russell S, Norvig P (2003) Artificial intelligence: a modern approach, 2nd edn. Prentice Hall, Hoboken ([1995])

Géron A (2019) Hands-on machine learning with scikit-learn, keras, and tensorflow: Concepts, tools, and techniques to build intelligent systems. O’Reilly Media, Inc., Sebastopol

Machado MR, Dos Santos JMC (2015) Reliability analysis of damaged beam spectral element with parameter uncertainties. Shock Vib. https://doi.org/10.1155/2015/574846

Barreto LS, Machado MR, Santos JC, Moura BB, Khalij L (2021) Damage indices evaluation for one-dimensional guided wave-based structural health monitoring. Latin Am J Solids Struct 1:1–10

Machado MR, Adhikari S, Dos Santos JMC (2018) Spectral element-based method for a one-dimensional damaged structure with distributed random properties. J Braz Soc Mech Sci Eng 40(9):1–16. https://doi.org/10.1007/s40430-018-1330-2

Sinou JJ (2009) A review of damage detection and health monitoring of mechanical systems from changes in the measurement of linear and non-linear vibrations. In: Mechanical vibrations: measurement, effects and control, Nova Science Publishers, Inc., pp 643–702

Heylen W, Lammens S (1996) FRAC: a consistent way of comparing frequency response functions. Identif Eng Syst Swansea 1:48–57

Soares YMF, Machado MR, Dutkiewicz M (2022) The spectral approach of Love and Mindlin-Herrmann theory in the dynamical simulations of the tower-cable interactions under the wind and rain loads. Energies 15:7725

Colherinhas GB, Morais MV, Machado MR (2022) Spectral model of off-shore wind turbines and vibration control by pendulum tuned mass dampers. Int J Struct Stab Dyn 22(5):2250053

Moura BB, Machado MR, Mukopadhyay T, Dey S (2022) Dynamic and wave propagation analysis of periodic smart beams coupled with resonant shunt circuits: passive property modulation. Eur Phys J Spec Top 231:1415–1431

Machado MR, Moura BB, Dey S, Mukhopadhyay T (2022) Bandgap manipulation of single and multi-frequency smart metastructures with random impedance disorder. Smart Mater Struct 1:1

Tada H, Paris PC, Irwin GR (1973) The stress analysis of cracks handbook. Del Research Corporation, Hellertown

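Sousa AASR (2023) Monitoramento da Integridade Estrutural de Vigas utilizando Técnicas de Aprendizado de Máquina [Structural integrity monitoring of beams using machine learning techniques]. Master's dissertation (Mestrado em Integridade de Materiais da Engenharia [Master's in Engineering Materials Integrity], FGA/UnB Gama), Universidade de Brasília, Brasília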

Sousa AASR, Coelho JS, Machado MR, Dutkiewicz M (2023) Damage assessment in a physical beam reinforced with masses—dataset. Zenodo. https://doi.org/10.5281/zenodo.8081690

Acknowledgements

The authors would like to acknowledge the research support of the POLONEZ BIS project SWinT, reg. no. 2022/45/P/ST8/02123, co-funded by the National Science Centre and the European Union Framework Programme for Research and Innovation Horizon 2020 under the Marie Skłodowska-Curie grant agreement no. 945339. M.R. Machado would like to acknowledge the support from FAPDF and CNPq (grant nos. 00193-00000766/2021-71 and 404013/2021-0).


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Beam Spectral Element

Formulating a structural model with the Spectral Element Method (SEM) is similar to doing so with the Finite Element Method (FEM). SEM, however, uses the exact analytical solution of the wave equation in the frequency domain as the element's displacement field, which makes it well suited to the crack problem. Its main advantage is that far fewer elements are needed to model the system than with other computational methods, so the exact high-frequency behavior of a beam can be obtained at low computational cost. SEM has been used in many structural dynamics applications, including overhead transmission lines [54], smart materials [56, 57], and structural monitoring [44, 55].

Beam Spectral Element

Consider a beam element of length L with nodal displacements \(\hat{v}\) and \(\hat{\phi}\) and nodal forces \(\hat{V}\) and \(\hat{M}\). Figure 19 illustrates the two-node healthy beam spectral element, with two degrees of freedom (DOF) and two nodal loads per node.

The beam is assumed to be slender, with transverse and rotational nodal displacements and shear-force and bending-moment nodal loads. Neglecting shear deformation, the equation of motion in spectral form can be written as
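The equation that belonged here did not survive extraction. Assuming it is the standard frequency-domain Euler–Bernoulli equation (a reconstruction from classical beam theory, not recovered from the source), with \(\hat{v}(x)\) the spectral transverse displacement and Ec the complex Young's modulus, it reads

\[
E_c I \, \frac{d^4 \hat{v}}{dx^4} - \omega^2 \rho A \, \hat{v} = 0
\]

Substituting \(\hat{v} = e^{-ikx}\) gives the dispersion relation \(k^4 = \omega^2 \rho A / (E_c I)\), whose four roots \(\pm k\) and \(\pm ik\) correspond to propagating waves \(e^{\mp ikx}\) and evanescent waves \(e^{\pm kx}\).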

The general waveform solution for this element is given by
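The original expression was also lost. A form commonly used in spectral element formulations, assumed here, writes the displacement as four waves referenced to the two element ends, with constant coefficients \(a_1, \dots, a_4\):

\[
\hat{v}(x) = a_1 e^{-ikx} + a_2 e^{-kx} + a_3 e^{-ik(L-x)} + a_4 e^{-k(L-x)}, \qquad 0 \le x \le L
\]

Referencing the last two waves to x = L keeps the magnitude of the evanescent terms at most one over the element for real k, which helps numerical conditioning at high frequencies.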

where L is the beam length. The wavenumbers k, k1, and k2 depend on the circular frequency ω, Young's modulus E, cross-sectional area A, density ρ, and second moment of area I, with i = \(\sqrt{-1}\). Internal structural damping is introduced through a complex Young's modulus, Ec = E(1 + iη), where η is the hysteretic structural loss factor. The spectral nodal displacements and rotations of the Euler–Bernoulli beam at node 1 (x = 0) and node 2 (x = L) are collected in the displacement vector \(\mathbf{d}=[\hat{v}_1,\ \hat{\phi}_1,\ \hat{v}_2,\ \hat{\phi}_2]^T\).
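To make the dispersion relation concrete, the sketch below assumes the standard Euler–Bernoulli wavenumber \(k = (\omega^2 \rho A / (E_c I))^{1/4}\) with Ec = E(1 + iη). The document's separate k1 and k2 expressions could not be recovered, so they are not modeled. The function names and beam properties are hypothetical, chosen only for illustration.

```python
import numpy as np


def complex_modulus(E, eta):
    """Hysteretic damping through a complex Young's modulus E_c = E(1 + i*eta)."""
    return E * (1 + 1j * eta)


def bending_wavenumber(omega, E, I, rho, A, eta=0.0):
    """Assumed Euler-Bernoulli flexural wavenumber k = (omega^2 rho A / (E_c I))**(1/4).

    Returns the principal complex fourth root.
    """
    Ec = complex_modulus(E, eta)
    return (omega**2 * rho * A / (Ec * I)) ** 0.25


# Hypothetical steel-like beam properties (SI units), for illustration only
E, I, rho, A = 210e9, 1e-8, 7850.0, 1e-4
omega = 2 * np.pi * 100.0  # 100 Hz expressed as a circular frequency
k = bending_wavenumber(omega, E, I, rho, A, eta=0.01)
print(k)
```

With η > 0 the principal root has a small negative imaginary part, so the wave \(e^{-ikx}\) decays along the beam; this is how the loss factor attenuates the response. Because k grows with \(\sqrt{\omega}\), flexural waves are dispersive.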

The frequency-dependent displacement within an element is interpolated from the nodal displacement vector d by eliminating the constant vector a, and is expressed as

where \(\mathbf{a}=\mathbf{H}_{B}(\omega)^{-1}\mathbf{d}\); that is, \(\mathbf{H}_{B}(\omega)\) maps the wave coefficients to the nodal displacements, \(\mathbf{d}=\mathbf{H}_{B}(\omega)\,\mathbf{a}\).

The shear forces and bending moments in the beam are related to the spectral nodal forces and moments as

and applying the boundary conditions at the element ends gives

Relating the nodal forces to the nodal displacements then gives

where \(\mathbf{S}_{B}(\omega)=\mathbf{G}(\omega)\,\mathbf{H}_{B}(\omega)^{-1}\) is the dynamic stiffness matrix of the Euler–Bernoulli beam spectral element.
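The relation \(\mathbf{S}_B = \mathbf{G}\,\mathbf{H}_B^{-1}\) can be evaluated numerically without writing out the closed-form matrix. The sketch below is not the authors' code. It assumes the four-wave basis \(e^{-ikx}, e^{-kx}, e^{-ik(L-x)}, e^{-k(L-x)}\), the nodal order \([\hat{v}_1, \hat{\phi}_1, \hat{v}_2, \hat{\phi}_2]\), and a force sign convention chosen so that S_B approaches the classical static Euler–Bernoulli stiffness matrix as ω → 0. The function name is hypothetical.

```python
import numpy as np


def beam_dynamic_stiffness(omega, L, E, I, rho, A, eta=0.0):
    """Dynamic stiffness S_B = G H_B^{-1} of a two-node Euler-Bernoulli element.

    DOF order d = [v1, phi1, v2, phi2]; force order [V1, M1, V2, M2].
    omega must be > 0 (at omega = 0 the wave basis degenerates).
    """
    EcI = E * (1 + 1j * eta) * I
    k = (omega**2 * rho * A / EcI) ** 0.25
    # Basis exp(s*(x - x0)): e^{-ikx}, e^{-kx}, e^{-ik(L-x)}, e^{-k(L-x)}
    s = np.array([-1j * k, -k, 1j * k, k])
    x0 = np.array([0.0, 0.0, L, L])

    def deriv(x, n):
        # n-th x-derivative of all four basis functions at position x
        return s**n * np.exp(s * (x - x0))

    # H_B maps wave coefficients a to nodal displacements and rotations (d = H_B a)
    H = np.vstack([deriv(0.0, 0), deriv(0.0, 1), deriv(L, 0), deriv(L, 1)])
    # G maps a to nodal forces; signs chosen so S_B tends to the static matrix
    G = EcI * np.vstack([deriv(0.0, 3), -deriv(0.0, 2), -deriv(L, 3), deriv(L, 2)])
    # S_B = G H_B^{-1}, computed by solving H_B^T S_B^T = G^T
    return np.linalg.solve(H.T, G.T).T
```

Because \(\mathbf{G}\,\mathbf{H}_B^{-1}\) does not depend on which four independent solutions form the basis, the choice of wave functions affects only numerical conditioning, not the result. At ω = 0 all four basis functions coincide and H_B becomes singular, so the static limit is better taken from the classical stiffness matrix.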

Crack Beam Spectral Element

The crack is modeled as a local dimensionless flexibility θ, calculated using Castigliano's theorem and fracture mechanics [58]. The element has two nodes with two degrees of freedom (DOF) each, where L is the element length, L1 is the crack position measured from node 1, and a is the crack depth, as illustrated in Fig. 20.

For the cracked beam, the solution of Eq. (A1) is given by

where \({\hat{v}}^{l}\) and \({\hat{v}}^{r}\) are the transverse displacements to the left and right of the crack, respectively. The coefficients A1, B1, C1, D1, A2, B2, C2, and D2 are determined from the boundary conditions at the nodes and the compatibility conditions at the crack. The dynamic stiffness matrix is then obtained from the displacements and nodal loads in the same way as for the healthy beam spectral element; for the cracked beam spectral element it is written as

where \(a=e^{ikL_1}\), \(b=e^{-ikL_1}\), \(c=e^{-ik(L-L_1)}\), \(d=e^{-k(L-L_1)}\), \(f=e^{-ikL}\), and \(g=e^{-kL}\).
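The same numerical route extends to the cracked element. The sketch below assumes the usual rotational-spring crack model. Displacement, bending moment, and shear force are continuous at x = L1, while the rotation jumps by \(\Delta\hat{\phi} = c\,M\), where M is the bending moment at the crack. Here c denotes the crack flexibility, not the exponential abbreviation c defined in the text. The sketch solves for the eight coefficients (A1 to D2) directly instead of using the closed-form entries. The function name and parameters are hypothetical.

```python
import numpy as np


def cracked_beam_dynamic_stiffness(omega, L, L1, c, E, I, rho, A, eta=0.0):
    """Dynamic stiffness of an Euler-Bernoulli element with a rotational-spring crack.

    L1: crack position from node 1; c: crack flexibility (rad per N*m).
    DOF order d = [v1, phi1, v2, phi2]; force order [V1, M1, V2, M2].
    """
    EcI = E * (1 + 1j * eta) * I
    k = (omega**2 * rho * A / EcI) ** 0.25
    s = np.array([-1j * k, -k, 1j * k, k])
    x0 = np.array([0.0, 0.0, L, L])

    def deriv(x, n):
        # n-th derivative of the four wave functions exp(s*(x - x0)) at x
        return s**n * np.exp(s * (x - x0))

    z = np.zeros(4, dtype=complex)
    # Unknowns: 4 coefficients left of the crack, then 4 right of it
    T = np.array([
        np.concatenate([deriv(0.0, 0), z]),             # v_l(0)  = v1
        np.concatenate([deriv(0.0, 1), z]),             # v_l'(0) = phi1
        np.concatenate([z, deriv(L, 0)]),               # v_r(L)  = v2
        np.concatenate([z, deriv(L, 1)]),               # v_r'(L) = phi2
        np.concatenate([deriv(L1, 0), -deriv(L1, 0)]),  # displacement continuity
        np.concatenate([deriv(L1, 2), -deriv(L1, 2)]),  # bending-moment continuity
        np.concatenate([deriv(L1, 3), -deriv(L1, 3)]),  # shear-force continuity
        # rotation jump: v_r' - v_l' = c * M = c * EcI * v_l''
        np.concatenate([-deriv(L1, 1) - c * EcI * deriv(L1, 2), deriv(L1, 1)]),
    ])
    # Nodal forces from the left segment at x = 0 and the right segment at x = L
    G = EcI * np.array([
        np.concatenate([deriv(0.0, 3), z]),
        np.concatenate([-deriv(0.0, 2), z]),
        np.concatenate([z, -deriv(L, 3)]),
        np.concatenate([z, deriv(L, 2)]),
    ])
    # Coefficients for unit nodal displacements (zero right-hand side at the crack)
    X = np.linalg.solve(T, np.eye(8)[:, :4])
    return G @ X
```

Setting c = 0 recovers the healthy element for any crack position, which is a convenient check when implementing the closed-form cracked matrix.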

The crack flexibility coefficient θ is obtained with Castigliano's theorem, starting from the flexibility at the crack position of a one-dimensional beam spectral element:

where c is the crack flexibility, b is the width (base) and h the height of the beam cross-section, α is the crack depth used as the integration variable, M is the bending moment at the crack position, and f is the correction function of the mode-I stress intensity factor, written as

with \(\overline{a}=a/h\) and \(\overline{\alpha}=\alpha/h\). The local dimensionless flexibility θ is then given by
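The expressions for f, c, and θ were lost in extraction. A common choice, assumed here rather than recovered from the paper, is Tada's correction for a single-edge crack in a beam under pure bending [58], \(F(s)=\sqrt{\tfrac{2}{\pi s}\tan\tfrac{\pi s}{2}}\,\dfrac{0.923+0.199\,(1-\sin\frac{\pi s}{2})^4}{\cos\frac{\pi s}{2}}\), combined with the plane-stress Castigliano result \(c=\dfrac{72\pi}{E b h^2}\int_0^{\overline{a}} s\,F(s)^2\,ds\). Plane-strain versions multiply by \(1-\nu^2\). The sketch computes c only, because the document's normalization for θ is not recoverable. The function names are hypothetical.

```python
import numpy as np


def tada_F(s):
    """Assumed Tada mode-I correction for a single-edge crack in bending, s = a/h in [0, 1)."""
    s = np.asarray(s, dtype=float)
    beta = np.pi * s / 2
    safe = np.where(beta > 0, beta, 1.0)
    ratio = np.where(beta > 0, np.tan(safe) / safe, 1.0)  # tan(beta)/beta -> 1 as beta -> 0
    return np.sqrt(ratio) * (0.923 + 0.199 * (1 - np.sin(beta)) ** 4) / np.cos(beta)


def crack_flexibility(a, b, h, E, n=2001):
    """Plane-stress crack flexibility c = 72*pi/(E*b*h^2) * int_0^{a/h} s*F(s)^2 ds."""
    s = np.linspace(0.0, a / h, n)
    y = s * tada_F(s) ** 2
    integral = np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(s))  # trapezoidal rule
    return 72 * np.pi / (E * b * h**2) * integral
```

For shallow cracks F(s) ≈ 1.122, so c grows roughly with the square of the relative depth. A trapezoidal rule with a few thousand points is ample because the integrand is smooth for relative depths well below 1.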

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Sousa, A.A.S.R., da Silva Coelho, J., Machado, M.R. et al. Multiclass Supervised Machine Learning Algorithms Applied to Damage and Assessment Using Beam Dynamic Response. J. Vib. Eng. Technol. 11, 2709–2731 (2023). https://doi.org/10.1007/s42417-023-01072-7


DOI: https://doi.org/10.1007/s42417-023-01072-7