Abstract

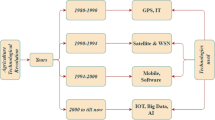

The agricultural community faces a dual challenge: increasing the production of nutritionally dense food while decreasing the impacts of crop production systems on land, water, and climate. Control of plant pathogens will figure prominently in meeting these challenges, as plant diseases cause significant yield and economic losses in crops that feed a large portion of the world population. New approaches and technologies that enhance the sustainability of crop production systems, and importantly of plant disease control, need to be developed and adopted. Advanced geoinformatic techniques, together with advances in computing and sensing infrastructure (e.g., cloud-based, big data-driven applications), will aid in monitoring and managing pesticides and biologicals, such as cover crops and beneficial microbes, to reduce the environmental impact of plant disease control and cropping systems. This includes geospatial tools being developed to help farmers manage cropping system and disease management strategies that are more sustainable but increasingly complex. Geoinformatics and cloud-based, big data-driven applications are also being enlisted to speed up crop germplasm improvement; germplasm is needed that has enhanced tolerance to pathogens and abiotic stress and is suited to different cropping systems and environmental conditions. Finally, advanced geoinformatic techniques and computing infrastructure enable a more collaborative framework among scientists, policymakers, and the agricultural community to speed the development, transfer, and adoption of these sustainable technologies.

Introduction

Estimates indicate that current agricultural production must increase by 60–100%, assuming current food waste levels, population trends, and diets remain unchanged, to meet the nutritional needs of a future human population of 9–10 billion (Foley et al. 2011; Martin and Li 2017; Mattoo 2014). Increased production of plant-based foods will clearly play a key role in meeting this challenge. The endosperm of the cereal staples rice, wheat, and maize alone provides approximately 23, 17, and 10%, respectively, of total human calories globally. In addition to the macronutrients (fat, protein, carbohydrate) that supply these calories, plant foods are major sources of micronutrients (vitamins, essential amino acids, essential lipids) and of mineral elements essential for human nutrition. Plant foods also contain a wide range of bioactive compounds (alkaloids, carotenoids, organosulfur compounds, phenolic compounds, phytosterols) that have been associated with the prevention of chronic diseases, including cardiovascular disease, cancer, diabetes, cataracts, and age-related functional decline (Martin and Li 2017; Mattoo 2014; Roberts and Mattoo 2019).

Crop production cannot meet the nutritional needs of future populations simply by expanding the land area devoted to agriculture. Competition for land from urbanization, and the loss of land to salinization and desertification, will reduce the land suitable for agricultural production (Fedoroff et al. 2010; Foley et al. 2005, 2011). It is also unlikely that the productivity of current crop production systems can be increased using the agricultural intensification methods of the past. Food production doubled worldwide over the past 35 years, largely through high-yielding crop germplasm combined with extensive use of synthetic fertilizer, pesticides, and irrigation (Pingali 2012; Reganold and Wachter 2016; Roberts and Mattoo 2018; Triplett and Dick 2008). Feedstocks for synthetic fertilizer production are dwindling, and water shortages are increasing in many regions of the world (Adeyemi et al. 2017; Dimkpa and Bindraban 2016; Rosegrant and Cline 2003; Tilman et al. 2002). In addition, agricultural intensification over the past decades has had negative environmental impacts, such as increased soil erosion and decreased soil fertility, pollution of groundwater and eutrophication of rivers, lakes, and coastal ecosystems, and increased greenhouse gas emissions (Foley et al. 2011; Matson et al. 1997; Tilman et al. 2001, 2002). Adding to the challenge is an increasingly erratic global climate (Ray et al. 2015, 2019). Climate models predict more frequent and more severe weather events such as heat waves, droughts, and flooding. Heat waves, drought, salinity stress, and higher ozone levels due to global climate change, as well as associated new pest and pathogen problems, are predicted to depress crop yields (Long et al. 2006; Tester and Langridge 2010; Zhao et al. 2017). Of equal concern are the negative impacts of elevated temperature and CO2 levels on the nutritional quality of many crops (Fedoroff et al. 2010; Myers et al. 2014; Schlenker et al. 2009).

Clearly, measures that decrease yield losses to plant diseases will help meet the challenge of increasing the quantity of food available for human consumption. Savary et al. (2019) estimated that pathogens and pests cause losses of 10–40% in the staple crops that provide approximately 50% of global calorie intake, with global yield-loss ranges of 10.1–28.1% for wheat, 24.6–40.9% for rice, 19.5–41.1% for maize, 8.1–21.0% for potato, and 11.0–32.4% for soybean. Disease control measures that reclaim yield lost to disease would increase food availability and improve food security, particularly because the greatest estimated losses occurred in regions with fast-growing populations and food insecurity (Savary et al. 2019).

Disease control methods to function within sustainable, regenerative cropping systems

Going forward, disease control measures need to be compatible with sustainable and climate-resilient crop production systems. These next-generation systems will rely in some cases on big data-driven precision agriculture to reduce inputs, and on biologically based technologies to improve soil health, control soil erosion, and control pathogens and pests (Adeyemi et al. 2017; Delgado et al. 2019; Pingali 2012; Roberts and Mattoo 2018, 2019). New crop germplasm is needed that has increased tolerance or resistance to these pathogens and pests as well as increased tolerance to abiotic stresses caused by an erratic climate, that offers higher yields while using less water, fertilizer, and other inputs, and that has higher nutritional quality for the consumer (Fatima et al. 2016; Martin and Li 2017; Mattoo 2014; Mattoo and Teasdale 2010; Pingali 2012; Roberts and Mattoo 2018, 2019). In short, disease control measures need to be developed so that the resulting crop production system regenerates soil resources and protects water and air, and the new crop germplasm produces more food of high nutritional quality.

Geoinformatic systems and big data analytics for development and use of next-generation cropping systems and advanced germplasm

Spatial data management coupled with big data analytics is integral to developing and using next-generation, environmentally friendly, climate-resilient crop production systems through the precision agriculture approach (Delgado et al. 2019). Agricultural fields are spatially heterogeneous with respect to pathogen and pest populations and to other field properties that affect plant health and growth, such as topography, soil type, soil fertility, and soil moisture (Roberts and Kobayashi 2011). These field properties interact with climate, strongly influencing drainage, water and nutrient availability, and the occurrence and spatial distribution of pathogen and pest outbreaks. In-field soil sensors and robots, as well as commercial satellite, aerial, and drone-based sensing systems, have been developed that can monitor some of these factors. Geographic information systems (GIS), as the organizing principle for spatial data, combined with big data management and analytics (big data being characterized by the variety, velocity, and/or volume of the data), now allow data from these varied sensor systems to be integrated, linking information on pathogens, pests, crop yield, soil fertility, water, etc. with climate factors to identify relationships among disease, crop yield, soil type, crop, and management (Bestelmeyer et al. 2020; Delgado et al. 2019; Janssen et al. 2017). Further, analytics performed on spatial data from these sensing platforms can potentially be used to manage application rates of pesticides as well as other resources such as seed, fertilizer, and irrigation water. As a result, farmers can stop treating a production field as a uniform management unit and instead treat it as a heterogeneous substrate for plant growth (Delgado et al. 2019). In this way, farmers can maximize disease control, crop productivity, and profitability while using pesticides and other inputs more efficiently, resulting in less loss of these resources from agricultural fields and fewer associated harmful environmental impacts (Gebbers and Adamchuk 2010; Mahlein 2016).
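The shift from uniform to site-specific management can be illustrated with a minimal Python sketch. It assumes a field already divided into equal-sized grid cells, each carrying a disease-severity estimate derived from sensor data; the function names, the simple on/off threshold rule, and all numbers are hypothetical and are not taken from the cited studies.

```python
from typing import List

# Hypothetical grid: rows x columns of equal-sized management cells.
Grid = List[List[float]]


def prescription_map(severity: Grid, threshold: float, full_rate: float) -> Grid:
    """Assign the full rate to cells at or above the severity threshold and
    no application elsewhere (an assumed, simple on/off site-specific rule)."""
    return [[full_rate if s >= threshold else 0.0 for s in row] for row in severity]


def total_product(rates: Grid, cell_area_ha: float) -> float:
    """Total product applied: sum of (rate per ha x cell area) over all cells."""
    return sum(rate * cell_area_ha for row in rates for rate in row)


if __name__ == "__main__":
    # Hypothetical fraction of diseased leaf area in each 1-ha cell.
    severity = [
        [0.00, 0.02, 0.30],
        [0.01, 0.25, 0.40],
        [0.00, 0.00, 0.05],
    ]
    rates = prescription_map(severity, threshold=0.10, full_rate=1.5)  # L/ha
    site_specific = total_product(rates, cell_area_ha=1.0)
    uniform = total_product([[1.5] * 3 for _ in range(3)], cell_area_ha=1.0)
    print(f"site-specific: {site_specific} L, uniform: {uniform} L")
```

In this toy field only three of nine cells exceed the threshold, so the site-specific rule applies 4.5 L versus 13.5 L for a uniform application. A real prescription would also have to account for sprayer resolution, buffer zones, and label rates.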

Continued advances in sensing and big data technology will aid further development of this precision agriculture approach, which relies on synthetic pesticides and other synthetic inputs. We argue that GIS combined with big data analytics will enhance both the development of precision sustainable, regenerative agricultural systems, in which the precision agriculture approach is applied to sustainable, regenerative cropping systems that use biological inputs and advanced germplasm with increased tolerance to disease and other stresses, and the speed with which these new technologies are developed and adopted. The following sections are not intended as exhaustive literature reviews; rather, we provide literature and case studies, mostly from work associated with our units on field crops, to illustrate these points.

Disease control tactics with reduced environmental impact

Precision disease control for increased cropping system sustainability

As discussed above, because disease is patchy in production fields, the precision agriculture approach can potentially decrease pesticide use by applying pesticides only where needed, thereby decreasing the environmental impact of the crop production system (Oerke 2020). Encouragingly, in a French study of 946 non-organic farms, high profitability was maintained with low pesticide use in 77% of the farms analyzed. The authors estimated that pesticide use could be reduced by 42% without any negative effect on productivity in 59% of the farms (Lechenet et al. 2017). Precision agriculture could also decrease disease, and hence pesticide use, by improving management of cropping system components such as fertilization and irrigation. Unbalanced fertilization and improper soil water conditions have been shown to increase the incidence of certain diseases and pests (Cook and Papendick 1972; Rotem and Palti 1969; Veresoglou et al. 2000; Walters and Bingham 2007).

Sensor systems for disease detection and precision agriculture-based disease control. Many methods are available to determine the spatial distribution of pathogens and disease in growers' fields, with visual assessment by human experts being the most common (Mahlein et al. 2018). However, disease monitoring by human experts takes substantial effort and is prone to errors and inconsistency. Visual diagnosis also does not scale well: a human expert cannot efficiently evaluate all plants within a large area (Wu et al. 2019). Surveillance with aerial optical sensor platforms is attractive for this role because it offers the potential to capture data from large crop acreages and analyze them with the latest analytical tools for disease diagnosis and determination of disease severity (Mahlein 2016; Oerke 2020). However, the remote sensing approach faces challenges. Variable environmental conditions affect sensed plant characteristics, and abiotic and biotic stresses that share similar spectral signatures must be differentiated. Intrinsic plant properties, such as variation in plant architecture and growth stage, and the high sensitivity required for disease control decisions create further challenges (Gold et al. 2020; Mahlein 2016; Mahlein et al. 2018; Oerke 2020; Wu et al. 2019; Yang 2020).
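As a minimal illustration of how aerial optical data might be screened for candidate disease patches, the Python sketch below computes the normalized difference vegetation index, NDVI = (NIR - Red)/(NIR + Red), for each grid cell and flags cells whose NDVI falls well below the field median. The reflectance values, the `drop` threshold, and the function names are hypothetical. A low NDVI indicates reduced vigor rather than a specific disease, so flagged cells would still need ground truthing or additional spectral features to separate biotic from abiotic stress, which is exactly the challenge described above.

```python
from statistics import median
from typing import List, Tuple

Band = List[List[float]]  # reflectance values (0-1) on a regular grid


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index for one pixel or grid cell."""
    total = nir + red
    return (nir - red) / total if total > 0 else 0.0


def flag_low_vigor(nir_band: Band, red_band: Band, drop: float = 0.15) -> List[Tuple[int, int]]:
    """Return (row, col) indices of cells whose NDVI is more than `drop`
    below the field median NDVI. `drop` is an assumed threshold; this flags
    low vigor, not a disease diagnosis."""
    values = [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]
    field_median = median([v for row in values for v in row])
    return [
        (i, j)
        for i, row in enumerate(values)
        for j, v in enumerate(row)
        if v < field_median - drop
    ]


if __name__ == "__main__":
    # Hypothetical reflectance for a 2 x 3 grid.
    nir = [[0.50, 0.52, 0.30], [0.51, 0.49, 0.28]]
    red = [[0.05, 0.06, 0.15], [0.05, 0.06, 0.16]]
    print(flag_low_vigor(nir, red))  # cells in the third column are flagged
```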

In field crops, good candidates for detection with optical sensors followed by precision disease control are diseases that occur in patches, cause sufficient morphological and physiological changes in the crop, are of economic importance, and can be controlled effectively (e.g., with effective fungicides or mechanical removal) (Mahlein 2016; Oerke 2020; Yang 2020). Aerial sensors have been used to detect cotton root rot, damage from root-knot nematodes, late blight on tomato, northern leaf blight on corn, diseases of perennial and orchard crops, and other field diseases (Chen et al. 2007; Cook et al. 2001; Mattupalli et al. 2018; Oerke 2020; Wu et al. 2019; Yang 2020; Zhang et al. 2005). Among these, remote sensing is particularly effective for optimizing precision management of soilborne diseases and nematodes, because root damage leads to visible changes in foliage characteristics, infections often cluster in the field, slow pathogen movement in soil limits spatial spread of disease, new disease foci are rarely introduced, and disease maps from one season can be applied in future seasons (Oerke 2020). For example, cotton root rot tends to occur in the same areas of a grower's field from year to year, so maps of the disease from previous years can direct site-specific pesticide application (Yang 2020). However, for wilt diseases caused by soilborne pathogens, at least two measurements per day are needed to distinguish disease from wilting caused by transient water shortage during periods of high irradiance.
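Because cotton root rot tends to recur in the same parts of a field, historical disease maps can be combined into a treatment zone. The Python sketch below shows one hypothetical way to do this, assuming each season's map has already been reduced to a set of infected grid cells: it takes the union of all mapped cells and adds a buffer around them to allow for some expansion of disease foci. The buffer width, grid size, and function name are illustrative assumptions, not the procedure used by Yang (2020).

```python
from typing import Iterable, Set, Tuple

Cell = Tuple[int, int]  # (row, col) of a grid cell


def treatment_zone(yearly_maps: Iterable[Set[Cell]], n_rows: int, n_cols: int,
                   buffer: int = 1) -> Set[Cell]:
    """Union of cells infected in any mapped season, expanded by `buffer`
    cells in every direction (an assumed safety margin) and clipped to the
    field boundary."""
    infected: Set[Cell] = set().union(*yearly_maps)
    zone: Set[Cell] = set()
    for row, col in infected:
        for dr in range(-buffer, buffer + 1):
            for dc in range(-buffer, buffer + 1):
                r, c = row + dr, col + dc
                if 0 <= r < n_rows and 0 <= c < n_cols:
                    zone.add((r, c))
    return zone


if __name__ == "__main__":
    maps = [{(0, 0)}, {(0, 0), (3, 3)}]  # two hypothetical seasons, 5 x 5 grid
    zone = treatment_zone(maps, n_rows=5, n_cols=5)
    print(f"{len(zone)} of 25 cells ({len(zone) / 25:.0%}) to be treated")
```

Taking the union across seasons is a conservative choice that favors not missing a recurring focus; a weighting by how often a cell was infected would be an alternative when product cost is the main concern.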

In high-value perennial crops (citrus, pome fruits, grapevines, coffee, etc.), remote sensing of root diseases, or of diseases caused by invasive pathogens such as Xylella fastidiosa, allows affected plants to be removed as early as possible to prevent further spread of the pathogen (Albetis et al. 2017; Oerke 2020; Zarco-Tejada et al. 2018). For example, spectral plant-trait alterations caused by X. fastidiosa in olive were detected before visible symptoms appeared, a critical requirement for eradicating infected trees in time to contain the pathogen, which in this case is transmitted by an insect vector (Zarco-Tejada et al. 2018). The key is the time between detection of a specific disease and application of an effective control measure relative to the rate of disease progression and spread (Mahlein 2016; Mahlein et al. 2018; Oerke 2020). Remote sensing is also useful outside active disease control efforts. Sensors may be used to estimate disease losses over large areas and to determine which areas to leave out of production in the next growing season because of soilborne pathogens that can be controlled only by crop rotation (Oerke 2020).
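The point about timing can be made concrete with the logistic disease-progress model often used in plant disease epidemiology, in which the disease proportion y increases at an apparent infection rate r. Under that model, the time for disease to rise from the level at detection, y1, to an action threshold, y2, is t = [ln(y2/(1 - y2)) - ln(y1/(1 - y1))]/r. The Python sketch below applies this formula; the choice of model and all parameter values are assumptions for illustration, since real epidemics, especially those with vector-borne or long-distance spread, may not follow a simple logistic curve.

```python
import math


def logit(y: float) -> float:
    """Logit transform ln(y / (1 - y)) for a disease proportion 0 < y < 1."""
    if not 0.0 < y < 1.0:
        raise ValueError("disease proportion must be strictly between 0 and 1")
    return math.log(y / (1.0 - y))


def days_to_threshold(y_detect: float, y_threshold: float, rate_per_day: float) -> float:
    """Days for logistic disease progress (assumed model) to go from
    y_detect to y_threshold at apparent infection rate `rate_per_day`."""
    return (logit(y_threshold) - logit(y_detect)) / rate_per_day


def control_in_time(y_detect: float, y_threshold: float, rate_per_day: float,
                    response_days: float) -> bool:
    """True if a control measure can be applied before the threshold is reached."""
    return response_days < days_to_threshold(y_detect, y_threshold, rate_per_day)


if __name__ == "__main__":
    # Hypothetical: detected at 1% severity, act before 10%, r = 0.2 per day.
    window = days_to_threshold(0.01, 0.10, 0.2)
    print(f"window: {window:.1f} days")
    print("5-day response in time:", control_in_time(0.01, 0.10, 0.2, 5))
    print("14-day response in time:", control_in_time(0.01, 0.10, 0.2, 14))
```

With these placeholder values the window is about 12 days. Detecting disease at a lower level (a smaller y1) widens the window, which is one motivation for pre-symptomatic detection.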

Going forward: Precision agriculture approaches for disease control with synthetic pesticides. In short, variable rate and site-specific pesticide application have the potential to reduce pesticide use and thereby environmental impacts. However, considerable research is needed to make these precision agriculture approaches applicable to all diseases (Mahlein 2016; Mahlein et al. 2018; Oerke 2020). In many cases, by the time disease is evident to aerial sensors, it is too late to apply control measures that prevent further crop damage (Yang 2020). For example, even though late blight on tomato can be detected remotely, wind- and rain-borne dissemination of infective propagules of the causal agent, Phytophthora infestans, can lead to disease in parts of the field, or in neighboring fields, far from where it previously occurred. For this reason, research into pre-symptomatic detection of disease using different sensor systems is ongoing (Mahlein 2016; Mahlein et al. 2019). In such cases, pre-symptomatic detection could trigger management responses before inoculum is produced or spread by vectors, preventing further spread of disease (Gold et al. 2020; Zarco-Tejada et al. 2018). Pathogen-induced changes in photosynthetic activity and transpiration, as well as increased respiration, can be detected before visible symptoms develop (Martinelli et al. 2015).

Rumpf et al. (2010) detected Cercospora leaf spot, powdery mildew, and rust on sugar beet before visible symptoms appeared. Gold et al. (2020) used hyperspectral imaging in growth chamber experiments to detect and distinguish two important potato pathogens, P. infestans and Alternaria solani, before visible symptoms developed on the plant. These are important first steps, but it remains to be determined whether these approaches can be scaled to aerial imaging platforms. Encouragingly, pre-symptomatic detection of X. fastidiosa in olive was possible at the landscape level (Zarco-Tejada et al. 2018).

A caveat regarding precision disease control is that, in addition to requiring connectivity and other infrastructure, the approach increases the complexity of managing disease and the cropping system. Because many technologies are needed to implement them, these precision disease control systems must be developed and deployed with the farmer in mind; not all farmers have the knowledge and skills required to integrate all the necessary technologies into a disease management system (Yang 2020).

Biologicals for disease control and increased cropping system sustainability

Biological control, the use of biologicals (microbes, cover crops, etc., and/or their extracts) for disease control, has been researched for decades (Fravel 2005; Glare et al. 2012; Köhl et al. 2019). Biological controls are of interest because they are thought to be more environmentally benign than synthetic pesticides (Glare et al. 2012). They are a particularly important tool for cropping system sustainability when plant resistance to a pathogen is lacking and effective cultural controls, such as crop rotation, are inadequate. Biologicals also benefit crop production systems beyond disease control: cover crops provide ecosystem services, and certain microbes promote plant growth and/or increase the availability of essential plant nutrients such as phosphate (Berg 2009; Compant et al. 2005; Morrissey et al. 2004; Vassilev et al. 2016). Cover crops are discussed in a later section.

Many microbial biological control agents are commercially available for control of a variety of plant diseases and for various uses (e.g. https://www.canr.msu.edu/news/biopesticides_for_use_in_greenhouses_in_the_us; https://nevegetable.org/table-24-microbial-disease-control-products). However, grower adoption of biological controls needs to increase. To achieve greater adoption, biological controls must compare favorably with synthetic pesticides in disease control efficacy and reliability over a wide range of environmental conditions (Fravel 2005; Glare et al. 2012). Biological control efficacy is often inconsistent because of the inherent complexity of the interactions among the biological control agent, the pathogen, and the soil and plant environments in which biological control occurs (Compant et al. 2005; Roberts and Kobayashi 2011). Soil and plant surfaces are highly heterogeneous with respect to compounds and conditions that affect expression of genes important for biological control, influencing the reliability and efficacy of disease control (Roberts and Kobayashi 2011). One approach to improving reliability and efficacy is to combine biological control microbes, either in a single formulation or through multiple treatments targeting strategic points in the disease cycle (Ji et al. 2006; Lemanceau and Alabouvette 1991; Pierson and Weller 1994; Roberts and Mattoo 2018). A combination of microbes with different ecological adaptations and mechanisms of disease control is thought to be more likely than an individual microbe to express traits important for disease control over a wider range of environmental conditions (Compant et al. 2005; Lemanceau and Alabouvette 1991; Roberts and Kobayashi 2011).
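The rationale for combining agents can be illustrated with a simple probability sketch. Assume, hypothetically, that each agent independently expresses its disease-control traits under a given set of field conditions with some probability; the chance that at least one agent in a mixture is effective is then 1 minus the product of the individual failure probabilities. Independence is a strong assumption, since agents can compete with or inhibit one another, so this calculation is an optimistic illustration rather than a prediction, and the probabilities used are invented.

```python
import math
from typing import Sequence


def p_any_effective(probabilities: Sequence[float]) -> float:
    """Probability that at least one agent is effective, ASSUMING each agent
    succeeds independently with the given probability."""
    for p in probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return 1.0 - math.prod(1.0 - p for p in probabilities)


if __name__ == "__main__":
    # Hypothetical per-agent probabilities of effective control in a given field.
    single = [0.6]
    mixture = [0.6, 0.5, 0.4]
    print(f"single agent: {p_any_effective(single):.2f}")
    print(f"three-agent mixture: {p_any_effective(mixture):.2f}")
```

Under these assumptions a three-agent mixture raises the chance of effective control from 0.60 to 0.88; antagonism between agents would lower that figure.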

Development of a reliable, efficacious biological control strategy for Sclerotinia sclerotiorum on oilseed rape in China. Hu et al. (2011, 2013, 2014, 2015, 2016, 2017, 2019) are developing microbial biological control agents to apply at strategic points in the S. sclerotiorum disease cycle, because cultural practices have limited effectiveness and breeding for resistance to this pathogen is challenging. S. sclerotiorum has a broad host range and persists in soil for long periods, making crop rotation unreliable (Hegedus and Rimmer 2005; Mahini et al. 2020; Nelson 1998), while breeding for disease resistance is hampered by limited oilseed rape gene pools and the need for multigenic resistance (Bardin and Huang 2001; Wu et al. 2013). Although commercial biological control products for Sclerotinia have been developed (de Vrije et al. 2001), they are not used in China, possibly because of currency exchange rates (Hu et al. 2005).

S. sclerotiorum overwinters as sclerotia in soil, which germinate to produce mycelia or apothecia. Ascospores released from apothecia are the primary inoculum for most diseases caused by S. sclerotiorum. These ascospores typically germinate on senescing flower petals, leading to infection of healthy leaf and stem tissue and to plant death (Abawi and Grogan 1979; Boland 2004). Biological control agents can be applied at three points in this disease cycle: as a seed treatment, where the agent is expected to colonize the oilseed rape plant endophytically or epiphytically and persist for the growing season; as a foliar spray at flowering, to position the agent in the plant canopy to prevent infection by germinating ascospores; and as a field spray before planting, to allow a mycoparasitic agent to colonize and kill sclerotia before primary inoculum is released. In field trials conducted at several locations, seed treatment formulations of three Bacillus isolates (B. subtilis BY-2 and Tu-100, B. megaterium A6), and spray applications of two of these isolates at flowering, compared favorably with the chemical fungicide carbendazim applied at flowering (Hu et al. 2013, 2014). Fungal mycoparasites of S. sclerotiorum sclerotia were also developed (Hu et al. 2015, 2016, 2017). Spraying one mycoparasite, Aspergillus aculeatus Asp-4, onto the soil before sowing rice in a rice-oilseed rape rotation significantly reduced disease incidence and apothecia formation compared with the non-treated control, suggesting that colonization and degradation of sclerotia by Asp-4, and the resulting reduction in ascospore production, led to disease control (Hu et al. 2016).

Although more work is needed, the use of multiple biological control agents to improve disease control efficacy and reliability showed promise for controlling S. sclerotiorum on oilseed rape and for increasing oilseed rape seed yield (Hu et al. 2019). The three Bacillus isolates (A6, BY-2, Tu-100) were applied individually and in combination as seed treatments and tested in field trials at four locations with different soils. Seed treatment with B. subtilis BY-2 alone significantly reduced disease at all four locations. At three of the locations, disease also decreased incrementally, although not significantly, as the number of strains in the treatment increased (3 strains > 2 strains > 1 strain in disease reduction). In pot studies of plant growth promotion, the seed treatment containing all three isolates resulted in greater seed yield than the non-treated control in four of the five soils tested (one soil not used in the field trials was added to this study). A seed treatment containing isolates A6 and Tu-100 significantly increased yield in one soil. No other single- or two-isolate treatment significantly increased yield in these pot studies (Hu et al. 2019). Plant growth promotion may be due to production of the plant hormone indole acetic acid (IAA) or to increased phosphate availability, as these strains produced IAA and increased soil phosphate levels (Hu et al. 2019).

Hu et al. are currently determining whether combining different biological agents applied at different points in the disease cycle improves biological control of S. sclerotiorum on oilseed rape. In an experiment underway at several locations, a seed treatment containing all three Bacillus isolates (A6, BY-2, Tu-100) is being combined with a pre-planting soil spray of the mycoparasite A. aculeatus Asp-4 to test whether disease control improves relative to either treatment applied alone (Hu et al. unpublished). The hypothesis is that the two application methods will reinforce each other. Applying the mycoparasite before planting oilseed rape should reduce the initial inoculum of S. sclerotiorum, leaving less disease to be controlled by the Bacillus seed treatment. Likewise, the subsequent Bacillus seed treatment should minimize disease caused by pathogen inoculum that escaped the mycoparasite soil spray.

Another approach to increasing the sustainability of disease control is to combine microbial biological control agents with reduced rates of synthetic pesticides (Glare et al. 2012). For example, a formulation of the mycoparasite Trichoderma sp. Tri-1, sprayed onto the soil before planting, was tested in combination with reduced rates of the chemical fungicide carbendazim, applied at oilseed rape flowering, for control of S. sclerotiorum (Hu et al. 2016). Encouragingly, in field trials, formulated Tri-1 combined with carbendazim at 75% of the recommended rate reduced disease incidence to levels obtained with carbendazim at the full recommended rate. An added benefit of this combined approach is that it could slow the development of pathogen resistance to both the biological control agent and the chemical pesticide. Although the target pathogen or pest can develop resistance to both biological control agents and chemical pesticides, using two or more unrelated tactics should slow the development of resistance to each (Glare et al. 2012; Siegwart et al. 2015; Tomasetto et al. 2017). Combinations of biological control agents with synthetic pesticides may also provide additive, if not synergistic, effects (Xu et al. 2011).

Going forward: Precision agriculture approach for use of biologicals for disease control. In addition to improving disease control efficacy and reliability, decreasing the costs associated with biologicals is another way to increase grower adoption (Glare et al. 2012). Higher costs, due to product pricing or the need for multiple applications (persistence of biological activity in the environment is typically short; Glare et al. 2012), could be reduced using the precision agriculture approach. Variable rate and site-specific spray application or seed-treatment delivery of biologicals only to the parts of the field where they are needed can readily be envisioned as more cost-effective than treating an entire field. As with precision application of chemical pesticides, wider use of the precision approach for biological control agents depends on detecting disease in time to restrict widespread dissemination of pathogen inoculum.
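The cost argument can be sketched with simple arithmetic. The Python example below compares whole-field application of a biological with application only to the affected fraction of a field, adding a per-hectare cost for the disease mapping that site-specific delivery requires. All prices, areas, the mapping-cost term, and the function names are hypothetical placeholders.

```python
def whole_field_cost(area_ha: float, cost_per_ha: float, applications: int) -> float:
    """Cost of applying a biological to the entire field."""
    return area_ha * cost_per_ha * applications


def site_specific_cost(area_ha: float, affected_fraction: float, cost_per_ha: float,
                       applications: int, mapping_cost_per_ha: float) -> float:
    """Cost of treating only the affected fraction, plus an assumed
    field-wide mapping cost needed to locate that fraction."""
    treated = area_ha * affected_fraction * cost_per_ha * applications
    return treated + area_ha * mapping_cost_per_ha


def break_even_fraction(cost_per_ha: float, applications: int,
                        mapping_cost_per_ha: float) -> float:
    """Affected fraction below which site-specific delivery is cheaper:
    f * c * n + m < c * n  =>  f < 1 - m / (c * n)."""
    return 1.0 - mapping_cost_per_ha / (cost_per_ha * applications)


if __name__ == "__main__":
    # Hypothetical 50-ha field, 20% affected, two applications at 40 per ha,
    # and 5 per ha for mapping (currency units are arbitrary).
    print(whole_field_cost(50, 40, 2))             # whole field
    print(site_specific_cost(50, 0.2, 40, 2, 5))   # site-specific
    print(break_even_fraction(40, 2, 5))           # break-even affected fraction
```

With these placeholder values, whole-field treatment costs 4000 units versus 1050 for site-specific delivery, and site-specific delivery stays cheaper as long as less than 93.75% of the field needs treatment. The break-even point moves lower as mapping costs rise relative to product costs.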

The approaches detailed here for biological control agents substantially increase the complexity of cropping system management and are more knowledge-intensive than those based on synthetic pesticides, creating another impediment to grower adoption (Chandler et al. 2011; Glare et al. 2012). Combinations of treatments (e.g., soil spray before planting, seed treatment, spray at flowering) need to be aligned with crop rotation, crop development, and other factors that can vary with region and weather. Handling and application requirements may differ among microbial biological control agents, and they clearly differ from those of chemical pesticides. To encourage grower adoption, communication with growers, grower education, and decision support tools (DSTs) that simplify management decisions are needed (Barratt et al. 2018; Chandler et al. 2011; Goldberger and Lehrer 2016; Marrone 2007).

Cover crops for sustainable, regenerative cropping systems and disease control

Cover crops collectively provide a diverse array of ecosystem services, including control of pathogens, insect pests, and weeds (Abawi and Widmer 2000; Larkin 2013, 2015; Mattoo and Teasdale 2010; Teasdale et al. 2004; Vukicevich et al. 2016). Cover crops also fix atmospheric CO2 and, when decomposed, build soil organic matter, contributing to soil health. They capture excess nutrients that would otherwise be lost from the soil, prevent soil erosion, and increase rainfall infiltration and soil water-holding capacity. Leguminous cover crops such as vetch, clover, peas, and beans fix atmospheric nitrogen and thereby improve soil fertility (Mattoo and Teasdale 2010). Mixtures of cover crops are being developed to increase the number of ecosystem services provided. For example, small grain cover crops such as cereal rye produce substantial biomass, build soil organic matter, prevent soil erosion, and provide reliable weed suppression both as a living cover crop and, after termination, as a surface mulch (Poffenbarger et al. 2015; Snapp et al. 2005). Hairy vetch, a legume, fixes atmospheric nitrogen and releases it into the soil during decomposition, but it grows slowly in the fall and decomposes rapidly, making it less effective for weed suppression. Mixtures of hairy vetch and cereal rye have been shown to provide greater aboveground biomass and weed suppression than hairy vetch monocultures and to release more nitrogen into the soil than cereal rye monocultures (Hayden et al. 2012, 2014; Poffenbarger et al. 2015).

Cover-crop-based production system for delivery of ecosystem services and disease control. A sustainable tomato cropping system developed by Abdul-Baki and Teasdale (1993) illustrates the use of cover crops to decrease cropping system environmental impact while providing a measure of disease and pest control. Conventional field-grown tomato production systems in the mid-Atlantic region of the United States typically consist of tomato transplanted into raised soil beds. Farmers rely on tillage and black polyethylene plastic for weed control and on synthetic fertilizer to maintain nitrogen fertility (Abdul-Baki et al. 1996). Synthetic pesticides are used to control diseases and insect pests. Excess nitrogen fertilizer is sometimes applied to maximize yield, potentially contributing to surface water and groundwater pollution because nitrogen recovery by the tomato plant is low (Abdul-Baki et al. 1996). Abdul-Baki and Teasdale integrated the leguminous cover crop hairy vetch into the field-grown tomato cropping system to increase its sustainability. In this system, hairy vetch is planted into raised soil beds in the fall and killed in early spring with a low dose of herbicide, and tomato seedlings are then no-till planted into the dead hairy vetch mulch covering the entire field. Black plastic is omitted from the sustainable tomato production system.

Notably, the sustainable tomato production system reduced the need for fungicide application because it delayed development of early blight, caused by Alternaria solani, a major tomato disease in the mid-Atlantic region of the US. The hairy vetch mulch inhibited splash dissemination of inoculum-bearing soil into the tomato canopy (Mills et al. 2002a, b). The crop was also more resistant to invasion and damage by the Colorado potato beetle (Teasdale et al. 2004). The sustainable cropping system also had a broad impact on tomato plant physiology. In field and greenhouse experiments, gene transcripts and proteins involved in diverse biological processes were up-regulated in leaves of tomato plants grown on hairy vetch relative to control plants grown on black plastic (Kumar et al. 2004, 2005; Mattoo and Abdul-Baki 2006). Up-regulated genes included those encoding ribulose-1,5-bisphosphate carboxylase/oxygenase (Rubisco), nitrogen-responsive glutamate synthase, the stress-defense-related protein glucose-6-phosphate dehydrogenase, chaperone proteins that stabilize native proteins (HSP70 and the ER protein BiP), and the antifungal plant defense proteins chitinase and osmotin. Up-regulation of these defense genes may have increased the disease resistance of tomato (Kumar et al. 2004; Mills et al. 2002a, b). Current work is directed at determining which components of the sustainable cropping system cause this change in tomato physiology and the resulting enhanced resistance to early blight (Mattoo et al. unpublished).

In addition to being more robust to pathogens and pests, the hairy vetch tomato production system had a lower environmental impact. Synthetic fertilizer inputs were reduced: the minimum nitrogen fertilizer rate needed to achieve maximum tomato yield was 80 lb/acre, compared with 170 lb/acre for the conventional black plastic system (Abdul-Baki et al. 1997). Soil erosion and water runoff were also reduced, because the hairy vetch mulch allowed more water to infiltrate the soil than the impervious black plastic did. Additionally, pesticide loads released from fields were substantially reduced (Rice et al. 2001, 2002). Importantly, tomato yield and economic return with the hairy vetch production system were greater than or equivalent to those obtained with the conventional tomato production system (Abdul-Baki et al. 1996; Kelly et al. 1995).
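
To put the reported nitrogen rates on a metric footing, the short sketch below converts 80 and 170 lb/acre to kg/ha and computes the relative cut. The conversion factor comes from the standard definitions of the pound and the acre, not from the studies cited; the function names are illustrative only.

```python
# Convert N fertilizer rates from lb/acre to kg/ha and compute the relative
# reduction of the hairy vetch system (80 lb/acre) vs. black plastic (170 lb/acre).
KG_PER_LB = 0.45359237
HA_PER_ACRE = 0.40468564224
LB_PER_ACRE_TO_KG_PER_HA = KG_PER_LB / HA_PER_ACRE  # about 1.121


def lb_per_acre_to_kg_per_ha(rate_lb_acre: float) -> float:
    """Convert an application rate in lb/acre to kg/ha."""
    return rate_lb_acre * LB_PER_ACRE_TO_KG_PER_HA


def percent_reduction(baseline: float, reduced: float) -> float:
    """Relative reduction of `reduced` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - reduced) / baseline


conventional = 170.0  # lb N/acre, black plastic system
hairy_vetch = 80.0    # lb N/acre, hairy vetch mulch system

print(f"{lb_per_acre_to_kg_per_ha(conventional):.0f} kg/ha vs "
      f"{lb_per_acre_to_kg_per_ha(hairy_vetch):.0f} kg/ha")
print(f"reduction: {percent_reduction(conventional, hairy_vetch):.0f}%")
```

In other words, the hairy vetch system needed roughly half the nitrogen fertilizer of the conventional system.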

Going forward – Precision approach for delivery of cover crops for disease control and other ecosystem services. As these examples show, cover crops can add to the resilience of a cropping system to disease while delivering other ecosystem services that help restore soil health. However, significant challenges remain in managing cover crops and cover crop mixtures to optimize the ecosystem services provided while preventing the cover crop from acting as a weed that competes for resources with the subsequent cash crop (Mirsky et al. 2013, 2017a, b; Wallace et al. 2017). Cover crops, like cash crops, respond to field environmental conditions that affect crop biomass and quality, two performance factors tightly correlated with the benefits cover crops deliver (Finney et al. 2016; Mirsky et al. 2017a, b). Current efforts are therefore directed at integrating cover crop use into a precision framework to facilitate cropping system management. New sensing technologies coupled with big data analytics can quantify cover crop performance factors, their spatial variability, and their impacts on ecosystem service delivery. Remote sensing offers many tools relevant to cover crops, including landscape-level models populated with estimates of percent land cover, the Normalized Difference Vegetation Index (NDVI) computed from cover crop reflectance to evaluate soil cover and cover crop biomass, and lidar to map vegetation structure, including height, density, and other characteristics, across a region (Hively et al. 2009; Prabhakara et al. 2015; Saha et al. 2018).
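
A minimal sketch of how NDVI might be turned into a cover crop biomass estimate follows. NDVI uses its standard definition, (NIR − Red)/(NIR + Red). Everything else is an assumption for illustration: the reflectance values, the calibration plots, and the choice of a linear biomass model. Real NDVI–biomass relationships are site- and species-specific and often saturate at high biomass.

```python
from typing import List, Tuple


def ndvi(nir: float, red: float) -> float:
    """NDVI = (NIR - Red) / (NIR + Red); NaN when both reflectances are zero."""
    total = nir + red
    if total == 0:
        return float("nan")
    return (nir - red) / total


def fit_line(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


# Hypothetical calibration plots: NDVI paired with clipped biomass (kg/ha).
cal_ndvi = [0.2, 0.4, 0.6, 0.8]
cal_biomass = [1000.0, 2000.0, 3000.0, 4000.0]
a, b = fit_line(cal_ndvi, cal_biomass)

# Hypothetical per-zone reflectance (NIR, red) from imagery of a cover crop field.
zones = {"zone_1": (0.45, 0.05), "zone_2": (0.30, 0.10), "zone_3": (0.20, 0.15)}
for name, (nir, red) in zones.items():
    v = ndvi(nir, red)
    print(f"{name}: NDVI={v:.2f}, est. biomass={a + b * v:.0f} kg/ha")
```

The calibration step reflects the usual practice of pairing imagery with ground-truth biomass samples. A nonlinear model could replace `fit_line` where saturation is observed.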

Geospatial tools to aid cropping system management

As illustrated in the sections above, efforts to increase cropping system sustainability while providing disease control can increase the management complexity of the farming operation. This is particularly true when cover crops are combined with biological control agents, or biological control agents are combined with other biological control agents or with reduced rates of synthetic pesticides, in an integrated, sustainable disease management approach. Additionally, the performance of biologicals such as cover crops and microbial biological control agents is more strongly influenced by the environment, and these influences must be managed (Siegwart et al. 2015). Other more sustainable approaches to disease control, such as cultivar mixtures and multilines of cash crops that increase genetic heterogeneity and reduce pesticide use, may also add management complexity. For example, an important pathogen of rice was controlled by alternating rows of two rice varieties within the field (Zhu et al. 2002). Intermingling cash crop genotypes in this way can alter the disease-resistance profile of a grower’s field and reduce or eliminate the pathogen (Browning and Frey 1969; Garrett and Mundt 1999; Wolfe 1985).

Ultimately, the impact of sustainable cropping system approaches on our sustainable future depends on grower adoption. Geospatial tools are being developed with grower adoption of sustainable cropping system approaches in mind (Kanatas et al. 2020; Lindblom et al. 2017; Rose et al. 2016). Technologies that are consistent with existing norms, are not complex, have observable impacts, and are easy to implement tend to be adopted more readily than technologies lacking these attributes (Lindblom et al. 2017). Sustainable farming approaches typically have the opposite attributes, even with regard to observable impacts; improvements in soil health, for example, can take years to become apparent. For this reason, geospatial tools are available or being developed that reduce the information burden, and thus the management burden, that complex, technology-driven sustainable farming operations place on the farmer.

Use of sensing technologies within a precision framework allows the design of geospatial tools that provide real-time, site-specific decision-making support (O’Grady and O’Hare 2017). Examples of currently available geospatial tools include autosteering, which reduces overlap of input (fertilizer, pesticide, etc.) applications and conserves energy as farm machinery moves through the field, and the Yara N-sensor, which optimizes site-specific nitrogen fertilizer application. The Yara N-sensor couples canopy reflectance in the red and near-infrared regions of the electromagnetic spectrum, used to determine crop nitrogen and biomass status, with a fertilizing algorithm that informs variable-rate farm equipment (Lindblom et al. 2017). Geospatial decision support tools (DSTs) are interactive and lead users through decision steps with various expected outcomes to aid effective decision making (Dicks et al. 2014). Here, DSTs translate scientific information into farm management decisions, which requires integrating different models, scales, and scientific disciplines. Most are based on a subset of research studies, data sets, or models designed to represent the system in question (Dicks et al. 2014; Parker et al. 2002). Certain software tools can facilitate effective farm management by efficiently recording data, analyzing it, and generating evidence-based recommendations (Rose et al. 2016; Rossi et al. 2014). Available geospatial tools typically do not consider the management complexities that arise from using biologicals in precision sustainable cropping systems. For this reason, Mirsky, Reberg-Horton, and colleagues are developing geospatial DSTs that integrate data important to cover crop performance from field-based, robot-based, and aerial sensors to provide the farmer with real-time, site-specific information for decisions that optimize the ecosystem services delivered by cover crops (Melkonian et al. 2017; Roberts et al. 2020).
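
The reflectance-to-prescription flow described for sensor-driven variable-rate application can be sketched as follows. This is not the Yara N-sensor algorithm, which the source does not describe. The NIR/red simple ratio, the breakpoints, the rates, and the direction of the response (more N where the canopy index is low) are all hypothetical placeholders, and the appropriate direction can differ by crop and growth stage.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Zone:
    zone_id: str
    nir: float  # near-infrared canopy reflectance (0-1)
    red: float  # red canopy reflectance (0-1)


def simple_ratio(zone: Zone) -> float:
    """NIR/red simple ratio; tends to increase with canopy biomass and greenness."""
    return zone.nir / zone.red


def n_rate(index: float, index_low: float = 2.0, index_high: float = 10.0,
           rate_max: float = 60.0, rate_min: float = 20.0) -> float:
    """Hypothetical linear prescription (kg N/ha): apply more N where the canopy
    index is low and less where it is high, clamped to [rate_min, rate_max]."""
    frac = (index - index_low) / (index_high - index_low)
    frac = min(max(frac, 0.0), 1.0)
    return rate_max - frac * (rate_max - rate_min)


def prescription(zones: List[Zone]) -> Dict[str, float]:
    """Map each management zone to a variable-rate N application."""
    return {z.zone_id: n_rate(simple_ratio(z)) for z in zones}


field = [Zone("A", 0.40, 0.04), Zone("B", 0.30, 0.05), Zone("C", 0.20, 0.10)]
for zone_id, rate in prescription(field).items():
    print(f"zone {zone_id}: {rate:.1f} kg N/ha")
```

The resulting per-zone rates are the kind of output a variable-rate controller would consume.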

Geospatial tools and DSTs have not enjoyed widespread use by farmers despite the benefits perceived by the researchers and others who developed them (Kanatas et al. 2020; Lindblom et al. 2017; O’Grady and O’Hare 2017; Rose et al. 2016). Many studies attribute this lack of use to farmers not participating in tool design (Cerf et al. 2012; Ditzler et al. 2018; Lindblom et al. 2017). Existing DSTs are based on information that researchers and DST developers consider necessary, but they do not capture all the needs of farmers. Other reasons for the lack of widespread use include perceived complexity, the users’ level of knowledge, poor user interface design, and tedious data input requirements (Lindblom et al. 2017). Going forward, to increase use of geospatial tools and DSTs, researchers and developers are pivoting to user-centered design, which is much more collaborative with the user (farmer). This design process includes the user in both the initial research (analysis) phase and the final evaluation phase. The analysis phase aims to understand the needs of the user and the context of use, while the evaluation phase serves to verify and refine the product (Lindblom et al. 2017).

Development of advanced crop germplasm

Disease-resistant crop germplasm is an efficient and environmentally friendly means of controlling disease, because pesticide use can be decreased when disease-resistant germplasm is planted. Genetically improved germplasm is also a proven way to encourage farmer adoption of more sustainable farming approaches. Farmers are very familiar with this type of technology (seed); new crop germplasm is extremely easy to implement; germplasm with enhanced disease resistance provides an easily observable benefit, namely increased yield under disease pressure with lower pesticide expense; and disease-resistant germplasm decreases the complexity of farming operations by reducing the need for time-dependent knowledge about pesticide application and disease forecasting (Mahlein et al. 2019).

GMO seed is a partial exception to this pattern of grower adoption: GMO crops are used extensively in some parts of the world and resisted elsewhere. Thirty countries produce GMO crops, with five countries (United States, Brazil, Argentina, Canada, and India) accounting for approximately 90% of total GMO crop production (Van Acker et al. 2017). In the United States, herbicide-tolerant (HT), insect-resistant (Bt), and stacked GMO seed containing both HT and Bt traits are used extensively in corn, cotton, and soybean (≥ 90% of planted acreage is GMO) and are also widely used in alfalfa, canola, and sugar beet production (USDA-ERS 2020). Practical concerns that decrease grower interest in GMO crops include the evolution of resistance in insect pests and weeds to the pesticides paired with the GMO traits. Other concerns include farmers’ access to a broad range of seed varieties, rising seed costs, and dependency on multinational seed companies (Van Acker et al. 2017). In addition, citizens in many countries oppose GMO crops because of perceived negative impacts on human health and the environment (e.g., Jayarman et al. 2012). The techniques used to develop crop germplasm (e.g., crop breeding vs. GMO technologies) therefore deserve consideration, because they can strongly affect adoption by farmers and consumers.

GIS combined with big data management and analytics will speed the development of advanced crop germplasm by enabling high-throughput screening systems, data analysis, and collaboration amongst scientists. Developing crop varieties resistant to biotic (pathogens, pests) and abiotic stresses has traditionally been slow and challenging, because the effects of stresses on crops are variable and become complex when stresses occur in combination (Suzuki et al. 2014; Taranto et al. 2018). These challenges are compounded by the need for crop germplasm that uses cropping system inputs more efficiently and yields edible tissues of higher nutritional quality. Crop germplasm development will require large germplasm collections as well as large plant populations generated by breeding or by advanced biotechnology techniques such as gene editing or genetic engineering, necessitating high-throughput screening for desired traits (Taranto et al. 2018).

Screening is thought to be the rate-limiting step in the development of advanced crop germplasm (Araus et al. 2018; Taranto et al. 2018). As with disease assessment in precision agriculture, disease phenotyping in germplasm development is typically done manually, leaving assessments open to subjectivity, human error, and poor repeatability. To increase speed and accuracy, high-throughput phenotyping methods for disease assessment in these screening operations are being developed based on image processing (inherently a geospatial technique), machine learning, and big data management and analytics (Araus et al. 2018). Imaging technology can quantify the percentage of leaf area diseased, and several studies have demonstrated digital phenotyping at the crop or canopy scale (Mahlein et al. 2019). Big data management and analytics will also support the advanced molecular technologies used in plant germplasm development, such as QTL mapping; genomics, transcriptomics, and proteomics; genome editing; and bioinformatic analysis (Taranto et al. 2018).
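
To make "percent leaf area diseased" concrete, here is a minimal image-processing sketch that classifies pixels and reports lesion pixels as a share of all leaf pixels. The color rule (bright pixels are background, green-dominant pixels are healthy, everything else is lesion) is a hypothetical stand-in. Production pipelines typically use calibrated color spaces or trained classifiers, and this simple rule would misclassify shadows and glare.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), 0-255


def classify(p: Pixel) -> str:
    """Hypothetical rule: bright pixels are background, green-dominant pixels
    are healthy leaf, everything else is lesion."""
    r, g, b = p
    if r > 200 and g > 200 and b > 200:
        return "background"
    if g > r and g > b:
        return "healthy"
    return "lesion"


def percent_diseased(image: List[List[Pixel]]) -> float:
    """Percent of leaf pixels (healthy + lesion) classified as lesion."""
    counts = {"background": 0, "healthy": 0, "lesion": 0}
    for row in image:
        for p in row:
            counts[classify(p)] += 1
    leaf = counts["healthy"] + counts["lesion"]
    return 100.0 * counts["lesion"] / leaf if leaf else 0.0


# Tiny synthetic leaf on a white backdrop: W = background, G = healthy, L = lesion.
W, G, L = (255, 255, 255), (40, 160, 50), (120, 80, 40)
leaf_image = [[W, G, G, W],
              [G, L, G, G],
              [W, G, L, W]]
print(f"{percent_diseased(leaf_image):.1f}% of leaf area diseased")
```

Because each image is scored by the same rule, this approach avoids the rater-to-rater subjectivity described above, although its accuracy depends entirely on the classification rule.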

Geographic information systems and big data analytics to leverage collaboration

Research efforts directed at increasing cropping system sustainability and resilience to disease are heavily reliant on interdisciplinary collaboration, data sharing, and access to the most advanced analytic tools. For example, development of advanced crop germplasm requires extensive collaboration to provide skills in plant breeding, molecular biology, engineering, and bioinformatics. Also needed are collaborations across regions to test the resulting advanced germplasm for expression of disease resistance and other traits under varying environmental conditions (Taranto et al. 2018). Research directed at implementing precision approaches in disease control and cover crop management is also heavily reliant on cross-disciplinary collaborative efforts spread across large geographic regions.

Agricultural Collaborative Research Outcomes System (AgCROS)

To enable collaboration and speed technology development, the USDA Agricultural Research Service (USDA-ARS) has developed AgCROS, a GIS-based platform designed to foster the sharing of research data, to support the development of DSTs for both scientists and producers, and to provide a framework for expediting the development of agricultural models and algorithms. Based on technology from Esri, AgCROS fuses real-time data from Internet of Things (IoT) sensor networks, field data collected in situ, and remotely sensed data from UAVs and spaceborne sensors into a single platform. Scientists can use it to rapidly develop new techniques and deploy them to producers, who can in turn provide immediate feedback to improve the science.

Hosted on Microsoft’s Azure cloud, AgCROS uses a suite of core Esri components on top of a loosely organized data infrastructure. Unlike traditional data warehouses, which require significant upfront design costs, the AgCROS data management approach starts by ensuring that data are captured in the cloud in a data pool that is organized around a scientific objective or project and linked or contextualized using GIS at its core. Because most of the data in AgCROS are geospatial, the Esri components are configured in a multi-tenant architecture to manage a variety of data types, including tabular, vector, and raster data, which are often voluminous (i.e., big data).

Projects act as tenants, allowing scientists to control their environment before releasing or publishing results to the community on the AgCROS platform or exposing data through APIs to other platforms. Because Esri software is designed to operate in a federation, or “system of systems,” called WebGIS, data can easily be shared as web services to and from AgCROS, reducing unnecessary data movement, which can be significant in the “big data” world. This is also important when pushing information products such as mobile DSTs to the edge in remote areas where bandwidth is limited but decisions must be made quickly.

Use of AgCROS to foster collaboration between USDA-ARS and the agricultural community to control Citrus Greening in the United States

In the disease management arena, and as an example of this new paradigm in use by one of the tenants, AgCROS is being used by the CRAFT Foundation, Inc. to bring together constituencies from the more than $3 billion Florida citrus industry to combat the spread of Huanglongbing (HLB), or citrus greening, which is transmitted by citrus psyllids. More than 40 growers across 14 Florida counties, covering over 2,000 acres, are collaborating with USDA-ARS, state inspectors, and third-party contractors to develop new disease control practices while new disease-resistant cultivars and other disease control measures are being developed. Because the threat to their businesses is so imminent, producers have, unusually, agreed to share data on their farm management practices publicly so that researchers receive immediate feedback on the efficacy of their scientific recommendations.

For HLB disease control development, AgCROS provides a spatial perspective of the on-farm network of volunteer growers, who sign up via the interface in Fig. 1 and provide data on the land’s intended use, planned planting and management practices, pest and disease management used, cost analysis, etc. With this information, researchers can approve applications based on scientific merit and begin designing tree-planting experiments using the GIS tools in AgCROS. Because the system is public, growers can monitor the results of other growers’ practices to quickly improve their own. The design patterns shown in Fig. 2 are created to test a variety of potential remedies for psyllid control, including reflective mulch, individual protective covering (IPC), and kaolin clay spray, as alternatives to traditional chemical control. Because data are captured continuously from growers via a mobile application, the collected data can be aggregated and correlated with treatment outcomes, as displayed in Fig. 3. While correlating treatments with outcomes at the field level supports confidence in and adoption of best practices, monitoring tree health and HLB transmission from a regional perspective is key to providing data on the economic health of the industry to authorities such as the Florida Department of Agriculture and Consumer Services (FDACS).
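
As a rough illustration of aggregating grower reports by treatment, the sketch below groups records by treatment and reports the number of growers and the mean outcome. The record layout, the grower IDs, and the outcome metric (fraction of sampled trees testing HLB-positive) are hypothetical; the source does not describe the AgCROS data schema. These per-treatment means are descriptive only. Attributing differences to treatments would rely on the experimental designs researchers set up, not on this summary.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical grower reports: (grower_id, treatment, fraction of sampled trees HLB-positive)
reports: List[Tuple[str, str, float]] = [
    ("G01", "reflective mulch", 0.10),
    ("G02", "reflective mulch", 0.20),
    ("G03", "IPC", 0.00),
    ("G04", "IPC", 0.05),
    ("G05", "kaolin clay spray", 0.25),
    ("G06", "chemical control", 0.30),
    ("G07", "chemical control", 0.40),
]


def summarize(rows: List[Tuple[str, str, float]]) -> Dict[str, Tuple[int, float]]:
    """Group reports by treatment; return {treatment: (n_growers, mean outcome)}."""
    by_treatment: Dict[str, List[float]] = defaultdict(list)
    for _grower, treatment, outcome in rows:
        by_treatment[treatment].append(outcome)
    return {t: (len(v), mean(v)) for t, v in by_treatment.items()}


summary = summarize(reports)
for treatment, (n, avg) in sorted(summary.items(), key=lambda kv: kv[1][1]):
    print(f"{treatment:18s} n={n}  mean HLB-positive fraction={avg:.3f}")
```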

As with any pathogen, early detection of HLB is key to preventing long-term loss. Recent research into the use of canines for early detection (Gottwald 2020) shows promise as an early detection system when coupled with the field data collection and GIS techniques described above. Additionally, combining UAV-based phenotyping from imagery with field data collection and novel management practices gives researchers an overall picture of the disease. This is also done in AgCROS through the on-farm network, using time-series NDVI and NDRE (Normalized Difference Red Edge) indices (Ampatzidis et al. 2019). Specifically, as shown in Fig. 4, growth metrics such as canopy area and volume for trees identified from UAV flights can be linked to plant nutrition and diagnostic data collected by FDACS.
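
One way a per-tree NDRE time series could be used is sketched below. The code computes NDRE with its standard definition, (NIR − RedEdge)/(NIR + RedEdge), fits a least-squares trend across flights, and flags trees whose index is falling. The flight data, tree IDs, and decline threshold are hypothetical, and this is not the method of Ampatzidis et al. (2019).

```python
from typing import Dict, List, Tuple


def ndre(nir: float, red_edge: float) -> float:
    """Normalized Difference Red Edge index: (NIR - RE) / (NIR + RE)."""
    return (nir - red_edge) / (nir + red_edge)


def slope(days: List[float], values: List[float]) -> float:
    """Least-squares slope of values vs. days (index units per day)."""
    n = len(days)
    md, mv = sum(days) / n, sum(values) / n
    sxx = sum((d - md) ** 2 for d in days)
    sxy = sum((d - md) * (v - mv) for d, v in zip(days, values))
    return sxy / sxx


# Hypothetical UAV flights: tree_id -> [(day_of_season, NIR, red-edge reflectance)]
flights: Dict[str, List[Tuple[float, float, float]]] = {
    "tree_001": [(0, 0.50, 0.25), (30, 0.50, 0.25), (60, 0.50, 0.25)],
    "tree_002": [(0, 0.50, 0.25), (30, 0.45, 0.27), (60, 0.40, 0.28)],
}

DECLINE_THRESHOLD = -0.001  # hypothetical NDRE change per day that triggers scouting

flagged = []
for tree_id, obs in flights.items():
    days = [d for d, _, _ in obs]
    series = [ndre(nir, re) for _, nir, re in obs]
    trend = slope(days, series)
    if trend < DECLINE_THRESHOLD:
        flagged.append(tree_id)
    print(f"{tree_id}: NDRE trend {trend:+.5f}/day")
print("flag for ground diagnostics:", flagged)
```

Flagged trees would be candidates for the kind of ground diagnostics the text links to UAV-derived metrics.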

While UAV flights provide the necessary spectral, temporal, and spatial resolution for the on-farm network, they are cost-prohibitive for viewing HLB at the national and global scale. As suggested by Lu et al. (2015), WorldView-3 satellite data, with their high spatial, spectral, and temporal resolution, can be used at scale by applying machine learning (ML) techniques to tree identification. In the CRAFT example in Fig. 4, identified trees with associated ground truth can be fed into ML models as training data for later application to other parts of the world. As an example of this approach, the USDA Cropland Data Layer (CDL) from CropScape (Han et al. 2012) can be used to identify areas of the country where citrus or orange groves exist. The areas identified in Fig. 5 are potential candidates for testing the ML models, sources of further ground truth or training data beyond the CRAFT sites, and potential future CRAFT partners or test sites for the on-farm network. Once field sites are identified, a data science environment using Jupyter Notebooks on AgCROS is constructed, as shown in Fig. 6, to train an ML model on training data defined by examining the multispectral imagery and drawing polygons around known tree areas. In this example, using an approach similar to that of Ampatzidis et al. (2019), 4-band National Agriculture Imagery Program (NAIP) imagery from USDA was used, and object detection in ArcGIS Pro was applied to train a convolutional neural network (CNN) to extract the precise locations of trees in the grove. Once the model was trained, spectral indices indicative of tree health were extracted at each tree location, resulting in a map of the relative health of the trees. Using the ML framework in Esri’s ArcGIS Pro, individual trees were identified, shown as red circles in Fig. 7, with an accuracy greater than 90% after 10 epochs of training.
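
The CDL screening step can be sketched as a simple class-code mask over a raster tile. Two details are assumptions that should be checked against the CDL legend and metadata for the year used: citrus is taken to correspond to class codes 72 (Citrus) and 212 (Oranges), and pixels are taken to be 30 m. The tile is synthetic.

```python
from typing import List, Set

# Assumed CDL class codes for citrus; verify against the CDL legend for the year used.
CITRUS_CODES: Set[int] = {72, 212}   # 72 = Citrus, 212 = Oranges (assumed)
PIXEL_AREA_M2 = 30 * 30              # assumed 30 m CDL pixels
M2_PER_HA = 10_000
HA_PER_ACRE = 0.40468564224


def citrus_mask(cdl: List[List[int]]) -> List[List[bool]]:
    """True where the CDL pixel is classified as a citrus class."""
    return [[code in CITRUS_CODES for code in row] for row in cdl]


def citrus_area_ha(cdl: List[List[int]]) -> float:
    """Total citrus area in hectares: citrus pixel count times pixel area."""
    n_pixels = sum(sum(row) for row in citrus_mask(cdl))
    return n_pixels * PIXEL_AREA_M2 / M2_PER_HA


# Hypothetical 3 x 3 tile of CDL class codes.
tile = [[72, 72, 1],
        [212, 5, 72],
        [0, 212, 212]]
area = citrus_area_ha(tile)
print(f"citrus: {area:.2f} ha ({area / HA_PER_ACRE:.2f} acres)")
```

The mask only identifies candidate grove areas at field scale. Locating individual trees within those areas is the job of the object detection model.
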
Additionally, NDVI can be computed for the trees selected by the object identification process as an indirect indicator of tree health, as shown in Esri’s ArcGIS Pro in Fig. 8, where green indicates high NDVI values and red indicates low values.

By combining the individual trees shown in Fig. 7 with NDVI, individual tree health is indicated by color
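
Combining detected trees with NDVI amounts to a zonal statistic: the mean NDVI of the pixels inside each detected crown, followed by a color label. The sketch below works on in-memory band arrays in pixel coordinates. In practice the bands would be read from the 4-band NAIP imagery with a raster library. The crown circles, the band values, and the 0.6 green/red threshold are hypothetical, and this is not ArcGIS Pro’s implementation.

```python
import math
from typing import Dict, List, Tuple

Raster = List[List[float]]


def mean_ndvi_in_circle(nir: Raster, red: Raster, row: float, col: float,
                        radius: float) -> float:
    """Mean NDVI of pixels whose centers lie within `radius` pixels of (row, col)."""
    values = []
    for r in range(len(nir)):
        for c in range(len(nir[0])):
            if math.hypot(r - row, c - col) <= radius:
                total = nir[r][c] + red[r][c]
                if total > 0:
                    values.append((nir[r][c] - red[r][c]) / total)
    return sum(values) / len(values) if values else float("nan")


def tree_health(nir: Raster, red: Raster, trees: Dict[str, Tuple[float, float, float]],
                threshold: float = 0.6) -> Dict[str, Tuple[float, str]]:
    """Label each detected tree 'green' (high NDVI) or 'red' (low NDVI or no data)."""
    out = {}
    for tree_id, (row, col, radius) in trees.items():
        v = mean_ndvi_in_circle(nir, red, row, col, radius)
        out[tree_id] = (v, "green" if v >= threshold else "red")
    return out


# Hypothetical 5 x 5 NIR and red bands (reflectance) and two detected crowns.
nir_band = [[0.5] * 5 for _ in range(5)]
red_band = [[0.05 if r + c <= 3 else 0.2 for c in range(5)] for r in range(5)]
detections = {"tree_A": (1, 1, 1.0), "tree_B": (3, 3, 1.0)}

for tree_id, (v, color) in tree_health(nir_band, red_band, detections).items():
    print(f"{tree_id}: mean NDVI {v:.2f} -> {color}")
```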

From an ML perspective, the CRAFT project provides a mechanism for modelers to continuously collect training data that improve detection accuracy. This creates the opportunity to quantify citrus at the individual-tree level, a finer scale than the CDL’s quantification of citrus cropland. In other words, the symbiotic collaboration between growers and scientists operating on a common geoinformatics platform such as AgCROS not only improves the science but also provides a practical environment for rapidly deploying and monitoring solutions to HLB. Given the cloud’s ability to scale to the national and global level, these techniques can provide a more accurate and holistic view of the global citrus industry.
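
The text reports detection accuracy above 90% but does not say how that figure was computed. One common way to score tree detections against grower-verified ground truth is to match predicted and true tree centers within a distance tolerance, then report precision, recall, and F1. The sketch below does this with greedy nearest-neighbor matching, which is simpler than, and not always as good as, an optimal assignment such as the Hungarian algorithm. The points and the 2 m tolerance are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def match_detections(pred: List[Point], truth: List[Point], tol: float) -> Tuple[int, int, int]:
    """Greedy one-to-one matching of predicted to ground-truth tree centers.

    Each prediction claims the nearest still-unmatched truth point within `tol`.
    Returns (true positives, false positives, false negatives)."""
    unmatched = list(range(len(truth)))
    tp = 0
    for px, py in pred:
        best, best_d = None, tol
        for i in unmatched:
            d = math.hypot(px - truth[i][0], py - truth[i][1])
            if d <= best_d:
                best, best_d = i, d
        if best is not None:
            unmatched.remove(best)
            tp += 1
    return tp, len(pred) - tp, len(truth) - tp


def scores(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical grower-verified tree centers and model detections (meters).
truth_pts = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]
pred_pts = [(1.0, 0.0), (10.0, 1.0), (21.0, 1.0), (50.0, 50.0)]
tp, fp, fn = match_detections(pred_pts, truth_pts, tol=2.0)
print("TP/FP/FN:", tp, fp, fn, "precision/recall/F1:", scores(tp, fp, fn))
```

As growers add verified trees, re-running the same scoring on the enlarged ground truth gives a running check on whether retraining actually improves detection.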

Going forward: collaboration amongst the agricultural community on a global scale

Of course, the citrus industry does not operate in a vacuum; there are complex interactions amongst many agroecosystems at a variety of scales. To model these interactions in a virtual world, the WebGIS pattern or framework can connect the agricultural community at the global scale, allowing regional applications like the HLB example presented here to contribute to food security in underserved areas of the world. At the same time, remote data repositories and models operating in the cloud can be pushed to the edge and made available to farm advisors, farmers, and others with devices that can access the cloud. Just as in the case of the Florida growers, these devices can send critical ground truth back to the cloud for analysis by scientists in any part of the world. Acting as a “Digital Twin,” AgCROS in this context moves from being a multi-tenant data repository to being an automated ML environment that adapts in step with changes in the real world.

Conclusions

A second Green Revolution is needed, broadened in scope to include not only increased crop yield but also sustainable, regenerative cropping systems that yield plant foods of higher nutritional value. This includes more sustainable approaches to plant disease control that are compatible with improved crop germplasm and with these cropping systems. The digital revolution will be key, as it provides new opportunities for smarter use of agricultural resources (Pingali 2012). As outlined above, GIS as the organizing principle, coupled with big data management and analytics, will contribute not only as a component of new plant disease management and cropping system approaches but also as infrastructure that speeds the development and adoption of needed new technologies and approaches. Importantly, farmer adoption will be driven not only by geospatial tools that decrease the complexity of disease and cropping system management, but also by GIS and other information technologies that involve farmers in developing these technologies.

The first Green Revolution (1965–1985) and the post-Green Revolution period (1985–2005) produced tremendous yield growth in staple crops, helping to feed the world despite large population increases and decreasing land availability for agriculture. Yield increases in developing countries were 208% for wheat, 109% for rice, 157% for maize, 78% for potatoes, and 36% for cassava (Pingali 2012). Contributing to the success of these periods was the establishment of the Consultative Group on International Agricultural Research (CGIAR), which helped transfer technology and knowledge from food-secure nations to national agricultural programs across the developing world for subsequent dissemination and regional adaptation. Modern germplasm was adopted on the majority of croplands. This resulted in poverty reduction and lower staple food prices, which allowed diets to diversify toward micronutrient-dense foods and improved nutrition for many (Evenson and Gollin 2003; Pingali 2012; Renkow and Byerlee 2010).

As with the first Green Revolution, spillover of technology from food-secure nations to regions of the world without food security is needed going forward. Here the digital revolution can have a tremendous impact on world food security and environmental problems by augmenting the transfer of information not only from institutions such as CGIAR but also, as illustrated by the HLB example above, from platforms such as AgCROS located anywhere in the world. Geoinformatic decision support tools can aid agricultural management decisions and help sustainably increase crop yield in less developed regions of the world that lack the considerable infrastructure needed for precision agriculture approaches. One can envisage models hosted on another continent, run with local inputs on soils, weather, crop genetics, etc., whose outputs are pushed back to the edge via cloud-hosted services and accessed from a Web browser on a mobile phone or tablet to provide best practices to local farmers (Janssen et al. 2017). Thus, the digital revolution can contribute to sustainably feeding the future world population in many different, regionally specific ways, all of which will be needed.

References

Abawi GS, Grogan RG (1979) Epidemiology of diseases caused by Sclerotinia species. Phytopathology 69:899–904

Abawi GS, Widmer TL (2000) Impact of soil health management practices on soilborne pathogens, nematodes and root disease of vegetable crops. Appl Soil Ecol 15:37–47

Abdul-Baki AA, Teasdale JR (1993) A no-tillage tomato production system using hairy vetch and subterranean clover mulches. HortSci 28:106–108

Abdul-Baki AA, Teasdale JR, Korcak RF (1997) Nitrogen requirements of fresh-market tomatoes on hairy vetch and black polyethylene mulch. HortSci 32:217–221

Abdul-Baki AA, Teasdale JR, Korcak RF, Chitwood DJ, Huettel RN (1996) Fresh-market tomato production in a low-input alternative system using cover-crop mulch. HortSci 31:65–69

Adeyemi O, Grove I, Peets S, Norton T (2017) Advanced monitoring and management systems for improving sustainability in precision irrigation. Sustainability 9:353. https://doi.org/10.3390/su9030353

Albetis J, Duthoit S, Guttler F, Jacquin A, Goulard M, Poilvé H, Féret JB, Dedieu G (2017) Detection of flavescence dorée grapevine disease using unmanned aerial vehicle (UAV) multispectral imagery. Remote Sens 9:308

Ampatzidis Y, Partel V, Meyering B, Albrecht U (2019) Citrus rootstock evaluation utilizing UAV-based remote sensing and artificial intelligence. Comput Electron Agric 164:104900. https://doi.org/10.1016/j.compag.2019.104900

Araus JL, Kefauver SC, Zaman-Allah M, Olsen MS, Cairns JE (2018) Translating high-throughput phenotyping into genetic gain. Trends Plant Sci 23:451–466

Bardin SD, Huang HC (2001) Research on biology and control of Sclerotinia diseases in Canada. Can J Plant Pathol 23:88–98

Barratt BIP, Moran VC, Bigler F, van Lenteren JC (2018) The status of biological control and recommendations for improving uptake for the future. Biocontrol 63:155–167

Berg G (2009) Plant-microbe interactions promoting plant growth and health: perspectives for controlled use of microorganisms in agriculture. Appl Microbiol Biotechnol 84:11–18

Bestelmeyer BT, Marcillo G, McCord SE, Mirsky SB, Boglen GE, Neven LG, Peters DC, Sohoulande Djebou DC, Wakie T (2020) Scaling up agricultural research with artificial intelligence. IEEE IT Profess 22:32–38

Boland GJ (2004) Fungal viruses, hypovirulence, and biological control of Sclerotinia species. Can J Plant Pathol 26:6–18

Browning JA, Frey KJ (1969) Multiline cultivars as a means of disease control. Annu Rev Phytopathol 7:355–382

Cerf M, Jeuffroy M-H, Prost L, Meynard J-M (2012) Participatory design of agricultural decision support tools: taking account of the use situations. Agron Sustain Dev 32:899–910

Chandler D, Bailey AS, Tatchell GM, Davidson G, Greaves J, Grant WP (2011) The development, regulation and use of biopesticides for integrated pest management. Phil Trans R Soc B 366:1987–1998

Chen X, Ma J, Qiao H, Cheng D, Xu Y, Zhao Y (2007) Detecting infestation of take-all disease in wheat using Landsat Thematic Mapper imagery. Int J Remote Sens 28:5183–5189

Compant S, Duffy B, Nowak J, Clément C, Barka E (2005) Use of plant growth-promoting bacteria for biocontrol of plant diseases: principles, mechanisms of action, and future prospects. Appl Environ Microbiol 71:4951–4959

Cook CG, Escobar DE, Everitt JH (2001) Utilizing airborne video imagery in kenaf management and production. Ind Crops Prod 9:205–210

Cook RJ, Papendick RI (1972) Influence of water potential of soils and plants on root disease. Annu Rev Phytopathol 10:349–374

Delgado JA, Short NM Jr, Roberts DP, Vandenberg B (2019) Big data analysis for sustainable agriculture on a geospatial cloud framework. Front Sustain Food Syst 3:54. https://doi.org/10.3389/fsufs.2019.00054

de Vrije T, Antoine N, Buitelaar RM, Bruckner S, Dissevelt M, Durand A, Gerlagh M, Jones EE, Luth P, Oostra J, Ravensberg WJ, Renaud R, Rinzema A, Weber FJ, Whipps JM (2001) The fungal biocontrol agent Coniothyrium minitans: production by solid-state fermentation, application and marketing. Appl Microbiol Biotechnol 56:58–68

Dicks LV, Walsh J, Sutherland WJ (2014) Organising evidence for environmental management decisions: a ‘4S’ hierarchy. Trends Ecol Evol 29:607–613

Dimkpa CO, Bindraban PS (2016) Fortification of micronutrients for efficient agronomic production: a review. Agron Sustain Dev 36:7. https://doi.org/10.1007/s13593-015-0346-6

Ditzler L, Klerkx L, Chan-Dentoni J, Posthumus H, Krupnik TJ, López Ridaura S, Andersson JA, Baudron F, Groot JCJ (2018) Affordances of agricultural systems analysis tools: a review and framework to enhance tool design and implementation. Agric Syst 164:200–230

Evenson RE, Gollin D (2003) Assessing the impact of the green revolution, 1960 to 2000. Science 300:758–762

Fatima T, Sobolev AP, Teasdale JR, Kramer M, Bunce J, Handa AK, Mattoo AK (2016) Fruit metabolite networks in engineered and non-engineered tomato genotypes reveal fluidity in a hormone and agroecosystem specific manner. Metabolomics 12:103. https://doi.org/10.1007/s11306-016-1037-2

Fedoroff NV, Battisti DS, Beachy RN, Cooper PJM, Fischhoff DA, Hodges CN, Knauf VC, Lobell D, Mazur BJ, Molden D, Reynolds MP, Ronald PC, Rosegrant MW, Sanchez PA, Vonshak A, Zhu J-K (2010) Radically rethinking agriculture for the 21st century. Science 327:833–834

Finney DM, White CM, Kaye JP (2016) Biomass production and carbon/nitrogen ratio influence ecosystem services from cover crop mixtures. Agron J 108:39–52

Foley JA, DeFries R, Asner GP, Barford C, Bonan G, Carpenter SR, Chapin FS, Coe MT, Daily GC, Gibbs HK, Helkowski JH, Holloway T, Howard EA, Kucharik CJ, Monfreda C, Patz JA, Prentice IC, Ramankutty N, Snyder PK (2005) Global consequences of land use. Science 309:570–574

Foley JA, Ramankutty N, Brauman KA, Cassidy ES, Gerber JS, Johnston M, Mueller ND, O’Connell C, Ray DK, West PC, Balzer C, Bennett EM, Carpenter SR, Hill J, Monfreda C, Polasky S, Rockström J, Sheehan J, Siebert S, Tilman D, Zaks DPM (2011) Solutions for a cultivated planet. Nature 478:337–342

Fravel DR (2005) Commercialization and implementation of biocontrol. Annu Rev Phytopathol 43:337–359

Garrett KA, Mundt CC (1999) Epidemiology in mixed host populations. Phytopathology 89:984–990

Gebbers R, Adamchuk VI (2010) Precision agriculture and food security. Science 327:828–831

Glare T, Caradus J, Gelernter W, Jackson T, Keyhani N, Köhl J, Marrone P, Morin L, Stewart A (2012) Have biopesticides come of age? Trends Biotechnol 30:250–258

Gold KM, Townsend PA, Chlus A, Herrmann I, Couture JJ, Larson ER, Gevens AJ (2020) Hyperspectral measurements enable pre-symptomatic detection and differentiation of contrasting physiological effects of late blight and early blight in potato. Remote Sensing 12:286. https://doi.org/10.3390/rs12020286

Goldberger JR, Lehrer N (2016) Biological control adoption in western U.S. orchard systems: results from grower surveys. Biol Control 102:101–111

Gottwald T, Poole G, McCollum T, Hall D, Hartung J, Bai J, Luo W, Posny D, Duan Y, Taylor E, Da Graça J, Polek M, Louws F, Schneider W (2020) Canine olfactory detection of a vectored phytobacterial pathogen, Liberibacter asiaticus, and integration with disease control. Proc Natl Acad Sci USA 117:3492–3501

Han W, Yang Z, Di L (2012) CropScape: a web service-based application for exploring and disseminating US conterminous geospatial cropland data products for decision support. Comput Electron Agric 84:111–123

Hayden ZD, Brainard DC, Henshaw B, Ngouajio M (2012) Winter annual weed suppression in rye-vetch cover crop mixtures. Weed Technol 26:818–824

Hayden ZD, Ngouajio M, Brainard D (2014) Rye-vetch mixture proportion tradeoffs: cover crop productivity, nitrogen accumulation, and weed suppression. Agron J 106:904–914

Hegedus DD, Rimmer SR (2005) Sclerotinia sclerotiorum: when ‘to be or not to be’ a pathogen? FEMS Microbiol Lett 251:177–184

Hively WD, Lang M, McCarty G, Keppler J, Sadeghi A, McConnell L (2009) Using satellite remote sensing to estimate winter cover crop nutrient uptake efficiency. J Soil Water Conserv 64:303–313

Hu X, Roberts DP, Jiang M, Zhang Y (2005) Decreased incidence of disease caused by Sclerotinia sclerotiorum and improved plant vigor of oilseed rape with Bacillus subtilis Tu-100. Appl Microbiol Biotechnol 68:802–807

Hu X, Roberts DP, Maul JE, Emche SE, Liao X, Guo X, Liu Y, McKenna LF, Buyer J, Liu S (2011) Formulations of the endophytic bacterium Bacillus subtilis Tu-100 suppress Sclerotinia sclerotiorum on oilseed rape and improve plant vigor in field trials conducted at separate locations. Can J Microbiol 57:539–546

Hu X, Roberts DP, Xie L, Maul JE, Yu C, Li Y, Zhang S, Xing L (2013) Bacillus megaterium A6 suppresses Sclerotinia sclerotiorum on oilseed rape in the field and promotes oilseed rape growth. Crop Prot 52:151–158

Hu X, Roberts DP, Xie L, Maul JE, Yu C, Li Y, Jing M, Liao X, Zhe C, Liao X (2014) Formulations of Bacillus subtilis BY-2 suppress Sclerotinia sclerotiorum on oilseed rape in the field. Biol Control 70:54–64

Hu X, Roberts DP, Xie L, Maul JE, Yu C, Li Y, Zhang Y, Qin L, Liao X (2015) Components of a rice-oilseed rape production system augmented with Trichoderma sp. Tri-1 control Sclerotinia sclerotiorum on oilseed rape. Phytopathology 105:1325–1333

Hu X, Roberts DP, Xie L, Yu C, Li Y, Qin L, Hu L, Zhang Y, Liao X (2016) Use of formulated Trichoderma sp. Tri-1 in combination with reduced rates of chemical pesticide for control of Sclerotinia sclerotiorum on oilseed rape. Crop Prot 79:124–127

Hu X, Qin L, Roberts DP, Lakshman DK, Gong Y, Maul JE, Xie L, Yu C, Li Y, Hu L, Liao X, Liao X (2017) Characterization of mechanisms underlying degradation of sclerotia of Sclerotinia sclerotiorum by Aspergillus sp. Asp-4 using a combined qRT-PCR and proteomic approach. BMC Genomics 18:674. https://doi.org/10.1186/s12864-017-4016-8

Hu X, Roberts DP, Xie L, Qin L, Li Y, Liao X, Gu C, Han P, Liao X (2019) Seed treatment containing Bacillus subtilis BY-2 in combination with other Bacillus isolates for control of Sclerotinia sclerotiorum on oilseed rape. Biol Control 133:50–57

Janssen SJC, Porter CH, Moore AD, Athanasiadis IN, Foster I, Jones JW, Antle JM (2017) Towards a new generation of agricultural system data, models, and knowledge products: information and communication technology. Agric Syst 155:200–212

Jayaraman K, Jia H (2012) GM phobia spreads in South Asia. Nat Biotechnol 30:1017–1019

Ji P, Campbell HL, Kloepper JW, Jones JB, Suslow TV, Wilson M (2006) Integrated biological control of bacterial speck and spot of tomato under field conditions using foliar biological control agents and plant growth-promoting rhizobacteria. Biol Control 36:358–367

Kanatas P, Travlos IS, Gazoulis I, Tataridas A, Tsekoura A, Antonopoulos N (2020) Benefits and limitations of decision support systems (DSS) with a special emphasis on weeds. Agronomy 10:548. https://doi.org/10.3390/agronomy10040548

Kelly TC, Lu Y-C, Abdul-Baki AA, Teasdale JR (1995) Economics of a hairy vetch mulch system for producing fresh-market tomatoes in the mid-Atlantic region. J Amer Soc Hort Sci 120:854–860

Köhl J, Kolnaar R, Ravensberg WJ (2019) Mode of action of microbial biological control agents against plant diseases: relevance beyond efficacy. Front Plant Sci 10:845. https://doi.org/10.3389/fpls.2019.00845

Kumar V, Abdul-Baki AA, Anderson JD, Mattoo AK (2005) Cover crop residues enhance growth, improve yield, and delay leaf senescence in greenhouse-grown tomatoes. HortSci 40:1307–1311

Kumar V, Mills DJ, Anderson JD, Mattoo AK (2004) An alternative agriculture system is defined by a distinct expression profile of select gene transcripts and proteins. Proc Natl Acad Sci USA 101:10535–10540

Larkin RP (2013) Green manures and plant disease management. CAB Rev 8:37. https://doi.org/10.1079/PAVSNNR20138037

Larkin RP (2015) Soil health paradigms and implications for disease management. Annu Rev Phytopathol 53:199–221

Lechenet M, Dessaint F, Py G, Makowski D, Munier-Jolain N (2017) Reducing pesticide use while preserving crop productivity and profitability on arable farms. Nat Plants 3:17008. https://doi.org/10.1038/nplants.2017.8

Lemanceau P, Alabouvette C (1991) Biological control of Fusarium diseases by fluorescent Pseudomonas and non-pathogenic Fusarium. Crop Prot 10:279–286

Lindblom J, Lundström C, Ljung J, Jonsson A (2017) Promoting sustainable intensification in precision agriculture: review of decision support systems development and strategies. Prec Agric 18:309–331

Long SP, Ainsworth EA, Leakey ADB, Nösberger J, Ort DR (2006) Food for thought: lower-than-expected crop yield stimulation with rising CO2 concentrations. Science 312:1918–1921

Lu X, Lee W, Li M, Ehsani R, Mishra A, Yang C, Mangan R (2015) Feasibility study on huanglongbing (citrus greening) detection based on WorldView-2 satellite imagery. Biosyst Eng 132:28–38. https://doi.org/10.1016/j.biosystemseng.2015.01.009

Mahini RA, Kumar A, Elias EM, Fiedler JD, Porter LD, McPhee KE (2020) Analysis and identification of QTL for resistance to Sclerotinia sclerotiorum in pea (Pisum sativum L.). Front Genet 11:587968. https://doi.org/10.3389/fgene.2020.587968

Mahlein A-K (2016) Plant disease detection by imaging sensors—parallels and specific demands for precision agriculture and plant phenotyping. Plant Dis 100:241–251

Mahlein A-K, Kuska MT, Behmann J, Polder G, Walter A (2018) Hyperspectral sensors and imaging technologies in phytopathology: State of the art. Annu Rev Phytopathol 56:535–558

Mahlein A-K, Kuska MT, Thomas S, Wahabzada M, Behmann J, Rascher U, Kersting K (2019) Quantitative and qualitative phenotyping of disease resistance of crops by hyperspectral sensors: seamless interlocking of phytopathology, sensors, and machine learning is needed! Curr Opin Plant Biol 50:156–162

Marrone PG (2007) Barriers to adoption of biological control agents and biological pesticides. CAB Rev. https://doi.org/10.1079/PAVSNNR20072051

Martin C, Li J (2017) Medicine is not health care, food is health care: plant metabolic engineering, diet and human health. New Phytol 216:699–719

Martinelli F, Scalenghe R, Davino S, Panno S, Scuderi G, Ruisi P, Villa P, Stroppiana D, Boschetti M, Goulart LR, Davis CE, Dandekar AM (2015) Advanced methods of plant disease detection. A review. Agron Sustain Dev 35:1–25

Matson PA, Parton WJ, Power AG, Smith MJ (1997) Agricultural intensification and ecosystem properties. Science 277:504–509

Mattoo AK (2014) Translational research in agricultural biotechnology—enhancing crop resistivity against environmental stress alongside nutritional quality. Front Chem 2:30. https://doi.org/10.3389/fchem.2014.00030

Mattoo AK, Abdul-Baki AA (2006) Crop genetic responses to management: evidence of root-to-shoot communication. In: Fernandes E, Ball AS, Herren H, Uphoff N (eds) Biological approaches to sustainable soil systems. Taylor and Francis, Boca Raton, FL, pp 221–330

Mattoo AK, Teasdale JR (2010) Ecological and genetic systems underlying sustainable horticulture. Hort Rev 37:331–362

Mattupalli C, Moffet CA, Shah KN, Young CA (2018) Supervised classification of RGB aerial imagery to evaluate the impact of a root rot disease. Remote Sens 10:917

Melkonian J, Poffenbarger H, Mirsky SB, Ryan M, Moebius-Clune B (2017) Estimating nitrogen mineralization from cover crop mixtures using the precision nitrogen management model. Agron J. https://doi.org/10.2134/agronj2016.06.0330

Mills DJ, Coffman B, Teasdale JR, Everts KL, Abdul-Baki AA, Lydon J, Anderson JD (2002a) Foliar disease in fresh-market tomato grown in differing bed strategies and fungicide spray programs. Plant Dis 86:955–959

Mills DJ, Coffman B, Teasdale JR, Everts KL, Anderson JD (2002b) Factors associated with foliar disease of staked fresh market tomatoes grown under differing bed strategies. Plant Dis 86:356–361

Mirsky SB, Ackroyd V, Cordeau S, Curran WS, Hashemi M, Reberg-Horton SC, Ryan MR, Spargo JT (2017a) Hairy vetch biomass across the eastern United States: effects of latitude, seeding rate and date, and termination timing. Agron J. https://doi.org/10.2134/agronj2016.09.0556

Mirsky SB, Ryan MR, Teasdale JR, Curran WS, Reberg-Horton CS, Spargo JT, Wells MS, Keene CL, Moyer JW (2013) Overcoming weed management challenges in cover crop-based organic rotational no-till soybean production in the eastern United States. Weed Technol 27:31–40

Mirsky SB, Spargo JT, Curran WS, Reberg-Horton CS, Ryan MR, Schomberg HH, Ackroyd VJ (2017b) Characterizing cereal rye biomass and allometric relationships across a range of fall available nitrogen rates in the eastern United States. Agron J 109:1520–1531

Morrissey JP, Dow JM, Mark GL, O’Gara F (2004) Are microbes at the root of a solution to world food production? EMBO Rep 5:922–926

Myers SS, Zanobetti A, Kloog I, Huybers P, Leakey ADB, Bloom AJ, Carlisle E, Dietterich LH, Fitzgerald G, Hasegawa T, Holbrook NM, Nelson RL, Ottman MJ, Raboy V, Sakai H, Sartor KA, Schwartz J, Seneweera S, Tausz M, Usui Y (2014) Increasing CO2 threatens human nutrition. Nature 510:139–143

Nelson B (1998) Biology of Sclerotinia. In: Proceedings of the 10th International Sclerotinia Workshop, 21 January 1998, Fargo, North Dakota, USA. North Dakota State University Department of Plant Pathology, Fargo, ND, pp 1–5

Oerke E-C (2020) Remote sensing of diseases. Annu Rev Phytopathol 58:225–252

O’Grady MJ, O’Hare GMP (2017) Modelling the smart farm. Inform Process Agric 4:179–187

Parker P, Jakeman LA, Beck MB, Harris G, Argent RM, Hare M, Pahl-Wostl C, Voinov A, Janssen M, Sullivan P et al (2002) Progress in integrated assessment and modelling. Environ Model Softw 17:209–217

Pierson EA, Weller DM (1994) Use of mixtures of fluorescent pseudomonads to suppress take-all and improve the growth of wheat. Phytopathology 84:940–947

Pingali PL (2012) Green revolution: Impacts, limits, and the path ahead. Proc Natl Acad Sci USA 109:12302–12308

Poffenbarger HJ, Mirsky SB, Weil RR, Maul JE, Kramer M, Spargo JT, Cavigelli MA (2015) Biomass and nitrogen content of hairy vetch-cereal rye cover crop mixtures as influenced by species proportions. Agron J 107:2069–2082

Prabhakara K, Hively WD, McCarty GW (2015) Evaluating the relationship between biomass, percent groundcover and remote sensing indices across six winter cover crop fields in Maryland, United States. Int J Appl Earth Obs Geoinform 39:88–102

Ray D, Gerber JS, MacDonald GK, West PC (2015) Climate variation explains a third of global crop yield variability. Nat Commun 6:5989. https://doi.org/10.1038/ncomms6989

Ray D, West PC, Clark M, Gerber JS, Prishchepov AV, Chatterjee S (2019) Climate change has likely already affected global food production. PLoS ONE 14(5):e0217148. https://doi.org/10.1371/journal.pone.0217148

Reganold JP, Wachter JM (2016) Organic agriculture in the twenty-first century. Nat Plants 2:15221. https://doi.org/10.1038/nplants.2015.221

Renkow M, Byerlee D (2010) The impacts of CGIAR research: a review of recent evidence. Food Pol 35:391–402

Rice PJ, McConnell LL, Heighton LP, Sadeghi AM, Isensee AR, Teasdale JR, Abdul-Baki AA, Harman-Fetcho JA, Hapeman CJ (2001) Runoff loss of pesticides and soil: a comparison between vegetative mulch and plastic mulch in vegetable production systems. J Environ Qual 30:1808–1821

Rice PJ, McConnell LL, Heighton LP, Sadeghi AM, Isensee AR, Teasdale JR, Abdul-Baki AA, Harman-Fetcho JA, Hapeman CJ (2002) Comparison of copper levels in runoff from fresh-market vegetable production using polyethylene mulch or a vegetative mulch. Environ Toxicol Chem 21:24–30