Abstract

A wide variety of orthographic coding schemes and models of visual word identification have been developed to account for masked priming data that provide a measure of orthographic similarity between letter strings. These models tend to include hand-coded orthographic representations with single unit coding for specific forms of knowledge (e.g., units coding for a letter in a given position). Here we assess how well a range of these coding schemes and models account for the pattern of form priming effects taken from the Form Priming Project and compare these findings to results observed with 11 standard deep neural network models (DNNs) developed in computer science. We find that deep convolutional networks (CNNs) perform as well as or better than the coding schemes and word recognition models, whereas transformer networks perform less well. The success of CNNs is remarkable as their architectures were not developed to support word recognition (they were designed to perform well on object recognition), they classify pixel images of words (rather than artificial encodings of letter strings), and their training was highly simplified (not respecting many key aspects of human experience). In addition to these form priming effects, we find that the DNNs can account for visual similarity effects on priming that are beyond all current psychological models of priming. The findings add to the recent work of Hannagan et al. (2021) and suggest that CNNs should be given more attention in psychology as models of human visual word recognition.

Introduction

Skilled visual word identification requires extensive experience with written words. It entails identifying the visual characteristics of letters (e.g. oriented lines), mapping these features onto letters, coding for letter order, and eventually selecting word candidates from one’s vocabulary (Carreiras et al., 2014). The process of representing the identity and order of letters in a letter string is referred to as orthographic coding. It constitutes a crucial component of word identification, with different models of word identification adopting different orthographic coding schemes.

Much of the empirical research directed at distinguishing different orthographic coding schemes and models of word identification more generally comes from priming studies that vary the similarity of the prime and target. Various priming procedures have been used, but the most common is the masked Lexical Decision Task (LDT) as introduced by Forster et al. (1987). The procedure involves measuring how quickly people classify a target stimulus as a word or nonword when it is briefly preceded by a prime. The basic finding is that orthographically similar prime strings speed responses to the targets relative to unrelated prime strings (e.g. Schoonbaert and Grainger 2004; Burt and Duncum 2017; Bhide et al. 2014). The assumption is that the greater the priming, the greater the orthographic similarity between the prime and the target. A variety of different models of letter coding and models of word identification more broadly have been developed in an attempt to account for more variance in masked form priming experiments.

The primary objective of the current study is to investigate to what extent artificial Deep Neural Network (DNN) models developed in computer science, with architectures designed to classify images of objects, can account for masked form priming data, and in addition, to compare their successes to some standard models of orthographic coding and word recognition. In all, we test 11 DNNs (different versions of convolutional and transformer networks), five orthographic coding schemes, three models of visual word recognition, as well as five control conditions, as detailed below.

Orthographic Coding Schemes and Models of Word Identification

Any model of word recognition needs to include a series of basic processes. This includes encoding the letters and their order, a process of mapping the ordered letters onto lexical representations, and finally a manner of selecting one lexical entry from others. Different models adopt different accounts of these basic processing steps. Most relevant for present purposes, models in the psychological literature have taken three basic approaches to encoding letters and letter orders, namely, slot-based coding, context-based coding, and context-independent coding.

In slot-based coding schemes, separate slots for position-specific letter codes are assumed. For example, the word CAT might be coded by activating the three letter codes \(C_1\), \(A_2\), and \(T_3\), whereas the word ACT would be coded as \(A_1\), \(C_2\), and \(T_3\) (where the subscript indexes letter position). Because letter codes are conjunctions of letter identity and letter position, \(A_1\) and \(A_2\) are simply different letters (accordingly, CAT and ACT share only one letter code, namely \(T_3\)).
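The CAT/ACT example can be made concrete with a minimal sketch. The function names `slot_code` and `shared_slot_units` are illustrative only and are not taken from any of the models discussed; the sketch simply represents each letter code as a (letter, position) pair.

```python
def slot_code(word):
    """Return the set of (letter, position) units for a string, positions starting at 1."""
    return {(letter, position) for position, letter in enumerate(word.upper(), start=1)}


def shared_slot_units(word_a, word_b):
    """Units active for both strings: the same letter in the same position."""
    return slot_code(word_a) & slot_code(word_b)


# CAT and ACT overlap only on T in position 3.
print(sorted(shared_slot_units("CAT", "ACT")))  # [('T', 3)]
```

Under this strict form of slot coding, any shift in letter position removes the overlap entirely, which is why transposed-letter strings look dissimilar to such a scheme.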

In context-based coding schemes, letters are coded in relation to other letters in the letter string. For example, in open bigram coding schemes (e.g. Grainger and Whitney 2004), a letter string is coded in terms of all of the ordered letter pairs that it contains. Thus, CAT is coded by the letter pairs CA, AT, and CT, whereas ACT is coded as AC, AT, and CT. Various versions of context-based coding schemes (and different versions of open bigrams) have been proposed, and these again affect how transposing letters and other manipulations change the orthographic similarity between letter strings.
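As a rough illustration of the open bigram idea, the sketch below enumerates every ordered letter pair in a string. It assumes an unconstrained variant with no limit on the gap between letters and no weighting of bigrams, which is a simplification relative to the published schemes; the name `open_bigrams` is hypothetical.

```python
from itertools import combinations


def open_bigrams(word):
    """All ordered letter pairs (left letter precedes right letter), at any distance."""
    return {first + second for first, second in combinations(word.upper(), 2)}


print(sorted(open_bigrams("CAT")))                        # ['AT', 'CA', 'CT']
print(sorted(open_bigrams("ACT")))                        # ['AC', 'AT', 'CT']
print(sorted(open_bigrams("CAT") & open_bigrams("ACT")))  # ['AT', 'CT']
```

In this simplified variant CAT and ACT share two of their three bigrams, whereas under strict slot coding they share only one letter code.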

Finally, in context-independent coding schemes, letter units are coded independently of position and context. That is, a node that codes the letter A is activated when the input stimulus contains an A, irrespective of its serial position or surrounding context (the same C, A, and T letter units are part of CAT and ACT). The ordering of letters is computed on-line (in order to distinguish between CAT and ACT), and this is achieved in various ways. For example, in the spatial coding scheme (Davis, 2010b), the precise time at which units are activated codes for letter order. Again, various versions of context-independent coding schemes have been proposed with consequences for the orthographic similarity of letter strings.

These different orthographic coding schemes form the front end of more complete models of visual word identification that include processes that select word representations from these orthographic encodings. For example, the Interactive Activation (IA) Model (McClelland and Rumelhart, 1981), the overlap model (Gomez et al., 2008), and the Bayesian Reader (Norris, 2006) all adopt different versions of slot coding; the open bigram (Grainger et al., 2004) and Seriol (Whitney, 2001) models use different context-based encoding schemes; and the spatial coding (Davis, 2010b) and SOLAR (Davis, 2001) models use context-independent encoding schemes. Note that the predictions of masked priming in these models are the product of both the encoding schemes and the additional processes that support lexical selection. In addition to these models, the Letters in Time and Retinotopic Space (LTRS; Adelman 2011) model is agnostic to the encoding scheme and instead makes predictions based on the rate at which different features of the stimulus (that could take different forms) are extracted. We will consider how well various orthographic coding schemes as well as models of word identification account for the masked priming results reported in the Form Priming Project (Adelman et al., 2014).

Deep Neural Network

DNNs are a type of artificial neural network in which the input and output layers are separated by multiple (hidden) layers, forming a hierarchical structure. Two of the most common types of DNNs are convolutional neural networks (CNNs) and transformers.

CNNs are inspired by biological vision (Felleman and Van Essen 1991; Krubitzer and Kaas 1993; Sereno et al. 2015). The convolutions in CNNs refer to a set of feature detectors that repeat at different spatial locations to produce a series of feature maps (analogous to simple cells in V1, for example, where the same feature detector — e.g. a vertical line detector — repeats at multiple retinal locations). The convolutions are followed by a pooling operation in which corresponding features in nearby spatial locations are mapped together (analogous to complex cells that map together corresponding simple cells in nearby retinal locations), after which more feature detectors and pooling operations are applied, hierarchically, to form more and more complex feature detectors that are more invariant to spatial location. Different CNNs differ in various ways, including the number of hidden layers (many models have over 100 hidden layers), but they generally include ‘localist’ or ‘one hot’ representations in the output layer such that a single output unit codes for a specific category (e.g. an object class such as banana, or in the current case, a specific word), and CNNs tend to be trained through back-propagation as is the case with older parallel distributed processing models (Rumelhart et al., 1986).

In contrast, Vision Transformers (ViTs; Dosovitskiy et al. 2020) do not include any convolutions but instead introduce a self-attention mechanism. In object classification, this mechanism divides the input image into patches, and a similarity score between each and every patch is computed. These scores are used to compute a new representation of the image that emphasises the most relevant features for the task at hand. Overall these models have much more complicated architectures, and describing them is beyond the scope of this paper. But again, the models include multiple layers, tend to include localist output codes, and are trained with back-propagation.

Importantly for present purposes, CNNs and ViTs are not only highly successful engineering tools that support a wide range of challenging AI tasks, but they are also often claimed to provide good models of the human visual system, and indeed, they are the most successful models in predicting judgements of category typicality (Lake et al., 2015) and predicting object classification errors (Jozwik et al., 2017) and human similarity judgements for natural images (Peterson et al., 2018) on several datasets. DNNs have also been good at predicting the neural activation patterns elicited during object recognition in both human and non-human primates’ ventral visual processing streams (Cichy et al., 2016; Storrs et al., 2021). A benchmark called Brain-Score has been developed to assess the similarities between biological visual systems and DNNs (Schrimpf et al., 2020). The best performing models on the Brain-Score benchmarks are often described as the best models of human vision, and CNNs are currently the best performing models on this benchmark. More recently, Biscione and Bowers (2022a) have demonstrated that CNNs can acquire a variety of visual invariance phenomena found in humans, namely, translation, scale, rotation, brightness, contrast, and to some extent, viewpoint invariance.

More relevant for the present context, Hannagan et al. (2021) demonstrated that training a biologically inspired, recurrent CNN (CORnet-Z; Kubilius et al. 2018) to recognise words led the model to reproduce some key findings regarding visual-orthographic processing in the human visual word form area (VWFA) as observed with fMRI, such as case, font, and word length invariance. In addition, the model’s word recognition ability was mediated by a restricted set of reading-selective units. When these units were removed to simulate a lesion, it caused a reading-specific deficit, similar to the effects produced by lesions to the VWFA.

The current work explores this topic further by determining to what extent CNNs and ViTs account for human orthographic coding as measured through masked form priming effects. Given past reports of DNN-human similarity, it might be predicted that DNNs will account for some form priming effects. What is less clear is how well DNNs will capture orthographic similarity effects in comparison to various orthographic coding schemes and models of word identification specifically designed to explain form priming effects, among other findings. Given that DNNs do not include any hand-built orthographic knowledge and are trained to classify pixel images of words, it would be impressive if these models did as well. This would be particularly so given that we trained the models in a highly simplified manner that ignores many important features of how humans learn to identify words, as described below.

Methods

Human Priming Data

Human priming data was sourced from the Form Priming Project (FPP; Adelman et al. 2014) and was used to assess how well various psychological and DNN models account for orthographic similarity. FPP contains reaction times for 28 prime types across 420 six-letter word targets, gathered from over 924 participants. The prime types and priming effects are shown in Table 1. To measure the priming effect size, the mean reaction time (mRT) of each prime condition is compared to the mRT of unrelated arbitrary strings (e.g. ‘pljokv’ for the word ‘design’).
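The priming effect computation can be sketched as follows. The reaction times below are invented for illustration and are not FPP values; the function name `priming_effects` and the condition labels used as dictionary keys are likewise assumptions.

```python
def priming_effects(mean_rt_by_condition, baseline="unrelated arbitrary"):
    """Priming effect per condition: baseline mRT minus the condition's mRT (in ms)."""
    baseline_rt = mean_rt_by_condition[baseline]
    return {
        condition: baseline_rt - mean_rt
        for condition, mean_rt in mean_rt_by_condition.items()
        if condition != baseline
    }


# Hypothetical mean reaction times in milliseconds (NOT taken from the FPP).
illustrative_mrt = {
    "unrelated arbitrary": 650.0,
    "identity": 610.0,
    "initial transposition": 625.0,
}
print(priming_effects(illustrative_mrt))
# {'identity': 40.0, 'initial transposition': 25.0}
```

A positive value means that responses were faster after the related prime than after the unrelated arbitrary prime.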

Orthographic Coding Schemes and Word Recognition Models

As discussed above, numerous orthographic coding schemes and word recognition models have been developed to account for orthographic priming effects in humans. Here we assess how well five coding schemes and three models of word identification account for the priming effects reported in the FPP dataset, namely:

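1. Orthographic coding schemes: the five schemes implemented in the match value calculator described below.

2. Full priming models: the Interactive Activation (IA) model, the Spatial Coding Model (SCM), and the LTRS model.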

To determine the degree of priming predicted by the coding schemes, we used the match value calculator created by Davis (2010a), which implements the five orthographic coding schemes. For each coding scheme, the calculator takes two strings as input and returns a match value that indicates their predicted similarity. It is assumed that the greater the orthographic similarity, the greater the priming. Note that, for any given coding scheme and priming condition, the match value is the same across target words. For example, in the final deletion condition noted in Table 1, the example target given is DESIGN, but exactly the same similarity score is computed for all the targets in this condition (because the prime and target all share the first 5 letters, with only the final letter mismatching).

For the models of visual word identification, we assessed orthographic similarity on the basis of their predicted priming scores. IA and SCM implementations were taken from Davis (2010b) and the LTRS model was taken from a simulator developed by Adelman (2011). For each model, the mRT was computed for each related prime condition and subtracted from the mRT in the unrelated arbitrary condition to produce the priming score.

DNN Models

We trained seven common convolutional networks (CNNs) and four Vision Transformer networks (ViTs). The convolutional models belong to the families of AlexNet (Krizhevsky et al., 2012), VGG (Simonyan and Zisserman, 2014), ResNet (He et al., 2016), DenseNet (Huang et al., 2016) and EfficientNet (Tan and Le, 2019), and all Transformers were from the ViTs family (Dosovitskiy et al., 2020). All models were pretrained on ImageNet (Deng et al., 2009) to initialise the weights. The ViTs listed in Table 2 vary in their complexities and a number of properties including number of layers and the way that attention is implemented.

After pretraining on ImageNet, the final classifier layer was removed and the models were trained to classify images of 1000 different words (the same number used by Hannagan et al. 2021), with each word represented locally. All 420 six-letter words from the Form Priming Project were used and the remaining 580 were sourced from Google’s Trillion Word Corpus (Google, 2011). All words were presented in upper case letters and the lengths of the 580 words were evenly distributed between three, four, five, seven, and eight letters, with 116 words chosen at each length. As with the Form Priming Project’s 420 words, the 580 words were chosen to not contain the same letter twice. All words were trained in parallel (there was no age-of-acquisition manipulation) and for the same number of trials (there was no frequency manipulation). The complete list is available through the GitHub repository of the current study.

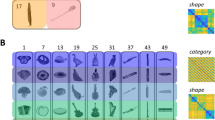

We employed data augmentation techniques to diversify the visual representation of each word by manipulating font, size, rotation of letters, and translation by changing the position of the image in space (see Fig. 1 for some examples; for details of augmentation see Fig. 5). We generated 6000 images for each word, resulting in a dataset containing 6,000,000 images. Of these, 5,000,000 images were used for training, while the remaining 1,000,000 were used for performance validation. The algorithm for generating datasets is described in detail in Appendix B. For training, the Adam optimizer and the cross-entropy loss function were used. A hyperparameter search was performed for the learning rate using a random grid-search, yielding a value of 1e-5. Training was terminated when the average training loss stopped improving by a specified threshold of 0.0025. The accuracy of the models on the validation set of words is reported in Table 2.
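The stopping rule can be sketched in a framework-independent way. The text does not say exactly which loss values are compared, so the version below assumes that the average training loss of the latest epoch is compared with that of the previous epoch; the function name `should_stop` and the constant names are hypothetical.

```python
LEARNING_RATE = 1e-5      # value reported from the hyperparameter search
MIN_IMPROVEMENT = 0.0025  # threshold reported for terminating training


def should_stop(avg_losses, min_improvement=MIN_IMPROVEMENT):
    """True once the latest average loss improved on the previous one by less than the threshold.

    Assumption: improvement is measured between consecutive epochs.
    """
    if len(avg_losses) < 2:
        return False  # nothing to compare against yet
    improvement = avg_losses[-2] - avg_losses[-1]
    return improvement < min_improvement


history = []
for epoch_loss in [0.90, 0.50, 0.30, 0.299]:  # illustrative loss values only
    history.append(epoch_loss)
    if should_stop(history):
        break
print(len(history))  # 4
```

In a real training loop the same check would be called once per epoch after the average loss has been recorded.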

We then generated a dataset of prime words. To generate this dataset, each of the 420 Form Priming Project words was transformed into 28 prime types. For example, the target word ‘ABDUCT’ was transformed into ‘baduct’ for the ‘initial transposition’ condition, ‘abdutc’ for the ‘final transposition’ condition, and so forth. Each prime was used to generate an image using the Arial font, resulting in 11,760 images (420 target words \(\times\) 28 prime types). No rotation or translation variations at the letter level were introduced, and all strings were positioned at the centre of the image using the same font size of 26, which is the average size used for training.
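The two transposition conditions named above can be sketched as simple string operations. Only these two prime types are shown because they are the ones spelled out in the text; the helper names are hypothetical and the remaining prime types are not reproduced here.

```python
def transpose(word, i, j):
    """Swap the letters at zero-based indices i and j."""
    letters = list(word)
    letters[i], letters[j] = letters[j], letters[i]
    return "".join(letters)


def initial_transposition(word):
    """Swap the first two letters of the string."""
    return transpose(word, 0, 1)


def final_transposition(word):
    """Swap the last two letters of the string."""
    return transpose(word, len(word) - 2, len(word) - 1)


target = "ABDUCT"
prime_source = target.lower()  # primes are written in lower case in the example above
print(initial_transposition(prime_source))  # baduct
print(final_transposition(prime_source))    # abdutc
print(420 * 28)                             # 11760 prime images in total
```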

Measuring Orthographic Similarity of the Various DNNs

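To measure the orthographic similarity between prime-target images, we compared the unit activations at the penultimate layer using Cosine Similarity (CS) after each image was presented as an input to the network:

\[\mathrm{CS}(\mathbf{A}, \mathbf{B}) = \frac{\mathbf{A} \cdot \mathbf{B}}{\lVert \mathbf{A} \rVert \, \lVert \mathbf{B} \rVert}\]

where \(\mathbf{A}\) and \(\mathbf{B}\) denote the penultimate-layer activation vectors elicited by the prime and target images, respectively.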

CS ranges from -1 (opposite internal representation) to 1 (identical internal representation). We then computed the overall relation between human priming scores and model cosine similarity scores by calculating the correlation (\(\tau\)) between the mean human priming scores and the mean cosine similarities across conditions. We also consider the relation between humans and models by exploring the (mis)match in priming and cosine similarity scores in the individual conditions, as discussed below.
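A minimal pure-Python sketch of the cosine similarity measure is given below, assuming that the penultimate-layer activations have already been extracted as flat lists of numbers. The function name `cosine_similarity` is illustrative and does not refer to the study's own code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length activation vectors."""
    if len(a) != len(b):
        raise ValueError("activation vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for an all-zero vector")
    return dot / (norm_a * norm_b)


print(cosine_similarity([1.0, 0.0], [1.0, 0.0]))   # 1.0  (identical direction)
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))   # 0.0  (orthogonal)
print(cosine_similarity([1.0, 0.0], [-1.0, 0.0]))  # -1.0 (opposite direction)
```

Because the measure depends only on the direction of the vectors, scaling all activations by a positive constant leaves the score unchanged.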

We also included five baseline conditions to better understand the priming effects observed. First, the CS between the pixels of the prime and target images was computed. This served as a baseline for determining the extent to which the models contribute to orthographic similarity scores beyond the pixel-level similarity of the stimuli. In addition, we used ImageNet pretrained models (without any training on letter strings) and ImageNet pretrained models fine-tuned on 1000 classes of six-letter random strings, for the CNNs and ViTs separately, as four additional baselines. This was done to assess the role of training DNNs on English words in the pattern of form priming effects obtained. For these baselines, the mean cosine similarity score for each condition was calculated using the aforementioned method and, rather than reporting \(\tau\) for the individual models, we report the average \(\tau\) across all the CNNs and across all the ViTs, respectively.

Fig. 2 Priming (correlation) scores between model predictions and human data over the prime types for each DNN, coding scheme, priming model, and baseline. The term ‘LTRS’ refers to the Letters in Time and Retinotopic Space model. To obtain the standard error for each bar, we computed \(\tau\) 1000 times by randomly sampling 28 mean cosine similarity scores with replacement across conditions. The error bar corresponds to the standard error of this vector.
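The resampling procedure for the error bars can be sketched as below. Several details are assumptions: the Kendall variant is taken to be tau-b (which handles the ties that resampling with replacement creates), human and model scores are resampled as pairs by condition, and the standard error is taken as the standard deviation of the resampled \(\tau\) values. The function names are hypothetical.

```python
import math
import random
import statistics


def _sign(value):
    return (value > 0) - (value < 0)


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences (assumed variant)."""
    concordant = discordant = ties_x = ties_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            sx, sy = _sign(x[i] - x[j]), _sign(y[i] - y[j])
            ties_x += sx == 0
            ties_y += sy == 0
            if sx * sy > 0:
                concordant += 1
            elif sx * sy < 0:
                discordant += 1
    n_pairs = n * (n - 1) // 2
    denominator = math.sqrt((n_pairs - ties_x) * (n_pairs - ties_y))
    return (concordant - discordant) / denominator if denominator else float("nan")


def bootstrap_tau_se(human, model, n_boot=1000, seed=0):
    """Standard deviation of tau over resamples of conditions drawn with replacement."""
    rng = random.Random(seed)
    n = len(human)
    taus = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        tau = kendall_tau_b([human[i] for i in idx], [model[i] for i in idx])
        if not math.isnan(tau):  # skip degenerate resamples with no variation
            taus.append(tau)
    return statistics.stdev(taus)
```

With 28 conditions, each call to `kendall_tau_b` compares 378 pairs, so 1000 resamples run quickly even in pure Python.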

Fig. 3 Distributions of human priming and the DNN models’ perception. For each subplot, the x-axis is the metric-specific similarity measure and the y-axis is the prime condition. Prime types are ordered according to the size of the priming effect in the human data (largest to smallest), as in Table 1. As illustrated in the first row, the ‘identity’ condition has the strongest priming effect, with the highest priming score of 42.69 ms. The priming score for a condition is the difference between the mRT of the ‘unrelated arbitrary’ condition and the mRT of that condition. See Table 1 for the index of the 28 conditions.

Results

Figure 2 plots Kendall’s correlation, over the 28 prime types, between the human priming data and the various orthographic coding schemes (as measured by match values), priming models (as measured by predicted priming scores), DNNs (as measured by cosine similarity scores), as well as various baseline measures of similarity.

The most striking finding is that the CNNs did a good job in predicting the pattern of human priming scores across conditions, with correlations ranging from \(\tau\) = .49 (AlexNet) to \(\tau\) = .71 (ResNet101), with all p values < .01. Indeed, the CNNs performed similarly to the various orthographic coding schemes and word recognition models, and often better. This contrasts with the relatively poor performance of the Transformer networks, with \(\tau\) ranging from .25 to .38.

Fig. 4 Distributions of human priming and the coding schemes. For each subplot, the x-axis is the metric-specific similarity measure and the y-axis is the prime condition. See Table 1 for the index of the 28 conditions. The Interactive Activation and Spatial Coding Model values are made negative to obtain a positive correlation coefficient with human priming data, as they represent estimated RTs that are negatively correlated with priming effect size.

Importantly, the good performance of the CNNs was not due to the pixel value similarity between the prime-target images, as the pixel control condition (pixCS) has no significant correlation with the human priming data. It is also not simply the product of the architectures of the CNNs, as the predictions were much poorer for the CNNs that were pretrained on ImageNet but not trained on English words. Rather, it is the combination of the CNN architectures with training on English words that led to good performance. For a complete set of correlations between human priming data, DNNs’ cosine similarity scores, orthographic coding similarity scores, and priming scores in psychological models, see Appendix B, Fig. 6.

A more detailed assessment of the overlap between DNNs, orthographic coding schemes, and word identification models is provided in Figs. 3 and 4, which depict the distribution of responses in each condition for all models and summarise the priming results per condition. From this, it is clear that all the CNNs had particular difficulty in predicting the priming in the ‘half’ condition (as indicated by the red arrow), in which either the first three letters or the final three letters served as primes (the CNNs substantially underestimated the priming in this condition). A similar difficulty was found in many of the psychological models as well, but the effect was not quite so striking. There were no other prime conditions that led to such a large and consistent error in any model or coding scheme.

One surprising result from the form priming project was that there was little evidence that external letters were more important than internal letters. For instance, final and initial substitutions produced more priming than medial substitutions, and similar priming effects were obtained for final, medial, and initial transpositions, with slightly less priming for initial transpositions. This contrasts with the common claim that external letters are more important than medial letters for visual word identification (Estes et al., 1976), although the past evidence for this in masked priming is somewhat mixed (e.g. Perea and Lupker 2003). Interestingly, most of the CNNs and ViTs showed similar effects across the three substitution conditions, and slightly less priming in the initial transposition condition, and thus also predicted little extra importance attributed to external letters.

A key feature of all the psychological models is that they code letters in an abstract format such that there is no variation in visual similarity between letters (different letters are simply unrelated in their visual form). This manifests itself in the fact that there is no distribution in priming scores in each of the 28 prime conditions for the orthographic priming schemes and the LTRS model. For the IA and Spatial Coding model there is variation in priming score in each condition, but this reflects the impact of lexical access in the models (e.g. the impact of word frequency or lexical competition) rather than any influence of visual similarity.

By contrast, in the case of the CNNs and ViTs, the input is an image in pixel space, and accordingly, it is possible that visual similarity is contributing to the distribution of priming scores observed in each of the priming conditions. To test for this, we obtained human visual similarity ratings between upper case letters (Simpson et al., 2012) and assessed whether these scores correlate with the cosine similarity scores observed in the initial, middle, and final substitution conditions. In these conditions, the visual similarity of all the letters is the same other than the substituted letter, and the question is whether the similarity score computed with the model correlates with the similarity scores produced by humans. As can be seen in Table 3, there was a strong correlation for all models in all the letter substitution conditions. This is an advantage of DNNs over current psychological models given that masked priming in humans is also sensitive to visual similarity of letter transpositions (Kinoshita et al., 2013; Forster et al., 1987; Perea et al., 2008).

Discussion

A wide range of orthographic coding schemes and models of visual word identification have been designed to account for masked form priming effects that provide a measure of the orthographic similarities between letter strings. Here, we assessed how well these standard approaches account for the form priming effects reported in the Form Priming Project (Adelman et al., 2014) and compared the results to two different classes of DNNs (CNNs and Transformers). Strikingly, the CNNs we tested performed as well as, and in some cases better than, the psychological models specifically designed to explain form priming effects. This is despite the fact that the CNN architectures were designed to perform well in object identification rather than word identification, despite the fact that the models were trained to classify pixel images of words rather than hand-built and artificially encoded letter strings, and despite the fact that the models were trained to classify 1000 words in a highly simplified manner.

By contrast, we found that visual transformers are less successful in accounting for form priming effects in humans, suggesting that these models are identifying images of words in a non-human like way. Still, both CNNs and Transformers did better than psychological models in one important respect, namely, they can account for the impact of the visual similarity of primes and targets on masked priming. By contrast, all current psychological models cannot, given that their input coding schemes treat all letters as unrelated in form. This highlights a key advantage of inputting pixel images into a model (similar to a retinal encoding) as opposed to abstract letter codes that lose relevant visual information.

In some respects the poorer performance of Transformers compared to CNNs is surprising given past findings that the pattern of errors observed in object recognition is more similar between visual transformers and humans compared to CNNs and humans (Tuli et al., 2021). But at the same time, our findings are consistent with the finding that CNNs provide the best predictions of neural activation in the ventral visual stream during object recognition as measured by Brain-Score (Schrimpf et al., 2020) and other brain benchmarks. Indeed, visual transformers (similar to the ones we tested here) do much worse on Brain-Score compared to CNNs, with the top performing transformer model performing outside the top-100 models on the current Brain-Score leaderboard. To the extent that better performance on Brain-Score reflects a greater similarity between DNNs and humans, our finding that CNNs do a better job of accounting for masked form priming makes sense. But what specific features of CNNs lead to better performance is currently unclear.

Our findings are also consistent with recent work by Hannagan et al. (2021) who found that a CNN trained to classify images of words and objects showed a number of hallmark findings of human visual word recognition. This includes CNNs learning units that are both selective for words (analogous to neurons in the visual word form area) as well as invariant to letter size, font, or case. Furthermore, lesions to these units led to selective difficulties in identifying words (analogous to dyslexia following lesions to the visual word form area). Interestingly, the authors also provided evidence that the CNN learned a complex combination of position-specific letter codes as well as bigram representations. It seems that these learned representations are also able to account for a substantial amount of form priming effects observed in humans. The observation that CNNs not only account for a range of empirical phenomena regarding human visual word identification but, in addition, perform well on various brain benchmarks for visual object identification lends some support to the “recycling hypothesis” according to which a subpart of the ventral visual pathway initially involved in face and object recognition is repurposed for letter recognition (Hannagan et al., 2021).

Despite these successes, it is important to note that there is a growing number of studies highlighting that CNNs fail to capture most key low-level, mid-level, and high-level vision findings reported in psychology (Bowers et al., 2022). Indeed, most CNNs that perform well on Brain-Score do not even classify objects on the basis of shape, but rather, classify objects on the basis of texture (Geirhos et al., 2018). And when models are trained to have a shape bias when classifying objects, they have a non-human shape bias (Bowers et al., 2021a). In addition, when tested on stimuli designed to elicit Gestalt effects, most CNNs exhibit a limited ability to organise elements of a scene into a group or whole, and the grouping only occurs at the output layer, suggesting that these models may learn fundamentally different perceptual properties than humans (Biscione and Bowers, 2022b).

How is it possible to obtain such high performance on benchmarks such as Brain-Score and yet account for so few findings from psychology? Bowers et al. (2022) (also see Bowers et al. 2021b) argued that the good performance on these benchmarks may provide a misleading estimate of CNN-human similarity, with good performance reflecting two very different systems picking up on different sources of information that are correlated with each other. For instance, it is possible that texture representations in CNNs are used not only to identify objects but also to predict the neural activation of the ventral visual system that identifies objects based on shape. Indeed, Bowers et al. (2021b) have run simulations showing that CNNs that are designed to recognise objects in a very different way nevertheless can support good predictions of brain activations based on confounds that are commonplace in image datasets.

In some ways, this makes the current findings all the more impressive, as the CNNs are doing reasonably well at accounting for a complex set of priming conditions that were specifically designed to contrast different hypotheses regarding orthographic coding schemes. Of course, there were some notable failures in the CNNs’ accounting for form priming (most notably, all the CNNs underestimated the amount of priming in the half condition in which the primes were composed of the first three or final three letters of the target), and there may be many additional priming conditions that prove problematic for CNNs. Nevertheless, the current results do highlight that CNNs should be given more attention in psychology as models of human visual word recognition. It is possible that developing new CNN architectures motivated by biological and psychological findings and adopting more realistic training conditions will lead to even more impressive performance and new insights into human visual word identification.

Data Availability

The code that generates the data supporting the findings of this study is available at https://github.com/Don-Yin/Orthographic-DNN.

Code Availability

The code that supports the findings of this study is available at https://github.com/Don-Yin/Orthographic-DNN.

References

Adelman, J. S. (2011). Letters in time and retinotopic space. Psychological Review, 118(4), 570–582. https://doi.org/10.1037/a0024811

Adelman, J. S., Johnson, R. L., McCormick, S. F., McKague, M., Kinoshita, S., Bowers, J. S., Perry, J. R., Lupker, S. J., Forster, K. I., Cortese, M. J., Scaltritti, M., Aschenbrenner, A. J., Coane, J. H., White, L., Yap, M. J., Davis, C., Kim, J., & Davis, C. J. (2014). A behavioral database for masked form priming. Behavior Research Methods, 46(4), 1052–1067. https://doi.org/10.3758/s13428-013-0442-y

Bhide, A., Schlaggar, B. L., & Barnes, K. A. (2014). Developmental differences in masked form priming are not driven by vocabulary growth. Frontiers in Psychology, 5. https://doi.org/10.3389/fpsyg.2014.00667

Biscione, V., & Bowers, J. S. (2022a). Learning online visual invariances for novel objects via supervised and self-supervised training. Neural Networks, 150, 222–236. https://doi.org/10.1016/j.neunet.2022.02.017

Biscione, V., & Bowers, J. S. (2022b). Mixed evidence for gestalt grouping in deep neural networks. Computational Brain & Behavior. https://doi.org/10.48550/arXiv.2203.07302

Bowers, J. S., Malhotra, G., Dujmovic, M., Llera Montero, M., Tsvetkov, C., Biscione, V., Puebla, G., Adolfi, F. G., Hummel, J., Heaton, R. F., Evans, B. D., Mitchell, J., & Blything, R. (2022). Deep problems with neural network models of human vision. PsyArXiv. https://doi.org/10.31234/osf.io/5zf4s

Bowers, J. S., Dujmovic, M., Hummel, J., & Malhotra, G. (2021a). The contrasting shape representations that support object recognition in humans and CNNs. bioRxiv. https://doi.org/10.1101/2021.12.14.472546

Bowers, J. S., Dujmovic, M., Hummel, J., & Malhotra, G. (2021b). Human shape representations are not an emergent property of learning to classify objects. bioRxiv. https://doi.org/10.1101/2021.12.14.472546

Burt, J. S., & Duncum, S. (2017). Masked form Priming is Moderated by the Size of the Letter-Order-Free Orthographic Neighbourhood. Quarterly Journal of Experimental Psychology, 70(1), 127–141. https://doi.org/10.1080/17470218.2015.1126289

Carreiras, M., Armstrong, B. C., Perea, M., & Frost, R. (2014). The what, when, where, and how of visual word recognition. Trends in Cognitive Sciences, 18(2), 90–98. https://doi.org/10.1016/j.tics.2013.11.005

Cichy, R. M., Khosla, A., Pantazis, D., Torralba, A., & Oliva, A. (2016). Comparison of deep neural networks to spatio-temporal cortical dynamics of human visual object recognition reveals hierarchical correspondence. Scientific Reports, 6(1). https://doi.org/10.1038/srep27755

Davis, C. J. (2001). The self-organising lexical acquisition and recognition (SOLAR) model of visual word recognition. Dissertation Abstracts International: Section B: The Sciences and Engineering, 62(1-B), 594. https://psycnet.apa.org/record/2001-95014-128

Davis, C. J. (2010a). The spatial coding model. Psychological Review, 117, 713–758. http://www.pc.rhul.ac.uk/staff/c.davis/SpatialCodingModel/

Davis, C. J. (2010b). The spatial coding model of visual word identification. Psychological Review, 117(3), 713–758. https://doi.org/10.1037/a0019738

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (pp. 248–255). IEEE.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., & Houlsby, N. (2020). An image is worth 16x16 words: Transformers for image recognition at scale. arXiv. https://doi.org/10.48550/arXiv.2010.11929

Estes, W. K., Allmeyer, D. H., & Reder, S. M. (1976). Serial position functions for letter identification at brief and extended exposure durations. Perception & Psychophysics, 19(1), 1–15. https://doi.org/10.3758/bf03199379

Felleman, D. J., & Van Essen, D. C. (1991). Distributed hierarchical processing in the primate cerebral cortex. Cerebral Cortex, 1(1), 1–47. https://doi.org/10.1093/cercor/1.1.1

Forster, K. I., Davis, C., Schoknecht, C., & Carter, R. (1987). Masked priming with graphemically related forms: Repetition or partial activation? The Quarterly Journal of Experimental Psychology Section A, 39(2), 211–251. https://doi.org/10.1080/14640748708401785

Geirhos, R., Rubisch, P., Michaelis, C., Bethge, M., Wichmann, F. A., & Brendel, W. (2018). ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. arXiv. https://arxiv.org/abs/1811.12231

Gomez, P., Ratcliff, R., & Perea, M. (2008). The overlap model: A model of letter position coding. Psychological Review, 115(3), 577–600. https://doi.org/10.1037/a0012667

Google. (2011). Google Books Ngram Viewer. https://books.google.com/ngrams/info

Grainger, J., & Van Heuven, W. J. B. (2004). Modeling letter position coding in printed word perception. In P. Bonin (Ed.), Mental lexicon: "Some words to talk about words" (pp. 1–23). Nova Science Publishers. https://psycnet.apa.org/record/2004-15128-001

Grainger, J., & Whitney, C. (2004). Does the huamn mnid raed wrods as a wlohe? Trends in Cognitive Sciences, 8(2), 58–59. https://doi.org/10.1016/j.tics.2003.11.006

Hannagan, T., Agrawal, A., Cohen, L., & Dehaene, S. (2021). Emergence of a compositional neural code for written words: Recycling of a convolutional neural network for reading. Proceedings of the National Academy of Sciences (PNAS). https://doi.org/10.1101/2021.02.15.431235

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/cvpr.2016.90

Huang, G., Liu, Z., van der Maaten, L., & Weinberger, K. Q. (2016). Densely connected convolutional networks. arXiv. https://doi.org/10.48550/arXiv.1608.06993

Jozwik, K. M., Kriegeskorte, N., Storrs, K. R., & Mur, M. (2017). Deep convolutional neural networks outperform feature-based but not categorical models in explaining object similarity judgments. Frontiers in Psychology, 8. https://doi.org/10.3389/fpsyg.2017.01726

Kinoshita, S., Robidoux, S., Mills, L., & Norris, D. (2013). Visual similarity effects on masked priming. Memory and Cognition, 42(5), 821–833. https://doi.org/10.3758/s13421-013-0388-4

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Communications of the ACM, 60(6), 84–90. https://doi.org/10.1145/3065386

Krubitzer, L. A., & Kaas, J. H. (1993). The dorsomedial visual area of owl monkeys: Connections, myeloarchitecture, and homologies in other primates. The Journal of Comparative Neurology, 334(4), 497–528. https://doi.org/10.1002/cne.903340402

Kubilius, J., Schrimpf, M., Nayebi, A., Bear, D., Yamins, D. L. K., & DiCarlo, J. J. (2018). CORnet: Modeling the neural mechanisms of core object recognition. bioRxiv. https://doi.org/10.1101/408385

Lake, B., Zaremba, W., Fergus, R., & Gureckis, T. (2015). Deep neural networks predict category typicality ratings for images. Cognitive Science Society. https://cogsci.mindmodeling.org/2015/papers/0219/index.html

McClelland, J. L., & Rumelhart, D. E. (1981). An interactive activation model of context effects in letter perception: I. An account of basic findings. Psychological Review, 88(5), 375–407. https://doi.org/10.1037/0033-295x.88.5.375

Norris, D. (2006). The Bayesian reader: Explaining word recognition as an optimal Bayesian decision process. Psychological Review, 113(2), 327–357. https://doi.org/10.1037/0033-295x.113.2.327

Perea, M., & Lupker, S. J. (2003). Does jugde activate COURT? transposed-letter similarity effects in masked associative priming. Memory & Cognition, 31(6), 829–841. https://doi.org/10.3758/bf03196438

Perea, M., Duñabeitia, J. A., & Carreiras, M. (2008). R34d1ng w0rd5 w1th numb3r5. Journal of Experimental Psychology: Human Perception and Performance, 34(1), 237–241. https://doi.org/10.1037/0096-1523.34.1.237

Peterson, J. C., Abbott, J. T., & Griffiths, T. L. (2018). Evaluating (and improving) the correspondence between deep neural networks and human representations. Cognitive Science, 42(8), 2648–2669. https://doi.org/10.1111/cogs.12670

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323(6088), 533–536. https://doi.org/10.1038/323533a0

Schoonbaert, S., & Grainger, J. (2004). Letter position coding in printed word perception: Effects of repeated and transposed letters. Language and Cognitive Processes, 19(3), 333–367. https://doi.org/10.1080/01690960344000198

Schrimpf, M., Kubilius, J., Hong, H., Majaj, N. J., Rajalingham, R., Issa, E. B., Kar, K., Bashivan, P., Prescott-Roy, J., Geiger, F., Schmidt, K., Yamins, D. L. K., & DiCarlo, J. J. (2020). Brain-Score: Which artificial neural network for object recognition is most brain-like? https://doi.org/10.1101/407007

Sereno, M. I., McDonald, C. T., & Allman, J. M. (2015). Retinotopic organization of extrastriate cortex in the owl monkey-dorsal and lateral areas. Visual Neuroscience, 32. https://doi.org/10.1017/s0952523815000206

Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv. https://doi.org/10.48550/arXiv.1409.1556

Simpson, I. C., Mousikou, P., Montoya, J. M., & Defior, S. (2012). A letter visual-similarity matrix for Latin-based alphabets. Behavior Research Methods, 45(2), 431–439. https://doi.org/10.3758/s13428-012-0271-4

Storrs, K. R., Kietzmann, T. C., Walther, A., Mehrer, J., & Kriegeskorte, N. (2021). Diverse deep neural networks all predict human inferior temporal cortex well, after training and fitting. Journal of Cognitive Neuroscience, 33(10), 2044–2064. https://doi.org/10.1162/jocn_a_01755

Tan, M., & Le, Q. V. (2019). EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv. https://doi.org/10.48550/arXiv.1905.11946

Tuli, S., Dasgupta, I., Grant, E., & Griffiths, T. L. (2021). Are convolutional neural networks or transformers more like human vision? arXiv. https://doi.org/10.48550/arXiv.2105.07197

Whitney, C. (2001). How the brain encodes the order of letters in a printed word: The SERIOL model and selective literature review. Psychonomic Bulletin and Review, 8(2), 221–243. https://doi.org/10.3758/bf03196158

Funding

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement no. 741134).

Author information

Contributions

J.S.B. and V.B. were responsible for the design and supervision of the study. D.Y. specified the statistical approach and wrote the scripts for data generation and analyses. All authors contributed to the analysis and interpretation of the data and to the writing of the paper.

Ethics declarations

Ethics statement

The present study was approved by the School of Psychological Science Research Ethics Committee.

Consent to Participate

Not applicable

Consent for Publication

We certify that the paper contains no personal information (names, initials, or any other information which could identify an individual person) that would infringe upon that person’s right to privacy.

Conflict of Interest

The authors declare no competing interests.

Appendices

Appendix A: Similarity values

Fig. 5 Illustration of the process of generating training/validation images from target words (e.g. ‘ABDUCT’). The word is drawn with a random font and size. Each letter is then rotated and translated randomly. This procedure is applied to each target word to generate 6000 images, resulting in 6,000,000 images (1000 words \(\times\) 6000 images).

Fig. 6 Pairwise correlation matrix between the human priming data, the DNN models, and other similarity metrics. The values represent Kendall’s \(\tau\) correlation coefficients. Priming-ARB is the human priming size using the arbitrary unrelated prime condition as the baseline; pixCS is the pixel cosine similarity; SCM is the Spatial Coding Model. Note that, in order to obtain positive correlation values, the LDist and SCM values are negated. \(^{*}p<.05\). \(^{**}p<.01\). \(^{***}p<.001\).

Appendix B: Data generation

Figure 5 illustrates how the 1000 target words are used to generate 6000 images per word algorithmically, which involves the following steps:

1. Apply one of the ten common fonts (e.g. Arial). The complete list can be found at the GitHub repository of the current study.
2. Apply one of ten sizes selected from \(\{x \in 2\mathbb{Z} : 18 \le x < 38\}\).
3. Draw the target word as an image.
4. Add random rotation to individual letters using an angle determined by the normal distribution \(N\left(0, \frac{2\pi}{45}\right)\).
5. Add random translation to individual letters so that the updated letter coordinates (x, y) meet the condition shown in Eq. B.1, where (a, b) are the letter’s initial coordinates.
6. Transform the image into grayscale.
7. Resize the image to 224 \(\times\) 224 pixels.

These operations ensure sufficient variation between the generated images to simulate human perceptual invariance to rotation and spacing. At the end of this process, 6,000,000 images are generated (1000 words \(\times\) 6000 images). 5,000,000 images are used for training and the remaining 1,000,000 are used for validating the DNN models’ performance.
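The sampling part of the procedure above can be sketched as follows. This is a minimal illustration rather than the code used in the study: it only draws the random choices for each image (font, size, per-letter rotation and shift) and leaves the actual rendering to an image library. The font names are placeholders, the second parameter of the normal distribution is read here as a standard deviation in radians, and `MAX_SHIFT` is a hypothetical stand-in for the translation bound given by Eq. B.1.

```python
import math
import random

# Placeholder font names; the study's actual list of ten fonts is in its repository.
FONTS = [f"font_{i}" for i in range(10)]
# Even sizes with 18 <= x < 38, i.e. 18, 20, ..., 36 (ten values).
SIZES = list(range(18, 38, 2))
# Assumption: the second parameter of N(0, 2*pi/45) is treated as the SD, in radians.
ROTATION_SD = 2 * math.pi / 45
# Hypothetical bound (in pixels) standing in for the condition in Eq. B.1.
MAX_SHIFT = 2.0


def sample_image_spec(word, rng):
    """Sample the random choices for one image of `word` (steps 1, 2, 4 and 5)."""
    letters = []
    for ch in word:
        letters.append({
            "char": ch,
            "rotation": rng.gauss(0.0, ROTATION_SD),
            "shift": (rng.uniform(-MAX_SHIFT, MAX_SHIFT),
                      rng.uniform(-MAX_SHIFT, MAX_SHIFT)),
        })
    return {
        "word": word,
        "font": rng.choice(FONTS),
        "size": rng.choice(SIZES),
        "letters": letters,
        "mode": "grayscale",         # step 6
        "output_shape": (224, 224),  # step 7
    }


def generate_specs(words, images_per_word, seed=0):
    """Yield `images_per_word` sampled specifications for every word."""
    rng = random.Random(seed)
    for word in words:
        for _ in range(images_per_word):
            yield sample_image_spec(word, rng)


specs = list(generate_specs(["ABDUCT"], images_per_word=5))
print(len(specs), specs[0]["font"], specs[0]["size"])
```

With 1000 words and `images_per_word=6000`, the same generator would yield the 6,000,000 specifications described above, which could then be split 5:1 into training and validation sets.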

Figure 6 illustrates the pairwise Kendall’s correlation matrix between the human priming-ARB and the DNN models and baseline similarity metrics (similarity values are listed in Appendix A).
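As an illustration of the statistic behind such a matrix, the sketch below computes Kendall’s \(\tau\) for one pair of measures in plain Python. It assumes the tie-corrected variant (tau-b), which the text does not specify, and the numbers are invented for illustration only and are not taken from the study. A distance-like measure is negated before correlating so that higher values mean greater similarity.

```python
import math


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences (O(n^2) pair count)."""
    if len(x) != len(y):
        raise ValueError("sequences must have the same length")
    concordant = discordant = ties_x = ties_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx = x[i] - x[j]
            dy = y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied on both measures: excluded from both denominator terms
            if dx == 0:
                ties_x += 1
            elif dy == 0:
                ties_y += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    denom = math.sqrt((concordant + discordant + ties_x) *
                      (concordant + discordant + ties_y))
    return (concordant - discordant) / denom if denom else float("nan")


# Invented example values, one entry per prime condition.
priming_effect = [42, 30, 25, 10, 5]   # hypothetical priming sizes (ms)
edit_distance = [0, 1, 1, 3, 5]        # hypothetical distance-like measure

# Negate the distance so that larger values indicate greater similarity.
similarity = [-d for d in edit_distance]
tau = kendall_tau_b(priming_effect, similarity)
print(round(tau, 3))
```

Applying the same function to every pair of columns (human priming, each model, each baseline metric) would fill a pairwise matrix of the kind shown in the figure.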

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yin, D., Biscione, V. & Bowers, J.S. Convolutional Neural Networks Trained to Identify Words Provide a Surprisingly Good Account of Visual Form Priming Effects. Comput Brain Behav 6, 457–472 (2023). https://doi.org/10.1007/s42113-023-00172-7

DOI: https://doi.org/10.1007/s42113-023-00172-7