Abstract

Two prominent types of uncertainty that have been studied extensively are expected and unexpected uncertainty. Studies suggest that humans are capable of learning from reward under both expected and unexpected uncertainty when the source of variability is the reward. How do people learn when the source of uncertainty is the environment’s state and the rewards themselves are deterministic? How does their learning compare with the case of reward uncertainty? The present study addressed these questions using behavioural experimentation and computational modelling. Experiment 1 showed that human subjects were generally able to use reward feedback to successfully learn the task rules under state uncertainty, and were able to detect a non-signalled reversal of stimulus-response contingencies. Experiment 2, which combined all four types of uncertainties—expected versus unexpected uncertainty, and state versus reward uncertainty—highlighted key similarities and differences in learning between state and reward uncertainties. We found that subjects performed significantly better in the state uncertainty condition, primarily because they explored less and improved their state disambiguation. We also show that a simple reinforcement learning mechanism that ignores state uncertainty and updates the state-action value of only the identified state accounted for the behavioural data better than both a Bayesian reinforcement learning model that keeps track of belief states and a model that acts based on sampling from past experiences. Our findings suggest a common mechanism supports reward-based learning under state and reward uncertainty.

Introduction

Humans are capable of dealing with different types of uncertainty when learning (Soltani & Izquierdo, 2019) and making decisions (Platt & Huettel, 2008). Two forms of uncertainty that have attracted interest in the psychology and neuroscience literature include reward uncertainty and state uncertainty. Reward uncertainty occurs when reward outcomes are generated stochastically from a probability distribution. State uncertainty (also often referred to as perceptual uncertainty; Bruckner et al., 2020) arises when the agent cannot tell for sure what the current state of the world is: its perceptual system might be noisy (e.g. noise in the brain or in sensors); the observations are ambiguous (e.g. reading road signs in challenging weather conditions) or the information about the state is incomplete (e.g. playing card games without knowing the cards dealt to the other opponents or their play strategies).

In addition, uncertainty can be categorised into expected uncertainty and unexpected uncertainty. Uncertainty is considered expected when the rules governing the environment are stochastic but predictable, due, for example, to rewards being generated from a stable probability distribution (Bland & Schaefer, 2012). This definition covers both the case where the uncertainty arises from reward outcomes (we refer to this here as expected reward uncertainty; also sometimes called risk, as, for example, in Bruckner et al., 2022) and the case where the uncertainty arises from the environment’s states (called here expected state uncertainty; though in some previous work, expected uncertainty has been equated with stochasticity arising from reward outcomes, as in Soltani & Izquierdo, 2019). Though definitions of unexpected uncertainty differ, this type of uncertainty refers to the case where there is a sudden and fundamental change in the environment, such as a non-signalled change in the rules of the task, which invalidates the previously acquired rules (Bland & Schaefer, 2012). Another well-studied uncertainty type that is closely related to unexpected uncertainty but fundamentally different is volatility. Volatility occurs when there are frequent non-signalled changes and the frequency of these changes varies across time. More precisely, it captures the variance in the frequency of fundamental changes (Bland & Schaefer, 2012; Piray & Daw, 2021).

Several authors have proposed psychological and neural accounts of reward-based learning under uncertainty when the source of uncertainty lies in the reward outcomes (i.e. when rewards are stochastic; for reviews, see Bland & Schaefer, 2012; Bruckner et al., 2022; Soltani & Izquierdo, 2019). For instance, Yu and Dayan (2005) developed an influential account of the computation of expected and unexpected uncertainty in reward outcomes, where the signalling of these forms of uncertainty is mediated by different neurotransmitter systems (acetylcholine and norepinephrine for expected and unexpected uncertainty, respectively). Other authors have assessed the extent to which people adjust their learning rate according to the volatility of the reward environment, and compared human behaviour to that of an (approximately) optimal Bayesian learner (Behrens et al., 2007; Nassar et al., 2010; Mathys et al., 2011; for a more recent theoretical work, see also Piray & Daw, 2020, 2021).

While such studies have provided important insights into the psychological and neural responses to reward uncertainty, little is known about how people learn from reward when the source of uncertainty is the environment’s state and rewards themselves are deterministic (though see Bruckner et al., 2020, for a recent attempt to explore reward-based learning under state uncertainty). This situation arises when the agent cannot tell for sure what the current state of the world is, and presents the agent with a difficult challenge: should an unexpected reward (or lack of an expected reward) be used to update action values for what the agent believes to be the current environmental state, or does the unexpected reward outcome carry information about the accuracy of identification of the uncertain state itself? Unexpected uncertainty (e.g. in the form of an unexpected change in the state-action mappings) further adds a challenge: an unexpected reward outcome may be down to identifying the state incorrectly or to a change in the environment.

To illustrate, consider an animal that has to decide which food patch to forage in. The animal needs to quickly assess the nutritional value and predation risk of the patch based on uncertain sensory cues (whether visual, auditory or olfactory), even before feeding begins (Mella et al., 2018). For simplicity, say the animal has to learn to choose between two types of patches, A or B, each of which provides reward with a fixed probability (expected reward uncertainty). Assume that patch A is, on average, more rewarding (e.g. more nutritious or safer) for the animal than patch B and that the animal can only detect the type of patch based on the sensory cues with a certain fixed probability (expected state uncertainty). Framing this as a reinforcement learning problem, the animal’s task here would be to learn the value of each patch type (i.e. its long term expected reward, also known as state-value) so that it can maximise its reward from the food patch visits.

One main problem that the animal faces under (expected) state uncertainty conditions is as follows: which state should an obtained reward be assigned to when updating the state-values? Should it be assigned to the patch type that was perceived by the animal as the true state, or to both patch types proportionally to the animal’s beliefs about the true state? This problem is compounded under unexpected uncertainty. For instance, suppose there is an unexpected change in the reward distributions of the two food patches, so patch B becomes better than patch A (perhaps patches of type A suddenly start attracting more predators or have become less nutritious due to some special environmental conditions). Now the lack of an expected reward may be down to either identifying the state incorrectly, or to a change in the environment so that the state-action mapping has changed. How can the animal learn the correct state-action mapping under expected state uncertainty and adapt to the unexpected uncertainty arising from a change in the state-action mapping? How can RL models account for learning under these forms of uncertainty?

Larsen et al. (2010) proposed two plausible models for how agents might learn from reward under state uncertainty. One model was a simple RL model that assumes that people commit to one state being the true state (e.g. animal identifying one patch type as the true state based on uncertain sensory cues), then update the state-action value of only that identified state. Larsen et al. (2010) also suggested a more complex Bayesian RL model where the learner is assumed to update the state-action value of each possible state according to the posterior probability of that state being the true state, given the observed reward. The main difference between these two models is that the Bayesian approach uses the current state-action values along with the observed reward to help inform the beliefs about the true state, whereas the simple model just uses the state information from the environment to make a best guess about the true state. Accordingly, under the simple model, only one state-action value will be updated, whereas the Bayesian model can use the observed reward to update information about all states.
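The difference between the two update rules can be made concrete with a minimal sketch. It is not Larsen et al.'s (2010) implementation: the learning rate `alpha`, the dictionary layout of the value table and, in particular, the way the reward likelihood is read off the current state-action values are assumptions made for illustration.

```python
def wta_update(q, perceived_state, action, reward, alpha=0.1):
    """Simple RL: commit to the perceived state and update only its value."""
    q[perceived_state][action] += alpha * (reward - q[perceived_state][action])
    return q


def bayes_update(q, prior, action, reward, alpha=0.1, eps=1e-6):
    """Bayesian-style RL: spread the update over all states by posterior belief.

    prior[s] is the belief in state s based on the sensory cue alone.
    ASSUMPTION: the likelihood of a binary reward under state s is taken
    from the current value q[s][action], clipped away from 0 and 1.
    """
    likelihood = {}
    for s in q:
        p_reward = min(max(q[s][action], eps), 1.0 - eps)
        likelihood[s] = p_reward if reward == 1 else 1.0 - p_reward
    evidence = sum(prior[s] * likelihood[s] for s in q)
    posterior = {s: prior[s] * likelihood[s] / evidence for s in q}
    # Every state's value moves, weighted by how likely that state was.
    for s in q:
        q[s][action] += alpha * posterior[s] * (reward - q[s][action])
    return q, posterior
```

With all values initialised to 0.5, a rewarded "circle" response changes only the perceived state's value under the simple rule, whereas the Bayesian-style rule changes the "circle" value of both states in proportion to the belief in each.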

Larsen and colleagues showed that the two models behave differently under expected and unexpected uncertainty. Under expected uncertainty, Larsen et al. proved that the Bayesian model converges to the correct state-action values, whereas the non-Bayesian model converged to incorrect values unless the expected rewards for the different state-action pairs were all equal. Under unexpected uncertainty—such as a sudden reversal of stimulus-response contingencies—the simple RL model adapted to the environmental change by updating its state-action value estimates to match the environmental change (even though these value estimates were not correct), while the Bayesian RL model did not (unless the state uncertainty was made small; see Larsen et al., 2010, for further details).

Both models are candidates for model-free reward-based learning under the different types of uncertainty we have reviewed above. Following on from Larsen et al.’s (2010) work and predictions, we had several aims in this study. First, we wanted to understand whether human participants use reward feedback to update both their state estimates and state-action mapping—as stipulated by the Bayesian RL model—or whether they update the state-action mapping for only the estimated state, as assumed in a simple RL model. Second, if participants behave in line with the Bayesian RL model, they are likely to fail to adapt to unexpected uncertainty (i.e. a change in the state-action mapping). Therefore, we wanted to see whether participants will successfully adapt to unexpected uncertainty. Third, we wanted to assess people’s learning under state uncertainty and reward uncertainty separately, allowing a direct comparison of how people deal with expected and unexpected uncertainty in states versus rewards.

A final aim was to compare the two RL models to another type of model that has emerged as a plausible mechanism for learning under uncertainty: models that act based on sampling from past experiences. Sampling models assume that the learner chooses actions that have produced the best payoff in small samples of past trials, usually taken from the recent past (e.g. Chen et al., 2011; Stewart et al., 2006). Recent research has suggested that such models are superior to classic RL models in explaining participants’ learning behaviour in tasks involving reward uncertainty (Bornstein et al., 2017; Bornstein & Norman, 2017; Hochman & Erev, 2013; Plonsky et al., 2015). For instance, Bornstein et al. (2017) developed a simple sampling model that assumes that the decision maker computes the values of the different choice options by using one outcome sampled from the past. This model fit participants’ choices and neural signals better than a classic temporal difference-based RL model. They also showed that the sampling process could be biased by reminding participants of particular trial outcomes, which cannot be accounted for under the RL framework.
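The general idea behind sampling models can be sketched as follows. This is a generic illustration, not the model of Chen et al. (2011) or Bornstein et al. (2017): the function name, the `recent` window, the `sample_size` and the rule of trying any action that is absent from the recent window are all assumptions.

```python
import random


def sample_based_choice(history, actions, sample_size=3, recent=10, rng=None):
    """Choose the action with the best mean payoff in a small sample of recent trials.

    history is a list of (action, reward) tuples, oldest first.
    """
    rng = rng or random.Random()
    window = history[-recent:]
    sampled_mean = {}
    for action in actions:
        outcomes = [r for a, r in window if a == action]
        if not outcomes:
            # ASSUMPTION: an action not tried recently is simply tried again.
            return action
        draws = [rng.choice(outcomes) for _ in range(sample_size)]
        sampled_mean[action] = sum(draws) / sample_size
    best = max(sampled_mean.values())
    # Ties between equally good actions are broken at random.
    return rng.choice([a for a in actions if sampled_mean[a] == best])
```

Because each choice rests on a handful of recent outcomes rather than on a running average, a learner of this kind can change its preference within a few trials of a contingency change.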

In considering the sampling models, we also entertained a variety of Bayesian (approximate-) normative models of learning under uncertainty. These include, for example, the hierarchical Bayesian model of Behrens et al. (2007), the approximate-normative Bayesian model of Payzan-LeNestour and Bossaerts (2011), the hierarchical Gaussian filter of Mathys et al. (2014) and the more recent Kalman-based models of Piray and Daw (2020, 2021). Although these models are relevant to the study of human learning under uncertainty, they have been built primarily to study learning under volatility. Given that our manipulation of unexpected uncertainty takes the form of a single change in the state-action mapping, we do not consider volatility in this paper.

We set up two experiments to investigate how people deal with expected and unexpected state and reward uncertainty. In the first experiment, we focus on state uncertainty, and present a psychophysical reward task in which the states are signalled imperfectly with noisy stimuli so that participants cannot identify the true state with certainty. The states perfectly determine the rewards delivered by different actions. This paradigm allows us to test whether participants can learn the correct rules under this state uncertainty condition. We also introduce a switch (i.e. reversal) of stimulus-response contingencies for some participants without telling them, to see if they can adapt to the unsignalled change (unexpected uncertainty) despite the presence of state uncertainty. In the second experiment, we tackle the question of whether learning under state uncertainty and reward uncertainty is supported by the same mechanism, by adding a condition in which the source of uncertainty is the reward signal instead of the state cues (i.e. the reward function is stochastic while states are deterministic).

We used these data to compare the three computational learning approaches—Bayesian RL, non-Bayesian RL and sampling models—in their account of the learning of different participants. Based on the theoretical predictions from Larsen et al. (2010), it might be expected that in the state uncertainty condition, the pattern of learning of the participants who adapt to the switch will be better described by the simple RL in comparison with the Bayesian model, while the learning behaviour of those who fail to adapt to the switch, or adapt only slowly, will be better explained by the Bayesian RL model. We expected the sampling model of Chen et al. (2011) to be able to handle a variety of adaptation patterns due to its greater flexibility (at the expense of increased computational complexity).

The contribution of this work is twofold. First, our work offers behavioural and computational insights into how people learn under conditions of (expected) state uncertainty. Second, we compared how people learn under expected and unexpected uncertainty when the source of uncertainty lies in the reward versus the state. That is, we assessed whether the mechanisms for dealing with expected and unexpected reward uncertainty generalise to situations in which the uncertainty arises from the state.

Experiment 1

In most previous demonstrations of reward-based learning under expected and unexpected uncertainty, the stimuli were perceptually unambiguous, while the reward was uncertain (being drawn from a probability distribution). In contrast, in Experiment 1, we paired a deterministic reward function with ambiguous stimuli to study the effect of a rule reversal on the ability to perform reward-based learning under state uncertainty.

Participants and Design

Twenty-four participants were tested in Experiment 1. Participants were students from the University of Bristol, or other volunteers recruited using the University email announcement system. Twelve of the participants served as a control group for whom we did not switch the state-action mapping rules; for the remaining twelve participants, we switched the mapping half way through the task. All participants had normal or corrected-to-normal vision. Participants were reimbursed a fixed amount of £4 for participation, and in addition earned a variable amount of up to £5, which depended on their performance on all trials (£0.025 per correct response). The study was approved by the Faculty of Science Human Research Ethics Committee at the University of Bristol.

Material and Procedures

Experimental Setup and Stimuli

Participants were seated in front of a 21-in. Viewsonic G225fB monitor with a resolution of 1024 × 768 pixels and a refresh rate of 85 Hz. The experiment was programmed and run using MATLAB with the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997). The viewing distance was set to \(\sim 57\) cm.

In each trial, participants saw a patch filled with a variable proportion of white and black dots, in the middle of a grey background (see Fig. 1). When the majority of the dots were white, the patch appeared lighter than the background (“light” state). On the other hand, when the majority of the dots were black, the patch was darker than the background (“dark” state). More precisely, the patch contained 400 by 400 white or black pixels (15° × 15°). The luminances for the black, grey and white pixels were respectively equal to 0.1 cd/m², 42 cd/m² and 85 cd/m², so that the black and white pixels were approximately equidistant from the background luminance.

Fig. 1 Sequence in one trial from the calibration phase. First, the subject saw a fixation cross at the centre of the screen for 506 ms (43 frames). Then the stimulus was displayed for 247 ms (21 frames). After that, the subject had to choose between two shapes depending on the state of the patch. The next trial started following a blank screen that was displayed for 506 ms (43 frames). The response shapes were an equilateral triangle of side length 175 px (a visual angle of 7°) and a rectangle of height 350 px (13°) and width 175 px (7°); their centres were positioned randomly on a virtual circle of radius 250 px (10°).

As the stimulus was noisy, the learner could only identify the correct state with a certain probability ρ called level of state uncertainty. In our experiment, we aimed to have ρ around 0.65. Due to individual variation in visual sensitivity, we conducted a preliminary measurement to calibrate the proportion of light and dark pixels in the stimuli per participant, so that performance was at the desired level of ρ.

Procedure

The procedure was the same for the control and test group, except that for the control group, we did not switch the state-action mapping rules at any point. The control condition was set up to examine whether people are able to perform reward-based learning under state uncertainty (similarly, results from the test group before the switch can be used to answer this question), whereas the test condition should inform us about people’s ability to respond to an unsignalled reversal in state-action mapping rules in the presence of state uncertainty, and how this change would affect their learning performance. Participants from both groups completed two phases: a calibration phase and a learning phase.

Calibration Phase

This phase aimed to determine, for each participant, the proportion of white/black pixels that corresponds to the level of state uncertainty ρ ≈ 0.65. That is, we aimed to identify the mixture of dark and light pixels whose state the participant could identify with an accuracy of 65%. For that, we measured a full psychometric function (that is, the proportion of “light” responses as a function of the proportion of white dots) using the method of constant stimuli with 9 proportions of white dots that varied between 0.4 and 0.6 (0.4 corresponding to an easily identifiable dark state, and 0.6 corresponding to an easily identifiable light state) spaced at 0.02 intervals (Kingdom & Prins, 2009). There were 25 presentations at each of the nine contrast levels, for a total of 225 trials. Note that the positions of the white and black pixels varied randomly from trial to trial; hence, two stimuli can have the same proportion of white and black dots but still appear different.

In each trial (Fig. 1), after being presented with the stimulus, participants used the mouse to click on one of two shapes (triangle or rectangle), depending on whether they believed that the state of the patch was light or dark. The (initial) mapping of shape to light/dark response was counterbalanced across participants, so that half of the participants were asked to click on the triangle if they thought the patch was light and the rectangle if the patch was dark; the other half had to use the reversed mapping. To minimise spatial response bias, on each trial the two response stimuli were positioned randomly on a virtual circle and were always on two opposing circle quarters, with the mouse cursor initially placed at the centre of the circle.

Once participants had completed all 225 trials, a cumulative Gaussian psychometric function was fit to the obtained frequencies of light responses for each participant using the Palamedes Toolbox in MATLAB (Prins & Kingdom, 2018); an example is shown in Fig. 2. Using each participant’s fitted function, proportions of white dots that corresponded to the level of state uncertainty ρ = 0.65 for both the light and dark state were computed (for the dark state, this was obtained from the figure by computing the proportion of white dots that corresponded to a frequency of light response equal to 1 − ρ).
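The threshold computation described above amounts to inverting the fitted function at ρ and at 1 − ρ. The sketch below assumes a plain cumulative Gaussian with mean `mu` and standard deviation `sigma` and no guess or lapse parameters; the fitting itself (done with the Palamedes Toolbox in the study) is not reproduced, and the parameter values in the usage note are hypothetical.

```python
from statistics import NormalDist


def rho_thresholds(mu, sigma, rho=0.65):
    """Return the proportions of white dots for the dark and light states.

    ASSUMPTION: P("light" response | proportion x) = Phi((x - mu) / sigma).
    """
    psychometric = NormalDist(mu, sigma)
    light = psychometric.inv_cdf(rho)        # "light" is reported with probability rho
    dark = psychometric.inv_cdf(1.0 - rho)   # "light" is reported with probability 1 - rho
    return dark, light
```

For example, `rho_thresholds(0.5, 0.04)` returns two proportions placed symmetrically around 0.5, because a cumulative Gaussian is symmetric about its mean.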

Fig. 2 Example data and fitted psychometric function. Frequency of light responses as a function of the proportion of white dots for nine contrast levels. On the x-axis, as the percentage of white dots increases, the stimulus would appear lighter to participants. The error bars represent binomial standard errors. The solid curve shows the fitted psychometric function. The dashed lines illustrate how the ρ-thresholds for both the light and dark states are computed using the fitted function.

Learning Phase

The learning phase was used to train participants on the state-value associations. A schematic trial sequence is shown in Fig. 3. Presentation of the stimulus and choice options were as in the calibration phase. In the learning phase, however, participants were not told about the correct mapping between light or dark states and the two response options, so the mapping needed to be learned. In order to avoid any interference from the state-action mapping used in the calibration phase, the new response options were a circle and square instead of rectangle and triangle (the mapping of shape to correct response was counterbalanced across participants, as in the calibration phase). Each trial provided a reward opportunity: in trials where the subject chose the correct response, they received positive reward feedback (£0.025) in the form of a moneybag icon that appeared for 1.52 s (129 frames). In trials where the incorrect response was selected, no reward feedback was given and the next trial was presented immediately after the response and the inter-trial interval of 506 ms (43 frames). Subjects were assigned the task of maximising their rewards, and at the end of the experiment were paid the total accumulated reward across all trials in addition to their reimbursement. The learning phase contained 200 trials.

Fig. 3 The sequence in one trial from the learning phase looked similar to that from the calibration phase. The main difference here is that participants received positive reward feedback (winning £0.025) if they responded correctly. Another difference is that the response shapes were changed to a circle and a square instead of a triangle and rectangle. The circle’s diameter and square’s side length were both equal to 175 px (i.e. within a visual angle of 7°).

Participants in the control condition were exposed to the same reward mapping throughout the task. In the test condition, the mapping rules were switched (without signalling this change to participants) at the 101st trial, so that if the correct (reward-generating) response for the light state (resp., dark state) before the switch was circle (resp., square), after the switch the correct response would become square (resp., circle). Participants were not given any instructions about whether or not a switch might occur prior to the start of the experiment.
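The task structure of the learning phase can be summarised in a short simulation sketch: a deterministic reward rule that reverses at the 101st trial in the test condition, combined with a state cue that is identified correctly with probability ρ. The function names and the particular pre-switch mapping (light to circle, dark to square) are assumptions; in the experiment the mapping was counterbalanced across participants.

```python
import random

OTHER = {"light": "dark", "dark": "light"}


def correct_response(state, trial, switch_trial=101, switched=True):
    """Rewarded response for a state on a 1-based trial number."""
    before = {"light": "circle", "dark": "square"}  # assumed initial mapping
    after = {"light": "square", "dark": "circle"}
    mapping = after if (switched and trial >= switch_trial) else before
    return mapping[state]


def reward(state, response, trial, switch_trial=101, switched=True):
    """Deterministic reward: 1 for the correct response, 0 otherwise."""
    return 1 if response == correct_response(state, trial, switch_trial, switched) else 0


def perceive(state, rho=0.65, rng=None):
    """Noisy state identification: correct with probability rho."""
    rng = rng or random.Random()
    return state if rng.random() < rho else OTHER[state]
```

Setting `switched=False` gives the control condition, in which the same mapping holds for all 200 trials.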

Analysis

Learning Curves

To visually evaluate the dynamics of participants’ learning performance and their ability to adapt to the switch in the mapping rules, we calculated a learning curve for each participant by computing a moving average of the proportion of rewarded responses with a window size of 30 trials over the course of the learning phase (i.e. the averaging was performed over each window of 30 consecutive trials on the binary variable that encodes whether a response was rewarded or not). Since the learning phase consisted of 200 trials, there are 171 moving-average values for each participant, one for each of the 171 windows of length 30; the sequence of these 171 values is henceforth called the participant’s learning curve.
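A minimal sketch of this moving-average computation is given below; the function name and the list-based input are illustrative choices rather than the code used in the study.

```python
def learning_curve(rewarded, window=30):
    """Moving average of a 0/1 reward sequence over every window of consecutive trials."""
    if len(rewarded) < window:
        raise ValueError("need at least one full window of trials")
    running = sum(rewarded[:window])
    curve = [running / window]
    for start in range(1, len(rewarded) - window + 1):
        # Slide the window by one trial: add the new trial, drop the oldest one.
        running += rewarded[start + window - 1] - rewarded[start - 1]
        curve.append(running / window)
    return curve
```

For a sequence of 200 trials and a window of 30, the function returns 200 − 30 + 1 = 171 values.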

Statistical Analyses

To evaluate participants’ learning statistically and understand what factors influenced their learning performance, we used generalised additive mixed modelling.

Generalised additive models (GAMs; Hastie & Tibshirani, 1990; Wood, 2017) are the natural choice for modelling a response variable that varies in a complex non-linear fashion with time, as is the case for our data. These models can adapt to a much wider range of shapes than polynomial regression, which is restrictive, especially with regard to the location of the inflection points (for example, in cubic polynomial regression the location of the first inflection point will often dictate the location of the other inflection point). This is an important consideration for our data: for a participant who adapts to the switch, the fitted curve should have an inflection point around the 101st trial to account for the decrease in performance after the switch, while the second inflection point could be located at any of the next 100 trials, depending on how fast the participant adapts to the switch. GAMs overcome this restriction by dividing the data range into smaller intervals and then applying local polynomial regression separately in each of those intervals.

Concretely, the regression equation in a GAM can be formally expressed as:

\[ g\big(E(Y)\big) = \beta_0 + f_1(X_1) + f_2(X_2) + \cdots + f_p(X_p) + \epsilon \]

where \(Y\) is the response variable, which follows a distribution from the exponential family (e.g. binomial distribution), \(E()\) is the expected value operator, \(X_1, \ldots, X_p\) are the explanatory variables, \(g\) is a link function (e.g. logistic), \(\beta_0\) is the intercept, and \(\epsilon\) is a random error that is assumed to have a constant variance and zero mean. \(f_1, \ldots, f_p\) are unknown smooth functions to be estimated from the data along with \(\beta_0\), as would be the case in a standard generalised linear model (GLM). Each smooth function (also called a smoothing spline) is composed of a weighted sum of basis functions:

\[ f_j(x) = \sum_{k=1}^{K_j} \beta_{jk}\, b_{jk}(x) \]

where the \(b_{jk}\) are the basis functions, which are piecewise-defined polynomial functions, and the \(\beta_{jk}\) are their weights. An example of smoothing bases is cubic regression splines, which are formed by connecting polynomial segments of degree 3. The points where the segments connect are called knots; their number determines the wiggliness of the fitted curve and can be set either manually or using cross-validation with the objective of minimising a penalised residual sum of squares.
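To illustrate what a weighted sum of basis functions looks like, the sketch below uses piecewise-linear "hat" functions, a deliberately simpler basis than the cubic regression splines mentioned in the text. The knot positions and weights in the usage note are hypothetical, and no fitting or smoothness penalty is involved.

```python
def hat(x, knots, k):
    """Piecewise-linear basis function: 1 at knots[k], 0 at every other knot."""
    centre = knots[k]
    if k > 0 and knots[k - 1] <= x <= centre:
        return (x - knots[k - 1]) / (centre - knots[k - 1])
    if k < len(knots) - 1 and centre <= x <= knots[k + 1]:
        return (knots[k + 1] - x) / (knots[k + 1] - centre)
    return 0.0


def smooth(x, knots, weights):
    """Weighted sum of basis functions evaluated at x."""
    return sum(w * hat(x, knots, k) for k, w in enumerate(weights))
```

With knots at trials 1, 50, 100, 150 and 200 and weights 0.5, 0.65, 0.65, 0.4 and 0.65, `smooth` traces a curve that rises, plateaus, dips after trial 100 and recovers. This is the kind of shape a single low-order polynomial over the whole range would struggle to capture.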

Our analysis also required the inclusion of random effects since our data contained repeated measurements of the same participants at multiple trials. Like GLMs, GAMs can be extended with random effects, and they offer even more flexibility in how these effects are captured. Our generalised additive mixed effects models (GAMMs) thus contained by-participant factor smooths for trial order (the non-linear counterpart to random slopes and random intercepts). We started with a saturated model that contained all fixed effects of interest, including their interactions, as well as the (random) by-participant factor smooths for trial order. From the saturated model, we sequentially removed fixed effects using a backward-selection approach based on the Akaike Information Criterion (AIC): at each step, we removed the variable whose removal led to the largest decrease in AIC, and we stopped when the AIC could no longer be improved.
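The backward-selection loop can be sketched generically as follows. The callable `aic_of` is a hypothetical stand-in for refitting the model with a given set of fixed effects and returning its AIC; the sketch also ignores constraints such as keeping main effects while their interactions are still in the model.

```python
def backward_select(terms, aic_of):
    """Greedy backward selection on AIC.

    terms: iterable of fixed-effect names in the saturated model.
    aic_of: callable mapping a frozenset of terms to an AIC value (hypothetical).
    """
    current = frozenset(terms)
    current_aic = aic_of(current)
    while current:
        # Evaluate every model obtained by dropping exactly one term.
        candidates = [(aic_of(current - {t}), t) for t in sorted(current)]
        best_aic, best_term = min(candidates)
        if best_aic >= current_aic:
            break  # no single removal improves the AIC
        current, current_aic = current - {best_term}, best_aic
    return current, current_aic
```

The procedure is greedy: it commits to the best single removal at each step and does not revisit earlier decisions.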

The fixed structure of the statistical model incorporated the experimental condition factor (control versus test condition) and state match factor: whether or not the current (true) state matches the previous (true) state. We introduced state match as it generates a prediction that could help discern between our computational models. Under the simple RL framework (and similarly for the sampling model), updates occur at the level of state-action pairs (see “The WTA-RL Model” section for more details), which should result in the dependence of reward acquisition on the nature of the previous state. This is because visiting a state and receiving reward feedback on that visit is likely to improve the learner’s estimate of the action values associated with that state, which in turn, should promote the selection of the most rewarding action on the next visit of that state. In contrast, if the state is not visited, the learner’s knowledge about how to deal with that state is not updated, and hence no improvement should be observed. Under the Bayesian RL framework, state-action values of both states are updated (according to their posterior probability of being the true state; more details are provided in “The PWRL Model” section). Here, the state match effect should be attenuated since reward information—which can potentially improve learning—will flow to the next state irrespective of whether the next state matches the previous one. In other words, under the Bayesian RL model, the positive effect of re-encountering a state on learning performance is likely to be weaker than under the simple RL model because updates will take place for all states.

To account for the possible interaction between experimental condition, state match and trial order, we included the non-linear interaction by-(condition×state match) factor smooth for trial order (see Footnote 1). We also added the factor reward acquisition at t-1 (whether or not reward was received in the previous trial), primarily to remove autocorrelation in the residuals, but also to assess whether learning effectively took place (a positive effect would provide support for this). The GAMMs were run in R version 4.1.2 (R Core Team, 2021) using the bam() function from the mgcv package version 1.8-40 (Wood, 2017). Interaction graphics were generated using the itsadug package version 2.4.1 (van Rij et al., 2020).
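The two trial-level predictors, state match and reward acquisition at t-1, can be derived from the trial sequence as in the sketch below. The column names are illustrative, and dropping the first trial (which has no predecessor) is an assumption about how that trial is handled.

```python
def code_predictors(states, rewarded):
    """Build one row per trial (from the second trial on) with lagged predictors.

    states: true state on each trial, e.g. "light" or "dark".
    rewarded: 0/1 reward on each trial.
    """
    rows = []
    for t in range(1, len(states)):
        rows.append({
            "trial": t + 1,                                # 1-based trial number
            "state_match": states[t] == states[t - 1],     # same true state as previous trial
            "prev_reward": rewarded[t - 1],                # reward acquisition at t-1
            "rewarded": rewarded[t],                       # response variable
        })
    return rows
```

A table in this long format, with one row per participant and trial, is the usual input for a mixed model of the kind described above.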

Results

We first analysed the behavioural results to see (1) how well participants learned the task in the control condition (and test condition prior to the switch) given the expected state uncertainty, and (2) whether they managed to adapt to the unexpected uncertainty in the test condition despite the presence of state uncertainty. We then performed a more in-depth analysis using GAMMs to assess participants’ learning statistically and understand the impact of the unexpected reversal and other factors on their learning performance.

Learning Performance Under Expected Uncertainty

Figure 4 shows that participants from both the control and test (before the switch) group performed, in general, at the level of the state uncertainty, and that they were able to learn the correct state-action mapping despite the presence of state uncertainty.

Running average score of the “average subject” in the control (solid green line) and test group (solid red line) with a window size of 30. The shading along each curve represents the standard error of the mean, while the light grey region represents an approximate 95% confidence interval (CI) of the moving average of a Bernoulli variable with probability of success equal to 0.65. The CI is constructed to give an indication of the normal range of performance in each window of 30 trials for a subject who has learned the task rules (it is a simple measure to assess learning/unlearning for each point in isolation). A point in the learning curve that is below the CI range suggests that it is unlikely that the (aggregated) participant was using the correct mapping during that window, while a point above the CI range suggests that the participant was performing at a higher level than the target level of uncertainty. The vertical dashed line indicates the trial where the switch occurs in the test condition
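The running average and the reference band described in the caption can be sketched as follows. The window size of 30 and the success probability of 0.65 come from the caption; the trailing (non-centred) window and the normal approximation to the binomial are assumptions, since the exact construction of the approximate interval is not stated.

```python
import math


def running_average(outcomes, window=30):
    """Mean of every consecutive block of `window` binary outcomes."""
    return [sum(outcomes[i:i + window]) / window
            for i in range(len(outcomes) - window + 1)]


def bernoulli_window_ci(p=0.65, window=30, z=1.96):
    """Approximate 95% interval for the mean of `window` Bernoulli(p) draws.

    ASSUMPTION: normal approximation, mean p and standard error
    sqrt(p * (1 - p) / window).
    """
    half_width = z * math.sqrt(p * (1 - p) / window)
    return p - half_width, p + half_width


low, high = bernoulli_window_ci()
print(round(low, 3), round(high, 3))
```

A point of the learning curve below `low` would then suggest that the correct mapping was not in use during that window, and a point above `high` would suggest performance beyond the targeted uncertainty level.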

Learning was confirmed at the individual level when we compared the proportion of rewarded responses on the first block of 20 trials to the proportion of rewarded responses on the last block of 20 trials preceding the “switch trial” (i.e. the 101st trial in both conditions). In fact, as can be seen from Fig. 5 (left pane), before the “switch trial”, 20 out of 24 participants from both groups showed improved performance by the end of the first 100 trials, as indicated by points above the equality line in the figure (note also that the performance of one participant did not increase in the last 20 trials because their reward acquisition rate was already above 75% in the first 20 trials). Also, 20 participants reached a reward acquisition rate above chance in the last block of 20 trials preceding the switch. This suggests that the majority of our participants not only managed to learn the task (without the switch), but were able to do so within 100 trials.
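The block comparison used here amounts to two proportions per participant. A minimal sketch, assuming a participant's data are available as a list of 0/1 reward indicators in trial order (the function name and data layout are hypothetical):

```python
def block_proportions(rewarded, switch_trial=101, block=20):
    """Return the proportion of rewarded responses in the first `block`
    trials and in the last `block` trials before `switch_trial`.

    `rewarded` is a list of 0/1 values, with trial 1 at index 0.
    """
    pre_switch = rewarded[:switch_trial - 1]
    first = sum(pre_switch[:block]) / block
    last = sum(pre_switch[-block:]) / block
    return first, last


# Hypothetical participant: unrewarded early, rewarded later, 200 trials.
first, last = block_proportions([0] * 20 + [1] * 80 + [0] * 100)
improved = last > first        # point above the equality line
above_chance = last > 0.5      # reward acquisition rate above chance
```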

Learning Performance Under Unexpected Uncertainty

Participants were also able, in general, to adapt to the unsignalled change in state-action mapping, as illustrated in Fig. 4 (solid red learning curve). As expected, reward proportion dropped to the level 1 − ρ after the switch, and then appears to have recovered slowly to a level close to the state uncertainty level ρ = 0.65. Figure 5 (right pane) indicates that most participants from the test group (11 out of 12) performed better in the last 20 trials in comparison with the first 20 trials following the switch; however, only 8 participants reached a performance above chance by the end of the testing sequence, and hence could be counted as successful in dealing with unexpected uncertainty.

Individual differences were evident in how participants adapted to the switch. For example, some participants adapted quickly to the switch (within about 30 trials), while other participants adapted late or did not adapt at all to the switch even though they managed to learn the task rules before the switch (see S.1 for a full analysis that constructs individual learning curves and groups them using hierarchical clustering). In addition, a few participants seemed to have performed at a higher level than the targeted level of uncertainty ρ = 0.65 for extended periods of time (see, for example participants 7 and 8 in Fig. S.2).

Analysis of Learning Performance Using Statistical Modelling

The GAMM for reward acquisition that we retained after backward variable selection is presented in Table 1. The proportion of rewarded responses increased over time prior to the switch in the test condition, suggesting a learning trend as was expected (Fig. 6A). A positive trend also occurred in the control condition but did not reach significance (p = .104), attributable to the relatively small sample size in each group. In fact, re-running the same model with data from both conditions restricted to the first 100 trials showed a significant increase with trial order (edf = 1, p = .003). Obtaining reward in the previous trial increased the likelihood of obtaining reward in the current trial, also suggesting that (recency-based) learning took place (β = 0.16, SE = 0.07, z = 2.40, p = .016). Reward likelihood was significantly lower in the test condition than in the control condition for most of the second block of trials (between the 102nd and 188th trials, as highlighted with the red segment in Fig. 6B), confirming that the introduction of the switch negatively impacted participants’ learning performance, and that, overall, the recovery from the switch effect occurred gradually and slowly. There was a very strong positive effect of the state match factor (β = 0.71, SE = 0.06, z = − 11.09, p < .001), indicating that participants were more likely to get rewarded (in other words, respond correctly) when they were presented with the same state as the previous trial.

Partial effect of the significant predictor (corresponds to the contribution of Trial order f(Trial) that would be used in Eq. 1) along with the interaction effect in the generalised additive mixed effects model of reward acquisition in Experiment 1. A Nonlinear regression lines for the test condition. The grey shadings along the curves represent pointwise 95% confidence intervals around the curve estimates. B Difference in fitted logit-transformed proportion of rewarded responses between the control condition and test condition (here the difference excludes the random effects). The red segments indicate where the difference is significant, as assessed on the basis of the 95% CI

Discussion

Participants were, overall, able to learn successfully under expected state uncertainty due to the ambiguous nature of the psychophysical stimuli, and the unexpected uncertainty due to the non-signalled reversal. The speed of adaptation to the switch in the state-action mapping varied considerably across participants. An additional finding was that participants’ learning benefited from re-encountering the same state in two consecutive trials, which could be due to a number of reasons. As we explained when we introduced the state match factor, this could be a simple by-product of how participants keep track of the rules of the task, with value updates occurring at the level of state-action pairs as implicitly assumed in RL models. In such frameworks, getting reward feedback on a visit to a given state should promote selection of the action that will give reward on the next visit of that state, while if the state is not visited, the task knowledge is not updated, and hence no improvement should be observed. The effect of re-encountering a state is likely to be weaker under the Bayesian RL model than under the simple RL model because updates will take place for all states irrespective of which state the learner visits, and thus the effect of the update in the previous trial should be smaller. This prediction is tested later through computational modelling of the data (see S.6 in the Supplementary Material). Another explanation of the positive effect of state match is that participants learned to disambiguate the states better by using reward feedback; that is, getting feedback on trial t helped correctly identify the state on trial t + 1. This interpretation is partially supported by the observation that some participants performed at a higher level than the target uncertainty level, suggesting that they continued to improve in the perceptual discrimination during the learning phase. We address this shortcoming in Experiment 2.

Experiment 2

Experiment 2 introduced a reward uncertainty condition, and also provided a close replication of Experiment 1. Moreover, we attempted to address one shortcoming in the first experiment, which is that some participants performed at a higher level than the targeted level of uncertainty, potentially due to either an imprecision in the estimation of the psychometric function in the calibration phase, or to an improvement in the detection of the noisy states in the learning phase using reward information. Accordingly, we made three changes: we introduced feedback in the calibration phase; increased the number of contrast levels used for the estimation of the ρ-thresholds from 9 to 10; and increased ρ from 0.65 to 0.7 to reduce the state uncertainty (see “Material and Procedure” section for more details).

Participants

Experiment 2 included 61 participants: 31 were randomly allocated to the state uncertainty condition, while the remaining 30 participants were allocated to the reward uncertainty condition. Participants were recruited from the University of Western Australia. As in Experiment 1, participants’ payment included a fixed amount ($5) and an extra bonus payment that depended on their performance (up to $8). The study was approved by the Human Research Ethics Committee at the University of Western Australia. The number of participants was chosen based on previous studies of reward-based learning under uncertainty (e.g. Behrens et al., 2007; Nassar et al., 2010; Wilson & Niv, 2011). In addition, model simulations indicated that 30 participants would be enough to differentiate between the two RL models used in our study in the state uncertainty condition (see S.2).

Material and Procedure

State Uncertainty Condition

The structure and the design of the task in the state uncertainty condition were similar to those of the first experiment, with three major modifications, mainly in the calibration phase, as follows.

First, to assist participants in learning to disambiguate the states in the learning phase, we introduced feedback in the calibration phase. More specifically, after each response, participants were told whether their identification of the patch state (light or dark) was correct or not by displaying on the screen either the word “Correct” in green or “Incorrect” in red. Participants were told to use the feedback to improve their discrimination ability in later trials.

To improve the precision of the estimation of the ρ-thresholds for both the light and dark states in the calibration phase, we increased the number of contrast levels from 9 to 10. We also added 100 trials at the beginning of the calibration to let participants “warm up”. In addition, these warm-up trials were used to produce an initial, rough estimate of the slope of the psychometric function, which then determined the exact contrast levels to use in the actual calibration phase (250 trials: 25 repetitions for each of the 10 contrast levels). Both the feedback and the increased number of trials served a common goal: to ensure that most of the perceptual learning for state classification was complete by the time the state-action learning phase started.

We increased ρ from 0.65 to 0.7, to reduce the extent of individual differences. We expected that the increase in ρ would enable most participants to adapt to the switch, allowing a cleaner comparison of performance between the state uncertainty and reward uncertainty conditions. Nevertheless, a value of ρ = 0.7 still represents a considerable amount of state uncertainty, so that the models can be differentiated. Indeed, initial simulations (see S.2) showed that a level of 0.7 not only made it easier to differentiate between models when fitting them to artificial behavioural data, but also led to more accurate model recovery than the lower value of ρ.

Reward Uncertainty Condition

In the new reward uncertainty condition, the states were unambiguous while rewards were stochastic. The patches used were either completely white (light state) or completely black (dark state), and of the same size as the patches used in the state uncertainty condition. As in the state uncertainty case, participants were required to learn the correct mapping between the two states and two response options by using the reward feedback given to them after each of their responses. To match the uncertainty level used in the state uncertainty condition, we generated rewards following a Bernoulli(0.7) distribution for correct responses and a Bernoulli(0.3) for incorrect responses. The task was otherwise similar to the learning part of the state uncertainty condition in all other aspects, except that obviously there was no calibration phase to construct the light and dark patches.
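The sense in which the two conditions are matched can be sketched with a short simulation. It assumes an idealised learner who already knows the correct mapping and always exploits it, and it codes reward as a 0/1 indicator rather than as a reward magnitude; the function names are hypothetical.

```python
import random

RHO = 0.7  # target uncertainty level in Experiment 2


def state_uncertainty_reward(rng, rho=RHO):
    """State uncertainty: reward is deterministic given state and response,
    so an idealised learner is rewarded exactly when the ambiguous state is
    identified correctly (probability rho)."""
    identified_correctly = rng.random() < rho
    return int(identified_correctly)


def reward_uncertainty_reward(rng, correct=True, p=RHO):
    """Reward uncertainty: the state is unambiguous and reward is drawn from
    Bernoulli(p) for a correct response, Bernoulli(1 - p) otherwise."""
    return int(rng.random() < (p if correct else 1 - p))


rng = random.Random(0)
n_trials = 20000
su_rate = sum(state_uncertainty_reward(rng) for _ in range(n_trials)) / n_trials
ru_rate = sum(reward_uncertainty_reward(rng) for _ in range(n_trials)) / n_trials
print(su_rate, ru_rate)  # both should be close to 0.7
```

In both cases the long-run reward rate of this idealised learner is ρ, although the source of the unrewarded trials differs: misidentified states in one condition, stochastic reward delivery in the other.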

Results

The structure of this section largely follows that of the results section of Experiment 1. We start by visualising the average learning curves under the different forms of uncertainty. The patterns of learning will then be tested statistically using the GAMM approach, focusing in particular on the comparison between state and reward uncertainty.

Learning Performance Under Expected Uncertainty

In addition to the previously used reward-based learning curves, we also included learning curves that are based on accuracy (see Fig. 7). Both types of learning curves were again constructed using running averages with a window size of 30 trials. The main reason for introducing accuracy-based learning curves was that, in the reward uncertainty condition, they provide a different perspective on learning performance than the reward-based learning curves, given the stochasticity in the reward function but not in the accuracy function. That is, a response can be accurate but not rewarded, which can never be the case in the state uncertainty condition (hence the match between the two curves under state uncertainty in the left figure panel). In the reward uncertainty condition, a participant’s accuracy-based learning curve should reach 1 when the participant has learned and has been using the correct rules. The reward-based learning curve should fluctuate around 0.7 in the case of successful rule acquisition, assuming participants exploit this rule with limited or no exploration. In what follows, we will primarily analyse reward-based performance (proportion of rewarded responses) but will make reference to accuracy-based performance when needed during the analysis of the reward uncertainty condition data.

Overall running average scores of the proportion of rewarded responses (red) and correct responses (green) in the state uncertainty (left) and reward uncertainty (right) condition. The running averages were computed using a window size of 30. The shading along each curve represents the standard error of the mean, while the light grey region represents an approximate 95% confidence interval of the moving average of a Bernoulli variable with probability of success equal to 0.7. The vertical dashed line indicates the trial where the switch occurs. Note that the reward-based running average matches the accuracy-based running average in the state uncertainty condition

First, consider the case of (expected) state uncertainty in the first 100 trials before the switch. The aggregated learning curve in Fig. 7 (left panel) suggests that, overall, participants were able to learn the task within the first 100 trials and performed at the target level of state uncertainty ρ = 0.7. Looking at participants individually in Fig. 8 (left), the majority of participants (26 out of 31) reached a reward acquisition rate above chance (0.5) in the last 20-trial block before the switch. Twenty out of 31 participants showed improved performance relative to the first 20-trial block (a further 5 participants did not show performance improvement even with a score above 65% in the last block because they had reached an even higher score in the first block of 20 trials). These results confirm our findings in the first experiment, i.e. the majority of participants were able to deal with expected uncertainty when the uncertainty comes from the states.

In the case of reward uncertainty, participants performed well below those from the state uncertainty group in the first 100 trials, and also below our uncertainty threshold (red curve in the right panel of Fig. 7). The finding that the aggregated accuracy-based curve (in green) is well below 1 confirms that participants, in general, were not exclusively employing the optimal response mapping. However, the upward trends in the learning curves provide evidence that some learning took place. The individual data presented in Fig. 8 (left) show that 22 participants out of 30 reached a performance score above the chance level (points above 0.5), but only half of the participants showed improved performance (points above the equality line in the figure). The results are therefore more mixed in the reward uncertainty condition than in the state uncertainty condition, with no clear majority for those who learned the mapping rules. It is possible that some participants learned the task rules but continued to explore, either because they thought that the rules might change at some point or because they were behaving in accordance with probability matching (Vulkan, 2000).

Learning Performance Under Unexpected Uncertainty

Responses to the switch (in trials 101–200) in the state uncertainty condition were similar to those for Experiment 1 (see the left panel in Fig. 7 and the right panel in Fig. 8). The data suggest that the majority of our participants managed to adapt to the switch (20 out of 31 participants performed above chance in the last 20-trial block post-switch and showed improved performance in comparison with the first block post-switch; a further 3 participants did not show improvement because their performance score was already high in the first 20 trials).

For reward uncertainty, the impact of the unexpected switch on overall performance was less pronounced than in state uncertainty because performance was already low before the switch. However, performance remained around the chance level for most of the trials post-switch (right panel in Fig. 7). The accuracy-based learning curve in green also shows that performance did not recover back to the level it was at prior to the switch. The average proportion of rewarded responses in reward uncertainty was lower than that in the state uncertainty condition except in the first 30 trials post-switch. Figure 8 (right panel) shows that, again, only half of our participants improved their performance relative to the first 20-trial block post-switch and ended up with a reward acquisition score above chance. These results suggest that the switch had a negative impact on participants’ performance in the reward uncertainty condition as well, with even more participants failing to deal with the unexpected switch in comparison with state uncertainty.

Analysis of Learning Performance Using Statistical Modelling

The saturated model was selected as the best model based on backward model selection. This model (see Table 2) included the three-way non-linear interaction between trial order, uncertainty condition and state match (recall that this was coded as a two-way non-linear interaction by combining uncertainty and state match as a single factor), as well as reward acquisition in the previous trial (used mainly to remove temporal residual autocorrelation). Figure 9 presents the significant partial effects involving trial order along with the interaction effects. First, in the state uncertainty condition (SU, panel A), the effect of trial order on the likelihood of receiving a reward, when the states do not match, followed the same pattern encountered in the previous exploratory analyses: an increase in the likelihood of being rewarded with trial order until the occurrence of the switch, then an abrupt decrease in performance before the performance recovers after a few trials. The likelihood of receiving a reward remained significantly higher for matched states in comparison with unmatched states for most of the trials, as can be seen in panel C.

Partial effects (fixed effects only) of the significant predictors along with the interaction effects in the generalised additive mixed effects model of reward acquisition in Experiment 2. A–B Each panel depicts the nonlinear regression line for a combination of uncertainty condition and state match level, with pointwise 95% confidence intervals (grey shading). C Difference in fitted logit-transformed values between matching and non-matching states in the state uncertainty condition. D Same as C but the difference is calculated for responses from the reward uncertainty condition. E Difference in fitted logit-transformed proportion of rewarded responses between state uncertainty and reward uncertainty when the state on the present trial matches that on the previous trial. F Same as E but the difference is calculated for trials where the states do not match. All difference terms were calculated with random effect terms set to 0. The red segments indicate where the differences are significant

In the reward uncertainty condition, the effect of trial order on reward likelihood was non-significant for the unmatched state (p = .274), but was significant for matched states (p = .015), most likely due to the initial large dip in the non-linear regression line; the large dip is due to the high proportion of rewarded responses in the second trial where the state matched that of the first trial (see Fig. 9B). The interaction graph in Fig. 9D shows that the effect of trial order on reward likelihood was almost the same for both matched and unmatched states.

Figure 9E–F compare the state and reward uncertainties. For matched states (panel E), reward likelihood was significantly higher in state uncertainty than in reward uncertainty for all but the first three trials. When the states at t and t − 1 were different (panel F), the reward likelihood was significantly higher in the state uncertainty condition for 28 trials in the middle of the first block of 100 trials (i.e. under expected uncertainty), but the reward likelihood was significantly lower in state uncertainty during the first 40 trials in the second 100-trial block (i.e. after unexpected uncertainty has been introduced). The difference between the state and reward uncertainty conditions was otherwise non-significant.

Discussion

Participants’ performance was better in state uncertainty when measured by reward received. The difference between the two conditions, however, was mainly observed in the first block of 40 trials after the switch when participants encountered a different state than in the previous trial (reward uncertainty was associated with higher reward likelihood during the first few trials post switch, mainly because performance was already low prior to the switch and, therefore, did not get affected much by the change in response mapping). Participants also displayed different switch adaptation patterns in reward uncertainty in comparison with state uncertainty (and often less obvious; see Fig. S.6 in S.1). Moreover, participants’ behaviour appeared to be more exploratory in the reward uncertainty case. These combined effects present a challenge to the computational models, notably the interaction between uncertainty condition and state match as well as the observed pattern of adaptation to the switch for unmatched states in the state uncertainty condition.

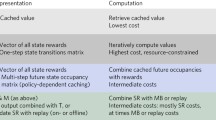

Computational Models

To explain participants’ performance in the two experiments, we compared three computational models. One is a Bayesian temporal-difference-based model, which assumes the learner can use the reward feedback to resolve state uncertainty (Larsen et al., 2010). The second is a simple temporal-difference-based model in which the learner ignores the state uncertainty, and updates only the Q-value (predicted reward) of the state that they believe is the true state (this corresponds to “the winner takes all” model described in Larsen et al., 2010). The third is a simplified version of the learning-by-sampling model of Chen et al. (2011). We aimed to determine whether or not the same computational mechanism underlies learning under both state and reward uncertainty. Below, we describe each model together with its mathematical formulation under both uncertainty conditions.

Description of Models and Modelling Procedure

The PWRL Model

Formulation Under State Uncertainty

PWRL (Posterior Weighted Reinforcement Learning; Larsen et al., 2010) shows how to augment temporal difference learning to deal with state uncertainty. The model allocates reward to each state according to the posterior probability of each state being the true state having observed the reward. The basic idea behind this scheme is that rewards carry information about the true state as do observations, and hence should be used to estimate state probabilities. More precisely, the state-action values Qt(s,a), which here estimate the expected reward for action a (circle or square) in state s (light or dark) at time t, are updated as follows:

\[Q_{t+1}(s,a_{t}) = Q_{t}(s,a_{t}) + \alpha \, \mathbb {P}(S_{t}=s|r_{t},a_{t},i_{t},\boldsymbol {Q}_{\boldsymbol {t}}) \left [r_{t} - Q_{t}(s,a_{t})\right ] \tag{3}\]

where updates are for all possible states and only the chosen action at, α is the learning rate, it is the state that is identified by the learner (it might be different from the true state st), Qt is the vector of all state action values, and \(\mathbb {P}(S_{t}=s|r_{t},a_{t},i_{t},\boldsymbol {Q}_{\boldsymbol {t}})\) is the posterior probability that the true state is s having observed the reward rt.

Expanding \(\mathbb {P}(S_{t}=s|r_{t},a_{t},i_{t},\boldsymbol {Q}_{\boldsymbol {t}})\) using Bayes rule produces the following updating rule for the Q-values (for more detail, see S.3):

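where ρt(s,i) is the probability with which the learner identifies i as the true state, such that their identification i is correct with probability ρ:

\[\rho _{t}(s,i) = \begin{cases} \rho & \text {if } i = s, \\ 1-\rho & \text {otherwise.} \end{cases} \tag{5}\]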

For action selection, we assume that the learner uses the softmax selection rule to compute the probability of each action given the current (true) state, that is:

where T is a temperature parameter, which determines the degree of stochasticity in action selection.
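The role of the temperature parameter can be illustrated with a minimal softmax sketch over the two action values of a single state. This is a generic softmax, not a reproduction of the exact choice equation used for model fitting, and the function name is hypothetical.

```python
import math


def softmax_probs(q_values, temperature):
    """Softmax choice probabilities over action values.

    The maximum is subtracted before exponentiating for numerical
    stability; this does not change the resulting probabilities.
    """
    top = max(q_values)
    exps = [math.exp((q - top) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


# Values for a learned state: reward 2.5 for one action, 0 for the other.
low_T = softmax_probs([2.5, 0.0], temperature=1.0)    # near-deterministic choice
high_T = softmax_probs([2.5, 0.0], temperature=10.0)  # close to random choice
print(low_T, high_T)
```

A low temperature concentrates choice on the higher-valued action (exploitation), whereas a high temperature pushes the probabilities towards 0.5 (exploration).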

To sum up, the steps followed by the learner in each trial under this model are as follows: First, they observe a noisy input from the environment (whose state can be correctly identified with probability ρ). Second, they select an action according to the softmax choice function (see Eq. 6). Third, the reward feedback is used to calculate the posterior probability of each state being the correct state (i.e. \(\mathbb {P}(S_{t}=s|r_{t},a_{t},i_{t},\boldsymbol {Q}_{\boldsymbol {t}})\)). Fourth, they update the state-action values using Eq. 4. This cycle is repeated with each new stimulus.
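The four steps above can be sketched as a single trial function. This is a minimal sketch under stated assumptions, not the authors' implementation: the reward likelihood is taken to be Gaussian around the current value estimate with a hypothetical width `sigma` (the actual likelihood is specified in S.3), the simulated learner chooses on the basis of the identified state, and the pairing of "circle" with the light state is an arbitrary illustrative choice.

```python
import math
import random

STATES = ("light", "dark")
ACTIONS = ("circle", "square")


def softmax_probs(values, temperature):
    """Softmax choice probabilities over a list of action values."""
    top = max(values)
    exps = [math.exp((v - top) / temperature) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def posterior_over_states(Q, identified, action, reward, rho, sigma):
    """Posterior probability of each state given the reward (Bayes' rule).

    ASSUMPTION: Gaussian reward likelihood centred on Q[(s, action)] with
    hypothetical width sigma; prior rho for the identified state.
    """
    unnorm = {}
    for s in STATES:
        prior = rho if s == identified else 1.0 - rho
        error = (reward - Q[(s, action)]) / sigma
        unnorm[s] = prior * math.exp(-0.5 * error ** 2)
    total = sum(unnorm.values())
    if total == 0.0:  # both likelihoods underflowed: fall back on the prior
        return {s: (rho if s == identified else 1.0 - rho) for s in STATES}
    return {s: w / total for s, w in unnorm.items()}


def pwrl_trial(Q, true_state, reward_fn, rho, alpha, temperature, sigma, rng):
    """Run one trial, updating Q in place."""
    # Step 1: noisy observation, identified correctly with probability rho.
    other = STATES[1] if true_state == STATES[0] else STATES[0]
    identified = true_state if rng.random() < rho else other
    # Step 2: softmax choice (ASSUMPTION: based on the identified state).
    probs = softmax_probs([Q[(identified, a)] for a in ACTIONS], temperature)
    action = rng.choices(ACTIONS, weights=probs)[0]
    reward = reward_fn(true_state, action)
    # Step 3: posterior probability of each state given the reward.
    posterior = posterior_over_states(Q, identified, action, reward, rho, sigma)
    # Step 4: posterior-weighted update of every state, chosen action only.
    for s in STATES:
        Q[(s, action)] += alpha * posterior[s] * (reward - Q[(s, action)])
    return identified, action, reward, posterior


# Converged pre-switch values (hypothetical mapping: circle is correct for light).
Q = {("light", "circle"): 2.5, ("light", "square"): 0.0,
     ("dark", "circle"): 0.0, ("dark", "square"): 2.5}
post = posterior_over_states(Q, "light", "circle", 0.0, rho=0.65, sigma=0.5)
print(post)  # posterior mass is almost entirely on "dark"
```

With converged values, a null reward after the previously correct action shifts nearly all posterior mass to the other state, so the value of the true state is barely updated.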

Although this model makes optimal use of reward feedback to get information about the current state before updating the state-action values, it can suffer from difficulties when a change in mapping rules is introduced, as shown in Larsen et al. (2010). In fact, consider the following example, which is taken from the same paper and adapted to our case. Let us first denote by sL and sD the light and dark state respectively, and by aL and aD the correct “light” and “dark” response before the switch. Prior to the switch—in our experimental setting—the reward for choosing aL in state sL is 2.5, whereas it is 0 if aD is selected in sL. In trial 101, the rewards switch, so that Rt(sL,aL) = 0 and Rt(sL,aD) = 2.5, for t > 100. Assume that by t = 100, the model has learned the correct state-action values, so that Q100(sL,aL) = 2.5 and Q100(sL,aD) = 0. Suppose also that the true state at t = 101 is sL, so that the probabilities of detecting each state as the true state are: ρt(sL,sL) = 0.65 and ρt(sL,sD) = 0.35 (from now on, we consider t to be equal to 101). Let us say the learner chooses at t what they believe to be the best action, aL. Because the mapping rules have switched, their action would result in a null reward. Thus, the posterior probability that the state is sL is given by (from Eq. S.2):

This means that the learner would believe with certainty, after observing the null reward, that the true state is sD rather than sL, and hence would not change Qt(sL,aD), thereby continuing to use the outdated state-action mapping.
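The arithmetic behind this conclusion can be sketched with Bayes' rule. The sketch assumes that the learner identified the state as sL, that the converged pre-switch value for the other state is Q100(sD,aL) = 0, and that with converged values the likelihood of a null reward after aL is 0 under sL and 1 under sD. The exact likelihood used in the model is given in S.3 and is not reproduced here.

\[
\mathbb {P}(S_{t}=s_{L}|r_{t}=0,a_{t}=a_{L},i_{t}=s_{L},\boldsymbol {Q}_{\boldsymbol {t}})
= \frac{0.65 \times 0}{0.65 \times 0 + 0.35 \times 1} = 0,
\qquad
\mathbb {P}(S_{t}=s_{D}|r_{t}=0,a_{t}=a_{L},i_{t}=s_{L},\boldsymbol {Q}_{\boldsymbol {t}}) = 1 .
\]

Under these assumptions, the posterior weight on sL is zero, so the values stored for sL receive no update on this trial.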

Note that, in this example, we have assumed that the estimated state-action values have converged to the correct ones to illustrate why the PWRL model might get stuck with using the same mapping after the switch. The model can potentially overcome this, for example by using a very low value for the learning rate to avoid convergence. However, a low learning rate slows down adaptation to the switch, because too much of the irrelevant (pre-switch) reward history is integrated in the value estimates. Note that the actual rate of adaptation is not just determined by the learning rate, but also by the rate of exploration (for a discussion of the intercorrelation between learning and exploration rates in RL models, see Daw, 2011). Accordingly, we expect adaptation in the PWRL model to be absent or, if the model does successfully switch after trial 100, slow.

Formulation Under Reward Uncertainty

In the reward uncertainty condition, the PWRL model becomes equivalent to a standard SARSA (State-Action-Reward-State-Action) model (Rummery & Niranjan, 1994), with state-action values updated on each trial as follows:

\[Q_{t+1}(s_{t},a_{t}) = Q_{t}(s_{t},a_{t}) + \alpha \left [r_{t} - Q_{t}(s_{t},a_{t})\right ] \tag{8}\]

where α is the learning rate, rt is the Bernoulli generated reward received at t, st and at refer respectively to the current state and current action. Equation 8 follows from Eq. 3 given that the posterior probability that the true state is st given that the reward is rt is \(\mathbb {P}(S_{t}=s|r_{t},a_{t},i_{t},\boldsymbol {Q}_{\boldsymbol {t}}) = 1\).

The WTA-RL Model

Formulation Under State Uncertainty

One simple approach to solving reward-based learning tasks with state uncertainty is to ignore the uncertainty and simply update the Q-value of the identified state in each trial. This is the basic idea behind the “winner takes all” reinforcement learning (WTA-RL) model presented by Larsen et al. (2010). More specifically, the model assumes that, in trial t, the learner identifies the true state as it (via a sampling process that correctly identifies the true state with probability ρ), then updates the Q-value for only that identified state. Thus, in our experimental setting, the learner would update the right state-action value, on average, with frequency ρ. Under this scheme, the updating equation for the state-action values is given by:

\[Q_{t+1}(i_{t},a_{t}) = Q_{t}(i_{t},a_{t}) + \alpha \left [r_{t} - Q_{t}(i_{t},a_{t})\right ] \tag{9}\]

Using the state-action values, the learner selects actions according to the softmax selection rule defined in Eq. S.6. This model is hence similar to a SARSA model with the difference being that it updates Qt(it,at) instead of the Q-value for the current state st, Qt(st,at).
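A minimal simulation sketch of the WTA-RL scheme on a task with an unsignalled reversal is given below. The parameter values (`alpha`, `temperature`), the zero initial values, the equiprobable states and the action labels are illustrative assumptions rather than fitted or reported quantities; the reward size of 2.5 and the switch after trial 100 follow the task description.

```python
import math
import random

STATES = ("light", "dark")
ACTIONS = ("a_light", "a_dark")


def softmax_probs(values, temperature):
    """Softmax choice probabilities over a list of action values."""
    top = max(values)
    exps = [math.exp((v - top) / temperature) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def wta_update(Q, identified, action, reward, alpha):
    """Update only the value of the identified state and chosen action."""
    Q[(identified, action)] += alpha * (reward - Q[(identified, action)])


def simulate_wta(n_trials=200, switch_at=100, rho=0.65, alpha=0.3,
                 temperature=0.3, reward_size=2.5, seed=0):
    """Simulate one WTA-RL learner; returns final values and reward sequence."""
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    rewards = []
    for t in range(n_trials):
        true_state = rng.choice(STATES)
        other = "dark" if true_state == "light" else "light"
        # The state is identified correctly with probability rho.
        identified = true_state if rng.random() < rho else other
        probs = softmax_probs([Q[(identified, a)] for a in ACTIONS], temperature)
        action = rng.choices(ACTIONS, weights=probs)[0]
        # Reward depends on the TRUE state; the mapping reverses at the switch.
        matches = action == "a_" + true_state
        correct = matches if t < switch_at else not matches
        reward = reward_size if correct else 0.0
        wta_update(Q, identified, action, reward, alpha)
        rewards.append(reward)
    return Q, rewards


Q_final, reward_seq = simulate_wta()
pre = sum(r > 0 for r in reward_seq[70:100]) / 30
post = sum(r > 0 for r in reward_seq[170:200]) / 30
print(pre, post)
```

Because the update is applied to the identified state regardless of what the reward implies about the true state, a run of unrewarded trials after the switch lowers the outdated values directly, which is what allows this scheme to re-learn the mapping.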

One advantage of this model is that it adapts well to a switch of contingencies, as it does not suffer from the problem of self-confirming beliefs encountered with the PWRL model. However, the WTA-RL model is not assured to converge to the correct state-action values (Larsen et al., 2010). Nevertheless, learning incorrect Q-values does not necessarily result in incorrect actions, and hence the model can still be applied to solve learning tasks with state uncertainty.

Formulation Under Reward Uncertainty

Just like the PWRL model, WTA-RL also becomes equivalent to a SARSA model under reward uncertainty. In fact, the SARSA updating rule for the state-action values (Eq. 8) is obtained from that of the WTA-RL model (Eq. 9) by replacing the identified state it by the true state st, since the states are unambiguously identified by the learner.

On this account, both the WTA and PWRL models predict that humans would follow a SARSA-type learning behaviour in the reward uncertainty condition, while predicting different behaviours in the state uncertainty condition. In this condition, under PWRL, either no or very slow adaptation would be observed, whereas the opposite is expected under WTA-RL.

The BI-SAW Model

Formulation Under State Uncertainty

We extend the sampling-based BI-SAW model (Bounded memory, Inertia, Sampling and Weighting; Chen et al., 2011), which was originally developed to account for human reward-based learning in binary stateless bandit tasks, to the case of multiple-state environments. For consistency with the two RL models, we replace the exploration rule in the original model (𝜖-greedy followed by a Bernoulli-distributed choice between the two actions) with a softmax exploration rule. We also exclude the assumption of inertia (i.e. tendency to repeat the last choice).

The model assumes that the learner chooses actions based on small samples of experiences drawn from the recent past, while also considering the overall trend of rewards from all past trials. The extent to which the learner relies on a small sample of the most recent trials or the overall reward trend is modulated by a subject-specific weight parameter. This property makes the model well suited to capturing different possible learning behaviours in tasks that include an unexpected change, such as the one we use here. For example, a fast adaptation to the switch can be simulated by increasing the weight associated with the small sample component, while a slow adaptation to the switch can be simulated by increasing the weight of the overall reward trend.

More specifically, each state-action pair is associated with an Estimated Subjective Value (ESV) that is computed on each trial t > 1 as follows:

where it is the identified state on trial t. Index a refers to each possible action (light or dark response in our case). Parameter ω determines the weight that is given to GrandMt(it,a), the grand average payoff from choosing action a in state it over all past experience. SampleMt(it,a) is the average reward for choosing action a in state it within a sample of μ past payoffs drawn from the most recent b trials. The sampling process is assumed to be independent and with replacement such that, in each draw, the most recent trial (t − 1) has a probability pr of being chosen, while each of the remaining (b − 1) trials has an equal probability of being selected. To simplify the model further, we restrict μ to be equal to b; that is, the learner samples with replacement exactly b payoffs from the b most recent ones. Finally, the learner is assumed to select an action according to the softmax rule using the computed ESVs.
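One plausible reading of the ESV computation described above is sketched below. It assumes the standard I-SAW weighting, ESV = (1 − ω) · SampleM + ω · GrandM, and further assumes that the payoff history passed in is already restricted to one (identified state, action) pair. The function names and the handling of histories shorter than b are hypothetical choices made for illustration.

```python
import random

def grand_mean(payoffs):
    """Average payoff over all past experience for one state-action pair."""
    return sum(payoffs) / len(payoffs)

def sample_mean(payoffs, b, p_r, rng):
    """Mean of b draws (with replacement) from the b most recent payoffs.

    The most recent payoff is drawn with probability p_r; the remaining
    recent payoffs share the probability 1 - p_r equally.
    """
    recent = payoffs[-b:]
    n = len(recent)
    if n == 1:
        return recent[0]  # assumption: with a single payoff, nothing to sample
    weights = [(1.0 - p_r) / (n - 1)] * (n - 1) + [p_r]
    draws = rng.choices(recent, weights=weights, k=b)
    return sum(draws) / b

def esv(payoffs, omega, b, p_r, rng):
    """Estimated Subjective Value under the assumed weighting."""
    sample_part = sample_mean(payoffs, b, p_r, rng)
    return (1.0 - omega) * sample_part + omega * grand_mean(payoffs)

# Hypothetical history of payoffs, oldest first, with a recent change.
rng = random.Random(1)
history = [1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
recent_focused = esv(history, omega=0.1, b=3, p_r=0.5, rng=rng)
trend_focused = esv(history, omega=0.9, b=3, p_r=0.5, rng=rng)
```

A small ω lets the value track the most recent payoffs, while a large ω anchors it to the long-run average, which corresponds to the fast and slow adaptation patterns mentioned in the text.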

The model is similar to the WTA-RL model in that both assume that the learner identifies one of the states as the true state, then calculates the value(s) associated with that state. The main difference between the two models is that the subjective values in the BI-SAW model are not updated based on previous values but are calculated afresh on each new trial.

Formulation Under Reward Uncertainty

In the reward uncertainty case, the learner can identify the correct state with certainty, and will be positively rewarded for choosing the correct response with probability ρ and positively rewarded with probability 1 − ρ even if they select the incorrect response. Therefore, the sampling model predicts that people’s learning behaviour should be identical in the two uncertainty conditions, since the distribution of the sequences of rewards is identical in the two conditions.

Model Evaluation

To compare the models in their account of participants’ choices, we estimated the free parameters of each of the three models separately for each participant using (approximate) maximum likelihood estimation. More specifically, we selected model parameters that maximised the log-likelihood of observed actions conditioned on the previously encountered observations and rewards (Daw, 2011). A detailed description of how the likelihood functions were computed and implemented is provided in the Supplementary Material in S.4.

To compare the goodness of fit of the models, we computed the Bayesian information criterion (BIC) for each subject and model using the maximised log-likelihood for that subject. To determine which model was substantially supported by the data from each participant, we converted the BIC scores of each pair of models into a Bayes factor using the following approximation (Kass & Raftery, 1995):

where BF12 is the Bayes factor for model M1 against M2. We then considered a model to be the winning model if its Bayes factor against the model with the second-best BIC score was greater than 3.2, indicating substantial evidence for the model according to Jeffreys’s (1961) scale of evidence. Bayes factors lower than 3.2 were taken as providing inconclusive evidence for differentiating between the models, and in this case no model was declared the best-fitting model.
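The selection procedure can be illustrated with a short sketch. It assumes the usual BIC convention, BIC = −2 ln L + k ln n (lower is better), and the commonly used form of the Kass and Raftery approximation, BF12 ≈ exp((BIC2 − BIC1)/2). The function names and the BIC values in the example are hypothetical and are not taken from the fitted data.

```python
import math

def bic(log_likelihood, n_params, n_obs):
    """BIC under the assumed convention: lower values indicate a better fit."""
    return -2.0 * log_likelihood + n_params * math.log(n_obs)

def bayes_factor_from_bic(bic_1, bic_2):
    """Approximate Bayes factor for model 1 against model 2."""
    return math.exp((bic_2 - bic_1) / 2.0)

def winning_model(bic_by_model, threshold=3.2):
    """Return the best model's name, or None if the evidence is inconclusive."""
    ranked = sorted(bic_by_model, key=bic_by_model.get)  # best (lowest) first
    best, runner_up = ranked[0], ranked[1]
    bf = bayes_factor_from_bic(bic_by_model[best], bic_by_model[runner_up])
    return best if bf > threshold else None

# Hypothetical BIC scores for one participant.
scores = {"WTA-RL": 100.0, "PWRL": 104.0, "BI-SAW": 110.0}
winner = winning_model(scores)
```

Under these assumptions, a BIC difference of about 2.33 between the two best models corresponds to the threshold of 3.2, since 2 ln 3.2 ≈ 2.33.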

The analysis of participants’ learning curves reported earlier indicated that several participants performed at a significantly higher level than ρ in the state uncertainty conditions, suggesting that their real level of state uncertainty might deviate from the targeted level (i.e. ρ = 0.65 in Experiment 1 and ρ = 0.7 in Experiment 2). To accommodate such deviations, we fit the models with and without ρ as a free parameter, and found that treating ρ as a free parameter produced better fits for all models and conditions, as judged by the BIC scores. Hence, in the main text, we present only the fitting results with ρ as a free parameter (the fitting results with ρ fixed are presented in the Supplementary Material in S.9).

Results and Discussion

Here, we focus on the model fitting results for the second experiment, which are summarised in Table 3 (we report the results for the first experiment in S.7 since they were inconclusive). The estimated ρ in the state uncertainty condition was markedly higher than the targeted level of 0.7 and consistent across all three models. This suggests that despite the measures introduced in the second experiment to control the uncertainty level, some participants still managed to improve their capability of discriminating between the noisy states. Furthermore, the estimated exploration rate T provides a hint as to the reason for the lower performance in the reward uncertainty condition, as exploration for all three models was greater in the reward uncertainty condition than in the state uncertainty condition. For example, WTA-RL, the best-fitting model as will be shown below, had a significantly lower exploration rate under state uncertainty (Mdn = 5.04) in comparison with reward uncertainty (Mdn = 8.12), as indicated by a Wilcoxon rank-sum test, W = 296, p = .015, r = −.31. Learning rate α did not differ significantly between state uncertainty (Mdn = 0.34) and reward uncertainty (Mdn = 0.33); W = 450, p = .834, r = −.03.

A comparison between observed and simulated learning curves in both uncertainty conditions (see Fig. 10) shows that all computational models qualitatively captured the behavioural choices well, certainly in the state uncertainty condition. In this condition, the simple RL model (WTA-RL) provided a better qualitative account of participants’ choices, most noticeably in the post-switch trials. In the reward uncertainty condition, the RL and sampling-based models were hard to differentiate. In addition, both the RL and sampling models seem to have underestimated the proportion of rewarded responses for most trials, suggesting a lower quality of model fit in comparison with state uncertainty. This is partly explained by the complete misfit of several participants’ learning curves as shown in Figs. S.18 and S.19 (e.g. subj 13, 16, 18, 24, 27 and 30 where the models simply behaved randomly).

Comparison of the reward-based learning curves of participants and fitted models in both the state uncertainty (left) and reward uncertainty (right) conditions. To draw model learning curves, we first constructed a learning curve for each subject by averaging over 100 simulations with her fitted parameters, then averaged across participants to get the overall learning curve. The light grey region represents an approximate 95% confidence interval of the moving average of a Bernoulli variable with probability of success equal to 0.70. The vertical dashed line indicates the trial where the switch occurs

To compare the model fits quantitatively, we evaluated the BIC values of our models and computed the number of participants best fitted by each model based on Bayes factors, as described in the model evaluation section. The results are summarised in Fig. 11. In the state uncertainty condition, the WTA-RL model had a better overall BIC score and was the best-fitting model for twice as many participants as were best fit by PWRL (14 versus 7). None of the participants’ data was best fit by the BI-SAW model. In the reward uncertainty condition, the SARSA model—the equivalent version of the Bayesian and simple RL models used in the state uncertainty condition—was the best fitting model for twice the number of participants as were best fit by the sampling model (16 versus 8), though the median BIC score slightly favoured the sampling model. These results demonstrate that the simple RL model explained participants’ choices the best in both state uncertainty and reward uncertainty conditions. We link individual differences in the learning patterns to the model fits in S.5 of the Supplementary Material. We also show in Section S.6 that the simple RL model was able to recover the effects observed in the GAMM analysis of the behavioural data from Experiment 2 reported in “Analysis of Learning Performance Using Statistical Modelling” section.

General Discussion

Summary of the Results

We aimed to assess how people learn from reward feedback under conditions of expected and unexpected uncertainty, and to compare their learning behaviour under conditions of state and reward uncertainty. Previous studies have investigated expected and unexpected uncertainty arising from reward and mostly focused on volatility (e.g. Behrens et al., 2007; Nassar et al., 2010; Payzan-LeNestour et al., 2013; Piray & Daw, 2020, 2021; Wilson & Niv, 2011; Yu & Dayan, 2005), but not when the source of uncertainty is the environment’s state (Bach & Dolan, 2012; Ma & Jazayeri, 2014; Mathys et al., 2014). Importantly, we carefully matched the degree of reward uncertainty and state uncertainty, and subjected performance under both conditions to the same analyses so that they could be compared directly. We showed that most participants were able to adapt successfully to all three types of uncertainty; participants managed to learn the correct state-action mapping under expected state uncertainty and expected reward uncertainty, and managed to adapt to the unexpected uncertainty due to a non-signalled reversal in the state-action reward contingency. This is in line with and goes beyond previous work that looked at reward-based learning under expected reward uncertainty (Don et al., 2019), expected state uncertainty (Bruckner et al., 2020) and unexpected reward uncertainty (Brown & Steyvers, 2009). Surprisingly, participants collected more reward under state uncertainty than under reward uncertainty, partly due to their higher exploration rate in the reward uncertainty condition.

To identify the psychological mechanisms underlying participants’ behaviour, we used two RL models for learning under state uncertainty that were described in Larsen et al. (2010). The first model, named WTA-RL (Winner-Takes-All), is a simple RL model that, on each trial, selects an action based on the state identified by the learner. Importantly, this model only updates the state-action value for the identified state even though the state may have been identified incorrectly. The second model is PWRL (Posterior Weighted RL), which is a Bayesian RL model that selects actions based on belief states, and updates the values of all states rather than only the one state that is identified (Jaakkola et al., 1995). These two RL models become identical in the reward uncertainty condition, where the state is known with certainty. We also included a third model, BI-SAW (Bounded memory, Inertia, Sampling and Weighting; Chen et al., 2011), that selects actions based on a small sample of past rewards as well as the average reward over all trials. We adapted this model to the case of state uncertainty by ignoring the state uncertainty and using only the state that was identified by the learner on each trial, as assumed under the simple RL model. Our modelling results showed that participants’ learning patterns were well captured by the simple RL model under both state and reward uncertainty, suggesting a common mechanism for dealing with these two types of uncertainty.

More specifically, under state uncertainty, the simple RL model accounted for participants’ choices best by far. However, the Bayesian RL model captured the behaviour of those who did not adapt to the switch and some participants who adapted slowly. None of the participants was best fit by the sampling model. In the reward uncertainty condition, the RL framework explained how participants learned to maximise reward better than the sampling model at the level of individual subjects, although there was some indication that, for the sample as a whole, the BI-SAW model was competitive.

Relationship with Belief-State-Based Learning

Several recent studies have found that belief-state-based models provide a good account of the dopaminergic response to reward prediction errors in animals (Babayan et al., 2018; Lak et al., 2017, 2020; Starkweather et al., 2017). However, most of these studies used prior rather than posterior beliefs over states and none compared their belief-state-based models to an RL model that commits to a state during both the decision and update stages, as the WTA-RL model does. Therefore, a direct comparison between a posterior belief state–based RL model and a state “committing” RL model has not been carried out in any of these studies. Starkweather et al. (2017) administered a Pavlovian conditioning task to mice in which state uncertainty arose from temporal (as opposed to perceptual) cues, as cue-reward interval lengths were varied and reward was occasionally omitted. They showed that a (posterior) belief state temporal difference model explains mice’s dopamine reward prediction errors better than a standard temporal difference model with a complete serial compound representation (see, for example, Moore et al., 1998).

In a follow-up study, Babayan et al. (2018) proposed a different Pavlovian task where state uncertainty was due to ambiguous visual cues. Their belief-state model is quite similar to the PWRL model, but with values being computed over continuous belief vectors through linear approximation (their task was designed in such a way that the nature of the state could be inferred from the reward signal). They found that this model fit the dopaminergic response in mice better than a stateless RL model (though a state-“committing” model could have been included for a more stringent test of the belief-state model). Interestingly, the values extracted from their fitted belief-state model also accounted well for the anticipatory licking patterns of mice, even though the licking data were not used in model fitting. Lak et al. (2017, 2020) provided support for belief-state models by linking measures of response confidence extracted from their models to the dopaminergic response in monkeys and mice, respectively.