Abstract

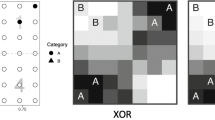

An important question in the cognitive psychology of category learning concerns the manner in which observers generalize their trained category knowledge at time of transfer. In recent work, Conaway and Kurtz (Psychonomic Bulletin & Review 24:1312–1323, 2017) reported results from a novel paradigm in which participants learned rule-described categories defined over two dimensions and then classified test items in distant transfer regions of the category space. The paradigm yielded results that challenged the predictions from both exemplar-based and logical-rule-based models of categorization but that, the authors suggested, were as predicted by a divergent auto-encoder (DIVA) model (Kurtz, Psychonomic Bulletin & Review 14:560–576, 2007; Kurtz, Psychology of learning and motivation, Academic Press, New York, 2015). In this article, we pursue these challenges by conducting replications and extensions of the original experiment and fitting a variety of computational models to the resulting data. We find that even an extended version of the exemplar model that makes allowance for learning-during-test (LDT) processes fails to account for the results. In addition, models that presume a mixture of salient logical rules also fail to account for the findings. However, as a proof of concept, we illustrate that a model that assumes a mixture of strategies across subjects (some relying on exemplar-based memories with LDT, and others on salient logical rules) provides an outstanding account of the data. By comparison, DIVA performs considerably worse than does this LDT-exemplar-rule mixture account. These results converge with past ones reported in the literature that point to multiple forms of category representation as well as to the role of LDT processes in influencing how observers generalize their category knowledge.

Data Availability

The datasets generated and analyzed during the current study, including the individual-subject data from the experiments reported in this article and other relevant information, are available at https://osf.io/m79qd/.

Code Availability

The FORTRAN subroutines used for generating predictions from the Divergent Autoencoder (DIVA) model and the mixed LDT-exemplar-plus-rule model are available at https://osf.io/m79qd/. All other model source code is available from the first author upon request.

Notes

As described in our Formal Modeling Analyses section, we formalize “logical rule models” by using a multidimensional signal-detection framework in which the decision criteria are orthogonal to the coordinate axes of the stimulus space (e.g., Ashby & Gott, 1988).

We refer to the transfer quadrants W, Y, and Z as “far away” or “distant” because they include values on the component dimensions that go completely outside the range of values experienced during the training phase. Indeed, for quadrant Y, these far-away values occur on both dimensions of the transfer stimuli. Admittedly, the descriptors “far-away” and “distant” may be less appropriate for quadrant X.

In these analyses, we computed proportion correct giving equal weight to each individual training-item token. The same statistically significant pattern of results is obtained, however, if proportion correct is computed separately for the A tokens and B tokens and then averaged.

The Greenhouse–Geisser correction was applied for violation of the sphericity assumption.

This is analogous to the common procedure of forming median splits on subject performance across conditions and focusing modeling analysis, say, on the upper median of each condition. In any case, this detailed subject-retention procedure affects the retention of only a very small proportion of subjects. Had we retained for modeling analysis all subjects from Condition 2 who met the 83% correct criterion, any differences in the patterns of model-fitting results would have been minor, and none of our conclusions would change.

The Equation-10 log-likelihood statistic assumes that the distribution of responses into the frequency distributions follows a multinomial distribution. It is straightforward to show that minimizing the log-likelihood statistic of Eq. 10 is equivalent to minimizing the Kullback–Leibler (KL) divergence (Kullback & Leibler, 1951), a measure of the extent to which one probability distribution is different from a second. However, unlike KL-divergence, use of the log-likelihood statistic allows one to use measures of fit that penalize models for their use of free parameters, such as the AIC and BIC statistics discussed later in this section.
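
As an illustrative sketch of the equivalence noted above (it is not the authors' FORTRAN code), the snippet below assumes that the fit statistic is the negative multinomial log-likelihood with the constant multinomial coefficient dropped, and that AIC and BIC take their standard forms (Akaike, 1974; Schwarz, 1978). The response frequencies, predicted probabilities, and parameter count are hypothetical values chosen only for demonstration.

```python
import math


def neg_log_likelihood(freqs, probs):
    """Negative multinomial log-likelihood, omitting the constant coefficient."""
    return -sum(f * math.log(p) for f, p in zip(freqs, probs) if f > 0)


def kl_divergence(q, p):
    """KL divergence of predicted distribution p from observed distribution q."""
    return sum(qk * math.log(qk / pk) for qk, pk in zip(q, p) if qk > 0)


def entropy(q):
    """Shannon entropy of q (natural-log units)."""
    return -sum(qk * math.log(qk) for qk in q if qk > 0)


def aic(nll, n_params):
    """Standard AIC: -2 ln L + 2k."""
    return 2.0 * nll + 2.0 * n_params


def bic(nll, n_params, n_obs):
    """Standard BIC: -2 ln L + k ln N."""
    return 2.0 * nll + n_params * math.log(n_obs)


# Hypothetical observed response frequencies and model-predicted probabilities.
observed = [12, 5, 3, 10]
predicted = [0.35, 0.20, 0.10, 0.35]

n = sum(observed)
q = [f / n for f in observed]

nll = neg_log_likelihood(observed, predicted)
# Identity: -sum f_k ln p_k = N * (H(q) + KL(q || p)).
identity_rhs = n * (entropy(q) + kl_divergence(q, predicted))

print(nll, identity_rhs)
print(aic(nll, n_params=3), bic(nll, n_params=3, n_obs=n))
```

Because the entropy term depends only on the observed data, the parameter values that minimize the negative log-likelihood are the same ones that minimize the KL divergence; the penalty terms in AIC and BIC are then added on top of the likelihood-based statistic.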

For completeness, we also fitted a mixed exemplar-plus-rule model that did not make allowance for exemplar-based learning-during-test (with default parameter settings of α = 0 and ltmstr = 1). Not surprisingly, this model yielded substantially worse AIC and BIC fits to our Conditions 1 and 2 data than did the mixed LDT-exemplar-plus-rule model (Condition 1: AIC = 894.9, BIC = 947.4; Condition 2: AIC = 873.6, BIC = 928.7). The reason is that it failed to predict the tails in the response-frequency distributions of quadrants W, Y, and Z (for reasons that we have explained previously in discussing the baseline exemplar model and the mixed-rule model). The AIC and BIC fits of the mixed exemplar-plus-rule model (without LDT) were similar to those of the LDT version for Conaway and Kurtz’s Experiment-2A data, because the W, Y, and Z response-frequency distributions had smaller tails in that experiment than in the current experimental conditions.

For completeness, we should note that Conaway and Kurtz (2017, Experiment 1) reported another version of their paradigm in which the transfer items from quadrants W, Y, and Z were not tested. Instead, subjects were tested only with items from the lower-right region of the Fig. 1 structure, including items from the training regions and transfer-quadrant X. The proportion of subjects making a large number of reduced-category (B) responses in quadrant X was somewhat greater in this version of the paradigm than in the version that has been the focus of this article. We report in Appendix B, however, that with appropriate parameter settings, both the pure mixed-rule model and the LDT-exemplar model provide good accounts of the main data patterns reported by Conaway and Kurtz for this version of the paradigm. Without the requirement that the models also fit the generalization patterns in Quadrants W, Y, and Z, there are fewer constraints on the models, and the more complex mixed LDT-exemplar-plus-rule model is not required.

We learned of the application of CAL to the partial-XOR paradigm only after submitting this article, and our work was conducted independently.

References

Akaike, H. (1974). A new look at the statistical model identification. IEEE Transactions on Automatic Control, 19(6), 716–723.

Ashby, F. G., & Gott, R. E. (1988). Decision rules in the perception and categorization of multidimensional stimuli. Journal of Experimental Psychology: Learning, Memory, and Cognition, 14(1), 33.

Ashby, F. G., & Maddox, W. T. (1993). Relations between prototype, exemplar, and decision bound models of categorization. Journal of Mathematical Psychology, 37(3), 372–400.

Ashby, F. G., & Townsend, J. T. (1986). Varieties of perceptual independence. Psychological Review, 93(2), 154.

Ashby, F. G., Alfonso-Reese, L. A., & Waldron, E. M. (1998). A neuropsychological theory of multiple systems in category learning. Psychological Review, 105(3), 442.

Canini, K. R., Griffiths, T. L., Vanpaemel, W., & Kalish, M. L. (2014). Revealing human inductive biases for category learning by simulating cultural transmission. Psychonomic Bulletin & Review, 21(3), 785–793.

Conaway, N., & Kurtz, K. J. (2017). Similar to the category, but not the exemplars: A study of generalization. Psychonomic Bulletin & Review, 24(4), 1312–1323.

Danileiko, I., Lee, M. D., & Kalish, M. L. (2015). A Bayesian latent mixture approach to modeling individual differences in categorization using General Recognition Theory. In D. C. Noelle & R. Dale (Eds.), Proceedings of the 37th Annual Conference of the Cognitive Science Society (pp. 501–506). Cognitive Science Society.

Donkin, C., Newell, B. R., Kalish, M., Dunn, J. C., & Nosofsky, R. M. (2015). Identifying strategy use in category learning tasks: A case for more diagnostic data and models. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(4), 933.

Erickson, M. A., & Kruschke, J. K. (1998). Rules and exemplars in category learning. Journal of Experimental Psychology: General, 127(2), 107.

Erickson, M. A., & Kruschke, J. K. (2002). Rule-based extrapolation in perceptual categorization. Psychonomic Bulletin & Review, 9(1), 160–168.

Fific, M., Little, D. R., & Nosofsky, R. M. (2010). Logical-rule models of classification response times: A synthesis of mental-architecture, random-walk, and decision-bound approaches. Psychological Review, 117(2), 309.

Gibson, B. R., Rogers, T. T., & Zhu, X. (2013). Human semi-supervised learning. Topics in Cognitive Science, 5(1), 132–172.

Hooke, R., & Jeeves, T. A. (1961). “Direct Search” solution of numerical and statistical problems. Journal of the ACM (JACM), 8(2), 212–229.

Hunt, E. B., Marin, J., & Stone, P. (1966). Experiments in induction. Academic Press.

Johansen, M. K., & Palmeri, T. J. (2002). Are there representational shifts during category learning? Cognitive Psychology, 45(4), 482–553.

Krefeld-Schwalb, A., Pachur, T., & Scheibehenne, B. (2021). Structural parameter interdependencies in computational models of cognition. Psychological Review, 129(2), 313.

Kruschke, J. K. (1992). ALCOVE: An exemplar-based connectionist model of category learning. Psychological Review, 99(1), 22.

Kurtz, K. J. (2007). The divergent autoencoder (DIVA) model of category learning. Psychonomic Bulletin & Review, 14(4), 560–576.

Kurtz, K. J. (2015). Human category learning: Toward a broader explanatory account. Psychology of learning and motivation (Vol. 63, pp. 77–114). New York: Academic Press.

Little, D. R., Nosofsky, R. M., & Denton, S. E. (2011). Response-time tests of logical-rule models of categorization. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37, 1–27.

Little, D. R., Nosofsky, R. M., Donkin, C., & Denton, S. E. (2013). Logical rules and the classification of integral-dimension stimuli. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 801–820.

McDaniel, M. A., Cahill, M. J., Robbins, M., & Wiener, C. (2014). Individual differences in learning and transfer: Stable tendencies for learning exemplars versus abstracting rules. Journal of Experimental Psychology: General, 143(2), 668.

Medin, D. L., & Schaffer, M. M. (1978). Context theory of classification learning. Psychological Review, 85(3), 207.

Medin, D. L., Wattenmaker, W. D., & Michalski, R. S. (1987). Constraints and preferences in inductive learning: An experimental study of human and machine performance. Cognitive Science, 11(3), 299–339.

Nosofsky, R. M. (1986). Attention, similarity, and the identification–categorization relationship. Journal of Experimental Psychology: General, 115(1), 39.

Nosofsky, R. M. (1991). Typicality in logically defined categories: Exemplar-similarity versus rule instantiation. Memory & Cognition, 19(2), 131–150.

Nosofsky, R. M. (1992). Similarity scaling and cognitive process models. Annual Review of Psychology, 43(1), 25–53.

Nosofsky, R. M., & Palmeri, T. J. (1997). An exemplar-based random walk model of speeded classification. Psychological Review, 104(2), 266.

Nosofsky, R. M., & Palmeri, T. J. (1998). A rule-plus-exception model for classifying objects in continuous-dimension spaces. Psychonomic Bulletin & Review, 5(3), 345–369.

Nosofsky, R. M., & Zaki, S. R. (2002). Exemplar and prototype models revisited: Response strategies, selective attention, and stimulus generalization. Journal of Experimental Psychology: Learning, Memory, and Cognition, 28(5), 924.

Nosofsky, R. M., Clark, S. E., & Shin, H. J. (1989). Rules and exemplars in categorization, identification, and recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 15(2), 282.

Nosofsky, R. M., Palmeri, T. J., & McKinley, S. C. (1994). Rule-plus-exception model of classification learning. Psychological Review, 101(1), 53.

Nosofsky, R. M. (2011). The generalized context model: An exemplar model of classification. In Pothos, E. M., & Wills, A. J. (Eds.), Formal approaches in categorization (pp. 18–39). Cambridge University Press.

Palmeri, T. J., & Flanery, M. A. (1999). Learning about categories in the absence of training: Profound amnesia and the relationship between perceptual categorization and recognition memory. Psychological Science, 10(6), 526–530.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323(6088), 533–536.

Schlegelmilch, R., Wills, A. J., & von Helversen, B. (in press). A cognitive category-learning model of rule abstraction, attention learning, and contextual modulation. Psychological Review.

Schwarz, G. (1978). Estimating the dimension of a model. The Annals of Statistics, 6(2), 461–464.

Shepard, R. N. (1964). Attention and the metric structure of the stimulus space. Journal of Mathematical Psychology, 1(1), 54–87.

Shepard, R. N. (1987). Toward a universal law of generalization for psychological science. Science, 237(4820), 1317–1323.

Smith, J. K. (1980). Models of identification. In Nickerson, R. S. (Ed.), Attention and performance VIII (pp. 129–158). Lawrence Erlbaum Associates.

Townsend, J. T., & Nozawa, G. (1995). Spatio-temporal properties of elementary perception: An investigation of parallel, serial, and coactive theories. Journal of Mathematical Psychology, 39(4), 321–359.

Trabasso, T., & Bower, G. H. (1968). Attention in Learning: Theory and Research. Wiley.

Yang, L.-X., & Lewandowsky, S. (2003). Context-gated knowledge partitioning in categorization. Journal of Experimental Psychology: Learning, Memory, and Cognition, 29, 838–849.

Zaki, S. R., & Nosofsky, R. M. (2004). False prototype enhancement effects in dot pattern categorization. Memory & Cognition, 32(3), 390–398.

Zaki, S. R., & Nosofsky, R. M. (2007). A high-distortion enhancement effect in the prototype-learning paradigm: Dramatic effects of category learning during test. Memory & Cognition, 35(8), 2088–2096.

Acknowledgements

The authors would like to thank Ken Kurtz for extremely helpful discussions and tutoring pertaining to the implementation of the DIVA model.

Author information

Contributions

Robert Nosofsky wrote the article and developed and fitted the formal models. Mingjia Hu programmed the experiments, conducted the statistical analyses, constructed the figures, confirmed the predictions from the best-fitting versions of the models, and assisted in the writing of the article.

Ethics declarations

Ethics Approval

This study was approved by the Indiana University Bloomington Institutional Review Board.

Consent to Participate

Written informed consent was obtained from study participants.

Consent for Publication

Not applicable.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix A. Illustration of the learning-during-test summed-similarity computation

Here we illustrate the LDT-exemplar model’s computation of the overall summed similarity to category B of an item presented on trial 5 of the test phase. The example assumes that the subject classified the test items from test trials 2 and 4 into category B, while classifying the test items from test trials 1 and 3 into category A. The short-term memory strength of a test item presented n trials back from the current test item on trial 5 is given by \({\alpha }^{n}\). The relative long-term memory strength for all exemplars presented during the training phase is denoted ltmstr.

| Trial number | 1 | 2 | 3 | 4 | 5 |
| --- | --- | --- | --- | --- | --- |
| Memory strength | \({\alpha }^{4}\) | \({\alpha }^{3}\) | \({\alpha }^{2}\) | \(\alpha\) | - |
| Category response | A | B | A | B | - |

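Thus, in this example, the overall summed similarity of the trial-5 test item [i(5)] to all category B exemplars, \({S}_{i\left(5\right),B}\), would be given by

\[{S}_{i\left(5\right),B} = ltmstr \cdot \sum_{j \in B} {s}_{i\left(5\right),j} + {\alpha }^{3}\, {s}_{i\left(5\right),i\left(2\right)} + \alpha \, {s}_{i\left(5\right),i\left(4\right)},\]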

where \({s}_{i\left(5\right),j}\) denotes the similarity of test item i(5) to each training-phase exemplar j from category B; and \({s}_{i\left(5\right),i\left(k\right)}\) denotes the similarity of test item i(5) to the test item presented on trial k of the test phase.
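
The computation illustrated in this appendix can be sketched in a few lines of code. This is a minimal sketch rather than the authors' implementation: the exponential-decay similarity function over city-block distance, the sensitivity parameter `c`, and all stimulus coordinates and parameter values are assumptions introduced only for illustration. Only the weighting scheme (ltmstr for training exemplars, α raised to the number of trials back for earlier test items that received the same category response) follows the description above.

```python
import math


def similarity(x, y, c=1.0):
    """Assumed similarity: exponential decay of city-block distance."""
    distance = sum(abs(a - b) for a, b in zip(x, y))
    return math.exp(-c * distance)


def ldt_summed_similarity(item, training, history, category, alpha, ltmstr, c=1.0):
    """Summed similarity of `item` to `category`, including learning during test.

    training: list of (stimulus, category_label) pairs from the training phase.
    history:  list of (stimulus, response) pairs for earlier test trials,
              ordered from the first test trial to the most recent one.
    """
    # Long-term contribution: training exemplars of the category, weighted by ltmstr.
    long_term = ltmstr * sum(
        similarity(item, stim, c) for stim, label in training if label == category
    )
    # Short-term contribution: earlier test items that were classified into the
    # category, each weighted by alpha ** (number of trials back).
    short_term = 0.0
    for index, (stim, response) in enumerate(history):
        trials_back = len(history) - index
        if response == category:
            short_term += alpha ** trials_back * similarity(item, stim, c)
    return long_term + short_term


# Hypothetical stimuli: two category-B training exemplars and one A exemplar.
training = [((1.0, 1.0), "A"), ((-1.0, -1.0), "B"), ((-0.5, -1.0), "B")]
# Test trials 1-4 with responses A, B, A, B, as in the example above.
history = [((2.0, 2.0), "A"), ((-2.0, -2.0), "B"), ((2.0, -2.0), "A"), ((-2.0, -1.0), "B")]
item_trial_5 = (-1.5, -1.5)

s_b = ldt_summed_similarity(item_trial_5, training, history, "B", alpha=0.5, ltmstr=1.0)
print(s_b)
```

With the responses above, only the test items from trials 2 and 4 contribute to the short-term term, with weights α³ and α respectively.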

Appendix B. Fits of mixed-rule and LDT-exemplar models to the data from Conaway and Kurtz’s (2017) Experiment 1

In their Experiment 1, Conaway and Kurtz (2017) used the same training phase as in their Experiment 2A. However, at time of test, subjects were presented only with items from the lower-right portion of the Fig. 1 stimulus space. Specifically, they created a 7 × 7 grid of 49 stimuli occupying the lower-right region. This region included the six training items from the training phase as well as all items from the Quadrant-X transfer region. A total of 30 subjects were tested. The relative proportion of subjects who made each number of reduced-category (B) responses in Quadrant X is listed in the “Observed” row of the Quadrant-X panel of our Table 5. Conaway and Kurtz did not report a frequency distribution of correct responses for the training stimuli during the test phase; however, based on results reported in their article and in their Table 1, a reasonable estimate is that their subjects achieved a mean accuracy of roughly 0.93 on the training stimuli during the test phase. As an approximation, we assume that 13 of the 30 subjects (p = 0.433) classified 5 of the training stimuli correctly and that 17 of the 30 subjects (p = 0.567) classified all 6 of the training stimuli correctly. These estimated values are listed in the “Observed” row of the training-stimuli panel of our Table 5.
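
As a quick arithmetic check, the assumed split of subjects reproduces the estimated mean accuracy of roughly 0.93. The snippet uses only the counts stated above; the variable names are ours.

```python
n_subjects = 30
n_training_items = 6

# Assumed distribution: number of training items correct -> number of subjects.
subjects_by_correct = {5: 13, 6: 17}

total_correct = sum(k * n for k, n in subjects_by_correct.items())
mean_accuracy = total_correct / (n_subjects * n_training_items)
proportions = {k: n / n_subjects for k, n in subjects_by_correct.items()}

print(total_correct)            # 167 correct responses out of 180
print(round(mean_accuracy, 3))  # 0.928
print({k: round(p, 3) for k, p in proportions.items()})  # {5: 0.433, 6: 0.567}
```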

We fitted the mixed-rule and LDT-exemplar models to these data using the same procedures as described previously. The models are the same as described previously, although the sequence of stimuli experienced during the test phase (and that serves as input to the models) is different than in our applications to Experiment 2A. The maximum-likelihood predictions from the models for both the Quadrant-X and training-stimuli distributions are reported in Table 5. The best-fitting parameters from the models and their maximum-likelihood summary fits are reported in the Table 5 note. (These parameter settings are merely illustrative as a wide variety of parameter settings achieve roughly the same overall quantitative fit to the data.) As can be seen from inspection of Table 5, both models provide reasonably good accounts of the observed data. Because this version of the mixed-rule model uses fewer free parameters than does this version of the LDT-exemplar model, the fit that it achieves is more parsimonious than the one from the LDT-exemplar model. The main take-home message, however, is that both models do a reasonable job of accounting for the observed data.

Appendix C. Further details regarding the DIVA model-fitting procedure and results

Following Conaway and Kurtz (2017, p. 1314), the training exemplars (A and B in Fig. 8) for DIVA were represented within a ±1 space, and we used linear interpolation and extrapolation to set the dimension values for the transfer stimuli in the generalization quadrants. Also following Conaway and Kurtz (2017, p. 1314), for any given simulation DIVA was initialized with random weights in the range (−R, +R) and trained with a random presentation sequence of the training stimuli that satisfied the constraints of the experimental design (12 blocks of each of the 6 training stimuli, with each of the B training exemplars presented twice). Following training, the weights were held fixed, and the trained network was used to classify each of the items presented during the transfer phase. Specifically, following Kurtz (2007, p. 565), for any given item i, one computes the sum-squared-error between the output activations and the teaching signals (input-pattern values) of each set of category output nodes, \(SSE_{A}(i)\) and \(SSE_{B}(i)\); the predicted probability that item i is classified in category A is then given by

\[P(A \mid i) = \frac{1/SSE_{A}(i)}{1/SSE_{A}(i) + 1/SSE_{B}(i)}.\]

The predicted response-frequency distributions were then built using the same procedures as already described for the exemplar and rule-based models.
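
The classification step can be sketched as follows. This is a hedged illustration, not the authors' FORTRAN subroutine: it assumes the inverse-SSE choice rule commonly associated with DIVA (Kurtz, 2007), and the input pattern and the two sets of reconstructed output activations are hypothetical numbers rather than values from a trained network.

```python
def sum_squared_error(outputs, targets):
    """Sum of squared differences between output activations and teaching signals."""
    return sum((o - t) ** 2 for o, t in zip(outputs, targets))


def prob_category_a(sse_a, sse_b):
    """Assumed inverse-SSE choice rule: lower reconstruction error -> higher probability."""
    inv_a = 1.0 / sse_a
    inv_b = 1.0 / sse_b
    return inv_a / (inv_a + inv_b)


# Hypothetical item and hypothetical reconstructions on each category channel.
item = (1.0, -1.0)
outputs_a = (0.9, -0.8)   # category-A output nodes reconstruct the item well
outputs_b = (0.2, 0.1)    # category-B output nodes reconstruct it poorly

sse_a = sum_squared_error(outputs_a, item)
sse_b = sum_squared_error(outputs_b, item)
p_a = prob_category_a(sse_a, sse_b)

print(sse_a, sse_b, p_a)
```

Under this rule the two category probabilities sum to 1, and the channel that reconstructs the input more accurately receives the larger share.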

We fitted DIVA using a three-phase procedure. In the first phase, we used a coarse-grained grid search across the free parameters in the model, with the grid specified in Table 6. In the second phase, we conducted a more fine-grained grid search with the finer grid centered around the best-fitting parameters from the coarse-grained search. Third, using the best-fitting parameters from the fine-grained grid search as starting values, we used the Hooke and Jeeves parameter-search algorithm to search for the final set of best-fitting parameters. In addition, we used 50 other random starting configurations of the parameters in these Hooke and Jeeves searches. The best-fitting parameters from the model for the three data sets are reported in Table 6.
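
The three-phase search can be sketched on a toy problem. Everything specific here is an assumption made for illustration: the two-parameter quadratic `objective` stands in for the model's fit statistic, the grid ranges and step sizes are arbitrary, and the pattern search is a simplified variant in the spirit of Hooke and Jeeves (1961), not the authors' routine.

```python
import itertools
import random


def objective(params):
    """Toy stand-in for a fit statistic; minimum at (1.3, -0.7)."""
    x, y = params
    return (x - 1.3) ** 2 + (y + 0.7) ** 2


def linspace(lo, hi, n):
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def grid_search(fn, grids):
    """Evaluate fn at every grid combination and return the best point."""
    return list(min(itertools.product(*grids), key=fn))


def explore(fn, point, step):
    """Exploratory move: try +step and -step along each coordinate in turn."""
    point, best = list(point), fn(point)
    for i in range(len(point)):
        for delta in (step, -step):
            trial = list(point)
            trial[i] += delta
            value = fn(trial)
            if value < best:
                point, best = trial, value
                break
    return point, best


def hooke_jeeves(fn, start, step=0.25, shrink=0.5, tol=1e-6, max_iter=10000):
    base, base_val = list(start), fn(start)
    for _ in range(max_iter):
        if step < tol:
            break
        new, new_val = explore(fn, base, step)
        if new_val >= base_val:
            step *= shrink          # no improvement: refine the step size
            continue
        while True:                 # pattern moves along the improving direction
            pattern = [2 * n - b for n, b in zip(new, base)]
            base, base_val = new, new_val
            cand, cand_val = explore(fn, pattern, step)
            if cand_val >= base_val:
                break
            new, new_val = cand, cand_val
    return base, base_val


# Phase 1: coarse grid. Phase 2: finer grid centered on the coarse optimum.
coarse = grid_search(objective, [linspace(-4, 4, 5), linspace(-4, 4, 5)])
fine = grid_search(objective, [linspace(c - 2, c + 2, 9) for c in coarse])

# Phase 3: pattern search from the fine-grid optimum, plus random restarts.
best, best_val = hooke_jeeves(objective, fine)
rng = random.Random(0)
for _ in range(50):
    start = [rng.uniform(-4, 4), rng.uniform(-4, 4)]
    cand, cand_val = hooke_jeeves(objective, start)
    if cand_val < best_val:
        best, best_val = cand, cand_val

print(coarse, fine, best, best_val)
```

The grid phases guard against starting the local search in a poor region, and the random restarts provide a further check against local minima, which matters more for a stochastic network model than for this smooth toy surface.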

Some comments are in order regarding the relation between our DIVA-fitting results and the presentation from Conaway and Kurtz (2017). First, in their Fig. 4, Conaway and Kurtz present a schematic, illustrative DIVA network that predicts that subjects will make predominantly reduced-category (B) responses in quadrant X. The authors acknowledge that the values depicted in the network are purely illustrative. However, the activation value for the illustrative hidden node is set at −1, which is impossible for the logistic activation function (the activation value will be bounded between 0 and 1); therefore, it seems that an actual DIVA network would not be able to achieve this illustration. Second, in their Fig. 3, Conaway and Kurtz (2017) report results from simulations of DIVA showing that there is a small space of parameter values that allow DIVA to predict at least six (of nine possible) reduced-category responses in quadrant X. It does not follow from this simulation, however, that there are parameter settings in DIVA that allow it to simultaneously predict high accuracy in the training region and the patterns of bimodal response-frequency distributions exhibited in the four generalization quadrants.
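
The bound on the logistic activation mentioned above is easy to verify numerically. The sketch below assumes the standard logistic function 1 / (1 + exp(−x)); the net-input values are arbitrary.

```python
import math


def logistic(x):
    """Standard logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


net_inputs = [-20.0, -5.0, -1.0, 0.0, 1.0, 5.0, 20.0]
activations = [logistic(x) for x in net_inputs]

for x, a in zip(net_inputs, activations):
    print(x, a)
```

Every activation lies strictly between 0 and 1, so no choice of net input yields a hidden-node activation of −1 under this function.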

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Nosofsky, R.M., Hu, M. Generalization in Distant Regions of a Rule-Described Category Space: a Mixed Exemplar and Logical-Rule-Based Account. Comput Brain Behav 5, 435–466 (2022). https://doi.org/10.1007/s42113-022-00151-4

DOI: https://doi.org/10.1007/s42113-022-00151-4