Abstract

Teachers’ technology-related skills are often measured with self-assessments. However, self-assessments are frequently criticised for being inaccurate and biased. Scenario-based self-assessment is a promising approach to making self-assessment more accurate and less biased. In this study with N = 552 inservice and student teachers, we validated IN.K19+, a scenario-based self-assessment instrument for teachers. The instrument enables teachers to self-assess their instrumental and critical digital skills as well as their technology-related teaching skills on the basis of scenarios. A confirmatory factor analysis shows that the instrument has sufficient factorial validity. To test the instrument’s predictive validity, we examined its relationship to the frequency of technology use during teaching and to teacher-initiated student learning activities involving digital technologies. Results from structural equation modelling show that instrumental digital skills and technology-related teaching skills are positively related to the frequency of digital technology use during teaching, whereas critical digital skills are not. Regarding the initiation of student learning activities, instrumental and critical digital skills are related to the initiation of learning activities involving lower cognitive engagement, whereas technology-related teaching skills are related to the initiation of learning activities indicating higher cognitive engagement. The results show that instrumental and critical digital skills play an important role in the basic use of digital technologies in the classroom, while technology-related teaching skills turn out to be crucial for more complex scenarios of digital technology use. This pattern of findings supports the predictive validity of the IN.K19+ instrument.

Summary

Teachers’ media-related competences are frequently measured by means of self-assessments. However, self-assessments are often criticised for being inaccurate and subject to bias. Scenario-based self-assessment is a promising approach to making self-assessment more accurate and less biased. In this study with N = 552 teachers and student teachers, we validated IN.K19+, a scenario-based self-assessment instrument for teachers. The instrument enables teachers to carry out a scenario-based self-assessment of their instrumental and critical-reflective media competences as well as their media-related teaching competences. In a confirmatory factor analysis, we show that the instrument has satisfactory factorial validity. To test the predictive validity of the instrument, we examined the relationships between the instrument and the frequency of media use during teaching as well as the students’ learning activities that teachers initiate with the help of digital media. Results from structural equation modelling show that instrumental media competences and media-related teaching competences are positively related to the frequency of media use during teaching, whereas critical-reflective media competences are not. With regard to the initiation of students’ learning activities, instrumental and critical-reflective media competences are related to the initiation of learning activities that indicate lower cognitive engagement. Media-related teaching competences are related to the initiation of learning activities that indicate higher cognitive engagement.
The results show that instrumental and critical-reflective media competences play an important role in the basic use of digital media in the classroom, whereas media-related teaching competences prove crucial for more complex scenarios of digital media use. This pattern of results supports the predictive validity of the IN.K19+ instrument.

1 Introduction

To prepare students for the challenges and opportunities of a digital world, teachers need technology-related skills (Lachner et al. 2019; Sailer et al. 2021a), more specifically, instrumental digital skills (Fraillon et al. 2014; Senkbeil et al. 2013), critical digital skills (Ferrari et al. 2012; van Laar et al. 2017), and technology-related teaching skills (DCB 2017; Schmidt et al. 2009). To help teachers acquire these skills systematically, tools that measure teachers’ current skill levels and identify specific needs are of great value.

Building on the anchoring vignettes method by King et al. (2004), Sailer et al. (2021b) developed a promising measurement approach in which scenarios give participants a context for their self-assessment and thereby improve its accuracy. Indeed, research has reported promising validation results for scenario-based measurement (Kastorff et al. 2022; Sailer et al. 2021b).

In this study, we adopt this approach and present a validation of a scenario-based self-assessment instrument called IN.K19+ by assessing its factorial and predictive validity. The instrument is based on the K19 framework (DCB 2017) and is an extension of the IN.K19 instrument which measures technology-related teaching skills (Sailer et al. 2021b). In this study, we revalidated IN.K19 with a larger sample, which allowed us to address previous limitations. In addition, we extended IN.K19 by developing scenario-based self-assessment items for instrumental as well as critical digital skills and also present a validation of this extension. With IN.K19+, we aim to provide teachers with an easily accessible, reliable, and valid tool for assessing their technology-related skills, thereby supporting them in their professional development.

1.1 Measuring teachers’ technology-related skills

Most of the instruments currently available use self-assessments to measure teachers’ technology-related skills (see e.g. Ghomi and Redecker 2019; Rubach and Lazarides 2021; Schmidt et al. 2009; Valtonen et al. 2017). That is, they measure the extent to which participants feel confident that they possess a particular skill (Scherer et al. 2017). This measurement approach is popular because self-assessments promise high accessibility, easy implementation, and cost-effectiveness while providing rich information and a reliable and valid measure of self-assessment (Seufert et al. 2021). Moreover, self-assessments of teachers’ technology-related skills are thought to be closely related to teachers’ intentions to use digital technologies and are thus directed toward future behaviour. Therefore, self-assessments also play a role in teacher education and training (Scherer et al. 2017).

However, the validity of self-assessment instruments has often been criticised. Because self-assessments are strongly related to self-efficacy beliefs about potential performance, they make it difficult to predict participants’ actual performance (Lachner et al. 2019; Scherer et al. 2017). Moreover, participants’ self-assessments are sensitive to individual and contextual differences (Scherer et al. 2017; van Vliet et al. 1994) and can thus be biased and inaccurate (van Soest et al. 2011; van Vliet et al. 1994). A further criticism is that items in self-assessment instruments are often vaguely formulated (Scheiter 2021), which might lead participants to interpret them differently.

As a consequence, scenario-based approaches building on the anchoring vignettes method of King et al. (2004) have been proposed as a means of making self-assessments more accurate while maintaining their advantages (Kastorff et al. 2022; Sailer et al. 2021b). Such approaches present participants with authentic situations, mostly problems, and ask them to assess their skills in dealing with them. By enriching items with detailed contextual information, they provide a frame of reference that prevents participants from interpreting the items in widely differing ways (King and Wand 2007). This is particularly relevant for teachers’ technology-related skills, which are frequently assessed with vaguely formulated items (Scheiter 2021). Indeed, such an approach has been shown both to produce promising validation results for the self-assessment of technology-related teaching skills (Sailer et al. 2021b) and to yield measurement results that are closer to objective test results than those of simple self-assessments (Kastorff et al. 2022).

1.2 Modelling teachers’ technology-related skills

The competent use of digital technologies in the classroom requires a complex set of different types of knowledge and skills. Several comprehensive frameworks have been developed that model these skills for teacher education and research.

According to the TPACK framework, teachers need pedagogical knowledge (PK), content knowledge (CK), and technological knowledge (TK) (Mishra and Koehler 2006). At the intersections of these three different knowledge areas four more components emerge (PCK, TPK, TCK, TPCK). Technological knowledge (TK) and technological pedagogical knowledge (TPK) are most relevant in the present context, as the focus lies on technology-related skills irrespective of content. Different frameworks have operationalised these knowledge areas by answering the question of what exactly teachers have to be able to do with digital technologies in their lessons (DCB 2017; Redecker 2017).

One of these is the K19 framework, which systematises the core skills teachers need in their classrooms in a digital world (DCB 2017). It models only those technology-related skills that are relevant for all teachers irrespective of subject matter or type of school. The K19 framework elaborates the knowledge areas of TK and TPK in detail and adds an action-oriented perspective to them. By distinguishing between basic digital skills (the action-oriented equivalent of TK) and technology-related teaching skills (the action-oriented equivalent of TPK), the framework encompasses two crucial dimensions of teachers’ professional technology-related skills.

1.2.1 Basic digital skills: Instrumental and critical digital skills

Basic digital skills comprise the skills all citizens need to participate fully in a digital world (OECD 2015). They can be differentiated into instrumental digital skills and critical digital skills (Hobbs et al. 2011; Newman 2009; van Laar et al. 2017). The former enable the use of digital technologies in the first place and include operating and applying technology, searching for and processing information, communicating and collaborating, and producing and presenting information with technology (Fraillon et al. 2014; Lachner et al. 2019; Senkbeil et al. 2013). However, for full participation in the digital world, these skills have to be complemented by critical digital skills (e.g. Buckingham 2003; Ferrari 2012; Hobbs et al. 2011; Newman 2009; Rubach and Lazarides 2021; van Laar et al. 2017). These comprise understanding, analysing, evaluating, and critically reflecting on media messages and digital technologies and on their social, economic, and institutional impact on individuals and society as a whole (e.g. Kersch and Lesley 2019; van Laar et al. 2017).

In view of the pedagogical challenges and opportunities of the digital condition (Stalder 2018), it seems obvious that teachers need both sets of skills and that, as a consequence, these skills have to be an integral part of teacher education (e.g. Ferrari 2012; Fraillon et al. 2014; Hobbs et al. 2011; Krumsvik 2011).

1.2.2 Technology-related teaching skills

However, to ensure effective teaching with and about digital technologies, teachers are assumed to also need technology-related teaching skills (DCB 2017; Mishra and Koehler 2006; Sailer et al. 2021a, b). The K19 framework specifies these skills by identifying 19 technology-related teaching skills, which are assigned to four typical phases of teachers’ classroom-related actions: planning, implementing, evaluating, and sharing (DCB 2017; Sailer et al. 2021b).

1.3 Teaching with digital technologies

To capture aspects of teaching with digital technologies, research has often focussed on the relationship between instrumental digital skills and the frequency of technology use in the classroom and has found positive relationships (e.g. Eickelmann and Vennemann 2017; Fraillon et al. 2014; Law et al. 2008; Sailer et al. 2021a). Less research has been conducted on the relationship between critical digital skills and the frequency of technology use during teaching, although positive relationships have been found for certain dimensions of critical digital skills, such as social media skills (European Commission 2013) or skills of analysis, reflection, safety, and security involving digital technologies (Rubach and Lazarides 2021). Positive relationships have also been found between technology-related teaching skills and the frequency of technology use (Endberg and Lorenz 2017; Sailer et al. 2021b).

Beyond this, however, technology-related skills enable teachers to use digital technologies more successfully to foster their students’ learning. In particular, digital technologies have been shown to have the potential to promote active learning approaches that are conducive to students’ acquisition of knowledge and skills (Tamim et al. 2011). In this respect, the ICAP framework provides a helpful systematisation of learning activities (Chi 2009; Chi and Wylie 2014) that can be productively adapted to teaching and learning with digital technologies (Sailer et al. 2021a, b). The ICAP framework distinguishes four levels of learning activities: passive, active, constructive, and interactive (Chi and Wylie 2014). Different learning activities are assumed to correspond to different cognitive processes and different levels of cognitive engagement (Chi et al. 2018), with passive learning activities representing the lowest and interactive learning activities the highest level (Chi 2009). According to the ICAP framework, different learning objectives call for different learning activities; it is therefore important to carefully select and orchestrate types of learning activities according to the learning objectives at different stages and in different contexts of knowledge and skill development (Sailer et al. 2021a).

Research has shown that technology-related skills help teachers implement student-centred teaching approaches and actively engage students in using digital technologies in the classroom (Ertmer and Ottenbreit-Leftwich 2010). In this respect, such skills help teachers to effectively initiate technology-related learning activities located at the upper end of the ICAP order (Sailer et al. 2021a, b). In line with the view that basic digital skills are fundamental preconditions for teaching with and about technology, there are indications that they are also an important basis for student-centred teaching practices (Rubach and Lazarides 2021) and for the levels of learning activities that teachers initiate (Sailer et al. 2021a). However, the relationships for instrumental and critical digital skills considered separately have not yet been investigated empirically. By contrast, research has shown that the full range of students’ learning activities involving digital technologies can be achieved only through a combination of basic digital skills and technology-related teaching skills (Sailer et al. 2021a). Accordingly, technology-related teaching skills have been shown to be of particular importance for the initiation of constructive and interactive learning activities (Sailer et al. 2021a, b).

1.4 The present study

The research goal of the present study was to validate a scenario-based self-assessment instrument based on the K19 framework¹ (DCB 2017) that provides comprehensive insight into the generic skills teachers need in a digital world. The present instrument builds upon version 1.0 of the IN.K19² instrument, whose factorial and predictive validity was demonstrated in a previous study (Sailer et al. 2021b). However, as this first study was conducted with a rather small sample, we now replicate it with a larger sample, which allows us to address previous limitations. Firstly, we can now validate the entire model of technology-related teaching skills instead of validating the four phases of the model separately (see Sailer et al. 2021b). Secondly, we extended the scope of IN.K19 by adding (+) basic digital skills, differentiated into instrumental digital skills and critical digital skills.³ Instrumental digital skills were measured with 9 scenarios and critical digital skills with 11. We derived the basic assumption that teachers need instrumental and critical digital skills from the K19 model (DCB 2017). We also present a validation of this extension.

To assess the measurement quality of the scenario-based self-assessment instrument IN.K19+, we tested its factorial validity (i.e., whether the hypothesised latent constructs can be found in the observed responses) and its predictive validity (i.e., whether the scale is empirically related to external real-world criteria) (DeVellis and Thorpe 2021). As previous research has established that teachers’ technology-related skills are related to the frequency of technology use and to the initiation of different types of learning activities, we used these aspects as criteria for demonstrating predictive validity and for exploratory analyses.

Regarding factorial validity, we investigated the following research questions: Do the factors instrumental digital skills (RQ1), critical digital skills (RQ2), and technology-related teaching skills (RQ3) have good psychometric properties?

Regarding the instrument’s predictive validity, we first investigated the following research questions related to the frequency of teaching with digital technologies: To what extent are instrumental digital skills (RQ4.1), critical digital skills (RQ4.2), and technology-related teaching skills (RQ4.3) related to the frequency of technology use during teaching?

On the basis of the findings of previous research (see Sect. 1.3), we expected instrumental digital skills, critical digital skills, and technology-related teaching skills to be positively related to the frequency of teaching with digital technologies.

H4.1:

Instrumental digital skills are positively related to the frequency of technology use during teaching.

H4.2:

Critical digital skills are positively related to the frequency of technology use during teaching.

H4.3:

Technology-related teaching skills are positively related to the frequency of technology use during teaching.

As a second criterion for predictive validity, we also considered the following research questions concerning the initiation of different types of learning activities involving digital technologies: To what extent are instrumental digital skills (RQ5.1), critical digital skills (RQ5.2), and technology-related teaching skills (RQ5.3) related to the initiation of different types of learning activities while teaching with digital technologies?

Theoretical conceptualisations postulate that basic digital skills are an important prerequisite for the initiation of learning activities involving digital technologies (DCB 2017). However, as empirical research on this is still scarce (see Sect. 1.3), we investigated RQ5.1 and RQ5.2 in an exploratory manner.

In contrast, previous research has shown (see Sect. 1.3) that technology-related teaching skills are necessary for addressing the full spectrum of learning activities, especially those at the upper end of the ICAP order. Given this, and following the approach of Sailer et al. (2021b), we hypothesised that technology-related teaching skills would be most strongly related to the initiation of interactive and constructive learning activities and less strongly related to the initiation of active and passive learning activities.

H5.3:

Technology-related teaching skills are most strongly related to the initiation of interactive learning activities involving digital technologies, followed by constructive, active, and passive learning activities. [I > C > A > P]

2 Method

2.1 Sample and procedure

We conducted a cross-sectional study using an online questionnaire hosted on the Unipark platform (Tivian XI GmbH 2022). Data were collected between January 2020 and January 2022. Our target group consisted of inservice and student teachers with teaching experience in schools. Participants also needed to be fluent in German, as the survey was conducted in German. Inservice teachers were recruited through contacts in our teacher education network, online advertisements, advertisements at training events for inservice teachers, and postgraduate courses for inservice teachers who had returned to university. Student teachers were recruited through advertisements in courses that student teachers typically take in advanced semesters, after they have completed their school internships, and through online advertisements. As an incentive, participants received feedback after completing the questionnaire in the form of an automatically generated graphic of the K19 skill model showing their levels of the self-assessed skills. An example of these graphics is given in Fig. 1. Participants were also offered the opportunity to consult with experts for more information about what their individual feedback graphic meant. From October 2020 to November 2020, they were also offered compensation for participating.

We began our study by collecting demographic data from our participants. Then, we asked participants about the frequency of their digital technology use and the initiation of students’ learning activities during their teaching in a typical lesson. This was followed by the scenario-based self-assessment of instrumental and critical digital skills and then technology-related teaching skills.

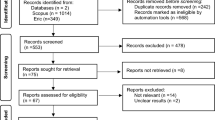

We stopped collecting data once 500 fully completed questionnaires had been reached and at least 100 inservice teachers had participated. Our survey was publicly accessible, and a total of N = 1,277 people started it. For our final sample, we included only participants who completed the whole questionnaire, and we excluded participants who stated that they were younger than 18 or older than the maximum retirement age of 70. As our focus lies on participants with teaching experience, we included only student teachers with teaching experience in schools and excluded participants who reported having less than 1 month of teaching experience in schools (Sailer et al. 2021b). Our final sample consisted of N = 552 participants. Of these participants, 75.7% (n = 418) were female and 23.2% (n = 128) were male. No participants reported being diverse, and 6 participants (1.1%) did not specify their gender.
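The inclusion rules above can be expressed as a simple filter. The following Python sketch is a minimal illustration, assuming a hypothetical respondent record with the fields `completed`, `age`, and `teaching_experience_months`; it is not the authors' data-cleaning code, which the text does not show. The requirement that student teachers have teaching experience is covered here by the 1-month rule.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    # Hypothetical record layout; the field names are illustrative only.
    completed: bool                    # finished the whole questionnaire
    age: int                           # self-reported age in years
    teaching_experience_months: float  # self-reported teaching experience in schools


def meets_inclusion_criteria(p: Participant) -> bool:
    """Apply the exclusion rules described in the text to one respondent."""
    if not p.completed:
        return False  # only fully completed questionnaires were kept
    if p.age < 18 or p.age > 70:
        return False  # younger than 18 or older than the maximum retirement age of 70
    if p.teaching_experience_months < 1:
        return False  # less than 1 month of teaching experience in schools
    return True


def filter_sample(participants: list[Participant]) -> list[Participant]:
    """Keep only respondents who meet all inclusion criteria, preserving order."""
    return [p for p in participants if meets_inclusion_criteria(p)]
```

Applied to the N = 1,277 people who started the survey, such a filter would correspond to the step that produced the final sample of N = 552.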

The final sample consisted of n = 119 (21.6%) inservice teachers and n = 433 (78.4%) student teachers. Inservice teachers were on average 38 years old (M = 38.39; SD = 9.23) and had 119 months of teaching experience in schools (M = 119.39; SD = 92.95). Student teachers were on average 23 years old (M = 23.48; SD = 4.46) and had 6 months of teaching experience in schools (M = 5.92; SD = 7.40). On average, student teachers were in the 5th semester of their studies (M = 5.11; SD = 2.17).

The participants in our final sample took 28 min on average to complete the survey (M = 27.61; SD = 14.93).

2.2 Measures

To assess the frequency of technology use, the initiation of students’ learning activities, and technology-related skills, we used measures that had been used in previous studies (Sailer et al. 2021a, b). We conducted our study with IN.K19+ version 1.1, which is available in German and English in an open science repository at https://osf.io/95xaj/.

2.2.1 Frequency of digital technology use during teaching

We assessed the frequency of digital technology use during teaching with a single-item measure (Sailer et al. 2021a, b). Participants were asked to think of one of their typical lessons and to estimate what proportion of it was designed around or supported by digital technologies. Values from 0% to 100% could be selected.

2.2.2 Initiation of students’ learning activities involving digital technologies

To measure the initiation of students’ learning activities involving digital technologies, we also used measures employed in previous studies (Sailer et al. 2021a, b). We presented a brief description of each ICAP learning activity, describing students’ engagement in passive, active, constructive, and interactive learning activities. On the basis of these descriptions, we asked participants to estimate, for each learning activity, how often they use digital technologies in this way in a typical lesson. Participants rated the frequency on a 5-point Likert scale ranging from ‘never’ (0) to ‘very often’ (4). To calculate the proportion of each teacher-initiated learning activity, we divided the Likert score of each learning activity by the sum of the scores of all four learning activities. By multiplying these proportions by the frequency of technology use during teaching, we obtained the percentage of a typical lesson in which each learning activity involving digital technologies was initiated. This procedure resulted in four variables: the self-assessed initiation of students’ (1) passive, (2) active, (3) constructive, and (4) interactive learning activities involving digital technologies. These variables can be interpreted as percentages.
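The arithmetic of this procedure can be sketched in a few lines of Python. The function name `icap_time_shares` and the dictionary layout are hypothetical. The text does not say how responses with ‘never’ for all four activities (a sum of zero) were handled; the sketch simply assumes that all four shares are then 0.

```python
ICAP_LEVELS = ("passive", "active", "constructive", "interactive")


def icap_time_shares(ratings: dict[str, int], tech_use_percent: float) -> dict[str, float]:
    """Turn four 0-4 frequency ratings into percentages of a typical lesson.

    ratings: Likert score per ICAP level (0 = 'never' ... 4 = 'very often').
    tech_use_percent: reported share (0-100) of the lesson involving digital technologies.
    """
    for level in ICAP_LEVELS:
        if not 0 <= ratings[level] <= 4:
            raise ValueError(f"rating for {level} must lie between 0 and 4")
    if not 0 <= tech_use_percent <= 100:
        raise ValueError("tech_use_percent must lie between 0 and 100")
    total = sum(ratings[level] for level in ICAP_LEVELS)
    if total == 0:
        # Assumption: not specified in the text; avoid division by zero.
        return {level: 0.0 for level in ICAP_LEVELS}
    # proportion of each activity times the frequency of technology use
    return {level: ratings[level] / total * tech_use_percent for level in ICAP_LEVELS}
```

For example, ratings of 2, 4, 2, and 0 combined with 40% technology use yield 10%, 20%, 10%, and 0% of the lesson. By construction, the four shares add up to the reported frequency of technology use.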

2.2.3 Instrumental digital skills and critical digital skills

Inspired by the anchoring vignettes method by King et al. (2004) and building on the previous version of the instrument, IN.K19 version 1.0 (Sailer et al. 2021b), we developed 9 scenarios for instrumental and 11 scenarios for critical digital skills. The scenarios were based on the framework of the Department of Media Education of the State Institute for School Quality and Educational Research in Munich (DCB 2017; ISB 2017). Each scenario described an everyday situation in which the respective skill must be applied. For each skill, we asked participants to rate 3 statements that followed the scenario. The statements covered the following areas: having the respective knowledge, being able to perform the skill, and being able to advise others so that they could perform the skill. Participants rated each statement on a 5-point Likert scale ranging from ‘strongly disagree’ (1) to ‘strongly agree’ (5). To specify the context of the self-assessment, this subsection was preceded by the following explanation: ‘The scenarios and statements refer to your use of digital technologies independent of the school context’. As examples, Fig. 2 presents a scenario illustrating an instrumental digital skill and Fig. 3 one illustrating a critical digital skill.
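To make the item format concrete, the following Python sketch models one scenario with its three statements (knowledge, action, advice), each rated from 1 to 5. The class and field names are hypothetical, and the manifest mean is only an illustration: the study itself analyses the items with latent models rather than with mean scores.

```python
from dataclasses import dataclass
from statistics import mean

STATEMENT_AREAS = ("knowledge", "action", "advice")


@dataclass
class ScenarioResponse:
    scenario_id: str          # hypothetical identifier, e.g. "instrumental_01"
    ratings: dict[str, int]   # statement area -> rating from 1 to 5

    def __post_init__(self) -> None:
        # every scenario is followed by exactly one statement per area
        if set(self.ratings) != set(STATEMENT_AREAS):
            raise ValueError("expected one rating each for knowledge, action and advice")
        # 1 = 'strongly disagree' ... 5 = 'strongly agree'
        if any(not 1 <= r <= 5 for r in self.ratings.values()):
            raise ValueError("ratings must lie between 1 and 5")


def manifest_scale_mean(responses: list[ScenarioResponse]) -> float:
    """Illustrative unweighted mean over all statements of one scale."""
    return mean(r for resp in responses for r in resp.ratings.values())
```

With 9 scenarios, the instrumental scale thus yields 9 × 3 = 27 items.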

We conducted think-aloud interviews on this part of the instrument with one university student and two experts on teaching with digital technologies and revised the instrument on the basis of the results. Further, Kastorff et al. (2022) showed that the instrument could significantly predict ICT measures. In the final instrument, self-assessed instrumental digital skills comprised 27 items (α = .94) and self-assessed critical digital skills 33 items (α = .95).
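The reliability coefficients are reported as α. Assuming these are Cronbach's alpha values (the text does not name the coefficient), they follow the formula in the docstring below. This standard-library Python sketch is an illustration only, not the authors' analysis script.

```python
from statistics import pvariance


def cronbach_alpha(item_scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix given as a list of rows.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of sum scores)
    """
    if not item_scores:
        raise ValueError("need at least one respondent")
    k = len(item_scores[0])
    if k < 2 or any(len(row) != k for row in item_scores):
        raise ValueError("need at least two items and the same number of items per respondent")
    # variance of each item (column) across respondents
    item_variances = [pvariance(column) for column in zip(*item_scores)]
    # variance of each respondent's sum score
    total_variance = pvariance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("sum scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)
```

Population variances are used for both the items and the sum score. Because the same divisor appears in the numerator and the denominator, using sample variances instead would give the same α.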

2.2.4 Technology-related teaching skills

The self-assessment of technology-related teaching skills was based on the previous version of the instrument, IN.K19 version 1.0 (Sailer et al. 2021b). We revised the spelling and wording of its scenarios and statements. Three scenarios in the previous version consisted of two sub-scenarios each; we merged each of these pairs into a single scenario.

In the final instrument version 1.1, we presented 19 scenarios (DCB 2017) to participants and asked them to rate the statements that followed on a 5-point Likert scale ranging from ‘strongly disagree’ (1) to ‘strongly agree’ (5). As with instrumental and critical digital skills, the scenarios were examples of situations in which the respective skill was required. Since this section of the survey was related to technology-related teaching skills, the scenarios depicted teaching situations and were preceded by the following clarification: ‘The scenarios and statements that now follow relate to your use of digital technologies in the school context’. For each scenario, participants rated 3 items covering the areas of knowledge, action, and advice. Across the four phases of teachers’ classroom-related actions in the K19 model, planning comprised 27 items (α = .96), implementing 15 items (α = .93), evaluating 6 items (α = .93), and sharing 9 items (α = .94). In total, self-assessed technology-related teaching skills comprised 57 items (α = .98). Figure 4 presents a scenario illustrating a technology-related teaching skill.
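The item counts reported above follow from three statements per scenario. The Python sketch below checks this arithmetic. Note that the scenario counts per phase (9, 5, 2, 3) are derived here by dividing the reported item counts by 3; the text does not state them explicitly.

```python
ITEMS_PER_SCENARIO = 3  # knowledge, action, advice

# Scenario counts derived from the reported item counts (items / 3).
TEACHING_SCENARIOS = {"planning": 9, "implementing": 5, "evaluating": 2, "sharing": 3}
BASIC_SCENARIOS = {"instrumental": 9, "critical": 11}


def items_per_scale(scenarios: dict[str, int]) -> dict[str, int]:
    """Number of items per scale, given the number of scenarios per scale."""
    return {name: n * ITEMS_PER_SCENARIO for name, n in scenarios.items()}


teaching_items = items_per_scale(TEACHING_SCENARIOS)
basic_items = items_per_scale(BASIC_SCENARIOS)
print(teaching_items, sum(teaching_items.values()))
print(basic_items, sum(basic_items.values()))
```

Together with the 27 instrumental and 33 critical items, IN.K19+ version 1.1 therefore comprises 39 scenarios and 117 items.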

2.3 Statistical analysis

We used a variety of statistical analyses to investigate our research questions about instrumental and critical digital skills and technology-related teaching skills. We used confirmatory factor analysis and latent modelling as outlined by Bollen (1989) to test the validity of the measurement models. Factorial validity was tested for instrumental digital skills, critical digital skills, and technology-related teaching skills. Predictive validity was tested with multiple latent regressions in which instrumental digital skills, critical digital skills, and technology-related teaching skills predicted the frequency of digital technology use during teaching and the initiation of student learning activities involving digital technologies. Model fit was evaluated with standard fit indices such as the root mean square error of approximation (RMSEA) and the comparative fit index (CFI); acceptable fit was indicated by RMSEA values below .08 and CFI values above .90. In addition, Satorra-Bentler corrections were applied to all chi-square values (Satorra and Bentler 2010). Analyses were computed in R 4.0.3, and syntax files are available in the open science repository https://osf.io/95xaj/.
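For readers less familiar with these indices, the Python sketch below computes the textbook point estimates of RMSEA and CFI from chi-square values and applies the cut-offs used here. It is a hedged illustration: statistical software may use N instead of N - 1 in the RMSEA formula and may report robust variants based on Satorra-Bentler scaling that differ from simply inserting a scaled chi-square into these formulas. The numbers in the usage lines are made up and are not results of this study.

```python
from math import sqrt


def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n greater than 1")
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model: float, df_model: int, chi2_base: float, df_base: int) -> float:
    """CFI = 1 - max(chi2_M - df_M, 0) / max(chi2_B - df_B, chi2_M - df_M, 0)."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model)  # d_model >= 0 also covers the 0 case
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base


def acceptable_fit(rmsea_value: float, cfi_value: float) -> bool:
    """Cut-offs stated in the text: RMSEA below .08 and CFI above .90."""
    return rmsea_value < 0.08 and cfi_value > 0.90


# Usage with made-up numbers (baseline = independence model):
r = rmsea(300.0, 100, 552)
c = cfi(300.0, 100, 5000.0, 120)
print(round(r, 3), round(c, 3), acceptable_fit(r, c))
```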

3 Results

3.1 Factorial validity

For basic digital skills, we built models for instrumental digital skills and critical digital skills (Models 1–2). For technology-related teaching skills, we built models for each of the four phases of teachers’ classroom-related actions of the K19 model: planning, implementing, evaluating, and sharing (Models 3–6). Table 1 shows that none of Models 1–6 fit the data well. We therefore refined them by adding scenario-specific factors to the measurement models of Models 1–6, because items referring to the same scenario showed considerable covariance, meaning that they measure different aspects of the same skill (Sailer et al. 2021b). Each scenario-specific factor is formed by the three items of that scenario. Capturing this scenario-specific covariance yielded Models 7–12, in which the latent correlations between all scenario-specific factors and the respective technology-related skill factor were set to zero. This refinement significantly improved model fit for all models (all p < .001). In a next step, the models for the four phases of teaching with digital technologies were combined into a single second-order model of technology-related teaching skills (Model 13), which showed acceptable fit (see Table 1).
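The structure of Models 7–12 can be illustrated by generating model syntax. The Python sketch below writes lavaan-style syntax (lavaan is an R package for structural equation modelling; the text does not state which R package the authors used) for one skill factor loading on all items, one scenario-specific factor per scenario built from its three items, and zero covariances between the skill factor and every scenario-specific factor. Item and factor names are hypothetical. Covariances among the scenario-specific factors are left unconstrained because the text does not specify them, and the second-order Model 13 is not covered. The authors' actual syntax files are in the OSF repository.

```python
AREAS = ("k", "a", "v")  # hypothetical item suffixes: knowledge, action, advice


def scenario_factor_model(skill: str, n_scenarios: int) -> str:
    """Return lavaan-style syntax: a skill factor plus scenario factors uncorrelated with it."""
    all_items = []
    scenario_lines = []
    for j in range(1, n_scenarios + 1):
        items = [f"{skill}_s{j}_{area}" for area in AREAS]
        all_items.extend(items)
        # scenario-specific factor formed by the three items of scenario j
        scenario_lines.append(f"{skill}_sc{j} =~ " + " + ".join(items))
        # covariance between the skill factor and this scenario factor fixed to zero
        scenario_lines.append(f"{skill} ~~ 0*{skill}_sc{j}")
    # general skill factor measured by every item of the scale
    skill_line = f"{skill} =~ " + " + ".join(all_items)
    return "\n".join([skill_line] + scenario_lines)


print(scenario_factor_model("instr", 2))
```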

Models 7–8 and 13 were retained as the measurement models for all further analyses. The results support the instrument’s factorial validity for the factors instrumental digital skills, critical digital skills, and technology-related teaching skills, as the models showed good psychometric properties as long as a scenario-specific factor was taken into account.
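Why the scenario-specific factor matters can be illustrated with the covariance matrix that such a model implies. In the Python sketch below, which uses hypothetical loadings, each item loads on one skill factor and on the factor of its scenario. All factors are standardised and, as an assumption consistent with the zero correlations described in Section 3.1 (the text does not state how the scenario factors relate to each other), mutually uncorrelated. Items from the same scenario then share extra covariance equal to the product of their scenario loadings, which a model containing only the skill factor cannot reproduce. The sketch illustrates the structure only; it is not the authors' model specification.

```python
def implied_covariance(skill_loadings, scenario_loadings, scenario_of, residual_vars):
    """Model-implied item covariance matrix for one skill factor plus
    scenario-specific factors (all factors standardised and uncorrelated).

    cov(x_i, x_j) = l_i * l_j
                    + s_i * s_j  if items i and j share a scenario
                    + theta_i    if i == j
    """
    n = len(skill_loadings)
    sigma = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            cov = skill_loadings[i] * skill_loadings[j]
            if scenario_of[i] == scenario_of[j]:
                cov += scenario_loadings[i] * scenario_loadings[j]
            if i == j:
                cov += residual_vars[i]
            sigma[i][j] = cov
    return sigma


# Hypothetical example: two scenarios with three items each
skill = [0.6] * 6
scenario = [0.4] * 6
scenario_of = ["A", "A", "A", "B", "B", "B"]
residual = [0.48] * 6  # chosen so that each item has unit variance

sigma = implied_covariance(skill, scenario, scenario_of, residual)
print(f"same scenario:       {sigma[0][1]:.2f}")  # 0.36 + 0.16
print(f"different scenarios: {sigma[0][3]:.2f}")  # 0.36 only
print(f"item variance:       {sigma[0][0]:.2f}")
```

The within-scenario surplus (0.16 in this example) is the scenario-specific covariance that Models 1–6 left unexplained.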

3.2 Predictive validity

We used multiple latent regressions to test the predictive validity of the instrument. As the lower part of Table 1 shows, technology-related skills predicted the frequency of digital technology use during teaching (Model 14) and the initiation of students’ learning activities involving digital technologies (Model 15). To avoid interpretational confounding (Bollen 2007; Burt 1976), factor loadings were constrained to the values estimated in the final measurement models (Models 7, 8, and 13). Both models (Models 14 and 15) showed good fit to the data (see Table 1).

Model 14 is illustrated in Fig. 5. In this SEM, instrumental digital skills (β = .133; p = .005) and technology-related teaching skills (β = .138; p < .001) were positively related to the frequency of technology use during teaching, whereas critical digital skills were not. These results support Hypotheses 4.1 and 4.3, which state that instrumental digital skills and technology-related teaching skills are positively related to the frequency of digital technology use during teaching, but are not consistent with Hypothesis 4.2, which states that critical digital skills are positively related to the frequency of digital technology use.
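In typical SEM software, the p-values reported for path coefficients come from large-sample Wald tests: the estimate divided by its standard error is referred to a standard normal distribution, while the standardised β rescales the coefficient by the standard deviations of predictor and outcome. The Python sketch below shows both computations using only the standard library. The estimate, standard error, and standard deviations are hypothetical, since the text reports only β and p, and the software used by the authors may compute standardised solutions and their tests differently (e.g., by testing the standardised estimate with its own standard error).

```python
import math


def wald_p(estimate: float, se: float) -> float:
    """Two-sided p-value of the Wald z-test for H0: coefficient = 0."""
    z = estimate / se
    # P(|Z| > |z|) for Z ~ N(0, 1), written with the complementary error function
    return math.erfc(abs(z) / math.sqrt(2.0))


def standardised_beta(b: float, sd_predictor: float, sd_outcome: float) -> float:
    """Rescale an unstandardised regression coefficient to a standardised beta."""
    return b * sd_predictor / sd_outcome


# Hypothetical path: unstandardised b = 0.25 (SE = 0.08); SD(x) = 0.5, SD(y) = 1.0
p = wald_p(0.25, 0.08)
beta = standardised_beta(0.25, sd_predictor=0.5, sd_outcome=1.0)
print(f"beta = {beta:.3f}, p = {p:.4f}")
```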

Fig. 5 Structural equation model (SEM) showing the frequency of technology use during teaching, instrumental digital skills, critical digital skills, and technology-related teaching skills. Latent variables are represented by circles, and measured variables by rectangles. Continuous lines describe significant relationships, whereas broken lines represent nonsignificant relationships. The values indicated on the lines are standardised beta values.

Figure 6 shows the SEM for Model 15, which concerns the initiation of different types of learning activities. The exploratory part of this analysis addressed the extent to which instrumental digital skills (RQ5.1) and critical digital skills (RQ5.2) are related to the initiation of different types of learning activities involving digital technologies. Instrumental digital skills were related to the initiation of passive learning activities (β = .169; p = .002), but not to the initiation of active, constructive, or interactive learning activities. Critical digital skills showed a similarly narrow pattern: they were related only to the initiation of active learning activities (β = .139; p = .026), and not to the initiation of passive, constructive, or interactive learning activities involving digital technologies.

Further, we hypothesised that technology-related teaching skills would show the strongest relationship with the initiation of interactive learning activities, followed by constructive, active, and passive learning activities. Following the approach of Sailer et al. (2021b), we compared the standardised beta values in the SEM descriptively. Technology-related teaching skills showed a negative relationship with the initiation of passive learning activities (β = −.090; p = .005) and positive relationships with the initiation of constructive (β = .338; p < .001) and interactive (β = .303; p < .001) learning activities, but no significant relationship with the initiation of active learning activities. Because the relationships did not increase from passive to interactive learning activities as hypothesised (the coefficient for interactive learning activities was slightly smaller than that for constructive learning activities), the results are not consistent with Hypothesis 5.3.
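The descriptive check of Hypothesis 5.3 amounts to asking whether the standardised coefficients increase along the ICAP order from passive to interactive learning activities. The Python sketch below performs that check on the coefficients reported in this section. The coefficient for active learning activities is not reported in the text and is therefore omitted, so the sketch cannot capture that part of the deviation from the hypothesis. Like the comparison in the text, it is purely descriptive and not a statistical test of differences between coefficients.

```python
ICAP_ORDER = ["passive", "active", "constructive", "interactive"]


def order_violations(betas: dict[str, float]) -> list[tuple[str, str]]:
    """Return neighbouring ICAP modes whose coefficients do not increase.

    Modes missing from `betas` are skipped, so neighbours are taken among
    the modes that are present.
    """
    present = [mode for mode in ICAP_ORDER if mode in betas]
    return [
        (lower, higher)
        for lower, higher in zip(present, present[1:])
        if betas[higher] <= betas[lower]
    ]


# Standardised betas for technology-related teaching skills reported in the text
reported = {"passive": -0.090, "constructive": 0.338, "interactive": 0.303}
print(order_violations(reported))
```

Checking only neighbouring pairs is sufficient, because a sequence increases monotonically exactly when every neighbouring pair increases.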

Fig. 6 Structural equation model (SEM) showing the initiation of students’ learning activities involving digital technologies, instrumental digital skills, critical digital skills, and technology-related teaching skills. Latent variables are represented by circles, and measured variables by rectangles. Continuous lines describe significant relationships, whereas broken lines represent nonsignificant relationships. The values indicated on the lines are standardised beta values.

4 Discussion

In this study, we validated the scenario-based self-assessment instrument IN.K19+ for measuring basic digital skills and technology-related teaching skills. Regarding factorial validity, the factor structure and internal consistency of the instrument showed good to very good results when a scenario-specific factor was taken into account (Sailer et al. 2021b). Regarding predictive validity, instrumental digital skills and technology-related teaching skills were positively related to the frequency of digital technology use during teaching, whereas critical digital skills were not. An exploratory analysis of the initiation of students’ learning activities showed that instrumental digital skills were significantly related to the initiation of passive learning activities, whereas critical digital skills were associated with the initiation of active learning activities. Technology-related teaching skills were negatively related to the initiation of passive learning activities and positively related to the initiation of constructive and interactive learning activities.

The goal of our study was to provide a valid instrument that uses scenarios as a common and concrete frame of reference for participants (King and Wand 2007; van Soest et al. 2011). The results showed that the approach is suitable for self-assessment, as the hypothesised constructs were observed in the responses, confirming factorial validity. Regarding predictive validity, the analyses for instrumental and critical digital skills were only exploratory, and the results for technology-related teaching skills did not exactly match our hypothesis. Nevertheless, from an exploratory point of view, we think that the results as a whole tentatively reflect the expected pattern: Instrumental and critical digital skills were associated with the basic use of digital technologies during teaching, namely the initiation of passive and active learning activities. For more complex scenarios of digital technology use during teaching (e.g., the initiation of constructive and interactive learning activities), technology-related teaching skills turned out to be crucial. Moreover, these skills were negatively related to the initiation of passive learning activities, which suggests that teachers with higher technology-related teaching skills may be more likely to stimulate students to engage actively in learning during lessons. This pattern broadly reflects the order of the ICAP framework (Chi 2009; Chi and Wylie 2014).

However, unexpected findings also emerged. Instrumental and critical digital skills were not related to the initiation of constructive and interactive learning activities. These skills may be more relevant to shallow learning processes, whereas technology-related teaching skills are associated with deep learning. Furthermore, critical digital skills were not related to the frequency of technology use during teaching. As there is still little research on these relationships, we can only speculate about how these results might be explained. One possible explanation is the cautious nature of critical digital skills, which encompass considering the appropriateness of technology use. This point also raises the question of how teaching with digital technologies is related to teaching about digital technologies.

The IN.K19+ instrument was designed to be applicable across various subjects and types of schools, encompassing knowledge- and action-oriented facets of technology-related skills. Unlike other self-assessment tools (see e.g. Ghomi and Redecker 2019), IN.K19+ goes beyond technology-related teaching skills by also incorporating skills to be taught to students and aspects of media education. It provides teachers with graphical feedback on the current level of their skills while giving them access to a comprehensive skill framework (DCB 2017), thus supporting their professional growth throughout the different stages of teacher education and training. The instrument’s benefits also extend beyond individual teachers: It can serve as a basis for advisory services and allows for the collection of comprehensive empirical data that can inform school development and educational policy making.

However, it should be kept in mind that scenario-based self-assessment is more time-intensive than conventional self-assessment. Developing a shorter scale could make its use more economical. Compared with objective measures, however, scenario-based self-assessment remains an easy-to-implement and accessible alternative. Further research should therefore gain more insight into the relationships among pure self-assessment, scenario-based self-assessment, and objective measurement.

This point also leads to some limitations of the present study. The previous version of the instrument set the criteria for determining predictive validity, but it is debatable whether these criteria are also appropriate for the newly added critical digital skills with their specific characteristics. Furthermore, the measures used for validation should themselves be critically reviewed, although results have been consistent across studies (Sailer et al. 2021b). For example, the frequency of digital technology use was assessed with a single item, which makes the measurement susceptible to inaccurate estimation. Because we believe that looking at the quantity of digital technology use is not enough, we also measured its quality in terms of the initiation of different types of student learning activities. This measure has limitations as well. For example, technology-related teaching skills showed a weaker relationship with the initiation of interactive than of constructive learning activities. Participants may have understood interactive learning activities in different ways, even though the term refers to very specific learning processes (Chi et al. 2018; Sailer et al. 2021a). Overall, it is difficult to adequately determine predictive validity as long as the relationships between technology-related skills and the quality of digital technology use are themselves not entirely clear. A further limitation concerns open science practices. Both our instrument and our data are freely available, which is an important step towards open science. However, although we closely followed the approach of Sailer et al. (2021b), this study was not preregistered, which would have strengthened our procedure.

Yet, from our perspective, the instrument can already provide a valid way of building on the advantages of self-assessment, while at the same time providing an anchor for participants’ self-assessments through the use of scenarios. We therefore believe that the scenario-based instrument IN.K19+ serves as a valuable tool for teachers, supporting them in acquiring and advancing their technology-related skills to meet the challenges of the digital world.

Notes

German: K19 = 19 Kernkompetenzen—19 core skills.

German: IN = Instrument—IN.K19 = instrument for the assessment of 19 core teaching skills.

IN.K19+ = instrument for the assessment of 19 core teaching skills plus basic digital skills.

References

Bollen, K. A. (1989). Structural equations with latent variables. John Wiley & Sons. https://doi.org/10.1002/9781118619179.

Bollen, K. A. (2007). Interpretational confounding is due to misspecification, not to type of indicator: Comment on Howell, Breivik, and Wilcox (2007). Psychological Methods, 12(2), 219–228. https://doi.org/10.1037/1082-989x.12.2.219.

Buckingham, D. (2003). Media education: Literacy, learning, and contemporary culture. Polity Press.

Burt, R. S. (1976). Interpretational confounding of unobserved variables in structural equation models. Sociological Methods & Research, 5(1), 3–52. https://doi.org/10.1177/004912417600500101.

Chi, M. T. H. (2009). Active-constructive-interactive: A conceptual framework for differentiating learning activities. Topics in Cognitive Science, 1(1), 73–105. https://doi.org/10.1111/j.1756-8765.2008.01005.x.

Chi, M. T. H., & Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243. https://doi.org/10.1080/00461520.2014.965823.

Chi, M. T. H., Adams, J., Bogusch, E. B., Bruchok, C., Kang, S., Lancaster, M., Levy, R., Li, N., McEldoon, K. L., Stump, G. S., Wylie, R., Xu, D., & Yaghmourian, D. L. (2018). Translating the ICAP theory of cognitive engagement into practice. Cognitive Science, 42(6), 1777–1832. https://doi.org/10.1111/cogs.12626.

DCB (Forschungsgruppe Lehrerbildung Digitaler Campus Bayern) [Research Group Teacher Education Digital Campus Bavaria] (2017). Kernkompetenzen von Lehrkräften für das Unterrichten in einer digitalisierten Welt [Core competencies of teachers for teaching in a digital world]. Merz Medien + Erziehung: Zeitschrift für Medienpädagogik, 4, 65–74.

DeVellis, R. F., & Thorpe, C. T. (2021). Scale development: Theory and applications. SAGE.

Eickelmann, B., & Vennemann, M. (2017). Teachers’ attitudes and beliefs regarding ICT in teaching and learning in European countries. European Educational Research Journal, 16(6), 733–761. https://doi.org/10.1177/1474904117725899.

Endberg, M., & Lorenz, R. (2017). Selbsteinschätzung medienbezogener Kompetenzen von Lehrpersonen der Sekundarstufe I im Bundesländervergleich und im Trend von 2016 bis 2017 [Teachers’ self-estimations of technology-related competences comparing different German federal districts and developments from 2016 to 2017]. In R. Lorenz, W. Bos, M. Endberg, B. Eickelmann, S. Grafe, & J. Vahrenhold (Eds.), Schule digital – der Länderindikator 2017 (pp. 151–177). Waxmann.

Ertmer, P. A., & Ottenbreit-Leftwich, A. T. (2010). Teacher technology change. How knowledge, confidence, beliefs, and culture intersect. Journal of Research on Technology in Education, 42(3), 255–284. https://doi.org/10.1080/15391523.2010.10782551.

European Commission (2013). Survey of schools: ICT in education. Benchmarking access, use, and attitudes to technology in Europe’s schools. European Commission. https://doi.org/10.2759/94499.

Ferrari, A. (2012). Digital competence in practice: An analysis of frameworks. Publications Office of the European Union. https://doi.org/10.2791/82116.

Ferrari, A., Punie, Y., & Redecker, C. (2012). Understanding digital competence in the 21st century: An analysis of current frameworks. In A. Ravenscroft, S. Lindstaedt, C. D. Kloos, & D. Hernández-Leo (Eds.), 21st century learning for 21st century skills. 7th European Conference on Technology Enhanced Learning, EC-TEL 2012, Saarbrücken, Germany, September 18–21, 2012. Proceedings (pp. 79–92). Springer.

Fraillon, J., Ainley, J., Schulz, W., Friedman, T., & Gebhardt, E. (Eds.). (2014). Preparing for life in a digital world: IEA international computer and information literacy study. International report. Springer. https://doi.org/10.1007/978-3-319-14222-7.

Ghomi, M., & Redecker, C. (2019). Digital competence of educators (DigCompEdu): Development and evaluation of a self-assessment instrument for teachers’ digital competence. In Proceedings of the 11th International conference on computer supported education (CSEDU 2019) (Vol. 1, pp. 541–548). SciTePress. https://doi.org/10.5220/0007679005410548.

Tivian XI GmbH (2022). EFS Survey, version EFS Fall 2022. Tivian XI.

Hobbs, R., Felini, D., & Cappello, G. (2011). Reflections on global developments in media literacy education: Bridging theory and practice. Journal of Media Literacy Education, 3(2), 66–73.

ISB (Staatsinstitut für Schulqualität und Bildungsforschung München, & Referat Medienbildung) [State Institute for School Quality and Educational Research Munich, Department of Media Education] (2017). Kompetenzrahmen zur Medienbildung an bayerischen Schulen [Competence framework for media education in Bavarian schools]. https://mebis.bycs.de/assets/uploads/mig/2_2017_03_Kompetenzrahmen-zur-Medienbildung-an-bayerischen-Schulen-1.pdf. Accessed 22 Sep 2023

Kastorff, T., Sailer, M., Vejvoda, J., Schultz-Pernice, F., Hartmann, V., Hertl, A., Berger, S., & Stegmann, K. (2022). Context-specificity to reduce bias in self-assessments: Comparing teachers’ scenario-based self-assessment and objective assessment of technological knowledge. Journal of Research on Technology in Education. https://doi.org/10.1080/15391523.2022.2062498.

Kersch, D., & Lesley, M. (2019). Hosting and healing: A framework for critical media literacy pedagogy. Journal of Media Literacy Education, 11, 37–48. https://doi.org/10.23860/JMLE-2019-11-3-4.

King, G., & Wand, J. (2007). Comparing incomparable survey responses: Evaluating and selecting anchoring vignettes. Political Analysis, 15(1), 46–66. https://doi.org/10.1093/pan/mpl011.

King, G., Murray, C. J. L., Salomon, J. A., & Tandon, A. (2004). Enhancing the validity and cross-cultural comparability of measurement in survey research. American Political Science Review, 98(1), 191–207. https://doi.org/10.1017/s000305540400108x.

Krumsvik, R. J. (2011). Digital competence in the Norwegian teacher education and schools. Högre Utbildning, 1(1), 39–51.

van Laar, E., van Deursen, A. J. A. M., van Dijk, J. A. G. M., & de Haan, J. (2017). The relation between 21st-century skills and digital skills: A systematic literature review. Computers in Human Behavior, 72, 577–588. https://doi.org/10.1016/j.chb.2017.03.010.

Lachner, A., Backfisch, I., & Stürmer, K. (2019). A test-based approach of modeling and measuring technological pedagogical knowledge. Computers & Education, 142, 103645. https://doi.org/10.1016/j.compedu.2019.103645.

Law, N., Pelgrum, W. J., & Plomp, T. (2008). Pedagogy and ICT use in schools around the world. Findings from the IEA SITES 2006 Study. Springer. https://doi.org/10.1007/978-1-4020-8928-2.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054. https://doi.org/10.1111/j.1467-9620.2006.00684.x.

Newman, T. (2009). A review of digital literacy in 3–16 year olds: Evidence, developmental models, and recommendations. Part C: Catalogue of evidence. https://kipdf.com/a-review-of-digital-literacy-in-3-16-year-olds-evidence-developmental-models-and_5aaf5c161723dd349c80a11b.html. Accessed 22 Sep 2023

OECD (2015). Students, computers, and learning. Making the connection. OECD Publishing. https://doi.org/10.1787/9789264239555-en.

Redecker, C. (2017). European framework for the digital competence of educators: DigCompEdu. Publications Office of the European Union. https://doi.org/10.2760/159770.

Rubach, C., & Lazarides, R. (2021). Addressing 21st-century digital skills in schools—Development and validation of an instrument to measure teachers’ basic ICT competence beliefs. Computers in Human Behavior, 118, 106636. https://doi.org/10.1016/j.chb.2020.106636.

Sailer, M., Murboeck, J., & Fischer, F. (2021a). Digital learning in schools: What does it take beyond digital technology? Teaching and Teacher Education, 103, 103346. https://doi.org/10.1016/j.tate.2021.103346.

Sailer, M., Stadler, M., Schultz-Pernice, F., Franke, U., Schöffmann, C., Paniotova, V., Husagic, L., & Fischer, F. (2021b). Technology-related teaching skills and attitudes: Validation of a scenario-based self-assessment instrument for teachers. Computers in Human Behavior, 115, 106625. https://doi.org/10.1016/j.chb.2020.106625.

Satorra, A., & Bentler, P. M. (2010). Ensuring positiveness of the scaled difference chi-square test statistic. Psychometrika, 75(2), 243–248. https://doi.org/10.1007/s11336-009-9135-y.

Scheiter, K. (2021). Lernen und Lehren mit digitalen Medien: Eine Standortbestimmung [Technology-enhanced learning and teaching: An overview]. Zeitschrift für Erziehungswissenschaft, 24(5), 1039–1060. https://doi.org/10.1007/s11618-021-01047-y.

Scherer, R., Tondeur, J., & Siddiq, F. (2017). On the quest for validity: Testing the factor structure and measurement invariance of the technology-dimensions in the technological, pedagogical, and content knowledge (TPACK) model. Computers & Education, 112, 1–17. https://doi.org/10.1016/j.compedu.2017.04.012.

Schmidt, D. A., Baran, E., Thompson, A. D., Mishra, P., Koehler, M. J., & Shin, T. S. (2009). Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers. Journal of Research on Technology in Education, 42(2), 123–149. https://doi.org/10.1080/15391523.2009.10782544.

Senkbeil, M., Ihme, J. M., & Wittwer, J. (2013). The test of technological and information literacy (TILT) in the national educational panel study: Development, empirical testing, and evidence for validity. Journal for Educational Research Online, 5, 139–161. https://doi.org/10.25656/01:8428.

Seufert, S., Guggemos, J., & Sailer, M. (2021). Technology-related knowledge, skills, and attitudes of pre- and in-service teachers: The current situation and emerging trends. Computers in Human Behavior, 115, 106552. https://doi.org/10.1016/j.chb.2020.106552.

van Soest, A., Delaney, L., Harmon, C., Kapteyn, A., & Smith, J. P. (2011). Validating the use of anchoring vignettes for the correction of response scale differences in subjective questions. Journal of the Royal Statistical Society: Series A (Statistics in Society), 174(3), 575–595. https://doi.org/10.1111/j.1467-985X.2011.00694.x.

Stalder, F. (2018). The digital condition. Polity Press.

Tamim, R. M., Bernard, R. M., Borokhovski, E., Abrami, P. C., & Schmid, R. F. (2011). What forty years of research says about the impact of technology on learning: A second-order meta-analysis and validation study. Review of Educational Research, 81(1), 4–28. https://doi.org/10.3102/0034654310393361.

Valtonen, T., Sointu, E., Kukkonen, J., Kontkanen, S., Lambert, M. C., & Mäkitalo-Siegl, K. (2017). TPACK updated to measure pre-service teachers’ twenty-first century skills. Australasian Journal of Educational Technology, 33(3), 15–31. https://doi.org/10.14742/ajet.3518.

van Vliet, P. J. A., Kletke, M. G., & Chakraborty, G. (1994). The measurement of computer literacy: A comparison of self-appraisal and objective tests. International Journal of Human-Computer Studies, 40(5), 835–857. https://doi.org/10.1006/ijhc.1994.1040.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

J. Vejvoda, M. Stadler, F. Schultz-Pernice, F. Fischer, and M. Sailer have no competing interests to declare that are relevant to the content of this article.

Acknowledgement

This research was supported by a grant from the German Federal Ministry of Education and Research (Grant No. 01JA 1810).

Additional information

Data and material

Data and material are available at https://osf.io/95xaj/.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vejvoda, J., Stadler, M., Schultz-Pernice, F. et al. Getting ready for teaching with digital technologies: Scenario-based self-assessment in teacher education and professional development. Unterrichtswiss 51, 511–532 (2023). https://doi.org/10.1007/s42010-023-00186-x

DOI: https://doi.org/10.1007/s42010-023-00186-x

Keywords

- Technology-related teaching skills

- Instrumental digital skills

- Critical digital skills

- Scenario-based assessment

- Self-assessment

- Student learning activities