Abstract

When the 2022 Russian full-scale invasion of Ukraine forced millions of people to leave their homes, officials worldwide scrambled to estimate the number of people who would seek refuge in their countries. There were a limited number of official tools in place to lean on to help determine this estimate. In this article, we investigate the possibility of using various publicly available organic (i.e. non-designed) data to predict forced movement from Ukraine early in the crisis. In particular, we establish Ukrainian-language insecurity and contextual indicators from multiple data sources, namely Google Trends, Twitter/X, local newspapers, the ACLED database, and the GDELT database. We compare the usefulness of these indicators in predicting forced migration into three neighboring countries: Poland, Slovakia, and Hungary. To minimize the challenge of temporal misalignment between the organic data and actual movement, we develop a lagging and aggregation framework. Findings reveal Google Trends variables are a robust leading indicator of observed forced migration for this conflict. While other indicators are less strong, they still capture shifts in forced migration flows, highlighting the potential for using publicly available organic data during emerging forced displacement crises.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

According to the United Nations High Commissioner for Refugees (UNHCR), more than 110 million people were displaced from their homes at the end of 2022 [1]. Even though there are many drivers of forced migration, such as political instability, violence, and environmental change [2, 3], measuring them as a crisis emerges is difficult. Forced migration often occurs in places that are hard for researchers to reach. As a result, modeling forced migration using aggregated data across time and space is insufficient for assessing the dynamics of emerging crises.Footnote 1

This paper assesses whether and how new sources of information can be used for predicting forced displacement. These new sources capture displacement and its drivers at a daily temporal scale, permitting us to investigate emerging displacement related to the Russian invasion of Ukraine—the largest displacement crisis in Europe since the second world war. This conflict is also unprecedented in terms of its attention from governments, international organizations, and the media. Initial displacement occurred internally within Ukraine, from the east and south toward the west and north, and internationally, as Ukrainians crossed into nearby countries and to other countries in the European Union [5].

Previous work has shown that Twitter/X [6,7,8], Meta [9,10,11], and Google Trends [12,13,14,15,16] can be used to measure forced and planned migration. However, these studies do not assess the relative strengths and weaknesses of various indicators across digital sources for the same displacement situation. In this article, we are interested in the digital trail of organic (that is, public, non-design) data sets that can be used to predict forced out-migration. We conduct a comparative analysis of different indicators constructed from different public organic sources of data: Global Database of Events, Language, and Tone (GDELT; a database that identifies events and tone from news and other internet data), Twitter/X (social media discussion), Armed Conflict Location and Event Data Project (ACLED; a manually compiled database containing information on conflict events), local/regional newspapers, and Google Trends (aggregated web search data). We develop an Indicator Construction and Assessment Framework that supports the creation and evaluation of conceptually different indicators from organic data and includes a component that accounts for temporal misalignment between an indicator and the ground truth data which, in this study, consists of estimates of border crossings collected by Ukraine’s State Border Guard Service and subsequently compiled by UNHCR. We assess whether and how these indicators predict or nowcast migration, focusing on border crossings from Ukraine to Hungary, Poland and Slovakia.Footnote 2

We find that model-based estimates created from indicators using Google search data related to travel, health insecurity, and physical insecurity, align closely to UNHCR border crossing estimates. In addition, although less strong, other indicators still capture major shifts in displacement. Together, these findings highlight the potential for using a combination of publicly available organic sources during emerging crises of displacement. This is particularly important when traditional data sources are not available.

The main contributions of this article are as follows:

-

We assess the predictive ability of indicators (constructed from different organic data sources) that indirectly represent one or more drivers of forced displacement.

-

We propose an Indicator Construction and Assessment Framework that addresses the temporal misalignment problem in small-timescale prediction via lagging and aggregation, expanding emerging forced migration analytics to finer timescales.

-

We find that, in Ukraine, searches for travel-related terms prior to leaving are the strongest indicator of movement, suggesting use of these types of signals in future crises in places that have sufficient internet penetration.

The remainder of the paper is organized as follows. Section “Migration analytics background” provides an overview of existing studies that predict migration in general and forced migration in particular, including documenting how researchers use organic data for predicting movement. Section “Data” presents the five organic data sources we consider, and the border crossing data we use as a proxy for persons displaced from Ukraine. In Section “Indicator construction and evaluation”, we introduce our general framework for predicting forced displacement with organic data, before describing the statistical methods we use in Section “Statistical methods”. We present results from the analysis in Section “Results” and summarize them in Section “Discussion”, before discussing the steps required to use such a framework to help predict displacement during an emerging crisis. Section “Limitations” describes our limitations. We offer some substantive and methodological conclusions in Section “Conclusions” and ethical considerations in Section “Ethical considerations”.

Migration analytics background

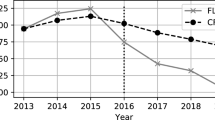

As of April 2024, UNHCR reported more than 6 million refugees from Ukraine.Footnote 3 More than one million refugees have been recorded in Germany, Poland, and Russia. Most Ukrainians left by crossing the border into neighboring countries; others have been forcibly taken to Russia [17]. Although early on many Ukrainians left their homes, after the end of the first phase of the war (early April 2022), the Ukrainian government encouraged people to return. Compared to the 2016 Syrian refugee crisis, many countries in the European Union (EU) activated the EU Temporary Protection Directive which permits protection of people displaced by Russian’s invasion of Ukraine. Consequently, in these EU countries, Ukrainians receive residence and work permits. Figure 1 shows the number of people crossing the border into three neighboring countries.Footnote 4 For these countries, the initial surge that occurred immediately after the war subsided by mid-March 2022, and border crossings became fairly stable for the next five months.

The large-scale displacement seen in Fig. 1 requires significant resources in host countries to meet the food and shelter needs of refugees. If host countries had the capacity to predict out- and in-flows, especially early in displacement situations, they would have greater capacity to respond with humanitarian and other relief. In contrast to planned migration, forced displacement occurs in contexts that do not allow for real-time survey data collection. One consequence is that, in most displacement situations, researchers are unable to capture the temporal granularity needed to understand the rapidly-changing early dynamics of displacement. Our work seeks to fill this gap by understanding whether and how indirect indicators that rely on different organic data sources capture displacement at a fine temporal scale at the outset of a crisis.

Traditional approaches for understanding Ukrainian displacement

As described above, collecting forced displacement data is difficult and rarely timely. Some data may be collected by UNCHR, the UN Agency for Refugees; other data may be collected by IOM (International Organization for Migration), the UN Agency for Migrants. Yet, how much data are collected and recorded for use by others depends on funding and other factors.

Field surveys are the key tool used to understand the dynamics of forced migration, but they typically yield data that describe movement across years rather than weeks or days (e.g. [18, 19]). Since Ravenstein’s laws of migration in 1889, migration theory has relied on survey data [20, 21]. More recently, longitudinal micro-level data about international migration have become more commonplace [22]. In contrast, this paper relies on organic data, which are not designed to understand forced migration, and assesses their utility given they have much shorter lag times.

Organic data in migration

Given the speed and scale of the Ukraine crisis, traditional indicators of bilateral migration could not aid in early decision making. Using novel data sources, including organic data, for understanding displacement has been identified as an important direction [23,24,25]. To be useful, organic data sources have to be available to international researchers and be leading indicators of movement. If both requirements are met, computational frameworks producing indicators could ideally predict the scale, source, and destination of migratory flows. Therefore, finding ways to use these data are of great interest for the computational study of forced migration [26]. Yet using these data to predict social or behavioral phenomena is not without its pitfalls [27] and ethical complexities [28, 29].

A wide variety of organic data sources exist (see [30] for a review in the context of migration) and migration scholars have begun to test which of these are most promising as indicators of movement. One large category of such indicators are geotagged data, where location information is used directly to measure migration flows. We begin with an overview of these studies, but also note that geolocated data have limitations (see [30]). For example, there was so little usage of geotagged tweets that in 2019, Twitter removed geotagging from tweets, allowing only images to be tagged with precise geolocation.Footnote 5 As a consequence, other studies—which we discuss subsequently—utilize organic data in novel ways, to extract additional information (e.g., xenophobic sentiment) and circumvent the sparsity of geotags (e.g., by using location mentions to capture spatial specificity [8, 15, 16]).

Some authors use digital data to directly measure migration. [31] uses LinkedIn to estimate changes of residency. Meta/Facebook collects information on individuals who report a change in location. These data have been used as an estimate of human mobility [32, 33] In Rwanda, [34] produced estimates on the basis of mobile phone data. Similarly, geotagged email data have been used to predict planned migration [35]; and geolocated tweets were used by [6] to estimate planned migration to OECD countries. More recently, [36] used geolocated Twitter data to estimate out-migration from Venezuela. [37] use geolocated data from the Facebook Advertising Platform to generate real-time estimates of mobility in the European Union and [38] use the same data to capture daily population displacement in Ukraine. In addition, [39] use Facebook data to model the impacts of the United Kingdom’s Brexit on out-migration.

While these studies emphasize the usefulness of geolocated data, it is not clear that robust indicators of geolocated movement are publicly available for most emergent crises. As such, migration scholars have looked for other information to extract from organic data. For example, some authors use data from Facebook ads which count the expected number of users of a given demographic profile to study differences in xenophobic attitudes related to U.S. immigration [9] or to study migration in the aftermath of the 2019 hurricane in Puerto Rico [10]. Others have used the Global Database of Events, Language, and Tone (GDELT, see Sect. 3.2) to measure conflicts and social unrest in origin countries, using them as signals of “push” factors to predict asylum-related migration [40]. Newspapers, particularly local newspapers in the origin or destination region of interest, also have relevant information as they may influence the perceptions of prospective migrants or describe events that operate as push or pull factors [41, 42].

Another important organic data source is web search activity. Web search activity may offer a window into the perceptions, intentions and insecurities of individuals in an origin country. Several studies use Google Trends data [43, 44] to predict migration. In these studies, indicators related to the economy, in particular labor markets, correlate with labor migration [13, 45, 46], as well as detect the intentions of Syrian migrants in Turkey to move on to European countries [47]. Other studies, such as [48], found that queries made in the language of origin, but from the destination country can help reveal migration patterns. For example, [49] recorded the search intensity of Croatian phrases searched for in Austria. More broadly, Google Trends data have been successfully incorporated in models predicting asylum flow to European countries [40] and combined with survey data to predict international migration to OECD (Organization for Economic Cooperation and Development) countries [50].

However, other authors have questioned the utility of certain Trends-based indicators, with [51] finding limited utility in the context of international migration. [52] cautiously endorses the utility of Google trends when studying migration from Japan to Europe, noting some potential drawbacks.

One recent study [53] examines the use of Google trend data in the Ukrainian crisis from February to June 2022. The authors examine the relationship between the total searches up to May 2022 for “weather" in Ukrainian or Russian and the number of Ukrainian refugees registered within a given host nation over the same time period as reported by UNHCR, finding a strong correlation of \(R^2=0.93\). Additionally, they study the use of the search term “evacuation" (again in Ukrainian/Russian) within Ukraine’s Oblasts over two month-long periods, finding that the usage qualitatively seems to correlate with the locations where most of the fighting occurs. This study is complementary to ours since we use a more detailed temporal granularity that measures dynamics at a daily level, enabling high resolution early warning for supporting timely humanitarian response. The detailed resolution also necessitates developing a more sophisticated statistical framework that can account for temporal mismatch between organic indicators and border crossing data.

Some studies have also attempted to blend multiple organic data sources or blend organic sources with administrative or traditional survey data. This is crucial for reducing the biases and gaps that may exist when using a single organic data sources. Singh and colleagues used topic buzz indicators from tweets and regional newspapers and conflict event counts in different locations in Iraq to predict internal displacement in Iraq [8]. Organic data have also been blended with official statistics to predict planned migration between European countries [11]. Ref. [54] combines Twitter data with the American Community Survey to predict internal migration flows between states within the US. While these studies highlight the viability of organic data for predicting displacement, they do not compare the quality of the different indicators to assess which ones may be more beneficial when a crisis emerges.

Finally, we note that while many studies report successful usage of organic data to predict migration movements, matters of sample selection remain salient in the literature. All sources of organic data are biased (e.g., due to skewed user characteristics that make social media and other organic sources non-representative samples). One possible explanation for the success of organic data, despite biased samples, is that migration scholars use them to measure when conditions are changing. That is, variation is caused by events or conditions that affect many members of society. In such situations, the temporal variation might be captured sufficiently by one or more groups.

Data

In this section, we describe the different data sources used in our analysis. We begin by describing the border crossing data we use as ground truth forced movement data. We then describe the organic predictor data, and finally, show the relationships between the different organic data sources. Note that the study period is February 1, 2022 to September 1, 2022, and that all data sources are captured daily.

Border crossing data

We use daily border crossing data from Ukraine to its three neighboring countries obtained from UNHCR. Such fine-grain data are typically not available during acute migration crises, and as such we have a rare opportunity to investigate at a high level of temporal granularity the relationship between various indicators and an (imperfect) measure of “ground truth”. Figure 1 shows the border crossings for the first 6 months of the conflict, beginning on February 24, 2022. These figures include Ukrainian citizens and third country nationals, but do not count citizens of the destination country returning home from Ukraine. We see that the movement is characterized by a hump at the beginning of the crisis in all observed destination countries followed by a period of more constant, stable movement. A close look at the stable period reveals significant daily seasonality. Figure 4 shows that crossings peak during the middle of the week and taper off during the weekend. Given this variation, a model representing these data should include a day-of-week effect.

Predictor data

We evaluate indicators developed from five different organic data sources: Twitter/X, Google Trends, online newspapers, Armed Conflict Location and Event Data Project (ACLED), and Global Database of Events, Language, and Tone (GDELT). We construct topic buzz indicators from Twitter/X, Google Trends and online newspapers. From GDELT, we capture sentiment, and from ACLED, we capture events and fatality counts.

More specifically, to compute topic buzz, we construct seven topics, three that capture insecurity (Health Insecurity, Food Insecurity, Physical Insecurity), three that capture context (Environmental Context, Economic Context, Political Context), and finally a direct measure of intentions to leave the country (Flee or Travel). Each topic is defined as a collection of relevant words and phrases that were manually identified by social scientists on our research team and are presented in Appendix B. To construct each topic indicator, we searched each post or newspaper article for the topic words and incremented the daily count correspoding to a given topic each time one of those words was found in the text. These topics were created in English and then translated into Ukrainian. The Ukrainian words were used to determine the topic buzz from Ukrainian language text. We use a dictionary-based approach for topics to maintain a high level of interpretability, and use Ukrainian language posts and articles because previous literature shows that organic signals in the primary language of the origin country are more informative than English text [8].

Twitter/X

We use Twitter as an example of a social media data source. For this study we use the Twitter Decahose API (Application Programming Interface), a daily ten percent sample of posts/tweets.Footnote 6 Ideally, we would use tweets authored only in Ukraine. However, given that images, not posts were geotagged when the crisis began, we focused on posts with location mentions. We filter tweets to include only Ukrainian language content and further filter them to include only those tweets that mention a major city in Ukraine, thereby focusing on only spatially relevant discussion. Subsequently, for each topic, we count the number of posts on a given day containing any of that topic’s words. This gives us an integer-valued indicator for each day for each topic.Footnote 7 Therefore, our Twitter indicators are measuring the topical content of tweets, without reporting on the sentiment or emotional components.Footnote 8

In our analysis, we have no expectation that Twitter users represent a random sample of society. Ref. [55] reported that Twitter users are more likely to be from densely populated areas, be male and have race differing from the general population in a complex, spatially-dependent manner across the United States (US). On the other hand, [56] found globally that slightly more Twitter users were female on the basis of the genders traditionally associated with self-reported first names. More recently, a Pew study [57] found that US Twitter users are disproportionately young, educated and Democrat leaning. Furthermore, we do not expect all Twitter users to author tweets with the same frequency; indeed [57] found that 10% of users generate 80% of tweets. More generally, [58] argue that minorities feel unwelcome on big content sites and consequently post less. The Decahose sample represents a random sample of (public) tweets. However, because of differences in tweeting frequency across various groups, it will not be a random sample of Twitter users. In 2021, 60% of Ukrainians were registered on at least one social network [59, 60], and of those aged 18–29 13% use Twitter [61, 62]. We also pause to mention that using Twitter/X (or any social media platform) means that we only capture the conversations of those who want to share information. For our methods to be successful, it is not necessary for everyone to post about and event or discuss a topic. But it is necessary for a sufficient number to post so that the changing dynamics at a location can be captured. In other words, we do not need everyone posting about a bridge that is not passable. But we do need a higher number discussing the bridge than would discuss it if nothing had happened to it.

Google trends

What people search for may also give insight into different movement patterns. Therefore, we construct variables using Google Trends [44].Footnote 9 Google Trends returns an intensity score for each provided search term, indicating the relative frequency of searches for that term in the region of interest, in our case Ukraine.Footnote 10 For each topic, we conduct a Google Trends search on each of its words individually. Google Trends allows at most five words to be searched simultaneously, so we followed a procedure of keeping one word common in subsequent searches and use the intensities associated with the common words to make the intensities across searches comparable. After downloading the intensities of each individual word, we define the intensity associated with a topic for a given day as the average of the word intensities associated with that day.Footnote 11

Local and regional newspapers

Given war has been at the forefront of text-based news media in Ukraine, we use the NewsAPIFootnote 12 to collect newspaper articles from 23 local and regional Ukrainian news outlets.Footnote 13 We deploy the same sets of topics from our Twitter/X analysis on the Ukrainian language newspaper articles. Again, we construct daily variables defined as the number of articles containing any word associated with a specific topic. These variables capture regional dynamics rather than those associated with individual people, thereby offering insight about subgroups that may or may not use social media or search engines. We acknowledge that different news sources capture different types of content and have their own agendas and leanings (see, e.g. [64, 65]). This can impact the indicators we create. For this reason, we use many different news outlets when building the indicators.

ACLED

The ACLED database aggregates reports of armed conflicts throughout the world,Footnote 14 and their Ukraine dataset goes back to January 1, 2018. According to their website,Footnote 15 ACLED’s analysts use traditional and new media sources as well as local partners to manually curate a dataset of significant conflict events. For this study, we use their Ukraine dataset. While ACLED categorizes events, e.g. an armed conflict or a nonviolent protest, for this study we simply counted the daily number of events of any type reported by ACLED in Ukraine to construct an event count variable. Because ACLED also provides daily estimates of fatalities associated with each event, we constructed a fatalities indicator by summing over all events identified in Ukraine. We expect that many of the armed conflict events recorded by ACLED will be associated with people’s decisions to leave Ukraine.

GDELT

GDELT [67] is an aggregator of newspaper and blog data.Footnote 16 In contrast to ACLED, GDELT automatically maps information in news articles into discrete events. Their data collection includes articles from 1979 to the present. Each event has various computed characteristics, including negative or positive tonality, whether it is violent or not, and ratings based on conflict study indicators. For the purposes of our study, we collect GDELT data associated with each Ukrainian administrative region (i.e. oblast) and separately record the positive and negative sentiments on a given day. These values are generated by GDELT using the text associated with the different events. We do not construct them from the text directly.Footnote 17

We then aggregate them by summing across all of Ukraine to produce two indicators for each day, a positive sentiment indicator and a negative sentiment indicator. Since GDELT aggregates both over text representing higher quality information in newspapers and potentially lower quality information in blogs, there is the potential for varying quality in the sentiment indicators. However, because all these news sources are read, both high and low quality information sources are impacting the perception of those who may decide to move, making it interesting to compare against our manually curated newspaper data set.

The correlation between organic variables; blue indicates a positive correlation, red indicates a negative correlation, and higher shade intensity indicates a stronger correlation. Each block corresponds to a given data source, labeled on the x-axis, and each row corresponds to a given topic labeled on the y-axis

Relationships across datasets

Figure 2 shows the correlation structure of the daily indicators we created from each of our data sources during the study period. Along the diagonal squares, which indicate to what extent different indicators from the same data source correlate with each other, we find that newspaper indicators are highly positively correlated with one another, as are the Twitter/X indicators to a lesser extent. This suggests that these data sources are primarily measuring a single latent construct. GDELT’s tone indicators are also positively correlated with each other. The only exception here is Google Trends, which correlate negatively and positively with different indicators, suggesting the possibility of distinct phenomena being measured. For example, as physical threat terms increase, economic terms decrease. Notably, the correlation between datasets is generally low, with larger correlations observed between Google Trends and GDELT and ACLED.

The Indicator Construction and Assessment Framework. A Organic data are collected from publicly available sources and organic indicators are calculated. B Topics and sentiment/events for a given day are computed before being combined and shifted by the “laggregator”. C A statistical model is fitted on the basis of the laggregated predictor data and the ground truth data. D The best fitting lags and moving average windows are selected in a supervised manner. E The predictions are then evaluated using held out, future ground truth data

Indicator construction and evaluation

When conducting a study based on digital indicators, it is important to consider the target population, research question, unit of analysis, and coding strategy [68]. We target digital data platforms for the purpose of understanding if there are digital traces that have a relationship to migration behavior. Our unit of analysis is aggregated to the daily level, and as detailed below, we use a topic-modeling coding strategy. These considerations are especially important for platforms that do not provide researchers with detailed, individual level data, e.g. Google trends.

To assess different indicators constructed from organic data streams, we use a framework that incorporates both indicator construction and assessment of the strength of the indicator for prediction. Figure 3 shows the main components of the framework. We begin by identifying the public organic data sources that may contain useful direct or indirect information about whether people are being forced to move from one location to another. For this study, most of our sources contain textual data, e.g. Google Search terms, local newspapers, and tweets. Therefore, the framework incorporates a data translation step that helps researchers define a mapping from the raw text to variables that represent aggregated counts at temporal and spatial scales of interest. This corresponds to the first layer of indicator construction identified by [69], namely that of mapping from unstructured data into structured data.

We focus on three types of variables from the organic data: topic buzz, event occurrences, and perception variables [24]. We use different data sources to capture these different types of indicators (see Sect. 3). Topic buzz attempts to measure the amount of discussion about specific direct and indirect drivers of forced movement; examples of direct drivers include topics that capture discussion about fleeing or moving from one place to another. On the other hand, discussions about specific insecurities (health, physical, and food) or discussions about traditional macro- or-meso drivers of forced displacement such as the economy, politics, or the environment are indirect drivers. Topic buzz variables are constructed by building topic lists containing words and phrases that are indicative of a specific theme of interest.Footnote 18 Event variables measure the count of events about a specific topic in a location of interest. For example, it may be the number of bombs or the number of terrorist attacks. Some event data sources also provide the number of fatalities that result from violence-related events. Finally, perception variables measure the perceived threat of individuals during conflict. We capture perception by measuring the tone/sentiment or the stance of the conversation about insecurities or traditional macro- or meso-drivers.

There are two related concerns when topic and perception indicators are constructed in this way: (1) who is in the sample, and (2) who is not? When focusing on who is in the sample, if there is global interest in the crisis, counts may be inflated in a biased way. For example, [7] reported significant global interest in social media during the Arab Spring which might lead to overrepresentation of the digital imprints of individuals directly participating in that event for a naive indicator. While there are a number of ways to mitigate bias, restricting the language analyzed and/or capturing data from the regions of interest are two strategies to help reduce such bias. With respect to considering who is missing from the sample, this is not always clear. For example, in some regions of the world, where men have more access to the internet, women are more likely to be missing.Footnote 19 For these reasons, it is important to use a variety of different data sources during an emerging crisis, including newspapers that are likely to reflect community or regional topics and perceptions. By using different sources we can attempt access to a sufficient number of different subpopulations in a region.

One way to assess a variable’s value for measuring displacement would be to calculate its correlation with a measure of migration flow. But such a simple approach would miss indicators that, for example, may not perfectly temporally align with migrant flows but may still contain important information. To this end, after our indicators are constructed, we use the “Laggregator” (described in the next section) to create a data set with a range of lags, leads, and temporal granularities for each location. After identifying the best lag/aggregation combination for each indicator, we test between indicators without assuming a uniform lag and aggregation. This corresponds to [69]’s second layer of indicator construction: creating statistically relevant information. Finally, we evaluate predictions using test data, i.e. ground truth data, to determine the strength of each indicator for forecasting migration. This prepares them to serve as part of [69]’s third layer of indicator construction: when it actually augments traditional variables. We note that it is more likely that a combination of the signals will be the best predictors of emerging movement. However, before building a complete model, this type of assessment can help us learn about the individual relationship between each organic signal and movement.

Statistical methods

We begin this section by describing the time-aggregation technique we used on the raw indicator variables and then presenting the statistical model used to assess the predictive value of each indicator.

Lagging and aggregation

Given the significant variability in geography and transport infrastructure, people leaving their homes from different areas of a country will take different amounts of time to reach a border. For example, we expect someone leaving from a city near the Polish border to take less time to cross the Ukraine–Poland land border relative to someone fleeing from a western town near the Russian border. However, if these two hypothetical individuals make up their mind to leave on the same day, we expect to see an internet trail simultaneously.

To account for this variability, we propose a lagging and aggregation, or laggregation. The lagging accounts for the fact that it will take some amount of time for anyone deciding to leave Ukraine to actually cross the border, and the aggregation accounts for the variability in time such a trip takes depending on the individual’s circumstances.

Aggregation is implemented via a moving average of size \(2K+1\), where \(K\in \{1,\ldots ,30\}\) is the radius of the window, meaning our upper bound for averaging is 2 months, which we expect to be more than required to complete a trip from inside Ukraine to one of its borders. The lagging is implemented simply by shifting the dates of the organic variables relative to the border crossing data. Previous studies have explored lagging or aggregating digital trails to predict standard migratory flows (e.g. [13]), but they determined the amount of aggregation without reference to ground truth data. By contrast, we estimate the laggregation parameters in a supervised manner via enumeration of all possible models and selection of the model with the greatest \(r^2\). This approach allows us to conduct a detailed sensitivity analysis for parameter selection. Lag was estimated in a similar manner (considering lags 23 days ahead or behind and windows of size up to 30).Footnote 20 We thus can estimate the lag or lead of a given indicator with respect to forced migration by fitting a univariate model and estimating those parameters. In addition to the theoretical motivation, this is empirically important for smoothing because changes in some indicators appeared to be more sudden than changes in the border crossing data.

Time series model

In general, we expect variation in the amount of migration occurring on a given day of the week. Figure 4 reveals such day-of-week seasonality in the three countries we study. We incorporate this into our model by introducing day-of-week effect variables that take a value 1 on a given day of the week and 0 otherwise.

While a robust statistical model that incorporates all the relevant variables at different temporal and spatial resolutions is important for predicting forced displacement, to assess the value of each individual indicator, we use straightforward linear models. Specifically, we deploy a linear regression model that takes into account weekly seasonality as well as a laggregated indicator. We model the order of magnitude of border crossings as a linear combination of our predictors and the seasonality variables with a Gaussian error structure.

Ultimately, our model is expressed as follows:

where \(y_t\) represents the outflow on day t in units of individuals, \(\beta _0\) is the intercept term, \(\beta _x\) is the slope and \(z_t = \frac{1}{2W+1} \sum _{\omega =-W}^W x_{t+\tau +\omega }\) the lagged and aggregated organic variable, where \(\tau\) denotes the lag in days and W is the moving average window radius. Finally, \(\delta\) represents the day of week effect. We estimate the parameters via least squares on the log scale.

Preliminary experiments revealed that using Poisson or Negative Binomial generalized linear models, which may be viewed conceptually as more appropriate since they explicitly model the count nature of the data, lead to very similar predictions and conclusions as using a Gaussian linear model on the log of flow (likely due to the large number of border crossings). As a result, we use the simpler log-Gaussian model. In addition, while our focus is on univariate models, the inclusion of additional variables presents no practical or conceptual difficulties as long as they are required to share the laggregation parameters \(\tau\) and W. Otherwise, the search space increases exponentially.

Results

Experimental design

We implement our methodology in Python using the statsmodels [71] package.Footnote 21 The Python code implementing these analyses and producing the figures is available online.Footnote 22

In addition to evaluating retrospective fit of organic variables to migratory flows, we conduct prediction experiments which consist of using organic variables to nowcast [72,73,74] flow given some initial period of observed flow and organic data. Specifically, we gave the model described in Sect. 5 data on flows and organic variables up to a given point in time and then asked it to predict the flow for the following 3 weeks with the organic data available. We conduct one prediction exercise in the early stage of the crisis where the border crossings were quite dynamic (up to March 10 2022), as well as one during the steady state of the crisis beginning on May 25th. Because the steady state lasts longer than the acute period, our prediction task for this state consists of three separate test periods which we average to determine predictive performance. At each of these time points we measure the out-of-sample mean absolute error for univariate models for each indicator in terms of predictive mean squared error in the original scale of the data.

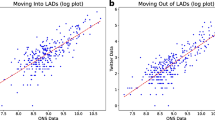

Best fit analysis

We first present results evaluating retrospective fit of the best performing indicator for each organic data source. Figure 5 shows five panels. In each panel, the solid blue line represents border crossings from Ukraine to Hungary, Slovakia, or Poland. Each panel also shows the best fitting univariate model for each organic data source (dashed orange line). The best fitting variable from Twitter/X is the economic indicator which to some extent captures the qualitative behavior of the data with a small hump at the beginning of the conflict, followed by a more stationary period. However, it is not quite able to match the relative size of the hump and stable periods. Overall, the Google Trend travel indicator has the best fits, which, with appropriate lag and aggregation, is able to fit the data qualitatively (\(r^2=0.86\)). Among the ACLED events and fatalities indicators, the fatalities indicator has a better fit, but still fails to capture both phases of the conflict. News data, on the other hand, are best represented by the Health insecurity topic but are not quite able to provide as good a fit with this simple model.

Lead lag analysis

We next examine the lags and window sizes estimated for each data source and indicator. The left subfigure of Fig. 6 shows the best fitting lag for each indicator. Each dot represents an indicator. The color of the dot shows which organic data source the indicator was generated from. The x-axis shows the estimated lag/lead for each indicator and the y-axis shows the fit of the model. We see that the Google Trends indicators tend to be leading and generally have good fits, with the travel indicator having an \(r^2\) above 0.8 and a lead of approximately eight days. The negative tone indicator of GDELT also in this quadrant seems to have a slightly bigger lag. The best fitting lagging variable is the economic Twitter/X indicator which has an \(r^2\) just under 0.8 and seems to lag behind the crossing data by approximately one week. The right subfigure shows the best window sizes for each indicator. The travel indicator with the best fit has a window size of approximately 2 weeks and the window sizes with best fit for most indicators varies between 5 days and 3 weeks. However, a number of indicators have their window estimated at the edge of the allowable values. These indicators generally had mediocre fit and may not be accurately capturing the relationship. They are could be more useful if they are combined with other indicators.

Prediction Exercise. The left figure shows the total outflow from Ukraine during the first few months of the crisis, as well as the test periods given by vertical lines (see text). The right figure shows the normalized predictive performance during each period. The color of the dot shows the type of indicator, and a dashed line connects the same indicator at the two time points. Lower is better

Predictive analysis

We next present the results of the prediction analysis. Recall, one prediction task is during the more volatile early stage of the war and the second is during the steady-state period. We provide indicator and border crossing data up to a given date as training data, and evaluate look-ahead mean squared prediction error for the following three weeks, providing indicators but not border crossing data. The first prediction task gives training data going up to March 10th and measures how well our organic variables perform at the beginning of a crisis. The second prediction task consists of three prediction periods during the stationary phase of the crisis. We combine the predictive error of all three periods to estimate the performance of an indicator in this second stage. Because predictions are much harder to make in the beginning phase of a crisis than during the chronic phase, we standardize the errors to have mean 0 and variance 1 at each of time point. The right panel of Fig. 7 shows the top five from each of these two prediction exercises. We notice that four of the trends indicators are are among the best predictors, as is the health insecurity indicator from our news data set, the GDELT negative tone indicator, and the ACLED fatalities indicator. In contrast, there is no Twitter/X indicator in the list of best predictors. As a reference point, we also show the predictive performance of the predictor-free strategy of simply propagating the previous week’s mean border crossing numbers forward for the next 3 weeks. Although the strategy is in the middle of the pack during the initial dynamic period, it dominates all model-based strategies in the subsequent period, highlighting that as forced displacement becomes more constant, using previous movement data (if available) are more useful than using organic data.

Discussion

This article builds on the nascent literature on forced migration forecasting and nowcasting during an emerging crisis. Specifically, we considered individual indicators constructed from different organic data sources to identify which best capture forced migration. Focusing on the Ukraine conflict, we find that certain organic variables, in particular those constructed from Google Trends data related to travel and insecurities, provide good fits to border crossing data and can serve as leading indicators for predictive analysis. We also found that during the early stage of the crisis, the News-Health, GDELT-Negative-Sentiment, and ACLED-Fatalities indicators had less prediction error when compared to the naive strategy of propagating forward previous migration levels in Fig. 7. The Twitter indicators did not seem to correlate with the particular ground truth data we used in this analysis at our level of granularity.

These findings suggest that organic variables, especially those constructed from Google Trends, may serve as indirect measures of displacement in an emerging crisis. As we discussed in Sect. 3.2, there is an ongoing discussion in the migration analytics community as to the usefulness of Google Trends. In this context, we found it has strong potential in forecasting forced displacement, as Google trends indicators, when properly shifted and aggregated, had the best fit to the data and qualitatively followed the trajectory of the migration. An advantage of Google Trends indicators is that they are unobscured by the changing dynamics of discourse (Twitter/X) or journalism (GDELT, Local Newspapers); instead they simply aggregate individual searches that track the ‘intent to leave.’ None of our other data sources were able to provide as good a fit over the entire data period.

Notably, since weekly estimates of migration are not available for many historical forced migration crises, and certainly most crises are not as closely monitored by the international community as is the Ukraine crisis, we see much potential for organic data in such data-limited contexts. We found that all the indicators spiked during the initial period of the conflict. However, some of the signals were much weaker than others. While our statistical modeling structure was not able to exploit this to provide a quantitatively accurate estimate as was the case with Google Trends indicators, it may still be useful to have a sense that something is amiss qualitatively.

It is not surprising that the ACLED death count indicator performed best as a lagging indicator. It was likely more surprising that the ACLED events indicator was not as strong. On the one hand, this refugee crisis is ultimately being driven by the armed combat that ACLED records. But most of the individuals leaving the country may not be displaced by a particular occurrence of conflict, but rather by the threat of conflict [42]. This may explain the observed minimal correlation. The GDELT negative tone indicator was stronger than positive tone. Separating sentiment into two indicators was important for seeing this relationship. Still, alignment to forced displacement was not as strong as other indicators, perhaps this is expected since armed conflict is likely to generate large volumes of press coverage with negative sentiment.

It is also interesting that the News-Health Insecurity indicator was the most predictive during the early phase, but not at all so during the late phase. Looking at the Health insecurity news fit (Figure A.4 of the Appendix), we see that the news indicators are fairly flat, reflecting the intense and continuous media interest during the first six months of war. In this study, it is possible that news-based indicators were of limited utility as they may have been reporting on protracted fighting in locations from which most people had already fled. Previous research has shown the utility of news-based indicators in situations where worldwide attention was not as prolonged.

Another important finding was that organic variables were more useful during the early, dynamic part of crisis. Once the displacement flow is more constant, using the previous week’s mean is a reasonable measure. We suspect that this pattern may be present in some other crises as well.

The massive escalation of Russian involvement in Ukraine in February 2022 led to the largest European refugee crisis since the Second World War and forced many to suddenly leave their homes. As of writing, the conflict continues with no clear end in sight. The next phase of the war is highly uncertain and, although border crossings appear stationary for the moment, it is possible that a Russian escalation of the invasion or successful Ukrainian counteroffensives may lead to another wave of movement (though it is difficult to imagine one of the same initial scale). In such an event we will continue to monitor organic variables to see if they are informative and continue to capture the changing dynamics of this large-scale forced displacement.

Limitations

We view the limitations of this study as falling primarily into two categories: first, those related to restrictions of the scope of the migration data and processes we studied, and second, those related to the generation and deployment of our organic data indicators. We begin by examining the former.

Our analysis is limited to border crossings from Ukraine to Slovakia, Hungary and Poland, but the Ukrainian refugee exodus has been a truly global phenomenon, and it would be interesting to perform a similar analysis while extending the scope of destinations considered. Quite plausibly, the digital imprints of someone intending to move around the world are very different from those of someone intending to only just get on the other side of a land border. For instance, we might expect searches for plane tickets to correlate more with global forced migration, and searches for road directions or bus routes to correlate with forced migration somewhere closer. Conversely, our analysis treated Ukraine as monolithic, but actually, the experiences of refugees leaving the country will differ vastly depending on exactly where in Ukraine they originate. The heaviest fighting has been in the East of the country, and, especially later in the crisis, leaving home was a much riskier affair than it would be for a resident of Kyiv. This presents two main challenges for future work. Firstly, we will need to increase the spatial resolution of our organic indicators to account for these more subtle variations in migration experience. And second, we need to develop a model which can simultaneously consider data about internal migration, giving us an understanding of what is happening within Ukraine itself, with international data of refugees actually leaving Ukraine. Developing this model is complicated by the fact that internal and external data are collected by two different United Nations entities (IOM and UNHCR, respectively), which report data with different levels of temporal and spatial granularity and use different methodologies for data collection. Thus, such a unified model represents a distinct challenge. Additionally, we relied on a source of fine resolution ground truth data in order to form and evaluate our indicators. However, organic data are much more widely available than ground truth data, and a pertinent question is how to form indicators using only the former. One approach would be to study the commonalities between different contexts that do have ground truth data available to determine if there are any general features that could be exploited even without access to ground truth. We leave this as future work.

Now we turn to the second class of limitations, those pertaining to our organic indicators, which we further subcategorize into firstly the restrictions of our current study and secondly constraints on the possibility of expanding this analytic approach to other migration contexts.

For this study, we considered only a subset of the possible data sources in constructing our organic indicators. Namely, qualitatively different platforms, such as Facebook, Telegram, mobile phone data, emails, and LinkedIn to name a few, could contain information complementary to the data sources we studied. It would furthermore be interesting to compare indicators developed from Twitter/X and Google Trends to competing platforms (Mastadon, Bluesky, Telegram, etc. in the case of Twitter/X and Yandex in the case of Google).

Additionally there are limitations to our text analytics. We used a dictionary approach for its interpretability. We acknowledge that this predicates our analysis on a particular instantiation of each of our topics via our choice of keywords. Both a different choice of topic keywords or text preprocessing could lead to changes in the analysis [75]. Considering less interpretable techniques for determining topics such as topic modeling and use of large language models can help alleviate some of these concerns [76]. Finally, there are limitations related to the availability and quality of corporate data indicators. The data may only be made available for a limited time, or may be subject to variation in how the indicators are defined or computed. For example, in Google trends, researchers have found variation in results returned for identical queries at different times [77, 78].

We now consider what difficulties may be encountered in porting this analytic framework to migration beyond the 2022 Ukrainian context. An exciting implication of our results is that it may be possible to gain higher resolution insight into a migration crisis that is receiving comparatively little coverage from the international community. This is because organic data would still be available in such situations, although potentially different platforms would be most salient in different countries. But future work is necessary to determine the best temporal and spatial mapping between different organic indicators and flow for different regions of the world. Furthermore, in this study, we found that a single indicator was suitable to adequately model the migration process. But in other case studies, this is unlikely to be so. Therefore, it is important to consider multiple indicators in a single model. While straightforward from an implementation perspective, the paucity of data on migration means it is statistically challenging to achieve this without fear of overfitting. Future work will need to determine how best to combine several indicators in this framework without incurring unacceptable estimation risk. And finally, the utility of internet data in predicting migration from a given country is predicated on that country having sufficient internet penetration. In Ukraine, internet use has steadily grown from 2008 to 2019 (22–71%) [59, 79, 80], and there is evidence of significant uptick since then due to the COVID-19 pandemic [59, 60]. This places Ukraine above the 2022 global average of 66% penetration [81].

Conclusions

Reliable measures and indicators of human movements are hardest to obtain in exactly those situations in which they are most needed: armed conflict, natural disaster or political collapse. This article has shown the potential to use organic data to fill this gap when deployed as part of an analytic framework that can account for the temporal discrepancies between when organic data are generated in the course of migration and when that migration is actually tallied by officials (via laggregation). Through a case study on the Ukraine crisis, we found that Google Trends data pertaining to intentions to travel mapped very closely to border crossings recorded to Slovakia, Hungary and Poland from Ukraine. We also found that other organic data sources, namely newspapers, GDELT, ACLED, and Twitter/X capture some of the dynamics, but were not as strong individually. Combined within a single model, they may be more quantitatively informative.

We are optimistic about the potential to use similar analyses to gain insight into forced migration crises across the world, particularly those for which there are fewer available official statistics. Important future work remains in determining how to calibrate indices for different crises, as well as which indices to consult in different regions of the world. There is also great potential for using this approach in the early stages of a migration crisis, but equally important future work in determining how to estimate model parameters when limited training data are available and the data are of coarse granularity. While there is still much future work, this research is an important step to effectively using organic data when other data are not available.

While we certainly recognize that each individual refugee crises deserves special consideration as to which platforms would be most appropriate, we think that there are some general conclusions suggested by this case study. First, Ukraine is a country with relatively elevated internet penetration, and one in which the majority those fleeing did not fear the government of their country. We suspect in circumstances where internet access is limited or where individuals fleeing are monitored by a government, those displaced may be less amenable to using Google search, reducing the value of the Google trends indicator that was so prominent in this study. And while use of a given platform by migrants is a necessary condition for that platform’s usefulness, so too is data availability. While Google trends offers a public API, the same is not true of all search engines, and in certain contexts it may be required to use a platform with a secondary or tertiary market position due to data availability concerns.

Ethical considerations

We know that using organic data, particularly social media data raises different ethical concerns. To mitigate these concerns, all our indicators are created using publicly available datasets. Even though our data are all from public sources, we store the raw data on a protected Cloud infrastructure that only researchers on our team can access. Our goal is to protect individual privacy and follow FAIR guidelines [82]. For our analysis, we only use daily aggregate counts, further protecting individual privacy. To support reproducability and privacy, we share the daily aggregate counts (as opposed to the raw data) in a data repository. The border crossing data we use are simply counts per day and do contain reference to any individual information. This study was conducted under Georgetown University IRB STUDY00000579.

Data availability

The processed organic indicators will be available for download at https://portals.mdi.georgetown.edu/public.

Notes

The Gravity Modeling framework [4] is commonly used for such analysis.

The selection of these countries is based on available ground truth border crossing data at a daily temporal scale.

We gratefully acknowledge UNHCR for these data.

A small number of accounts have enabled the geotagging feature at the account level. However, collecting individual account information is costly when the analysis unit is posts.

This study was conducted prior to the change in ownership of Twitter/X. The Decahose API is no longer available to the academic community and new APIs have been made available, though at greater cost. Future work is needed to assess the viability of using these data after the ownership change for forced migration analytics.

We also considered using the number of users who post and find that both numbers are highly correlated, so either would be appropriate for this analysis.

For the current study, we only focus on topic buzz from Twitter/X. Other signals may be interesting to consider in future work, including sentiment, emotion, and stance.

We use the Python package pytrends—https://pypi.org/project/pytrends/.

Google trends allows users to go back to 2004, though with the caveat that the data collection processes have changed during that time. According to their user interface, the most recent such change was implemented on January 1st, 2022, meaning that our study was conducted entirely within a single published process. Due to privacy concerns, this platform will not report searches made by few people, which is more likely to influence data produced by minority groups. Furthermore, a search for e.g. plane tickets is predicated both on access to the internet and the prospect of affording plane tickets, potentially leading to an overrepresentation of those of higher socioeconomic status.

Compared to GDELT (see Sect. 3.2), which has a much larger collection of papers and blogs that are not rated on reliability, this selection of newspapers allows us to use reliable and popular sources.

Data downloaded from their website [66].

We used Google’s BigQuery to access GDELT’s database.

Topics can be built using unsupervised or semi-supervised algorithms or manually. We discuss our approach in Sect. 3.2.

Ref. [70] find this to be the case in Saudi Arabia.

These numbers were empirically determined. Predictive studies in Sect. 6.4 show that this does not result in overfitting.

We use the OLS function to estimate the log of the number of border crossings on the day of the week along with the lagged and aggregator organic variables.

References

UNHCR. (2023). Five takeaways from the 2022 UNHCR global trend report. https://www.unrefugees.org/news/five-takeaways-from-the-2022-unhcr-global-trends-report/?SF_monthly=701Rf000004DYWGIA4 &SF_onetime=701Rf000004DeQRIA0. Accessed 11 July 2023.

Bauer, T., & Zimmermann, K. (1998). Causes of international migration: A survey. In C. Gorter, P. Nijkamp, & J. Poot (Eds.), Crossing borders: Regional and urban perspectives on international migration (Vol. 1, pp. 95–127). Routledge.

Betts, A. (2013). Survival migration: Failed governance and the crisis of displacement. Ithaca: Cornell University Press.

Anderson, J. E. (2011). The gravity model. Annual Revista de Economia, 3(1), 133–160.

UNHCR (2023). Ukraine Situation Flash Update 54. https://data.unhcr.org/en/documents/details/103134. Accessed 11 July 2023.

Zagheni, E., Garimella, V. R. K., Weber, I., & State, B. (2014). Inferring international and internal migration patterns from twitter data. In Proceedings of the 23rd international conference on world wide web (pp. 439–444).

Tufekci, Z. (2014). Big questions for social media big data: Representativeness, validity and other methodological pitfalls. In Proceedings of the international AAAI conference on web and social media, vol. 8 (pp. 505–514).

Singh, L., Wahedi, L., Wang, Y., Wei, Y., Kirov, C., Martin, S., Donato, K., Liu, Y., & Kawintiranon, K. (2019). Blending noisy social media signals with traditional movement variables to predict forced migration. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining. KDD ’19 (pp. 1975–1983). New York, NY: Association for Computing Machinery.

Zagheni, E., Weber, I., & Gummadi, K. (2017). Leveraging facebook’s advertising platform to monitor stocks of migrants. Population and Development Review, 43(4), 721–734.

Alexander, M., Polimis, K., & Zagheni, E. (2019). The impact of hurricane maria on out-migration from Puerto Rico: Evidence from facebook data. Population and Development Review, 45(3), 617–630.

Rampazzo, F., Bijak, J., Vitali, A., Weber, I., & Zagheni, E. (2021). A framework for estimating migrant stocks using digital traces and survey data: An application in the United Kingdom. Demography, 58(6), 2193–2218.

Vicéns-Feliberty, M. A., & Ricketts, C. F. (2016). An analysis of Puerto Rican interest to migrate to the United States using Google Trends. The Journal of Developing Areas, 50(2), 411–430.

Avramescu, A., & Wiśniowski, A. (2021). Now-casting Romanian migration into the United Kingdom by using Google search engine data. Demographic Research, 45, 1219–1254.

Golenvaux, N., Alvarez, P. G., Kiossou, H. S., & Schaus, P. (2020). An LSTM approach to forecast migration using Google Trends. arXiv preprint arXiv:2005.09902

Donato, K. M., Singh, L., Arab, A., Jacobs, E., & Post, D. (2022). Misinformation about covid-19 and Venezuelan migration: Trends in twitter conversation during a pandemic. Harvard Data Science Review. https://doi.org/10.1162/99608f92.a4d9a7c7

Wycoff, N., Arab, A., Donato, K., Singh, L., Kawintiranon, K., Liu, Y., & Jacobs, E. (2023). Forecasting Ukrainian refugee flows with organic data sources. International Migration Review. https://doi.org/10.1177/019791832312039

Fujimura, C. (2023). The sad legacy of Russian orphans lies behind Putin’s troops kidnapping tens of thousands of ukrainian children. Fortune. https://fortune.com/europe/2023/07/07/why-is-russia-kidnapping-ukrainian-children-vladimir-putin-soviet-book-author/. Accessed 11 July 2023.

Aslany, M., Carling, J., Mjelva, M. B., & Sommerfelt, T. (2021). Systematic review of determinants of migration aspirations. Changes, 1, 18.

De Jong, G. F. (2000). Expectations, gender, and norms in migration decision-making. Population Studies, 54(3), 307–319.

Ravenstein, E. G. (1889). The laws of migration. Journal of the Royal Statistical Society, 52(2), 241–305.

Wanner, P. (2020). Collection and analysis of quantitative data in the field of migration. past trends, current status and future prospects. NCCR On the Move Working Paper.

Liu, M.-M., Creighton, M. J., Riosmena, F., & Muñoz, P. B. (2016). Prospects for the comparative study of international migration using quasi-longitudinal micro-data. Demographic Research, 35, 745.

Cesare, N., Lee, H., McCormick, T., Spiro, E., & Zagheni, E. (2018). Promises and pitfalls of using digital traces for demographic research. Demography, 55(5), 1979–1999.

Martin, S., & Singh, L. (2019). Big data and early warning of displacement (pp. 129–150).https://doi.org/10.2307/j.ctvpr7r1q.9

Martin, S. F., & Singh, L. (2022). Environmental change and human mobility: Opportunities and challenges of big data. International Migration, 61(5), 29–46.

Rampazzo, F., Rango, M., & Weber, I. (2022). New migration data: Challenges and opportunities. In E. Bertoni, M. Fontana, L. Gabrielli, S. Signorelli, & M. Vespe (Eds.), Handbook of computational social science for policy (pp. 345–359). Springer.

Lazer, D., Kennedy, R., King, G., & Vespignani, A. (2014). The parable of google flu: Traps in big data analysis. Science, 343(6176), 1203–1205.

Taylor, J., & Pagliari, C. (2018). Mining social media data: How are research sponsors and researchers addressing the ethical challenges? Research Ethics, 14(2), 1–39.

Singh, L., Polyzou, A., Wang, Y., Farr, J., & Gresenz, C. R. (2020). Social media data-our ethical conundrum. In A quarterly bulletin of the IEEE computer society technical committee on database engineering. http://sites.computer.org/debull/A20dec/p23.pdf.

Donato, K., Jacobs, E., Singh, L.O., Arab, A., & Wycoff, N. (2024). Using organic data in migration research. To appear in International Migration Review.

State, B., Rodriguez, M., Helbing, D., & Zagheni, E. (2014). Migration of professionals to the us: Evidence from linkedin data. In Social informatics: 6th International conference, SocInfo 2014, Barcelona, Spain, November 11–13, 2014. Proceedings (Vol. 6, pp. 531–543). Springer.

Spyratos, S., Vespe, M., Natale, F., Ingmar, W., Zagheni, E., & Rango, M. (2018). Migration data using social media: a European perspective. EUR 29273 EN. JRC112310. Luxembourg: Publications Office of the European Union. https://doi.org/10.2760/964282.

Spyratos, S., Vespe, M., Natale, F., Weber, I., Zagheni, E., & Rango, M. (2019). Quantifying international human mobility patterns using facebook network data. PLoS ONE, 14(10), 0224134.

Blumenstock, J. E. (2012). Inferring patterns of internal migration from mobile phone call records: evidence from rwanda. Information Technology for Development, 18(2), 107–125.

Zagheni, E., & Weber, I. (2012). You are where you e-mail: using e-mail data to estimate international migration rates. In Association for Computing Machinery, ACM (Ed.), Proceedings of ACM WebSci 2012, June 22–24, 2012, Evanston, Illinois, USA (pp. 497–506). New York, NY: ACM. ISBN 978-1-4503-0267-8.

Mazzoli, M., Diechtiareff, B., Tugores, A., Wives, W., Adler, N., Colet, P., & Ramasco, J. J. (2020). Migrant mobility flows characterized with digital data. PLoS ONE, 15(3), 0230264.

Yildiz, D., Wiśniowski, A., Abel, G. J., Weber, I., Zagheni, E., Gendronneau, C., et al. (2024). Integrating traditional and social media data to predict bilateral migrant stocks in the European Union. International Migration Review. https://doi.org/10.1177/01979183241249969.

Leasure, D. R., Kashyap, R., Rampazzo, F., Dooley, C. A., Elbers, B., Bondarenko, M., Verhagen, M., Frey, A., Yan, J., & Akimova, E. T. (2023). Nowcasting daily population displacement in Ukraine through social media advertising data. Population and Development Review, 49(2), 231–254.

Rampazzo, F., Bijak, J., Vitali, A., Weber, I., & Zagheni, E. (2024). Assessing timely migration trends through digital traces: a case study of the UK before Brexit. International Migration Review. https://doi.org/10.1177/01979183241247009.

Carammia, M., Iacus, S. M., & Wilkin, T. (2022). Forecasting asylum-related migration flows with machine learning and data at scale. Scientific Reports, 12(1), 1457.

Wei, Y., Singh, L., Buttler, D., & Gallagher, B. (2018). Using semantic graphs to detect overlapping target events and story lines from newspaper articles. International Journal of Data Science and Analytics, 5, 41–60.

Collmann, J., Blake, J., Bridgeland, D., Kinne, L., Yossinger, N. S., Dillon, R., Martin, S., & Zou, K. (2016). Measuring the potential for mass displacement in menacing contexts. Journal of Refugee Studies, 29(3), 273–294.

Jun, S.-P., Yoo, H. S., & Choi, S. (2018). Ten years of research change using Google trends: From the perspective of big data utilizations and applications. Technological Forecasting and Social Change, 130, 69–87.

Stephens-Davidowitz, S., & Varian, H. (2014). A hands-on guide to Google data. Technical Report. https://people.ischool.berkeley.edu/~hal/Papers/2015/primer.pdf. Accessed 11 July 2023.

Wanner, P. (2021). How well can we estimate immigration trends using Google data? Quality & Quantity, 55(4), 1181–1202.

Fantazzini, D., Pushchelenko, J., Mironenkov, A., & Kurbatskii, A. (2021). Forecasting internal migration in Russia using google trends: Evidence from Moscow and Saint Petersburg. Forecasting, 3(4), 774–803.

Lif, O. (2016). Migration in the internet age: The 21st century: When refugees went online. LUP Student Papers. https://lup.lub.lu.se/student-papers/search/publication/8873467. Accessed 11 July 2023.

Wladyka, D. K. (2017). Queries to google search as predictors of migration flows from Latin America to Spain. Journal of Population and Social Studies [JPSS], 25(4), 312–327.

Jurić, T. (2022). Forecasting migration and integration trends using digital demography—A case study of emigration flows from Croatia to Austria and Germany. Comparative Southeast European Studies, 70(1), 125–152.

Böhme, M. H., Gröger, A., & Stöhr, T. (2020). Searching for a better life: Predicting international migration with online search keywords. Journal of Development Economics, 142, 102347.

Qi, H., & Bircan, T. (2023). Can google trends predict asylum-seekers’ destination choices? EPJ Data Science, 12(1), 41.

Leysen, B., & Verhaeghe, P.-P. (2023). Searching for migration: estimating Japanese migration to Europe with google trends data. Quality & Quantity, 57(5), 4603–4631.

Adema, J., Guha, M., & Adema, J.A.H. (2022). Following the online trail of Ukrainian refugees through google trends. In CESifo forum (Vol. 23, pp. 62–66). IFO Institut-Leibniz-Institut für Wirtschaftsforschung an der.

Hsiao, Y., Fiorio, L., Wakefield, J., & Zagheni, E. (2023). Modeling the bias of digital data: An approach to combining digital with official statistics to estimate and predict migration trends. Sociological Methods & Research. https://doi.org/10.1177/00491241221140144

Mislove, A., Lehmann, S., Ahn, Y. -Y., Onnela, J. -P., & Rosenquist, J. (2021). Understanding the demographics of Twitter users. In Proceedings of the International AAAI Conference on Web and Social Media, vol. 5, no. 1 (pp. 554–557). https://doi.org/10.1609/icwsm.v5i1.14168.

Sloan, L., Morgan, J., Housley, W., Williams, M., Edwards, A., Burnap, P., & Rana, O. (2013). Knowing the tweeters: Deriving sociologically relevant demographics from twitter. Sociological Research Online, 18(3), 74–84.

Wojcik, S., & Hughes, A. (2019). Sizing up twitter users. PEW Research Center, 24, 1–23.

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM conference on fairness, accountability, and transparency (pp. 610–623).

Kuzyk, O. (2023). Internet tools in marketing communications of agribusinesses in Ukraine. Universal Journal of Agricultural Research, 11(2), 217–229.

Kondratenko, M. (2021). During the quarantine year, the number of Ukrainians on social networks increased by seven million. https://p.dw.com/p/3qkD3. Accessed 11 July 2023.

Statista: Most popular social media by age Ukraine 2021 (2022). https://www.statista.com/statistics/1256255/most-popular-social-media-by-age-ukraine/. Accessed 11 July 2023.

Racek, D., Davidson, B. I., Thurner, P. W., Zhu, X. X., & Kauermann, G. (2024). The Russian war in Ukraine increased Ukrainian language use on social media. Communications Psychology, 2(1), 1.

Types of contextual advertising and its role in business (2018). https://webprofit.com.ua/vydy-kontekstnoyi-reklamy-ta-yiyi-rol-dlya-biznesu/. Accessed 11 July 2023.

Starkey, G. (2017). Balance and bias in journalism: Representation, regulation and democracy. Basingstoke: Bloomsbury Publishing.

Ward, S.J. (2019). Objectivity and bias in journalism. In Oxford research encyclopedia of communication

ACLED. (2023). FAQs: ACLED sourcing methodology. https://acleddata.com/knowledge-base/faqs-acled-sourcing-methodology/. Accessed 11 July 2023.

Leetaru, K., & Schrodt, P. A. (2013). GDELT: Global data on events, location, and tone. ISA annual convention. http://data.gdeltproject.org/documentation/ISA.2013.GDELT.pdf. Accessed 11 July 2023.

Amaya, A., Bach, R., Keusch, F., & Kreuter, F. (2021). New data sources in social science research: Things to know before working with reddit data. Social Science Computer Review, 39(5), 943–960.

Salvatore, C., Biffignandi, S., & Bianchi, A. (2024). Augmenting business statistics information by combining traditional data with textual data: A composite indicator approach. METRON. https://doi.org/10.1007/s40300-023-00261-4

Simsim, M. T. (2011). Internet usage and user preferences in Saudi Arabia. Journal of King Saud University-Engineering Sciences, 23(2), 101–107.

Seabold, S., & Perktold, J. (2010). Statsmodels: econometric and statistical modeling with Python. In Walt, S., & Millman, J. (Eds.), Proceedings of the 9th Python in science conference (pp. 92–96). https://doi.org/10.25080/Majora-92bf1922-011.

Giannone, D., Reichlin, L., & Small, D. (2008). Nowcasting: The real-time informational content of macroeconomic data. Journal of Monetary Economics, 55(4), 665–676.

Ettredge, M., Gerdes, J., & Karuga, G. (2005). Using web-based search data to predict macroeconomic statistics. Communications of the ACM, 48(11), 87–92.

Choi, H., & Varian, H. (2009). Predicting the present with google trends (technical report). Google.

Uysal, A. K., & Gunal, S. (2014). The impact of preprocessing on text classification. Information Processing & Management, 50(1), 104–112.

Churchill, R., & Singh, L. (2022). The evolution of topic modeling. ACM Computing Surveys, 54(10s), 1–35.

Cebrián, E., & Domenech, J. (2023). Is google trends a quality data source? Applied Economics Letters, 30(6), 811–815.

Cebrián, E., & Domenech, J. (2024). Addressing google trends inconsistencies. Technological Forecasting and Social Change, 202, 123318.

Association, U. I. (2021). Data of the research on the Internet audience of Ukraine. https://inau.ua/proekty/doslidzhennya-internet-audytoriyi. Accessed 11 July 2023.

UIA has completed a study of the online advertising market in 2020 and announces the results (2021). https://inau.ua/news/novyny-inau/inau-zavershyla-doslidzhennya-rynku-internet-reklamy-za-pidsumkamy-2020-roku-i. Accessed 11 July 2023.

Union, I. T. (2022). Two-thirds of the world’s population uses the Internet, but 2.7 billion people remain offline. https://www.itu.int/itu-d/reports/statistics/2022/11/24/ff22-internet-use/. Accessed 11 July 2023.

Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J.-W., Silva Santos, L. B., & Bourne, P. E. (2016). The fair guiding principles for scientific data management and stewardship. Scientific data, 3(1), 1–9.

Acknowledgements

We are grateful to Georgetown University’s Institute for the Study of International Migration, Massive Data Institute, and Office for Global Engagement, as well as Project Liberty for the generous support of this project.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Fits for all indicators

A.1 Fits for Twitter/X variables

A.2 Fits for ACLED variables

A.3 fits for GDELT variables

A.4 Fits for newspaper variables

A.5 Fits for google trends variables

Appendix B: Topic keywords