Abstract

This paper presents a classification system (risk Co-De model) based on a theoretical model that combines psychosocial processes of risk perception, including denial, moral disengagement, and psychological distance, with the aim of classifying social media posts automatically, using machine learning algorithms. The risk Co-De model proposes four macro-categories that include nine micro-categories defining the stance towards risk, ranging from Consciousness to Denial (Co-De). To assess its effectiveness, a total of 2381 Italian tweets related to risk events (such as the Covid-19 pandemic and climate change) were manually annotated by four experts according to the risk Co-De model, creating a training set. Each category was then explored to assess its peculiarity by detecting co-occurrences and observing prototypical tweets classified as a whole. Finally, machine learning algorithms for classification (Support Vector Machine and Random Forest) were trained starting from a text chunks x (multilevel) features matrix. The Support Vector Machine model trained on the four macro-categories achieved an overall accuracy of 86% and a macro-average F1 score of 0.85, indicating good performance. The application of the risk Co-De model addresses the challenge of automatically identifying psychosocial processes in natural language, contributing to the understanding of the human approach to risk and informing tailored communication strategies.

Introduction

The human approach to risk is a complex and multifaceted issue. Psychosocial research has shown that to gain a better understanding of how it functions, it is crucial to investigate the psychological and cultural factors involved, such as individual characteristics, emotions, feelings, psychological distance, denial, and moral disengagement [1,2,3,4,5,6]. These factors include psychosocial processes that can be expressed in natural language as a means of communication [7] and provide insight into how individuals perceive and respond to risks. This assumption underlies, for example, sentiment analysis in its many applications [8], the identification of psychosocial processes beyond emotions and emotional states, such as morality in Linguistic Inquiry and Word Count [9, 10], and even the identification of behavior patterns [11]. The massive source of data available on social media presents an opportunity to study how these processes are manifested in language in a worldwide dataset.

However, automatically identifying psychosocial processes in text is very complex and remains an open challenge because human language is ambiguous and context-dependent. Two main approaches exist for this purpose: dictionary-based methods and machine learning-based methods. Dictionary-based methods are the most widely used in the field of social psychology. In these methods, the presence or absence of a process is given by an index based on the occurrence of words from a list that together define the process. Although they have proven useful, their limitations arise from an inability to capture the nuances of language use in context, which leads to a coarse-grained identification of psychosocial processes. In contrast, machine learning-based methods have demonstrated the ability to overcome these limitations [12]; however, they require significant domain-specific knowledge and effort to develop a training set allowing automatic identification of the processes [11, 13].
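As a minimal illustration of the dictionary-based approach just described, the Python sketch below computes the share of tokens in a text that match a word list. The word list `DENIAL_WORDS` and the function `dictionary_index` are hypothetical and serve only as an example; they are not taken from any validated dictionary or from this study.

```python
import re

# Hypothetical word list for illustration only; not a validated dictionary.
DENIAL_WORDS = {"hoax", "nonsense", "lie", "fake"}

def dictionary_index(text, lexicon):
    """Return the proportion of tokens in `text` that appear in `lexicon`."""
    tokens = re.findall(r"\w+", text.lower())
    if not tokens:
        return 0.0
    hits = sum(1 for tok in tokens if tok in lexicon)
    return hits / len(tokens)

denying = dictionary_index("coronavirus is all a hoax", DENIAL_WORDS)
not_denying = dictionary_index("calling the pandemic a hoax is dangerous", DENIAL_WORDS)
print(denying, not_denying)
```

Both toy sentences receive a non-zero index, although only the first denies the risk. This is the kind of context insensitivity that motivates the machine learning-based alternative.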

This paper proposes a model that operationalizes psychosocial processes, defining stance towards risk using machine learning to capture their specific features, and examines how they are expressed in natural language, providing a training set for their automatic classification on social media. The model categories for text classification focus on moral disengagement, distancing, and denial in relation to environmental and health risks (climate change and the Covid-19 pandemic).

Psychosocial processes related to stance towards risk

The following paragraphs describe certain psychosocial processes which, according to pertinent literature, are relevant to risk perception and provide a more or less effective coping mechanism in the face of risk events. They are processes of moral disengagement, psychological distance (construal level theory), and states of denial. The decision to focus on these psychosocial processes over others—linked nonetheless to perception of risk and willingness to act—is based on the fact that these in particular concern the role of individuals and their acknowledgement or otherwise (at different levels) of the risk in question (summarized here as risk stance). This enables their joint consideration and comparison.

Processes of moral disengagement

Moral disengagement [5] is grounded in the agentic perspective of social cognitive theory. It is referenced as the mechanism through which people selectively disengage their moral self-sanctions from their detrimental behavior. In general, it defines the role of the individual with respect to an event or an issue, in this instance, a risk. According to the author, various processes work together at both individual and social levels, and can be identified based on where the moral self-sanctions are focused: on behavior, agency, or effect [5]. When the focus is on behavior, these processes are moral, social, and economic justification, used to attribute honorable purposes to practices regarded as harmful but in pursuit of a just end (from a moral, social or economic point of view). Similarly, the process of advantageous comparison is one of framing an issue as the lesser of two evils, making it not only acceptable but even morally right. When the focus is on agency, the process involved is the displacement of responsibility, that is to say obscuring or understating the true agentive role in causing harm. In this case the agentive role is attributed to someone else. Finally, focusing on the effects, moral disengagement is accomplished by downplaying or discrediting evidence of harm (disregarding, minimizing, and disputing detrimental effects).

As regards risk, mechanisms of moral disengagement have been studied in the context of terrorism or environmental sustainability [5], and of risk behaviors related to health [14, 15]. Several studies show that engaging in these processes leads to justifying one's ineffective risk management behavior, for example not observing prevention rules during the Covid-19 pandemic [15] or being unwilling to make changes that could mitigate the harm inflicted on future generations in the case of environmentally degrading practices [5].

Construal-level theory of psychological distance

The process of psychological distance formulated within the Construal Level Theory [16, 17] is used to understand how people represent and manage a concept that cannot be experienced in the here and now. The reference point for psychological distance “is the self in the here and now, and the different ways in which an object might be removed from that point—in time, in space, in social distance, and in hypotheticality—constitute different distance dimensions” [17]. According to the authors, the process of abstracting an object or event (or a risk, in this instance) involves both a simplification of and an attribution of new meanings to that object or event, related to the cognitive processes involved in the selfsame process (e.g., categorization). The ability to abstract and thus imagine an object or event is needed if one is to manage it, and thus orient oneself toward prediction, evaluation, and behavior [16]. Thus, imagining an object as more or less distant determines one’s position relative to the object in terms of both space and disposition and, consequently, coping with it. In this sense, psychological distance is related to risk stance. Four dimensions of psychological distance are identified, related to each other but considered as distinct [17]. The temporal dimension refers to when an event occurs (near or remote in time). The spatial dimension refers to where it occurs (near or remote in space); the social dimension, to whom it occurs (to me, or someone else from a social group to which I do or do not belong). Finally, the hypothetical dimension refers to whether or not an event occurs. So, the shorter the distance, the more likely its imagined occurrence; the greater the distance, the less likely.

The link between psychological distance, risk perception, and willingness to act when faced with such risk has been widely studied. Many studies have focused on climate change [18], others more recently on the Covid-19 pandemic [19]. Research has shown that perceiving a risk as distant can be linked to low concern and readiness to act [4, 19]. Recent experimental studies have shown the importance of reducing psychological distance to promote effective responses and concern over a risk. For example, Jones et al. [20] show that climate communications framed in such a way as to reduce psychological distance can increase public engagement with climate change. According to Chu & Yang [21], distance framing influenced the readiness of people to evaluate—and react to—climate change messages: distancing the impact of a phenomenon would increase ideological polarization, whereas reducing the distance has the opposite effect.

States of denial

According to Cohen [22, 23] the process of denial involves cognitive, emotional, and action components. Cohen classifies denial into three types. The first is literal denial, where something did not happen or is not true. This form of denial aligns with the dictionary definition and corresponds to non-acknowledgment of facts. The second form of denial is interpretive, where the facts are acknowledged but given a different meaning from what others perceive. The third type of denial, implicatory denial, acknowledges the facts but denies psychological, political, or moral responsibility, which implies an inability to do anything about the situation.

The above-mentioned forms of denial have been referenced in literature—by the same author [23], and others—mainly in the context of studies on atrocities and war crimes. However, the denial construct is studied extensively in relation to risk in the field of social psychology, demonstrating perhaps one of the best known and most intuitive antecedents to ineffective coping in the face of risk events. Risk denial has been extensively studied in relation to climate change [6, 24], health [25, 26] and more generally, science denial [27,28,29].

Toward an integrated model of psychosocial processes defining stance towards risk

Stance classification and detection is a frequently explored area in Natural Language Processing, often entailing the categorization of attitudes towards the object of study as positive, negative, or sometimes neutral. However, the operationalization of stance often varies across different research studies, resulting in a lack of consistency [30]. Currently, there is no unique, standard method for operationalizing this construct, and none that incorporates a psychosocial perspective. Consequently, using stance classification systems designed for other purposes in a specific study can pose challenges. This underscores the importance of clarifying the theoretical perspective guiding the operationalization of this construct. As previously explained, in this paper, the construct of risk stance refers to the role of individuals and their acknowledgment or lack thereof of the risk in question. It encompasses the psychosocial processes described above, namely moral disengagement, psychological distance (construal level theory), and states of denial, as explained below.

All the above-mentioned psychosocial processes were investigated in the cited studies, mainly using validated measurement scales for the construct. There are only a few attempts to identify some of these processes in language. For example, Snefjella and Kuperman [31] show that abstractness (references to geographically more distant cities, chronologically more remote times in the past or future) can be a proxy for geographic and temporal distance in language. However, there is no systematic guidance on how to identify all these processes in relation to risk in natural language. The first step in pursuing this purpose is to create a reference model that defines the categories pertaining to psychosocial processes.

In short, all of the above-mentioned psychosocial processes: (I) concern the acknowledgement or otherwise of the risk and (II) one’s own role with respect to the risk; (III) are indicative of willingness to act when faced with a risk event; (IV) can be expressed in natural language. Some processes may be applicable conceptually to multiple theories, and thus overlap. For example, hypothetical distance (i.e., the question of whether or not a risk exists) overlaps with literal denial. Similarly, the attitudes of disregarding, minimizing, and disputing detrimental effects that typify moral disengagement can also be considered expressions of interpretive denial; likewise, displacement of responsibility and implicatory denial overlap, since both question the responsibility of the individual in dealing with the risk.

To create an integrated model that allows the identification of stance towards risk from a psychosocial perspective, the processes indicated above have been systematized, highlighting where they overlap and the specific features of each one (Table 1). In the model, a stance towards risk reflects whether the risk is acknowledged and what role the individual takes with respect to it; stances are conceptualized as positions on a continuum from consciousness to denial (Co-De).

The model is composed of four macro-categories containing nine micro-categories (i.e., the psychosocial processes). At the first pole of the continuum, there is Consciousness, i.e., acknowledgement that the risk in question exists. Next in sequence is the Justification macro-category. This category implies acknowledgment of the risk, but the disinclination to deal with it (i.e., one’s own role) is justified through three different processes (a unique label was applied where there are several overlapping processes from different theories, as summarized in Table 1): Moral, Social, and Economic Justification; Advantageous Comparison; and Displacement of Responsibility. The third macro-category, Distance, implies acknowledgement of a risk, albeit one that does not concern the person or related groups since it is considered remote in terms of time (Temporal Distance), space (Geographical Distance), or affected population/group (Social Distance). At the opposite end of the continuum to Consciousness, finally, there is Denial, which indicates lack of awareness (i.e., no reason to take up any risk-aware stance) and comprises two micro-categories: Interpretive Denial, in which the risk is defined differently from “what is generally perceived”; and Literal Denial, in which the very existence of the risk is called into question.

Testing the risk Co-De model

The fundamental concept underlying this study is that if psychosocial processes can be articulated using language, then they can be discerned in natural language. The challenge lies in determining whether it is feasible to automate the identification process that an expert would employ. If the risk Co-De model suggested here is effective, it is expected that distinct categories (namely, the psychosocial processes) can be distinguished through the use of machine learning, because these processes/categories should be characterized by specific combinations of linguistic features that machine learning algorithms can identify automatically. To test the effectiveness of the model in automatically identifying the proposed categories (i.e., the psychosocial processes), the steps outlined below were followed (Fig. 1).

First, it was necessary to create a training set, that is to say a set of texts (tweets in this case) pertaining to the issue in question, namely risk, and labeled according to the proposed categories. To this end, tweets pertaining to Covid-19 and climate change were collected (Phase 1, Fig. 1), selected, and labeled manually according to the risk Co-De model categories (Phase 2, Fig. 1). Then, each category was explored to observe its distinctive features as reflected in co-occurrences of the most used nouns, adjectives, and (sometimes) verbs, and in terms of prototypical tweets classified as a whole (Phase 3b, Fig. 1). Finally, starting from the training set, machine learning algorithms were trained to test the effectiveness of the risk Co-De model, i.e., whether on the basis of the previously classified tweets it would be possible to identify recurring linguistic features that would in turn allow automatic classification according to the risk Co-De model. To do this, the corpus constituting the training set was divided into training and validation sets to see whether different algorithms were able to make accurate predictions with respect to the pre-labeled categories (Phase 3a, Fig. 1).

Data collection

To create the training set, Italian tweets pertaining to risk were gathered using the rtweet R package [32]. Given that the model is concerned with categorizing stance towards risk, the risks that were prominent in online discourse at the time of collection were identified, namely the Covid-19 pandemic and climate change, and tweets were selected and collected based on pertinent hashtags that were trending. In a multi-risk perspective, various risks coexist and interact within the same context [33], and therefore are not necessarily separated in online discourse. In fact, even though tweets were collected using hashtags related to climate change, some of these tweets also discuss Covid-19 (and vice versa) and, sometimes, the Russian–Ukrainian conflict. All of these risks coexisted in online discourse in Italy at the time of collection although, as is evident from the marked difference in the number of tweets collected, the attention paid to them was not uniform.

The process of Twitter scraping resulted in 216,624 tweets related to the Covid-19 pandemic and 6087 tweets regarding climate change, based on their respective hashtags. The Covid-19 tweets covered two time periods (end of January/February 2020 and March/April 2022), while climate change tweets spanned the period March–September 2022. From this initial collection, a subset of 29,304 tweets was selected for manual labeling. Given the disparity in the number of tweets collected for each topic, the sample comprised all 6087 of the climate change tweets, plus a randomly selected subset of 23,217 tweets related to the Covid-19 pandemic. This selected sample was then analyzed and categorized manually according to the risk Co-De model, resulting in a final corpus of 2381 classified tweets (8.1% of the analyzed sample).
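The sample composition and the reported share can be checked with a few lines of arithmetic. The Python snippet below uses only the figures stated in the paragraph above; the variable names are illustrative.

```python
# Figures reported in the text.
climate_tweets = 6087     # all climate change tweets
covid_sampled = 23217     # randomly selected Covid-19 tweets
final_corpus = 2381       # tweets retained after manual labeling

labeled_sample = climate_tweets + covid_sampled
share = final_corpus / labeled_sample

print(labeled_sample)            # size of the sample sent to manual labeling
print(round(100 * share, 1))     # percentage of the sample that was classified
```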

Analyses

Manual labeling and exploration of the categories

The sub-sample of collected tweets was divided into four parts and analyzed manually by four independent expert judges (social and clinical psychologists instructed by the author; see Footnote 1). Their task was to label each tweet with the appropriate category from the risk Co-De model, discarding those that were not pertinent. If any of the psychosocial processes could be identified in a tweet, the entire tweet was labeled accordingly; otherwise it was excluded. Tweets were assigned for analysis in several successive stages, to keep track of the ratio of analyzed to labeled tweets and to balance the categories found as far as possible. During the analysis process, the judges discussed how the categories are expressed in language until they reached agreement. The full set of labeled tweets (2813) was then rechecked manually by the author and further debated. Among them, potentially ambiguous categorizations (432 tweets) were discarded, and 274 tweets were re-discussed and relabeled by mutual agreement. Finally, a total of 2381 tweets were classified, divided into the macro- and micro-categories as shown in Table 2.

The labeled tweets constituting the training set corpus were cleaned by removing links and punctuation and normalized by replacing uppercase with lowercase letters. The entire corpus comprises 67,025 word tokens (total number of words) and 10,685 word types (words as they appear in the dictionary). The average number of word tokens per tweet is 28.15.
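The cleaning and counting steps can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the procedure actually used (the study worked in R): the regular expressions, the whitespace tokenization, and the function names `clean_tweet` and `corpus_stats` are all assumptions, and the two example tweets are toy English sentences rather than corpus data.

```python
import re

def clean_tweet(text):
    """Remove links and punctuation, then lowercase (the steps named in the text)."""
    text = re.sub(r"https?://\S+", " ", text)   # drop links
    text = re.sub(r"[^\w\s]", " ", text)        # drop punctuation (assumed rule)
    return re.sub(r"\s+", " ", text).strip().lower()

def corpus_stats(tweets):
    """Return (word tokens, word types, mean tokens per tweet) after cleaning."""
    tokenized = [clean_tweet(t).split() for t in tweets]
    tokens = [tok for doc in tokenized for tok in doc]
    n_tokens = len(tokens)
    n_types = len(set(tokens))                  # distinct word forms
    mean_len = n_tokens / len(tweets) if tweets else 0.0
    return n_tokens, n_types, mean_len

# Toy examples, not data from the corpus.
sample = ["Covid-19 kills the elderly. https://example.org/x",
          "There is NO climate crisis!"]
print([clean_tweet(t) for t in sample])
print(corpus_stats(sample))
```

With the figures reported above, 67,025 tokens over 2381 tweets gives the stated mean of about 28.15 tokens per tweet.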

To understand what the categories include, and to present the results of the manual analysis, the most recurrent nouns and adjectives and the associations between them were identified. The R package UDPipe [34] was applied to lemmatize the texts. Part of Speech (PoS) was tagged through the pre-trained model PoSTWITA (2022-11-15 v2.11) [35], built on Universal Dependencies treebanks and made up of Italian annotated tweets. Then co-occurrences of lemmas (adjectives, nouns, and, where explanatory, verbs) were identified and represented. Co-occurrences make it possible to see how relevant lemmas (selected on the basis of the PoS tagging) are used in the same sentence or next to each other. By observing relevant lemmas in their context of use (i.e., the whole tweet, as in concordance analysis) it was possible to display certain relevant tweets classified in each category.
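To make the notion of co-occurrence concrete, the Python sketch below counts pairs of lemmas that appear together in the same text. It assumes that lemmatization and PoS tagging have already been done (for example by UDPipe) and that each text is available as a list of (lemma, PoS) pairs. The data, the function name `cooccurrences`, and the choice to count at the level of the whole tweet rather than the sentence or adjacent words are simplifying assumptions.

```python
from collections import Counter
from itertools import combinations

KEEP_POS = {"NOUN", "ADJ"}   # verbs could be added where they are explanatory

def cooccurrences(tagged_docs, keep_pos=KEEP_POS):
    """Count unordered pairs of distinct kept lemmas found in the same document."""
    counts = Counter()
    for doc in tagged_docs:
        lemmas = sorted({lemma for lemma, pos in doc if pos in keep_pos})
        counts.update(combinations(lemmas, 2))
    return counts

# Hypothetical (lemma, PoS) pairs standing in for tagger output.
docs = [
    [("government", "NOUN"), ("criminal", "ADJ"), ("lie", "VERB"), ("hoax", "NOUN")],
    [("hoax", "NOUN"), ("government", "NOUN"), ("say", "VERB")],
]
pairs = cooccurrences(docs)
print(pairs.most_common(3))
```

Sorting the lemmas before pairing keeps each pair in a single canonical order, so ("government", "hoax") is counted once per text regardless of word order.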

Model training

Starting from the training set corpus (cleaned and normalized, but not lemmatized), the training procedures designed to test the risk Co-De model were performed. The training runs are presented in detail only for the four macro-categories, but were also conducted for the micro-categories (see Footnote 2). However, the micro-categories still contain too few classified texts to conduct reliable analyses. The procedure followed was the same.

The analyses carried out in this paper start from a vectorial representation of texts commonly used in bag-of-words approaches, that is to say, a numerical representation of a set of text documents commonly beginning with a document-term matrix (DTM). In this representation, each document is embodied as a row of the matrix, and each unique term present in all documents constitutes a column. The representation obtained from the DTM allows comparison of documents based on common words they contain, as in the case of co-occurrences, calculated to explore categories. Starting from more complex matrices, e.g., by replacing the columns (i.e., the terms) with other text features, such as linguistic features, further representations of texts can be obtained. To this end, before employing machine learning techniques to test the effectiveness of the risk Co-De model, the corpus constituting the training set was turned into a document-feature matrix, as already accomplished in previous studies with the same final aim of classification through machine learning [36, 37].
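A document-term matrix of the kind described here can be built in a few lines. The Python sketch below is illustrative only: the whitespace tokenization, the function name `document_term_matrix`, and the three toy documents are assumptions.

```python
from collections import Counter

def document_term_matrix(docs):
    """Build a DTM: one row per document, one column per unique term."""
    tokenized = [doc.split() for doc in docs]
    vocab = sorted({tok for doc in tokenized for tok in doc})
    rows = []
    for doc in tokenized:
        counts = Counter(doc)
        rows.append([counts[term] for term in vocab])
    return vocab, rows

docs = ["the risk is real", "the risk is a hoax", "a hoax"]
vocab, dtm = document_term_matrix(docs)
print(vocab)
for row in dtm:
    print(row)
```

Replacing the term columns with other features, such as character n-grams, yields the more general document-feature matrix mentioned at the end of the paragraph.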

Working in R, the document representation known as Author’s Multilevel N-gram Profiles (AMNP) [37] was used, a procedure developed for authorship attribution. In this instance it is applied to the attribution of categories that constitute psychosocial processes. The underlying assumption of the procedure is, as already noted, that the corpus can be represented by a documents × features matrix (in this application, the documents are text chunks made up of tweets). The AMNP procedure combines information (i.e., features) from different linguistic levels (semantic, syntactic, morphological, phonological), taking into account the complementary nature of the information at character and word levels, and, instead of considering a single type of feature, uses a combined vector of both character and word n-grams of different sizes. This solution has proven to be very efficient compared to a single n-gram feature [37].

The aim of applying machine learning is to find out whether or not the process of abstraction employed by experts in identifying psychosocial processes in tweets can be replicated automatically. This involves identifying recurring linguistic features and their combinations in tweets that can be used to classify them automatically according to the categories of the risk Co-De model using machine learning algorithms. The underlying assumption is that if the process (i.e., the category) is expressed in language, it can be represented as a combination of linguistic features. Therefore, by representing the text as a document (text chunks)-feature matrix, machine learning can discern how this expression occurs by detecting the features and their combinations in the given categories, and then automatically identify them.

The training set corpus was divided into the four macro-categories of the risk Co-De model by merging the tweets and dividing the text into chunks of equal dimension (400 words). As tweets are particularly short and heterogeneous texts, it has been demonstrated that performance is enhanced by utilizing homogeneous sample sizes of merged tweets [37].
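The merging and chunking step can be sketched as follows in Python. The function name `chunk_by_category` is hypothetical, and the handling of a trailing remainder shorter than the chunk size is an assumption (it is dropped here); the source does not state how remainders were treated.

```python
def chunk_by_category(labeled_tweets, chunk_size=400):
    """Merge tweets per category and cut the merged text into equal-size word chunks.

    Assumption: a trailing remainder shorter than `chunk_size` is dropped.
    """
    words_by_cat = {}
    for text, category in labeled_tweets:
        words_by_cat.setdefault(category, []).extend(text.split())
    chunks = []
    for category, words in words_by_cat.items():
        n_full = len(words) // chunk_size
        for i in range(n_full):
            chunk = words[i * chunk_size:(i + 1) * chunk_size]
            chunks.append((" ".join(chunk), category))
    return chunks

# Toy data with a chunk size of 3 words instead of 400.
labeled = [("a b c d", "C"), ("e f", "C"), ("g h", "De")]
print(chunk_by_category(labeled, chunk_size=3))
```

Because tweets are merged within a category before cutting, a chunk never mixes categories, so each chunk inherits a single label.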

Accordingly, the training set corpus was divided into text chunks of 400 words, which is the unit used for identifying linguistic features. The linguistic features comprise the 400 most frequent word unigrams, the 400 most frequent word bigrams, the 400 most frequent character bigrams, and the 400 most frequent character trigrams (corresponding to the different linguistic levels). These features were extracted from the text chunks and arranged in a matrix of 163 rows (corresponding to the text chunks; see Footnote 3) and 1600 columns (representing the linguistic features). A normalized frequency was calculated for all features to avoid text length bias in subsequent calculations. Features with low variance were excluded to reduce noise in the classification process.
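The construction of the multilevel feature matrix can be sketched in Python as below. This is an illustration under stated assumptions rather than the AMNP implementation used in the study: normalization is taken to be the relative frequency within each chunk and n-gram level, the variance filter uses an assumed threshold `min_variance`, and all function names are hypothetical.

```python
from collections import Counter
from statistics import pvariance

def word_ngrams(text, n):
    words = text.split()
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]

def char_ngrams(text, n):
    return [text[i:i + n] for i in range(len(text) - n + 1)]

# The four feature levels named in the text: (extractor, n, column prefix).
LEVELS = [(word_ngrams, 1, "w1"), (word_ngrams, 2, "w2"),
          (char_ngrams, 2, "c2"), (char_ngrams, 3, "c3")]

def feature_matrix(chunks, top_k=400, min_variance=0.0):
    """Return (column names, rows) of relative n-gram frequencies per chunk."""
    columns = []
    for extract, n, prefix in LEVELS:
        per_chunk = [Counter(extract(chunk, n)) for chunk in chunks]
        total = Counter()
        for counts in per_chunk:
            total.update(counts)
        for gram, _ in total.most_common(top_k):   # most frequent in the corpus
            values = []
            for counts in per_chunk:
                size = sum(counts.values())
                values.append(counts[gram] / size if size else 0.0)
            if pvariance(values) > min_variance:   # assumed low-variance filter
                columns.append(((prefix, gram), values))
    names = [name for name, _ in columns]
    rows = [[values[i] for _, values in columns] for i in range(len(chunks))]
    return names, rows

names, rows = feature_matrix(["the risk is real", "the risk is a hoax"], top_k=5)
print(len(rows), len(names))
```

With `top_k=400` and four levels, the matrix has at most 1600 columns before the variance filter, which matches the column count reported above.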

Two machine learning algorithms for classification were then applied: Support-Vector Machines (SVM) [38, 39] and Random Forest (RF) [40, 41]. SVM (discriminative classifiers) fit a margin-maximizing hyperplane between classes. This has been shown to be less prone to overfitting and provides better generalization [12]. RF consists of a learning method based on generating a multitude of randomized and uncorrelated decision trees. It is considered a good choice for social media datasets [12]. The algorithms utilized a fivefold cross-validation technique. This involved conducting training on 4/5 of the available training set and then using the remaining 1/5 as a validation set. This process was repeated five times, with each iteration using a different set of text chunks as the validation data. The final outcome of the cross-validation procedure was determined by calculating the arithmetic mean of the test results from each of the five iterations.
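The fivefold cross-validation loop described above can be sketched as follows. To keep the example self-contained, a simple nearest-centroid classifier is used as a stand-in; it is not SVM or RF and was not used in the study. The fold assignment, the seed, and all function names are likewise assumptions, and the data are synthetic.

```python
import random

def k_fold_indices(n_items, k=5, seed=0):
    """Shuffle item indices and split them into k folds of near-equal size."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def nearest_centroid_fit(X, y):
    """Stand-in classifier: store the mean feature vector of each class."""
    sums, counts = {}, {}
    for row, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(row))
        for j, value in enumerate(row):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def nearest_centroid_predict(centroids, row):
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(row, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def cross_validate(X, y, k=5, seed=0):
    """Train on k-1 folds, validate on the held-out fold, and average accuracy.

    Requires at least k items so that no fold is empty.
    """
    accuracies = []
    for held_out in k_fold_indices(len(X), k, seed):
        held = set(held_out)
        train = [i for i in range(len(X)) if i not in held]
        model = nearest_centroid_fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(nearest_centroid_predict(model, X[i]) == y[i] for i in held_out)
        accuracies.append(correct / len(held_out))
    return sum(accuracies) / k   # arithmetic mean over the k iterations

# Synthetic, well-separated toy data: two classes of five points each.
X = [[0.01 * i, 0.0] for i in range(5)] + [[1.0 + 0.01 * i, 1.0] for i in range(5)]
y = ["C"] * 5 + ["De"] * 5
print(cross_validate(X, y))
```

Each item appears in exactly one validation fold, so every text chunk is used for validation once and for training four times.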

Results

Manual labeling and exploration of categories

The exploration of manually categorized tweets provides insight into the abstraction process employed by expert judges to identify how categories are expressed and what they encompass, as well as the characteristics that define these categories.

Consciousness

The category of consciousness primarily comprises tweets that acknowledge the existence of the risk in question, stating the situation and describing what to do (Fig. 2). For instance, a tweet stating “Deforestation in the Amazon and pollution of the sea are irreversibly changing the climate” falls under this category.

Justification

In the Justification macro-category there are tweets expressing awareness of the risk, but in a way that suggests there is no need to counter it. In the specific context of the micro-category of Moral, Social, and Economic Justification, it is argued that individuals should accept the risk (e.g., not adhering to the safety measures imposed during the pandemic crisis) to mitigate more significant problems such as the repercussions on the economy (Fig. 3). An example of a tweet classified under this micro-category is the following: “Remove all restrictions on Covid now or it will be economic carnage now that there is a war.”

In the micro-category of Advantageous Comparison, apparent disregard for a risk is justified by the existence of other problems seen as more pressing or significant (Fig. 4). An example of a tweet classified under this category is “These idiots then cry panic over the coronavirus. It would be better to control the deaths caused by pollution”, or “Italy: 0 victims per day due to coronavirus, 6 victims per day due to femicide. Open your mind, please. #coronavirus”.

In the Displacement of Responsibility micro-category, the responsibility for doing something to counter the risk is attributed to others, often politicians or, in general, the government (Fig. 5). An example of a tweet classified under this micro-category is “You dedicate a day to the earth because in reality you do nothing but tweet #EarthDay #WorstGovernment #MilitarySpending”.

Distance

In the Distance category, there are tweets in which the risk is presented as remote from the author of the tweet, in whatever sense. From a temporal perspective (Temporal Distance micro-category), the distance is expressed through statements indicating mainly that the risk is over, such as “at least Covid19 has disappeared”. In this category, the non-existence of the risk (mainly Covid-19 in this case) is expressed through specific verbs such as “end” and “decrease”, which have been maintained for clarity in the representation of co-occurrences (Fig. 6).

In the Geographical Distance micro-category, the risk is placed elsewhere, affecting someone else somewhere else, for example in China, where the first cases of Covid-19 occurred, or in Africa, in connection with migration flows, in which case the solution would be simply to close airports and seaports (Fig. 7). An example tweet classified under this category is “Covid 19 will never arrive in Italy from China.”

In the Social Distance micro-category, the risk does not concern the writer but others, belonging to different social categories, such as the elderly or people with health problems, in the case of Covid-19 (Fig. 8). Reference is made to categories considered typically or supposedly vulnerable, as in the tweet “Covid-19 kills the elderly.”

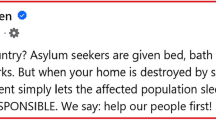

Denial

The Denial macro-category includes the micro-category of Literal Denial, reflected in expressions that describe the risk as something invented, and explicitly deny the existence of the risk in question, such as “coronavirus is all a hoax” or “there is no climate crisis”. Words such as “hoax” and “nonsense” feature in this category (Fig. 9). Several frequently occurring words in this category are highly critical of the government (e.g., “criminal government”). In fact, many tweets classified within this category assert that the government has lied about the existence of the risk in question, which—according to the tweets’ authors—does not exist.

The micro-category of Interpretive Denial contains expressions that reinterpret the situation by stating the real truth, or by minimizing or discrediting the alleged risk through euphemisms, irony, etc. For example, the event that defines the risk is actually a tool for other purposes, such as SARS-CoV-2 being described as a virus created ad hoc to distract people, or climate change used as an excuse for the convenience of others. Consequently, messages contain words such as “truth”, or give indicators of what is really being talked about, for example a (normal) “flu” (Fig. 10). Some tweets classified within this category include “Covid19, the biggest weapon of mass distraction ever created: finally, the truth”, and “Influenza mistaken for pandemic”, or “what they secretly want in accordance with the EU: expropriate homes to give them to banks, insurance companies, entities, etc. and reduce everyone to renting via the land registry and green bomb Greta Thunberg, but when will Italians wake up?”.

Model training

To verify the effectiveness of the risk Co-De model, SVM and RF algorithms were trained on the training set corpus. For the four macro-categories, Consciousness (C), Denial (De), Distance (Di), and Justification (J), the confusion matrix obtained after training showed an overall classification accuracy of 86% with SVM and 70% with RF (Table 3). The final values used for the SVM model were degree = 1, scale = 0.1 and C = 0.25. The final value used for the RF model was mtry = 1600. Training with SVM performs considerably better than with RF (Fig. 11).
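As a reading aid for the SVM settings above: the parameter names degree, scale and C correspond to the usual parameterization of a polynomial-kernel SVM. Assuming that this is the kernel used (the text does not name the implementation), and writing $c_0$ for an offset term whose value is not reported here, the kernel can be sketched as:

```latex
k(\mathbf{x}, \mathbf{x}') = \left(\text{scale} \cdot \langle \mathbf{x}, \mathbf{x}' \rangle + c_0\right)^{\text{degree}}
```

Under that assumption, degree = 1 makes the kernel an affine function of the inner product, so the resulting decision boundary is linear in the feature space, with C controlling the penalty on margin violations.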

With SVM, the proportion of correct predictions across all predictions (accuracy) is > 90% in each of the four categories (Table 4). The F1 score is the harmonic mean of precision (the share of the classifier's positive predictions that are correct) and recall (the share of all positive cases in the data that the classifier correctly identifies). The F1 score is highest for the Consciousness category and lowest for the Distance category. This is because tweets in the Distance category are often misclassified into other categories (low recall). It is worth noting that this category has the lowest number of classified tweets (and thus text chunks). With RF the results are similar, but performance is inferior (Table 5).
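The relationship between these measures can be made concrete with a minimal sketch that derives per-category precision, recall, F1 and accuracy from a confusion matrix. The matrix below is hypothetical: its counts are invented for illustration and are not the values of Table 3, and the function names are assumptions of this sketch.

```python
# Hypothetical 4x4 confusion matrix (rows = true class, columns = predicted class).
# The counts are invented for illustration and are NOT the values reported in Table 3.
LABELS = ["C", "De", "Di", "J"]
CONFUSION = [
    [45, 1, 0, 3],
    [2, 34, 1, 2],
    [3, 2, 12, 4],
    [2, 1, 1, 50],
]


def per_class_metrics(matrix, labels):
    """Return one-vs-rest precision, recall, F1 and accuracy for each class."""
    total = sum(sum(row) for row in matrix)
    metrics = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                 # true class i, predicted as another class
        fp = sum(row[i] for row in matrix) - tp  # another class, predicted as class i
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            f1 = 2 * precision * recall / (precision + recall)
        else:
            f1 = 0.0
        metrics[label] = {
            "precision": precision,
            "recall": recall,
            "f1": f1,
            "accuracy": (tp + tn) / total,
        }
    return metrics


def overall_accuracy(matrix):
    """Share of all items that lie on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


def macro_f1(metrics):
    """Unweighted mean of the per-class F1 scores."""
    return sum(m["f1"] for m in metrics.values()) / len(metrics)


if __name__ == "__main__":
    results = per_class_metrics(CONFUSION, LABELS)
    for label, values in results.items():
        print(label, {name: round(value, 3) for name, value in values.items()})
    print("overall accuracy:", round(overall_accuracy(CONFUSION), 3))
    print("macro-average F1:", round(macro_f1(results), 3))
```

In this invented matrix the smallest class (Di) has low recall but a one-vs-rest accuracy above the overall accuracy, because its many true negatives count as correct predictions. This is one way a per-category accuracy above 90% can coexist with a lower F1 score for the same category.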

The training was also conducted with the micro-categories (Footnote 4), obtaining an overall accuracy of 56% for SVM and 65% for RF, with F1 scores of 0.48 and 0.42 respectively (Footnote 5). Although the training set probably contains too few classified tweets (and thus too few text chunks) to achieve satisfactory performance with more categories, it is interesting to note that in this case the better overall accuracy is achieved with RF.

Discussion

The purpose of this study is to provide a training set for training machine learning algorithms to identify stance towards risk. It is based on a model (risk Co-De model) created from psychosocial processes related to risk perception that are described in the existing literature [5, 17, 23]. The risk Co-De model comprises four macro-categories (Consciousness; Justification; Distance; Denial); these can be divided into nine more detailed micro-categories (Consciousness; Moral, Social, and Economic Justification; Advantageous Comparison; Displacement of Responsibility; Temporal Distance; Geographical Distance; Social Distance; Interpretive Denial; Literal Denial).

The premise of the study is that if it is possible to express psychosocial processes in language, then it is possible to detect them. The risk Co-De model provides a framework for identifying specific psychosocial processes in text, based on their theoretical conceptualization. The challenge lies in determining whether the identification process that an expert would employ can be replicated automatically. Given the complexity of this process, a training set was generated to enable its implementation via machine learning. For this task, machine learning-based approaches have proven to be more efficient than dictionary-based methods [12], as they are capable of capturing the nuances of language use in context. Therefore, for the development of a new classification system and theoretical model, it was decided to proceed with the machine learning approach. The results show that when considering the four macro-categories, the performance is quite satisfactory, even compared to other studies that have used categories related to psychosocial processes [11, 13], especially with SVM (86% overall accuracy, macro-average F1 score 0.85), supporting the effectiveness and usefulness of the risk Co-De model. This result indicates that the categories can be distinguished on the basis of linguistic patterns and that these processes, expressed as a combination of linguistic features, can therefore be identified automatically in natural language. Moreover, this result highlights the reliability of the manual labelling process and of the creation of the training set.

However, the training set is still too limited to give satisfactory results when considering the micro-categories; consequently, it is not yet possible to train with all nine micro-categories. The unsatisfactory classification may be due more to the small number of tweets in some categories than to the actual content of the tweets, and any redefinition of the micro-categories can only be considered with a larger training set. The effort required to obtain labeled data for a specific domain and the complexity of the proposed categories must also be taken into account. Therefore, the overall result can be considered promising and suggests that the proposed line could be pursued further.

Some limitations emerge that must be evaluated in future developments of the study. The data collected for manual tagging is probably too general, as the ratio of items discarded to items classified is very high (only approximately 10% of the tweets were retained). However, since the aim was to create a training set, potentially ambiguous tweets were initially discarded in favor of maximizing the effectiveness of the training. In addition, the distribution of tweets across categories is not balanced. Although attention was paid to this aspect during manual labeling, in some cases it was not possible to find sufficient classifiable tweets within certain categories (e.g., Temporal Distance). This may be due to the data collection periods, which cover only certain phases of the Disaster Risk Management (DRM) cycle. Collecting data that takes the DRM factor into account could help in this respect, as different psychosocial processes may or may not be expressed depending on the phase of the DRM cycle. For example, temporal distance from the risk related to the Covid-19 pandemic may be less likely to figure during the crisis phase.
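The degree of imbalance can be quantified with a short sketch based on the macro-category text-chunk counts reported in the Notes (49 Consciousness, 54 Justification, 21 Distance, 39 Denial). The function names and the choice of summary measures are assumptions of this sketch, not part of the original analysis.

```python
# Text-chunk counts per macro-category, as reported in the Notes of this article.
MACRO_COUNTS = {
    "Consciousness": 49,
    "Justification": 54,
    "Distance": 21,
    "Denial": 39,
}


def class_shares(counts):
    """Fraction of all text chunks that falls in each category."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def majority_baseline(counts):
    """Accuracy of a trivial classifier that always predicts the largest category."""
    return max(counts.values()) / sum(counts.values())


def imbalance_ratio(counts):
    """Size of the largest category divided by the size of the smallest."""
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    for label, share in class_shares(MACRO_COUNTS).items():
        print(f"{label}: {share:.1%}")
    print(f"majority-class baseline accuracy: {majority_baseline(MACRO_COUNTS):.1%}")
    print(f"largest/smallest category ratio: {imbalance_ratio(MACRO_COUNTS):.2f}")
```

The majority-class baseline is a useful reference point when reading overall accuracy figures on an unbalanced training set.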

In addition, the dataset is unbalanced towards one type of risk despite the intention to consider a multi-risk perspective. During the data collection period, despite the evident coexistence of different risks, attention was focused more on one risk (for example, the Covid-19 pandemic compared to climate change), due both to media exposure and to its being more directly experienced [42, 43], which may influence classification. This observation also highlights another important point: the framework underlying the classification system of the risk Co-De model adopts a multi-risk approach. Consequently, the analyzed tweets do not always pertain exclusively to a single risk event, and the training dataset should encompass various interconnected topics that correspond to these risk events. However, it is important to note that the trained models have not yet been evaluated on a separate test set. This testing is essential to evaluate potential overfitting and, together with further analyses, for cross-topic validation (where the topics considered, in this case, are different risk events) [44], in alignment with the multi-risk approach that forms the basis of the risk Co-De model.
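One common way to set up cross-topic validation is a leave-one-topic-out split, in which each risk event is held out in turn as the test set. The sketch below illustrates the idea only; it is not necessarily the procedure described in [44], and the record fields ("text", "topic", "label") and example rows are hypothetical rather than the actual dataset schema.

```python
from collections import defaultdict

# Hypothetical records: the field names and rows are assumptions for
# illustration, not the actual structure of the training set.
RECORDS = [
    {"text": "chunk 1", "topic": "covid19", "label": "Consciousness"},
    {"text": "chunk 2", "topic": "covid19", "label": "Denial"},
    {"text": "chunk 3", "topic": "climate_change", "label": "Distance"},
    {"text": "chunk 4", "topic": "climate_change", "label": "Justification"},
    {"text": "chunk 5", "topic": "covid19", "label": "Justification"},
]


def leave_one_topic_out(records, topic_key="topic"):
    """Yield (held_out_topic, train_indices, test_indices), one split per topic."""
    by_topic = defaultdict(list)
    for index, record in enumerate(records):
        by_topic[record[topic_key]].append(index)
    for held_out in sorted(by_topic):
        test_indices = by_topic[held_out]
        train_indices = sorted(
            index
            for topic, indices in by_topic.items()
            if topic != held_out
            for index in indices
        )
        yield held_out, train_indices, test_indices


SPLITS = list(leave_one_topic_out(RECORDS))

if __name__ == "__main__":
    for held_out, train_indices, test_indices in SPLITS:
        print(f"held out: {held_out}, train: {train_indices}, test: {test_indices}")
```

A model that performs well only when the test topic also appears in training would be flagged by such a split, which is the kind of check the multi-risk framing calls for.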

Finally, with this type of classification, psychosocial processes are treated as mutually exclusive: each tweet is assigned to a single category, recording only the presence or absence of the corresponding process. Further developments of the study could consider the presence of different processes simultaneously in the same tweet, labeling not the entire tweet but the specific expression of the process [45]. Despite the limitations identified, the precision and expertise with which the training set was constructed have led to very promising results, which are difficult to find elsewhere in the literature and can be used for further studies.
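A span-level annotation of this kind could be represented as follows. This is only a sketch of one possible data structure: the class and function names are hypothetical, and the example tweet is invented by combining phrasings similar to those quoted in the category descriptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpanLabel:
    """A labeled character span inside a tweet (hypothetical structure)."""
    start: int
    end: int
    category: str


def span_for(text, phrase, category):
    """Build a SpanLabel for the first occurrence of `phrase` in `text`."""
    start = text.find(phrase)
    if start < 0:
        raise ValueError(f"phrase not found in text: {phrase!r}")
    return SpanLabel(start, start + len(phrase), category)


def categories_in(spans):
    """Sorted list of the distinct categories expressed in one tweet."""
    return sorted({span.category for span in spans})


# Invented example: one tweet expressing two processes at once.
TWEET = "Covid-19 kills the elderly, and anyway it is just a flu"
SPANS = [
    span_for(TWEET, "kills the elderly", "Social Distance"),
    span_for(TWEET, "just a flu", "Interpretive Denial"),
]

if __name__ == "__main__":
    for span in SPANS:
        print(span.category, "->", repr(TWEET[span.start:span.end]))
    print(categories_in(SPANS))
```

With labels attached to spans rather than to whole tweets, the same tweet can contribute to more than one category, which turns the task into multi-label classification.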

Concluding remarks

The automatic identification of psychosocial processes in natural language presents an open and highly complex challenge. Given the large volume of data available through social media, and the role of the latter in risk perception and management, such identification can be crucial for studying and understanding complex phenomena, such as the human approach to risk, as well as for informing policy and building tailored communication. This study presents a model (risk Co-De model) capable of addressing the challenge in question, albeit in the simplest of forms, with four macro-categories. With the aid of the risk Co-De model, it becomes possible to identify different stances towards risk in social media automatically, ranging from consciousness to denial, as expressed in natural language. Now that the functionality of the risk Co-De model has been demonstrated, the training set can be expanded and developed in different languages.

Data availability

Dataset is available in the Iris repository https://hdl.handle.net/11573/1679485 from the corresponding author upon request.

Change history

13 December 2023

The original version was updated due to a spelling error in the title of Table 1. The text "marco and micro" has been corrected to "macro and micro categories".

Notes

The judges were instructed on the definition of the macro and micro categories of the risk Co-De model. For each category, they were provided with general examples of how the psychosocial processes corresponding to the categories are expressed in natural language, as well as at least two examples (tweets) from the dataset. Judges were required to label the tweet if they identified the presence of the psychosocial processes corresponding to the category; in case of any doubts, the tweet was flagged and subsequently discussed with the other judges.

Since the Distance micro-categories contain too few texts to be analyzed separately, they were considered all together in both training runs, resulting in a total of 7 micro-categories.

Each text chunk retains the label information of the merged tweets it comprises. Out of 163 text chunks, 49 are labeled as Consciousness, 54 as Justification, 21 as Distance, and 39 as Denial in terms of macro categories. Regarding the micro-categories (considering the Distance micro-categories as a single category), 49 text chunks are labeled as Consciousness, 10 as Moral, Social, and Economic Justification, 19 as Advantageous Comparison, 25 as Displacement of Responsibility, 21 as Distance, 32 as Interpretive Denial, and 7 as Literal Denial.

As regards the micro-categories, the final values used for the SVM model were degree = 1, scale = 0.1 and C = 0.5, while the final value used for the RF model was mtry = 1600.

Although the average F1 score is the same for both models, the F1 scores obtained in the micro-categories show some differences. Specifically, the F1 scores in the micro-categories are more evenly distributed with SVM, while they are more polarized with RF. Both models have lower average F1 scores for categories with fewer classified tweets, but in RF the scores can be close to 0. Therefore, the SVM model may provide more consistent performance across different subcategories, while the RF model may be more sensitive to variations in data distribution.

References

Lerner, J. S., & Keltner, D. (2001). Fear, anger, and risk. Journal of Personality and Social Psychology, 81(1), 146–159. https://doi.org/10.1037/0022-3514.81.1.146

Slovic, P., Finucane, M. L., Peters, E., & MacGregor, D. G. (2004). Risk as analysis and risk as feelings: Some thoughts about affect, reason, risk, and rationality. Risk Analysis, 24(2), 311–322. https://doi.org/10.1111/j.0272-4332.2004.00433.x

Weber, E. U. (2010). What shapes perceptions of climate change? Wiley Interdisciplinary Reviews: Climate Change, 1(3), 332–342. https://doi.org/10.1002/wcc.41

Spence, A., Poortinga, W., & Pidgeon, N. (2012). The psychological distance of climate change. Risk Analysis, 32(6), 957–972. https://doi.org/10.1111/j.1539-6924.2011.01695.x

Bandura, A. (2016). Moral disengagement: How people do harm and live with themselves. Worth Publishers.

Wong-Parodi, G., & Feygina, I. (2020). Understanding and countering the motivated roots of climate change denial. Current Opinion in Environment Sustainability, 42, 60–64. https://doi.org/10.1016/j.cosust.2019.11.008

Seih, Y.-T., Beier, S., & Pennebaker, J. W. (2017). Development and examination of the linguistic category model in a computerized text analysis method. Journal of Language and Social Psychology, 36(3), 343–355. https://doi.org/10.1177/0261927X16657855

Godsay, M. (2015). The process of sentiment analysis: A study. International Journal of Computer Applications, 126(7), 26–30. https://doi.org/10.5120/ijca2015906091

Pennebaker, J. W., Francis, M. E., & Booth, R. J. (2001). Linguistic Inquiry and Word Count (LIWC): LIWC2001. Lawrence Erlbaum Associates.

Pennebaker, J. W., Booth, R. J., Boyd, R. L., & Francis, M. E. (2015). Linguistic Inquiry and Word Count: LIWC2015. Austin, TX: Pennebaker Conglomerates. Retrieved from www.LIWC.net

Fernandez, M., Piccolo, L., Maynard, D., Wippoo, M., Meili, C., & Alani, H. (2017). Pro-environmental campaigns via social media: Analysing awareness and behaviour patterns. The Journal of Web Science, 3 (2017). https://doi.org/10.34962/jws-44

Hartmann, J., Huppertz, J., Schamp, C., & Heitmann, M. (2019). Comparing automated text classification methods. International Journal of Research in Marketing, 36(1), 20–38. https://doi.org/10.1016/j.ijresmar.2018.09.009

Cheatham, S., Kummervold, P. E., Parisi, L., Lanfranchi, B., Croci, I., Comunello, F., & Gesualdo, F. (2022). Understanding the vaccine stance of Italian tweets and addressing language changes through the COVID-19 pandemic: Development and validation of a machine learning model. Frontiers in Public Health, 10, 948880. https://doi.org/10.3389/fpubh.2022.948880

Basharpoor, S., & Ahmadi, S. (2020). Predicting the tendency towards high-risk behaviors based on moral disengagement with the mediating role of difficulties in emotion regulation: A structural equation modeling. Journal of Research in Psychopathology, 1(1), 32–39. https://doi.org/10.22098/jrp.2020.1030

Maftei, A., & Holman, A.-C. (2022). Beliefs in conspiracy theories, intolerance of uncertainty, and moral disengagement during the coronavirus crisis. Ethics and Behavior, 32(1), 1–11. https://doi.org/10.1080/10508422.2020.1843171

Liberman, N., & Trope, Y. (2008). The psychology of transcending the here and now. Science, 322(5905), 1201–1205. https://doi.org/10.1126/science.1161958

Trope, Y., & Liberman, N. (2010). Construal-level theory of psychological distance. Psychological Review, 117(2), 440–463. https://doi.org/10.1037/a0018963

Maiella, R., La Malva, P., Marchetti, D., Pomarico, E., Di Crosta, A., Palumbo, R., & Verrocchio, M. C. (2020). The psychological distance and climate change: A systematic review on the mitigation and adaptation behaviors. Frontiers in Psychology, 11, 568899. https://doi.org/10.3389/fpsyg.2020.568899

Blauza, S., Heuckmann, B., Kremer, K., & Büssing, A. G. (2023). Psychological distance towards COVID-19: Geographical and hypothetical distance predict attitudes and mediate knowledge. Current Psychology, 42(10), 8632–8643. https://doi.org/10.1007/s12144-021-02415-x

Jones, C., Hine, D. W., & Marks, A. D. G. (2017). The future is now: Reducing psychological distance to increase public engagement with climate change. Risk Analysis, 37(2), 331–341. https://doi.org/10.1111/risa.12601

Chu, H., & Yang, J. Z. (2018). Taking climate change here and now–mitigating ideological polarization with psychological distance. Global Environmental Change, 53, 174–181. https://doi.org/10.1016/j.gloenvcha.2018.09.013

Cohen, S. (1993). Human rights and crimes of the state: The culture of denial. Australian and New Zealand Journal of Criminology, 26(2), 97–115. https://doi.org/10.1177/0004865893026002

Cohen, S. (2001). States of denial: Knowing about atrocities and suffering. Wiley.

Norgaard, K. M. (2011). Living in denial: Climate change, emotions, and everyday life. MIT Press. https://doi.org/10.2134/jeq2012.0004br

Kreitler, S. (1999). Denial in cancer patients. Cancer Investigation, 17(7), 514–534. https://doi.org/10.3109/07357909909032861

Peretti-Watel, P., Constance, J., Guilbert, P., Gautier, A., Beck, F., & Moatti, J.-P. (2007). Smoking too few cigarettes to be at risk? Smokers’ perceptions of risk and risk denial, a French survey. Tobacco Control, 16(5), 351–356. https://doi.org/10.1136/tc.2007.020362

Björnberg, K. E., Karlsson, M., Gilek, M., & Hansson, S. O. (2017). Climate and environmental science denial: A review of the scientific literature published in 1990–2015. Journal of Cleaner Production, 167, 229–241. https://doi.org/10.1016/j.jclepro.2017.08.066

Prot, S., & Anderson, C. A. (2019). Psychological processes underlying denial of science-based medical practices. In A. Lavorgna & A. Di Ronco (Eds.), Medical misinformation and social harm in non-science based health practices: A multidisciplinary perspective (pp. 24–37). Routledge.

Lewandowsky, S. (2021). Liberty and the pursuit of science denial. Current Opinion in Behavioral Sciences, 42, 65–69. https://doi.org/10.1016/j.cobeha.2021.02.024

Hui, L., Ng, X., & Carley, K. M. (2022). Is my stance the same as your stance? A cross validation study of stance detection datasets. Information Processing and Management, 59(6), 103070. https://doi.org/10.1016/j.ipm.2022.103070

Snefjella, B., & Kuperman, V. (2015). Concreteness and psychological distance in natural language use. Psychological Science, 26(9), 1449–1460. https://doi.org/10.1177/0956797615591771

Kearney, M. (2019). rtweet: Collecting and analyzing Twitter data. Journal of Open Source Software, 4(42), 1829. https://doi.org/10.21105/joss.01829

Garcia-Aristizabal, A., Gasparini, P., & Uhinga, G. (2015). Multi-risk assessment as a tool for decision-making. https://doi.org/10.1007/978-3-319-03982-4_7

Wijffels, J. (2020). udpipe: Tokenization, Parts of speech tagging, lemmatization and dependency parsing with the “UDPipe” “NLP” toolkit. R package version 0. Retrieved from https://cran.r-project.org/package=udpipe

Sanguinetti, M., Bosco, C., Lavelli, A., Mazzei, A., Antonelli, O., & Tamburini, F. (2018). Postwita-UD: An Italian twitter treebank in universal dependencies. In: LREC 2018-11th International Conference on Language Resources and Evaluation, pp. 1768–1775.

Cortelazzo, M. A., Gatti, F. M. T., Mikros, G. K., & Tuzzi, A. (2022). Does the century matter? Machine learning methods to attribute historical periods in an Italian literary corpus. Quantitative Approaches to Universality and Individuality in Language (pp. 25–36). De Gruyter.

Mikros, G. K., & Perifanos, K. A. (2013). Authorship attribution in Greek tweets using author’s multilevel N-gram profiles. In AAAI Spring symposium—Technical report, pp. 17–23.

Vapnik, V. N. (1995). The nature of statistical learning theory. Springer-Verlag.

Vapnik, V., Golowich, S. E., & Smola, A. (1997). Support vector method for function approximation, regression estimation, and signal processing. In M. C. Mozer, M. I. Jordan, & T. Petsche (Eds.), Advances in Neural Information Processing Systems. Cambridge, MA, pp. 281–287.

Ho, T. K. (1995). Random decision forests. In: Proceedings of the International Conference on Document Analysis and Recognition, ICDAR, Vol. 1, pp. 278–282. https://doi.org/10.1109/ICDAR.1995.598994.

Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5–32. https://doi.org/10.1023/A:1010933404324

Fuentes, R., Galeotti, M., Lanza, A., & Manzano, B. (2020). COVID-19 and climate change: A tale of two global problems. Sustainability (Switzerland), 12(20), 1–14. https://doi.org/10.3390/su12208560

Manzanedo, R. D., & Manning, P. (2020). COVID-19: Lessons for the climate change emergency. Science of the Total Environment, (January). https://doi.org/10.1016/j.scitotenv.2020.140563

Allaway, E., & Mckeown, K. (2023). Zero-shot stance detection: Paradigms and challenges. Frontiers in Artificial Intelligence, 5, 1070429. https://doi.org/10.3389/frai.2022.1070429

Neves, M., & Ševa, J. (2021). An extensive review of tools for manual annotation of documents. Briefings in Bioinformatics, 22(1), 146–163.

Acknowledgements

I would like to thank Alessandro Meneghini, Jessica Neri, and Laura Soledad Norton for their commendable contribution as experts in identifying the categories. I also thank Mauro Sarrica, Georgios Markopoulos, Franco M. T. Gatti, Andrea Sciandra, and Arjuna Tuzzi for their support in defining and reviewing the analyses and research project.

Funding

Open access funding provided by Università degli Studi di Roma La Sapienza within the CRUI-CARE Agreement. This work was supported by Sapienza University of Rome.

Ethics declarations

Conflict of interest

The author has no competing interests to declare.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rizzoli, V. The risk co-de model: detecting psychosocial processes of risk perception in natural language through machine learning. J Comput Soc Sc 7, 217–239 (2024). https://doi.org/10.1007/s42001-023-00235-6
