Abstract

For reliable supervision in multiphase processes, the droplet size represents a critical quality attribute and needs to be monitored. A promising approach is the use of smart image flow sensors since optical measurement is the most commonly used technique for droplet size distribution determination. For this, two different AI-based object detection methods, Mask RCNN and YOLOv4, are compared regarding their accuracy and their applicability to an emulsification flow process. Iterative optimization steps, including data diversification and adaption of training parameters, enable the models to achieve robust detection performance across varying image qualities and compositions. YOLOv4 shows better detection performances and more accurate results which leads to a wider application window than Mask RCNN in determining droplet sizes in emulsification processes. The final droplet detection model YOLOv4 with Hough Circle (HC) for feature extraction determines reliable droplet sizes across diverse datasets of liquid-liquid flow systems (disperse phase content 1–15 vol.-%, droplet size range 5–150 μm). Evaluating the adjustment of Confidence Scores (CS) ensures statistical representation of even smaller droplets. The droplet detection performance of the final YOLOv4 model is compared with a manual image processing method to validate the model in general as well as its accuracy and reliability. Since YOLOv4 in combination with Hough Circle (HC) shows an accurate and robust detection and size determination, it is applicable for online monitoring and characterization of various liquid-liquid flow processes.

Introduction

The products of liquid-liquid processes, such as emulsification processes, cover a wide range of daily-life products such as cosmetics, food products, or pharmaceuticals. Designing, operating, and controlling such processes requires knowledge of Critical Quality Attributes (CQA), since these affect the efficiency of the process and the quality of the product. For emulsification processes, the Droplet Size Distribution (DSD) is important to define the quality, consistency, and stability of the product and may thus be used during process control [1,2,3]. Monitoring the DSD requires suitable processing methods for size characterization. The most common techniques to measure droplets are optical droplet detections using image processing, which can be performed using external methods or internal probe systems [4,5,6,7]. Besides the usage of probe systems, measurement flow cells provide the opportunity for the characterization of microreactors and micromixers in terms of their efficiency, flow behavior as well as process and system properties [8,9,10,11]. Optical access to the process as well as sufficient image quality concerning image size, illumination, color, and contrast are required. When process parameters (formulation etc.) are additionally varied, image detection methods must ensure robustness and trustworthiness across a wide operation window [12, 13]. Manual image processing methods are unsuitable for fast DSD evaluation and process monitoring [7]. Therefore, automated, Artificial Intelligence (AI)-based image processing methods are increasingly used for droplet detection in liquid-liquid systems in the process industry and show high potential for process monitoring [14,15,16,17,18,19]. Neuendorf et al. (2023) [16, 17] trained and applied Mask RCNN (Mask Region Convolutional Neural Network) to evaluate average droplet sizes to monitor a solvent extraction process, while Suh et al. (2021, 2022) [20, 21] used Mask RCNN to observe dropwise condensation and boiling heat transfer. Sibirtsev et al. (2023, 2024) [18, 19] presented a generalized model of a Mask RCNN implementation used for droplet detection in liquid-liquid systems. There, the trained and tested model shows a good detection accuracy regarding the Sauter mean diameter d32 and is applicable in a size range of 0.2–3.2 mm.

In the work of Rutkowski et al. (2022) [22], the applicability of CNNs based on the You Only Look Once (YOLO) architecture and Faster R-CNN for droplet detection in microfluidics was investigated and compared to classical methods such as ImageJ and Hough Circle Transform. For a droplet size range of d = 50–100 μm, the results of the CNNs were in a similar range to the classical methods. Among the CNNs, YOLOv5 provided the best results due to its accuracy and speed. Moreover, Zhang et al. (2022) [23] presented a combination of instance segmentation and shape fitting to determine the droplet diameter of emulsions. The segmentation of the droplets was carried out via Mask RCNN. Ellipses were fitted to the generated masks. The difference in the mean values between the manually determined sample with ImageJ and the distribution from the above-mentioned method was 0.14 μm for a droplet size of d = 60 μm. For droplets in the size range of 40 μm, this difference was 0.21 μm. All of the mentioned methods show the applicability of deep-learning methods in the field of droplet detection and size determination for liquid-liquid processes. Thereby, the focus is on single droplet detection to characterize the process behavior. The resulting droplet images show, in general, individual droplets, that are not overlapping or in different focus levels. In addition, the droplet sizes range from 40 to 3200 μm. In a variety of industrial processes, such as emulsification or extraction, there is an overlap of droplets during the evaluation of an internal sample, or several focal planes are resolved. A large detection range is required to characterize a large number of different formulations. This includes a high diversity concerning the droplet sizes as well as the disperse phase content. These parameters influence the image composition like the exposure and the contrast between the disperse and continuous phase, which can result in limitations in the optical evaluation [5]. 
The detection of small droplets (< 50 μm) as well as the detection of overlapping droplets is crucial for the evaluation of some emulsification processes.

In this contribution, the challenge of small droplets (5–40 μm) as well as the effect of overlapping droplets in different focus levels is investigated in flow. A first proof of concept as well as the method for optical access to the emulsification process are presented in our previous contribution [11, 24]. In this, an AI-based image detection method using YOLO version 4 (YOLOv4) in combination with a feature extraction method, Hough Circle (HC), for droplet size determination is introduced. The application is limited to the emulsion with a low disperse phase content, thus just a proof of concept is given. Additionally, an optical measurement flow cell is presented to overcome the challenges of optical access to emulsion formulations with varying disperse phase content. This method provides the possibility to monitor and evaluate the emulsification process in flow.

This work aims to train, compare, and optimize two different models for droplet detection and droplet size determination in emulsification processes. The focus is to extend the application window from the previous work and to show general applicability for process characterization. For this purpose, different optimization steps during data acquisition, training, detection, and size determination were performed. The resulting model aims to show reliable and accurate droplet detection for a wide application window. This includes data similar to that used in training as well as new unseen data with different image qualities and features to test the robustness of the AI-based detection, making it applicable for online monitoring and characterization of various liquid-liquid flow processes. The approach addresses the evaluation of smaller droplet size ranges as well as the evaluation of overlapping droplets. Furthermore, the feasibility for the online characterization and monitoring of emulsification processes is evaluated.

Materials & methods

The experimental setup as well as the construction of the different datasets, which are used to train, test, and examine the robustness of the two different deep-learning models are presented in the following chapter. The network implementation of YOLOv4 and Mask RCNN as well as the different methods for size determination are explained. The working principles of the two object detection algorithms are based on different approaches, which can be seen in Fig. 1.

Fig. 1 Workflow for object detection and size determination with statistical evaluation in the emulsification process. Mask RCNN combines object detection and instance segmentation. The generated mask contains the pixel-wise information of the detected object, which is used for the droplet size calculation. The output of YOLOv4 consists of bounding boxes that contain the coordinates of the detected object. With a Hough Circle (HC) detection in each bounding box, the droplet radius is determined. From both methods, the generated output (droplet diameter) is further statistically evaluated with median, mean, min/max value, IQR, etc., which are saved in a .csv file and visualized as a histogram and boxplot.

The workflow in Fig. 1 shows the mask generation step as well as the annotation using Mask RCNN for object detection. The output of the instance-segmentation method is an object mask, which contains the pixel-wise information of the detected object. This mask is used for size determination, which assumes perfectly spherical droplets. The detection and classification using YOLOv4 are performed in a single step. The output of YOLOv4 consists of bounding boxes that contain the coordinates of the detected objects. To gain information about the droplet sizes, an additional feature extraction method needs to be performed. HC is applied to each droplet detected by YOLOv4, resulting in a list of diameters. For this purpose, the position defined by the bounding box is used to identify the image coordinates to which HC is applied, and this procedure is repeated for every bounding box in the image. The output of the AI-based detection method and the additional feature extraction method is the size of each detected droplet. As a last step, a statistical evaluation is performed. Based on this statistical evaluation, the size determinations of both models are compared with each other. For result validation, a reference image analysis method is introduced.
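The statistical evaluation step at the end of the workflow can be sketched as follows. This is a minimal illustration using only the Python standard library, not the evaluation script used in this work; the function names `summarize_diameters` and `write_summary`, the column names, and the quartile convention (the default of `statistics.quantiles`) are assumptions.

```python
import csv
import statistics


def summarize_diameters(diameters):
    """Return basic statistics for a list of droplet diameters (e.g. in µm)."""
    ordered = sorted(diameters)
    # Quartiles with the default "exclusive" method of the statistics module;
    # the quartile convention used in the original evaluation is not stated.
    q1, _, q3 = statistics.quantiles(ordered, n=4)
    return {
        "count": len(ordered),
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "min": ordered[0],
        "max": ordered[-1],
        "iqr": q3 - q1,
    }


def write_summary(path, summary):
    """Write one summary row to a .csv file (hypothetical column layout)."""
    with open(path, "w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(summary))
        writer.writeheader()
        writer.writerow(summary)
```

The same summary can be computed for the diameters obtained from Mask RCNN and from YOLOv4 with HC, so that both models are compared on identical statistics.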

The performance of the model is assessed on the hardware described below. The Central Processing Unit (CPU) is an Intel Xeon with 3.3 GHz, 10 cores, and 128 GB of Random Access Memory (RAM). The Graphics Processing Unit (GPU) is an NVIDIA Quadro RTX 5000 with 16 GB of Video Random Access Memory (VRAM) and 3072 Compute Unified Device Architecture (CUDA) parallel processing cores.

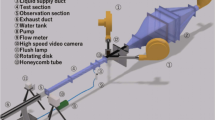

Experimental setup

Part of the data in this paper was obtained from a former publication [24]. Additional data was newly produced from dispersion experiments using an optical measurement flow cell analogous to our previous work [11]. Therefore, the experimental methodologies are only discussed briefly.

For all dispersion experiments, an emulsion of commercially available sunflower oil and deionized water with a varying oil content of up to 15 vol.-% is produced in a 1 L glass vessel with an integrated heating jacket. The production of the emulsion is facilitated by mechanical dispersion (rotor-stator system, HG-15D, witeg Labortechnik GmbH, Wertheim, Germany and HT1018, witeg Labortechnik GmbH). The rotor and stator have a diameter of 20 mm and 25 mm, respectively, resulting in a gap width of 0.25 mm. The setup includes a bypass with an integrated optical measurement flow cell. The mixture is circulated by a peristaltic pump (Watson Marlow, Rommerskirchen, Germany) passing the optical measurement flow cell, which is placed under a microscope (Bresser Science ADL 601P, Bresser GmbH, Rhede, Germany) with a digital camera (Z6, Nikon, Tokyo, Japan) attached to the microscope using a 10-fold magnification. In the experiments, the continuous and disperse phases were fed into the emulsion vessel, where the emulsification process takes place. Data was collected in flow along the full process [11, 24].

Dataset construction and data conversion

The image database for training used in this work is publicly available [25] and contains 120 images in total with a resolution of 1,980 × 1,024 pixels and 3,840 × 2,160 pixels. These images cover a disperse phase content of 1–15 vol.-%, a disperser speed of U_disperser = 5,000–10,000 rpm, and an emulsifying time of t_process = 2–30 min. All images are captured using a 3D-printed optical measurement flow cell and thus represent a frozen point in time during an emulsification process. The specifications of the training datasets are listed in Table 1.

Training Dataset 1 is presented in [24] and forms the basis for this work. Training Dataset 2 contains new images captured in the setup presented in [24] depicting emulsions with a disperse phase content of 1–10 vol.-% and the same settings for the disperser speed. Training Dataset 3 is captured in the optical measurement flow cell presented in [11] and shows an increased number and diversity of emulsion images.

The use of different datasets results in an iterative adaption of the presented neural networks, which increases the number of validation images and the overall diversity. In addition, only cropped, square images are used in the final adaptation and extension, as both networks would otherwise scale the images, which would lead to distortion [26, 27].

Additional datasets were captured for further testing of the models and to investigate their applicability to images that differ from training datasets. Sample images of the datasets to evaluate training are given in Fig. 2 together with images to test the applicability of the best model.

By choosing Testing and Generalization Datasets C–F to examine the applicability, a wide range of challenges in droplet detection in technical applications is tested, such as different sizes, coloring, contrast, and a varying disperse phase content that results in overlapping droplets. Training Evaluation Datasets A and B include data that is similar to the data the model is trained on (Training Datasets 1–3). The corresponding output for each input must be specified to train a Convolutional Neural Network (CNN), as illustrated in the workflow in Fig. 3.

Fig. 3 Workflow of dataset construction including labeling and label format information. a) shows the dispersion experiments using different setups [11, 24] with different parameter settings. Emulsion experiments are performed in flow. b) illustrates the preparation for labeling in COCO-JSON format (c)). For a better comparison of the results, the labeled images are converted to YOLO format (d)). During training in YOLO, an automated and random augmentation is performed.

Each CNN needs a specific label format. Mask RCNN uses a single .json file for the entire dataset, while YOLOv4 requires the labels of each image to be in a separate .txt file. To ensure that both models receive the same dataset, the data is labeled only once (Fig. 3a)-c)). The resulting .json file in COCO format is then converted into .txt files, which in comparison contain less information, as illustrated in Fig. 3d). The presented training datasets were labeled using the Visual Geometry Group Image Annotator (VIA) for marking the droplets on each image. High image resolutions go hand in hand with higher hardware requirements. To make sure that no information is lost because of image resizing, the emulsion images are cut into sub-images of 640 × 640 pixels. In general, the image resolution must be a multiple of 32 in the case of YOLOv4 and a multiple of 64 in the case of Mask RCNN. In these sub-images, only completely visible droplets are labeled. The droplet segments at the edges are not labeled, as they should not be detected later. In principle, the labels are placed as close as possible to the droplets to ensure that only a single droplet is in the center of each label. It is also important that all droplets in the image section are labeled, otherwise the training metrics lose their significance later on. In this study, droplets that were not clearly recognizable as droplets and cut-off droplets at the edges of the images were not labeled.
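The conversion from COCO to YOLO labels can be illustrated with a short sketch. It assumes the standard COCO layout (`images`, `annotations`, `categories`, with `bbox` given as top-left corner plus width and height in pixels) and the usual YOLO text format (class index followed by the normalized box center, width, and height). The function name `coco_to_yolo` is hypothetical, and the actual conversion script used in this work may differ.

```python
def coco_to_yolo(coco):
    """Map each image file name to a list of YOLO label lines.

    `coco` is the parsed content of a COCO-format .json file.
    Segmentation polygons are dropped, so the YOLO labels carry
    less information than the COCO annotations.
    """
    images = {img["id"]: img for img in coco["images"]}
    # COCO category ids are arbitrary; YOLO expects zero-based class indices.
    class_index = {cat["id"]: i for i, cat in enumerate(coco["categories"])}
    labels = {img["file_name"]: [] for img in coco["images"]}

    for ann in coco["annotations"]:
        img = images[ann["image_id"]]
        x, y, w, h = ann["bbox"]  # top-left corner and size in pixels
        x_center = (x + w / 2) / img["width"]
        y_center = (y + h / 2) / img["height"]
        line = "{} {:.6f} {:.6f} {:.6f} {:.6f}".format(
            class_index[ann["category_id"]],
            x_center,
            y_center,
            w / img["width"],
            h / img["height"],
        )
        labels[img["file_name"]].append(line)
    return labels
```

Each list in the returned dictionary would then be written to a .txt file named after its image, which gives one label file per image as YOLOv4 requires.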

You only look once version 4 - YOLOv4

YOLOv4 is the fourth iteration of the YOLO object recognition algorithm, a real-time, single-stage, deep-learning-based object detection framework that performs both detection and classification in a single pass. YOLO is a regression-based algorithm, since no Regions of Interest (ROI) are selected in the image beforehand. It predicts classes and bounding boxes for the entire image at once [26]. The YOLOv4 architecture can be divided into a backbone, neck, and head. A detailed description of the YOLO framework is given in [26], and an overview of the information relevant for this work is given in SI, Chapter S1.

For the neural network implementation, the performance of the model is assessed based on the hardware and software elements. The most crucial software parts for implementing object detection and training with YOLOv4 are discussed here. Python 3.8 is used as the programming language for the implementation. Hardware and software must be compatible, which makes the software dependencies essential. The version of OpenCV used is 4.5.1, which is built with NVIDIA CUDA and uses the NVIDIA CUDA Deep Neural Network library (cuDNN) as the backend. CUDA is an Application Programming Interface (API) developed by NVIDIA that enables the development of high-performance GPU applications. The CUDA version used is 11.0. The GPU accelerator library for deep neural networks, cuDNN, is used in version 8.0.3 and offers finely tuned implementations of common procedures such as forward and backward convolution and activation layers. It was specifically developed for the construction and training of neural networks. Darknet is an open-source framework written in C and CUDA, which can model any type of neural network such as CNNs or Deep Neural Networks (DNN). It is used for training YOLOv4 [26, 28, 29].

Feature extraction is a technique to get noteworthy characteristics from an image. The output of the object detection method YOLOv4 is a bounding box containing the detected droplet. Using a feature extraction method is required to quantify the information about the detected droplet edges. Based on the coordinates of the bounding boxes, corresponding image sections of droplets are transferred to Hough Circle in the second step of detection to get the actual droplet size [24]. A more detailed description of the operating principle is given in SI Chapter S.3.

HC is implemented using OpenCV [30]. The following input parameters are selected for the edge and circle detection function:

- image: The input image must be converted to an 8-bit grayscale image,
- dp: Inverse ratio of the accumulator resolution to the image resolution; set to 1, i.e. no change in resolution,
- minDist: Specifies the minimum distance between two detected circles; in this case, this value should be as high as possible, as only one circle should be detected per image section; set to 1,000,
- param1: Threshold value for the brightness gradient for edge detection; set to 5 (after optimization, see Chap. 3),
- param2: Threshold value for circle detection; defines how much of an edge must be found for it to be detected as a circle; set to 15 (after optimization, see Chap. 3),
- min- and maxRadius: Specification of the range in which the radius of the circle should lie. In this case, the side length of the bounding box is used for orientation.

The output of Hough Circle consists of the center coordinates a and b of the detected circle and its radius R, returned as [a, b, R].
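As an illustration of how these parameters map onto the OpenCV function `cv2.HoughCircles`, the following sketch applies HC to a single bounding box. It is a minimal example under stated assumptions, not the implementation used in this work: the helper names `radius_bounds` and `hough_diameter`, the box layout (x, y, width, height), and the 25 % tolerance around the box side length are hypothetical.

```python
def radius_bounds(box_w, box_h, tolerance=0.25):
    """Radius search window in pixels, oriented on the bounding-box sides.

    The tolerance is a hypothetical value chosen for illustration only.
    """
    r_short = min(box_w, box_h) / 2.0
    r_long = max(box_w, box_h) / 2.0
    min_radius = max(1, int(r_short * (1 - tolerance)))
    max_radius = int(r_long * (1 + tolerance)) + 1
    return min_radius, max_radius


def hough_diameter(image_bgr, box):
    """Return the droplet diameter in pixels for one bounding box, or None."""
    import cv2  # imported here so that radius_bounds works without OpenCV

    x, y, w, h = box
    crop = image_bgr[y:y + h, x:x + w]
    gray = cv2.cvtColor(crop, cv2.COLOR_BGR2GRAY)  # 8-bit grayscale input
    min_radius, max_radius = radius_bounds(w, h)
    circles = cv2.HoughCircles(
        gray,
        cv2.HOUGH_GRADIENT,
        dp=1,          # accumulator at image resolution
        minDist=1000,  # large value: at most one circle per image section
        param1=5,      # edge detection threshold (optimized value from the text)
        param2=15,     # accumulator threshold (optimized value from the text)
        minRadius=min_radius,
        maxRadius=max_radius,
    )
    if circles is None:
        return None
    a, b, radius = circles[0][0]  # center (a, b) and radius R of the first circle
    return 2.0 * float(radius)
```

Multiplying the returned value by the pixel size of the optical setup would give the diameter in µm; that calibration factor is not part of this sketch.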

Mask RCNN

Mask RCNN (Mask Region Convolutional Neural Network) is a two-stage object recognition architecture and was published as an advancement of Faster RCNN by He et al. [27]. In addition to detection and classification, Mask RCNN offers a segmentation function that separates the detected objects from the background. The generated object mask is a layer that has the same size as the input image and marks the pixels belonging to the objects. Mask RCNN consists of the backbone, the Region Proposal Network (RPN), the Region of Interest (RoI) Align, the Fully Connected Layers, and the Mask Branch. A detailed description of the Mask RCNN framework is given in [27], and an overview of the most important information for this work is given in SI, Chapter S2 [27, 31].

The neural network implementation is adapted from [32] and based on the following software and frameworks. Python 3 is used as the programming language for the implementation, with TensorFlow 2.2.0 as the framework for Machine Learning (ML) and AI. Keras 2.3.1 is used as the interface to TensorFlow. The version of OpenCV used is 4.5.1. The CUDA version used is 10.1, and the cuDNN version is 7.6.5. ResNet 50 and ResNet 101 are examined as backbones for the neural network.

For the feature extraction method and size determination, a binary segmentation mask is generated for the feature map. The segmentation mask is a tensor where the prediction of each pixel is assigned one of the two possible truth values True and False. The pixels that are assigned to the object are given the value True. A separate segmentation mask is generated for each object class to avoid conflicts when assigning a pixel to different classes. This mask enables the size of the detected droplets to be determined. The total area of the droplet results from the sum of the truth values. Assuming that spherical droplets are present, the droplet diameter d_droplet is determined using the area of a circle A_circle (see Eq. 1).
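Under the stated assumption of spherical droplets, the relation between the mask area and the droplet diameter follows from the standard area formula of a circle. It is written out here for reference; the notation of Eq. 1 in the original publication may differ.

```latex
A_{\mathrm{circle}} = \frac{\pi}{4}\, d_{\mathrm{droplet}}^{2}
\quad\Longrightarrow\quad
d_{\mathrm{droplet}} = \sqrt{\frac{4\, A_{\mathrm{circle}}}{\pi}}
```

Since A_circle is obtained as the number of True pixels in the mask, d_droplet results in pixels and has to be multiplied by the pixel size of the optical setup to obtain a length in µm.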

Reference image analysis with ImageJ

In reference to the previous work [24], a comparative image analysis methodology is used to assess the trustworthiness of the resulting droplet size distributions and, thus, to evaluate the presented models for droplet detection and droplet size determination. For this purpose, an independent evaluation of the images is performed manually in ImageJ (version 1.53k; Java 1.8.0_172) [33]. The evaluation is performed qualitatively based on the droplet size distributions q1.

Training parameter and optimization step

This contribution aims to generate a robust and generalizable method for emulsion characterization. Thus, training and model modification are necessary. Figure 4 illustrates which parameters can be adjusted during model development. The individual steps are described in detail in the following chapters.

Fig. 4 Workflow of optimization and validation of the object detection models. Parameter adjustment can be carried out during dataset construction, training and detection, and feature extraction. The last step is the manual validation of the trained models on similar data (A-D) and the evaluation of the robustness of the resulting detection model (E-F).

Training and evaluation metrics

The training aims to make the desired features accessible to the models for subsequent detection. A representative quantity and diversity of the states to be learned in the training set is necessary to achieve a generalization of the model. The models presented in this paper are used for single droplet detection including a size determination for emulsification processes, whereby different emulsion formulations need to be characterized. Evaluation Metrics (EM) are used to determine the performance of the respective models. During training, the Mean Average Precision (mAP) and the loss are calculated, providing a reference for evaluating the training performance of different configurations. The mAP is a metric used in object recognition to evaluate the accuracy of the model using a separate validation dataset. It indicates how well the model can recognize and localize objects. The mAP calculates the mean of the Average Precision (AP) for all classes i and gives an overall score across all classes, see Eq. 2.
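The definition described above corresponds to the usual form of the mAP, written out here for reference. The symbol N for the number of classes is an assumption, and the notation of Eq. 2 in the original publication may differ.

```latex
\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{AP}_{i}
```

If only a single class (droplet) is considered, N = 1 and the mAP is identical to the AP of that class.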

A higher mAP indicates a better accuracy of the model. Based on a defined IoU (Intersection over Union) threshold, for example 0.5, predictions are classified as correct or incorrect. True positives are correct predictions that exceed the IoU threshold, while false positives are predictions that either do not match any ground truth object or do not reach the IoU threshold. The average precision of a class corresponds to the area under the precision-recall curve. The loss indicates the deviation of the predictions of the model from the actual labels. In YOLOv4, the loss includes both localization and classification. Successful training is described by an increase in mAP and a decrease in loss. A high mAP indicates high precision and accuracy across different classes. A low loss means that the predictions of the model are close to the actual objects in the training dataset. However, even the highest possible mAP and the lowest possible loss do not guarantee successful training. A visual inspection of detections must also be carried out for a final evaluation of the actual success [28]. The first step of training and optimization comprises the previously described step of preparing the dataset (see Chap. 2.2). This process is identical for both algorithms.
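The IoU criterion can be illustrated with a short sketch for axis-aligned bounding boxes. The box layout (x_min, y_min, x_max, y_max) and the function names are assumptions made for this example, and whether a prediction exactly at the threshold counts as correct depends on the evaluation tool; here it does.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(prediction, ground_truth, threshold=0.5):
    """A prediction counts as correct if its IoU reaches the threshold."""
    return iou(prediction, ground_truth) >= threshold
```

A prediction that matches no ground truth box at or above the threshold is counted as a false positive, and a ground truth box without a matching prediction as a false negative.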

Training - YOLOv4

To produce a fine-tuned set of weights for the presented use case, the model was initialized from pre-trained COCO weights available in the YOLOv4 repository [26, 28]. Various parameters can be varied during the training of the model, which can be general or layer-specific. General parameters determine, for example, the behavior of the learning rate or the input size. The parameters of the convolutional layers can be used to make settings for feature extraction, while the parameters of the YOLO layers can be used to influence the final class, position, and sizes of the detected objects [28]. As part of the optimization, YOLOv4 is trained on Training Dataset 2 in the first step. Due to the smaller size of the images (400 × 450 pixels or 640 × 512 pixels) and the reduced number of objects per image, a larger number of configurations are trained in a shorter time. Based on this, configurations are selected for Training Dataset 3, which shows the highest diversity. The modified configurations that produce the best detection results for Training Dataset 3 are listed in Table 2. An overview of all varied configurations is given in SI Table S 2 and S 3.

Training - Mask RCNN

The training configurations for Mask RCNN are similar to the configurations for YOLOv4. The training is initialized from the pre-trained COCO weights. The depth of the network can be set by selecting the backbone, here ResNet 50 and ResNet 101. In addition, it can be determined whether the entire network or only the head layers are trained. If pre-trained weights are already being used, training the head layers may be sufficient. For training new weights, the entire network must be trained. In general, the hardware requirements during training are significantly higher than with YOLOv4, and therefore parameters can only be varied slightly compared to the default settings. The modified configurations that produce the best detection results are listed in Table 3. An overview of all varied configurations is given in SI Table S 5 and Table S 6. The training of Mask RCNN is performed on Training Datasets 2 and 3.

Detection using YOLOv4 and Mask RCNN

There are essentially three parameters that are decisive for detection: the network size, the confidence, and the threshold. For the Confidence Score (CS) and threshold, the specifications of 0.9 and 0.5 respectively are used, whereby the influence of the CS is investigated for the final model. The network size influences the computing effort as well as the information density per image, hence individual consideration is essential.
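The effect of the Confidence Score on the number of retained droplets can be sketched as a simple filter over the detector output. The detection layout (a dictionary with a `confidence` entry) and the function name are hypothetical; the detection frameworks apply this filtering internally through their own settings.

```python
def filter_by_confidence(detections, cs=0.9):
    """Keep only detections whose confidence reaches the Confidence Score cs."""
    return [det for det in detections if det["confidence"] >= cs]


# Hypothetical detector output: bounding boxes (x, y, w, h) with confidences.
example_detections = [
    {"box": (120, 80, 42, 40), "confidence": 0.95},
    {"box": (300, 210, 18, 17), "confidence": 0.70},
    {"box": (305, 222, 12, 12), "confidence": 0.61},
    {"box": (40, 400, 9, 10), "confidence": 0.40},
]

strict = filter_by_confidence(example_detections, cs=0.9)
relaxed = filter_by_confidence(example_detections, cs=0.6)
```

In this made-up example, lowering the CS from 0.9 to 0.6 retains the two additional small boxes, which mirrors the motivation for examining the CS when small droplets need to be represented statistically.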

For the detection in YOLOv4, the resolution of the network is set to 1,667 × 1,667 pixels by default. However, as the test images in this work have a resolution of 3,840 × 2,160 pixels and 6,014 × 4,016 pixels, scaling them down means a loss of information. Accordingly, the network resolution should be selected in a similar range to the image resolution. In this case, network resolutions of 3,552 × 3,552 pixels and 4,096 × 4,096 pixels, respectively, provided the best detection results using full-size images.

The detection in Mask RCNN is only performed on image sections with a size of 1,000 × 1,000 pixels, since the computational effort for full-size images is too high. In addition, the focus during image capturing is mainly in the center, which ensures that the regions of interest are covered by the image sections alone.

Optimization - Feature extraction method HC

As illustrated in Fig. 1, the final characterization of emulsions also depends on the performance of the feature extraction. The optimization of feature extraction in Mask RCNN depends on the generated mask and thus on training and detection performance. In the YOLOv4 approach, size determination is done by HC following the object detection. Despite the assumption that the droplets are spherical, a feature extraction method must be used to determine the size of the objects. It is not possible to simplify the size determination by using the side length of the bounding box, as the bounding box is not always square. Rectangular bounding boxes result from the non-uniform shadow formation of the droplets on the existing images. The fact that the images are taken in flow and that there is some motion blur on the images further emphasizes this effect. Furthermore, overlapping droplets potentially cover parts of a bounded droplet. This leads to a slight optical distortion of the overlapping droplet area, potentially resulting in a rectangular bounding box. Based on the coordinates of each individual bounding box detected by YOLOv4, the corresponding image sections of the droplets are transferred to the Hough Circle in order to determine the actual droplet size. The size determination using HC is therefore only performed for the objects detected by YOLOv4.

Recognition of edges is performed based on brightness gradients [34]. The input images for feature extraction are defined by the set bounding boxes, i.e. the output of AI-based object detection using YOLOv4. The aim of this is to achieve a better delimitation of the droplet edges compared to the surroundings. For this purpose, the input parameters of the feature extraction technique defined in Chap. 2.3 are examined.

The number of circles detected by HC depends on the parameters param1 and param2. Param1 is the brightness gradient that must be exceeded for an edge to be detected. Param2 is a threshold value for the circle center point accumulator: the lower this value, the smaller the portion of a droplet edge that must be detected in order to identify a complete circle. This is particularly helpful on images with overlapping droplets [34]. Before HC is applied, the image is optimized concerning brightness, contrast, and sharpness. As the input images have different brightness and contrast values, the histogram cutting method is used. First, the distribution of brightness values in the image is analyzed in the image processing pipeline. Second, it is determined how far areas with extreme grey values (black and white) need to be restricted in order to increase the contrast of all other areas. The result is an image in which the edges of the individual droplets are significantly more prominent [30, 34]. In addition, noise in an image can interfere with edge detection; Gaussian blurring or median filtering can reduce it. Since filtering is always accompanied by a loss of information, the noise reduction should not be too strong. The influence of image pre-processing before applying the Hough Circle is illustrated in Fig. 1, showing the feature extraction step. The optimized and set parameters for the Hough Circle Transform are defined in Chap. 2.3.

Besides the optimization of the input parameters, the application of the feature extraction has been improved. The size of a droplet is determined using Hough Circle after each detection of a droplet, and the droplet is marked on the input image to visualize the result. Drawing the droplet on the image influences the subsequent detections, especially if there is a large number of droplets. The optimized implementation therefore uses two separate images, one for detection and one for visualization; for this, a copy of the input image is created for the visualization.
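The histogram cutting step described above can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the function name `clip_and_stretch` and the percentile limits are assumptions chosen for illustration, and a real pipeline would typically operate on image arrays rather than nested lists.

```python
def clip_and_stretch(image, low_pct=1.0, high_pct=99.0):
    """Clip extreme grey values and stretch the remaining range to 0..255.

    image: list of rows, each a list of integer grey values (0..255).
    low_pct / high_pct: assumed percentile limits, not values from the paper.
    """
    pixels = sorted(v for row in image for v in row)
    n = len(pixels)
    # Grey values at the chosen percentiles define the clipping limits.
    lo = pixels[int((n - 1) * low_pct / 100.0)]
    hi = pixels[int((n - 1) * high_pct / 100.0)]
    if hi == lo:
        return [row[:] for row in image]  # flat image: nothing to stretch
    scale = 255.0 / (hi - lo)
    # Values outside [lo, hi] are clipped, everything else is rescaled.
    return [
        [round((min(max(v, lo), hi) - lo) * scale) for v in row]
        for row in image
    ]


# Small illustrative image: mostly mid-grey values plus two extreme pixels.
low_contrast = [[100, 110, 120], [105, 115, 125], [0, 130, 255]]
stretched = clip_and_stretch(low_contrast, low_pct=12.5, high_pct=87.5)
```

In this example the two extreme pixels (0 and 255) no longer dictate the grey value range, so the mid-grey values are spread over the full range and the differences between neighbouring pixels become larger.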

Results & discussion

In this section, the results of the comparison of YOLOv4 and Mask RCNN for droplet detection and size determination in emulsification processes including the different optimization steps and manual validation are presented. Additionally, the results for generalization and robustness by comparing a manual method with an object detection method applied on different, unseen datasets are discussed.

Training and testing of models

Both models are trained with different configurations and detection is evaluated on unseen data. To evaluate the success of the training, the course of the mAP of the validation dataset and the loss of the training dataset are considered. In addition, the detection performance is assessed, as datasets of varying complexity are trained and challenges such as overfitting or underfitting can occur.

Training results - YOLOv4

The training process comprises several steps; the first training iteration of YOLOv4 is presented in [24]. Based on this configuration, the first training is performed on Training Dataset 2. The aim is to determine potential configurations that fulfill the requirements for stable training on the more diverse Training Dataset 3. The configuration and training results for this initial training are presented in the SI, Chap. 4. The training on Training Dataset 3 considers the influence of the anchors, the order of masks, the random scaling, and the limitation of object numbers. The course of the training (mAP and loss) is shown in Figure S 2 in the SI. The best weights are then evaluated regarding their detection performance on emulsion images (Training Evaluation Datasets A and B). Table 4 shows the number of objects detected by YOLOv4 and by the feature extraction method HC for the best training configuration B3. The results of the further configurations are presented in the SI, Chap. 4.

For configuration B3, the best weights are found at iteration 2,000 (mAP 86%). In addition, weights from an iteration in the middle of the training were considered, since YOLOv4 selects the best weights based solely on the highest mAP and does not take into account the training loss, which is higher at the beginning of the training. When considering small droplets that are close together, overlapping bounding boxes are associated with greater uncertainty; therefore, the detection performance should also be considered for a lower CS (0.6). The CS is made up of the certainty in determining the location and the class of an object. Since only one class is considered here, lower confidence is associated with greater uncertainty regarding the location of the bounding boxes.
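The effect of the CS threshold on the number of retained objects can be sketched as a simple filter. This is an illustrative sketch only: the `Detection` class, the function `filter_by_cs`, and all numerical values are hypothetical and not taken from the presented model or datasets.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    x: float   # bounding box centre, pixels
    y: float
    w: float   # bounding box width and height, pixels
    h: float
    cs: float  # confidence score reported by the detector


def filter_by_cs(detections, threshold):
    """Keep only detections whose confidence score reaches the threshold."""
    return [d for d in detections if d.cs >= threshold]


# Illustrative values only: small, closely spaced droplets get lower scores.
detections = [
    Detection(120, 80, 30, 30, 0.97),
    Detection(300, 210, 12, 12, 0.72),
    Detection(305, 215, 11, 11, 0.61),
    Detection(50, 400, 8, 8, 0.45),
]
strict = filter_by_cs(detections, 0.9)
relaxed = filter_by_cs(detections, 0.6)
```

With these made-up values, the threshold of 0.9 keeps one object and the threshold of 0.6 keeps three, mirroring the qualitative behaviour that a lower CS admits more small droplets together with more uncertain bounding boxes.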

Configuration B3 offers two promising sets of weights, with iteration 10,000 detecting the most objects of all weights: 272 and 427 for a CS of 0.9 and 0.6, respectively. The high number of objects detected with high confidence is particularly striking. As the focus is on the detection of small droplets at higher disperse phase fractions, detections were also carried out with all weights on emulsion images with 10 vol.-% of disperse phase. Configuration B3, iteration 10,000 again yields the highest number of detected objects, 306 and 839, respectively, as was already the case for the image with 1 vol.-% disperse phase. These weights provide the basis for detecting droplets of different sizes across all regions of the image, which enables the determination of representative distributions. It should be noted that although iteration 2,000 of the same configuration has a higher mAP (86%), it performs significantly worse in detection. The lower mAP (76.4%) of iteration 10,000 initially indicates that the performance on the validation dataset decreases. However, since the validation data consists of 22 images, not all visual scenarios of the application are covered. The dataset extensions during training generate increasingly complex images and shift the detection performance from the validation dataset towards better generalization.

Additionally, the performance of the feature extraction method HC decreases with increasing disperse phase content as well as with decreasing CS. The decrease with increasing disperse phase content is due to the lower contrast between the phases and the overlapping of individual droplets. The difference in HC detection is noticeable in the evaluation of the example image with 10 vol.-% oil: the object detection yields 306 objects at a CS of 0.9, whereas a diameter could be determined for only 93 droplets. As the CS is decreased, the number of determined diameters drops to 0. The optimization of the HC method has not been implemented at this point, which shows that overlapping bounding boxes of detected objects can cause problems when the HC method is applied.

Example images using the best weights for configuration B1-B4 during detection are shown in SI Figure S 3 and Figure S 4.

Training results - Mask RCNN

The configuration and training results for the initial training on Training Dataset 2 are presented in the SI, Chap. 3. In contrast to YOLOv4, only one image is processed per run; configurations with more than one image result in a training cancellation. A similar restriction applies to the number of anchors: in the YOLOv4 configuration, up to nine sizes can be specified, whereas the limit for Mask RCNN is five. Consequently, detection assistance is provided only for a smaller set of sizes. Another disadvantage compared to YOLOv4 is the limit on the number of objects to be trained per image. The limit is 100; a higher number leads to the training being canceled. In Training Dataset 3, the maximum number of objects per image is 400; thus, the potential of the dataset for particularly small droplets is not completely utilized. Table S 7 in the SI shows the mAP and loss curves for configuration C2 for 100 epochs. As with all the configurations examined, the mAP reaches a maximum of approx. 20%, which is significantly lower than for YOLOv4. Although the loss already reaches values < 1 after 20 epochs, the performance on the validation dataset is consistently low.

Training on Training Dataset 2 has shown that significantly higher values can be achieved for the mAP (48–73%). Either the new Training Dataset 3 is too complex for the network, or the parameters, in particular max instances, cannot be selected to enable effective training due to hardware limitations. When training YOLOv4, the removal of the limit on objects per image resulted in a significant gain in detection performance. With the maximum possible max instances for Mask RCNN, approximately three-quarters of all droplets in images with particularly small droplets are not used for training. To check this, the training was repeated with configuration C2, but this time some of the images with particularly many small droplets were removed from the validation dataset. The maximum mAP increased from 22.2 to 38.1%. This means that effective training is not possible, especially on the images that were added to expand the scope of the application.
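The "three-quarters" estimate can be retraced with a short calculation, assuming an image that contains the maximum of 400 annotated droplets and the limit of 100 instances per image stated above. The symbols \(N_{\mathrm{limit}}\) and \(N_{\mathrm{image}}\) are introduced here for illustration only:

\[
1 - \frac{N_{\mathrm{limit}}}{N_{\mathrm{image}}} = 1 - \frac{100}{400} = 0.75
\]

Images with fewer droplets lose a correspondingly smaller share, so 0.75 is an upper bound under these assumptions.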

Detection and comparison of models

For model comparison, images with a disperse phase fraction of 1 and 10 vol.-% of Training Evaluation Dataset A and B were used. Figure 5 shows the detection results for a CS of 0.9 on droplet images with a size of 1,000 × 1,000 pixels. The detection results of Mask RCNN are illustrated in (a) and (c); the detection results using YOLOv4 and Hough Circle as feature extraction method are shown in (b) and (d).

For Training Evaluation Dataset A (1 vol.-%, Fig. 5a) and b)), the number of detected objects is in the same range for both detection methods (74 vs. 88). However, the generated mask in some cases even exceeds the outer edges of the droplets. This is particularly noticeable when comparing the statistical evaluation of the droplet sizes, see Table 5. For Training Evaluation Dataset B (10 vol.-% disperse phase, Fig. 5c) and d)), almost no droplets are detected using Mask RCNN, which further confirms the assumption that no effective training with Mask RCNN is possible on these images due to the high number of objects. Investigation of further configurations shows similar results.

Considering the variance and location parameters of the statistical evaluation, the values determined with Mask RCNN deviate from the reference method ImageJ. The segmentation method results in a median of 38.31 μm and a mean value of 38.79 μm, which differ from the reference by 5.22 μm and 4.79 μm, respectively. The droplet sizes determined using YOLOv4 show a good approximation and deviate by only 0.83 μm and 0.55 μm regarding the median and mean, respectively.

In conclusion, an expansion of the detection range cannot be realized for Mask RCNN. In contrast to YOLOv4, detection on images with full resolution is not possible. The segmentation feature of Mask RCNN does not offer any added value for this specific application. Although the area of the objects and thus the diameter can be determined without any further intermediate step, this is only possible on images with limited oil content or a limited number of droplets. Additionally, a significant deviation from the validation method was observed in the droplet sizes. YOLOv4 and Hough Circle will be validated for further work concerning size determination and the generalization of the methodology will be discussed.

Evaluation of final model

This section evaluates the capabilities of the final YOLOv4 model. A validation of its accuracy in droplet size detection and the robustness of the model is performed. The final model combines the adjustments of the YOLO network and the optimization of the HC feature extraction method. The image processing pipeline includes the following steps:

1. Reading the video and extracting frames.
2. Detection with YOLOv4 and size determination with Hough Circles, successively and repeated until the entire image is processed.
3. Saving the droplet diameters of each frame and calculating and saving important statistical parameters in a .csv file.
4. Plotting the results (overall distribution).
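Step 3 of the pipeline can be sketched with the Python standard library. This is a minimal sketch under assumptions, not the published implementation: the function names, the column names, and the example diameters are hypothetical, and the CSV text is written to an in-memory buffer instead of a file to keep the example self-contained.

```python
import csv
import io
import statistics


def summarize(diameters_um):
    """Return location and spread parameters for a list of droplet diameters."""
    q1, _, q3 = statistics.quantiles(diameters_um, n=4)
    return {
        "count": len(diameters_um),
        "median": statistics.median(diameters_um),
        "mean": statistics.fmean(diameters_um),
        "std": statistics.stdev(diameters_um),
        "iqr": q3 - q1,
        "min": min(diameters_um),
        "max": max(diameters_um),
    }


def frames_to_csv(frames):
    """Render one summary row per frame as CSV text."""
    buffer = io.StringIO()
    writer = None
    for index, diameters in enumerate(frames):
        row = {"frame": index, **summarize(diameters)}
        if writer is None:
            # The header is derived from the first row's keys.
            writer = csv.DictWriter(buffer, fieldnames=list(row))
            writer.writeheader()
        writer.writerow(row)
    return buffer.getvalue()


# Hypothetical diameters (in µm) for two frames.
frames = [[18.0, 20.0, 22.0, 24.0, 26.0], [15.0, 19.0, 21.0, 30.0]]
summary = summarize(frames[0])
csv_text = frames_to_csv(frames)
```

Note that `statistics.quantiles` uses the "exclusive" method by default, so the IQR may differ slightly from values computed with other quartile conventions.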

The final YOLOv4 model is validated concerning its detection results and the final size determination as well as its usability for emulsification flow process monitoring. The overall detection results of the different optimization steps are illustrated in Fig. 6.

Fig. 6 Evolution of optimization steps for YOLOv4 and Hough Circle on an example image of Training Evaluation Dataset B. (a) detection using the presented state of the art [24], (b) detection with new weights, (c) detection after optimization of HC preprocessing steps, (d) detection for optimized image input size (3,552 × 3,552 pixels), (e) showing the same with highlighted image section of 1,000 × 1,000 pixels, and (f) showing the corresponding DSD illustrated in a boxplot. Detection was performed with a CS of 0.9.

The optimization due to further training (Fig. 6b)) is already described in Chap. 4.1. In Fig. 6c) the number of detected droplets is in a similar range, but the number of droplets determined using HC is improved (93 vs. 306) due to HC parameter adjustment. The optimization of the image input size shows a further improvement in detection performance. A higher resolution promises a better representation of the image information, resulting in an increase of detected droplets up to 653 (see Fig. 6d)). The final distribution in the form of a histogram is given in Fig. 6f). A comparison to a manual method as well as to an image section with a size of 1,000 × 1,000 pixels is given since the droplets are primarily detected in the center of the image. The statistical evaluation shows that the reference method and the detection on the image section of 1,000 × 1,000 pixels are closer together than the reference method and the detection on the total image. A more detailed view of the final detection on Training Evaluation Dataset A and B is shown in SI Figure S 5 and Figure S 6.

The optimization steps during training, detection, and HC implementation show an increase in the number of detected droplets. Thus, the resulting model offers a possibility for a representative statistical evaluation of droplet sizes within an emulsification process. The trustworthiness of the results needs to be validated for further application. The validation of the resulting DSD is performed using manual detection as a reference. This benchmark method is necessary to ensure the application range of the AI approach. Table 6 contains the statistical evaluation of the presented example image of Fig. 6 using YOLOv4 and ImageJ.

Compared to the reference method, the deviation of the median is Δd50 = 2.88 μm and that of the mean is Δ\(\bar d\) = 2.79 μm for the detection on the entire image. The frequency density of classes around the mean value is higher using YOLOv4 than using the reference method. The narrower distribution results in a reduced standard deviation and a narrower IQR. The maximum detected diameter of dmax = 61.65 μm is also larger compared to the reference (dmax = 44.43 μm). This is due to two or even more circles at the edge of the image being detected as one droplet. A region of interest should be considered since only the middle of the captured image is in focus. Towards the image border, the edges of the droplets become more blurred and the contrast between the phases decreases, resulting in a less precise droplet diameter determination with HC. This is caused by the presented experimental setup and the magnification used: even a small deviation in the optical flow cell placement results in a blurred image section. Considering a 1,000 × 1,000 pixels section in the center of the image at a CS of 0.9, 314 droplets are detected, which is almost half the number detected on the entire image. Here, all determined parameters are closer to the evaluation using ImageJ. The median and mean are almost identical at d50 = 19.63 μm and \(\bar d\) = 19.96 μm, respectively, which can also be seen in Fig. 6f). The frequency density of classes further away from the mean value is higher, which leads to a larger standard deviation. In addition, the minimum and maximum values are significantly closer to the actual values. The results show that the droplets detected outside the center of the image are slightly larger on average and therefore not fully representative.
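Restricting the evaluation to a central region of interest can be sketched as a filter on the droplet centre coordinates. The sketch assumes a square image and a square, centred section; the function name `in_center_roi` and the droplet values are hypothetical, and the exact cropping used in the presented pipeline may differ.

```python
def in_center_roi(cx, cy, image_size, roi_size):
    """True if a droplet centre lies inside the centred square region of interest."""
    offset = (image_size - roi_size) / 2
    inside_x = offset <= cx < offset + roi_size
    inside_y = offset <= cy < offset + roi_size
    return inside_x and inside_y


# Hypothetical droplets: (centre x in px, centre y in px, diameter in µm)
# on a 3,552 x 3,552 pixels image with a 1,000 x 1,000 pixels centre section.
droplets = [
    (1776, 1776, 19.5),
    (1300, 2200, 20.4),
    (150, 3400, 61.6),
    (3500, 90, 27.0),
]
central = [d for (cx, cy, d) in droplets if in_center_roi(cx, cy, 3552, 1000)]
```

Only the first two droplets fall inside the centre section here; the droplets near the image border, where blurring makes the HC diameter less reliable, are excluded from the statistics.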

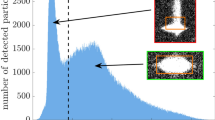

The droplet size determination using YOLOv4 was validated on Training Evaluation Dataset B, which has shown that the size determination using a combination of YOLOv4 and HC is representative and trustworthy. In the following, the influence of the CS is investigated, since its choice is essential for the detection result. In order to define and validate the application range of a method, a comparison to a benchmark or to an already validated and established method is necessary. Figure 7 shows a comparison of the detection on Training Evaluation Datasets A and B for two different CS values. These were selected as 0.6 and 0.9 to ensure reliable detection. Detection on Dataset A is still performed on the entire image, as there are generally fewer droplets present because of the low disperse phase content. In addition, the reference evaluation using ImageJ is shown in Fig. 7 to carry out a final assessment of the reliability of the results on Training Evaluation Datasets A and B.

Fig. 7 Comparison of detection performance using statistical parameters for Training Evaluation Dataset A (a) and Training Evaluation Dataset B (b). Evaluation for Training Evaluation Dataset A is performed using the full image size and an input size for detection of 3,552 × 3,552 pixels. ImageJ comparison is also performed using the total image size. Detection and evaluation for Training Evaluation Dataset B is performed using an image section of 1,000 × 1,000 pixels, also for ImageJ evaluation. The image input size for detection is 1,024 × 1,024 pixels. An image stack of at least 5 images from the total set is used for the comparison with ImageJ.

Comparing the distributions, it is noticeable that both CS values yield a reliable result for the DSD. Similar results are observed for the median, with a maximal deviation of \(\Delta d_{50,\mathrm{A}}\) = 0.40 μm and \(\Delta d_{50,\mathrm{B}}\) = 0.22 μm between the detection results of CS = 0.6 and the reference, respectively. Comparing the mean for detection with a CS of 0.6 and 0.9 to the reference shows a maximal deviation of \(\Delta \bar d_{\mathrm{A},\,CS=0.6}\) = 0.78 μm and \(\Delta \bar d_{\mathrm{B},\,CS=0.9}\) = 0.41 μm. However, the standard deviation and the IQR of the distribution determined with a CS of 0.6 are larger and wider, respectively. A detection with smaller CS values is more sensitive to smaller droplets, since detecting those is associated with greater uncertainty. Therefore, more bounding boxes are detected for smaller droplets in a fixed size range, which potentially leads to confusion. The plotted distributions for a CS of 0.6 in Fig. 7 are shifted to smaller droplet sizes for both evaluated datasets. In this case, higher uncertainty results in a less representative distribution, since the fit to the reference method is better for a higher CS. Table 7 contains the statistical evaluation of the presented histograms in Fig. 7 using YOLOv4 and ImageJ.

For Training Evaluation Datasets A and B, it is noticeable that the distribution detected with a CS of 0.9 shows a better fit to the reference. Nevertheless, depending on the general size range and especially on the disperse phase content, the detection should be performed with a CS of 0.6 to make sure that no information on smaller droplet sizes is lost.

In general, the AI-based object detection method using YOLOv4 and HC provides the possibility to analyze and characterize liquid-liquid processes in flow. Besides being accurate, the detection is much faster (~3 s per image using YOLOv4 and HC compared to ~10 min per image using a manual method), making it possible to analyze a liquid-liquid flow process close to real-time. To evaluate the processing time of the total image-processing pipeline, a video sequence of 5 s was used. The pipeline reads the video and extracts one image per second, resulting in 5 droplet images for the evaluation. The CS values of 0.6 and 0.9 were considered again during the evaluation, as the number of detected droplets differs between them and influences the processing time. The processing time analysis distinguishes between the initialization time and the detection time. The initialization time is the time required to initialize the YOLO network; this step is performed only once, independently of the number of videos to be processed. The detection time includes the object detection and the size determination of the droplets using HC as well as the creation and storage of the statistical values in a .csv file. With a CS of 0.6, the detection time is 18.99 s for 4,721 droplets. With a CS of 0.9, it decreases to 15.97 s for 3,636 droplets. The initialization time for both detections is 19 s. In total, this results in a maximum processing time of 38 s for the detection of 4,721 droplets. Accelerating the evaluation in this way enables a near-real-time evaluation of emulsion processes. In this context, a sweet spot must be identified regarding the number of images to be evaluated, in order to analyze a sample as large as possible while keeping the evaluation time as short as possible.
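The timing figures above can be retraced with a few lines of arithmetic. The sketch below only recombines the numbers reported in the text (initialization time, detection time, number of droplets, five frames); the function name `timing` and the derived per-frame and per-droplet values are illustrative and not reported by the authors.

```python
def timing(init_s, detection_s, frames, droplets):
    """Derive total, per-frame, and per-droplet times from the reported values."""
    return {
        "total_s": init_s + detection_s,
        "per_frame_s": detection_s / frames,
        "per_droplet_ms": 1000.0 * detection_s / droplets,
    }


INIT_S = 19.0  # one-off network initialization
FRAMES = 5     # one frame per second from a 5 s video

cs_06 = timing(INIT_S, 18.99, FRAMES, 4721)
cs_09 = timing(INIT_S, 15.97, FRAMES, 3636)

for label, result in (("CS 0.6", cs_06), ("CS 0.9", cs_09)):
    print(
        f"{label}: total {result['total_s']:.2f} s, "
        f"{result['per_frame_s']:.2f} s/frame, "
        f"{result['per_droplet_ms']:.2f} ms/droplet"
    )
```

Under these numbers, the detection time corresponds to roughly 3.2 to 3.8 s per frame, in line with the approximate value of 3 s per image, and the total of about 38 s for a CS of 0.6 is dominated in equal parts by initialization and detection. Because the initialization is performed only once, its share shrinks as more frames or videos are processed.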

Generalization of final model

The model was tested on further datasets to establish a reliable size characterization. The results of the application on Testing Datasets C and D are presented first. This data is qualitatively more similar to the training data and differs mainly in the recording conditions and the droplet sizes. Figure 8 shows an example image of Testing and Generalization Datasets C-F before (top) and after (bottom) detection for each dataset, together with the corresponding results of the statistical evaluation for different CS (0.6 vs. 0.9) and for the reference method. The detection on Testing and Generalization Datasets C-E is performed using an image section of 1,000 × 1,000 pixels, which is also used for ImageJ evaluation. The images of the Testing and Generalization Dataset F have a size of 4,500 × 2,500 pixels. The image input size used for detection is adapted to the network requirements such that it is divisible by 32 (1,024 × 1,024 and 4,096 × 2,240 pixels, respectively).
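The divisibility requirement for the network input size can be checked with a small helper. This is a sketch for illustration: the function names are hypothetical, and the helper only lists the neighbouring multiples of 32; it does not reproduce how the input sizes stated above were selected.

```python
STRIDE = 32  # network input dimensions must be multiples of this value


def is_valid_input(width, height, stride=STRIDE):
    """True if both dimensions are divisible by the stride."""
    return width % stride == 0 and height % stride == 0


def neighbouring_multiples(value, stride=STRIDE):
    """Return the closest multiples of stride at or below and at or above value."""
    lower = (value // stride) * stride
    upper = lower if lower == value else lower + stride
    return lower, upper


# Sizes mentioned in the text.
checks = {
    (1000, 1000): is_valid_input(1000, 1000),
    (1024, 1024): is_valid_input(1024, 1024),
    (3552, 3552): is_valid_input(3552, 3552),
    (4500, 2500): is_valid_input(4500, 2500),
    (4096, 2240): is_valid_input(4096, 2240),
}
candidates_1000 = neighbouring_multiples(1000)
```

The image sections of 1,000 × 1,000 pixels and the images of 4,500 × 2,500 pixels are not valid input sizes themselves, whereas 1,024 × 1,024, 3,552 × 3,552, and 4,096 × 2,240 pixels satisfy the requirement.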

Comparing the results of Testing Dataset C, the droplet sizes determined with a CS = 0.6 deviate towards smaller droplet sizes, as can be seen in Table 8. In addition, the standard deviation and the IQR are larger. Just as for Training Evaluation Datasets A and B, a CS of 0.6 is more sensitive to smaller droplets, as the box of the boxplot is enlarged towards smaller sizes. In particular, the results of the CS = 0.9 show a better approximation to the reference method concerning the determined median, average, and standard deviation.

The statistical evaluation of Testing Dataset D shows an approximation of the results of CS = 0.6 to the reference method. Both the median and the mean deviate by only Δd50 = 1.28 μm and Δ\(\bar d\) = 1.73 μm, respectively. The standard deviation of the distribution for both CS is in a similar range compared to the reference, as are the minimum and maximum detected droplet sizes. All in all, regarding Testing Dataset D, the detection of smaller droplets is crucial to statistically represent the entire size range of the distribution. Consequently, a lower CS is necessary to provide a representative and reliable result. This means that the CS needs to be determined individually for further applications, depending on the general droplet sizes and the disperse phase content.

Finally, the generalization of the YOLOv4 model to datasets that differ qualitatively from the training data is examined. Generalization Datasets E and F are used for this evaluation. The results of Generalization Datasets E and F verify the previous results. The evaluation using the CS of 0.6 deviates less from the reference method and also depicts smaller droplets more representatively in the distribution. If the maximum droplet diameter of the datasets is considered, it is noticeable that it deviates from the reference. This is critical, as individual droplets may be combined on these datasets during detection, and is illustrated by the outliers in the box plot. Consequently, the distributions show a greater tailing towards larger droplets. This false detection has a potential impact on the resulting DSD and increases with a decrease in CS. There is a trade-off to be found here, as lower CS will include smaller droplets in the distribution.

In summary, a generalization is possible and a wide range of applications is achieved for the analysis of DSD in emulsification flow processes. For the evaluated datasets, the model performed well, and the resulting DSDs are in the same size range as those of the reference method. The model is capable of analyzing different image qualities of emulsification flow processes, allowing the characterization of further liquid-liquid flow processes. Nevertheless, the localization and classification of droplets need to be critically reviewed if the detection is carried out on unknown datasets. For this purpose, a validation using a reference method is useful, so that the statistical size determination is secured for further uses. A potential definition of the CS based on the number of droplets detected in combination with changes in the distribution is only feasible within a secured, validated application window.

Conclusions & outlook

This contribution presents two different AI-based image detection methods, YOLOv4 and Mask RCNN, for droplet size determination in emulsification flow processes. Training and optimization of these models as well as a final comparison for the use case are presented. Training data diversification and adaptation of the anchors during training are important factors to obtain better detection results in the flow cell. Additionally, the input size of the image for detection together with the preprocessing of the detected droplets before applying the HC algorithm are decisive characteristics for the generalization of the detection method. In general, both models show feasibility for the tested use case, but YOLOv4 appears more robust compared to Mask RCNN. Further, YOLOv4 has a wider application window, since emulsion images with higher disperse phase content can also be analyzed.

The final model, YOLOv4 in combination with Hough Circle (HC) for feature extraction, was tested on different datasets to validate its accuracy in droplet size determination as well as its trustworthiness and robustness regarding different image qualities and compositions. For this, a comparison of the detection performance was carried out using different Confidence Scores (CS) and a reference method. The result is a reliable AI-based method for droplet size determination of liquid-liquid flow processes, which can be used for emulsions with different properties and droplet size ranges (d = 5–115 μm, disperse phase content up to at least 15 vol.-%), since a good detection accuracy (Δsmax = 1.24, Δd50,max = 4.01 μm) was obtained for all tested datasets. In general, the CS needs to be adapted depending on the use case to make sure that smaller droplets are also statistically represented in the evaluated data. To estimate a suitable value, a first validation of the resulting droplet size distribution for different CS in comparison to a reference method is suggested. Future work focuses on an implementation of the approach on later YOLO models. This aims to achieve a further increase in model accuracy and to transfer the method to an edge device.

Abbreviations

- AI: Artificial Intelligence
- AP: Average Precision
- API: Application Programming Interface
- CNN: Convolutional Neural Network
- CPU: Central Processing Unit
- CQA: Critical Quality Attribute
- CS: Confidence Score
- CUDA: Compute Unified Device Architecture
- cuDNN: CUDA Deep Neural Network
- DSD: Droplet Size Distribution
- EM: Evaluation Metrics
- GPU: Graphics Processing Unit
- HC: Hough Circle
- IoU: Intersection Over Union
- mAP: Mean Average Precision
- Mask RCNN: Mask Region Convolutional Neural Network
- PMMA: Poly(Methyl 2-Methylpropenoate)
- RAM: Random Access Memory
- RoI: Region Of Interest
- RPN: Region Proposal Network
- VIA: Visual Geometry Group Image Annotator
- VRAM: Video Random Access Memory
- YOLO: You Only Look Once

References

McClements DJ (2007) Critical review of techniques and methodologies for characterization of emulsion stability. Crit Rev Food Sci Nutr 47:611–649. https://doi.org/10.1080/10408390701289292

Paul EL, Atiemo-Obeng VA, Kresta SM (2004) Handbook of Industrial Mixing - Science and Practice. Wiley, New Jersey

Tadros TF (2016) Emulsions: formation, stability, industrial applications. Walter de Gruyter GmbH & Co KG, Berlin, Boston

Panckow RP et al (2017) Photo-optical in-situ measurement of drop size distributions: applications in research and industry. Oil Gas Sci Technol 72:14. https://doi.org/10.2516/ogst/2017009

Emmerich J et al (2019) Optical inline analysis and monitoring of particle size and shape distributions for multiple applications: scientific and industrial relevance. Chin J Chem Eng 27:257–277. https://doi.org/10.1016/j.cjche.2018.11.011

Maaß S et al (2012) Automated drop detection using image analysis for online particle size monitoring in multiphase systems. Comput Chem Eng 45:27–37. https://doi.org/10.1016/j.compchemeng.2012.05.014

Abidin MIIZ, Raman AAA, Nor MIM (2013) Review on measurement techniques for drop size distribution in a stirred vessel. Ind Eng Chem Res 52:16085–16094. https://doi.org/10.1021/ie401548z

Dinter R et al (2024) 3D-printed open-source sensor flow cells for microfluidic temperature, electrical conductivity, and pH value determination. J Flow Chem. https://doi.org/10.1007/s41981-024-00319-y

Glotz G, Kappe CO (2018) Design and construction of an open source-based photometer and its applications in flow chemistry. React Chem Eng 3:478–486. https://doi.org/10.1039/C8RE00070K

von Vietinghoff N et al (2020) Photoelectric sensor for fast and low-priced determination of bi- and triphasic segmented slug flow parameters. Sensors 20:6948. https://doi.org/10.3390/s20236948

Burke I, Assies C, Kockmann N (2024) Rapid prototyping of a modular optical flow cell for image-based droplet size measurements in emulsification processes. J Flow Chem. https://doi.org/10.1007/s41981-024-00323-2

Chen C et al (2020) Improving the generalizability of convolutional neural network-based segmentation on CMR images. Front Cardiovasc Med 7:105. https://doi.org/10.3389/fcvm.2020.00105

Urolagin S, Prema K, Reddy NVS (2012) Advanced Computing, Networking and Security - Generalization Capability of Artificial Neural Network Incorporated with Pruning Method. In: Advanced Computing, Networking and Security: International Conference, ADCONS 2011, Surathkal, India, December 16–18, 2011, Selected Papers. Springer Berlin Heidelberg, pp 171–178

Khosravi H et al (2024) Artificial intelligence and classic methods to segment and characterize spherical objects in micrographs of industrial emulsions. Int J Pharm 649:123633. https://doi.org/10.1016/j.ijpharm.2023.123633

Ghafari M, Mailman D, Hatami P, Peyton T, Yang L, Dang W, Qin H (2022) A Comparison of YOLO and Mask-RCNN for Detecting Cells from Microfluidic Images. In: 2022 International Conference on Artificial Intelligence in Information and Communication (ICAIIC). IEEE, pp 204–209

Neuendorf LM et al (2023) Convolutional Neural Network (CNN)-based measurement of properties in liquid–liquid systems. Processes 11:1521. https://doi.org/10.3390/pr11051521

Neuendorf LM, Khaydarov V, Schlander C, Kock T, Fischer J, Urbas L, Kockmann N (2023) Artificial Intelligence-based Module Type Package-compatible Smart Sensors in the Process Industry. Chem Ing Tech 95:1546–1554. https://doi.org/10.1002/cite.202300047

Sibirtsev S et al (2023) Mask R-CNN based droplet detection in liquid–liquid systems, Part 2: methodology for determining training and image processing parameter values improving droplet detection accuracy. Chem Eng J 473:144826. https://doi.org/10.1016/j.cej.2023.144826

Sibirtsev S, Zhai S, Jupke A (2024) Mask R-CNN based droplet detection in liquid–liquid systems, Part 3: model generalization for accurate processing performance independent of image quality. Chem Eng Res Des 202:161–168. https://doi.org/10.1016/j.cherd.2023.12.005

Suh Y et al (2021) A deep learning perspective on dropwise condensation. Adv Sci 8:2101794. https://doi.org/10.1002/advs.202101794

Suh Y, Bostanabad R, Won Y (2021) Deep learning predicts boiling heat transfer. Sci Rep 11:5622. https://doi.org/10.1038/s41598-021-85150-4

Rutkowski GP et al (2022) Microfluidic droplet detection via region-based and single-pass convolutional neural networks with comparison to conventional image analysis methodologies. Mach Learn Appl 7:100222. https://doi.org/10.1016/j.mlwa.2021.100222

Zhang S et al (2022) Precise and fast microdroplet size distribution measurement using deep learning. Chem Eng Sci 247:116926. https://doi.org/10.1016/j.ces.2021.116926

Burke I, Youssef AS, Kockmann N (2022) Design of an AI-supported Sensor for Process Relevant Parameters in Emulsification Processes. 16 Dresdner Sensor-Symposium 2022 218–223. https://doi.org/10.5162/16dss2022/P48

Burke I, Dhayaparan T, Youssef AS (2024) GitHub - TUDoAD/DropletDetection_YOLOv4. GitHub. https://github.com/TUDoAD/DropletDetection_YOLOv4. Accessed 5 July 2024

Bochkovskiy A, Wang C-Y, Liao H-YM (2020) YOLOv4: Optimal Speed and Accuracy of Object Detection. https://doi.org/10.48550/arXiv.2004.10934

He K, Gkioxari G, Dollár P, Girshick R (2017) Mask R-CNN. https://doi.org/10.48550/arXiv.1703.06870

Bochkovskiy A (2021) GitHub - AlexeyAB/darknet. GitHub. https://github.com/AlexeyAB/darknet/tree/master. Accessed 5 July 2024

Sowa P, Izydorczyk J (2022) Darknet on OpenCL: a multiplatform tool for object detection and classification. Concurr Comput 34:e6936. https://doi.org/10.1002/cpe.6936

Bradski G (2000) The OpenCV Library. In: Dr. Dobb’s Journal of Software Tools. https://docs.opencv.org/4.x/index.html. Accessed 12 Mar 2024

Girshick R (2015) Fast R-CNN. https://doi.org/10.48550/arXiv.1504.08083

Abdulla W (2017) Mask R-CNN for Object Detection and Instance Segmentation on Keras and TensorFlow. In: GitHub. https://github.com/matterport/Mask_RCNN. Accessed 12 Mar 2024

Schneider CA, Rasband WS, Eliceiri KW (2012) NIH Image to ImageJ: 25 years of image analysis. Nat Methods 9:671–675. https://doi.org/10.1038/nmeth.2089

Bradski GR, Kaehler A (2008) Learning OpenCV: computer vision with the OpenCV library. O’Reilly Media, Inc

Acknowledgements

The authors thank Carsten Schrömges for his technical support and the German Federal Ministry for Economic Affairs and Climate Action (BMWK) for funding this research as part of AiF (Support Code: KK5168501 PR0).

Funding

The authors thank the German Federal Ministry for Economic Affairs and Climate Action (BMWK) for funding this research as part of AiF (Support Code: KK5168501 PR0).

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding authors state that there is no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Burke, I., Dhayaparan, T., Youssef, A.S. et al. Two deep learning methods in comparison to characterize droplet sizes in emulsification flow processes. J Flow Chem (2024). https://doi.org/10.1007/s41981-024-00330-3

DOI: https://doi.org/10.1007/s41981-024-00330-3