Abstract

Spiking neural P systems (SNPS) are variants of the third-generation neural networks. In the last few decades, different variants of SNPS models have been introduced. In most SNPS models, spikes are represented using an alphabet with just one letter. In this paper, we use a deterministic SNPS model with coloured spikes (i.e. the alphabet representing spikes contains multiple letters), together with neuron division rules, to demonstrate an efficient solution to the SAT problem. As a result, we provide a simpler construction that uses significantly fewer resources to solve the SAT problem than previously reported results using SNPSs.


1 Introduction

Membrane computing is a well-known natural computing model. The computing models in membrane computing are inspired by the working of biological cells. In the last decades, many researchers have constructed different variants of membrane computing models inspired by different biological phenomena [1]. One such type of cell inspiring many computational models is the neuron. The structure and function of biological neurons communicating via sending impulses (spikes) was the main motivation behind the construction of a popular variant of membrane computing model known as the spiking neural P system (SNPS) [2, 3]. Since SNNs (i.e. spiking neural networks) [4] belong to third-generation neural networks, SNPS models can also be considered third-generation neural networks.

Membrane computing models are mainly categorised into three types: (1) cell-like; (2) tissue-like; (3) neural-like. One of the most popular directions of research in membrane computing is solving computationally hard problems using different variants of P systems. A comprehensive survey on the use of variants of membrane computing models for solving NP-hard problems, i.e. NP-complete problems (SAT, SUBSET-SUM) and PSPACE-complete problems (QSAT, Q3SAT), can be found in [5, 6]. For instance, SNPS with pre-computed resources have been used to solve the QSAT and Q3SAT problems [7], the SUBSET-SUM problem [8–10], and the SAT and 3SAT problems [10, 11] in polynomial or even linear time. SNPS with neuron division and budding can solve the SAT problem in polynomial time with respect to the number of variables n and the number of clauses m. Moreover, these SNP systems can solve the problem in a deterministic manner [12, 13]. Similarly, an SNPS with budding rules [14] and an SNPS with division rules [15] can solve the SAT problem in polynomial time and in a deterministic manner. Furthermore, SNPSs with structural plasticity and time-free SNPS models can solve the SUBSET-SUM problem in feasible time [16, 17]. In [18], it has been proved that SNPS with astrocytes producing calcium can solve the SUBSET-SUM problem in polynomial time. Recently, a linear-time uniform solution to the Boolean SAT problem using self-adapting SNPS with refractory period and propagation delay was derived in [19]. Finally, SNPS models have been used to solve maximal independent set selection problems from distributed computing [20].

In this paper, we construct a new variant of the SNPS model, i.e. the spiking neural P system with coloured spikes and division rules. The idea of coloured spikes was introduced by Song et al. [21] to simplify the construction of an SNPS solving complex tasks. Division rules were introduced for the first time in [12, 22] to solve the SAT problem using SNPS with neuron division and budding. Both the spiking rules and the division rules used here to solve the SAT problem are deterministic. We show that this combined variant solves the SAT problem efficiently, using fewer steps as well as a smaller amount of other resources.

The paper is organized as follows. In Sect. 2, we discuss the structure and function of the SNP system with coloured spikes and neuron division. In Sect. 3, we describe a solution to the SAT problem using this variant of SNPS. In Sect. 4, we briefly compare the descriptional and computational complexity of our construction with two other SNPS models with division rules, in terms of the amount of resources necessary to solve the SAT problem. Section 5 concludes the paper.

2 Spiking neural P system with coloured spikes and neuron division

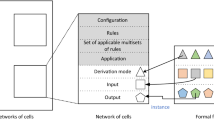

In this section, we introduce the new variant of SNPS model, i.e. spiking neural P systems with coloured spikes and neuron division, which we mentioned in Sect. 1. More precisely, this variant combines properties of two existing SNPS models, i.e. SNPS with neuron division [12] and SNPS with coloured spikes [21]. Division rules are used to obtain an exponential workspace in polynomial time to apply the strategy of trading space for time, while the use of coloured spikes allows for a simpler and more efficient construction of the SNPS. In the sequel we denote by L(E) the language associated to E, where E is a regular expression over an alphabet S.

Definition 1

A spiking neural P system with coloured spikes and neuron division of degree \(m \ge 1\) is a construct of the form \(\Pi = (S, H, syn, \sigma _1, \sigma _2, \ldots , \sigma _m, R, in, out)\) where

- \(m \ge 1\) represents the initial degree, i.e. the number of neurons initially present in the system;
- \(S = \{a_1, a_2, \ldots , a_g\}, g \in \mathbb {N}\) is the alphabet of spikes of different colours;
- H is the set containing labels of the neurons;
- \(syn \subseteq H \times H\) represents the synapse dictionary between the neurons, where \((i, i) \notin syn\) for \(i \in H\);
- \(\sigma _1, \sigma _2, \ldots , \sigma _m\) are neurons initially present in the system, with \(\{1,\dots ,m\}\subseteq H,\) where each neuron \(\sigma _i = \langle n_1^{i}, n_2^{i}, \ldots , n_g^{i}\rangle ,\) for \(1 \le i \le m,\) contains initially \(n_{j}^{i} \ge 0\) spikes of type \(a_j\) \((1 \le j \le g)\);
- R represents the set containing the rules of the system \(\Pi\). Each neuron labelled \(i\in H\) contains rules denoted by \([r]_i.\) The rules in R are divided into three categories:
  - Spiking rule: \([E / a_1^{n_1} a_2^{n_2} \ldots a_g^{n_g} \rightarrow a_1^{p_1} a_2^{p_2} \ldots a_g^{p_g}; d]_i\) where \(i \in H\), E is a regular expression over S; \(n_j \ge p_j \ge 0\) \((1 \le j \le g)\); \(d \ge 0\) is called delay. Furthermore, \(p_j>0\) for at least one j, \(1 \le j \le g.\)
  - Forgetting rule: \([a_1^{t_1} a_2^{t_2} \ldots a_g^{t_g} \rightarrow \lambda ]_i\) where \(i \in H\), and \(a_1^{t_1} a_2^{t_2} \ldots a_g^{t_g} \notin L(E)\) for each regular expression E associated with any spiking rule present in the neuron i.
  - Neuron division rule: \([E]_i \rightarrow []_j\,||\,[]_k\) where E is a regular expression over S and \(i, j, k \in H\).
- in and out represent the input and output neurons, respectively.

A spiking rule \([E / a_1^{n_1} a_2^{n_2} \ldots a_g^{n_g} \rightarrow a_1^{p_1} a_2^{p_2} \ldots a_g^{p_g}; d]_i\) is applicable when the neuron \(\sigma _i\) contains spikes \(a_1^{c_1} a_2^{c_2} \ldots a_g^{c_g} \in L(E)\) and \(c_j \ge n_j\) \((1 \le j \le g)\). After application of the rule, \(n_j\) copies of the spike \(a_j\) \((1\le j\le g)\) are consumed while \((c_j - n_j)\) copies remain inside the neuron \(\sigma _i\). Furthermore, \(p_j\) spikes \(a_j\) \((1\le j\le g)\) are sent to all neurons to which \(\sigma _i\) is connected. These are either neurons \(\sigma _k\) such that \((i,k)\in syn,\) or neurons to which \(\sigma _i\) inherited synapses during neuron division, as described below. If the delay \(d = 0\), then the spikes leave the neuron i immediately. However, if \(d \ge 1\) and the rule is applied at time t, then the neuron i is closed at steps \(t, t+1, t+2, \ldots , t+ d -1\). During this phase, the neuron can neither receive spikes from outside nor apply any rule. At step \((t + d)\), the neuron spikes, and it can again receive spikes from other neurons and apply a rule.

The spiking rule \([E / a_1^{n_1} a_2^{n_2} \ldots a_g^{n_g} \rightarrow a_1^{p_1} a_2^{p_2} \ldots a_g^{p_g}; d]_i\) is simply written as \([a_1^{n_1} a_2^{n_2} \ldots a_g^{n_g} \rightarrow a_1^{p_1} a_2^{p_2} \ldots a_g^{p_g}; d]_i\) if \(E = a_1^{n_1} a_2^{n_2} \ldots a_g^{n_g}\). If \(d = 0\), then it becomes \([E/a_1^{n_1} a_2^{n_2} \ldots a_g^{n_g} \rightarrow a_1^{p_1} a_2^{p_2} \ldots a_g^{p_g}]_i\).

A forgetting rule \([a_1^{t_1} a_2^{t_2} \ldots a_g^{t_g} \rightarrow \lambda ]_i\) is applicable when the neuron \(\sigma _i\) contains exactly the spikes \(a_1^{t_1} a_2^{t_2} \ldots a_g^{t_g}\). Then all these spikes are removed from the neuron \(\sigma _i\).
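
The applicability conditions for spiking and forgetting rules can be illustrated with a minimal Python sketch. It makes several assumptions that are not part of the definition: the contents of a neuron are stored as a multiset (`collections.Counter`) keyed by hypothetical colour names such as `"a"` and `"a_s"`, membership in \(L(E)\) is passed in as a predicate `in_language` rather than parsed from a regular expression, and the delay d is ignored (i.e. \(d = 0\)). All function names are illustrative.

```python
from collections import Counter

def spiking_applicable(contents, in_language, consumed):
    """[E / consumed -> produced] is applicable if the contents belong to L(E)
    and the neuron holds at least the spikes that the rule consumes."""
    contents = Counter(contents)
    return in_language(contents) and all(contents[c] >= k for c, k in consumed.items())

def apply_spiking(contents, consumed, produced):
    """Return (spikes left in the neuron, spikes sent along every outgoing synapse)."""
    remaining = Counter(contents)
    remaining.subtract(consumed)
    return +remaining, Counter(produced)  # unary + drops colours with zero count

def forgetting_applicable(contents, forgotten):
    """[forgotten -> lambda] requires the neuron to hold exactly these spikes."""
    return +Counter(contents) == +Counter(forgotten)

# Example: a rule of the form a_s a a / a -> a_s, with E = a_s a a
neuron = Counter({"a_s": 1, "a": 2})
exactly_as_aa = lambda c: c == Counter({"a_s": 1, "a": 2})
if spiking_applicable(neuron, exactly_as_aa, {"a": 1}):
    neuron, emitted = apply_spiking(neuron, {"a": 1}, {"a_s": 1})
```

In this example one copy of a is consumed, one copy of a and the spike \(a_s\) stay in the neuron, and one spike \(a_s\) is emitted.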

A division rule \([E]_i \rightarrow []_j\,||\,[]_k\) is applicable if a neuron \(\sigma _i\) contains spikes \(a_1^{c_1} a_2^{c_2} \ldots a_g^{c_g} \in L(E)\). After application of the rule, two new neurons labelled j and k are created from \(\sigma _i\) and the spikes are consumed. The child neurons contain the developmental rules from R labelled j and k, respectively, and initially they do not contain any spikes. The child neurons also inherit the synaptic connections of the parent neuron. If there exists a connection \((\sigma _t, \sigma _i)\) (i.e. \(\sigma _t\) is connected to \(\sigma _i\)), then after division there exist connections \((\sigma _t, \sigma _j)\) and \((\sigma _t, \sigma _k)\). Similarly, if there exists a connection \((\sigma _i, \sigma _t)\), then \((\sigma _j, \sigma _t)\) and \((\sigma _k, \sigma _t)\) will exist after division. Moreover, the child neurons create the synaptic connections prescribed for their labels by the synapse dictionary.
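
The effect of a division rule on the synapse graph can be sketched as follows. This is an illustration only. It assumes that every neuron present has a distinct label, so that a label identifies a neuron, and that a dictionary synapse is created only when both of its endpoints are present. The function name `divide` and the string labels are hypothetical.

```python
def divide(synapses, dictionary, present, parent, child_j, child_k):
    """Apply [E]_parent -> []_child_j || []_child_k to a set of (source, target) synapses."""
    children = (child_j, child_k)
    inherited = set()
    for src, dst in synapses:
        if src == parent:            # outgoing synapse of the parent is copied to both children
            inherited.update((c, dst) for c in children)
        elif dst == parent:          # incoming synapse of the parent is copied to both children
            inherited.update((src, c) for c in children)
        else:
            inherited.add((src, dst))
    labels = (set(present) - {parent}) | set(children)
    # New synapses prescribed by the dictionary for the children's labels
    from_dictionary = {
        (src, dst) for src, dst in dictionary
        if src in labels and dst in labels and (src in children or dst in children)
    }
    return inherited | from_dictionary, labels

# Example: a neuron "n+1" with one incoming synapse divides into "t1" and "f1"
present = {"in", "out", "1", "n+1", "n+2", "n+5"}
synapses = {("n+2", "n+1"), ("in", "n+5")}
dictionary = {("n+5", "t1"), ("n+5", "f1"), ("t1", "out"), ("f1", "out"),
              ("1", "t1"), ("1'", "f1")}
new_synapses, new_labels = divide(synapses, dictionary, present, "n+1", "t1", "f1")
```

Both children inherit the incoming synapse from `"n+2"`, while the dictionary entry `("1'", "f1")` is not realised in this example because no neuron labelled `"1'"` is present.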

The configuration of the system provides information about the number of spikes present in the neurons, the synaptic connections with the other neurons and whether each neuron is open or closed (i.e. whether it can receive/send spikes). The SNPS models work as parallel distributed computing models in which the rules present in all neurons are applied in a parallel, synchronized manner and the system moves from one configuration to the next. Each computation starts from the initial configuration and stops at a final configuration (i.e. when no rule is applicable in any of the neurons). If a neuron has more than one rule applicable in a given step, then one of these rules is chosen non-deterministically. However, in this work, such a situation never occurs and rules in the neurons are applied in a deterministic manner.

Since the introductory variant of the SNPS presented in [2] is already computationally universal in the Turing sense, and our variant of the SNPS is an extension of this basic model, its computational universality follows from the results presented in [2]. In the next section, we use the deterministic SNPS with coloured spikes and neuron division to obtain a uniform solution to the SAT problem in linear time.

3 A solution to the SAT problem

Many decision problems have been solved using spiking neural P systems in uniform as well as semi-uniform ways [7, 9–13, 15–17]. Let M be a decision problem. If the problem M is solved in a semi-uniform manner, then for each instance I of M, a spiking neural P system \(\Pi _{I, M}\) is constructed in polynomial time (with respect to the size of the instance I). The structure and initial configuration of the system \(\Pi _{I, M}\) depend on the instance I. Moreover, the system \(\Pi _{I, M}\) halts (or it may emit a specified number of spikes within a time interval) if and only if I is a positive instance of the decision problem M. A uniform solution of M consists of a family \(\{\Pi _{M}(n)\}_{n \in \mathbb {N}}\) of SNP systems such that, for each instance I of M of size n, a polynomial number of spikes (with respect to n) is introduced into a specified input neuron of \(\Pi _{M}(n)\). Moreover, the system \(\Pi _{M}(n)\) halts if and only if I is a positive instance of M. Uniform solutions are thus tied to the structure of the problem rather than to a single instance of the decision problem. This feature makes uniform solutions to decision problems preferable to semi-uniform ones. Also, in order to obtain a semi-uniform solution, the SNP system does not require any specific input neuron. However, in the case of uniform solutions, the system must have one or more specified input neurons which receive the description of an instance of the decision problem in the form of a spike train.

The SAT problem (or the Boolean satisfiability problem) [23] is a well-known NP-complete decision problem. Each instance is a formula in propositional logic with variables obtaining values TRUTH or FALSE. The SAT decision problem is the problem of determining whether there exists an assignment of truth values to the variables such that the whole formula evaluates to TRUTH.

Let us consider an instance represented by the formula \(\gamma _{n, m} = C_1 \wedge C_2 \wedge \cdots \wedge C_m\) in the conjunctive normal form, where \(C_i\) \((1 \le i \le m)\) represent the clauses. Each clause is a disjunction of literals of the form \(x_j\) or \(\lnot x_j\), where \(x_j\) are logical variables, \(1\le j \le n.\) Moreover, the class of SAT instances with n variables and m clauses is denoted by SAT(n, m). So \(\gamma _{n,m} \in SAT(n,m)\). In order to solve the SAT problem, at first we have to encode the instance \(\gamma _{n,m}\) using spikes in the SNPS, so that the encoded instance could be sent to the input neuron. In this work, we consider the SNPS model with coloured spikes, i.e. different variables are encoded by different types of spikes. The encoding of the instance \(\gamma _{n,m}\) is as follows:

where

In addition to \(\alpha _{i,j},\) the encoding of the instance contains other auxiliary spikes. The term \(a^{n+1}\) is added at the beginning in order to give the system a necessary initial period during which it generates an exponential workspace with \(2^n\) neurons. The encoding of each clause is separated by \(a_c\) and the end of the encoding is identified by \(a_f\).
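
The shape of the input spike train described above can be illustrated by a small Python sketch. It is written under explicit assumptions: clauses are given as sets of non-zero integers (j for \(x_j\), \(-j\) for \(\lnot x_j\)), spikes are represented by strings, and the function name `encode` is hypothetical. The symbol sent for a variable that does not occur in a clause is also an assumption of this sketch; it is passed in as the hypothetical parameter `absent` and defaults to the plain spike a.

```python
def encode(clauses, n, absent="a"):
    """Build the spike train for a CNF formula with n variables.

    clauses: list of sets of non-zero ints; j stands for x_j, -j for (not x_j).
    absent:  ASSUMPTION of this sketch: symbol used when x_j does not occur in a clause.
    """
    train = ["a"] * (n + 1)              # initial buffer a^{n+1}
    for clause in clauses:
        for j in range(1, n + 1):        # one symbol per variable position
            if j in clause:
                train.append(f"a_{j}")   # literal x_j occurs in the clause
            elif -j in clause:
                train.append(f"a_{j}'")  # literal (not x_j) occurs in the clause
            else:
                train.append(absent)
        train.append("a_c")              # end of the clause
    train.append("a_f")                  # end of the whole formula
    return train

# (x1 or not x2) and (x2 or x3), n = 3
train = encode([{1, -2}, {2, 3}], n=3)
```

With one symbol per variable position, the train has length \((n+1) + m(n+1) + 1\), which for this example is 13.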

The spiking neural P system with coloured spikes and division rules solving the instances in SAT(n, m) is described below.

3.1 The SNPS description

The structure of the SNPS with coloured spikes and division rules is as follows: \(\Pi _{n,m} = (S, H, syn, \sigma _{1_0}, \sigma _{1_0'}, \sigma _{n+1}, \sigma _{n+2}, \sigma _{n+3}, \sigma _{n+4}, \sigma _{n+5}, R, in, out)\) where

- \(S = \{ a_i, a_i'\ |\ 1\le i\le n\} \cup \{a, a_s, a_c, a_f\}.\)
- \(H = \{i, i', i_0, i_0' \ |\ i = 1, 2, \ldots , n \} \cup \{ n+1, n+2, n+3, n+4, n+5\} \cup \{in, out\} \cup \{t_i, f_i\ |\ i = 1, 2, \ldots , n \}.\)
- \(syn = \{(i, t_i), (i', f_i)\ |\ i = 1, 2, \ldots , n\} \cup \{ (n+2, n+1), (n+2, n+3), (n+3, n+2), (n+4, n+2), (n+4, n+3) \} \cup \{ (in, 1_0), (in, 1_0'), (in, n+5), (n+5, t_1), (n+5, f_1) \} \cup \{(t_1, out), (f_1, out) \}.\)
- The initial configuration of the system contains neurons with labels in, out, \(1_0, 1_0', n+1, n+2, n+3, n+4, n+5\). The neurons with labels \(1_0, 1_0', n+2, n+3\) and \(n+4\) contain the spike a, and the remaining neurons contain no spike.
- Rules in R are divided into four modules: (1) generating module; (2) input module; (3) checking module; (4) output module.
  - (1) Rules in the generating module:
    - \([a]_{i_0} \rightarrow []_{i}\,||\,[]_{(i+1)_0}; i = 1, 2, \ldots , n-2\);
    - \([a]_{(n-1)_0} \rightarrow []_{n-1}\,||\,[]_{n}\);
    - \([a]_{i_0'} \rightarrow []_{i'}\,||\,[]_{(i+1)_0'}; i = 1, 2, \ldots , n-2\);
    - \([a]_{(n-1)_0'} \rightarrow []_{(n-1)'}\,||\,[]_{n'}\);
    - \([a]_{t_i} \rightarrow []_{t_{i+1}}\,||\,[]_{f_{i+1}}; i = 1, 2, \ldots , n-1\);
    - \([a]_{f_i} \rightarrow []_{t_{i+1}}\,||\,[]_{f_{i+1}}; i = 1, 2, \ldots , n-1\);
    - \([a]_{n+1} \rightarrow []_{t_1}\,||\,[]_{f_1}\);
    - \([a \rightarrow a]_{n+2}\);
    - \([a^{2} \rightarrow \lambda ]_{n+2}\);
    - \([a \rightarrow a]_{n+3}\);
    - \([a^{2} \rightarrow \lambda ]_{n+3}\);
    - \([a \rightarrow a; n+1]_{n+4}\);
    - \([S^* / a_c \rightarrow a_c]_{n+5}\);
    - \([S^* / a_f \rightarrow a_f]_{n+5}.\)
  - (2) Rules in the input module:
    - \([a_i \rightarrow a_i]_{in}; i = 1, 2, \ldots , n\);
    - \([a_i' \rightarrow a_i']_{in}; i = 1, 2, \ldots , n\);
    - \([a \rightarrow a]_{in}\);
    - \([a_c \rightarrow a_c]_{in}\);
    - \([a_f \rightarrow a_f]_{in}\);
    - \([S^{*} / a_i \rightarrow a]_i; i = 1, 2, \ldots , n\);
    - \([S^{*} / a_i' \rightarrow a]_{i'}; i = 1, 2, \ldots , n\).
    - Note: the last two rules are not seen in Fig. 1 as the neurons \(\sigma _i\), \(i = 1, 2, \ldots , n,\) appear during the generating phase.
  - (3) Rules in the checking module:
    - \([a_saa / a \rightarrow a_s]_{t_n}\);
    - \([a_sa_c \rightarrow \lambda ]_{t_n}\);
    - \([a_sa_ca / a_ca \rightarrow a_s]_{t_n}\);
    - \([a_sa_f \rightarrow a]_{t_n}\);
    - \([a_saa / a \rightarrow a_s]_{f_n}\);
    - \([a_sa_c \rightarrow \lambda ]_{f_n}\);
    - \([a_sa_ca / a_ca \rightarrow a_s]_{f_n}\);
    - \([a_sa_f \rightarrow a]_{f_n}.\)
  - (4) Rules in the output module:
    - \([a_s^{+} a^{+} / a \rightarrow a]_{out}.\)

The initial structure of the SNPS with coloured spikes and division rules solving an instance of the SAT problem contains 9 neurons (see Fig. 1). Computation of the SNPS is divided into four stages: (1) generating; (2) input; (3) checking; (4) output.

In the generating stage, the division rules are used to create an exponential number of neurons which are further used during the input and checking stages. In the input stage, the input neuron receives the encoded instance of the SAT problem. This stage overlaps with the checking stage, during which the SNPS verifies whether any assignment of values to the variables \(x_1, x_2, \ldots , x_n\) satisfies all the clauses \(C_i\) \((1 \le i \le m)\) present in the propositional formula \(\gamma _{n,m}\). Finally, if the output neuron spikes, it confirms that the formula \(\gamma _{n,m}\) is satisfiable.

3.2 Generating stage

Neurons \(1_0\) and \(1_0'\) initially contain the spike a and the rules \([a]_{1_0} \rightarrow []_{1}\,||\,[]_{2_0}\) and \([a]_{1_0'} \rightarrow []_{1'}\,||\,[]_{2_0'},\) respectively. Thus, the neuron \(\sigma _{1_0}\) creates two neurons with labels 1 and \(2_0,\) and the neuron \(\sigma _{1_0'}\) creates two neurons with labels \(1'\) and \(2_0'\) at time \(t=1\).

The input neuron has synaptic connections to the neurons with labels \(1_0\) and \(1_0',\) which are inherited by the neurons \(1, 1',\) \(2_0\) and \(2_0'.\) At times \(i=1,2,\dots ,n+1,\) the input neuron receives the spike a from the input spike train and at times \(i=2,3,\dots ,n-1\) it sends the spike to neurons \(i_0\) and \(i_0'\) \((2\le i\le n-1).\) At times \(i=n,\) \(n+1\) and \(n+2,\) the input neuron sends spikes, too, but no neurons with labels \(i_0\) and \(i_0'\) \((n\le i\le n+2)\) exist.

Neurons \(i_0\) and \(i_0'\) \((2\le i\le n-2)\) contain the rules \([a]_{i_0} \rightarrow []_{i}\,||\,[]_{(i+1)_0}\) and \([a]_{i_0'} \rightarrow []_{i'}\,||\,[]_{(i+1)_0'},\) respectively, i.e. the neuron \(\sigma _{i_0}\) creates two neurons with labels i and \((i+1)_0,\) and the neuron \(\sigma _{i_0'}\) creates two neurons with labels \(i'\) and \((i+1)_0'\) \((2 \le i \le n-2).\)

Finally, at step \(t=n-1,\) neurons with labels \((n-1)_0\) and \((n-1)_0'\) divide and create neurons with labels \(n-1,\) n, \((n-1)'\) and \(n'.\) So after application of all these division rules, there is a layer of neurons \(\sigma _i,\) \(\sigma _{i'}\) \((1\le i\le n)\) shown in Fig. 7, and the input neuron has synaptic connections to all of them.

Simultaneously, the neurons with labels \(n+1, n+2, n+3\) and \(n+4\) create subsequently \(2^{n}\) neurons which will be used in the checking stage. The circuit controlling the generating stage is depicted in Fig. 2. Initially, the neurons \(n+2, n+3\) and \(n+4\) contain one spike. At time \(t = 1\), \(\sigma _{n+2}\) and \(\sigma _{n+3}\) spike and \(\sigma _{n+1}\) receives a spike at time \(t = 2\). At \(t = 2\), the rule \([a]_{n+1} \rightarrow []_{t_1}\,||\,[]_{f_1}\) is applied (Fig. 3). So at \(t = 3\), two new neurons with labels \(t_1\) and \(f_1\) are created (Fig. 4).

Note that the neuron \(\sigma _1\) (resp. \(\sigma _{1'}\)) is connected to \(\sigma _{t_1}\) (resp. \(\sigma _{f_1}\)) using the synaptic connections from the synapse dictionary. Moreover, \(\sigma _{t_1}\) and \(\sigma _{f_1}\) are connected to the output neuron with the synaptic connections from the synapse dictionary (see Fig. 4). The neuron \(\sigma _{t_1}\) contains the rule \([a]_{t_1} \rightarrow []_{t_2}\,||\,[]_{f_2}\) and the neuron \(\sigma _{f_1}\) contains the rule \([a]_{f_1} \rightarrow []_{t_2}\,||\,[]_{f_2}\).

At the same time \(t=3,\) neurons \(\sigma _{t_1}\) and \(\sigma _{f_1}\) receive the spike a from \(\sigma _{n+2}\). Hence, at \(t = 4\), each of \(\sigma _{t_1}\) and \(\sigma _{f_1}\) is divided into \(\sigma _{t_2}\) and \(\sigma _{f_2}\). Again, these neurons receive one spike from \(\sigma _{n+2}\) and they are divided at the next step. This process continues until \(t = n+2\). Newly created neurons \(t_i, f_i\) \((1\le i\le n)\) also obtain further synapses due to the synapse dictionary, as described above. The system obtained after \((n+2)\) steps is shown in Fig. 7.
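
How the repeated divisions produce one checking neuron per truth assignment can be illustrated by the following sketch. It tracks only the incoming synapses from the neurons \(\sigma _i\) and \(\sigma _{i'}\) and ignores spikes and timing. The function name `grow_checking_layer` and the string labels are hypothetical, and the sketch assumes that each child inherits its parent's incoming synapses and gains the one prescribed for its own label by the synapse dictionary, i.e. \((i, t_i)\) or \((i', f_i)\).

```python
def grow_checking_layer(n):
    """Return, for every neuron of the final layer, the list of labels of the
    neurons sigma_i / sigma_i' that have a synapse to it."""
    # sigma_{n+1} divides into t_1 and f_1; dictionary synapses (1, t_1) and (1', f_1)
    layer = [["1"], ["1'"]]
    for i in range(2, n + 1):
        # every neuron divides into t_i and f_i; each child keeps the inherited
        # synapses and gains (i, t_i) or (i', f_i) from the dictionary
        layer = [incoming + [label] for incoming in layer for label in (str(i), f"{i}'")]
    return layer

layer = grow_checking_layer(3)
```

For \(n = 3\) the final layer has \(2^3 = 8\) neurons, each with three incoming synapses, and no two neurons share the same combination.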

Finally, the rule \(a \rightarrow a; n+1\) in \(\sigma _{n+4}\) is applied at time \(t = n+2\) and thus both \(\sigma _{n+2}\) and \(\sigma _{n+3}\) receive one spike at \(t = n+3\). Next, the rule \(a^{2} \rightarrow \lambda\) is applied and both spikes in \(\sigma _{n+2}\) and \(\sigma _{n+3}\) are consumed. So no spikes remain inside them and they are inactive from now on.

3.3 Input stage

Recall that the input neuron receives the encoding of an instance of the SAT problem with n variables and m clauses, i.e. \(\gamma _{n,m}\), where

The encoding of each clause is terminated by \(a_c\), and the end of the whole input is marked by \(a_f\). The initial buffer \(a^{n+1}\) delays the input of the clauses by \(n+1\) steps, giving the SNPS enough time to generate \(2^{n}\) checking neurons. The input neuron in has the following rules: (1) \(a \rightarrow a;\) (2) \(a_i \rightarrow a_i\ (1 \le i \le n);\) (3) \(a_i' \rightarrow a_i'\ (1 \le i \le n);\) (4) \(a_c \rightarrow a_c;\) (5) \(a_f \rightarrow a_f\). Initially, the input neuron is empty, and when it receives \(a, a_i, a_i', a_c\) or \(a_f\) as input, it spikes and sends the same spike to the neurons with labels \(i, i'\) \((1 \le i \le n)\) and \(n+5\).

At time \(t = n + 2\), the input neuron receives \(\alpha _{1,1}\) as input. Since the input neuron contains the rules \([a_i \rightarrow a_i]_{in}; [a_i' \rightarrow a_i']_{in}\) \((1 \le i \le n)\), it will spike immediately. The neurons with label i contain the rule \([S^{*} / a_i \rightarrow a]_i\) \((1 \le i \le n)\) and neurons with label \(i'\) contain the rule \([S^{*} / a_i' \rightarrow a]_{i'}\) \((1 \le i \le n)\). These rules are activated upon the existence of the literal \(x_i\) (resp. \(\lnot x_i\)) in a clause. If \(\sigma _i\) spikes using the rule \([S^{*} / a_i \rightarrow a]_i\), it signals that the literal \(x_i\) is present in a clause. Similarly, the use of the rule \([S^{*} / a_i' \rightarrow a]_{i'}\) in \(\sigma _{i'}\) signals the presence of \(\lnot x_i\) in a clause.

3.4 Checking stage

After the generating stage, the input neuron is connected to neurons \(\sigma _i\) and \(\sigma _{i'}\ (1 \le i \le n)\). These neurons, in turn, are connected to the checking layer consisting of \(2^n\) neurons labelled \(t_n\) or \(f_n,\) see Fig. 7. Each of the checking neurons \(\sigma _{t_n}\) or \(\sigma _{f_n}\) has exactly n incoming synapses from the neurons \(\sigma _i\) or \(\sigma _{i'}\ (1 \le i \le n)\) of the input module. These synapses represent one of the \(2^{n}\) possible assignments of ‘TRUTH’ or ‘FALSE’ to the n variables of the formula. The structure of these synapses was created either by inheritance or by the synapse dictionary during the generating stage. The incoming synapses are indicated under the checking neurons in Figs. 4, 5, 6, and 7 by expressions in parentheses. Let us consider the assignment \(t_1 t_2 f_3 \ldots f_n\) (i.e. the first two variables have ‘TRUTH’ and the remaining ones have ‘FALSE’). The corresponding checking neuron has synapses from the neurons \(\sigma _1,\) \(\sigma _2,\) \(\sigma _{3'},\) \(\dots ,\) \(\sigma _{n'}\) in the input module.

The input module receives encoded clauses one by one. Spiking of the neuron \(\sigma _i\) in the input module signals the presence of the literal \(x_i\) in the clause, and spiking of the neuron \(\sigma _{i'}\) signals the presence of the literal \(\lnot x_i.\) Therefore, each of the neurons \(\sigma _{t_n}\) or \(\sigma _{f_n}\) obtains one or more spikes a if its assignment satisfies the clause. These neurons contain the rules (1) \(a_s a a / a \rightarrow a_s;\) (2) \(a_s a_c \rightarrow \lambda ;\) (3) \(a_s a_c a / a_c a \rightarrow a_s;\) (4) \(a_s a_f \rightarrow a\).

Initially, neurons in the checking module contain the spike \(a_s.\) If a checking neuron receives the spike a from the input module, no rule can be applied. When another spike a arrives, the rule \(a_s aa / a \rightarrow a_s\) is applied and only one spike a remains in the neuron. When the encoding of the clause is read completely, the spike \(a_c\) is received from \(\sigma _{n+5}\). If the assignment of a checking neuron satisfies the clause, the neuron now has the spikes \(a_s a_c a\) and the rule \(a_s a_c a / a_c a \rightarrow a_s\) is applied. Otherwise, the neuron contains the spikes \(a_s a_c\) and they are consumed by the rule \(a_s a_c \rightarrow \lambda\). Since the neuron loses the spike \(a_s\), no further computation will take place inside it.

3.5 Output stage

The output neuron can receive spikes \(a_s\) due to the application of the rules \(a_saa / a \rightarrow a_s\) and \(a_sa_ca / a_ca \rightarrow a_s\) in checking neurons during the checking stage. However, these spikes are ignored. Finally, when the formula is completely read, the checking neurons receive the spike \(a_f\) from \(\sigma _{n+5}\). If any of them still has the spike \(a_s\) (meaning that its assignment satisfies all clauses), it spikes using the rule \(a_sa_f \rightarrow a\) and the spike a is sent to the output neuron. Next, the rule \(a_s^{+} a^{+} / a \rightarrow a\) is applied in the output neuron, confirming that the formula is satisfiable.
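
The behaviour of the checking and output stages can be summarised by a small rule-level simulation. This is a simplified sketch: it abstracts away timing and the intermediate neurons, assumes that a checking neuron receives one spike a for every literal of the current clause that agrees with its assignment, and represents clauses as sets of non-zero integers (j for \(x_j\), \(-j\) for \(\lnot x_j\)). The names `checking_neuron` and `satisfiable` are hypothetical.

```python
from itertools import product

def checking_neuron(assignment, clauses):
    """assignment: dict j -> bool. Return True if the neuron still holds a_s
    when a_f arrives, i.e. if it fires the rule a_s a_f -> a."""
    has_as, a = True, 0                      # the neuron starts with the spike a_s
    for clause in clauses:
        for lit in clause:
            if assignment[abs(lit)] == (lit > 0):
                a += 1                       # spike a from sigma_j or sigma_j'
                if has_as and a == 2:
                    a = 1                    # rule a_s a a / a -> a_s
        # the spike a_c arrives from sigma_{n+5}
        if has_as and a == 1:
            a = 0                            # rule a_s a_c a / a_c a -> a_s
        elif has_as:
            has_as = False                   # rule a_s a_c -> lambda
    return has_as

def satisfiable(clauses, n):
    """The output neuron spikes if at least one checking neuron sends the spike a."""
    return any(
        checking_neuron(dict(zip(range(1, n + 1), bits)), clauses)
        for bits in product([True, False], repeat=n)
    )
```

For instance, `satisfiable([{1, -2}, {2, 3}], 3)` holds, while `satisfiable([{1, 2}, {-1}, {-2}], 2)` does not, since no assignment satisfies all three clauses.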

4 Discussion

In this section, we compare the parameters of our solution to the SAT problem using an SNPS with coloured spikes and division rules with those of two other published papers [13, 15] presenting similar solutions to SAT with SNP systems. Both papers use neuron division rules; the authors of [13] also use neuron dissolution rules. We compare five parameters of descriptional complexity of the used SNP systems and also the running time complexity. The results are summarized in the table below, assuming a solution to an instance of SAT(n, m), i.e. with m clauses and n variables.

A more detailed analysis of the running time shows that the generating stage in our paper requires \((n+1)\) steps, during which the exponential workspace is created. The total number of steps required for the following input stage, reading the encoded formula via the input neuron, is \(m(n+1) + 1.\) The checking stage largely overlaps with the input stage and requires two more steps to complete. Finally, the output stage requires only one step.
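
As a check, adding up the stage lengths stated above (counting only the two extra steps of the checking stage, since the rest of it overlaps with the input stage) reproduces the total running time listed for our construction in the comparison table:

\[
\underbrace{(n+1)}_{\text{generating}} + \underbrace{m(n+1)+1}_{\text{input}} + \underbrace{2}_{\text{checking}} + \underbrace{1}_{\text{output}} = nm + n + m + 5 .
\]

For example, for \(n = 3\) and \(m = 2\) this gives \(4 + 9 + 2 + 1 = 16\) steps.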

It follows that all descriptional complexity parameters of our model have significantly lower values than in the two compared papers. More specifically, the number of neurons, the number of neuron labels, the size of the synapse dictionary and the number of rules used in our solution are significantly lower than in previous solutions, and we achieve this result using only 5 initial spikes. In particular, the number of rules is linear in n, while in the previously reported solutions it is quadratic or even exponential. Note that the coloured spikes largely help to organize the work of the system and allow for these simplifications.

The only exception is the running time, which is \(\mathcal {O}(n+m)\) in [13], while our SNPS runs in time \(\mathcal {O}(nm).\) The explanation is simple: the authors of [13] use n input neurons and a special encoding of the formula in which each clause is sent in parallel to the n designated input neurons in just one step. Therefore, the input stage in [13] takes only \(\mathcal {O}(m)\) steps. We conjecture that such an input encoding can also be employed in our construction, reducing the total running time to \(\mathcal {O}(n+m).\)

| Resources | Wang et al. [15] | Zhao et al. [13] | This paper |
|---|---|---|---|
| Initial number of neurons | 11 | \(3n + 5\) | 9 |
| Initial number of spikes | 20 | \(2m + 3\) | 5 |
| Number of neuron labels | \(10 n + 7\) | \(2^{n} + 11\) | \(6n + 7\) |
| Size of synapse dictionary | \(6n + 11\) | \(5n + 5\) | \(2n + 12\) |
| Number of rules | \(2n^{2} + 26 n + 26\) | \(n 2^{n}+ \frac{1}{3} (4^{n} - 1) + 9n + 5\) | \(8n + 16\) |
| Time complexity | \(4n + nm + 5\) | \(2n + m + 3\) | \(nm + n+m + 5\) |
| Number of neurons generated throughout the computation | \(2^{n} + 8n\) | \(2^{n+1} - 2\) | \(2^{n} + 2n\) |
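
Three entries of the "This paper" column (neuron labels, synapse dictionary and rules) can be recounted directly from the sets H, syn and R given in Sect. 3.1. The following Python sketch does this; the function name `resources` is hypothetical, and each rule scheme indexed by i is counted once per value of i, which is an assumption about how the table entries were obtained.

```python
def resources(n):
    """Recount labels, dictionary synapses and rules of the construction for n variables."""
    # H: i, i', i_0, i_0' (4n); n+1..n+5 (5); in, out (2); t_i, f_i (2n)
    labels = 4 * n + 5 + 2 + 2 * n
    # syn: (i, t_i), (i', f_i) (2n); control circuit (5); in/n+5 part (5); to out (2)
    synapses = 2 * n + 5 + 5 + 2
    # generating module: divisions of i_0 and i_0' (2(n-1)), of t_i and f_i (2(n-1)),
    # of n+1 (1); rules of n+2, n+3, n+4 (5); rules of n+5 (2)
    generating = 2 * ((n - 2) + 1) + 2 * (n - 1) + 1 + 5 + 2
    # input module: a_i and a_i' in the input neuron (2n); a, a_c, a_f (3);
    # rules of the neurons i and i' (2n)
    input_rules = 2 * n + 3 + 2 * n
    checking, output = 8, 1
    return labels, synapses, generating + input_rules + checking + output

for n in range(2, 10):
    assert resources(n) == (6 * n + 7, 2 * n + 12, 8 * n + 16)
```

The loop confirms that the recount agrees with \(6n + 7\), \(2n + 12\) and \(8n + 16\) for small values of n.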

5 Conclusion

We presented a deterministic spiking neural P system with coloured spikes and division rules and used it to solve the SAT problem in linear time. We have shown that our model uses significantly fewer resources than those reported in [13, 15] to solve the SAT problem. It is fair to note that we use a linear number of different spikes with respect to the number n of variables in SAT, while the two mentioned papers use just one type of spike. However, most types of spikes in our construction are used just to encode the input formula. With only a few changes, we could use an input encoding and an input module with multiple input neurons similar to those in [13], which would lower the number of different spikes (colours) in our model to 5. Furthermore, this input module would reduce our running time to \(\mathcal {O}(n+m).\) This is left for future research. As another possible future research direction, one could focus on efficient solutions to PSPACE-complete problems using an SNPS model similar to that discussed in this paper.

Data availability

No datasets were generated or analysed during the current study.

References

Păun, G., Rozenberg, G., & Salomaa, A. (2010). The Oxford handbook of membrane computing. Oxford University Press Inc.

Ionescu, M., Păun, G., & Yokomori, T. (2006). Spiking neural P systems. Fundamenta Informaticae, 71(2–3), 279–308.

Leporati, A., Mauri, G., & Zandron, C. (2022). Spiking neural P systems: Main ideas and results. Natural Computing, 21(4), 629–649.

Ghosh-Dastidar, S., & Adeli, H. (2009). Spiking neural networks. International Journal of Neural Systems, 19(04), 295–308.

Song, B., Li, K., Orellana-Martín, D., Pérez-Jiménez, M. J., & Pérez-Hurtado, I. (2021). A survey of nature-inspired computing: Membrane computing. ACM Computing Surveys (CSUR), 54(1), 1–31.

Sosík, P. (2019). P systems attacking hard problems beyond NP: A survey. Journal of Membrane Computing, 1, 198–208.

Ishdorj, T.-O., Leporati, A., Pan, L., Zeng, X., & Zhang, X. (2010). Deterministic solutions to QSAT and Q3SAT by spiking neural P systems with pre-computed resources. Theoretical Computer Science, 411(25), 2345–2358.

Gutiérrez Naranjo, M. Á., & Leporati, A. (2008). Solving numerical NP-complete problems by spiking neural P systems with pre-computed resources. In Proceedings of the Sixth Brainstorming Week on Membrane Computing (pp. 193–210). Sevilla, ETS de Ingeniería Informática, 4-8 de Febrero, 2008

Leporati, A., & Gutiérrez-Naranjo, M. A. (2008). Solving Subset Sum by spiking neural P systems with pre-computed resources. Fundamenta Informaticae, 87(1), 61–77.

Leporati, A., Mauri, G., Zandron, C., Păun, G., & Pérez-Jiménez, M. J. (2009). Uniform solutions to SAT and Subset Sum by spiking neural P systems. Natural Computing, 8(4), 681.

Ishdorj, T.-O., & Leporati, A. (2008). Uniform solutions to SAT and 3-SAT by spiking neural P systems with pre-computed resources. Natural Computing, 7, 519–534.

Pan, L., Păun, G., & Pérez-Jiménez, M. J. (2011). Spiking neural P systems with neuron division and budding. Science China Information Sciences, 54, 1596–1607.

Zhao, Y., Liu, X., & Wang, W. (2016). Spiking neural P systems with neuron division and dissolution. PLoS One, 11(9), e0162882.

Ishdorj, T.-O., Leporati, A., Pan, L., & Wang, J. (2010). Solving NP-complete problems by spiking neural P systems with budding rules. In Membrane Computing: 10th International Workshop, WMC 2009, Curtea de Arges, Romania, August 24–27, 2009. Revised Selected and Invited Papers 10 (pp. 335–353). Springer.

Wang, J., Hoogeboom, H. J., & Pan, L. (2011). Spiking neural P systems with neuron division. In Membrane Computing: 11th International Conference, CMC 2010, Jena, Germany, August 24–27, 2010. Revised Selected Papers 11 (pp. 361–376). Springer.

Cabarle, F. G. C., Hernandez, N. H. S., & Martínez-del-Amor, M. Á. (2015). Spiking neural P systems with structural plasticity: Attacking the Subset Sum problem. In Membrane Computing: 16th International Conference, CMC 2015, Valencia, Spain, August 17–21, 2015, Revised Selected Papers 16 (pp. 106–116). Springer.

Song, T., Luo, L., He, J., Chen, Z., & Zhang, K. (2014). Solving Subset Sum problems by time-free spiking neural P systems. Applied Mathematics & Information Sciences, 8(1), 327.

Aman, B. (2023). Solving Subset Sum by spiking neural P systems with astrocytes producing calcium. Natural Computing, 22, 3–12.

Zhao, Y., Liu, Y., Liu, X., Sun, M., Qi, F., & Zheng, Y. (2022). Self-adapting spiking neural P systems with refractory period and propagation delay. Information Sciences, 589, 80–93.

Xu, L., & Jeavons, P. (2013). Simple neural-like P systems for maximal independent set selection. Neural Computation, 25(6), 1642–1659.

Song, T., Rodríguez-Patón, A., Zheng, P., & Zeng, X. (2017). Spiking neural P systems with colored spikes. IEEE Transactions on Cognitive and Developmental Systems, 10(4), 1106–1115.

Pan, L., Păun, G., & Pérez-Jiménez, M. J. (2009). Spiking neural P systems with neuron division and budding. In Proceedings of the Seventh Brainstorming Week on Membrane Computing (Vol. II, pp. 151–167). Sevilla, ETS de Ingeniería Informática, 2–6 de Febrero, 2009.

Rintanen, J. (2009). Planning and SAT. Handbook of Satisfiability, 185, 483–504.

Acknowledgements

This work was supported by the Silesian University in Opava under the Student Funding Plan, project SGS/9/2024.

Funding

Open access publishing supported by the National Technical Library in Prague.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Paul, P., Sosík, P. Solving the SAT problem using spiking neural P systems with coloured spikes and division rules. J Membr Comput (2024). https://doi.org/10.1007/s41965-024-00153-0

DOI: https://doi.org/10.1007/s41965-024-00153-0