Abstract

In this study, the class imbalance issue in vehicle detection was addressed. Specifically, certain classes such as Tow Truck were found to have significantly fewer samples than others such as normal trucks. This imbalance could adversely impact algorithm performance, favouring abundant classes over underrepresented ones. After thorough analysis, an adaptive dataset augmentation approach was proposed for the underrepresented classes. Evaluation was first performed on classic and state-of-the-art object detection methods. All experiments were undertaken on a small dataset called the Multimedia University Diversity Dataset (MMUVD). You Only Look Once version 8 nano (YOLOv8n) demonstrated the fastest training process and the highest mean average precision (mAP), at 0.686 for mAP50 and 0.439 for mAP50-95. After adaptive oversampling was applied to the dataset and YOLOv8n was evaluated again, mAP50 improved to 0.950 and mAP50-95 to 0.717. The contribution lay in identifying the most suitable detection algorithm for vehicle detection among those tested, and in the proposed adaptive oversampling method, which gave more consistent performance across all classes and enhanced the overall accuracy and reliability of the system.

1 Introduction

As the world continues to develop rapidly, transportation plays an increasingly vital role. Nevertheless, the growing number of vehicles on the roads has led to significant traffic congestion, particularly in metropolitan areas. Reducing vehicle exhaust pollution and energy consumption is crucial for enhancing the sustainability of social development. However, energy-efficient and regenerative energy recovery methods focus primarily on vehicle control and overlook their wider implications for the overall traffic environment [1]. Therefore, the Intelligent Transportation System (ITS) was proposed to reduce traffic congestion, improve driving experiences, and increase the safety, efficiency, and sustainability of transportation networks [2].

In computer vision, deep learning has gained popularity and is used for vehicle classification, autonomous vehicles, vehicle tracking, pothole detection, helmet detection, and many other tasks [3,4,5,6,7]. Object detection identifies and locates objects in images or videos, and vehicle detection is a crucial task in applications such as autonomous driving and intelligent transportation systems. Enhancing ITS with a Convolutional Neural Network (CNN) has been found to achieve remarkable accuracy [8].

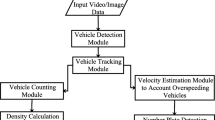

Deep learning-based traffic object detection, which makes use of video cameras, is a popular technique in autonomous driving applications [9]. As a result, relevant authorities are placing greater importance on vehicle detection, which is a critical component of ITS. Advances in sensor and computer technology have led to the ongoing evolution of vehicle detection methods. Using sensors, cameras, and other technologies, vehicle detection can identify vehicles on the road and collect data to monitor traffic flow and pinpoint areas of congestion.

In this study, the focus is on identifying vehicle types commonly found on Malaysian roads and categorizing each vehicle type specifically. Seventeen classes, including traffic lights, have been identified. The dataset exhibits an imbalance in class representation: certain classes (Sedan, Motorcycle, Red, and SUV) are overrepresented, while all other vehicle classes (excluding Micro, Truck, and Unknown) are underrepresented. These numerous underrepresented classes negatively affect the efficacy of the algorithm. To address this issue, an adaptive oversampling technique is proposed. The paper makes three distinct contributions. Firstly, a customized dataset consisting exclusively of vehicles is collected. Secondly, the most suitable vision-based object detection method for detecting vehicles is investigated, and the benefits and drawbacks of each approach are analyzed. Thirdly, a cost-effective solution to the class imbalance problem in vehicle detection is presented.

2 Literature review

First, the sensors and algorithms used in vehicle detection were reviewed in Sect. 2.1. After that, the two most common approaches to vehicle detection, namely LiDAR-based and vision-based detection, were discussed in Sects. 2.2 and 2.3, respectively. The vehicle datasets used for vehicle detection were then reviewed in Sect. 2.4. Finally, a comprehensive summary of the reviewed papers was presented in Sect. 3, along with an accompanying table.

2.1 Review on common methods and algorithms

In this section, the aim was to identify the most suitable method for vehicle detection in the context of Malaysian traffic. Wang et al. [10] reviewed vehicle detection sensors, including machine vision and Light Detection and Ranging (LiDAR). The paper stated that deep learning algorithms based on Convolutional Neural Networks (CNNs) had clear advantages, and that LiDAR was commonly combined with deep learning, as in [14,15,16]. Nevertheless, the study noted that the volume of LiDAR point cloud data was substantial, and that these highly sparse, uneven, and unstructured data made LiDAR more costly to use. Hence, it was concluded that the machine vision approach was the most suitable for the present use case in terms of effectiveness, and that its algorithms could be categorized into two groups: one-stage and two-stage detection algorithms.

After reviewing [10], the decision was made to investigate deep learning methods further. Dodia et al. [11] compared the performance of YOLO-v3, YOLO-v5, and YOLO-v7 in vehicle detection using an open-source video dataset. YOLO-v3 showed the lowest accuracy (mAP 84.30%) and the slowest detection speed (0.072 s per image). The newer model, YOLO-v5, improved both accuracy (mAP 94.76%) and speed (0.054 s per image). YOLO-v7 outperformed both, with a mAP of 95.74%, high recall (93.13%), a high F1 score (93.67%), and the fastest detection speed (0.025 s per image). Finally, the paper recommended exploring the latest YOLOv8 version. Manojkumar et al. [12] reviewed and compared Region-based Convolutional Neural Network (R-CNN), Fast R-CNN, and Single Shot Detector (SSD), and introduced YOLOv4 as an enhanced version achieving optimal speed and accuracy. YOLOv4, utilizing advanced techniques, outperformed the others with a 15 ms processing time, 67 FPS, an AP of 43.5, and an IoU of 0.65 on COCO.

2.2 LiDAR based detection

LiDAR is a popular ranging technology for vehicle detection. It can detect vehicles based on their shape, size, and distance from the sensor. LiDAR was commonly used for detection in the past, but with the rise of deep learning, computer vision became the more favorable option because it is less expensive. For example, Ding et al. [13] proposed long-distance object recognition, especially for nighttime military scenarios, combining LiDAR and YOLO to extract the maximum information from LiDAR data. The paper highlighted that existing LiDAR vehicle identification methods relied on manual feature extraction or shallow learning approaches. These methods could only capture shallow image features, resulting in weak recognition ability, poor robustness, and limited generalization. Additionally, their manually designed feature extractors led to high computational load and slow detection speed, hindering real-time dynamic detection. Hence, the research combined the high-sensitivity Gm-APD LiDAR with a deep learning method, named LIDAR-YOLO, which achieved high accuracy and speed. Dual-branch CNNs and 3D LiDAR data were also used in a vehicle detection and tracking system [14]. With two separately trained convolutional neural networks, the system segmented vehicles based on a dual-view representation of the 3D LiDAR point cloud. When tested on the KITTI benchmarks, the dual-branch classifier outperformed the single-branch classifier and was competitive with other state-of-the-art LiDAR-based techniques. However, LiDAR can be expensive and may require a large amount of computing power to analyze the point cloud data.

2.3 Vision based detection

On the other hand, vision-based detection used cameras to capture real-time video footage of a given area, such as a highway or an ordinary road. The system then employed computer vision algorithms such as YOLO to analyze the video feed and detect vehicles based on their shape and size. Common object detection methods could be divided into one-stage and two-stage methods. The one-stage method used a CNN directly for object detection, leading to faster recognition but lower accuracy. In contrast, the two-stage method employed a complete CNN to extract features and then leveraged these features to locate target objects within candidate regions. Although this approach significantly improved accuracy, it ran more slowly [10].

2.3.1 One stage detector

For one-stage detection, the most commonly used methods are YOLO and SSD. For YOLO, the latest high-performing versions were YOLOv7 and YOLOv8. Munir et al. [15] applied YOLOv7 with hyperparameter tuning to the detection and classification of 21 vehicle types in Bangladesh, using the Dhaka AI dataset with 3002 images and 24,368 annotations. They employed data augmentation techniques for dataset balance and diversity. Results indicated a 13.52% precision improvement and a 36.96% reduction in training loss with hyperparameter tuning. Afdhal et al. [16] assessed five YOLOv8 variants using a challenging dataset of mixed-traffic driving videos. These videos contained a wide range of objects, differing in scale, occlusion, and unpredictable interactions. The evaluation was conducted under three distinct conditions: normal daylight, daylight with blurriness, and night with low light and glare. YOLOv8x emerged as the top performer in accuracy and F-measure, whereas YOLOv8n excelled in inference time and FPS. For SSD, Xiaoying et al. [17] introduced a modified SSD algorithm for vehicle detection, which used ResNet50 as the backbone and SENet to strengthen feature extraction and fusion. It achieved an average accuracy of 83.09%, which was 3.23% higher than the original network. Liu et al. [18] proposed an image vehicle detection method that combined GhostNet and SSD. The proposed method was compared with SSD and YOLOv3 on the VOC2007 dataset. The findings revealed that it outperformed the other approaches in both mean average precision (mAP) and average detection time.

2.3.2 Two stage detector

For two-stage detection, the most commonly used method is Faster R-CNN, because it improves on R-CNN and Fast R-CNN. Harianto et al. [19] proposed Faster R-CNN as the primary model with VGG-16 as the feature extractor for vehicle detection. The paper applied six data augmentation methods to the training images, including rotation, flipping, brightness adjustment, and perspective transform, which enhanced image quality and corrected the shooting angle, resulting in better model performance. The paper employed Google Open Image Dataset v6 for both training and testing the model. The proposed method achieved a 19–24% improvement compared to the baseline without data augmentation.

2.4 Vehicle dataset

Because this study targets the Malaysian context, an investigation was first conducted to determine whether a similar dataset was already available. Wang et al. [20] collected and established a dataset of motor vehicle exhaust pictures, including different types of vehicles and exhausts. The dataset was expanded using Cutout and saturation transformation to improve the robustness of the network. The data augmentation improved the detection accuracy of the model by 8.5%. Another paper proposed a custom dataset (C-DSO) containing vehicle images collected from different sources, focused on vehicles moving on the roadways of the Chennai metropolitan area. The dataset had two versions: C-DSO-1 with depth angle view images and C-DSO-2 with 1080p video frames [21].

3 Summary

From the studies reviewed above, it was observed that the sensors commonly used for vehicle detection included LiDAR and cameras [10]. LiDAR was considered expensive and not cost-effective. Hence, a vision-based approach utilizing cameras would be a better option. From the observations in [11,12,13], it was concluded that one-stage detection algorithms such as YOLO had become more widely used for vehicle detection. In general, the two-stage detection approach outperformed the one-stage approach in terms of accuracy. However, in terms of real-time performance, which is essential for vehicle detection, the one-stage approach dominated. With the ongoing development of one-stage methods, their weaknesses in detecting tiny targets and their poor detection accuracy have progressively been resolved, and they are now widely used vehicle detection models [10]. No existing dataset that fits the present use case could be found. However, it was found that the authors in [27][28] used self-collected datasets because road conditions and use cases vary between countries. Finally, a comprehensive summary of the literature review was provided in Table 1.

4 Methods

The main aim of this research is to identify the most suitable object detection algorithm for vehicle detection and to address the underrepresentation of certain classes in the dataset by employing an adaptive oversampling technique.

4.1 Dataset description

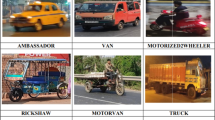

In this study, a customized vehicle dataset was collected using CCTV cameras on Malaysian urban roads and highways. The video was recorded 24 h every day at a frame rate of 25 fps, and frames were extracted as individual images at 25 fps using the Python library OpenCV, so that they were easier to label and feed into the object detection model. The annotation was performed using LabelImg, an annotation tool designed to label objects of interest in an image [22]. Labelling was done manually through visual inspection. During annotation, vehicles that were too small or too far away within the image were excluded, while blurry or unidentifiable vehicles were tagged as “unknown”. A verification step was performed to confirm the accuracy of the labelling. The dataset contains a total of 2336 images of vehicles and traffic lights, categorized into 17 classes, with a total of 14,563 annotations.
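The frame extraction step might look roughly like the sketch below. It is not the authors' script: the function names, the output file naming, and the `step` parameter (how many frames lie between two saved images) are illustrative assumptions, since the exact sampling interval is not fully specified in the text. Only standard OpenCV calls (`cv2.VideoCapture`, `read`, `cv2.imwrite`, `release`) are used.

```python
import os


def frame_indices(total_frames, step):
    """Indices of the frames kept when every `step`-th frame is saved."""
    if step < 1:
        raise ValueError("step must be >= 1")
    return list(range(0, total_frames, step))


def extract_frames(video_path, out_dir, step=1):
    """Save every `step`-th frame of a video as a JPEG and return how many were saved.

    `step` is an assumed parameter: step=1 keeps every frame, while step=25
    keeps one frame per second for 25 fps footage.
    """
    import cv2  # OpenCV; imported here so frame_indices has no dependency

    os.makedirs(out_dir, exist_ok=True)
    capture = cv2.VideoCapture(video_path)
    saved = 0
    index = 0
    while True:
        ok, frame = capture.read()
        if not ok:  # end of video or read error
            break
        if index % step == 0:
            name = os.path.join(out_dir, f"frame_{index:06d}.jpg")
            cv2.imwrite(name, frame)
            saved += 1
        index += 1
    capture.release()
    return saved
```

A larger `step` reduces near-duplicate frames, which also reduces manual labelling effort.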

This dataset has been introduced specifically for vehicle detection, as there is no official dataset available for this purpose. While the COCO and Pascal VOC datasets exist, they encompass a wide range of general object categories, and our focus is solely on vehicle types. For ease of reference, the collected dataset will be known as MMUVD Dataset henceforth. Samples of the different vehicle classes in the proposed MMUVD Dataset are presented in Fig. 1.

4.2 Experimental environment

The experiments were conducted on the Windows 11 platform, using a 13th Generation Intel® Core™ i9-13900K processor, an NVIDIA GeForce RTX 4070 Ti graphics card, and 128 GB of RAM. Python version 3.10.0 was used. For testing algorithms such as Faster R-CNN and SSD MobileNet v3, the open-source object detection toolbox PaddleDetection was used. For YOLO-v7 and YOLO-v8, the official GitHub repositories were downloaded. Finally, torch-cu121 was the PyTorch build used in every experiment.

4.3 Model implementation

In this paper, four types of state-of-the-art object detection methods, namely YOLOv8, YOLOv7, Faster R-CNN, and SSD MobileNet v3, were investigated. These architectures were selected due to their popularity and suitability for real-time video-based detection applications. The details of each approach are presented, and their performance is compared.

YOLO is a widely used object detection technique applied in multiple fields, such as autonomous driving, vehicle detection, and pedestrian detection. One of the factors behind YOLO's widespread adoption is its use of an end-to-end neural network that simultaneously predicts class probabilities and bounding boxes. It differs from prior object detection algorithms, which repurposed classifiers to identify objects. YOLO significantly outperformed the real-time object detection algorithms of its time, achieving state-of-the-art performance through a fundamentally different approach to detecting objects. YOLO makes all of its predictions in a single pass through one network, as opposed to Faster R-CNN and other algorithms that use a region proposal network (RPN) to find possible regions of interest and then classify those regions separately. Models that use an RPN perform multiple iterations for the same image, whereas YOLO needs only one, which makes it faster. The YOLO loss function comprises three elements: localization loss, classification loss, and confidence loss [23]. The localization loss, located in the first and second rows of Eq. (1), measures the errors in the predicted bounding box locations and sizes. It only counts the box responsible for detecting the object. The confidence loss, located in the third row of Eq. (1), assesses the accuracy of the objectness score, which indicates the presence of an object in a given region. The classification loss, located in the final row of Eq. (1), computes the error in classifying the objects within the boxes. In the equation, the term \({1}_{ij}^{\text{obj}}\) is 1 if box j and cell i match, and 0 otherwise. Conversely, the term \({1}_{ij}^{\text{noobj}}\) is 1 if box j and cell i do not match, and 0 otherwise.
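Eq. (1) is not reproduced in this text. For reference, the loss of the original YOLO formulation, which matches the row-by-row description above, can be written as follows. It is assumed here that Eq. (1) follows that formulation, with \(S^{2}\) grid cells, \(B\) boxes per cell, box parameters \((x, y, w, h)\), confidence \(C\), class probabilities \(p(c)\), and weighting factors \(\lambda_{\text{coord}}\) and \(\lambda_{\text{noobj}}\); hatted symbols denote predictions.

\[
\begin{aligned}
L_{\text{YOLO}} ={}& \lambda_{\text{coord}} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} {1}_{ij}^{\text{obj}} \left[ \left(x_{i}-\hat{x}_{i}\right)^{2} + \left(y_{i}-\hat{y}_{i}\right)^{2} \right] \\
&+ \lambda_{\text{coord}} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} {1}_{ij}^{\text{obj}} \left[ \left(\sqrt{w_{i}}-\sqrt{\hat{w}_{i}}\right)^{2} + \left(\sqrt{h_{i}}-\sqrt{\hat{h}_{i}}\right)^{2} \right] \\
&+ \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} {1}_{ij}^{\text{obj}} \left(C_{i}-\hat{C}_{i}\right)^{2} + \lambda_{\text{noobj}} \sum_{i=0}^{S^{2}} \sum_{j=0}^{B} {1}_{ij}^{\text{noobj}} \left(C_{i}-\hat{C}_{i}\right)^{2} \\
&+ \sum_{i=0}^{S^{2}} {1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left(p_{i}(c)-\hat{p}_{i}(c)\right)^{2}
\end{aligned}
\]

The square roots in the second row reduce the penalty for a given size error on large boxes relative to small ones.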

Faster R-CNN is an enhanced version that builds upon the advancements made in both R-CNN and Fast R-CNN [24]. Faster R-CNN distinguishes itself by the presence of a Region Proposal Network (RPN), which further improves its speed. There are two task layers within the Faster R-CNN architecture: the RPN and the Fast R-CNN layer. The loss function of Faster R-CNN is a multitask loss that combines the classification loss and the bounding box regression loss. \({L}_{cls}\) in Eq. (2) is the logarithmic loss over two classes, in which each region is classified as either background or object [25]. The term \({p}_{i}^{*}{L}_{\text{reg}}\) means that the regression loss is activated only for positive anchors \(\left({p}_{i}^{*}=1\right)\) and is disabled otherwise \(\left({p}_{i}^{*}=0\right)\).
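Eq. (2) is not reproduced in this text. Assuming it follows the standard Faster R-CNN multitask loss, it can be written as below, where \(p_{i}\) is the predicted object probability of anchor \(i\), \(p_{i}^{*}\) its ground-truth label, \(t_{i}\) and \(t_{i}^{*}\) the predicted and ground-truth box parameters, \(N_{cls}\) and \(N_{\text{reg}}\) normalization terms, and \(\lambda\) a balancing weight.

\[
L\left(\{p_{i}\},\{t_{i}\}\right) = \frac{1}{N_{cls}} \sum_{i} {L}_{cls}\left(p_{i}, p_{i}^{*}\right) + \lambda \frac{1}{N_{\text{reg}}} \sum_{i} {p}_{i}^{*}{L}_{\text{reg}}\left(t_{i}, t_{i}^{*}\right)
\]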

SSD MobileNet has gained popularity as an object detection method in recent years. Object detection approaches fall into two major types: one-stage and two-stage detectors [26]. R-CNN is a two-stage detector, while SSD and YOLO are one-stage detectors. An advantage of a one-stage detector is that it uses less computational power and is faster, as only one shot is required. As a result, one-stage detectors are often used for real-time object detection. SSD MobileNet V3 is a well-known one-stage detector. It features a slim network and depth-wise separable convolutions, making it suitable for low-computing devices such as mobile phones. Despite its speed, this model achieves a high level of accuracy. As a single convolutional network, the SSD architecture is trained to simultaneously predict bounding box coordinates and classify them, which enables the model to be trained end-to-end. The loss function used in SSD MobileNet includes the localization loss, Lloc, and the confidence loss, Lconf, which are shown in Eqs. (3) and (4) [27]. Lloc in Eq. (3) is the smooth L1 loss between the predicted box and the ground-truth box parameters, which include the offsets for the center point (cx, cy), width (w), and height (h) of the bounding box, while Lconf in Eq. (4) is the softmax loss over multiple class confidences (c).
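Eqs. (3) and (4) are not reproduced in this text. Assuming they follow the standard SSD formulation, they can be written as below, where \(x_{ij}^{p} \in \{0,1\}\) indicates that default box \(i\) is matched to ground-truth box \(j\) of category \(p\), \(l\) denotes the predicted box, \(\hat{g}\) the encoded ground-truth box, \(N\) the number of matched default boxes, and \(Pos\) and \(Neg\) the sets of positive and negative default boxes.

\[
L_{loc}(x, l, g) = \sum_{i \in Pos}^{N} \sum_{m \in \{cx, cy, w, h\}} x_{ij}^{k}\, \text{smooth}_{L1}\left(l_{i}^{m} - \hat{g}_{j}^{m}\right)
\]

\[
L_{conf}(x, c) = -\sum_{i \in Pos}^{N} x_{ij}^{p} \log\left(\hat{c}_{i}^{p}\right) - \sum_{i \in Neg} \log\left(\hat{c}_{i}^{0}\right), \qquad \hat{c}_{i}^{p} = \frac{\exp\left(c_{i}^{p}\right)}{\sum_{p} \exp\left(c_{i}^{p}\right)}
\]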

4.4 Adaptive oversampling

According to [15,19], data augmentation is an effective way to increase the number of training samples, using operations such as horizontal flip, scale, crop, histogram equalization, and contrast and brightness adjustments. In this paper, an adaptive oversampling technique derived from the principles of data augmentation is proposed. This method adaptively oversamples the underrepresented classes in a dataset characterized by class imbalance. As a result, the dataset expands from 2336 images and 14,563 annotations to 17,796 images and 156,432 annotations.
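When a geometric operation such as a horizontal flip is applied to an image used for detection, its bounding boxes must be transformed as well. The sketch below illustrates this for a single box. The function names and the `(xmin, ymin, xmax, ymax)` pixel box format are assumptions made for illustration and are not taken from the paper.

```python
def hflip_image(image):
    """Mirror an image, given as a list of pixel rows, left to right."""
    return [row[::-1] for row in image]


def hflip_box(box, image_width):
    """Mirror an (xmin, ymin, xmax, ymax) pixel box around the vertical axis.

    The old right edge becomes the new left edge, so xmin and xmax swap roles.
    """
    xmin, ymin, xmax, ymax = box
    return (image_width - xmax, ymin, image_width - xmin, ymax)


# A tiny 2x4 "image" and a box covering its left half.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
flipped_image = hflip_image(image)
flipped_box = hflip_box((0, 0, 2, 2), image_width=4)
```

Photometric operations such as brightness or contrast adjustment leave the boxes unchanged, so only the pixel values need to be modified for them.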

The proposed method takes two parameters: label_list and balance_rate. The label_list is a list of labels (e.g., class names) associated with their corresponding sample files, and the balance_rate is the ratio of the maximum class value to the total number of samples in the dataset. The method first creates a dictionary called dict_data, in which the keys are the class labels and the values are the numbers of samples associated with each class. Lines 2 to 12 of the function then read each XML file containing object annotations and count the occurrences of the different class names. Next, the function calculates the maximum class value and the total number of samples, and prepares a list called augmented_classes to hold the labels of the underrepresented classes.

If there is only one class in the dataset, the function returns augmented_classes, the maximum class value, and the dict_data. Otherwise, it iterates through the keys of the dict_data and checks if the ratio of the current class value to the total number of samples is less than or equal to the balance_rate divided by the number of classes. If the condition is met, the function adds the key to the augmented_classes list. The pseudocode of the algorithm is shown in Algorithm 1.
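The selection step described above can be sketched in Python as follows. This is a minimal reading of the description, not the authors' Algorithm 1: the function names are hypothetical, the annotations are assumed to be Pascal VOC style XML (the default LabelImg output, with one `<object><name>` element per labelled object), and the annotation documents are passed in as strings to keep the example self-contained.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def count_classes(xml_documents):
    """Build dict_data: class label -> number of annotated objects."""
    counts = Counter()
    for xml_text in xml_documents:
        root = ET.fromstring(xml_text)
        for obj in root.iter("object"):
            name = obj.findtext("name")
            if name:
                counts[name.strip()] += 1
    return dict(counts)


def find_underrepresented(dict_data, balance_rate):
    """Return (augmented_classes, max_class_value, dict_data)."""
    max_class_value = max(dict_data.values(), default=0)
    total = sum(dict_data.values())
    augmented_classes = []
    # With a single class (or no data) there is nothing to balance.
    if len(dict_data) <= 1 or total == 0:
        return augmented_classes, max_class_value, dict_data
    # A class is under-represented when its share of all samples is at
    # most balance_rate divided by the number of classes.
    threshold = balance_rate / len(dict_data)
    for label, count in dict_data.items():
        if count / total <= threshold:
            augmented_classes.append(label)
    return augmented_classes, max_class_value, dict_data


# Hypothetical counts, for illustration only.
example_counts = {"Sedan": 80, "Truck": 15, "Tow Truck": 5}
selected, largest, _ = find_underrepresented(example_counts, balance_rate=0.5)
```

With these hypothetical counts the threshold is 0.5 / 3, so "Truck" (share 0.15) and "Tow Truck" (share 0.05) are selected while "Sedan" (share 0.80) is not. How many augmented copies are then generated per selected class is not stated in the text and is left out of the sketch.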

Experimental fairness was ensured by testing all algorithms on the same validation dataset, which was set aside before augmentation was conducted. Figs. 2 and 3 depict the distribution of classes before and after data augmentation, allowing the composition of the dataset to be compared pre- and post-augmentation.

5 Results and discussion

This section is divided into four parts. Sect. 5.1 discusses the evaluation of classic object detection methods, including YOLOv3, YOLOx, RetinaNet and Cascade R-CNN, to compare the performance of one-stage and two-stage algorithms. Following this, Sect. 5.2 evaluates the effectiveness of adaptive oversampling. In Sect. 5.3, a comparison is made with state-of-the-art methods, encompassing the latest versions of YOLO, namely YOLOv7-tiny and YOLOv8n, as well as Faster R-CNN and SSD MobileNet v3. All experiments were conducted using the MMUVD dataset. Finally, the discussion is presented in Sect. 5.4.

5.1 Evaluation of classic object detection models

An experiment was conducted on classic object detection algorithms, including the one-stage and two-stage algorithms YOLOv3, YOLOx, RetinaNet and Cascade R-CNN, for 100 epochs to observe their performance. The comparison between training duration and accuracy is provided in Table 2. From the data in Table 2, it can be observed that the classic two-stage algorithm (Cascade R-CNN) has a mAP50 of 0.593 and a mAP50-95 of 0.362, the highest among all the algorithms in this section. However, it also demands a longer training duration of around 258 min, nearly twice as long as YOLOv3.

On the other hand, the one-stage algorithms YOLOv3 and YOLOx have slightly lower accuracy but much shorter training times, requiring only 142.80 and 193.80 min, respectively. This suggests a trade-off between accuracy and speed when choosing between one-stage and two-stage algorithms. Additionally, RetinaNet exhibited the lowest mAP scores among all the tested models. This could be attributed to the characteristics of the dataset, which may contain small or occluded objects. RetinaNet, as a one-stage detector, tends to struggle with accurately detecting small objects compared to its two-stage counterparts. Consequently, this limitation may have contributed to its lower performance in this evaluation.

However, it is worth noting that, among the state-of-the-art one-stage algorithms discussed in Sect. 5.3, YOLOv8n clearly outperformed both the classic two-stage algorithm Cascade R-CNN in this experiment and the state-of-the-art Faster R-CNN in Sect. 5.3. This result underscores the significance of ongoing enhancements in algorithm design and the optimization of neural network architectures in one-stage algorithms. These continual improvements have enabled one-stage algorithms to address the longstanding challenges of poor detection accuracy and limited target range, solidifying their position as reliable and widely used vehicle detection models across a diverse array of real-world applications and scenarios.
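The mAP50 and mAP50-95 figures reported in this section both rest on the intersection over union (IoU) between a predicted box and a ground-truth box: mAP50 counts a detection as correct at an IoU threshold of 0.50, while mAP50-95 averages the result over thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows only the IoU and threshold part, not the full precision-recall computation. The function names and the `(xmin, ymin, xmax, ymax)` box format are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# The ten thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(iou_value):
    """Thresholds at which a detection with this IoU counts as a match."""
    return [t for t in IOU_THRESHOLDS if iou_value >= t]
```

A detection with an IoU of 0.72, for example, counts as correct at the 0.50 to 0.70 thresholds but not at 0.75 and above, which is why mAP50-95 is always the stricter of the two metrics.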

5.2 Evaluating the effectiveness of adaptive oversampling

This section assesses the performance of the proposed adaptive oversampling approach. The model was trained using YOLOv8n, the best algorithm in the comparison with state-of-the-art methods in Sect. 5.3. It was observed that mAP50 and mAP50-95 increased significantly after adaptive oversampling, as shown in Table 3. This increase may be due to the creation of more instances of the underrepresented classes, which helps to balance the dataset.

Figures 4 and 5 show the confusion matrices before and after adaptive oversampling using YOLOv8n. The rows and columns of the confusion matrix represent the actual and predicted categories, respectively. The values on the diagonal indicate the proportion of correct category predictions, while the values elsewhere show the proportion of incorrect category predictions. The diagonal of the confusion matrix in Fig. 5 is darker than that in Fig. 4, that is, after the implementation of data augmentation. This signifies an improvement in the model's capacity to accurately predict the category of vehicles.
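The diagonal values discussed here can be obtained from raw counts by normalizing each row of the confusion matrix. The sketch below follows the row/column convention stated above (rows are actual classes, columns are predicted classes). The function names and the counts are hypothetical and are not taken from the experiments.

```python
def normalize_rows(matrix):
    """Convert a count-based confusion matrix into row-wise proportions."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([value / total if total else 0.0 for value in row])
    return normalized


def per_class_accuracy(matrix, labels):
    """Diagonal of the row-normalized matrix, keyed by class label."""
    normalized = normalize_rows(matrix)
    return {label: normalized[i][i] for i, label in enumerate(labels)}


# Hypothetical counts for two classes: rows are actual, columns are predicted.
counts = [[45, 5],
          [3, 7]]
accuracy = per_class_accuracy(counts, ["Sedan", "Trailer Truck"])
```

In this made-up example the minority class has the lower diagonal value (7 of 10 correct versus 45 of 50), which is the pattern that oversampling is intended to reduce.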

For a class with fewer samples, such as Trailer Truck, the confusion matrix value increases from 0.68 to 0.90 after data augmentation is applied, evaluated on the same validation dataset. Similarly, other classes such as “Bus”, “Truck”, “SUV” and “Pedestrian” show significant improvements in their confusion matrix values, demonstrating the effectiveness of data augmentation in addressing class imbalance and enhancing the model’s ability to generalize to diverse conditions.

The results underscore the significant positive impact of adaptive oversampling on the effectiveness of object detection models. They also demonstrate that adaptive oversampling increases the variability of the training data, which can help the model generalize better to different conditions and enhance its robustness. This is substantiated by the notable improvements observed in the confusion matrix values for various vehicle classes, indicating improved classification accuracy and reduced ambiguity in model predictions.

5.3 Comparison with state-of-the-art methods

After reviewing [11,12,13], the decision was made to experiment with comparable work. The comparison between training duration and accuracy is provided in Table 3. According to the findings, YOLOv8n performs better than the other state-of-the-art object detection algorithms, with mAP50 and mAP50-95 scores of 0.686 and 0.439, respectively. In comparison, YOLOv7-tiny scores 0.575 and 0.344. The improved performance of YOLOv8 compared to YOLOv7 may be credited to the novel Darknet-53 backbone network included in YOLOv8. This network is substantially faster and more accurate than the previous backbone used in YOLOv7. In addition, YOLOv8 replaced the traditional coupled head with a decoupled head that separates the classification and bounding box branches, which may let the model converge faster.

Faster R-CNN also achieves a good result, with 0.632 for mAP50 and 0.384 for mAP50-95 after 100 epochs, but it still falls behind YOLOv8. Moreover, it takes far longer to train than YOLOv8. This is because Faster R-CNN needs to pass through the network multiple times to detect regions of interest and classify them, while YOLO requires only one pass. SSD MobileNet v3 obtains 0.287 for mAP50 and 0.128 for mAP50-95. Its lower performance compared to the other object detection methods could be due to its architecture. Because it is designed to be lightweight and fast, SSD MobileNet v3 has fewer layers and parameters than the other models. This could make it more difficult to detect objects accurately, especially in scenarios with multiple objects or occlusions (Table 4).

5.4 Discussions

Several important observations were made during the experiments conducted in this study:

- Although two-stage algorithms like Cascade R-CNN initially demonstrated slightly better performance compared to one-stage algorithms in the evaluation of classic object detection methods, the rapid development of one-stage algorithms such as YOLOv8n has led to comparable or even superior performance in terms of mean Average Precision (mAP) and training duration when compared to two-stage algorithms.

- Four types of comparable object detection methods were investigated: YOLOv8, YOLOv7, Faster R-CNN, and SSD MobileNet v3. Their accuracy and training time on the MMUVD dataset were compared.

- It was observed that YOLOv8n delivers the best results for vehicle detection, exhibiting the greatest mAP and fastest training speed across all the experiments.

- By generating a broader variety of training samples, adaptive oversampling can improve the ability of the model to generalise effectively to new and unfamiliar data.

6 Conclusion

The aim of this research was to identify and categorize vehicles, since this is crucial for the functioning of ITS. Automobiles have unique characteristics that set them apart from other general objects. The research was facilitated by the availability of a dataset containing a diverse array of vehicle types. In the first trial, YOLOv8n demonstrated superior performance compared to the other object detection algorithms, achieving a mAP50 score of 0.686 and a mAP50-95 score of 0.439. The uneven distribution of the seventeen classes posed a difficulty for the object detection methods. After data augmentation was applied, mAP50 and mAP50-95 improved to 0.950 and 0.717, respectively, using the YOLOv8n model.

Building on the promising findings of the adaptive oversampling approach and the performance of YOLOv8n, further research might look at how these developments can be integrated into real-time ITS applications. Future work also aims to improve the generalization ability of the vehicle detection model by expanding the dataset to include a wider variety of scenarios, perspectives, and high-quality video/image captures, particularly under ideal lighting conditions. Further study may also examine the scalability of the proposed methods across a variety of traffic conditions, as well as the introduction of other vehicle types.

Data availability statement

The MMUVD dataset is available in the public Kaggle repository: https://www.kaggle.com/datasets/michaelgoh/roadvehicle.

Acknowledgements

The research is funded by TM R&D fund (MMUE/220023), 2022–2024.

Funding

TM R&D Fund [MMUE/220023], Kah Ong Michael Goh; Fundamental Research Grant Scheme [MMUE/220041], Kah Ong Michael Goh.

Author information

Contributions

Conceptualization, M.K.O.G.; methodology, T.C.; software, C.H.L.; validation, C.H.L.; formal analysis, T.S.O.; data curation, C.H.L. and M.K.O.G.; writing (original draft preparation), C.H.L.; writing (review and editing), T.C., T.S.O., and M.K.O.G.; supervision, T.C.; funding acquisition, M.K.O.G.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lim, C.H., Connie, T., Ong, T.S. et al. Visual-based vehicle detection with adaptive oversampling. Int. j. inf. tecnol. (2024). https://doi.org/10.1007/s41870-024-01977-w

DOI: https://doi.org/10.1007/s41870-024-01977-w