Abstract

The paper presents an innovative corpus study on Personal Experience as a pragmatic-discursive resource to express power in anonymous online interactions. Specifically, we explore a corpus-driven methodology to extract lexico-grammatical features typical of Personal Experience in representative samples (around 160,000 words) of 3 online fora. The method is rooted in Part of Speech and semantic domain keyness (Rayson in Int J Corpus Linguist 13(4):519–549, 2008), which we combine in Corpus Query Language queries to extract statistically relevant patterns in the data. Results show that the 3 datasets share a “core” set of key lexico-grammatical features. Furthermore, our findings align with the scientific literature exploring personal experiences and narratives in many different genres. This strongly supports the idea that our inductive protocol can be reliably used to break down the discursive textual function of Personal Experience into lower-level, scalable features. In other words, we suggest that our method can be used to extract “form” (i.e. lexical, grammatical, and syntactical units) from “function” (i.e. pragmatic and discursive annotations). Findings are discussed in the context of language and power in online interactions and in the context of building automatic feature detectors for the analysis of larger cross-genre corpora.

Introduction

The relationship between power and language can be traced back to Aristotle (2012), and it has continued to be a topic of debate for intellectuals such as Foucault (1970), Habermas (1984), and Bourdieu (1991). Scholars generally agree that in the relationship between power and its expression through language, ‘power constitutes and reproduces discourse, while at the same time being reshaped by discourse itself’ (Antonio et al., 2011: 139). Even though it is recognised that discourse itself shapes power relationships, most previous work on language and power has predominantly focused on settings with clear, identifiable power structures, with extensive contextual information that can be used to map power in communicative interactions. Fairclough (2001) famously discusses the relationship between power and language distinguishing between power in discourse (i.e., the ways in which language is used to assert power) and power behind discourse (i.e., how social structures and hierarchies shape and influence language use).

In the context of observable power settings, a wide range of social and communicative settings have been analysed, including education (e.g., Sinclair & Coulthard, 1975; Craig & Pitts, 1990), workplace (e.g., Darics, 2020; Kacewicz et al., 2014), healthcare (e.g., Ainsworth-Vaughn, 1998), and legal settings such as police interviews and the courtroom (e.g., Conley et al., 1979; Haworth, 2010). Other studies have instead focused on the realisation of power according to classic sociolinguistic variables such as class (e.g. Alexander, 2005; Kubota, 2003), gender (e.g., Gal, 2012; Lakoff, 2003), and age (e.g., Blum-Kulka, 1990). These studies typically set up a binary contrast between powerful and powerless individuals to investigate how inequality is both supported and revealed through language use. The language of these individuals is analysed in its low-level (often lexical) features, such as the use of intensifiers, hedges, tag questions, hesitations (Conley et al., 1979; Holtgraves & Lasky, 1999) or of plural vs singular personal pronouns (Kacewicz et al., 2014). In all these studies, however, extralinguistic variables such as social status are actively used to “measure” how powerful or powerless a certain individual is in conversation.

But what about situations in which none of this contextual information is given, such as in anonymous online communities? The advent of computer-mediated communication (CMC) has in fact allowed users to interact anonymously on designated platforms (like chatrooms, fora, etc). Crucially, in the online world, people can construct and present their online identity selectively (Hu et al., 2015; Kim et al., 2011) or create entirely new online personas which differ substantially from offline identities (for an in-depth review of the latest theories of online identity construction, see Huang et al., 2021). This uncertainty in identity construction is especially true in the context of anonymous criminal or dark web interactions, where users take extra measures to ensure their privacy is protected (Marwick, 2013).

The present study aims to contribute to the study of power in anonymous online spaces by building on a new framework of analysis developed by Newsome-Chandler and Grant (2024). The authors inductively developed a list of pragmatic-discursive textual functions that signal power (or experience) in interactions, called Power Resources. This innovative concept of different linguistic power tools in interaction allows for a nuanced understanding of how power is performed in anonymous online fora. In fact, Power Resources were inductively retrieved by manually coding a 160,000-word sample of 3 fora of different genres: a parenting discussion forum, a white nationalist forum, and a dark web child sexual abuse forum (see sections “Language as power in anonymous online communities” and “Data and methods” for more details).

Newsome-Chandler and Grant’s (2024) methodology is innovative and tackles many open questions in the literature. However, Power Resources are extracted from a comparatively small corpus (circa 160,000 words), and their annotation requires a lot of time from human coders. The question of how to scale this analysis to automatic or semi-automatic tagging of larger quantities of text remains unsolved. We aim to address this limitation by using corpus linguistics tools and methods to test whether Power Resources can be successfully broken down into lists of scalable features for automatic feature extraction.

As a case study to illustrate our method, we present here an in-depth analysis of one of these pragmatic-discursive functions, Personal Experience. Personal Experience as a power resource is here defined as posts (or parts of them) in which the writer claims to have expertise in the topic discussed via direct personal experience. For example, in a parenting discussion forum (see Sect. 2.1), a poster asks for advice on whether it is best to adopt domestically or internationally. Users of the forum respond with different types of advice, mostly based on their own experiences of adoption, such as the following in example (1).

(1) We adopted a mixed race (white and Asian) baby in <country>. Feel free to PM me if you’d like more info.

Here, the writer explicitly claims to have authority in the debate by providing evidence that they experienced a similar situation, therefore positioning themselves as an expert in the matter. We set out to answer the following research questions:

(1) Can the pragmatic-discursive Power Resource of Personal Experience in anonymous online interactions be systematically “broken down” into retrievable syntactic and/or semantic patterns? In other words, can we retrieve form from function?

(2) What, if any, are the syntactic-semantic features typical of the Power Resource Personal Experience?

In the next sections we provide some background on the theoretical underpinnings of this work. The remainder of this section outlines the main trends in the analysis of language and power in online settings (section “Language as power in anonymous online communities”) and the linguistic expression of personal experiences as a form of expertise (section “Personal experience as expertise”). Section “Data and methods” describes the data and the methods used in the study, while section “Data analysis and results” discusses the corpus analysis and results. Section 5 closes the paper with our conclusions and directions for future work.

Language as Power in Anonymous Online Communities

The concept of “linguistic power” is elusive at best, and this is especially true in online contexts where only intralinguistic features are available and no extralinguistic information is given. As mentioned, scholars researching language and power in non-CMC contexts have focused on low-level linguistic features. In these contexts, low-level features work well as they are supplemented by external data that inform the linguistic interaction. Scholars interested in power in online spaces, on the other hand, have variously recognised the need for a more nuanced approach in analysing how power and expertise are expressed and negotiated in language. Power in these contexts is defined as an interactional notion emerging through the exchange (i.e. Fairclough’s power in discourse) rather than reflecting external institutional or social power (i.e. Fairclough’s power behind discourse).

For example, Bolander’s (2013) study of blogs shows that power is exercised via conversational control (turn-taking, speakership, and topic control) and type of argument proposed. McKenzie (2018) looks at discourses of authority and motherhood on Mumsnet using feminist poststructuralism. The author is interested in how users negotiate, resist, and subvert socio-cultural discourses of motherhood, and finds different discursive and linguistic mechanisms through which contributors position themselves in different ways within this debate around identity and motherhood. Paulus et al. (2018) investigate online learning spaces with Conversation Analysis and focus on how learners use agreement, disagreement, and personal experiences. The authors find that these discursive tools are used in online discussions to “perform a variety of functions”, such as supporting and strengthening academic arguments, affiliating or distancing from other users, and claiming expertise and personal involvement in the topic discussed.

Notably, Perkins (2021) experimentally showed for the first time that low-level linguistic features are inadequate to capture power in online contexts, failing to clearly map onto social and institutional power differences in a dataset of emails from a hierarchical institution. Perkins’ work focused only on low-level linguistic features, including Part-of-Speech tags, intensifiers, pronouns, hedging, politeness, and taboo words. The study showed that it is very difficult to untangle “power” from other social factors and influences in language with low-level features and no contextual information. As already highlighted by Paulus et al. (2018) and others (Graham, 2007; Buttny, 2012), contextual information is fundamental to understand how power is expressed through language.

Building on these findings, Newsome-Chandler and Grant (2024) developed a new approach to understand and analyse power performance in anonymous online interactions rooted in interactional pragmatics (Locker and Graham, 2010). In this framework, power performance is understood as a multifaceted discursive phenomenon that consists of multiple discursive resources that individuals can draw upon to make claims attempting “to persuade the original poster (and other interactants present in the fora) to listen to their point of view and potentially to act in a particular fashion” (Newsome-Chandler & Grant, 2024: 4). In other words, within one interaction users can combine different power resources to elicit different facets of expertise and persuade others to listen to them.

The authors manually annotated a 160,000-word dataset, sampled from 3 anonymous online fora. Using insights from discourse analysis and pragmatics, they developed a list of 10 different discursive power resources (henceforth: PwR) that are routinely employed by users to perform power and expertise in interaction. These are: Community Expertise, Community Specific Initialisms, Technological Expertise, Veteran Power, Accredited Expertise, Topic Expertise Through Personal Experience, Broad Topic Expertise, Private Knowledge, Citing a Secondary Source for Authority, Subject of Law Enforcement/Investigations (for an in-depth discussion on all of these, see Newsome-Chandler & Grant, 2024: 6-10). PwRs are functional and pragmatic strategies that showcase different facets of linguistic power and expertise. The authors point out that the fact that PwRs have been identified across very different datasets (see section “Data and methods”) “highlights the transferability and potential generalisability of our resource model of power in interaction” (Newsome-Chandler & Grant, 2024: 20). However, larger datasets need to be coded with semi-automated methods to both validate the coding set and prove scalability. The present paper aims to contribute to this question by investigating whether discursive functions of linguistic power can be represented by formal features for automatic detection. We present an in-depth case study to illustrate how corpus linguistics can be used to extract syntactic and/or semantic features typical of power resources. Specifically, we present our analysis of the PwR of Topic Expertise Through Personal Experience, which as mentioned refers to claims of direct personal involvement in the topic discussed. Personal Experience was chosen as a first case study for two reasons. Firstly, it is the only category among Newsome-Chandler and Grant’s (2024) Power Resources that has been extensively studied in the literature, which allows us to compare our findings with previous work. This will provide a better foundation for future work on less-studied resources of power performance. Secondly, Personal Experience is the resource with the most data among the 10 found in Newsome-Chandler and Grant (2024), and therefore findings are more generalisable. This makes Personal Experience a suitable candidate to illustrate our method and contribute to the scholarship.

Personal Experience as Expertise

The expression of personal anecdotal experience has been recognised by the literature as a source of expertise. More specifically, it has been widely demonstrated in different areas of social science and linguistics that sharing personal experiences in conversational settings gives interlocutors authority, hence providing them with power in the context of the interaction. Personal narratives are an important tool to establish bonds and legitimacy in a social group (Fine, 2013; Polanyi, 1985), and they have been found to be an important tool that professionals use to position themselves as experts and construct their institutional identities (Dyer and Keller-Cohen, 2000). The construction and negotiation of new kinds of identities is also discussed by Jones (2016), who shows how participants of the It Gets Better campaign use different types of personal narratives to gain “textual authority”.

The expression of personal experience has also been linked to a “narrative evidence” function (Hong & Park, 2012) and to “personal expertise” used to engage with others while commenting on health science news articles (Shanahan, 2010). Buttny (2012) further argues that “experience is a resource that we can draw upon” (Buttny, 2012: 604). That is, the use of personal experience is seen as an asset that the individual uses malleably in different contexts. Constructivist learning theories also acknowledge that personal narratives serve as a valuable foundation for learning interactions, as personal experiences may be regarded by peers as the most trustworthy form of evidence (Leont’ev, 1979). Moreover, research has shown that resorting to anecdotal and personal rather than factual expertise is also more engaging for an “invisible audience” (Lester & Paulus, 2011; Stokoe et al., 2013).

Although most of the research in this area focuses on “offline genres”, with the advent of widespread use of the Internet for user-generated content—the so-called Web 2.0 (Allen, 2013; Han, 2011)—scholars have become more and more interested in how online communities construct and share personal narratives. For example, Page (2018) uses discourse analysis to develop a framework for analysing large-scale narratives on four social media platforms, Wikipedia, Facebook, Twitter, and YouTube. Stone et al. (2022) conduct experimental research to investigate why young adults share personal experiences on social media, finding social reasons to be the primary motive.

Overall, the literature recognises that personal experience as a discursive function is a tool available to interactants to express authority and/or expertise in a subject matter. Research on online spaces is growing, but the area is still vastly understudied.

But what is personal experience from a linguistic perspective? Interestingly, studies from different areas (such as linguistics, psychology, media studies) all seem to converge towards a “core” group of syntactic and semantic features of personal experience. The main syntactic and semantic features of personal narratives and experiences are the overuse of first-person pronouns and past tense verbs (Bamberg, 1987; Hayes & Casey, 2002; Hudson & Shapiro, 1991). Additionally, other textual markers of narrative building include specifications of the setting of the event (to “set the scene”), linguistic items that enhance cohesiveness, such as pronominalization and interclausal conjunctions (Hayes & Casey, 2002; Hudson & Shapiro, 1991), and viewpoint markers that depict personal stance, including verbs of cognition, perception, and emotion (Pulvermacher & Lefstein, 2016; Sacks, 1986; Stivers, 2008; van Krieken, 2018).

Personal experience and narratives have been widely investigated in the linguistic and social sciences literature. This breadth of literature, however, is lacking in several respects. Despite recognising experience as expertise, there are no studies—to the best of our knowledge—specifically on anonymous online contexts. Furthermore, the linguistic features of personal experience have also been discussed in offline and/or non-anonymous genres. We set out to fill this gap in the research by exploring the linguistic features of personal experience following Newsome-Chandler and Grant’s (2024) coding scheme.

Data and Methods

Materials

As data, we use the sample of anonymous online interaction used in Newsome-Chandler and Grant (2024). Specifically, the corpus used is a sample from 3 multi-million-word corpora of online fora of different genres (Footnote 1). The first forum is a well-established clear web parenting discussion forum (henceforth: PD; Footnote 2). The platform is perfectly legal and hosts a well-known community that discusses issues and advice regarding, but not limited to, parenthood. The second forum focuses on white nationalist and racist ideologies (henceforth: WN). The site attracts users who are interested in topics such as white supremacy, conspiracy theories, misogyny and homophobia. Although the website is on the open web, it is centred around ideologies and themes generally rejected by society and public opinion, and includes discussions of criminal activities. The third and last forum is a child sexual abuse forum from the dark web (henceforth: DW). Users on this forum discuss highly illegal topics related to paedophilia, and are hence very careful about their online anonymity.

These 3 online fora were first sampled by the authors by extracting 8 prompting interrogative phrases in opening posts that elicited advice from other users (e.g., “How do I…?”, “How do you…?”, “Do you know…?”, “Why did..?”, etc.). In fact, the authors observed that users responding to explicit questions for advice would try to present themselves as reliable and trustworthy by claiming authority with different pragmatic strategies. From this first subcorpus, a smaller dataset of 160 threads was selected by extracting the 20 most recent threads from each prompting phrase. The dataset was sampled for manual analysis by selecting the first 3 threads for each of these different lengths: 5 to 9 posts, 10 to 19 posts, 20 to 49 posts. The final corpus used by Newsome-Chandler and Grant amounts to 72 threads and around 160,000 words (Newsome-Chandler & Grant, 2024: 5-6).

From this dataset we manually extracted all threads containing one or more posts tagged as Personal Experience. This resulted in 258 posts across corpora (138 PD, 70 WN, 50 DW), across all 72 threads. The dataset was further divided into a traditional 70:30 train/test split, a common rule of thumb for small datasets in machine learning. That is, corpus analyses were performed on threads containing 70% of posts coded as Personal Experience (181 total posts, 97 PD, 49 WN, 35 DW, over 37 different threads) while the remaining 30% is left as test data for the computational stage of the project (Htait et al., 2024; see Sect. 5). Table 1 reports the total number of tokens and words for the data.
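The split sizes reported above can be checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes that the 70% share is taken per forum and rounded to the nearest post, whereas the study itself splits by thread. The function name `split_70_30` is hypothetical.

```python
# Personal Experience post counts per forum, as reported in the text.
posts = {"PD": 138, "WN": 70, "DW": 50}


def split_70_30(n: int) -> tuple[int, int]:
    """Return (train, test) sizes, rounding the 70% share to the nearest post."""
    train = (n * 7 + 5) // 10  # integer rounding avoids floating-point surprises
    return train, n - train


# Assumption: the split is applied to each forum separately.
splits = {forum: split_70_30(n) for forum, n in posts.items()}
train_total = sum(train for train, _ in splits.values())
test_total = sum(test for _, test in splits.values())

for forum, (train, test) in splits.items():
    print(f"{forum}: {train} train / {test} test")
print(f"Total: {train_total} train / {test_total} test")
```

Under these assumptions the training counts match the figures given in the text (97 PD, 49 WN, 35 DW, 181 in total).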

The resulting materials were analysed with an inductive corpus-driven methodology, as we made “minimal a priori assumptions regarding the linguistic features that should be employed for the corpus analysis (…) [C]ooccurrence patterns among words, discovered from the corpus analysis, are the basis for subsequent linguistic descriptions.” (Biber, 2015: 196).

Methods

As mentioned, the overarching aim of the study is to illustrate how to break down functional annotations of PwR into automatically retrievable linguistic patterns. To do so, an inductive protocol to extract syntactic and/or semantic patterns from manually annotated posts was developed, combining different corpus linguistics methodologies rooted in keyness analysis (Rayson, 2008). More specifically, we combine keyness analysis for Part of Speech (POS) tags and for semantic domains with Corpus Query Language (CQL) queries.

Keyness analysis is a technique widely used in corpus linguistics that identifies items “which occur with unusual frequency in a given text […] by comparison with a reference corpus of some kind” (Scott, 1997: 236). Scholars conduct keyness analysis at different levels of linguistic analysis. While the most common is certainly key words, the analysis of key part-of-speech tags and semantic domains is also attested in the literature (Busso & Vignozzi, 2017; Culpeper, 2009; Egbert & Biber, 2023; Rayson, 2008). CQL is a formal language that can be used to conduct searches in different corpus processing software. It is a powerful tool to retrieve complex patterns and structures from a concordancer tool. In their simplest form, CQL queries take the form of pairs of attributes and values, structured as [attribute="value"] (e.g. [lemma="go"]) (Klyueva et al., 2017).

Individually, these methodologies are widely used in corpus linguistics research. However, this is the first study, to the best of the authors’ knowledge, that combines them into a unified protocol for corpus exploration. The protocol includes:

(1) Keyness analysis of part-of-speech (POS) tags on Sketch Engine (Kilgarriff et al., 2004; tagset: English Penn Treebank, Marcus et al., 1993; keyness score: simple maths, Kilgarriff, 2009).

(2) Keyness analysis of semantic domains on WMatrix 5 (Rayson, 2008). This analysis is performed using the USAS tagger (Rayson et al., 2004), which automatically assigns semantic fields to each word or multiword expression in the data (keyness score used: Log Likelihood).

(3) CQL queries of patterns based on the results of the previous 2 keyness analyses.

Using these three tools allows the researcher to triangulate evidence from morphosyntax (POS tags), semantics-pragmatics (semantic domain analysis), and the interaction between the two in the form of complex retrievable patterns of syntax + semantics (see section “Data analysis and results” below).
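As an illustration of the keyness score named in step (1), the sketch below implements the simple maths ratio described by Kilgarriff (2009): both frequencies are normalised per million tokens and a smoothing constant is added before dividing. The function names and the tag counts are hypothetical; the two corpus sizes are the subcorpus sizes reported later in this section, and the smoothing value of 1 is an assumption about the settings used.

```python
def per_million(count: int, corpus_size: int) -> float:
    """Normalise a raw frequency to occurrences per million tokens."""
    return count / corpus_size * 1_000_000


def simple_maths(focus_count: int, focus_size: int,
                 ref_count: int, ref_size: int, n: float = 1.0) -> float:
    """Simple maths keyness: (fpm_focus + n) / (fpm_ref + n)."""
    fpm_focus = per_million(focus_count, focus_size)
    fpm_ref = per_million(ref_count, ref_size)
    return (fpm_focus + n) / (fpm_ref + n)


# Hypothetical counts for one POS tag in the focus and reference subcorpora.
score = simple_maths(focus_count=1500, focus_size=27_525,
                     ref_count=900, ref_size=31_117)
print(f"keyness score: {score:.2f}")
```

A score above 1 indicates that the item is relatively more frequent in the focus corpus than in the reference corpus.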

As a preliminary step for all analyses, data for each of the 3 fora were further subdivided into two subcorpora: a focus corpus which includes all posts or parts of posts coded as “Personal Experience” (PersExp) in Newsome-Chandler and Grant’s (2024) dataset, and a reference corpus which includes all the rest of the thread, which we call “Non-Personal Experience” (NonPersExp). In this way, linguistic elements that are statistically more relevant in the PwR are foregrounded with respect to the rest of the data.

For example, in the simplified and redacted excerpt below in (2) from a post in the PD forum (with simplified XML tags), the first paragraph would be put in the focus corpus “PersExp”, since it is explicitly tagged as such (Footnote 3). The post comes from a thread on adoption, particularly discussing how to behave around children adopted by close family, who get overwhelmed by the attention very easily. In example (2) we can see the poster explicitly using their experience in the matter as “evidence” to make other users pay attention to their opinion, expressed at the very beginning of the post (i.e., that parents sometimes overestimate the child’s resilience). The rest of the post, which is tagged as another power resource, Community Expertise, directs the original poster to another thread in the same forum. Both the initial advice (which is not tagged) and this last sentence would be categorised in the reference “NonPersExp” subcorpus.

(2) They may have overestimated the child and their resilience. <personal_experience> (…)I was eager to have family meet my baby but struggled sometimes to assert myself (…)! I still remember crying in my kitchen after a well-intended cousin kept getting in her space (…). It took months to feel like I could just say no. </segment> <community_expertise> It’s similar to the threads you see on the board where new mothers struggle to tell no to relatives wanting hugs and kisses etc. </segment>

The resulting subcorpora are quite balanced, with PersExp amounting to 27,525 tokens (47% of the corpus), and NonPersExp to 31,117 tokens (53% of the corpus).
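The division into focus and reference subcorpora can be sketched as follows. This is a minimal illustration rather than the procedure actually used: it assumes the simplified tag format of example (2), where each coded span opens with a label tag and closes with `</segment>`, and the function name `split_post` and the abridged sample text are hypothetical.

```python
import re

# Assumed simplified markup: <label> ... </segment>
SEGMENT = re.compile(r"<(?P<label>\w+)>(?P<body>.*?)</segment>", re.DOTALL)


def split_post(post: str, focus_label: str = "personal_experience"):
    """Split one post into focus (PersExp) and reference (NonPersExp) text spans."""
    focus, reference = [], []
    cursor = 0
    for match in SEGMENT.finditer(post):
        # Untagged text before a coded span belongs to the reference subcorpus.
        untagged = post[cursor:match.start()].strip()
        if untagged:
            reference.append(untagged)
        body = match.group("body").strip()
        target = focus if match.group("label") == focus_label else reference
        target.append(body)
        cursor = match.end()
    tail = post[cursor:].strip()
    if tail:
        reference.append(tail)
    return focus, reference


# Abridged version of example (2).
post = (
    "They may have overestimated the child. "
    "<personal_experience> It took months to feel like I could just say no. </segment> "
    "<community_expertise> It's similar to the threads you see on the board. </segment>"
)
focus, reference = split_post(post)
print("PersExp:", focus)
print("NonPersExp:", reference)
```

Spans coded with any other power resource, as well as untagged text, end up in the reference list, mirroring the description of the NonPersExp subcorpus.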

All keyness analyses (POS tags and semantic domains) were performed comparing the two subcorpora against each other. CQL queries were also conducted separately in the two subcorpora, to compare frequency of occurrence of the retrieved grammatical patterns (see Appendix 1 for the complete list).

For the keyness analysis of semantic domains, only items above the statistical significance threshold for Log Likelihood (i.e., a score of 6.6) were selected. Furthermore, since semantic domains are highly data-dependent, only non-context-dependent semantic domains were used. For example, since PD obviously discusses many issues regarding children, parenthood, and family, all semantic domains concerning kids and family relations were discarded. All significant semantic domains were manually checked before discarding.
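To make the significance criterion concrete, the sketch below computes the two-corpus log-likelihood statistic in the form commonly used for keyness (observed frequencies compared with frequencies expected under equal relative frequency). It is an illustration, not a reproduction of the WMatrix implementation; the function names and the domain counts are hypothetical, while the corpus sizes and the 6.6 cut-off come from the text. The critical chi-square value with 1 degree of freedom at p < 0.01 is 6.63, which the cut-off presumably reflects.

```python
import math

LL_THRESHOLD = 6.6  # cut-off stated in the text


def log_likelihood(focus_count: int, focus_size: int,
                   ref_count: int, ref_size: int) -> float:
    """Two-corpus log-likelihood: 2 * sum(observed * ln(observed / expected))."""
    total = focus_size + ref_size
    combined = focus_count + ref_count
    expected_focus = focus_size * combined / total
    expected_ref = ref_size * combined / total
    ll = 0.0
    for observed, expected in ((focus_count, expected_focus),
                               (ref_count, expected_ref)):
        if observed > 0:  # a zero count contributes nothing to the sum
            ll += observed * math.log(observed / expected)
    return 2 * ll


def is_key_in_focus(focus_count: int, focus_size: int,
                    ref_count: int, ref_size: int) -> bool:
    """True if the item is overused in the focus corpus and passes the cut-off."""
    overused = focus_count / focus_size > ref_count / ref_size
    ll = log_likelihood(focus_count, focus_size, ref_count, ref_size)
    return overused and ll > LL_THRESHOLD


# Hypothetical counts for one semantic domain in PersExp vs NonPersExp.
print(is_key_in_focus(120, 27_525, 60, 31_117))
```

The separate overuse check matters because the statistic itself is symmetric: it is equally large for items that are underused in the focus corpus.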

After using keyness analysis to reveal salient grammatical and semantic categories in the data, results from the two were integrated into CQL searches. In this way, we combine key syntactic (i.e., POS) and semantic (i.e., semantic domains) features into retrievable patterns. For example, we established that determiners are a key part of speech and particulisers are a key semantic domain in the DW forum, so we can combine this information into one CQL search that looks for particulisers belonging to the POS of determiners: [tag="DT" & lemma="a few|some|several|both"]. These features can then be used to automatically detect PwRs in new untagged data.
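Outside a concordancer, the logic of the single-token query above can be approximated on any POS-tagged token list. The sketch below is an assumption-laden illustration: the helper `matches` is hypothetical, the sample tokens and their Penn Treebank tags are assigned by hand, and each attribute value is treated as a regular expression that must match the whole value.

```python
import re

# Hand-tagged illustrative sentence (tags assigned manually for this sketch).
tokens = [
    {"word": "Both", "lemma": "both", "tag": "DT"},
    {"word": "of", "lemma": "of", "tag": "IN"},
    {"word": "the", "lemma": "the", "tag": "DT"},
    {"word": "forums", "lemma": "forum", "tag": "NNS"},
    {"word": "gave", "lemma": "give", "tag": "VBD"},
    {"word": "me", "lemma": "me", "tag": "PRP"},
    {"word": "some", "lemma": "some", "tag": "DT"},
    {"word": "advice", "lemma": "advice", "tag": "NN"},
]


def matches(token: dict, **conditions: str) -> bool:
    """True if every attribute fully matches its regular expression."""
    return all(re.fullmatch(pattern, token[attr]) is not None
               for attr, pattern in conditions.items())


# Rough equivalent of [tag="DT" & lemma="a few|some|several|both"].
hits = [t["word"] for t in tokens
        if matches(t, tag="DT", lemma="a few|some|several|both")]
print(hits)
```

Here the determiner "the" is excluded because its lemma is not in the particuliser list, which is the effect of combining the POS and the lemma conditions in one query.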

Data analysis and results

Keyness analysis of POS tags

As described in section “Data and methods”, a keyness analysis of salient POS was performed using all posts coded as Personal Experience as the focus corpus and all the rest of the threads as reference corpus. Table 2 reports the key POS tags for PD, WN, and DW respectively, with results ordered by keyness score (threshold: 1; Footnote 4). The tables are color-coded as follows:

- In green: linguistic items which are shared across the 3 datasets.

- In blue: linguistic items which are shared by 2 out of 3 datasets.

- In orange: items that are not identically present in the 3 datasets but that refer to the same general linguistic ‘category’ (e.g.: coordinating and subordinating conjunctions).

- In red: linguistic items idiosyncratic to one of the datasets.

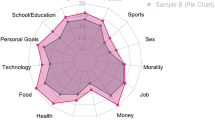

Table 2 shows that, besides idiosyncratic items for each dataset, there is a high number of shared features across datasets. Features of Personal Experience that are shared across these three very different datasets suggest general trends of how users convey their own lived experience through language: referring to past events (past tense verbs) and situations (existential “there”) that happened to them (possessive pronouns). Parataxis is preferred to hypotaxis (coordinating conjunctions), and actions are modified by adverbs. Figure 1 visualises shared features across the 3 datasets.

These results almost perfectly align with previous literature investigating features of personal narratives.

As we mentioned in section “Introduction”, personal narratives tend to use first-person pronouns and past tense (Bamberg, 1987), interclausal conjunctions (Hudson & Shapiro, 1991), location, temporal and stance markers (Stivers, 2008; Van Krieken, 2018).

This finding is crucial for two important interrelated reasons. First, the genres we are exploring are very different from any genre previously studied in the scholarship on personal narratives. Furthermore, the fora being anonymous, there is no way of controlling for variables such as native language or education level. Despite this, we still find features of Personal Experience that largely overlap with the scholarship, strengthening the idea of a “core” set of linguistic strategies for expressing personal experience that transcends genre, native language, and other potential extraneous sociolinguistic variables. Second, this independent result also functions as a validation of Newsome-Chandler and Grant’s (2024) coding scheme.

Keyness analysis of semantic domains

Analysis of key semantic domains was performed on overused domains in the focus corpus above the statistical significance threshold for Log Likelihood score. Since, as mentioned, semantic domains can be data-dependent, all the relevant domains were manually checked: only semantic domains that were (1) represented by a congruous number of non-hapax words and (2) not context-dependent were selected. Figures 2a and b exemplify respectively a viable and a non-viable semantic domain according to these criteria.

Table 3 reports the domains analysed, which were obtained setting a working frequency threshold of 3. Figure 3 plots shared and idiosyncratic features across datasets (Footnote 5). Since WMatrix semantic domains can be quite specific (as seen in Table 3), we grouped them under the same general umbrella category, like “Quantity”, or “Time”.

Fig. 3 Shared and idiosyncratic semantic domains across the 3 datasets. The categories’ names are the original semantic domains from the USAS tagset used in WMatrix. For example, “Grammatical Bin” in WN refers to grammatical items (prepositions, adverbs, conjunctions) that the system was not able to categorise in other groups. “Exclusion” refers to general or abstract terms denoting a level of exclusion, etc.

Semantic keyness analysis expands the findings on key POS tags. In particular, the general pattern that seems to emerge is that shared semantic features of Personal Experience across corpora include referring to time, especially in the past, adverbs of quantity, and have as an auxiliary (Footnote 6). All three fora also exhibit similar semantic domains that provide an evaluative component of the situation reported (“Thought/Belief”, “Seem”). This finding is again consistent with much of the relevant literature, which shows how personal stance is a common feature in personal narratives (Baynham, 2011; Stivers, 2008; Van Krieken, 2018), and with Labov and Waletzky’s (1997) findings on how narratives fulfil both a referential and an evaluative function. That is, both the syntactic and the semantic keyness analyses suggest that there are some very recognisable cross-genre linguistic features of Personal Experience. This is encouraging in view of our overarching aim to build automatic feature detectors.

Besides the shared features, it is also interesting to note the idiosyncrasies of each forum. For example, WN and DW do not share any semantic domains besides the core ones. This suggests that the two fora express personal experiences with different strategies and for different reasons. DW and PD, on the other hand, share a number of additional features, which suggests that these two fora use Personal Experience in a similar way.

Figure 4 helps to visualise similarities and differences in how users employ Personal Experience in the 3 fora. Semantic domains and POS tags were grouped into inductive categories to avoid an overpopulated graph. This means, for example, that all forms of adjectives (base, comparative, superlative, wh-determiner) are grouped under the same umbrella category of “Adjectives”. In the same way, all expressions of time (Time:present, Time:past, Time:future, Frequent, etc.) were grouped under the category of “Time”.
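The grouping step can be sketched as a simple lookup from fine-grained labels to umbrella categories. The mapping below covers only the labels named in the text (using the Penn-style tags JJ, JJR, JJS and WDT for the adjective forms, which is an assumption about the exact tag names); the function name and the counts are hypothetical.

```python
from collections import Counter

# Fine-grained label -> umbrella category. Only labels mentioned in the text
# are listed; the full grouping used for Fig. 4 would cover more labels.
UMBRELLA = {
    "JJ": "Adjectives", "JJR": "Adjectives",
    "JJS": "Adjectives", "WDT": "Adjectives",
    "Time:present": "Time", "Time:past": "Time",
    "Time:future": "Time", "Frequent": "Time",
}

def group_counts(key_features):
    """Collapse {label: count} into {umbrella category: count}."""
    grouped = Counter()
    for label, count in key_features.items():
        # Labels without an umbrella category are kept under their own name.
        grouped[UMBRELLA.get(label, label)] += count
    return grouped

# Hypothetical counts of key features for one forum.
grouped = group_counts(
    {"JJ": 2, "JJS": 1, "Time:past": 3, "Frequent": 1, "MD": 4}
)
```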

As can be seen from Fig. 4, each dataset uses Personal Experience features differently. PD and DW both use a high number of adverbs, while WN appears to use them less. On the other hand, WN overuses action verbs (i.e., semantic domains of “Using”, “Moving”, “Finding”) with respect to PD and DW.

In sum, users seem to give advice and express expertise through Personal Experience using a “core” of cross-genre shared linguistic features, both at the syntactic and the semantic level (e.g., the use of the past tense, and referring to time in general). Besides this shared core, there are fuzzy categories of features that are shared at an abstract level but realised differently from dataset to dataset (e.g., the way of evaluating the event), and features that can be considered completely idiosyncratic to a certain dataset (e.g., the use of modal verbs in WN). If we group the features into larger categories and plot them, we can easily visualise variation and similarities across datasets. For example, from the first mosaic plot in Fig. 4 we can see that PD uses far fewer participial forms than DW and WN. Similarly, in the second mosaic plot we can see that DW uses expressions of Quantity much more than the other two corpora.

CQL Queries

Corpus query systems present a powerful and popular concept for linguistics and more generally digital humanities. Different tools are available, with slightly different syntax. Among these, CQL (Rychlý, 2007) is one of the most widely used (Culpeper, 2009). CQL is a regular expression-based “query language used (…) to search for complex grammatical or lexical patterns” (SketchEngine documentation).

In this study, we use CQL queries to combine the key syntactic and semantic features into retrievable syntactic-semantic patterns. For example, knowing that adverbs are among the key POS tags in PD and that particularisers are semantically relevant, the two can be combined in the pattern [tag="RB.*" & lemma="just|very|so|really|also|well"], i.e. “all the listed lemmas that are adverbs, in base, comparative or superlative form”. We also know that personal pronouns, past tense, and verbs of cognition (“Thought and Belief” in Table 2) are key features. Hence, we can combine them in a single search: [tag="PP" & lemma="I|we"] []{0,1} [tag="V.D" & lemma="have|think|feel"], i.e. “patterns that have a personal pronoun as a subject, either I or we, followed by have, think, or feel in the past tense, with at most one intervening token”.
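To make the logic of the second query concrete, the following toy matcher re-implements it over a list of tagged tokens. It is only a sketch: the real queries are run by a corpus query engine, and the token representation (word, lemma, tag), the function names, and the example sentence are assumptions made for illustration.

```python
import re

# Each token is (word, lemma, tag). Tags follow the TreeTagger-style labels
# used in the queries: PP = personal pronoun, V.D = past-tense verb forms.
def matches(token, tag=".*", lemma=".*"):
    """Check one token against regex constraints, like [tag="..." & lemma="..."]."""
    _, tok_lemma, tok_tag = token
    return bool(re.fullmatch(tag, tok_tag)) and bool(re.fullmatch(lemma, tok_lemma))

def find_pronoun_past_verb(tokens, max_gap=1):
    """Toy equivalent of the query
    [tag="PP" & lemma="I|we"] []{0,1} [tag="V.D" & lemma="have|think|feel"].

    Returns (start, end) token index pairs, with end exclusive."""
    hits = []
    for i, tok in enumerate(tokens):
        if not matches(tok, tag="PP", lemma="I|we"):
            continue
        for gap in range(max_gap + 1):  # zero or one intervening token
            j = i + 1 + gap
            if j < len(tokens) and matches(
                tokens[j], tag="V.D", lemma="have|think|feel"
            ):
                hits.append((i, j + 1))
    return hits

# Hypothetical tagged sentence: "We always thought it was fine"
sentence = [
    ("We", "we", "PP"), ("always", "always", "RB"),
    ("thought", "think", "VVD"), ("it", "it", "PP"),
    ("was", "be", "VBD"), ("fine", "fine", "JJ"),
]
hits = find_pronoun_past_verb(sentence)
```

Here the only hit spans “We always thought”: “it was” is rejected because neither the pronoun lemma nor the verb lemma is in the listed alternatives.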

All retrieved concordances were manually checked and analysed, and frequency lists of the lemmas and POS tags to the right and left of the KWIC were compiled. This helped expand the list of searches by inductively exploring the corpus for frequent repeated patterns.
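The neighbour frequency lists described above can be approximated with a simple counter over the tokens adjacent to each hit. This is a minimal sketch under the assumption that hits are stored as (start, end) index pairs over (word, lemma, tag) tokens; the function name and the example data are hypothetical.

```python
from collections import Counter

def neighbour_frequencies(tokens, hits, field=1):
    """Count the items immediately left and right of each hit.

    tokens: list of (word, lemma, tag) tuples
    hits:   list of (start, end) index pairs, end exclusive
    field:  1 to count lemmas, 2 to count POS tags"""
    left, right = Counter(), Counter()
    for start, end in hits:
        if start > 0:
            left[tokens[start - 1][field]] += 1
        if end < len(tokens):
            right[tokens[end][field]] += 1
    return left, right

# Hypothetical tagged text: "But I felt really bad and we thought so too"
tokens = [
    ("But", "but", "CC"), ("I", "I", "PP"), ("felt", "feel", "VVD"),
    ("really", "really", "RB"), ("bad", "bad", "JJ"), ("and", "and", "CC"),
    ("we", "we", "PP"), ("thought", "think", "VVD"), ("so", "so", "RB"),
    ("too", "too", "RB"),
]
hits = [(1, 3), (6, 8)]  # "I felt" and "we thought"

left_lemmas, right_lemmas = neighbour_frequencies(tokens, hits)
_, right_tags = neighbour_frequencies(tokens, hits, field=2)
```

In this toy example both hits are followed by an adverb, which is the kind of recurring neighbour that could motivate a new, longer query.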

Queries were run for all three corpora separately. The list of all CQL searches for the 3 fora is provided in the Appendix, but it is worth noting that it is not meant to be exhaustive. The list provided is intended to give the reader an idea of the type of searches that can be devised following this protocol.

To verify whether the patterns retrieved are indeed typical of the PwR of Personal Experience, the frequencies of the CQL queries were extracted from both the PersExp and the NonPersExp subcorpora, and a one-tailed paired-samples t-test was performed. Frequency data were log-transformed to approximate a normal distribution. Results confirm that the frequency of the CQL queries in PersExp is significantly higher than in NonPersExp (t = 5.44, p < 0.001). This suggests that the patterns extracted are indeed salient features of the PersExp subcorpus.
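The test can be sketched with the standard library alone. The example below computes the paired-samples t statistic on log-transformed frequencies; the frequency values are invented for illustration and are not the study's data, and the log(x + 1) smoothing is an assumption made to keep zero counts defined.

```python
import math
from statistics import mean, stdev

def paired_t(freqs_a, freqs_b):
    """Paired-samples t statistic on log-transformed frequencies.

    freqs_a and freqs_b hold the frequency of each query in the two
    subcorpora, in the same query order. Returns (t, degrees_of_freedom)."""
    # log(x + 1) keeps zero counts defined; this smoothing is an assumption.
    diffs = [math.log(a + 1) - math.log(b + 1) for a, b in zip(freqs_a, freqs_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Hypothetical per-query frequencies for five queries (not the study's data).
pers_exp = [410.0, 95.0, 230.0, 60.0, 150.0]
non_pers_exp = [250.0, 70.0, 120.0, 55.0, 90.0]

t_stat, df = paired_t(pers_exp, non_pers_exp)
# One-tailed test of "PersExp > NonPersExp": compare t_stat with the upper
# critical value of the t distribution (2.132 for df = 4 at alpha = 0.05).
significant = t_stat > 2.132
```

If SciPy is available, `scipy.stats.ttest_rel` with `alternative="greater"` applied to the log-transformed values would also return the p-value directly.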

Conclusions and Future Work

This study set out to achieve a two-fold aim. First, we presented an in-depth case study on one of the 10 power resources found by Newsome-Chandler and Grant (2024): Personal Experience. We described the linguistic features of Personal Experience as a power resource, combining pragmatic annotations with corpus linguistics to retrieve key lexico-grammatical patterns of pragmatic functions in text. Secondly, we introduced an innovative corpus-driven protocol that combines POS tags and semantic domains keyness analysis with regex-based corpus searches (CQL).

From a content perspective, the study presented innovative insights into the use of personal experiences as expertise in online anonymous interactions. In particular, by analysing a textual type (anonymous forum threads) that is very far from the typical genres explored in the literature, we still found features that align with and expand on the scholarship. This strongly suggests that (1) there is a “core” of semantic and/or syntactic features of personal experience which seems to be genre-independent; and that (2) our corpus-driven protocol is useful for retrieving syntactic and semantic features of high-level pragmatic annotations (in our case, claims to power and power performance). These features can then be used as cues to automatically retrieve the pragmatic functions in new, untagged data. This methodological contribution is crucial to the intersection of pragmatics and corpus linguistics, as it allows the researcher to scale pragmatic annotation, a notoriously laborious and time-consuming process, to bigger datasets.

Despite its preliminary nature, the study shows that this approach is promising and offers a novel way of combining pragmatics with corpus and computational linguistics. In fact, we have been able to identify consistent sets of retrievable linguistic features of Personal Experience. These are obtained by triangulating evidence from keyness analysis of part-of-speech tags and semantic domains, and from CQL queries that bring together syntactic and semantic features into retrievable patterns.

The reliability of our findings is supported by a vast literature on personal narratives that generally converges on the same type of features, although coming from vastly different genres (section “Data analysis and results”). This, we argue, strongly suggests that we were able to extract “core” characteristics of the linguistic expression of personal experiences and expertise. Although further work is needed, our findings support the idea that this “core” set is mostly genre-independent and could be effectively generalized and applied to different types of anonymous online interactions. Our findings also align with claims in Functional and Usage-Based linguistics on the interrelatedness of linguistic form and function. In fact, a recent area of research in cognitive linguistics has started to see even textual genres as constructions (i.e., structural units of form and meaning, as seen in Östman & Fried, 2005). Like genre-specific constructions, we can conceptualise our features as “function”-specific constructions, as we showed that the functional category of Personal Experience is consistently expressed through a set of syntactic and semantic units.

Besides the core group of shared features, there are also a number of idiosyncratic features for each forum. This is true especially in the key semantic domains analysis (section “Keyness analysis of semantic domains”), as there are fewer shared semantic categories than POS tags. This is partly due to the simple fact that there are more semantic categories than POS tags, and partly due to polysemy. Although our focus has been on identifying features shared across genres, another fruitful research area might look into genre-specific and idiosyncratic features of personal experiences.

Given that our aim is to create scalable features for computational feature detection, we are currently exploring the use of the corpus results as training data for automatic feature extraction algorithms to detect PwRs in both the test data and new corpora. Although this phase of the project is still in its preliminary steps, some encouraging results are already being achieved. Htait et al. (2024) detail how machine learning algorithms have been developed from corpus features for automatic feature detection.

As for possible applications of our methodology, the primary purpose of this study is forensic in nature. For example, a natural application of the corpus-driven method presented here would be the analysis of large dark web criminal fora to find powerful users. In their sample, Newsome-Chandler and Grant were already able to identify users who made more, and more diversified, claims to power and authority than other users (Newsome-Chandler and Grant, 2024: 10 ff). With corpus linguistics and machine learning (Htait et al., 2024) we would be able to replicate that result on a much larger scale, identifying users that present as more or less powerful than others and creating a hierarchy of power performance. However, our study has the potential to be useful beyond forensic applications, as the theoretical and methodological framework developed can also be fruitfully used in analysing CMC more widely. In fact, our protocol for feature extraction can potentially be used in any situation in which researchers want to extend and generalise a qualitative analysis based on pragmatic or discursive annotations (such as Move Analysis, Appraisal, Critical Discourse Analysis, etc.).

Data Availability

The 3 multimillion-word corpora are available on the online repository fold.ac.uk, curated by the Aston Institute for Forensic Linguistics. The specific sample used for this study is available upon request from the authors.

Notes

To avoid presenting the reader with distressing language, linguistic examples in the remainder of this paper are taken from PD whenever possible. Other datasets are used only when no example of a phenomenon is found in PD.

To prevent traceability of users for data coming from the clear web, all examples from PD and WN have been slightly redacted in ways that do not significantly alter the content. For instance, an example that originally states: “I have been diagnosed with depression” might be changed to “I have been diagnosed with a mental health condition”.

The POS tagset used is the English Penn Treebank with modifications, available at https://www.sketchengine.eu/english-treetagger-pipeline-2/

The framework used is the UCREL semantic analysis system (USAS), the tagset automatically used by WMatrix for automatic semantic analysis (Available at https://ucrel.lancs.ac.uk/usas/usas_guide.pdf)

The domain “Getting and possession” mostly includes the verb “have” used as an auxiliary, or verbs like “get” and “take” in light verb constructions.

References

Alexander, N. (2005). ‘Language, class and power in post-apartheid South Africa’. Harold Wolpe Memorial Trust Lecture, Wolpe Trust, Cape Town, October 27, 2005. Accessed on 23/02/2024 via escr-net.org.

Allen, M. (2013). What was Web 2.0? Versions as the dominant mode of internet history. New Media and Society, 15(2), 260–275.

Antonio, J., Berard, F., & Holzscheiter, A. (2011). The power of discourse and the discourse of power. SAGE Publications Ltd.

Aristotle. (2012). Rhetoric (Kindle ed.). Vook Inc.

Bamberg, M. G. W. (1987). The acquisition of narratives: learning to use language. Walter de Gruyter and Co.

Baynham, M. (2011). Stance, positioning, and alignment in narratives of professional experience. Language in Society, 40(1), 63–74.

Biber, D. (2015). Corpus-based and corpus-driven analyses of language variation and use. In B. Heine & H. Narrog (Eds.), The Oxford Handbook of Linguistic Analysis. Oxford Academic.

Blum-Kulka, S. (1990). You don’t touch lettuce with your fingers: Parental politeness in family discourse. Journal of Pragmatics, 14(2), 259–288.

Bolander, B. (2013). Language and power in blogs: Interaction, disagreements and agreements. John Benjamins Publishing Company.

Bourdieu, P. (1991). Language and symbolic power. Harvard University Press.

Busso, L., & Vignozzi, G. (2017). Gender stereotypes in film language: a corpus-assisted analysis. In Conferenza Italiana di Linguistica Computazionale 2017. CEUR-WS.org.

Buttny, R. (2012). Talking problems: Studies of discursive construction. State University of New York Press.

Conley, J., O’Barr, W. M., & Lind, E. A. (1979). The power of language: Presentational Style in the courtroom. Duke Law Journal, 6, 1375–1399.

Craig, D., & Pitts, M. K. (1990). The dynamics of dominance in tutorial discussions. Linguistics, 28, 125–138.

Culpeper, J. (2009). Keyness: Words, parts-of-speech and semantic categories in the character talk of Shakespeare’s Romeo and Juliet. International Journal of Corpus Linguistics, 14(1), 29–59.

Darics, E. (2020). E-leadership or “How to be boss in Instant Messaging?” The role of nonverbal communication. International Journal of Business Communication, 57(1), 3–29.

Dyer, J., & Keller-Cohen, D. (2000). The discursive construction of professional self through narratives of personal experience. Discourse Studies, 2(3), 283–304.

Egbert, J., & Biber, D. (2023). Key feature analysis: a simple, yet powerful method for comparing text varieties. Corpora, 18(1), 121–133.

Fairclough, N. (2001). Language and power. Pearson Education.

Fine, G. A. (2013). Public narration and group culture: Discerning discourse in social movements. In Social movements and culture (pp. 127-143). Routledge.

Foucault, M. (1970). The archaeology of knowledge. Information, 9(1), 175–185.

Gal, S. (2012). Language, gender, and power: An anthropological review. In S. Gal (Ed.), Gender articulated (pp. 169–182). Routledge.

Habermas, J. (1984). The theory of communicative action vol.: Reason and the rationalization of society. Boston: Beacon Press.

Han, S. (2011). Web 2.0. Routledge.

Haworth, K. (2010). Police interviews in the judicial process: Police interviews as evidence. In M. Coulthard & A. Johnson (Eds.), Routledge handbook of forensic linguistics. Routledge.

Hayes, D. S., & Casey, D. M. (2002). Dyadic versus individual storytelling by preschool children. The Journal of Genetic Psychology, 163(4), 445–458.

Holtgraves, T., & Lasky, B. (1999). Linguistic power and persuasion. Journal of Language and Social Psychology, 18(2), 196–205.

Hong, S., & Park, H. S. (2012). Computer-mediated persuasion in online reviews: Statistical versus narrative evidence. Computers in Human Behavior, 28(3), 906–919.

Htait, A., Busso, L., & Grant, T. (2024). Hierarchies of Power: Identifying Expertise in Anonymous Online Interactions. In N. Naik, P. Jenkins, P. Grace, L. Yang, & S. Prajapat (Eds.), Advances in Computational Intelligence Systems. UKCI 2023. Advances in Intelligent Systems and Computing (pp. 133–139). Springer.

Hu, C., Zhao, L., & Huang, J. (2015). Achieving self-congruency? Examining why individuals reconstruct their virtual identity in communities of interest established within social network platforms. Computers in Human Behavior, 50, 465–475.

Huang, J., Kumar, S., & Hu, C. (2021). A literature review of online identity reconstruction. Frontiers in Psychology, 12, 696552.

Hudson, J. A., & Shapiro, L. R. (1991). From knowing to telling: The development of children’s scripts, stories, and personal narratives.

Jones, R. H. (2016). Generic intertextuality in online social activism: The case of the It Gets Better project. Language in Society, 44(3), 317–339.

Kacewicz, E., et al. (2014). Pronoun use reflects standings in social hierarchies. Journal of Language and Social Psychology, 33(2), 125–143.

Kilgarriff, A. (2009). Simple Maths for Keywords. Proc Corpus Linguistics.

Kilgarriff, A., Rychly, P., Smrz, P., & Tugwell, D. (2004). Itri-04-08 the sketch engine. Information Technology, 105(116), 105–116.

Kim, H. W., Zheng, J. R., & Gupta, S. (2011). Examining knowledge contribution from the perspective of an online identity in blogging communities. Computers in Human Behavior, 27(5), 1760–1770.

Klyueva, N., Vernerová, A., & QasemiZadeh, B. (2017). Querying multi-word expressions annotation with CQL. In Proceedings of the 16th International Workshop on Treebanks and Linguistic Theories (pp. 73–79).

Kubota, R. (2003). New approaches to gender, class, and race in second language writing. Journal of Second Language Writing, 12(1), 31–47.

Labov, W., & Waletzky, J. (1997). Narrative analysis: Oral versions of personal experience. Journal of Narrative and Life History, 7(1–4), 3–38.

Lakoff, R. (2003). Language, gender, and politics: Putting “women” and “power” in the same sentence. The Handbook of Language and Gender, 78, 161–178.

Leont’ev, A. N. (1979). The problem of activity in psychology. In J. V. Wertsch (Ed.), The concept of activity in Soviet psychology (pp. 37–71). Sharpe.

Lester, J. N., & Paulus, T. M. (2011). Accountability and public displays of knowing in an undergraduate computer mediated communication context. Discourse Studies, 13(6), 671–686.

Locher, M. A., & Graham, S. L. (Eds.). (2010). Interpersonal Pragmatics. De Gruyter Mouton.

Mackenzie, J. (2018). Language, gender and parenthood online: Negotiating motherhood in Mumsnet talk. Routledge.

Marcus, M., Santorini, B., & Marcinkiewicz, M. A. (1993). Building a large annotated corpus of English: The Penn Treebank. Computational Linguistics, 19(2), 313–330.

Marwick, A. E. (2013). Online identity. In J. Hartley, J. Burgess, & A. Bruns (Eds.), A Companion to New Media Dynamics (pp. 355–364). Wiley-Blackwell.

Newsome-Chandler, H., & Grant, T. (2024). Developing a resource model of power and authority in anonymous online criminal interactions. Language and Law. https://doi.org/10.21747/21833745/lanlaw/9_1

Östman, J. O., & Fried, M. (2005). Construction discourse. Construction Grammars. Cognitive Grounding and Theoretical Extensions. John Benjamins Publishing Company.

Page, R. (2018). Narrative Online: Shared Stories in social media. Cambridge University Press.

Paulus, T., Warren, A., & Lester, J. (2018). Using conversation analysis to understand how agreements, personal experiences, and cognition verbs function in online discussions. Language@Internet, 15(1).

Perkins, R. (2021). Power and influence: Understanding linguistic markers of power in criminal persuasive contexts, Conference Presentation at ‘Influence, Manipulation, Seduction - Interdisciplinary Perspectives on Persuasive Language’, University of Basel.

Petyko, M., Busso, L., Grant, T., & Atkins, S. (2022). The Aston forensic linguistic databank (fold). Language and Law/Linguagem e Direito, 9(1), 9–24.

Polanyi, L. (1985). Telling the American story: A structural and cultural analysis of conversational storytelling. MIT Press.

Pulvermacher, Y., & Lefstein, A. (2016). Narrative representations of practice: What and how can student teachers learn from them? Teaching and Teacher Education, 55, 255–266.

Rayson, P., Archer, D., Piao, S. L., & McEnery, T. (2004). The UCREL semantic analysis system. In Proceedings of the Workshop on Beyond Named Entity Recognition Semantic labelling for NLP tasks in association with 4th International Conference on Language Resources and Evaluation (LREC 2004), 25th May 2004, Lisbon, Portugal. Paris: European Language Resources Association. pp. 7–12.

Rayson, P. (2008). From key words to key semantic domains. International Journal of Corpus Linguistics, 13(4), 519–549.

Rychlý, P. (2007). Manatee/Bonito-A Modular Corpus Manager. In: P. Sojka, A. Horák (Eds.). Proceedings of Recent Advances in Slavonic Natural Language Processing. Masaryk University, Brno. pp. 65–70.

Sacks, H. (1986). Some considerations of a story told in ordinary conversation. Poetics, 15, 127–138.

Scott, M. (1997). PC analysis of key words—and key key words. System, 25(2), 233–245.

Shanahan, M. C. (2010). Changing the meaning of peer-to-peer? Exploring online comment spaces as sites of negotiated expertise. Journal of Science Communication, 9(1), 1–13.

Sinclair, J., & Coulthard, M. (1975). Toward an Analysis of Discourse: the English Used by Teachers and Pupils. Oxford University Press.

Stivers, T. (2008). Stance, alignment, and affiliation during storytelling: When nodding is a token of affiliation. Research on Language and Social Interaction, 41(1), 31–57.

Stokoe, E., Benwell, B., & Attenborough, F. (2013). University students managing engagement, preparation, knowledge and achievement: Interactional evidence from institutional, domestic and virtual settings. Learning, Culture and Social Interaction, 2, 75–90.

Stone, C. B., Guan, L., LaBarbera, G., Ceren, M., Garcia, B., Huie, K., Stump, C., & Wang, Q. (2022). Why do people share memories online? An examination of the motives and characteristics of social media users. Memory, 30(4), 450–464. https://doi.org/10.1080/09658211.2022.2040534

Toma, C. L., Hancock, J. T., & Ellison, N. B. (2008). Separating fact from fiction: an examination of deceptive self-presentation in online dating profiles. Personality and Social Psychology Bulletin, 34, 1023–1036.

Van Krieken, K. (2018). Multimedia storytelling in journalism: Exploring narrative techniques in Snow Fall. Information, 9(5), 123.

Acknowledgments

NA

Author information

Contributions

I am the sole author of the paper; I conducted all the analyses described and drafted the manuscript.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendix 1: CQL searches for all 3 corpora

| Corpus | Query | Example |
|---|---|---|
| DW | `[tag="V.*" & lemma="feel\|think\|seem"]` | I think |
| DW | `[tag="V.G\|V.N"]` | Doing, done |
| DW | `[tag="RB.*" & lemma="just\|only"]` | just |
| DW | `[tag="DT" & lemma="a few\|some\|several\|both"]` | several |
| DW | `[tag="IN" & lemma="why\|cause\|because"]` | why |
| DW | `[tag="V.*" & lemma="think\|believe\|suspect"]` | think |
| DW | `[tag="V.*" & lemma="think\|believe\|suspect\|feel"] [tag="PP"]` | Think I |
| DW | `[tag="V.*" & lemma="find\|discover\|open\|show"]` | show |
| DW | `[tag="V.*" & lemma="check\|search\|look for"]` | check |
| DW | `[tag="JJ.*" & lemma="good\|great\|fine"]` | great |
| DW | `[tag="RB.*" & lemma="never\|again\|often\|still"]` | never |
| DW | `[lemma="my\|our"][]{0,2}[lemma="experience"]` | My experience |
| DW | `[lemma="why\|because\|cause"]` | because |
| DW | `[lemma="use"]` | using |
| WN | `[tag="PP"]` | He |
| WN | `[tag="PP"][tag="V.D"]` | He got |
| WN | `[tag="PP" & lemma="I\|we"] []{0,1}[tag="V.D"]` | We just got |
| WN | `[tag="V.*" & lemma="feel\|think\|seem"]` | I think |
| WN | `[tag="PP" & lemma="I\|me"]` | I |
| WN | `[tag="PP" & lemma="us\|we"]` | we |
| WN | `[tag="EX"][tag="VB.*"]` | There is |
| WN | `[tag="IN"][]{0,1}[tag="V.*"]` | If you went |
| WN | `[lemma="I\|we"][]{0,3}[lemma="think"]` | I think |
| WN | `[lemma="I\|we"][]{0,3}[lemma="know"]` | I (don’t) know |
| WN | `[lemma="in"][tag="PPZ"][]{0,2}[lemma="opinion"]` | In my opinion |
| WN | `[lemma="very\|so\|much\|really"]` | Really |
| WN | `[lemma="all\|any\|entire\|every"]` | any |
| WN | `[tag="MD"][]{0,1}[tag="V.D\|V.N"]` | Could have been |
| WN | `[tag="IN" & lemma="if"]` | if |
| WN | `[lemma="will"][]{0,1}[tag="V.*"]` | Will not go |
| WN | `[word="going"][tag="TO"][]{0,1}[tag="V.*"]` | Going to do |
| WN | `[tag="V.G"]` | doing |
| WN | `[tag="V.N"]` | done |
| WN | `[lemma="go\|come"]` | Go |
| WN | `[tag="PP"][]{0,2}[lemma="go\|come"]` | I could easily go |
| WN | `[tag="IN"][tag!="CD" & tag="V.*"]` | To do |
| WN | `[tag="V.*"][tag="RP"]` | Go out |
| PD | `[tag="PP"][tag="V.D"]` | He got |
| PD | `[tag="PP" & lemma="I\|we"] []{0,1}[tag="V.D"]` | We just got |
| PD | `[tag="PP" & lemma="I\|we"] []{0,1}[tag="V.D" & lemma="have\|think\|feel"]` | We always thought |
| PD | `[tag="PP"][]{0,1}[tag="V.*" & lemma="feel\|think"]` | I felt |
| PD | `[tag="PP" & lemma="us\|me"]` | me |
| PD | `[lemma="my\|our"][]{0,2}[lemma="experience"]` | my own experience |
| PD | `[lemma="base" & tag="V.*"] [lemma="on"]` | Based on |
| PD | `[tag="JJR\|JJS\|RBR\|RBS"]` | Greener, best |
| PD | `[tag="JJS\|RBS"]` | Greenest, best |
| PD | `[tag="V.*" & lemma="wish\|want"]` | wish |
| PD | `[tag="V.*" & lemma="do\|make"]` | make |
| PD | `[tag="V.*" & lemma="go\|step\|get"]` | go |
| PD | `[tag="EX"][tag="VB.*"]` | There was |
| PD | `[tag="V.*" & lemma="understand\|realise"]` | understand |
| PD | `[tag="RB.*" & lemma="just\|very\|so\|really\|also\|well"]` | Very |
| PD | `[tag="RB.*" & lemma="then\|first\|last\|next\|eventually"]` | Then |
| PD | `[tag="RB.*" & lemma="most.*\|absolutely\|completely\|totally"]` | Mostly |
| PD | `[tag="RB.*" & lemma="most.*\|absolutely\|completely\|totally"][]?[tag="JJ\|V.N"]` | Absolutely the best |

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Busso, L. Power and Personal Experience in Online Anonymous Communities: A Corpus-Driven Exploration. Corpus Pragmatics (2024). https://doi.org/10.1007/s41701-024-00169-y

DOI: https://doi.org/10.1007/s41701-024-00169-y