Abstract

As part of a design-based research project, we designed, developed, and evaluated a web-based microlearning intervention, in the form of a comic, that addresses online COVID-19 misinformation. In this paper, we report on our formative evaluation efforts. Specifically, we assessed the degree to which the comic was effective and engaging via responses to a questionnaire (n = 295) in a posttest-only non-experimental design. The intervention focused on two learning objectives, aiming to enable users to recognize (a) that online misinformation is often driven by strong emotions like fear and anger, and (b) that one strategy for disrupting the spread of misinformation is to stop before reacting to it. Results indicate that the comic was both effective in achieving these learning objectives and engaging.

Misinformation — false or partially false information which can be spread both unintentionally and intentionally — has long been a problem (O’Connor & Weatherall, 2019), as it has serious negative impacts on many aspects of society, from driving xenophobic discourses (Chenzi, 2020) to undermining efforts to address the global climate crisis (Treen et al., 2020). During the COVID-19 pandemic, misinformation, and particularly health misinformation, has reached a crisis point with significant implications for public health and the health of individuals (Gabarron et al., 2021). The World Health Organization (WHO, 2020) has named this crisis an “infodemic” and called for a myriad of strategies to address it, both within the context of the pandemic and beyond.

Scholars from a variety of disciplines have proposed interventions to reduce the spread and impact of COVID-19 misinformation. Fact-checking and debunking efforts have remained prominent (Hotez et al., 2021; Schuetz et al., 2021; Siwakoti et al., 2021), but concerns about the efficacy of fact-checking and the possible risk of a “boomerang” effect, in which fact-checking inadvertently reinforces misinformation (Lewandowsky et al., 2012), have directed attention to alternative methods of intervention (Chou et al., 2021). Some scholars propose adjustments to online platforms, including information flags and algorithmic filters, to block or counter COVID-19 misinformation (Choudrie et al., 2021; Young et al., 2021), but such interventions ultimately rely on action by the private corporations that produce online platforms. Rather than targeting the presence or removal of misinformation, other scholars have emphasized the importance of enhancing citizens’ information literacy (Vraga et al., 2020) and have developed ways to provide people with the tools and competencies to assess the credibility and accuracy of COVID-19 information (Agley et al., 2020; Brodsky et al., 2021). Such work is ongoing, and the U.S. National Science Foundation has recently provided funding to further support such efforts (NSF, 2021a, b).

An important part of increasing information literacy is helping people understand and reflect upon the key roles that emotions like fear and anger play in how they process and share COVID-19-related information online (Martel et al., 2020). People experiencing negative emotions when encountering COVID-19 misinformation have been shown to believe and spread such misinformation more often (Han et al., 2020). Mindfulness, “a characteristic of mental states that emphasizes observing and attending to current experiences, including inner experiences such as thoughts and emotions” (Hill & Updegraff, 2012, p. 81), has been shown to increase emotional awareness (Hill & Updegraff, 2012; Nielsen & Kaszniak, 2006) and, as a result, to affect individuals’ emotional responses. Emotional awareness, the awareness individuals have of their own emotions and those of others, is in turn considered an essential part of emotional regulation (Ciarrochi et al., 2003). The possible role of emotions in how individuals engage with information has led some scholars to call for more attention to the emotional aspects of COVID-19 misinformation (Chou & Budenz, 2020; Heffner et al., 2021). Recognizing that fear and anger may lead to sharing misinformation, we designed and developed an educational intervention to raise competencies in emotional mindfulness (i.e. to increase individuals’ awareness of their emotions and thoughts as well as of their responses to them) as a way to reduce the spread of COVID-19 misinformation and increase individual resilience to it. In this paper, we report on the formative evaluation of this intervention and examine the degree to which it was effective and engaging.

Framework for the Intervention

To address the emotional aspect of misinformation, we build on the SIFT framework (Caulfield, 2019), a tool for enabling students to navigate complex information environments online. The framework operates through four steps tied to information literacy: stop; investigate the source; find better coverage (i.e. verify and cross-check the source); and trace claims to their original context or media. For example, when a person comes across a social media post about the dangers of vaccine misinformation, the first step in SIFT is to stop before clicking, commenting on, or sharing the post. From there, the person is guided to determine where the information came from, determine whether there are credible sources pertaining to it, and find the original source of the media. While each step is important, the first step is fundamental, and this is true of other information literacy frameworks as well. However, it can easily be overlooked in favour of advancing the more technical skills associated with information literacy. As a result, the first step (stop) remains under-addressed in many frameworks. Through a focus on emotional awareness as a means of initiating the first step of stopping, our research effort aimed to iteratively design, develop, and evaluate a short intervention that supports individuals in stopping by first recognizing their emotions when they encounter emotionally charged information online. Fostering emotional mindfulness has been shown to be successful in other contexts (Charoensukmongkol, 2016; Sebastião, 2019), but we have been unable to identify any tools or interventions created to develop this particular aspect of information literacy. Consequently, our intervention is meant to support the initial step of SIFT and other frameworks that first require individuals to stop before proceeding with other strategies.

Our intervention was grounded in microlearning techniques. Microlearning focuses on learning objectives that can be addressed using small chunks of instruction that enable incidental learning to take place easily (McLoughlin & Lee, 2011). The brevity and accessibility of such interventions mean that they can potentially be mobilized on social media and subsequently iterated into other formats. Brevity is offset by active engagement, so that “the learning or performance improvement portion of microlearning is a direct result of some type of action or activity being evoked” (ibid., p. 50).

To design and develop our intervention, we followed customary design-based research steps (Wang & Hannafin, 2005), which included the development of an extensive theoretical framework that focused on research about effective communication of complex and controversial scientific topics (Houlden et al., 2022), as well as a thorough development of the design principles that informed the creation of the intervention (Veletsianos et al., in press). Based on our research, we designed an intervention consisting of a single-page comic that combined images and text to facilitate our desired learning outcomes (Fig. 1).

The comic was iteratively developed, beginning first as an infographic that was then transformed into a narrative style of instruction. The design, which was intended for women, and more specifically mothers, described the experience of a mother coming across emotionally charged health misinformation on her social media and provided strategies for navigating emotions that arise in response to that misinformation. Our audience was specifically mothers because earlier research has demonstrated that mothers are the primary decision-makers for the health of their families (Matoff-Stepp et al., 2014) and are, therefore, particularly vulnerable to being targeted with health misinformation (Zadrozny & Nadi, 2019). As health misinformation has proliferated during the pandemic (WHO, 2020), mothers are an important audience to focus upon. Versions of the intervention were circulated and reviewed by associates of the authors who fit the targeted participant group (i.e. they were themselves mothers who use social media). Their feedback was incorporated into the revised intervention, and this paper reports on a larger scale evaluation of that version of the intervention.

The comic begins with a narrator stating that COVID-19 misinformation is driven by emotions and then tells the story of a woman named Jenny who grapples with her anger towards an Instagram post about COVID-19. Jenny is tempted by her indignation to share the post, but the narrator informs readers that when angry about online information, it is best to pause before reacting. Jenny practices this advice, deciding to take a break from social media by reading a book. The comic ends by reinforcing the message that Jenny helped reduce the spread of misinformation by pausing before reacting.

There is a wide array of variables upon which educational interventions can be evaluated. Our evaluation focused on the degree to which this intervention was effective and engaging. Many researchers (see, for example, Honebein & Reigeluth, 2021; Merrill, 2013; Wilson et al., 2008) have argued for a multi-dimensional evaluation of instruction, with Merrill in particular highlighting that “good” instruction is effective, engaging, and efficient, which he calls e³ (e to the power of 3). Our evaluation draws from Romero-Hall et al. (2019), who developed a framework for evaluating a web-based, role-playing simulation as a means to iteratively improve upon it prior to implementation. In their work, effectiveness, efficiency, and usability were key areas examined as part of this process; in adapting their approach, we examined effectiveness and engagement.

As the purpose of this research project was to conduct a formative evaluation of the learning intervention on misinformation, we evaluated whether the intervention did what it set out to do (i.e. whether it was effective) and assessed participants’ perceptions of it in terms of effectiveness and engagement. For this intervention, effectiveness was measured in three ways. The first was how successfully the learning outcomes were achieved. Following the intervention, learners should be able to:

- Name the role that anger and fear play in the spread of misinformation.
- Identify a strategy for interrupting the spread of misinformation driven by fear or anger.

Effectiveness was also measured in two further ways: through Likert-scale questions assessing how readily participants felt the learning outcomes were achieved, especially in terms of clarity (thereby capturing perceptions of effectiveness), and through participants’ perceptions of the comic’s aesthetics and of the experience of reading it. Engagement was measured by asking what participants would do with the comic if they came across it on their social media. As in the research by Romero-Hall et al. (2019), specific questionnaire questions were tied to each of these areas; they are outlined in the results below.

Research Questions

Evaluating the effectiveness of the intervention relied on assessing the degree to which learners met these two learning outcomes. Accordingly, we posed the following research questions:

- RQ1: To what extent is the learning intervention effective and engaging?
- RQ2: What are participants’ perceptions towards the learning intervention?

Methods

To evaluate the effectiveness of the learning intervention, we developed a questionnaire that consisted of 24 questions (see Appendix 1). The questionnaire began with the learning intervention in the form of the comic, was followed by Likert-scale and open-ended questions, and ended with a final section that gathered participants’ demographic information. The questionnaire was developed by two researchers. To assess its face and content validity, four researchers were provided with a description of the study and its goals and were asked to review the questionnaire for readability, clarity of language, style, formatting, and the degree to which they believed the questions covered all aspects of the constructs being measured. Their qualitative feedback was used to improve the clarity and precision of the questions. The revised questionnaire was then distributed to three individuals who fit the demographic characteristics of the participant group; they were asked to complete the questionnaire and offer their opinions. Their responses were evaluated by two researchers, leading to minor linguistic adjustments to the final questionnaire.

Participants

Participants (n = 295) were recruited using Prolific, an on-demand platform connecting researchers with potential participants (see “Data Collection” section). Most respondents resided in Canada (60.68%) or the USA (38.98%); one respondent did not identify their location. Two respondents indicated that they did not consider themselves part of the gender binary, while the remaining respondents identified as women. Most participants were between the ages of 18 and 44 (18–24: 26.78%; 25–34: 33.22%; 35–44: 22.03%). Additionally, 11.52% of participants were between the ages of 45 and 54, 4.41% were between 55 and 64, and 2.03% were over 65. Participants identified with a wide range of heritages, most commonly European (42.71%), North American (37.97%), and Asian (31.86%) (see Footnote 1). The largest group of participants held a Bachelor’s degree (43.4%), while 19.7% had completed a Master’s or Doctoral degree and 12.6% had completed high school or less. Other participants had completed a professional degree or diploma (2.4%) or some college (14.6%).

Because this study focuses on social media microlearning interventions and on participants’ efforts to evaluate the information they encounter about COVID-19, we also invited them to respond to questions about (a) the social technologies they most commonly used, and (b) how confident they felt in their ability to assess the accuracy of information they encounter about COVID-19. Participants could select as many social platforms as they used. All participants reported using at least one social platform, the most common being email (92.2%), followed by Facebook (82.3%), Instagram (76.9%), and Reddit (64.0%). The least used platforms were WhatsApp (40.1%) and Weibo (2.0%). The majority of participants used social media daily (91.0%). Most participants reported being somewhat (66.8%) or extremely confident (23.1%) in their ability to assess the accuracy of COVID-19 information online. A minority reported that they were neither confident nor unconfident (7.1%) or somewhat unconfident (3.1%) in this ability.

Data Collection

Data were collected using a questionnaire distributed by the online participant recruitment firm Prolific on May 21, 2021. Potential participants were defined as those who fit the demographic criteria of gender (female), age (18 years or older), and location (Canada and the USA). A random subset of individuals who had signed up to Prolific to express interest in completing questionnaires, and who fit this profile, received an email invitation through Prolific to participate in the study. In total, 300 questionnaires were completed. Five were disqualified because the consent provided was incomplete, leaving a final sample of 295 respondents. The use of Prolific to recruit participant pools is well documented in the broader literature (Palan & Schitter, 2018), and it has been used extensively across disciplines and research efforts, including efforts to combat misinformation through learning (Basol et al., 2021).

Data Analysis

Quantitative and qualitative data were analysed using descriptive statistics and open coding, respectively. Much of the data analysis was descriptive in nature: one researcher completed the analysis using Excel, and a second verified the process and results. One researcher analysed the qualitative data in two different ways. For the open-ended responses to the questions “What, if anything, do you like/dislike about this comic?”, the researcher used an open coding approach to create categories of likes and dislikes (Strauss & Corbin, 1990). First, the researcher read through each questionnaire response and separated likes and dislikes into two columns. Next, they read the first item in the likes column and created a code to describe it. The code was then compared to the second item. If the code described the second item, they assigned the code to this item and moved to the next. If the code did not describe it, they created a second code that captured its meaning. Every time a new code was created, the researcher compared it to all earlier coded items to identify whether it could also describe them. This process of constantly comparing items and codes continued until all items were coded. Once the likes column was completely coded, the researcher followed the same steps to code the dislikes column. To limit the incidence and impact of bias, the codes in both columns were reviewed by a second researcher. The two researchers then discussed, reviewed, revised where necessary, and compiled the codes into themes.
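
The constant-comparison steps described above amount to a loop over responses. The Python sketch below is illustrative only: in the study, deciding whether a code describes a response was a researcher's interpretive judgment, so the keyword functions `keyword_describes` and `keyword_make_code`, the `LEXICON`, and the example responses are hypothetical stand-ins, not the study's codebook or data. The sketch also assumes that a single item may receive more than one code, which the re-comparison step implies but the text does not state explicitly.

```python
from typing import Callable, List

# In the study, "does this code describe this item?" was a human judgment.
# Here it is modelled as a function so that the comparison loop can be shown.
Judgment = Callable[[str, str], bool]


def constant_comparison(
    items: List[str],
    describes: Judgment,
    make_code: Callable[[str], str],
) -> List[List[str]]:
    """Return the codes assigned to each item, in the items' original order."""
    codes: List[str] = []
    assigned: List[List[str]] = [[] for _ in items]
    for i, item in enumerate(items):
        # Compare the current item against every code created so far.
        matching = [code for code in codes if describes(code, item)]
        if matching:
            assigned[i].extend(matching)
            continue
        # No existing code fits, so create a new code that captures the item.
        new_code = make_code(item)
        if new_code not in codes:
            codes.append(new_code)
        assigned[i].append(new_code)
        # Constant comparison: check the new code against all earlier items.
        for j in range(i):
            if describes(new_code, items[j]) and new_code not in assigned[j]:
                assigned[j].append(new_code)
    return assigned


# Hypothetical keyword lexicon standing in for researcher judgment.
LEXICON = {
    "graphics/art style": ("art", "drawing", "graphics"),
    "readability/typography": ("font", "easy to read"),
    "simplicity of message": ("simple", "short"),
}


def keyword_describes(code: str, item: str) -> bool:
    """Hypothetical: a code describes an item if any of its keywords appear."""
    text = item.lower()
    return any(keyword in text for keyword in LEXICON.get(code, ()))


def keyword_make_code(item: str) -> str:
    """Hypothetical: name a new code after the first lexicon entry that fits."""
    text = item.lower()
    for code, keywords in LEXICON.items():
        if any(keyword in text for keyword in keywords):
            return code
    return "other"


# Invented example "likes", not participant data.
EXAMPLE_LIKES = [
    "Love the art and the simple message",
    "Easy to read font",
    "The drawing of the cat",
    "Short and simple",
]

if __name__ == "__main__":
    result = constant_comparison(EXAMPLE_LIKES, keyword_describes, keyword_make_code)
    for like, assigned_codes in zip(EXAMPLE_LIKES, result):
        print(f"{like!r}: {assigned_codes}")
```

In this toy run, the code "simplicity of message" is only created at the fourth response and is then also assigned, through back-comparison, to the first. The later steps of second-researcher review and grouping codes into themes remain human activities and are not modelled.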

To examine whether the learning outcomes were achieved, the questionnaire included two kinds of assessments in order to gather different kinds of evidence: (1) two multiple-choice questions (described below), and (2) the open-ended question “Based on this comic, what did you learn about misinformation?” To analyse responses to the open-ended question in accordance with the learning objectives, two researchers developed a pass-fail rubric. If a participant’s response described the relationship between emotions (such as anger or fear) and misinformation, it was coded as pass (i.e. as evidence of being able to “name the role that anger and fear play in the spread of misinformation”). Similarly, if a response mentioned any practical action that could be taken to end, pause, mitigate, or otherwise interrupt misinformation, it was also coded as pass (i.e. as evidence of being able to “identify a strategy for interrupting the spread of misinformation driven by fear or anger”). Responses that named neither the role that emotions play in misinformation nor actions that could be taken to mitigate it were coded as fail. A third researcher was trained in the use of the rubric and examined all open-ended responses using it. Once this researcher had reviewed all participant responses, the accuracy of the coding was verified by one of the two researchers who developed the rubric.
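
Because each open-ended response was judged against both learning outcomes, the two pass/fail decisions combine into the four reporting categories used in the results (both outcomes, first outcome only, second outcome only, neither). The Python sketch below illustrates that combination and the percentage tally. It is a hypothetical illustration, not the study's rubric: the cue lists `EMOTION_CUES` and `ACTION_CUES` are invented stand-ins for the trained rater's judgment, and simple keyword matching would miss paraphrases and could not tell whether a response actually describes a relationship.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical keyword cues standing in for the trained rater's judgment.
EMOTION_CUES = ("emotion", "anger", "angry", "fear", "afraid")
ACTION_CUES = ("pause", "stop", "wait", "take a break", "don't share")

CATEGORIES = ("both", "first only", "second only", "neither")


def meets_outcome_1(response: str) -> bool:
    """Outcome 1: names the role of emotions such as anger or fear."""
    text = response.lower()
    return any(cue in text for cue in EMOTION_CUES)


def meets_outcome_2(response: str) -> bool:
    """Outcome 2: names a practical action that interrupts misinformation."""
    text = response.lower()
    return any(cue in text for cue in ACTION_CUES)


def categorize(response: str) -> str:
    """Combine the two pass/fail judgments into one reporting category."""
    first, second = meets_outcome_1(response), meets_outcome_2(response)
    if first and second:
        return "both"
    if first:
        return "first only"
    if second:
        return "second only"
    return "neither"


def tally(responses: Iterable[str]) -> Dict[str, float]:
    """Percentage of responses per category, rounded to two decimals."""
    categories = [categorize(r) for r in responses]
    n = len(categories)
    counts = Counter(categories)
    return {c: round(100 * counts[c] / n, 2) for c in CATEGORIES} if n else {}


# Invented example responses, not participant data.
EXAMPLE_RESPONSES = [
    "Fear and anger make people share false posts",
    "When I feel angry I should pause before reacting",
    "Take a break from social media",
    "Misinformation spreads easily on social media",
]

if __name__ == "__main__":
    print(tally(EXAMPLE_RESPONSES))
```

Keeping the two outcome checks as separate functions mirrors the rubric's structure: each outcome is judged independently, and the reporting category is derived only afterwards.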

Results

RQ 1: To What Extent Is the Learning Intervention Effective and Engaging?

Effectiveness — Assessment of Learning Outcomes

Three questionnaire questions evaluated the effectiveness of the comic: two multiple-choice questions and the open-ended question described above. Results indicate that the comic was effective, as most participants successfully met both learning outcomes.

To evaluate whether the intervention was effective in addressing the first learning outcome, participants were asked to recall and identify what drives the spread of misinformation (Table 1). The vast majority (86.7%) identified the correct answer, indicating that, according to the comic, strong emotions like fear or anger were the drivers. Of the incorrect answers, social media was selected most often (12.2%); less than one percent of participants selected trolls, pranksters, and other bad actors (0.7%) or sharing social media posts with family (0.3%).

To evaluate whether the intervention was effective in addressing the second learning outcome, participants were asked to recall the steps that the individual in the comic took to interrupt the spread of misinformation (Table 2). The vast majority of respondents (95.0%) identified the correct answer, while the rest selected erroneous answers.

Finally, we examined participants’ responses to the open-ended question “Based on this comic, what did you learn about misinformation?” We used this knowledge check as an additional way to assess what, if anything, participants learned about misinformation in relation to the intervention (Table 3). Of 292 respondents, 19.52% provided answers indicating that they met both learning outcomes; 47.26% named only the role that fear and anger play in the spread of misinformation (first learning outcome); and 13.3% named only a strategy for interrupting the spread of misinformation driven by fear or anger (second learning outcome). In other words, approximately 80% of participants met at least one learning outcome. The responses of 19.9% of participants did not address either learning outcome; instead, they named other things they had learned about misinformation, such as that COVID-19 misinformation is a problem and that misinformation spreads easily on social media.
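
The reported proportions can be checked against one another. Using the count of 57 responses meeting both outcomes (reported in the Discussion) and the category percentages above, the arithmetic behind "approximately 80%" is:

```latex
\begin{aligned}
\tfrac{57}{292} &\approx 19.52\% && \text{(both outcomes)}\\
19.52\% + 47.26\% + 13.3\% &= 80.08\% \approx 80\% && \text{(at least one outcome)}\\
80.08\% + 19.9\% &= 99.98\% \approx 100\% && \text{(all 292 responses, allowing for rounding)}
\end{aligned}
```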

Effectiveness — Participant Perceptions

To assess perceptions of effectiveness, we invited participants to respond to a number of statements about the comic (Table 4). Respondents generally expressed positive opinions, agreeing that the comic was clear (i.e. that it clearly explained concepts, what drives misinformation, and the relationship between emotions and misinformation). Results showed similarly high levels of agreement with statements capturing participants’ opinions on the importance of the information in the comic, the comic’s ability to help them understand the relationship between emotions and information, and whether the comic was organized in a way that helped them learn.

Perceptions of effectiveness were also measured by asking participants how likely they were to apply the suggestion to pause when they came across something online that made them angry or upset (Table 5). More than three quarters of participants reported that they were either likely (31.2%) or extremely likely (47.8%) to pause. Less than 10% indicated they were neither likely nor unlikely to pause, and the remaining 10% were unsure, somewhat unlikely, or extremely unlikely to pause.

Finally, we provided participants with a list of factors and asked them to identify which factor(s) would increase the likelihood of their following the recommendations in the comic (i.e. to pause or stop when feeling angry or upset). We asked this question to determine what could be done to make the comic more effective (Table 6). Participants were able to make more than one selection. Most participants identified factors related to expertise around COVID-19 and misinformation. For instance, participants noted that they would be more likely to follow the comic’s recommendations if it came from public health officials (60.0%), official government sources (57.3%), or a doctor’s office or clinic (46.4%).

Engagement

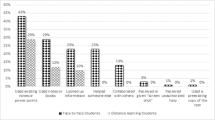

We assessed engagement by seeking to understand whether and how participants would engage with the comic if they came across it outside of this study. To do so, we asked respondents to select among a number of actions they would take if the comic came across their social media feed (Table 7). Participants were able to make more than one selection. Over three-quarters indicated they would read it; nearly half (45.1%) indicated they would check the source, and almost a third (32.2%) said they would look for more information. Another 28.5% said they would like it on social media, and 20.0% said they would ignore it. Other results include those who said they would discuss it with friends or family (18.3%), those who would share it on social media (17.0%), and another 2.7% who said they would share it on a messaging app.

RQ 2: What Are Participants’ Perceptions Towards the Learning Intervention?

The second research question aimed to examine participants’ general perceptions of the comic. This was initially examined through ten statements measuring how appealing respondents found the comic and which specific qualities they liked or disliked (Table 8). Notable results include participants’ evaluation of the comic as having a clear purpose (M = 4.16, SD = 0.77), being attractive (M = 3.95, SD = 0.87), and having typography that was easy to read (M = 4.16, SD = 0.66). Table 8 presents the full results of participants’ perceptions of the comic.

We also assessed perceptions of the relevance of the information in the comic and the level of trust in it. Almost half of participants (49.8%) felt that the information was somewhat relevant to them as Internet users, and 31.5% found it extremely relevant; at the other extreme, only 2.7% found it extremely irrelevant. In terms of trust, nearly 40% found the comic somewhat trustworthy and another 20.5% found it extremely trustworthy. A small percentage (3.8%) found it extremely untrustworthy and another 11.3% found it somewhat untrustworthy, with the remainder (24.6%) finding it neither trustworthy nor untrustworthy.

Finally, we used two open-ended questions to assess what, if anything, participants liked or disliked about the comic (Table 9). Our analysis generated two themes to describe what participants liked: aesthetics and message/content. Within the aesthetics theme, the graphics and art style were coded most often (38%), with 6.8% of participants specifically mentioning that they liked the inclusion of the cat and 4.8% specifically liking that the comic featured a woman of colour. The second most popular aesthetic quality was the comic’s readability and typography (17.5%), followed by its approachability (11.3%). Content and message qualities that participants liked included agreement with the message (26.4%), the simplicity of the message (24.0%), and the message’s relatability (18.1%). Finally, 3.8% of participants indicated that they liked the success of the comic’s character, and 2.4% appreciated that the comic offered practical advice.

For the second open-ended question, about what participants disliked, the same two themes emerged, along with a third in which participants indicated that there was nothing they disliked (26.9%). Considerably more items were coded in the message/content theme (50.0%) than in the aesthetics theme (26.6%). Dislikes of the message and content varied widely, but by far the most common was the view that the comic teaches ignorance instead of verification (19.9%). Others felt it was too simplistic or vague (8.4%) or condescending (5.2%). Some disliked that the source of the comic was not provided (5.2%), and others that the comic did not explain or define misinformation (3.5%). Several participants disagreed with the message of the comic (3.1%), and some felt it was unrelatable (2.8%). Five participants felt that the character’s emotions were justifiable. The codes associated with aesthetic qualities that participants disliked included readability/typography (8.7%), a perceived childish or unprofessional look (8.7%), graphics/art style (6.3%), and the use of the cat (1.4%). Four individuals noted racial concerns, three of whom noted that the comic may inadvertently perpetuate a racial stereotype.

Discussion

The formative evaluation presented here is the first assessment of an intervention targeting misinformation within a broader design-based research effort. Based on the outcomes of the evaluation described above and synthesized in the paragraphs that follow, we concluded that the short intervention we produced was generally appealing and quite effective in enabling individuals to recall information that could help them mitigate the flow of misinformation. Importantly, the formative evaluation process enabled us to identify what could be improved in future iterations of our design. First, we learned that the design needs to be more explicit about the strategies that users should employ to interrupt the flow of misinformation. More explicit instruction would benefit not only learners but also the alignment between instruction and assessment. Second, we identified that our assessment of learning outcomes ought to be more sophisticated so that learning can be identified with greater granularity. We are considering the following ways to address this in future iterations: (a) a pre-post assessment to enable us to measure changes in knowledge; (b) an assessment that evaluates transfer of knowledge to new situations or scenarios; and (c) ways in which learning in situ could be assessed. With respect to the latter, while a high proportion of individuals in our study indicated that, following this experience, they were likely to pause when they encounter instances of misinformation, the degree to which they actually do so remains an open question. Third, our assessment reiterated the significant role played by the source of instruction, as a large proportion of users indicated that they would be more likely to follow the recommendations in the design if it came from a public health official, government, doctor, health clinic, or friend.
This finding leads us to consider situating the design in one of these contexts to increase its chances of success. For instance, a future iteration could be delivered through a local health clinic, or the design itself could be framed as a health professional offering recommendations for mitigating the flow of misinformation. Conversely, users indicated that some contexts would not increase the likelihood of adoption (e.g. if the instruction was delivered by a celebrity or as a flyer at a local gym), providing guidance as to contexts we should avoid. Notably, this finding is consistent with guidance in the broader literature on the effectiveness of different public figures in public health education (e.g. Abu-Akel et al., 2021), which suggests, for example, that celebrities may not be the most effective spokespeople. We explore these issues in further detail, and in the context of our findings, below.

To assess learning, we used two multiple-choice questions and one open-ended question. Study participants scored highly on the two multiple-choice questions. Responses to the open-ended question were also promising, as about 80% of participants met at least one learning outcome. Yet only around 19.5% of participants (57 of 292) provided answers meeting both learning outcomes, i.e. the role of fear and anger in the spread of misinformation and a strategy to disrupt its spread. Although both the multiple-choice and the open-ended questions focused on information recall rather than transfer or application of knowledge, fewer respondents were able to state what they learned than were able to identify the correct answer. Several explanations may account for this discrepancy. First, the open-ended question offered no details on what was expected beyond asking, “what did you learn about misinformation?” It did not scaffold participants, provide further specificity, or ask participants to state several things that they learned. Therefore, even if learning about misinformation occurred, it might not have aligned with the learning outcomes that the evaluation rubric assessed. Second, it is likely that the multiple-choice questions were simple enough for some participants to identify the correct answers through recognition rather than recall.

Participants also appeared to find the intervention effective. Notably, while participants felt that the comic clearly presented information on the relationship between emotions and misinformation, they reported that the comic did not develop an understanding of that relationship as clearly. This response seems to reflect the fact that the precise dynamics of how emotionally charged information elicits particular responses on social media are complex (Nesi et al., 2021). Although this discussion is beyond the scope of the intervention, it nonetheless points to an opportunity for future iterations to pursue explicit learning outcomes around those dynamics. Among the statements evaluating how effectively the comic conveyed key ideas, participants agreed more strongly that the comic indicated points to remember than that it was organized in a way that helped learning or that it explained concepts clearly. This latter finding suggests that future iterations should consider more carefully how concepts are presented. Assumptions about learners’ understanding of relevant concepts should be identified and, if necessary, unpacked as part of the learning intervention itself.

Although the comic itself was short, findings suggest that the learning outcomes were achieved at high rates. When asked how likely they were to apply the suggestion to pause before reacting, 31.2% of respondents indicated they were extremely likely and 47.8% indicated they were somewhat likely. While these responses are encouraging, future research should endeavour to verify whether these strategies are actually enacted, possibly through experimental designs that assess the learning outcomes in practice rather than through self-reported data. If mindfulness does indeed affect people’s engagement with emotional information online, as recent literature is beginning to suggest (Charoensukmongkol, 2016; Sebastião, 2019), developing ways to encourage mindfulness, particularly at scale, could have a notable impact on the spread and effects of misinformation. Relatedly, respondents indicated that a variety of factors would make them more likely to follow the recommendations, especially if the recommendations came from sources of institutional authority such as public health officials, official government sources, and doctors’ offices. Thus, education about mindfulness and online health information could gain credibility (and therefore, potentially, effectiveness) if created, endorsed, or otherwise shared by such sources. This suggests an opportunity for public health and government communication programs to use social media and other communication pathways to promote emotional awareness as part of information literacy.

Engagement was assessed based on what respondents said they would do if the comic appeared in their feed. Most responded that they would read it (75.6%), suggesting at minimum that the comic format may be a valuable tool for education strategies that circulate on social media. However, one-fifth said they would ignore it, which suggests room for improvement. Such improvement might be informed by participants’ perceptions of the learning intervention, which our second main research question addressed. Of particular interest were the statements about interest and aesthetics, which revealed that a large number of participants did not see themselves in the comic, calling into question the comic’s relevance to them. Revising the narrative so that participants can more easily identify with the story may improve results and would be a worthwhile modification for future iterations.

The open-ended responses were compelling and pointed to both successes and failures. For example, the graphics and art style were generally described as appealing and likable (38.0%), with 20 people specifically mentioning the cat figure positively. Among the factors participants found unappealing, several important areas stand out. A fifth of participants (19.9%) felt the comic taught ignorance instead of verification. This is important to reflect on, as research shows that verification is not always a priority for social media users and is thus not the only useful way to engage with online information (Gruzd & Mai, 2020). Nevertheless, that some participants perceived the intervention as teaching ignorance suggests that further messaging around the purpose of mindfulness is needed. Additionally, a number of participants disliked the tone, with 15 describing it as condescending and 25 as childish or unprofessional. Tone in relation to the comic (or other storytelling) format therefore needs careful consideration in future iterations lest it alienate people. Finally, four participants took issue with the portrayal of Jenny, a Black woman who becomes angry in the comic, interpreting it as the stereotype of the angry Black woman. Although only a few individuals raised this concern, sensitivity to what could be perceived as racial stereotypes (or any stereotypes at all) should be a priority for all future interventions.

Limitations

There are several limitations to this research. Despite the high rates of effectiveness observed, the way intervention effectiveness was assessed has limitations. For instance, although a pretest-posttest design could have offered a more robust assessment of the intervention’s effectiveness, this exploratory study succeeded in examining whether the intervention was at all effective, thus providing a foundation for further investigation. Additionally, there was minimal time delay between exposure to the intervention and testing of the learning outcomes. Future research should evaluate effectiveness more robustly, through deeper application of the learning outcomes or a longer delay between intervention and testing. Indeed, in this research, the assessment of how effectively the learning outcomes were achieved focuses on recall of information, not on whether respondents were able to apply what they learned. Assessing whether participants can actually apply what they learned, as opposed to recalling its content, is challenging because it would require observing respondents in situations where they encounter misinformation as they do in the real world. Potential solutions include devising self-report methods, such as regular check-ins with participants over a set period during which they are online, or novel methodological approaches that track the relationship between individuals’ arousal levels and their social media activity, as has been done in research on social information seeking on Facebook (Wise et al., 2010).

Further limitations stem from the methodology, in particular the use of a questionnaire. While our participant pool was constrained according to set parameters, more specificity could be beneficial given the different ways that people in different groups use social media (Auxier & Anderson, 2021). In other words, more granular targeting of specific groups could shift the results, whether favourably or otherwise. Additionally, with improved targeting, a comic or other form of intervention could be developed by people with lived experience as members of the group for whom the intervention is intended. For example, while two (white) mothers on the research team for this project were able to draw on their experience as mothers for the comic, further identity factors could be relevant, such as sexuality, socioeconomic status, or race.

Conclusion

As part of our design-based research, we evaluated a short comic about mindfulness and misinformation through a questionnaire shared with mothers. While the comic was successful in achieving the project’s learning outcomes, the formative evaluation process offered insights for future improvement. Our next iteration of this research addresses these areas as we continue to develop new tools for engaging and educating the public on the topic of online misinformation.

Notes

For the question on heritage, participants were asked to choose all that applied; therefore, results presented here are not cumulative (i.e. do not total 100%).

References

Abu-Akel, A., Spitz, A., & West, R. (2021). The effect of spokesperson attribution on public health message sharing during the COVID-19 pandemic. PLoS ONE, 16(2), e0245100.

Agley, J., Xiao, Y., Thompson, E. E., et al. (2020). COVID-19 misinformation prophylaxis: Protocol for a randomized trial of a brief information intervention. JMIR Research Protocols, 9(12), e24383.

Auxier, B., & Anderson, M. (2021). Social media use in 2021. Pew Research Center. Retrieved from https://www.pewresearch.org/internet/2021/04/07/social-media-use-in-2021/

Basol, M., Roozenbeek, J., Berriche, M., Uenal, F., McClanahan, W. P., & van der Linden, S. (2021). Towards psychological herd immunity: Cross-cultural evidence for two prebunking interventions against COVID-19 misinformation. Big Data & Society, 8(1), 20539517211013868.

Brodsky, J. E., Brooks, P. J., Scimeca, D., Galati, P., Todorova, R., & Caulfield, M. (2021). Associations between online instruction in lateral reading strategies and fact-checking COVID-19 news among college students. AERA Open. https://doi.org/10.1177/23328584211038937

Caulfield, M. (2019, June 19). SIFT (the four moves). Hapgood. Retrieved February 11, 2022, from https://hapgood.us/2019/06/19/sift-the-four-moves/

Charoensukmongkol, P. (2016). Contribution of mindfulness to individuals’ tendency to believe and share social media content. International Journal of Technology and Human Interaction (IJTHI), 12(3), 47–63.

Chenzi, V. (2020). Fake news, social media and xenophobia in South Africa. African Identities, 19(4), 502–521. https://doi.org/10.1080/14725843.2020.1804321

Chou, W.-Y. S., & Budenz, A. (2020). Considering emotion in COVID-19 vaccine communication: Addressing vaccine hesitancy and fostering vaccine confidence. Health Communication. https://doi.org/10.1080/10410236.2020.1838096

Chou, W.-Y. S., Gaysynsky, A., & Vanderpool, R. C. (2021). The COVID-19 misinfodemic: Moving beyond fact-checking. Health Education & Behavior, 48(1), 9–13. https://doi.org/10.1177/1090198120980675

Choudrie, J., Banerjee, S., Kotecha, K., Walambe, R., Karende, H., & Ameta, J. (2021). Machine learning techniques and older adults processing of online information and misinformation: A covid 19 study. Computers in Human Behavior, 119, 106716. https://doi.org/10.1016/j.chb.2021.106716

Ciarrochi, J., Caputi, P., & Mayer, J. D. (2003). The distinctiveness and utility of a measure of trait emotional awareness. Personality and Individual Differences, 34(8), 1477–1490. https://doi.org/10.1016/S0191-8869(02)00129-0

Gabarron, E., Oyeyemi, S. O., & Wynn, R. (2021). COVID-19-related misinformation on social media: A systematic review. Bulletin of the World Health Organization, 99(6), 455–463A. https://doi.org/10.2471/BLT.20.276782

Gruzd, A., & Mai, P. (2020). Inoculating against an infodemic: A Canada-wide COVID-19 news, social media, and misinformation survey. Retrieved from https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3597462

Han, J., Cha, M., & Lee, W. (2020). Anger contributes to the spread of COVID-19 misinformation. Harvard Kennedy School Misinformation Review, 1(3).

Heffner, J., Vives, M. -L., & FeldmanHall, O. (2021). Emotional responses to prosocial messages increase willingness to self-isolate during the COVID-19 pandemic. Personality and Individual Differences, 170, 110420. https://doi.org/10.1016/j.paid.2020.110420

Hill, C. L., & Updegraff, J. A. (2012). Mindfulness and its relationship to emotional regulation. Emotion, 12(1), 81–90.

Honebein, P. C., & Reigeluth, C. M. (2021). To prove or improve, that is the question: The resurgence of comparative, confounded research between 2010 and 2019. Educational Technology Research and Development, 69(2), 465–496.

Houlden, S., Veletsianos, G., Hodson, J., Reid, D., & Thompson, C. P. (2022). COVID-19 health misinformation: Using design-based research to develop a theoretical framework for intervention. Health Education. Online First. https://doi.org/10.1108/HE-05-2021-0073

Hotez, P., Batista, C., Ergonul, O., Figueroa, J. P., Gilbert, S., Gursel, M., Hassanain, M., Kang, G., Kim, J. H., Lall, B., Larson, H., Naniche, D., Sheahan, T., Shoham, S., Wilder-Smith, A., Strub-Wourgaft, N., Yadav, P., & Bottazzi, M. E. (2021). Correcting COVID-19 vaccine misinformation: Lancet commission on COVID-19 vaccines and therapeutics task force members*. EClinicalMedicine. https://doi.org/10.1016/j.eclinm.2021.100780

Lewandowsky, S., Ecker, U. K. H., Seifert, C. M., Schwarz, N., & Cook, J. (2012). Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest, 13(2), 106–131.

Martel, C., Pennycook, G., & Rand, D. G. (2020). Reliance on emotion promotes belief in fake news. Cognitive Research: Principles and Implications, 5, Article 47, 1–20.

Matoff-Stepp, S., Applebaum, B., Pooler, J., & Kavanagh, E. (2014). Women as health care decision-makers: Implications for health care coverage in the United States. Journal of Health Care for the Poor and Underserved, 25(4), 1507–1513.

McLoughlin, C., & Lee, M. (2011). Pedagogy 2.0: Critical challenges and responses to Web 2.0 and social software in tertiary teaching. In Web 2.0-Based E-Learning: Applying Social Informatics for Tertiary Teaching (pp. 43–69). IGI Global. https://doi.org/10.4018/978-1-60566-294-7

Merrill, M. D. (2013). First principles of instruction: Identifying and designing effective, efficient, and engaging instruction. Pfeiffer.

Nesi, J., Rothenberg, W. A., Bettis, A. H., Massing-Schaffer, M., Fox, K. A., Telzer, E. H., Lindquist, K. A., & Prinstein, M. J. (2021). Emotional responses to social media experiences among adolescents: Longitudinal associations with depressive symptoms. Journal of Clinical Child & Adolescent Psychology, 1–16.

Nielsen, L., & Kaszniak, A. W. (2006). Awareness of subtle emotional feelings: A comparison of long-term meditators and nonmeditators. Emotion, 6(3), 392–405.

NSF. (2021a). NSF Award Search: Award # 2137530 - NSF Convergence Accelerator Track F: Adapting and scaling existing educational programs to combat inauthenticity and instill trust in information. Retrieved September 29, 2021, from https://www.nsf.gov/awardsearch/showAward?AWD_ID=2137530

NSF. (2021b). NSF Award Search: Award Abstract # 2137519 NSF Convergence Accelerator Track F: Co-designing for trust: Reimagining online information literacies with underserved communities. Retrieved September 29, 2021, from https://www.nsf.gov/awardsearch/showAward?AWD_ID=2137519

O’Connor, C., & Weatherall, J. O. (2019). The misinformation age: How false beliefs spread. Yale University Press.

Palan, S., & Schitter, C. (2018). Prolific.ac—A subject pool for online experiments. Journal of Behavioral and Experimental Finance, 17, 22–27.

Romero-Hall, E., Adams, L., & Osgood, M. (2019). Examining the effectiveness, efficiency, and usability of a web-based experiential role-playing aging simulation using formative assessment. Journal of Formative Design in Learning, 3(2), 123–132.

Schuetz, S. W., Sykes, T. A., & Venkatesh, V. (2021). Combating COVID-19 fake news on social media through fact checking: Antecedents and consequences. European Journal of Information Systems, 30(4), 376–388. https://doi.org/10.1080/0960085X.2021.1895682

Sebastião, L. V. (2019). The effects of mindfulness and meditation on fake news credibility [MA thesis, Universidade Federal do Rio Grande do Sul]. https://lume.ufrgs.br/handle/10183/197895

Siwakoti, S., Yadav, K., Bariletto, N., Zanotti, L., Erdogdu, U., & Shapiro, J. N. (2021). How COVID drove the evolution of fact-checking. Harvard Kennedy School Misinformation Review. https://doi.org/10.37016/mr-2020-69

Strauss, A., & Corbin, J. (1990). Basics of qualitative research: Grounded theory procedures and techniques. Sage Publications.

Treen, K. M. D. I., Williams, H. T. P., & O’Neill, S. J. (2020). Online misinformation about climate change. WIREs Climate Change, 11(5), e665. https://doi.org/10.1002/wcc.665

Veletsianos, G., Houlden, S., Reid, D., Hodson, J., & Thompson, C. (in press). Design principles for an educational intervention into online vaccine misinformation. TechTrends.

Vraga, E. K., Tully, M., & Bode, L. (2020). Empowering Users to Respond to Misinformation about Covid-19. Media and Communication, 8(2), 475–479. https://doi.org/10.17645/mac.v8i2.3200

Wang, F., & Hannafin, M. J. (2005). Design-based research and technology-enhanced learning environments. Educational Technology Research and Development, 53(4), 5–23.

Wilson, B., Parrish, P., & Veletsianos, G. (2008). Raising the bar for instructional outcomes: Towards transformative learning experiences. Educational Technology, 48(3), 39–44.

Wise, K., Alhabash, S., & Park, H. (2010). Emotional responses during social information seeking on Facebook. Cyberpsychology, Behavior, and Social Networking, 13(5), 555–562.

WHO. (2020). Resolution WHA 73.1: COVID-19 response. https://apps.who.int/gb/ebwha/pdf_files/WHA73/A73_R1-en.pdf

Young, L. E., Sidnam-Mauch, E., Twyman, M., Wang, L., Xu, J. J., Sargent, M., Valente, T. W., Ferrara, E., Fulk, J., & Monge, P. (2021). Disrupting the COVID-19 misinfodemic with network interventions: Network solutions for network problems. American Journal of Public Health, 111(3), 514–519. https://doi.org/10.2105/AJPH.2020.306063

Zadrozny, B., & Nadi, A. (2019). How anti-vaxxers target grieving moms and turn them into crusaders against vaccines. NBC News. https://www.nbcnews.com/tech/social-media/how-anti-vaxxers-target-grieving-moms-turn-them-crusaders-n1057566

Acknowledgements

This work was supported by the Canadian Institutes of Health Research (CIHR) grant number 170367.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Veletsianos, G., Houlden, S., Hodson, J. et al. An Evaluation of a Microlearning Intervention to Limit COVID-19 Online Misinformation. J Form Des Learn 6, 13–24 (2022). https://doi.org/10.1007/s41686-022-00067-z

DOI: https://doi.org/10.1007/s41686-022-00067-z