Abstract

A growing body of literature argues that digital models do not just help organizational leaders to predict the future. Models can inadvertently produce the very future they purport to describe. In this view, performativity is a side-effect of digital modeling. But digital twins turn such thinking on its head. Digital twins are digital models that are designed to be performative—changes in the model are supposed to produce corresponding changes in the world the model represents. This is what makes digital twins useful. But for decision-makers to act in ways that align the world outside the model with the predictions contained within, they must first believe that the model is a faithful representation. In other words, for a digital twin to become performative, it must first be taken-for-granted as “real”. In this paper, we explore the technological and organizational characteristics that are likely to shape the level of taken-for-grantedness of a digital twin.

Prediction is a key organizational activity. Because neither the past nor people’s instincts are reliable predictors of the future, organizations are increasingly turning toward digital models that use simulations to estimate the likelihood that events, processes, or outcomes will occur (Brayne 2017; Dodgson et al. 2007; Faraj et al. 2018; Leonardi 2012; Thomke 2003). Although digital models are, by their nature, imperfect characterizations of the likelihood of real-world outcomes, even marginally accurate predictions of the future are believed to give those who use them an edge in the present.

But a growing body of research on performativity within organizations suggests that predictions made by digital models do not just represent a possible future state of a system; rather, they can perform their predictions by sparking a chain of events that can lead to the construction of the very reality they purport to describe (Beunza and Ferraro 2019; Knorr-Cetina and Grimpe 2008; Mackenzie and Millo 2003; Orlikowski and Scott 2014). Studies conducted under this performative rubric describe how predictions made in domains as diverse as, and through models as different as those used in, atmospheric science (Barley 2015), automotive engineering (Leonardi 2012), and financial markets (Callon 1998; MacKenzie 2006), including financial markets’ not-so-diverse sub-fields of arbitrage trading (Beunza and Stark 2008), socially responsible investing (Beunza and Ferraro 2019), and commodity auctions (Garcia-Parpet 2007), can lead to behaviors that make those predictions come true.

One increasingly common type of model used by organizations for the purpose of prediction is the digital twin. A digital twin is an interactive digital representation that has a synchronized, two-way relationship between a mathematical representation and a real-world phenomenon (van der Aalst 2021; Lyytinen et al. 2023). Like any digital model, a digital twin is a collection of algorithms that make predictions based on data. Algorithms are carefully defined procedures that take inputs and manipulate them based on certain assumptions. Digital twins use computational techniques to make various algorithms interact in ways that simulate the dynamics of complex environments under certain conditions. Those interactions among the algorithms are represented by equations assumed to be the “laws” that govern the relationships between them. As the complexity of the simulated environment increases, more algorithms are needed to arrive at a detailed prediction of the future. Digital twins are increasingly adopted in a diversity of industries, including manufacturing (Kritzinger et al. 2018; Tao and Qi 2019), urban planning (Shahat et al. 2021; White et al. 2021), and healthcare (Croatti et al. 2020; Liu et al. 2019).

The extant discussion about models and performativity suggests that digital models can shape the future as a byproduct of their attempt to predict it. But when considering digital twins, it does not make sense to treat performativity as a side-effect. Performativity is, in effect, the telos of a digital twin. This is because the purpose of a digital twin is not just to represent reality, but, rather, to create it in a performative loop. The two-way synchronization between model and real-world phenomenon means that when something happens in one, the change should immediately be reflected in the other. If, for example, an engineer on the ground changes the rate at which air is forced through the compressor in the digital twin of a jet engine, the adjustment is automatically carried out in the physical engine by either automatic flight controls or the pilot. Conversely, if a pilot increases the rate of air flow in the engine in the plane, the digital twin should immediately depict such an adjustment to maintain real-time synchronization. Achieving synchronized performative action between the two creates dual realities such that one can operate in either the digital or physical world with the same outcomes.

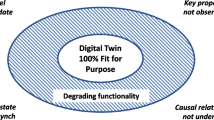

For a performative loop to work, the feedback between model and reality needs to be, or at least feel, frictionless. Lyytinen et al. (2023) ask in what way a digital representation can come to successfully stand in for another object without much critique or disbelief on the part of those who use it. They suggest that representations like digital twins work best when their “fit” with their referent is sound (p. 3). There are many factors that shape fit and therefore how “virtually perfect” a digital twin becomes (Grieves 2011). It is important, for example, that there is minimal lag between the model and referent (Wurm et al. 2023), which can depend on the extent of automation in the referent (Lyytinen et al. 2023) or the visibility of work processes (Leonardi and Treem 2020). By addressing fit, organizations can reduce lag and achieve high-fidelity digital models through which they can work the performative loop to experiment with and effectively manage real-world phenomena (Korotkova et al. 2023).

Despite the importance of understanding when predictions become performative (and when they do not), most studies of performativity have focused only on either the effects of performativity (Callon 1998; Garcia-Parpet 2007; Garud et al. 2018; Mackenzie and Millo 2003) or on elucidating the mechanisms through which behaviors come to align with predictions of them (Ferraro et al. 2005; Millo and Mackenzie 2009). In this paper, we expand this theorizing by asking what organizing practices produce predictions that can and will become performative. This seems a critical focus for scholarship about digital twins because if they do not become performative, they will be of little use for organizational design. Yet research shows great variation in whether people accept the reality that is simulated through digital models (Bailey et al. 2012). In some cases, questioning the representations produced through a model is important because doing so reminds viewers that the future is not already scripted and they have agency to intervene (Turkle 2009). In other cases, failure to accept that the dynamics simulated in the model are likely to occur may lead to inaction (Dunbar and Garud 2009). In still other cases, acting as though a model and the world it represents are one and the same can produce the very outcomes the model predicts (Callon 2008). No matter what the case, it is clear that the effect that digital twins can have on organizational action is critically dependent upon whether or not people take them for granted. Actors have taken a model for granted when they believe that what is happening within the model will also happen outside the model once certain decisions are made.

When something is taken for granted, its status as a “fact” is no longer questioned. As Berger and Luckmann wrote, “The reality of everyday life is taken for granted as reality. It does not require additional verification over and beyond its simple presence. It is simply there, as [a] self-evident and compelling facticity. I know that it is real” (1991, p. 37). A rich body of sociological and organizational research dating back to Berger and Luckmann’s influential work has theorized that the negotiated social orders that produce knowledge, practices, and institutions become taken for granted when they stop being questioned and start to be perceived as real (Barley 2008; Greenwood and Suddaby 2006; Heaphy 2013). This existing theory focuses on how certain ideas, values, or relations between entities become routinized and embedded into social and organizational life in ways that are no longer discussed or noticed (Colyvas and Powell 2006). But what happens when we use digital twins to simulate ideas, values or relations that people have already accepted as the way things are? Existing theory does not explain how and under what conditions models of those phenomena become taken for granted as the phenomena themselves.

In this paper, we expand such theorizing by asking how and under what conditions digital twins are likely to become taken for granted and, thus, can act performatively, just as those who adopt them hope they will.

Performativity through modeling

In 1970, JL Austin (1970) coined what he called an “ugly phrase”—performative utterances—to distinguish between those words that do something and those that simply describe something being done (p. 233). Although performative utterances appear grammatically identical to their non-performative counterparts, they have the power to effect change. Austin gave the examples of saying “I do” in a marriage ceremony, or breaking a bottle on a ship while saying, “I name this ship the Queen Elizabeth” as utterances that performed specific and important activities. These utterances do not describe the ceremony or christening; they perform them.

Since Austin’s writings, scholars have noted that it is not only utterances that are performative. Bubble chambers (Pickering 1995), economic calculations (Callon 1998), social scientific theories (Ferraro et al. 2005) and charts and graphs (Barley 2015), to name a few, can also bring into being the realities they purport to describe. Perhaps the most detailed example of performativity in practice comes from MacKenzie’s (2006) study of futures trading at the Chicago Board Options Exchange. MacKenzie followed the creation and diffusion of the Black–Scholes model of options pricing, an algorithmic model that identified inefficiencies in the market that could be exploited through arbitrage trading. His data showed that although predictions of the Black–Scholes model were inaccurate when it debuted in 1973, its subsequent adoption by traders changed their behaviors in ways that, over time, brought options pricing into alignment with the model’s predictions. As MacKenzie summarized, the model’s usefulness in helping traders to predict arbitrage opportunities “does seem to have helped to create patterns of prices consistent with the model” (p. 256). When something is performative it affects the very phenomenon that it measures, describes, or predicts. Consequently, the performativity thesis suggests a feedback loop that aligns the physical world and the model that predicts it (Abrahamson et al. 2016; Barnes 1983; Beunza and Ferraro 2019; Garud and Gehman 2019; Marti and Gond 2019).

A common thread across studies of predictive models is that in cases when they are performative, the effect is both unintentional and fleeting (Mackenzie 2006). When, for example, Black and Scholes designed their options pricing model, they sought to infer approximate (and often “unrealistic”) predictions about under- and over-valued stocks that could make for a lucrative arbitrage opportunity (2006, p. 32). It was beyond the scope of their calculations that the adoption of the model by traders could work to align future pricing with the model’s predictions. The model’s performativity was therefore a surprise. This kind of serendipitous alignment of prediction and reality is what makes performativity so fascinating. By contrast, digital twins’ designers seek to engineer a performative outcome in a reliable and enduring way. This is important not only because a twin must maintain a synchronized, two-way link between model and reality, but also because a core utility of digital twins for organizations is that people can run endless hypothetical scenarios and assess outcomes before making any changes in the real organization (van der Aalst 2021). For such experimentation to be useful, its predictions need to bear out in reality. Thus, a digital twin’s design is predicated on its performativity. Without it, its utility quickly dissipates.

The presence of human agents complicates efforts to engineer performativity. It is one thing to build digital twins of physical assets like jet engines, where components and interactions between them can be transposed onto a digital model using physics. It is an entirely different endeavor to build a model of a phenomenon with a social component. In modeling a car crash, for example, one must account for a range of possible human reactions over a split-second incident (Leonardi 2012). Going even further into the social realm, digital twins of business processes or even whole organizations introduce such complexity as to challenge the limits of the digital twin phenomenon (e.g., https://www.my-invenio.com/). Unlike the valves on a jet engine, people have the “capacity to make choices, learn from experience, and pursue their own objectives…[and] conflict is common” (Becker and Pentland 2022, p. 244). This means that if a user makes a change in a digital twin that relies on a human, rather than mechanical automation, to mirror the action in the real world, the virtual perfection is likely to falter. Workers may not notice a task assignment from the model, or, if they do, may not want to carry it out because they have goals contrary to those of the digital twin. Indeed, their refusal may be the right call for themselves and the organization if they know something important that the model’s designers did not, or if they observe a safety risk that has gone digitally unaccounted for. Thus, for digital twins of organizations to achieve a level of high-fidelity synchronization that accounts for stochastic human activity, designers need to achieve more than the accurate digital replication of physical assets. They need the twin’s users to believe that it is real (Boellstorff 2016; Østerlie and Monteiro 2020).

A sign that a digital model has become “real” to its observers is that they begin operating directly with it based on its own internal logic and they stop discussing and debating its link to dynamics or events occurring in the physical world outside the model (Bailey et al. 2012). Baudrillard (1994) argues that when such a link is lost a model is no longer “a referential being, or a substance. It is the generation… of a real without origin or reality” (p. 1). He suggests that when a model is treated as a reality in its own right, it ceases to be a simulation and becomes a simulacrum. A simulacrum is a model that is internally consistent and seemingly complete because it synthesizes multiple data streams in a way that people cannot experience through their everyday participation in the “real” world (p. 81). For this reason, Baudrillard suggests that a simulacrum begins as a copy of an original (a simulation), but that through the accretion of models that produce their own dynamics and rely on their own internal logics, it becomes a copy without an original.

If a model becomes a simulacrum—it is treated as its own reality—the referential relationship between the prediction and the model is lost. Simulacra do not make predictions about the future; they are realities in which people treat the predictions as though they were already fulfilled. To put this idea in context, options traders who acted on the prices predicted by Black’s Sheets did not ask whether the Black–Scholes model accurately described market dynamics. Instead, they took the values given on the sheets as a reality that existed in the here-and-now and to which they should orient their action (e.g., buying options in an undervalued stock). In Baudrillard’s conception, one should not ask whether the reality simulated on Black’s Sheets accurately represented a separate, external reality because such a question would miss the point that the sheets were a reality in their own right. As several scholars have suggested, the models that constitute financial markets should not be assessed in terms of how well they represent the actual behavior of markets, but instead as simulacra that are acted upon as though the futures they predict are already occurring such that the model becomes embedded in the dynamics of the market (Hertz 2000; Muniesa 2018). As Muniesa (2014, p. 127–28) observes, “economic life is cluttered with simulacra, situations of simulation…which ultimately constitute the very vehicle for the realization of business, with realization understood in the sense of becoming actual.”

The interesting case elaborated in Beunza and Ferraro’s (2019) study of the launch of a responsible investment data service at a financial data company provides empirical data showing that if actors do not view a digital model as real (as a simulacrum) they will not act in a way that makes the predictions it generates performative. The organization they studied developed a product that provided data about corporate environmental, social and governance activities (what they call “ESG” data) that they hoped would signal to investors whether a company was responsibly managed. Their goal in providing these data was to convince investors to buy responsibly managed companies. If they did, the executives reasoned, companies would begin to align their practices with investor expectations and become more responsibly managed. As one of the principal executives of the firm observed, their goal was to “make it cheaper to do that [invest responsibly]. And then, then it becomes a self-fulfilling prophecy” (p. 525). However, as Beunza and Ferraro describe, users of the models who consumed the ESG data did not believe the model represented responsible management. This failure to believe that the model represented reality was even prevalent within the company itself, as a member of the ESG team reported: “many people in [the company] don’t believe ESG is material” (p. 527). Because people did not treat the model as real, it could not initiate the desired cycle of performativity. The model the company created certainly performed, but it was not performative. This distinction is important. As Orlikowski and Scott (2014, p. 873) observe, “performance refers to the doing of an activity in context (e.g., playing a concerto), a situated practice that is both embodied and embedded. Performativity assumes the notion of performance but points to a further claim: that reality is enacted through performance.”

Although the emerging literature on performativity has been generating knowledge about what happens when a prediction made by a model becomes performative, as opposed to simply performing (Orlikowski and Scott 2014; Beunza 2019) and the mechanisms by which performativity works (MacKenzie 2006; Beunza and Ferraro 2019; Garud and Gehman 2019), it has overlooked just how actors come to view a model as reality itself. In both of these streams, the literature takes as a point of departure that for the effects of performativity to be realized, actors need to first view predictions as real and, once they do, the cycle through which models create a reality in line with their predictions can begin. Understanding how actors begin to treat a digital model as real is, therefore, of important theoretical concern. The literature on the sociomaterial enactment of performativity provides a solid foundation for exploring this process.

As Callon (1998; 2008) has observed, performativity does not occur through discourse alone. Models become performative through a set of sociomaterial enactments, which are variously referred to as “agencements” (Callon 2016; Kuhn et al. 2019), “apparatuses” (Barad 2003; Orlikowski and Scott 2014), and “imbrications” (Leonardi 2011; Sassen 2006). Differences in nomenclature aside, the consistent idea is that a model’s performativity arises through the constitutive entanglement of the social and the material in practice (Orlikowski 2007). Models of the world are enacted through material devices that simultaneously enable and constrain their scope. In MacKenzie’s (2006) study, Black’s Sheets, a simple material device, presented to traders the value of options that had been predicted by the Black–Scholes model. Traders in the pits of the Chicago Board Options Exchange did not access the model’s algorithms directly, nor the differential equations that governed the interactions between them; instead, they drew on a simplistic output sheet that encapsulated the model. Elsewhere, MacKenzie and Millo (2003; 2009) emphasized the cognitive simplicity, physical design, and public availability of Black’s Sheets as material qualities that encouraged traders to adopt and act on their model. Similarly, in his analysis of merger arbitrage trading, Beunza (2019, p. 91) described how one of his key informants, Max, identified the spread (i.e., the difference in price) between two companies by using technologies that embedded the models underlying his work and ran their algorithms to perform the complex computations necessary to make predictions:

Max was not using models in his head, as economic accounts appeared to imply. Instead, the model was programmed into an Excel spreadsheet and turned into a graphical form—the spreadplot—on the Bloomberg terminal. The cognitive complexity of the collared trade, as Max had said, was otherwise too high.

Other studies of financial markets have shown that information available to actors on variously formatted screens constitutes an “onscreen reality that lacks an off-screen counterpart” (Beunza and Stark 2008, p. 254), bringing certain data to traders’ attention by “rendering it interactionally present” (Knorr-Cetina and Grimpe 2008, p. 909). It is through the entanglements of discourse and materiality that predictions can begin to enact their performative effects (Gond and Brès 2020).

Orlikowski and Scott (2014) offer the most direct account of the sociomateriality of prediction and performativity. Their findings show that the material-discursive practices constituting the UK Automobile Association’s and Trip Advisor’s evaluation and prediction schemes produce two distinct apparatuses of valuation. These apparatuses were distinct because they represented different entanglements of social expectations for travel and material infrastructures. For example, the Automobile Association arrived at its predictions of hotel value through a standard formula co-produced with hoteliers and made transparent through its 65-page publication of quality standards, which helped hotels to align their actions with the categorization schemes of the apparatus. By contrast, Trip Advisor used an opaque algorithm that was constantly changing: “a relational mash of software code, weighted priorities, and filtering processes that gather, store, assemble, and distill multiple hotel reviews and ratings posted by millions of anonymous users to produce specific rankings about hotels” (2014, p. 885). Consequently, Trip Advisor’s predictions had different performative effects on hotels than those made by the Automobile Association. As Orlikowski and Scott argue, because Trip Advisor’s breadth was so great, hoteliers began to re-organize much of their hotel management program in light of its predictions, thus enacting a performativity that was distinct from that enacted by the Automobile Association’s predictions.

To the extent that they become performative, the predictions made through digital models begin to shape behavior in ways that make them real through an assemblage of social conventions and material artifacts that enable and constrain action (Marti and Gond 2019). Because most actors experience a model through technological devices that simulate the dynamics of the system, it seems plausible that these devices would also play an important role in shaping whether or not people stop treating the model as a mere representation of an external, “real”, physical world and begin operating on it as though it was the world itself (Abrahamson et al. 2016; Muniesa et al. 2007).

Understanding digital twins as sociomaterial accomplishments raises new questions about how organizations can design and use them in practice. For a digital twin to become performative, the material artifact and related social conventions should enable people to act without making a distinction between representation and referent. It is not enough to achieve perfect material symmetry. Absent taken-for-grantedness, material symmetry dissolves upon contact with human agency. Thus, sociomaterial symmetry additionally requires that people take for granted that the twin is the world itself and that the actions they take on and through the twin are as real in their consequences as actions on the real-world phenomenon. It seems that the ways in which models become mediated technologically play an important role in this process.

Technological mediation of modeling

There are many kinds of models. Models that are theoretical (Muniesa et al. 2007) or purely mathematical (MacKenzie et al. 2007) are what Feldman and Pentland (2003, p. 101) call “ostensive”, which they define as an “ideal schematic form” or an “abstract, generalized idea”. The authors argue that although the ostensive aspects of phenomena can shape action in many ways, those phenomena are significantly altered when they are instantiated through technology. As abstract, generalized ideas are made concrete through the writing of computer code, the ostensive aspects of the phenomena are changed and adapted to fit within the technology’s limitations and to take advantage of its affordances (Pentland and Feldman 2008). Digital models, though they may begin as ideal forms like theoretical or mathematical models, move out of the ostensive realm when actors seek to activate them through digital technologies. Their abstract, idealized forms are changed through this instantiation because the nature of digital technologies is contingent and dynamic (Leonardi 2011). As Baskerville et al. (2019) observe, digital technologies are temporary assemblages of material and non-material objects joined together by algorithms that execute live actions of pre-specified constructions that are never performed the same way twice. To make this complicated definition more concrete, the authors provide the useful example of an account balance:

An account balance is often computed when needed rather than stored in a record. Because it is the outcome of carrying out a set of instructions, it is created as a digital object to display to humans, but then erased. Ontologically, the account balance is a temporary assemblage of material and non-material objects brought about by an algorithm at the moment of run-time. It is not real in the naïve realist sense, yet at the same time, it is real as an emergent being (p. 12).

Similarly, digital models—by virtue of the fact that they are always contingent sociomaterial accomplishments—are only ever “temporary assemblages.” The collection of algorithms and the computing infrastructures upon which those algorithms run shape the kinds of predictions a digital model makes, and they shape the way those predictions are rendered for stakeholders. To explain how digital models become taken for granted, then, it is imperative to explore the digital infrastructures that mediate between the models and their referents in the physical world.

To construct a digital model, modelers acquire data about the physical world they wish to simulate. They then represent these physical properties as a set of equations. The algorithms that constitute the digital models are themselves constructed out of equations that represent how these properties influence the performance of the object under investigation. Although the construction of a digital model may sound straightforward, each action is influenced by a set of important social choices. Data used to calibrate the model are socially negotiated proxies for dynamics that exist in the physical world, and the schemes through which they are categorized affect the way they are ultimately valued (Bowker and Star 2000). The mathematical representations of digital properties are also social conventions, as are the causal relations that algorithms are programmed to represent (Dourish 2016). The ways in which the outputs of simulations are rendered digitally also reflect a series of social contestations and agreements about the best way to represent data so it is easily consumed (Leonardi 2012). For these reasons, models are not just objective digital tools or subjective social choices; they are complex sociomaterial accomplishments (Orlikowski 2007).

A useful way to understand digital models as sociomaterial accomplishments is to consider the example of a mirror. What you see in a mirror is not reality. It is a representation of reality: photons penetrate the glass and reflect off the silver atoms inside to produce a reflection. The materiality of the mirror (e.g., the curvature and thickness of the glass, the density of silver atoms, etc.) influences the character of the representation you see when looking into it. Therefore, it is more accurate to say that a mirror is a simple simulation of reality as opposed to a reflection of it. Most of the time, we forget that what something looks like in the mirror is not exactly what it looks like in the physical world. But when the materiality of the mirror changes significantly from that of the mirrors we normally use, as when someone decides to make the glass concave or convex, the representation changes dramatically and it is easy to notice that the image displayed by the mirror diverges too much from our experience with reality to pass as real. This is why we laugh at the way we look in a funhouse mirror. But sometimes the materiality of the mirror shapes the simulation in ways that obscure its mediation. For example, many department stores purposefully install slightly convex mirrors in their dressing rooms that render representations of the mirror’s onlookers as slimmer than they appear in the physical world. The distortion is so slight that most people do not notice: they mistake the representation for reality and happily spend money on the clothes they think make them look so good. When viewing themselves in a funhouse mirror, viewers retain the awareness that there is a difference between the representation and what is being represented. But when looking in the dressing room mirror, the awareness of the link between the representation and the technology used to produce it is obscured and the viewers take their reflections for granted.

When Baudrillard (1994) argues that once such a link is lost a model is no longer “a referential being, or a substance. It is the generation… of a real without origin or reality” (p. 1), the irony he illuminates is that a model has become taken for granted when its users no longer compare the representation to the referent. Taken-for-grantedness is accomplished when those users either forget, deny, or no longer care that the model is not equivalent to the physical world. They look through the glass without recognizing that it is an intermediary and that its materiality has created a new reality upon which they base their decisions. Baudrillard further suggests that the increasing invisibility of technological mediation is the basis upon which taken-for-grantedness can occur: “It is as though things had swallowed their own mirrors and had become transparent to themselves” (1996, p. 2).

Similarly, in presenting the data on twenty years of observations of students learning science, Turkle (2009) reported the paradox that the more present and visible digital technologies were to their users, the more their role in shaping the dynamics of the model receded from view. Although the professors she studied warned their students that the digital models they worked with were simply models, not perfect reflections of the physical world, students often could not see that the digital technologies were actively mediating the dynamics they observed:

When students claimed to be “seeing it actually happen” on a screen, their teachers were upset by how a representation had taken on unjustified authority. Faculty began conversations by acknowledging that in any experiment, one only sees nature through an apparatus, but here, there were additional dangers: the users of this apparatus did not understand its inner workings and indeed, visualization software was designed to give the impression that it offered a direct window onto nature (Turkle 2009, p. 29).

It is not just students who are at risk of losing track of digital mediation when working with models. In their study of automotive design, Bailey and Leonardi (2015) documented how managers worried that engineers would believe that the dynamics they observed in their digital models were real, rather than representations of the behavior of a vehicle in the physical (i.e., the “real”) world. As evidence of this concern, the authors showed an image of the following reminder printed at the beginning of every chapter of engineers’ training manuals: “Don’t Believe That Model Is Reality” (p. 96).

Together, these examples demonstrate that despite the fact that the sociomateriality of digital models produces representations that necessarily distort the reality they purport to simulate, users can and do often take for granted that the dynamics happening in them will happen in the world outside them. The process of taking a model for granted may occur when stakeholders stop recognizing that their interactions with the world in the model are mediated by the digital technologies through which the model is produced and rendered. Thus, to understand how digital models become taken for granted, it is important to uncover how stakeholders lose track of technological mediation.

We have argued in this paper that the idea of designing and using digital twins of organizations without distinguishing between representation and reality is perhaps not as farfetched as some have suggested (Becker and Pentland 2022). Where faithful digital representations of the physical world are routine (Bailey et al. 2012; Dourish 2004), synchronized, two-way digital twins can complete the performative loop by becoming taken for granted. This second step is attainable, given that organizations are undergoing what Baskerville and colleagues (2019) have called an “ontological reversal,” in which “the non-physical digital version of the reality is not just as real as the physical version, it is more so” (p. 6). People are increasingly accustomed to operating in the digital world and expecting their actions to bear out in the physical referent, if a physical referent is even necessary. Decisions made in spreadsheets enact real-world salaries and budgets (Mazmanian and Beckman 2018), fashion companies launch new clothing lines in video games before they reach brick-and-mortar shops (McDowell 2021), and digital, rather than physical, tickets for airline flights are the only legitimate and up-to-date proof of travel (Baskerville et al. 2019). In many ways, the emergence of digital twins realizes Baudrillard’s theory of simulacra, which he wrote about decades before the technologies to achieve it existed (1994). A few months before his death, Baudrillard (2007) described a perfect simulation in terms much like those we would ascribe to a digital twin’s taken-for-grantedness:

At the height of simulation—in other words, at the height of the virtual and the digital—we’re in the pure operation of a world expurgated of any illusion, and hence perfectly real, technically realized (p. 59).

Taking digital twins for granted

As people design and use digital twins to make predictions and manage organizations, studies should start with the existing explanations and then identify what new pathways lead to digital taken-for-grantedness. Unless organizations come to view what happens in the digital model as “real,” they will not be willing to change the organization in ways that make the model’s dynamics occur. Thus, understanding how to make taken-for-grantedness happen when we want it, and how to avoid it when we do not, is a key concern for students of organizations and technology.

To understand how a model like a digital twin becomes taken for granted, we need to first be able to recognize when it has become taken for granted. But what are the indicators that actors no longer distinguish between the model and the reality outside it? Although organizational scholars have not yet answered this question, those who study important organizational processes such as the commercialization of science (Colyvas and Powell 2006), categories (Hsu and Grodal 2015; Ruef and Patterson 2009), legal practices (McPherson and Sauder 2013; Smets et al. 2012), and professional conduct (Micelotta and Washington 2013; Steele 2020) provide some indicators that point toward an answer. According to these studies, there are at least five indicators that a once-contested process has become taken for granted. We summarize these traditional pathways toward taken-for-grantedness in Table 1 and we speculate about what that process might look like when the phenomenon under study is not reality itself, but a technologically mediated model of it.

First, something that is taken for granted is no longer debated. That is, people do not explain why that thing happens or discuss whether it should happen. For example, Colyvas and Powell (2006) documented how early attempts by university scientists to profit from their inventions by patenting them were met with vehement opposition and charges of conflicts of interest from the scientific community. Scientists and university administrators debated the validity of approaches to science in which private inventions were developed with public funding. Over time, these debates receded and justifications for doing science in this way were no longer made or asked for—it was taken for granted that scientists could, and in some cases even should, seek to commercialize their discoveries. In the context of digital models, such debate and discussion are likely to occur over whether the dynamics simulated in the model will occur outside the model. A sign that such debate and discussion have ended would be when actors talk about the dynamics of the model using absolute (“this will happen”) rather than provisional (“this might happen”) language and their audiences do not challenge these statements (Green 2004).

Second, actors produce texts (broadly construed) that inscribe one interpretation or idea where before there were many. Texts capture the results of discussions and debates (or the preferences of those powerful enough to write the text), thereby obscuring the social processes by which they were constructed (Ashforth and Fried 1988; Palmer et al. 1993; Thornton et al. 2015). As Phillips et al. (2004) observe, the process of inscription structures messy discourse into logical patterns and flows, which then present the ideas contained in them in an authoritative way: “Discourses that are more coherent and structured present a more unified view of some aspect of social reality, which becomes reified and taken for granted” (p. 644). Digital models inscribe action and discussion not in words but in algorithms. Algorithms are routines that specify orders of operation. Structuring the relationships between variables in an algorithm gives them coherence. Through the coding of these relationships into material form, certain beliefs and values are formalized and made durable while others that are not turned into algorithms remain fuzzy, are denigrated, and are eventually dropped and forgotten (Christin 2017; Dourish 2016). Also, unlike texts, which can be easily read and understood, the workings of most algorithms are opaque to the average viewer, which confers increased authority on the algorithms and the models they constitute (Kellogg et al. 2020).

Third, actors do not or cannot reflect on other possibilities. They accept actions, processes, and relationships as they are without casting a critical eye upon them (Douglas 2012; Zucker 1983). As Harmon (2019, p. 543) observes: “alternative ways of behaving [are] literally unthinkable.” Digital models are typically designed with multiple (figurative) levers that analysts can pull to simulate different dynamics, or the same dynamics built upon different assumptions (Thomke 2003). It is a presumed benefit of most digital models that testing multiple scenarios is cheap and easy (Leonardi 2012). When actors forget that the model has levers, they no longer ask to pull them, thereby stabilizing the model and removing the possibility of exploring alternatives.

Fourth, roles (Barley and Tolbert 1997) and routines (Smets et al. 2012) evolve to enable and support one way of thinking and acting and to constrain others. At least two major roles have emerged in most organizations to support the creation and use of digital models. The first role is that of the person who is in charge of building the model. This person is typically well trained in knowledge of the mathematics underlying the model and use of the digital technologies through which the model is produced (Bailey et al. 2012). The second role is that of the person who interprets the model for stakeholders. People in this role connect its outputs with the decision-making structure of the organization (Boland et al. 2007). Consultations with models become a routine part of organizational action when interpreters are invited to present the model’s predictions at various meetings at which key stakeholders make important decisions (Leonardi 2011).

Fifth, when something is taken for granted it becomes the foundation upon which other action is made possible (Scott 2014). Hsu and Grodal (2015) demonstrated that as the taken-for-grantedness of the light cigarette market category increased, debate about it diminished and regulators and consumers stopped paying such close attention to the qualities of the products in that category. This leeway gave tobacco companies the opportunity to change their products and introduce new products at a rapid pace, largely free of public scrutiny. Once a model has been taken for granted, other important decisions can be built atop its predictions. For example, once energy companies take for granted the wind patterns predicted by digital models of the weather, they begin to make decisions about where to build new wind turbines, thereby increasing their commitment to the predictive power of the model (Barley 2015).

Although the prior literature is helpful in pointing to indicators by which we would know when a digital model has been taken for granted, it stops short of explaining how digital models are taken for granted because it does not adequately theorize how the computing infrastructure through which such models are enacted shapes the ways actors interact with and respond to them. We hope that this discussion has provided some provocations about how this process might unfold.

Conclusion

The many ways in which managers use maps to chart a future for their organizations are rooted in what Weick (1990) calls “cartographic myths”. He theorized that the most important quality of maps is not the amount or quality of data inputs they have, or even their degree of accuracy. Rather, maps are an important tool with which one can take action:

…maps are intimately bound up with action, both the action that is ongoing when the map is first invoked, and the action that occurs subsequent to the discovery of the map. It is the tight coupling between maps and action that tightens the coupling between maps and the territory (p. 9).

Digital models are maps of a certain type that have the potential to shape action. But as we have discussed, the decoupling, rather than the coupling, between the map and the territory makes it possible for mathematical models of organizational phenomena like digital twins to become taken for granted. Although an extensive body of research has focused on how activities, practices, and phenomena in the “real world” become taken for granted, we must explore how digital models of that “real world” become taken for granted as the world itself. This paper contributes to theory by suggesting that how organizing actions intertwine with the development and implementation of digital models can shape whether those models are treated as maps that chart territories, or as maps whose territories they make disappear.

Availability of data and materials

Not applicable.

Notes

Berger and Luckmann (1991) suggest that when “There we go again” becomes “This is how these things are done”, something has become taken for granted: “It becomes real in an ever more massive way” (p. 77).

References

Abrahamson E, Berkowitz H, Dumez H (2016) A more relevant approach to relevance in management studies: an essay on performativity. Acad Manag Rev 41(2):367–381. https://doi.org/10.5465/amr.2015.0205

Ashforth BE, Fried Y (1988) The mindlessness of organizational behaviors. Hum Relat 41(4):305–329. https://doi.org/10.1177/001872678804100403

Austin J (1970) Philosophical papers. Clarendon Press, Oxford

Bailey DE, Leonardi PM (2015) Technology choices: why occupations differ in their embrace of new technology. MIT Press, Cambridge

Bailey DE, Leonardi PM, Barley SR (2012) The lure of the virtual. Organ Sci 23(5):1485–1501. https://doi.org/10.1287/orsc.1110.0703

Barad K (2003) Posthumanist performativity: toward an understanding of how matter comes to matter. Signs J Women Cult Soc 28(3):801–831. https://doi.org/10.1086/345321

Barley S (2008) Coalface institutionalism. In: Greenwood R, Oliver C, Lawrence TB, Meyer RE (eds) The SAGE handbook of organizational institutionalism. SAGE Publications Ltd, Thousand Oaks, pp 491–518

Barley WC (2015) Anticipatory work: how the need to represent knowledge across boundaries shapes work practices within them. Organ Sci 26(6):1612–1628. https://doi.org/10.1287/orsc.2015.1012

Barley SR, Tolbert PS (1997) Institutionalization and structuration: studying the links between action and institution. Org Stud 18(1):93–117. https://doi.org/10.1177/017084069701800106

Barnes B (1983) Social life as bootstrapped induction. Sociology 17(4):524–545. https://doi.org/10.1177/0038038583017004004

Baskerville R, Myers M, Yoo Y (2019) Digital first: the ontological reversal and new challenges for IS research. EBCS Articles

Baudrillard J (1994) Simulacra and Simulation. In: Judovitz D, Porter JI (eds) The body, in theory. University of Michigan Press, Ann Arbor

Baudrillard J (1996) The perfect crime. Verso, London

Baudrillard J, Valiente Noailles E (2007) Exiles from dialogue. Polity, Cambridge

Becker MC, Pentland BT (2022) Digital twin of an organization: are you serious? In: Marrella A, Weber B (eds) Business process management workshops, lecture notes in business information processing. Springer International Publishing, Cham, pp 243–254. https://doi.org/10.1007/978-3-030-94343-1_19

Berger PL, Luckmann T (1991) The social construction of reality: a treatise in the sociology of knowledge. Penguin, Harmondsworth

Beunza D (2019) Taking the floor: models, morals, and management in a Wall Street trading room. Princeton University Press, Princeton

Beunza D, Ferraro F (2019) Performative work: bridging performativity and institutional theory in the responsible investment field. Organ Stud 40(4):515–543. https://doi.org/10.1177/0170840617747917

Beunza D, Stark D (2008) Tools of the trade: the sociotechnology of arbitrage in a Wall Street trading room. In: Pinch T, Swedberg R (eds) Living in a material world. MIT Press, Cambridge

Boellstorff T (2016) For whom the ontology turns: theorizing the digital real. Curr Anthropol 57(4):387–407. https://doi.org/10.1086/687362

Boland RJ, Lyytinen K, Yoo Y (2007) Wakes of innovation in project networks: the case of digital 3-D representations in architecture, engineering, and construction. Organ Sci 18(4):631–647. https://doi.org/10.1287/orsc.1070.0304

Bowker GC, Star SL (2000) Sorting things out: classification and its consequences. In: Bijker W, Slayton R (eds) Inside technology. The MIT Press, Cambridge

Brayne S (2017) Big data surveillance: the case of policing. Am Sociol Rev 82(5):977–1008. https://doi.org/10.1177/0003122417725865

Callon M (1998) The laws of the markets. In: Callon M (ed) Sociological review monograph. Blackwell Publishers/Sociological Review, Oxford

Callon M (2008) What does it mean to say that economics is performative? In: MacKenzie D, Muniesa F, Siu L (eds) Do economists make markets? Princeton University Press, Princeton, pp 311–357

Callon M (2016) Revisiting marketization: from interface-markets to market-agencements. Consum Mark Cult 19(1):17–37. https://doi.org/10.1080/10253866.2015.1067002

Christin A (2017) Algorithms in practice: comparing web journalism and criminal justice. Big Data Soc. https://doi.org/10.1177/2053951717718855

Colyvas JA, Powell WW (2006) Roads to institutionalization: the remaking of boundaries between public and private science. Res Org Behav 27:305–353. https://doi.org/10.1016/S0191-3085(06)27008-4

Croatti A, Gabellini M, Montagna S, Ricci A (2020) On the integration of agents and digital twins in healthcare. J Med Syst 44(9):161. https://doi.org/10.1007/s10916-020-01623-5

Dodgson M, Gann DM, Salter A (2007) ‘In case of fire, please use the elevator’: simulation technology and organization in fire engineering. Org Sci 18(5):849–864. https://doi.org/10.1287/orsc.1070.0287

Douglas M (2012) How institutions think. Routledge, London

Dourish P (2004) Where the action is: the foundations of embodied interaction. A Bradford book. MIT Press, Cambridge

Dourish P (2016) Algorithms and their others: algorithmic culture in context. Big Data Soc. https://doi.org/10.1177/2053951716665128

Dunbar RLM, Garud R (2009) Distributed knowledge and indeterminate meaning: the case of the Columbia shuttle flight. Org Stud 30(4):397–421. https://doi.org/10.1177/0170840608101142

Faraj S, Pachidi S, Sayegh K (2018) Working and organizing in the age of the learning algorithm. Inf Organ 28:62–70

Feldman ER (2013) Legacy divestitures: motives and implications. Org Sci 25(3):815–832. https://doi.org/10.1287/orsc.2013.0873

Feldman MS, Pentland BT (2003) Reconceptualizing organizational routines as a source of flexibility and change. Adm Sci Q 48(1):94–118. https://doi.org/10.2307/3556620

Ferraro F, Pfeffer J, Sutton R (2005) Economics language and assumptions: how theories can become self-fulfilling. Acad Manag Rev 30(1):8–24. https://doi.org/10.5465/AMR.2005.15281412

Garcia-Parpet M-F (2007) The social construction of a perfect market: the strawberry auction at Fontaines-en-Sologne. In: MacKenzie D, Muniesa F, Siu L (eds) Do economists make markets? On the performativity of economics. Princeton University Press, Princeton

Garud R, Gehman J (2019) Performativity: not a destination but an ongoing journey. Acad Manag Rev 44(3):679. https://doi.org/10.5465/amr.2018.0315

Garud R, Gehman J, Tharchen T (2018) Performativity as ongoing journeys: implications for strategy, entrepreneurship, and innovation. Long Range Plan 51(3):500–509. https://doi.org/10.1016/j.lrp.2017.02.003

Gond J-P, Brès L (2020) Designing the tools of the trade: how corporate social responsibility consultants and their tool-based practices created market shifts. Org Stud 41(5):703–726. https://doi.org/10.1177/0170840619867360

Green SE (2004) A rhetorical theory of diffusion. Acad Manag Rev 29(4):653–669. https://doi.org/10.5465/amr.2004.14497653

Greenwood R, Suddaby R (2006) Institutional entrepreneurship in mature fields: the big five accounting firms. Acad Manag J 49(1):27–48. https://doi.org/10.5465/AMJ.2006.20785498

Grieves M (2011) Virtually perfect: driving innovative and lean products through product lifecycle management. Space Coast Press, Cocoa Beach

Harmon DJ (2019) When the fed speaks: arguments, emotions, and the microfoundations of institutions. Adm Sci Q 64(3):542–575. https://doi.org/10.1177/0001839218777475

Heaphy ED (2013) Repairing breaches with rules: maintaining institutions in the face of everyday disruptions. Org Sci 24(5):1291–1315. https://doi.org/10.1287/orsc.1120.0798

Hertz E (2000) Stock markets as ‘simulacra’: observation that participates. Tsantsa 24:1291

Hsu G, Grodal S (2015) Category taken-for-grantedness as a strategic opportunity: the case of light cigarettes, 1964 to 1993. Am Sociol Rev 80(1):28–62. https://doi.org/10.1177/000312241456539

Kellogg KC, Valentine MA, Christin A (2020) Algorithms at work: the new contested terrain of control. Acad Manag Ann 14:366–410. https://doi.org/10.5465/annals.2018.0174

Knorr-Cetina K, Grimpe B (2008) Global financial technologies. In: Pinch T, Swedberg R (eds) Living in a material world: economic sociology meets science and technology studies. MIT Press, Cambridge

Korotkova N, Benders J, Mikalef P, Cameron D (2023) Maneuvering between skepticism and optimism about hyped technologies: building trust in digital twins. Inform Manag 60(4):103787. https://doi.org/10.1016/j.im.2023.103787

Kritzinger W, Karner M, Traar G, Henjes J, Sihn W (2018) Digital twin in manufacturing: a categorical literature review and classification. IFAC-PapersOnLine 51(11):1016–1022. https://doi.org/10.1016/j.ifacol.2018.08.474

Kuhn T, Ashcraft KL, Cooren F (2019) Introductory essay: what work can organizational communication do? Manag Commun Q 33(1):101–111. https://doi.org/10.1177/0893318918809421

Leonardi PM (2011) When flexible routines meet flexible technologies: affordance, constraint, and the imbrication of human and material agencies. MIS Q 35(1):147–167. https://doi.org/10.2307/23043493

Leonardi PM (2012) Car crashes without cars: lessons about simulation technology and organizational change from automotive design. In: Kaptelinin V, Foot KA, Nardi BA (eds) Acting with technology. MIT Press, Cambridge

Leonardi PM, Treem JW (2020) Behavioral visibility: a new paradigm for organization studies in the age of digitization, digitalization, and datafication. Org Stud 41(12):1601–1625. https://doi.org/10.1177/0170840620970728

Liu Y, Zhang L, Yang Y, Zhou L, Ren L, Wang F, Liu R, Pang Z, Deen MJ (2019) A novel cloud-based framework for the elderly healthcare services using digital twin. IEEE Access 7:49088–49101. https://doi.org/10.1109/ACCESS.2019.2909828

Lok J, de Rond M (2012) On the plasticity of institutions: containing and restoring practice breakdowns at the Cambridge University Boat Club. Acad Manag J 56(1):185–207. https://doi.org/10.5465/amj.2010.0688

Lyytinen K, Weber B, Becker MC, Pentland BT (2023) Digital twins of organization: implications for organization design. J Org Design. https://doi.org/10.1007/s41469-023-00151-z

MacKenzie DA (2006) An engine, not a camera: how financial models shape markets. In: Bijker W, Slayton R (eds) Inside technology. MIT Press, Cambridge

MacKenzie DA, Muniesa F, Siu L (2007) Do economists make markets? On the performativity of economics. Princeton University Press, Princeton

MacKenzie D, Millo Y (2003) Constructing a market, performing theory: the historical sociology of a financial derivatives exchange. Am J Sociol 109(1):107–145. https://doi.org/10.1086/374404

Marti E, Gond J-P (2019) How do theories become self-fulfilling? Clarifying the process of Barnesian performativity. Acad Manag Rev 44(3):686–694. https://doi.org/10.5465/amr.2019.0024

Mazmanian M, Beckman C (2018) ‘Making’ your numbers: engendering organizational control through a ritual of quantification. Organ Sci 29(3):357–379. https://doi.org/10.1287/orsc.2017.1185

McDowell M (2021) Inside Gucci’s gaming strategy. Vogue Business, London

McPherson CM, Sauder M (2013) Logics in action: managing institutional complexity in a drug court. Adm Sci Q 58(2):165–196. https://doi.org/10.1177/0001839213486447

Micelotta ER, Washington M (2013) Institutions and maintenance: the repair work of Italian professions. Organ Stud 34(8):1137–1170. https://doi.org/10.1177/0170840613492075

Millo Y, MacKenzie D (2009) The usefulness of inaccurate models: towards an understanding of the emergence of financial risk management. Acc Organ Soc 34(5):638–653. https://doi.org/10.1016/j.aos.2008.10.002

Muniesa F (2014) The provoked economy: economic reality and the performative turn. Routledge, Milton Park. https://doi.org/10.4324/9780203798959

Muniesa F (2018) Grappling with the performative condition. Long Range Plan 51(3):495–499. https://doi.org/10.1016/j.lrp.2017.02.002

Muniesa F, Millo Y, Callon M (2007) An introduction to market devices. Sociol Rev 55(2_suppl):1–12. https://doi.org/10.1111/j.1467-954X.2007.00727.x

Orlikowski WJ (2007) Sociomaterial practices: exploring technology at work. Organ Stud 28(9):1435–1448. https://doi.org/10.1177/0170840607081138

Orlikowski WJ, Scott SV (2014) What happens when evaluation goes online? Exploring apparatuses of valuation in the travel sector. Organ Sci 25(3):868–891. https://doi.org/10.1287/orsc.2013.0877

Østerlie T, Monteiro E (2020) Digital sand: the becoming of digital representations. Inform Organ 30(1):100275. https://doi.org/10.1016/j.infoandorg.2019.100275

Palmer DA, Jennings PD, Zhou X (1993) Late adoption of the multidivisional form by large U.S. Corporations: institutional, political, and economic accounts. Adm Sci Q 38(1):100–131. https://doi.org/10.2307/2393256

Pentland BT, Feldman MS (2008) Designing routines: on the folly of designing artifacts, while hoping for patterns of action. Inf Organ 18(4):235–250. https://doi.org/10.1016/j.infoandorg.2008.08.001

Phillips N, Lawrence TB, Hardy C (2004) Discourse and institutions. Acad Manag Rev 29(4):635–652. https://doi.org/10.5465/amr.2004.14497617

Pickering A (1995) The mangle of practice: time, agency, and science. University of Chicago Press, Chicago

Ruef M, Patterson K (2009) Credit and classification: the impact of industry boundaries in nineteenth-century America. Adm Sci Q 54(3):486–520. https://doi.org/10.2189/asqu.2009.54.3.486

Sassen S (2006) Reading the city in a global digital age: the limits of topographic representation. In: Taylor P, Derudder B, Saey P, Witlox F (eds) Cities in globalization: practices, policies and theories. Routledge, London

Scott WR (2014) Institutions and organizations: ideas, interests, and identities, 4th edn. SAGE, Los Angeles

Shahat E, Hyun CT, Yeom C (2021) City digital twin potentials: a review and research agenda. Sustainability 13(6):3386. https://doi.org/10.3390/su13063386

Smets M, Morris T, Greenwood R (2012) From practice to field: a multilevel model of practice-driven institutional change. Acad Manag J 55(4):877–904. https://doi.org/10.5465/amj.2010.0013

Steele CWJ (2020) When things get odd: exploring the interactional choreography of taken-for-grantedness. Acad Manag Rev 46(2):341–361. https://doi.org/10.5465/amr.2017.0392

Tao F, Qi Q (2019) Make more digital twins. Nature 573(7775):490–491. https://doi.org/10.1038/d41586-019-02849-1

Thomke SH (2003) Experimentation matters: unlocking the potential of new technologies for innovation. Harvard Business School Press, Boston

Thornton PH, Ocasio W, Lounsbury M (2015) The institutional logics perspective. Emerg Trends Soc Behav Sci. https://doi.org/10.1002/9781118900772.etrds0187

Turkle S (ed) (2009) Simulation and its discontents, simplicity. The MIT Press, Cambridge

van der Aalst WMP (2021) Concurrency and objects matter! Disentangling the fabric of real operational processes to create digital twins. In: Cerone A, Ölveczky PC (eds) Theoretical Aspects of Computing—ICTAC 2021, Lecture Notes in Computer Science. Springer International Publishing, Cham, pp 3–17. https://doi.org/10.1007/978-3-030-85315-0_1

Weick KE (1990) Introduction: cartographic myths in organizations. In: Huff AS (ed) Mapping strategic thought. John Wiley & Sons Ltd., West Sussex, pp 1–9

White G, Zink A, Codecá L, Clarke S (2021) A digital twin smart city for citizen feedback. Cities 110:103064. https://doi.org/10.1016/j.cities.2020.103064

Wurm B, Becker MC, Pentland BT, Lyytinen K, Weber B, Grisold T, Mendling J, Kremser W (2023) Digital twins of organizations: a socio-technical view on challenges and opportunities for future research. Commun Assoc Inform Syst 52:552–565. https://doi.org/10.17705/1CAIS.05223

Zucker L (1977) The role of institutionalization in cultural persistence. Am Sociol Rev 42(5):726–743. https://doi.org/10.2307/2094862

Zucker L (1983) Organizations as institutions. Res Sociol Organ 2(1):1–47

Acknowledgements

Support for this research was provided by National Science Foundation Grant SES-2051896.

Funding

Support for this research was provided by National Science Foundation Grant SES-2051896.

Author information

Contributions

PML contributed to the design and implementation of the research and to the writing of the manuscript. VL contributed to the data analysis and writing of the manuscript. Both authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Leonardi, P.M., Leavell, V. How the map becomes the territory: prediction, performativity and the process of taking digital twins for granted. J Org Design (2024). https://doi.org/10.1007/s41469-024-00164-2

DOI: https://doi.org/10.1007/s41469-024-00164-2