Abstract

A standard problem in applied topology is how to discover topological invariants of a space from a noisy point cloud that approximates it. We consider the case where a sample is drawn from a properly embedded \({\mathcal {C}}^1\)-submanifold without boundary in a Euclidean space. We show that we can deformation retract the union of ellipsoids, centered at sample points and stretching in the tangent directions, to the manifold. Hence the homotopy type, and therefore also the homology type, of the manifold is the same as that of the nerve complex of the cover by ellipsoids. By thickening sample points to ellipsoids rather than balls, our results require a lower sample density than comparable results in the literature. They also advocate using elongated shapes in the construction of barcodes in persistent homology.



1 Introduction

Data is often unstructured and comes in the form of a non-empty finite metric space, called a point cloud. It is often very high dimensional even though the data points are actually samples from a low-dimensional object (such as a manifold) that is embedded in a high-dimensional space. One reason may be that many features are all measurements of the same underlying cause and are therefore closely related to each other. For example, if you take photos of a single object from multiple angles simultaneously, there is a lot of overlap in the information captured by all those cameras. One of the main tasks of ‘manifold learning’ is to design algorithms that estimate geometric and topological properties of the manifold, in particular its homotopy type, from sample points lying on this unknown manifold.

One successful framework for dealing with the problem of reconstructing shapes from point clouds is based on the notion of \(\epsilon \)-sample introduced by Amenta and Bern (1999). A sampling of a shape \(\mathcal {M}\) is an \(\epsilon \)-sampling if every point \(\texttt {P}\) in \(\mathcal {M}\) has a sample point at distance at most \(\epsilon \cdot \text {lfs}_\mathcal {M}(\texttt {P})\), where \(\text {lfs}_\mathcal {M}(\texttt {P})\) is the local feature size of \(\texttt {P}\), i.e. the distance from \(\texttt {P}\) to the medial axis of \(\mathcal {M}\). Surfaces smoothly embedded in \(\mathbb {R}^3\) can be reconstructed homeomorphically from any 0.06-sampling using the Cocone algorithm (Amenta et al. 2002).
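To make the definition concrete, here is a brute-force sketch in Python (with NumPy) that tests the \(\epsilon \)-sampling condition. It assumes the shape is replaced by a dense finite set of its points, so it only approximates the condition “every point \(\texttt {P}\) in \(\mathcal {M}\)”, and that the local feature size is supplied by the caller. For a circle of radius \(R\) the medial axis is the center, so \(\text {lfs}\) is identically \(R\). The function names are illustrative, not from any library.

```python
import numpy as np

def is_epsilon_sampling(manifold_pts, sample_pts, lfs, eps):
    """Check the epsilon-sampling condition on a finite stand-in for the shape.

    manifold_pts: (k, n) array of points P on the shape
    sample_pts:   (s, n) array of sample points
    lfs:          (k,) array with the local feature size at each P
    """
    # Distance from every P to its nearest sample point (brute force).
    dists = np.linalg.norm(manifold_pts[:, None, :] - sample_pts[None, :, :], axis=-1)
    nearest = dists.min(axis=1)
    return bool(np.all(nearest <= eps * lfs))

def circle(radius, count):
    """`count` equally spaced points on a circle centered at the origin."""
    angles = 2 * np.pi * np.arange(count) / count
    return radius * np.column_stack([np.cos(angles), np.sin(angles)])

R = 1.0
dense = circle(R, 6000)          # dense stand-in for the whole circle
samples = circle(R, 60)          # 60 equally spaced sample points
lfs = np.full(len(dense), R)     # lfs is constant on a circle
print(is_epsilon_sampling(dense, samples, lfs, 0.06))  # True
print(is_epsilon_sampling(dense, samples, lfs, 0.05))  # False
```

With 60 equally spaced samples on the unit circle the largest gap to a sample is \(2\sin (\pi /120) \approx 0.052\), which is why the condition holds for \(\epsilon = 0.06\) but fails for \(\epsilon = 0.05\).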

One simple method for shape reconstruction is to output an offset of the sampling for a suitable value \(\alpha \) of the offset parameter (the \(\alpha \)-offset of the sampling is the union of balls with centers in sample points and radius \(\alpha \)). Topologically, this is equivalent to taking the Čech complex or the \(\alpha \)-complex (Edelsbrunner and Mücke 1994). This leads to the problem of finding theoretical guarantees as to when an offset of a sampling has the same homotopy type as the underlying set. In other words, we need to find conditions on a point cloud \(\mathcal {S}\) of a shape \(\mathcal {M}\) under which the thickening of \(\mathcal {S}\) is homotopy equivalent to \(\mathcal {M}\). This can only work if the point cloud is sufficiently close to \(\mathcal {M}\), i.e. when there is a bound on the Hausdorff distance between \(\mathcal {S}\) and \(\mathcal {M}\).
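A minimal sketch of an \(\alpha \)-offset and the edges of its nerve for a sample of the unit circle, assuming closed balls in the Euclidean plane. Two closed \(\alpha \)-balls meet exactly when their centers are at most \(2\alpha \) apart, so the edges of the Čech complex are easy to list; higher-dimensional simplices would require checking common intersections of three or more balls, which this sketch omits. The helper names are illustrative.

```python
import numpy as np
from itertools import combinations

def cech_edges(points, alpha):
    """Pairs of closed alpha-balls that intersect (centres at distance <= 2 * alpha)."""
    return [(i, j) for i, j in combinations(range(len(points)), 2)
            if np.linalg.norm(points[i] - points[j]) <= 2 * alpha]

def offset_covers(dense_pts, points, alpha):
    """True if every point of dense_pts lies in the union of closed alpha-balls."""
    d = np.linalg.norm(dense_pts[:, None, :] - points[None, :, :], axis=-1)
    return bool(np.all(d.min(axis=1) <= alpha))

def circle(radius, count):
    t = 2 * np.pi * np.arange(count) / count
    return radius * np.column_stack([np.cos(t), np.sin(t)])

samples = circle(1.0, 12)
alpha = 0.3
edges = cech_edges(samples, alpha)
print(len(edges))                                          # 12
print(offset_covers(circle(1.0, 1200), samples, alpha))    # True
```

Here neighbouring samples are \(2\sin (\pi /12) \approx 0.52 \le 2\alpha \) apart, while non-adjacent ones are at least 1 apart. Hence no three balls share a point, and the nerve is a 12-cycle, which is homotopy equivalent to the circle.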

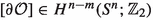

Niyogi et al. (2008) proved that this method indeed provides reconstructions with the correct homotopy type for sufficiently densely sampled smooth submanifolds of \(\mathbb {R}^n\). More precisely, one can capture the homotopy type of a Riemannian submanifold \(\mathcal {M}\) without boundary of reach \(\tau \) in a Euclidean space from a finite \(\frac{\epsilon }{2}\)-dense sample \(\mathcal {S}\subseteq \mathcal {M}\) (meaning every point of the manifold has a sample point at most \(\frac{\epsilon }{2}\) away) whenever \(\epsilon < \sqrt{\frac{3}{5}}\,\tau \), by showing that the union of \(\epsilon \)-balls with centers in sample points deformation retracts to \(\mathcal {M}\).

Let us denote the Hausdorff distance between \(\mathcal {S}\) and \(\mathcal {M}\) by \(\varkappa \); that is, every point in \(\mathcal {M}\) has a point of \(\mathcal {S}\) at distance at most \(\varkappa \). We can rephrase the above result as follows: whenever \(\varkappa < \frac{1}{2}\sqrt{\frac{3}{5}}\,\tau \approx 0.387\,\tau \), the homotopy type of \(\mathcal {M}\) is captured by a union of \(\epsilon \)-balls with centers in \(\mathcal {S}\) for every \(\epsilon \in \mathbb {R}_{[2\varkappa , \sqrt{3/5}\,\tau )}\). Thus the bound on the ratio \(\frac{\varkappa }{\tau }\) represents how dense the sample needs to be in order to recover the homotopy type of \(\mathcal {M}\).

Other authors have given variants of Niyogi, Smale and Weinberger’s result. In Bürgisser et al. (2018, Theorem 2.8), the authors relax the conditions on the set we wish to approximate (it need not be a manifold, just any non-empty compact subset of a Euclidean space) and on the sample (it need not be finite, just non-empty and compact), but the price they pay for this is a much lower upper bound on \(\frac{\varkappa }{\tau }\), which in their case is \(\frac{1}{6} \approx 0.167\). One can potentially improve the result by using local quantities (\(\mu \)-reach etc.) (Chazal et al. 2009; Chazal and Lieutier 2005b, 2007; Turner 2013; Attali and Lieutier 2010; Attali et al. 2013) instead of the global reach \(\tau \), at least in situations when these are large compared to \(\tau \).

In practice producing a sufficiently dense sample can be difficult and computationally expensive (Dufresne et al. 2019), so relaxing the upper bound on \(\frac{\varkappa }{\tau }\) is desirable. The purpose of this paper is to prove that we can indeed relax this bound when sampling manifolds (we even allow a more general class of manifolds than Niyogi et al. 2008) if we thicken sample points to ellipsoids rather than balls. The idea is that since a differentiable manifold is locally well approximated by its tangent space, an ellipsoid with its major semi-axes in the tangent directions approximates the manifold well. This idea first appeared in Breiding et al. (2018), where the authors construct a filtration of “ellipsoid-driven complexes”, in which the user can choose the ratio between the major (tangent) and the minor (normal) semi-axes. Their experiments showed that computing barcodes from ellipsoid-driven complexes strengthened the topological signal, in the sense that the bars corresponding to features of the data were longer. In our paper we make the ratio between semi-axes dependent on the ellipsoid size (the length of the minor semi-axis, to be exact), and give a proof that the union of ellipsoids around sample points (under suitable assumptions) deformation retracts onto the manifold. Hence our paper gives theoretical guarantees that the union of ellipsoids captures the manifold’s homotopy type, and thus further justifies the use of ellipsoid-inspired shapes to construct barcodes.

In this paper we assume that the reach of the manifold and its tangent and normal spaces at the sample points are given. In practice, these quantities can be estimated from the sample; see e.g. Aamari et al. (2019), Berenfeld et al. (2020), Zhang and Zha (2004), Kaslovsky and Meyer (2011) and Zhang et al. (2011).

The central theorem of this paper (Theorem 6.1) is the following:

Theorem

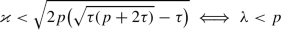

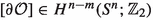

Let \(n\in \mathbb {N}\) and let \(\mathcal {M}\) be a non-empty properly embedded \({\mathcal {C}}^1\)-submanifold of \(\mathbb {R}^n\) without boundary. Let \(\mathcal {S}\subseteq \mathcal {M}\) be a subset of \(\mathcal {M}\), locally finite in \(\mathbb {R}^n\) (the sample from the manifold \(\mathcal {M}\)). Let \(\tau \) be the reach of \(\mathcal {M}\) in \(\mathbb {R}^n\) and \(\varkappa \) the Hausdorff distance between \(\mathcal {S}\) and \(\mathcal {M}\). Then for all \(p\in \mathbb {R}_{> 0}\) which satisfy

there exists a strong deformation retraction from  (the union of open ellipsoids around sample points with normal semi-axes of length \(p\) and tangent semi-axes of length  ) to \(\mathcal {M}\). In particular, \(\mathcal {M}\),  and the nerve complex of the ellipsoid cover  are homotopy equivalent, and so have the same homology.

By replacing the balls with ellipsoids, we manage to push the upper bound on \(\frac{\varkappa }{\tau }\) to approximately 0.913, an improvement by a factor of about 2.36 compared to Niyogi et al. (2008). In other words, our method allows samples with less than half the density.
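The factor 2.36 can be checked with a few lines of arithmetic. As we read Niyogi et al. (2008), their condition is \(\epsilon < \sqrt{3/5}\,\tau \) for an \(\frac{\epsilon }{2}\)-dense sample; since such a sample has \(\varkappa \le \frac{\epsilon }{2}\), admissible samples satisfy \(\frac{\varkappa }{\tau } < \frac{1}{2}\sqrt{3/5}\). The value 0.913 is the approximate bound quoted above, not something the snippet derives.

```python
import math

# Assumed condition from Niyogi et al. (2008): eps < sqrt(3/5) * tau with an
# eps/2-dense sample. Since kappa <= eps/2, admissible samples satisfy
# kappa / tau < sqrt(3/5) / 2.
niyogi_ratio = math.sqrt(3 / 5) / 2
ellipsoid_ratio = 0.913          # approximate bound for ellipsoids (quoted value)
improvement = ellipsoid_ratio / niyogi_ratio

print(f"{niyogi_ratio:.3f}")     # 0.387
print(f"{improvement:.2f}")      # 2.36
```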

Due to the difficulty and length of our current work, we give the result in terms of the reach, i.e. a global feature. We leave the generalization to local features for future work.

The strategy of our proof is to define a deformation retraction from the union of ellipsoids around sample points to the manifold. On the intersections of ellipsoids, we show that the normal deformation retraction works. Since this appears too difficult to prove by hand, we provide a computer-assisted proof: we first reduce the general case to a set of cases which includes the “worst case scenarios”, i.e. the ones which come the closest to contradicting our desired results, and then use a computer to show that these cases still satisfy our requirements. Outside of ellipsoid intersections, we define the deformation retraction by utilizing the flow of a particular vector field. We join the two parts with a suitable partition of unity.
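As we understand it, the normal deformation retraction moves each point \(\texttt {X}\) along the straight segment to its closest point \(pr(\texttt {X})\) on the manifold, i.e. \(\texttt {X} \mapsto \texttt {X} + t\cdot prv(\texttt {X})\) for \(t\in \mathbb {R}_{[0, 1]}\), with \(pr\) and \(prv\) as in the glossary. Below is a minimal sketch for the unit circle, where \(pr\) is explicit; the circle and the function names are illustrative assumptions. The sketch does not check the hard part, namely that this homotopy stays inside the union of ellipsoids.

```python
import numpy as np

R = 1.0  # radius of the circle M (illustrative); its medial axis is the origin

def pr(x):
    """Closest point of the circle to x; undefined on the medial axis {0}."""
    norm = np.linalg.norm(x)
    if norm == 0.0:
        raise ValueError("the origin lies on the medial axis, pr is undefined there")
    return R * x / norm

def prv(x):
    """Vector from x to its closest point on the circle."""
    return pr(x) - x

def normal_retraction(x, t):
    """Straight-line homotopy: the identity at t = 0, the projection pr at t = 1."""
    return x + t * prv(x)

x = np.array([0.3, 1.1])
path = [normal_retraction(x, t) for t in np.linspace(0.0, 1.0, 5)]
# Every intermediate point has the same closest point, and the end point lies on M.
print(np.allclose([pr(y) for y in path], pr(x)), np.isclose(np.linalg.norm(path[-1]), R))  # True True
```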

The paper is organized as follows. Section 2 lays the groundwork for the paper, providing requisite definitions and deriving some results for general differentiable submanifolds of Euclidean spaces. In Sect. 3 we calculate theoretical bounds on the persistence parameter \(p\): the lower bound ensures that the union of ellipsoids covers the manifold and the upper bound ensures that the union does not intersect the medial axis. In Sect. 4 we explain the computer-assisted part of the proof used to show that the normal deformation retraction works on the intersections of ellipsoids. In Sect. 5 we construct the rest of the deformation retraction from the union of ellipsoids to the manifold. This section is divided into several subsections for easier reading. Section 6 collects the results from the paper to prove the main theorem. In Sect. 7 we discuss our results and future work.

1.1 Notation

Natural numbers \(\mathbb {N}\) include zero. Unbounded real intervals are denoted by \(\mathbb {R}_{> a}\), \(\mathbb {R}_{\le a}\) etc. Bounded real intervals are denoted by \({\mathbb {R}}_{(a, b)}\) (open), \({\mathbb {R}}_{[a, b]}\) (closed) etc.

Glossary:

- \(d\): Euclidean distance in \(\mathbb {R}^n\)
- \(\mathcal {N}\): a submanifold of \(\mathbb {R}^n\)
- \(\mathcal {M}\): \(m\)-dimensional submanifold of \(\mathbb {R}^n\), embedded as a closed subset
- \(\mathcal {M}_{r}\): open r-thickening of \(\mathcal {M}\), i.e. \(\mathcal {M}_{r} = \{\texttt {X}\in \mathbb {R}^n \mid d(\texttt {X}, \mathcal {M}) < r\}\)
- \(\overline{\mathcal {M}}_{r}\): closed r-thickening of \(\mathcal {M}\), i.e. \(\overline{\mathcal {M}}_{r} = \{\texttt {X}\in \mathbb {R}^n \mid d(\texttt {X}, \mathcal {M}) \le r\}\)
- tangent space of \(\mathcal {M}\) at \(\texttt {X}\)
- normal space of \(\mathcal {M}\) at \(\texttt {X}\)
- \(\mathcal {S}\): manifold sample (a subset of \(\mathcal {M}\)), non-empty and locally finite
- \(\varkappa \): the Hausdorff distance between \(\mathcal {M}\) and \(\mathcal {S}\)
- \({\mathcal {A}}\): the medial axis of \(\mathcal {M}\)
- \({\mathcal {A}}^\complement \): the complement of the medial axis in \(\mathbb {R}^n\), i.e. \(\mathbb {R}^n\setminus {\mathcal {A}}\)
- \(\tau \): the reach of \(\mathcal {M}\)
- \(p\): persistence parameter
- open ellipsoid with the center in a sample point \(\texttt {S}\in \mathcal {S}\) with the major semi-axes tangent to \(\mathcal {M}\)
- closed ellipsoid with the center in a sample point \(\texttt {S}\in \mathcal {S}\) with the major semi-axes tangent to \(\mathcal {M}\)
- the boundary of the ellipsoid with the center in \(\texttt {S}\)
- the union of open ellipsoids over the sample
- the union of closed ellipsoids over the sample
- \(pr\): the map \({\mathcal {A}}^\complement \rightarrow \mathcal {M}\) taking a point to the unique closest point on \(\mathcal {M}\)
- \(prv\): the map taking a point \(\texttt {X}\) to the vector \(pr(\texttt {X}) - \texttt {X}\)
- auxiliary vector field
- \(\widetilde{V}\): auxiliary vector field
- \(V\): the vector field of directions for the deformation retraction
- \(\Phi \): the flow of the vector field \(V\)
- \(R\): a deformation retraction to a tubular neighbourhood of \(\mathcal {M}\)

2 General definitions

All constructions in this paper are done in an ambient Euclidean space \(\mathbb {R}^n\), \(n\in \mathbb {N}\), equipped with the usual Euclidean metric \(d\). We will use the symbol \(\mathcal {N}\) for a general submanifold of \(\mathbb {R}^n\).

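Given \(r \in \mathbb {R}_{> 0}\), we denote the open and closed r-thickening of \(\mathcal {N}\) by

\[\mathcal {N}_{r} = \{\texttt {X}\in \mathbb {R}^n \mid d(\texttt {X}, \mathcal {N}) < r\}, \qquad \overline{\mathcal {N}}_{r} = \{\texttt {X}\in \mathbb {R}^n \mid d(\texttt {X}, \mathcal {N}) \le r\}.\]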

By definition, each point \(\texttt {X}\) of a manifold \(\mathcal {N}\) has a neighbourhood homeomorphic to a Euclidean space or a closed Euclidean half-space. The dimension of this (half-)space is the dimension of \(\mathcal {N}\) at \(\texttt {X}\). Different points of a manifold can have different dimensions,Footnote 1 though the dimension is constant on each connected component. In this paper, when we say that \(\mathcal {N}\) is an \(m\)-dimensional manifold, we mean that it has dimension \(m\) at every point.

We quickly recall from general topology that it is equivalent for a subset of a Euclidean space to be closed and to be properly embedded.

Proposition 2.1

Let \((\mathcal {X}, d)\) be a metric space in which every closed ball is compact (every Euclidean space \(\mathbb {R}^n\) satisfies this property). The following statements are equivalent for any subset \(\mathcal {S} \subseteq \mathcal {X}\).

1. \(\mathcal {S}\) is a closed subset of \(\mathcal {X}\).
2. \(\mathcal {S}\) is properly embedded into \(\mathcal {X}\), i.e. the inclusion \(\mathcal {S} \hookrightarrow \mathcal {X}\) is a proper map.Footnote 2
3. \(\mathcal {S}\) is empty or distances from points in the ambient space to \(\mathcal {S}\) are attained. That is, for every \(\texttt {X}\in \mathcal {X}\) there exists \(\texttt {Y}\in \mathcal {S}\) such that \(d(\texttt {X}, \mathcal {S}) = d(\texttt {X}, \texttt {Y})\).

Proof

- \(\underline{(1 \Rightarrow 2)}\) If \(\mathcal {S}\) is closed in \(\mathcal {X}\), then its intersection with a compact subset of \(\mathcal {X}\) is compact, so \(\mathcal {S}\) is properly embedded into \(\mathcal {X}\).
- \(\underline{(2 \Rightarrow 3)}\) If \(\mathcal {S}\) is non-empty, pick \(\texttt {S} \in \mathcal {S}\), and denote by \(\overline{B}(\texttt {X}, r)\) the closed ball with center \(\texttt {X}\) and radius \(r\). For any \(\texttt {X}\in \mathcal {X}\) we have \(d(\texttt {X}, \mathcal {S}) \le d(\texttt {X}, \texttt {S})\), so \(d(\texttt {X}, \mathcal {S}) = d\big (\texttt {X}, \mathcal {S} \cap \overline{B}(\texttt {X}, d(\texttt {X}, \texttt {S}))\big )\). Since \(\mathcal {S}\) is properly embedded in \(\mathcal {X}\), its intersection with the compact closed ball \(\overline{B}(\texttt {X}, d(\texttt {X}, \texttt {S}))\) is also compact, and it is non-empty since it contains \(\texttt {S}\). A continuous map from a non-empty compact space into the reals attains its minimum, so there exists \(\texttt {Y}\in \mathcal {S}\) such that \(d(\texttt {X}, \texttt {Y}) = d\big (\texttt {X}, \mathcal {S} \cap \overline{B}(\texttt {X}, d(\texttt {X}, \texttt {S}))\big ) = d(\texttt {X}, \mathcal {S})\).
- \(\underline{(3 \Rightarrow 1)}\) The empty set is closed. Assume that \(\mathcal {S}\) is non-empty. Then for every point in the closure \(\texttt {X}\in \overline{\mathcal {S}}\) we have \(d(\texttt {X}, \mathcal {S}) = 0\). By assumption this distance is attained, i.e. we have \(\texttt {Y}\in \mathcal {S}\) such that \(d(\texttt {X}, \texttt {Y}) = 0\), so \(\texttt {X}= \texttt {Y}\in \mathcal {S}\). Thus \(\overline{\mathcal {S}} \subseteq \mathcal {S}\), so \(\mathcal {S}\) is closed.

\(\square \)

In this paper we consider exclusively submanifolds of a Euclidean space which are properly embedded, so closed subsets. We mostly use the term ‘properly embedded’ instead of ‘closed’ to avoid confusion: the term ‘closed manifold’ is usually used in the sense ‘compact manifold with no boundary’ which is a stronger condition (a properly embedded submanifold need not be compact or without boundary, though every compact submanifold is properly embedded).

A manifold can have a smooth structure of any order \(k\); in that case it is called a \({\mathcal {C}}^k\)-manifold. A \({\mathcal {C}}^k\)-submanifold of \(\mathbb {R}^n\) is a \({\mathcal {C}}^k\)-manifold which is a subset of \(\mathbb {R}^n\) such that the inclusion map is \({\mathcal {C}}^k\).

If \(\mathcal {N}\) is at least a \({\mathcal {C}}^1\)-manifold, one may abstractly define the tangent space and the normal space of \(\mathcal {N}\) at any point \(\texttt {X}\in \mathcal {N}\) (\(\texttt {X}\) is allowed to be a boundary point). As we restrict ourselves to submanifolds of \(\mathbb {R}^n\), we also treat the tangent and the normal space as affine subspaces of \(\mathbb {R}^n\), with their origins placed at \(\texttt {X}\). The dimension of the tangent (resp. normal) space at \(\texttt {X}\) is the same as the dimension (resp. codimension) of \(\mathcal {N}\) at \(\texttt {X}\). Because of this and because the tangent and the normal space are orthogonal, together they generate \(\mathbb {R}^n\).

Definition 2.2

Let \(\mathcal {N}\) be a \({\mathcal {C}}^1\)-submanifold of \(\mathbb {R}^n\), \(\texttt {X}\in \mathcal {N}\) and \(m\) the dimension of \(\mathcal {N}\) at \(\texttt {X}\).

- A tangent-normal coordinate system at \(\texttt {X}\in \mathcal {N}\) is an \(n\)-dimensional orthonormal coordinate system with the origin in \(\texttt {X}\), the first \(m\) coordinate axes tangent to \(\mathcal {N}\) at \(\texttt {X}\) and the last \(n-m\) axes normal to \(\mathcal {N}\) at \(\texttt {X}\).
- A planar tangent-normal coordinate system at \(\texttt {X}\in \mathcal {N}\) is a two-dimensional plane in \(\mathbb {R}^n\) containing \(\texttt {X}\), together with a choice of an orthonormal coordinate system on it with the origin in \(\texttt {X}\), the first axis (the abscissa) tangent to \(\mathcal {N}\) at \(\texttt {X}\) and the second axis (the ordinate) normal to \(\mathcal {N}\) at \(\texttt {X}\).
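Numerically, a tangent-normal coordinate system can be obtained from any basis of the tangent space by a complete QR decomposition. The sketch below assumes the tangent space at \(\texttt {X}\) is given as the column span of an \(n\times m\) array of full rank (an assumption of this illustration); splitting \(\texttt {Y} - \texttt {X}\) into its tangent and normal parts then gives non-negative planar coordinates \((y_T, y_N)\), as in Lemma 2.6. The function names are hypothetical.

```python
import numpy as np

def tangent_normal_basis(tangent_vectors):
    """Orthonormal basis of R^n whose first m columns span the tangent space.

    tangent_vectors: (n, m) array whose columns span the tangent space at X
    (assumed to have full column rank m).
    """
    # In a complete QR decomposition the first m columns of Q span the column
    # space of the input and the remaining n - m columns span its orthogonal
    # complement, i.e. the normal space.
    q, _ = np.linalg.qr(tangent_vectors, mode="complete")
    return q

def planar_coordinates(x, y, tangent_vectors):
    """Non-negative coordinates (y_T, y_N) of Y in a planar tangent-normal system at X."""
    m = tangent_vectors.shape[1]
    q = tangent_normal_basis(tangent_vectors)
    coords = q.T @ (y - x)               # coordinates of Y in the n-dimensional system
    return float(np.linalg.norm(coords[:m])), float(np.linalg.norm(coords[m:]))

# Unit sphere in R^3 at its north pole X = (0, 0, 1).
x = np.array([0.0, 0.0, 1.0])
tangent = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
y = np.array([3.0, 4.0, 6.0])
print(planar_coordinates(x, y, tangent))  # both coordinates equal 5 up to rounding
```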

Recall from Proposition 2.1 that distances from points to a non-empty properly embedded submanifold are attained. However, these distances need not be attained in just one point. We recall the familiar definitions of the medial axis and the reach.

Definition 2.3

The medial axis of a submanifold \(\mathcal {N}\subseteq \mathbb {R}^n\) is the set of all points in the ambient space for which the distance to \(\mathcal {N}\) is attained in at least two points:

\[\big \{\texttt {X}\in \mathbb {R}^n \mid \text {there exist } \texttt {Y}\ne \texttt {Z} \text { in } \mathcal {N} \text { with } d(\texttt {X}, \texttt {Y}) = d(\texttt {X}, \texttt {Z}) = d(\texttt {X}, \mathcal {N})\big \}.\]

The reach of \(\mathcal {N}\) is the distance between the manifold \(\mathcal {N}\) and its medial axis (if the medial axis is empty,Footnote 3 the reach is defined to be \(\infty \)).

The manifold and its medial axis are always disjoint.
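In practice the reach has to be estimated (see the references in the introduction). One classical characterization, due to Federer and not specific to this paper, is \(\tau = \inf _{\texttt {X}\ne \texttt {Y}} \frac{|\texttt {Y}-\texttt {X}|^2}{2\, d(\texttt {Y}-\texttt {X}, T_\texttt {X})}\), where \(T_\texttt {X}\) denotes the tangent space at \(\texttt {X}\) as a linear subspace. Restricting the infimum to finitely many sample pairs gives an upper estimate. The sketch below does this for a closed curve in the plane with known unit normals; for an ellipse with semi-axes \(a > b\) the reach is \(b^2/a\), the smallest radius of curvature. The function name is hypothetical.

```python
import numpy as np

def federer_reach_estimate(points, normals):
    """Upper estimate of the reach of a closed plane curve from sample pairs.

    points:  (k, 2) points x_i on the curve
    normals: (k, 2) unit normals at the x_i
    Returns the minimum over pairs i != j of
    |x_j - x_i|^2 / (2 * dist(x_j - x_i, tangent line at x_i)).
    """
    diff = points[None, :, :] - points[:, None, :]          # diff[i, j] = x_j - x_i
    sq = np.sum(diff ** 2, axis=-1)
    # For a plane curve, the distance to the tangent line is |<diff, normal>|.
    normal_part = np.abs(np.einsum("ijk,ik->ij", diff, normals))
    with np.errstate(divide="ignore", invalid="ignore"):
        ratio = np.where(normal_part > 0, sq / (2.0 * normal_part), np.inf)
    return float(ratio.min())

a, b = 2.0, 1.0                                   # ellipse semi-axes
t = 2 * np.pi * np.arange(1000) / 1000
points = np.column_stack([a * np.cos(t), b * np.sin(t)])
normals = np.column_stack([b * np.cos(t), a * np.sin(t)])
normals /= np.linalg.norm(normals, axis=1, keepdims=True)
print(federer_reach_estimate(points, normals))    # slightly above b**2 / a = 0.5
```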

Definition 2.4

Let \(\mathcal {N}\) be a \({\mathcal {C}}^1\)-submanifold of \(\mathbb {R}^n\), \(\texttt {X}\in \mathcal {N}\) and \(\textbf{N}\) a non-zero normal vector to \(\mathcal {N}\) at \(\texttt {X}\). The  -ball associated to \(\texttt {X}\) and \(\textbf{N}\) is the closed ball (in \(\mathbb {R}^n\), so \(n\)-dimensional) with radius  and centered at  , which therefore touches \(\mathcal {N}\) at \(\texttt {X}\).Footnote 4 A  -ball associated to \(\texttt {X}\) is the  -ball associated to \(\texttt {X}\) and some non-zero normal vector to \(\mathcal {N}\) at \(\texttt {X}\).

The significance of associated  -balls is that they restrict where a manifold can be situated. Specifically, a manifold is disjoint from the interior of each of its associated  -balls.

We will approximate manifolds with a union of ellipsoids (similarly to how one uses a union of balls to approximate a space in the case of a Čech complex). The idea is to use ellipsoids which are elongated in the directions tangent to the manifold, so that they “extend further in the directions the manifold does” and therefore require a sample of lower density.

Let us define the kind of ellipsoids we use in this paper.

Definition 2.5

Let \(\mathcal {N}\) be a \({\mathcal {C}}^1\)-submanifold of \(\mathbb {R}^n\) and \(p\in \mathbb {R}_{> 0}\). The tangent-normal open (resp. closed) \(p\)-ellipsoid at \(\texttt {X}\in \mathcal {N}\) is the open (resp. closed) ellipsoid in \(\mathbb {R}^n\) with the center in \(\texttt {X}\), the tangent semi-axes of length  and the normal semi-axes of length \(p\). Explicitly, in a tangent-normal coordinate system at \(\texttt {X}\) the tangent-normal open and closed \(p\)-ellipsoids are given by

where \(m\) denotes the dimension of \(\mathcal {N}\) at \(\texttt {X}\). If  , then these “ellipsoids” are simply thickenings of  :

Observe that the definitions of ellipsoids are independent of the choice of the tangent-normal coordinate system; they depend only on the submanifold itself.

The value \(p\) in the definition of ellipsoids serves as a “persistence parameter” (Ghrist 2008; Carlsson 2009; Carlsson and Zomorodian 2005; Edelsbrunner et al. 2002; Breiding et al. 2018). We purposefully do not take ellipsoids which are similar at all \(p\) (which would mean that the ratio between the tangent and the normal semi-axes is constant). Rather, we want ellipsoids which are more elongated (have higher eccentricity) for smaller \(p\). This is because on a smaller scale a smooth manifold aligns more closely with its tangent space, and so should the ellipsoids. We want the length of the major semi-axes to be a function of \(p\) with the following properties: for each \(p\) its value is larger than \(p\), and when \(p\) goes to 0, the function value also goes to 0, but the eccentricity goes to 1. In addition, the function should allow the following argument. If we change the unit length of the coordinate system but otherwise leave the manifold “the same”, we want the ellipsoids to remain “the same” as well; since the reach of the manifold changes by the same factor as the unit length, the function should take the reach into account. The simplest function satisfying all these properties is arguably  , which turns out to work for the results we want.
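Once coordinates in a tangent-normal coordinate system are known, membership in a tangent-normal \(p\)-ellipsoid is a weighted sum of squares. In this sketch the tangent semi-axis length is passed in as the parameter `tangent_semi_axis` (a hypothetical name) rather than computed from \(p\) and the reach, so any function with the properties listed above can be plugged in. The eccentricity helper shows that a growing ratio between the tangent and the normal semi-axes drives the eccentricity to 1.

```python
import numpy as np

def in_tangent_normal_ellipsoid(coords, m, p, tangent_semi_axis, closed=False):
    """Test membership in a tangent-normal p-ellipsoid.

    coords: coordinates of the point in a tangent-normal coordinate system at the
        centre X; the first m entries are tangent, the remaining ones normal.
    p: length of the normal semi-axes.
    tangent_semi_axis: length of the tangent semi-axes (hypothetical parameter,
        supplied by the caller as a function of p and the reach).
    """
    coords = np.asarray(coords, dtype=float)
    value = (np.sum(coords[:m] ** 2) / tangent_semi_axis ** 2
             + np.sum(coords[m:] ** 2) / p ** 2)
    return bool(value <= 1.0) if closed else bool(value < 1.0)

def eccentricity(p, tangent_semi_axis):
    """Eccentricity of a section through one tangent and one normal axis
    (assumes tangent_semi_axis > p, so the tangent axis is the major one)."""
    return float(np.sqrt(1.0 - (p / tangent_semi_axis) ** 2))

# A 2-dimensional manifold in R^3: two tangent coordinates, one normal coordinate.
print(in_tangent_normal_ellipsoid([0.8, 0.0, 0.05], m=2, p=0.1, tangent_semi_axis=1.0))  # True
print(in_tangent_normal_ellipsoid([0.0, 0.0, 0.1], m=2, p=0.1, tangent_semi_axis=1.0))   # False
print(in_tangent_normal_ellipsoid([0.0, 0.0, 0.1], m=2, p=0.1, tangent_semi_axis=1.0,
                                  closed=True))                                         # True
```

The second and third calls test a boundary point, which belongs to the closed but not to the open ellipsoid.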

Figure 1 shows an example of how a manifold, its associated balls and a tangent-normal ellipsoid look in a tangent-normal coordinate system at some point on the manifold.

We now prove a few results that will be useful later.

Lemma 2.6

Let \(\mathcal {N}\) be a properly embedded  -submanifold of \(\mathbb {R}^n\). Let \(\texttt {X}\in \mathcal {N}\) and let \(m\) be the dimension of \(\mathcal {N}\) at \(\texttt {X}\). Assume \(0< m< n\).

1. For every \(\texttt {Y}\in \mathbb {R}^n\) there exists a planar tangent-normal coordinate system at \(\texttt {X}\in \mathcal {N}\) which contains \(\texttt {Y}\). Without loss of generality we may require that the coordinates of \(\texttt {Y}\) in this coordinate system are non-negative (\(\texttt {Y}\) lies in the closed first quadrant).
2. If  and \(\textbf{N}\) is a vector normal to  at \(\texttt {Y}\), then we may additionally assume that the planar tangent-normal coordinate system from the previous item contains \(\textbf{N}\).
3. Let \(\mathcal {O}\) be a closed \((n-m+1)\)-dimensional ball,  -embedded in \(\mathbb {R}^n\) (in particular  is a  -submanifold of \(\mathbb {R}^n\), diffeomorphic to an \((n-m)\)-dimensional sphere). Assume that  and that \(\mathcal {N}\) and  intersect transversely at \(\texttt {X}\) (i.e.  and  linearly generate the whole \(\mathbb {R}^n\), or equivalently, these two tangent spaces intersect at a single point). Then \(\texttt {X}\) is not the only intersection point, i.e. there exists  .
4. Assume  . Let \(\texttt {Y}\in \mathbb {R}^n\) and let \((y_T, y_N)\) be the (non-negative) coordinates of \(\texttt {Y}\) in the planar tangent-normal coordinate system from the first item. Let \(\mathcal {D}\) be the set of centers of all  -balls associated to \(\texttt {X}\) (i.e. the \((n-m-1)\)-dimensional sphere within  with the center in \(\texttt {X}\) and the radius  ). Let \(\mathcal {C}\) be the cone which is the convex hull of  , and assume that  . Then

Proof

-

1.

Fix an \(n\)-dimensional tangent-normal coordinate system at \(\texttt {X}\in \mathcal {N}\), and let \((y_1, \ldots , y_n)\) be the coordinates of \(\texttt {Y}\). Let \(\textbf{a} = (y_1, \ldots , y_m, 0, \ldots , 0)\), \(\textbf{b} = (0, \ldots , 0, y_{m+1}, \ldots , y_n)\). If both \(\textbf{a}\) and \(\textbf{b}\) are non-zero, they define a (unique) planar tangent-normal coordinate system at \(\texttt {X}\) which contains \(\texttt {Y}\). If \(\textbf{a}\) is zero (resp. \(\textbf{b}\) is zero), choose an arbitrary tangent (resp. normal) direction (we may do this since \(0< m< n\)).

-

2.

Assume that

and \(\textbf{N}\) is a direction, normal to

and \(\textbf{N}\) is a direction, normal to  . In the \(n\)-dimensional tangent-normal coordinate system from the previous item, the boundary

. In the \(n\)-dimensional tangent-normal coordinate system from the previous item, the boundary  is given by the equation

is given by the equation

The gradient of the left-hand side, up to a scalar factor, is

The vector \(\textbf{N}\) has to be parallel to it since

has codimension 1, i.e. a non-zero \(\lambda \in \mathbb {R}\) exists such that

has codimension 1, i.e. a non-zero \(\lambda \in \mathbb {R}\) exists such that  . Hence \(\textbf{N}\) also lies in the plane, determined by \(\textbf{a}\) and \(\textbf{b}\). This proof works for

. Hence \(\textbf{N}\) also lies in the plane, determined by \(\textbf{a}\) and \(\textbf{b}\). This proof works for  , but the required modification for

, but the required modification for  is trivial.

is trivial. -

3.

Since \(\mathcal {O}\) is a compact \((n-m+1)\)-dimensional disk and  is closed, there exists a thickening of \(\mathcal {O}\) (denote it by \(\mathcal {T}\)) which is diffeomorphic to an \(n\)-dimensional ball and is still disjoint from  . With a small perturbation of \(\mathcal {N}\) around  (but away from the intersection \(\mathcal {N}\cap \mathcal {O}\), which must remain unchanged) we can achieve that \(\mathcal {N}\) and  only have transversal intersections (Lee 2013). Imagine \(\mathbb {R}^n\) embedded into its one-point compactification \(S^n\) (denote the added point by \(\infty \)) in such a way that \(\mathcal {T}\) is a hemisphere. Replace the part of \(\mathcal {N}\) outside of \(\mathcal {T}\) with a copy of \(\mathcal {N}\cap \mathcal {T}\), reflected over  , and denote the obtained space by \(\mathcal {N}'\). This is an embedding of the so-called double of the manifold \(\mathcal {N}\cap \mathcal {T}\). Then \(\mathcal {N}'\) is a manifold without boundary, closed in the sphere, and therefore compact. If necessary, perturb it slightly around the point \(\infty \), so that \(\infty \notin \mathcal {N}'\). Hence \(\mathcal {N}'\) is a compact submanifold of \(\mathbb {R}^n\) without boundary and  -smooth everywhere except possibly on  . The double of a  -manifold can be equipped with a  -structure. Therefore we can use Whitney’s approximation theorem (Lee 2013) to adjust the embedding of \(\mathcal {N}'\) on a neighbourhood of  away from \(\mathcal {O}\), so that it is  -smooth everywhere. The result is a compact manifold \(\mathcal {N}'\) without boundary satisfying all the properties we required of \(\mathcal {N}\), and we have \(\mathcal {N}' \cap \mathcal {O} = \mathcal {N}\cap \mathcal {O}\). This shows that we may without loss of generality assume that \(\mathcal {N}\) is compact without boundary. Any compact k-dimensional submanifold of \(S^n\) without boundary represents an element in the cohomology \(H^k(S^n; \mathbb {Z}_2)\) (we take \(\mathbb {Z}_2\)-coefficients, so that we do not have to worry about orientation). For elements \([\mathcal {N}] \in H^m(S^n; \mathbb {Z}_2)\) and  we know (see Bredon 2013, Chapter VI, Section 11 for the relevant definitions and results) that their cup-product  is the intersection number of \(\mathcal {N}\) and  (times the generator). Since the cohomology of \(S^n\) is trivial except in dimensions 0 and \(n\), we have  , and hence  . But the local intersection number at the transversal intersection \(\texttt {X}\) is 1, and the intersection number is the sum of local ones, so \(\texttt {X}\) cannot be the only point in  .

4.

First consider the case when  , i.e. \(y_T = 0\). Then

Now suppose  . Then the cone \(\mathcal {C}\) is homeomorphic to an \((n-m+1)\)-dimensional closed ball. This \(\mathcal {C}\) and its boundary are smooth everywhere except at \(\texttt {Y}\) and on \(\mathcal {D}\). Let \(\mathcal {E}\) be the \((n-m+1)\)-dimensional affine subspace which contains \(\texttt {Y}\) and  (and thus the whole \(\mathcal {C}\)). We can smooth  around the centers of the associated balls within \(\mathcal {E}\) without affecting the intersection with \(\mathcal {N}\), since \(\mathcal {N}\) is disjoint from the interiors of the associated  -balls. If \(\texttt {Y}\in \mathcal {N}\), then  , and we are done. If \(\texttt {Y}\notin \mathcal {N}\), then \(d(\mathcal {N}, \texttt {Y}) > 0\) since \(\mathcal {N}\) is a closed subset. Then we can also smooth  around \(\texttt {Y}\) within \(\mathcal {E}\) without affecting the intersection with \(\mathcal {N}\). The boundary smoothed in this way is diffeomorphic to an \((n-m)\)-dimensional sphere, and so by the generalized Schoenflies theorem it splits \(\mathcal {E}\) into the inner part, diffeomorphic to an \((n-m+1)\)-dimensional ball, and the outer unbounded part. Since \(\mathcal {N}\) intersects  , and therefore also its smoothed version, orthogonally at \(\texttt {X}\) (because the smoothing was done at positive distance from \(\mathcal {N}\)), this intersection is transversal. By the previous item another intersection point  exists. It cannot lie in  , since we would then have a manifold point in the interior of some associated ball, so \(\texttt {X}'\) must lie on the lateral surface of the cone. That is, \(\texttt {X}'\) lies on the line segment between \(\texttt {Y}\) and some associated ball center, but it cannot lie in the interior of the associated ball, so \(d(\texttt {X}', \texttt {Y})\) is bounded by the distance between \(\texttt {Y}\) and the furthest associated ball center, decreased by  . The furthest center is the one within the starting planar tangent-normal coordinate system that has coordinates  . Thus

\(\square \)

Lemma 2.7

Let \(A, B \in \mathbb {R}_{\ge 0}\), not both 0, and let \(\tau \in \mathbb {R}_{> 0}\). Then a unique \(q \in \mathbb {R}_{> 0}\) exists which solves the equation

$$\begin{aligned} \frac{A}{q^2 + \tau q} + \frac{B}{q^2} = 1. \end{aligned}$$

Moreover, this q depends continuously on A and B, and if \((A, B) \rightarrow (0, 0)\) (with \(\tau \) fixed), then \(q \rightarrow 0\).

Proof

If \(A = 0\), then clearly \(q = \sqrt{B} > 0\) works. If \(B = 0\), then the unique positive solution to the quadratic equation \(q^2 + \tau q - A = 0\) is \(q = \frac{1}{2}\big (\sqrt{\tau ^2 + 4A} - \tau \big )\).

Assume that \(A, B > 0\). Multiply the equation from the lemma by \(q^2 (q + \tau )\) and take all terms to one side of the equation to get

$$\begin{aligned} q^3 + \tau q^2 - (A+B) q - \tau B = 0. \end{aligned}$$

Define the function \(f:\mathbb {R}\rightarrow \mathbb {R}\) by \(f(x) \mathrel {\mathop :}=x^3 + \tau x^2 - (A+B) x - \tau B\). The zeros of its derivative \(f'(x) = 3x^2 + 2\tau x - (A+B)\) are

$$\begin{aligned} x_{1,2} = \frac{-\tau \pm \sqrt{\tau ^2 + 3(A+B)}}{3}; \end{aligned}$$

since \(A+B > 0\), both zeros are real and one is negative, the other positive. Let z denote the positive zero. We have \(f(0) = -\tau B < 0\) and \(f'\) is \(\le 0\) on \({\mathbb {R}}_{[0, z]}\), so f cannot have a zero here, and \(f(z) < 0\). Since f is strictly increasing on \(\mathbb {R}_{> z}\) and \(\lim _{x \rightarrow \infty } f(x) = \infty \), we conclude that f has a unique zero on \(\mathbb {R}_{> z}\) and therefore also on \(\mathbb {R}_{> 0}\).

Since q is the root of the polynomial \(q^3 + \tau q^2 - (A+B) q - \tau B\) and polynomial roots depend continuously on the coefficients, q depends continuously on A and B as well. In particular, if A and B tend to 0, then q tends to one of the roots of \(q^3 + \tau q^2\). It cannot tend to \(-\tau \) since it is positive, so it tends to 0. \(\square \)
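The proof shows that on \(\mathbb {R}_{\ge 0}\) the cubic is non-positive up to its positive root and positive beyond it, so q can be computed by bisection. The following Python sketch illustrates Lemma 2.7 numerically, including the two boundary cases and the limit \(q \rightarrow 0\). The name `solve_q` is our own, not from the paper, and the code is an illustration, not part of the argument.

```python
import math


def solve_q(A: float, B: float, tau: float, iters: int = 200) -> float:
    """Unique positive root of q^3 + tau*q^2 - (A+B)*q - tau*B (Lemma 2.7).

    Equivalently, the positive solution of A/(q^2 + tau*q) + B/q^2 = 1.
    """
    if A < 0 or B < 0 or (A == 0 and B == 0) or tau <= 0:
        raise ValueError("need A, B >= 0 not both 0, and tau > 0")

    def f(x: float) -> float:
        return x**3 + tau * x**2 - (A + B) * x - tau * B

    # f <= 0 on [0, q] and f > 0 beyond q, so the invariant
    # f(lo) <= 0 < f(hi) keeps the root bracketed.
    lo, hi = 0.0, 1.0
    while f(hi) <= 0:  # grow the bracket until f changes sign
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


# The two boundary cases from the proof:
print(solve_q(0.0, 4.0, 1.0))  # A = 0: close to sqrt(B) = 2
print(solve_q(2.0, 0.0, 1.0))  # B = 0: close to (sqrt(1 + 8) - 1) / 2 = 1
# Small (A, B) give small q, as stated in the lemma:
print(solve_q(1e-8, 1e-8, 1.0))
```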

Given a properly embedded  -submanifold \(\mathcal {N}\subseteq \mathbb {R}^n\) without boundary and a point \(\texttt {Y}\in \mathcal {N}\), and denoting by \(m\) the dimension of \(\mathcal {N}\) at \(\texttt {Y}\), let us define the continuous function \(q_{\texttt {Y}}:\mathbb {R}^n\rightarrow \mathbb {R}_{\ge 0}\) as follows.

Definition 2.8

If  , then \({q_{\texttt {Y}}(\texttt {X}) \mathrel {\mathop :}=d(\texttt {X}, \mathcal {N})}\) (this also covers the case \(m= n\), since then necessarily \(\mathcal {N}= \mathbb {R}^n\)). Otherwise, if \(\mathcal {N}\) has dimension 0, then \({q_{\texttt {Y}}(\texttt {X}) \mathrel {\mathop :}=d(\texttt {X}, \texttt {Y})}\). If both the dimension and codimension of \(\mathcal {N}\) are positive and  , we split the definition of \(q_{\texttt {Y}}\) into two cases. Let \(q_{\texttt {Y}}(\texttt {Y}) \mathrel {\mathop :}=0\). For  introduce a tangent-normal coordinate system with the origin in \(\texttt {Y}\) (it exists by Lemma 2.6(1)). Let \(\texttt {X}= (x_1, \ldots , x_n)\) be the coordinates of \(\texttt {X}\) in this coordinate system. Define \(q_{\texttt {Y}}(\texttt {X})\) to be the unique element in \(\mathbb {R}_{> 0}\) which satisfies the equation

Since the sum of squares of the tangent coordinates and the sum of squares of the normal coordinates are each independent of the choice of orthonormal bases of the tangent and normal spaces, this equation depends only on \(\texttt {X}\) and \(\texttt {Y}\). Lemma 2.7 guarantees existence, uniqueness and continuity of \(q_{\texttt {Y}}(\texttt {X})\).

The point of this definition is that (except in the case \(m= n\), when all ellipsoids are the whole \(\mathbb {R}^n\)) the unique ellipsoid of the form  which has \(\texttt {X}\) in its boundary has \(r = q_{\texttt {Y}}(\texttt {X})\), i.e.  .
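A minimal sketch of \(q_{\texttt {Y}}\) in the case \(0< m< n\). It assumes (our reading, consistent with Lemma 2.7) that the defining equation is \(\frac{x_1^2 + \cdots + x_m^2}{q^2 + \tau q} + \frac{x_{m+1}^2 + \cdots + x_n^2}{q^2} = 1\), where the first m axes are tangent and \(\tau \) is the reach of \(\mathcal {N}\). Under this assumption the tangent-normal r-ellipsoid has tangent semi-axes \(\sqrt{r^2 + \tau r}\) and normal semi-axes r. The helper names are hypothetical. The example checks that \(\texttt {X}\) lies on the boundary of the ellipsoid with \(r = q_{\texttt {Y}}(\texttt {X})\), and that rotating the normal coordinates does not change \(q_{\texttt {Y}}(\texttt {X})\).

```python
import math


def solve_q(A, B, tau, iters=200):
    """Positive root of q^3 + tau q^2 - (A+B) q - tau B, by bisection (Lemma 2.7)."""
    f = lambda x: x**3 + tau * x**2 - (A + B) * x - tau * B
    lo, hi = 0.0, 1.0
    while f(hi) <= 0:
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        lo, hi = (lo, mid) if f(mid) > 0 else (mid, hi)
    return 0.5 * (lo + hi)


def q_Y(coords, m, tau):
    """q_Y(X), where coords are the coordinates of X in a tangent-normal frame
    at Y (origin at Y, the first m axes tangent, the remaining axes normal)."""
    A = sum(c * c for c in coords[:m])  # squared tangent part
    B = sum(c * c for c in coords[m:])  # squared normal part
    if A == 0 and B == 0:
        return 0.0  # q_Y(Y) = 0
    return solve_q(A, B, tau)


def ellipsoid_lhs(coords, m, tau, r):
    """ASSUMED boundary equation of the r-ellipsoid at Y (our reading):
    A / (r^2 + tau r) + B / r^2, which equals 1 on the boundary."""
    A = sum(c * c for c in coords[:m])
    B = sum(c * c for c in coords[m:])
    return A / (r * r + tau * r) + B / (r * r)


X = (0.3, -1.2, 0.5)  # n = 3, m = 1: one tangent and two normal coordinates
r = q_Y(X, m=1, tau=1.0)
print(r, ellipsoid_lhs(X, 1, 1.0, r))  # the second value should be close to 1

# Rotating the normal coordinates by an angle t leaves q_Y unchanged:
t = 0.8
X_rot = (X[0],
         math.cos(t) * X[1] - math.sin(t) * X[2],
         math.sin(t) * X[1] + math.cos(t) * X[2])
print(q_Y(X_rot, 1, 1.0) - r)  # should be close to 0
```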

Lemma 2.9

Let \(\mathcal {N}\) be a properly embedded  -submanifold of \(\mathbb {R}^n\). Let \(\texttt {X}\in \mathbb {R}^n\) and \(\texttt {Y}\in \mathcal {N}\). Then \(d(\mathcal {N}, \texttt {X}) \le q_{\texttt {Y}}(\texttt {X})\).

Proof

If  , the statement is clear, so assume  .

Let \(m\) be the dimension of \(\mathcal {N}\) at \(\texttt {Y}\). If \(m= 0\), then \(d(\mathcal {N}, \texttt {X}) \le d(\texttt {Y}, \texttt {X}) = q_{\texttt {Y}}(\texttt {X})\).

For \(0< m< n\) we rely on Lemma 2.6. There is a planar tangent-normal coordinate system which has the origin in \(\texttt {Y}\) and contains \(\texttt {X}\). We can additionally assume that the axes are oriented so that \(\texttt {X}\) is in the closed first quadrant. Since  , there exists \(\varphi \in {\mathbb {R}}_{[0, \frac{\pi }{2}]}\) such that the coordinates of \(\texttt {X}\) in this coordinate system are

where we have shortened \(q \mathrel {\mathop :}=q_{\texttt {Y}}(\texttt {X})\). Hence

Clearly, the last expression is largest where the function \({\mathbb {R}}_{[0, \frac{\pi }{2}]} \rightarrow \mathbb {R}\), \(\varphi \mapsto 2 - (1 - \sin (\varphi ))^2\) attains its maximum, namely at \(\varphi = \tfrac{\pi }{2}\). Thus the bound on \(d(\mathcal {N}, \texttt {X})\) is largest in the normal space at \(\texttt {Y}\), where we get

\(\square \)
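Lemma 2.9 can be sanity-checked on the simplest curved example: a circle of radius \(\tau \) in \(\mathbb {R}^2\), whose reach is \(\tau \) and for which \(d(\mathcal {N}, \texttt {X}) = \big |\Vert \texttt {X}\Vert - \tau \big |\). This sketch again assumes the ellipsoid equation \(A/(q^2+\tau q) + B/q^2 = 1\) from Lemma 2.7, with A and B the squared tangent and normal components of \(\texttt {X}- \texttt {Y}\) (our reading). It samples random pairs \((\texttt {Y}, \texttt {X})\) and reports the largest value of \(d(\mathcal {N}, \texttt {X}) - q_{\texttt {Y}}(\texttt {X})\), which by the lemma should never be positive beyond rounding.

```python
import math
import random


def solve_q(A, B, tau, iters=100):
    """Positive root of q^3 + tau q^2 - (A+B) q - tau B, by bisection (Lemma 2.7)."""
    f = lambda x: x**3 + tau * x**2 - (A + B) * x - tau * B
    lo, hi = 0.0, 1.0
    while f(hi) <= 0:
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        lo, hi = (lo, mid) if f(mid) > 0 else (mid, hi)
    return 0.5 * (lo + hi)


def worst_gap(tau=1.0, trials=5000, seed=0):
    """Largest observed d(N, X) - q_Y(X) for N the circle of radius tau.

    ASSUMPTION: q_Y(X) solves A/(q^2 + tau q) + B/q^2 = 1 (our reading)."""
    rng = random.Random(seed)
    worst = -math.inf
    for _ in range(trials):
        theta = rng.uniform(0.0, 2.0 * math.pi)
        y = (tau * math.cos(theta), tau * math.sin(theta))  # Y on the circle
        tangent = (-math.sin(theta), math.cos(theta))
        normal = (math.cos(theta), math.sin(theta))
        x = (rng.uniform(-3 * tau, 3 * tau), rng.uniform(-3 * tau, 3 * tau))
        v = (x[0] - y[0], x[1] - y[1])
        A = (v[0] * tangent[0] + v[1] * tangent[1]) ** 2
        B = (v[0] * normal[0] + v[1] * normal[1]) ** 2
        if A == 0 and B == 0:
            continue  # X = Y, where q_Y(X) = 0 = d(N, X)
        q = solve_q(A, B, tau)
        d = abs(math.hypot(x[0], x[1]) - tau)  # distance from X to the circle
        worst = max(worst, d - q)
    return worst


print(worst_gap())  # expected to be <= 0 up to rounding
```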

Let us also recall some facts about Lipschitz maps that we will need later. A map f between subsets of Euclidean spaces is Lipschitz if it has a Lipschitz coefficient, i.e. some \(C \in \mathbb {R}_{\ge 0}\) such that for all \(\texttt {X}, \texttt {Y}\) in the domain of f we have \(\big \Vert f(\texttt {X}) - f(\texttt {Y})\big \Vert \le C \cdot \Vert \texttt {X}- \texttt {Y}\Vert \). A map is locally Lipschitz if every point of its domain has a neighbourhood such that the restriction of the map to this neighbourhood is Lipschitz.

Let f and g be maps with Lipschitz coefficients C and D, respectively. Then clearly \(C+D\) is a Lipschitz coefficient for the functions \(f+g\) and \(f-g\), and \(C \cdot D\) is a Lipschitz coefficient for \(g \circ f\) (whenever these functions exist).

For bounded functions the Lipschitz property is preserved under further operations. Here a function is bounded in the usual sense, i.e. its norm is bounded.

Lemma 2.10

Let f and g be maps between subsets of Euclidean spaces with the same domain. Assume that f and g are bounded and Lipschitz.


1.

If b is bilinear with the property \(\big \Vert b(\texttt {X}, \texttt {Y})\big \Vert \le \Vert \texttt {X}\Vert \, \Vert \texttt {Y}\Vert \), then the map \(\texttt {X}\mapsto b\big (f(\texttt {X}), g(\texttt {X})\big )\) is bounded Lipschitz.Footnote 5


2.

Assume g takes values in \(\mathbb {R}\) and has a positive lower bound \(m \in \mathbb {R}_{> 0}\). Then the map \(\texttt {X}\mapsto \frac{f(\texttt {X})}{g(\texttt {X})}\) is bounded Lipschitz.

Proof

Let M be an upper bound for the norms of f and g and let C be a Lipschitz coefficient for f and g. Let \(\texttt {X}\), \(\texttt {X}'\) and \(\texttt {X}''\) be elements of the domain of f and g.


1.

Boundedness: \(\displaystyle {\big \Vert b\big (f(\texttt {X}), g(\texttt {X})\big )\big \Vert \le \big \Vert f(\texttt {X})\big \Vert \, \big \Vert g(\texttt {X})\big \Vert \le M^2}\). Lipschitz property:

$$\begin{aligned}&\big \Vert b\big (f(\texttt {X}'), g(\texttt {X}')\big ) - b\big (f(\texttt {X}''), g(\texttt {X}'')\big )\big \Vert \\&\quad = \big \Vert b\big (f(\texttt {X}'), g(\texttt {X}')\big ) - b\big (f(\texttt {X}''), g(\texttt {X}')\big ) + b\big (f(\texttt {X}''), g(\texttt {X}')\big ) - b\big (f(\texttt {X}''), g(\texttt {X}'')\big )\big \Vert \\&\quad \le \big \Vert f(\texttt {X}') - f(\texttt {X}'')\big \Vert \, \big \Vert g(\texttt {X}')\big \Vert + \big \Vert f(\texttt {X}'')\big \Vert \, \big \Vert g(\texttt {X}') - g(\texttt {X}'')\big \Vert \\&\quad \le 2 C M \big \Vert \texttt {X}' - \texttt {X}''\big \Vert . \end{aligned}$$

2.

Boundedness: \(\displaystyle {\Big \Vert \frac{f(\texttt {X})}{g(\texttt {X})}\Big \Vert = \frac{\Vert f(\texttt {X})\Vert }{|g(\texttt {X})|} \le \frac{M}{m}}\). Lipschitz property:

$$\begin{aligned} \Big \Vert \frac{f(\texttt {X}')}{g(\texttt {X}')} - \frac{f(\texttt {X}'')}{g(\texttt {X}'')}\Big \Vert&= \Big \Vert \frac{f(\texttt {X}') g(\texttt {X}'') - f(\texttt {X}'') g(\texttt {X}')}{g(\texttt {X}') g(\texttt {X}'')}\Big \Vert \\&= \frac{\Vert f(\texttt {X}') g(\texttt {X}'') - f(\texttt {X}'') g(\texttt {X}'') + f(\texttt {X}'') g(\texttt {X}'') - f(\texttt {X}'') g(\texttt {X}')\Vert }{|g(\texttt {X}') g(\texttt {X}'')|} \\&\le \frac{\Vert f(\texttt {X}') - f(\texttt {X}'')\Vert \, \Vert g(\texttt {X}'')\Vert + \Vert f(\texttt {X}'')\Vert \, \Vert g(\texttt {X}'') - g(\texttt {X}')\Vert }{|g(\texttt {X}')| \, |g(\texttt {X}'')|} \\&\le \frac{2 C M}{m^2} \big \Vert \texttt {X}' - \texttt {X}''\big \Vert . \end{aligned}$$

\(\square \)
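The bounds \(2CM\) and \(2CM/m^2\) from the proof can be compared with empirical difference quotients. In this illustrative sketch the functions are our own choice, not from the paper. For part 1 we take f = sin, g = cos and b the product of reals, so M = C = 1. For part 2 we take f = sin and g = 2 + cos, so M = 3 (bounding both functions), C = 1 and m = 1. Difference quotients on a grid are lower bounds for the true Lipschitz constants, so they must stay below the bounds of the lemma.

```python
import math


def max_difference_quotient(h, xs):
    """Largest |h(x) - h(y)| / |x - y| over all pairs of distinct grid points."""
    vals = [h(x) for x in xs]
    best = 0.0
    for i in range(len(xs)):
        for j in range(i + 1, len(xs)):
            best = max(best, abs(vals[i] - vals[j]) / abs(xs[i] - xs[j]))
    return best


xs = [-6.0 + 12.0 * k / 399 for k in range(400)]  # grid on [-6, 6]

# Part 1: b(u, v) = u * v satisfies |b(u, v)| <= |u| |v|; f = sin, g = cos.
M, C = 1.0, 1.0
prod_est = max_difference_quotient(lambda x: math.sin(x) * math.cos(x), xs)

# Part 2: g = 2 + cos has lower bound m = 1; M = 3 bounds both |sin| and |2 + cos|.
M2, C2, m_low = 3.0, 1.0, 1.0
quot_est = max_difference_quotient(lambda x: math.sin(x) / (2.0 + math.cos(x)), xs)

print(prod_est, "<=", 2 * C * M)
print(quot_est, "<=", 2 * C2 * M2 / m_low**2)
```

The bounds are far from sharp here (both true Lipschitz constants equal 1), which is expected: the lemma only needs some coefficient, not the best one.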

Corollary 2.11

Let \((U_i)_{i \in I}\) be a locally finite open cover of a subset U of a Euclidean space, \((f_i)_{i \in I}\) a subordinate smooth partition of unity and \((g_i:U_i \rightarrow \mathbb {R}^n)_{i \in I}\) a family of maps. Let \(g:U \rightarrow \mathbb {R}^n\) be the map obtained by gluing the maps \(g_i\) with the partition of unity \(f_i\), i.e.

Then if all \(g_i\) are locally Lipschitz, so is g.

Proof

Every continuous map is locally bounded; in particular, so is the derivative of a smooth map, and a bound on the derivative over a convex neighbourhood is a Lipschitz coefficient for the map on that neighbourhood (by the mean value inequality). We apply this to the maps \(f_i\).

Given \(x \in U\), pick an open set \(V \subseteq U\) for which the following holds: \(x \in V\), there is a finite set of indices \(F \subseteq I\) such that V intersects the support of \(f_i\) only for \(i \in F\) and \(V \subseteq \bigcap _{i \in F} U_i\), and the maps \(f_i\) and \(g_i\) are bounded and Lipschitz on V for every \(i \in F\). Then \(\left. {g}\right| _V = \sum _{i \in F} \left. {f_i}\right| _V \;\! \left. {g_i}\right| _V\), which is Lipschitz on V by Lemma 2.10. \(\square \)

3 Calculating bounds on persistence parameter

Having derived some results for more general manifolds, we now specify the manifolds for which our main theorem holds. We reserve the symbol \(\mathcal {M}\) for such a manifold.

Let \(\mathcal {M}\) be a non-empty \(m\)-dimensional properly embedded  -submanifold of \(\mathbb {R}^n\) without boundary, and let \({\mathcal {A}}\) be its medial axis. Let \(\tau \) denote the reach of \(\mathcal {M}\). In this section we assume \(\tau < \infty \) and in Sects. 4 and 5 we assume \(\tau = 1\). We will drop these assumptions on \(\tau \) for the main theorem in Sect. 6.

By Proposition 2.1 and the definition of a medial axis, the map \(pr:\mathbb {R}^n\setminus {\mathcal {A}} \rightarrow \mathcal {M}\), which takes a point to its closest point on the manifold \(\mathcal {M}\), is well defined. We also define  , \(prv(\texttt {X}) \mathrel {\mathop :}=pr(\texttt {X}) - \texttt {X}\). We view \(prv(\texttt {X})\) as the vector starting at \(\texttt {X}\) and ending at \(pr(\texttt {X})\). This vector is necessarily normal to the manifold, i.e. it lies in  . By the definition of the reach, the maps \(pr\) and \(prv\) are defined on  .

Lemma 3.1

For every  the maps \(pr\) and \(prv\) are Lipschitz when restricted to \(\overline{\mathcal {M}}_{r}\), with Lipschitz coefficients  and  , respectively. Hence these two maps are continuous on  .

Proof

The map \(pr\) is Lipschitz on \(\overline{\mathcal {M}}_{r}\) by Chazal et al. (2017, Proposition 2) with a Lipschitz coefficient  (Federer 1959, Theorem 4.8(8)). As a difference of two Lipschitz maps, the map \(prv\) is Lipschitz as well, with a Lipschitz coefficient  . The maps \(pr\) and \(prv\) are therefore continuous on \(\mathcal {M}_{r}\) for all  , and hence also on the union  . \(\square \)
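For the circle of radius \(\tau \) in \(\mathbb {R}^2\) (reach \(\tau \)) the projection is explicit: \(pr(\texttt {X}) = \tau \texttt {X}/\Vert \texttt {X}\Vert \). On the closed r-thickening with \(r < \tau \), Federer's coefficient for \(pr\) is \(\tau /(\tau - r)\). By the rule for differences of Lipschitz maps, \(1 + \tau /(\tau - r)\) then works for \(prv\). The sketch below is our illustration, with hypothetical helper names. It estimates both Lipschitz ratios from random pairs in the annulus, and shows that \(\tau /(\tau - r)\) is attained for nearby points on the inner circle.

```python
import math
import random


def pr(x, tau):
    """Closest point on the circle of radius tau (defined for x != 0)."""
    n = math.hypot(x[0], x[1])
    return (tau * x[0] / n, tau * x[1] / n)


def prv(x, tau):
    """The vector from x to pr(x)."""
    p = pr(x, tau)
    return (p[0] - x[0], p[1] - x[1])


def dist(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])


def max_ratios(tau=1.0, r=0.6, pairs=20000, seed=0):
    """Largest observed Lipschitz ratios of pr and prv on tau - r <= |x| <= tau + r."""
    rng = random.Random(seed)

    def point():
        rho = rng.uniform(tau - r, tau + r)
        phi = rng.uniform(0.0, 2.0 * math.pi)
        return (rho * math.cos(phi), rho * math.sin(phi))

    best_pr = best_prv = 0.0
    for _ in range(pairs):
        a, b = point(), point()
        d = dist(a, b)
        if d == 0.0:
            continue
        best_pr = max(best_pr, dist(pr(a, tau), pr(b, tau)) / d)
        best_prv = max(best_prv, dist(prv(a, tau), prv(b, tau)) / d)
    return best_pr, best_prv


tau, r = 1.0, 0.6
est_pr, est_prv = max_ratios(tau, r)
print(est_pr, "<=", tau / (tau - r))
print(est_prv, "<=", 1 + tau / (tau - r))

# Two close points on the inner circle |x| = tau - r realise the ratio tau / (tau - r):
rho, eps = tau - r, 1e-6
a0, b0 = (rho, 0.0), (rho * math.cos(eps), rho * math.sin(eps))
ratio_inner = dist(pr(a0, tau), pr(b0, tau)) / dist(a0, b0)
print(ratio_inner)  # close to tau / (tau - r) = 2.5 here
```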

We want to approximate the manifold \(\mathcal {M}\) with a sample. We assume that the sample set \(\mathcal {S}\) is a non-empty discrete subset of \(\mathcal {M}\), locally finite in \(\mathbb {R}^n\) (meaning, every point in \(\mathbb {R}^n\) has a neighbourhood which intersects only finitely many points of \(\mathcal {S}\)). It follows that \(\mathcal {S}\) is a closed subset of \(\mathbb {R}^n\).

Let \(\varkappa \) denote the Hausdorff distance between \(\mathcal {M}\) and \(\mathcal {S}\). We assume that \(\varkappa \) is finite. This value represents the density of our sample: it means that every point on the manifold \(\mathcal {M}\) has a point in the sample \(\mathcal {S}\) which is at most \(\varkappa \) away.
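Since \(\mathcal {S}\subseteq \mathcal {M}\), the Hausdorff distance reduces to \(\varkappa = \sup _{\texttt {X}\in \mathcal {M}} d(\texttt {X}, \mathcal {S})\). As a worked example (ours, not from the paper), take k equally spaced sample points on a circle of radius \(\tau \). The supremum is then attained at the midpoints between neighbouring samples, giving \(\varkappa = 2\tau \sin \big (\frac{\pi }{2k}\big )\). The sketch compares this formula with a brute-force estimate on a fine grid of the circle that contains those midpoints.

```python
import math


def circle_point(tau, angle):
    return (tau * math.cos(angle), tau * math.sin(angle))


def hausdorff_to_sample(tau, k, refine=64):
    """Brute-force max over a grid on the circle of the distance to the nearest
    of k equally spaced samples (refine is even, so midpoints are grid points)."""
    samples = [circle_point(tau, 2 * math.pi * i / k) for i in range(k)]
    worst = 0.0
    for j in range(k * refine):
        x = circle_point(tau, 2 * math.pi * j / (k * refine))
        nearest = min(math.hypot(x[0] - s[0], x[1] - s[1]) for s in samples)
        worst = max(worst, nearest)
    return worst


tau, k = 1.0, 12
print(hausdorff_to_sample(tau, k), 2 * tau * math.sin(math.pi / (2 * k)))
```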

Since \(\mathcal {M}\) is properly embedded in \(\mathbb {R}^n\) and \(\varkappa < \infty \), the sample \(\mathcal {S}\) is finite if and only if \(\mathcal {M}\) is compact. A properly embedded non-compact submanifold without boundary needs to extend to infinity and so cannot be sampled with finitely many points (think, for example, of the hyperbola \(x^2 - y^2 = 1\) in the plane). As it turns out, we do not need finiteness, only local finiteness, to prove our results.

If the sample is dense enough in the manifold, it should be a good approximation to it. Specifically, we want to recover at least the homotopy type of \(\mathcal {M}\) from the information gathered from \(\mathcal {S}\). A common way to do this is to enlarge the sample points to balls whose union deformation retracts to the manifold and therefore has the same homotopy type (in other words, by the nerve theorem, we consider a Čech complex of the sample).

As we already discussed in the introduction, in this paper we use ellipsoids instead of balls: a tangent space at a point is a good approximation of the manifold near that point, so an ellipsoid with the major semi-axes in the tangent directions approximates the manifold better than a ball. Consequently, a less dense sample should suffice for the approximation. This idea indeed pans out (as demonstrated by Theorem 6), though it turns out that the standard methods used to construct the deformation retraction from the union of balls to the manifold do not work for the ellipsoids.

Given a persistence parameter \(p\in \mathbb {R}_{> 0}\), let us denote the unions of open and closed tangent-normal \(p\)-ellipsoids around sample points by

As a union of open sets,  is open in \(\mathbb {R}^n\). As a locally finite union of closed sets,  is closed in \(\mathbb {R}^n\).

We want a deformation retraction from  to \(\mathcal {M}\). Clearly this will not work for all \(p\in \mathbb {R}_{> 0}\). If \(p\) is too small,  covers only some blobs around sample points, not the whole \(\mathcal {M}\). If \(p\) is too large,  reaches over the medial axis \({\mathcal {A}}\) and can therefore create connections which do not exist in the manifold, so that its homotopy type differs from that of \(\mathcal {M}\). This suggests that the lower bound on \(p\) will be expressed in terms of \(\varkappa \) (the denser the sample, the smaller the required \(p\) for  to cover \(\mathcal {M}\)), and the upper bound on \(p\) in terms of \(\tau \) (the further away the medial axis, the larger we can make the ellipsoids so that they still do not intersect the medial axis).

Lemma 3.2


1.

Assume \(p\in \mathbb {R}_{> 0}\) satisfies

Then  , i.e.  is an open cover of \(\mathcal {M}\).

2.

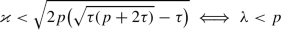

The map \(\mathbb {R}_{> 0} \rightarrow \mathbb {R}_{> 0}\),  , is strictly increasing. Thus there exists a unique \(\lambda \in \mathbb {R}_{> 0}\) such that

for all \(p\in \mathbb {R}_{> 0}\).

Proof


1.

Take any \(\texttt {X}\in \mathcal {M}\). By assumption there exists \(\texttt {S}\in \mathcal {S}\) such that \(d(\texttt {X}, \texttt {S}) \le \varkappa \). We claim that  . If \(m= n\), then  , and a quick calculation shows that

so  . If \(m= 0\), then  and the reach \(\tau \) is half of the distance between the two closest distinct points in \(\mathcal {M}\) (since we are assuming \(\tau < \infty \) and therefore \({\mathcal {A}} \ne \emptyset \), the manifold \(\mathcal {M}\) must have at least two points). If \(p\le 2\tau \), then

so necessarily  . If \(p> 2\tau \), then

so  . Assume hereafter that \(0< m< n\). Choose a planar tangent-normal coordinate system with the origin in \(\texttt {S}\) which contains \(\texttt {X}\) (use Lemma 2.6(1)). In this coordinate system the boundary of  is given by the equation  . A routine calculation shows that it intersects the boundaries of the \(\tau \)-balls associated to \(\texttt {S}\) (with centers in \(\texttt {C}' = (0, \tau )\) and \(\texttt {C}'' = (0, -\tau )\)), given by the equations \(x^2 + (y \pm \tau )^2 = \tau ^2\), in the points

the norm of which is  . It follows that within the given two-dimensional coordinate system

see Fig. 2. Since \(\texttt {S}\in \mathcal {M}\) and the reach of \(\mathcal {M}\) is \(\tau \), the manifold \(\mathcal {M}\) does not intersect the open \(\tau \)-balls associated to \(\texttt {S}\), so  .

2.

The derivative of the given function is

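which is positive for \(p, \tau > 0\), ensuring the existence of the required \(\lambda \). Computed with Mathematica, the explicit value is (with principal branches of the complex square and cube roots, since the radicand of the square root is negative when \(\varkappa \) is small compared to \(\tau \))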

$$\begin{aligned}\lambda= & {} \frac{2 \tau \left( 3 \varkappa ^2+\tau ^2\right) }{3 \root 3 \of {27 \varkappa ^4 \tau ^2-36 \varkappa ^2 \tau ^4+3 \sqrt{81 \varkappa ^8 \tau ^4-408 \varkappa ^6 \tau ^6-96 \varkappa ^4 \tau ^8}-8 \tau ^6}}\\ {}{} & {} + \frac{\root 3 \of {27 \varkappa ^4 \tau ^2-36 \varkappa ^2 \tau ^4+3 \sqrt{81 \varkappa ^8 \tau ^4-408 \varkappa ^6 \tau ^6-96 \varkappa ^4 \tau ^8}-8 \tau ^6}}{6 \tau }-\frac{\tau }{3}.\end{aligned}$$

\(\square \)
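The closed form for \(\lambda \) can be checked numerically. The sketch below assumes (our reading, consistent with Lemma 2.7) that in the planar coordinate system the ellipsoid boundary is \(x^2/(p^2+\tau p) + y^2/p^2 = 1\). Intersecting it with \(x^2 + (y - \tau )^2 = \tau ^2\) gives \(y = p\big (\sqrt{2 + p/\tau } - 1\big )\). Since \(x^2 + y^2 = 2\tau y\) on that circle, the intersection points then have norm \(\sqrt{2\tau y}\), an increasing function of p. The sketch finds \(\lambda \) by bisection on this function and compares the result with the Mathematica expression. That expression is evaluated in complex arithmetic with Python's principal branches, because the inner square root has a negative argument when \(\varkappa \) is small relative to \(\tau \).

```python
import cmath
import math


def norm_at_intersection(p, tau):
    """ASSUMED ellipse x^2/(p^2 + tau p) + y^2/p^2 = 1 meets x^2 + (y - tau)^2 = tau^2
    at height y = p (sqrt(2 + p/tau) - 1); the norm there is sqrt(2 tau y)."""
    y = p * (math.sqrt(2.0 + p / tau) - 1.0)
    return math.sqrt(2.0 * tau * y)


def lam_bisection(kappa, tau, iters=200):
    """The unique p > 0 with norm_at_intersection(p, tau) = kappa."""
    lo, hi = 0.0, 1.0
    while norm_at_intersection(hi, tau) <= kappa:
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if norm_at_intersection(mid, tau) > kappa:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def lam_closed_form(kappa, tau):
    """The closed-form expression for lambda, using principal complex roots."""
    k, t = kappa, tau
    inner = 81 * k**8 * t**4 - 408 * k**6 * t**6 - 96 * k**4 * t**8
    w = (27 * k**4 * t**2 - 36 * k**2 * t**4 + 3 * cmath.sqrt(inner) - 8 * t**6) ** (1.0 / 3.0)
    lam = 2 * t * (3 * k**2 + t**2) / (3 * w) + w / (6 * t) - t / 3
    return lam.real  # the imaginary parts of the two terms cancel up to rounding


for kappa in (0.1, 0.5, 1.0, 3.0):
    print(kappa, lam_bisection(kappa, 1.0), lam_closed_form(kappa, 1.0))
```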

We can strengthen this result to thickenings of \(\mathcal {M}\) (recall the notation for open and closed thickenings,  ,  ).

Corollary 3.3

For every \(r \in \mathbb {R}_{\ge 0}\) and every \(p\in \mathbb {R}_{> \lambda + r}\) we have  .

Proof

Lemma 3.2 implies that  . Hence \(\overline{\mathcal {M}}_{r}\) is contained in the union of r-thickenings of open ellipsoids  , and an r-thickening of  is contained in  . \(\square \)
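Lemma 3.2(1) and Corollary 3.3 can be tested on a circle of radius \(\tau = 1\) sampled at k equally spaced points, so that \(\varkappa = 2\tau \sin (\frac{\pi }{2k})\). This sketch keeps the assumption that the ellipsoid equation is \(A/(p^2+\tau p) + B/p^2 = 1\) (our reading). Under it, \(\texttt {X}\) lies in the open p-ellipsoid at \(\texttt {S}\) exactly when \(q_{\texttt {S}}(\texttt {X}) < p\), and \(\lambda \) is obtained from the increasing intersection-norm function of Lemma 3.2(2). The grid covers the closed r-thickening and contains the midpoints between samples. The sketch checks that every grid point lies in some open ellipsoid once p exceeds \(\lambda + r\). It also checks that for \(p < \lambda \) and r = 0 some point of the circle is left uncovered.

```python
import math


def solve_q(A, B, tau, iters=100):
    """Positive root of q^3 + tau q^2 - (A+B) q - tau B, by bisection (Lemma 2.7)."""
    f = lambda x: x**3 + tau * x**2 - (A + B) * x - tau * B
    lo, hi = 0.0, 1.0
    while f(hi) <= 0:
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        lo, hi = (lo, mid) if f(mid) > 0 else (mid, hi)
    return 0.5 * (lo + hi)


def lam(kappa, tau, iters=100):
    """p with 2 tau y(p) = kappa^2, where y(p) = p (sqrt(2 + p/tau) - 1) (assumed)."""
    g = lambda p: 2.0 * tau * p * (math.sqrt(2.0 + p / tau) - 1.0)
    lo, hi = 0.0, 1.0
    while g(hi) <= kappa**2:
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        lo, hi = (lo, mid) if g(mid) > kappa**2 else (mid, hi)
    return 0.5 * (lo + hi)


def q_S(x, theta, tau):
    """q_S(X) for the sample S at angle theta on the circle of radius tau."""
    v = (x[0] - tau * math.cos(theta), x[1] - tau * math.sin(theta))
    A = (-v[0] * math.sin(theta) + v[1] * math.cos(theta)) ** 2  # tangent part
    B = (v[0] * math.cos(theta) + v[1] * math.sin(theta)) ** 2   # normal part
    return 0.0 if A == 0 and B == 0 else solve_q(A, B, tau)


def all_covered(p, r, tau=1.0, k=16, refine=8, radial=5):
    """Does every grid point of the closed r-thickening lie in some open p-ellipsoid?"""
    thetas = [2 * math.pi * i / k for i in range(k)]
    for j in range(k * refine):  # refine is even, so midpoints are grid points
        phi = 2 * math.pi * j / (k * refine)
        for s in range(radial):  # radial >= 2 radii from tau - r to tau + r
            rho = tau - r + 2 * r * s / (radial - 1)
            x = (rho * math.cos(phi), rho * math.sin(phi))
            if not any(q_S(x, th, tau) < p for th in thetas):
                return False
    return True


tau, k, r = 1.0, 16, 0.2
kappa = 2 * tau * math.sin(math.pi / (2 * k))
lam_val = lam(kappa, tau)
print(all_covered(1.05 * lam_val + r, r))  # p > lambda + r: expected True
print(all_covered(0.5 * lam_val, 0.0))     # p < lambda, r = 0: expected False
```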

Let us now also get an upper bound on \(p\).

Lemma 3.4

Assume  . Then  ; in particular  and  do not intersect the medial axis of \(\mathcal {M}\).

Proof

Take any \(\texttt {S}\in \mathcal {S}\) and  . By Lemma 2.9 we have  . \(\square \)

The results in this section give the theoretical bounds on the persistence parameter \(p\) within which we look for a deformation retraction from  to \(\mathcal {M}\). We summarize them in the following corollary (where \({\mathcal {A}}^\complement = \mathbb {R}^n \setminus {\mathcal {A}}\)).

Corollary 3.5

If  , then  .

4 Program

In this section (as well as the next one) we assume that \(\tau = 1\) and \(0< m< n\).

Our goal is to prove that if we restrict the persistence parameter \(p\) to a suitable interval, the union of ellipsoids  deformation retracts to \(\mathcal {M}\). Recall that the normal deformation retraction is the map retracting a point to its closest point on the manifold, i.e. the convex combination of a point and its projection: \({(\texttt {X}, t) \mapsto (1-t) \;\! \texttt {X}+ t \;\! pr(\texttt {X}) = \texttt {X}+ t \;\! prv(\texttt {X})}\). For example, in Niyogi et al. (2008) this is how the union of balls around sample points is deformation retracted to the manifold.

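The following minimal Python sketch makes the normal deformation retraction concrete on a toy manifold. It uses the unit circle in \(\mathbb {R}^2\), whose reach is 1 (matching \(\tau = 1\)) and whose closest-point projection is simply \(\texttt {X}/\lVert \texttt {X}\rVert \) for \(\texttt {X}\ne 0\). The function names `pr_circle` and `normal_retraction` are hypothetical and purely illustrative. The sketch only shows the straight-line homotopy \(\texttt {X}+ t \, prv(\texttt {X})\); nothing in it is specific to ellipsoids.

```python
import math

def pr_circle(x):
    """Closest point on the unit circle to x (undefined at the origin, its medial axis)."""
    norm = math.hypot(x[0], x[1])
    if norm == 0.0:
        raise ValueError("the origin lies on the medial axis; pr is undefined there")
    return (x[0] / norm, x[1] / norm)

def normal_retraction(x, t):
    """H(x, t) = x + t * prv(x) with prv(x) = pr(x) - x, for t in [0, 1]."""
    px, py = pr_circle(x)
    return (x[0] + t * (px - x[0]), x[1] + t * (py - x[1]))
```

For example, `normal_retraction((0.0, 1.5), 0.5)` gives `(0.0, 1.25)`, halfway between the point and its projection `(0.0, 1.0)`. At \(t = 1\) every admissible point lands on the circle, and points already on the circle stay fixed for all \(t\).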

The same idea does not in general work for the union of ellipsoids, or any other sufficiently elongated figures. Figure 3 shows what can go wrong.

However, it turns out that the only places where the normal deformation retraction does not work are the neighbourhoods of tips of some ellipsoids which avoid all other ellipsoids. This section is dedicated to proving the following form of this claim: for all points in at least two ellipsoids the normal deformation retraction works. This means that the line segment between a point \(\texttt {X}\) and \(pr(\texttt {X})\) is contained in the union of ellipsoids, but actually more holds: the line segment is contained already in one of the ellipsoids. More formally, the rest of the section is the proof of the following lemma.

Lemma 4.1

For every  , if there are \(\texttt {S}', \texttt {S}'' \in \mathcal {S}\), \(\texttt {S}' \ne \texttt {S}''\) such that  , then there exists \(\texttt {S}\in \mathcal {S}\) such that  . By convexity the entire line segment between \(\texttt {X}\) and \(pr(\texttt {X})\) is therefore in  .

To prove this, we would in principle need to examine all possible configurations of ellipsoids and a point. However, we can restrict ourselves to a set of cases that includes the “worst case scenarios”.

Let \(\texttt {S}', \texttt {S}'' \in \mathcal {S}\) be two different sample points, and let  (we purposefully take closed ellipsoids here). Denote \(\texttt {Y}\mathrel {\mathop :}=pr(\texttt {X})\). We claim that there is \(\texttt {S}\in \mathcal {S}\) (not necessarily distinct from \(\texttt {S}'\) and \(\texttt {S}''\)) such that  and  . By convexity of ellipsoids, the line segment \(\texttt {X}\texttt {Y}\) is in  ; with the possible exception of the point \(\texttt {X}\), this line segment is in  .

Assuming \(p\in {\mathbb {R}}_{(\lambda , 1)}\), the point \(\texttt {Y}\) is covered by at least one open ellipsoid. Suppose that none of the closed ellipsoids containing \(\texttt {Y}\) in their interior contains \(\texttt {X}\). Let us try to construct a situation where this is most likely to be the case. We will derive a contradiction by showing that even in these “worst case scenarios” this assumption cannot be satisfied.

To determine whether a point \(\texttt {X}\) is in the ellipsoid with center \(\texttt {S}'\), the following two pieces of information suffice: the distance between \(\texttt {X}\) and \(\texttt {S}'\), and the angle between the line segment \(\texttt {X}\texttt {S}'\) and the normal space  . Moreover, membership of \(\texttt {X}\) in the ellipsoid is “monotone” with respect to these two quantities: if a point is in the ellipsoid, it remains so if we decrease its distance to \(\texttt {S}'\) or increase its angle to the normal space.

We will produce a set of configurations which include the extremal points for these two criteria (maximal distance from the ellipsoid center, minimal angle to the normal space). If every such point is still in the ellipsoid, then all possible points are.
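The monotonicity claim can be checked numerically in the planar model. The following Python sketch assumes (for \(\tau = 1\)) that the ellipsoid has tangent semi-axis \(\sqrt{p+p^2}\) and normal semi-axis \(p\), consistent with the parametrization of \(\texttt {X}\) given later in this section. A point at distance \(d\) whose offset makes angle \(\theta \) with the normal space then has tangential part \(d\sin \theta \) and normal part \(d\cos \theta \). The helpers `in_open_ellipsoid` and `check_monotone` are hypothetical names for illustration only.

```python
import math

def in_open_ellipsoid(d, theta, p):
    """Membership of a point at distance d from the centre whose offset makes angle
    theta (radians, 0 <= theta <= pi/2) with the normal space.
    Assumed planar model (tau = 1): tangent semi-axis sqrt(p + p^2), normal semi-axis p."""
    tangent = d * math.sin(theta)   # length of the tangential component
    normal = d * math.cos(theta)    # length of the normal component
    return tangent ** 2 / (p + p * p) + normal ** 2 / (p * p) < 1.0

def check_monotone(p, n=40):
    """Grid check: every grid point inside the ellipsoid stays inside after one grid
    step towards smaller distance d or towards larger angle theta."""
    ds = [1.2 * i / n for i in range(n + 1)]
    thetas = [(math.pi / 2) * j / n for j in range(n + 1)]
    for i, d in enumerate(ds):
        for j, th in enumerate(thetas):
            if in_open_ellipsoid(d, th, p):
                if i > 0 and not in_open_ellipsoid(ds[i - 1], th, p):
                    return False
                if j < n and not in_open_ellipsoid(d, thetas[j + 1], p):
                    return False
    return True
```

Since \(p + p^2 > p^2\), the quantity \(\sin ^2\theta /(p+p^2) + \cos ^2\theta /p^2\) decreases as \(\theta \) grows, which is why the extremal configurations are those with maximal distance and minimal angle to the normal space.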

Consider a planar tangent-normal coordinate system with the origin in \(\texttt {S}'\) which contains \(\texttt {X}\) in the fourth quadrant (nonnegative tangent coordinate, nonpositive normal coordinate). In this coordinate system, the manifold passes horizontally through \(\texttt {S}'\). Consider the part of the manifold with positive tangent coordinate (i.e. the part of the manifold to the right of \(\texttt {S}'\)). The fastest this piece can turn away from \(\texttt {X}\) is in this plane, along the boundary of the upper \(\tau \)-ball associated to \(\texttt {S}'\).Footnote 6 Suppose the manifold continues along this path until some point \(\texttt {X}'\). Now consider a plane containing the points \(\texttt {X}\), \(\texttt {X}'\) and \(\texttt {S}\), where \(\texttt {S}\in \mathcal {S}\) is a sample point whose distance to \(\texttt {Y}\) is bounded by \(\varkappa \), so  . Going from \(\texttt {X}'\) to \(\texttt {S}\), the quickest way to turn the normal direction towards \(\texttt {X}\) is within this plane, along a \(\tau \)-arc. This second plane need not coincide with the first one, but the two intersect along the line containing \(\texttt {X}\) and \(\texttt {X}'\). We can rotate the half-plane containing \(\texttt {S}'\) and the half-plane containing \(\texttt {S}\) about this line so that they form one plane. In the resulting configuration it is equally (un)likely for  to contain \(\texttt {X}\), since we did not change any relative positions in the half-plane containing \(\texttt {X}\), \(\texttt {X}'\) and \(\texttt {S}\). Now, however, \(\texttt {S}'\), \(\texttt {X}\), \(\texttt {X}'\), \(\texttt {Y}\) and \(\texttt {S}\) all lie in the same plane.

We can make the same argument starting from \(\texttt {S}''\) instead of \(\texttt {S}'\), so we conclude the following: if our claim fails for some configuration of \(\texttt {X}\), \(\texttt {Y}\), \(\texttt {S}'\), \(\texttt {S}''\), \(\texttt {S}\), then it fails in a planar case where the part of the manifold connecting points \(\texttt {S}'\) and \(\texttt {S}''\) consists of (at most) three \(\tau \)-arcs, as in Fig. 4.

We started with the assumption  , but we may without loss of generality additionally assume  . Suppose we had a counterexample \(\texttt {X}\) to our claim lying in the interior of all ellipsoids containing \(\texttt {X}\). We could then move it along the ray from \(pr(\texttt {X})\) through \(\texttt {X}\) (i.e. away from \(pr(\texttt {X})\)) until it reaches the first ellipsoid boundary, and declare the center of that ellipsoid to be \(\texttt {S}'\).
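The “push to the first boundary” step can be sketched as a ray–ellipse intersection. This minimal Python sketch works in the plane and relies on several assumptions:

- Each ellipsoid is given by a center, a unit tangent vector, and semi-axes \(\sqrt{p+p^2}\) (tangent) and \(p\) (normal), for \(\tau = 1\).
- Only exits from ellipsoids that already contain \(\texttt {X}\) are tracked.
- \(\texttt {X}\ne \texttt {Y}\).

The helpers `exit_parameter` and `push_away` are hypothetical. Writing \(w = \texttt {X}- \texttt {S}\) and \(q\) for the quadratic form of the ellipsoid, the exit parameter is the unique positive root of \(q(w + s\,d) = 1\). That root exists because \(q(w) < 1\) for an interior point.

```python
import math

def _coords(vec, tangent):
    """Tangential and normal components of vec w.r.t. a unit tangent vector."""
    t = vec[0] * tangent[0] + vec[1] * tangent[1]
    n = -vec[0] * tangent[1] + vec[1] * tangent[0]
    return t, n

def exit_parameter(x, direction, center, tangent, p):
    """Unique s > 0 with x + s*direction on the boundary of the planar ellipsoid
    (tangent semi-axis sqrt(p + p^2), normal semi-axis p); x must lie strictly inside."""
    a2, b2 = p + p * p, p * p
    wt, wn = _coords((x[0] - center[0], x[1] - center[1]), tangent)
    dt, dn = _coords(direction, tangent)
    # q(w + s d) = c + 2 B s + A s^2, so solve A s^2 + 2 B s + (c - 1) = 0.
    A = dt * dt / a2 + dn * dn / b2
    B = wt * dt / a2 + wn * dn / b2
    c = wt * wt / a2 + wn * wn / b2
    if c >= 1.0:
        raise ValueError("x is not in the open ellipsoid")
    return (-B + math.sqrt(B * B - A * (c - 1.0))) / A

def push_away(x, y, ellipsoids):
    """Move x along the ray from y = pr(x) through x until it leaves the first of the
    given ellipsoids (each a tuple (center, unit tangent, p), all containing x).
    Returns the new point and the index of the ellipsoid whose boundary it reached."""
    d = (x[0] - y[0], x[1] - y[1])
    s, k = min((exit_parameter(x, d, c, u, p), i) for i, (c, u, p) in enumerate(ellipsoids))
    return (x[0] + s * d[0], x[1] + s * d[1]), k
```

The center of the returned ellipsoid plays the role of \(\texttt {S}'\) in the argument above.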

Although the reduction of cases we have made is already a vast simplification of the necessary calculations, we find that it is still not enough to make a theoretical derivation of the desired result feasible. Instead, we produce a proof with a computer.

We can reduce the possible configurations to four parameters (see Fig. 5):

- \(\alpha \) denotes the angle measuring the length of the first \(\tau \)-arc,
- \(\sigma \) denotes the angle for the second \(\tau \)-arc until \(\texttt {S}\),
- \(p\) is, as usual, the persistence parameter,
- \(\chi \) determines the position of \(\texttt {X}\) in the boundary  .
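The first of these parameters can be made concrete with a short Python sketch. In the coordinates above, \(\texttt {S}'\) is the origin, the manifold is horizontal there, and the upper \(\tau \)-ball has center \((0, \tau )\). Following its boundary to the right through angle \(\alpha \) (arc length \(\tau \alpha \)) reaches the point \((\tau \sin \alpha , \tau (1-\cos \alpha ))\) with unit tangent \((\cos \alpha , \sin \alpha )\). The function name `first_arc_point` is hypothetical. The second arc and the precise roles of \(\sigma \) and \(\chi \), which are fixed by Fig. 5, are not modelled here.

```python
import math

def first_arc_point(alpha, tau=1.0):
    """Point and unit tangent reached from S' = (0, 0) after following the boundary of
    the upper tau-ball (centre (0, tau)) to the right through angle alpha."""
    point = (tau * math.sin(alpha), tau * (1.0 - math.cos(alpha)))
    tangent = (math.cos(alpha), math.sin(alpha))   # direction of travel along the arc
    return point, tangent
```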

Notice that, unlike Fig. 4, Fig. 5 does not include both ellipsoids containing \(\texttt {X}\) but not \(\texttt {Y}\). To derive Lemma 4.1, we prove with the help of the computer that as soon as \(\texttt {Y}\) is not in the first ellipsoid, both \(\texttt {X}\) and \(\texttt {Y}\) lie in an ellipsoid whose center is within distance \(\varkappa \) from \(\texttt {Y}\). This allows us to restrict ourselves to just the four aforementioned variables, which makes the program run in a reasonable time.

The space of configurations to which we restricted ourselves, which we denote by \(\mathscr {C}\), is compact (we give its precise definition below). For each configuration in \(\mathscr {C}\) we want to verify that \(\texttt {X}\) is in some ellipsoid whose center is within distance \(\varkappa \) from \(\texttt {Y}\) (it then follows automatically that \(\texttt {Y}\) is in this ellipsoid). The boundary of the ellipsoid is a level set of a smooth function. We can compose this function with a suitable linear function so that \(\texttt {X}\) is in the open ellipsoid if and only if the value of the adjusted function is positive. Let us denote this adjusted function by \(v:\mathscr {C}\rightarrow \mathbb {R}\); our claim follows if we show that \(v\) is positive for all configurations in \(\mathscr {C}\).
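As an illustration of such a sign convention, the following Python sketch computes a signed margin for a single planar ellipsoid. It assumes a unit tangent at the center, tangent semi-axis \(\sqrt{p+p^2}\) and normal semi-axis \(p\) (\(\tau = 1\)). The boundary is the level set \(q = 1\) of the ellipsoid's quadratic form \(q\), so \(1 - q\) is positive exactly on the open ellipsoid. The name `ellipsoid_margin` is hypothetical. How \(v\) selects the ellipsoid with center within \(\varkappa \) of \(\texttt {Y}\) for a given configuration is not reproduced here.

```python
def ellipsoid_margin(x, center, tangent, p):
    """1 - q(x - center): positive iff x is in the open planar ellipsoid with unit
    tangent `tangent`, tangent semi-axis sqrt(p + p^2) and normal semi-axis p."""
    dx, dy = x[0] - center[0], x[1] - center[1]
    t = dx * tangent[0] + dy * tangent[1]       # tangential component
    n = -dx * tangent[1] + dy * tangent[0]      # normal component
    return 1.0 - t * t / (p + p * p) - n * n / (p * p)
```

For instance, with `center=(0.0, 0.0)`, `tangent=(1.0, 0.0)` and `p=0.6`, the margin is `1.0` at the center, zero at the normal tip `(0.0, -0.6)` and negative just beyond it.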

Of course, the program cannot calculate the function values for all infinitely many configurations in \(\mathscr {C}\). We note that the (continuous) partial derivatives of \(v\) are bounded on compact \(\mathscr {C}\), hence the function is Lipschitz. If we change the parameters by at most \(\delta \), the function value changes by at most \(C \cdot \delta \) where C is the Lipschitz coefficient. The program calculates the function values in a finite lattice of points, so that each point in \(\mathscr {C}\) is at most a suitable \(\delta \) away from the lattice, and verifies that all these values are larger than \(C \cdot \delta \). This shows that \(v\) is positive on the whole \(\mathscr {C}\).
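The lattice argument can be sketched generically in Python for a box-shaped parameter space. In this sketch \(C\) is a bound on \(|v(x)-v(y)|/\lVert x-y\rVert _2\). With grid spacing \(w_i\) in coordinate \(i\), every point of the box lies within \(\delta = \tfrac{1}{2}\sqrt{\sum _i w_i^2}\) of a lattice point, so \(v > C\delta \) at all lattice points implies \(v > 0\) everywhere. The helper `verify_positive_on_box` is hypothetical, and the actual function \(v\), its Lipschitz coefficient and the parameter ranges used in the paper are not reproduced. A return value of `False` means only that the check was inconclusive, not that \(v\) has a zero.

```python
import itertools
import math

def verify_positive_on_box(v, lower, upper, lipschitz, steps):
    """Certify v > 0 on the box prod_i [lower[i], upper[i]].

    lipschitz: bound C on |v(x) - v(y)| / ||x - y||_2 over the box.
    steps: number of grid intervals per coordinate.
    Returns True only if v exceeds C * delta at every lattice point."""
    dim = len(lower)
    widths = [(upper[i] - lower[i]) / steps[i] for i in range(dim)]
    # Each coordinate of a box point is within half a cell width of the lattice.
    delta = 0.5 * math.sqrt(sum(w * w for w in widths))
    threshold = lipschitz * delta
    axes = [[lower[i] + k * widths[i] for k in range(steps[i] + 1)] for i in range(dim)]
    for point in itertools.product(*axes):
        if v(point) <= threshold:
            return False   # inconclusive: refine the grid or give up
    return True
```

The example \(v(x) = x + 0.01\) on \([0, 1]\) with \(C = 1\) shows the trade-off discussed below. With 2 grid intervals, the threshold \(C\delta = 0.25\) exceeds the minimum \(0.01\), so the check fails. With 200 intervals, \(\delta = 0.0025\) and the check succeeds. Values close to zero force a fine grid.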

Let us now define \(\mathscr {C}\) precisely and then calculate the Lipschitz coefficient of \(v\). We may orient the coordinate system so that the point \(\texttt {X}\) is in the closed fourth quadrant. Since \(\texttt {X}\) lies on the boundary of the ellipsoid centered at \(\texttt {S}'\) (the origin), we have \(\texttt {X}= \big (\sqrt{p+ p^2} \cos (\chi ), -p\, \sin (\chi )\big )\), where \(\chi \) ranges over the interval \({\mathbb {R}}_{[0, \frac{\pi }{2}]}\).
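The following short check confirms that this parametrization traces exactly the part of the ellipse boundary in the closed fourth quadrant. It assumes that the planar ellipse at \(\texttt {S}'\) has tangent semi-axis \(\sqrt{p+p^2}\) and normal semi-axis \(p\), and it writes \(\texttt {X}= (x_1, x_2)\):

```latex
\[
  \frac{x_1^2}{p + p^2} + \frac{x_2^2}{p^2}
  = \frac{(p + p^2)\cos^2\chi}{p + p^2} + \frac{p^2\sin^2\chi}{p^2}
  = \cos^2\chi + \sin^2\chi = 1,
\]
\[
  x_1 = \sqrt{p + p^2}\,\cos\chi \ge 0, \qquad
  x_2 = -p\,\sin\chi \le 0 \qquad \text{for } \chi \in [0, \tfrac{\pi}{2}].
\]
```

Here \(\chi = 0\) gives the tangential tip \((\sqrt{p+p^2}, 0)\) and \(\chi = \frac{\pi }{2}\) gives the normal tip \((0, -p)\).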

Unfortunately, our method does not allow \(p\) to range over the whole interval \({\mathbb {R}}_{(\lambda , 1)}\): if it did, the values of \(v\) would come arbitrarily close to zero, in particular below \(C \cdot \delta \), so the program would not prove anything. We therefore set \(p\in {\mathbb {R}}_{[m_p, M_p]}\), where in our program we chose \(m_p\mathrel {\mathop :}=0.5\) and \(M_p\mathrel {\mathop :}=0.96\). The closer \(M_p\) is to 1, the smaller the sample density our result requires. However, a larger \(M_p\) necessitates a smaller \(\delta \), which increases the computation time. We chose these bounds experimentally, so that the program ran for a few days. With better computers (and more patience) one can improve our result. We note that in our experiments we never came across a counterexample to our claims, even outside of \(\mathscr {C}\), as long as the configuration satisfied the theoretical assumptions from Corollary 3.5. We discuss this further in Sect. 7.