Abstract

We consider a common measurement paradigm, where an unknown subset of an affine space is measured by unknown continuous quasi-convex functions. Given the measurement data, can one determine the dimension of this space? In this paper, we develop a method for inferring the intrinsic dimension of the data from measurements by quasi-convex functions, under natural assumptions. The dimension inference problem depends only on discrete data of the ordering of the measured points of space, induced by the sensor functions. We construct a filtration of Dowker complexes, associated to measurements by quasi-convex functions. Topological features of these complexes are then used to infer the intrinsic dimension. We prove convergence theorems that guarantee obtaining the correct intrinsic dimension in the limit of large data, under natural assumptions. We also illustrate the usability of this method in simulations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Data in many scientific applications are often obtained by “sensing” the phase space via sensors/functions that are convex. Convex sensing is a class of problems of inferring the underlying geometry of data that was sampled via such functions. To be precise, let us recall the following

Definition 1.1

Let \(K\subseteq {\mathbb {R}}^d\) be open convex. A function \(f:K\rightarrow {\mathbb {R}}\) is quasi-convex if each sublevel set \(f^{-1}(-\infty ,\ell )=\{ x \in K \, \vert \, f(x)<\ell \}\) is convex or empty, for all \(\ell \in {\mathbb {R}}\).

The following is perhaps the shortest, albeit naive, formulation of a convex sensing problem. A collection of n points \(X=\{x_a\}_{a=1}^n\) in an open convex region \(K\subset {\mathbb {R}}^d\) is sensed by measuring the values of m sensors, i.e. continuous quasi-convex functions \({\mathcal {F}}=\{f_i:K\rightarrow {\mathbb {R}}\}_{i=1}^m\) . Suppose that one has access only to the \(m\times n\) data matrix \(M = [M_{ia}]\) of sensor values, where

but does not have direct access to the information about the dimension d of the underlying space, the open convex region K, the points \(x_a\in K\), or any further details of the quasi-convex functions \(f_i\). Can one recover any geometric information about the sampled region K? At the very minimum, can one infer the dimension d?

1.1 Motivation from neuroscience

While the convex sensing problems may be not uncommon in many scientific applications, our chief motivation comes from neuroscience. Neurons in the brain regions that represent sensory information often possess receptive fields. A paradigmatic example of a receptive field is that of a hippocampal place cell (O’Keefe and Dostrovsky 1971). Place cells are a class of neurons in rodent hippocampus that act as position sensors. Here the relevant stimulus space \(K\subset {\mathbb {R}}^d\) is the animal’s physical environment (Yartsev and Ulanovsky 2013), with \(d\in \{1,2,3\}\), and \(x\in K \) is the animal’s location in this space. Each neuron is activated with a certain probability that is a continuous function \(f:K\rightarrow {\mathbb {R}}_{\ge 0}\) of the animal’s position in space. In other words, the probability of a single neuron’s activation at a time t is given by \(p(t)=f(x(t))\), where x(t) is the animal’s position.

For each place cell, the function f is called its place field. In neuroscience experiments, place fields are observed to be approximately quasi-concaveFootnote 1; in other words, a place field can be approximated by a quasi-concave function (see Fig. 1). Fore example, in Fig. 1, quasi-concavity is slightly violated in cells 1 and 3, as the superlevel sets bounded by the light blue level are not perfectly convex. Note that the violations of quasi-concavity occur at the levels where the function values are close to zero. Regardless of the physical reasons of why the place fields have this property, such violations are often thought to be a result of the intrinsic noisiness of the neurons as well as the measurement noise. Place fields can be easily computed when both the neuronal activity data and the relevant stimulus space are available. A number of other classes of sensory neurons in the brain also possess quasi-concave receptive fields, that is, each such neuron responds with respect to a quasi-concave probability density function \(f:K\rightarrow {\mathbb {R}}_{\ge 0}\) on the stimulus space.

The activities of three different experimentally recorded place cells in a rat’s hippocampus. The colors represent the probability of each neuron’s firing as a function of the animal’s location; here the redder colors are higher probability and bluer colors are lower probability. In particular, pixels in dark blue represent zero probability

In many situations, unlike place cells, the relevant stimulus space for a given neural population is unknown. This raises a natural question: can one infer the dimension of the stimulus space with quasi-concave receptive fields from neural activity alone? More precisely, given the neural activity of m neurons with quasi-concave receptive fields \(f_i: K \rightarrow {\mathbb {R}} \), can one “sense” the stimulus space by sampling the neuronal activity at n moments of time as \(M_{ia} =f_i(x(t_a))\)? Here one has access only to the measurements \(M_{ia}\), but not the points \(x_a=x(t_a)\) in the domain K. The above natural question motivates our naive formulation of the convex sensing problem, as introduced in the beginning of Sect. 1.

Here we would like to emphasize that, for the case of the experimentally observed place cells (Fig. 1), we have the perfect knowledge of the geometry of the stimulus space K (the rectangular environment), the coordinates of the stimuli \(x_a=x(t_a)\), and the functions \(f_i:K\rightarrow {\mathbb {R}}\). In contrast, in the convex sensing problem, we only assume access to the \(m\times n\) matrix \(M = [M_{ia}] = [f_i(x_a)]\), recording the function values on the stimuli.

1.2 The geometry of convex sensing data

Convex sensing problems possess a natural transformation group. If \(\phi :{\mathbb {R}} \rightarrow {\mathbb {R}} \) is a strictly monotone-increasing function,Footnote 2 then the sub-level sets of the composition \(\phi \circ f \) and f are identical up to an order-preserving re-labeling. Thus, if \(\phi \) is a strictly monotone-increasing function, then f is quasi-convex if and only if \(\phi \circ f \) is quasi-convex. It is easy to show that two sets of real numbers have the same ordering, that is, \(a_1<a_2<\cdots < a_n\) and \(b_1<b_2<\cdots < b_n\) if and only if there exists a strictly monotone function \(\phi :{\mathbb {R}} \rightarrow {\mathbb {R}} \), such that \(b_i=\phi (a_i)\) for all i. It thus follows that it is only the total order of each row in the matrix M in Eq. (1) that constrain the geometric features of the point cloud \(X_n = \{x_1,\ldots , x_n\}\) in a convex sensing problem. This motivates the following definition.

Definition 1.2

Let V be a finite set. A sequence of length k in V is a k-tuple \(s = (v_1,\ldots , v_k)\) of elements in V without repetitions. We denote by \(S_k[V]\) the set of all sequences of length k on V.

If M is an \(m\times n\) real matrix that has distinct entries in each row, then each row yields a sequence of length n. For the sake of an example, consider a real-valued matrix

Since the first row has the ordering \(2.56<3.96<4.19<8.23\), the total order \(<_1\) on \( V= \{1, 2, 3, 4\}\) is \( 3<_1 4<_1 2<_1 1 \). Thus, the order sequence for the first row is \(s_1 = (3,4,2,1)\in S_4[V]\). Similarly, the order sequence for the second row is \(s_2 = (2,1,3,4)\).

It is easy to see that if the sampled points \(X_n\) and the quasi-convex functions \(\{f_i\}_{i\in [m]}\) satisfy some regularity assumptions,Footnote 3 then each row of the data matrix \(M_{ia} = f_i(x_a)\) has no repeated values with probability 1. We denote the set of all such data matrices as

For \(M = [M_{ia}]\in {\mathcal {M}}_{m,n}^o\), one can define a collection S(M) of m maximal-length sequences as \(S(M)=\{s_1,\dots , s_m\}\), where each sequence,Footnote 4\(s_i\in { S_n[n] } \),

is obtained from the total order of the i-th row:

The following observation makes it possible to re-state any convex sensing problem purely in terms of embedding a set of points that satisfy certain convex hull non-containment conditions. Let \({\mathrm{conv}}(x_1,\ldots , x_k)\) denote the convex hull of a collection of points \(x_1,\ldots , x_k\) in \({\mathbb {R}}^d\).

Lemma 1.3

For any collection of n distinct points \( \{x_1,x_2,\dots , x_n\}\subset {\mathbb {R}}^d\), the following statements are equivalent:

-

(i)

There exists a continuous quasi-convex function \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) such that

$$\begin{aligned} f (x_{1})<f (x_{2})<\cdots <f (x_{ n}), \end{aligned}$$(2) -

(ii)

For each \(k = 2,\ldots , n\), \(x_{ k }\notin {\mathrm{conv}}(x_{ 1 },\ldots , x_{ k-1 })\).

Proof

The implication \((i)\!\!\implies \!\! (ii)\) follows from Definition 1.1. To prove that \((ii)\!\!\implies \!\! (i)\), denote \(C_k = {\mathrm{conv}}(x_{ 1 },\ldots , x_{ k })\), \(d_k(x){\mathop {=}\limits ^{\mathrm {def}}}{\mathrm{dist}}(x,C_k)\) for any \(k=1,\dots n\), and define \(f (x) = \sum _{k=1}^n h_k\cdot d_k(x)\), where \(h_1 = 1\) and

Note that (ii) implies that \(d_k(x_{ k+1 })>0\) for all \(k\ge 2\). Recall that, for any convex set \(C\subset {\mathbb {R}}^d\), the function \(x\mapsto {\mathrm{dist}}(x,C) \) is continuous and convex.Footnote 5 Thus, since \(h_k \) are positive, f(x) is a continuous convexFootnote 6 (and thus quasi-convex) function. Moreover, \( f(x_{ 1 }) =0 < {\mathrm{dist}}(x_{ 2 },x_{ 1 })= f (x_{ 2 }) \), and

The last inequality is equivalent to \(f(x_{ k+1 })>f(x_{ k })\). Thus inequalities (2) hold. \(\square \)

Corollary 1.4

A matrix \(M=[M_{ia}]\in {\mathcal {M}}_{m,n}^o\) can be obtained as \(M_{ia} =f_i(x_a)\) from a collection of m continuous quasi-convex functions \( f_i:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) and n points \( x_1, \dots , x_n\in {\mathbb {R}}^d\) if and only if there exist n points \( x_1, \dots , x_n\in {\mathbb {R}}^d\) such that, for each sequence \(s=(a_1, a_2,\dots , a_n)\in S(M)\) and each \(k = 2,\ldots , n\), \(x_{ a_k }\notin {\mathrm{conv}}(x_{ a_1 },\ldots , x_{ a_{k-1} })\).

We recall that a set of points is convexly independent if none of these points lies in the convex hull of the others. An important implication of Corollary 1.4 is the following

Corollary 1.5

For every matrix \(M\in {\mathcal {M}}_{m,n}^o\) and convexly independent points \(x_1,x_2,\dots , x_n \in {\mathbb {R}}^d\), there exist m continuous quasi-convex functions \(f_i:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) such that \(M_{ia} = f_i(x_a)\).

Note that any n distinct points in \({\mathbb {R}}^2\) on the unit circle \(S^1\) are convexly independent. Therefore, by Corollary 1.5, for any matrix \(M\in {\mathcal {M}}_{m,n}^o\), any choice of n distinct points \(X_n\subset S^1\) along with appropriately chosen quasi-convex functions yields a two-dimensional solution to the “naive” convex sensing problem on M.

The situation where all the sampled points are convexly independent is highly improbable, for large n. If one explicitly excludes this situation, then the combinatorics of S(M) constrains the minimal possible dimension d of the geometric realization, as illustrated by the following example.

Example 1

Let \(n>2\), and \(M\in {\mathcal {M}}_{n-1,n}^o\) be a matrix obtained as in Eq. (1) with continuous quasi-convex functions \(f_i\), whose \((n-1)\) sequences \(S(M)=\{s_1,s_2,\dots , s_{n-1}\} \) are of the form

where each of the “\(\cdots \)” in \(s_i\) is an arbitrary permutation of \([n]\setminus \{n, i\}\). Assume that at least one point of \( X_n = \{x_1,\ldots , x_n\}\subset {\mathbb {R}}^d\) is contained in the interior of the convex hull \({\mathrm{conv}}(X_n)\), then the dimension in which M can be obtained as in Corollary 1.4 is \(d= n-2\).

Proof

For any \(a\le n-1\), the point \(x_a\) is ordered the last in the sequence \(s_a=(\cdots , n,a) \); thus, by Lemma 1.3 each such point \(x_a\) cannot be in the interior of the convex hull of the other points, therefore \(x_n\in {\mathrm{conv}}(x_1, \dots , x_{n-1})\). Assume that the embedding dimension is \(d\le n-3\), then by the Caratheodory’s theorem we conclude that there exists \(b\in [n-1]\), such that

However, by conditions in Eq. (3) there exists a continuous quasi-convex function \(f_b\) such that \(f_b(x_a)< f_b(x_{n})\) for all \(a\in [n-1]\setminus \{ b\}\), thus Lemma 1.3 yields a contradiction with (4). Therefore, the matrix is not embeddable in dimension \(d\le n-3\).

To prove that these sequences are embeddable in dimension \(d=n-2\), one can place points \(x_1,\ldots , x_{n-1}\) to the vertices of an \((n-2)\)-simplex in \({\mathbb {R}}^{n-2}\), and place \(x_{n}\) to the barycenter of that simplex. By construction, \(\{x_1,\ldots , x_{n-1}\}\) are convexly independent and we have for following convex hull relations for every \(i<n\): \(x_n \notin {\mathrm{conv}}\left( \left\{ x_1,\ldots , x_{n-1}\right\} \setminus \{x_i\}\right) \) , and \(x_i \notin {\mathrm{conv}}(\{x_1,\ldots , x_{n}\}\setminus \{x_i\})\). Therefore, by Lemma 1.3 there exist quasi-convex continuous functions that realize the sequences in (3). \(\square \)

1.3 Dimension inference in a convex sensing problem

It is clear from Corollary 1.5 and Example 1, that the problem of dimension inference is well-posed only in the presence of some additional assumptions that guarantee convex dependence of the sampled points. Instead of making such an assumption explicit, we take a probabilistic perspective, wherein points are drawn from a probability distribution that is equivalent to the Lebesgue measure. We assume that there are three objects (which are unknown) that underly any “convex sensing” data:

-

(i)

an open convex set \(K\subseteq {\mathbb {R}}^d\),

-

(ii)

m quasi-convex continuous functions \({\mathcal {F}}=\{f_i:K\rightarrow {\mathbb {R}}\}_{i=1}^m\), and

-

(iii)

a probability measure \(P_K\) on K.

In relation to the neuroscience motivation in Sect. 1.1, \(K\subseteq {\mathbb {R}}^d\) is the stimulus space, each function \(f_i\) is the negative of the receptive field of a neuron, and \(P_K\) is the measure that describes the probability distribution of the stimuli. To guarantee that the convex sensing problem is well-posed, we impose the following regularity assumptions.

Definition 1.6

A regular pair is a pair \(({\mathcal {F}}, P_K)\) that satisfies the conditions (i)-(iii) above as well as the following two conditions:

-

(R1)

The probability measure \(P_K\) is equivalent to the Lebesgue measure on K.

-

(R2)

Level sets of all functions in \( {\mathcal {F}}\) are of measure zero, i.e. for every \(i\in [m]\) and \(\ell \in {\mathbb {R}}\), \(P_K(f_i^{-1}(\ell ))=0\).

Definition 1.7

A point cloud \(\{x_1,\ldots , x_n\}\subset K\) is sampled from a regular pair \(({\mathcal {F}}, P_K)\) if it is i.i.d. from \(P_K\). A matrix \(M=[M_{ia}]\in {\mathcal {M}}_{m,n}^o\) is sampled from a regular pair \(({\mathcal {F}}, P_K)\), if for all \(i\in [m]\), and \(a\in [n]\), \(M_{ia} =f_i(x_a), \) where \(\{x_1,\ldots , x_n\}\subset K \) is sampled from \(({\mathcal {F}}, P_K)\).

The assumption (R1) ensures that the domain K is well-sampled, and thus the probability that the points \( x_1,\ldots , x_n\) are convexly independent approaches zero in the limit of large n. The assumption (R2) guarantees, with probability 1, that the data matrix M has no repeated values in each row, and thus is in \( {\mathcal {M}}_{m,n}^o\).

In this paper, we develop a method for estimating the dimension of convex sensing data. Intuitively, such an estimator needs to be consistent, i.e. “behave well” in the limit of large data. In addition to the conditions imposed on a regular pair, other properties of a pair \(({\mathcal {F}}, P_K)\) may be needed, depending on the context. It is therefore natural to define a consistent dimension estimator in relation to a particular class of regular pairs. Since an estimator may rely on different parameters for different regular pairs, we consider a one-parameter family of such estimators, motivating the following definition of consistency.

Definition 1.8

Let \({\mathcal {RP}}\) be a class of regular pairs. For each regular pair \({({\mathcal {F}},P_K)}\in {\mathcal {RP}}\) we denote by \(d {({\mathcal {F}},P_K)}\) the dimension d, where the open convex set \(K\subseteq {\mathbb {R}}^d\) is embedded. A one-parameter family of functions \({{\hat{d}}}(\varepsilon ) :{\mathcal {M}}_{m,n}^o\rightarrow {\mathbb {N}}\) is called an asymptotically consistent estimator in \({\mathcal {RP}}\), if for every regular pair \({({\mathcal {F}},P_K)}\in {\mathcal {RP}}\), there exists \(l>0\), such that for every \(\varepsilon \in (0,l)\) and each sequence of matrices \(M_n \in {\mathcal {M}}_{m,n}^o \), sampled from \({({\mathcal {F}},P_K)}\),

The structure of this paper is as follows. In Sect. 2, we define two multi-dimensional filtrations of simplicial complexes: the empirical Dowker complex \({\mathrm{Dow}}(S(M))\) that can be associated to a data matrix M, and the Dowker complex \({{\mathbb {D}}{\mathrm{ow}}}{({\mathcal {F}},P_K)}\), that can be associated to a regular pair \({({\mathcal {F}},P_K)}\). Using an interleaving distance between multi-filtered complexes, we prove (Theorem 2.10) that for a sequence \(\{ M_n\}\) of data matrices, sampled from a regular pair \({({\mathcal {F}},P_K)}\), \({\mathrm{Dow}}(S(M_n))\rightarrow {{\mathbb {D}}{\mathrm{ow}}}{({\mathcal {F}},P_K)}\) in probability, as \(n\rightarrow \infty \).

In Sect. 3, we develop tools for estimating the dimension of \({({\mathcal {F}},P_K)}\) using persistent homology. We define a set of maximal persistent lengths associated to \({{\mathbb {D}}{\mathrm{ow}}}{({\mathcal {F}},P_K)}\) and prove (Lemma 3.6) that a lower bound of the dimension of \({({\mathcal {F}},P_K)}\) can be derived from these persistent lengths. Next we define another set of maximal persistence lengths from \({\mathrm{Dow}}(S(M_n))\) and prove (Theorem 3.8) that they converge to the maximal persistence lengths associated to \({{\mathbb {D}}{\mathrm{ow}}}{({\mathcal {F}},P_K)}\) in probability, in the limit of large sampling of the data. The rest of Sect. 3 is devoted to two subsampling procedures for different practical situations, as well as simulation results that illustrate that the correct dimension can be inferred with these two methods.

In Sect. 4, we introduce complete regular pairs and prove (Theorem 4.3) that the lower bound in Lemma 3.6 is equal to the dimension \(d {({\mathcal {F}},P_K)}\) for complete regular pairs. This establishes (Theorem 4.4) that the dimension estimator introduced in Sect. 3.3 is an asymptotically consistent estimator in the class of complete regular pairs. In Sect. 5, we define an estimator that can be used to test (Theorem 5.6) whether the data matrix is sampled from a complete regular pair. The Appendix (Sect. 7) contains the proofs of the main theorems as well as some technical supporting lemmas.

2 Empirical Dowker complex and the interleaving convergence theorem

In this section, we define the empirical Dowker complex from the m sequences induced from the rows of the data matrix M and the Dowker complex from the regular pair \({({\mathcal {F}},P_K)}\) and prove that the empirical Dowker complex converges to the Dowker complex in probability. These complexes are both examples of multi-filtered simplicial complexes.

Definition 2.1

Let \(I = \prod _{i\in [m]} I_i\) be an m-orthotope in \({\mathbb {R}}^m\), where each \(I_i\) is an interval (open, closed, half-open, finite, or infinite are all allowed) in \({\mathbb {R}}\). Let \(\le \) be the natural partial order on I induced from \({\mathbb {R}}^m\). A multi-filtered simplicial complex \({\mathcal {D}}\) indexed over I is a collection \(\{{\mathcal {D}}_\alpha \}_{\alpha \in I}\) of simplicial complexes on a fixed finite vertex set, such that, \({\mathcal {D}}_\alpha \subseteq {\mathcal {D}}_\beta \), for all \(\alpha \le \beta \) in I.

We define the empirical Dowker complexes from a collection of sequences of maximal lengthFootnote 7 on the vertex set [n].

Definition 2.2

Let \(S=\{s_1,\ldots , s_m\}\) be a collection of sequences on [n] of length n. Let \(\le _i\) be the total order on [n] induced from \(s_i\); namely, for \(a,b\in [n]\), \(a\le _i b\) if and only if a is before or equal to b in \(s_i\). We define the following multi-filtered simplicial complex, with vertex set [m] and indexed over \([0,1]^m\):

where

and

Here \(\varDelta (\{ \sigma _a\}_{a\in [n]})\) denotes the smallest simplicial complex containing the faces \(\{ \sigma _a\}_{a\in [n]}\). This filtered complex is called the empirical Dowker complex of S.

Example 2.3

For \(n = 3\), \(m = 4\), consider \(S = \{s_1, s_2, s_3, s_4\} = \{(1,2,3), (2,1,3), (3,1,2), (1,3,2)\}\). The four total orders on [3] induced by sequences in S are \(1\le _1 2\le _1 3\), \(2\le _2 1\le _2 3\), \(3\le _3 1\le _3 2\), and \(1\le _4 3\le _4 2\). Let us compute \({\mathrm{Dow}}(S)(t_1, t_2, t_3, t_4)\) for \((t_1, t_2, t_3, t_4) = \left( \frac{1}{2}, \frac{1}{3}, \frac{2}{3}, \frac{1}{4}\right) \).

First, \(n(t_1, t_2, t_3, t_4)= (3/2, 1, 2, 3/4)\), hence, for \(a = 1\),

Hence \(\#(\{b\in [n]:b\le _i a\}) = 1,2,2,1\), for \(i = 1,2,3,4\). Since \(1\le 3/2, 2\nleq 1, 2\le 2\) and \(1\nleq 3/4\), the Eq. (6) yields \( \sigma _1 = \{1,3\}\).

Similarly, for \(\sigma _2\), we compute \(\#(\{b\in [n]:b\le _i 2\}) = 2,1,3,3\), for \(i = 1,2,3,4\); since \(2\nleq 3/2, 1\le 1, 3\nleq 2\), and \(3\nleq 3/4\), \(\sigma _2 = \{2\}\). Finally, for \(\sigma _3\), we compute \(\#(\{b\in [n]:b\le _i 3\}) = 3,3,1,2\), for \(i = 1,2,3,4\); since \(3\nleq 3/2, 3\nleq 1,1\le 2\), and \(2\nleq 3/4\), \(\sigma _3 = \{3\}\). Therefore, we conclude that

i.e. the simplicial complex with vertices \([4] = \{1,2,3,4\}\) and facets \(\{1,3\}\) and \(\{2\}\).

Recall from Sect. 1.2 that the relevant geometric information of the \(m\times n\) data matrix \(M\in {\mathcal {M}}_{m,n}^o\) is contained in the collection of m sequences \(S(M) = \{s_1,\ldots , s_m\}\), where \(s_i\subset S_n[n]\) is of length n and records the total order induced by the i-th row of M. Therefore, we can consider the empirical Dowker complex \({\mathrm{Dow}}(S(M))\) derived from the data matrix M.

Dowker complexes first appeared in the paper (Dowker 1952) by C.H. Dowker, defined as a simplicial complex induced by a relation between two sets. For modern treatments and generalizations, we refer the readers to Section 4.9 of Ghrist (2014) and Section 4.2.3 of Chazal et al. (2014), Chowdhury and Mémoli (2018), and Brun and Blaser (2019). In particular, our definition of empirical Dowker complex is a multi-parameter generalization of the Dowker complex defined in Chazal et al. (2014). Specifically, the one-dimensional filtration of simplicial complex (indexed over t) \({\mathrm{Dow}}(S(M))(n\cdot t,\ldots , n\cdot t)\) is equal to the Dowker complex defined in Chazal et al. (2014).

Recall that, for a collection \({\mathcal {A}} = \{A_i\}_{i\in [m]}\) of sets, the nerve of \({\mathcal {A}}\), denoted \({\mathrm{nerve}}({\mathcal {A}})\), is the simplicial complex on the vertex set [m] defined as

The following lemma is immediate from Definition 2.2.

Lemma 2.4

Let \(S=\{s_1,\ldots , s_m\}\) be a collection of sequences on [n] of length n. For each \(i\in [m]\) and \(t\in {\mathbb {R}}\), consider

where \(\le _i\) is the total order on [n] induced by \(s_i\). Then

Next we connect the combinatorics of \({\mathrm{Dow}}(S(M))\) to the geometry. From Lemma 2.4, we know that \({\mathrm{Dow}}(S(M))\) is the nerve of \(\{A^{(i)}(t_i)\}_{i\in [m]}\). To define an analogue of \({\mathrm{Dow}}(S(M))\) from the regular pair \(({\mathcal {F}},P_K)\), we use the following

Lemma 2.5

Assume that \(P_K\) is a probability measure on a convex open set K that is equivalent to the Lebesgue measure. Let \(f:K\rightarrow {\mathbb {R}}\) be a continuous function with \(P_K(f^{-1}(\ell )) = 0\) for all \(\ell \in {\mathbb {R}}\). Then there exists a unique strictly increasing continuous function \(\lambda :(0,1)\rightarrow {\mathbb {R}}\) such that, for all \(t\in (0,1)\),

Proof

Since K is path-connected, by intermediate value theorem, f(K) is an interval in \({\mathbb {R}}\). Define a function \(p_f:f(K)\rightarrow [0,1]\) by \(p_f(\ell ) {\mathop {=}\limits ^{\mathrm {def}}}P_K(f^{-1}(-\infty ,\ell ))\). Rewriting Eq. (7) as \(p_f(\lambda (t)) = t\), we note that \(\lambda (t)\) (if exists) is the inverse of \(p_f\), proving uniqueness of \(\lambda (t)\). For the existence and continuity of \(\lambda (t)\), it suffices to prove \(p_f\) is continuous and strictly increasing.

To prove \(p_f\) is continuous, we prove \(p_f\) is continuous from the right and from the left. Let \(\ell \in f(K)\). For \(\ell _n\nearrow \ell \) in f(K),Footnote 8 from definition, \(f^{-1}(-\infty ,\ell _n)\nearrow f^{-1}(-\infty ,\ell )\).Footnote 9 Since \(P_K\) is a finite measure, taking \(P_K\) on both sides, we obtain \(p_f(\ell _n)\nearrow p_f(\ell )\). Thus \(p_f\) is continuous from the left. On the other hand, for \(\ell _n\searrow \ell \) in f(K), from definition,

Thus \(p_f(\ell _n)\searrow P_K(\bigcap _{n = 1}^\infty f^{-1}(-\infty ,\ell _n))=P_K(f^{-1}(-\infty ,\ell ))+P_K(f^{-1}(\ell )) = p_f(\ell )+0 = p_f(\ell )\) and \(p_f\) is continuous from the right. Therefore, \(p_f\) is a continuous function.

Now we turn to prove \(p_f\) is strictly increasing. For \(\ell _1<\ell _2\) in f(K), we need to prove \(p_f(\ell _1)<p_f(\ell _2)\). Let \(U_1 = f^{-1}(-\infty ,\ell _1)\) and \(U_2 = f^{-1}(-\infty ,\ell _2)\), which are open convex sets with \(U_1\subseteq U_2\). Since f(K) is an interval, for any \(\ell \in (\ell _1,\ell _2)\), there exists \(x\in K\) with \(f(x) = \ell \). Thus \(U_1\ne U_2\). Note that \(U_2\nsubseteq cl(U_1)\); otherwise, \(U_1\subseteq U_2 = int(U_2)\subseteq int(cl(U_1))=U_1\) will imply \(U_1 = U_2\), where the last equality follows from openness and convexity of \(U_1\). Thus, there exists \(x_0\in U_2\setminus cl(U_1)\). Choose \(\epsilon >0\) such that \(B(x_0,\epsilon )\subseteq U_2\) but \(B(x_0,\epsilon )\cap cl(U_1) = \varnothing \). Then \(P_K(U_2)\ge P_K(U_1)+P_K(B(x_0,\epsilon ))>P_K(U_1)\); equivalently, \(p_f(\ell _2)>p_f(\ell _1)\). Hence, \(p_f\) is strictly increasing. \(\square \)

For a regular pair \({({\mathcal {F}},P_K)}=(\{f_i:K\rightarrow {\mathbb {R}}\}_{i\in [m]},P_K)\), Lemma 2.5 guarantees that for each \(i\in [m]\), there exists a unique strictly increasing continuous function \(\lambda _i:(0,1)\rightarrow {\mathbb {R}}\) that satisfies (7). The following definition provides a continuous analogue of \(A^{(i)}(t)\).

Definition 2.6

Let \(({\mathcal {F}},P_K)=(\{f_i\}_{i\in [m]},P_K)\) be a regular pair. For each \(i\in [m]\) and \(t\in (0,1)\), define

where \(\lambda _i :(0,1)\rightarrow {\mathbb {R}}\) is the unique function that satisfies (7). For convenience, we also define \(K^{(i)}(0){\mathop {=}\limits ^{\mathrm {def}}}\varnothing \) and \(K^{(i)}(1){\mathop {=}\limits ^{\mathrm {def}}}K\).

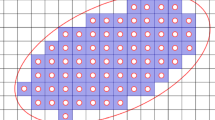

In other words, \(K^{(i)}(t)\) are sublevel sets of \(f_i\) rescaled with respect to the measure \(P_K\) (see Fig. 2). Analogous to Lemma 2.4, we use \(K^{(i)}(t)\) to define the continuous version of Dowker complex.

Definition 2.7

Let \(({\mathcal {F}}, P_K)\) be a regular pair. Define a multi-filtered complex \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}}, P_K)\), indexed over \([0,1]^m\), by

This multi-filtered complex is called the Dowker complex induced from \(({\mathcal {F}}, P_K)\).

The complex \({\mathrm{Dow}}(S(M))\) is what we can obtain from the data matrix M, but it does not capture the whole geometric information of \(({\mathcal {F}},P_K)\). On the other hand, \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}}, P_K)\) reflects the whole geometric information but is not directly computable. Since \(A^{(i)}(t_i)\) is an approximation of \(K^{(i)}(t_i)\), one might expect \({\mathrm{Dow}}(S(M))\) approximates \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}}, P_K)\). To compare theese two complexes, we need the concept of the interleaving distance.

Definition 2.8

For a multi-filtered complex \({\mathcal {K}}\) indexed over \({\mathbb {R}}^m\) and \(\epsilon >0\), the \(\epsilon \)-shift of \({\mathcal {K}}\), denoted \({\mathcal {K}}+\epsilon \), is the multi-filtered complex defined by

For two multi-filtered complexes \({\mathcal {K}}\) and \({\mathcal {L}}\) indexed over \({\mathbb {R}}^m\), the simplicial interleaving distance between \({\mathcal {K}}\) and \({\mathcal {L}}\) is defined as

Note that this interleaving distance is between multi-filtered simplicial complexes (see, e.g. Lesnick 2015, for the standard definition of interleaving distance between multi-dimensional persistence modules). Similar to the standard interleaving distance, the simplicial interleaving distance \(d_{\mathrm{INT}}\) defined here is also a pseudo-metric; namely, \(d_{\mathrm{INT}}({\mathcal {K}},{\mathcal {L}}) = 0\) does not imply \({\mathcal {K}}={\mathcal {L}}\).

The definition of simplicial interleaving distance involves a shift of indices and that is why the two multi-filtered complexes to be compared are required to be indexed over the whole \({\mathbb {R}}^m\). Since both \({\mathrm{Dow}}(S(M))\) and \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}}, P_K)\) are indexed only over \([0,1]^m\), we need to extend their indexing domain to \({\mathbb {R}}^m\). This motivates the definition below.

Definition 2.9

For \({\mathcal {D}}={\mathrm{Dow}}(S(M))\) or \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}}, P_K)\) and \((t_1,\ldots , t_m)\in {\mathbb {R}}^m\), define

where \(\theta :{\mathbb {R}}\rightarrow [0,1]\) is defined by \(\theta (t) = t\), if \(0\le t\le 1\); \(\theta (t) =0\), if \(t<0\); \(\theta (t) =1\), if \(t>1\).

With the above notations, we state one of our main theorems.

Theorem 2.10

(Interleaving Convergence Theorem) Let \(({\mathcal {F}}, P_K)\) be a regular pair and \(M_n\) be an \(m\times n\) data matrix sampled from \({({\mathcal {F}},P_K)}\). Then the simplicial interleaving distance between \({\mathrm{Dow}}(S(M_n))\) and \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}}, P_K)\) converges to 0 in probability as \(n\rightarrow \infty \); namely, for all \(\epsilon >0\),

The proof of Theorem 2.10 is given in Section 6.2. In Secti. 3, we use Theorem 2.10 to infer a lower bound for the dimension of \({({\mathcal {F}},P_K)}\).

3 Estimating the stimulus space dimension

In this section we define the topological features of \({\mathrm{Dow}}(S(M))\) that we use to infer a lower bound for the intrinsic dimension \(d{({\mathcal {F}},P_K)}\) of a regular pair \({({\mathcal {F}},P_K)}\). Specifically, in Sect. 3.1, we review the basics of persistence modules and persistence homology and define the maximal persistence lengths. In Sect. 3.2 we define the features of \({{\mathbb {D}}{\mathrm{ow}}}{({\mathcal {F}},P_K)}\) that can be used to infer the embedding dimension. Lemma 3.6 introduces a lower bound \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}\) of the intrinsic dimension \(d{({\mathcal {F}},P_K)}\) that is defined in terms of these features. In Sect. 3.3, we define the analogous features of \({\mathrm{Dow}}(S(M))\) and prove their convergence to the respective features of \({{\mathbb {D}}{\mathrm{ow}}}{({\mathcal {F}},P_K)}\) in the limit of large sampling (Theorem 3.8). Section 3.4 provides explicit algorithms for the computation of the features of \({\mathrm{Dow}}(S(M))\) that allow to infer the dimension due to Theorem 3.8. Lastly, Sect. 3.5 discusses the computational aspects of the method and provides two practical approaches in their respective scenarios. We also illustrate the usability of these methods for the dimension inference using synthetic data.

3.1 Persistence modules and maximal persistence length

First we recall the definition of persistence modules, persistence intervals and persistence diagrams; for more details see, e.g. Chapter 1 of Oudot (2015). Then we define the maximal persistence length for a 1-dimensional filtration of simplicial complexes. We consider a ground field \({\mathbb {F}}\) (which is normally taken to be \({\mathbb {F}}_2\) for computational reasons). All the results in this paper do not depend on the choice of the field.

Definition 3.1

A persistence module \({\mathcal {M}}\) indexed over an interval [0, T] is a collection \(\{{\mathcal {M}}_t\}_{t\in [0,T]}\) of vector spaces over \({\mathbb {F}}\) along with linear maps \(\phi _s^t:{\mathcal {M}}_s\rightarrow {\mathcal {M}}_t\) for every \(s\le t\) in [0, T] such that \(\phi _s^u = \phi _t^u\circ \phi _s^t\), and \(\phi _t^t ={\text {id}}_{{\mathcal {M}}_{\mathrm{t}}}\) for all \(s\le t\le u\) in [0, T].

The direct sum of two persistence modules \({\mathcal {M}}\) and \({\mathcal {N}}\) over [0, T] is the persistence module \(({\mathcal {M}}\oplus {\mathcal {N}})_t{\mathop {=}\limits ^{\mathrm {def}}}{\mathcal {M}}_t\oplus {\mathcal {N}}_t\). For an interval \(J\subseteq [0,T]\), the interval module \({\mathbb {I}}_J = \{({\mathbb {I}}_J)_t\} \) is defined as

along with the identity maps \(\phi _s^t: {\mathbb {F}}\rightarrow {\mathbb {F}}\) for every \(s\le t\) in J and zero maps \(\phi _s^t=0 \) for all other \(s< t\) in [0, T].

A well-known structural characterization of a persistence module is via its persistence intervals. The following is a structural theorem that characterizes persistence modules and guarantees the existence and uniqueness of persistence intervals (Crawley-Boevey 2015) (see, also Oudot 2015 and references therein).

Theorem 3.2

Let \({\mathcal {M}} = \{{\mathcal {K}}_t\}_{t\in [0,T]}\) be a persistence module over [0, T]. If, for each \(t\in [0,T]\), \({\mathcal {M}}_t\) is a finite dimensional vector space over \({\mathbb {F}}\), then \({\mathcal {M}}\) can be decomposed as a direct sum of interval modules; namely,

where \(\{J\}\) is a collection of some intervals (open, closed, or half-open) in [0, T]. The decomposition is unique in the sense that, for every such decomposition, the collection of intervals is the same.

Rigorously speaking, one should distinguish open, closed, and half-open intervals. In what follows, we only use the lengths of the persistence intervals, and hence those distinctions will not matter. An important class of persistence modules is obtained from a 1-dimensional filtration of simplicial complexes by applying the homology functors \(H_k(\ \cdot \ ;{\mathbb {F}})\), \(k = 0, 1, 2, ...\). Specifically, for a 1-dimensional filtration of simplicial complexes \({\mathcal {K}} = \{{\mathcal {K}}_t\}_{t\in [0,T]}\) and a fixed nonnegative integer k, we have the persistence module \(H_k({\mathcal {K}};{\mathbb {F}}) = \{H_k({\mathcal {K}}_t;{\mathbb {F}})\}_{t\in [0,T]}\) along with the linear maps \((i_s^t)_*:H_k({\mathcal {K}}_s;{\mathbb {F}})\rightarrow H_k({\mathcal {K}}_t;{\mathbb {F}})\) for every \(s\le t\) in [0, T], where \(i_s^t\) is the inclusion map from \({\mathcal {K}}_s\) to \({\mathcal {K}}_t\). Since \(H_k(\ \cdot \ ;{\mathbb {F}})\) is a covariant functor, the equality \((i_t^u)_*\circ (i_s^t)_* = (i_s^u)_*\) holds for every \(s\le t\le u\) in [0, T].

For each k, we may use the persistence diagram of \(H_k({\mathcal {K}};{\mathbb {F}})\) for analysis. For our purpose, instead of the whole diagram, we summarize the diagram by only looking at the longest length among all persistence intervals, which we formally define below:

Definition 3.3

Let \({\mathcal {K}} = \{{\mathcal {K}}_t\}_{t\in [0,T]}\) be a 1-dimensional filtration of simplicial complexes. For each nonnegative integer k, we define

and call it the maximal persistence length in dimension \({\varvec{k}}\).

This definition is similar to the one used in Section 3 of Bobrowski et al. (2015).Footnote 10 Normally, the length of a persistence interval in \(H_k({\mathcal {K}};{\mathbb {F}})\) is viewed as its significance in dimension k. Therefore, \(l_{\max }(k,{\mathcal {K}})\), the maximum among such interval lengths, is viewed as the significance of \({\mathcal {K}}\) in dimension k.

3.2 The length \(L_k{({\mathcal {F}},P_K)}\) and its relation to the dimension of \({({\mathcal {F}},P_K)}\)

In this section, from the regular pair \({({\mathcal {F}},P_K)}\), we define quantities that we use to bound the dimension \(d{({\mathcal {F}},P_K)}\) from below. We start with the following notation.

Definition 3.4

Given \(({\mathcal {F}}, P_K)\), where \({\mathcal {F}} = \{f_i:K\rightarrow {\mathbb {R}}\}_{i\in [m]}\) is a collection of quasi-convex functions defined on a convex open set K and \(P_K\) is a probability measure on K. For \(x\in K\), we define

The function \(T_i(x)\) may be regarded as the \(P_K\)-rescaled version of \(f_i\) (see Fig. 3 for an illustration). Now we define a one dimensional filtration of simplicial complexes \({\mathcal {K}}_x\) that are used to infer a lower bound of the dimension \(d{({\mathcal {F}},P_K)}\). The geometry underlying the definition is depicted in Fig. 4.

From left to right, the filtration \({\mathcal {K}}_x(t)\), as the nerve of the sublevel sets of \(\{f_i\}_{i\in [m]}\), starts at \(t=0\) as the empty simplicial complex and increases as t goes up to \(t_{\max }(x)\), where the sublevel sets of \(\{f_i\}_{i\in [m]}\) touch the point x on their boundaries. The formal formulation of the process is in (10) of Definition 3.5

Throughout Sect. 3, we fix an arbitrary coefficient field \({\mathbb {F}}\) when taking homology; namely, for a filtration of simplicial complexes \({\mathcal {K}}\) and a nonnegative integer k, \(H_k({\mathcal {K}}){\mathop {=}\limits ^{\mathrm {def}}}H_k({\mathcal {K}};{\mathbb {F}})\).

Definition 3.5

Let \({({\mathcal {F}},P_K)}\) be a regular pair, where \({\mathcal {F}} = \{f_i:K\rightarrow {\mathbb {R}}\}_{i\in [m]}\). For \(x\in K\), let

Define a one dimensional filtered complex \({\mathcal {K}}_x\), indexed over \(t\in [0,t_{\max }(x)]\), by

For every nonnegative integer k, we define

As illustrated in Fig. 4, if x is “central” in some appropriate sense (see Definition 4.1 in Sect. 4), a \((d{({\mathcal {F}},P_K)}-1)\)-dimensional sphere is expected to show up and persist for a significant amount of time. In general, \(L_k{({\mathcal {F}},P_K)}\) can at least be used to derive a lower bound for the dimension of the regular pair \(({\mathcal {F}}, P_K)\) due to the following lemma.

Lemma 3.6

Let \({({\mathcal {F}},P_K)}\) be a regular pair. Then, for \(k\ge d{({\mathcal {F}},P_K)}\), \(L_k({\mathcal {F}},P_K)=0\). In particular,

Proof

For notational simplicity, in this proof, we denote \(d_{{\mathrm{low}}}=d_{{\mathrm{low}}}{({\mathcal {F}},P_K)}\) and \(d = d{({\mathcal {F}},P_K)}\). Recall that \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}},P_K)(t_1,\ldots , t_m) = {\mathrm{nerve}}\left( \{f_i^{-1}(-\infty ,\lambda _i(t_i))\}_{i=1}^m\right) \). Since the functions \(f_i\) are quasi-convex, intersections of convex sets are convex and convex sets are contractible, the set \(\{f_i^{-1}(-\infty ,\lambda _i(t_i))\}_{i=1}^m\) is a good cover. Thus, by nerve lemma (see, e.g., Theorem 10.7 in Björner 1995 or Corollary 4G.3 in Hatcher 2002), we have the following homotopy equivalence:

Notice that \(\bigcup _{i\in [m]} f_i^{-1}(-\infty ,\lambda _i(t_i))\) is open in \({\mathbb {R}}^d\) and it is well-known that, for every open set \(U\subseteq {\mathbb {R}}^d\), \(H_k(U) = 0\), for all \(k\ge d\) (see, e.g., Proposition 3.29 in Hatcher 2002). Thus, for \(k\ge d\), \(H_k\left( \bigcup _{i\in [m]} f_i^{-1}(-\infty ,\lambda _i(t_i))\right) = 0\). Combining with (13), we obtain, for \(k\ge d\) and \((t_1,\ldots , t_m)\in [0,1]^m\), \(H_k({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}},P_K)(t_1,\ldots , t_m)) = 0\). Therefore, for \(k\ge d\), \(l_{\max }(k,{\mathcal {K}}_x)=0\), for all \(x\in K\), and \(L_k{({\mathcal {F}},P_K)}=0\). Thus \(d_{{\mathrm{low}}}-1 = \max \{k:L_k({\mathcal {F}},P_K)> 0\}\le d-1\) or, equivalently, \(d_{{\mathrm{low}}}\le d\). \(\square \)

\(L_k({\mathcal {F}},P_K)\) is defined with respect to a regular pair \(({\mathcal {F}}, P_K)\) and thus is not directly computable from discrete data. In Sect. 3.3, we follow an analogous approach in defining \(L_k{({\mathcal {F}},P_K)}\) to define \(L_k(M)\) and prove that \(L_k(M)\) converges to \(L_k{({\mathcal {F}},P_K)}\).

3.3 The length \(L_k(M)\) and its convergence to \(L_k{({\mathcal {F}},P_K)}\)

In Theorem 2.10, we see that, for the data matrix M, \({\mathrm{Dow}}(S(M))\) approximates \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}}, P_K)\) with high probability. Thus, it is natural to use \({\mathrm{Dow}}(S(M))\) to define an analogue \(L_k(M)\) of \(L_k{({\mathcal {F}},P_K)}\).

Definition 3.7

Let \(M\in {\mathcal {M}}_{m,n}^o\) and \(S(M)=\{s_1,\ldots , s_m\}\) be the collection of m sequences induced from the rows of M (\(s_i\) corresponds to row i). For \(a\in [n]\) and \(i\in [m]\), denote

For \(a\in [n]\), which corresponds to the a-th column of the data matrix M, let

Define a one dimensional filtered complex \(\hat{{\mathcal {K}}}_{a}\), indexed over \(t\in [0,{\hat{t}}_{\max }(a)]\), by

See Definition 2.2 for the definition of \({\mathrm{Dow}}(S(M))\). For every nonneative integer k, we define

Since \({\mathrm{Dow}}(S(M))\) approximates \({{\mathbb {D}}{\mathrm{ow}}}({\mathcal {F}},P_K)\), intuitively, \(\hat{{\mathcal {K}}}_a(t)\) approximates \({\mathcal {K}}_{x_a}(t)\) and \(L_k(M)\) approximates \(L_k({\mathcal {F}},P_K)\). With the help of Theorem 2.10 and the Isometry Theorem in topological data analysis (see e.g. Theorem 7.16 in Sect. 7 of Oudot 2015), these intuitions are justified as follows:

Theorem 3.8

Let \(({\mathcal {F}}, P_K)\) be a regular pair. Assume that K is bounded and each \(f_i\in {\mathcal {F}}\) can be continuously extended to the closure \({\bar{K}}\). Let \(M_n\) be an \(m\times n\) matrix sampled from \({({\mathcal {F}},P_K)}\). Then, for all \(k\in \{0\}\cup {\mathbb {N}}\), as \(n\rightarrow \infty \), \(L_k(M_n)\) converges to \(L_k({\mathcal {F}},P_K)\) in probability; namely, for all \(\epsilon >0\),

Moreover, the rate of convergence is independent of k.Footnote 11

The proof of Theorem 3.8 is given in Sect. 7.2. According to Theorem 3.8, for each non-negative integer k, \(L_k(M_n)\) are consistent estimators of \(L_k({\mathcal {F}},P_K)\) and they converge uniformly in probability. Thus, by Lemma 3.6 and Theorem 3.8, we can estimate a lower bound for the dimension of \({({\mathcal {F}},P_K)}\) from the data matrix M, via looking at the values of \(L_k(M_n)\). Formally, we can define the following estimator of \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}\).

Definition 3.9

For \(\epsilon >0\) and \(M\in {\mathcal {M}}_{m,n}^o\), we define

As a consequence of Lemma 3.6 and Theorem 3.8, it is immediate that \({\hat{d}}_{{\mathrm{low}}}(M_n,\epsilon )\) is a consistent estimator, for appropriately chosen \(\epsilon \).

Corollary 3.10

Let \({({\mathcal {F}},P_K)}\) be a regular pair satisfying the conditions in Theorem 3.8 and \(M_n\in {\mathcal {M}}_{m,n}^o\) be sampled from \({({\mathcal {F}},P_K)}\). Denote \(d_{\mathrm{low}}= d_{\mathrm{low}}{({\mathcal {F}},P_K)}\). Then, for all \(0<\epsilon <L_{d_{{\mathrm{low}}}-1}{({\mathcal {F}},P_K)}\),

Proof

For notational simplicity, in this proof, we denote \(d = d{({\mathcal {F}},P_K)}\). By Lemma 3.6 and Theorem 3.8, for \(k\ge d\), \(L_k(M_n)\rightarrow 0\) in probability and \(L_{d_{\mathrm{low}}-1}(M_n)\rightarrow L_{d_{\mathrm{low}}-1}{({\mathcal {F}},P_K)}>0\) in probability, as \(n\rightarrow \infty \), with the same rate of convergence. Since \(0<\epsilon <L_{d_{{\mathrm{low}}}-1}{({\mathcal {F}},P_K)}\), as \(n\rightarrow \infty \), w.h.p., \(L_{d_{\mathrm{low}}-1}(M_n)>\epsilon \) and \(L_k(M_n)<\epsilon \). Therefore, w.h.p., \({\hat{d}}_{{\mathrm{low}}}(M_n,\epsilon ) = d_{{\mathrm{low}}}\), and the result follows. \(\square \)

From Corollary 3.10, \({\hat{d}}_{{\mathrm{low}}}(M_n,\epsilon )\) can be used as a consistent estimator of \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}\). However, we need to know how to choose an appropriate \(\epsilon \) for \({\hat{d}}_{{\mathrm{low}}}(M_n,\epsilon )\), and hence estimation of \(L_k{({\mathcal {F}},P_K)}\) is still necessary. Therefore, in practice, we suggest one use a statistical approach estimating \(L_k{({\mathcal {F}},P_K)}\) to infer \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}\), instead of using \({\hat{d}}_{{\mathrm{low}}}(M_n,\epsilon )\) directly. The details are discussed in Sect. 3.5.

3.4 Algorithm for \(\hat{{\mathcal {K}}}_a\) and \(L_k(M)\)

For ease of implementation, we combine Definitions 3.7 and 2.2 and summarize them as algorithms for the computaion of \(\hat{{\mathcal {K}}}_a\) and \(L_k(M)\). Algorithm 1 is for \(\hat{{\mathcal {K}}}_a\).

The next algorithm, Algorithm 2, is for computing \(L_k(M)\). Note that, in the algorithm, PersistenceIntervals is a function with two inputs, a filtration of simplicial complexes and a positive integer that is set to limit the dimension of the computation of persistent homology to avoid possible intractable computational complexities. As the name suggests, the output of PersistenceIntervals is the persistence intervals of the first input in dimensions less than or equal to the second input.

3.5 How to use the algorithms in different situations

The worst case complexity of a standard algorithm for computing the persistent homology of a 1-dimensional filtration of simplicial complexes is cubical in the number of simplices (see, e.g. Section 5.3.1 in Otter et al. 2017 and references therein). Since each \(\hat{{\mathcal {K}}}_a\) starts from the empty simplicial complex and ends at the full simplex \(\varDelta ^{m-1}\), we would need to go through all faces of \(\varDelta ^{m-1}\). However, since we limit the computation only in dimension \(0, 1,\ldots , d_{{\mathrm{up}}}\), where \(d_{{\mathrm{up}}}\le m-1\) is pre-set, we only need to consider the \((\min \{d_{{\mathrm{up}}}+1, m-1\})\)-skeleton of \(\varDelta ^{m-1}\). Therefore, for our algorithm, the number of faces in the 1-dimensional filtration is

which is \(O(m^{d_{{\mathrm{up}}}+2})\). Since there are n such \(a\in [n]\), the worst case complexity of computing \(\{L_k(M)\}_{k = 0}^{d_{\mathrm{up}}}\) is \(O(n\cdot m^{3(d_{{\mathrm{up}}}+2)}) = O(n\cdot m^{3d_{{\mathrm{up}}}+6})\), which is of degree \(3d_{{\mathrm{up}}}+6\) in m but only linear in n.

Since the algorithm is linear in n, even in the case when n is large, as long as m is not too large, the algorithm is still tractable. Moreover, to use the full power of Theorem 3.8, we would want n to be large. In the case when n is large, we may subsample the points (i.e. the columns) to see how large the variance of \(L_k(M_n)\) is; this is called bootstrap in statistics. Moreover, we can implement the subsampling for different numbers of columns and get the convergence trend.

On the other hand, to infer the dimension \(d{({\mathcal {F}},P_K)}\), we will need at least \(m\ge d{({\mathcal {F}},P_K)}+1\). Thus, we want m to be not too small. However, since the computational complexity of \(L_k(M_n)\) goes up in high degree order with respect to m, we cannot have m being too large. In the case when m is too large, we can overcome the computational difficulty by subsampling the functions (i.e. the rows); namely, pick randomly \(m_s\), say \(m_s = 10\), functions, which correspond to their respective \(m_s\) rows of \(M_n\) and compute the \(L_k\) of the submatrix thus formed; repeat this process many times and see how the result is distributed.

We elaborate on these two methods (subsampling points or functions) in the following two subsections. We also implement the methods for estimating the embedding dimension in their appropriate situations, plot the results and give some principles for decision making (i.e. deciding, given the the plot and k, whether we accept \(L_k{({\mathcal {F}},P_K)}>0\) or not).

3.5.1 Subsample points when n is sufficiently large

In the case when n is sufficiently large, say \(n\ge 300\), we are allowed to subsample, say \(n_s\), points (i.e. columns of \(M_n\)) and obtain the variance information. Moreover, letting \(n_s\) go up, we can obtain further how the trend of convergence goes, which, by Theorem 3.8, should converge to the true \(L_k{({\mathcal {F}},P_K)}\). The technique of subsampling is called bootstrap in statistics.

Figure 5 is the boxplotsFootnote 12 of \(L_k(M_{n_s})\) obtained by implementing this idea under different settings of (d, m, n), where \(d=d{({\mathcal {F}},P_K)}\) is the dimension of \({({\mathcal {F}},P_K)}\), m is the number of functions and n is the number of data points. Here, we choose (m, n) to be (10, 350) throughout, where m is moderate for computation and n is sufficiently large for subsampling. Subsampling is repeated 100 times for each boxplot. To compare with the result of a purely random matrix, we also generate a \(10\times 350\) matrix whose entries are iid from \(\mathrm {Unif}(0,1)\) and compute its \(L_k\)’s. The details of how the boxplots are generated are in the caption of Fig. 5.

The four panels are boxplots of \(L_k\) obtained from subsampling the points. Throughout the panels, \(m = 10\) and \(n = 350\), where m is the number of functions and n is the number of total sample poionts. The panels correspond to a \(d=3\), b \(d=4\), c \(d=5\), where \(d=d{({\mathcal {F}},P_K)}\) is the dimension of \({({\mathcal {F}},P_K)}\). The functions are chosen to be random quadratic functions defined on the unit d-ball in \({\mathbb {R}}^d\). Panel (d) is obtained by computing the \(L_k\)’s of an \(m\times n\) matrix \(M_n\) with entries i.i.d. from Unif(0,1), which is treated as a purely random matrix and whose main purpose is for comparison with other panels. Each figure in each panel is generated by subsampling \(n_s = 50, 150, 200\) columns of \(M_n\), repeated 100 times. By the decision principle, every figure in panel (a) successfully infer their respective true dimensions; in panel (b), \(n_s=50\) fails to infer the true dimension 4 but only infers a lower bound 3 while \(n_s = 150, 200\) successfully infer the true dimension 4; in panel (c), both \(n_s = 50, 150\) fail to infer the true dimension 5 but only infer a lower bound 4 while \(n_s = 200\) successfully infer the true dimension 5. In panel (d), the figure has a quite different behavior. (It can be proved that, if entries of \(M_n\in {\mathcal {M}}_{m,n}^o\) are i.i.d. from \(\mathrm {Unif}(0,1)\), then \(L_k(M_n)\) behaves as positive for \(k \le m-2\) and \(L_k(M_n)\) behaves as going to zero, for \(k\ge m-1\); thus \(L_k(M_n)\) relies on m instead of an intrinsic d.)

Let us elaborate a little more on Fig. 5. The decision principle we propose to follow is that,

on each boxplot of \(L_k(M_{n_s})\), if the first quartileFootnote 13Q1 is not greater than 0, reject \(L_k{({\mathcal {F}},P_K)}>0\); otherwise, accept \(L_k{({\mathcal {F}},P_K)}>0\).

For panel (a) where \(d = 3\), we can see that, as \(n_s\) goes up, the variance of \(L_k(M_{n_s})\) for each k goes down. For \(k = 2\), the first quartile of \(L_k(M_{n_s})\) is greater than 0 even for \(n_s = 50\); for \(k\ge 3\), \(L_k(M_n)\) stays at 0 with only some noise-like dots all the time. According to this principle, we can conclude \(d\ge 3\) for this regular pair. In fact, as we know in advance, \(d = 3\).

For panel (b) where \(d = 4\), the same shrinking variance behavior can be observed. Moreover, the principle concludes \(d\ge 4\) after \(n_s = 150\), where the first quartile starts to stay away from 0. Similarly, for panel (c) where \(d = 5\), in \(n_s = 50\) and \(n_s = 150\), our principle concludes \(d\ge 4\) and in \(n_s = 200\), it concludes \(d\ge 5\).

It is observed that, for higher d, we would need \(n_s\) to be larger to make the best conclusion (i.e. inferring the true dimension). However, by making \(n_s\) go up, the variance of \(L_k(M_{n_s})\) goes down and we may also use this information. Therefore, for small sample case, one may count on this convergence behavior and develop other principles by quantifying the trend of convergence. For example, in panel (c) where \(d = 5\), when \(n_s\) goes up from 50 to 150, we observe that \(L_4(M_{n_s})\) pokes out from noiselike outliers to a filled box. This trend suggests that we “may accept" \(L_4{({\mathcal {F}},P_K)}>0\). We will leave it to the practitioners to decide their own principles on how to use the convergence trend information in their fields of interest.

3.5.2 Subsample functions when m is large

As we mentioned earlier, the worst case computational complexity of \(L_k(M_n)\) goes up although polynomially but with degree \(3 d_{\mathrm{up}}+6\) (high degree) in m, the number of rows of \(M_n\). To overcome this difficulty, we propose to subsample the rows (i.e. the collection of functions) of \(M_n\). Specifically, for a fixed number \(m_s<m\), we randomly choose \(m_s\) rows of \(M_n\) and construct the \(m_s\times n\) submatrix \(M_{m_s\times n}\) accordingly, compute \(L_k(M_{m_s\times n})\) and repeat the process as many times as assigned. Figure 6 is the boxplot of \(L_k(M_{m_s\times n})\) with \(m_s = 10\), repeated \(N_{{\mathrm{rep}}} = 1000\) times, under different settings. Notice that throughout the plots, \(m = 100\), \(n = 150\), \(m_s = 10\) and \(N_{{\mathrm{rep}}} = 1000\). We still adopt the principle as last subsection that we only accept \(L_k{({\mathcal {F}},P_K)}>0\) when the first quartile Q1 is above 0. Therefore, the concluding lower bounds for the plots are 2, 3, 4 and 4, resp., for panel (a), (b), (c) and (d) in Fig. 6.

Boxplots of \(L_k\) obtained from subsampling functions. Throughout the panels, \(m = 60\), \(n = 150\) and \(m_s = 10\), where m is the number of functions (rows of \(M_n\)), n is the number of total sample points and \(m_s\) is the number of functions used in each function subsampling. Each panel is generated under a fixed regular pair with a \(d=2\), b \(d=3\), c \(d=4\), d \(d=5\), where d is the true dimension of the regular pair. Function subsampling is repeated 1000 times for each panel

A lower bound for \(d{({\mathcal {F}},P_K)}\) may not be very satisfactory. In Sects. 4 and 5, we develop some theory and methods to decide whether the lower bound obtained in this section is indeed the dimension \(d{({\mathcal {F}},P_K)}\).

4 Complete regular pairs and \({\hat{d}}_{\mathrm{low}}(M,\varepsilon )\) as an asymptotically consistent dimension estimator

We establish in Sect. 3 that a lower bound \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}\) of \(d{({\mathcal {F}},P_K)}\) is generally inferable from sampled data. Here we provide a sufficient condition for \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}= d{({\mathcal {F}},P_K)}\); this ensures that the dimension \(d{({\mathcal {F}},P_K)}\) can be inferred with high probability. Recall that the conic hull of a set \(S\subseteq {\mathbb {R}}^d\), denoted \({\mathbf {cone}}({{\varvec{S}}})\), is the set

Definition 4.1

Let \({({\mathcal {F}},P_K)}\) be a regular pair, where \({\mathcal {F}} = \{f_i\}_{i\in [m]} \) and each \(f_i:K\rightarrow {\mathbb {R}}\) is differentiable. The set

is called the type 1 central region of \(({\mathcal {F}}, P_K)\).

Two examples illustrating the definition of \({\mathrm{Cent}}_1\). a x is a point in \({\mathrm{Cent}}_1\), because the gradients at x positively span the whole Euclidean space \({\mathbb {R}}^2\). b x is not a point in \({\mathrm{Cent}}_1\), since the gradients at x do not positively span the whole Euclidean space \({\mathbb {R}}^2\). In both panels, the colored arrows are the gradients at x of the functions of their respective colors

Figure 7 illustrates two possibilities: (a) \(x\in {\mathrm{Cent}}_1\), and (b) \(x\notin {\mathrm{Cent}}_1\). Since the gradient of a function f at a point x is the outward pointing normal direction to the sublevel set \(f^{-1}(-\infty ,x)\), every point \(x\in {\mathrm{Cent}}_1\) is “enclosed” from every direction by the union of the sublevel sets \(\{f_i^{-1}(-\infty ,x)\}_{i\in [m]}\) (panel (a) of Fig. 7). It is therefore intuitive to see that at every point \(x\in {\mathrm{Cent}}_1\), the filtration \({\mathcal {K}}_x(t)\) (see Fig. 4 on page 13) results in the persistence of a (homological) \((d-1)\)-sphere. Therefore, for \(x\in {\mathrm{Cent}}_1\), \(l_{\max }(d-1,{\mathcal {K}}_x)\) (and hence \(L_{d-1}{({\mathcal {F}},P_K)}\)) is expected to be positive and the lower bound in Lemma 3.6 yields the dimension \(d{({\mathcal {F}},P_K)}\). It is also easy to visualize why there is no persistent \((d-1)\)-sphere in \({\mathcal {K}}_x(t) \) if \(x\notin {\mathrm{Cent}}_1\), see panel (b) of Fig. 7. This motivates the following definition.

Definition 4.2

A regular pair \({({\mathcal {F}},P_K)}\) is said to be complete if its type 1 central region \( {\mathrm{Cent}}_1\) is non-empty.

The following theorem makes the above intuition more precise.

Theorem 4.3

Let \({({\mathcal {F}},P_K)}\) be a regular pair, where \({\mathcal {F}} = \{f_i\}_{i\in [m]} \) and each \(f_i:K\rightarrow {\mathbb {R}}\) is differentiable. If \({({\mathcal {F}},P_K)}\) is complete, then the lower bound in Lemma 3.6 is indeed the dimension of the regular pair, i.e. \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}= d{({\mathcal {F}},P_K)}\).

The proof of Theorem 4.3 is given in Sect. 4.1. Notably, if the regular pair is not complete (i.e. none of the points illustrated in panel (a) of Fig. 7 exists), then the desired persistent \((d-1)\)-sphere cannot be formed in a small neighborhood of any point in K and one cannot be certain about whether \(L_{d-1}{({\mathcal {F}},P_K)}\) is positive and, equivalently, whether \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}=d{({\mathcal {F}},P_K)}\). Immediate from Theorem 4.3 and Corollary 3.10 is the following

Theorem 4.4

Let \({({\mathcal {F}},P_K)}\) be a regular pair satisfying the conditions in Theorem 3.8, where \({\mathcal {F}} = \{f_i\}_{i\in [m]} \) and each \(f_i:K\rightarrow {\mathbb {R}}\) is differentiable. If \({({\mathcal {F}},P_K)}\) is complete regular pair with dimension \(d=d{({\mathcal {F}},P_K)}\), and matrices \(M_n\in {\mathcal {M}}_{m,n}^o \) are sampled from \({({\mathcal {F}},P_K)}\), then for every \(\varepsilon \in \left( 0,L_{d-1}{({\mathcal {F}},P_K)}\right) \),

In other words, \({\hat{d}}(\varepsilon ):{\mathcal {M}}_{m,n}^o\rightarrow {\mathbb {N}}\) defined by \({\hat{d}}(\varepsilon )(M_n){\mathop {=}\limits ^{\mathrm {def}}}{\hat{d}}_{{\mathrm{low}}}(M_n,\varepsilon )\) is an asymptotically consistent estimator in the class of complete regular pairs.

Proof

By Theorem 4.3, \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}= d{({\mathcal {F}},P_K)}\). Moreover, by Corollay 3.10,

Thus, the result follows. \(\square \)

4.1 Proof of Theorem 4.3

Recall, the following notation from Sect. 3. Let \({({\mathcal {F}},P_K)}= (\{f_i\}_{i\in [m]},P_K)\) be a regular pair; for any \(t\in [0,1]\), we denote \(K^{(i)}(t) {\mathop {=}\limits ^{\mathrm {def}}}f_i^{-1}(-\infty ,\lambda _i(t))\), where \(\lambda _i(t) \) is a monotone-increasing function that satisfies \(P_K(f_i^{-1}(-\infty ,\lambda _i(t))) = t\). For any \(x\in K\), we also denote

Theorem 4.3 follows from the following key lemma.

Lemma 4.5

Let \((\{f_i\}_{i=1}^m, P_K)\) be a complete regular pair. Suppose \(x_0\in {\mathrm{Cent}}_1\), then there exists \(\varepsilon >0\) such that

Proof of Theorem 4.3

By Lemma 3.6, \(d_{{\text {low}}}{({\mathcal {F}},P_K)}\le d{({\mathcal {F}},P_K)}\). We therefore only need to prove \(L_{d-1}({\mathcal {F}},P_K)>0\). Let \(x_0\in {\mathrm{Cent}}_1\). By Lemma 4.5, \(l_{\max }(d-1,x_0)>0\) (see Definition 3.5). Therefore, \(L_{d-1}({\mathcal {F}},P_K)>0\), completing the proof. \(\square \)

To prove Lemma 4.5, we need

Lemma 4.6

For \(t_1,\ldots , t_m\in (0,1)\) and \(\sigma \subseteq [m]\), if \(\bigcap _{i\in [m]} K^{(i)}(t_i)\ne \varnothing \), then there exists \(\epsilon >0\) such that \(\bigcap _{i\in \sigma } K^{(i)}(t_i-\epsilon )\ne \varnothing \). In addition, by monotonicity of \(K^{(i)}(t)\), we also have \(\bigcap _{i\in \sigma } K^{(i)}(t_i-\eta )\ne \varnothing \), for all \(0<\eta \le \epsilon \).

Proof

Let \(\epsilon _n\) be a sequence with \(\epsilon _n\searrow 0\). Let us first prove that \(K^{(i)}(t_i-\epsilon _n)\nearrow K^{(i)}(t_i)\); equivalently, \(K^{(i)}(t_i) = \bigcup _{n=1}^\infty K^{(i)}(t_i-\epsilon _n)\). For any n, \(K^{(i)}(t_i-\epsilon _n)\subseteq K^{(i)}(t_i)\) by definition. Therefore, \(\bigcup _{n=1}^\infty K^{(i)}(t_i-\epsilon _n)\subseteq K^{(i)}(t_i)\). For the other inclusion, assume \(x\in K^{(i)}(t_i)=f^{-1}(-\infty ,\lambda _i(t_i))\). Then \(f_i(x)<\lambda _i(t_i)\). By Lemma 2.5, \(\lambda _i\) is continuous and strictly increasing. Hence, there exists n such that \(\lambda _i(t_i-\epsilon _n)>f_i(x)\); in other words, \(x\in K^{(i)}(t_i-\epsilon _n)\). Therefore, \(x\in \bigcup _{n=1}^\infty K^{(i)}(t_i-\epsilon _n)\), proving the claim.

Now we have, as \(n\nearrow \infty \), \(K^{(i)}(t_i-\epsilon _n)\nearrow K^{(i)}(t_i)\). Thus \(\bigcap _{i\in \sigma } K^{(i)}(t_i-\epsilon _n)\nearrow \bigcap _{i\in \sigma } K^{(i)}(t_i) \). Since \(\bigcap _{i\in \sigma } K^{(i)}(t_i)\ne \varnothing \), there must exist n such that \(\bigcap _{i\in \sigma } K^{(i)}(t_i-\epsilon _n)\ne \varnothing \). Taking \(\epsilon = \epsilon _n\), the result follows. \(\square \)

Proof of Lemma 4.5

For each \(i\in [m]\), we denote by

the appropriate open convex sublevel set of \(f_i\). Note that \( K^{(i)}(T_i(x_0)-t)\subseteq \varOmega _i\) for any \(t \ge 0\), and the nerve in the left-hand-side of (22) is a subcomplex of the \({\mathrm{nerve}}\left( \left\{ \varOmega _i\right\} _{i\in [m]}\right) \).

For each non-empty \(\sigma \in {\mathrm{nerve}}\left( \left\{ \varOmega _i\right\} _{i\in [m]}\right) \), the subset \(\bigcap _{i\in \sigma }\varOmega _i \) is open and non-empty and hence has a nonzero \(P_K\) measure. Thus, by Lemma 4.6, there exists \(\varepsilon _\sigma >0\), such that \( \bigcap _{i\in \sigma } K^{(i)}(T_i(x_0)-t) \) is non-empty for any \(t\in [0, \varepsilon _\sigma )\). Choosing \(\varepsilon \) to be the minimum of all such \(\varepsilon _\sigma \) thus guarantees that

It thus suffices to prove (22) for \(t=0\). Since each \(\varOmega _i\) is open and convex, by the nerve lemmaFootnote 14 it is enough to show that \(\bigcup _{i\in [m]} \varOmega _i \sim S^{d-1}\). Moreover, since \(x_0\) lies on the boundary of each \(\varOmega _i \), the union \( \{ x_0\} \cup \bigcup _{i\in [m]} \varOmega _i \) is star-shaped. Therefore, it suffices to prove that there exists \(\eta >0\) such that

where \(B_\eta (x_0) = \{x\in {\mathbb {R}}^d: \Vert x-x_0\Vert <\eta \}\).

Suppose no such \(\eta >0\) exists, then, for all \(n\in {\mathbb {N}}\), there exists a unit vector \(v_n\in S^{d-1}=\{ x\in {\mathbb {R}}^d \, , \, \Vert {x} \Vert =1\}\) such that \(x_n=x_0+\frac{1}{n}\cdot v_n\notin \bigcup _{i\in [m]} \varOmega _i\). By compactness of \(S^{d-1}\), there is an infinite subsequence \(\{ v_{n_j}\}\), that converges to a particular \(v_*\in S^{d-1}\). Since all \(f_i\) are differentiable, using Taylor’s theorem, we obtain

Since \(x_n \notin \varOmega _i \) for all i, \(f_i(x_n)\ge f_i(x_0)\). Taking \(\liminf _{j\rightarrow \infty }\) on both sides of Eq. (24), we conclude that \( \langle \nabla f_i(x_0), v_*\rangle \ge 0, \ \forall \ i\in [m] \). Since \(-v_*\in {\mathrm{cone}}(\{\nabla f_i(x_0)\}_{i\in [m]}) = {\mathbb {R}}^d\), choosing appropriate nonnegative coefficients in (20) yields \(0\le \langle -v_*, v_*\rangle \) and hence \(v_* = 0\), a contradiction. Therefore the inclusion (25) holds for some \(\eta >0\). \(\square \)

5 Testing the completeness of \({({\mathcal {F}},P_K)}\) from sampled data

Theorem 4.3 establishes that completeness of \({({\mathcal {F}},P_K)}\) implies \(d_{\mathrm{low}}{({\mathcal {F}},P_K)}= d{({\mathcal {F}},P_K)}\), and thus the data dimension \(d{({\mathcal {F}},P_K)}\) can be inferred from sampled data. Unfortunately, completeness cannot be directly tested from sampled data, since the gradient information is not directly accessible from discrete samples.

To address this difficulty, we consider a different notion of central region, \({\mathrm{Cent}}_0\subseteq K\), which, under a natural assumption (Definition 5.2 below), is indistinguishable from \({\mathrm{Cent}}_1\) in the probability measure \(P_K\) (Lemma 5.3). We also establish that the probability measure of \({\mathrm{Cent}}_0\) can be approximated from sampled data (Theorem 5.6). This thus makes it possible to test completeness of a regular pair from (discrete) sampled data.

Definition 5.1

Let \(({\mathcal {F}}, P_K) \) be a regular pair, the subset

is called the type 0 central region of \(({\mathcal {F}}, P_K)\).

A point x belongs to \({\mathrm{Cent}}_0\) if the appropriate open sublevel sets of \(\{f_i\}\) have an empty intersection. If the functions \(f_i\) are differentiable and quasi-convex, a sublevel set must be a subset of the open half-space:

If \(x \in {\mathrm{Cent}}_1\), then \(\{\nabla f_i(x)\}_{i\in [m]}\) positively span the whole Euclidean space; hence, the open half-spaces \(H_i^-\), must have empty intersection: \( \cap _{i=1}^n H_i^-=\varnothing \). Thus because of the inclusion (25), we conclude \(x\in {\mathrm{Cent}}_0\). We thus established the inclusion

Note that the reverse direction of the above containment is not necessary true. For example, if for some \(x\in K\), \(\{\nabla f_i(x)\}_{i\in [m]}\) positively spans a proper linear subspaceFootnote 15 of \({\mathbb {R}}^d\), then \(x\in {\mathrm{Cent}}_0\) but \(x\notin {\mathrm{Cent}}_1\). A “regular” random choice of m vectors in \({\mathbb {R}}^d\) is improbable (albeit possible) to exactly positively span a proper linear subspace, motivating the following

Definition 5.2

A set of vectors \(V = \{v_1,\ldots , v_m\}\subseteq {\mathbb {R}}^d\) is said to be in general direction if, for every \(\sigma \subseteq [m]\) with \(|\sigma |\le d\), the set of vectors \(\{v_i\}_{i\in \sigma }\) is linearly independent. A collection of differentiable functions \({\mathcal {F}} = \{f_i:K\rightarrow {\mathbb {R}}\}_{i\in [m]}\) is said to be in general position if for (Lebesgue) almost every x in K, the vectors \(\{\nabla f_i(x)\}_{i\in [m]}\) are in general direction.

In other words, functions in general position are those functions, whose gradients are improbable to positively span a proper subspace. It turns out that assuming that the functions are in general position serves our purpose of replacing \({\mathrm{Cent}}_1\) with \({\mathrm{Cent}}_0\), as described in the following

Lemma 5.3

Let \(({\mathcal {F}}, P_K)\) be a regular pair, where each function in \( {\mathcal {F}}\) is differentiable. Assume that \({\mathcal {F}}\) is in general position, then

The proof of Lemma 5.3 is given in Sect. 5.1. In the next lemma, we prove that \({\mathrm{Cent}}_1\) is always an open subset of K; hence, completeness of a regular pair \({({\mathcal {F}},P_K)}\) is equivalent to \(P_K({\mathrm{Cent}}_1)>0\). Consequently, Lemma 5.3 ensures that completeness of a regular pair in general position is equivalent to \(P_K({\mathrm{Cent}}_0)>0\).

Lemma 5.4

Let \(\{f_i:K\rightarrow {\mathbb {R}}\}_{i\in [m]}\) be a collection of quasi-convex \(C^1\) functions, where \(K\subseteq {\mathbb {R}}^d\) is open and convex. Then the set \({\mathrm{Cent}}_1=\big \{x\in K: {\mathrm{cone}}(\{\nabla f_i(x)\}_{i\in [m]})={\mathbb {R}}^d\big \}\) is open. In particular, \({\mathrm{Cent}}_1\ne \varnothing \) is equivalent to \(P_K({\mathrm{Cent}}_1)>0\).

Proof

Define a function \(h:K\times S^{d-1}\rightarrow {\mathbb {R}}\) by \(h(x,u) = \max _{i\in [m]} \langle u,\nabla f_i(x)\rangle \). Since each \(f_i\) is \(C^1\), the functions \((x,u)\mapsto \langle u,\nabla f_i(x)\rangle \) are continuous and hence h is also continuous. For \(x\in K\), we define \(\rho (x)=\min _{u\in S^{d-1}} h(x,u)\). Let us prove that, for \(x\in K\), \(\rho (x)>0\) if and only if \({\mathrm{cone}}(\{\nabla f_i(x)\}_{i\in [m]}) = {\mathbb {R}}^d\).

For one direction, let \(x\in K\) satisfy \(\rho (x)>0\), or, equivalently, \(\max _{i\in [m]} \langle u,\nabla f_i(x)\rangle >0\), for all \(u\in S^{d-1}\). If \(0\in {\mathrm{bd}}({\mathrm{conv}}(\{\nabla f_i(x)\}_{i\in [m]}))\), then the nonzero vector v pointing outward of \({\mathrm{conv}}(\{\nabla f_i(x)\}_{i\in [m]})\) and orthogonal to the hyperface containing 0 will make \(\max _{i\in [m]} \langle v,\nabla f_i(x)\rangle = 0\), a contradiction. If \(0\notin {\mathrm{conv}}(\{\nabla f_i(x)\}_{i\in [m]})\), then taking \(v = -{\mathrm{argmin}}_{z\in {\mathrm{conv}}(\{\nabla f_i(x)\}_{i\in [m]})} \langle z, z\rangle \) will make \(\max _{i\in [m]} \langle v,\nabla f_i(x)\rangle \!<\!0\), also a contradiction. Therefore, \(0\!\in \!{\mathrm{int}}({\mathrm{conv}}(\{\nabla f_i(x)\}_{i\in [m]}))\) and hence \({\mathrm{cone}}(\{\nabla f_i(x)\}_{i\in [m]}) = {\mathbb {R}}^d\). For the other direction, let \(x\in K\) satisfy \({\mathrm{cone}}(\{\nabla f_i(x)\}_{i\in [m]}) = {\mathbb {R}}^d\). To prove \(\rho (x)>0\), since \(u\mapsto \max _{i\in [m]} \langle u,\nabla f_i(x)\rangle \) is continuous and \(S^{d-1}\) is compact, it suffices to prove that, for all \(u\in S^{d-1}\), \(\max _{i\in [m]} \langle u, \nabla f_i(x)\rangle >0\). Given \(u\in S^{d-1}\), since \({\mathrm{cone}}(\{\nabla f_i(x)\}_{i\in [m]}) = {\mathbb {R}}^d\), \(u = \sum _{i\in [m]} r_i\cdot \nabla f_i(x)\), for some \(r_i\ge 0\). If \(\langle u,\nabla f_i(x)\rangle \le 0\) for all \(i\in [m]\), then \(\langle u,u\rangle = \sum _{i\in [m]} r_i\langle u,\nabla f_i(x)\rangle \le 0\), a contradiction. Thus, the other direction is proved and, for \(x\in K\), \(\rho (x)>0\) if and only if \({\mathrm{cone}}(\{\nabla f_i(x)\}_{i\in [m]}) = {\mathbb {R}}^d\).

Now let \(x_0\in K\) such that \({\mathrm{cone}}(\{\nabla f_i(x_0)\}_{i\in [m]})={\mathbb {R}}^d\). By what has been claimed, this is equivalent to \(\rho (x_0)>0\). We want to prove that there exists \(\epsilon >0\) such that for all \(x\in B(x_0,\epsilon )\), \({\mathrm{cone}}(\{\nabla f_i(x)\}_{i\in [m]})={\mathbb {R}}^d\), or equivalently, \(\rho (x)>0\). Suppose not, then there exists a sequence \((x_n, u_n)\in K\times S^{d-1}\) such that \(x_n\rightarrow x_0\) and \(h(x_n, u_n)\le 0\) for all n. By compactness of \(S^{d-1}\), there is a subsequence \(u_{n_j}\rightarrow u_0\) and thus by continuity of h, \(h(x_0, u_0)\le 0\). However, \(h(x_0, u_0)\ge \min _{u\in S^{d-1}} h(x_0,u) = \rho (x_0)>0\), a contradiction. Thus the proof is complete. \(\square \)

We have now established that for a regular pair in general position, completeness is equivalent to \(P_K({\mathrm{Cent}}_0)>0\). A natural question arises: assuming that the considered regular pair is in general position, can one know/test whether \(P_K({\mathrm{Cent}}_0)\) is positive or not, solely from the data matrix M? To answer the question, we propose the following natural discretization of \({\mathrm{Cent}}_0\).

Definition 5.5

For a matrix \(M\in {\mathcal {M}}_{m,n}^o\), the set

is called the discretized central region.

If a matrix \(M\in {\mathcal {M}}_{m,n}^o\) is sampled from a regular pair, then for each \(a\in [n]\), the set \( \{b\in [n]: M_{ib} <M_{i a} \} \) is a discretization of \(f_i^{-1}(-\infty ,f_i(x_a))\), and \({\widehat{{\mathrm{Cent}}}}_0(M)\) can be thought of as an approximation of \({\mathrm{Cent}}_0\). The following theorem confirms this intuition.

Theorem 5.6

Let \(M_n\in {\mathcal {M}}_{m,n}^o\) be sampled from a regular pair, then \(\frac{1}{n}{\#({\widehat{{\mathrm{Cent}}}}_0(M_n))} \) converges to \(P_K({\mathrm{Cent}}_0)\) in probability:

The proof of Theorem 5.6 involves technicalities used in the proof of Interleaving Convergence Theorem (Theorem 2.10) and is given in Sect. 7.3 in the Appendix. Theorem 5.6 establishes that \(\frac{1}{n}{\#({\widehat{{\mathrm{Cent}}}}_0(M_n))}\) serves as an approximation of \(P_K({\mathrm{Cent}}_0)\), and thus enables one to test whether \(P_K({\mathrm{Cent}}_0)>0\). Thus, by Lemma 5.3, this provides a way to test the completeness of the underlying regular pair \({({\mathcal {F}},P_K)}\).

5.1 Proof of Lemma 5.3

First we prove the first part of Lemma 5.3.

Lemma 5.7

Let \(({\mathcal {F}},P_K) = (\{f_i\}_{i\in [m]},P_K)\) be a regular pair, where each function in \( {\mathcal {F}}\) is differentiable. Assume that \({\mathcal {F}}\) is in general position, then \(P_K({\mathrm{Cent}}_1\setminus {\mathrm{Cent}}_0)=0\).

Proof

Let \(K^\prime =\{ x\in K\, \vert \, \exists \ i, \,\nabla f_i(x)= 0 \} \) denote the union of critical points of functions in \({\mathcal {F}}\). Since \({\mathcal {F}}\) is in general position, \(K'\) has Lebesgue measure zero. Assume \(x_0\in {\mathrm{Cent}}_1\setminus K^\prime \), and thus

It can be easily shown, see e.g. Theorem 3.2.3 in Cambini and Martein (2009), that if f is differentiable and quasi-convex on an open convex K with \(\nabla f(x_0)\ne 0\), then \(f(x)<f(x_0)\) implies \(\left\langle \nabla f(x_0),x-x_0\right\rangle <0\). Thus

where the last equality follows from (27), as one can chose \(u=x-x_0\). This implies \(x_0\in {\mathrm{Cent}}_0\). Therefore, \({\mathrm{Cent}}_1\setminus K'\subseteq {\mathrm{Cent}}_0\) and \(P_K({\mathrm{Cent}}_1\setminus {\mathrm{Cent}}_0)\le P_K(K') = 0\). \(\square \)

To prove the second half of Lemma 5.3, we first recall that a convex cone \({\mathcal {C}} \subseteq {\mathbb {R}}^d\) is called flat if there exists \(w\ne 0\) such that both \(w\in {\mathcal {C}}\) and \(-w\in {\mathcal {C}} \). Otherwise, it is called salient. If a convex cone \({\mathcal {C}}\) is closed and salient, then there existsFootnote 16\(w\in {\mathbb {R}}^d\) such that \(\langle u, w\rangle <0\), for all non-zero \( u\in {\mathcal {C}}\).

Lemma 5.8

Let \(({\mathcal {F}},P_K) = (\{f_i\}_{i\in [m]},P_K)\) be a regular pair, where each \(f_i\) is differentiable, then

Proof

Let \(x_0\in {\mathrm{Cent}}_0\setminus {\mathrm{Cent}}_1\). Denote

Since \(x_0\notin {\mathrm{Cent}}_1\), \({\mathcal {C}}_0 \ne {\mathbb {R}}^d\). Thus, it suffices to prove that the cone \({\mathcal {C}}_0\) is flat. Suppose that the cone \({\mathcal {C}}_0 \) is not flat, then there exists w such that \(\langle u, w\rangle <0\), for all non-zero \( u\in {\mathcal {C}}_0\). In particular, \(\langle \nabla f_i(x_0), w\rangle <0,\ \forall \ i\in [m]\). Let us show that \(\forall \ i\in [m]\), there exists \(\alpha _i>0\) such that \(x_0+\alpha _i w\in f_i^{-1}(-\infty ,f_i(x_0))\). Suppose not, then there exists \(i\in [m]\), such that \(f_i(x_0+\alpha w)\ge f(x_0),\ \forall \ \alpha >0\), and we have

which is a contradiction. Thus, such positive \(\alpha _i\)’s exist, and we obtain that

This contradicts the assumption that \(x_0\in {\mathrm{Cent}}_0\). Therefore the cone \({\mathcal {C}}_0\) is flat. \(\square \)

It can be shown that the inclusion in (28) is in fact an equality. However, since we do not need the equality here, it was left out the proof. To finish the proof of Lemma 5.3, we use the following

Lemma 5.9

Let \(V = \{v_1,\ldots , v_m\}\subset {\mathbb {R}}^d\) be a set of vectors in general direction, then \({\mathrm{cone}}(V)={\mathbb {R}}^d\) or \({\mathrm{cone}}(V)\) is salient.

To prove Lemma 5.9, we use the following lemma.

Lemma 5.10

(see e.g. Theorem 2.5 in Regis 2016) Let \(V = \{v_1,\ldots , v_m\}\) be a set of non-zero vectors in \({\mathbb {R}}^d\), then the following two statements are equivalent:

-

(i)

\({\mathrm{cone}}(V)={\mathrm{span}}(V)\);

-

(ii)

For each \(i\in [m]\), \(-v_i\in {\mathrm{cone}}(V\setminus \{v_i\})\).

Proof of Lemma 5.9

For \(m\le d\), the vectors \(\{v_1,\ldots , v_m\}\) are linearly independent. Suppose there exists \(w\in R^d \), such that \(w, -w\in {\mathrm{cone}}(V)\). Thus there exist \(a_i, b_i\ge 0\) with \( w= \sum _{i=1}^m a_iv_i=-\sum _{i=1}^m b_iv_i\). Since the vectors \(\{v_1,\ldots , v_m\}\) are linearly independent, \(a_i+b_i=0\) for all \(i\in [m]\), and thus \(w=0\). Therefore \({\mathrm{cone}}(V)\) is salient.

For \(m>d\), we prove by induction on the size of V. Suppose the result holds for any set of \(m\ge d\) vectors in general direction. Let \(V = \{v_1,\ldots , v_{m+1}\}\) be a set of \(m+1\) vectors in general direction. Since any d vectors in V is a basis in \({\mathbb {R}}^d\), \({\mathrm{span}}(V) = {\mathbb {R}}^d\). Suppose the result is false for V; equivalently, \({\mathrm{cone}}(V)\ne {\mathbb {R}}^d\) and \({\mathrm{cone}}(V)\) is flat. By Lemma 5.10, there exists \(j\in [m+1]\) such that

Since \({\mathrm{cone}}(V)\) is flat, there exists a nonzero \(w\in {\mathbb {R}}^d\) such that \(w, -w\in {\mathrm{cone}}(V)\), and thus \(w= \sum _{i=1}^{m+1} a_iv_i=-\sum _{i=1}^{m+1} b_iv_i \), with \(a_i, b_i\ge 0\) for all i. Let us prove that \(a_j +b_j>0\). If \(a_j+b_j = 0\), then \(a_j = b_j = 0\). Thus, \(w, -w\in {\mathrm{cone}}(V\setminus \{v_j\})\) and \({\mathrm{cone}}(V\setminus \{v_j\})\) is not salient. Since \(|V\setminus \{v_j\}|=m\), by the induction hypothesis, we must have \({\mathrm{cone}}(V\setminus \{v_j\})={\mathbb {R}}^d\). However, \({\mathrm{cone}}(V)\ne {\mathbb {R}}^d\) and hence \({\mathrm{cone}}(V\setminus \{v_j\})\ne {\mathbb {R}}^d\), a contradiction. Therefore \(a_j+b_j>0\), and we can conclude that

contradicting to (29). Therefore, the result holds for any V in general direction of size \(|V| = m+1\). This completes the proof by induction. \(\square \)

We now finish the proof of Lemma 5.3.

Proof of Lemma 5.3

The first half of the proof of Lemma 5.3 is done in Lemma 5.7. To prove the second half, we combine Lemmas 5.8 and 5.9 to obtain

Since \(\{f_i\}_{i\in [m]}\) is in general position, the right hand side of (30) has measure zero, completing the proof. \(\square \)

6 Discussion