Abstract

Psychological interventions provided via mobile, wireless technological communication devices (mHealth) are a promising method of healthcare delivery for young people. However, high attrition is increasingly recognised as a challenge to accurately interpreting and implementing the findings of mHealth trials. The present meta-analysis consolidates this research and investigates potential methodological, sociodemographic, and intervention moderators of attrition. A systematic search of MEDLINE, PsycInfo, and Embase was conducted. Study reporting quality was evaluated (QualSyst tool), and attrition rates (proportions) were calculated and pooled, using both random- and mixed-effects models. The pooled attrition rate, sourced from 15 independent samples (Nparticipants = 1766), was 17% (CI [9.14, 30.13]). This increased to 26% (CI [15.20, 41.03]) when adjusting for publication bias. Attrition was significantly higher among application-based interventions (26%, CI [14.56, 41.86]) compared to those delivered via text or multimedia message (6%, CI [1.88, 16.98]). These data were, however, characterised by significant between-study variance. Attrition in mHealth trials with young people is common but may be mitigated by using message-based interventions. Taken together, the results can provide guidance in accounting for attrition across future mHealth research, clinical practice, public policy, and intervention design. However, sustained research focus on the effectiveness of different engagement strategies is needed to realise mHealth’s promise of equitable and efficient healthcare access for young people globally.

Young people are disproportionately vulnerable to developing mental illness: half of all lifetime disorders emerge by age 14 and three quarters by age 24 (Kessler et al., 2005). Among those aged 10 to 24 worldwide, mental illness is the leading cause of years lost due to disability, contributing 45% of the disease burden in this age group (Gore et al., 2011). Mental disorders among youth are also highly recurrent and comorbid (Otto et al., 2021). When mental illness is left untreated, young people are at heightened risk of self-harm and suicide (Moran et al., 2012). Despite the availability of evidence-based psychological interventions, young people often experience personal (e.g. low health literacy, self-reliance) and systemic (e.g. economic marginalisation, geographic inaccessibility, experiences of race- and gender-based discrimination) barriers to accessing mental healthcare (Radez et al., 2021). Electronic health (‘eHealth’), which includes the Internet and related technologies, may help to overcome these service barriers (Christensen et al., 2002). The delivery of health information and support via hand-held wireless technological communication devices, also known as mobile health (‘mHealth’), offers additional flexibility to young people, with daily use and access to mobile devices at record high rates across socioeconomic groups (Lehtimaki et al., 2021).

In contrast to synchronous mHealth (which involves real-time interaction between the provider and the client via, for example, videoconferencing, voice calls, online chat, or message exchanges), asynchronous mHealth takes the form of self-guided smartphone or tablet applications (‘apps’) or automated text or multimedia messages (Myers et al., 2018; Price et al., 2014). Asynchronous mHealth is available on-demand, in almost any geographic location, and is typically free or of low cost to users (Gindidis et al., 2019). The anonymity associated with asynchronous mHealth also helps to minimise stigma—one of the most frequently cited barriers to professional help-seeking among young people (Hollis et al., 2017). Importantly, a growing body of evidence indicates that mHealth is both appealing and effective in ameliorating symptoms of depression, anxiety, and stress among young people (Buttazzoni et al., 2021; Domhardt et al., 2020; Leech et al., 2021).

mHealth trials are, however, characterised by high dropout and non-usage attrition rates (Torous et al., 2020). Dropout attrition occurs when a participant does not complete the post-intervention assessment/s, whilst non-usage attrition occurs when a participant does not use the mHealth intervention (Eysenbach, 2005). Given the lack of standardised measurement and reporting of non-usage attrition in trials (Ng et al., 2019), dropout attrition is the focus of the present review. Attrition poses a threat to a trial’s validity by altering the random composition and equivalence of groups in randomised controlled trials (RCTs), limiting generalisability to only those who completed the study, and reducing sample size and statistical power (Marcellus, 2004). Accordingly, there have been repeated calls for future research to focus on attrition in mHealth trials with young people (Domhardt et al., 2020; Liverpool et al., 2020).

Attrition may, in part, be related to study design. RCTs incorporate strategies to mitigate early withdrawal, including the recruitment of individuals who are most likely to remain engaged and a supportive environment that encourages participation (de Haan et al., 2013). Attrition may also be affected by education level, although the evidence is inconsistent: some studies have linked low education to high attrition, whereas others have found a null or negligible effect (Linardon & Fuller-Tyszkiewicz, 2020). Young people’s current education level signifies their developmental stage, with each stage bringing physical, emotional, cognitive, and social changes that may impact engagement in mental healthcare (Block & Greeno, 2011; Cavaiola & Kane-Cavaiola, 1989; Harpaz-Rotem et al., 2004; Pelkonen et al., 2000).

There is also evidence that mHealth format plays a role in engagement. App interventions require greater user initiative and more active, ongoing involvement than message-based interventions (Mayberry & Jaser, 2018). As such, app interventions may be associated with lower engagement, particularly for those who are less motivated or involved in their healthcare and those with lower digital literacy (Willcox et al., 2019). Targeting mHealth interventions to their intended audience is also critical (Liverpool et al., 2020). Attrition is more likely when intervention content (including reading level and educational content) does not seem relevant to participants (Borghouts et al., 2021; Garrido et al., 2019). Young people, in particular, prefer relatable situations and characters. User consultation and co-design are therefore considered best practice for mHealth intervention design (Thabrew et al., 2018).

The present study is the first meta-analytic investigation, to the authors’ knowledge, of attrition in mHealth trials with young people aged 10 to 24 years. Our aims were to: (1) provide a weighted estimate of the rate of attrition among these trials; and (2) quantitatively evaluate the influence of methodological (i.e. study design), sociodemographic (i.e. current education level as a proxy for developmental stage; Cavaiola & Kane-Cavaiola, 1989), and intervention characteristics (i.e. mHealth format, audience-targeted design, duration) on effect estimates. In combination, this information can provide a nuanced understanding of attrition and how it might be mitigated in future research and practice (Torous et al., 2020).

Methods

Literature Search

The Ovid MEDLINE, Ovid APA PsycInfo, and Elsevier Embase databases were searched for the period between January 1, 2006 (coinciding with the year of the first published reference to the term “mHealth”; Istepanian et al., 2006) and March 27, 2021. A time-limited updated search was conducted on January 24, 2022, with no additional eligible studies identified. Search terms focused on the target population (e.g. ‘young people’) and mHealth interventions (e.g. ‘mobile health’) for mental health (see Online Resource 1 for complete logic grids). Additionally, the reference lists of included studies and relevant reviews (e.g. those assessing availability or efficacy of mHealth for young people; see Online Resource 2 for a list of reviews) were manually searched by the first author (E.P.). Elsevier Scopus citation searching of included studies was also performed, although it yielded no further eligible studies.

Study Eligibility and Screening

A protocol for this review is registered on the International Prospective Register of Systematic Reviews (PROSPERO CRD42021274905). In addition to being peer-reviewed journal articles published in the English language from January 2006 onwards, studies needed to meet the following criteria:

1. The sample comprised young people aged between 10 and 24 years, in accordance with the World Health Organisation’s (WHO, 2014) definition of young people. Samples that solely comprised special-service or vulnerable populations (e.g. veterans, those experiencing homelessness, chronic illness, or disability) were excluded due to their unique interventional needs (Edidin et al., 2012; Yeo & Sawyer, 2005).

2. The intervention was accessed solely via a portable wireless technological communication device (i.e. mobile phone, tablet device; Hollis et al., 2017; WHO, 2011). Interventions that were synchronous (i.e. real-time interaction between healthcare professionals and young people via, for example, videoconferencing, voice calls, online chat, or message exchanges; Myers et al., 2018; Price et al., 2014) or involved multiple modalities (e.g. mHealth intervention used as an adjunct to face-to-face treatment) were excluded as the focus of this review was asynchronous mHealth interventions alone.

3. The mHealth intervention focused on the prevention or management of a mental health disorder or symptom or the enhancement of psychological well-being. Interventions dedicated solely to mental health assessment or focused primarily on physical health promotion (e.g. healthy lifestyles, weight management, sleep enhancement, alcohol-, substance-, or tobacco-use reduction) were excluded.

4. Both RCTs and uncontrolled, single-arm trials were eligible. Whilst the former is considered the ‘gold standard’ in treatment evaluation, uncontrolled trials are crucial for assessing intervention implementation in ‘real-world’ settings (Handley et al., 2018).

5. Data to calculate effect sizes (i.e. mHealth attrition rates as proportions) were reported. Studies that lacked synthesisable data (e.g. protocols, editorials, qualitative studies), in addition to conference abstracts/proceedings (which typically lack the detailed reporting of methods and results required for a meta-analysis; Balshem et al., 2013), were excluded.

Study screening was undertaken by the first author (E.P.) using Covidence systematic review software (Veritas Health Innovation, 2021). Lead authors of 14 studies were contacted to determine study eligibility or to obtain additional demographic data, with 10 responding. All full-text records were independently screened by a second reviewer (fourth author, A.R.) to assess selection bias. Inter-rater reliability was excellent (Viera & Garrett, 2005): the reviewers agreed in 99% of cases (κ = 0.88). The three discrepant records were discussed until full agreement was reached.
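
Because both reviewers excluded most full-text records, agreement expected by chance alone is high, which is likely why κ sits below the raw 99% agreement. The Python sketch below shows how Cohen's kappa corrects observed agreement for chance agreement. The function name is ours and the 2 × 2 counts are hypothetical, not the review's screening data.

```python
def cohens_kappa(table):
    """Cohen's kappa for two raters from a square agreement table.

    table[i][j] counts records that reviewer 1 placed in category i
    and reviewer 2 placed in category j (e.g. include/exclude).
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: proportion of records on the diagonal.
    p_observed = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: sum over categories of the product of marginal totals.
    row_totals = [sum(table[i]) for i in range(k)]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_chance = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical screening decisions: rows = reviewer 1, columns = reviewer 2,
# category order = [include, exclude].
decisions = [[20, 3],
             [2, 175]]
print(f"agreement = {(20 + 175) / 200:.1%}, kappa = {cohens_kappa(decisions):.2f}")
```

With these made-up counts, 97.5% raw agreement corresponds to κ ≈ 0.87, illustrating how a heavily skewed include/exclude split pulls κ below percentage agreement.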

Data Extraction

In accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses 2020 statement (Page et al., 2021), a purposely designed Microsoft Excel spreadsheet was used to extract the following data from each study: study characteristics (e.g. country, study design, sample size), sample characteristics (e.g. mean age, gender, historical or current diagnosis of a mental disorder), mHealth characteristics (e.g. format, duration), and effect size data (i.e. number of mHealth participants who did not complete the immediate post-intervention assessment divided by the total number of mHealth participants).

Reporting Quality of Included Studies

The 14-item Standard Quality Assessment Criteria for Evaluating Primary Research Papers from a Variety of Fields (QualSyst; Kmet et al., 2004) was used to assess the risk of methodological bias in each study. For each item, studies were rated as 2 (criterion met), 1 (criterion partially met), 0 (criterion not met), or N/A (criterion not applicable to the study design). A summary score (ranging from 0 to 1; total score divided by total possible score, with N/A items excluded) was then calculated. The percentage of studies that received scores of 2, 1, and 0 was also calculated for each criterion. Intra-rater reliability was assessed by having the same author (E.P.) re-rate all studies after a period of 6 months. Agreement between the two time points was very high for each study (κ = 0.82 to 1.00).
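
The summary-score rule (points earned divided by points possible, with N/A items removed from the denominator) can be sketched in Python as follows. The function name is ours, not part of the QualSyst tool, and the item ratings in the example are hypothetical; treating items 5 to 7 as N/A for a single-arm trial is an assumption about how the randomisation and blinding items would apply.

```python
def qualsyst_summary(ratings):
    """QualSyst summary score from 14 item ratings.

    Each rating is 2 (met), 1 (partially met), 0 (not met) or None (N/A).
    N/A items are excluded from both the total and the possible score.
    """
    if len(ratings) != 14:
        raise ValueError("expected 14 item ratings")
    applicable = [r for r in ratings if r is not None]
    if not applicable:
        raise ValueError("at least one item must be applicable")
    if any(r not in (0, 1, 2) for r in applicable):
        raise ValueError("ratings must be 0, 1, 2 or None")
    return sum(applicable) / (2 * len(applicable))


# Hypothetical single-arm trial: items 5-7 (randomisation and blinding) rated N/A.
single_arm = [2, 2, 1, 2, None, None, None, 2, 2, 2, 1, 2, 2, 2]
print(round(qualsyst_summary(single_arm), 2))
```

Here 20 of a possible 22 points gives a summary score of about 0.91.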

Effect Size Calculations

Effect size data (proportions) were entered into Comprehensive Meta-Analysis software (Borenstein et al., 2013), with logit transformations applied to counteract the influence of outliers (Lipsey & Wilson, 2001). Inverse-variance weighting was used to weight the obtained proportions prior to averaging them (Hedges & Olkin, 2014). These analyses were conducted using a random-effects model, which assumes that included samples are estimating different, yet related, effects (Borenstein et al., 2010). For each proportion, a 95% confidence interval (CI) and p value were calculated (Borenstein, 2019). Effect estimates were represented graphically through forest plots generated in Microsoft Excel (Neyeloff et al., 2012).
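
A minimal Python sketch of this pipeline follows: each attrition proportion is logit-transformed with its within-sample variance, pooled with inverse-variance random-effects weights, and back-transformed to a proportion. It assumes the DerSimonian-Laird method-of-moments estimator for the between-sample variance (a common default; the text does not name the estimator used by Comprehensive Meta-Analysis), requires 0 < events < n in every sample, and uses hypothetical counts. The function names are illustrative only.

```python
import math
from statistics import NormalDist


def logit_effect(events, n):
    """Logit of an attrition proportion and its within-sample variance.

    events = participants who did not complete the post-intervention assessment.
    Assumes 0 < events < n; zero or total dropout would need a continuity correction.
    """
    p = events / n
    return math.log(p / (1 - p)), 1 / (n * p) + 1 / (n * (1 - p))


def inv_logit(x):
    """Back-transform from the logit scale to a proportion."""
    return 1 / (1 + math.exp(-x))


def random_effects_pool(counts, level=0.95):
    """Pool (events, n) pairs on the logit scale with DerSimonian-Laird weights."""
    ys, vs = zip(*(logit_effect(e, n) for e, n in counts))
    # Fixed-effect (inverse-variance) step, needed to compute Q and tau^2.
    w = [1 / v for v in vs]
    sw = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sw
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, ys))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(ys) - 1)) / c)
    # Random-effects weights add tau^2 to every within-sample variance.
    w_re = [1 / (v + tau2) for v in vs]
    mean = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return inv_logit(mean), inv_logit(mean - z * se), inv_logit(mean + z * se), tau2


# Hypothetical (dropouts, participants) for three samples.
rate, lo, hi, tau2 = random_effects_pool([(10, 100), (30, 60), (5, 80)])
print(f"pooled attrition {rate:.1%} (95% CI {lo:.1%} to {hi:.1%}), tau^2 = {tau2:.2f}")
```

Pooling on the logit scale keeps the back-transformed CI inside 0 to 1, which is why the reported intervals (e.g. [9.14, 30.13]) are asymmetric around the 17% estimate.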

Publication bias was assessed with a funnel plot, which plots observed effect sizes against the inverse of their standard errors (Sterne et al., 2011). In the absence of publication bias, effect sizes concentrate around a precise estimate with increasing sample size, forming a symmetric inverted funnel shape. The funnel plot was statistically checked with the trim-and-fill method (Duval & Tweedie, 2000) and Egger’s regression test (Egger et al., 1997).
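
Egger's test regresses each sample's standardised effect (effect divided by its standard error) on its precision (one over the standard error); an intercept far from zero signals funnel-plot asymmetry. The Python sketch below fits that regression by ordinary least squares and returns the intercept's t statistic, which would then be compared with a t distribution on k − 2 degrees of freedom (the p-value step is omitted). The function name and toy inputs are hypothetical.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Fits effect/SE = b0 + b1 * (1/SE) by ordinary least squares and returns
    (intercept b0, its standard error, t statistic, degrees of freedom).
    """
    k = len(effects)
    if k < 3:
        raise ValueError("need at least three samples")
    x = [1 / s for s in std_errors]                    # precision
    z = [e / s for e, s in zip(effects, std_errors)]   # standardised effect
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("samples must differ in precision")
    sxz = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar
    df = k - 2
    rss = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    se_intercept = math.sqrt(rss / df * (1 / k + x_bar ** 2 / sxx))
    if se_intercept == 0:
        raise ValueError("perfect fit: t statistic undefined")
    return intercept, se_intercept, intercept / se_intercept, df


# Toy logit-scale effects and standard errors (hypothetical).
print(egger_test([3, 2, 7 / 3, 2], [1, 0.5, 1 / 3, 0.25]))
```

For these toy inputs the intercept is 1.0 with a standard error of about 0.77 (t ≈ 1.29 on 2 df), i.e. no clear asymmetry.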

Between-sample heterogeneity was assessed with tau (τ), or the between-samples SD, and I2, or the proportion of variation in observed effects that is due to true effect variance (Borenstein et al., 2017, 2021). Finally, a 95% prediction interval (PI) was used to provide a summary of the spread of underlying effects in the samples included in the primary analysis (Deeks et al., 2021; Riley et al., 2011).
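
As a worked check on these definitions, the Python sketch below recomputes I² from the Q statistic reported in the Results and reconstructs the 95% prediction interval from the reported pooled estimate. It assumes that τ is on the logit scale, that the pooled 95% CI was built as mean ± 1.96 SE on that scale, and that the PI uses a t distribution with k − 2 degrees of freedom (Riley et al., 2011); the critical value t(0.975, 13) ≈ 2.1604 is hard-coded from a t table rather than computed.

```python
import math
from statistics import NormalDist


def logit(p):
    return math.log(p / (1 - p))


def inv_logit(x):
    return 1 / (1 + math.exp(-x))


def i_squared(q, df):
    """Percentage of observed variation attributed to true effect variance (floored at 0)."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


def prediction_interval(mean, se, tau, t_crit):
    """Interval expected to contain the true effect of a new sample (logit scale)."""
    half_width = t_crit * math.sqrt(tau ** 2 + se ** 2)
    return mean - half_width, mean + half_width


# Values reported in the Results: 15 samples, Q = 366.24, tau = 1.32,
# pooled attrition 17% with 95% CI [9.14%, 30.13%].
k, q, tau = 15, 366.24, 1.32
z = NormalDist().inv_cdf(0.975)
lo_ci, hi_ci = logit(0.0914), logit(0.3013)
mean = (lo_ci + hi_ci) / 2      # CI assumed symmetric on the logit scale
se = (hi_ci - lo_ci) / (2 * z)
t_13 = 2.1604                   # t(0.975, df = k - 2 = 13), from a t table
pi_lo, pi_hi = prediction_interval(mean, se, tau, t_13)
print(f"I^2 = {i_squared(q, k - 1):.2f}%")
print(f"95% PI = [{inv_logit(pi_lo):.0%}, {inv_logit(pi_hi):.0%}]")
```

Both outputs (I² = 96.18%; PI [1%, 80%]) match the reported values, which suggests the assumptions above are consistent with the analysis, although the text does not confirm them directly.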

Sensitivity Analysis

To identify potential statistical outliers, a one-sample removed sensitivity analysis was conducted, whereby samples were removed one at a time and the meta-analysis re-run to determine the impact on the overall result (Borenstein et al., 2021). If the removal of a sample resulted in a meaningful change to the pooled effect estimate, its associated significance level, or estimates of between-study heterogeneity, the sample was classified as a potential outlier.
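
The one-sample-removed procedure amounts to a loop that re-pools the remaining k − 1 samples. The Python sketch below assumes logit-scale effects and within-sample variances are already computed, uses a DerSimonian-Laird pooling step (an assumption about the estimator), and takes hypothetical inputs. The text does not define a numeric threshold for a "meaningful change", so the sketch simply reports how far each omission shifts the pooled mean.

```python
import math


def dl_pool(ys, vs):
    """DerSimonian-Laird random-effects mean, its SE and tau^2 (logit scale)."""
    w = [1 / v for v in vs]
    sw = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sw
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, ys))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(ys) - 1)) / c)
    w_re = [1 / (v + tau2) for v in vs]
    mean = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    return mean, math.sqrt(1 / sum(w_re)), tau2


def leave_one_out(ys, vs):
    """Re-run the pooled analysis k times, omitting one sample each time.

    Returns (omitted index, pooled mean, SE, tau^2, shift from full-data mean).
    """
    ys, vs = list(ys), list(vs)
    if len(ys) < 3:
        raise ValueError("need at least three samples")
    full_mean, _, _ = dl_pool(ys, vs)
    rows = []
    for i in range(len(ys)):
        mean, se, tau2 = dl_pool(ys[:i] + ys[i + 1:], vs[:i] + vs[i + 1:])
        rows.append((i, mean, se, tau2, mean - full_mean))
    return rows


# Hypothetical logit-scale effects; the fourth sample is deliberately extreme.
effects = [-1.5, -1.6, -1.4, 1.0]
variances = [0.05, 0.05, 0.05, 0.05]
for i, mean, se, tau2, shift in leave_one_out(effects, variances):
    print(f"without sample {i}: mean {mean:.2f}, tau^2 {tau2:.2f}, shift {shift:+.2f}")
```

In this toy example, dropping the extreme fourth sample shifts the pooled logit mean the most and removes the between-sample variance entirely, the pattern that would flag a potential outlier.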

Moderator Analyses

Between-group differences in study design (i.e. RCT vs. single-arm trial), education level (i.e. secondary vs. tertiary), mHealth format (i.e. app- vs. message-based), and mHealth audience-targeted design (i.e. designed for young people vs. general population) were statistically tested using Cochran’s Q. These analyses relied on a mixed-effects model (i.e. random-effects model within subgroups and fixed-effect model across subgroups; Borenstein et al., 2021). Additionally, the potential moderating effect of mHealth duration (defined as intervention time, in weeks) was examined using a univariate random-effects meta-regression. Both Q model statistics, which show variability associated with the regression model, and Q residual statistics, which indicate variability unaccounted for by the model, were considered (Borenstein et al., 2021). There were sufficient samples to conduct these moderator analyses (Nsamples ≥ 10, Nsamples per subgroup ≥ 4; Deeks et al., 2021; Fu et al., 2011).
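
A univariate random-effects meta-regression can be sketched as weighted least squares with weights 1/(vᵢ + τ²). In the Python sketch below, τ² is passed in rather than estimated (a simplifying assumption; dedicated software estimates a residual τ² from the data), Q_model is the Wald chi-square for the slope, and Q_residual is computed with fixed-effect weights as a test of variability left unexplained. Function names and inputs are hypothetical.

```python
def weighted_line(ys, xs, weights):
    """Weighted least-squares intercept, slope and sum of w*(x - x_bar)^2."""
    sw = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, xs)) / sw
    y_bar = sum(w * y for w, y in zip(weights, ys)) / sw
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, xs))
    sxy = sum(w * (x - x_bar) * (y - y_bar) for w, x, y in zip(weights, xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope, sxx


def meta_regression(ys, vs, xs, tau2):
    """Univariate meta-regression of logit attrition on a moderator (e.g. weeks).

    Returns slope, Q_model (df = 1) and Q_residual (df = k - 2).
    """
    # Random-effects weights: slope and its Wald test (Q_model).
    w_re = [1 / (v + tau2) for v in vs]
    _, slope, sxx = weighted_line(ys, xs, w_re)
    q_model = slope ** 2 * sxx  # slope^2 / Var(slope), with Var(slope) = 1 / sxx
    # Fixed-effect weights: variability left unexplained by the moderator.
    w_fe = [1 / v for v in vs]
    b0, b1, _ = weighted_line(ys, xs, w_fe)
    q_residual = sum(w * (y - b0 - b1 * x) ** 2 for w, x, y in zip(w_fe, xs, ys))
    return slope, q_model, q_residual


# Hypothetical logit attrition, variances and intervention weeks for five samples.
print(meta_regression([-1.2, -2.0, -0.8, -1.5, -2.4],
                      [0.05, 0.10, 0.08, 0.04, 0.20],
                      [4, 6, 8, 2, 12], tau2=0.5))
```

A small Q_model alongside a large Q_residual, as reported for intervention duration, would indicate that the moderator explains little of the between-sample variance.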

Results

Study Selection

The literature search yielded 18,809 records, of which 13,551 remained once duplicates were automatically removed (see Fig. 1). A further 11,142 manually identified duplicates and off-topic records were excluded by title screening, leaving 2409 records. Abstracts were screened against the eligibility criteria, with 399 full-text records subsequently retrieved. Eleven reports met all inclusion criteria. During the screening process, two reports with overlapping samples were identified (Whittaker et al., 2012, 2017); the report containing the most recent data was considered the lead study for the purpose of this review. Additionally, two reports each provided data for two independent samples (Flett et al., 2020; O’Dea et al., 2020). A manual search of the reference lists of 17 reviews on mHealth for young people identified three additional reports (Kenny et al., 2015; Paul & Fleming, 2019; Pisani et al., 2018). The final sample therefore comprised 15 independent samples, sourced from 13 independent studies and interventions.

Fig. 1 Flow diagram of study selection process adapted from the Preferred Reporting Items for Systematic Reviews and Meta-Analyses 2020 (Page et al., 2021)

Study Characteristics

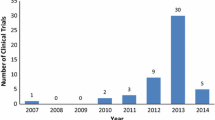

All studies were published between 2014 and 2021. These comprised six single-arm trials and seven RCTs that compared mHealth to waitlist (Nstudies = 3), assessment-only (Nstudies = 2), or placebo controls (e.g. same number of text messages, but without mental healthcare content; Nstudies = 2). Sample sizes varied considerably (range = 13–855, Mdn = 101), with most studies recruiting participants from multiple sites (Nstudies = 8) and primarily within an educational setting (Nstudies = 11). The largest study, involving 15 secondary schools, comprised 32% of the pooled sample (Whittaker et al., 2017).

Sample Characteristics

The pooled sample of 2671 young people had a mean age of 16.87 years (SD = 2.95, range = 10.90–20.06; Nstudies = 10; see Table 1) and was primarily characterised by female secondary students who identified as White. The only study to report employment status was also one of the few studies to recruit a community (rather than student) sample (Arps et al., 2018). Approximately 7% of participants self-reported a history of a mental disorder or a current subthreshold disorder (e.g. a score of ≥ 16 on the Centre for Epidemiologic Studies Depression Scale; Kageyama et al., 2021; Takahashi et al., 2019), although these data were not routinely reported (Nstudies = 6).

mHealth Characteristics

Smartphone or tablet apps were the most common format (Ninterventions = 9), followed by text or multimedia messages (Ninterventions = 4). Most interventions involved best-practice youth consultation or co-design through focus groups and/or pilot-testing (Ninterventions = 9). Content was also guided by evidence-based techniques and principles, typically cognitive behaviour therapy (Ninterventions = 4) and social cognitive theory (Ninterventions = 3). Therapeutic strategies included psychoeducation, social and emotional learning, self-monitoring of mood, meditation, and breathing exercises. Some interventions also included game-like elements to increase user engagement and enhance user experience (e.g. points or badges rewarded for task completion; Lim et al., 2019; Pisani et al., 2018).

Reporting Quality of Included Studies

The average QualSyst summary score of 0.92 (SD = 0.04, range = 0.85–1.00) suggests that included studies provided sufficient information regarding potential sources of methodological bias (see Fig. 2 and Online Resource 3). Sample selection methods were a key limitation, with most studies relying on self-selected participants, who may be more motivated to engage in mHealth (criterion 3, 46% fulfilled). The psychological nature of the interventions also made it difficult to blind investigators and participants (criteria 6 and 7, 29% and 25% fulfilled, respectively). Although statistical methods were detailed (criterion 10, 92% fulfilled), variance estimates (e.g. CIs, standard errors; criterion 11, 46% fulfilled) were not routinely reported.

Fig. 2 Percentage of studies meeting each criterion on the QualSyst tool (Kmet et al., 2004)

mHealth Attrition Rate

The pooled attrition rate across the 15 independent samples was 17% (95% CI [9.14, 30.13], p < 0.001; see Table 2). However, there was evidence of publication bias: the funnel plot was asymmetric, with the trim-and-fill method imputing four missing samples, and Egger’s regression test was significant (p = 0.003). With the imputed samples included in the analysis, the pooled attrition rate increased to 26% (95% CI [15.20, 41.03]).

Attrition rates varied considerably across samples (Q = 366.24, p < 0.001; τ = 1.32; I2 = 96.18%; 95% PI [1%, 80%]). The highest and lowest rates were both reported by multi-site RCTs: 66% of secondary students did not complete their evaluation of the CopeSmart app (Kenny et al., 2020), whereas just 2% of students withdrew from a trial assessing a multimedia message program targeting depression (MEMO-CBT; Whittaker et al., 2017). Large but imprecise effects (i.e. wide CIs) were associated with the three smallest samples (Kageyama et al., 2021; Lim et al., 2019; Paul & Fleming, 2019). Although Flett et al. (2020, sample B) and Kenny et al. (2015) reported attrition rates as high as 57% for their respective app interventions, these values were not significantly different from the population rate (p > 0.05).

Sensitivity Analysis

No statistical outliers were identified: the removal of any one sample did not cause a meaningful change to the pooled attrition rate, its associated significance level, or estimates of between-study heterogeneity.

Moderator Analyses

Study design, education level, and mHealth audience-targeted design were not significant moderators, with attrition estimates remaining similar across these subgroups (see Table 3). Intervention duration was also unable to explain any of the between-sample variation (Qmodel (1) = 0.22, p = 0.643; Qresidual (13) = 318.27, p < 0.001; R2 = 0.00%). However, mHealth format did influence attrition in a significant way: apps were associated with higher attrition than message-based interventions (QB (1) = 5.93, p = 0.015).
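
The between-subgroup test for mHealth format can be approximately reproduced from the subgroup estimates given in the Abstract (apps 26%, 95% CI [14.56, 41.86]; messages 6%, 95% CI [1.88, 16.98]). The Python sketch below back-calculates each subgroup's logit-scale mean and SE from its CI, assuming symmetric 1.96 SE intervals on that scale, and then applies a fixed-effect Q across the two subgroup means, as the mixed-effects approach in the Methods describes. The p-value uses the one-degree-of-freedom identity P(χ²₁ > Q) = erfc(√(Q/2)), so the sketch is limited to two subgroups; function names are illustrative.

```python
import math
from statistics import NormalDist


def logit(p):
    return math.log(p / (1 - p))


def subgroup_from_ci(lower, upper, level=0.95):
    """Logit-scale mean and SE implied by a CI for a proportion (assumed symmetric on logit scale)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    lo, hi = logit(lower), logit(upper)
    return (lo + hi) / 2, (hi - lo) / (2 * z)


def q_between_two(groups):
    """Fixed-effect Q across two subgroup means, with its chi-square (df = 1) p-value."""
    if len(groups) != 2:
        raise ValueError("the erfc p-value shortcut only holds for df = 1")
    weights = [1 / se ** 2 for _, se in groups]
    grand = sum(w * m for w, (m, _) in zip(weights, groups)) / sum(weights)
    q = sum(w * (m - grand) ** 2 for w, (m, _) in zip(weights, groups))
    return q, math.erfc(math.sqrt(q / 2))


apps = subgroup_from_ci(0.1456, 0.4186)
messages = subgroup_from_ci(0.0188, 0.1698)
q, p = q_between_two([apps, messages])
print(f"Q_B(1) = {q:.2f}, p = {p:.3f}")
```

The result (Q_B(1) = 5.93, p = 0.015) agrees with the reported test to the stated precision, although the match relies on rounded CIs and on the assumptions above.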

Discussion

The present meta-analysis provides the first weighted average attrition rate and evaluation of potential attrition moderators in psychological mHealth trials with young people. The pooled results, based on 15 samples from 13 studies of excellent to good methodological quality, revealed that approximately one in six young people drop out of an mHealth trial. This rate increased to 26% when adjusting for publication bias. Researchers can use the pooled rate as an estimate of expected attrition for a priori power analyses in future trials and also as a benchmark against which the attrition rates of new trials can be compared (Linardon & Fuller-Tyszkiewicz, 2020; Torous et al., 2020).

App-based interventions were associated with significantly higher attrition than text or multimedia message interventions, suggesting a need to further explore strategies to promote uptake and sustained engagement with mHealth. Notably, the observed attrition rate of 17% in this review was substantially lower than those associated with face-to-face mental healthcare for young people, where rates as high as 60% have been reported (de Haan et al., 2013), and dropout is more common still among ethnic minority groups (de Haan et al., 2018). It follows that mHealth offers promise as an initial gateway for mental healthcare delivery to young people. Clinicians and policymakers can cautiously interpret the pooled attrition rate as an indication of expected mHealth non-use. Along with other considerations like mHealth efficacy and available resources, this can assist in guiding clinical treatment plans and healthcare system planning (Linardon & Fuller-Tyszkiewicz, 2020; Torous et al., 2020).

It is perhaps not surprising that study design did not significantly impact attrition rates, given that most studies comprised self-selected participants with no historical or current mental disorder diagnosis, characteristics that may increase the likelihood of participants remaining engaged (Renfrew et al., 2020). Moreover, the mHealth trials examined were often conducted within an educational setting, a supportive environment that encourages participation (Lehtimaki et al., 2021). Although mHealth audience-targeted design did not influence attrition rates in this review, this analysis may have been confounded by participants’ age: the non-targeted interventions explored in this review all involved participants aged ≥ 18 or tertiary students. The non-significant result should, therefore, not be interpreted by mHealth developers as evidence that targeting future interventions to young people is unnecessary. User consultation and co-design both remain best practice (Thabrew et al., 2018).

The lower attrition rate associated with text or multimedia message interventions suggests that messages are a promising mHealth format in terms of expected use. This finding is particularly encouraging for the implementation of mHealth within low- and middle-income countries and underserved populations (e.g. rural, financially disadvantaged) in high-income countries, where message-based interventions may be the only viable mHealth option (Willcox et al., 2019). Indeed, few of these young people have access to a smartphone, because smartphones are often less available and affordable than ‘basic’ mobile phones, which renders apps inaccessible (Rodriguez-Villa et al., 2020). Apps can also consume large amounts of mobile data, which can be expensive and/or unavailable in mobile data ‘blackspots’ (Mayberry & Jaser, 2018). This finding highlights a need to continue investigating the efficacy and usage rates of message-based interventions, as well as the potential to incorporate these interventions into clinical practice and public policy responses to promote engagement in mental healthcare services (Linardon & Fuller-Tyszkiewicz, 2020).

Methodological Limitations and Future Research

Several methodological limitations were encountered in the present review. First, despite a comprehensive literature search, eligible studies may have been overlooked because they were inappropriately indexed, rarely cited, excluded, or not published (Deeks et al., 2021). Second, the small number of eligible samples (particularly within each subgroup) and low number of participants in some samples potentially reduced the precision and generalisability of the results, as well as the statistical power of the moderator analyses (Borenstein, 2019; Pigott, 2012). Third, the pooled sample was not representative of the broader population of young people. Participants were primarily White female students from educated, industrialised, rich, and democratic societies—demographic characteristics that may impact attrition (Renfrew et al., 2020). Future research should aim to recruit culturally and linguistically diverse participants from community, clinical, and educational settings, and focus on expansion into economically marginalised countries. Doing so may allow comprehensive investigation of differential intervention effectiveness across ethnic groups and provide insights into how to culturally adapt interventions (Willis et al., 2022). Fourth, included studies did not consistently report data relating to trial procedure, participant demographics, and mHealth interventions, preventing examination of additional potential moderators, such as participant compensation, socioeconomic status, baseline mental health, help-seeking attitudes, and mHealth usage reminders (Eysenbach, 2005; Renfrew et al., 2020). Similarly, included studies did not consistently report non-usage attrition. Although dropout attrition is often used synonymously with non-use, they are different processes: within trials, low dropout can occur in conjunction with high non-use and vice versa (Eysenbach, 2005; Torous et al., 2020). 
Future mHealth trials should endeavour to standardise the measurement of non-usage to enable synthesis (Ng et al., 2019). Finally, our reliance on current education level as a proxy for young people’s developmental stage could be problematic given that education may also be an indicator of socioeconomic status (Galobardes et al., 2007). Education, in turn, may influence mHealth engagement through other factors, such as digital health literacy and the ‘digital divide’ (Maenhout et al., 2022). Future mHealth trials should, ideally, provide comprehensive details about their participants, procedure, and intervention (Dumville et al., 2006), consistent with available reporting guidelines (Eysenbach & CONSORT-EHEALTH Group, 2011). This additional contextual information is important to the development of holistic theories of mHealth attrition (Eysenbach, 2005).

In sum, the present review ascertained the rate of attrition in psychological mHealth trials with young people. Message-based interventions appeared to be more successful than apps in retaining participants, although this research remains preliminary. Before effective strategies and factors to minimise attrition and promote participant engagement in the longer term can be identified, the nascent field of mHealth for young people must first mature.

Data, Materials, or Code Availability

Not applicable.

References

* References marked with an asterisk indicate studies included in the meta-analysis

*Arps, E. R., Friesen, M. D., & Overall, N. C. (2018). Promoting youth mental health via text-messages: A New Zealand feasibility study. Applied Psychology: Health and Well-Being, 10(3), 457–480.

Balshem, H., Stevens, A., Ansari, M., Norris, S., Kansagara, D., Shamliyan, T., Chou, R., Chung, M., Moher, D., & Dickersin, K. (2013, November). Finding grey literature evidence and assessing for outcome and analysis reporting biases when comparing medical interventions: AHRQ and the Effective Health Care Program (Methods Guide for Effectiveness and Comparative Effectiveness Reviews, AHRQ Publication No. 13(14)-EHC096-EF). Agency for Healthcare Research and Quality. https://effectivehealthcare.ahrq.gov/products/methods-guidance-reporting-bias/methods

Block, A. M., & Greeno, C. G. (2011). Examining outpatient treatment dropout in adolescents: A literature review. Child and Adolescent Social Work Journal, 28, 393–420.

Borenstein, M. (2019). Common mistakes in meta-analysis and how to avoid them. Biostat, Inc.

Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2010). A basic introduction to fixed-effect and random-effects models for meta-analysis. Research Synthesis Methods, 1(2), 97–111.

Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2013). Comprehensive meta-analysis (Version 3) [Computer software]. Biostat, Inc. https://www.meta-analysis.com/

Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2021). Introduction to meta-analysis (2nd ed.). John Wiley & Sons Ltd.

Borenstein, M., Higgins, J. P. T., Hedges, L. V., & Rothstein, H. R. (2017). Basics of meta-analysis: I2 is not an absolute measure of heterogeneity. Research Synthesis Methods, 8, 5–18.

Borghouts, J., Eikey, E., Mark, G., De Leon, C., Schueller, S. M., Schneider, M., Stadnick, N., Zheng, K., Mukamel, D., & Sorkin, D. H. (2021). Barriers to and facilitators of user engagement with digital mental health interventions: Systematic review. Journal of Medical Internet Research, 23(3), Article e24387.

Buttazzoni, A., Brar, K., & Minaker, L. (2021). Smartphone-based interventions and internalizing disorders in youth: Systematic review and meta-analysis. Journal of Medical Internet Research, 23(1), Article e16490.

Cavaiola, A. A., & Kane-Cavaiola, C. (1989). Basics of adolescent development for the chemical dependency professional. Journal of Chemical Dependency Treatment, 2(1), 11–24.

*Chandra, P. S., Sowmya, H. R., Mehrotra, S., & Duggal, M. (2014). ‘SMS’ for mental health – Feasibility and acceptability of using text messages for mental health promotion among young women from urban low income settings in India. Asian Journal of Psychiatry, 11, 59–64.

Christensen, H., Griffiths, K. M., & Evans, K. (2002, May). e-Mental health in Australia: Implications of the internet and related technologies for policy (Information Strategy Committee Discussion Paper Number 3). Commonwealth Department of Health and Ageing. https://trove.nla.gov.au/work/23086732

Deeks, J. J., Higgins, J. P. T., & Altman, D. G. (2021). Chapter 10: Analysing data and undertaking meta-analyses. In J. P. T. Higgins, J. Thomas, J. Chandler, M. Cumpston, T. Li, M. J. Page, & V. A. Welch (Eds.), Cochrane handbook for systematic reviews of interventions (version 6.2). Cochrane. https://training.cochrane.org/handbook/current/chapter-10

de Haan, A. M., Boon, A. E., de Jong, J. T. V. M., Hoeve, M., & Vermeiren, R. R. J. M. (2013). A meta-analytic review on treatment dropout in child and adolescent outpatient mental health care. Clinical Psychology Review, 33(5), 698–711.

de Haan, A. M., Boon, A. E., de Jong, J. T. V. M., & Vermeiren, R. R. J. M. (2018). A review of mental health treatment dropout by ethnic minority youth. Transcultural Psychiatry, 55(1), 3–30.

Domhardt, M., Steubl, L., & Baumeister, H. (2020). Internet- and mobile-based interventions for mental and somatic conditions in children and adolescents: A systematic review of meta-analyses. Zeitschrift für Kinder- und Jugendpsychiatrie und Psychotherapie, 48(1), 33–46.

Dumville, J. C., Torgerson, D. J., & Hewitt, C. E. (2006). Reporting attrition in randomised controlled trials. British Medical Journal (Clinical Research Edition), 332(7547), 969–971.

Duval, S., & Tweedie, R. (2000). Trim and fill: A simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics, 56(2), 455–463.

Edidin, J. P., Ganim, Z., Hunter, S. J., & Karnik, N. S. (2012). The mental and physical health of homeless youth: A literature review. Child Psychiatry and Human Development, 43(3), 354–375.

*Edridge, C., Wolpert, M., Deighton, J., & Edbrooke-Childs, J. (2020). An mHealth intervention (ReZone) to help young people self-manage overwhelming feelings: Cluster-randomized controlled trial. Journal of Medical Internet Research, 22(7), Article e14223.

Egger, M., Davey Smith, G., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315(7109), 629–634.

Eysenbach, G. (2005). The law of attrition. Journal of Medical Internet Research, 7(1), Article e11.

Eysenbach, G., & CONSORT-EHEALTH Group. (2011). CONSORT-EHEALTH: Improving and standardizing evaluation reports of web-based and mobile health interventions. Journal of Medical Internet Research, 13(4), Article e126.

*Flett, J. A. M., Conner, T. S., Riordan, B. C., Patterson, T., & Hayne, H. (2020). App-based mindfulness meditation for psychological distress and adjustment to college in incoming university students: A pragmatic, randomised, waitlist-controlled trial. Psychology & Health, 35(9), 1049–1074.

Fu, R., Gartlehner, G., Grant, M., Shamliyan, T., Sedrakyan, A., Wilt, T. J., Griffith, L., Oremus, M., Raina, P., Ismaila, A., Santaguida, P., Lau, J., & Trikalinos, T. A. (2011). Conducting quantitative synthesis when comparing medical interventions: AHRQ and the Effective Health Care Program. Journal of Clinical Epidemiology, 64(11), 1187–1197.

Galobardes, B., Lynch, J., & Davey Smith, G. (2007). Measuring socioeconomic position in health research. British Medical Bulletin, 81–82(1), 21–37.

Garrido, S., Millington, C., Cheers, D., Boydell, K., Schubert, E., Meade, T., & Nguyen, Q. V. (2019). What works and what doesn’t work? A systematic review of digital mental health interventions for depression and anxiety in young people. Frontiers in Psychiatry, 10, Article 759.

Gindidis, S., Stewart, S., & Roodenburg, J. (2019). A systematic scoping review of adolescent mental health treatment using mobile apps. Advances in Mental Health, 17(2), 161–177.

Gore, F. M., Bloem, P. J., Patton, G. C., Ferguson, J., Joseph, V., Coffey, C., Sawyer, S. M., & Mathers, C. D. (2011). Global burden of disease in young people aged 10–24 years: A systematic analysis. The Lancet, 377(9783), 2093–2102.

Handley, M. A., Lyles, C. R., McCulloch, C., & Cattamanchi, A. (2018). Selecting and improving quasi-experimental designs in effectiveness and implementation research. Annual Review of Public Health, 39(1), 5–25.

Harpaz-Rotem, I., Leslie, D., & Rosenheck, R. A. (2004). Treatment retention among children entering a new episode of mental health care. Psychiatric Services, 55(9), 1022–1028.

Hedges, L. V., & Olkin, I. (2014). Statistical methods for meta-analysis. Academic Press.

Hollis, C., Falconer, C. J., Martin, J. L., Whittington, C., Stockton, S., Glazebrook, C., & Davies, E. B. (2017). Annual research review: Digital health interventions for children and young people with mental health problems – A systematic and meta-review. Journal of Child Psychology and Psychiatry, 58(4), 474–503.

Istepanian, R., Laxminarayan, S., & Pattichis, C. S. (2006). M-Health: Emerging mobile health systems. Springer Science+Business Media, Inc.

*Kageyama, K., Kato, Y., Mesaki, T., Uchida, H., Takahashi, K., Marume, R., Sejima, Y., & Hirao, K. (2021). Effects of video viewing smartphone application intervention involving positive word stimulation in people with subthreshold depression: A pilot randomized controlled trial. Journal of Affective Disorders, 282, 74–81.

*Kenny, R., Dooley, B., & Fitzgerald, A. (2015). Feasibility of “CopeSmart”: A telemental health app for adolescents. JMIR Mental Health, 2(3), Article e22.

*Kenny, R., Fitzgerald, A., Segurado, R., & Dooley, B. (2020). Is there an app for that? A cluster randomised controlled trial of a mobile app–based mental health intervention. Health Informatics Journal, 26(3), 1538–1559.

Kessler, R. C., Berglund, P., Demler, O., Jin, R., Merikangas, K. R., & Walters, E. E. (2005). Lifetime prevalence and age-of-onset distributions of DSM-IV disorders in the National Comorbidity Survey Replication. Archives of General Psychiatry, 62, 593–602.

Kmet, L. M., Lee, R. C., & Cook, L. S. (2004). Standard quality assessment criteria for evaluating primary research papers from a variety of fields (Health Technology Assessment Initiative No. 13). Alberta Heritage Foundation for Medical Research. https://era.library.ualberta.ca/items/48b9b989-c221-4df6-9e35-af782082280e

Leech, T., Dorstyn, D., Taylor, A., & Li, W. (2021). Mental health apps for adolescents and young adults: A systematic review of randomised controlled trials. Children and Youth Services Review, 127, Article 106073.

Lehtimaki, S., Martic, J., Wahl, B., Foster, K. T., & Schwalbe, N. (2021). Evidence on digital mental health interventions for adolescents and young people: Systematic overview. JMIR Mental Health, 8(4), Article e25847.

*Lim, M. H., Rodebaugh, T. L., Eres, R., Long, K. M., Penn, D. L., & Gleeson, J. F. M. (2019). A pilot digital intervention targeting loneliness in youth mental health. Frontiers in Psychiatry, 10, Article 604.

Linardon, J., & Fuller-Tyszkiewicz, M. (2020). Attrition and adherence in smartphone-delivered interventions for mental health problems: A systematic and meta-analytic review. Journal of Consulting and Clinical Psychology, 88(1), 1–13.

Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. SAGE Publications, Inc.

Liverpool, S., Mota, C. P., Sales, C. M. D., Čuš, A., Carletto, S., Hancheva, C., Sousa, S., Cerón, S. C., Moreno-Peral, P., Pietrabissa, G., Moltrecht, B., Ulberg, R., Ferreira, N., & Edbrooke-Childs, J. (2020). Engaging children and young people in digital mental health interventions: Systematic review of modes of delivery, facilitators, and barriers. Journal of Medical Internet Research, 22(6), Article e16317.

Maenhout, L., Peuters, C., Cardon, G., Crombez, G., DeSmet, A., & Compernolle, S. (2022). Nonusage attrition of adolescents in an mHealth promotion intervention and the role of socioeconomic status: Secondary analysis of a 2-arm cluster-controlled trial. JMIR mHealth and uHealth, 10(5), Article e36404.

Marcellus, L. (2004). Are we missing anything? Pursuing research on attrition. Canadian Journal of Nursing Research, 36(3), 82–98.

Mayberry, L. S., & Jaser, S. S. (2018). Should there be an app for that? The case for text messaging in mHealth interventions. Journal of Internal Medicine, 283(2), 212–213.

Moran, P., Coffey, C., Romaniuk, H., Olsson, C., Borschmann, R., Carlin, J. B., & Patton, G. C. (2012). The natural history of self-harm from adolescence to young adulthood: A population-based cohort study. The Lancet, 379(9812), 236–243.

Myers, K., Cummings, J. R., Zima, B., Oberleitner, R., Roth, D., Merry, S. M., Bohr, Y., & Stasiak, K. (2018). Advances in asynchronous telehealth technologies to improve access and quality of mental health care for children and adolescents. Journal of Technology in Behavioral Science, 3(2), 87–106.

Neyeloff, J. L., Fuchs, S. C., & Moreira, L. B. (2012). Meta-analyses and forest plots using a Microsoft Excel spreadsheet: Step-by-step guide focusing on descriptive data analysis. BMC Research Notes, 5, Article 52.

Ng, M. M., Firth, J., Minen, M., & Torous, J. (2019). User engagement in mental health apps: A review of measurement, reporting, and validity. Psychiatric Services, 70(7), 538–544.

*O’Dea, B., Han, J., Batterham, P. J., Achilles, M. R., Calear, A. L., Werner-Seidler, A., Parker, B., Shand, F., & Christensen, H. (2020). A randomised controlled trial of a relationship-focussed mobile phone application for improving adolescents’ mental health. Journal of Child Psychology and Psychiatry, 61(8), 899–913.

Otto, C., Reiss, F., Voss, C., Wüstner, A., Meyrose, A. K., Hölling, H., & Ravens-Sieberer, U. (2021). Mental health and well-being from childhood to adulthood: Design, methods and results of the 11-year follow-up of the BELLA study. European Child & Adolescent Psychiatry, 30, 1559–1577.

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ, 372, Article n71.

*Paul, A. M., & Fleming, C. J. E. (2019). Anxiety management on campus: An evaluation of a mobile health intervention. Journal of Technology in Behavioral Science, 4(1), 58–61.

Pelkonen, M., Marttunen, M., Laippala, P., & Lonnqvist, J. (2000). Factors associated with early dropout from adolescent psychiatric outpatient treatment. Journal of the American Academy of Child and Adolescent Psychiatry, 39(3), 329–336.

Pigott, T. D. (2012). Advances in meta-analysis. Springer.

*Pisani, A. R., Wyman, P. A., Gurditta, K., Schmeelk-Cone, K., Anderson, C. L., & Judd, E. (2018). Mobile phone intervention to reduce youth suicide in rural communities: Field test. JMIR Mental Health, 5(2), Article e10425.

Price, M., Yuen, E. K., Goetter, E. M., Herbert, J. D., Forman, E. M., Acierno, R., & Ruggiero, K. J. (2014). mHealth: A mechanism to deliver more accessible, more effective mental health care. Clinical Psychology and Psychotherapy, 21(5), 427–436.

Radez, J., Reardon, T., Creswell, C., Lawrence, P. J., Evdoka-Burton, G., & Waite, P. (2021). Why do children and adolescents (not) seek and access professional help for their mental health problems? A systematic review of quantitative and qualitative studies. European Child & Adolescent Psychiatry, 30(2), 183–211.

Renfrew, M. E., Morton, D. P., Morton, J. K., Hinze, J. S., Przybylko, G., & Craig, B. A. (2020). The influence of three modes of human support on attrition and adherence to a web- and mobile app-based mental health promotion intervention in a nonclinical cohort: Randomized comparative study. Journal of Medical Internet Research, 22(9), Article e19945.

Riley, R. D., Higgins, J. P. T., & Deeks, J. J. (2011). Interpretation of random effects meta-analyses. BMJ, 342, Article d549.

Rodriguez-Villa, E., Naslund, J., Keshavan, M., Patel, V., & Torous, J. (2020). Making mental health more accessible in light of COVID-19: Scalable digital health with digital navigators in low and middle-income countries. Asian Journal of Psychiatry, 54, Article 102433.

Sterne, J. A. C., Sutton, A. J., Ioannidis, J. P. A., Terrin, N., Jones, D. R., Lau, J., Carpenter, J., Rücker, G., Harbord, R. M., Schmid, C. H., Tetzlaff, J., Deeks, J. J., Peters, J., Macaskill, P., Schwarzer, G., Duval, S., Altman, D. G., Moher, D., & Higgins, J. P. T. (2011). Recommendations for examining and interpreting funnel plot asymmetry in meta-analyses of randomised controlled trials. BMJ, 343(7818), Article d4002.

*Takahashi, K., Takada, K., & Hirao, K. (2019). Feasibility and preliminary efficacy of a smartphone application intervention for subthreshold depression. Early Intervention in Psychiatry, 13(1), 133–136.

Thabrew, H., Fleming, T., Hetrick, S., & Merry, S. (2018). Co-design of eHealth interventions with children and young people. Frontiers in Psychiatry, 9, Article 481.

Torous, J., Lipschitz, J., Ng, M., & Firth, J. (2020). Dropout rates in clinical trials of smartphone apps for depressive symptoms: A systematic review and meta-analysis. Journal of Affective Disorders, 263, 413–419.

Veritas Health Innovation. (2021). Covidence systematic review software [Computer software]. https://www.covidence.org

Viera, A. J., & Garrett, J. M. (2005). Understanding interobserver agreement: The kappa statistic. Family Medicine, 37(5), 360–363.

*Whittaker, R., Merry, S., Stasiak, K., McDowell, H., Doherty, I., Shepherd, M., Dorey, E., Parag, V., Ameratunga, S., & Rodgers, A. (2012). MEMO–A mobile phone depression prevention intervention for adolescents: Development process and postprogram findings on acceptability from a randomized controlled trial. Journal of Medical Internet Research, 14(1), Article e13.

*Whittaker, R., Stasiak, K., McDowell, H., Doherty, I., Shepherd, M., Chua, S., Dorey, E., Parag, V., Ameratunga, S., Rodgers, A., & Merry, S. (2017). MEMO: An mHealth intervention to prevent the onset of depression in adolescents: A double-blind, randomised, placebo-controlled trial. Journal of Child Psychology and Psychiatry, 58(9), 1014–1022.

Willcox, J. C., Dobson, R., & Whittaker, R. (2019). Old-fashioned technology in the era of “bling”: Is there a future for text messaging in health care? Journal of Medical Internet Research, 21(12), Article e16630.

Willis, H. A., Gonzalez, J. C., Call, C. C., Quezada, D., Scholars for Elevating Equity and Diversity (SEED), & Galán, C. A. (2022). Culturally responsive telepsychology & mHealth interventions for racial-ethnic minoritized youth: Research gaps and future directions. Journal of Clinical Child & Adolescent Psychology, 51(6), 1053–1069.

World Health Organization. (2011). mHealth: New horizons for health through mobile technologies: Second Global Survey on eHealth (Global Observatory for eHealth Series, Volume 3). https://apps.who.int/iris/bitstream/handle/10665/44607/9789241564250_eng.pdf

World Health Organization. (2014). Health for the world’s adolescents: A second chance in the second decade. https://apps.who.int/adolescent/second-decade/section2/page1/recognizing-adolescence.html

Yeo, M., & Sawyer, S. (2005). Chronic illness and disability. BMJ, 330(7493), 721–723.

Acknowledgements

The authors would like to thank Vikki Langton, Research Librarian, for guidance with the logic grids. Thanks are also extended to the researchers who kindly responded to requests for additional information about their studies.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Contributions

E.P.: data curation, methodology, formal analysis, writing—original draft, visualisation; D.D.: conceptualisation, methodology, supervision, writing—review and editing; A.T.: methodology, supervision, writing—review and editing; A.R.: data curation, validation, writing—review and editing.

Ethics declarations

Ethics Approval and Consent to Participate

Not applicable.

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Prior, E., Dorstyn, D., Taylor, A. et al. Attrition in Psychological mHealth Interventions for Young People: A Meta-Analysis. J. technol. behav. sci. (2023). https://doi.org/10.1007/s41347-023-00362-x

DOI: https://doi.org/10.1007/s41347-023-00362-x