Abstract

Objectives

Virtual reality (VR) technology can simulate real-life environments and has been used to facilitate behavioral interventions for people with autism. This systematic review aims to evaluate the role of VR technology in behavioral interventions designed to increase behaviors that support more independent functioning (e.g., teaching vocational skills, adaptive behavior) or decrease challenging behaviors that interfere with daily functioning for individuals with autism.

Methods

We conducted a systematic search of four databases, followed by a reference search of the articles identified in the database search. We also conducted a quality review using an evaluative method for determining evidence-based practices in autism.

Results

We identified 23 studies: the majority (n = 18; 78.26%) used group experimental or quasi-experimental research designs, and the remaining studies (n = 5; 21.74%) used single-case research designs. Targeted behaviors included vocational skills (n = 10), safety skills (n = 4), functional behaviors (n = 2), and challenging behavior (n = 7). Of the 23 studies, 11 met the quality criteria to be classified as “strong” or “adequate” and can offer evidence on the integration of VR technology into behavioral interventions.

Conclusions

The use of VR to deliver behavioral interventions that teach driving skills and interview skills can be considered an evidence-based practice.

Recent numbers from the Centers for Disease Control and Prevention (CDC) indicate that 1 in 44 children have autism spectrum disorder (ASD; Maenner et al., 2021). ASD is characterized by a range of strengths (e.g., attention to detail) and needs (e.g., social communication skills). The needs of those with ASD can best be supported through interventions, particularly those considered to be evidence-based practices. Evidence-based practices are interventions and teaching methods that are substantially supported through research to produce positive outcomes (Hume et al., 2021). One evidence-based practice aimed at improving socially valid behavioral concerns is applied behavior analysis (ABA). ABA is a branch of the science of behavior analysis which relies on assessment to inform individualized interventions designed to reduce or strengthen human behavior (Cooper et al., 2020). There is a strong body of evidence to demonstrate its use in supporting individuals with ASD (Leaf et al., 2020; Tiura et al., 2017). Evidence-based practices for individuals with ASD that are aligned with the principles of ABA include discrete trial training, functional communication training, prompting, and self-monitoring, to name a few (Hume et al., 2021; Ivy & Schreck, 2016). However, as our society shifts to a more technological world, there has been an increase in the use of technology-based interventions to support individuals with ASD (Thai & Nathan-Roberts, 2018).

Technology-aided instruction and intervention (TAII) is an evidence-based practice for individuals with ASD in which technology is the primary component of the intervention (Hume et al., 2021). TAII can involve more common technology, such as computers or mobile device applications, as well as more advanced technology, such as virtual reality (VR), augmented reality (AR), mixed reality (MR), or robots. For example, Rosenbloom et al. (2016) investigated the effectiveness of a commercially developed mobile device application, I-Connect (Wills & Mason, 2014), for self-monitoring in a general education classroom. The results indicated strong outcomes for increasing on-task behavior and reducing disruptive behaviors, and both the participant and the teacher rated the intervention as socially valid. TAII has been shown to be effective at supporting a variety of needs (e.g., communication, academics, adaptive skills) for individuals with ASD from infancy to adulthood (Hume et al., 2021). Advanced technology, such as VR, has the potential to support TAII and has become more accessible over the last decade.

VR is an interactive, three-dimensional, computer-generated visual experience that can replicate real-life settings (Clay et al., 2021; Lorenzo et al., 2016). It allows for varying levels of immersion: non-immersive (e.g., desktop display), semi-immersive (e.g., large screen display), and immersive (e.g., head-mounted display; Di Natale et al., 2020). Several advantages of VR interventions for individuals with ASD align with the principles and dimensions of behavior analysis. A clear benefit is its ability to emulate a real-world setting (i.e., environmental arrangement) and offer experiences that cannot otherwise be captured through typical teaching methods such as text (i.e., written instructions) or videos (Bailenson et al., 2008). VR allows for repeated practice of skills that may be difficult, or dangerous, to practice in real life (e.g., safety skills; Karami et al., 2021). This may be particularly advantageous for individuals with ASD, as it may reduce the stress associated with learning adaptive and functional skills through more traditional means (Didehbani et al., 2016). VR also allows for individualization, as the implementer can adapt the user’s experience (e.g., the appearance of the VR environment, the complexity of the task) to meet the user’s specific needs (Bailenson et al., 2008). Real-time feedback allows specific contingencies and reinforcement schedules to be programmed to facilitate learning (Clay et al., 2021; Karami et al., 2021). Because VR can mimic the real world, it likely supports generalization; although in-vivo teaching can also achieve this, VR enables possibilities beyond face-to-face teaching. VR also enables the tracking of a user’s movements (Bailenson et al., 2008), which provides valuable information about how the user responds to, interprets, and interacts with the environment (Lorenzo et al., 2016) and supports data-based decision-making. Additionally, individuals with ASD have shown a strong preference for technology (Valencia et al., 2019), which may contribute to the social validity of this type of intervention.

Despite an increase in research on VR and ASD, there are a limited number of systematic literature reviews to inform research and practice. For example, Barton et al. (2017) focused broadly on technology-based interventions, which included VR. However, their review did not capture the extant VR literature, given that VR was not its focus and search terms specific to VR were not used. To date, two existing reviews specific to the use of VR as an intervention for people with ASD have been identified. Both Mesa-Gresa et al. (2018) and Lorenzo et al. (2019) analyzed this topic, with Lorenzo et al. (2019) focusing specifically on immersive VR. Together, these reviews analyzed 43 published articles from 1990 to 2018. While these reviews give researchers and practitioners an understanding of how VR has been used, neither assessed the rigor of the research using a quality evaluative method (e.g., the Council for Exceptional Children Standards or Reichow et al., 2008). This is an important consideration, as synthesizing the literature and evaluating the effectiveness of interventions without considering the quality of the research (e.g., threats to internal validity) is less helpful in informing practice (Kratochwill et al., 2013).

Additionally, practitioners use systematic reviews to inform decisions about target behaviors and intervention options; therefore, more focused reviews may make the research easier for them to access. To the authors’ knowledge, four focused reviews currently exist on VR for people with ASD. Two of these reviews focused on social communication skills (Irish, 2013; Vasquez et al., 2015), covering a range of such skills (e.g., social interactions, social conventions), and two focused more broadly on VR interventions to teach social skills, such as joint attention, pretend play, and social interactions (Dechsling et al., 2021; Parsons & Mitchell, 2002). A focus on these areas is not surprising given that the diagnostic criteria for ASD include deficits in social communication. However, individuals with ASD often have difficulty acquiring skills that facilitate independence (e.g., adaptive behavior and vocational skills). One of the primary benefits of VR is the ability to design an accessible and safe practice space to develop skills that promote independence and autonomy (Irish, 2013; Parsons & Mitchell, 2002). Thus, the recent increase in published research using VR-based interventions to teach functional and adaptive behaviors is promising (Didehbani et al., 2016; Karami et al., 2021).

Lastly, although some reviews of the VR literature have been published, most are limited in that they did not use systematic search procedures or did not assess the methodological rigor (e.g., Reichow et al., 2008) of the included studies. Without a measure of methodological rigor, the certainty of the evidence is limited and the review’s conclusions are harder to interpret. While there is a need to address communication deficits for those with ASD, it is also imperative to teach adaptive and functional skills to promote independence and autonomy.

There is potential for VR to give people with ASD meaningful opportunities to learn adaptive and functional skills and generalize them to everyday life. The current review aims to (1) evaluate the existing body of literature on behavioral interventions delivered using VR to increase behaviors that support more independent functioning (e.g., teaching vocational skills, adaptive behavior) or decrease challenging behaviors that interfere with daily functioning for individuals with ASD and (2) assess the methodological rigor of this literature to inform future research and practice on the use of VR to target adaptive and functional skills.

Method

Search Procedures

The researchers completed a systematic search of the following databases: PsycINFO, Medline, Psychology and Behavioral Sciences Collection, and ERIC. These searches combined a term describing ASD (i.e., “Autis*,” “Developmental disab*,” “Asperger,” “ASD”) with a term describing VR (“virtual reality”) and a term describing intervention (“intervention,” “treatment”). The original database search was conducted in September 2021 and yielded 191 articles after the removal of duplicates (see Fig. 1 for a graphic of the search results). The third author then screened each article by title and abstract and excluded articles that did not address both VR and ASD (n = 129). An additional search using Google Scholar was then conducted to identify any additional articles. After excluding duplicates, 34 articles were added to the article list for application of the inclusion criteria. In total, the researchers reviewed the full text of 67 articles using the inclusion and exclusion criteria, which resulted in 51 articles being excluded and 16 articles being included in the review.

Following the database searches, ancestral and forward searches of the included articles were conducted. The ancestral searches consisted of reviewing the reference lists of the included articles and extracting relevant studies whose titles contained any of the search terms defined above. Forward searches were conducted via Google Scholar using the “Cited by” function for each included article; relevant citing articles were then reviewed for possible inclusion. All articles extracted from the ancestral and forward searches were entered into a Microsoft Excel™ spreadsheet and then reviewed for inclusion. Seven additional articles were retrieved from the ancestral and forward searches, bringing the final number of included articles to 23.

Inclusion and Exclusion Criteria

To be included in this review, articles had to meet the following criteria: (a) be peer-reviewed and published in English, (b) include at least one participant with ASD, (c) implement a behavioral intervention designed to facilitate independence by increasing functional/adaptive behaviors or decreasing challenging/interfering behaviors, (d) use an experimental or quasi-experimental research design to evaluate the effects of the intervention on the target behaviors, (e) use a form of VR to facilitate the therapeutic intervention, and (f) provide quantitative data on the participants’ acquisition of adaptive and functional target behaviors (e.g., air travel behavior, treatment of phobia, pedestrian safety skills). Studies that did not collect data on an adaptive or functional target behavior (e.g., studies targeting social skills or communication skills) or did not include a therapeutic intervention as the independent variable were excluded. Functional and adaptive behaviors were broadly defined as behaviors that fall within the categories of vocational, domestic, personal, community, or leisure skills (Ivy & Schreck, 2016). To determine inclusion, the skills targeted within each study were evaluated by cross-checking the dependent variable against commonly used behavior analytic assessments (e.g., Essential for Living, Assessment of Functional Living Skills; McGreevy et al., 2012; Partington & Mueller, 2012). For example, Fitzgerald et al. (2018) conducted a study that evaluated the use of VR and video modeling to teach paper folding tasks (e.g., making a paper boat).
However, this study was excluded because, although paper folding might have functional and adaptive applications (e.g., folding paper menus as a job skill, or paper folding as a leisure skill), no direct functional or adaptive context was provided, and the study focused primarily on comparing two intervention methods (i.e., VR versus video modeling). The researchers included any study using a quasi-experimental, group comparison experimental, or single-case experimental design. Studies that evaluated only the social validity of VR interventions or participants’ perspectives, without also evaluating intervention outcomes, were excluded. For example, McCleery et al. (2020) evaluated the usability and feasibility of an immersive VR program to teach police interaction skills to participants with autism but did not measure the targeted skills gained from the program; thus, it was excluded from this review. Finally, any study that discussed the development or architecture of technologies but did not provide quantitative data on the effects of the intervention on the target dependent variables was excluded. For example, Trepagnier et al. (2005) discussed multiple computer-based and virtual environment technologies in development but did not use those technologies in an experiment. After application of the inclusion criteria, a total of 23 articles were included in this review.

Descriptive Synthesis

The raters coded each article on the following variables: (a) number of participants, (b) participant characteristics (age, gender, diagnosis), (c) dependent variable, (d) independent variable, (e) technology used (description of the VR technology), (f) experimental design, and (g) study outcomes. Raters coded the total number of participants, including both participants with and without ASD. Raters provided a narrative description of the dependent variables, independent variables, and technology used. Raters coded the experimental design as group experimental, quasi-experimental, or single-case experimental. Finally, raters coded the study outcomes as reported by the author(s) for the target dependent variable(s).

Quality Evaluation Method

Articles were grouped by experimental design (i.e., single-case research versus group experimental/quasi-experimental) to facilitate the quality evaluation. Primary indicators of quality include evaluation of the descriptions provided in a study, such as participant information, the independent variable, the dependent variable, and the use of statistical tests. The two lead authors then evaluated each study using the corresponding rubric from the Reichow et al. (2008) evaluative method for single-case or group experimental research designs. Reichow’s evaluative method was chosen over other quality evaluative methods (e.g., Council for Exceptional Children Standards) because it includes procedures to evaluate both single-case and group experimental research, evaluates internal and external validity, and was developed specifically for research involving individuals with autism (Cook et al., 2015; Reichow et al., 2008). Additionally, Reichow’s evaluative method is well established in the literature as an aid for identifying practices that meet the standards to be classified as an evidence-based practice (EBP; Lynch et al., 2018; Reichow, 2012).

Interrater Reliability

During the review for inclusion, two raters coded 100% of the articles (n = 74). To evaluate the reliability of the application of the inclusion and exclusion criteria, interrater reliability (IRR) was calculated as percent agreement: the number of agreements was divided by the number of agreements plus disagreements and multiplied by 100. Agreement on inclusion was obtained on 89.19% of the studies (n = 66). Disagreements were reviewed and discussed by the raters until consensus was reached, for a final agreement of 100%.
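The percent agreement calculation is simple arithmetic. As a minimal sketch (the function name `percent_agreement` is illustrative and not taken from the study), it can be written in Python and checked against the reported figures: 66 agreements out of 74 screened articles, and 33 agreements out of 36 data extraction items.

```python
def percent_agreement(agreements: int, disagreements: int) -> float:
    """Point-by-point IRR: agreements / (agreements + disagreements) * 100."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("at least one coded item is required")
    return agreements / total * 100


# Inclusion screening: 66 of 74 articles agreed, so 8 disagreements.
screening_irr = percent_agreement(66, 74 - 66)
# Data extraction: 33 of 36 items agreed, so 3 disagreements.
extraction_irr = percent_agreement(33, 36 - 33)

print(f"Screening IRR: {screening_irr:.2f}%")    # Screening IRR: 89.19%
print(f"Extraction IRR: {extraction_irr:.2f}%")  # Extraction IRR: 91.67%
```

Both values round to the percentages reported for screening and data extraction.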

Data Extraction

Two raters independently coded approximately 50% of the 23 included articles (n = 12), which were selected using a randomizer application (i.e., www.random.org). Each article was coded across three categories, for a total of 36 items for which reliability was evaluated (i.e., 12 articles with three categories each). Agreement was established on 33 of the items. IRR was calculated as percent agreement by dividing the number of items with agreement by the total number of items and multiplying by 100. The initial IRR was 91.67%. Disagreements were reviewed and discussed by the raters, for a final IRR of 100%. The remaining authors then checked the final table for accuracy.

Quality Evaluation

Twelve of the 23 articles (approximately 50%) were independently reviewed by the two lead authors to establish IRR. The 12 articles included seven group experimental/quasi-experimental design studies and four single-case research studies. There were 12 indicators per article for a total of 24 items for which reliability was evaluated. Agreement was established on 21 of the 23 total items (91%). Disagreements were discussed by the authors until consensus was reached, for a final IRR of 100%.

Results

The 23 articles included in this review were summarized by dependent variable, intervention components, behavioral components, and technology used. Table 1 provides the data summary of each study.

Participants

Across the 23 included studies, there were a total of 888 participants (excluding the staff participants included in Smith et al., 2021a), with an approximate mean age of 17.47 years (range = 4 to 29.4 years). A total of 519 participants were reported to have a formal diagnosis of autism, ASD, Asperger’s, or pervasive developmental disorder-not otherwise specified (PDD-NOS). Most of the included participants were described as high- to moderate-functioning. Furthermore, most studies specified participant characteristics or inclusion criteria such as unimpaired cognition/average IQ (e.g., Maskey et al., 2019a; Ward & Esposito, 2019), minimum reading ability (e.g., Genova et al., 2021; Smith et al., 2014), verbal fluency/spoken language abilities (e.g., Dixon et al., 2020; Maskey et al., 2014), ability to follow directions (e.g., Maskey et al., 2019b, 2019c), normal vision and hearing (e.g., Smith et al., 2014, 2021a), and the absence of severe physical, medical, or psychiatric conditions that would interfere with participation (e.g., Cox et al., 2017; Johnston et al., 2020). Only one study reported including participants (n = 3) who did not have spoken language (i.e., Miller et al., 2020). Notably, none of the included studies reported screening for seizure disorders, and seizure disorders were not listed among the exclusion criteria of the five studies that reported specific exclusion criteria.

Dependent Variables

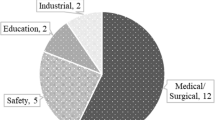

Of the 23 studies, 43.48% (n = 10) taught vocational skills. Specifically, nine of these studies (e.g., Burke et al., 2018; Genova et al., 2021; Smith et al., 2014) targeted job interview skills and one targeted general vocational skills (i.e., Bozgeyikli et al., 2017). Seven studies (30.43%) focused on the treatment of challenging behavior, such as fears and phobias (e.g., Maskey et al., 2014, 2019a; Meindl et al., 2019) or hypersensitivity to environmental sounds (e.g., crying babies, barking dogs, sirens; Johnston et al., 2020) that caused anxiety, disruption to daily life, and/or challenging behavioral reactions (e.g., agitation, hiding, panic attacks, refusing to go outside). Four studies (17.39%) focused on safety-related skills, such as pedestrian safety (i.e., Dixon et al., 2020), driving skills (i.e., Cox et al., 2017; Wade et al., 2016), and transportation use (i.e., Miller et al., 2020; Simões et al., 2018). One study targeted general functioning skills (i.e., understanding the context and characteristics of common objects; Wang & Reid, 2013). Lastly, only one study focused on increasing exercise engagement (i.e., McMahon et al., 2020). See Table 1 for a summary of each study.

Behavior Analytic Components Embedded within VR

All of the included studies used a combination of behavior analytic components, such as antecedent interventions, prompting, reinforcement, or corrective feedback. In nine of the studies (39.13%), the VR system primarily provided the learning stimuli, prompts, and consequences (e.g., reinforcement or feedback), and in some cases a researcher or therapist provided pre-training on the use of the VR system. In five studies (21.74%), a combination of the VR system and therapist implementation was used. For example, in most of the studies using VR for job interview training, the VR system was used primarily for practice interviews, and a therapist provided additional instruction on related interview skills (e.g., Smith et al., 2021a; Strickland et al., 2013). Lastly, in eight studies (34.78%), the VR system primarily provided the learning stimuli for the targeted skill, while a therapist delivered instruction, prompting, and reinforcement. For example, Dixon et al. (2020) used the VR system to present a pedestrian safety context (visual and auditory stimuli), while a therapist asked questions about the safety of the situation (e.g., “Is there a moving car?”) and provided reinforcement for the participant’s responses. See Table 1 for a summary of each study.

Generalization Measurement

Most studies included some type of generalization assessment. Eight studies (34.78%) provided a real-life opportunity to assess generalization. Specifically, two studies included real-world practice (e.g., Meindl et al., 2019; Miller et al., 2020). For example, Meindl et al. (2019) conducted real-life blood draws in medical environments and assessed generalization across two different nurses and to the participant’s other arm. Of the studies that did not collect generalization data in real-life contexts, six (26.09%) used mock interviews instead (e.g., Burke et al., 2018; Genova et al., 2021; Smith et al., 2014). Five studies (21.74%) collected self-reports of the treated phobias/fears at various follow-up points (e.g., 6 weeks, 6 months, 12 months; Maskey et al., 2014, 2019a, 2019c). Finally, four studies (17.39%) did not assess any dimension of generalization, simulated practice, or self-reported effects after treatment (e.g., Bozgeyikli et al., 2017; Simões et al., 2018; Strickland et al., 2013).

VR Technology

All studies used software to create the virtual environments, but some used additional hardware displays and interfaces to increase the level of immersion. Non-immersive VR was the most commonly used configuration, used by 43.48% of the included studies (n = 10). This configuration is the least immersive and generally relied on a standard desktop computer monitor with basic user inputs (e.g., a keyboard or controller; Bamodu & Ye, 2013). Semi-immersive VR was used by 30.43% (n = 7) of the studies. This configuration relied on external equipment, such as sensors for interaction (e.g., XBOX Kinect, Leap Motion) and projectors or large screens to display the VR simulation (e.g., Blue Room advanced VRE), to create a deeper sense of immersion and interactivity (Bamodu & Ye, 2013). Lastly, fully immersive VR was used by 30.43% (n = 7) of the included studies. This setup combined advanced VR hardware (e.g., motion tracking, head-mounted display, Oculus Touch controllers) with software (e.g., Unity game engine) to create more advanced 3D virtual environments (Bamodu & Ye, 2013). See Table 1 for a summary of each study.

Quality Ratings and Evaluation of Evidence

There were 18 group experimental design studies and five single-case experimental design studies (n = 23 studies total). Overall, the raters classified two (8.70%; Cox et al., 2017; Wade et al., 2016) of the studies as meeting the criteria for “strong” and nine (39.13%; Dixon et al., 2020; Genova et al., 2021; Humm et al., 2014; Meindl et al., 2019; Simões et al., 2018; Smith et al., 2014; Smith et al., 2021a; Smith et al., 2021b; Wang & Reid, 2013) as meeting the criteria for “adequate.” The remaining studies (n = 12; 52.17%) did not meet criteria and cannot offer evidence towards the research question (i.e., Bozgeyikli et al., 2017; Burke et al., 2018; Burke et al., 2021; Genova et al., 2021; Johnston et al., 2020; Maskey et al., 2014; Maskey et al., 2019a; Maskey et al., 2019b; Maskey et al., 2019c; McMahon et al., 2020; Miller et al., 2020; Strickland et al., 2013; Ward & Esposito, 2019). Of the 18 group experimental design studies, the raters classified two (11.11%) as “strong,” six (33.33%) as “adequate,” and ten (55.56%) as “weak.” Of the five single-case experimental design studies, the raters classified zero as “strong,” three (60%; Dixon et al., 2020; Meindl et al., 2019; Wang & Reid, 2013) as “adequate,” and two (40%; Johnston et al., 2020; McMahon et al., 2020) as “weak.” Across the between-group design studies, the most common “unacceptable” primary indicator rating was related to the dependent variable; that is, at least three of the four required features were missing (i.e., variables defined with precision; measurement details sufficient for replication; measures linked to the dependent variable; data collected at appropriate times for analysis). Across the secondary indicators, the most common criterion not met was random assignment. For the single-case studies, there were no common patterns in the primary indicator ratings.
Rather, the most common “unacceptable” secondary indicator rating was related to calculation of the kappa statistic, use of blind raters, and collection of generalization and maintenance data. See Tables 2 and 3 for quality ratings of each study.
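Several single-case studies were rated down for not reporting a kappa statistic. As a hedged illustration of why reviewers favor kappa over simple percent agreement, the sketch below computes Cohen's kappa for two raters, which discounts the agreement expected by chance from each rater's category frequencies. The function name and the rating vectors are hypothetical and are not drawn from any included study.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters assigning categorical codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: proportion of items coded identically by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over categories of the product of each rater's marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_chance == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical include/exclude decisions for eight articles (not data from this review).
rater_1 = ["include", "include", "exclude", "include", "exclude", "exclude", "include", "include"]
rater_2 = ["include", "exclude", "exclude", "include", "exclude", "include", "include", "include"]

kappa = cohens_kappa(rater_1, rater_2)
print(f"kappa = {kappa:.2f}")  # kappa = 0.47
```

Here the raters agree on 75% of items, yet kappa is only about 0.47, because roughly 53% agreement would be expected by chance from the raters' marginal frequencies alone.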

Taken as a whole, the two studies identified as “strong” (Cox et al., 2017; Wade et al., 2016) were conducted by two different research teams, at two different locations, with 71 different participants. Because both used VR to teach driving skills, the use of VR in this context meets the qualifications to be considered an evidence-based practice. Similarly, because four between-groups experimental studies rated as “adequate” (i.e., Humm et al., 2014; Smith et al., 2014, 2021a, 2021b) were conducted by two different research labs, the use of VR to teach interview skills can also be considered an evidence-based practice.
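The decision rule applied in the paragraph above can be expressed as a short check. This is a minimal sketch, assuming only the two group-design thresholds stated in that paragraph (at least two “strong” studies from at least two research groups, or at least four “adequate” studies from at least two research groups); it does not reproduce the full Reichow et al. (2008) criteria, which also cover single-case designs. The `GroupStudy` type and the team labels are hypothetical, and the sketch counts only studies rated exactly “adequate” toward the second threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupStudy:
    citation: str
    rating: str          # quality rating: "strong", "adequate", or "weak"
    research_group: str  # hypothetical identifier for the team that ran the study


def meets_group_ebp_threshold(studies: list[GroupStudy]) -> bool:
    """True if >= 2 "strong" studies from >= 2 groups, or
    >= 4 "adequate" studies from >= 2 groups (thresholds as applied above)."""
    def qualifies(rating: str, min_studies: int) -> bool:
        matching = [s for s in studies if s.rating == rating]
        groups = {s.research_group for s in matching}
        return len(matching) >= min_studies and len(groups) >= 2

    return qualifies("strong", 2) or qualifies("adequate", 4)


# The two driving studies were run by different teams; labels are placeholders.
driving = [
    GroupStudy("Cox et al., 2017", "strong", "team-1"),
    GroupStudy("Wade et al., 2016", "strong", "team-2"),
]
print(meets_group_ebp_threshold(driving))  # True

# Hypothetical counter-example: two strong studies from the same team.
single_team = [
    GroupStudy("Hypothetical study A", "strong", "team-1"),
    GroupStudy("Hypothetical study B", "strong", "team-1"),
]
print(meets_group_ebp_threshold(single_team))  # False
```

Requiring distinct research groups, not just a study count, is what guards against a single lab's procedures or biases driving an evidence-based practice classification.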

Discussion

This review synthesized 23 studies investigating the use of VR to deliver behavioral interventions designed to increase behaviors that support independent functioning or decrease behaviors that interfere with daily functioning for individuals with autism. Of those studies, ten targeted vocational skills, seven targeted challenging behaviors, four targeted safety skills (e.g., driving, airplane travel, pedestrian safety), and two targeted general functional skills (e.g., engagement, executive functioning). In terms of quality ratings, two studies met the criteria for a classification of “strong” and nine met the criteria for “adequate.” This literature base supports the use of VR as an evidence-based modality for behavioral interventions that teach driving and interview skills. However, replication using both single-case and between-group experimental designs is needed, as is greater methodological rigor.

The first aim of this review was to evaluate the existing body of literature on behavioral interventions delivered using VR to increase behaviors that support independent functioning and address challenging behaviors that interfere with daily functioning. The literature base highlighted the use of VR to simulate daily environments (e.g., interview settings and streets) within the teaching environment. Simulating these daily environments in VR can enhance the safety and generalizability of training and intervention. For example, the VR street simulation used by Dixon et al. (2020) removes the inherent danger of street crossings while allowing the trainee to develop autonomy. Similarly, the VR driving simulation used by Cox et al. (2017) provides a safe practice environment that protects the trainee, instructor, other drivers, and pedestrians. In particular, VR environments can reduce the risks of practicing skills that might not be feasible to practice safely in real-world environments. For example, when practicing crossing the street in a VR environment, there is no real risk if the user does not wait for the crosswalk signal, whereas in the real environment an individual could be hit by a car.

Another potential benefit of VR-based interventions is the ability to customize the intervention based on the user’s progress in skill acquisition, such as embedding prompts that highlight the salient environmental cues that should evoke a specific behavioral response. For example, Cox et al. (2017) included extra stimulus cues within the VR driving simulation, based on the user’s eye gaze, to highlight driving hazards that should evoke driver attention and defensive driving maneuvers. Components like this can help tailor the VR interaction to the individual user, providing a more individualized intervention and user experience.

VR interventions can also provide additional practice opportunities and a variety of exemplars to better promote generalization of skills (i.e., multiple exemplar training). VR can also promote generalization to the natural environment because it allows for programming the relevant stimuli that would occur within that environment (Stokes & Baer, 1977). For example, Miller et al. (2020) programmed for generalization within the study sessions: the final session took place at an airport, providing a real-world rehearsal of the air travel skills targeted during the VR-based intervention. This study highlights the utility and efficacy of VR-based interventions as well as the need to evaluate the transfer of skills to the “real” environment. However, because few of the studies included in this review assessed generalization to real-world environments, further research is needed to evaluate whether VR-trained skills generalize to those settings.

Lastly, some of the studies included in this review indicated the effectiveness of lower cost VR systems, which may increase the feasibility of VR-based interventions in clinical applications. For example, Miller et al. (2020) used an iPhone X with a Google Cardboard device to create a virtual air travel experience. Several studies also used a commercially available internet-based software program (i.e., Molly Porter by SIMmerson Immersive Simulations) to provide mock interviews for developing interview skills (i.e., Genova et al., 2021; Humm et al., 2014; Smith et al., 2014, 2021a, 2021b; Ward & Esposito, 2019). Although low-cost solutions may be readily available, research is still needed to evaluate the costs and benefits of the various VR technologies in relation to the skills being taught, the needs of the individual, and the programming of relevant environmental variables that best promote generalization of skills to real-world environments.

The second aim of this review was to assess the methodological rigor of the literature to inform future research and practice on the use of VR to target adaptive and functional skills. A major strength of this literature base is the inclusion of both single-case and between-group experiments. This literature base was able to establish the use of VR as an evidence-based modality for delivering behavioral interventions that teach driving and interview skills. Unfortunately, over half of the included studies did not meet the quality criteria required to contribute to the evidence base. This indicates a need for further replication, with a focus on methodological quality, of VR-based modalities in the context of behavioral interventions. In particular, a clear description of the dependent variable is crucial to replication (Kazdin, 2011), yet it was a limitation of this literature base. Greater use of rigorous designs with control conditions and random assignment would also strengthen this literature base.

While the research evaluated in this review indicates that VR is a suitable platform for integrating behavior analytic strategies into effective interventions, a few considerations are worth discussing. First, decision-making frameworks are needed to help practitioners and service providers determine which equipment options allow for individualization and which technology options best align with the characteristics and needs of the individuals we serve. For example, Simões et al. (2018) differentiated the technology used across participants. Specifically, four participants did not use the VR head-mounted display because of vision impairments; however, a desktop configuration still allowed those users to participate in the VR intervention. This highlights the need for a clear decision-making framework for selecting the technology used in VR-based interventions.

Second, cross-field collaboration is needed to ensure that VR interventions have the programming capacity for individualization, systematic teaching procedures, and reinforcement contingencies that transfer to real environments. In many of the studies included in this review, therapists or researchers still delivered prompts and reinforcement rather than having these elements seamlessly incorporated into the VR system. For example, Dixon et al. (2020) had participants vocally state whether it was safe to cross the road rather than cross the street in the VR environment, and they used real-time videos of the participants’ communities rather than a virtual world that would allow participant interaction. Such uses of VR may be limiting because participants do not receive a fully immersive experience in which real-world behaviors (e.g., crossing the street safely) contact reinforcement. Furthermore, it may be difficult from a programming perspective for a therapist to adjust stimulus presentation within a virtual environment; thus, collaboration at the programming level is needed to ensure that relevant variations to the virtual environment are included. These limitations may indicate a lack of collaboration between technology developers and behavior analysts. As such, future research should consider the benefits of cross-field collaboration for improving the quality and efficacy of VR-based interventions.

Third, there is a need to evaluate other skills taught through behavioral interventions for which VR could provide a better context for developing effective interventions. Given that few studies in the current literature focused on safety skills, this appears to be an important area that could benefit individuals working to develop these functional skills. For example, abduction prevention could be an area where VR-based interventions might provide more effective training than role-play or Social Stories-based interventions, since the virtual environment could include relevant signals across multiple exemplars and provide practice opportunities (e.g., Ledbetter-Cho et al., 2016).

For practitioners, it is important to emphasize the use of evidence-based practices (EBPs) when developing interventions for individuals with autism. Given the range of technology options for VR-based interventions, practitioners should consider the prerequisite skills required both to use the technology and to learn the skill targeted within the intervention, and assessment should be used to guide intervention planning. For example, if using VR goggles, it would be important to conduct a direct assessment to ensure that the user has the necessary skills, that the VR experience is enjoyable, and that it does not cause adverse effects such as motion sickness. Practitioners should also ensure that generalization of the skill is accounted for within the intervention so that the skill transfers readily to the real world. This may also require involving other stakeholders in the intervention phases to ensure that the technology used is feasible for everyone involved. As VR technology continues to advance, research is needed to provide a clear framework for collaboration and decision-making to help progress and extend VR-based interventions.

References

References marked with an asterisk (*) are studies included in the review.

Bailenson, J. N., Yee, N., Blascovich, J., Beall, A. C., Lundblad, N., & Jin, M. (2008). The use of immersive virtual reality in the learning sciences: Digital transformations of teachers, students, and social context. The Journal of the Learning Sciences, 17(1), 102–141. https://doi.org/10.1080/10508400701793141

Bamodu, O., & Ye, X. M. (2013). Virtual reality and virtual reality system components. Advanced Materials Research, 765–767, 1169–1172. https://doi.org/10.4028/www.scientific.net/AMR.765-767.1169

Barton, E. E., Pustejovsky, J. E., Maggin, D. M., & Reichow, B. (2017). Technology-aided instruction and intervention for students with ASD: A meta-analysis using novel methods of estimating effect sizes for single-case research. Remedial and Special Education, 38(6), 371–386. https://doi.org/10.1177/0741932517729508

*Bozgeyikli, L., Bozgeyikli, E., Raij, A., Alqasemi, R., Katkoori, S., & Dubey, R. (2017). Vocational rehabilitation of individuals with autism spectrum disorder with virtual reality. ACM Transactions on Accessible Computing, 10(2), 1–25. https://doi.org/10.1145/3046786

*Burke, S. L., Bresnahan, T., Li, T., Epnere, K., Rizzo, A., Partin, M., Ahlness, R. M., & Trimmer, M. (2018). Using virtual interactive training agents (ViTA) with adults with autism and other developmental disabilities. Journal of Autism and Developmental Disorders, 48(3), 905–912. https://doi.org/10.1007/s10803-017-3374-z

*Burke, S. L., Li, T., Grudzien, A., & Garcia, S. (2021). Brief report: Improving employment interview self-efficacy among adults with autism and other developmental disabilities using virtual interactive training agents (ViTA). Journal of Autism and Developmental Disorders, 51(2), 741–748. https://doi.org/10.1007/s10803-020-04571-8

Clay, C. J., Schmitz, B. A., Balakrishnan, B., Hopfenblatt, J. P., Evans, A., & Kahng, S. (2021). Feasibility of virtual reality behavior skills training for preservice clinicians. Journal of Applied Behavior Analysis, 54(2), 547–565. https://doi.org/10.1002/jaba.809

Cook, B. G., Buysse, V., Klingner, J., Landrum, T. J., McWilliam, R. A., Tankersley, M., & Test, D. W. (2015). CEC’s standards for classifying the evidence base of practices in special education. Remedial and Special Education, 36(4), 220–234. https://doi.org/10.1177/0741932514557271

Cooper, J. O., Heron, T. E., & Heward, W. L. (2020). Applied behavior analysis (3rd ed.). Pearson.

*Cox, D. J., Brown, T., Ross, V., Moncrief, M., Schmitt, R., Gaffney, G., & Reeve, R. (2017). Can youth with autism spectrum disorder use virtual reality driving simulation training to evaluate and improve driving performance? An exploratory study. Journal of Autism and Developmental Disorders, 47(8), 2544–2555. https://doi.org/10.1007/s10803-017-3164-7

Dechsling, A., Shic, F., Zhang, D., Marschik, P. B., Esposito, G., Orm, S., Sütterlin, S., Kalandadze, T., Øien, R. A., & Nordahl-Hansen, A. (2021). Virtual reality and naturalistic developmental behavioral interventions for children with autism spectrum disorder. Research in Developmental Disabilities, 111, 103885. https://doi.org/10.1016/j.ridd.2021.103885

Didehbani, N., Allen, T., Kandalaft, M., Krawczyk, D., & Chapman, S. (2016). Virtual reality social cognition training for children with high functioning autism. Computers in Human Behavior, 62, 703–711. https://doi.org/10.1016/j.chb.2016.04.033

Di Natale, A. F., Repetto, C., Riva, G., & Villani, D. (2020). Immersive virtual reality in K-12 and higher education: A 10-year systematic review of empirical research. British Journal of Educational Technology, 51(6), 2006–2033. https://doi.org/10.1111/bjet.13030

*Dixon, D. R., Miyake, C. J., Nohelty, K., Novack, M. N., & Granpeesheh, D. (2020). Evaluation of an immersive virtual reality safety training used to teach pedestrian skills to children with autism spectrum disorder. Behavior Analysis in Practice, 13(3), 631–640. https://doi.org/10.1007/s40617-019-00401-1

Fitzgerald, E., Yap, H. K., Ashton, C., Moore, D. W., Furlonger, B., Anderson, A., Kickbush, R., Donald, J., Busacca, M., & English, D. L. (2018). Comparing the effectiveness of virtual reality and video modelling as an intervention strategy for individuals with autism spectrum disorder: Brief report. Developmental Neurorehabilitation, 21(3), 197–201. https://doi.org/10.1080/17518423.2018.1432713

*Genova, H. M., Lancaster, K., Morecraft, J., Haas, M., Edwards, A., DiBenedetto, M., Krch, D., DeLuca, J., & Smith, M. J. (2021). A pilot RCT of virtual reality job interview training in transition-age youth on the autism spectrum. Research in Autism Spectrum Disorders, 89, 101878. https://doi.org/10.1016/j.rasd.2021.101878

Hume, K., Steinbrenner, J. R., Odom, S. L., Morin, K. L., Nowell, S. W., Tomaszewski, B., Szendrey, S., McIntyre, N. S., Yücesoy-Özkan, S., & Savage, M. N. (2021). Evidence-based practices for children, youth, and young adults with autism: Third generation review. Journal of Autism and Developmental Disorders, 51(11), 4013–4032. https://doi.org/10.1007/s10803-020-04844-2

*Humm, L. B., Olsen, D., Morris, B. E., Fleming, M., & Smith, M. (2014). Simulated job interview improves skills for adults with serious mental illnesses. Studies in Health Technology and Informatics, 199, 50–54.

Irish, J. E. (2013). Can I sit here? A review of the literature supporting the use of single-user virtual environments to help adolescents with autism learn appropriate social communication skills. Computers in Human Behavior, 29(5), A17–A24. https://doi.org/10.1016/j.chb.2012.12.031

Ivy, J. W., & Schreck, K. A. (2016). The efficacy of ABA for individuals with autism across the lifespan. Current Developmental Disorders Reports, 3(1), 57–66. https://doi.org/10.1007/s40474-016-0070-1

*Johnston, D., Egermann, H., & Kearney, G. (2020). SoundFields: A virtual reality game designed to address auditory hypersensitivity in individuals with autism spectrum disorder. Applied Sciences, 10(9), 2996. https://doi.org/10.3390/app10092996

Karami, B., Koushki, R., Arabgol, F., Rahmani, M., & Vahabie, A. H. (2021). Effectiveness of virtual/augmented reality–based therapeutic interventions on individuals with autism spectrum disorder: A comprehensive meta-analysis. Frontiers in Psychiatry, 12, 665326. https://doi.org/10.3389/fpsyt.2021.665326

Kazdin, A. E. (2011). Single-case research designs: Methods for clinical and applied settings. Oxford University Press.

Kratochwill, T. R., Hitchcock, J. H., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2013). Single-case intervention research design standards. Remedial and Special Education, 34(1), 26–38. https://doi.org/10.1177/0741932512452794

Leaf, J. B., Cihon, J. H., Ferguson, J. L., Milne, C. M., Leaf, R., & McEachin, J. (2020). Advances in our understanding of behavioral intervention: 1980 to 2020 for individuals diagnosed with autism spectrum disorder. Journal of Autism and Developmental Disorders, 51(12), 4395–4410. https://doi.org/10.1007/s10803-020-04481-9

Ledbetter-Cho, K., Lang, R., Davenport, K., Moore, M., Lee, A., O’Reilly, M., Watkins, L., & Falcomata, T. (2016). Behavioral skills training to improve the abduction-prevention skills of children with autism. Behavior Analysis in Practice, 9(3), 266–270. https://doi.org/10.1007/s40617-016-0128-x

Lorenzo, G., Lledó, A., Arráez-Vera, G., & Lorenzo-Lledó, A. (2019). The application of immersive virtual reality for students with ASD: A review between 1990–2017. Education and Information Technologies, 24(1), 127–151. https://doi.org/10.1007/s10639-018-9766-7

Lorenzo, G., Lledó, A., Pomares, J., & Roig, R. (2016). Design and application of an immersive virtual reality system to enhance emotional skills for children with autism spectrum disorders. Computers & Education, 98, 192–205. https://doi.org/10.1016/j.compedu.2016.03.018

Lynch, Y., McCleary, M., & Smith, M. (2018). Instructional strategies used in direct AAC interventions with children to support graphic symbol learning: A systematic review. Child Language Teaching and Therapy, 34(1), 23–36. https://doi.org/10.1177/0265659018755524

Maenner, M. J., Shaw, K. A., Bakian, A. V., Bilder, D. A., Durkin, M. S., Esler, A., Furnier, S. M., Hallas, L., Hall-Lande, J., Hudson, A., Hughes, M. M., Patrick, M., Pierce, K., Poynter, J. N., Salinas, A., Shenouda, J., Vehorn, A., Warren, Z., Constantino, J. N., … Cogswell, M. E. (2021). Prevalence and characteristics of autism spectrum disorder among children aged 8 years – Autism and Developmental Disabilities Monitoring Network, 11 sites, United States, 2018. MMWR Surveillance Summaries, 70(11), 1–16. https://doi.org/10.15585/mmwr.ss7011a1

*Maskey, M., Lowry, J., Rodgers, J., McConachie, H., & Parr, J. R. (2014). Reducing specific phobia/fear in young people with autism spectrum disorders (ASDs) through a virtual reality environment intervention. PLoS ONE, 9(7), e100374. https://doi.org/10.1371/journal.pone.0100374

*Maskey, M., McConachie, H., Rodgers, J., Grahame, V., Maxwell, J., Tavernor, L., & Parr, J. R. (2019a). An intervention for fears and phobias in young people with autism spectrum disorders using flat screen computer-delivered virtual reality and cognitive behaviour therapy. Research in Autism Spectrum Disorders, 59, 58–67. https://doi.org/10.1016/j.rasd.2018.11.005

*Maskey, M., Rodgers, J., Grahame, V., Glod, M., Honey, E., Kinnear, J., Labus, M., Milne, J., Minos, D., McConachie, H., & Parr, J. R. (2019b). A randomised controlled feasibility trial of immersive virtual reality treatment with cognitive behaviour therapy for specific phobias in young people with autism spectrum disorder. Journal of Autism and Developmental Disorders, 49(5), 1912–1927. https://doi.org/10.1007/s10803-018-3861-x

*Maskey, M., Rodgers, J., Ingham, B., Freeston, M., Evans, G., Labus, M., & Parr, J. R. (2019c). Using virtual reality environments to augment cognitive behavioral therapy for fears and phobias in autistic adults. Autism in Adulthood, 1(2), 134–145. https://doi.org/10.1089/aut.2018.0019

McCleery, J. P., Zitter, A., Solórzano, R., Turnacioglu, S., Miller, J. S., Ravindran, V., & Parish-Morris, J. (2020). Safety and feasibility of an immersive virtual reality intervention program for teaching police interaction skills to adolescents and adults with autism. Autism Research, 13(8), 1418–1424. https://doi.org/10.1002/aur.2352

McGreevy, P., Fry, T., & Cornwall, C. (2012). Essential for living. McGreevy.

*McMahon, D. D., Barrio, B., McMahon, A. K., Tutt, K., & Firestone, J. (2020). Virtual reality exercise games for high school students with intellectual and developmental disabilities. Journal of Special Education Technology, 35(2), 87–96. https://doi.org/10.1177/0162643419836416

*Meindl, J. N., Saba, S., Gray, M., Stuebing, L., & Jarvis, A. (2019). Reducing blood draw phobia in an adult with autism spectrum disorder using low-cost virtual reality exposure therapy. Journal of Applied Research in Intellectual Disabilities, 32(6), 1446–1452. https://doi.org/10.1111/jar.12637

Mesa-Gresa, P., Gil-Gómez, H., Lozano-Quilis, J. A., & Gil-Gómez, J. A. (2018). Effectiveness of virtual reality for children and adolescents with autism spectrum disorder: An evidence-based systematic review. Sensors, 18(8), 2486. https://doi.org/10.3390/s18082486

*Miller, I. T., Wiederhold, B. K., Miller, C. S., & Wiederhold, M. D. (2020). Virtual reality air travel training with children on the autism spectrum: A preliminary report. Cyberpsychology, Behavior, and Social Networking, 23(1), 10–15. https://doi.org/10.1089/cyber.2019.0093

Parsons, S., & Mitchell, P. (2002). The potential of virtual reality in social skills training for people with autistic spectrum disorders. Journal of Intellectual Disability Research, 46(5), 430–443. https://doi.org/10.1046/j.1365-2788.2002.00425.x

Partington, J. W., & Mueller, M. M. (2012). The assessment of functional living skills: Basic living skills assessment protocol. Behavior Analysts, Inc. and Stimulus Publications.

Reichow, B. (2012). Overview of meta-analyses on early intensive behavioral intervention for young children with autism spectrum disorders. Journal of Autism and Developmental Disorders, 42(4), 512–520. https://doi.org/10.1007/s10803-011-1218-9

Reichow, B., Volkmar, F. R., & Cicchetti, D. V. (2008). Development of the evaluative method for evaluating and determining evidence-based practices in autism. Journal of Autism and Developmental Disorders, 38(7), 1311–1319. https://doi.org/10.1007/s10803-007-0517-7

Rosenbloom, R., Mason, R. A., Wills, H. P., & Mason, B. A. (2016). Technology delivered self-monitoring application to promote successful inclusion of an elementary student with autism. Assistive Technology, 28(1), 9–16. https://doi.org/10.1080/10400435.2015.1059384

*Simões, M., Bernardes, M., Barros, F., & Castelo-Branco, M. (2018). Virtual travel training for autism spectrum disorder: Proof-of-concept interventional study. JMIR Serious Games, 6(1), e8428. https://doi.org/10.2196/games.8428

*Smith, M. J., Ginger, E. J., Wright, K., Wright, M. A., Taylor, J. L., Humm, L. B., Olsen, D. E., Bell, M. D., & Fleming, M. F. (2014). Virtual reality job interview training in adults with autism spectrum disorder. Journal of Autism and Developmental Disorders, 44(10), 2450–2463. https://doi.org/10.1007/s10803-014-2113-y

*Smith, M. J., Sherwood, K., Ross, B., Smith, J. D., DaWalt, L., Bishop, L., Humm, L., Elkins, J., & Steacy, C. (2021a). Virtual interview training for autistic transition age youth: A randomized controlled feasibility and effectiveness trial. Autism, 25(6), 1536–1552. https://doi.org/10.1177/1362361321989928

*Smith, M. J., Smith, J. D., Jordan, N., Sherwood, K., McRobert, E., Ross, B., Oulvey, E. A., & Atkins, M. (2021b). Virtual reality job interview training in transition services: Results of a single-arm, noncontrolled effectiveness-implementation hybrid trial. Journal of Special Education Technology, 36(1), 3–17. https://doi.org/10.1177/0162643420960093

Stokes, T. F., & Baer, D. M. (1977). An implicit technology of generalization. Journal of Applied Behavior Analysis, 10(2), 349–367. https://doi.org/10.1901/jaba.1977.10-349

*Strickland, D. C., Coles, C. D., & Southern, L. B. (2013). JobTIPS: A transition to employment program for individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 43(10), 2472–2483.

Thai, E., & Nathan-Roberts, D. (2018). Social skill focuses of virtual reality systems for individuals diagnosed with autism spectrum disorder: A systematic review. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 62(1), 1469–1473.

Tiura, M., Kim, J., Detmers, D., & Baldi, H. (2017). Predictors of longitudinal ABA treatment outcomes for children with autism: A growth curve analysis. Research in Developmental Disabilities, 70, 185–197. https://doi.org/10.1016/j.ridd.2017.09.008

Trepagnier, C. Y., Sebrechts, M. M., Finkelmeyer, A., Coleman, M., Stewart, W., & Werner-Adler, M. (2005). Virtual environments to address autistic social deficits. Annual Review of CyberTherapy and Telemedicine, 3, 101–107.

Valencia, K., Rusu, C., Quiñones, D., & Jamet, E. (2019). The impact of technology on people with autism spectrum disorder: A systematic literature review. Sensors, 19(20), 4485. https://doi.org/10.3390/s19204485

Vasquez, E., Nagendran, A., Welch, G. F., Marino, M. T., Hughes, D. E., Koch, A., & Delisio, L. (2015). Virtual learning environments for students with disabilities: A review and analysis of the empirical literature and two case studies. Rural Special Education Quarterly, 34(3), 26–32. https://doi.org/10.1177/875687051503400306

*Wade, J., Zhang, L., Bian, D., Fan, J., Swanson, A., Weitlauf, A., Sarkar, M., Warren, Z., & Sarkar, N. (2016). A gaze-contingent adaptive virtual reality driving environment for intervention in individuals with autism spectrum disorders. ACM Transactions on Interactive Intelligent Systems, 6(1), 1–23. https://doi.org/10.1145/2892636

*Wang, M., & Reid, D. (2013). Using the virtual reality-cognitive rehabilitation approach to improve contextual processing in children with autism. The Scientific World Journal, 2013, 716890. https://doi.org/10.1155/2013/716890

*Ward, D. M., & Esposito, M. K. (2019). Virtual reality in transition program for adults with autism: Self-efficacy, confidence, and interview skills. Contemporary School Psychology, 23(4), 423–431. https://doi.org/10.1007/s40688-018-0195-9

Wills, H. P., & Mason, B. A. (2014). Implementation of a self-monitoring application to improve on-task behavior: A high school pilot study. Journal of Behavioral Education, 23(4), 421–434. https://doi.org/10.1007/s10864-014-9204-x

Acknowledgements

We thank Drs. Bryant Silbaugh and Se-Woong Park, who worked on an earlier, larger-scale project that was not completed, for the knowledge they shared that inspired this review.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Author information


Contributions

AC and LN: designed and executed the study, assisted with the data analyses, and wrote the paper. SG: assisted with data analysis, wrote part of the results, and assisted with edits. MK: assisted with data analysis and wrote the introduction. JQ and KC: collaborated on the data analysis and the writing related to the technical aspects of VR, and assisted with edits.


Ethics declarations

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Carnett, A., Neely, L., Gardiner, S. et al. Systematic Review of Virtual Reality in Behavioral Interventions for Individuals with Autism. Adv Neurodev Disord 7, 426–442 (2023). https://doi.org/10.1007/s41252-022-00287-1

DOI: https://doi.org/10.1007/s41252-022-00287-1