Abstract

Numerical simulations of neutron star–neutron star and neutron star–black hole binaries play an important role in our ability to model gravitational-wave and electromagnetic signals powered by these systems. These simulations have to take into account a wide range of physical processes including general relativity, magnetohydrodynamics, and neutrino radiation transport. The latter is particularly important in order to understand the properties of the matter ejected by many mergers, the optical/infrared signals powered by nuclear reactions in the ejecta, and the contribution of that ejecta to astrophysical nucleosynthesis. However, accurate evolutions of the neutrino transport equations that include all relevant physical processes remain beyond our current reach. In this review, I will discuss the current state of neutrino modeling in general relativistic simulations of neutron star mergers and of their post-merger remnants. I will focus on the three main types of algorithms used in simulations so far: leakage, moments, and Monte-Carlo schemes. I will review the advantages and limitations of each scheme, as well as the various neutrino–matter interactions that should be included in simulations. We will see that the quality of the treatment of neutrinos in merger simulations has greatly increased over the last decade, but also that many potentially important interactions remain difficult to take into account in simulations (pair annihilation, oscillations, inelastic scattering).

1 Introduction

Over the last decade, the study of merging compact objects has made tremendous progress. Recently observed astrophysical events provide us with some of the most reliable information currently at our disposal regarding the population of stellar mass black holes in the nearby Universe. Rarer events that include neutron stars also inform us about the mass distribution of neutron stars, the equation of state of dense matter, and the origin of heavy elements formed through rapid neutron capture nucleosynthesis (r-process). Our ability to study these systems has largely grown in tandem with the sensitivity of the LIGO and Virgo gravitational-wave detectors. Gravitational-wave observatories have now detected dozens of binary black hole (BBH) mergers, as well as two likely binary neutron star (BNS) mergers and at least two likely neutron star-black hole (NSBH) mergers (see Sect. 2.2 for a more detailed discussion of these events). An overview of these events can be found in the three GWTC catalogues (Abbott et al. 2019, 2021a, b).

While BNS and NSBH mergers are not as commonly observed as BBH mergers, they do have important advantages for nuclear astrophysics. The presence of a neutron star means that these systems can potentially be used to constrain the equation of state of cold, neutron rich dense matter (Abbott et al. 2018), a crucial source of information about many-nucleon interactions and, potentially, the high-density states of quantum chromodynamics. Additionally, some mergers and post-merger remnants eject material that undergoes r-process nucleosynthesis. The radioactive decay of the ashes of the r-process can then power optical/infrared emission days to weeks after the merger: a kilonova (Lattimer and Schramm 1976; Li and Paczynski 1998; Metzger et al. 2010; Roberts et al. 2011; Kasen et al. 2013). The production site(s) of r-process elements remain(s) very uncertain today, and the observation of neutron star mergers and associated kilonovae may help us solve the long-standing problem of their astrophysical origin. Additionally, some post-merger remnants likely produce collimated relativistic outflows (jets) that are currently believed to be the source of short-hard gamma-ray bursts (SGRBs) (Eichler et al. 1989; Nakar 2007; Fong and Berger 2013). The exact process powering SGRBs is however not well understood, and further observations of neutron star mergers could help us elucidate how these high-energy events occur in practice. Finally, joint observations of neutron star mergers using both gravitational and electromagnetic waves may also provide additional information about the properties of the merging compact objects, the position of the merging binary, and even the value of the Hubble constant (Holz and Hughes 2005; Nissanke et al. 2010; Abbott et al. 2017a; Hotokezaka et al. 2019).

Neutron star mergers involve a wide range of nonlinear physical processes, preventing us from providing quantitative theoretical predictions for the result of a merger using purely analytical methods. As a result, numerical simulations are an important tool in current attempts to model the gravitational-wave and electromagnetic signals powered by compact binary mergers. Gravity, fluid dynamics, magnetic fields and neutrinos all play major roles during and after neutron star mergers, with out-of-equilibrium nuclear reactions also becoming important on longer time scales (\(\sim \) seconds). In theory, merger simulations thus need to solve Boltzmann’s equations of radiation transport coupled to the relativistic equations of magnetohydrodynamics and Einstein’s equation of general relativity. However, no simulation can do this with the desired level of realism at this point. Two major roadblocks to this modeling effort are our inability to properly resolve magnetohydrodynamical instabilities during merger (and thus the dynamo process that may follow the growth of magnetic fields due to these instabilities) (Kiuchi et al. 2015), as well as the difficulty of properly solving Boltzmann’s equation of radiation transport for the evolution of neutrinos (Foucart et al. 2018). In this review, we focus on the second problem. The role of magnetic fields in merger simulations is discussed in more detail, for example, in Baiotti and Rezzolla (2017), Paschalidis (2017) and Burns (2020).

Neutrinos play a number of roles in neutron star mergers, with particularly noticeable impacts on the production of r-process elements and the properties of kilonovae. However, properly accounting for neutrino–matter interactions in neutron star mergers remains a difficult problem because, within a merger remnant, neutrinos transition from being in equilibrium with the fluid (in dense hot regions) to mostly free-streaming through the ejected material (far away). In the intermediate regions, neutrino–matter interactions play an important role in the evolution of the temperature and composition of the fluid, but neutrinos cannot be assumed to be in equilibrium with the fluid. Numerical methods that properly capture both regimes are technically challenging and/or computationally expensive. As a result, most merger simulations use approximate neutrino transport algorithms that introduce potentially significant and often hard to quantify errors in our predictions for the nuclei produced during r-process nucleosynthesis and for the properties of kilonovae.

The main objective of this review is to provide an overview of the various algorithms currently used in general relativistic simulations of neutron star mergers and of their post-merger remnants. These can be broadly classified into three groups: leakage methods, which do not explicitly transport neutrinos; moment schemes, which evolve a truncated expansion of the transport equations in momentum space with methods highly similar to those used to evolve the equations of relativistic magnetohydrodynamics; and Monte-Carlo methods, which sample the distribution of neutrinos with packets (or superparticles) propagating through numerical simulations. These are discussed in detail in Sect. 4. Section 2 aims to provide some scientific background about merging neutron stars, while Sect. 3 provides an overview of neutrino physics in neutron star mergers, and of the important neutrino–matter interactions that are currently included or neglected in simulations. Finally, Sect. 5 discusses what existing simulations can tell us about the ways in which our choice of algorithm impacts our numerical results. We note that the objective here is not to review all results in the study of neutron star mergers with neutrinos, but rather to focus on the numerical methods used to perform general relativistic radiation transport. We will thus focus on comparisons of different numerical methods, rather than provide an extensive review of existing simulations that make use of neutrino transport.

Conventions: In this manuscript, Latin letters are used for the indices of spatial 3-dimensional vectors/tensors, while Greek letters are used for the indices of 4-dimensional vectors/tensors. Sections discussing numerical methods will often use units such that \(h=c=G=1\), but we explicitly keep physical constants in our expressions when discussing interaction rates.

2 Scientific background

2.1 Overview of neutron star merger physics

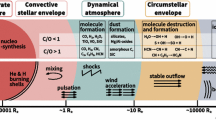

Before delving deeper into the topic of radiation transport in neutron star mergers, it is worth reviewing how we currently understand the evolution of these systems, as well as when different physical processes are expected to play an important role. When discussing neutron star merger simulations, we are typically concerned with the evolution of a binary from tens of milliseconds before merger to a few seconds after merger, i.e., from the moment standard post-Newtonian methods can no longer accurately model the gravitational wave signal to the moment when the accretion disk formed during a merger has lost most of its mass. In the late inspiral (O(10) orbits before merger), the tidal distortion of a neutron star by its binary companion has a potentially measurable impact on the gravitational wave signal, which can be used to put constraints on the equation of state of neutron stars (Flanagan and Hinderer 2008; Abbott et al. 2018). The main role of numerical simulations in that regime is to help test and calibrate analytical waveform models used in the analysis of gravitational wave events (e.g., Bernuzzi et al. 2012; Hinderer et al. 2016; Akcay et al. 2019 for BNS mergers and Thompson et al. 2020; Matas et al. 2020 for NSBH mergers). General relativity, fluid dynamics, and the choice of equation of state are important at that stage, but magnetic fields only impact relatively weak pre-merger electromagnetic signals and neutrinos have practically no impact on the evolution of the system.

Fig. 1 Merger of a disrupting NSBH binary (Right) and of a low-mass NSNS binary (Left). In disrupting NSBH systems, most of the matter is rapidly accreted onto the black hole, while the rest forms an accretion disk and extended tidal tail. Low-mass NSNS binaries form a massive neutron star remnant surrounded by a bound disk, with a smaller amount of material ejected in the tidal tail. Image credits: the right panel is reproduced with permission from Foucart et al. (2017), copyright by IOP; the left panel visualizes a simulation from Foucart et al. (2016a).

For NSBH binaries, the same remains true during the merger itself, i.e., the few milliseconds during which the neutron star is either tidally disrupted by its black hole companion, or absorbed whole by the black hole. The outcome of the merger is determined by the masses and spins of the compact objects, the equation of state of dense matter (Lattimer and Schramm 1976; Pannarale et al. 2011; Foucart 2012), and the eccentricity of the orbit (East et al. 2015). Numerical simulations of low-eccentricity binaries have shown that only low mass and/or high spin black holes disrupt their neutron star companions (\(M_{\mathrm{BH}}\lesssim 5\,M_{\odot }\) for non-spinning compact objects and circular orbits), a prerequisite to the production of any post-merger electromagnetic signal. If the neutron star is tidally disrupted, a few percent of a solar mass of very neutron rich, cold matter is typically ejected, and tenths of a solar mass remain in a bound accretion disk and/or tidal tail around the black hole (see e.g., Foucart 2020; Kyutoku et al. 2021 for recent reviews, and Fig. 1). In eccentric binaries, neutron stars are typically easier to disrupt, and eject more mass in their tidal tails.

For BNS systems, on the other hand, other physical processes become important once the neutron stars collide. First, the shear region that is naturally created between the merging neutron stars is unstable to the Kelvin–Helmholtz instability, leading to the rapid growth of small scale turbulence (Kiuchi et al. 2015). Magnetic fields are quickly amplified to \(B\sim 10^{16}\) G as a result, and start to play an important role in the evolution of the system. Whether a dynamo process can generate a large scale magnetic field from this turbulent state is an important open question that simulations have not so far been able to answer. The collision of the two neutron stars also creates hot regions where neutrino emission and absorption can no longer be ignored. BNS mergers eject relatively small amounts of cold tidal ejecta (\(\lesssim 0.01\,M_{\odot }\)), as well as hotter material coming from the regions where the cores of the neutron stars collide. We will see that neutrinos play an important role in the evolution of that hot ejecta. Depending on the equation of state and on the mass of the system, the remnant may immediately collapse to a black hole (on millisecond time scales), remain temporarily supported by rotation and/or thermal pressure, or form a long-lived neutron star (as in Fig. 1). In all cases, that remnant is surrounded by a hot accretion disk, with more asymmetric systems producing more massive disks (see e.g., Baiotti and Rezzolla 2017; Burns 2020; Radice et al. 2020 for recent reviews).

After merger, neutrino emission is the main source of cooling for the accretion disk and remnant neutron star (if there is one), and neutrino–matter interactions drive changes in the composition of the disk material and of the outflows. Initially, the efficiency of neutrinos in cooling the disk lies in between the radiatively efficient (thin disks) and radiatively inefficient (thick disks) regimes observed in AGNs. NSBH and BNS simulations including radiation transport show a disk aspect ratio \(H/R \sim \) (0.2–0.3) (with H the scale height of the disk and R its radius) (Foucart et al. 2015; Fujibayashi et al. 2018). Hydrodynamical shocks and/or fluid instabilities and then turbulence driven by the magnetorotational instability (MRI) lead to angular momentum transport and heating in the disk, and drive accretion onto the compact object. If a large scale poloidal magnetic field threads the disk, magnetically driven outflows are likely to unbind \(\sim 20\%\) of the mass of the disk (Siegel and Metzger 2017; Fernández et al. 2019)—but this is not a given considering uncertainties about the large scale structure of the magnetic field in post-merger accretion disks. Indeed, while it is possible to grow such a large scale field after merger (Christie et al. 2019), this takes too long to efficiently contribute to the production of winds. A large scale magnetic field generated during merger appears to be required for these winds to exist.

After O(100 ms), the density of the disk decreases enough that neutrino cooling becomes inefficient (Fernández and Metzger 2013; De and Siegel 2021), while the MRI remains active. The disk becomes advection dominated. It puffs up to \(H/R\sim 1\), and viscous spreading of the disk leads to the ejection of 5–25% of the disk mass (viscous outflows) (Fernández and Metzger 2013). Neutrino–matter interactions directly impact the properties of magnetically driven outflows, and indirectly impact the properties of viscous outflows (due to neutrino–matter interactions during the early evolution of the disk, before weak-interaction freeze-out).

The post-merger evolution is also impacted by the presence and lifetime of a massive neutron star remnant. A hot neutron star remnant is a bright source of neutrinos that can accelerate changes to the composition of matter outflows in the polar regions. How efficiently matter can accrete onto the neutron star remains uncertain. Axisymmetric simulations treating the neutron star surface as a hard boundary predict the eventual ejection of most of the remnant disk (Metzger and Fernández 2014); whether this would remain true for more realistic boundary conditions is unclear, but it is at least likely that a larger fraction of the disk is eventually unbound for neutron star remnants than for black hole remnants. The neutron star remnants themselves are initially differentially rotating, and simulations generally find rotation profiles that are stable to the MRI in most of the star (the angular velocity increases with radius). Some other angular momentum transport mechanism is thus required to bring these remnants to uniform rotation, e.g., convection and/or the Tayler–Spruit dynamo (Margalit et al. 2022). The exact impact of the interaction between the neutron star remnant, its external magnetic field, and the surrounding accretion disk on the evolution of the system remains very uncertain. Examples of post-merger remnants are shown in Fig. 2.

Fig. 2 Post-merger remnant a few milliseconds after a BNS merger (Left), and 0.3 s after a NSBH merger (Right). The BNS system forms a massive, differentially rotating neutron star surrounded by a low-mass accretion disk, with shocked spiral arms visible in the disk. The NSBH system forms an extended accretion disk around the remnant black hole, with collimated magnetic fields in the polar region. Image credits: the right panel is reproduced with permission from Hayashi et al. (2022a), copyright by APS; the left panel visualizes a simulation from Foucart et al. (2016a).

2.2 Observables and existing observations

The main signals observed so far in neutron star mergers include gravitational wave emission during the late inspiral of the binary towards merger, SGRBs (and their multi-wavelength afterglows) likely due to relativistic jets powered by the post-merger remnant, and kilonovae. For a system with component masses \(m_{1},m_{2}\), the gravitational waves provide us with a very accurate measurement of the chirp mass \(M_{c} = (m_{1}m_{2})^{0.6}/(m_{1}+m_{2})^{0.2}\), as well as, for sufficiently loud signals, less accurate information about the mass ratio (and thus the component masses), the spins of the compact objects, the equation of state of neutron stars (through their tidal deformability), as well as the distance, orientation, and sky localization of the source (especially for multi-detector observations). We will not discuss the gravitational wave signal in much more detail here, as it is not meaningfully impacted by neutrinos. Outflows generated during and after the merger (see previous section) will be the main source of post-merger electromagnetic signals. Relativistic collimated outflows power SGRBs detectable by observers located along the spin axis of the remnant. As the jet material becomes less relativistic, SGRBs are followed by longer wavelength afterglows detectable by off-axis observers (Fong et al. 2015). The gamma-ray emission is very short lived (\(\lesssim 2\) s for a typical SGRB), but radio afterglows can still be observed a year after the merger (Mooley 2018). The exact mechanism powering the relativistic jet remains unknown. The most commonly discussed model requires the formation of a large scale poloidal magnetic field threading a black hole remnant, with energy extraction from the black hole’s rotation through a Blandford–Znajek-like process (Blandford and Znajek 1977). Some SGRB models are however powered by neutrino–antineutrino pair annihilations in the polar regions. Explaining the most energetic SGRBs through this mechanism is difficult given what is currently known of the neutrino luminosity of post-merger remnants and the efficiency of the pair annihilation process (Just et al. 2016), yet even in a magnetically-powered SGRB, energy deposition due to neutrino pair annihilation or baryon loading of the polar regions due to neutrino-driven winds could impact the formation of a jet (Fujibayashi et al. 2017).
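The chirp mass quoted above is simple to evaluate numerically. The following is a minimal sketch; the function name and the component masses are illustrative assumptions, not values taken from any specific event.

```python
def chirp_mass(m1: float, m2: float) -> float:
    """Chirp mass M_c = (m1*m2)**(3/5) / (m1+m2)**(1/5), in the units of m1 and m2."""
    if m1 <= 0 or m2 <= 0:
        raise ValueError("component masses must be positive")
    return (m1 * m2) ** 0.6 / (m1 + m2) ** 0.2


# Hypothetical binaries with the same total mass (in solar masses).
equal_mass = chirp_mass(1.4, 1.4)
unequal_mass = chirp_mass(1.6, 1.2)
print(f"equal-mass: {equal_mass:.4f}  unequal-mass: {unequal_mass:.4f}")
```

At fixed total mass the chirp mass depends only weakly on the mass ratio, which is one way to see why the chirp mass is measured much more accurately than the individual component masses.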

The properties of kilonovae and the role of neutron star mergers in astrophysical nucleosynthesis are likely to be much more significantly impacted by neutrinos than gravitational waves or even SGRBs. Absorption and emission of electron-type neutrinos (\(\nu _{e}\)) and antineutrinos (\({\bar{\nu }}_{e}\)) modifies the relative number of neutrons and protons in the fluid. This is usually expressed through the lepton fraction

\[ Y_{l} = \frac{n_{e^{-}} - n_{e^{+}} + n_{\nu _{e}} - n_{{\bar{\nu }}_{e}}}{n_{p}+n_{n}}, \]

with \(n_{e^\pm },n_{n},n_{p},n_{\nu _{e}},n_{{\bar{\nu }}_{e}}\) the number densities of electrons, positrons, neutrons, protons, \(\nu _{e}\) and \({\bar{\nu }}_{e}\) respectively. Many simulations use the net electron fraction \(Y_{e}\) instead of the lepton fraction, and assume that charge neutrality requires \(n_{e^{-}}-n_{e^{+}}=n_{p}\) (see footnote 1), so that

\[ Y_{e} = \frac{n_{p}}{n_{p}+n_{n}}. \]

The electron fraction is a crucial determinant of the outcome of r-process nucleosynthesis in merger outflows. Low \(Y_{e}\) outflows (roughly \(Y_{e}\lesssim 0.25\)) produce heavier r-process elements, while higher \(Y_{e}\) outflows produce lighter r-process elements (Lippuner and Roberts 2015). In particular, for the conditions typically observed in merger outflows, there is not much production of elements above the “2nd peak” of the r-process (at mass number \(A\sim 130\)) for high-\(Y_{e}\) outflows, and an under-production of elements below the 2nd peak for neutron-rich (low \(Y_{e}\)) outflows. Cold outflows that do not interact much with neutrinos are typically neutron-rich, but hotter outflows can end up with \(Y_{e}\sim \) 0.4–0.5 due to neutrino–matter interactions. Cooling from neutrino emission and heating from neutrino absorption are also important to the thermodynamics of the remnant and of the outflows, and neutrino absorption in the disk corona and close to the neutron star surface can lead to the production of neutrino-driven winds (Dessart et al. 2009). It is thus clear that neutrino–matter interactions should be properly understood if we aim to model the role of neutron star mergers in the production of r-process elements.
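As a concrete illustration of these composition variables, the sketch below evaluates the lepton and electron fractions from number densities. It assumes the usual definitions (net electron plus net electron-neutrino number per baryon for the lepton fraction, protons per baryon for \(Y_{e}\)). The function names, the sample densities, and the sharp use of the rough \(Y_{e}\approx 0.25\) boundary are illustrative assumptions only.

```python
def lepton_fraction(n_electron, n_positron, n_nue, n_anue, n_proton, n_neutron):
    """Net electron-flavor lepton number per baryon (assumed standard definition)."""
    return (n_electron - n_positron + n_nue - n_anue) / (n_proton + n_neutron)


def electron_fraction(n_proton, n_neutron):
    """Y_e = n_p / (n_p + n_n), valid for charge-neutral matter."""
    return n_proton / (n_proton + n_neutron)


def favors_heavy_r_process(ye, threshold=0.25):
    """Rough guide only: the boundary near Y_e ~ 0.25 is approximate, not sharp."""
    return ye <= threshold


# Hypothetical number densities in arbitrary (but common) units.
n_p, n_n = 1.0, 9.0          # neutron-rich matter
n_em, n_ep = 1.0, 0.0        # charge neutrality: n_e- - n_e+ = n_p
ye = electron_fraction(n_p, n_n)
yl = lepton_fraction(n_em, n_ep, 0.05, 0.02, n_p, n_n)
print(ye, yl, favors_heavy_r_process(ye))
```

When no neutrinos are trapped, the two fractions coincide; they differ only in dense regions where the net neutrino number density is not negligible.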

The impact of neutrinos on kilonovae is less direct but no less important. Most of the r-process occurs within a few seconds of the merger, after which the outflows are mainly composed of radioactively unstable heavy nuclei. Radioactive decays of these nuclei will continue to release energy over much longer timescales. Initially, the outflows are opaque to most photons, and decay products are thermalized, except for neutrinos, which immediately escape the outflows. As the density of the outflows decreases, however, they will eventually become optically thin to optical/infrared photons. When this transition happens depends on the composition of the outflows. Lanthanides and actinides, which are among the heavier r-process elements that are only produced by neutron-rich outflows, have much higher opacities than other nuclei produced during the r-process. As a result, neutron-rich outflows become optically thin later than higher \(Y_{e}\) outflows (\(\sim 10\) days vs. \(\sim 1\) day), and the corresponding kilonova signal is redder (peaks in the infrared, instead of in the optical). Overall, the duration, color, and magnitude of a kilonova tell us about the mass of the outflows, their composition, and their velocity (Barnes and Kasen 2013). For a given binary merger, these properties will also depend on the relative orientation of the binary and the observer, as different types of outflows have different geometries.

Other electromagnetic counterparts to neutron star mergers have been proposed, with no confirmed observations so far. These include bursts of radiation before merger (Tsang 2013), continuous emission from magnetosphere interactions (Palenzuela et al. 2013), coherent emission from magnetosphere interactions (Most and Philippov 2022), and months to decades-long synchrotron radio emission from the mildly relativistic ejecta as it interacts with the interstellar medium (Hotokezaka et al. 2016). Neutrinos have no impact on the first three, however, and only a minor impact on the fourth (as neutrino–matter interactions may slightly change the mass/velocity of the outflows). More detailed discussions of the range of electromagnetic transients that may follow a merger can be found, e.g., in Fernández and Metzger (2016) and Burns (2020).

Electromagnetic emission from neutron star mergers has likely been observed for decades now in the form of SGRBs, and a first kilonova may have been observed in the afterglow of GRB130603B as early as 2013 (Tanvir et al. 2013; Berger et al. 2013). However, our current understanding of the engine powering SGRBs is not sufficient to provide us with much information about the parameters of the binary system that created the burst, or even to differentiate between a BNS and NSBH merger. Gravitational wave observations provide more direct information about the properties of the compact objects. So far, two systems have been observed with component masses most easily explained by the merger of two neutron stars: GW170817 (Abbott et al. 2017b) and GW190425 (Abbott et al. 2020). The former is a relatively low mass system, whose observation was followed by a weak SGRB (most likely observed off-axis), radio emission most likely associated with a relativistic jet, and a clear kilonova signal most easily explained by a combination of at least two outflow components: one that led to strong r-process nucleosynthesis, and one that did not. The exact process that produced these outflows remains a subject of research today. GW190425 has a higher total mass (\(3.4\,M_{\odot }\)). There was no observed electromagnetic counterpart to that signal, a relatively unsurprising result considering the large uncertainty in the location of the source and the high likelihood that such a system did not eject a significant amount of matter (Barbieri et al. 2021; Raaijmakers et al. 2021; Dudi et al. 2021; Camilletti et al. 2022). At least two NSBH mergers were observed in 2020 (Abbott et al. 2021c), with more candidates also available in the latest gravitational wave catalogue (Abbott et al. 2021b). None of these systems was however expected to lead to the disruption of their neutron star, and thus their lack of electromagnetic counterpart was unsurprising.

Overall, we note that the analysis of current and future observations of neutron star mergers would benefit from accurate models of kilonova signals, as well as from an improved understanding of the engine behind gamma-ray bursts. In that respect, it is particularly important to understand the role of neutrinos in setting the composition of the outflows powering kilonovae, and possibly their impact on the production of relativistic jets. In the rest of this review, we will mainly focus on these issues, and on the methods available to evolve neutrinos in merger simulations.

3 Neutrinos in mergers

3.1 Definitions

When solving the general relativistic equations of radiation transport, we would ideally evolve Boltzmann’s equation, or the quantum kinetics equations (QKE, when accounting for neutrino oscillations). Classically, we evolve the distribution function of neutrinos \(f_{\nu }(t,x^{i},p_{j})\), defined such that

\[ N = \int _{V} \frac{d^{3}x\, d^{3}p}{h^{3}}\, f_{\nu }(t,x^{i},p_{j}) \]

is the number of neutrinos within a 6D volume of phase space V. Here, \(x^{i}\) are the spatial coordinates and \(p_{j}\) the spatial components of the 4-momentum one-form \(p_{\mu }\), while h is Planck’s constant.

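When using the classical equations of radiation transport, we usually neglect neutrino masses and assume \(p^{\mu } p_{\mu } = 0\). Boltzmann’s equation is then

\[ p^{\beta }\left[ \frac{\partial f_{\nu }}{\partial x^{\beta }} - \Gamma ^{\alpha }_{\beta \gamma }\, p^{\gamma }\, \frac{\partial f_{\nu }}{\partial p^{\alpha }}\right] = \nu \left[ \frac{d f_{\nu }}{d\tau }\right] _{\mathrm{coll}}, \]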

with \(\tau \) the proper time in the fluid frame, \(\nu \) the neutrino energy in the fluid frame, and \(\Gamma ^{\alpha }_{\beta \gamma }\) the Christoffel symbols. The left-hand side simply implies that neutrinos follow null geodesics, while the right-hand side includes all neutrino–matter and neutrino–neutrino interactions, and thus hides most of the complexity in these equations. We note that we should evolve a separate \(f_{\nu }\) for each type of neutrino (\(\nu _{e},\nu _{\mu },\nu _{\tau }\)) and antineutrino (\({\bar{\nu }}_{e},{\bar{\nu }}_{\mu },{\bar{\nu }}_{\tau }\)), and that these distribution functions may be coupled through the collision terms. As neutrinos are fermions, we have \(0\le f_{\nu } \le 1\).

The spatial coordinate volume \(d^{3}x = dx dy dz\) and momentum volume \(d^{3}p = dp_{x} dp_{y} dp_{z}\) are not invariant under coordinate transformations, but \(d^{3}x p^{t} \sqrt{-g}\) and \(d^{3}p (p^{t} \sqrt{-g})^{-1}\) are, with g the determinant of the spacetime metric \(g_{\mu \nu }\). Thus \(d^{3}x d^{3}p\) is invariant under coordinate transformations. The stress-energy tensor of neutrinos at \((t,x^{i})\) is

\[ T^{\mu \nu }(t,x^{i}) = \int \frac{d^{3}p}{h^{3}\sqrt{-g}\, p^{t}}\, p^{\mu } p^{\nu } f_{\nu }(t,x^{i},p_{j}). \]

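In general relativistic merger simulations, we often use the \(3+1\) decomposition of the metric

\[ ds^{2} = g_{\mu \nu }dx^{\mu }dx^{\nu } = -\alpha ^{2}dt^{2} + \gamma _{ij}\left( dx^{i}+\beta ^{i}dt\right) \left( dx^{j}+\beta ^{j}dt\right) , \]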

with \(\alpha \) the lapse, \(\beta ^{i}\) the shift, and \(\gamma _{ij}\) the 3-metric on a slice of constant time t. The unit normal one-form to such a slice is then \(n_{\mu }=(-\alpha ,0,0,0)\), and the 4-vector \(n^{\mu }=g^{\mu \nu }n_{\nu }\) can be interpreted as the 4-velocity of an observer moving along that normal, which we will call a normal observer from now on. From there, we can deduce that \(\epsilon =-p^{\mu } n_{\mu }=\alpha p^{t}\) is the energy of a neutrino of 4-momentum \(p^{\mu }\) as measured by a normal observer. More generally, the energy of a neutrino measured by an observer with 4-velocity \(u^{\mu }\) is \(\nu = -p^{\mu } u_{\mu }\). Here, we will generally reserve the symbol \(\epsilon \) for the energy measured by normal observers, and \(\nu \) for the energy measured in the fluid rest frame, i.e., when \(u^{\mu }\) is the 4-velocity of the fluid.
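These relations can be checked numerically at a single point. The sketch below assumes the standard \(3+1\) form of the metric, \(g_{tt}=-\alpha ^{2}+\beta _{k}\beta ^{k}\), \(g_{ti}=\beta _{i}\), \(g_{ij}=\gamma _{ij}\), and builds a null momentum as \(p^{\mu }=\epsilon (n^{\mu }+l^{\mu })\) with \(l^{\mu }\) a unit spatial vector. The function names and all numerical values are hypothetical choices made for illustration.

```python
import math


def four_metric(alpha, beta_up, gamma):
    """Assemble g_{mu nu} from the lapse alpha, shift beta^i and 3-metric gamma_ij."""
    beta_dn = [sum(gamma[i][j] * beta_up[j] for j in range(3)) for i in range(3)]
    beta_sq = sum(beta_dn[i] * beta_up[i] for i in range(3))
    g = [[0.0] * 4 for _ in range(4)]
    g[0][0] = -alpha**2 + beta_sq
    for i in range(3):
        g[0][i + 1] = g[i + 1][0] = beta_dn[i]
        for j in range(3):
            g[i + 1][j + 1] = gamma[i][j]
    return g


def normal_vector(alpha, beta_up):
    """Contravariant unit normal n^mu = (1/alpha, -beta^i/alpha)."""
    return [1.0 / alpha] + [-b / alpha for b in beta_up]


def null_momentum(eps, direction, alpha, beta_up, gamma):
    """p^mu = eps * (n^mu + l^mu), with l^mu = (0, l^i) normalized using gamma_ij."""
    norm = math.sqrt(sum(gamma[i][j] * direction[i] * direction[j]
                         for i in range(3) for j in range(3)))
    l_up = [0.0] + [d / norm for d in direction]
    n_up = normal_vector(alpha, beta_up)
    return [eps * (n_up[m] + l_up[m]) for m in range(4)]


def contract(g, a, b):
    """Return g_{mu nu} a^mu b^nu."""
    return sum(g[m][n] * a[m] * b[n] for m in range(4) for n in range(4))


# Hypothetical values of the metric variables at one grid point.
alpha = 0.8
beta_up = [0.1, -0.05, 0.02]
gamma = [[1.2, 0.1, 0.0], [0.1, 1.1, 0.0], [0.0, 0.0, 1.3]]

g = four_metric(alpha, beta_up, gamma)
n_up = normal_vector(alpha, beta_up)
p_up = null_momentum(10.0, [1.0, 2.0, -1.0], alpha, beta_up, gamma)

# n_mu = (-alpha, 0, 0, 0), so -p^mu n_mu reduces to alpha * p^t.
eps_normal = alpha * p_up[0]
print(contract(g, n_up, n_up), contract(g, p_up, p_up), eps_normal)
```

Up to round-off, the three printed numbers should be the norm of \(n^{\mu }\), the (vanishing) norm of \(p^{\mu }\), and the energy \(\epsilon \) passed to `null_momentum`.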

3.1.1 Equilibrium distribution

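We will often make use of the equilibrium distribution of neutrinos. For neutrinos in equilibrium with a fluid at temperature T moving with 4-velocity \(u^{\mu }\), that is the Fermi–Dirac distribution

\[ f^{\mathrm{eq}}_{\nu } = \frac{1}{1+\exp \left[ (\nu -\mu )/(k_{B}T)\right] }, \]

with \(\mu \) the chemical potential of neutrinos, and \(k_{B}\) Boltzmann’s constant. We note that in an orthonormal frame \(({\hat{t}},{\hat{x}}^{i})\) the energy density of neutrinos is

\[ T^{{\hat{t}}{\hat{t}}} = \int \frac{d^{3}p}{h^{3}}\, {\hat{\epsilon }}\, f_{\nu }, \]

with \({\hat{\epsilon }}=p^{{\hat{t}}}\) the energy of neutrinos as measured by a stationary observer in the orthonormal frame. We thus see that we recover the expected results for the equilibrium energy of a fermion gas in the fluid frame,

\[ E_{\mathrm{eq}} = \int \frac{d^{3}p}{h^{3}}\, \nu \, f^{\mathrm{eq}}_{\nu } = \frac{4\pi }{(hc)^{3}}\int _{0}^{\infty } \frac{\nu ^{3}\, d\nu }{1+\exp \left[ (\nu -\mu )/(k_{B}T)\right] }, \]

where in the last expression we used the special relativistic result \(\nu = \Vert p\Vert c\). This is more easily expressed in terms of the Fermi integrals \(F_{n}\), which we will use extensively in this section:

\[ F_{n}(\eta ) = \int _{0}^{\infty } \frac{x^{n}\, dx}{1+\exp (x-\eta )}. \]

From this definition, we see that

\[ E_{\mathrm{eq}} = \frac{4\pi }{(hc)^{3}}\, (k_{B}T)^{4}\, F_{3}\!\left( \frac{\mu }{k_{B}T}\right) . \]

Similarly, the equilibrium number density of neutrinos is

\[ n_{\mathrm{eq}} = \frac{4\pi }{(hc)^{3}}\, (k_{B}T)^{3}\, F_{2}\!\left( \frac{\mu }{k_{B}T}\right) , \]

and the average energy of neutrinos in equilibrium with the fluid

\[ \langle \nu \rangle = \frac{E_{\mathrm{eq}}}{n_{\mathrm{eq}}} = k_{B}T\, \frac{F_{3}(\mu /k_{B}T)}{F_{2}(\mu /k_{B}T)} \]

(which asymptotes to \(3.15k_{B}T\) at low densities, when \(\Vert \mu \Vert \ll k_{B} T\)).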
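The \(3.15k_{B}T\) limit can be verified with a few lines of code. The sketch below assumes the unnormalized Fermi integral \(F_{n}(\eta )=\int _{0}^{\infty }x^{n}/(1+e^{x-\eta })\,dx\), with \(\eta =\mu /(k_{B}T)\), and takes the average energy to be \(k_{B}T\,F_{3}/F_{2}\). The function names, the simple Simpson quadrature, and the truncation of the integral are illustrative choices rather than the method used in any particular simulation code.

```python
import math


def fermi_integral(n, eta, extra_range=60.0, intervals=6000):
    """Approximate F_n(eta) = int_0^inf x^n / (1 + exp(x - eta)) dx.

    Composite Simpson rule on [0, max(eta, 0) + extra_range]; the tail beyond
    that point is exponentially suppressed. `intervals` must be even. Intended
    for moderate eta only (very negative eta would overflow math.exp).
    """
    if intervals % 2:
        raise ValueError("intervals must be even for Simpson's rule")
    upper = max(eta, 0.0) + extra_range
    h = upper / intervals

    def integrand(x):
        return x**n / (1.0 + math.exp(x - eta))

    total = integrand(0.0) + integrand(upper)
    for k in range(1, intervals):
        total += (4.0 if k % 2 else 2.0) * integrand(k * h)
    return total * h / 3.0


def mean_energy_over_kT(eta):
    """Average equilibrium neutrino energy in units of k_B T: F_3(eta) / F_2(eta)."""
    return fermi_integral(3, eta) / fermi_integral(2, eta)


# Non-degenerate limit (mu = 0) and a strongly degenerate case for comparison.
print(f"{mean_energy_over_kT(0.0):.3f}")
print(f"{mean_energy_over_kT(50.0):.3f}")
```

For \(\eta =0\) the ratio is \((7\pi ^{4}/120)/(3\zeta (3)/2)\approx 3.15\), while for strongly degenerate neutrinos (\(\eta \gg 1\)) it approaches \(3\eta /4\), so the average energy is then set by the chemical potential rather than by the temperature.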

3.2 Commonly considered reactions

Let us now discuss the various neutrino–matter interactions that are commonly considered in neutron star merger simulations. Our objective here is not to provide detailed derivations of all interaction rates, but rather to review the reactions that may be taken into consideration and to get reasonable estimates of the scaling of reaction rates with the fluid properties. This will allow us to estimate when different reactions become important to the evolution of the system. Accordingly, for the sake of brevity, the cross-sections and reaction rates presented here sometimes make stronger approximations than what is done in merger simulations. However, for each reaction we provide references to more detailed discussions of these cross-sections. We will also make use of our discussion of the \(p+e^{-} \leftrightarrow n+\nu _{e}\) and \(e^{+}e^{-}\leftrightarrow \nu {\bar{\nu }}\) reactions to illustrate a number of issues that arise when attempting to include collision terms in the radiation transport equations, and thus discuss these reactions in more detail than the others. Given the significant overlap between reactions important to neutron star merger simulations and reactions important to core-collapse supernova simulations, a number of expressions in this section are slight modifications of the interaction rates presented in the review of neutrino reactions in core-collapse supernovae of Burrows et al. (2006), though for numerical estimates of interaction rates we focus on the conditions most commonly found in neutron star mergers and post-merger remnants.

3.2.1 Charged-current reactions

The reactions with the strongest impact on the observable properties of neutron star mergers involve absorption and emission of \(\nu _{e}\) and \({\bar{\nu }}_{e}\). Indeed, these reactions are often (but not always) the main source of cooling in the system, and they are the only reactions that lead to changes in the electron fraction \(Y_{e}\) of the fluid. In the hot, dense remnant of a BNS or NSBH merger, this mostly occurs through the reactions

which are typically included at least approximately in all merger simulations that attempt to account for neutrino–matter interactions.

Self-consistently calculating the forward and backward reaction rates can be difficult. Final state blocking means that these reactions depend on the distribution functions of \(p,n,e^{+},e^{-},\nu _{e},{\bar{\nu }}_{e}\). While we can typically assume equilibrium distributions at the fluid temperature and composition for \(n,p,e^{+},e^{-}\) in neutron star mergers, at least in regions where neutrino–matter interactions are important, the neutrinos may be far out of equilibrium—and many approximate schemes used in simulations today do not contain enough information about the neutrino distribution function to fully account for the value of \(f_{\nu }\) in all reactions.

To illustrate these issues, and some of the ways in which they are handled in existing simulations, let us consider the cross-section per baryon for the reaction \(n+\nu _{e} \rightarrow p+e^{-}\), the dominant absorption process in merger outflows, derived by Bruenn (1985). Following the notation of Burrows et al. (2006), we get

with

\(\nu _{\nu _{e}}\) the fluid frame neutrino energy, \(\Delta _{np} = (m_{n}-m_{p})c^{2} = 1.293\) MeV the difference in rest mass energy between neutrons and protons, \(m_{e}\) the mass of an electron, and \(W_{M}\) a small correction for weak magnetism and recoil (\(2.5\%\) for 20 MeV neutrinos) (Vogel 1984). Neutrinos in BNS and NSBH mergers have typical energies \(\nu \gtrsim 10\) MeV, significantly larger than the rest mass energy of an electron. Thus, to a reasonably good approximation (for the purpose of our qualitative discussion here at least),

This dependence of neutrino cross-sections on the square of the neutrino energies is found in many reactions relevant to neutron star mergers, and is going to be a significant source of uncertainty in our simulations, as many approximate transport algorithms do not provide detailed information about the neutrino spectrum.

The opacity for the absorption of \(\nu _{e}\) on n is then

with \(f_{n},f_{p}\) the distribution functions of neutrons and protons, and \(E\approx p^{2}/2m\) the kinetic energy of the baryons (ignoring the difference in mass between protons and neutrons and momentum transfer onto the proton). In the last expression, which ignores the final state blocking factor of the protons, \(n_{n}\) is the neutron number density. That expression would be very inaccurate in the densest region of a star (where \(f_{p}\) cannot be neglected), but is quite accurate in the lower-density regions where neutrinos decouple from the fluid.

To gain a more intuitive understanding of the rate of these interactions, let us assume that the typical length scale within a neutron star is \(\sim \) 1 km. We can see from this expression that for a 20 MeV neutrino, we expect \(\kappa _{a} = 1\) km\(^{-1}\) for \(n_{n}\sim 10^{-3}\) fm\(^{-3}\), i.e., for a neutron mass density of \(\sim 10^{12}\) g/cm\(^{3}\). As the center of a neutron star has density \(\rho _{c} \sim 10^{15}\) g/cm\(^{3}\), we see that neutrinos inside the neutron star have a mean free path much shorter than the size of the star, and decouple from the matter as they move through the crust of the neutron star.
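The order of magnitude of this estimate can be reproduced with a few lines of arithmetic. The sketch below assumes the high-energy limit \(\sigma \approx \sigma _{0}\,\frac{1+3g_{A}^{2}}{4}\,(\nu /m_{e}c^{2})^{2}\) with \(W_{M}\approx 1\), and uses \(\sigma _{0}\approx 1.705\times 10^{-44}\) cm\(^{2}\) and \(g_{A}\approx 1.27\) as assumed reference values; these constants and all function names are illustrative and not taken from the text.

```python
# Assumed constants (illustrative reference values, not from the text).
SIGMA_0_CM2 = 1.705e-44      # weak-interaction reference cross-section
G_A = 1.27                   # magnitude of the axial coupling constant
M_E_C2_MEV = 0.511           # electron rest-mass energy
NEUTRON_MASS_G = 1.675e-24   # neutron mass in grams

def sigma_nu_e_n(energy_mev):
    """Approximate nu_e + n absorption cross-section (cm^2), nu >> m_e c^2."""
    return SIGMA_0_CM2 * (1.0 + 3.0 * G_A**2) / 4.0 * (energy_mev / M_E_C2_MEV)**2

def neutron_density_for_opacity(kappa_per_km, energy_mev):
    """Neutron number density (cm^-3) at which kappa_a = n_n * sigma equals
    the requested opacity, ignoring proton final-state blocking."""
    kappa_per_cm = kappa_per_km / 1.0e5
    return kappa_per_cm / sigma_nu_e_n(energy_mev)

if __name__ == "__main__":
    n_n = neutron_density_for_opacity(1.0, 20.0)
    print("n_n   [cm^-3]  :", n_n)
    print("n_n   [fm^-3]  :", n_n * 1.0e-39)
    print("rho_n [g/cm^3] :", n_n * NEUTRON_MASS_G)
```

Under these assumptions the result is a few \(10^{35}\) cm\(^{-3}\), which agrees with the values quoted above at the order-of-magnitude level. The quadratic energy dependence means that a 10 MeV neutrino would decouple at a density four times higher.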

Similar scalings apply to the \(p+{\bar{\nu }}_{e} \rightarrow n + e^{+}\) reaction, as

and \(\kappa _{a} \approx n_{p} \sigma _{{\bar{\nu }}_{e} p}\) for the absorption of \({\bar{\nu }}_{e}\) on protons, under the same assumptions as for absorption onto neutrons. The correction \(W_{{\bar{M}}}\) is more significant than \(W_{M}\) (\(\sim 15\%\) at 20 MeV) (Vogel 1984; Horowitz 2002), though still not large enough to impact our order of magnitude estimates. As \(n_{p}<n_{n}\) in most regions of a neutron star merger remnant, the absorption opacity for \({\bar{\nu }}_{e}\) is smaller than for \(\nu _{e}\).

It is also possible to include in simulations the impact of \(\nu _{e}\) and/or \({\bar{\nu }}_{e}\) absorption on atomic nuclei. This is typically more important in the core-collapse context than in mergers, as in mergers most of the matter is in the form of free nucleons in regions where neutrino–matter interactions are significant. Additionally, simulations do not keep track of the abundances of individual nuclei, and equations of state for the fluid do not always contain that information, complicating any estimate of the absorption cross-section for this process. Cross-sections for the absorption of \(\nu _{e}\) onto nuclei can be found in Bruenn (1985). In high-density, low-temperature, neutron-rich regions inside of merging neutron stars, the modified URCA processes (Yakovlev et al. 2001; Alford et al. 2021)

(with N a spectator nucleon) may also play a role in the evolution of the system through the creation of an effective bulk viscosity in the post-merger remnant (Alford et al. 2018).

In the expressions derived so far for neutrino absorption, we have generally ignored final state blocking factors. These can however be approximately calculated if we rely on the fact that the fluid particles are in statistical equilibrium at a given temperature. Final state blocking factors for neutrino emission are slightly more complex to take into account. For neutrinos of a given energy and momentum, the neutrino emission rate will generally be of the form \(\eta = \eta ^{*} (1-f_{\nu })\), where the \((1-f_{\nu })\) term captures Pauli blocking for neutrinos in the final state. This is not a form that is practical to use in simulations, as we would like the emission rate and opacities to depend solely on the properties of the fluid, without any dependency on \(f_{\nu }\). Burrows et al. (2006) show that a convenient redefinition of the emissivity and absorption opacity can solve this problem. If we directly use \(\eta ^{*}\) as our emission rate (without neutrino blocking factor), and define \(\kappa _{a}^{*} = \kappa _{a} / (1-f_{\nu }^{\mathrm{eq}})\) as our absorption opacity (with \(f_{\nu }^{\mathrm{eq}}\) taken from Eq. (7)), then the collision term for charged-current reactions in Boltzmann’s equation can be written in the two equivalent ways

with \(\eta \) the emissivity per unit of solid angle and neutrino energy. Importantly, in the first expression \(\eta _{\nu }\) depends on \(f_{\nu }\), but in the second \(\eta _{\nu }^{*}\) does not. Accordingly, most simulations use \(\eta ^{*}\) and \(\kappa _{a}^{*}\) to parametrize neutrino–matter interactions. In our discussion of numerical algorithms for neutrino transport, emissivity and absorption opacity will generally refer to these corrected values.

We also note that when all reactions are accounted for, \(\eta ^{*} =c\kappa _{a}^{*} f_{\nu }^{\mathrm{eq}}\) (Kirchhoff’s law). This allows us to calculate only one of \((\eta ^{*},\kappa ^{*}_{a})\), then set the other to make sure that the equilibrium energy density of neutrinos has the desired physical value. This is particularly useful in dense, hot regions, where neutrinos quickly reach equilibrium with the fluid. In that regime, the exact emission and absorption rates can be more difficult to calculate (due to blocking factors), but they are also fairly unimportant: what matters is that neutrinos quickly reach their equilibrium density, and then diffuse through the dense regions. This is guaranteed when using Kirchhoff’s law, even if \(\eta ^{*}\) and \(\kappa _{a}^{*}\) are not extremely accurate.
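The equivalence between the blocked and redefined forms of the collision term can be verified numerically. The sketch below assumes that the two forms are \(\eta ^{*}(1-f_{\nu }) - c\kappa _{a} f_{\nu }\) and \(\eta ^{*} - c\kappa _{a}^{*} f_{\nu }\), with \(\eta ^{*}\) fixed by detailed balance; this is consistent with the definitions given in the text, but the function names and sample values are purely illustrative.

```python
import math

C_LIGHT = 2.998e10  # speed of light in cm/s

def f_equilibrium(energy, mu, kT):
    """Fermi-Dirac occupation number at the fluid temperature (same units
    for energy, chemical potential mu, and kT)."""
    return 1.0 / (math.exp((energy - mu) / kT) + 1.0)

def collision_term_blocked(f, kappa_a, f_eq):
    """Form with explicit Pauli blocking: eta* (1 - f) - c kappa_a f.
    eta* follows from detailed balance: eta* (1 - f_eq) = c kappa_a f_eq."""
    eta_star = C_LIGHT * kappa_a * f_eq / (1.0 - f_eq)
    return eta_star * (1.0 - f) - C_LIGHT * kappa_a * f

def collision_term_starred(f, kappa_a, f_eq):
    """Form with redefined opacity: eta* - c kappa_a* f, where
    kappa_a* = kappa_a / (1 - f_eq) and eta* = c kappa_a* f_eq (Kirchhoff)."""
    kappa_star = kappa_a / (1.0 - f_eq)
    eta_star = C_LIGHT * kappa_star * f_eq
    return eta_star - C_LIGHT * kappa_star * f

if __name__ == "__main__":
    f_eq = f_equilibrium(energy=20.0, mu=5.0, kT=5.0)  # energies in MeV
    kappa_a = 1.0e-5                                   # cm^-1, i.e. 1 km^-1
    for f in (0.0, 0.5 * f_eq, f_eq, 0.9):
        print(f, collision_term_blocked(f, kappa_a, f_eq),
              collision_term_starred(f, kappa_a, f_eq))
```

Both forms agree for any \(f_{\nu }\) and vanish at \(f_{\nu }=f_{\nu }^{\mathrm{eq}}\), but only the second can be evaluated from fluid quantities alone, which is the practical advantage described above.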

The total emission rate of neutrinos due to a given reaction can be calculated by integrating \(\eta ^{*}\) over both solid angle and neutrino energy. In terms of the absorption opacity, we get

For comparison with results for other reactions, we can estimate this emission rate for \(\nu _{e}\), ignoring the final state blocking factor of protons in the inverse reaction and using \(W_{M}\sim 1\). We then get for the emission of electron neutrinos due to electron capture on protons (energy per unit volume)

with \(\eta = \mu /(k_{B} T)\). Similarly, the number of neutrinos emitted per unit volume is simply

and the average energy of emitted neutrinos

For \(\Vert \eta _{\nu }\Vert \ll 1\), \(\langle \nu \rangle \sim 5.1 k_{B} T\). We note that this is higher than the average energy of neutrinos in equilibrium with the fluid. This will generally be true whenever neutrinos are allowed to directly escape from an emission region instead of thermalizing with the fluid first. A more explicit expression for \(Q_{pe^{-}}\) is

We see that the emission rate of neutrinos depends strongly on the fluid temperature, with \(Q\propto T^{6}\), and linearly on the fluid density (ignoring the Fermi integral term). The emission rate of \({\bar{\nu }}_{e}\) can be computed in the exact same manner,

In this expression, we made use of the fact that \(\eta _{\bar{\nu }_{e}}^{\mathrm{eq}} = - \eta _{\nu _{e}}^{\mathrm{eq}}\). The dependence of these emission rates on \(n_{n}\) and \(n_{p}\) may seem counterintuitive, as \(Q_{pe^{-}}\) involves absorption of electrons on protons, yet is proportional to \(n_{n}\). This is however a natural result of using Kirchhoff’s law; the complete dependence of \(Q_{pe^{-}}\) on the density of all fluid particles is effectively hidden in the Fermi integral term \(F_{5}(\eta _{\nu _{e}}^{\mathrm{eq}})\), and in the assumption of statistical equilibrium in the fluid. In particular, as \(F_{n}(\eta )\) monotonically increases with \(\eta \), and neutrino emission in post-merger remnants comes from regions of the fluid where \(\eta _{\nu _{e}}<0\) (more neutron-rich than in equilibrium), we generally get \(Q_{ne^{+}}>Q_{pe^{-}}\) even though \(n_{p}<n_{n}\).
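The two mean energies compared in this section, roughly \(5.1k_{B}T\) for freely emitted neutrinos and \(3.15k_{B}T\) for neutrinos in equilibrium, can be recovered in closed form in the non-degenerate limit. The sketch below assumes that these averages are \(F_{5}/F_{4}\) and \(F_{3}/F_{2}\) respectively (in units of \(k_{B}T\)), and uses the identity \(F_{n}(0)=n!\,(1-2^{-n})\,\zeta (n+1)\), evaluated through the alternating Dirichlet series; the function names are illustrative.

```python
import math

def dirichlet_eta(s, terms=4000):
    """Alternating series sum_{k>=1} (-1)^(k+1) / k^s = (1 - 2^(1-s)) zeta(s)."""
    return sum((-1) ** (k + 1) / k**s for k in range(1, terms + 1))

def fermi_integral_at_zero(n):
    """F_n(0) = int_0^inf x^n / (e^x + 1) dx = n! * dirichlet_eta(n + 1)."""
    return math.factorial(n) * dirichlet_eta(n + 1)

# Assumed forms of the averages, in units of k_B T, at zero degeneracy.
mean_energy_equilibrium = fermi_integral_at_zero(3) / fermi_integral_at_zero(2)
mean_energy_emitted = fermi_integral_at_zero(5) / fermi_integral_at_zero(4)

if __name__ == "__main__":
    print("equilibrium <nu>/kT:", mean_energy_equilibrium)
    print("emitted     <nu>/kT:", mean_energy_emitted)
```

The higher value for emitted neutrinos reflects the extra two powers of neutrino energy that the absorption cross-section contributes to the emissivity.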

3.2.2 Pair processes

After charged-current reactions, the most commonly considered processes for the emission and absorption of neutrinos are the pair processes

i.e., electron–positron annihilation, plasmon decay, and nucleon–nucleon Bremsstrahlung. Here, each pair can be \(\nu _{e}{\bar{\nu }}_{e}\), \(\nu _{\mu }{\bar{\nu }}_{\mu }\), or \(\nu _{\tau }{\bar{\nu }}_{\tau }\). Pair processes will be the dominant source of neutrino emission for muon and tau neutrinos, as charged-current reactions involving muons and taus are significantly less common than charged-current reactions involving electrons in the merger context (the mass of a muon is 105 MeV, while most of the post-merger remnant has temperature \(T\lesssim \) 50 MeV, and the neutrinospheres and optically thin regions are at even lower temperatures). Pair processes are however harder to accurately include in simulations due to their nonlinear dependence on the neutrino distribution functions. The reaction rates for the \(\nu {\bar{\nu }}\) pair productions (forward reactions) depend on the distribution function of both neutrinos and antineutrinos through blocking factors, which are typically difficult to estimate accurately with existing transport algorithms. Worse, the reaction rates for pair annihilations (inverse reactions) are directly proportional to the product of the distribution functions of neutrinos and antineutrinos.

Let us consider for example the reactions \(\nu {\bar{\nu }} \rightarrow e^{+} e^{-}\), for neutrinos of energy significantly higher than \(m_{e} c^{2}\) (as appropriate in neutron star mergers). We can slightly adapt the results of Salmonson and Wilson (1999), based on the Newtonian rate calculations of Cooperstein et al. (1986) and Goodman et al. (1987), to find the rate of momentum deposition per unit volume

with \(G_{F}=5.29\times 10^{-44}\) cm\(^{2}\) MeV\(^{-2}\) and \(D=2.34\) for electron type neutrinos, while \(D=0.50\) for muon or tau neutrinos. We have here chosen to rewrite the results of Salmonson and Wilson (1999) into a manifestly covariant expression more appropriate for general relativistic simulations. From this expression, we can see that the probability that a given neutrino is annihilated will depend on both the momentum of that neutrino and the distribution function of its antiparticle.

To limit the computational cost of this calculation, it is often convenient to make some assumptions regarding the distribution function of neutrinos, e.g. ignoring neutrino blocking factors (for the forward reactions), assuming equilibrium distributions of neutrinos (for either direction), or, in moment schemes, using approximate moments of the distribution functions (for the backward reactions). The most common strategy in existing merger simulations has been to compute the forward reaction rates assuming equilibrium distributions of neutrinos or ignoring blocking factors, either for all neutrinos or only for the muon and tau neutrinos. The inverse reaction rates are then computed using Kirchhoff’s law, even though that law is not necessarily valid for pair processes (O’Connor 2015). These approximations are generally reasonable for heavy-lepton neutrinos close to the neutrinosphere, i.e., where most of the neutrinos that leave the remnant are emitted, because as long as charged-current reactions involving muons and taus are negligible, the distribution functions of \(\nu _{\mu },{\bar{\nu }}_{\mu },\nu _{\tau },{\bar{\nu }}_{\tau }\) are all identical and close to equilibrium. They are however very unreliable for electron-type neutrinos and for calculations of the rate of \(\nu {\bar{\nu }}\) annihilation in regions where the neutrinos are not in equilibrium with the fluid (e.g. in the polar regions). We will consider some of the ways in which the latter process has been studied in our discussion of specific radiation transport algorithms and simulations.

Now that we have established the difficulty of properly treating pair processes, let us estimate their importance in the merger context. We begin again with \(e^{+}e^{-}\) annihilation. Ignoring neutrino blocking factors, Burrows et al. (2006) integrate the reaction rates of Dicus (1972) to find a total emissivity in \(\nu {\bar{\nu }}\) pairs of

with \(Q_{0}=9.76\times 10^{24}\) ergs cm\(^{-3}\) s\(^{-1}\) for \(\nu _{e}{\bar{\nu }}_{e}\), and \(Q_{0}=4.17\times 10^{24}\) ergs cm\(^{-3}\) s\(^{-1}\) for all other neutrinos combined. The average energy of the emitted neutrinos in the fluid frame is

(e.g., \(\langle \nu \rangle \approx 4.1T\) when \(\eta _{e}=0\)). Approximate expressions for the energy spectrum of the neutrinos are also found in Burrows et al. (2006), following the work of Bruenn (1985). If we compare this result to \(Q_{pe^{-}}\) and \(Q_{ne^{+}}\) from the previous section, we see that for \(\nu _{e} {\bar{\nu }}_{e}\), pair processes will only dominate over charged-current reactions in very hot and/or low-density regions of the fluid, where neutrinos will either rapidly reach their equilibrium distribution or rapidly cool the fluid. In such cases, getting exact reaction rates is not overly important as long as we obtain the correct equilibrium distribution and have sufficiently high emission rates. Even in dense regions of the fluid, neglecting \(\nu _{e}{\bar{\nu }}_{e}\) production is thus not a particularly strong approximation (but neglecting pair annihilation in low-density regions might be, as we will see).

What about the heavy-lepton neutrinos? The equilibrium energy density of neutrinos is

At \(T=1\) MeV, the timescale for neutrinos to reach that equilibrium density solely through \(e^{+}e^{-}\) emission is thus O(10 s), but at \(T=10\) MeV, it is O(0.1 ms), i.e., much shorter than the dynamical timescale of a neutron star merger. In hot regions, heavy-lepton neutrinos (muon and tau neutrinos) will thus reach their expected equilibrium density, and the neutrino luminosity of \(\nu _{\mu }{\bar{\nu }}_{\mu } \nu _{\tau } {\bar{\nu }}_{\tau }\) will be set by the diffusion timescale of neutrinos through the hot, dense remnant. For heavy-lepton neutrinos, ignoring pair processes (and missing the associated cooling of the remnant) would be significantly worse than including approximate reaction rates, as long as those rates properly recover the equilibrium energy of neutrinos in dense regions.
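The steep temperature dependence of this equilibration timescale can be illustrated with a short calculation. The sketch below assumes \(\eta _{e}=0\) (so that the emissivity reduces to \(Q_{0}T^{9}\) with \(T\) in MeV), zero neutrino chemical potential, and an equilibrium energy density per species of \(4\pi (k_{B}T)^{4}F_{3}(0)/(hc)^{3}\); the species count `n_species` and all function names are illustrative assumptions.

```python
import math

HC_MEV_CM = 1.23984e-10        # Planck constant times c, in MeV cm
MEV_TO_ERG = 1.60218e-6
F3_AT_ZERO = 7.0 * math.pi**4 / 120.0   # Fermi integral F_3(0)
Q0_HEAVY = 4.17e24             # erg cm^-3 s^-1, value quoted in the text

def equilibrium_energy_density(T_mev, n_species=1):
    """Equilibrium neutrino energy density (erg/cm^3) at zero chemical
    potential, for n_species identical species."""
    per_species_mev = 4.0 * math.pi * F3_AT_ZERO * T_mev**4 / HC_MEV_CM**3
    return n_species * per_species_mev * MEV_TO_ERG

def pair_emissivity(T_mev, q0=Q0_HEAVY):
    """e+ e- pair emissivity (erg cm^-3 s^-1) at eta_e = 0, no blocking."""
    return q0 * T_mev**9

def equilibration_time(T_mev, n_species=4):
    """Time (s) for pair emission alone to fill the equilibrium density."""
    return equilibrium_energy_density(T_mev, n_species) / pair_emissivity(T_mev)

if __name__ == "__main__":
    for T in (1.0, 10.0):
        print(T, "MeV:", equilibration_time(T), "s")
```

Under these assumptions the timescale is tens of seconds at 1 MeV and a fraction of a millisecond at 10 MeV. The prefactor depends on how species are counted, but the \(T^{-5}\) scaling, which produces the five orders of magnitude between the two temperatures, does not.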

Let us now briefly consider other pair processes. For nucleon–nucleon Bremsstrahlung, Burrows et al. (2006) (building on results by Brinkmann and Turner 1988; Hannestad and Raffelt 1998) find the total neutrino emissivity per species to be

We see that Bremsstrahlung will dominate over \(e^{+}e^{-}\) annihilation in denser, colder regions. In the densest region of a post-merger accretion disk (typically \(n_{n} \sim 10^{35-37}\) cm\(^{-3}\), \(T\sim (1-10)\) MeV), we see that the process dominating the production of heavy-lepton neutrinos may thus vary, and we can neglect neither Bremsstrahlung nor \(e^{+}e^{-}\) pair production/annihilation.

Approximate formulae for the total energy emission from plasmon decays and for the average energy of the neutrinos emitted through that process can be found in Ruffert et al. (1996). They are equivalent to

with \(Q_{0,pl} =(6\times 10^{23} {\text{ergs cm}}^{-3}\,{\text{s}}^{-1})\) per species for electron-type neutrinos and \(Q_{0,pl} =(10^{21} {\text{ergs cm}}^{-3}\,{\text{s}}^{-1})\) per species for other neutrinos. The blocking factor \(B=\langle (1-f_{\nu }) \rangle \langle (1-f_{{\bar{\nu }}}) \rangle \) can only be evaluated assuming a specific neutrino distribution function, while \(\gamma \approx 0.056 \sqrt{(\pi ^{2}+3\eta _{e}^{2})/3}\) is a parameter with strong sensitivity to the electron degeneracy parameter \(\eta _{e}\). We see that we need relatively fine-tuned conditions for plasmon decay to dominate over pair annihilation, especially for heavy-lepton neutrinos—as a result, this reaction is often ignored in merger simulations.

From these estimates of the emissivity of pair processes, we can understand one additional difficulty in the use of these processes in simulations. Both \(e^{+}e^{-}\) creation/annihilation and plasmon decays have \(Q\propto T^9\), with no explicit dependence on the fluid density (at least in regions where blocking factors are negligible). This can prove problematic in merger simulations, where numerical errors can lead to the creation of hot low-density regions whose properties are not necessarily well modeled by equations of state built to capture the properties of dense matter. As a result, some simulations ignore pair processes below an ad-hoc density threshold.

3.2.3 Neutrino scattering

Scattering of neutrinos on protons, neutrons, nuclei and electrons plays an important role in setting the diffusion timescale of neutrinos through the densest regions of merger remnants. The total cross-sections per baryon for the nearly elastic scattering of neutrinos onto protons and neutrons are (Yueh and Buchler 1976; Bruenn 1985; Burrows et al. 2006)

and the scattering opacity for these two processes combined is thus

We see that these quasi-elastic scatterings are about as likely as charged-current absorption for \(\nu _{e}\) and \({\bar{\nu }}_{e}\), and will often be a dominant contribution to the total opacity of the fluid for heavy-lepton neutrinos. We also note that the differential cross-sections are

with \(\mu =\cos \theta \) and \(\theta \) the scattering angle. As \(\delta _{p}\sim -0.2\) and \(\delta _{n}\sim -0.1\) at the most relevant neutrino energies (Burrows et al. 2006), back-scattering is favored. However, most merger simulations assume isotropic elastic scatterings in the fluid frame (\(\delta _{n,p}=0\)), an approximation whose impact on simulation results has not been tested so far.

Similar calculations can be made for scattering on atomic nuclei. For example, elastic scattering on \(\alpha \) particles has a total cross-section per nucleus of (Yueh and Buchler 1976; Burrows et al. 2006)

We note that in the neutron star merger context, we typically have \(n_{\alpha } \ll n_{n}\) in regions where neutrino scattering is important, and similar results apply to heavier nuclei. As many equations of state used in merger simulations do not provide detailed information about the abundances of individual atomic nuclei, the contribution of nuclei to the total scattering opacity is often only approximately taken into account (e.g. considering only \(\alpha \) particles, or \(\alpha \) particles and some ‘representative’ nucleus of fixed proton number Z and mass number A), or completely ignored.

Including inelastic scattering of neutrinos on electrons is a more difficult problem, and as a result inelastic scattering has not so far been taken into account in merger simulations. To understand these issues, we can look at the methods used to treat inelastic scattering in core-collapse supernovae (Bruenn 1985; Burrows et al. 2006). The relevant part of Boltzmann’s equation can be written

Note that \(f_{\nu }\) is the distribution function for neutrinos with energy \(\nu \) and momentum \(p_{\mu }\), while \(f_{\nu }'\) is the distribution function for neutrinos with energy \(\nu '\) and momentum \(p_{\mu }'\). \(R^{\mathrm{in,out}}\) are the scattering kernels to scatter into/out of the energy bin \(\nu \) from/to \(\nu '\). Even ignoring the blocking factors \((1-f_{\nu })\) and \((1-f_{\nu }')\), the collision terms clearly depend on the distribution function of neutrinos, and couple the values of \(f_{\nu }\) at all neutrino momenta. One possible approximation is to use a truncated expansion of the kernels in \(\cos \theta \):

with \(\Phi _{0,1}\) known functions of the incoming and outgoing neutrino energies. The integrals over \(p_{i}'\) are then similarly truncated using moments of the distribution function \(f_{\nu }'\). While this makes the evolution of \(f_{\nu }\) slightly more tractable numerically, we still end up with numerically stiff terms coupling every pair of neutrino energies, which makes these reactions expensive to include in simulations.

The scattering kernels have complex dependencies on the incoming and outgoing neutrino energies, and on the temperature of the fluid (which sets the electron distribution function). As a very rough order of magnitude estimate, and assuming that the neutrinos have energies larger than or comparable to the electrons, we have

with \(\epsilon _{\nu }\) the typical energy of neutrinos at the current point, and \(\epsilon _{e}\) the typical energy of electrons. This leads to an effective opacity (i.e., the inverse of the mean free path of neutrinos with respect to scattering on electrons)

We thus see that at the densities at which neutrino–matter interactions are most important in neutron star mergers, inelastic scattering on electrons has a significantly lower opacity than elastic scattering on nucleons or charged-current reactions, but not necessarily smaller than absorption opacities for pair processes. Accordingly, its direct impact on \(\nu _{e}\) and \({\bar{\nu }}_{e}\) is likely subdominant, but it could be important to the thermalization of heavy-lepton neutrinos.

Finally, we note that scattering on nucleons is not perfectly elastic. The typical exchange of energy between neutrinos and the fluid is much smaller for nucleon scatterings than for electron scatterings, but as seen above, the cross-sections for nucleon scatterings are larger in regions where neutrino–matter interactions are important. In core-collapse supernovae, Wang and Burrows (2020) showed that the smaller energy transfer during each scattering can be used to treat inelastic scattering on nucleons as a diffusion process in energy space, leading to much cheaper calculations than when using scattering kernels: there is no need to couple all energy bins through numerically stiff interaction terms. In Wang and Burrows (2020), the impact of neutrino–nucleon scattering on the thermalization of heavy-lepton neutrinos was also shown to be comparable to the impact of neutrino–electron scattering. Accounting for inelastic scattering on nucleons could thus provide an avenue to partially account for the thermalization effect of scattering events without an implementation of inelastic scattering on electrons.

3.2.4 Discussion

From the previous sections, we see that the reactions currently used in our most advanced merger simulations can, if properly included in a transport algorithm, capture the dominant processes for emission, absorption, diffusion, and thermalization of \(\nu _{e}\) and \(\bar{\nu }_{e}\) in most of a post-merger remnant. Without even getting into the complications of approximate transport methods, however, we see that the situation is already more complex for other species of neutrinos. Emission of heavy-lepton neutrinos is dominated by pair processes which are poorly modeled as soon as neutrinos are out of equilibrium with the fluid. Thermalization of these neutrinos is likely impacted by inelastic scattering, which current simulations do not take into account. Finally, pair annihilation of all types of neutrinos in low-density regions is difficult to include, but possibly important to jet formation. It is thus worth noting that uncertainties in transport schemes are not the only potential sources of errors in our modeling of neutrinos today; the choice of physical processes included in the simulations, and the accuracy to which they are modeled, remains an area where significant improvements are possible.

3.3 Quantum kinetics and neutrino oscillations

So far, we have considered neutrinos as particles in well-defined flavor states (electron, muon, tau). However, we know that this is only an approximation. Even in vacuum, the fact that the mass eigenstates of neutrinos are different from their flavor eigenstates leads to oscillations between flavors. Vacuum oscillations occur on length scales too long to impact the evolution of a post-merger remnant, though if neutrinos from a neutron star merger were ever to be observed, oscillations between the source and the Earth would certainly be significant. There are however other processes that lead to flavor transformation with more relevance to the merger problem. Generally, any process that transforms electron-type neutrinos into heavy-lepton neutrinos (or vice-versa) close enough to the merger remnant that neutrino–matter interactions are still impacting the composition of the outflows has the potential to change the properties of kilonovae and the outcome of nucleosynthesis in neutron star mergers.

One way to study neutrino oscillations is through the quantum kinetic equations (QKE). In that formalism, neutrinos are described by the \(3\times 3\) density matrix \(\rho (t,x^{i},p^{\mu })\). The diagonal terms of this matrix can be understood as equivalent to the distribution functions \(f_{\nu _{e}}\), \(f_{\nu _{\mu }}\), \(f_{\nu _{\tau }}\), while the off-diagonal terms encode quantum coherence between flavors. A second matrix \({\bar{\rho }}\) contains information about antineutrinos. The density matrix evolves according to (Vlasenko et al. 2014)

where the left-hand side is a total time derivative in phase-space, and the two terms on the right-hand side are responsible for, respectively, oscillations and collisions. The Hamiltonian H can be decomposed as

with \(H_{\mathrm{vac}}\) responsible for vacuum oscillations, \(H_{\mathrm{mat}}\) for interactions between neutrinos and the matter potential, and \(H_{\nu \nu }\) for neutrino self-interactions.
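To make the role of the oscillation term concrete, the following toy example evolves a density matrix under a Hamiltonian alone. It is a minimal sketch under strong simplifying assumptions that go well beyond the formalism described above: two flavors instead of three, vacuum term only (no matter or self-interaction potentials), no collision term, flat spacetime, and units with \(\hbar =1\). It also assumes that the oscillation term takes the usual commutator form \(d\rho /dt = -i[H,\rho ]\). The mixing angle `theta`, the oscillation frequency `omega`, and all function names are hypothetical.

```python
import math

def matmul(a, b):
    """Product of two 2x2 complex matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def drho_dt(h, rho):
    """Right-hand side of d(rho)/dt = -i [H, rho]."""
    hr, rh = matmul(h, rho), matmul(rho, h)
    return [[-1j * (hr[i][j] - rh[i][j]) for j in range(2)] for i in range(2)]

def add_scaled(a, b, s):
    """Return a + s * b for 2x2 matrices."""
    return [[a[i][j] + s * b[i][j] for j in range(2)] for i in range(2)]

def rk4_step(h, rho, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = drho_dt(h, rho)
    k2 = drho_dt(h, add_scaled(rho, k1, dt / 2))
    k3 = drho_dt(h, add_scaled(rho, k2, dt / 2))
    k4 = drho_dt(h, add_scaled(rho, k3, dt))
    return [[rho[i][j] + dt / 6 * (k1[i][j] + 2 * k2[i][j]
                                   + 2 * k3[i][j] + k4[i][j])
             for j in range(2)] for i in range(2)]

def evolve(theta, omega, t_final, steps=2000):
    """Evolve a pure electron-flavor state and return rho(t_final)."""
    c2, s2 = math.cos(2 * theta), math.sin(2 * theta)
    h_vac = [[-omega / 2 * c2, omega / 2 * s2],
             [omega / 2 * s2, omega / 2 * c2]]
    rho = [[1.0 + 0j, 0j], [0j, 0j]]   # diagonal: f_e = 1, f_x = 0
    dt = t_final / steps
    for _ in range(steps):
        rho = rk4_step(h_vac, rho, dt)
    return rho

def survival_probability(theta, omega, t):
    """Analytic two-flavor vacuum result, for comparison."""
    return 1.0 - math.sin(2 * theta) ** 2 * math.sin(omega * t / 2) ** 2

if __name__ == "__main__":
    rho = evolve(theta=0.6, omega=1.0, t_final=10.0)
    print(rho[0][0].real, survival_probability(0.6, 1.0, 10.0))
```

The diagonal entries play the role of the flavor distribution functions and exchange occupation while the trace is conserved; the off-diagonal entries, which vanish initially, become nonzero as coherence builds up.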

At least two types of oscillations have been found to be potentially important in the merger context. The matter–neutrino resonance (MNR) occurs when the matter potential is equal to the neutrino self-interaction potential, and can impact the luminosity of \(\nu _{e}\) and \({\bar{\nu }}_{e}\) within a few radii of the post-merger remnant (Caballero et al. 2014; Zhu et al. 2016). The fast-flavor instability (FFI), on the other hand, is due solely to the neutrino self-potential, and seems to occur in regions where the sign of the net lepton flux (number flux of \(\nu _{e}\) minus number flux of \({\bar{\nu }}_{e}\)) changes between different directions of propagation of the neutrinos (Banerjee et al. 2011; Wu et al. 2017; Grohs et al. 2022). The FFI occurs on very short timescales (\(\sim \)ns, i.e., cm length scales), and is likely active in many regions close to the post-merger remnant (Grohs et al. 2022). How much flavor transformation occurs as a result of the FFI remains uncertain, but recent studies using simplified prescriptions for where the FFI occurs and how much flavor transformation happens as a result have shown that it could plausibly lead to significant changes in the composition of matter outflows (Li and Siegel 2021; Fernández et al. 2022). As quantum kinetics is not at this point studied as part of general relativistic radiation transport algorithms coupled to merger simulations, but rather evaluated using either simple approximations or specialized zoomed-in simulations, we do not discuss it in more detail here. We do however emphasize that these oscillations could very well have an impact on the composition of the matter outflows produced in mergers.
The fact that oscillations are not included directly within simulations is due largely to the additional technical difficulty of evolving the quantum kinetic equations and to the very short timescales involved in the FFI, rather than to a certainty that oscillations are not important to astrophysical results. Obtaining better models for the role of oscillations in merger simulations is certainly an important open problem today.

4 Radiation transport algorithms

Having discussed the reactions that we would like to take into account in neutron star merger simulations, we can now turn to a discussion of the various methods used so far to treat neutrino transport and neutrino–matter interactions. These can be broadly classified into quasi-local leakage schemes, approximate transport schemes based on the moment formalism, and Monte-Carlo evolution of Boltzmann’s equation. Multiple simulations have also considered mixed leakage-moment schemes, while algorithms mixing Monte-Carlo methods with a moment scheme have been considered but not successfully used in merger simulations. For most of this section, we attempt to keep the discussion focused on the methods used for general relativistic radiation hydrodynamics simulations, either in the context of neutron star merger simulations or for the evolution of their post-merger remnant. We will however discuss along the way a number of techniques that were first developed for Newtonian simulations or for simulations using quasi-Newtonian potentials that have either been ported to general relativistic simulations, or are likely to be used in that context in the near future. This is particularly true for the more advanced leakage schemes, which were first developed in non-relativistic codes but are currently being integrated in general relativistic simulations. We also note that neutrino radiation transport algorithms used in simulations of core-collapse supernovae are often more advanced than any of the algorithms used in merger simulations (e.g. Mezzacappa and Bruenn 1993; Liebendoerfer et al. 2009; Takiwaki et al. 2014; Kuroda et al. 2016; O’Connor and Couch 2018; Roberts et al. 2016; Bruenn et al. 2020; Skinner et al. 2019). In fact, many algorithms used in merger simulations today are directly inspired by work done in the core-collapse community.
Accordingly, while we do not attempt to review the algorithms used in the core-collapse context, we will occasionally refer to methods developed for core-collapse simulations if they have been used in the merger context. More advanced methods have also been proposed, but not yet applied to the merger problem; e.g. methods for a fully covariant evolution of the radiative transport equations appropriate for a direct discretization of Boltzmann’s equation have recently been studied in Davis and Gammie (2020), Lattice–Boltzmann methods have been implemented and used on test problems in Weih et al. (2020a), and the MOCMC (Method of Characteristics Moment Closure) method has shown that it is possible to combine particle and moment formalisms to improve on the convergence properties of a pure Monte-Carlo radiation transport code (Ryan and Dolence 2020).

In this review, we focus particularly on moment methods, as they have been used in the majority of the most advanced radiation hydrodynamics simulations of mergers to date. Most general relativistic simulations using leakage schemes use methods that are at best order-of-magnitude accurate, while very few simulations have been performed with the recently developed Monte-Carlo algorithms. Accordingly, moment schemes currently remain our best source of information about the role of neutrinos in neutron star mergers.

We note that while most simulations consider 3 species of neutrinos and 3 species of antineutrinos, it is fairly common for simulations to assume that the distribution functions of all heavy-lepton neutrinos \(\nu _{\mu },\nu _{\tau },{\bar{\nu }}_{\mu }, {\bar{\nu }}_{\tau }\) are identical, and thus to replace the evolution of those 4 species by the evolution of a single species \(\nu _{x}\) that represents them all; we will use the notation \(\nu _{x}\) to represent all heavy-lepton neutrinos here as well.

4.1 Leakage schemes

4.1.1 Overview

Leakage algorithms are the simplest methods used to treat neutrinos in neutron star merger and post-merger simulations. In their most basic form, they can capture the cooling of the post-merger remnant at the order-of-magnitude level, but not the evolution of the composition of the outflows. More advanced leakage schemes have however been developed for post-merger simulations, and mixed leakage-moment schemes have been used in general relativistic merger simulations. Those advanced schemes can at least approximately capture absorption within the outflows of neutrinos emitted by a post-merger accretion disk or by a neutron star remnant.

The leakage schemes used in merger simulations today were first developed by Ruffert et al. (1996) and Rosswog and Liebendörfer (2003). They generally rely on a local computation of the neutrino energy and number emission rates per unit volume, \(Q_{\nu ,{\mathrm{free}}}\) and \(R_{\nu ,{\mathrm{free}}}\), for each species of neutrinos. In addition, leakage schemes compute an estimate of the optical depth between a given grid cell and the outer boundary of the computational domain, in order to estimate the diffusion timescale of trapped neutrinos through the remnant.

The energy emission rate \(Q_{\nu ,{\mathrm{free}}}\) is calculated as described in Sect. 3.2. In the merger context, simulations have usually considered the total (energy-integrated) emission rate, but energy-dependent leakage schemes have been developed for post-merger simulations (Perego et al. 2016). In the former case, \(R_{\nu ,{\mathrm{free}}} = Q_{\nu ,{\mathrm{free}}}/\langle \epsilon _{\nu }\rangle \), with \(\langle \epsilon _{\nu }\rangle \) the average energy of emitted neutrinos. In the latter case, \(R_{\nu ,{\mathrm{free}}}\) for each energy bin is just \(Q_{\nu ,{\mathrm{free}}}/\epsilon _{\nu }\), with \(\epsilon _{\nu }\) the energy at the center of the bin. In optically thin regions, this is sufficient to calculate the cooling rate and composition changes of the fluid. In optically thick regions, however, the rates at which neutrinos carry away energy and lepton number are much lower than the free emission rates. In those regions, we expect neutrinos to quickly reach their equilibrium distribution function \(f_{\nu }^{\mathrm{eq}}\), and to slowly diffuse out of the remnant over a time scale \(t_{\mathrm{diff}}\). The rate at which neutrinos carry energy away from a given cell is then approximately given, for neutrinos of a given energy \(\nu \), by

\[ Q_{\mathrm{diff}}(\nu ) \approx \frac{E_{\nu }^{\mathrm{eq}}}{t_{\mathrm{diff}}}, \]

with \(E_{\nu }^{\mathrm{eq}}\) the equilibrium energy density of neutrinos. Most leakage schemes used in merger simulations implement the diffusion time scale prescription of Rosswog and Liebendörfer (2003)

\[ t_{\mathrm{diff}} = \alpha _{\mathrm{diff}}\, \frac{\tau _{\nu }^{2}}{\kappa _{\mathrm{tot}}}, \]

where \(\kappa _{\mathrm{tot}}\) is the total opacity at the current point (including all absorption and scattering processes considered in the simulation), and \(\tau _{\nu }\) is the estimated optical depth between that point and the domain boundary. The parameter \(\alpha _{\mathrm{diff}}\) is calibrated to the result of transport simulations; Rosswog and Liebendörfer (2003) use \(\alpha _{\mathrm{diff}}=3\), but this choice is not unique (e.g. O’Connor and Ott 2010 argue for an increase of \(\alpha _{\mathrm{diff}}\) by a factor of two). The optical depth is defined as

\[ \tau _{\nu } = \min _{\Gamma } \int _{\Gamma } \kappa _{\mathrm{tot}}\, ds, \]

with the minimum taken over all possible paths \(\Gamma \) starting from the current point and ending at the boundary of the computational domain. The optical depth \(\tau _{\nu }\) is energy dependent, but the reactions that dominate the calculation of \(\kappa _{\mathrm{tot}}\) all have \(\kappa \propto \nu ^{2}\) (charged-current reactions and elastic scatterings). Calculating \(\tau _{\nu }\) at a single energy and then assuming \(\tau _{\nu } \propto \nu ^{2}\) is thus a reasonable approximation. We discuss different methods to estimate \(\tau _{\nu }\) later in this section. More complex estimates of \(t_{\mathrm{diff}}\) are also possible; for example, in the Improved leakage–equilibration–absorption scheme (ILEAS) of Ardevol-Pulpillo et al. (2019), separate diffusion timescales are calculated for the number and energy emission rates from the local gradients of the number density and energy density. Such a local calculation also has the advantage of allowing for emission rates that match the expected diffusion limit in optically thick regions, which is not possible using the simpler dimensional analysis of earlier schemes.

In an energy-integrated leakage scheme, one then needs to integrate \(Q_{\mathrm{diff}}(\nu )\) and \(R_{\mathrm{diff}}(\nu )\) separately over \(\nu \):

or, if we assume \(t_{\mathrm{diff}}\propto \nu ^{2}\),

and similarly for the number diffusion rate

The average energy of escaping neutrinos in the diffusion regime is then

We note that the average energy of diffusing neutrinos is significantly lower than the average energy of a thermal spectrum, reflecting the fact that low energy neutrinos diffuse faster than high energy neutrinos.
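The statement above can be checked numerically with a minimal sketch. It assumes, for illustration only, that the equilibrium distribution is a Fermi-Dirac function with temperature \(T\) and degeneracy parameter \(\eta \), and that \(t_{\mathrm{diff}}\propto \nu ^{2}\) exactly. Under those assumptions the energy spectrum \(\propto \nu ^{3} f_{\nu }^{\mathrm{eq}}\) and the number spectrum \(\propto \nu ^{2} f_{\nu }^{\mathrm{eq}}\) are each divided by \(\nu ^{2}\), so the mean energy of diffusing neutrinos is \(T F_{1}(\eta )/F_{0}(\eta )\), against \(T F_{3}(\eta )/F_{2}(\eta )\) for the thermal spectrum, where \(F_{k}\) is the Fermi integral of order \(k\). The function names are hypothetical and are not taken from any merger code.

```python
import math


def fermi_integral(k, eta, x_max=60.0, n=20000):
    """F_k(eta) = int_0^inf x^k / (exp(x - eta) + 1) dx.

    Evaluated with composite Simpson's rule on [0, x_max + max(eta, 0)];
    the integrand is exponentially small beyond that range.
    n must be even.
    """
    upper = x_max + max(eta, 0.0)
    h = upper / n

    def integrand(x):
        return x**k / (math.exp(x - eta) + 1.0)

    total = integrand(0.0) + integrand(upper)
    for i in range(1, n):
        weight = 4.0 if i % 2 else 2.0
        total += weight * integrand(i * h)
    return total * h / 3.0


def mean_energy_thermal(temperature, eta):
    """Mean energy of an equilibrium (Fermi-Dirac) spectrum: T F_3 / F_2."""
    return temperature * fermi_integral(3, eta) / fermi_integral(2, eta)


def mean_energy_diffusing(temperature, eta):
    """Mean energy of escaping neutrinos if t_diff scales as nu^2: T F_1 / F_0.

    ASSUMPTION: t_diff is exactly proportional to nu^2, so both the energy
    and number spectra pick up a factor 1/nu^2 relative to equilibrium.
    """
    return temperature * fermi_integral(1, eta) / fermi_integral(0, eta)
```

For \(\eta = 0\) this gives a mean energy of about \(1.19\,T\) for diffusing neutrinos, compared to about \(3.15\,T\) for the thermal spectrum.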

The actual rate at which neutrinos leave a given region of the fluid is then given by an interpolation between the estimates valid at low and high optical depth, effectively considering that neutrino transport is limited by the lower of those two rates. Ruffert et al. (1996) use

Alternatively, Sekiguchi (2010) considers an exponential transition between the two regimes

These results can then be coupled to the evolution of the fluid equations using conservation of energy-momentum and lepton number

The neutrino pressure term can for example be computed assuming a relativistic gas of neutrinos in equilibrium with the fluid, i.e., \(P_{\nu _{i}} = E_{\nu _{i}}^{\mathrm{eq}}/3\) (O’Connor and Ott 2010), potentially suppressed in regions where neutrinos are not trapped. Alternatively, we will see below that more advanced leakage schemes have been coupled to evolution equations for the trapped neutrinos—in which case the neutrino pressure is calculated directly from the estimated energy density of trapped neutrinos.
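As an illustration of how the pieces of a basic leakage scheme fit together, the following sketch combines a diffusion time scale of the form \(\alpha _{\mathrm{diff}}\tau _{\nu }^{2}/\kappa _{\mathrm{tot}}\) with an interpolation between the free-emission and diffusion rates. Several choices here are assumptions rather than a transcription of any published scheme: units with \(c=1\), the harmonic-mean form of the interpolation (chosen because it is bounded by the lower of the two rates), and all function names.

```python
def diffusion_time(tau, kappa_tot, alpha_diff=3.0):
    """Diffusion time scale t_diff = alpha_diff * tau^2 / kappa_tot.

    ASSUMPTION: units with c = 1; alpha_diff = 3 is the value quoted in the
    text for Rosswog and Liebendoerfer (2003).
    """
    return alpha_diff * tau**2 / kappa_tot


def effective_rate(q_free, e_eq, t_diff):
    """Interpolate between the free-emission and diffusion-limited rates.

    ASSUMPTION: harmonic-mean interpolation,
        Q_eff = Q_free * Q_diff / (Q_free + Q_diff),  Q_diff = E_eq / t_diff,
    rewritten so that it stays finite when t_diff -> 0 (optically thin limit).
    """
    return q_free / (1.0 + q_free * t_diff / e_eq)


def leakage_rate(q_free, e_eq, tau, kappa_tot, alpha_diff=3.0):
    """Effective energy loss rate for one cell and one neutrino species."""
    t_diff = diffusion_time(tau, kappa_tot, alpha_diff)
    return effective_rate(q_free, e_eq, t_diff)
```

In the optically thin limit (\(\tau _{\nu }\rightarrow 0\)) this returns the free emission rate, while at high optical depth it approaches \(E_{\nu }^{\mathrm{eq}}/t_{\mathrm{diff}}\); the same structure would apply to the number rates.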

4.1.2 Leakage in general relativistic merger simulations

In general relativistic merger simulations, the first published leakage scheme was developed by Sekiguchi (2010). This algorithm is a mixed moment-leakage scheme with significantly more complexity than the simple scheme described above. The algorithm assumes evolution equations

for the stress-energy tensor \(T^{\alpha \beta }_{\mathrm{fl+tr}}\) of the fluid and trapped neutrinos combined, \(T^{\alpha \beta }_{\mathrm{st}}\) of streaming neutrinos, and for the lepton fraction \(Y_{l}\). The streaming neutrinos are evolved using a moment scheme (see Sect. 4.2), which allowed later iterations of this algorithm to relatively easily take into account reabsorption of the streaming neutrinos in low density regions. We can see from the evolution equations that this algorithm has the advantage of guaranteeing exact conservation of energy-momentum. Additionally, the algorithm evolves the fractions \(Y_{e}\), \(Y_{\nu _{e}}\), \(Y_{\bar{\nu }_{e}}\) and \(Y_{\nu _{x}}\) of electrons and neutrinos, assuming that these fractions reach their equilibrium value (at given temperature, density and lepton fraction) in regions where neutrino–matter interactions are fast compared to the numerical time step. This allows for a relatively simple estimate of the contribution of neutrinos to the fluid pressure, assuming that the neutrinos are a relativistic gas.

An algorithm closer to the original methods of Ruffert et al. (1996) and Rosswog and Liebendörfer (2003) was first used in general relativistic simulations by Deaton et al. (2013). In that work, the only contribution of neutrinos to the evolution of the system is the source terms of Eqs. (55)–(56). In Deaton et al. (2013), the minimum optical depth was calculated by considering lines along the coordinate directions \({\hat{x}},{\hat{y}},{\hat{z}}\) of a Cartesian grid, as well as along the diagonals of a cube in the same coordinates, a method similar to that previously used by Ruffert and Janka (1999). This algorithm however requires global communications between all points of the numerical grid whenever \(\tau _{\nu }\) is computed, and creates preferred directions along the axes of the Cartesian coordinates. An improved method to calculate \(\tau _{\nu }\) was later proposed by Neilsen et al. (2014), and is now the most commonly adopted algorithm in numerical relativity simulations. Their method relies on finding the path of shortest optical depth linking neighboring cell centers on a grid. We can indeed discretize our equation for \(\tau _{\nu }\) at point \(\bar{x}\) as

with the minimum taken over all neighboring points \({\bar{x}}_{n}\). Here, \(\Delta s_{n}\) is the distance between \({\bar{x}}\) and \({\bar{x}}_{n}\). Given an initial guess \(\tau _{\nu }^0\) for the optical depth at each point, we can solve this equation iteratively, using

until \(\Vert \tau _{\nu }^{k+1}-\tau _{\nu }^{k}\Vert <\epsilon \) at all points for some small constant \(\epsilon \). Because the optical depth evolves slowly over time, a single iteration initialized with the value of \(\tau _{\nu }\) at the previous time step is generally sufficient to maintain a good estimate of \(\tau _{\nu }\) everywhere, except when computing \(\tau _{\nu }\) for the first time. This method is now widely used in neutron star merger simulations (Foucart et al. 2014; Radice et al. 2018; Mösta et al. 2020; Cipolletta et al. 2021; Most and Raithel 2021). A conceptually similar algorithm that does not rely on the existence of an underlying Cartesian grid has also been developed in Perego et al. (2014a), allowing for the easy use of this method in grid-less simulations (e.g. SPH), while an improved numerical method to solve for \(\tau _{\nu }\) by solving the eikonal equation has been proposed by Palenzuela et al. (2022).
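The neighbor-based iteration described above can be sketched on a small two-dimensional Cartesian grid. This is an illustrative toy, not the implementation of Neilsen et al. (2014): the update is assumed to take the form \(\tau _{\nu }({\bar{x}}) = \min _{n}[\tau _{\nu }({\bar{x}}_{n}) + {\bar{\kappa }}\,\Delta s_{n}]\), with \({\bar{\kappa }}\) the average of the opacity at the two cell centers (an assumption), points outside the grid are assigned zero optical depth (also an assumption), and the function name and arguments are hypothetical.

```python
import math


def optical_depth(kappa, dx, eps=1e-10, max_iter=10000, tau0=None):
    """Iteratively estimate the minimum optical depth to the domain boundary.

    kappa : 2D list of total opacities at cell centers (units of 1/length)
    dx    : grid spacing
    tau0  : optional initial guess (e.g. the previous time step's result)
    """
    ny, nx = len(kappa), len(kappa[0])
    if tau0 is None:
        tau = [[0.0] * nx for _ in range(ny)]
    else:
        tau = [row[:] for row in tau0]
    # The 8 nearest neighbors, including diagonals.
    offsets = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1)
               if (di, dj) != (0, 0)]

    for _ in range(max_iter):
        new_tau = [[0.0] * nx for _ in range(ny)]
        max_change = 0.0
        for i in range(ny):
            for j in range(nx):
                best = math.inf
                for di, dj in offsets:
                    ni, nj = i + di, j + dj
                    ds = dx * math.hypot(di, dj)
                    if 0 <= ni < ny and 0 <= nj < nx:
                        k_avg = 0.5 * (kappa[i][j] + kappa[ni][nj])
                        candidate = tau[ni][nj] + k_avg * ds
                    else:
                        # ASSUMPTION: tau = 0 just outside the grid.
                        candidate = kappa[i][j] * ds
                    best = min(best, candidate)
                new_tau[i][j] = best
                max_change = max(max_change, abs(best - tau[i][j]))
        tau = new_tau
        if max_change < eps:
            break
    return tau
```

Passing the previous result as `tau0` mirrors the strategy described in the text of reusing the optical depth from the previous time step, so that very few iterations are needed once an initial solution is available.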

4.1.3 Leakage limitations and improved leakage schemes

The accuracy of a leakage scheme can be very problem dependent. The free parameters in most leakage schemes are calibrated to spherically symmetric transport problems, and tend to perform best in that context—while neutron star mergers and their post-merger remnants are very asymmetric. Even ignoring symmetry issues, however, the standard scheme discussed above has a number of important limitations. We have already mentioned the fact that the simplest leakage schemes do not accurately capture the local diffusion rate of neutrinos in the high optical depth limit; in this section we consider a few additional notable issues.

First, in regions where the total optical depth is high but the absorption and inelastic scattering optical depths are low, the assumption that neutrinos reach their equilibrium distribution function can be inaccurate. This is particularly problematic for heavy-lepton neutrinos, which typically have much lower absorption opacity than scattering opacity. One way to solve this issue is to keep track of the energy density of neutrinos, rather than assuming an equilibrium energy density. In Newtonian simulations of post-merger disks, the Advanced Spectral Leakage (ASL) scheme of Perego et al. (2016), for example, calculates the energy and number density of trapped neutrinos assuming that the distribution function of trapped neutrinos \(f_{\nu }^{\mathrm{tr}}\) satisfies the equation

with

for the estimated production time scale \(t_{\nu ,{\mathrm{prod}}}\) and diffusion time scale \(t_{\nu ,{\mathrm{diff}}}\). Here, \(\Delta t\) is the time step of the evolution. We see that in this scheme, if the time scale to produce neutrinos is long, the trapped neutrinos do not reach equilibrium with the fluid. Perego et al. (2016) further assume