Abstract

The industrialization of automated driving functions according to level 3 requires an efficient test and calibration concept to deal with an increased complexity, growing customer demands, and a larger vehicle fleet offered. Therefore, a method for a complexity reduction of the calibration parameter space is presented. In the two-step approach, a qualitative sensitivity analysis is used to identify valid regions in the search space and subsequently decrease dimensionality based on the parameter-specific global influences. The reduced parameter space and sensitivity information can then serve as a starting point for an efficient calibration process on the target hardware. To examine the method’s potential, our approach is applied to the parameter space of an automated driving function. The results expose clear dependencies between parameters and driving scenarios and allow an exclusion of parameter space dimensions based on sensitivity values. The predefined search space can be narrowed down to valid regions using the parameter range identification approach. Finally, the findings are validated with a quantitative variance-based sensitivity analysis. The validation confirms that our method provides equivalent results with a comparably smaller number of system evaluations.

Similar content being viewed by others

1 Introduction

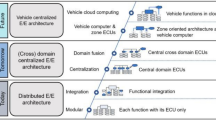

The upcoming market introduction of highly automated driving functions increases the requirements for the customer-orientated calibration of these systems. To deal with increased complexity, virtual testing tools (e.g., software in the loop and hardware in the loop) are used to lower the ratio of vehicle tests and, therefore, save costs and resources. For the parameterization of automated driving functions, optimization algorithms can be applied to obtain a certain system behaviour in dedicated driving situations using a closed-loop simulation environment. A reduction and improved system understanding of the high-dimensional parameter space previous to the simulative optimization can be valuable to enable a fast convergence towards the global optimum [14]. Moreover, it can serve as a valuable input for the manual calibration on the target hardware.

One commonly used approach to enable a more efficient optimization is the combination of optimization algorithms with sensitivity analyses (see for example [14, 15, 28]. The knowledge about the influence of a parameter on the objective function enables a fast converging optimization procedure and even offers chances to neglect parameters if their sensitivity value is small enough [23]. An objective function is used to evaluate the system behaviour in an optimization problem. Whereas sensitivity analyses were earlier mainly of mathematical interest to analyse the influence of the variables in differential equations, their field of application became larger nowadays [12]. The development of complex control systems and high-dimensional, nonlinear models motivated the usage of sensitivity analyses in the vehicle systems area to increase model understanding. In the contributions of Suarez et al. [25] and Wang [27], they use sensitivity analyses to evaluate the influence of design parameters of a vehicle body on the driving behaviour. Another popular area for sensitivity analyses and virtual optimizations is the calibration of powertrain components such as engines and transmissions. Due to its isolated testability on a test bench or as an X-in-the-loop model, sensitivity measures are calculated to understand the impact of certain design parameters on the powertrain performance (see, for example, [19], Chiang and Stefanoupoulou [7, 18]. A comparative review of different sensitivity analysis methods and their applications is provided in Hamby [10] as well as Iooss et al. [12].

Next to the chance to reduce dimensionality by neglecting parameters with small sensitivities, the optimization space is further bounded by parameter-specific ranges. Due to its potential to increase the effectiveness of optimization algorithms, several bounding approaches have been introduced in the context of system identification. The term ‘bounding’ therein stands for the process of confining parameters of a system, so that the input–output error remains below a certain threshold [16]. Bijan et al. [2] analytically derive valid ranges for input parameters of a genetic algorithm and observed an improved convergence behaviour. The approaches described by Cerone and Regruto [5, 6] enable an identification of valid parameter bounds for nonlinear dynamic control systems by modelling the nonlinear block as a linear combination of polynomials given a bounded output error. A comparative review of further bounding approaches is given by Milanese et al. [16].

The definition of valid parameter bounds is especially important prior to the application of sensitivity analyses. If invalid parameter values are considered, they might lead to an erroneous system behaviour that falsifies resulting sensitivity metrics [22]. However, factor bounds are usually defined empirically if an analytical derivation is not possible. The reason for that is mostly a too high computational effort needed to ascertain exact bounds [16].

In this contribution, we introduce an integrated method for an efficient analytical identification of valid parameter bounds and calculation of sensitivity values for the calibration problem of an automated driving function. By applying a qualitative sensitivity analysis to different regions in the parameter space, we aim to identify invalid areas that would lead to distorted results in the subsequent influence analysis and optimization. The usage of synergies in the sampling plan and the efficiency of the applied sensitivity analysis enable a reasonably small number of system evaluations needed to provide reliable results. Whereas simulative parameter analyses are already established in various areas (e.g., powertrain calibration), parameterizations of automated driving functions are nowadays mostly obtained on the target hardware. The hereafter described method offers a first step towards virtual parameterizations by providing an increased system understanding of the search space and redefining a subspace with the most influential parameters and validated domains.

The remainder of this paper is organized as follows. Section 2 provides the theoretical background for our work including applied sensitivity analyses and related convergence measures. Based on that, Sect. 3 introduces our method for the combined dimensionality reduction and parameter range identification. In Sect. 4, we apply the approach to reduce complexity of the parameter space of a level 3 automated driving function [20]. The results of Sect. 4 are thereafter validated with a comprehensive quantitative sensitivity analysis (Sect. 5), where we cross-check the impact of different parameter regions. Section 6 finally concludes the paper.

2 Theoretical background

As already mentioned, we use a qualitative sensitivity analysis to examine different regions of the search space and derive valid parameter ranges or influence measures. The findings provided by that are thereafter validated with a quantitative variance-based method with a distinctly larger sampling plan. Finally, we introduce convergence measures to evaluate sufficiency of the sampling size.

In general, sensitivity analyses can be classified into local and global approaches and further into quantitative and qualitative methods [22]. Whereas local analyses are usually only valid in the respective point, global approaches aim for universal validity within the parameter space. The difference between qualitative and quantitative methods is the interpretability of sensitivity measures. Qualitative measures allow only a relative comparison between influence values, but no direct conclusion on quantitative impacts on the objective function, although the advantage is a much smaller sampling plan needed to achieve convergence [23]. In the following, the objective function F(P) with respect to the parameter space P and the number of parameters \(n_{\text{p}}\) is defined as follows:

2.1 Elementary effects method

Due to its successful application in various fields related to vehicle control systems (c.f. [7, 10, 18, 19] and its computational efficiency, we use the elementary effects method (EEM) for our analyses. The EEM is a global qualitative analysis and was first introduced by Morris [17] and further developed by Campolongo et al. [3]. The calculation of sensitivity measures is based on a one-factor-at-a-time (OAT) sampling plan characterised by a variation in only one dimension between two consecutive samples. Among various sampling strategies, the radial approach as described by Campolongo et al. [4] offers the most uniform distribution of points in the parameter space. Starting from a quasi-random plan (e.g., latin hypercube sampling) containing \(r\) samples, each point is thereafter varied radially along all \(n_{\text{P}}\) dimensions one at a time, as exemplarily illustrated in Fig. 1. The number of samples \(n_{\text{EEM}}\) can be calculated with

For every radial sample group (c.f. Fig. 1), the relative change of the objective function F(P) with regard to the respective parameter change \(\Delta\) is calculated. The so-called elementary effect \({\text{EE}}_{i}^{j}\) for parameter i at the jth sample group is thus defined as follows:

After calculating all r elementary effects for each parameter, the mean \(\mu_{i}\) and standard deviation \(\sigma_{i}\) serve as sensitivity metrics for this method [17]:

When summing up elementary effects, as described in Eq. (4) some effects may neutralize each other if the model is non-monotonic and causes negative effects. Thus, a modified calculation of \(\mu_{i}\) is proposed in Campolongo et al. [3]:

Whereas Eq. (6) solves the problem of effects neutralizing each other, the mean \(\mu_{i}^{*}\) loses information about the direction of impact compared to \(\mu_{i}\). It is, therefore, recommended to calculate both metrics.

The information about the global influence of a parameter can be extracted by plotting the results of Eqs. (5) and (6) in a \(\mu^{*} - \sigma\)-plane (c.f. Fig. 2). The metric \(\mu^{*}\) is defined as the mean change in F(P) with regard to the respective parameter change. The \(\mu^{*}\)-intercept can, therefore, be interpreted as a measure for main effects of the parameter on F(P). For the calculation of \(\sigma\), the variance of the elementary effects is analysed. A widespread distribution of the relative influences (or elementary effects) towards F(P) can be explained by a nonlinear relationship between F(P) and the respective parameter or interdependencies to remaining parameters. The \(\sigma\)-value in Fig. 2 thus serves as an indicator for nonlinearities and interdependencies to other parameters [3].

Parameters laying in the upper right corner of the plot are thus more influential than in the lower left corner. As mentioned above, the sensitivity metrics are qualitative, i.e., they can be used to compare parameter impacts among each other, but do not allow a direct conclusion about the absolute influence on F(P).

2.2 Variance-based sensitivity analysis

The variance-based sensitivity analysis (VBSA) as described by Saltelli et al. [21,22,23] is based on the decomposition of the output variance into fractions that can be attributed to input variables or groups of inputs. In contrast to the EEM, the VBSA provides sensitivity values that are interpretable as ratios on the output variance and, therefore, normalized between zero and one. The calculation of the main effect \(S_{i}\) for parameter i is described in Eq. (7) following the notation in Saltelli et al. [21]:

The symbol V stands for the variance and E stands for the mean of the argument. The indices \(P_{i}\) and \(P\sim i\) implicate to perform respective operations over the factor i or all factors except i. \(S_{i}\), therefore, represents the ratio of the input variance of i with regard to the output variance and is called the first order effect on F(P). Effects of higher order (total effect) \(S_{\text{Ti}}\) can be calculated using Eq. (8):

In analogy to \(\sigma\) for the EEM, total effects can be interpreted as interdependencies [4]. Since the calculation of Eqs. (7) and (8) requires an unreasonably large number of system evaluations, many approximation techniques were developed. In this contribution, we use the approximation as described by Saltelli et al. [21, 22] and Jansen [13]. For the variance in Eq. (7), they propose to use an estimator as follows:

For Eq. (8), the following estimator is proposed:

Given the sample size N, two independent sampling matrices A and B each consisting of N rows and \(n_{\text{p}}\) columns are generated. These matrices are used to create a third matrix \(A_{\text{B}}^{\text{i}}\) which is equal to A except that the ith column is replaced by the ith column of B. The differences of the newly generated matrix and A or B are then used to approximate the numerator in Eqs. (7) and (8). The number of system evaluations \(n_{\text{VBSA}}\) for the variance-based approach is given by Eq. (11).

2.3 Convergence measures for sensitivity analyses

The number of samples \(n_{\text{EEM}}\) and \(n_{\text{VBSA}}\) is decisive for the validity of sensitivity measures obtained by the previously described analyses. If the sampling plan is too small, problem-specific nonlinear effects or interdependencies between parameters might be neglected [26]. Thus, several qualitative and quantitative convergence methods exist. In a qualitative analysis, we need to perform the proposed methods with different sampling sizes and compare the sensitivity values with regard to \(n_{\text{VBSA}}\) or \(n_{\text{EEM}}\). Unchanged results with increasing number of samples indicate convergence [11]. Quantitative approaches involve the calculation of the deviation of sensitivity values between two consecutive calculation steps. If the relative change falls below a predefined tolerance threshold, convergence is achieved [26]. However, two adjacent calculation steps could coincidentally lead to same sensitivity values, while the distribution changes again with a larger number of samples. Therefore, Sarrazin et al. [24] suggest the repeated calculation of sensitivities with different samples but constant sample size. Confidence intervals then allow a comparison based on a statistical basis. For the determination of confidence bounds, the bootstrapping method is applied which is commonly used in statistics to evaluate estimation techniques [9]. The idea of bootstrapping in our context is to repeatedly generate new sampling plans from the initial plan and calculate sensitivity metrics. The number of sampling plans used for the determination of confidence bounds is denoted by \(n_{\text{B}}\). Therefore, n random samples (or \(n_{\text{VBSA}}\) and \(n_{\text{EEM}}\) in our case) are pulled with replacement from the initial sample set of dimension (\(n\) × 1). For each of the \(n_{\text{B}}\) sampling matrices, the sensitivity analysis is applied resulting to \(n_{\text{B}}\) sensitivity metrics for each parameter to a given sample size. Commonly known percentile methods then enable the provision of confidence intervals to a defined confidence level \(\kappa_{\text{Conf}}\). After performing the bootstrapping method for different sample sizes, we can compare the width of the confidence intervals with respect to n and thus analyse convergence. The width \(R_{{{\text{CI}},{\text{i}}}}\) is hereafter defined as follows:

The variables \(S_{i}^{\text{ub}}\) and \(S_{i}^{\text{lb}}\) describe upper and lower bounds of the confidence interval for a sensitivity measure to a given confidence level \(\kappa_{\text{Conf}}\).

3 Combined method for the complexity reduction of the calibration problem for automated driving functions

Our approach intends to support the parameterization process of automated driving functions by narrowing down the search space to valid regions with regard to the objective function. To do so, we follow a two-step process that comprises a reduction of initial parameter bounds to valid ranges and a calculation of scenario-specific sensitivities. The output of the analyses is an increased system understanding on one hand and a decreased search space with smaller parameter bounds and less dimensions on the other hand. Figure 3 illustrates the structure of the method.

The input block of the chart comprises a simulation environment as the means to evaluate performance of the driving function in a representative scenario catalogue measured by the objective function. The parameters enable an optimization of the driving behaviour and are the main subject of our analysis.

3.1 Sampling approach

As a first step, we need to generate a sampling plan that enables both a derivation of valid parameter bounds and a sensitivity analysis. Since every sampling point requires one simulation run of the whole scenario catalogue, we intend to keep the number of samples as small as possible while still keeping a good coverage of the parameter space. Moreover, we aim to use synergies within the design for both analyses.

To examine the influence range of a parameter i, we intend to divide the initially defined domain defined by the upper bound \({\text{ub}}_{i}\) and lower bound \({\text{lb}}_{i}\) into smaller subregions. Thereafter, we perform sensitivity analyses in the reduced subspaces. The sensitivity value of the factor then provides information about the impact in the respective subrange. Due to its computational efficiency, we use the qualitative elementary effects method (EEM) to locally perform influence analyses. Based on that, we derive a valid search space and calculate global sensitivity metrics based on those radial samples that lay in permitted areas. A more detailed description of the approach is given in Sects. 3.2 and 3.3.

As a first step of the parameter range identification for the factor \(P_{i}\), we divide the initial parameter range into \(n_{\text{Int}}\) even intervals. Within the reduced search space defined by two consecutive bounds with respect to the parameter under test a Morris sampling, as described in Sect. 2.1, can be performed. The corresponding bounds \({\text{lb}}_{i}^{*}\) and \({\text{ub}}_{i}^{*}\) for the interval k\(\in \left[ {1, \ldots , n_{\text{Int}} } \right]\) can be calculated as follows:

With otherwise unchanged bounds, we can create a local Morris plan for the examination of the influence of parameter \(P_{i}\) in the interval k:

For the examination of domains of all \(n_{\text{p}}\) parameters, a total number of \(n_{\text{PB}}\) system evaluations are needed:

Figure 4a shows an exemplarily local Morris plan within the parameter space for the first interval of parameter \(P_{1}\) for a two-dimensional example.

For the subsequent calculation of the global sensitivity indices, we aim to use those radial sampling groups that lay within valid areas of the search space. We, therefore, check all elementary effects in the sampling plans and concatenate them if all of their OAT variations are located in valid areas. Figure 4b illustrates the filtering of radial sampling groups based on their location in the parameter space and the updated valid parameter bounds. Points laying on the dashed lines in Fig. 4b are not considered for the global sensitivity analysis. Since the evaluation of the objective function has already been performed for the first part of the analysis and the sample plans were generated independently, we can use these results without further computational effort.

3.2 Derivation of valid parameter bounds

As already pointed out in Sect. 1, the validity of parameter bounds is crucial for the applicability of sensitivity analysis. We consequently intend to first identify valid parameter ranges and thereafter perform the Morris sensitivity analysis using only valid samples.

Based on the sampling approach for the examination of intervals as presented in Sect. 3.1 and objective function values for each sample, we can perform the EEM locally in different regions of the parameter space. The number of discrete intervals per parameter is defined by \(n_{\text{Int}}\) (c.f. Sect. 3.1). For every subrange of the initial domain from parameter \(P_{i}\), we compute the sensitivity metrics \(\mu_{i}^{*}\) and \(\sigma_{i}\). If the search space was sufficiently sampled and none of the measures in the respective interval k are greater than a predefined threshold, the parameter \(P_{i}\) does not have an influence between \({\text{lb}}_{i}^{*} \left( k \right)\) and \({\text{ub}}_{i}^{*} \left( k \right)\). The validity of this statement has to be confirmed with convergence analyses for the number of samples \(n_{\text{PB}}\) or \(r\), respectively. Since high values of \(\mu_{i}^{*}\) and \(\sigma_{i}\) both indicate higher influences we aim to combine the two metrics to a single sensitivity value. The qualitative character of the EEM allows only a comparison among factors. We thus suggest to subsequently normalize sensitivity metrics with respect to the most influential parameter. The graphical equivalent in the \(\mu^{*} - \sigma\)-plane to the extreme case that all elementary effects are zero would be that the respective parameter lays in the point of origin. We, therefore, first calculate a single sensitivity measure that is defined as the Euclidean distance between the sensitivity point and zero. The formula for \(s_{i}\) is defined as follows:

Figure 5 illustrates sensitivity vectors, whose distances we define as sensitivity measures [c.f. Eq. (17)].

To normalize the values, we perform a MinMax-Scaling afterwards to calculate a relative sensitivity \(s_{i}^{\text{rel}}\) [8]:

With respect to the defined intervals, the variable \(s_{i,k}^{\text{rel}}\) denotes the sensitivity value of parameter \(P_{i}\) in the interval k. As pointed out in Sect. 2.1 that the main effect and degree of interdependencies and nonlinearities is highest in the upper right corner, we rate those parameters as most important.

For the identification of the valid parameter range, we suggest to make a conservative estimate for the initial bounds \({\text{lb}}_{i}\) and \({\text{ub}}_{i}\) and use the herein presented approach to narrow them down to valid regions (\({\text{lb}}_{i}^{\text{valid}}\) and \({\text{ub}}_{i}^{\text{valid}}\)). While the identification of influential intervals throughout the whole domain is theoretically possible with this approach, we thus assume a negligible influence mainly at outer areas of the parameter range. We analyse in Sect. 4 if this assumption holds for the considered use case. Therefore, we propose to examine sensitivities starting from the lowest interval with ascending k or the largest interval with descending k and identify valid lower and upper bounds \({\text{lb}}_{i}^{\text{valid}}\) and \({\text{ub}}_{i}^{\text{valid}}\) of the respective parameter \(P_{i}\). For the lower bound, starting from k = 1, we examine following intervals with rising values for k as long as the sensitivity value \(s_{i,k}^{\text{rel}}\) exceeds a lower sensitivity threshold \(s_{\text{min} , PB}^{\text{rel}}\) or all intervals were considered. The renewed lower bound is then obtained with Eq. (13). The upper bound identification works similar. An in-depth description of the suggested approach is given by the flowchart in Fig. 6. Elementary effects for parameter \(P_{i}\) in the interval k are herein given by \({\text{EE}}_{i,k}\).

3.3 Calculation of scenario-specific sensitivities

The aforementioned parameter range identification serves as a necessary process step to ensure the validity of global sensitivity measures computed in the second step of our method (c.f. Fig. 1). More importantly, the same sampling plan was used as for the hereafter described analysis, which enables a reuse of radial sampling groups (c.f. Sect. 3.1). The goal of the method presented in the following is to improve system understanding for the manual calibration process and potentially exclude parameter space dimensions based on their global influence.

Given the reordered sampling plan as exemplarily illustrated in Fig. 4b, the sensitivity metrics \(\mu_{i}^{*}\) and \(\sigma_{i}\) for the valid search space can be calculated following the Morris method, as described in Sect. 2.1. To enable a comparison of parameter influences among scenarios, we use the normalization approach as introduced in Eqs. (17) and (18). Since the simulation environment and the objective function may contain inaccuracies that complicate the transferability to the real world, the quantitative effect on F(P) would not be of big interest. Even if the absolute influence might change between manoeuvres, the relative sensitivity still provides valuable information about the order of magnitude parameters have in respective scenarios.

Similar to the method in Sect. 3.2, we have the opportunity to exclude unimportant factors from the optimization problem if the respective sensitivity value falls below a previously defined threshold \(s_{\text{min} }^{\text{rel}}\). Note that the threshold for the dimensionality reduction does not necessarily have to be the same as for the parameter range reduction (\(s_{{\text{min} ,{\text{PB}}}}^{\text{rel}} )\).

3.4 Evaluation of the complexity reduction

The two-step method described above provides a reduced valid parameter region based on newly identified bounds on one hand and scenario-specific sensitivities on the other hand. The normalization of influences allows the dimensionality reduction if the parameter’s sensitivity lays below the predefined threshold \(s_{\text{min} }^{\text{rel}}\).

In the following, we introduce metrics for an overall complexity reduction to measure the potential of our approach. Since the volume of an \(n_{\text{p}}\)-dimensional hyperspace can only serve as a comparison measure for equal dimensions, we introduce two independent metrics for the dimensionality reduction and parameter space reduction. The dimensionality reduction DR is defined as the relative decrease of the number of parameters through the sensitivity analysis. The variable \(n_{\text{p}}^{*}\) defines the number of parameters after the dimensionality reduction:

Similarly, the parameter space reduction PSR is defined as the relative decrease of the search space caused by the identification of the new bounds:

The higher the above-mentioned metrics (DR and PSR), the more the parameter space could be confined. It needs to be noted that the metrics serve as a means to compare the complexity reduction among scenarios and do not allow any conclusion to the actual reduction of computational resources or calibration time.

4 Complexity reduction for the calibration of an automated driving function

In this paragraph, we apply the previously presented method to reduce complexity of the calibration problem of an automated driving function. Therefore, we first describe the underlying optimization problem (Sect. 4.1) before presenting results in Sect. 4.2. Finally, in Sect. 4.3, we discuss our approach critically and perform convergence analyses (Sect. 4.4).

4.1 Problem description

The calibration of automated driving functions can be understood as an optimization problem that involves tuning parameters of the system, so that it provides a customer-friendly driving behaviour in relevant driving situations. Whereas optimization criteria can vary based on the function under test, the automated driving function of SAE automation level 3 (c.f. SAE [20] analysed in this work should be mainly optimized for driving comfort. The calibration is usually performed with a representative scenario catalogue aiming for an overall optimized performance. As pointed out before, we aim to virtualize the process and thus perform the complexity reduction within a closed-loop simulation. Without claiming an exact transferability to the target hardware, we can provide qualitative sensitivity information and promising regions in the parameter space for further simulative optimizations or manual calibrations in the vehicle. The objective function used in this contribution is defined as follows:

The function evaluates oscillations of the vehicle in the driving lane using the velocity \(v\) as well as the amplitude \(A_{\kappa }\) and frequency \(f_{\kappa }\) of the curvature \(\kappa\). The higher F(P) the better the system behaviour is assessed by the driver. The regression coefficients \(C_{1} , \ldots , C_{4} \in {\mathbb{R}}\) were identified previously in comprehensive driving studies. For the calibration of the driving function, eight parameters are considered. The variable P is, therefore, defined as \(P \in {\mathbb{R}}^{8}\), i.e., \(n_{\text{P}} = 8\). The parameters serve as calibration factors for the trajectory-planning module of the driving function. They define maximally allowed lateral and longitudinal accelerations as well as weight factors for a cost function that evaluates the suitability of planned trajectories. The manoeuvre catalogue can be arbitrarily large, but due to restricted computation resources, we define it just as comprehensive as necessary. The manoeuvre catalogue considered is defined based on examinations from [1]:

-

Keep lane on a curvy road (M1).

-

Lane change on the highway (M2).

-

Acceleration (M3).

-

Deceleration (M4).

-

Overtake slower vehicle on the highway (M5).

-

Lane change and stop (M6).

4.2 Complexity reduction

For an overall complexity reduction of the calibration problem, we apply the methods, as shown in Sects. 3.2 and 3.3, for all manoeuvres individually and provide a reduced parameter space with regard to the respective scenario. In a next step, conclusions valid for the whole scenario catalogue can be drawn. Since the method works similar for all scenarios, we only describe the analysis of the first manoeuvre (“Keep Lane on a curvy road”) in detail and provide results for remaining scenarios without further explanation. The initial ranges for all parameters are normalized and limited by 0 and 1, which leads to common bounds \({\text{lb}}_{i}\) and \({\text{ub}}_{i}\) for all factors:

According to the overall method, we need to derive valid parameter bounds before applying the global sensitivity analysis. Therefore, we follow the algorithm introduced in Sect. 3.2 to analyse sensitivities along each parameter range and derive valid parameter bounds. The development of \(s_{i,k}^{\text{rel}}\) for parameter i with regard to the interval k is plotted in Fig. 7 for all parameters for scenario M1. We divided each parameter range into \(n_{\text{Int}} = 10\) even intervals. The minimum sensitivity threshold was defined to \(s_{{\text{min} ,{\text{PB}}}}^{\text{rel}} = 0.01 = 1 \%\).

For the derivation of valid bounds, we follow the algorithm, as described in Fig. 6, and obtain a renewed lower bound for \(P_{1}\) of \({\text{lb}}_{1}^{\text{valid}} = 0.6\) (\({\text{ub}}_{1}^{\text{valid}}\) remains unchanged). Moreover, the upper bound of \(P_{2}\) can be redefined to \({\text{ub}}_{2}^{\text{valid}} = 0.6\) (\({\text{lb}}_{ 2}^{\text{valid}}\) remains unchanged). Parameters \(P_{3}\) and \(P_{4}\) seem to have no influence on F(P), since \(s_{i,k}\) remains zero over the whole domain. Parameters \(P_{5}\)–\(P_{8}\) on the other hand seem to be sensitive towards F(P). However, the final clarification of these assumptions can only be provided by a global sensitivity analysis in the valid parameter space region.

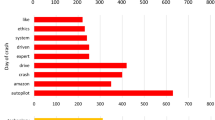

For the calculation of the sensitivity metrics as defined in Eqs. (5) and (6), we first concatenate a new Morris plan from those radial sampling groups laying in permitted areas of the parameter space (c.f. Sect. 3.1). The corresponding \(\mu^{*} - \sigma\)-plot for this scenario is shown in Fig. 8. The scaling of both axes is identical to avoid any plotting distortions of the results. Since the Morris method only allows a comparison among the parameters but no direct conclusion about the impact on F(P), we refrain from specifying axes intercepts.

It can be seen that parameters \(P_{1}\)–\(P_{4}\) have comparably small values for the main effect (\(\mu^{*}\)) and low nonlinearities and interdependencies to other parameters (\(\sigma\)-value). Parameters \(P_{5}\)–\(P_{8}\) on the other hand lay further in the top right, which implicates higher interdependencies and nonlinearities. Parameter \(P_{8}\) seems to be mostly influential due to its position in the upper right corner. By comparing these findings with the plots in Fig. 7, we notice that the results confirm observations based on the parameter range analysis. The dimensionality reduction and parameter range identification can, therefore, be used as mutual plausibility checks.

In the following, we calculate normalized sensitivities using Eqs. (17) and (18) to get a single sensitivity measure and enable the comparison among different manoeuvres. To reduce the dimensionality of the parameter space for the optimization problem, we need to define a minimum threshold \(s_{\text{rel}}^{\text{min} }\) (c.f. Sect. 3.3). Since the EEM is a qualitative method, we suggest to define values close to zero but not exactly zero. For our problem, we choose \(s_{\text{min} }^{\text{rel}} = 0.01\). Table 1 comprises results of the global sensitivity analysis and subsequent dimensionality reduction, while Table 2 gives an overview of newly found bounds for each scenario.

When analysing the results in Table 1, it becomes obvious that the impact of many parameters changes with regard to the scenario. Whereas scenarios M3–M5 can be optimized solely by parameter \(P_{2}\), the distribution of influential parameters changes for the remaining scenarios. Since these scenarios mainly consist of longitudinal changes of the driving state with low curvature changes, while the others contain larger lateral requirements, the parameter \(P_{2}\) might be especially influential towards the optimization of the longitudinal driving behaviour. Moreover, it becomes clear that some of the parameters (e.g., \(P_{3}\) and \(P_{4}\)) have an exclusive influence on only one manoeuvre, whereas other parameters (e.g., \(P_{1}\), \(P_{2}\), and \(P_{5}\)–\(P_{8}\)) seem to affect more manoeuvres. The different parameter bounds per manoeuvre (c.f. Table 2) finally confirm the potential of the scenario-specific analysis, since influence ranges change depending on the driving situation.

The different values for DR and PSR with respect to the manoeuvre represent the characteristics of our optimization problem. Parameters are not all equally influential and do not have a fixed influence range, instead the valid parameter space for the optimization varies heavily depending on the scenario. The exposition of these findings with our analysis, therefore, simplifies the parameter tuning, since the search space and thus the number of possible parameter combinations can be reduced compared to the initial setup. It should be noted that high values for PSR and DR indicate a reduction of the parameter space, but do not necessarily improve subsequent optimizations. If the relevance threshold is too small, optimization algorithms might still search areas with negligible influences and get stuck in local optima.

4.3 Discussion of the results

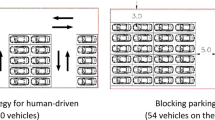

The results, as illustrated in Sect. 4.2, imply a grouping of the variables with regard to scenario specifications and a change of parameter influences within different domains. To analyse our findings, we need to look into the chain of effects and understand the structure of the function modules. Many of the tuneable parameters for automated driving functions are located in the control systems of the actuators (e.g., steering system). Alternatively, they are located inside motion-planning modules and serve as, e.g., weighting factors in the cost function of an optimization problem, which is the case for our problem (c.f. Sect. 4.1). Since the implementation of the analysed trajectory-planning module separates longitudinal and lateral strategies, there may also exist varying sensitivities of the corresponding parameters per scenario. Manoeuvres 3 and 4 are mainly longitudinal manoeuvres, whereas M1 and M2 mostly contain lateral parts. The results expose a larger influence of parameter \(P_{2}\) on longitudinal scenarios, whereas parameters \(P_{3}\)–\(P_{8}\) seem to show a sensitive behaviour towards lateral scenarios. As described before (Sect. 3.2), we chose a very large initial range to make sure that the actual valid range lays in between those initial bounds. The tendency of some parameters to loose impact at outer areas of the ranges can be explained when looking into more detail to the function modules. Very high absolute values for a weighting factor within a cost function could, for example, cause an overcompensation of all remaining factors and thus the planning of unrealistic driving states, which is caught by further constraints of the system. Alternatively, the switch to a fall back emergency mode for an unrealistic planning behaviour is possible. On the other hand, a small value of such a factor would lead to a decreased influence and, therefore, an overcompensation by other factors. Next to the findings in cost functions, a similar behaviour can be observed in control systems when a parameter serves as a gain factor or the affected signal runs into a limiter, so that its influence does not change anymore below or above a certain threshold.

The findings described before confirm the applicability of our approach to the parameter space of an automated driving function. For further driver assistance systems (e.g., adaptive cruise control, lane keeping assistant), a similar performance can be expected, since the structure of the chain of effects is comparable. It mostly comprises control systems and motion-planning modules with tuneable parameters affecting the closed-loop-driving behaviour. Internal safety modules preventing undesired driving behaviour caused by invalid parameterizations of the driving function are mostly required by law, so that fall back layers may limit the influential regions as exposed above. The transferability of this concept to other problems with large parameter spaces is generally given. However, the effectiveness strongly depends on the characteristics of the optimization problem. If the objective function is not limited by any constraints, the method might not allow a reduction of the parameter space. Since the chain of effects for many vehicle control systems (e.g., powertrain and suspension system) is similar to automated driving functions with respect to safety restrictions, we may be able to reduce parameter space complexity for these cases.

4.4 Convergence analysis

The validity of the results strongly depends on the sufficiency of our sampling plan. Therefore, we finally apply a quantitative convergence analysis, as described in Sect. 2.3. Following the bootstrapping approach, we apply the presented methods \(n_{\text{B}} = 500\) times for respective sample sizes \(n_{\text{EEM}}\) or \(n_{\text{PB}}\) and thereafter calculate the mean as well as the 5% and 95% confidence interval. Based on that, we plot the mean of the resulting sensitivities \(s_{i}\) as well as \({\text{lb}}^{\text{valid}}\) and \({\text{ub}}^{\text{valid}}\) against the corresponding number of system evaluations. In addition, the width of the confidence interval \(R_{\text{CI}}\) is analysed. Figures 9 and 10 show the results for the parameter range identification for scenario M1 as a representative for the whole scenario catalogue.

The results show that valid parameter ranges can already be achieved with a relatively small number of system evaluations. The width of the confidence interval reaches a value of zero after \(n_{\text{PB}} \approx 10000\) which is equivalent to r = 14 as the number of elementary effects in the respective subspace [c.f. Eq. (16)].

We examine the validity of the results of the dimensionality reduction by analysing convergence of the combined sensitivity \(s_{i}\) [c.f. Eq. (17)], since the normalization as applied for \(s_{i}^{\text{rel}}\) prohibits the calculation of confidence bounds. While the final prioritization of the parameters already becomes apparent with a small sample size, the confidence interval only decreases with larger values for \(n_{\text{EEM}}\). As shown in Fig. 11, after approx. \(n_{\text{EEM}} \approx 1000\), the width of the confidence interval is so small (\(R_{\text{CI}} < 45)\) that a clear distinction of parameters \(P_{7}\) and \(P_{8}\) is possible. Parameters \(P_{5}\) and \(P_{6}\) lay so close together that the confidence intervals still overlap slightly. The same applies for \(P_{1}\) and \(P_{2}\). However, with respect to the order of magnitude of the combined sensitivities, we can accept small overlaps between two parameters. Since the measures are qualitative and we compute the relative sensitivity with regard to the most influential parameter, the deviations in \(s_{i}^{\text{rel}}\) would be negligibly small, so that we can assume similar influences for both parameters based on the method.

It is noticeable that the parameter range identification requires a distinctly larger sampling size than the dimensionality reduction method. These findings align with our method, since we reuse valid radial samples from the initial plan for the global sensitivity analysis. However, the risk is high that due to a great reduction of initial parameter ranges, the number of radial samples for the second part of our method is too small to provide reliable results. Since optimal values for \(n_{\text{EEM}}\) and \(n_{\text{PB}}\) are problem-specific, it is always recommended to perform a convergence analysis after applying the method to ensure the validity of the results. If \(n_{\text{EEM}}\) turns out to be too small, one can generate more OAT samples within the reduced parameter space following the sampling method, as shown in Sect. 2.1.

5 Validation of the results

To validate our findings, we finally apply the quantitative variance-based sensitivity analysis to our problem. Similar to Sect. 4, we use the manoeuvre M1 as a representative for the scenario catalogue. We, therefore, divide the search space into a valid region and an invalid region. The valid region is defined through the bounds \({\text{lb}}_{i}^{\text{valid}}\) and \({\text{ub}}_{i}^{\text{valid}}\), whereas the invalid space represents the regions in the parameter space outside permitted bounds. To evaluate our findings of the global sensitivity analysis, we compute the main (ME) and total effects (TE) with the variance-based sensitivity analysis (c.f. Sect. 2.2) in the permitted subspace and compare them to the relative sensitivities \(s_{i}^{\text{rel}}\) provided by the EEM. In a second step, another quantitative VBSA is performed inside invalid regions of the parameter space. The comparison of sensitivity metrics in the valid and invalid space finally allows the validation of the complexity reduction approach.

The results are illustrated through bar plots in Fig. 12. The error bars symbolize the 5% and 95% confidence interval. A sufficient convergence was achieved with \(n_{\text{VBSA}} = 16400\) system evaluations. The results in Fig. 12a indicate small main effects and total effects for parameters \(P_{1}\)–\(P_{4}\) and comparably high values for parameters \(P_{5}\)–\(P_{8}\). Compared to the \(\mu^{*} - \sigma\) plot in Fig. 8, these findings generally align with the qualitative sensitivity results. However, the results of the VBSA do not allow an exclusion of parameters \(P_{3}\) and \(P_{4}\) with a threshold of \(0.01\), since it estimates higher influences. Previous examinations (e.g. [26] already exposed the risk of the EEM to be too conservative and rate parameters as non-sensitive that turn out to be important. However, in our case, the VBSA provides results in a similarly small order of magnitude (\({\text{ME}}_{3} = 0.047,\;{\text{TE}}_{3} = 0.061,\;{\text{ME}}_{4} = 0.047,\;{\text{TE}}_{4} = 0.061\)), so that the exclusion of \(P_{3}\) and \(P_{4}\) by the EEM can be justified. Moreover, the influence results for \(P_{1}\), \(P_{2}\), and \(P_{5}\)–\(P_{8}\) indicate the same factor ranking, as outlined in Sect. 4 and thus confirms the applicability of the qualitative approach.

When comparing the distributions of main and total effects between Fig. 12a, b, we observe an average decrease of the main effect for \(P_{1}\)–\(P_{4}\) of approximately 50% and a reduction of the total effect by approx. 30%. The distinct reduction of these sensitivity metrics outside of the valid bounds and increase of the influences for \(P_{5}\)–\(P_{8}\) confirm the findings of the parameter range reduction. The fact that the sensitivities of \(P_{1}\) and \(P_{2}\) are not closer to zero might be caused by the less conservative VBSA approach (see above) and the variance and mean computation technique (c.f. Sect. 2.2) applied in this work. The computation based on a combined matrix \(A_{B}^{i}\) out of two independent matrices A and B might cause small approximation errors [13]. The results suggest that by applying the elementary effects method locally in certain regions of the parameter space, we can reliably reduce domains, so that non-influential areas are neglected. The validity of the findings could be confirmed qualitatively with the VBSA. The comparably high number of system evaluations needed (\(n_{\text{VBSA}} = 16400\)) to achieve convergence underlines the computational efficiency of our approach (\(n_{\text{EEM}} \approx 1000\)).

6 Conclusion

In this contribution, we introduced a two-step method for a combined complexity reduction of the parameter space for the calibration of automated driving functions. To reduce the search space with a minimum number of system evaluations, we locally apply the qualitative Morris analysis to examine the respective subspaces. Thereafter, we reuse valid samples to perform a global influence analysis. Our approach thus offers the opportunity to first narrow down individual domains of parameters and second exclude calibration factors based on their global influence on the objective function. The potential of the method is evaluated by performing a complexity reduction for the parameter space of an automated driving function. We, therefore, formulate a representative scenario catalogue containing six manoeuvres and apply the method to every scenario individually. The results expose a clear dependency of the parameter’s impact and influence range on the characteristics of the scenario. The analysis enables us to reduce the dimensionality by 47.5% and redefine an up to 65.7% smaller parameter space on average with regard to the respective scenario. Next to the distinct reduction of complexity, the sensitivity values provide an improved system understanding and can help to derive a calibration strategy based on a ranked parameter list. The relatively small number of system evaluations (\(n_{\text{PB}} \approx 10000\) or \(n_{\text{EEM}} \approx 1000\)) compared to a variance-based approach (\(n_{\text{VBSA}} \approx 16400)\) underlines the potential of the analysis as a preceding step to the actual parameterization. The application of the method to the problem presented in this work shows that it can save expensive calibration time especially worthwhile on the target hardware.

In further investigations, we intend to evaluate the potential of this approach in combination with an optimization algorithm and the transferability to the vehicle. Moreover, the presented method should be applied to further vehicle control systems and large parameter spaces of other areas (e.g., scenario creation for the validation of automated driving functions) to evaluate its transferability. Additional investigations could concentrate on developing this method further, so that invalid regions not only in the outer areas of the parameter ranges can be derived but over the whole domain.

References

Bellem, H., Schönenberg, T., Krems, J.F., Schrauf, M.: Objective metrics of comfort: developing a driving style for highly automated vehicles. Transp. Res. Part F Traffic Psychol. Behav. 41, 45–54 (2016)

Bijan, M.G., Al-Badri, M., Pillay, P., Angers, P.: Induction machine parameter range constraints in genetic algorithm based efficiency estimation techniques. IEEE Trans. Indus. Appl. 54(5), 4186–4197 (2018)

Campolongo, F., Cariboni, J., Saltelli, A.: An effective screening design for sensitivity analysis of large models. Environ. Modell. Softw. 22(10), 1509–1518 (2007)

Campolongo, F., Saltelli, A., Cariboni, J.: From screening to quantitative sensitivity analysis. A unified approach. Comput. Phys. Commun. 182(4), 978–988 (2011)

Cerone, V., Regruto, D.: Parameter bounds for discrete-time Hammerstein models with bounded output errors. IEEE Trans. Autom. Control 48(10), 1855–1860 (2003)

Cerone, V., Regruto, D.: Parameter bounds evaluation of Wiener models with noninvertible polynomial nonlinearities. Automatica 42(10), 1775–1781 (2006)

Chiang, C.J., Stefanopoulou, A.G.: Sensitivity analysis of combustion timing of homogeneous charge compression ignition gasoline engines. J. Dyn. Syst. Meas. Contr. 131(1), 014506 (2009)

Dunn-Rankin, P., Knezek, G.A., Wallace, S.R., Zhang, S.: Scaling methods. Psychology Press, Hove (2014)

Efron, B.: Bootstrap methods: another look at the jackknife. Breakthroughs in Statistics, pp. 569–593. Springer, New York (1992)

Hamby, D.M.: A review of techniques for parameter sensitivity analysis of environmental models. Environ. Monit. Assess. 32(2), 135–154 (1994)

Herman, J.D., Kollat, J.B., Reed, P.M., Wagener, T.: Method of morris effectively reduces the computational demands of global sensitivity analysis for distributed watershed models. Hydrol. Earth Syst. Sci. 17(7), 2893–2903 (2013)

Iooss, B., Lemaître, P.: A review on global sensitivity analysis methods. Uncertainty Management in Simulation-Optimization of Complex Systems, pp. 101–122. Springer, Boston (2015)

Jansen, M.J.: Analysis of variance designs for model output. Comput. Phys. Commun. 117(1–2), 35–43 (1999)

Mach, F.: Reduction of optimization problem by combination of optimization algorithm and sensitivity analysis. IEEE Trans. Magn. 52(3), 1–4 (2016)

Maute, K., Nikbay, M., Farhat, C.: Coupled analytical sensitivity analysis and optimization of three-dimensional nonlinear aeroelastic systems. AIAA J. 39(11), 2051–2061 (2001)

Milanese, M., Norton, J., Piet-Lahanier, H., Walter, É. (eds.): Bounding approaches to system identification. Springer, New York (2013)

Morris, M.D.: Factorial sampling plans for preliminary computational experiments. Technometrics 33(2), 161–174 (1991)

Pei, Y., Davis, M.J., Pickett, L.M., Som, S.: Engine combustion network (ECN): global sensitivity analysis of Spray A for different combustion vessels. Combust. Flame 162(6), 2337–2347 (2015)

Rakopoulos, C.D., Rakopoulos, D.C., Giakoumis, E.G., Kyritsis, D.C.: Validation and sensitivity analysis of a two zone Diesel engine model for combustion and emissions prediction. Energy Convers. Manage. 45(9–10), 1471–1495 (2004)

SAE On-Road Automated Vehicle Standards Committee. Taxonomy and definitions for terms related to on-road motor vehicle automated driving systems. SAE International, Warrendale (2014)

Saltelli, A., Annoni, P., Azzini, I., Campolongo, F., Ratto, M., Tarantola, S.: Variance based sensitivity analysis of model output design and estimator for the total sensitivity index. Comput. Phys. Commun. 181(2), 259–270 (2010)

Saltelli, A., Ratto, M., Andres, T., Campolongo, F., Cariboni, J., Gatelli, D., Tarantola, S.: Global sensitivity analysis: the primer. Wiley, New York (2008)

Saltelli, A., Tarantola, S., Campolongo, F., Ratto, M.: Sensitivity analysis in practice: a guide to assessing scientific models. Wiley, New York (2004)

Sarrazin, F., Pianosi, F., Wagener, T.: Global sensitivity analysis of environmental models: convergence and validation. Environ. Modell. Softw. 79, 135–152 (2016)

Suarez, B., Felez, J., Maroto, J., Rodriguez, P.: Sensitivity analysis to assess the influence of the inertial properties of railway vehicle bodies on the vehicle’s dynamic behaviour. Vehicle Syst. Dyn. 51(2), 251–279 (2013)

Vanrolleghem, P.A., Mannina, G., Cosenza, A., Neumann, M.B.: Global sensitivity analysis for urban water quality modelling: terminology, convergence and comparison of different methods. J. Hydrol. 522, 339–352 (2015)

Wang, S.: Design sensitivity analysis of noise, vibration, and harshness of vehicle body structure. J. Struct. Mech. 27(3), 317–335 (1999)

Xu, W.T., Lin, J.H., Zhang, Y.H., Kennedy, D., Williams, F.W.: Pseudo-excitation-method-based sensitivity analysis and optimization for vehicle ride comfort. Eng. Optim. 41(7), 699–711 (2009)

Acknowledgements

We would like to express our gratitude to the developers of the department of automated driving at BMW for providing us all relevant information to develop this method.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The author declared no potential conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Fraikin, N., Funk, K., Frey, M. et al. Dimensionality reduction and identification of valid parameter bounds for the efficient calibration of automated driving functions. Automot. Engine Technol. 4, 75–91 (2019). https://doi.org/10.1007/s41104-019-00043-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41104-019-00043-z