Abstract

This paper presents inferential studies of inliers in the Gompertz distribution. Inliers are inconsistent observations that generally result from instantaneous and early failures. Such situations are usually modeled by a non-standard mixture of distributions with a failure time distribution (FTD) for the positive observations. Taking the FTD to be the Gompertz distribution, we study various methods of estimating the parameters, including the uniformly minimum variance unbiased estimates of some parametric functions. An application of inlier-prone models is illustrated with a real data set.
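A minimal Python sketch of the mixture described above, under assumptions not stated in this excerpt: an instantaneous failure (recorded as 0) occurs with probability \(1-p\); otherwise the lifetime is Gompertz with density \(\theta e^{\alpha x}e^{-\frac{\theta }{\alpha }\left( {e^{\alpha x}-1} \right) }\), and every observation at or below a threshold \(d\) is treated as an inlier. The function names, the chosen parameter values, and the closed-form maximum likelihood estimates for known \(\alpha \) are illustrative and are not taken from the paper.

```python
import math
import random


def sample_inlier_gompertz(n, p, alpha, theta, rng):
    """Draw n lifetimes: 0.0 (an instantaneous failure) with probability 1 - p,
    otherwise a Gompertz(alpha, theta) lifetime by inverse-cdf sampling."""
    xs = []
    for _ in range(n):
        if rng.random() < p:
            u = 1.0 - rng.random()  # u in (0, 1], so log(u) is finite
            # Solve S(x) = exp(-(theta/alpha)(e^{alpha x} - 1)) = u for x.
            xs.append(math.log(1.0 - (alpha / theta) * math.log(u)) / alpha)
        else:
            xs.append(0.0)
    return xs


def mle_alpha_known(xs, alpha, d):
    """Closed-form MLEs (p_hat, theta_hat) with alpha known (assumed model).

    With c = (e^{alpha d} - 1)/alpha the likelihood factorises into a binomial
    part in p* (the inlier count) and a Gompertz part truncated at d in theta:
    theta_hat = n1 / sum(Y_i - c), Y_i = (e^{alpha x_i} - 1)/alpha, and
    p_hat = (n1/n) e^{theta_hat c}. p_hat may exceed 1 in small samples;
    that boundary case needs a constrained fit and is not handled here.
    """
    c = (math.exp(alpha * d) - 1.0) / alpha
    tail = [x for x in xs if x > d]
    n, n1 = len(xs), len(tail)
    if n1 == 0:
        raise ValueError("no observations above d")
    theta_hat = n1 / sum((math.exp(alpha * x) - 1.0) / alpha - c for x in tail)
    p_hat = (n1 / n) * math.exp(theta_hat * c)
    return p_hat, theta_hat


rng = random.Random(2016)
data = sample_inlier_gompertz(20000, p=0.8, alpha=0.5, theta=0.3, rng=rng)
print(mle_alpha_known(data, alpha=0.5, d=0.2))
```

With a large simulated sample the estimates should land close to the values used to generate the data.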


Acknowledgments

We thank the referees and the editor for their careful reading, useful comments and valuable suggestions which greatly improved this research paper.


Appendix: Asymptotic Distribution of MLE

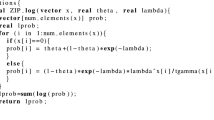

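For the inlier-prone Gompertz distribution \(g\left( {x;p,\alpha ,\theta } \right) \) given by (4) with \(\alpha \) known, an observation \(x\le d\) contributes \(1-pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }\) to the likelihood and an observation \(x>d\) contributes \(p\theta e^{\alpha x}e^{-\frac{\theta }{\alpha }\left( {e^{\alpha x}-1} \right) }\). Hence

\[\frac{\partial \ln g\left( {x;p,\alpha ,\theta } \right) }{\partial p}=\begin{cases} -\dfrac{e^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{1-pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}, & x\le d,\\[2ex] \dfrac{1}{p}, & x>d, \end{cases}\]

and

\[\frac{\partial \ln g\left( {x;p,\alpha ,\theta } \right) }{\partial \theta }=\begin{cases} \dfrac{p\left( {e^{\alpha d}-1} \right) e^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{\alpha \left( {1-pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }} \right) }, & x\le d,\\[2ex] \dfrac{1}{\theta }-\dfrac{e^{\alpha x}-1}{\alpha }, & x>d. \end{cases}\]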

One can verify that \(E\left( {\frac{\partial ln\,g\left( {x;p,\alpha ,\theta } \right) }{\partial p}} \right) =0\) and \(E\left( {\frac{\partial ln\,g\left( {x;p,\alpha ,\theta } \right) }{\partial \theta }} \right) =0\).
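The two zero-mean identities can also be checked by simulation. The sketch below assumes that inliers arise as an instantaneous failure (recorded as 0) with probability \(1-p\) or a Gompertz lifetime not exceeding \(d\), so that \(P(X\le d)=1-pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }\); the helper names and the parameter values are hypothetical. It averages the per-observation scores over many draws at the true parameters, and both averages should be near zero.

```python
import math
import random


def scores(x, p, alpha, theta, d):
    """Scores (d ln g/dp, d ln g/dtheta) of one observation, alpha known."""
    c = (math.exp(alpha * d) - 1.0) / alpha
    s_d = math.exp(-theta * c)              # Gompertz survival at d
    p_star = 1.0 - p * s_d                  # P(X <= d) under the assumed model
    if x <= d:
        return -s_d / p_star, p * c * s_d / p_star
    return 1.0 / p, 1.0 / theta - (math.exp(alpha * x) - 1.0) / alpha


def mean_scores(n, p, alpha, theta, d, seed=1):
    """Average the scores over n simulated observations at the true parameters."""
    rng = random.Random(seed)
    total_p = total_theta = 0.0
    for _ in range(n):
        if rng.random() < p:                # Gompertz lifetime by inverse cdf
            u = 1.0 - rng.random()
            x = math.log(1.0 - (alpha / theta) * math.log(u)) / alpha
        else:                               # instantaneous failure
            x = 0.0
        s_p, s_theta = scores(x, p, alpha, theta, d)
        total_p += s_p
        total_theta += s_theta
    return total_p / n, total_theta / n


m_p, m_theta = mean_scores(200000, p=0.8, alpha=0.5, theta=0.3, d=0.2)
print(m_p, m_theta)
```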

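Also,

\[\frac{\partial ^{2}\ln g}{\partial p^{2}}=\begin{cases} -\dfrac{e^{-\frac{2\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{p^{*2}}, & x\le d,\\[2ex] -\dfrac{1}{p^{2}}, & x>d, \end{cases}\qquad \frac{\partial ^{2}\ln g}{\partial p\,\partial \theta }=\begin{cases} \dfrac{\left( {e^{\alpha d}-1} \right) e^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{\alpha p^{*2}}, & x\le d,\\[2ex] 0, & x>d, \end{cases}\]

\[\frac{\partial ^{2}\ln g}{\partial \theta ^{2}}=\begin{cases} -\dfrac{p\left( {e^{\alpha d}-1} \right) ^{2}e^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{\alpha ^{2}p^{*2}}, & x\le d,\\[2ex] -\dfrac{1}{\theta ^{2}}, & x>d. \end{cases}\]

Hence, since \(P\left( {X\le d} \right) =p^{*}\) and \(P\left( {X>d} \right) =pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }\), the elements of the Fisher information are

\[I_{pp}=\frac{e^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{pp^{*}},\quad I_{p\theta }=-\frac{\left( {e^{\alpha d}-1} \right) e^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{\alpha p^{*}},\quad I_{\theta \theta }=pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }\left[ {\frac{\left( {e^{\alpha d}-1} \right) ^{2}}{\alpha ^{2}p^{*}}+\frac{1}{\theta ^{2}}} \right] ,\]

where \(p^{*}=1-pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }\).

Therefore, the Fisher information matrix \(I_g \left( {p,\theta } \right) \) is given by:

\[I_g \left( {p,\theta } \right) =\begin{pmatrix} I_{pp} & I_{p\theta }\\ I_{p\theta } & I_{\theta \theta } \end{pmatrix}.\]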
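Closed-form Fisher information entries can be cross-checked against the information identity \(I_g =E\left[ {UU^{T}} \right] \), where \(U\) is the score vector. The sketch below codes per-observation entries derived under an assumed form of model (4) (mass \(p^{*}=1-pe^{-\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }\) on \(x\le d\), density \(p\theta e^{\alpha x}e^{-\frac{\theta }{\alpha }\left( {e^{\alpha x}-1} \right) }\) on \(x>d\)) and compares them with a Monte Carlo average of score outer products. Function names and parameter values are hypothetical.

```python
import math
import random


def fisher_info(p, alpha, theta, d):
    """Closed-form per-observation I_g(p, theta), alpha known (assumed model)."""
    c = (math.exp(alpha * d) - 1.0) / alpha
    s_d = math.exp(-theta * c)
    p_star = 1.0 - p * s_d
    i_pp = s_d / (p * p_star)
    i_pt = -c * s_d / p_star
    i_tt = p * s_d * (c * c / p_star + 1.0 / theta ** 2)
    return [[i_pp, i_pt], [i_pt, i_tt]]


def empirical_info(n, p, alpha, theta, d, seed=7):
    """Monte Carlo estimate of E[U U^T] at the true parameters."""
    c = (math.exp(alpha * d) - 1.0) / alpha
    s_d = math.exp(-theta * c)
    p_star = 1.0 - p * s_d
    rng = random.Random(seed)
    acc = [[0.0, 0.0], [0.0, 0.0]]
    for _ in range(n):
        if rng.random() < p:                # Gompertz lifetime by inverse cdf
            u = 1.0 - rng.random()
            x = math.log(1.0 - (alpha / theta) * math.log(u)) / alpha
        else:                               # instantaneous failure
            x = 0.0
        if x <= d:
            s = (-s_d / p_star, p * c * s_d / p_star)
        else:
            s = (1.0 / p, 1.0 / theta - (math.exp(alpha * x) - 1.0) / alpha)
        for i in range(2):
            for j in range(2):
                acc[i][j] += s[i] * s[j]
    return [[v / n for v in row] for row in acc]


exact = fisher_info(0.8, 0.5, 0.3, 0.2)
approx = empirical_info(200000, 0.8, 0.5, 0.3, 0.2)
print(exact)
print(approx)
```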

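The inverse matrix \(I_g^{-1} \left( {p,\theta } \right) \) is given by:

\[I_g^{-1} \left( {p,\theta } \right) =e^{\frac{\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }\begin{pmatrix} p\left[ {p^{*}+\frac{\theta ^{2}}{\alpha ^{2}}\left( {e^{\alpha d}-1} \right) ^{2}} \right] & \frac{\theta ^{2}}{\alpha }\left( {e^{\alpha d}-1} \right) \\ \frac{\theta ^{2}}{\alpha }\left( {e^{\alpha d}-1} \right) & \frac{\theta ^{2}}{p} \end{pmatrix}\]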

and the determinant of \(I_g \left( {p,\theta } \right) \) is \({\Delta }=\frac{e^{-\frac{2\theta }{\alpha }\left( {e^{\alpha d}-1} \right) }}{\theta ^{2}p^{*}}\).
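The determinant \({\Delta }\) can be checked numerically, together with a closed-form inverse. The sketch below uses per-observation entries derived under an assumed form of model (4) (inlier mass \(p^{*}\) on \(x\le d\), Gompertz density scaled by \(p\) on \(x>d\)) and verifies that the product of the matrix and its inverse is the identity and that the determinant agrees with \({\Delta }\). As a sanity check, at \(d=0\) the inverse should reduce to \(\mathrm{diag}\left( {p\left( {1-p} \right) ,\theta ^{2}/p} \right) \). The helper names are hypothetical.

```python
import math


def info_inverse_det(p, alpha, theta, d):
    """Return I_g(p, theta), a closed-form inverse, and the determinant Delta,
    all per observation with alpha known (assumed model)."""
    c = (math.exp(alpha * d) - 1.0) / alpha
    s_d = math.exp(-theta * c)              # e^{-(theta/alpha)(e^{alpha d}-1)}
    p_star = 1.0 - p * s_d
    info = [[s_d / (p * p_star), -c * s_d / p_star],
            [-c * s_d / p_star, p * s_d * (c * c / p_star + 1.0 / theta ** 2)]]
    inv = [[p * (p_star + (theta * c) ** 2) / s_d, theta ** 2 * c / s_d],
           [theta ** 2 * c / s_d, theta ** 2 / (p * s_d)]]
    delta = s_d ** 2 / (theta ** 2 * p_star)
    return info, inv, delta


def matmul2(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


info, inv, delta = info_inverse_det(p=0.8, alpha=0.5, theta=0.3, d=0.2)
det = info[0][0] * info[1][1] - info[0][1] * info[1][0]
print(matmul2(info, inv))   # numerically the identity matrix
print(det, delta)           # the two values should agree
```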

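Using the standard asymptotic result for the MLE based on a sample of size \(n\), we have

\[\sqrt{n}\begin{pmatrix} \hat{p}-p\\ \hat{\theta }-\theta \end{pmatrix} \xrightarrow{d} N_2 \left( {\mathbf{0},\,I_g^{-1} \left( {p,\theta } \right) } \right) \quad \text{as } n\rightarrow \infty .\]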

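Replacing \(p\) and \(\theta \) by their MLEs in \(I_g^{-1} \left( {p,\theta } \right) \) gives estimated asymptotic variances, from which large-sample tests for \(p\) and \(\theta \) can be constructed.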
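One way to turn the estimated variances into large-sample tests is a Wald statistic. The sketch below is an illustration, not the authors' procedure: it plugs estimates into the diagonal of a closed-form \(I_g^{-1}\) (derived under an assumed form of model (4), with \(\alpha \) known), forms \(z=\left( {\hat{\vartheta }-\vartheta _0 } \right) /\widehat{\mathrm{se}}\) for a parameter \(\vartheta \), and reports a two-sided p-value and a Wald interval using Python's `statistics.NormalDist`. The function names and the input values in the usage lines are hypothetical, chosen only to show the calls.

```python
import math
from statistics import NormalDist


def asymptotic_variances(p, alpha, theta, d):
    """Diagonal of I_g^{-1}(p, theta) per observation, alpha known (assumed model)."""
    c = (math.exp(alpha * d) - 1.0) / alpha
    s_d = math.exp(-theta * c)
    p_star = 1.0 - p * s_d
    var_p = p * (p_star + (theta * c) ** 2) / s_d
    var_theta = theta ** 2 / (p * s_d)
    return var_p, var_theta


def wald(estimate, null_value, var_per_obs, n, level=0.95):
    """Two-sided Wald test of H0: parameter = null_value, plus a Wald interval."""
    se = math.sqrt(var_per_obs / n)
    z = (estimate - null_value) / se
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    q = NormalDist().inv_cdf(0.5 + level / 2.0)
    return z, p_value, (estimate - q * se, estimate + q * se)


# Hypothetical inputs for illustration only (not results from the paper).
n, alpha, d = 500, 0.5, 0.2
p_hat, theta_hat = 0.78, 0.31
var_p, var_theta = asymptotic_variances(p_hat, alpha, theta_hat, d)
print(wald(p_hat, 0.8, var_p, n))          # H0: p = 0.8
print(wald(theta_hat, 0.3, var_theta, n))  # H0: theta = 0.3
```

Plugging the MLEs into the variance (rather than the null values) is the usual Wald choice; a score test would instead evaluate the variance under the null hypothesis.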

Cite this article

Muralidharan, K., Bavagosai, P. Some Inferential Studies on Inliers in Gompertz Distribution. J Indian Soc Probab Stat 17, 35–55 (2016). https://doi.org/10.1007/s41096-016-0005-5
