Abstract

Methods based on artificial neural networks can produce successful forecasting results for intuitionistic fuzzy time series. In the literature, exponential smoothing methods are hybridised with artificial neural networks because their simple and efficient structures can improve forecasting performance. The contribution of this paper is a new forecasting approach that combines exponential smoothing methods and intuitionistic fuzzy time series. A forecasting algorithm based on the dendrite neuron model and the simple exponential smoothing method is proposed for modelling intuitionistic fuzzy time series. In the fuzzification stage of the proposed method, the intuitionistic fuzzy c-means method is used. The proposed method is modular: it uses two separate dendrite neuron model neural networks, and the grey wolf optimisation algorithm is used to estimate all of its parameters. The proposed method is applied to four different randomly selected time series of the Index of Coin Market Cap, and its performance is compared with that of some other fuzzy forecasting methods. The analyses indicate that the proposed modular method generally produces better forecasting results than the other methods.

1 Introduction

Fuzzy sets were first proposed by Zadeh (1965) and have since been used in many important studies. Chen and Chen (2002) and Lin et al. (2006) used fuzzy sets in the construction of fuzzy inference systems as decision support systems. Chen and Lee (2010) utilised fuzzy sets for solving decision-making problems. Chen (2002), Chen and Wang (2010), Chen and Chen (2011, 2014), Chen and Jian (2017), Chen et al. (2019), Zeng et al. (2019), Samal and Dash (2023) and Goyal and Bisht (2023) proposed methods based on fuzzy sets for solving the forecasting problem. Intuitionistic fuzzy sets, proposed by Atanassov (1986), extend the definition of fuzzy sets with an additional dimension for uncertainty. Chen and Randyanto (2013), Zou et al. (2020) and Meng et al. (2020) used intuitionistic fuzzy sets and contributed to the intuitionistic fuzzy sets literature. Forecasting methods based on intuitionistic fuzzy sets have also been developed in the literature, and they can produce successful forecasting results. Although intuitionistic fuzzy time series have different uses in the literature, Egrioglu et al. (2019) defined intuitionistic fuzzy time series in a multivariate time series structure. In recent years, many forecasting methods for intuitionistic fuzzy time series have been developed. Abhishekh and Singh (2020) proposed an enhanced and versatile method of forecasting using the concept of intuitionistic fuzzy time series based on their score function. Abhishekh and Singh (2018) presented a refined method of forecasting based on high-order intuitionistic fuzzy time series by transforming historical fuzzy time series data into intuitionistic fuzzy time series data. Xu and Zheng (2019) proposed a long-term forecasting method for intuitionistic fuzzy time series. Kumar et al. (2019) proposed an intuitionistic fuzzy time series forecasting method based on a dual hesitant fuzzy set for stock market forecasting. Hassan et al. (2020) designed an intuitionistic fuzzy forecasting model combined with information granules and weighted association reasoning. Wang et al. (2020) proposed a traffic anomaly detection algorithm based on intuitionistic fuzzy time series graph mining. Abhishekh and Singh (2020) proposed a new method of time series forecasting using an intuitionistic fuzzy set based on average length. Fan et al. (2020) proposed a network traffic forecasting model based on long-term intuitionistic fuzzy time series. Pattanayak et al. (2021) proposed a novel probabilistic intuitionistic fuzzy set-based model using a support vector machine for high-order fuzzy time series forecasting.

Chen et al. (2021) proposed an intuitionistic fuzzy time series model based on the quantile discretization approach. Kocak et al. (2021) used long short-term memory artificial neural networks to determine the fuzzy relations in their deep intuitionistic fuzzy time series forecasting method. Nik Badrul Alam et al. (2022a) proposed an intuitionistic fuzzy time series forecasting model to forecast Malaysian crude palm oil prices. Nik Badrul Alam et al. (2022b) integrated the 4253HT smoother with the intuitionistic fuzzy time series forecasting model. Arslan and Cagcag Yolcu (2022) proposed an intuitionistic fuzzy time series forecasting model based on a new hybrid sigma-pi neural network. Bas et al. (2022) proposed an intuitionistic fuzzy time series method based on a bootstrapped combined Pi-Sigma artificial neural network and intuitionistic fuzzy c-means. Pant and Kumar (2022a) proposed a novel hybrid forecasting method using particle swarm optimisation and intuitionistic fuzzy sets. Pant and Kumar (2022b) proposed a computational method based on intuitionistic fuzzy sets for forecasting. Pant et al. (2022) proposed a novel intuitionistic fuzzy time series forecasting method to forecast deaths due to COVID-19 in India. Vamitha and Vanitha (2022) proposed a new model for temperature forecasting using intuitionistic fuzzy time series forecasting. Yolcu and Yolcu (2023) used a cascade forward neural network to determine the intuitionistic fuzzy relations in their intuitionistic fuzzy time series forecasting model. Dixit and Jain (2023) proposed a new intuitionistic fuzzy time series method for non-stationary time series data with a suitable number of clusters and different window sizes for fuzzy rule generation. Yücesoy et al. (2023) proposed a new intuitionistic fuzzy time series method that uses intuitionistic fuzzy clustering, bagging of decision trees and principal component analysis for forecasting problems. Çakır (2023) proposed a novel Markov-weighted intuitionistic fuzzy time series model for forecasting problems. Kocak et al. (2023) proposed a novel explainable robust high-order intuitionistic fuzzy time series forecasting method based on the intuitionistic fuzzy c-means algorithm and a robust regression method. Pant et al. (2023) proposed a computational-based partitioning intuitionistic fuzzy time series forecasting method.

A review of the literature shows that the need to use both membership and non-membership values calls for more complicated modelling methods in intuitionistic fuzzy time series forecasting. A further question is whether an intuitionistic fuzzy time series method should use membership and non-membership values at the same time in a single model, or follow a dual approach in which two different models use membership and non-membership values separately and their outputs are finally combined. In the literature, the dual approach is generally preferred. The dual approach optimises two different models with two separate and independent optimisation processes, and a third optimisation process is required to combine the outputs of the two models. This gives rise to three different sources of modelling error. The first motivation of this paper is to present an approach that addresses both the problem of three separate modelling errors and the problem of model simplification. Although intuitionistic fuzzy time series forecasting methods require complicated modelling tools, it is known in the literature that complicated methods may forecast worse than simple methods and are prone to memorisation (overfitting). The second motivation of this study is therefore to allow the intuitionistic fuzzy time series method to move from a complicated model towards a simple one.

In this study, all weights of two separate dendrite neuron models, one working with membership values and the other with non-membership values, are estimated in a single grey wolf optimisation process. The parameters used to combine the outputs of the two dendrite neuron models, and to combine the resulting model with exponential smoothing, are estimated in the same grey wolf optimisation process. Because the proposed method combines the output of the intuitionistic fuzzy time series method with the exponential smoothing mechanism, it can reduce to a simple forecasting method or produce forecasts that combine those of the simple and the complicated forecasting methods. In this respect, the method has an adaptive and modular structure. The main contribution of this paper is a new forecasting approach that combines a traditional time series forecasting method, simple exponential smoothing, with a contemporary one, the intuitionistic fuzzy time series method.

The remainder of the paper is organised as follows. The second section presents general information about grey wolf optimisation, intuitionistic fuzzy c-means and the dendrite neuron model. The third section introduces the proposed method. The fourth section explains the application of the proposed method and the performance comparison results with the help of tables and graphs. The fifth section presents the conclusions.

2 General information

In this section, summary information about grey wolf optimisation, intuitionistic fuzzy c-means and the dendrite neuron model is presented under separate subheadings.

2.1 Grey wolf optimisation algorithm

The grey wolf optimisation algorithm, proposed by Mirjalili et al. (2014), is a meta-heuristic artificial intelligence optimisation algorithm inspired by the intelligent behaviour of grey wolf packs. The method mimics the main stages of hunting: searching for prey, encircling prey and attacking prey. Its working logic imitates the hierarchy within the pack, in which the alpha, beta and delta wolves direct the omega wolves. The steps of the grey wolf optimisation algorithm are given in Algorithm 1.

Algorithm 1 Grey wolf optimiser

Step 1. The initial population is created. Uniform distribution is used to generate positions.

Step 2. Alpha, beta, and delta solutions are determined. These are the three best solutions in the population.

Step 3. For each agent in the population, a new agent is created by utilising the alpha, beta and delta solutions.

Step 4. The population is updated according to whether improvement is achieved with the new solutions obtained.

Step 5. Alpha, beta and delta solutions are updated. Here, the previous three best solutions are compared with the new three best solutions.

Step 6. Stop conditions are checked. Steps 3–5 are repeated until the stop conditions are met; when they are met, the algorithm proceeds to Step 7. The stop condition is activated when the fitness value does not improve for ten consecutive iterations.

Step 7. The alpha solution is obtained as the solution of the optimisation problem. At this stage, the algorithm reaches the best solution result.
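The steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the coefficient `a` and the random factors `A` and `C` follow the standard grey wolf update of Mirjalili et al. (2014), whilst the function name, the population size, the box bounds and the iteration cap are assumptions made for the example.

```python
import random

def grey_wolf_optimiser(fitness, dim, lower, upper, n_agents=30,
                        max_iter=500, patience=10, seed=0):
    """Minimise `fitness` over [lower, upper]^dim (illustrative sketch)."""
    rng = random.Random(seed)
    # Step 1: initial positions drawn from a uniform distribution.
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_agents)]
    scores = [fitness(w) for w in wolves]

    def leaders():
        # Steps 2 and 5: the three best solutions (alpha, beta, delta).
        order = sorted(range(n_agents), key=scores.__getitem__)[:3]
        return [list(wolves[i]) for i in order], scores[order[0]]

    (alpha, beta, delta), best = leaders()
    stall = 0
    for it in range(max_iter):
        a = 2.0 * (1.0 - it / max_iter)  # decreases linearly from 2 towards 0
        for i in range(n_agents):
            candidate = []
            for d in range(dim):
                pulls = []
                for leader in (alpha, beta, delta):
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    pulls.append(leader[d] - A * abs(C * leader[d] - wolves[i][d]))
                # Step 3: the new position averages the three leader-guided moves.
                x = sum(pulls) / 3.0
                candidate.append(min(max(x, lower), upper))
            # Step 4: keep the new agent only if it improves on the old one.
            s = fitness(candidate)
            if s < scores[i]:
                wolves[i], scores[i] = candidate, s
        (alpha, beta, delta), new_best = leaders()
        # Step 6: stop after `patience` iterations without improvement.
        stall = 0 if new_best < best else stall + 1
        best = min(best, new_best)
        if stall >= patience:
            break
    return alpha, best  # Step 7: the alpha solution
```

For example, `grey_wolf_optimiser(lambda x: sum(v * v for v in x), dim=3, lower=-5, upper=5)` returns a position and its fitness for a simple sphere function.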

2.2 Intuitionistic fuzzy c-means

Although many different methods are used for clustering data, fuzzy clustering methods offer realistic solutions, especially for grading the elements of the universal set that lie close to cluster boundaries. In intuitionistic fuzzy clustering, membership and non-membership values carry two separate pieces of information because of the presence of degrees of hesitation. In the intuitionistic fuzzy c-means method, iterative equations similar to those of fuzzy c-means are used to calculate membership values and cluster centres. Let \({u}_{ik}\) denote the membership value of the k-th element of the universal set in the i-th fuzzy set, and let \({d}_{ik}\) denote the distance between the k-th element and the centre of the i-th fuzzy set. In intuitionistic fuzzy c-means, membership values are calculated with Eq. (1).

In Eq. (1), \(c\) is the number of fuzzy sets, \(n\) is the number of observations and \(m\) is the fuzziness index. Equation (2) is used to calculate the hesitation degrees. It yields small hesitation values for membership values close to zero or one, and high hesitation values for membership values close to 0.5.

The values of \({u}_{ik}^{*}\) given in Eq. (3) are the intuitionistic fuzzy membership values, obtained as the sum of the membership value and the degree of hesitation.

These intuitionistic fuzzy membership values are used in the calculation of cluster centres with Eq. (4).

In this formula, \({x}_{k}\) denotes the k-th data point.

The intuitionistic fuzzy c-means algorithm applies Eqs. (1)–(4) successively and stops when the change in the membership values becomes sufficiently small.

With the application of intuitionistic fuzzy clustering, membership, non-membership and hesitation values of each observation for each cluster can be obtained.
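The iteration described in this subsection can be sketched for a univariate series as below. The forms used here are assumptions, since the display equations are not reproduced in this text: the membership update is the usual fuzzy c-means rule \({u}_{ik}=1/\sum_{j}{({d}_{ik}/{d}_{jk})}^{2/(m-1)}\), the non-membership is the Yager-type complement \({(1-{u}_{ik}^{\alpha })}^{1/\alpha }\), the hesitation is one minus the sum of the two, and the centres are weighted by \({({u}_{ik}^{*})}^{m}\). The function name and the deterministic initialisation are also illustrative choices.

```python
def ifcm(x, c, m=2.0, alpha=0.85, tol=1e-6, max_iter=200):
    """Intuitionistic fuzzy c-means for a 1-D series (illustrative sketch)."""
    n = len(x)
    xs = sorted(x)
    # Assumed initialisation: c evenly spaced order statistics of the data.
    centres = [xs[(2 * i + 1) * n // (2 * c)] for i in range(c)]
    expo = 2.0 / (m - 1.0)
    prev = None
    for _ in range(max_iter):
        # d[i][k]: distance between centre i and observation k (floored to avoid 0/0).
        d = [[max(abs(xk - v), 1e-12) for xk in x] for v in centres]
        # Membership: u_ik = 1 / sum_j (d_ik / d_jk)^(2 / (m - 1)).
        u = [[1.0 / sum((d[i][k] / d[j][k]) ** expo for j in range(c))
              for k in range(n)] for i in range(c)]
        # Yager-type non-membership and the resulting hesitation (assumed form).
        gamma = [[(1.0 - uik ** alpha) ** (1.0 / alpha) for uik in row] for row in u]
        pi = [[1.0 - uik - gik for uik, gik in zip(ur, gr)]
              for ur, gr in zip(u, gamma)]
        # Intuitionistic membership: u*_ik = u_ik + pi_ik.
        u_star = [[uik + pik for uik, pik in zip(ur, pr)]
                  for ur, pr in zip(u, pi)]
        # Centres weighted by (u*_ik)^m, as in fuzzy c-means (assumption).
        centres = [sum(w ** m * xk for w, xk in zip(row, x)) / sum(w ** m for w in row)
                   for row in u_star]
        # Stop when the largest change in u* falls below the tolerance.
        change = None if prev is None else max(
            abs(a - b) for ra, rb in zip(u_star, prev) for a, b in zip(ra, rb))
        prev = u_star
        if change is not None and change < tol:
            break
    return centres, u, gamma, pi
```

The function returns the cluster centres together with the membership, non-membership and hesitation values, each stored as one row per cluster and one column per observation.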

2.3 Dendrite neuron model

Different mathematical models of the biological neuron exist in the literature, and the dendrite neuron model is a good one. The dendrite neuron model (DNM) was first proposed by Todo et al. (2014). The dendrite neuron model artificial neural network consists of synaptic, dendrite, membrane and soma layers. In the synaptic layer, different non-linear transformations of all inputs are performed. A separate transformation of each input is performed in each dendrite branch.

In Eq. (5), index \(i\) indicates the input and \(j\) indicates the dendrite branch. In the dendrite layer, the inputs for each dendrite are combined with a multiplicative merging function using Eq. (6). Thus, each dendrite branch has an output.

In the membrane layer, the information from the branches is combined with the aggregation function as given in Eq. (7).

In the Soma layer, the nonlinear transformation given in Eq. (8) is applied to obtain the output.

Although various training algorithms have been proposed in the literature for the dendrite neuron model, it is known that artificial intelligence optimisation algorithms can produce successful results for training this network. The number of dendrite branches is treated as a hyperparameter, and its value can be determined by various methods. When artificial intelligence optimisation algorithms are applied, the mean squared error calculated over the training set is preferred as the fitness function.
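The four layers can be illustrated with a short forward pass. This is a sketch under assumptions, not the exact Eqs. (5)–(8): it uses the commonly cited form of the model, with a sigmoid in the synaptic and soma layers, a product in the dendrite layer and a sum in the membrane layer. The slope parameters `k_syn` and `k_soma`, the threshold `theta_soma` and the function names are illustrative.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dnm_forward(x, w, theta, k_syn=5.0, k_soma=5.0, theta_soma=0.5):
    """Forward pass of a dendrite neuron model (illustrative sketch).

    x: list of inputs; w and theta: one row of weights/thresholds per branch.
    """
    branch_outputs = []
    for w_j, th_j in zip(w, theta):
        # Synaptic layer: a separate sigmoid transform of each input on branch j.
        y = [sigmoid(k_syn * (w_ij * x_i - th_ij))
             for x_i, w_ij, th_ij in zip(x, w_j, th_j)]
        # Dendrite layer: multiplicative merge of the synaptic outputs.
        branch_outputs.append(math.prod(y))
    # Membrane layer: aggregation (sum) over the branches.
    v = sum(branch_outputs)
    # Soma layer: final non-linear transformation.
    return sigmoid(k_soma * (v - theta_soma))
```

With all weights and thresholds set to zero, two inputs and two branches, every synaptic output is 0.5, each branch gives 0.25, the membrane sum is 0.5 and the soma returns 0.5, which is a convenient sanity check.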

3 Proposed method

In this study, a new hybrid of the intuitionistic fuzzy time series forecasting method and the simple exponential smoothing method is proposed. The proposed method can reduce to the simple exponential smoothing method by adjusting itself to the data set. The time series of interest can thus be forecast with a complex model, with a simple model, or with a weighted combination of the two. In this respect, the proposed method has a modular and adaptive structure. An important feature of the proposed method is that all parameters in the model can be estimated with a single grey wolf optimiser.

The operation of the proposed method is shown in Fig. 1. In the proposed method, the real observations of the time series are clustered, and membership and non-membership values are obtained for each observation according to the predetermined number of intuitionistic fuzzy clusters. The outputs of the dendrite neuron models created separately for membership and non-membership values are combined with simple exponential smoothing to obtain the final forecasts. The step-by-step algorithm of the proposed method is given below.

Algorithm 2 The proposed method

Step 1. Possible parameter values of the method are determined. These parameters are listed below.

\(c\): The number of intuitionistic fuzzy clusters

\(p\): The number of lagged variables

\(nd\): The number of dendrite branches

\(ntrain\): The length of the training set

\(nval\): The length of the validation set

\(ntest\): The length of the test set

The possible values of these parameters can be determined according to the components of the analysed time series.

Step 2. The time series is divided into three parts: training, validation and test data. The last \(ntest\) observations of the time series are separated as test data and the remaining initial observations are separated as training data, as given in Eqs. (9)–(12).

The training set is used to determine the optimal weight and bias values, the validation set is used to select the hyperparameters, and the test data are used for comparisons with alternative methods.
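The partition in Step 2 can be sketched as below. Since Eqs. (9)–(12) are not reproduced in this text, the placement of the validation block immediately before the test block is an assumption, as is the function name.

```python
def split_series(series, nval, ntest):
    """Split a series chronologically into training, validation and test parts.

    Assumes the validation block directly precedes the test block.
    """
    ntrain = len(series) - nval - ntest
    if ntrain <= 0:
        raise ValueError("nval + ntest must be smaller than the series length")
    train = series[:ntrain]
    val = series[ntrain:ntrain + nval]
    test = series[ntrain + nval:]
    return train, val, test
```

For a series of 150 observations with `nval=10` and `ntest=10`, this yields 130 training observations followed by two blocks of ten.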

Step 3. The intuitionistic fuzzy c-means method is applied to the training data. The matrices of membership (\(\mu\)) and non-membership (\(\gamma\)) values are calculated as given in Eqs. (13)–(14).

In the application of the intuitionistic fuzzy c-means method, the fuzziness index (\(m\)) is taken as 2 and the parameter \(\alpha\) as 0.85.

Step 4. Lagged variables are obtained for each column of the matrices of membership and non-membership values. Thus, the lagged membership and non-membership matrices that will be input to the dendrite neuron models are obtained as given in Eqs. (15)–(16).

These values are prepared to form inputs to the artificial neural network used.
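The construction of lagged inputs in Step 4 can be sketched as follows. The layout is an assumption for illustration: `values` holds one row per time point and one column per cluster, and each output row concatenates the rows at lags 1 to \(p\). The same helper would be applied to the membership and the non-membership matrices in turn.

```python
def lagged_inputs(values, p):
    """Build lagged input rows from an n x c matrix (rows are time points).

    Row t of the result (for t = p, ..., n-1) lists the c values at lag 1,
    then the c values at lag 2, and so on up to lag p.
    """
    rows = []
    for t in range(p, len(values)):
        row = []
        for lag in range(1, p + 1):
            row.extend(values[t - lag])
        rows.append(row)
    return rows
```

Each resulting row has \(p\times c\) entries, which matches the number of inputs per dendrite branch implied by the parameter dimensions stated in Step 5.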

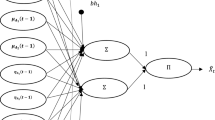

Step 5. The parameters of the modular neural network architecture given in Fig. 2 are estimated by the grey wolf optimisation algorithm. In Fig. 2, the inputs of the network are the lagged variables of memberships and non-memberships for all clusters.

The output of the proposed method is given in the following equations. Synaptic layer calculations can be handled in two parts. In Eq. (17), synaptic calculations are performed with membership values, whilst in Eq. (18), synaptic calculations can be performed with non-membership values.

For membership values, the output of the dendrite branch (\({Z}_{j}^{\mu }\)) is calculated by Eq. (19) and for non-membership values, the output of the dendrite branch (\({Z}_{j}^{\gamma }\)) is calculated by Eq. (20).

The outputs of the membrane layer are similarly calculated by Eqs. (21)–(22) based on membership and non-membership values.

The outputs of the Soma layer are calculated by Eqs. (23) and (24).

The final output of the dendrite neural network is A, which is the linear combination of the outputs from the membership and non-membership values. This output is calculated by Eq. (25).

The output of the proposed method is calculated as the linear combination of the one-step lagged variable and the intuitionistic fuzzy time series part by Eq. (26).
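The two linear combinations can be sketched as below. The exact forms of Eqs. (25) and (26) are not reproduced in this text, so the convex-combination form, the assignment of \(\lambda\) to the lagged observation, and the names `o_mu`, `o_gamma` and `y_prev` are assumptions.

```python
def combine_forecast(o_mu, o_gamma, y_prev, w, lam):
    """Combine the two soma outputs and the one-step lagged observation.

    Assumed forms: A = w * O_mu + (1 - w) * O_gamma, then
    forecast = lam * y_prev + (1 - lam) * A.
    """
    a = w * o_mu + (1.0 - w) * o_gamma      # Eq. (25)-type combination
    return lam * y_prev + (1.0 - lam) * a   # Eq. (26)-type combination
```

Under these assumptions, \(\lambda =1\) reproduces the lagged observation alone and \(\lambda =0\) uses only the intuitionistic fuzzy time series part, which is how the method can move between a simple and a complex model. Because the soma outputs lie in (0, 1), the sketch presumes the series has been scaled to a comparable range.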

The grey wolf optimisation algorithm aims to minimise the mean squared error calculated for the training set. The optimisation problem to be solved with the grey wolf optimiser is a constrained multivariate optimisation problem and is given in Eq. (27).

In Eq. (27), \(\Xi\) denotes all parameters in the proposed method and its elements are as follows.

\({W}^{\left(1\right)}\), \({W}^{\left(2\right)}\), \({\Theta }^{\left(1\right)}\) and \({\Theta }^{\left(2\right)}\) are \(p\times c\times nd\)-dimensional arrays. \({k}_{{\text{soma}}}^{\mu },{\theta }_{{\text{soma}}}^{\mu },{k}_{{\text{soma}}}^{\gamma },{\theta }_{{\text{soma}}}^{\gamma },w\) and \(\lambda\) are scalars. In addition, \(w\) and \(\lambda\) are restricted to the range between zero and one (\(0\le w\le 1\), \(0\le \lambda \le 1\)).

Algorithm 1 is used to solve the optimisation problem (27). In using this algorithm, the values of the solutions corresponding to \(w\) and \(\lambda\) are constrained by Eq. (28).
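The fitness function and the constraint handling can be sketched as follows. Equation (28) is not reproduced in this text, so clamping out-of-range values to the nearest bound is an assumption, as is the convention that \(w\) and \(\lambda\) occupy the last two entries of the position vector.

```python
def mse(actual, predicted):
    """Mean squared error between two equally long sequences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def clamp01(v):
    """Clamp a value to the interval [0, 1]."""
    return min(1.0, max(0.0, v))

def repair(position):
    """Return a copy of a position whose last two entries (w, lambda) lie in [0, 1].

    Hypothetical layout: all other parameters come first and are left unchanged.
    """
    fixed = list(position)
    fixed[-2] = clamp01(fixed[-2])
    fixed[-1] = clamp01(fixed[-1])
    return fixed
```

A candidate solution would be passed through `repair` before its training-set `mse` is evaluated, so that every agent respects the bounds on \(w\) and \(\lambda\).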

Step 6. Forecasts for the validation set are obtained using the parameter values optimised in Step 5. The best hyperparameter values \({c}_{best}\), \({p}_{best}\) and \({nd}_{best}\) are determined based on the validation set performance.

Step 7. The model is retrained using the best hyperparameter values (\({c}_{best}\), \({p}_{best}\) and \({nd}_{best}\)) and the test set forecasts are obtained.
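The hyperparameter selection in Steps 6 and 7 amounts to a search over the candidate values from Step 1. A minimal sketch is given below, assuming an exhaustive grid; `validation_error` is a hypothetical callable that trains the model on the training set for a given setting and returns its validation error.

```python
from itertools import product

def select_hyperparameters(c_values, p_values, nd_values, validation_error):
    """Return the (c, p, nd) triple with the smallest validation error."""
    best, best_err = None, float("inf")
    for c, p, nd in product(c_values, p_values, nd_values):
        err = validation_error(c, p, nd)
        if err < best_err:
            best, best_err = (c, p, nd), err
    return best, best_err
```

The returned triple plays the role of \({c}_{best}\), \({p}_{best}\) and \({nd}_{best}\); the model is then retrained with these values before the test set forecasts are produced.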

4 Applications

In this study, four time series are used to evaluate the performance of the proposed method. Each time series is divided into three parts: training, validation and test sets. This allows a more detailed and comprehensive comparison of the methods used in the analysis. The proposed method is compared with the high-order fuzzy time series method of Chen (2002), which is one of the classical fuzzy time series methods; the fuzzy time series method based on the multiplicative neuron model (FTS-SMN) proposed in Aladağ (2013); the picture fuzzy regression functions method (PFF) proposed in Bas et al. (2020); and the high-order univariate intuitionistic fuzzy time series forecasting model (IFTS) of Egrioglu et al. (2019).

The first time series used in the application is the time series of the Index of Coin Market Cap opening prices (CMC-OPEN) between 12/07/2022 and 13/02/2023. The time series contains a total of 150 observations. The graph of the time series is given in Fig. 3. The time series is downloaded from the Yahoo Finance website (https://finance.yahoo.com/).

The results obtained by analysing the time series are given in Table 1. Table 1 presents the mean and standard deviation of the root mean square error (RMSE) values obtained from 30 different random initial weights. The results for the Chen (2002) method and the best hyperparameter setting are also given in Table 1.

In Table 1 and all other tables, \({c}_{best}\) is the best number of clusters, \({p}_{best}\) is the best number of lagged variables and \({nd}_{best}\) is the best number of dendrite branches. Also, (–) indicates that the method does not have a corresponding value for a cell of the table.
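The summary statistics reported in the tables can be computed as sketched below. The use of the sample standard deviation is an assumption, since the text does not state which estimator was used, and the function names are illustrative.

```python
import math
import statistics

def rmse(actual, predicted):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def summarise_runs(rmse_values):
    """Mean and sample standard deviation of RMSE values from repeated runs."""
    return statistics.mean(rmse_values), statistics.stdev(rmse_values)
```

In the setting described above, `rmse_values` would hold the 30 test-set RMSE values obtained from the 30 different random initialisations.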

Table 1 shows that the mean RMSE of the proposed method is smaller than that of all other methods.

The second time series used in the application is the CMC-OPEN time series between 5/04/2022 and 7/11/2022. The time series contains a total of 150 observations. The graph of the time series is given in Fig. 4. The time series is downloaded from the Yahoo Finance website (https://finance.yahoo.com/).

The results obtained by analysing the time series are given in Table 2.

Table 2 shows that the proposed method is the second-best method after that of Aladağ (2013).

The third time series used in the application is the CMC-OPEN time series between 11/08/2022 and 16/03/2023. The time series contains a total of 150 observations. The graph of the time series is given in Fig. 5. The time series is downloaded from the Yahoo Finance website (https://finance.yahoo.com/).

The results obtained by analysing the time series are given in Table 3.

Table 3 shows that the mean RMSE of the proposed method is smaller than that of all other methods.

The fourth and final time series used in the application is the CMC-OPEN time series between 16/11/2022 and 23/06/2023. The time series contains a total of 150 observations. The graph of the time series is given in Fig. 6. The time series is downloaded from the Yahoo Finance website (https://finance.yahoo.com/).

The results obtained by analysing the time series are given in Table 4.

Table 4 shows that the mean RMSE of the proposed method is smaller than that of all other methods.

5 Conclusions

In this study, for the first time in the literature, a new forecasting method is proposed by integrating the intuitionistic fuzzy time series method with simple exponential smoothing. The dendrite neuron model artificial neural network, which works with lagged membership and non-membership values, is hybridised with exponential smoothing and a new architectural structure is presented. The main contribution of this paper is to introduce a new combined forecasting method to the literature.

The proposed method can balance between a simple and a complex forecasting model and can automatically shift its weight towards either one. In the applications, the proposed method produced the smallest mean RMSE on three of the four time series and was the second-best method on the remaining one. Future studies may focus on the development of integrated forecasting systems with different exponential smoothing methods. In addition, addressing the method within the concept of explainable artificial intelligence is an important research problem.

Data availability

Data will be made available on request.

References

Abhishekh GSS, Singh SR (2018) A score function-based method of forecasting using intuitionistic fuzzy time series. New Math Nat Comput 14(01):91–111

Abhishekh GSS, Singh SR (2020) A new method of time series forecasting using intuitionistic fuzzy set based on average length. J Ind Prod Eng 37(4):175–185

Aladag CH (2013) Using multiplicative neuron model to establish fuzzy logic relationships. Expert Syst Appl 40(3):850–853

Arslan SN, Cagcag Yolcu O (2022) A hybrid sigma-pi neural network for combined intuitionistic fuzzy time series prediction model. Neural Comput Appl 34(15):12895–12917

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20:87–96

Bas E, Yolcu U, Egrioglu E (2020) Picture fuzzy regression functions approach for financial time series based on ridge regression and genetic algorithm. J Comput Appl Math 370:112656

Bas E, Egrioglu E, Kolemen E (2022) A novel intuitionistic fuzzy time series method based on bootstrapped combined pi-sigma artificial neural network. Eng Appl Artif Intell 114:105030

Çakır S (2023) Renewable energy generation forecasting in Turkey via intuitionistic fuzzy time series approach. Renew Energy 214:194–200

Chen SM (2002) Forecasting enrollments based on high-order fuzzy time series. Cybern Syst 33(1):1–16

Chen SM, Chen YC (2002) Automatically constructing membership functions and generating fuzzy rules using genetic algorithms. Cybern Syst 33(8):841–862

Chen SM, Chen CD (2011) Handling forecasting problems based on high-order fuzzy logical relationships. Expert Syst Appl 38(4):3857–3864

Chen SM, Chen SW (2014) Fuzzy forecasting based on two-factors second-order fuzzy-trend logical relationship groups and the probabilities of trends of fuzzy logical relationships. IEEE Trans Cybern 45(3):391–403

Chen SM, Jian WS (2017) Fuzzy forecasting based on two-factors second-order fuzzy-trend logical relationship groups, similarity measures and PSO techniques. Inf Sci 391:65–79

Chen SM, Lee LW (2010) Fuzzy decision-making based on likelihood-based comparison relations. IEEE Trans Fuzzy Syst 18(3):613–628

Chen SM, Randyanto Y (2013) A novel similarity measure between intuitionistic fuzzy sets and its applications. Int J Pattern Recognit Artif Intell 27(7):1350021

Chen SM, Wang NY (2010) Fuzzy forecasting based on fuzzy-trend logical relationship groups. IEEE Trans Syst Man Cybern Part B (cybern) 40(5):1343–1358

Chen SM, Zou XY, Gunawan GC (2019) Fuzzy time series forecasting based on proportions of intervals and particle swarm optimization techniques. Inf Sci 500:127–139

Chen LS, Chen MY, Chang JR, Yu PY (2021) An intuitionistic fuzzy time series model based on new data transformation method. Int J Comput Intell Syst 14(1):550–559

Dixit A, Jain S (2023) Intuitionistic fuzzy time series forecasting method for non-stationary time series data with suitable number of clusters and different window size for fuzzy rule generation. Inf Sci 623:132–145

Egrioglu E, Yolcu U, Bas E (2019) Intuitionistic high-order fuzzy time series forecasting method based on pi-sigma artificial neural networks trained by artificial bee colony. Granul Comput 4:639–654

Fan X, Wang Y, Zhang M (2020) Network traffic forecasting model based on long-term intuitionistic fuzzy time series. Inf Sci 506:131–147

Goyal G, Bisht DC (2023) Adaptive hybrid fuzzy time series forecasting technique based on particle swarm optimization. Granul Comput 8(2):373–390

Hassan SG, Iqbal S, Garg HM, Shuangyin L, Kieuvan TT (2020) Designing intuitionistic fuzzy forecasting model combined with information granules and weighted association reasoning. IEEE Access 8:141090–141103

Kocak C, Egrioglu E, Bas E (2021) A new deep intuitionistic fuzzy time series forecasting method based on long short-term memory. J Super Comput 77:6178–6196

Kocak C, Egrioglu E, Bas E (2023) A new explainable robust high-order intuitionistic fuzzy time-series method. Soft Comput 27(3):1783–1796

Kumar S, Bisht K, Gupta KK (2019) Intuitionistic fuzzy time series forecasting based on dual hesitant fuzzy set for stock market: DHFS-based IFTS model for stock market. Exploring critical approaches of evolutionary computation. IGI Global, Hershey, pp 37–57

Lin HC, Wang LH, Chen SM (2006) Query expansion for document retrieval based on fuzzy rules and user relevance feedback techniques. Expert Syst Appl 31(2):397–405

Meng F, Chen SM, Yuan R (2020) Group decision making with heterogeneous intuitionistic fuzzy preference relations. Inf Sci 523:197–219

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Nik Badrul Alam NMFH, Ramli N, Abd Nassir A (2022a) Predicting Malaysian crude palm oil prices using intuitionistic fuzzy time series forecasting model. ESTEEM Acad J 18:61–70

Nik Badrul Alam NMFH, Ramli N, Mohamed AST, Adnan NIM (2022b) Integration of 4253HT smoother with intuitionistic fuzzy time series forecasting model. PJMS 18(4):929–941

Pant M, Kumar S (2022a) Particle swarm optimization and intuitionistic fuzzy set-based novel method for fuzzy time series forecasting. Granul Comput 7(2):285–303

Pant S, Kumar S (2022b) IFS and SODA based computational method for fuzzy time series forecasting. Expert Syst Appl 209(15):118213

Pant M, Shukla AK, Kumar S (2022) Novel intuitionistic fuzzy time series modeling to forecast the death cases of COVID-19 in India. Smart trends in computing and communications. Springer, Berlin, pp 525–531

Pant M, Bisht K, Negi S (2023) Computational-based partitioning and Strong α, β-cut based novel method for intuitionistic fuzzy time series forecasting. Appl Soft Comput 142:110336

Pattanayak RM, Behera HS, Panigrahi S (2021) A novel probabilistic intuitionistic fuzzy set based model for high order fuzzy time series forecasting. Eng Appl Artif Intell 99:104136

Samal S, Dash R (2023) Developing a novel stock index trend predictor model by integrating multiple criteria decision-making with an optimized online sequential extreme learning machine. Granul Comput 8(3):411–440

Todo Y, Tamura H, Yamashita K, Tang Z (2014) Unsupervised learnable neuron model with nonlinear interaction on dendrites. Neural Netw 60:96–103

Vamitha V, Vanitha V (2022) Intuitionistic fuzzy time series forecasting model: aesthetic approach on temperature prediction. In: AIP conference. AIP Publishing, p 020014

Wang YN, Wang J, Fan X, Song Y (2020) Network traffic anomaly detection algorithm based on intuitionistic fuzzy time series graph mining. IEEE Access 8:63381–63389

Xu SS, Zheng KQ (2019) The Long-term forecasting method for IFTS. In: 2019 international conference on communications, information system and computer engineering (CISCE). IEEE, pp 669–673

Yolcu OC, Yolcu U (2023) A novel intuitionistic fuzzy time series prediction model with cascaded structure for financial time series. Expert Syst Appl 215:119336

Yücesoy E, Egrioglu E, Bas E (2023) A new intuitionistic fuzzy time series method based on the bagging of decision trees and principal component analysis. Granul Comput 8(6):1925–1935

Zadeh LA (1965) Fuzzy sets. Inf Control 8:338–353

Zeng S, Chen SM, Teng MO (2019) Fuzzy forecasting based on linear combinations of independent variables, subtractive clustering algorithm and artificial bee colony algorithm. Inf Sci 484:350–366

Zou XY, Chen SM, Fan KY (2020) Multiple attribute decision making using improved intuitionistic fuzzy weighted geometric operators of intuitionistic fuzzy values. Inf Sci 535:242–253

Acknowledgements

This study is supported by the Higher Education Council (YÖK) 100/2000 priority area "Sustainable Water Resources (Water Saving Technologies and Treatment Technologies)" scholarship.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Contributions

TC: methodology, conceptualization, writing, data analysis, software. EB: methodology, software, writing, conceptualization. EE: methodology, conceptualization, writing, editing. TA: methodology, conceptualization, writing, editing.

Ethics declarations

Conflict of interest

The authors do not have any competing interests.

Ethical approval and consent to participate

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cansu, T., Bas, E., Egrioglu, E. et al. Intuitionistic fuzzy time series forecasting method based on dendrite neuron model and exponential smoothing. Granul. Comput. 9, 49 (2024). https://doi.org/10.1007/s41066-024-00474-6

DOI: https://doi.org/10.1007/s41066-024-00474-6