Abstract

Probabilistic linguistic term sets (PLTSs) are an effective tool to express preferences with different weights for different linguistic terms, and the TODIM method is based on prospect theory and could consider the decision maker’s cognitive behavior. In this paper, we extend the TODIM to solve the multi-attribute decision-making (MADM) problems with PLTSs. First of all, the definition, operations, comparative method, and deviation degrees of PLTSs are introduced; a new standardization method for the attribute values was proposed with respect to the situation in which the probabilistic sum for all linguistic terms is less than 1. Then, the objective weights for criteria can be obtained by information entropy theory and the steps of the extended TODIM method for PLTSs are proposed. Finally, an example is to verify the developed approach.

Similar content being viewed by others

1 Introduction

In actual decision-making, there are a large number of qualitative criteria which are hardly evaluated by accurate numerical values. Hence, how to make the assessment of alternatives precisely is pivotal. However, for the complex decision-making problems, decision makers usually provide their opinions by natural language, such as “good”, “fair”, “poor”, and other similar linguistic terms (LTs) (Wu and Xu 2016). Now, decision-making based on LTs has become an important research aspect in the field of decision analysis (Xu and Wang 2016). For example, Xu (2007) gave the goal programming method with LTs for MADM problems. Xu and Wang (2017) solved group decision-making (GDM) problem with multi-granularity linguistic model. Mendel (2016) proposed three approaches to synthesizing an interval type-2 fuzzy set model of LTs.

In the traditional decision-making methods based on LTs, the DMs can express their preferences only by one LT. However, sometimes, it is difficult to depict complex qualitative information only by one LT. For instance, a DM may think that it may be “very good”, “good”, or “a little good” for one object, but he/she is not sure how good it is. In this situation, Rodriguez et al. (2012) proposed hesitant fuzzy LT sets (HFLTSs), which have several possible LTs. Then, Beg and Rashid (2013) and Wei et al. (2014) proposed some aggregation operators based on HFLTSs. Zhu and Xu (2014) proposed some preference relations (HFLPRs) for HFLTSs. Furthermore, Beg and Rashid (2013) proposed an extended TOPSIS method for the HFLTSs. Dong et al. (2015) and Rodríguez et al. (2013) proposed some GDM method with HFLTSs. Wang (2015) proposed the extended HFLTSs (EHFLTSs) for the non-continuous LTs. Liu and Rodriguez (2014) further developed the fuzzy envelopes of HFLTSs and applied them to MADM problems.

However, all possible LTs given by the DMs in HFLTSs have the same importance. Obviously, this is not realistic. In real decision-making, the DMs may prefer some possible LTs and give some different importance degrees. In other words, we can give some possible LTs and then give their importance degrees for evaluating an object (Liu and Rodríguez 2014). This importance degree can be regarded as probabilistic distribution (Wu and Xu 2016), belief degree (Yang 2001; Yang and Xu 2002), and so on. To better depict such a situation, Pang et al. (2016) proposed the probabilistic LT sets (PLTSs) which can express different importance degrees or weights of all the possible LTs. Obviously, PLTSs have the flexibility and richness in expressing complex fuzzy linguistic information.

To do a reasonable and feasible decision-making, the decision-making methods are now essential and a lot of efforts have been made in the past few decades, such as the TOPSIS (Liu 2009), the VIKOR (Chatterjee and Samarjit 2017), the GRA (Liu and Liu 2010), the PROMETHEE (Liu and Guan 2009), the ELECTRE (Liu and Zhang 2011; Roy and Bertier 1972), and so on. Now, these methods have been extended for different attribute values, such as fuzzy numbers, LTs, and so on. However, because the MADM problems have becoming increasingly complicated over the years, there is an obvious shortcoming in the existing methods, which supposes that the DMs are completely rational. Now, many excellent researches involving behavior experiments (Kahneman and Tversky 1979; Tversky and Kahneman 1992) have shown that the DMs are bounded rational in decision-making process, and the psychology and behavior of the DMs are important factors which can obviously influence decision results. Hence, Gomes and Lima first presented the TODIM in 1992 (Gomes and Lima 1992), which is a valuable MADM method considering the DMs psychology and behavior based on prospect theory (PT) (Kahneman and Tversky 1979), and it has been applied to some decision-making problems (Pang et al. 2016). Moreover, its some new extensions gave been developed. Such as Lourenzutti and Krohling (2013) presented an IF-RTODIM for intuitionistic fuzzy numbers (IFNs). Wang et al. (2016) proposed a likelihood function of HFLTSs embedded into TODIM. Ren et al. (2016) developed a Pythagorean fuzzy TODIM approach to MADM problems.

Obviously, the TODIM method is a valuable and important MADM tool considering the DMs psychology and behavior, and its extensions are also very effective in solving the MADM problems for different fuzzy environments, but there is no research about the TODIM method for PLTSs in the existing researches. Consequently, considering the DMs’ psychology and behavior, it will be a valuable research topic about how to solve the MADM problem with PLTSs. In addition, due to the increasing complexity of the decision-making environment, it is usually difficult for DMs to give the weight evaluation information completely. Therefore, it is very necessary to develop a method to obtain the objective weight of each attribute and then to propose an extended TODIM for PLTSs, so that the more comprehensive and reasonable decision can be made in an intricate situation. Therefore, the aims of this paper are (1) to propose the extended TODIM method to process the multi-attribute group decision-making (MAGDM) problems with PLTSs; (2) to explore the normalization method of PLTSs with respect to the situation in which the probabilistic sum for all linguistic terms is less than 1; (3) to develop a weight determination method based on entropy; (4) to show the effectiveness and advantages of the proposed approach.

To achieve this goal, the rest is introduced as follows: some basic concepts of the LTSs, HFLTSs, and PLTSs, and the TODIM were briefly introduced in Sect. 2. Section 3 proposes the extended TODIM to process PLTSs. In Sect. 4, an example is given to demonstrate the validity and advantages of our method. In Sect. 5, we conclude this paper.

2 Preliminaries

2.1 The LTSs and HFLTSs

The LTSs, which are finite and ordered, are regularly used to express DMs opinions for attributes of MADM problems, and they can be defined as follows (Herrera et al. 1995):

where \( S_{\alpha } \) is called a linguistic variable; \( \tau \) is a positive integer. LTSs can meet:

-

(1)

$$ S_{\alpha } \succ S_{\beta } , {\text{if}} \alpha > \beta ; $$

-

(2)

The negation operator is: \( neg\left( {S_{\alpha } } \right) = S_{\beta} \), such that \( \alpha { + } \beta = \tau. \)

To relieve the loss of information, a continuous LTS is obtained from its discrete version by Xu (2012):

Let \( S_{\alpha } ,S_{\beta } \in \overline{S} \) be any two LTs, and then based on the LTS \( \overline{S} \), the operation on \( S_{\alpha } \) and \( S_{\beta } \) can be defined by (Xu and Wang 2017):

where \( \lambda_{1} ,\lambda_{2} \ge 0. \) Furthermore, we gave the definition of HFLTSs (Rodriguez et al. 2013).

Definition 1 (Rodriguez et al. 2013 )

Suppose that \( S = \left\{ {S_{0} ,S_{1} , \ldots ,S_{g} } \right\} \) is an LTS, and then, an HFLTS \( b_{S} \) is defined as a subset of LTs \( S \).

Example 1

Let \( S \) be the following LTS:

Then, we give two examples about HFLTSs:

Simplifying the results above, we get:

Furthermore, Zhu and Xu (2014) gave an operational definition of HFLTS.

Definition 2 (Zhu and Xu 2014)

Suppose that \( b_{\alpha } = \left\{ {b_{\alpha }^{l} |l = 1,2, \ldots ,\# b_{\alpha } } \right\} \) and \( b_{\beta } = \left\{ {b_{\beta }^{l} |l = 1,2, \ldots ,\# b_{\beta } } \right\} \) are any two HFLTSs, such that \( \# b_{\alpha } = \# b_{\beta } \), then

where \( b_{\alpha }^{\rho \left( l \right)} \) and \( b_{\beta }^{\rho \left( l \right)} \) are the lth LTs in \( b_{\alpha } \) and \( b_{\beta } \), respectively, \( \# b_{\alpha } \) and \( \# b_{\beta } \) are the numbers of the LTs in \( b_{\alpha } \) and \( b_{\beta } \), respectively.

2.2 PLTSs

With respect to the shortcoming of HFLTSs which cannot express the probability of possible LTs, Pang et al. (2016) proposed PLTSs.

Definition 3 (Pang et al. 2016)

Suppose that \( S = \left\{ {S_{0} ,S_{1} , \ldots ,S_{\tau } } \right\} \) is an LTS, and then

is defined a PLTS, where \( LT^{(k)} \left( {p^{(k)} } \right) \) is the LT \( LT^{(k)} \) with the probability \( p^{(k)} \), and \( \# LT(p) \) is the number of all different LTs in \( LT(p) \).

We can note that if \( \sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} = 1} \), then the PLTS is with complete probabilistic information; if \( \sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} < 1} \), then the PLTS is with partial probabilistic information; if \( \sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} = 0} \), then the PLTS is with completely unknown probabilistic information.

Definition 4 (Pang et al. 2016)

Suppose that \( LT(p) = \left\{ {LT^{(k)} \left( {p^{(k)} } \right)|k = 1,2, \ldots ,\# LT(p)} \right\} \) is a PLTS, and \( r^{(k)} \) is the subscript of LT \( LT^{(k)} \). If \( LT^{(k)} \left( {p^{(k)} } \right) \) \( \left( {k = 1,2, \ldots ,\# LT(p)} \right) \) are ranked according to the values of \( r^{(k)} p^{(k)} \left( {k = 1,2, \ldots ,\# LT(p)} \right) \) in descending order, then \( LT(p) \) is called an ordered PLTS,

Example 2

Suppose that the LTS S is the set used in Example 1, and then, it can be denoted by the PLTS \( LT(p) = \left\{ {S_{4} \left( {0.1} \right),S_{5} \left( {0.65} \right),S_{6} \left( {0.2} \right)} \right\} \). We can also calculate \( r^{(k)} p^{(k)} \left( {k = 1,2,3} \right) \), and get \( 4 \times 0.1 = 0.4, 5 \times 0.65 = 3.25, 6 \times 0.2 = 1.2 \). Reordering the LTs in \( LT(p) \) in descending order, we have

In the following, Pang et al. (2016) come up with some basic operations:

Definition 5 (Pang et al. 2016)

Let \( LT_{1} (p) \) and \( LT_{2} (p) \) be two ordered PLTSs, \( LT_{1} (p) = \left\{ {LT_{1}^{(k)} \left( {p_{1}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{1} (p)} \right\} \) and \( LT_{2} (p) = \left\{ {LT_{2}^{(k)} \left( {p_{2}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{2} (p)} \right\} \). Then

where \( LT_{1}^{(k)} \) and \( LT_{2}^{(k)} \) are the \( k \) th LTs in \( LT_{1} (p) \) and \( LT_{2} (p) \), respectively, \( p_{1}^{(k)} \) and \( p_{2}^{(k)} \) are the probabilities of the \( k \) th LTs in \( LT_{1} (p) \) and \( LT_{2} (p) \), respectively.

2.3 The comparison for two PLTSs

First, we introduced the score of PLTS which is defined by Pang et al. (2016) as follows.

Definition 6 (Pang et al. 2016)

Let \( LT(p) = \left\{ {LT^{(k)} \left( {p^{(k)} } \right)|k = 1,2, \ldots ,\# LT(p)} \right\} \) be a PLTS, and \( r^{(k)} \) is the subscript of LT \( LT^{(k)} \). The score of \( LT(p) \) is given as follows:

where \( \bar{\alpha } = {{\sum\nolimits_{{k = 1}}^{{\# LT(p)}} {r^{{(k)}} p^{{(k)}} } } \mathord{\left/ {\vphantom {{\sum\limits_{{k = 1}}^{{\# LT(p)}} {r^{{(k)}} p^{{(k)}} } } {\sum\limits_{{k = 1}}^{{\# LT(p)}} {p^{{(k)}} } }}} \right. \kern-\nulldelimiterspace} {\sum\nolimits_{{k = 1}}^{{\# LT(p)}} {p^{{(k)}} } }} \)

For any two PLTSs \( LT_{1} (p) \) and \( LT_{2} (p) \), if \( E\left( {LT_{1} (p)} \right) < E\left( {LT_{2} (p)} \right) \), then \( LT_{1} (p) \prec LT_{2} (p) \); if \( E\left( {LT_{1} (p)} \right) > E\left( {LT_{2} (p)} \right) \), then \( LT_{1} (p) \succ LT_{2} (p) \). However, if \( E\left( {LT_{1} (p)} \right) = E\left( {LT_{2} (p)} \right) \), then two PLTSs cannot be compared by their scores. To solve this problem, Pang et al. (2016) further defined the deviation degree of a PLTS as follows:

Definition 7 (Pang et al. 2016)

Suppose that \( LT(p) = \left\{ {LT^{(k)} \left( {p^{(k)} } \right)|k = 1,2, \ldots ,\# LT(p)} \right\} \) is a PLTS, and \( r^{(k)} \) is the subscript of LT \( L^{(k)} \), and \( E\left( {LT(p)} \right) = S_{{\bar{\alpha }}} \), where \( \bar{\alpha } = {{\sum\nolimits_{k = 1}^{\# LT(p)} {r^{(k)} p^{(k)} } } \mathord{\left/ {\vphantom {{\sum\nolimits_{k = 1}^{\# LT(p)} {r^{(k)} p^{(k)} } } {\sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} } }}} \right. \kern-0pt} {\sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} } }} \). The deviation of \( LT(p) \) is:

For two PLTSs \( LT_{1} (p) \) and \( LT_{2} (p) \), if \( E\left( {LT_{1} (p)} \right) = E\left( {LT_{2} (p)} \right) \) and \( \bar{\sigma }\left( {LT_{1} (p)} \right) > \bar{\sigma }\left( {LT_{2} (p)} \right) \), then \( LT_{1} (p) \prec LT_{2} (p) \); and if \( \bar{\sigma }\left( {LT_{1} (p)} \right) = \bar{\sigma }\left( {LT_{2} (p)} \right) \), then \( LT_{1} (p) \) is indifferent to \( L_{2} (p) \), denoted by \( LT_{1} (p) \sim LT_{2} (p) \). Therefore, there is the following definition about comparison for two PLTSs.

Definition 8 (Pang et al. 2016)

Given two PLTSs \( LT_{1} (p) \) and \( LT_{2} (p) \), then If \( E\left( {LT_{1} (p)} \right) > E\left( {LT_{2} (p)} \right) \), then \( LT_{1} (p) \succ LT_{2} (p) \). else if \( E\left( {LT_{1} (p)} \right) = E\left( {LT_{2} (p)} \right) \), then (i) If \( \sigma \left( {LT_{1} (p)} \right) > \sigma \left( {LT_{2} (p)} \right) \), then \( LT_{1} (p) \prec LT_{2} (p) \). else if \( \sigma \left( {LT_{1} (p)} \right) < \sigma \left( {LT_{2} (p)} \right) \), then \( LT_{1} (p) \succ LT_{2} (p) \).

Definition 9 (Pang et al. 2016)

Let \( LT_{1} (p) = \left\{ {LT_{1}^{(k)} \left( {p_{1}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{1} (p)} \right\} \) and \( LT_{2} (p) = \left\{ {LT_{2}^{(k)} \left( {p_{2}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{2} (p)} \right\} \) be any two PLTSs, \( \#LT_{1} (p) = \# LT_{2} (p) \). Then, the distance between \( LT_{1} (p) \) and \( LT_{2} (p) \) is defined as:

2.4 The traditional TODIM method

The TODIM is proposed based on prospect theory (Kahneman and Tversky 1979), and its main advantage is the capability of capturing the DMs behavior. The steps of the traditional TODIM approach are shown as follows (Gomes and Lima 1992):

For convenience, let \( M = \left\{ {1,2, \ldots ,m} \right\} \) and \( N = \left\{ {1,2, \ldots ,n} \right\} \).

-

Step 1

Identify the decision matrix \( X = \left( {x_{ij} } \right)_{m \times n} \), where \( x_{ij} \) is the jth attribute value with respect to the ith alternative, and then normalize \( X = \left( {x_{ij} } \right)_{m \times n} \) into \( G = \left( {g_{ij} } \right)_{m \times n} \), and \( x_{ij} \) and \( g_{ij} \) are all crisp numbers, \( i \in M,j \in N \).

-

Step 2

Calculate the relative weight \( w_{jr} \) of the attribute \( C_{j} \) to the reference attribute \( C_{r} \) by:

$$ w_{jr} = {\raise0.7ex\hbox{${w_{j} }$} \!\mathord{\left/ {\vphantom {{w_{j} } {w_{r} }}}\right.\kern-0pt} \!\lower0.7ex\hbox{${w_{r} }$}}, \, r,j \in N, $$(14)where \( w_{j} \) is the weight of the attribute \( C_{j} \) and \( w_{r} = \hbox{max} \left\{ {w_{j} |j \in N} \right\} \).

-

Step 3

Obtain the dominance degree of alternative \( x_{i} \) over the alternative \( x_{t} \) using the following expression:

$$ \vartheta \left( {x_{i} ,x_{t} } \right) = \sum\limits_{j = 1}^{n} {\phi_{j} } \left( {x_{i} ,x_{t} } \right), \, \forall \left( {i,t} \right), $$(15)where

$$ \phi _{j} \left( {x_{i} ,x_{t} } \right) = \left\{ {\begin{array}{ll} {\sqrt {w_{{jr}} (g_{{ij}} - g_{{tj}} )/\sum\nolimits_{{j = 1}}^{n} {w_{{jr}} } } ,} \hfill & {{\text{if }}\quad g_{{ij}} - g_{{tj}} > 0,} \hfill \\ 0 \hfill & {{\text{if }}\quad g_{{ij}} - g_{{tj}} = 0,} \hfill \\ { - \frac{1}{\theta }\sqrt {\left( {\sum\nolimits_{{j = 1}}^{n} {w_{{jr}} } } \right)\left( {g_{{ij}} - g_{{tj}} } \right)/w_{{jr}} } } \hfill & {{\text{if }}\quad g_{{ij}} - g_{{tj}} < 0,} \hfill \\ \end{array} } \right. $$(16)The function \( \phi_{j} \left( {x_{i} ,x_{t} } \right) \) is the contribution of the attribute \( C_{j} \) to \( \vartheta \left( {x_{i} ,x_{t} } \right) \). The \( \theta \) is the DM’ attenuation parameter about the losses which is explained by prospect theory (Kahneman and Tversky 1979). In Eq. (16), three cases can occur: (1) if \( g_{ij} - g_{tj} > 0 \), then \( \phi_{j} \left( {x_{i} ,x_{t} } \right) \) represents a gain; (2) if \( g_{ij} - g_{tj} = 0 \), then \( \phi_{j} \left( {x_{i} ,x_{t} } \right) \) represents a nil; (3) if \( g_{ij} - g_{tj} < 0 \), then \( \phi_{j} \left( {x_{i} ,x_{t} } \right) \) represents a loss.

-

Step 4

Get the overall prospect value of the alternative \( x_{i} \) by

-

Step 5

Sort the alternatives by their overall prospect values \( \delta \left( {x_{i} } \right)\left( {i \in M} \right) \, \).

3 The extended TODIM method for MADM problems with PLTSs

3.1 Description of the MADM problems

For a MADM problem with PLTSs, let \( x = \left\{ {x_{1} ,x_{2} , \ldots ,x_{m} } \right\} \) be a finite set of alternatives and \( C = \left\{ {C_{1} ,C_{2} , \ldots ,C_{n} } \right\} \) be a set of attributes. Based on the LTS \( S = \left\{ {S_{\alpha } |\alpha = 0,1, \ldots ,\tau } \right\} \), the DMs evaluate the alternatives \( x_{i} \left( {i = 1,2, \ldots ,m} \right) \) for the attributes \( C_{j} \left( {j = 1,2, \ldots ,n} \right) \), and give the evaluation results by PLTSs \( LT_{ij} (p) = \left\{ {LT_{ij}^{(k)} \left( {p_{ij}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{ij} (p)} \right\} \), where \( LT_{ij}^{(k)} \left( {k = 1,2, \ldots ,\# LT_{ij} (p)} \right) \) are LTs with the corresponding probability \( p_{ij}^{(k)} \left( {k = 1,2, \ldots ,\# LT_{ij} (p)} \right) \), \( p_{ij}^{(k)} > 0 \), \( k = 1,2, \ldots ,\# LT_{ij} (p) \), and \( \sum\nolimits_{k = 1}^{{\# LT_{ij} (p)}} {p_{ij}^{(k)} } \le 1 \). All the PLTSs \( LT_{ij} (p)\left( {i = 1,2, \ldots ,m,j = 1,2, \ldots ,n} \right) \) are used to build the decision matrix \( R = \left[ {LT_{ij} (p)} \right]_{m \times n} \), and the goal is to select the best alternative.

3.2 The normalization of PLTSs

As mentioned in Sect. 2.2, there is existing partial probabilistic information when \( \sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} < 1} \). To estimate the unknown part of probabilistic information, we consider continuous re-distribution of the missing probability by a recursive method for a PLTS \( LT(p) \) with \( \sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} < 1} \). It will guarantee the unchanged preference for each expert by this way, and then, the associated steps are shown as following:

For convenience, we use \( S_{l}^{(k)} \) to express the probability of the LT \( L^{(k)} \) after the lth iteration, and use \( \mu_{l} \) to express the ignorance of probabilistic information after the lth iteration.

Let \( \sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} = a < 1} \), we can first obtain the associated probability of \( LT^{(k)} \left( {k = 1,2, \ldots ,\# LT(p)} \right) \), note that as \( S_{1}^{(k)} = p^{(k)} + p^{(k)} \left( {1 - a} \right) \), then the uncertain probability can be calculated by \( \zeta_{1} = 1 - \sum\nolimits_{k = 1}^{\# LT(p)} {S_{1}^{(k)} } = 1 - \sum\nolimits_{k = 1}^{\# LT(p)} {p_{{}}^{(k)} \left( {2 - a} \right)} \).

Next, we repeat the above process in the following:

\( S_{3}^{(k)} = S_{2}^{(k)} + S_{2}^{(k)} \cdot \zeta_{2} = S_{2}^{(k)} \left( {1{ + }\left( {\zeta_{1} } \right)^{2} } \right), \)

Therefore, we transform preceding formula in the following form:

Because \( 0 < \zeta_{1} < 1, \)

From the course of calculability, we can conclude the normalized form for a PLTS \( LT(p) \) with \( \sum\nolimits_{k = 1}^{\# LT(p)} {p^{(k)} < 1} \) by

where \(p^{{\prime (k)}} = p^{{(k)}}/p^{{(k)}} \sum\nolimits_{{k = 1}}^{{\# LT(p)}} {p^{{(k)}} } {\text{ }} - 0pt\sum\nolimits_{{k = 1}}^{{\# LT(p)}} {p^{{(k)}} } \).

In addition, sometimes, the numbers of LTs in PLTSs are usually different, it is necessary to standardize the cardinality of a PLTS for the convenience of computing.

Definition 10 (Pang et al. 2016)

Let \( LT_{1} (p) = \left\{ {LT_{1}^{(k)} \left( {p_{1}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{1} (p)} \right\} \) and \( LT_{2} (p) = \left\{ {LT_{2}^{(k)} \left( {p_{2}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{2} (p)} \right\} \) be any two PLTSs, and let \( \# LT_{1} (p) \) and \( \# LT_{2} (p) \) be the numbers of LTs in \( LT_{1} (p) \) and \( LT_{2} (p) \), respectively. If \( \# LT_{1} (p) > \# LT_{2} (p) \), then the \( \# LT_{1} (p) - \# LT_{2} (p) \) LTs need to be added to \( LT_{2} (p) \) and make the numbers of LTs in \( LT_{1} (p) \) and \( LT_{2} (p) \) equal. The added LTs are the smallest ones in \( LT_{2} (p) \), and their probabilities should be zero.

Let \( LT_{1} (p) = \left\{ {LT_{1}^{(k)} \left( {p_{1}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{1} (p)} \right\} \) and \( LT_{2} (p) = \left\{ {LT_{2}^{(k)} \left( {p_{2}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{2} (p)} \right\} \) be any two PLTSs, and then, the normalization can be performed as follows:

-

(1)

If \( \sum\nolimits_{k = 1}^{\# LT(p)} {p_{i}^{(k)} < 1} \), then by the formula (18), we calculate \( LT^{\prime}_{i} (p),i = 1,2 \).

-

(2)

If \( \# LT_{1} (p) \ne \# LT_{2} (p) \), then according to Definition 10, we add some elements to the one with the smaller number of elements.

Example 3

Let \( LT_{1} (p) = \left\{ {S_{3} \left( {0.30} \right),S_{2} \left( {0.30} \right),S_{1} \left( {0.30} \right)} \right\} \) and \( LT_{2} (p) = \left\{ {S_{2} \left( {0.60} \right),S_{3} \left( {0.40} \right)} \right\} \) be two PLTSs, and then: (1) according to formula (18), \( LT^{\prime}_{1} (p) = \left\{ {S_{3} \left( {0.333} \right),S_{2} \left( {0.333} \right),S_{1} \left( {0.333} \right)} \right\} \); (2) since \( \# LT_{2} (p) < \# LT_{1} (p) \), then we add the LT \( S_{2} \) to \( LT_{2} (p) \) and make the numbers of LTs in \( LT_{1} (p) \) and \( LT_{2} (p) \) identical, and thus, we have \( LT^{\prime}_{2} (p) = \left\{ {S_{2} \left( {0.6} \right),S_{3} \left( {0.4} \right),S_{2} \left( 0 \right)} \right\} \).

3.3 Determining objective weights based on entropy measures

It is important to determine a reasonable weight for each attribute in the course of decision-making. Because the DMs are usually influenced by their knowledge structure, personal bias, and familiarity with the decision alternatives, it is necessary to consider the MADM problem with completely unknown weights of criteria, and we need develop a weight determination method based on entropy under probabilistic linguistic environment. The steps are shown as follows.

First, transformed decision matrix \( R = \left[ {LT_{ij} (p)} \right]_{m \times n} \) into \( Z = \left[ {\overline{{LP_{ij} }} (p)} \right]_{m \times n} \), where \( \overline{{LT_{ij} }} (p) = {{\sum\nolimits_{k = 1}^{{\# LT_{ij} (p)}} {r^{(k)} p^{(k)} } } \mathord{\left/ {\vphantom {{\sum\nolimits_{k = 1}^{{\# LT_{ij} (p)}} {r^{(k)} p^{(k)} } } {\# LT_{ij} (p)}}} \right. \kern-0pt} {\# LT_{ij} (p)}}. \) Next, calculate the entropy for attribute, the entropy values for the jth attribute are

Then, the weight of each attribute can be defined by the following:

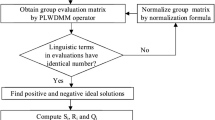

3.4 Procedure for probabilistic linguistic TODIM method

In this sub-section, we will give decision-making steps of the extended TODIM method for the MAGDM problems with the PLTSs.

-

Step 1

Standardize decision matrix

In general, there are the benefit type and cost type in the attributes. To keep all attributes compatible, we can transform the cost type into benefit one as follows.

where \( \left( {LT_{ij} (p)} \right)^{c} \) is complement of \( LT_{ij} (p) \), \( \left( {LT_{ij}^{{}} (p)} \right)^{c} = \left\{ {neg(LT_{ij}^{(k)} )\left( {p_{ij}^{(k)} } \right)|k = 1,2, \ldots ,\# LT_{ij} (p)} \right\} \). In addition, we need to normalize each attribute value according to the above steps. First, if \( \sum\nolimits_{k = 1}^{{\# LT_{ij} (p)}} {p_{ij}^{(k)} < 1} \), and then, by the formula (18), we calculate \( LT^{\prime}_{ij} (p) \). Then, if the numbers of LTs in \( LT_{ij} (p) \) are not equal, then we need do a normalization according to Definition 6.

-

Step 2

Obtain attribute weight vector \( \omega_{j} = \left( {\omega_{1} ,\omega_{2} , \ldots ,\omega_{n} } \right)^{T} \) of the \( \left\{ {C_{1} ,C_{2} , \ldots ,C_{n} } \right\} \) by Eqs. (19) and (20).

-

Step 3

Obtain the relative weight \( w_{jr} \) of the attribute \( C_{j} \) to the reference \( C_{r} \) by

where \( w_{j} \) is the weight of the attribute \( C_{j} \) and \( w_{r} = \hbox{max} \left\{ {w_{j} |j \in N} \right\}. \)

-

Step 4

Obtain the dominance of each alternative \( x_{i} \) over each alternative \( x_{t} \) by

where

-

Step 5

Obtain the overall prospect value of the alternative \( x_{i} \) by

-

Step 6

Sort the alternatives by \( \delta \left( {x_{i} } \right)\left( {i \in M} \right) \, \). The bigger the dominance degree \( \delta \left( {x_{i} } \right) \) is, the better alternative \( A_{i} \) is.

4 An example

In this part, we cited an example from Pang et al. (2016) to show the application and the steps of the proposed approach.

A company wants to develop large projects for the future 5 years, and five members are invited to evaluate them. Three initially selected projects \( x_{i} \left( {i = 1,2,3} \right) \) are evaluated by four attributes (suppose that all attributes are benefit type) and include (1) \( C_{1} \): economic and social perspective; (2) \( C_{2} \): the service satisfaction perspective; (3) \( C_{3} \): market perspective; (4) \( C_{4} \): growth perspective. Suppose that their weight vector is completely unknown. The goal is to rank the three projects.

4.1 The steps of the probabilistic linguistic TODIM method

We can solve this problem by the proposed probabilistic linguistic TODIM method, and the steps are shown as follows.

-

Step 1

The five members used the following LTS:

\( S = \left\{ {S_{0} = {\text{none, }}S_{1} = {\text{ very low, }}S_{2} = {\text{low, }}S_{3} {\text{ = medium, }}S_{4} {\text{ = high}},S_{5} {\text{ = very high, }}S_{6} = {\text{perfect}}} \right\} \) to evaluate the projects \( x_{i} \left( {i = 1,2,3} \right) \) by selecting a LT. The original information given five members is shown in Tables 1, 2, 3, 4, and 5. Note that the blanks “–“of Tables 1, 2, 3, 4, and 5 mean that the DM cannot give the evaluation information. By directly synthesizing the information from the five tables, we can obtain the group’s decision matrix (Table 6) by the PLTSs; for example, about evaluation information of \( x_{2} \) with respect to \( C_{2} \), because one of five members selects \( s_{2} \), two select \( s_{3} \), one selects \( s_{4} \), and one cannot give the evaluation information; this result can be expressed by PLTS \( \left\{ {s_{3} \left( {0.40} \right),s_{4} \left( {0.20} \right),s_{2} \left( {0.20} \right)} \right\} \), and because 0.4 + 0.2 + 0.2 < 1, we can normalize it to \( \left\{ {s_{3} \left( {0.50} \right),s_{4} \left( {0.25} \right),s_{2} \left( {0.25} \right)} \right\} \). Using formulas (18) and Definition 10, the normalized matrix is shown in Table 7.

If there is same weight for the DMs, it can be done as mentioned above. Otherwise, we can determine the weight of LT for each PLTS according to the weight vector of DMs, respectively. For example, suppose that the weight vector of DMs is \( \omega_{{}} = \left( {0.2,0.1,0.3,0.15,0.25} \right)^{T} \), about evaluation information of \( x_{2} \) with respect to \( C_{2} \), two DMs select \( s_{3} \) with weights 0.2 and 0.25, one DM selects \( s_{4} \) with weight 0.15, and last one DM selects \( s_{2} \) with weight 0.3, and then, we can get the result by PLTS \( \left\{ {s_{3} \left( {0.45} \right),s_{4} \left( {0.15} \right),s_{2} \left( {0.3} \right)} \right\}. \)

-

Step 2

Calculate the attribute weight vector \( \omega_{j} = \left( {\omega_{1} ,\omega_{2} , \ldots ,\omega_{n} } \right)^{T} \) by Eqs. (14) and (15), and we can get

$$ H_{j} = \left( { - 0.457, - 0.278, - 0.36, - 1.003} \right)^{T} $$$$ \omega_{j} = \left( {0.239,0.210,0.223,0.328} \right)^{T}. $$ -

Step 3

Obtain the relative weight \( w_{jr} \) of the attribute \( C_{j} \) to the reference attribute \( C_{r} \)

Since \( w_{4} = \hbox{max} \left\{ {w_{1} ,w_{2} ,w_{3} ,w_{4} } \right\} \), then \( C_{4} \) is the reference attribute and the reference weight is \( w_{r} = 0.328 \). Therefore, the relative weights for all the attributes \( C_{j} \left( {j = 1,2,3,4} \right) \) are \( w_{1r} = 0.729 \), \( w_{2r} = 0.640 \), \( w_{3r} = 0.680 \), and \( w_{4r} = 1 \), respectively.

-

Step 4

Obtain the dominance of each alternative \( x_{i} \) over each alternative \( x_{t} \) by Eqs. (23) and (24) (\( \theta = 1 \)).

For each attribute \( C_{j} \), we can get the dominance degree matrices according to the Eq. (24) as follows:

Then, we can get the overall dominance degree between alternatives by Eq. (23):

-

Step 5

Obtain the overall prospect value of the alternative \( x_{i} \) according to the Eq. (25), and then, we can get the results \( \delta \left( {x_{i} } \right)\left( {i = 1,2,3} \right) \) shown in Table 8.

Table 8 Overall prospect values for all alternatives -

Step 6

Sort the alternatives by their \( \delta \left( {x_{i} } \right) \). The bigger \( \delta \left( {x_{i} } \right) \) is, the better alternative \( A_{i} \) is, we get

$$ x_{1} \succ x_{3} \succ x_{2} . $$Therefore, the best choice is \( x_{1} .\)

4.2 Effect from the attenuation factor of the losses

Kahneman and Tversky (1979) suggested that the parameter \( \theta \) can get the value from 1.0 to 2.5, so we can rank the three projects according to the different value \( \theta \) by step 0.1, and the ranking results are listed in Table 9.

In Table 9, we can notice the values of \( \theta \) from 1 to 2.5 by adding 0.1 for each simulation and then record the ranking results. As can be seen from the results, the change of the \( \theta \) from \( \theta = 1 \) to \( \theta = 2. 5 \) has no effect on the ranking results. In other words, the ranking results are usual consistent with all the change of the attenuation index of losses \( \theta \).

4.3 Further discussions for the case

To verify the effective and explain the advantages of the proposed method, we can compare with the existing methods.

4.3.1 Compare with the methods based on probabilistic linguistic information proposed by Pang et al. (2016)

This example got from reference (Pang et al. 2016), so we can directly compare with it. From the ranking results of the alternatives, there is a ranking result \( x_{1} \succ x_{3} \succ x_{2} \) in (Pang et al. 2016), so we can get the same ranking result from these two methods. This will show the effectiveness of the proposed method.

The advantage of the method proposed by Pang et al. (2016) is that it can consider the preferences in qualitative setting, namely, express the attributes with several possible LTs. Naturally, the proposed method in this paper remains the same advantage. Furthermore, the proposed method can consider the DMs’ psychology and behavior, and can produce more reasonable ranking result, while the method proposed by Pang et al. (2016) has not this characteristic. Obviously, our proposed method is more reasonable and can also get a better decision result, because the proposed method can effectively consider the DMs’ psychology and behavior.

4.3.2 Compare with the TOPSIS method based on the traditional HFLTSs proposed by Pang et al. (2016)

The ranking of the proposed method by Pang et al. (2016) is \( x_{3} \succ x_{1} \succ x_{2} \). Obviously, it is different from the result produced by the propose method. The main reason is that the proposed TOPSIS method based on the traditional HFLTSs can note use the original probabilistic information in the PLTSs, so it can produce the distortion of decision results. However, the proposed method in this paper can give the comprehensive values of each alternative by fully using probabilistic information, and further give the ranking results. In addition, our proposed method can consider the DMs’ psychology and behavior. Furthermore, this method is able to capture the loss and gain under uncertainty from the view of reference point. Especially, when the DM is more sensitive to the loss, the proposed method can be regarded as a useful bounded rationality behavioral decision-making method.

5 Conclusion

In this paper, we explore an extended TODIM method to process the information of PLTSs. We first introduced the some basic knowledge of PLTSs and the TODIM method. Then, we proposed probabilistic linguistic TODIM method for MADM and describe the operational processes in detail. Finally, an example is given to describe the decision steps of developed method and to verify its effectiveness. Its prominent characteristic is that it can consider the decision maker’s psychological behavior. Therefore, it is more flexible for processing probabilistic linguistic MAGDM problems. Because the DMs are more sensitive to the loss and their bounded rationality, there is urgent need about the probabilistic linguistic TODIM method to solve the related MADM problems. In the further research, it is necessary and meaningful to extend some new methods based on the PLTSs, because the PLTSs are an effective mathematical approach of depicting preferences with different weights in qualitative setting; for example, the VIKOR method or GRA method is extended to process the PLTSs. Meanwhile, we can further study MADM problems on information aggregation operators with PLTSs or interval-valued PLTS environments.

References

Beg I, Rashid T (2013) TOPSIS for Hesitant fuzzy linguistic term sets. Int J Intell Syst 28(12):1162–1171

Chatterjee K, Samarjit K (2017) Unified Granular-number-based AHP-VIKOR multi-criteria decision framework. Granular Computing, pp 1–23

Dong YC, Chen X, Herrera F (2015) Minimizing adjusted simple terms in the consensus reaching process with hesitant linguistic assessments in group decision making. Inf Sci 297:95–117

Gomes LFAM, Lima MMPP (1992) TODIM: basics and application to multicriteria ranking of projects with environmental impacts. Found Comput Decis Sci 16(4):113–127

Herrera F, Herrera-Viedma E, Verdegay JL (1995) A sequential selection process in group decision making with a linguistic assessment approach. Inf Sci 85:223–239

Kahneman D, Tversky A (1979) Prospect theory: an analysis of decision under risk. Econometrica 47:263–291

Liu PD (2009) Multi-attribute decision-making method research based on interval vague set and TOPSIS method. Technol Econ Dev Econ 3:453–463

Liu PD, Guan ZL (2009) Evaluation research on the quality of the railway passenger service based on the linguistic variables and the improved PROMETHEE-II method. JCP 4(3):265–270

Liu WL, Liu PD (2010) Hybrid multiple attribute decision making method based on relative approach degree of grey relation projection. Afr J Bus Manag 4(17):3716–3724

Liu HB, Rodriguez RM (2014) A fuzzy envelope for hesitant fuzzy linguistic term set and its application to multicriteria decision making. Inf Sci 258:220–238

Liu PD, Zhang X (2011) Research on the supplier selection of supply chain based on entropy weight and improved ELECTRE-III method. Int J Prod Res 49(3):637–646

Lourenzutti R, Krohling RA (2013) A Study of TODIM in a Intuitionistic fuzzy and random environment. Expert Syst Appl 40(16):6459–6468

Mendel JM (2016) A comparison of three approaches for estimating (synthesizing) an interval type-2 fuzzy set model of a linguistic term for computing with words. Granul Comput 1(1):59–69

Pang Q, Wang H, Xu ZS (2016) Probabilistic linguistic term sets in multi-attribute group decision making. Inf Sci 369:128–143

Ren PJ, Xu ZS, Gou XJ (2016) Pythagorean fuzzy TODIM approach to multi-criteria decision making. Appl Soft Comput 42:246–259

Rodriguez RM, Martinez L, Herrera F (2012) Hesitant fuzzy linguistic term sets for decision making. IEEE Trans Fuzzy Syst 20:109–119

Rodriguez RM, Martinez L, Herrera F (2013) A group decision making model dealing with comparative linguistic expressions based on hesitant fuzzy linguistic term sets. Inf Sci 241:28–42

Roy B, Bertier B (1972) La metode ELECTRE II, In: Sixieme Conference Internationale de Rechearche Operationelle, Dublin

Tversky A, Kahneman D (1992) Advances in prospect theory: cumulative representation of uncertainty. Risk Uncertain 5:297–323

Wang H (2015) Extended hesitant fuzzy linguistic term sets and their aggregation in group decision making. Int J Comput Intell Syst 8(1):14–33

Wang J, Wang JQ, Zhang HY (2016) A likelihood-based TODIM approach based on multi-hesitant fuzzy linguistic information for evaluation in logistics outsourcing. Comput Ind Eng 99:287–299

Wei CP, Zhao N, Tang XJ (2014) Operators and comparisons of hesitant fuzzy linguistic term sets. IEEE Trans Fuzzy Syst 22(3):575–585

Wu ZB, Xu JP (2016) Possibility distribution-based approach for MAGDM with hesitant fuzzy linguistic information. IEEE Trans Cybern 46(3):694–705

Xu ZS (2007) A method for multiple attribute decision making with incomplete weight information in linguistic setting. Knowl Based Syst 20:719–725

Xu ZS (2012) Linguistic decision making: theory and methods. Springer, New York

Xu ZS, Wang H (2016) Managing multi-granularity linguistic information in qualitative group decision making: an overview. Granul Comput 1(1):21–35

Xu ZS, Wang H (2017) On the syntax and semantics of virtual linguistic terms for information fusion in decision making. Inf Fusion 34:43–48

Yang JB (2001) Rule and utility based evidential reasoning approach for multiattribute decision analysis under uncertainties. Eur J Oper Res 131:31–61

Yang JB, Xu DL (2002) On the evidential reasoning algorithm for multiple attribute decision analysis under uncertainty. IEEE Trans Syst Man Cybern Part A Syst Hum 32(3):289–304

Zhu B, Xu ZS (2014) Consistency measures for hesitant fuzzy linguistic preference relations. IEEE Trans Fuzzy Syst 22(1):35–45

Acknowledgements

This paper is supported by the National Natural Science Foundation of China (Nos. 71471172 and 71271124), Shandong Provincial Social Science Planning Project (Nos. 15BGLJ06, 16CGLJ31 and 16CKJJ27), the Special Funds of Taishan Scholars Project of Shandong Province (No. ts201511045), the Teaching Reform Research Project of Undergraduate Colleges and Universities in Shandong Province (2015Z057), and Key research and development program of Shandong Province (2016GNC110016).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Liu, P., You, X. Probabilistic linguistic TODIM approach for multiple attribute decision-making. Granul. Comput. 2, 333–342 (2017). https://doi.org/10.1007/s41066-017-0047-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41066-017-0047-4