Abstract

Deep learning techniques are gradually replacing traditional statistical and machine learning models as the first choice for price forecasting tasks. In this paper, we leverage probabilistic deep learning to infer the volatility index VIX. We employ the probabilistic counterparts of WaveNet, the Temporal Convolutional Network (TCN), and the Transformer. We show that TCN outperforms all models with an RMSE around 0.189. In addition, it is well known that modern neural networks provide inaccurate uncertainty estimates. To solve this problem, we use standard deviation scaling to calibrate the networks. Furthermore, we find that Multiplicative Normalizing Flows (MNF) with a Gaussian prior outperform the Reparameterization Trick and Flipout models in terms of precision and uncertainty predictions. Finally, we claim that MNF with Cauchy and LogUniform prior distributions yields well-calibrated TCN, Transformer, and WaveNet networks, the first of which best infers the VIX values for one- and five-step-ahead forecasting, and that the probabilistic Transformer model yields adequate forecasts for the COVID-19 pandemic period.

1 Introduction

Investors and regulators are concerned about financial market volatility and crashes. For this reason, the volatility index (VIX) was introduced in 1993 by the Chicago Board Options Exchange (CBOE) with the aim of assessing the expected financial market volatility in the short run, i.e., for the next 30 days, since it is calculated as an implied volatility from the options on the S&P 500 index with this time to maturity [1]. The VIX has been proven to be a good predictor of expected stock index shifts, and therefore serves as an early warning of investor sentiment and financial market turbulence [1]. Furthermore, the VIX has been shown to be an important indicator of volatility in the international financial markets [2], to predict recessions [3], to help investors evaluate and forecast volatility-related exchange traded products [4, 5], and to improve volatility prediction and option valuation [6, 7]. Due to its importance for asset managers and regulators, it would be useful to foresee the values of the index; however, the VIX is very difficult to forecast [8]. Several proposals to predict time series exist in the literature, classified as conventional and modern methods (see, e.g., [9] and the references therein). Among modern methods, deep learning techniques have been successfully applied to financial time series. Given a probability space, a time series may be defined as a discrete-time stochastic process, in other words, a collection of random variables indexed by the integers [10]. Since a time series is a sequence of repeated observations of a given set of variables over a period of time [11], where sequences are data points that can be ordered and past observations may provide relevant information about future ones, deep learning models employed for other types of sequence data are also useful for time series.
Sequence models may be classified as follows (see, e.g., [12]): (i) one-to-sequence, where a single input is employed to generate a sequence as an output (e.g., generating text from an image), (ii) sequence-to-one, where a sequence of data is used to generate a single output (e.g., sentiment classification), and (iii) sequence-to-sequence, where sequential data are the input used to produce a sequence as output (e.g., machine translation). Time series can be regarded as a special sequence-to-sequence case with trend, seasonality, autocorrelation, and noise characteristics [13]. Furthermore, financial time series are characterized by nonstationarity, nonlinearity, and high noise, which makes their prediction more challenging [9].

Though several deep learning models have been successfully applied to calculate point estimates of financial variables, all financial models are subject to modeling errors and uncertainty caused by inexact data inputs; therefore, probabilistic models are more adequate to achieve more realistic financial inferences and predictions [14], and then for optimal decision making [15]. Besides, it has been recently found that neural networks are miscalibrated [16]. Thus, our work intends to tackle the abovementioned drawbacks by contributing to the literature in the following aspects: (i) We employ three modern deep learning models to predict the VIX values in a deterministic framework. These models correspond to WaveNet, Temporal Convolutional Networks (TCN), and Transformer, (ii) we obtain the probabilistic version of the deterministic models by using three techniques: Reparameterization Trick (RT), Flipout, and Multiplicative Normalizing Flows (MNF), (iii) we calibrate the probabilistic models with a simple approach known as the standard deviation scaling, and finally (iv) we find that the probabilistic models of WaveNet-MNF and TCN-MNF with LogUniform and Cauchy priors, respectively, are well calibrated.

The rest of the paper is organized as follows. Section 2 presents an overview of the literature related to the models examined in our study. Section 3 describes the methodology of our work. Section 4 presents the VIX dataset. Section 5 presents the results for the deterministic and probabilistic models and their calibration. Finally, Sect. 6 concludes the paper.

2 Related literature

Among traditional econometric models, the GARCH family is the most widely employed technique to forecast the VIX. The GARCH models based on filtered historical simulation proposed by [17] perform better than the Normal-VIX model of [18], which is an implied VIX approach. [19] find that three GARCH models (standard GARCH, GJR-GARCH, and Heston-Nandi) yield reasonable VIX forecasts, with the Heston-Nandi specification being the best approach. [20] propose a dynamic jump intensity (DJI) GARCH model, which surpasses other GARCH-type specifications for the entire analyzed sample; however, GARCH and GJR-GARCH perform better during the 2008 financial crisis period. The works of Wu and other authors ([21, 22]) show that a two-component realized EGARCH (REGARCH-2C) and the time-varying risk aversion via the realized EGARCH-mixed-data sampling (REGARCHMIDAS-RA) outperform GARCH, GJR-GARCH, nonlinear GARCH, EGARCH, and REGARCH.

On the other hand, [23] combine volatility filtering and principal component analysis to extract volatility factors in order to predict the VIX. Other models have been utilized, such as univariate and multivariate (VAR) autoregressive models, Principal Components (PCA) model, ARIMA and ARFIMA models [24], semi-parametric approach [25], Singular Spectrum Analysis combined with Holt-Winters (SSA-HW) and Autoregressive Integrated (ARI-) SSA-HW models [26].

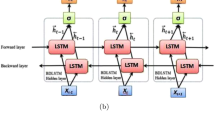

Regarding deep learning models applied to financial time series forecasting, [27] performed an exhaustive review of the literature between 2005 and 2019, whereas [28] carry it out for 2020 and 2022. In these studies, related to VIX, Psaradellis and Sermpinis [29] proposed a HAR-GASVR, which is a Heterogeneous Autoregressive Process (HAR) with Genetic Algorithm with Support Vector Regressor (GASVR) model. On the other hand, Huang et al. [9] and Yujun et al. [30] employ variational mode decomposition (VMD) methods combined with the long short-term memory (LSTM) model.

Within the analyzed neural networks in our study, WaveNet has been applied to VIX [31] and in probabilistic models [32]. In this work, we also implement TCN for financial time series for its adequate performance in time series [33], in financial time series [34], high-frequency financial data [35], and probabilistic forecasting [36]. Transformer models have been also applied in finance [37] and probabilistic developments for time series [38].

In another strand of research on machine and deep learning techniques, [39] apply random forests to forecast the VIX and show their superiority over the HAR and augmented HAR models. The latter models exhibited good performance according to [40], in particular, the pure HAR specification. [41] found that Adaptive Boosting outperforms Naïve Bayes, Logistic Regression, Decision Tree, Random Forest, Multi-Layer Perceptron, and an Ensemble model that combined all methods. [42] employ an LSTM network and random forests for forecasting the VIX. Their results show that LSTM performs slightly better than random forests. On the other hand, [43] found that including sentiment variables (Twitter data with sentiment values) does not improve the forecasting performance for the VIX.

Finally, [44] conclude that machine learning techniques (especially, Naive Bayes, Ridge Deviance, Adaptive Boosting, and Discriminant Analysis) are more effective than econometric models in forecasting the direction of the VIX.

To the best of our knowledge, there have been few attempts to apply probabilistic models specifically to financial time series [45,46,47].

3 Methodology

This section briefly reviews the deterministic and Bayesian neural networks employed. Furthermore, the calibration problem of neural networks is also succinctly reviewed, and this section ends with the description of the model construction.

3.1 Neural networks

An artificial neural network is a special type of machine learning model that connects neurons organized in layers, while a deep learning model is a kind of neural network with numerous layers and neurons [12]. In particular, the WaveNet, the Temporal Convolutional Network, and the Transformer deterministic models are employed in our work.

3.1.1 WaveNet

The WaveNet model was introduced by [48] in 2016 to generate raw audio waveforms, with the purpose of reproducing human voices and musical instruments. In short, there is a convolutional layer, which accesses the current and previous inputs. Moreover, there is a stack of dilated (aka atrous) causal one-dimensional convolutional layers, that is, layers that skip some input values when the convolution is applied, with exponentially increasing dilation rates [49]. At the end of the architecture, there are dense layers with an adequate activation function. Thus, this model learns short- and long-term patterns. In the original paper, the authors stacked 10 convolutional layers with dilation rates of 1, 2, 4, 8,..., 256, 512 [50]. Since audio is a type of sequential data, we apply WaveNet to financial time series, which are also a form of sequential data, as mentioned above.
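
To make the effect of the dilation rates concrete, the following minimal NumPy sketch implements a single dilated causal convolution and computes the receptive field of a stack of such layers. It is an illustration only, not the implementation used in this work; the function names and the kernel size of 2 are assumptions introduced for the example.

```python
import numpy as np


def causal_dilated_conv1d(x, kernel, dilation):
    """Compute y[t] = sum_k kernel[k] * x[t - k * dilation], padding with zeros before t = 0."""
    x = np.asarray(x, dtype=float)
    y = np.zeros_like(x)
    for k, w in enumerate(kernel):
        shift = k * dilation
        if shift == 0:
            y += w * x
        elif shift < len(x):
            # Output at time t only sees the input at time t - shift (never the future).
            y[shift:] += w * x[:-shift]
    return y


def receptive_field(kernel_size, dilations):
    """Number of current and past inputs that can influence one output of the stack."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Ten layers with dilation rates 1, 2, 4, ..., 512 (kernel size 2 is an assumption).
dilations = [2 ** i for i in range(10)]
rf = receptive_field(2, dilations)
```

Under these assumptions, doubling the dilation rate at every layer lets the receptive field grow exponentially with depth, which is what allows the model to capture both short- and long-term patterns.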

3.1.2 Temporal convolutional network (TCN)

The Temporal Convolutional Network (TCN) was first developed by [51] and the authors unified the traditional two-step procedure for video-based action segmentation. The first step involves a Convolutional Neural Network (CNN) that encodes spatial-temporal information, and the second step involves a Recurrent Neural Network (RNN) that captures high-level temporal linkages. Therefore, a TCN may be summarized as a hierarchical temporal encoder–decoder network and allows for long-term patterns, since it is an adaptation of WaveNet [51]. The available Keras package for TCN coded by Philippe Rémy, and based on [52], is employed in our work.

3.1.3 Transformer

The standard Transformer model was developed in [53], “Attention is all you need.” It is a non-recurrent encoder–decoder architecture that helps to transform a sequence into another one (hence the name Transformer). The encoder is generally composed of multi-head attention (MHA) and feed-forward layers with residual connections in between. Though the decoder part is like the encoder, it has a self-attention layer (see, e.g., [54] for more details about models based on attention). The attention mechanism is usually represented as Attention(Q, K, V), where Q contains the queries, K denotes the keys, and V stands for the values. The main component, MHA, allows for “attending” to long-term dependencies differently from short-term dependencies, and simultaneously. Among its important applications are the Bidirectional Encoder Representations from Transformers (BERT) and GPT-3 models in natural language processing [55].

Deterministic neural network models estimate each weight as a single value, whereas the next section deals with models that treat the weights of the network as probability distributions.
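
As an illustration of Attention(Q, K, V), the sketch below implements the scaled dot-product attention of [53] for a single head in NumPy. The function name and the array shapes are assumptions made for this example; it is not the Keras layer employed in our experiments.

```python
import numpy as np


def scaled_dot_product_attention(Q, K, V):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    Q has shape (n_queries, d_k), K has shape (n_keys, d_k), V has shape (n_keys, d_v).
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    # Subtract the row-wise maximum for numerical stability before exponentiating.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights


rng = np.random.default_rng(0)
Q = rng.standard_normal((5, 8))   # five query positions
K = rng.standard_normal((7, 8))   # seven key positions
V = rng.standard_normal((7, 3))
output, weights = scaled_dot_product_attention(Q, K, V)
```

Each row of `weights` is a probability distribution over the key positions, so every output is a weighted average of the values; multi-head attention runs several such maps in parallel on learned projections.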

3.2 Bayesian neural networks

Probabilistic models like Bayesian neural networks (BNNs) are more adequate for financial estimates since financial data are noisy and prone to measurement errors. To estimate the weights in a BNN, a prior distribution is (in general) placed over the network weights. An appropriate model should therefore quantify the uncertainties to give a better understanding of the risk involved and improve the decision-making process [14]. There are two main uncertainty sources: aleatoric uncertainty (or data uncertainty) and epistemic uncertainty (or model uncertainty). An ideal BNN would yield accurate uncertainty estimates, because high uncertainty is a sign of imprecise model predictions [56]. The total uncertainty of a new test output \(y^*\) given a new test input \(x^*\) may be expressed as (see, e.g., [57, 58], Section 2.2., and the references therein)

\[ \textrm{Var}(y^*|x^*) \approx \frac{1}{T} \sum _{t=1}^{T} \sigma _t^2 + \frac{1}{T} \sum _{t=1}^{T} (\mu _t - \bar{\mu })^2, \tag{1} \]

where \(\mu _t\) and \(\sigma _t^2\) are the predictive mean and variance of the t-th of T stochastic forward passes, \(\bar{\mu } = \frac{1}{T} \sum _{t=1}^{T} \mu _t\), the term \(\frac{1}{T} \sum _{t=1}^{T} \sigma _t^2 \), the mean of the prediction variance, represents the aleatoric uncertainty, and \(\frac{1}{T} \sum _{t=1}^{T} (\mu _t - \bar{\mu })^2\), the variance of the prediction mean, represents the epistemic uncertainty.
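
The decomposition into aleatoric and epistemic parts can be sketched in a few lines of NumPy. The function name and the array layout (T stochastic forward passes along the first axis) are assumptions made for illustration.

```python
import numpy as np


def total_uncertainty(mu, sigma):
    """Split the predictive variance into aleatoric and epistemic parts.

    mu and sigma have shape (T, n): the predictive means and standard deviations
    obtained from T stochastic forward passes for n test inputs.
    """
    aleatoric = np.mean(sigma ** 2, axis=0)                    # mean of the prediction variance
    epistemic = np.mean((mu - mu.mean(axis=0)) ** 2, axis=0)   # variance of the prediction mean
    return aleatoric + epistemic, aleatoric, epistemic


# Two forward passes for one test input: means 1 and 3, standard deviation 1 in both.
mu = np.array([[1.0], [3.0]])
sigma = np.array([[1.0], [1.0]])
total, aleatoric, epistemic = total_uncertainty(mu, sigma)
```

In this toy case both components equal 1, so the total predictive variance is 2.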

For inference in probabilistic models, either a Markov chain Monte Carlo (MCMC) approach (e.g., Metropolis–Hastings, Gibbs sampling, Hamiltonian Monte Carlo, among others) or variational inference can be considered. The latter is employed in this work and is described as follows (based on [59] and its notation, where more details and further references can be found).

The output of a BNN is the posterior distribution of the network weights. MCMC methods may be applied to this end; however, they are computationally expensive. Another approach, which is gaining interest in academia, is variational inference. Let \(p(\omega )\) denote the prior distribution over a parameter \(\omega \) (the network weights) on a parameter space \(\Omega \). The posterior distribution of the parameter is given by

\[ p(\omega |\mathcal {D}) = \frac{p(\mathcal {D}|\omega )\, p(\omega )}{p(\mathcal {D})}, \tag{2} \]

where \(p(\mathcal {D}|\omega )\) is known as the likelihood and \(p(\mathcal {D})\) as the marginal likelihood (or evidence) in the Bayesian inference framework. In detail, the dataset \(\mathcal {D}\) is denoted as \(\{(x_i,y_i)\}_{i=1}^N\), where \(x_i\) represents the inputs, \(y_i\) the outputs, and N the total sample size of the analyzed dataset.

The goal in variational inference is to find a variational distribution \(q_\theta (\omega )\) (indexed by a variational parameter \(\theta \) and from a family of distributions Q) that approximates the posterior distribution \(p(\omega |\mathcal {D})\). This is done by minimizing the Kullback–Leibler (KL) divergence between the two aforementioned distributions, which is defined as

\[ \textrm{KL}\left( q_\theta (\omega )\,\Vert \,p(\omega |\mathcal {D})\right) = \int _{\Omega } q_\theta (\omega ) \log \frac{q_\theta (\omega )}{p(\omega |\mathcal {D})}\, d\omega . \tag{3} \]

It can be shown that minimizing the KL divergence is equivalent to maximizing the evidence lower bound (ELBO), which is given by

\[ \textrm{ELBO}(\theta ) = \mathbb {E}_{q_\theta (\omega )}\left[ \log p(\mathcal {D}|\omega )\right] - \textrm{KL}\left( q_\theta (\omega )\,\Vert \,p(\omega )\right) . \tag{4} \]

The mean-field approximation with normal distributions may be proposed for the Q family of distributions [60, 61]. That is,

\[ q_\theta (\omega ) = \prod _{i}\prod _{j} \mathcal {N}\left( \omega _{ij};\, \mu _{ij}, \sigma _{ij}^{2}\right) , \tag{5} \]

where i indicates the index of the neurons from the previous layer and j the index of the neurons of the current layer. However, this poses a dimensionality problem in the parameters (mean \(\mu _{ij}\) and variance \(\sigma _{ij}^{2}\)) to be estimated. Moreover, the KL divergence may be approximated by sampling from the variational distribution, \(q_\theta (\omega )\), but it is not possible to perform backpropagation through a random variable. A solution to this problem is the Reparameterization Trick, and this is our first approach.

3.2.1 Reparameterization trick

An unbiased and efficient stochastic gradient-based variational inference is provided by the (non-local) Reparameterization Trick (RT), which was applied to variational autoencoders in [62] to make backpropagation possible, with normally distributed output parameters [63, 64]. Rather than sampling \(\omega \) directly, samples are generated from another variable \(\epsilon _{ij}\), which follows a standard normal distribution, and then \(\omega _{ij}=\mu _{ij} + \sigma _{ij}\epsilon _{ij}\) is calculated, allowing for backpropagation. More details can be found in [62, 65, 66] and the TensorFlow documentation at DenseReparameterization.
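
A minimal NumPy sketch of this sampling step is given below. The function name and the toy values of \(\mu \) and \(\sigma \) are assumptions for illustration; in practice the TensorFlow Probability layer performs this step internally.

```python
import numpy as np


def sample_weights(mu, sigma, rng):
    """Draw weights as omega = mu + sigma * eps with eps ~ N(0, 1).

    The randomness lives entirely in eps, so omega is a deterministic,
    differentiable function of mu and sigma (d omega / d mu = 1, d omega / d sigma = eps).
    """
    eps = rng.standard_normal(np.shape(mu))
    return mu + sigma * eps


rng = np.random.default_rng(0)
mu = np.array([0.5, -1.0])      # hypothetical variational means
sigma = np.array([0.1, 2.0])    # hypothetical variational standard deviations
omega = sample_weights(mu, sigma, rng)
```

Because the noise is drawn independently of the variational parameters, gradients of the loss with respect to \(\mu _{ij}\) and \(\sigma _{ij}\) can flow through the sampled weights.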

3.2.2 Flipout

Flipout also provides an unbiased and efficient stochastic gradient estimator, but reduces the variance of the gradient estimates compared to RT. It was proposed by [67] and applied to LSTM and convolutional networks. The authors impose two constraints on the weight perturbations: (i) they are independent, and (ii) they are centered at zero and have a symmetric distribution. See more details in the TensorFlow documentation at DenseFlipout.
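
The idea can be sketched for a dense layer as follows: a single sampled perturbation is shared by the mini-batch, and each example multiplies it by its own random sign pattern, which is valid precisely because the perturbation distribution is symmetric around zero. The NumPy code below is a hedged illustration under these assumptions (the function name and shapes are ours), not the TensorFlow Probability implementation.

```python
import numpy as np


def flipout_dense(X, W_mean, dW, rng):
    """Dense-layer forward pass with per-example sign-flipped weight perturbations.

    X: (n, d_in) inputs, W_mean: (d_in, d_out) mean weights,
    dW: (d_in, d_out) one shared perturbation sampled around zero.
    """
    n, d_in = X.shape
    d_out = W_mean.shape[1]
    S = rng.choice([-1.0, 1.0], size=(n, d_in))    # random signs on the input side
    R = rng.choice([-1.0, 1.0], size=(n, d_out))   # random signs on the output side
    # Equivalent to using W_mean + dW * outer(S[k], R[k]) for example k, but vectorized.
    Y = X @ W_mean + ((X * S) @ dW) * R
    return Y, S, R


rng = np.random.default_rng(0)
X = rng.standard_normal((4, 3))
W_mean = rng.standard_normal((3, 2))
dW = 0.1 * rng.standard_normal((3, 2))
Y, S, R = flipout_dense(X, W_mean, dW, rng)
```

The vectorized expression avoids building one weight matrix per example while still decorrelating the perturbations across the mini-batch, which is the source of the variance reduction.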

3.2.3 Multiplicative normalizing flows

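Normalizing flows (NF) are probabilistic models useful to fit a complex distribution by learning a transformation (or flow) [64]. The NF can be represented as

\[ p_{T}(y) = p(x) \left| \det \frac{\partial T(x)}{\partial x}\right| ^{-1}, \quad y = T(x), \tag{6} \]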

where \(p_{T}(y)\) is the probability density function (pdf) of the transformed variable y, T is the invertible mapping, and p(x) is the pdf of the random variable (rv) x. By including auxiliary rv’s \(z \sim q_\theta (z)\) and a factorial Gaussian posterior for the weights with mean parameters conditioned on scaling factors that are modeled by NF, the multiplicative normalizing flows (MNF) are obtained [68]. Therefore, the variational posterior for fully connected layers (a similar result is obtained for convolutional layers) is given by

\[ q(\omega ) = \int q(\omega |z)\, q_\theta (z)\, dz, \quad q(\omega |z) = \prod _{i}\prod _{j} \mathcal {N}\left( \omega _{ij};\, z_i \mu _{ij}, \sigma _{ij}^{2}\right) , \tag{7} \]

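and then a distribution \(q(z_K)\) is obtained

\[ \log q(z_K) = \log q(z_0) - \sum _{k=1}^{K} \log \left| \det \frac{\partial T_k}{\partial z_{k-1}}\right| \tag{8} \]

by applying the transform in Eq. 6 successively as

\[ z_K = T_K \circ \cdots \circ T_1(z_0). \tag{9} \]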

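Finally, by incorporating an auxiliary distribution \(r(z_K|\omega , \phi )\), with a new parameter \(\phi \), the KL divergence may be bounded as follows

\[ -\textrm{KL}\left( q(\omega )\,\Vert \,p(\omega )\right) \ge \mathbb {E}_{q(\omega , z_K)}\left[ -\textrm{KL}\left( q(\omega |z_K)\,\Vert \,p(\omega )\right) + \log r(z_K|\omega , \phi ) - \log q(z_K)\right] . \tag{10} \]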

For more details, see, e.g., [58], Section 2.3. The codes and references found at MNF are utilized in our work for the MNF model.
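
The change-of-variables relation that underlies normalizing flows can be checked numerically on the simplest invertible mapping, an affine transformation of a standard normal variable. The sketch below is only a toy illustration; the function names and the values of a and b are assumptions, and the flows used in MNF are more expressive.

```python
import math


def std_normal_pdf(x):
    """Density of the base variable x ~ N(0, 1)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def affine_flow_pdf(y, a, b):
    """Density of y = T(x) = a * x + b, obtained as p(x) / |dT/dx| with x = T^{-1}(y)."""
    x = (y - b) / a
    return std_normal_pdf(x) / abs(a)


def normal_pdf(y, mean, std):
    """Reference density of N(mean, std^2), used to check the flow result."""
    z = (y - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


a, b = 2.0, 1.0                     # hypothetical flow parameters
density_from_flow = affine_flow_pdf(0.3, a, b)
density_reference = normal_pdf(0.3, b, abs(a))
```

The two densities coincide, as expected, since an affine map of a standard normal variable is normal with mean b and standard deviation |a|.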

Since the seminal work of [69], more attention is being paid in academia to obtaining not only accurate forecasts but also reliable prediction confidence levels from robust neural networks. This is achieved by the so-called calibration process, which is the topic of the next section.

3.3 Calibration

A miscalibrated network implies an overestimation in errors; therefore, their predictions are not reliable, and a calibration of the networks is necessary.

For classification tasks, well-known calibration techniques include Platt calibration, histogram binning, Bayesian binning into quantiles, temperature scaling, isotonic regression, and ensemble-based calibration methods, and the usual metrics employed are the expected calibration error (ECE), maximum calibration error (MCE), negative log-likelihood (NLL), and the visual reliability diagrams (see, e.g., [69]). More recently, the literature classifies these techniques as post hoc rescaling of predictions, averaging multiple predictions, and data augmentation strategies ([70] and the references therein). For a comprehensive review of calibration methods, see [71,72,73]. We follow a method similar to the quantile recalibration method for regression tasks in machine learning [74], which can be seen as a post hoc rescaling method. The standard deviation scaling method (proposed by [75]) is adapted in our work; it simply scales the total uncertainty (see Eq. 1) of the uncalibrated network by a factor that minimizes the root mean squared calibration error (RMSCE; [76], Eq. 19).

3.4 Experiment design

The methodology of our work is as follows. In a first step, the VIX time series data are collected from Yahoo Finance, which is freely accessible. Since time series (with trend, seasonality, autocorrelation, and noise attributes) are a special case of the many-to-many sequence domain, they need a different treatment from the most common tasks in this domain. In particular, a windowed dataset is created as in [13] to provide a rolling window for forecasting purposes. We employ a window size of 20 days, i.e., a trading month. Moreover, a scaler transformation that is robust to outliers is applied to the data. This transformation subtracts the median (instead of the mean as usual) and scales the data by the interquartile range (rather than the standard deviation). Furthermore, the dataset is split in chronological order: 80% for the training set, 10% for the validation set, and 10% for the test set. Thus, we analyze 2000 observations for training, 250 for validation, and 250 for testing.
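
The preprocessing steps above can be sketched in NumPy as follows. The helper names, the synthetic series standing in for the VIX, and the choice of fitting the scaler on the training set only are assumptions made for this illustration.

```python
import numpy as np


def chronological_split(series, train_frac=0.8, val_frac=0.1):
    """Split a series into train/validation/test sets without shuffling."""
    n = len(series)
    n_train = int(round(n * train_frac))
    n_val = int(round(n * val_frac))
    return series[:n_train], series[n_train:n_train + n_val], series[n_train + n_val:]


def fit_robust_scaler(train):
    """Return the median and interquartile range (assumption: estimated on the training set)."""
    q1, q3 = np.percentile(train, [25, 75])
    return np.median(train), q3 - q1


def make_windows(series, window_size=20, horizon=1):
    """Build (inputs, targets): each input holds window_size values, the target lies horizon steps later."""
    n_samples = len(series) - window_size - horizon + 1
    X = np.lib.stride_tricks.sliding_window_view(series, window_size)[:n_samples]
    y = series[window_size + horizon - 1:]
    return X, y


rng = np.random.default_rng(0)
series = 15.0 + np.abs(np.cumsum(rng.standard_normal(2500)))   # synthetic stand-in for the VIX
train, val, test = chronological_split(series)
median, iqr = fit_robust_scaler(train)
scaled_train = (train - median) / iqr
X_train, y_train = make_windows(scaled_train, window_size=20, horizon=1)
```

With 2500 observations, this yields 2000, 250, and 250 points for training, validation, and testing, and 1980 training windows of 20 days each for one-step-ahead forecasting.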

Before executing any model, it is important to get a better knowledge of the statistical properties of the analyzed data. Main descriptive statistics (mean, median, standard deviation, first and third quartiles, minimum and maximum) are calculated for the volatility index. In addition, useful graphical tools such as histogram, boxplot, and autocorrelation function (ACF) plots are also obtained.

Then, robust neural network models like WaveNet, TCN, and Transformer are applied, their respective hyperparameters are fine-tuned, and their performance is compared with the usual metrics for regression tasks (MSE, MAE, MSLE, MAPE). Furthermore, the mean correct prediction of direction of change (MCPDC) is also calculated, for which higher values are better, since it computes the percentage of forecasts for which the change of the estimate has the same direction as the change of the true VIX value (see, e.g., [77] and [78] for related applications of the MCPDC).
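
One possible way to compute the MCPDC is sketched below. The exact definition follows [77] and [78]; here we assume, for illustration only, that both the predicted and the true change are measured relative to the previous observed value.

```python
import numpy as np


def mcpdc(y_true, y_pred):
    """Share of forecasts whose predicted direction of change matches the realized one.

    Assumption: the predicted change at time t is y_pred[t] - y_true[t - 1] and
    the realized change is y_true[t] - y_true[t - 1].
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    true_change = y_true[1:] - y_true[:-1]
    pred_change = y_pred[1:] - y_true[:-1]
    return float(np.mean(true_change * pred_change > 0))


y_true = [10.0, 11.0, 10.0, 12.0]
y_pred = [10.0, 10.5, 11.5, 11.0]
score = mcpdc(y_true, y_pred)
```

In this toy example the direction is predicted correctly for two of the three changes, so the score is 2/3.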

After tuning the hyperparameters for the WaveNet model, the following values are obtained: seven blocks, five layers per block, and 96 filters. For more specific details about the code, see geron-github and wavenet.

For the TCN model, we found one stack (nb_stack) and 64 filters to use in the convolutional layer (nb_filters). The same number of units (64) is fixed for the LSTM, which is the layer connected after the TCN architecture. The dilations (dilation_list) are set to [1, 2, 4, 8, 16], and the kernel size is equal to 3. See more details at tcn.

For the Transformer model, the Keras documentation for time series classification is adapted in our work. In the MHA part, we found 256 units for the size of each attention head for query and key (key_dim), eight attention heads (num_heads), and dropout probability of 0.10, according to the Grid Search run in Keras Tuner, while, in the feed-forward part, the number of filters (ff_dim) of eight are utilized in the one-dimensional convolutional layer. Moreover, we stack eight of these transformer encoder blocks. Finally, for the multilayer perceptron head, 264 units and a dropout probability of 0.10 are employed. For more details, have a look at the Keras documentation: MHA and transformer.

The Huber function is employed as the loss function, since it is less susceptible to saturation than MAE and less sensitive to outliers than MSE.
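
For reference, the Huber loss is quadratic for small errors and linear for large ones. The NumPy sketch below assumes a threshold of \(\delta = 1\) (the Keras default); the threshold actually used is not restated here, so this value is an assumption.

```python
import numpy as np


def huber_loss(y_true, y_pred, delta=1.0):
    """Mean Huber loss: 0.5 * e^2 if |e| <= delta, else delta * (|e| - 0.5 * delta)."""
    error = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    abs_error = np.abs(error)
    quadratic = 0.5 * error ** 2
    linear = delta * (abs_error - 0.5 * delta)
    return float(np.mean(np.where(abs_error <= delta, quadratic, linear)))


# One small error (0.5) and one large error (3.0).
loss = huber_loss([1.0, 5.0], [0.5, 2.0])
```

The small error contributes 0.125 (quadratic regime) and the large one 2.5 (linear regime), which illustrates how an outlier is penalized less severely than under MSE.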

Bayesian neural networks for each of the deterministic models are obtained by implementing three Bayesian approaches in the last layer of the deterministic model: RT, Flipout, and MNF.

Several works (see, e.g., [79,80,81]) have proposed methodologies for improving what the literature calls Bayesian last layer (BLL) methods, which place the probabilistic part only over the last layer of the network. BLL models are attractive in terms of tractability and expressiveness in the field of Bayesian neural networks. These models enable desirable features such as exact inference under certain distributional assumptions, can be theoretically seen as a Bayesian linear regression model, and are scalable to large models. However, BLL models provide overconfident uncertainty estimates for predictions outside of the data distribution (OOD). Although the aforementioned works propose ways of overcoming this issue, it remains an open problem in the field. One solution is simply to train the model as it is and then inspect the reliability diagrams to reveal calibration issues. Thus, we report BLL models that are scalable, tractable, faster to train, and also well calibrated. These properties are sometimes harder to find in fully Bayesian models, which can suffer from training convergence issues and are extremely computationally expensive. A Bayesian fully connected neural network (FCNN) could also be used, although this kind of architecture does not extract significant information from time series because of its lack of memory (unlike recurrent and convolutional layers, which do a better job).

Finally, the observed proportion of data falling inside an interval and the expected proportion of data at different percentile levels are calculated for each Bayesian neural network, and the models are calibrated following the standard deviation scaling. That is, the total uncertainty (see Eq. 1) of each model is scaled by a factor that minimizes the root mean squared calibration error (RMSCE).

The loss function for the probabilistic models is composed of the negative log-likelihood plus the KL divergence. The latter is weighted by 1 divided by the training set length.
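
A hedged sketch of such a loss is shown below. For illustration, we assume a Gaussian likelihood and a mean-field Gaussian posterior with a standard normal prior, for which the KL divergence has a closed form; the function names are ours, and other priors require a different KL term.

```python
import numpy as np


def gaussian_nll(y, mu, sigma):
    """Average negative log-likelihood of y under N(mu, sigma^2)."""
    return float(np.mean(0.5 * np.log(2.0 * np.pi * sigma ** 2)
                         + (y - mu) ** 2 / (2.0 * sigma ** 2)))


def kl_to_standard_normal(mu_w, sigma_w):
    """KL( N(mu_w, sigma_w^2) || N(0, 1) ), summed over all weights."""
    return float(np.sum(0.5 * (sigma_w ** 2 + mu_w ** 2 - 1.0 - np.log(sigma_w ** 2))))


def probabilistic_loss(y, mu, sigma, mu_w, sigma_w, n_train):
    """Negative log-likelihood plus the KL term weighted by 1 / n_train."""
    return gaussian_nll(y, mu, sigma) + kl_to_standard_normal(mu_w, sigma_w) / n_train


y = np.array([0.2, -0.1])
mu_w = np.array([0.3, -0.2])        # hypothetical posterior means of two weights
sigma_w = np.array([0.5, 1.5])      # hypothetical posterior standard deviations
loss = probabilistic_loss(y, np.zeros(2), np.ones(2), mu_w, sigma_w, n_train=2000)
```

Dividing the KL term by the training set length keeps the regularization on the same per-observation scale as the averaged likelihood term.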

As a baseline model, a Naive Forecaster is employed, in which the forecasted value at time \(t+n\) is the value observed at time t (see [13]), where \(n = 1, 5, 10\), i.e., one-, five-, and ten-step-ahead forecasts, respectively, in our study.
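
The baseline can be written in a few lines; the sketch below (with a hypothetical helper name and toy data) aligns each forecast with the value it is meant to predict.

```python
import numpy as np


def naive_forecast(series, n):
    """Return (y_pred, y_true): the forecast for time t + n is the value observed at time t."""
    series = np.asarray(series, dtype=float)
    return series[:-n], series[n:]


series = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y_pred, y_true = naive_forecast(series, n=1)
rmse = float(np.sqrt(np.mean((y_true - y_pred) ** 2)))
```

For this toy series, which increases by one unit per step, the one-step-ahead naive forecast is always off by exactly one unit.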

The software employed is Python, TensorFlow, Keras Tuner, and TensorFlow Probability, the latter for the probabilistic models. Finally, code repositories for the models and for MNF replicability are also useful in our work.

4 Data

The analyzed data consist of the volatility index VIX, downloaded from Yahoo Finance in daily frequency from August 22, 2013, to July 31, 2023. Thus, the total length of data is 2500 observations.

Figure 1 shows the daily behavior of the historical VIX price from August 22, 2013, to July 31, 2023, and its descriptive statistics are presented in Table 1.

As seen in the descriptive statistics, the maximum value of the VIX index was 82.7 in March 2020 as a result of the COVID-19 pandemic. Similar values were recorded in the subprime crisis. The minimum value was 9.14, with a mean of 18.11 and a median of 16.05, showing a positive skewness, as shown in Fig. 34. Values between 15 and 25 are considered moderate, whereas VIX values between 25 and 30 are considered high (see https://www.cboe.com/tradable_products/vix/), and this is also confirmed by the boxplot (see Fig. 35). That is why a scaler transformation that is robust to outliers is applied to the analyzed data before training the network models. Outliers are observed above the value of 40. VIX values greater than 30 are considered extremely high, indicating high turbulence in the markets. Finally, the autocorrelation function (ACF) and partial autocorrelation function (PACF) are depicted for the VIX index. See Figs. 36 and 37, respectively. From the serial correlation plot of the VIX time series, a long-term dependence pattern can be observed. By observing both the ACF and PACF, an AR(2) model could be identified. This is important for traditional time series modeling and for the use of structural time series (STS) modeling in TensorFlow Probability, but this will be the focus of future research.

5 Results

This section presents the results for the deterministic and probabilistic models as well as their calibration, mainly focused on one-step-ahead prediction. Then, multi-step-ahead forecasts (for five and 10 days in the future) are also obtained. The comparison of these results is summarized by performing the Diebold–Mariano test. Finally, as a robustness check of the model performance, the COVID-19 pandemic crisis is also examined.

We also applied machine learning techniques to forecast the VIX price, and the results are found in Table 2. Interestingly, the Naive Forecaster approach, which basically assumes that future values will behave similarly to past values, is the best model, followed by the Exponential Smoothing (ETS) algorithm. In particular, we follow the PyCaret tutorial for time series found at Pycaret-Github, and more details are found at Pycaret-Doc.

5.1 Deterministic models

Table 3 exhibits the metrics for the training, validation, and test sets for the three models: WaveNet, TCN, and Transformer. The Transformer model is the network with the minimum loss (Huber Loss) and the highest MCPDC (0.472) in the test set, while the TCN presents the lowest values for MAE, RMSE, and MSLE, and the WaveNet exhibits the minimum MAPE. The results for the Naive Forecaster (NF) model are found in Table 4. In general, the NF is outperformed by the network models in terms of the error metrics, except for the MSLE and the MCPDC, the latter of which is only surpassed by the Transformer model. Furthermore, Figs. 2, 3 and 4 present the predictions and actual data for the WaveNet, TCN, and Transformer models, respectively. In a visual analysis, the TCN seems to be the model that best fits the test dataset. For lower VIX values, i.e., in the last part of the plot, the TCN does not predict the actual data adequately, but the WaveNet and Transformer do a good job. However, the WaveNet behaves better than the Transformer for higher values of the VIX, that is, at the very beginning of the graph.

5.2 Probabilistic models

The Bayesian techniques of RT, Flipout, and MNF reviewed in Sect. 3.2 are employed in the last layer of the previous deterministic networks to obtain their respective probabilistic models.

Table 5 presents the metrics for the probabilistic models; for the sake of comparison, only the test dataset results are considered in our analysis, which is presented for each neural network.

- For the WaveNet case, the MNF is the model with the lowest value of loss and RMSE, and similar MAE and MSLE values are obtained for MNF and Flipout. The latter (Flipout model) performs the best for the MAPE metric. RT is outperformed in most of the metrics by the other two models (Flipout and MNF).

- MNF has the minimum MAE, RMSE, and MSLE values for the TCN network, whereas RT performs best in the loss and MAPE metrics, and Flipout performs the worst in most of the metrics.

- The results of the metrics for the Transformer network show that MNF has the lowest MAPE value and the highest MCPDC value (0.502), the RT model presents the minimum values for the MAE, RMSE, and MSLE metrics, and the Flipout model for the loss metric.

As a consequence, despite the mixed results across the different networks, the MNF model performs well in general.

An important result of [16] is that neural networks are miscalibrated and this affects the forecasting performance of a model. The next section deals with this issue, the calibration problem.

5.3 Calibration

This work implements three robust neural networks (WaveNet, TCN, and Transformer) mostly employed in the literature for many-to-many sequence tasks. After having the hyperparameters fine-tuned, these networks have been trained for the VIX forecasting purposes with good results in a deterministic manner. As mentioned in the Introduction Section, probabilistic models are more appropriate to achieve more realistic financial inferences and predictions. To this aim, we implement three models: RT, Flipout, and MNF in the last layer of the deterministic models and calculate their respective (total) uncertainties (see Eq. 1). However, these models are miscalibrated and affect not only the point estimates but also the uncertainty around these point predictions.

To analyze (mis)calibration, the observed proportion of data falling inside an interval and the expected proportion of data of a standard normal distribution at different percentile levels (i.e., 10%, 20%, 30%, 40%, 50%, 60%, 70%, 80%, and 90%) are calculated. Then, we plot the observed proportion of data vs the expected proportion of data (as per in [76], Fig. 12-b), before and after the calibration. This graph resembles a modified reliability diagram for classification tasks. A miscalibration is evidenced in the aforementioned plot, if the observed proportion of data lie far from the diagonal of the graph. On the other hand, a perfect calibration is noticed when all the observed proportion of data lies in the diagonal.

If a network model is miscalibrated, a post hoc rescaling method is applied to calibrate it. In other words, the total uncertainty (see Eq. 1) of the miscalibrated model is multiplied by a factor c that minimizes the RMSCE ([76], Eq. 19), given by

\[\text{RMSCE} = \sqrt{\mathbb{E}_{p \in [0,1]}\left[\left(p - \hat{p}(p)\right)^{2}\right]},\]

where p is the expected proportion of data and \(\hat{p}(p)\) is the observed proportion of data that lies inside the calculated interval given by the total uncertainty.
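The rescaling step can be sketched as a one-dimensional search over c. The sketch below assumes Gaussian central intervals, approximates the expectation over p by the mean over the nine levels 10% to 90%, and uses a plain grid search. The names `rmsce` and `best_scaling_factor`, the grid, and the synthetic data are illustrative assumptions, not the procedure used for the reported tables.

```python
import math
import random
from statistics import NormalDist

LEVELS = [k / 10 for k in range(1, 10)]  # expected proportions p
Z = [NormalDist().inv_cdf(0.5 + p / 2.0) for p in LEVELS]


def rmsce(y_true, mean, std, c=1.0):
    """Root mean squared calibration error after scaling std by c."""
    n = len(y_true)
    total = 0.0
    for p, z in zip(LEVELS, Z):
        # Observed proportion inside the interval built from the scaled uncertainty.
        p_hat = sum(abs(y - m) <= z * c * s for y, m, s in zip(y_true, mean, std)) / n
        total += (p - p_hat) ** 2
    return math.sqrt(total / len(LEVELS))


def best_scaling_factor(y_true, mean, std, grid):
    """Grid value of c with the smallest RMSCE."""
    return min(grid, key=lambda c: rmsce(y_true, mean, std, c))


# Synthetic example (assumption): the reported std is half the true spread,
# so the factor that restores calibration should be close to 2.
random.seed(0)
y_true = [random.gauss(0.0, 1.0) for _ in range(2000)]
mean = [0.0] * len(y_true)
std = [0.5] * len(y_true)

grid = [0.5 + 0.05 * k for k in range(61)]  # candidate factors from 0.5 to 3.5
c_star = best_scaling_factor(y_true, mean, std, grid)
print(c_star, rmsce(y_true, mean, std, 1.0), rmsce(y_true, mean, std, c_star))
```

A factor far from 1, as in this toy case, signals that the uncalibrated uncertainties were poor; a factor near 1 means little rescaling was needed.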

It is worth mentioning that the closer the scaling factor is to 1, the better the model, with 1 corresponding to perfect calibration. The initial calibration results are shown in Table 6. MNF (with standard normal prior) presents the highest scaling factor values and the minimum RMSCE for the three networks. These results are confirmed by the calibration diagrams and prediction plots. For the WaveNet, the calibration diagram and fit are depicted in Figs. 5 and 6 for the RT model, Figs. 7 and 8 for the Flipout model, and Figs. 9 and 10 for the MNF model. For the TCN, they are shown in Figs. 11 and 12 (RT), Figs. 13 and 14 (Flipout), and Figs. 15 and 16 (MNF). Finally, for the Transformer, they are presented in Figs. 17 and 18 (RT), Figs. 19 and 20 (Flipout), and Figs. 21 and 22 (MNF).

The most common prior distribution is the normal pdf, but a better posterior approximation may be obtained by varying the prior. In our study, we also tested the Cauchy and LogUniform pdfs (see Table 7 and the resources at waffles-and-posteriors). Changing to these prior distributions in the MNF setup yields better results. For the WaveNet, a scaling factor of 0.9859 is achieved with a LogUniform prior and three hidden layers of 50 units each (see Fig. 23 for the calibration diagram and Fig. 24 for the prediction after calibration), whereas for the TCN, with a Cauchy prior and two hidden layers of 50 units each, the scaling factor is 0.9800 (see Figs. 25 and 26 for the calibration diagram and the prediction after calibration, respectively). Finally, Fig. 27 exhibits the calibration diagram for the Transformer and MNF model (with LogUniform prior), and Fig. 28 shows its prediction after calibration. A scaling factor of 0.9418 is found with three layers of 5, 100, and 5 units for the latter model. As observed in Fig. 27, the Transformer is less well calibrated than the other two networks, and this issue will be the focus of future research.

The next section presents the results for five- and ten-step-ahead prediction.

5.4 Multi-step-ahead results

Results for five-step-ahead forecasting are found in Table 8, whereas results for ten-step-ahead forecasting are found in Table 9.

As observed in these tables, among the probabilistic models the WaveNet architecture (see Fig. 29) presents the best error metrics and MCPDC for five-step-ahead forecasting, whereas the TCN model (see Fig. 30) exhibits the lowest error metric values and the highest MCPDC for ten-step-ahead forecasting.

5.5 Diebold–Mariano test

To compare the point estimates obtained by the different models, a Diebold–Mariano test is performed, and the results are exhibited in Table 10. The table compares three models: the Naive Forecaster (NF), the deterministic neural network, and its probabilistic version (MNF model). The results for the other models are available upon request. In general, for one-step-ahead forecasting, the deterministic models work better; however, the probabilistic WaveNet network performs better than its deterministic version and the NF model. Finally, for five- and ten-step-ahead forecasting, the probabilistic models outperform in more cases; in particular, the probabilistic TCN model is better than its deterministic counterpart and the NF model for five-step-ahead forecasting.
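For reference, a Diebold–Mariano comparison of two sets of forecast errors can be sketched as follows. The squared-error loss, the rectangular long-run variance with h - 1 autocovariances, the normal approximation for the p-value, and the sign convention are assumptions made for illustration; the text does not state which variant underlies Table 10. The errors are synthetic.

```python
import math
import random


def diebold_mariano(errors_a, errors_b, h=1):
    """DM statistic and two-sided p-value under squared-error loss.

    A negative statistic means forecast A has the lower average loss.
    """
    d = [ea ** 2 - eb ** 2 for ea, eb in zip(errors_a, errors_b)]  # loss differential
    n = len(d)
    d_bar = sum(d) / n

    def autocov(lag):
        return sum((d[t] - d_bar) * (d[t - lag] - d_bar) for t in range(lag, n)) / n

    # Long-run variance of the loss differential for an h-step-ahead forecast.
    # Note: with h > 1 this estimate can turn out non-positive in small samples.
    long_run_var = autocov(0) + 2.0 * sum(autocov(k) for k in range(1, h))
    stat = d_bar / math.sqrt(long_run_var / n)
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(stat) / math.sqrt(2.0))
    return stat, p_value


# Synthetic example (assumption): forecast A has smaller errors than forecast B.
random.seed(1)
errors_a = [random.gauss(0.0, 1.0) for _ in range(500)]
errors_b = [random.gauss(0.0, 2.0) for _ in range(500)]

stat, p_value = diebold_mariano(errors_a, errors_b, h=1)
print(f"DM statistic = {stat:.2f}, p-value = {p_value:.4f}")
```

In practice, a library implementation with a small-sample correction may be preferable to this hand-written version.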

5.6 COVID-19 pandemic results

To gain deeper insight into the performance of the probabilistic models analyzed in the previous sections, we include the COVID-19 pandemic period in the test dataset. A pandemic may be classified as a systemic risk, and the financial literature has documented the impact of the COVID-19 pandemic on financial market volatility (see, e.g., [82, 83]); the VIX reached its highest closing value of 82.69 on March 16, 2020. To this end, the whole sample ranges from November 30, 2007, to July 31, 2020, for a total of 3,189 observations, so that the neural network models can learn from another turbulent event in the financial markets, the so-called subprime crisis of 2008. The origins of the two crises were very different, and volatility decreased more rapidly after its peak in the COVID-19 pandemic than in the subprime crisis [84], which makes the prediction of VIX values more challenging. The dataset is split 80/15/5 into training (2,551 observations), validation (478 observations), and test (160 observations) sets, respectively. The results are shown in Fig. 31 for the WaveNet, Fig. 32 for the TCN, and Fig. 33 for the Transformer probabilistic models.

As observed in the graphs, the Transformer exhibits adequate point estimation and calibration. Notably, the models in general show a wider 95% interval around the VIX peak, as a result of the high market uncertainty caused by the WHO statement and concerns about a potential solution to the pandemic. This scenario reduces the predictive power of the models for the VIX values in that region.
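The reported set sizes are consistent with a split that truncates the training and validation sizes and assigns the remainder to the test set, as the short check below shows. The truncation rule and the chronological ordering of the three blocks are assumptions; the function name `chronological_split` is illustrative.

```python
def chronological_split(n_obs, train_frac=0.80, val_frac=0.15):
    """Sizes of consecutive train/validation/test blocks for a time series."""
    n_train = int(n_obs * train_frac)   # truncate toward zero
    n_val = int(n_obs * val_frac)
    n_test = n_obs - n_train - n_val    # remainder goes to the test set
    return n_train, n_val, n_test


sizes = chronological_split(3189)
print(sizes)  # (2551, 478, 160)

# Index ranges of each block, keeping the temporal order intact.
n_train, n_val, n_test = sizes
train_idx = range(0, n_train)
val_idx = range(n_train, n_train + n_val)
test_idx = range(n_train + n_val, 3189)
```

Keeping the blocks in temporal order avoids leaking future observations into the training set.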

5.7 Main findings

Results confirmed that more robust neural networks provide good one-step-ahead forecasting performance for the volatility index VIX in both deterministic and probabilistic setups (as for other many-to-many sequence data), but these networks are miscalibrated [16].

MNF with standard normal prior provides better results than RT and Flipout for the calibration procedure in our case study.

By varying the priors with heavier-tailed distributions in the MNF model, good calibration is obtained for the different networks. This is in line with the outstanding work of Fortuin and his team on BNN priors; see, for instance, [85] and [86].

In the multi-step-ahead results, forecasting is more challenging, and the probabilistic models show high uncertainty in regions where the model is not capable of predicting the point estimate accurately.

We found that the probabilistic Transformer model (with MNF and LogUniform prior) yields adequate point estimation and calibration for highly volatile periods, such as the effects of the COVID-19 pandemic on the financial markets.

More applied work is needed to compare the performance of uninformative priors (like the standard normal) with heavy-tailed prior distributions, and our work sheds some light on the study of different priors for BNNs in the financial time series field.

6 Conclusions and future research

We implemented Bayesian neural networks (BNNs) to forecast the volatility index VIX in a probabilistic manner, thereby estimating the weights of three robust neural networks used for sequence data: WaveNet, TCN, and Transformer. Three different approaches were employed to this end: Reparameterization Trick (RT), Flipout, and Multiplicative Normalizing Flows (MNF). Since modern networks are miscalibrated, we calibrated the models with a simple approach, the standard deviation scaling method. Our results show that MNF presents the best calibration and that further improvement is obtained by varying the prior distributions, which is a promising direction for future research in financial time series forecasting with BNNs.

Other methodologies related to the models analyzed in our study can be tested, such as the Knowledge-Driven Temporal Convolutional Network (KDTCN) proposed by [87], who include background knowledge, news, and asset price information in deep prediction models to address asset trend forecasting and the explainability of abrupt changes. Another model is the Seq-U-Net, which [88] claim is more efficient than other convolutional setups (including TCN and WaveNet). In the same vein, Retentive Networks (RetNet), which reduce the inference cost and memory complexity issues of transformer models [89], may also be tested. Furthermore, ensemble deep learning methods [90] will also be studied in the BNN framework. Future work may also be devoted to employing VIX forecasts for trading rules (see, e.g., [78]) or hedging strategies. Finally, the probabilistic view may be applied to calculate value-at-risk (VaR), which is considered a high quantile of a financial loss distribution, and to contrast the results with the approach of [91].

References

Whaley, R.E.: Understanding the vix. J. Portfolio Manag. 35(3), 98–105 (2009)

Wang, H.: Vix and volatility forecasting: a new insight. Phys. A Stat. Mech Appl. 533, 121951 (2019)

Hansen, A.L.: Predicting recessions using vix-yield curve cycles. Int. J. Forecast. 40(1), 409–422 (2024)

Huang, H.H., Lin, Y.R.: Forecasting vix with stock and oil prices. Finance Uver Czech J. Econ. Finance 73(1), 24–55 (2023)

Xu, H., Xu, C., Sun, Y., Peng, J., Tian, W., He, Y.: Exchange rate forecasting based on integration of gated recurrent unit (GRU) and CBOE volatility index (VIX). Comput. Econ., 1–29 (2023)

Pan, Z., Wang, Y., Liu, L., Wang, Q.: Improving volatility prediction and option valuation using VIX information: a volatility spillover Garch model. J. Future Mark. 39(6), 744–776 (2019)

Martin, V.L., Tang, C., Yao, W.: Forecasting the volatility of asset returns: the informational gains from option prices. Int. J. Forecast. 37(2), 862–880 (2021)

Degiannakis, S.: Forecasting vix. J. Money Invest. Bank. 4, 5–19 (2008)

Huang, Y., Gao, Y., Gan, Y., Ye, M.: A new financial data forecasting model using genetic algorithm and long short-term memory network. Neurocomputing 425, 207–218 (2021)

McNeil, A.J., Frey, R., Embrechts, P.: Quantitative Risk Management: Concepts, Techniques and Tools-Revised Edition. Princeton University Press, New Jersey (2015)

Adhikari, R., Agrawal, R.K.: An introductory study on time series modeling and forecasting. Preprint at arXiv:1302.6613 (2013)

Kapoor, A., Gulli, A., Pal, S., Chollet, F.: Deep Learning with TensorFlow and Keras: Build and Deploy Supervised, Unsupervised, Deep, and Reinforcement Learning Models. Packt Publishing Ltd, Birmingham (2022)

Moroney, L.: AI and Machine Learning for Coders. O’Reilly Media, Sebastopol (2020)

Kanungo, D.K.: Probabilistic Machine Learning for Finance and Investing. O’Reilly Media, Sebastopol (2023)

Dheur, V., Taieb, S.B.: A large-scale study of probabilistic calibration in neural network regression. Preprint at arXiv:2306.02738 (2023)

Guo, C., Pleiss, G., Sun, Y., Weinberger, K.Q.: On calibration of modern neural networks. In: International Conference on Machine Learning, pp. 1321–1330. PMLR (2017)

Jiang, Y., Lazar, E.: Forecasting vix using filtered historical simulation. J. Financ. Economet. 20(4), 655–680 (2022)

Hao, J., Zhang, J.E.: Garch option pricing models, the cboe vix, and variance risk premium. J. Financ. Economet. 11, 556–580 (2013)

Liu, Q., Guo, S., Qiao, G.: Vix forecasting and variance risk premium: a new garch approach. N. Am. J. Econ. Financ. 34, 314–322 (2015)

Qiao, G., Yang, J., Li, W.: Vix forecasting based on Garch-type model with observable dynamic jumps: a new perspective. N. Am. J. Econ. Financ. 53, 101186 (2020)

Wu, X., Zhao, A., Liu, L.: Forecasting vix using two-component realized egarch model. N. Am. J. Econ. Financ. 67, 101934 (2023)

Wu, X., He, Q., Xie, H.: Forecasting vix with time-varying risk aversion. Int. Rev. Econ. Financ. 88, 458–475 (2023)

Andreou, E., Ghysels, E.: Predicting the vix and the volatility risk premium: the role of short-run funding spreads volatility factors. J. Econometr. 220(2), 366–398 (2021)

Konstantinidi, E., Skiadopoulos, G., Tzagkaraki, E.: Can the evolution of implied volatility be forecasted? Evidence from European and us implied volatility indices. J. Bank. Financ. 32(11), 2401–2411 (2008)

Clements, A., Fuller, J.: Forecasting increases in the vix: A time-varying long volatility hedge for equities. NCER Working Paper Series 88, National Centre for Econometric Research (2012)

Degiannakis, S., Filis, G., Hassani, H.: Forecasting global stock market implied volatility indices. J. Empir. Financ. 46, 111–129 (2018)

Sezer, O.B., Gudelek, M.U., Ozbayoglu, A.M.: Financial time series forecasting with deep learning: a systematic literature review: 2005–2019. Appl. Soft Comput. 90, 106181 (2020)

Zhang, C., Sjarif, N.N.A., Ibrahim, R.B.: Deep learning techniques for financial time series forecasting: A review of recent advancements: 2020-2022. Preprint at arXiv:2305.04811 (2023)

Psaradellis, I., Sermpinis, G.: Modelling and trading the us implied volatility indices. evidence from the vix, vxn and vxd indices. Int. J. Forecast. 32(4), 1268–1283 (2016)

Yujun, Y., Yimei, Y., Wang, Z.: Research on a hybrid prediction model for stock price based on long short-term memory and variational mode decomposition. Soft. Comput. 25, 13513–13531 (2021)

Borovykh, A., Bohte, S., Oosterlee, C.W.: Dilated convolutional neural networks for time series forecasting. J. Comput. Financ. 22(4), 73–101 (2019)

Sun, X., Chen, J.: High-dimensional probabilistic time series prediction via wavenet+ t. In: 2022 International Conference on Computational Science and Computational Intelligence (CSCI), pp. 13–18. IEEE (2022)

Yan, J., Mu, L., Wang, L., Ranjan, R., Zomaya, A.Y.: Temporal convolutional networks for the advance prediction of ENSO. Sci. Rep. 10(1), 8055 (2020)

Zhao, M.: Financial time series forecast of temporal convolutional network based on feature extraction by variational mode decomposition. In: Liang, Q., Wang, W., Mu, J., Liu, X., Na, Z. (eds.) Artificial Intelligence in China. AIC 2022, vol. 871, pp. 365–374. Springer, Singapore (2022)

Dai, W., An, Y., Long, W.: Price change prediction of ultra high frequency financial data based on temporal convolutional network. Proc. Comput. Sci. 199, 1177–1183 (2022)

Chen, Y., Kang, Y., Chen, Y., Wang, Z.: Probabilistic forecasting with temporal convolutional neural network. Neurocomputing 399, 491–501 (2020)

López-Ruiz, S., Hernández-Castellanos, C.I., Rodríguez-Vázquez, K.: Multi-objective framework for quantile forecasting in financial time series using transformers. In: Proceedings of the Genetic and Evolutionary Computation Conference, 395–403 (2022)

Tang, B., Matteson, D.S.: Probabilistic transformer for time series analysis. Adv. Neural. Inf. Process. Syst. 34, 23592–23608 (2021)

Kim, B.Y., Han, H.: Multi-step-ahead forecasting of the cboe volatility index in a data-rich environment: application of random forest with boruta algorithm. Korean Econ. Rev. 38, 541–569 (2022)

Fernandes, M., Medeiros, M.C., Scharth, M.: Modeling and predicting the cboe market volatility index. J. Bank. Financ. 40, 1–10 (2014)

Bai, Y., Cai, C.X.: Predicting vix with adaptive machine learning. Preprint available at SSRN 3866415 (2023)

Osterrieder, J., Kucharczyk, D., Rudolf, S., Wittwer, D.: Neural networks and arbitrage in the vix: a deep learning approach for the vix. Digit. Financ. 2(1), 97–115 (2020)

Oliveira, N., Cortez, P., Areal, N.: The impact of microblogging data for stock market prediction: using twitter to predict returns, volatility, trading volume and survey sentiment indices. Expert Syst. Appl. 73, 125–144 (2017)

Vrontos, S.D., Galakis, J., Vrontos, I.D.: Implied volatility directional forecasting: a machine learning approach. Quantit. Financ. 21(10), 1687–1706 (2021)

Barunik, J., Hanus, L.: Learning probability distributions in macroeconomics and finance. Preprint at arXiv:2204.06848 (2022)

Benton, G., Gruver, N., Maddox, W., Wilson, A.G.: Deep probabilistic time series forecasting over long horizons. Under review as a conference paper at ICLR 2023 (2023)

Du, H., Du, S., Li, W.: Probabilistic time series forecasting with deep non-linear state space models. CAAI Trans. Intell. Technol. 8(1), 3–13 (2023)

Oord, A.V.D., Dieleman, S., Zen, H., Simonyan, K., Vinyals, O., Graves, A., Kalchbrenner, N., Senior, A., Kavukcuoglu, K.: Wavenet: A generative model for raw audio. Preprint at arXiv:1609.03499 (2016)

Gulli, A., Pal, S.: Deep Learning with Keras. Packt Publishing Ltd, Birmingham (2017)

Géron, A.: Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow. O’Reilly Media, Sebastopol (2022)

Lea, C., Vidal, R., Reiter, A., Hager, G.D.: Temporal convolutional networks: A unified approach to action segmentation. In: Computer Vision–ECCV 2016 Workshops: Amsterdam, The Netherlands, October 8-10 and 15-16, 2016, Proceedings, Part III 14, pp. 47–54. Springer International Publishing (2016)

Bai, S., Kolter, J.Z., Koltun, V.: An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. Preprint at arXiv:1803.01271 (2018)

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, L., Polosukhin, I.: Attention is all you need. In: Advances in neural information processing systems, 30 (2017)

Luong, M.T., Pham, H., Manning, C.D.: Effective approaches to attention-based neural machine translation. Preprint at arXiv:1508.04025 (2015)

Manu, J.: Modern Time Series Forecasting with Python: Explore Industry-Ready Time Series Forecasting Using Modern Machine Learning and Deep Learning. Packt Publishing Ltd, Birmingham (2022)

Benatan, M., Gietema, J., Schneider, M.: Enhancing Deep Learning with Bayesian Inference. Packt Publishing Ltd, Birmingham (2023)

Hortúa, H.J., Volpi, R., Marinelli, D., Malagò, L.: Parameter estimation for the cosmic microwave background with Bayesian neural networks. Phys. Rev. D (2020). https://doi.org/10.1103/physrevd.102.103509

Hortúa, H.J., García, L.Á., Castañeda-Colorado, L.: Constraining cosmological parameters from N-body simulations with variational Bayesian neural networks. Front. Astron. Space Sci. (2023). https://doi.org/10.3389/fspas.2023.1139120

Kwon, Y., Won, J.-H., Kim, B.J., Paik, M.C.: Uncertainty quantification using Bayesian neural networks in classification: application to biomedical image segmentation. Comput. Stat. Data Anal. 142, 106816 (2020)

Graves, A.: Practical variational inference for neural networks. Adv. Neural. Inf. Process. Syst. 24, 2348–2356 (2011)

Blundell, C., Cornebise, J., Kavukcuoglu, K., Wierstra, D.: Weight uncertainty in neural networks. In: International conference on machine learning, 1613–1622 (2015)

Kingma, D.P., Welling, M.: Auto-encoding variational bayes. Preprint at arXiv:1312.6114 (2013)

Kingma, D.P., Salimans, T., Welling, M.: Variational dropout and the local reparameterization trick. Adv. Neural. Inf. Process. Syst. 28, 2575–2583 (2015)

Dürr, O., Sick, B., Murina, E.: Probabilistic Deep Learning: With Python, Keras and Tensorflow Probability. Manning Publications, New York (2020)

Bengio, Y., Leonard, N., Courville, A.: Estimating or propagating gradients through stochastic neurons for conditional computation. Preprint at arXiv:1308.3432 (2013)

Rezende, D.J., Mohamed, S., Wierstra, D.: Stochastic backpropagation and approximate inference in deep generative models. In: International Conference on Machine Learning (2014)

Wen, Y., Vicol, P., Ba, J., Tran, D., Grosse, R.: Flipout: Efficient pseudo-independent weight perturbations on mini-batches. Preprint at arXiv:1803.04386 (2018)

Louizos, C., Welling, M.: Multiplicative normalizing flows for variational bayesian neural networks. Preprint at arXiv:1703.01961 (2017)

Guo, C., Pleiss, G., Sun, Y., Weinberger, K.Q.: On calibration of modern neural networks. In: International conference on machine learning, 1321–1330 (2017)

Minderer, M.: Revisiting the calibration of modern neural networks. Adv. Neural. Inf. Process. Syst. 34, 15682–15694 (2021)

Dheur, V., Taieb, S.B.: A large-scale study of probabilistic calibration in neural network regression. Preprint at arXiv:2306.02738 (2023)

Vasilev, R., D’yakonov, A.: Calibration of neural networks. Preprint at arXiv:2303.10761 (2023)

Wang, C.: Calibration in deep learning: A survey of the state-of-the-art. Preprint at arXiv:2308.01222 (2023)

Kuleshov, V., Fenner, N., Ermon, S.: Accurate uncertainties for deep learning using calibrated regression. In: Dy, J., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 80, pp. 2796–2804. PMLR (2018)

Levi, D., Gispan, L., Giladi, N., Fetaya, E.: Evaluating and calibrating uncertainty prediction in regression tasks. Sensors 22(15), 5540 (2022)

Psaros, A.F., Meng, X., Zou, Z., Guo, L., Karniadakis, G.E.: Uncertainty quantification in scientific machine learning: methods, metrics, and comparisons. J. Comput. Phys. 477, 111902 (2023)

Goncalves, S., Guidolin, M.: Predictable dynamics in the S&P 500 index options implied volatility surface. J. Bus. 79(3), 1591–1635 (2006)

Bernales, A., Guidolin, M.: Can we forecast the implied volatility surface dynamics of equity options? Predictability and economic value tests. J. Bank. Financ. 46, 326–342 (2014)

Weber, N., Starc, J., Mittal, A., Blanco, R., Màrquez, L.: Optimizing over a bayesian last layer. In: NeurIPS workshop on Bayesian Deep Learning (2018)

Sharma, M., Farquhar, S., Nalisnick, E., Rainforth, T.: Do bayesian neural networks need to be fully stochastic? In: International Conference on Artificial Intelligence and Statistics, PMLR 206, 7694–7722 (2023)

Harrison, J., Willes, J., Snoek, J.: Variational bayesian last layers. Preprint at arXiv:2404.11599 (2024)

Chaudhary, R., Bakhshi, P., Gupta, H.: Volatility in international stock markets: an empirical study during covid-19. J. Risk Financ. Manag. 13(9), 208 (2020)

Rahman, M.M., Guotai, C., Das Gupta, A., Abedin, M.Z.: Impact of early covid-19 pandemic on the us and European stock markets and volatility forecasting. Econ. Res. Ekonomska Istraživanja 35(1), 3591–3608 (2022)

Kilburn, F.: Vol decay and correlation flips: Cfm’s take on the covid crisis. Available at bit.ly/4484ZV5 (2020)

Fortuin, V., Garriga-Alonso, A., Ober, S.W., Wenzel, F., Rätsch, G., Turner, R.E., van der Wilk, M., Aitchison, L.: Bayesian neural network priors revisited. Preprint at arXiv:2102.06571 (2021)

Fortuin, V.: Priors in Bayesian deep learning: a review. Int. Stat. Rev. 90(3), 563–591 (2022)

Deng, S., Zhang, N., Zhang, W., Chen, J., Pan, J.Z., Chen, H.: Knowledge-driven stock trend prediction and explanation via temporal convolutional network. In: Companion Proceedings of The 2019 World Wide Web Conference, 678–685 (2019)

Stoller, D., Tian, M., Ewert, S., Dixon, S.: Seq-u-net: A one-dimensional causal u-net for efficient sequence modelling. Preprint at arXiv:1911.06393 (2019)

Sun, Y., Dong, L., Huang, S., Ma, S., Xia, Y., Xue, J., Wang, J., Wei, F.: Retentive network: A successor to transformer for large language models. Preprint at arXiv:2307.08621 (2023)

Li, Y., Pan, Y.: A novel ensemble deep learning model for stock prediction based on stock prices and news. Int. J. Data Sci. Anal. 13, 139–149 (2022)

Mohebali, B., Tahmassebi, A., Meyer-Baese, A., Gandomi, A.H.: Probabilistic neural networks: A brief overview of theory, implementation, and application. In: Samui, P., Bui, D.T., Chakraborty, S., Deo, R.C. (eds.) Handbook of Probabilistic Models, pp. 347–367. Elsevier, Oxford (2020)

Acknowledgements

The authors would like to thank two anonymous reviewers, whose comments helped to improve the initial version of the manuscript.

Funding

Open Access funding provided by Colombia Consortium

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A Additional Graphs for VIX

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hortúa, H.J., Mora-Valencia, A. Forecasting VIX using Bayesian deep learning. Int J Data Sci Anal (2024). https://doi.org/10.1007/s41060-024-00562-5

DOI: https://doi.org/10.1007/s41060-024-00562-5