Abstract

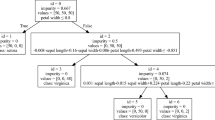

Ensemble methods have become popular because of their robustness. In this work, we propose several variants of oblique decision tree ensembles, called oblique random forests, implemented with binary and ternary decision structures. Each tree in an oblique random forest is trained using a linear classifier, so the decision hyperplane at an internal node of the base model is not necessarily orthogonal to a feature axis. For multiclass classification problems, the training samples at each non-leaf node are partitioned into groups of classes according to their underlying geometric characteristics, with respect to a randomly chosen feature subspace. Each of the proposed models employs a different binary base classifier: twin support vector machine (TWSVM), Improvements on \(\nu \)-TWSVM (I\(\nu \)TWSVM), multi-surface proximal support vector machine (MPSVM), or regularized MPSVM (RegMPSVM). We also propose a novel approach for choosing the final hyperplane that splits the data at a non-leaf node while optimizing an impurity criterion in the decision tree. This work presents a comparative analysis of these base classifiers for implementing oblique random forests with binary and ternary decision structures. In addition, multiple regularization strategies, namely Tikhonov regularization, axis-parallel split regularization, and null space regularization, are used to address the limited sample size problem in the oblique random forest decision trees implemented with MPSVM and RegMPSVM, whereas the implementations with TWSVM and I\(\nu \)TWSVM use Tikhonov regularization only. All models are compared for their generalization ability on 38 benchmark UCI classification datasets, and the efficacy of the methods is also established through statistical analysis.
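To make the idea of an impurity-driven oblique split concrete, the following is a minimal sketch of a single binary node, assuming an MPSVM-style base classifier solved as a generalized eigenvalue problem with Tikhonov regularization. The function names, the value of `delta`, the choice to regularize both matrices, the use of Gini impurity, and the candidate set (the two proximal planes and their two angle bisectors) are illustrative assumptions; this is not the hyperplane selection rule proposed in the paper.

```python
import numpy as np

def augment(X):
    """Append a column of ones so a plane is z = [w; b] with [x, 1] @ z = 0."""
    return np.hstack([X, np.ones((X.shape[0], 1))])

def mpsvm_planes(A, B, delta=1e-3):
    """Two proximal planes: the first close to A and far from B, the second the reverse."""
    Ga, Gb = augment(A), augment(B)
    I = np.eye(Ga.shape[1])
    # Tikhonov regularization; adding delta*I to BOTH matrices is an assumption
    # made here so that either one can be inverted.
    G = Ga.T @ Ga + delta * I
    H = Gb.T @ Gb + delta * I

    def min_eigvec(P, Q):
        # Minimizer of (z'Pz)/(z'Qz): eigenvector of Q^{-1}P with the smallest eigenvalue.
        vals, vecs = np.linalg.eig(np.linalg.solve(Q, P))
        return np.real(vecs[:, np.argmin(np.real(vals))])

    return min_eigvec(G, H), min_eigvec(H, G)

def gini(y):
    """Gini impurity of a label vector (0.0 for an empty node)."""
    if y.size == 0:
        return 0.0
    _, counts = np.unique(y, return_counts=True)
    p = counts / y.size
    return 1.0 - np.sum(p ** 2)

def split_impurity(X, y, z):
    """Size-weighted Gini impurity of the two children produced by plane z."""
    left = augment(X) @ z <= 0
    n_left = left.sum()
    return (n_left * gini(y[left]) + (y.size - n_left) * gini(y[~left])) / y.size

def best_oblique_split(X, y, delta=1e-3):
    """Binary labels 0/1. Returns (z, impurity) of the best candidate plane."""
    z1, z2 = mpsvm_planes(X[y == 0], X[y == 1], delta)
    # Scale each plane so its normal w has unit length before bisecting.
    z1 = z1 / max(np.linalg.norm(z1[:-1]), 1e-12)
    z2 = z2 / max(np.linalg.norm(z2[:-1]), 1e-12)
    # Hypothetical candidate set: both proximal planes and both angle bisectors.
    candidates = [z1, z2, z1 + z2, z1 - z2]
    scores = [split_impurity(X, y, z) for z in candidates]
    k = int(np.argmin(scores))
    return candidates[k], scores[k]
```

Calling `best_oblique_split(X, y)` on a feature matrix `X` with 0/1 labels `y` returns the selected plane as a vector `[w; b]` together with the weighted impurity of its children. In a full forest this step would be repeated at every non-leaf node on a random feature subspace, with multiclass data first grouped into two (or three) class groups as the abstract describes.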

Data Availability

The datasets analysed during the current study are available in the University of California Irvine (UCI) repository [54].

References

Breiman, L., Friedman, J., Olshen, R., Stone, C.: Classification and regression trees. Wadsworth Int. Group 37(15), 237–251 (1984)

Quinlan, J.R.: C4.5: Programs for Machine Learning. Elsevier, Amsterdam (2014)

Ho, T.K.: Random decision forests. In: Proceedings of 3rd International Conference on Document Analysis and Recognition, Vol. 1, IEEE, pp. 278–282 (1995)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Katuwal, R., Ponnuthurai, N.S., Zhang, L.: Heterogeneous oblique random forest. Pattern Recognit. 99, 107078 (2020)

Breiman, L.: Bias, Variance, and Arcing Classifiers (1996)

Goerss, J.S.: Tropical cyclone track forecasts using an ensemble of dynamical models. Mon. Weather Rev. 128(4), 1187–1193 (2000)

Wiering, M.A., Van Hasselt, H.: Ensemble algorithms in reinforcement learning. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 38(4), 930–936 (2008)

Bonissone, P., Cadenas, J.M., Garrido, M.C., Díaz-Valladares, R.A.: A fuzzy random forest. Int. J. Approx. Reason. 51(7), 729–747 (2010)

Yassin, S.S.: Road accident prediction and model interpretation using a hybrid K-means and random forest algorithm approach. In: SN Applied Sciences, Vol. 2 (9), Springer, pp. 1–13 (2020)

Banfield, R.E., Hall, L.O., Bowyer, K.W., Kegelmeyer, W.P.: A comparison of decision tree ensemble creation techniques. IEEE Trans. Pattern Anal. Mach. Intell. 29(1), 173–180 (2006)

Murthy, S.K., Kasif, S., Salzberg, S.: A system for induction of oblique decision trees. J. Artif. Intell. Res. 2, 1–32 (1994)

Menze, B.H., Kelm, B.M., Splitthoff, D.N., Koethe, U., Hamprecht, F.A.: On oblique random forests. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Springer, pp. 453–469 (2011)

Zhang, L., Varadarajan, J., Nagaratnam Suganthan, P., Ahuja, N., Moulin, P.: Robust visual tracking using oblique random forests. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5589–5598 (2017)

Murthy, K.V.S., Salzberg, S.L.: On growing better decision trees from data, Ph.D. Thesis, Citeseer (1995)

Fernández-Delgado, M., Cernadas, E., Barro, S., Amorim, D.: Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 15(1), 3133–3181 (2014)

Zhang, L., Suganthan, P.N.: Benchmarking ensemble classifiers with novel co-trained kernel ridge regression and random vector functional link ensembles [research frontier]. IEEE Comput. Intell. Mag. 12(4), 61–72 (2017)

Breiman, L.: Bagging predictors. Mach. Learn. 24(2), 123–140 (1996)

Barandiaran, I.: The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 20(8), 832–844 (1996)

Criminisi, A., Shotton, J., Konukoglu, E., et al.: Decision forests: A unified framework for classification, regression, density estimation, manifold learning and semi-supervised learning. Foundations and Trends® in Computer Graphics and Vision 7(2–3), 81–227 (2012)

Zhang, L., Suganthan, P.N.: Oblique decision tree ensemble via multisurface proximal support vector machine. IEEE Trans. Cybern. 45(10), 2165–2176 (2014)

Mangasarian, O.L., Wild, E.W.: Multisurface proximal support vector machine classification via generalized eigenvalues. IEEE Trans. Pattern Anal. Mach. Intell. 28(1), 69–74 (2005)

Manwani, N., Sastry, P.: Geometric decision tree. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 42(1), 181–192 (2011)

Chen, L.-F., Liao, H.-Y.M., Ko, M.-T., Lin, J.-C., Yu, G.-J.: A new LDA-based face recognition system which can solve the small sample size problem. Pattern Recognit. 33(10), 1713–1726 (2000)

Jiang, X.: Linear subspace learning-based dimensionality reduction. IEEE Signal Process. Mag. 28(2), 16–26 (2011)

Khemchandani, R., Saigal, P.: Color image classification and retrieval through ternary decision structure based multi-category TWSVM. Neurocomputing 165, 444–455 (2015)

Khemchandani, R., Saigal, P., Chandra, S.: Improvements on \(\nu \)-twin support vector machine. Neural Netw. 79, 97–107 (2016)

Saigal, P., Khanna, V., Rastogi, R.: Divide and conquer approach for semi-supervised multi-category classification through localized kernel spectral clustering. Neurocomputing 238, 296–306 (2017)

Saigal, P., Chandra, S., Rastogi, R.: Multi-category Ternion support vector machine. Eng. Appl. Artif. Intell. 85, 229–242 (2019)

Rastogi, R., Saigal, P., Chandra, S.: Angle-based twin parametric-margin support vector machine for pattern classification. Knowl.-Based Syst. 139, 64–77 (2018)

Khemchandani, R., Saigal, P., Chandra, S.: Angle-based twin support vector machine. Ann. Oper. Res. 269(1), 387–417 (2018)

Gupta, D., Richhariya, B., Borah, P.: A fuzzy twin support vector machine based on information entropy for class imbalance learning. Neural Comput. Appl. 31(11), 7153–7164 (2019)

Khemchandani, R., Pal, A., Chandra, S.: Fuzzy least squares twin support vector clustering. Neural Comput. Appl. 29(2), 553–563 (2018)

Chen, S.-G., Wu, X.-J., Xu, J.: Locality preserving projection least squares twin support vector machine for pattern classification. Pattern Anal. Appl. 23(2), 1–13 (2020)

Quinlan, J.R.: Induction of decision trees. Mach. Learn. 1(1), 81–106 (1986)

Hunt, E.B., Marin, J., Stone, P.J.: Experiments in Induction. Academic Press, Cambridge (1966)

Khemchandani, R., Chandra, S., et al.: Twin support vector machines for pattern classification. IEEE Trans. Pattern Anal. Mach. Intell. 29(5), 905–910 (2007)

Khemchandani, R.: Mathematical programming applications in machine learning. Ph.D. Thesis (2008)

Mangasarian, O.L.: Nonlinear Programming, vol. 10. SIAM, Philadelphia (1993)

Gunn, S.R., et al.: Support vector machines for classification and regression, ISIS Technical Report 14 (1998)

Mangasarian, O.L., Wild, E.W.: Proximal support vector machine classifiers. In: Proceedings KDD-2001: Knowledge discovery and data mining, Citeseer (2001)

Guarracino, M.R., Cifarelli, C., Seref, O., Pardalos, P.M.: A classification method based on generalized eigenvalue problems. Optim. Methods Softw. 22(1), 73–81 (2007)

Hsu, C.-W., Lin, C.-J.: A comparison of methods for multiclass support vector machines. IEEE Trans. Neural Netw. 13(2), 415–425 (2002)

Khemchandani, R., Chandra, S., et al.: Fuzzy linear proximal support vector machines for multi-category data classification. Neurocomputing 67, 426–435 (2005)

Lei, H., Govindaraju, V.: Half-against-half multi-class support vector machines. In: International Workshop on Multiple Classifier Systems, Springer, pp. 156–164 (2005)

Shao, Y.-H., Chen, W.-J., Huang, W.-B., Yang, Z.-M., Deng, N.-Y.: The best separating decision tree twin support vector machine for multi-class classification. Procedia Comput. Sci. 17, 1032–1038 (2013)

Xie, J., Hone, K., Xie, W., Gao, X., Shi, Y., Liu, X.: Extending twin support vector machine classifier for multi-category classification problems. Intell. Data Anal. 17(4), 649–664 (2013)

Geurts, P., Ernst, D., Wehenkel, L.: Extremely randomized trees. Mach. Learn. 63(1), 3–42 (2006)

Zhang, C.-X., Zhang, J.-S.: RotBoost: a technique for combining rotation forest and AdaBoost. Pattern Recognit. Lett. 29(10), 1524–1536 (2008)

Kohavi, R., Wolpert, D.H., et al.: Bias plus variance decomposition for zero-one loss functions. In: ICML, Vol. 96, pp. 275–83 (1996)

Mehta, M., Rissanen, J., Agrawal, R., et al.: MDL-based decision tree pruning. In: KDD, Vol. 21, pp. 216–221 (1995)

Zhang, C., Ma, Y.: Ensemble Machine Learning: Methods and Applications. Springer, Berlin (2012)

Bhattacharyya, A.: On a measure of divergence between two statistical populations defined by their probability distributions. Bull. Calcutta Math. Soc. 35, 99–109 (1943)

Blake, C., Merz, C.J.: UCI repository of machine learning databases (1998). http://www.ics.uci.edu/~mlearn/MLRepository.html

Ganaie, M.A., Muhammad, T., Suganthan, P.M.: Oblique decision tree ensemble via twin bounded SVM. Expert Syst. Appl. 143, 113072 (2020)

Demšar, J.: Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7, 1–30 (2006)

Acknowledgements

This work is an extension of our conference paper “Oblique Random Forest via Regularized Multisurface Proximal Support Vector Machine” presented at the Global Conference for Advancement in Technology (GCAT), 2019.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

All authors contributed equally to the study conception and design. Material preparation, data collection, and analysis were performed by all authors. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest. The authors certify that there is no actual or potential conflict of interest in relation to this article.

Financial or Non-financial interests

The authors have no relevant financial or non-financial interests to disclose.

About this article

Cite this article

Saigal, P., David, A. & Rastogi, R. Oblique random forests with binary and ternary decision structures and non-parallel hyperplanes classifiers. Int J Data Sci Anal (2023). https://doi.org/10.1007/s41060-023-00472-y

DOI: https://doi.org/10.1007/s41060-023-00472-y