Abstract

Since the COVID-19 pandemic, the demand to study and work from home has become of great importance. While recent research has provided evidence for the negative influence of remote studying on the mental and physical health of students, less is known about digital well-being interventions to mitigate these effects. This study had two objectives: 1) to assess the effects of the online well-being intervention, StudentPOWR, on the subjective well-being of students studying from home, and 2) to investigate the influence of engagement with the intervention on subjective well-being. This was a non-blind, 3-arm (full access, limited access, and waitlist control) randomized controlled trial (RCT) and took place in March 2021. University students (N = 99) studying remotely in Ireland and the Netherlands were randomly assigned to the full access (N = 36), partial access (N = 30), or waitlist control (N = 33) group for the four-week intervention period. Measures included the SPANE questionnaire for subjective well-being and the DBCI-ES-Ex for engagement with the intervention. Participants in both the full access and the partial access groups improved in their subjective well-being scores from baseline to week 2 compared to the waitlist control group (p = .004, Hedges g equal to 0.4902 - small effect size - and 0.5698 - medium effect size - for the full intervention and partial intervention, respectively). However, post-intervention, participants in the partial intervention – but not in the full access group - showed significantly greater changes in subjective well-being scores compared to those in the waitlist control group. Possible explanations for these results, comparisons with previous studies, and suggestions for future research are explored.

1 Introduction

The field of positive psychology has grown rapidly (Rusk & Waters, 2013). A central goal of this growing branch of psychology is the identification, development, and evaluation of interventions that aim to enhance well-being (Wood & Johnson, 2016). The rationale for the development of well-being interventions was in part due to the recognition that well-being and psychopathology are two independent constructs (Carr et al., 2021). There has been substantial progress in research and practice in expanding the notion of mental health beyond the absence of mental illness and rather integrating the presence of positive features, including well-being (Galderisi et al., 2015). Well-being has frequently been described as a difficult concept to define due to the dynamic, multifaceted constructs that constitute it (Dodge et al., 2012). Researchers across a variety of backgrounds suggest dividing well-being into objective and subjective components (Voukelatou et al., 2021). The objective components of well-being include many material and social attributes of one’s life circumstances, including physical resources, education, employment and income, housing, and health. Such attributes are quantitatively measured (Wallace et al., 2021). The subjective components of well-being, on the other hand, are represented in an individual’s subjective thoughts and feelings about their life and circumstances, and their level of satisfaction with these (King et al., 2014). Subjective well-being is commonly interpreted as experiencing a high level of positive affect, a low level of negative affect, and a high degree of satisfaction with one’s life (Deci & Ryan, 2008). It has traditionally been captured through self-report measures in which individuals evaluate their own lives (Keyes, 2006). Subjective well-being is enhanced when the social determinants of a healthy life, in particular psychosocial (mental health and social support) and physical (diet and physical activity), are promoted and protected (Naz & Bögenhold, 2018).

The COVID-19 pandemic had significant negative effects on the overall well-being of individuals (Toselli et al., 2022), forcing people from around the globe to considerably modify their daily routines (Bastoni et al., 2021). Social distancing and self-isolation policies were introduced in most European countries, including public gathering bans, border closure, temporary restrictions on free internal movements of the citizens, and school and workplace closure (ECDPC, 2020). The Republic of Ireland was placed on full lockdown on the 27th of March 2020, which involved a ban on all non-essential journeys outside the home for two weeks. The only exceptions were for travelling to and from work for essential workers, shopping for essential food and household goods, attending medical appointments, supporting the sick or elderly, and taking brief physical exercise within 2 km of one’s home. In May 2020, the Irish government published a COVID-19 ‘roadmap’ of four phases for reopening society and business to ease the restrictions in a phased manner over five months (Department of the Taoiseach, 2020). Within the Netherlands, the Dutch Government implemented a strategy which it called an “Intelligent COVID-19 Lockdown” (de Haas et al., 2020). This lockdown involved social distancing, social isolation, public event cancellations, self-quarantine and a 9pm curfew (Fried et al., 2022). Large-scale businesses, schools, and universities were closed, and international travel was restricted (Government of the Netherlands, 2020). Although recognized as effective measures to curb the spread of COVID-19, these “stay-at-home” policies evoked a negative effect on the overall well-being of the population, with a greater proportion of individuals experiencing physical and social inactivity, poor sleep quality, unhealthy diet behaviours, and unemployment (Ammar et al., 2021).

The COVID-19 pandemic had an extensive impact on the higher education sector globally (Crawford et al., 2020), with universities from around the world, including Ireland and the Netherlands, switching to remote learning to prevent the spread of the virus (Gewin, 2020). University students, who are already recognized as a vulnerable population, were at increased risk of mental and physical health issues given the COVID-19-related interferences to higher education (Liu et al., 2020). Prior to the outbreak of the virus, the World Health Organisation’s international survey of 13,984 participants across eight countries found that one-third of first year college students self-reported a mental health disorder (Auerbach et al., 2018). Students’ experience of studying has been described as disrupted and leading to feelings of anxiety, hopelessness, and insecurity (Ma et al., 2020; Hajdúk et al., 2020). Remote learning has also impacted the physical activity of students, with reports demonstrating that students’ levels of physical activity reduced across all ages (Martínez-de-Quel et al., 2021). Physical activity has well-established relations with positive mental health and well-being (2018 PAGAC; Sallis et al., 2016). The sudden changes in learning environments, along with the prevalence of mental health disorders and decreases in physical activity levels following the COVID-19 pandemic, have brought the importance of well-being into focus for many universities (Novo et al., 2020). Student well-being has been shown to increase a sense of belonging, positive relationships with others, participation in learning activities, autonomy, and competencies (Cox & Brewster, 2021) and to reduce stress, frustration, burnout, and withdrawal from active learning (Yazici et al., 2016). Well-being not only stimulates student academic achievement, but also prepares students for lifelong success (Mahatmya et al., 2018). Thus, focusing on methods to promote and enhance student subjective well-being is a necessary endeavor.

With research suggesting that the change to remote learning is having a measurable negative impact on student well-being (e.g., Ostafichuk et al., 2021), digital interventions appear as a compelling strategy to improve student well-being and have been developed and evaluated in randomized controlled trials, with many showing positive results (Lattie et al., 2019).

Universities are an ideal setting for such well-being interventions (Baik et al., 2019). However, with many universities teaching from home, and more remote working options available for students after university, digital well-being interventions may be the optimal method to improve well-being as they can be delivered, for example, through smartphone applications, computer programs, short message service (SMS), interactive websites, social media, and wearable devices (Perski et al., 2017). One such intervention is StudentPOWR.

1.1 StudentPOWR

StudentPOWR is a holistic well-being platform that incorporates both psychological and physical well-being. It comprises the pre-existing online well-being tool, ‘POWR’ (wrkit.com/products/well-being), and the pre-existing online exercise tool, Move (wrkit.com/move). POWR is an interactive well-being tool designed by a team of clinical psychologists to help individuals manage their overall well-being and academic performance. It is paid for by the university and is then free for the students to use. POWR is accessible 24/7 on all online devices and has six pillars of well-being: mind, active, sleep, food, life, and work. The user completes clinical questionnaires within each pillar of well-being and receives a score based on their answers. The scores fall into four categories: low, average, high, or excellent. According to their scores in these questionnaires, an individually tailored behavioural ‘plan’ is recommended. There are over 450 behavioural plans that are recommended according to an algorithm that was developed by the clinical and technology teams. These are easy seven-day plans that are based on cognitive behavioural therapy techniques and encourage users to engage in health-promoting behaviors that enhance well-being (see Appendix 1 for screenshots and a more detailed description of features on POWR). Alongside POWR, StudentPOWR also includes the online exercise tool, Move (see screenshots in Appendix 2). Move contains workout videos, including Yoga, Pilates, High Intensity Interval Training, mobility classes, and deskercises (short exercises that can be done at one’s desk with no equipment needed). Move was developed by licensed personal trainers and by Yoga and Pilates instructors. Each workout is designed to be done in the comfort of the individual’s home, making all of them suitable for students studying from home.

1.2 The Current Study

While there is a growing body of evidence supporting that digital interventions can have significant effects on improving well-being, low levels of user engagement have been reported as barriers to optimal efficacy (Gan et al., 2022). User engagement is considered one of the most important factors in determining the success of a digital intervention (Sharpe et al., 2017). Although various interpretations of engagement have emerged (Doherty & Doherty, 2018), it has been commonly described as a subjective experience characterized by attention, interest, and affect (Perski et al., 2017). Based on the above evidence, there is an urgent need for, and high potential in using digital interventions to improve student well-being (Rauschenberg et al., 2021). The current study therefore aims to evaluate the online well-being intervention, “StudentPOWR”, in improving the subjective well-being of university students studying from home in Ireland and the Netherlands. Additionally, engagement, as defined above, is assessed throughout the intervention as a potential moderator between the StudentPOWR intervention and subjective well-being scores.

2 Methods

2.1 Participants

The student participants were recruited using convenience sampling with an advertisement (Appendix 1) via social media posts and group chats. In total, 99 students who were studying from home were interested in participating and all were eligible to take part in the study, completing all questionnaires. Based on a power calculation using G*Power (Faul et al., 2009), a minimum of 54 participants were required in total (a priori power calculation for a repeated measures ANOVA on the interaction effect of the between and within factors with 3 levels each, assuming a medium effect size (r = .25), alpha of 0.05, and power of 0.95; see Appendix 3). A post-hoc analysis with the same settings and a sample size of 70 (the most conservative scenario, as 70 is the minimum sample size achieved in our intervention, at time point 3) resulted in a power > 0.99. Data were collected remotely from three universities in the Netherlands and five universities in Ireland. Participants originated from diverse study faculties across universities. Participation in the study was voluntary. The inclusion criteria were: (1) age 18 years or older; (2) university student studying from home; (3) fluency in the English language; (4) access to a laptop and smartphone.

2.2 Procedure

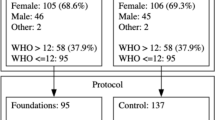

A non-blind, 3-arm (full access, limited access, and waitlist control) randomized controlled trial (RCT) was used to carry out this investigation in March 2021. After the recruitment process, a total of 99 students were interested, and all met the inclusion criteria. The participants were provided a unique 8-digit code to ensure anonymity and confidentiality.

The participants were surveyed at three intervals over the four-week study period (see Fig. 1). There were three conditions, all over a period of four weeks: (1) full access to the intervention, (2) limited access to the intervention, and (3) a waiting-list control group. Both the limited access group and the control group had the possibility to receive full access after the completion of the study. To establish baseline subjective well-being scores before any intervention, all participants received a Qualtrics link to complete the pre-test SPANE well-being questionnaire (Diener et al., 2010). Randomization of participants was conducted using the random function in Microsoft Excel 2015 (aiming to achieve equal sized groups). Participants were randomly assigned to either condition 1 (N = 36), condition 2 (N = 30), or condition 3 (N = 33).
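The allocation step can be illustrated with a short sketch. The authors used the random function in Microsoft Excel; the Python version below is a stand-in for that, not the authors' procedure, and the function name, participant codes, and seed are hypothetical. It assumes simple randomization (each participant assigned independently), which is one way that roughly but not exactly equal groups can arise.

```python
import random
from collections import Counter

CONDITIONS = ("full_access", "limited_access", "waitlist_control")

def assign_conditions(participant_codes, seed=None):
    """Independently assign each participant to one of the three conditions."""
    rng = random.Random(seed)  # seeded generator so the allocation is reproducible
    return {code: rng.choice(CONDITIONS) for code in participant_codes}

# Hypothetical 8-digit participant codes; the study's real codes are not given.
codes = [f"{i:08d}" for i in range(1, 100)]  # 99 participants
allocation = assign_conditions(codes, seed=2021)
group_sizes = Counter(allocation.values())
print(group_sizes)  # group sizes vary around 33 and depend on the seed
```

If exactly equal groups were required, a shuffled list dealt out in turn (block randomization) would be the usual alternative.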

The participants in condition 1 were emailed with a link to register with full access to StudentPOWR (POWR and Move). Participants in condition 2 were emailed with a link to register with limited access to StudentPOWR (just Move). Participants in condition 3 were informed that they were in the wait-list control group and that they would be granted full access to StudentPOWR after four weeks. All participants were aware of the study’s purpose and their assignment, as participants in conditions 2 and 3 needed to know that they would have full access to the intervention after the four weeks of the study period. Two weeks later, the SPANE well-being questionnaire (Diener et al., 2010) was administered to each of the conditions again (mid-test, time point 2). To test for participants’ engagement with StudentPOWR, the DBCI-ES-Ex (Perski et al., 2020) was also administered to participants in conditions 1 and 2. Four weeks after baseline, the SPANE well-being questionnaire and the DBCI-ES-Ex engagement questionnaire were administered to participants in conditions 1 and 2 again (post-test, time point 3). In condition 3, just the SPANE questionnaire was administered. After data collection was finished, participants in condition 2 and condition 3 were granted full access to StudentPOWR for the following four weeks. Participants in condition 1 continued to have full access for the next four weeks. No data were collected during the four weeks post-intervention. During these four weeks, a well-being challenge was run on StudentPOWR. Participants who wished to take part in the challenge were required to take an online Pilates class on the Move portal of StudentPOWR. This challenge was incentivized with a Fitbit Versa 3, for which the winner was selected at random using the random function in Microsoft Excel 2015. Participants were aware of this challenge prior to participation in the research and no data were collected during the challenge. The current study procedure was granted ethics approval by the Ethics Review Committee Psychology and Neuroscience (ERCPN; OZL_188_11_02_2018_S22) on 10/02/2021.

2.3 Measures

The SPANE well-being Scale (Diener et al., 2010) was used to assess the subjective well-being of participants at the three time points (α = 0.89, averaged across all three time points). This is a 12-item self-report measure of positive feelings, negative feelings, and the balance between the two. Items like “How often do you experience feeling happy?” were all ranked on a 5-point frequency scale ranging from “Very Rarely/Never” to “Very Often/Always”. The negative feelings score is subtracted from the positive feelings score, and the resulting difference score can vary from − 24 (unhappiest possible) to + 24 (happiest possible).
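The scoring rule described above can be sketched as follows. This is a minimal illustration assuming the standard SPANE layout of six positive and six negative items, each rated 1 to 5; the function name and the way ratings are passed in are hypothetical and not taken from the study materials.

```python
def spane_scores(positive_items, negative_items):
    """Return (positive score, negative score, balance score) for one respondent.

    Each argument is a list of six ratings on the 1-5 frequency scale.
    """
    for items in (positive_items, negative_items):
        if len(items) != 6 or any(not 1 <= r <= 5 for r in items):
            raise ValueError("each subscale needs six ratings between 1 and 5")
    positive = sum(positive_items)   # ranges from 6 to 30
    negative = sum(negative_items)   # ranges from 6 to 30
    return positive, negative, positive - negative  # balance ranges from -24 to +24

# Extreme response patterns reproduce the bounds of the balance score.
print(spane_scores([5] * 6, [1] * 6))  # (30, 6, 24)
print(spane_scores([1] * 6, [5] * 6))  # (6, 30, -24)
```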

The experiential subscale of the Digital Behaviour Change Intervention Engagement Scale (DBCI-ES-Ex) (Perski et al., 2020) was used to assess participants’ engagement with StudentPOWR (α = 0.79, averaged across the two time points). It consists of eight items on a 7-point answering scale ranging from “1: not at all” to “7: extremely”. The items were introduced as: “Please answer the following questions with regard to your most recent use of StudentPOWR. How strongly did you experience the following?” The items were (1) Interest, (2) Intrigue, (3) Focus, (4) Inattention, (5) Distraction, (6) Enjoyment, (7) Annoyance, and (8) Pleasure, with items 4, 5, and 7 reverse scored. For the DBCI, a sum score was calculated, ranging from 8 (no engagement at all) to 56 (fully engaged).
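As an illustration of the sum score, the sketch below reverse scores items 4, 5, and 7 and adds up the eight ratings. It assumes the conventional reversal on a 1 to 7 scale (8 minus the rating); the function and constant names are hypothetical.

```python
REVERSED_ITEMS = {4, 5, 7}  # Inattention, Distraction, Annoyance

def dbci_sum_score(ratings):
    """Sum score for eight ratings (1-7) given in item order 1..8."""
    if len(ratings) != 8 or any(not 1 <= r <= 7 for r in ratings):
        raise ValueError("expected eight ratings between 1 and 7")
    total = 0
    for item_number, rating in enumerate(ratings, start=1):
        # A reversed item contributes 8 - rating, so 7 becomes 1 and 1 becomes 7.
        total += (8 - rating) if item_number in REVERSED_ITEMS else rating
    return total

# Most engaged pattern: 7 on regular items, 1 on the reverse-scored ones.
print(dbci_sum_score([7, 7, 7, 1, 1, 7, 1, 7]))  # 56
# Least engaged pattern: 1 on regular items, 7 on the reverse-scored ones.
print(dbci_sum_score([1, 1, 1, 7, 7, 1, 7, 1]))  # 8
```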

2.4 Data Analysis

All participants with data for at least one time point (n = 99) were included in the statistical analyses. Statistical analyses were conducted using IBM SPSS Statistics 27. To assess whether randomization of participants into the three conditions was successful, baseline values for all outcomes were compared using an ANOVA. To test whether subjective well-being scores improved during the intervention in each of the three conditions, mixed regressions were conducted with repeated measures over time of the dependent variable subjective well-being (SPANE). Specifically, we used a random intercept (subjects) model (model 1 in Table 1) with Gender, Exchange student, Age, Time, Condition, and the interaction between Time and Condition as fixed effects. The covariance structure was set to scaled identity, which is the simplest possible model, assuming constant variance at each time point and no correlation between measurement times (more complicated covariance structures were explored but the results were essentially the same, so we decided to use the simplest structure for parsimony). The engagement outcome (DBCI) was missing both at baseline and in the control condition (by design). Leaving out time point 1 and the waitlist control group, a mixed regression with random intercept was run with subjective well-being (SPANE) as the dependent variable. Gender, Exchange student, Age, Time, Condition, Engagement (DBCI, time varying), and all possible interactions between Time, Condition, and DBCI were used as fixed effects (model 2a in Table 1). The covariance structure was again set to scaled identity. Lastly, a simple linear regression was carried out to predict participants’ mean well-being change scores (pre-post intervention) based on their engagement with the intervention (model 3). All tests were carried out with alpha = 0.05.
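For readers who prefer notation, model 1 can be written out as below. This is a sketch based on the verbal description: the symbols are introduced here for illustration and do not appear in the original, and the choice of time point 1 and the waitlist control as reference categories follows the way the coefficients are reported in Section 3.3.

\[
\mathrm{SPANE}_{ij} = \beta_0 + \beta_1\,\mathrm{Gender}_i + \beta_2\,\mathrm{Exchange}_i + \beta_3\,\mathrm{Age}_i + \sum_{t=2}^{3}\gamma_t\,T_{tj} + \sum_{c=1}^{2}\delta_c\,C_{ci} + \sum_{t=2}^{3}\sum_{c=1}^{2}\theta_{tc}\,T_{tj}\,C_{ci} + u_i + \varepsilon_{ij}
\]

Here \(i\) indexes participants and \(j\) measurement occasions, \(T_{tj}\) and \(C_{ci}\) are dummy variables for time point \(t\) and condition \(c\), \(u_i \sim N(0, \tau^2)\) is the random intercept for participant \(i\), and \(\varepsilon_{ij} \sim N(0, \sigma^2)\) is the residual with constant variance and no correlation across occasions (the scaled identity structure). The four \(\theta_{tc}\) terms make up the Time by Condition interaction.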

3 Results

3.1 Study Population

The final sample consisted of 70 women (70.7%) and 29 men (29.3%), whose ages ranged from 19 to 43 (M = 23.7, SD = 3.86). Participant demographics are presented in Table 2. Between groups, no significant differences were found in baseline characteristics (all p’s > 0.72) suggesting that randomization was successful.

3.2 Dropout Analysis

From the start (pre-test) to the end of the intervention, there was an overall dropout rate of 29%. There was a 19% dropout rate in condition 1 (full access to intervention), a 43% dropout rate in condition 2 (partial access to intervention), and a 27% dropout rate in condition 3 (wait-list control group). The dropout rate was 27% among female participants and 36% among male participants. Dropout rates are presented in Table 3. Possible bias arising from how baseline variables relate to missingness of an outcome was addressed by including all baseline variables as predictors in the effect analyses with mixed regression (Verbeke & Molenberghs, 2000).
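The reported percentages can be cross-checked against the group sizes with a little arithmetic. The sketch below searches for the whole-number dropout counts that round to each reported percentage; the counts it finds are inferred from the percentages, not taken from Table 3, and the variable names are hypothetical.

```python
# (group size at baseline, reported dropout percentage)
GROUPS = {
    "full_access": (36, 19),
    "partial_access": (30, 43),
    "waitlist_control": (33, 27),
}

def counts_matching(n, reported_percent):
    """All dropout counts k (0..n) whose rounded percentage equals the reported one."""
    return [k for k in range(n + 1) if round(100 * k / n) == reported_percent]

implied = {name: counts_matching(n, pct) for name, (n, pct) in GROUPS.items()}
print(implied)  # {'full_access': [7], 'partial_access': [13], 'waitlist_control': [9]}

total_dropouts = sum(counts[0] for counts in implied.values())
print(total_dropouts, 99 - total_dropouts)  # 29 70
print(round(100 * total_dropouts / 99))     # 29
```

Each percentage matches exactly one count, and the implied total of 29 dropouts agrees with both the overall 29% rate and the sample of 70 at time point 3 mentioned in Section 2.1.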

3.3 Improvements in Subjective Well-Being

The means and standard deviations of SPANE scores for each of the conditions at each time point are presented in Table 4. A significant interaction between time and condition was found, F (4,151) = 4.04, p = .004. Focusing on the regression coefficients related to the interaction using the first time point and the control condition (Waitlist control, Condition 3) as reference, these show a significant difference between the control condition at time 1 vs. (a) the full intervention (Condition 1) at time 2 (p = .003, regression coefficient \(b=3.87\), 95% CI = [1.30, 6.44], Hedges g = 0.4902, small effect size), (b) the partial intervention (Condition 2) at time 2 (p = .012, regression coefficient \(b=3.60\), 95% CI = [0.80, 6.40], Hedges g = 0.5698, medium effect size), and (c) the partial intervention at time 3 (p = .003, regression coefficient \(b=4.54\), 95% CI = [1.57, 7.51], Hedges g = 0.9024, large effect size). No significant difference was found between the control condition at time 1 vs. the full intervention at time 3 (p = .175, regression coefficient \(b=1.81\), 95% CI = [-0.82, 4.44]). This is visualized in Fig. 2.
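For reference, Hedges g is a standardized mean difference with a small-sample correction. The sketch below uses the common pooled-SD formula with the approximate correction factor 1 - 3/(4·df - 1); the text does not state exactly how the reported values were computed, so this is an assumption, and the input numbers are invented for illustration. The labels follow the conventional 0.2 / 0.5 / 0.8 cut-offs, which match the small, medium, and large labels used above.

```python
import math

def hedges_g(mean1, sd1, n1, mean2, sd2, n2):
    """Standardized mean difference with the approximate small-sample correction."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    cohens_d = (mean1 - mean2) / pooled_sd
    correction = 1 - 3 / (4 * df - 1)  # shrinks d slightly in small samples
    return cohens_d * correction

def effect_size_label(g):
    """Conventional verbal label for the magnitude of g."""
    magnitude = abs(g)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"

# Invented summary statistics, not the study's data.
g = hedges_g(mean1=10.0, sd1=2.0, n1=20, mean2=8.0, sd2=2.0, n2=20)
print(round(g, 4), effect_size_label(g))

# Labels for the values reported in the text.
for reported in (0.4902, 0.5698, 0.9024):
    print(reported, effect_size_label(reported))
```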

3.4 Engagement with the Intervention

A mixed regression was run to see how engagement with the intervention influenced the intervention effects on subjective well-being. The means and standard deviations of engagement scores for conditions 1 and 2 are provided in Table 5. Since the specified model (model 2a in Table 1) considers four interaction terms (three of the first order, one of the second order), which makes the model complex and difficult to interpret, we ran model selection on the interaction terms, using backward stepwise regression. Specifically, first we focused on the second order interaction, which was not significant (p = .468). Then, the analysis was run again focusing only on the first order interactions (model 2b in Table 1). Again, none of these were significant; the largest p-value corresponded to the interaction between Condition and Engagement (p = .889), which was removed from the analysis, leading to model 2c in Table 1. The remaining first order interactions were also not significant (p = .163 for condition*time and p = .095 for time*engagement). We then tested both models (model 2d and model 2e in Table 1) keeping only one of the two remaining first order interactions. These were also not significant: p = .124 for condition*time and p = .073 for time*engagement. Finally, we fitted the model (model 2f in Table 1) without any interaction. We focus on this final model, which resulted in only engagement being a significant predictor (F(1, 88.540) = 19.239; p < .001), suggesting a possible confounding effect of engagement. However, this conclusion can only be drawn with caution, as the analysis was performed on a reduced dataset.

For condition 1, the mean engagement score was 35.77 at mid-intervention and decreased to 35.14 at post-intervention, while the mean subjective well-being score was 8.57 at mid-intervention and decreased to 8.38 at post-intervention. For condition 2, the mean engagement score was 34.83 at mid-intervention and increased to 37.18 at post-intervention. The mean subjective well-being score for condition 2 was 8.81 at mid-intervention and increased to 11.06 at post-intervention.

A simple linear regression was carried out to predict participants’ mean well-being change scores (pre-post intervention) based on their engagement change score with the intervention (model 3 in Table 1). Preliminary analyses were performed to ensure no violation of the assumptions of normality, linearity, and homoscedasticity. The intercept, that is, the predicted well-being change score when one is not engaged at all, was 1.049. A significant regression model was found (F (1, 45) = 6.69, p = .013, regression coefficient \(b=0.318\), 95% CI = [0.07, 0.556]), with an R² of 0.132. According to the regression equation, for each one-point increase in engagement (X-axis), participants’ mean well-being change score is expected to improve by 0.318 (Y-axis). This suggests that the level of engagement participants in conditions 1 and 2 had with the intervention had a significant effect on their subjective well-being scores post-intervention, demonstrating that engagement served as a moderator for subjective well-being.
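The fitted line can be made concrete with a short sketch that plugs the reported intercept (1.049) and slope (0.318) into the regression equation. The function name and the example predictor values are hypothetical; the predictor is whatever engagement score was entered in model 3.

```python
INTERCEPT = 1.049  # reported predicted change when the engagement predictor is 0
SLOPE = 0.318      # reported regression coefficient b

def predicted_wellbeing_change(engagement_predictor):
    """Predicted mean well-being change score from the fitted regression line."""
    return INTERCEPT + SLOPE * engagement_predictor

# Hypothetical predictor values, chosen only to show the one-point increment.
for x in (0, 1, 5):
    print(x, round(predicted_wellbeing_change(x), 3))
# 0 1.049
# 1 1.367
# 5 2.639
```

With an R² of 0.132, most of the variation in change scores is left unexplained by engagement, so individual predictions from this line carry wide uncertainty.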

4 Discussion

In this study, the effects of the StudentPOWR well-being intervention on the subjective well-being of students studying from home were examined. In line with earlier digital well-being interventions (e.g., Harrer et al., 2019; Lambert et al., 2019; Krifa et al., 2022; Davies et al., 2014), our online intervention (POWR and Move) was successful in changing student well-being scores compared to a control group at week 2, with a small effect size for the full intervention (Hedges g = 0.4902) and a medium effect size for the partial intervention (Hedges g = 0.5698). However, post-intervention measures (week 4) suggested that only students receiving the partial intervention (Move), and not those receiving the full intervention, had significant changes in subjective well-being compared to the control group, with a large effect size (Hedges g = 0.9024). Engagement scores for those with full access to the intervention decreased slightly over the course of the experiment, while the engagement scores for participants with partial access to the intervention increased over time.

Although initially unexpected, the findings are in line with earlier studies (e.g., Kurelović et al., 2016; Eppler & Mengis, 2003) that suggest that perceived information overload leads to an overall negative impact on well-being, including psychological stress (Lee et al., 2016), anxiety (Bawden & Robinson, 2009), and negative affect (LaRose et al., 2014). Different authors talk about a variety of factors that influence information overload, with a common consensus that the technology used to get the information plays a key role (Ruff, 2002; Vigil, 2011). In our combined POWR and Move intervention – offering informative articles, notifications, clinical plans, webinars, exercise videos and more - it might have been that there were too many features involved.

Some authors suggest that this (digital) information overload might lead to a reduced user engagement (Sharpe et al., 2017) and higher levels of digital fatigue (defined as “a state of indifference or apathy brought on by an overexposure to something”; Merriam-Webster, 2022, p1). The advent of COVID-19 resulted in an unprecedented level of digital global communication from both universities and individuals (Chang et al., 2020). During this time, there was a rise in the general use of technology as people were using it to consume news media, watch television, use social media to connect with others, and utilize lifestyle apps to shop for groceries and other consumer goods (Garfin, 2020). Previous research suggests that excessive use of digital devices leads to digital fatigue in students during COVID-19 (Sarangal & Nargotra, 2022; Sharma et al., 2021). This digital fatigue has been found to decrease subjective well-being (Singh et al., 2022) and may have influenced the subjective well-being scores of students receiving the full intervention (POWR and Move).

The level of engagement (as measured through attention, interest, and affect) that participants in conditions 1 and 2 had with the intervention had a significant effect on their subjective well-being scores post-intervention, such that those who were more engaged showed increases in subjective well-being. This is in line with previous research by Gander et al. (2016), who concluded that positive psychology interventions based on engagement, meaning, positive relationships, and accomplishment are effective in increasing well-being.

5 Limitations, Strengths, and Future Directions

The current study had several limitations. First, the 2021 sample was (1) recruited online, (2) mostly female, and (3) only consisted of students across Ireland and the Netherlands. The self-guided manner of data collection might have compromised the internal validity (such as extraneous variables influencing subjective well-being scores) (Neumeier et al., 2017; Andrade, 2018). Additionally, studies have indicated greater levels of mental health problems due to COVID-19 in women compared to men (McGinty et al., 2020; Pierce et al., 2020; Di Giuseppe et al., 2020). Therefore, generalizability to other populations might be limited (Gray et al., 2020; Arendt et al., 2020). This was however considered a worthwhile trade-off in order to gain a better understanding of the interventions’ effectiveness in the real-world context for which they are intended. While COVID-19 restrictions varied in countries across the globe and this study focused only on universities in Ireland and the Netherlands, there was a high percentage of participants who were exchange students, enhancing the cultural diversity of the sample.

A second limitation was that dropout rates differed between conditions (differential attrition; Bell et al., 2013). The dropout rate in the partial access group (43%) was more than double that in the full access group (19%; see Section 3.2). This differential attrition may have influenced the increasing subjective well-being scores for participants in the partial access group.

To overcome some of the limitations, participants were randomly assigned to one of the three conditions, which is one method of avoiding selection effects within experiments (i.e., each group had similar subjective well-being scores before the intervention) (Lanz, 2020). The randomization of participants reduced bias and provided a rigorous tool to examine the cause-effect relationship between the intervention and its outcome (Hariton & Locascio, 2018). Additionally, dropout analyses were conducted on exchange and non-exchange students which provided insights into differences between these cohorts of students. Further, the inclusion of a waitlist control group had ethical advantages because it allowed for the provision of care (if delayed) to participants during a difficult period (Cunningham et al., 2013).

In future studies, it is recommended to include country of origin as a variable to gain a better understanding of the subjective well-being of students from different countries. The influence of cultural and social contexts on university students’ well-being is an underexplored research area (Hernández-Torrano et al., 2020). It is also recommended to include a more extensive measure of engagement. In the context of digital interventions, engagement has typically been conceptualized as (1) the extent of usage of digital interventions, focusing on amount, frequency, duration, and depth of usage (Danaher et al., 2006) and (2) a subjective experience characterized by attention, interest, and affect (Perski et al., 2017). While our study accounted for the latter when measuring engagement, a more accurate representation might include the former as well, incorporating dropout rates into the measurement of engagement. Future replication studies should consider both concepts of engagement, by measuring amount, frequency, duration, and depth of usage as well as the constructs measured in Perski et al.’s DBCI-ES-Ex. This would help guard against the extraneous effects of differential attrition on the outcomes of the research.

6 Conclusion

The current study showed some positive outcomes of the StudentPOWR intervention in improving the subjective well-being of students studying from home. Although it was initially predicted that combining POWR and Move in one intervention would have a greater positive impact on subjective well-being than Move alone, this was not the case. Engagement with the interventions may have played a role in this effect. The findings of this research, along with previous studies on information overload, digital fatigue, and subjective well-being, suggest that when designing digital well-being interventions to enhance student well-being, sometimes less is more. This is particularly relevant during times of heightened technology use (such as the COVID-19 pandemic).

Data Availability

All data and additional materials are fully disclosed in the Supplementary materials and at the Open Science Framework: https://doi.org/10.17605/OSF.IO/GT6KE

References

Ammar, A., Trabelsi, K., Brach, M., Chtourou, H., Boukhris, O., Masmoudi, L., & Hoekelmann, A. (2021). Effects of home confinement on mental health and lifestyle behaviours during the COVID-19 outbreak: Insight from the ECLB-COVID19 multicenter study. Biology of Sport, 38(1), 9–21.

Andrade, C. (2018). Internal, external, and ecological validity in research design, conduct, and evaluation. Indian Journal of Psychological Medicine, 40(5), 498–499.

Arendt, F., Markiewitz, A., Mestas, M., & Scherr, S. (2020). COVID-19 pandemic, government responses, and public mental health: Investigating consequences through crisis hotline calls in two countries. Social Science & Medicine, 265, 113532.

Auerbach, R. P., Mortier, P., Bruffaerts, R., Alonso, J., Benjet, C., Cuijpers, P., ... & Kessler, R. C. (2018). WHO World Mental Health Surveys International College Student Project: Prevalence and distribution of mental disorders. Journal of Abnormal Psychology, 127(7), 623.

Baik, C., Larcombe, W., & Brooker, A. (2019). How universities can enhance student mental well-being: The student perspective. Higher Education Research & Development, 38(4), 674–687.

Bastoni, S., Wrede, C., Ammar, A., Braakman-Jansen, A., Sanderman, R., Gaggioli, A., & van Gemert-Pijnen, L. (2021). Psychosocial effects and use of communication technologies during home confinement in the first wave of the COVID-19 pandemic in Italy and the Netherlands. International Journal of Environmental Research and Public Health, 18(5), 2619.

Bawden, D., & Robinson, L. (2009). The dark side of information: Overload, anxiety and other paradoxes and pathologies. Journal of Information Science, 35(2), 180–191.

Bell, M. L., Kenward, M. G., Fairclough, D. L., & Horton, N. J. (2013). Differential dropout and bias in randomised controlled trials: When it matters and when it may not. BMJ, 346, e8668. https://doi.org/10.1136/bmj.e8668

Carr, A., Cullen, K., Keeney, C., Canning, C., Mooney, O., Chinseallaigh, E., & O’Dowd, A. (2021). Effectiveness of positive psychology interventions: A systematic review and meta-analysis. The Journal of Positive Psychology, 16(6), 749–769.

Chang, S., McKay, D., Caidi, N., Mendoza, A., Gomes, C., & Ekmekcioglu, C. (2020). From way across the sea: Information overload and international students during the COVID-19 pandemic. Proceedings of the Association for Information Science and Technology, 57(1), e289.

Cohen, J. (1969). Statistical power analysis for the behavioural sciences. Academic Press.

Cox, A. M., & Brewster, L. (2021). Services for student well-being in academic libraries: Three challenges. New Review of Academic Librarianship, 27(2), 149–164.

Crawford, J., Butler-Henderson, K., Rudolph, J., Malkawi, B., Glowatz, M., Burton, R., ... & Lam, S. (2020). COVID-19: 20 countries' higher education intra-period digital pedagogy responses. Journal of Applied Learning & Teaching, 3(1), 1–20.

Cunningham, J. A., Kypri, K., & McCambridge, J. (2013). Exploratory randomized controlled trial evaluating the impact of a waiting list control design. BMC Medical Research Methodology, 13(1), 1–7.

Danaher, B., Boles, S., Akers, L., Gordon, J., & Severson, H. (2006). Defining participant exposure measures in web-based health behavior change programs. Journal of Medical Internet Research, 8(3), e15.

Davies, E. B., Morriss, R., & Glazebrook, C. (2014). Computer-delivered and web-based interventions to improve depression, anxiety, and psychological well-being of university students: A systematic review and meta-analysis. Journal of Medical Internet Research, 16(5), e3142.

De Haas, M., Faber, R., & Hamersma, M. (2020). How COVID-19 and the Dutch ‘intelligent lockdown’ change activities, work and travel behaviour: Evidence from longitudinal data in the Netherlands. Transportation Research Interdisciplinary Perspectives, 6, 100150.

Deci, E. L., & Ryan, R. M. (2008). Facilitating optimal motivation and psychological well-being across life’s domains. Canadian Psychology/Psychologie Canadienne, 49(1), 14.

Department of the Taoiseach. (2020). The Path Ahead. COVID-19 Resilience and Recovery 2021. Retrieved August 18, 2023, from https://www.gov.ie/en/publication/c4876-covid-19-resilience-and-recovery-2021-the-path-ahead/?referrer=http://www.gov.ie/ThePathAhead/

Di Giuseppe, M., Zilcha-Mano, S., Prout, T. A., Perry, J. C., Orrù, G., & Conversano, C. (2020). Psychological impact of coronavirus disease 2019 among Italians during the first week of lockdown. Frontiers in Psychiatry, 11, 1022.

Diener, E., Wirtz, D., Tov, W., Kim-Prieto, C., Choi, D. W., Oishi, S., & Biswas-Diener, R. (2010). New well-being measures: Short scales to assess flourishing and positive and negative feelings. Social Indicators Research, 97(2), 143–156.

Dodge, R., Daly, A., Huyton, J., & Sanders, L. (2012). The challenge of defining wellbeing. International Journal of Wellbeing, 2(3), 222–235. https://doi.org/10.5502/ijw.v2i3.4

Doherty, K., & Doherty, G. (2018). Engagement in HCI. ACM Computing Surveys, 51(5), 1–39.

Eppler, M. J., & Mengis, J. (2003). A framework for information overload research in organizations. Università della Svizzera Italiana. https://sonar.ch/usi/documents/317892

European Centre for Disease Prevention and Control. (2020). Data on the geographic distribution of COVID-19 cases worldwide. (accessed 22 May 2023). https://www.ecdc.europa.eu/en/publications-data/download-todays-data-geographic-distribution-covid-19-cases-worldwide

Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41, 1149–1160.

Fried, E. I., Papanikolaou, F., & Epskamp, S. (2022). Mental health and social contact during the COVID-19 pandemic: An ecological momentary assessment study. Clinical Psychological Science, 10(2), 340–354.

Galderisi, S., Heinz, A., Kastrup, M., Beezhold, J., & Sartorius, N. (2015). Toward a new definition of mental health. World Psychiatry, 14(2), 231.

Gan, D. Z., McGillivray, L., Larsen, M. E., Christensen, H., & Torok, M. (2022). Technology-supported strategies for promoting user engagement with digital mental health interventions: A systematic review. Digital Health, 8, 20552076221098268.

Gander, F., Proyer, R. T., & Ruch, W. (2016). Positive psychology interventions addressing pleasure, engagement, meaning, positive relationships, and accomplishment increase well-being and ameliorate depressive symptoms: A randomized, placebo-controlled online study. Frontiers in Psychology, 7, 686.

Garfin, D. R. (2020). Technology as a coping tool during the COVID-19 pandemic: Implications and recommendations. Stress and Health, 36(4), 555.

Gewin, V. (2020). Five tips for moving teaching online as COVID-19 takes hold. Nature, 580(7802), 295–296.

Government of the Netherlands. (2020). New Regional Measures to Control the Spread of Coronavirus (accessed 22 May 2023). https://www.government.nl/latest/news/2020/09/18/new-regional-measures-to-control-the-spread-of-coronavirus

Gray, N. S., O’Connor, C., Knowles, J., Pink, J., Simkiss, N. J., Williams, S. D., & Snowden, R. J. (2020). The influence of the COVID-19 pandemic on mental well-being and psychological distress: Impact upon a single country. Frontiers in Psychiatry, 11, 594115. https://doi.org/10.3389/fpsyt.2020.594115

Hajdúk, M., Dančík, D., Januška, J., Svetský, V., Straková, A., Turček, M., & Pečeňák, J. (2020). Psychotic experiences in student population during the COVID-19 pandemic. Schizophrenia Research, 222, 520.

Hariton, E., & Locascio, J. J. (2018). Randomised controlled trials—the gold standard for effectiveness research. BJOG: An International Journal of Obstetrics and Gynaecology, 125(13), 1716.

Harrer, M., Adam, S. H., Baumeister, H., Cuijpers, P., Karyotaki, E., Auerbach, R. P., ... & Ebert, D. D. (2019). Internet interventions for mental health in university students: A systematic review and meta-analysis. International Journal of Methods in Psychiatric Research, 28(2), e1759.

Hernández-Torrano, D., Ibrayeva, L., Sparks, J., Lim, N., Clementi, A., Almukhambetova, A., & Muratkyzy, A. (2020). Mental health and well-being of university students: A bibliometric mapping of the literature. Frontiers in Psychology, 11, 1226.

Keyes, C. L. (2006). Subjective well-being in mental health and human development research worldwide: An introduction. Social Indicators Research, 77(1), 1–10.

King, M. F., Renó, V. F., & Novo, E. M. (2014). The concept, dimensions and methods of assessment of human well-being within a socioecological context: A literature review. Social Indicators Research, 116(3), 681–698.

Krifa, I., Hallez, Q., van Zyl, L. E., Braham, A., Sahli, J., Ben Nasr, S., & Shankland, R. (2022). Effectiveness of an online positive psychology intervention among Tunisian healthcare students on mental health and study engagement during the Covid-19 pandemic. Applied Psychology: Health and Well-Being, 14(4), 1228–1254.

Kurelović, E. K., Tomljanović, J., & Davidović, V. (2016). Information overload, information literacy and use of technology by students. International Journal of Educational and Pedagogical Sciences, 10(3), 917–921.

Lambert, L., Passmore, H. A., & Joshanloo, M. (2019). A positive psychology intervention program in a culturally-diverse university: Boosting happiness and reducing fear. Journal of Happiness Studies, 20(4), 1141–1162.

Lanz, J. J. (2020). Evidence-based resilience intervention for nursing students: A randomized controlled pilot trial. International Journal of Applied Positive Psychology, 5(3), 217–230.

LaRose, R., Connolly, R., Lee, H., Li, K., & Hales, K. D. (2014). Connection overload? A cross cultural study of the consequences of social media connection. Information Systems Management, 31(1), 59–73.

Lattie, E. G., Adkins, E. C., Winquist, N., Stiles-Shields, C., Wafford, Q. E., & Graham, A. K. (2019). Digital mental health interventions for depression, anxiety, and enhancement of psychological well-being among college students: Systematic review. Journal of Medical Internet Research, 21(7), e12869.

Lee, A. R., Son, S. M., & Kim, K. K. (2016). Information and communication technology overload and social networking service fatigue: A stress perspective. Computers in Human Behavior, 55, 51–61.

Liu, C. H., Zhang, E., Wong, G. T. F., & Hyun, S. (2020). Factors associated with depression, anxiety, and PTSD symptomatology during the COVID-19 pandemic: Clinical implications for US young adult mental health. Psychiatry Research, 290, 113172.

Ma, Z., Zhao, J., Li, Y., Chen, D., Wang, T., Zhang, Z., & Liu, X. (2020). Mental health problems and correlates among 746 217 college students during the coronavirus disease 2019 outbreak in China. Epidemiology and Psychiatric Sciences, 29, E181. https://doi.org/10.1017/S2045796020000931

Mahatmya, D., Thurston, M., & Lynch, M. E. (2018). Developing students’ well-being through integrative, experiential learning courses. Journal of Student Affairs Research and Practice, 55(3), 295–307.

Martínez-de-Quel, Ó., Suárez-Iglesias, D., López-Flores, M., & Pérez, C. A. (2021). Physical activity, dietary habits and sleep quality before and during COVID-19 lockdown: A longitudinal study. Appetite, 158, 105019.

McGinty, E. E., Presskreischer, R., Han, H., & Barry, C. L. (2020). Psychological distress and loneliness reported by US adults in 2018 and April 2020. JAMA, 324(1), 93–94.

Merriam Webster Dictionary. (2022). Fatigue. Retrieved August 18, 2023, from https://www.merriamwebster.com/dictionary/fatigue

Muller, K. E., & Barton, C. N. (1989). Approximate power for repeated-measures ANOVA lacking sphericity. Journal of the American Statistical Association, 84(406), 549–555.

Naz, F., & Bögenhold, D. (2018). A contested terrain: Re/conceptualising the well-being of homeworkers. The Economic and Labour Relations Review, 29(3), 328–345.

Neumeier, L. M., Brook, L., Ditchburn, G., & Sckopke, P. (2017). Delivering your daily dose of well-being to the workplace: A randomized controlled trial of an online well-being programme for employees. European Journal of Work and Organizational Psychology, 26(4), 555–573.

Novo, M., Gancedo, Y., Vázquez, M. J., Marcos, V., & Fariña, F. (2020). Relationship between class participation and well-being in university students and the effect of Covid-19. In Proceedings of the 12th Annual International Conference on Education and New Learning Technologies, Valencia, Spain (pp. 6–7).

Ostafichuk, P. M., Tse, M., Power, J., Jaeger, C. P., & Nakane, J. (2021). Remote learning impacts on student wellbeing. In Proceedings of the Canadian Engineering Education Association (CEEA). https://doi.org/10.24908/pceea.vi0.14867

Perski, O., Blandford, A., Garnett, C., Crane, D., West, R., & Michie, S. (2020). A self-report measure of engagement with digital behavior change interventions (DBCIs): Development and psychometric evaluation of the “DBCI Engagement Scale”. Translational Behavioral Medicine, 10(1), 267–277.

Perski, O., Blandford, A., West, R., & Michie, S. (2017). Conceptualising engagement with digital behaviour change interventions: A systematic review using principles from critical interpretive synthesis. Translational Behavioral Medicine, 7(2), 254–267.

Pierce, M., Hope, H., Ford, T., Hatch, S., Hotopf, M., John, A., & Abel, K. M. (2020). Mental health before and during the COVID-19 pandemic: A longitudinal probability sample survey of the UK population. The Lancet Psychiatry, 7(10), 883–892.

Rauschenberg, C., Schick, A., Hirjak, D., Seidler, A., Paetzold, I., Apfelbacher, C., & Reininghaus, U. (2021). Evidence synthesis of digital interventions to mitigate the negative impact of the COVID-19 pandemic on public mental health: Rapid meta-review. Journal of Medical Internet Research, 23(3), e23365.

Ruff, J. (2002). Information overload: Causes, symptoms and solutions (pp. 1–13). Harvard Graduate School of Education’s Learning Innovations Laboratory (LILA).

Rusk, R. D., & Waters, L. E. (2013). Tracing the size, reach, impact, and breadth of positive psychology. The Journal of Positive Psychology, 8(3), 207–221.

Sallis, J. F., Cerin, E., Conway, T. L., Adams, M. A., Frank, L. D., Pratt, M., & Owen, N. (2016). Physical activity in relation to urban environments in 14 cities worldwide: A cross-sectional study. The Lancet, 387(10034), 2207–2217.

Sarangal, R. K., & Nargotra, M. (2022). Digital fatigue among students in current COVID-19 pandemic: A study of higher education. Gurukul Business Review, 18(64), P63–P71. https://doi.org/10.48205/gbr.v18.5

Sharma, M. K., Sunil, S., Anand, N., Amudhan, S., & Ganjekar, S. (2021). Webinar fatigue: Fallout of COVID-19. Journal of the Egyptian Public Health Association, 96(1), 1–2.

Sharpe, E., Karasouli, E., & Meyer, C. (2017). Examining factors of engagement with digital interventions for weight management: Rapid review. JMIR Research Protocols, 6(10), e205.

Singh, P., Bala, H., Dey, B. L., & Filieri, R. (2022). Enforced remote working: The impact of digital platform-induced stress and remote working experience on technology exhaustion and subjective wellbeing. Journal of Business Research, 151, 269–286.

Toselli, S., Bragonzoni, L., Grigoletto, A., Masini, A., Marini, S., Barone, G., ... & Dallolio, L. (2022). Effect of a park-based physical activity intervention on psychological well-being at the time of COVID-19. International Journal of Environmental Research and Public Health, 19(10), 6028.

Verbeke, G., & Molenberghs, G. (2000). Linear mixed models for longitudinal data. Springer.

Vigil, A. T. (2011). An experiment analyzing information overload and its impact on students’ consumer knowledge of high-definition television (Doctoral dissertation, Colorado State University).

Voukelatou, V., Gabrielli, L., Miliou, I., Cresci, S., Sharma, R., Tesconi, M., & Pappalardo, L. (2021). Measuring objective and subjective well-being: Dimensions and data sources. International Journal of Data Science and Analytics, 11(4), 279–309.

Wallace, K. J., Jago, M., Pannell, D. J., & Kim, M. K. (2021). Well-being, values, and planning in environmental management. Journal of Environmental Management, 277, 111447.

Wood, A. M., & Johnson, J. (Eds.). (2016). The Wiley handbook of positive clinical psychology. Wiley.

Yazici, A. B., Gul, M., Yazici, E., & Gul, G. K. (2016). Tennis enhances well-being in university students. Mental Illness, 8(1), 21–25.

Author information

Contributions

Louise Nixon: Conceptualization, Visualization, Project administration, Methodology, Writing - Original Draft, Investigation.

Gill A. Ten Hoor: Supervision, Conceptualization, Project administration, Methodology, Writing - Review & Editing.

Alberto Cassese: Methodology, Analysis, Writing - Review & Editing.

Brian Slattery: Supervision, Conceptualization, Project administration, Methodology, Writing - Review & Editing.

Ethics declarations

The current study procedure was granted ethics approval by the Maastricht University Ethics Review Committee Psychology and Neuroscience (ERCPN; OZL_188_11_02_2018_S22) on 10/02/2021. Informed consent was obtained online by clicking on the appropriate button.

Competing Interests

Louise Nixon conducted the study at Maastricht University and is currently employed by Wrkit to further validate and develop the POWR wellbeing tool for students and employees. Apart from providing access to the software, Wrkit had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results. For all other authors, no competing interests are declared.

Appendices

Appendix 1: Description and Screenshots of StudentPOWR Intervention and Advertisement for Recruitment

1.1 POWR

The ‘Listen’ section of POWR provides users with relaxing meditations and bedtime stories (e.g., a guided meditation story that helps the listener to wind down at the end of the day), while the ‘Breathe’ section has guided breathing exercises. The ‘Daily Pick Me Ups’ are small tasks for users to do and are different each day, for example, “Reach out to an old friend”. The Reflect function involves micro journaling, whereby the user is asked different questions each time such as “A simple thing I value is.” or “Somebody I am grateful to have in my life is.” followed by a variety of emotions to express how this makes the user feel. POWR has a Well-being Blog, with new health-promoting articles, TEDTalks, recipes, and videos each week. There are also monthly well-being challenges, extrinsically motivating users to win a well-being-themed prize.

Appendix 2: Overview of Features of StudentPOWR

| StudentPOWR | Feature | Description |
|---|---|---|
| POWR | Clinical Questionnaires | Clinically validated questionnaires in each of the 6 pillars of well-being: life, mind, sleep, food, active, and work. The user completes the questionnaire and is provided a percentage score and recommended a 7-day behavioural plan based on their score. |
| | Behavioural Plans | There are over 450 easy-to-follow 7-day plans that are based on cognitive behavioural therapy techniques. The user commits to doing the plan recommended to them and then once complete, they take the questionnaire in that pillar of well-being. |
| | Blog | Evidence-based articles provided by experts in various fields of well-being. Contributors include psychotherapists, clinical psychologists, nutritionists, personal trainers, environmentalists, financial well-being experts etc. |
| | Mood, Sleep, and Exercise Tracking | Daily tracking in the areas of mood, sleep, and exercise enables users to self-monitor their behaviour. |
| | Listen | Contains meditations, soundscapes and bedtime stories. |
| | Breathe | Guided breathing techniques with music and visuals to reduce stress levels. |
| | Reflect | Micro-journaling function that asks personal questions and requires the user to reflect on their own life and achievements with the aim to enhance mindfulness and gratitude. |
| | Webinars | Monthly webinars with expert speakers providing advice in various areas of well-being including diet, sleep, mental health, and physical health. |
| | Daily Pick Me Ups | Small uplifting tasks for users to do each day to boost their well-being. |
| MOVE | Deskercises | Video classes of short exercises that can be done at one’s desk with no equipment needed. |
| | High Intensity Interval Training (HIIT) | HIIT video classes |
| | Pilates | Pilates video classes |
| | Yoga | Yoga video classes |
| | Mobility | Mobility video classes |

Appendix 3: G*Power Calculation (Faul et al., 2009) to Determine Sample Size

The effect size f in this power calculation is computed as the square root of the ratio between the variance explained by the effect under study (numerator) and the variance within groups (denominator). G*Power automatically sets this effect size to 0.25, which is a medium effect size for this set-up according to Cohen (see the G*Power 3.1 manual and its reference to Cohen, 1969, page 348). Note that the effect size can also be represented as eta-squared, which is defined as the ratio between the variance explained by the effect under study (numerator) and the sum of the variance explained by the effect under study and the variance within groups (denominator). The relationship between eta-squared and f is: $\eta^2 = f^2 / (1 + f^2)$.

Our choice of f = 0.25 implies an effect where the variance explained by the interaction is 16 times smaller than the variance within groups (error).
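The arithmetic behind these statements can be checked with a short sketch. The function names below are illustrative only and are not part of G*Power; the code simply applies the definition of f and its stated relationship to eta-squared.

```python
def eta_squared_from_f(f: float) -> float:
    """Convert Cohen's f to eta-squared via eta^2 = f^2 / (1 + f^2)."""
    f_sq = f ** 2
    return f_sq / (1.0 + f_sq)


def within_to_effect_variance_ratio(f: float) -> float:
    """Return how many times larger the within-group variance is than
    the variance explained by the effect, i.e. 1 / f^2."""
    return 1.0 / (f ** 2)


f = 0.25  # effect size used in the power calculation
eta_sq = eta_squared_from_f(f)
ratio = within_to_effect_variance_ratio(f)
print(f"f = {f}, eta-squared = {eta_sq:.4f}, variance ratio = {ratio:.0f}")
# prints: f = 0.25, eta-squared = 0.0588, variance ratio = 16
```

With f = 0.25, f squared is 0.0625, so the within-group variance is 16 times the explained variance and eta-squared is roughly 0.059.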

Note that a random intercept model with compound symmetry is equivalent to a repeated measures ANOVA with the assumption of sphericity (see Muller and Barton, 1989, and Verbeke and Molenberghs, 2000). In the analysis, our model choice was a random intercept model with an assumption of scaled identity (homogeneous variances and zero covariances), which is a special case of compound symmetry (homogeneous variances and homogeneous covariances). Therefore, we argue it is appropriate in our case to use repeated measures ANOVA for power computations.
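The nesting of the two covariance structures can be written out explicitly. The following is an illustrative sketch only: the symbols $\sigma^2$ (common variance) and $\rho$ (common correlation), and the choice of three measurement occasions, are notation assumed here for illustration and are not taken from the analysis itself.

```latex
% Compound symmetry (CS): equal variances, equal covariances
\Sigma_{\mathrm{CS}} = \sigma^2
\begin{pmatrix}
1 & \rho & \rho \\
\rho & 1 & \rho \\
\rho & \rho & 1
\end{pmatrix},
\qquad
% Setting \rho = 0 gives the identity structure with a common variance
\Sigma_{\mathrm{CS}}\big|_{\rho = 0} = \sigma^2 I_3 .

% Under CS, every pairwise difference has the same variance (sphericity):
\operatorname{Var}(Y_j - Y_k) = 2\sigma^2 (1 - \rho) \quad \text{for all } j \neq k .
```

Because the variance of every pairwise difference is constant under compound symmetry, the sphericity condition holds, and it continues to hold in the special case $\rho = 0$.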

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nixon, L., Slattery, B., Cassese, A. et al. Effects of the Digital Intervention StudentPOWR on the Subjective Wellbeing of Students Studying from Home: a Randomized Wait-List Control Trial. Int J Appl Posit Psychol 9, 165–188 (2024). https://doi.org/10.1007/s41042-023-00114-5

DOI: https://doi.org/10.1007/s41042-023-00114-5