Abstract

Trajectory similarity computation is a fundamental function in many applications of urban data analysis, such as trajectory clustering, trajectory compression, and route planning. In this paper, we study trajectory similarity computation on the road network. However, existing methods have been designed primarily for road network trajectories with spatial information, while ignoring the important temporal information in the real world. To solve this problem, we propose a Feature Enhanced Spatial–Temporal trajectory similarity computation framework FEST, which is a graph neural network (GNN) and sequence model pipeline. We first use the GNN model to capture global information on the road network. In particular, we enhance the process with multi-graph to learn multiple signals from the road network on different aspects. In addition to the original road network topology signal, we also take into account the content signal to learn spatial–temporal features from trajectory traffic, as well as the adaptive similarity signal of the road network to learn hidden features. From these three signals, we construct a multi-graph and use GCN to learn road intersection embedding jointly. Next, we propose a feature-enhanced Transformer with spatial–temporal information to learn correlation within the trajectory, and we further use mean-pooling to get the final trajectory embedding. We compare FEST with six trajectory similarity computation methods on two real-world datasets. The results show that FEST consistently outperforms all baselines and can improve the accuracy of the best-performing baseline.


1 Introduction

With the ubiquity of GPS-enabled devices, massive trajectory data is being collected at an unprecedented rate. A trajectory portrays the spatial–temporal movement of an object over a period of time as a sequence of locations and timestamps. Trajectory similarity computation aims to compute a similarity score for two given trajectories, and it is an essential function in spatial–temporal data mining and urban data exploration, supporting tasks such as trajectory clustering [1], anomalous trajectory detection [2], and route planning [3].

To measure the similarity between two trajectories, many classic similarity measures have been proposed, such as DTW [4], EDR [5], and ERP [6]. However, these measures are designed for Euclidean space and overlook the fact that real-world trajectories are often generated on road networks, which makes them difficult to apply to road network trajectories. To solve this problem, road network-based heuristic algorithms have been proposed, such as TP [7], DITA [8], and LCRS [9]. These measures take road network attributes into account and design road network-aware distances to compute trajectory similarity on the road network. However, these heuristic algorithms rely on dynamic programming to compute the optimal alignment, requiring quadratic computational complexity \(\mathcal {O} (n^2)\), where n is the average trajectory length. This restricts their application to large-scale trajectory analysis and makes them the bottleneck for computing trajectory similarities.
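To make the quadratic cost concrete, the following is a minimal sketch of classic DTW over 2-D points with Euclidean point distance. The function name `dtw_distance` and the planar coordinates are illustrative assumptions, not code from any of the cited methods. The nested loops fill an \(n \times m\) table, which is where the \(\mathcal {O} (n^2)\) cost comes from.

```python
import math


def dtw_distance(a, b):
    """Classic dynamic time warping between two point sequences.

    a, b: lists of (x, y) tuples. Returns the minimal cumulative
    Euclidean distance over all monotone alignments of a and b.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] holds the DTW distance between a[:i] and b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):          # O(n) rows
        for j in range(1, m + 1):      # O(m) columns per row
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],        # a[i-1] matched again
                cost[i][j - 1],        # b[j-1] matched again
                cost[i - 1][j - 1],    # both sequences advance
            )
    return cost[n][m]
```

Every pairwise comparison repeats this table fill, so ranking one query against a large dataset scales with the dataset size times the squared trajectory length.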

To solve the above issue, many studies [10,11,12,13,14] leverage learning methods to approximate heuristic algorithms: they first learn low-dimensional trajectory vectors with a deep learning model, and then use an embedding-based similarity computation, e.g., dot-product, to compute trajectory similarity scores in the embedding space. Embedding-based computation usually requires only linear complexity \(\mathcal {O} (n)\), which greatly reduces the time for trajectory similarity computation on large-scale datasets. For example, T2vec [15] is the first deep learning approach to learn trajectory representations that support accurate and efficient trajectory similarity computation, using an encoder-decoder model based on RNN [16]. NeuTraj [10] further incorporates a spatial attention memory unit into the RNN to model the correlation between spatially close trajectories. Traj2SimVec [12] uses sub-trajectories to assist the learning of trajectories and optimizes the model with a triplet loss function. T3S [17] builds the model using RNN and attention neural networks and tunes its parameters to approximate any trajectory similarity measure. However, these models are designed for Euclidean space, ignoring that most real-world trajectory applications are based on the road network. GTS [13] is the first deep learning model designed for road network-based trajectories. GTS uses GCN [18] to capture road network information, then applies LSTM [19] to learn trajectory embeddings. GRLSTM [20] considers the discontinuity of trajectory sampling points on the road network and uses a knowledge graph [21] to enhance the road network graph.

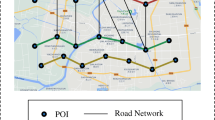

In the real world, trajectory sampling points include both coordinates and timestamps, but the above models only consider spatial information and ignore temporal information. Since spatially similar trajectories are not necessarily similar in time, trajectory similarity computation needs to consider not only the spatial distance but also the temporal relationship. Figure 1 shows a simple example. The purple trajectory is closer to the red trajectory in terms of spatial distance, but once time information is considered, the brown trajectory is the better match. To measure trajectory similarity with spatial–temporal information, the extended version of GTS (\(\text {GTS}^+\)) constructs a fusion graph as the element-wise product of the spatial and temporal adjacency matrices. It also designs an ST-LSTM that introduces temporal information through a gate mechanism. However, building the fusion graph by element-wise product destroys the integrity of both the spatial graph and the temporal graph. Moreover, ST-LSTM ignores temporal periodicity and lacks feature interaction with the trajectory points.

To solve the above issues, we propose FEST, a novel feature-enhanced spatial–temporal trajectory similarity computation framework on the road network. In particular, we consider and enhance spatial–temporal features from two aspects, i.e., the graph signal and the sequence signal. For the graph signal, we design a multi-graph learning module to learn vertex embeddings of the road network according to multiple properties, such as traffic flow and time periodicity. In addition to the basic road network topology information, we construct a spatial–temporal traffic similarity graph to capture the content correlation between vertices, such as busy crossroads in different regions that have similarly high traffic flow. We also introduce an adaptive graph to learn the hidden relations between vertices and dynamically adjust vertex embeddings. For the sequence signal, we enhance the computation of the attention coefficients in the Transformer [22] with features of the vertices in the trajectory, so that the Transformer can more effectively capture the relationship between vertex embeddings and vertex geographic features, which in turn shapes the final trajectory embedding.

In short, the main contributions of this paper are summarized as follows:

- We propose FEST, a novel feature-enhanced spatial–temporal trajectory similarity computation framework on the road network.

- We design two enhanced modules for the trajectory to learn spatial–temporal information. One is a multi-graph learning module to enhance road network signals by constructing the traffic similarity graph and adaptive similarity graph. The other is a feature-enhanced transformer, which incorporates temporal and road intersection spatial features in the attention mechanism.

- We conduct extensive experiments on two real-world datasets. The experiments show our model outperforms other methods.

2 Related Work

2.1 Euclidean Trajectory Similarity

Heuristic Method Trajectory similarity computation in Euclidean free space is a widely researched field, in which spatial straight-line distances are used to assess the similarity between trajectories. Heuristic methods such as dynamic time warping (DTW) [4], longest common subsequence (LCSS) [23], edit distance with real penalty (ERP) [6], edit distance on real sequences (EDR) [5], edit distance with projections (EDwP) [24], One-Way Distance (OWD) [25], and Hausdorff [26] are commonly utilized in trajectory analysis to measure the similarity between spatial sequences. However, these methods incur high computational costs, as they usually rely on point-by-point matching, which leads to quadratic computational complexity [10, 15]. Therefore, there is a growing interest in exploring more efficient learning methods, which can potentially reduce computational complexity by exploiting the structural relationships within the data.

Learning Method Among learning-based methods in Euclidean space, NeuTraj [10] introduces the first learning-based trajectory similarity measure. This method maps trajectories to grid trajectories and selects some as seeds, which guide the pairwise similarity and dissimilarity calculations. Then, it utilizes LSTM to generate embedding vectors, approximating non-learning similarity computations. Although NeuTraj lowers time complexity significantly, it requires pre-training for similarity computation among all seed trajectories. To enhance training efficiency, Traj2SimVec [12] simplifies training trajectories into triplet samples, reducing training time complexity to \(\mathcal {O} (\log n)\), where n is the average length of training trajectories. T3S [17] and TMN [27] improve on Traj2SimVec by using attention networks to consider the dissimilarity and similarity between different trajectories. TrajGAT [11] focuses on long trajectories: it uses a Point-Region (PR) quadtree to construct a graph, then designs a graph attention-based Transformer to generate embedding vectors from the graph and calculate the similarity.

2.2 Road Network Based Trajectory Similarity

Heuristic Method Trajectory similarity computation methods based on the road network have also been widely studied. Unlike Euclidean space, the road network imposes more constraints and involves more complex terrain scenarios, so methods often need to consider the road layout, direction, traffic rules, and other factors. Recent methods map every coordinate point of each trajectory onto the road network to identify the corresponding nodes and then employ a similarity function to calculate the similarity between trajectories, such as NetDTW [28], NetLCSS [14], NetEDR [29], TP [7], LORS [30], LCRS [9], and DITA [8]. However, these methods often need to be optimized to reduce computation time [31,32,33,34], which also makes them less robust: they are easily disturbed by noisy points in the data, which in turn degrades the final result.

Learning Method For complex road networks, non-learning methods suffer from high computational complexity and a lack of stability. To overcome this problem, learning-based approaches have been introduced to efficiently reconstruct high-dimensional input data into low-dimensional representations [13, 35,36,37]. GTS [13] first adopts a trajectory-aware random walk scheme to capture the representation of each road intersection within the spatial network. Subsequently, it leverages a GNN-based model integrated with LSTM to acquire the trajectory representation used for similarity computation. \(\text {GTS}^+\) [38] extends the GTS framework to incorporate temporal information into the similarity computation. It designs a joint training approach, embedding the spatial graph and the temporal graph in one GNN model, and fusing the spatial and temporal features with a special ST-LSTM model. Focusing on the multiple relations between points on the road network, GRLSTM [20] constructs a point knowledge graph and then applies a Knowledge Graph Embedding (KGE) method to build a fusion graph. Subsequently, it captures the topological structure of the fusion graph using GAT, and employs a novel Residual-LSTM to further capture the trajectory representation.

3 Preliminary

We focus on solving the trajectory similarity computation on road network. In this section, we present our preliminary definitions and the problem statement.

3.1 Preliminary Definitions

Definition 1 (Road Network) The road network is denoted as a directed graph \(\mathcal {G} = \left\{ \mathcal {V}, \mathcal {E}, \mathcal {A} \right\}\), where \(\mathcal {V} = \left\{ v_1, v_2,..., v_{\vert \mathcal {V} \vert } \right\}\) and \(\mathcal {E} = \left\{ e_1, e_2,..., e_{\vert \mathcal {E} \vert } \right\}\). Each vertex \(v_i \in \mathcal {V}\) represents a road intersection or a road end with attributes latitude and longitude, while each edge \(e_{i,j} \in \mathcal {E}\) denotes a road segment connecting \(v_i\) and \(v_j\). Matrix \(\mathcal {A}\) is the adjacency matrix of the road network graph, where \(\mathcal {A}[i,j] = 1\) if \(e_{i,j}\) exists, otherwise, \(\mathcal {A}[i,j] = 0\).

Definition 2 (GPS Trajectory) A GPS trajectory, denoted as \(\mathcal {T}_{gps}\), is a sequence of coordinate points collected by GPS-enabled devices at a fixed sampling time interval. Each sample point is represented as \(p_i = (x_i, y_i, t_i)\), where \(x_i\), \(y_i\), and \(t_i\) denote longitude, latitude, and timestamp, respectively.

Definition 3 (Road Network Trajectory) A road network trajectory \(\mathcal {T}\) is a time-ordered sequence which is generated from \(\mathcal {T}_{gps}\) by coordinate alignment, i.e., \(\mathcal {T}=\left\langle \tau _1, \tau _2,..., \tau _{\vert \mathcal {T}\vert } \right\rangle\), which contains the \(\vert \mathcal {T} \vert\) vertices of \(\mathcal {G}\) passed by \(\mathcal {T}_{gps}\). Each element \(\tau _i = (v_i, t_i) \in \mathcal {T}\) denotes that the user passes vertex \(v_i\), i.e., road intersection, at timestamp \(t_i\).

3.2 Spatial–Temporal Similarity

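Given two trajectories \(\mathcal {T}_1\) and \(\mathcal {T}_2\), we define the trajectory spatial–temporal similarity function \(f(\cdot , \cdot )\) as follows:

\[ f(\mathcal {T}_1, \mathcal {T}_2) = \lambda \cdot f_s(\mathcal {T}_1, \mathcal {T}_2) + (1 - \lambda ) \cdot f_t(\mathcal {T}_1, \mathcal {T}_2) \qquad (1) \]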

where \(f_s(\cdot , \cdot )\) and \(f_t(\cdot , \cdot )\) compute the similarity on spatial and temporal respectively, and \(\lambda \in [0, 1]\) controls the relative weight of spatial and temporal, providing flexibility that can be used to support different applications. In this paper, we implement Eq. 1 followed by \(\text {GTS}^+\) [38], which refines Eq. 1 as follows:

where \(d_s (\cdot , \cdot )\) is the spatial distance of two vertices, and \(d_t (\cdot , \cdot )\) is the time distance of two vertices.
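Reading Eq. 1 as a convex combination weighted by \(\lambda\) (an interpretation of the description above), the combination step can be sketched as follows. The function name `st_similarity` is hypothetical, and the component similarities are passed in as callables because the concrete \(f_s\) and \(f_t\) of \(\text {GTS}^+\) are not reproduced here.

```python
def st_similarity(f_s, f_t, traj_a, traj_b, lam=0.5):
    """Weighted spatial-temporal similarity (assumed convex combination).

    f_s, f_t: callables returning the spatial and temporal similarity.
    lam: weight of the spatial part; 1 - lam goes to the temporal part.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return lam * f_s(traj_a, traj_b) + (1.0 - lam) * f_t(traj_a, traj_b)
```

Setting `lam=1.0` recovers a purely spatial measure and `lam=0.0` a purely temporal one, which is the flexibility the weight is meant to provide.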

3.3 Problem Statement

Given a road network trajectory set \(\mathcal {D} = \left\{ \mathcal {T}_1, \mathcal {T}_2,..., \mathcal {T}_{\vert \mathcal {D}\vert } \right\}\) on the road network graph \(\mathcal {G}\), a spatial–temporal similarity measurement \(f(\cdot , \cdot )\) can compute the similarity of any two trajectories \(\mathcal {T}_i, \mathcal {T}_j \in \mathcal {D}\). However, most heuristic spatial–temporal similarity measurements incur quadratic time complexity, which makes them hard to use on massive real-world datasets. Our task is to design a deep learning model that approximates the spatial–temporal similarity between any pair of trajectories from \(\mathcal {D}\) under a spatial–temporal similarity function \(f(\cdot , \cdot )\).

4 Methodology

In this section, we present the key designs of FEST, which includes two sub-modules: multi-graph learning and a feature-enhanced Transformer. Figure 2 shows the overview of our model. Multi-graph learning aims to enhance the features of vertices at the graph level. In particular, we consider three graph signals of the road network, i.e., the topological signal, the traffic similarity signal, and the adaptive relation signal. The feature-enhanced Transformer aims to enhance the trajectory embedding at the sequence level. We incorporate geographic properties of vertices to explicitly adjust the self-attention coefficients within trajectory sequences and improve the accuracy of the representation. We go through our model in detail in order of execution.

4.1 Multi-graph Learning on Road Network

In general, we can use a learnable matrix \(\textbf{E} \in \mathbb {R}^{\vert \mathcal {V} \vert \times d}\), where \(\vert \mathcal {V} \vert\) is the number of vertices and d is the dimension, to transform a trajectory to the embedding sequence, and use a sequence model to learn trajectory embedding. However, this method ignores much information about the road network. On the one hand, the road network has directionality and continuity, which can reflect the flow of trajectories and the decisions of drivers at road intersections. On the other hand, the road network also contains rich geographic information, such as intersection coordinates and the number of forks at intersections, etc. Therefore, to effectively represent trajectory embedding, we need to first consider how to model the characteristics of the road network. In this paper, we explore the three characteristics of the road network, including topological structure, traffic similarity, and adaptive similarity.

Topological Graph The basic information of the road network is its topological structure, which represents the structure of a city. Here, we use the road network graph \(\mathcal {G} = \left\{ \mathcal {V}, \mathcal {E}, \mathcal {A} \right\}\) (see Definition 1) to learn road intersection embeddings from the topological structure. Formally, given the adjacency matrix \(\mathcal {A}\) of the road network graph \(\mathcal {G}\), we use GCN to learn the topological structure as follows:

where \(\textbf{X}\) is the input feature, \(\textbf{A}_{r} = \mathcal {A} + \textbf{I}\), \(\textbf{D}^{ii}_r = \sum _j \textbf{A}_{r}^{ij}\) is the degree matrix of \(\textbf{A}_r\), and \(W_{1}\) is the learnable matrix.
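As an illustration of one GCN layer over the topological graph, the sketch below assumes the standard propagation rule \(\text {ReLU}(\textbf{D}_r^{-1/2} \textbf{A}_r \textbf{D}_r^{-1/2} \textbf{X} W_1)\). The symmetric normalization and the ReLU activation are assumptions inferred from the definitions of \(\textbf{A}_r\) and \(\textbf{D}_r\) above, and the helper names are hypothetical. Plain Python lists stand in for tensors.

```python
import math


def matmul(a, b):
    """Dense matrix product of two lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2}, with D the row-sum degree of A + I."""
    n = len(adj)
    a = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a]  # self-loops guarantee deg[i] >= 1
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj, x, w):
    """One graph convolution: ReLU(A_hat X W)."""
    h = matmul(matmul(normalize_adjacency(adj), x), w)
    return [[max(0.0, v) for v in row] for row in h]
```

For a two-vertex graph with a single edge in each direction, every entry of the normalized matrix is 0.5, so each vertex receives the average of its own feature and its neighbor's feature.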

Traffic Similarity Graph However, the raw road network fails to capture the content correlation between road intersections. For example, a city may have several large shopping malls, and the road intersections around these malls are highly similar, e.g., in the distribution of traffic volumes on the surrounding road segments. This content correlation can reflect the functional similarity of urban areas. Therefore, to capture traffic similarity between road intersections, we first record the traffic volumes of each road intersection in a 24-dimensional vector of time slots, with each slot representing one hour. We stack the time-slot vectors of all road intersections to obtain the traffic volume matrix \(\textbf{M}_t\). Then we use min-max normalization to scale each element of \(\textbf{M}_t\) to the range \([0, 1]\). To compute traffic similarity, we apply the dot-product to each pair of road intersections as follows:

then we construct the traffic similarity graph using top-k similarity as follows:

where \(\mathcal {N}_s(v_i)\) is the top-k similar road intersection set of road intersection \(v_i\) obtained from \(\textbf{S}_t\). We also use GCN to learn road intersection embedding from \(\mathcal {G}_t\) as follows:

where \(\textbf{X}\) is the same input feature as Eq. 3, \(\textbf{A}_{t} = \mathcal {G}_{t} + \textbf{I}\), \(\textbf{D}_{t}^{ii} = \sum _j \textbf{A}_{t}^{ij}\) is the degree matrix of \(\textbf{A}_{t}\), and \(W_{2}\) is the learnable matrix.
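The construction of the traffic similarity graph described above (normalization, dot-product similarity, top-k selection) can be sketched as follows. Two details are assumptions of this sketch: the min-max normalization uses the global minimum and maximum of the matrix, and a vertex is excluded from its own top-k set. The function names are hypothetical.

```python
def minmax_normalize(m):
    """Scale every element of the matrix to [0, 1] using the global min and max."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant matrix
    return [[(v - lo) / span for v in row] for row in m]


def traffic_similarity_graph(volumes, k):
    """Build a 0/1 adjacency matrix linking each vertex to its k most similar vertices.

    volumes: one row of hourly traffic counts per road intersection.
    """
    m = minmax_normalize(volumes)
    n = len(m)
    # Dot-product similarity between every pair of rows.
    sim = [[sum(a * b for a, b in zip(m[i], m[j])) for j in range(n)] for i in range(n)]
    graph = [[0] * n for _ in range(n)]
    for i in range(n):
        others = [j for j in range(n) if j != i]
        others.sort(key=lambda j: sim[i][j], reverse=True)
        for j in others[:k]:
            graph[i][j] = 1
    return graph
```

Because top-k selection is done per row, the resulting graph is generally not symmetric: vertex i may select j without j selecting i.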

Adaptive Relation Graph Some correlations are hard to model explicitly. To tackle this problem, we construct a self-adaptive adjacency matrix \(\textbf{A}_{adp}\) by multiplying two randomly initialized node embedding matrices with learnable parameters, \(E_1\) and \(E_2\), as follows:

where \(\text {Softmax}(\cdot )\) is used to normalize the self-adaptive adjacency matrix. Then we use another GCN layer to learn road intersection embeddings as follows:

where \(\textbf{X}\) is the same input feature as Eq. 3, and \(W_{3}\) is the learnable matrix.
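A minimal sketch of the self-adaptive adjacency matrix follows, assuming the form \(\text {Softmax}(\text {ReLU}(E_1 E_2^{\top }))\) with a row-wise softmax. The ReLU and the row-wise direction are assumptions of this sketch; the text above only states that two node embeddings are multiplied and normalized with Softmax. The function name is hypothetical.

```python
import math


def adaptive_adjacency(e1, e2):
    """Row-wise softmax over ReLU(E1 E2^T).

    e1, e2: lists of node embedding vectors, one per vertex.
    Returns a dense matrix whose rows each sum to 1.
    """
    adj = []
    for src in e1:
        scores = [max(0.0, sum(a * b for a, b in zip(src, dst))) for dst in e2]
        peak = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        adj.append([v / total for v in exps])
    return adj
```

In a trained model the two embedding matrices would be learnable parameters updated by backpropagation, so the adjacency matrix changes during training; here they are plain lists to keep the example self-contained.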

Now, we have learned three types of road intersection embeddings from different GCN layers. Next, we use an additional linear layer to fuse them into a new embedding space as follows:

where \(W_4\) is a learnable parameter matrix, and \(\textbf{H} \in \mathbb {R}^{\vert \mathcal {V} \vert \times d}\). To improve the expressiveness of the GCN and enable it to capture long-distance dependencies, we further stack multiple GCN layers on each of the three graphs, i.e., the topological graph, the traffic similarity graph, and the adaptive relation graph. For brevity, we omit the corresponding formulas.

4.2 Feature Enhanced Transformer

Previous studies [20, 38, 39] use LSTM to learn trajectory embeddings, which suffers from long training times. In our model, we use the Transformer to learn trajectory embeddings, as it can be trained in parallel and captures the global information of the sequence with a more powerful feature extraction capability. To improve the performance of the Transformer on spatial–temporal trajectory similarity computation, we enhance the attention coefficients with temporal encoding and road intersection feature encoding.

Road Intersection Encoding Each road intersection has a unique ID, which can be used to transform to embedding by looking up a learnable matrix \(E_p\). However, this method ignores the spatial information of road intersections. To solve this issue, we take \(\textbf{H}\) as an embedding matrix. Formally, given a road intersection \(v_i\), we look up the \(\textbf{H}\) to get road intersection embedding \(\textbf{p}_i\). Therefore, given a trajectory \(\mathcal {T}=\left\langle v_1, v_2,..., v_{\vert \mathcal {T}\vert } \right\rangle\), we can get road intersection ID feature sequence \(\textbf{P} = \left\langle \textbf{p}_1, \textbf{p}_2,..., \textbf{p}_{\vert \mathcal {T}\vert } \right\rangle\).

Time Encoding In Sect. 4.1, we use time slots to record traffic volume, which encodes time information in the form of a graph. However, this method encodes time information only implicitly and does not express temporal characteristics sufficiently. To learn the periodicity of time, we leverage time2vec [15] to acquire a date vector \(\textbf{t}\) with a size of d/2, formulated as follows:

Here, \(\textbf{t}[i]\) denotes the i-th element of vector \(\textbf{t}\), \(\omega _0, \omega _1,..., \omega _{\frac{d}{2} - 1}\) and \(\psi _0, \psi _1,..., \psi _{\frac{d}{2} - 1}\) are learnable parameters, and \(\text {sin}(\cdot )\) serves as a periodic activation function that helps capture periodic behaviors without the need for feature engineering. Therefore, the input of a road intersection \(v_i\) is as follows:

where the \(\textbf{pe}_i\) is position encoding.
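The time encoding can be illustrated with the original Time2Vec formulation, which keeps one linear component and passes the remaining components through a sine. That the paper uses exactly this split is an assumption of the sketch; the function name and the plain-list parameters are hypothetical stand-ins for learnable weights.

```python
import math


def time2vec(tau, omega, psi):
    """Encode a scalar timestamp tau into a vector of len(omega) components.

    Component 0 is linear (omega[0] * tau + psi[0]); all other components
    are sin(omega[i] * tau + psi[i]), which lets the model pick up
    periodic patterns such as daily cycles.
    """
    if not omega or len(omega) != len(psi):
        raise ValueError("omega and psi must be non-empty and of equal length")
    linear = [omega[0] * tau + psi[0]]
    periodic = [math.sin(w * tau + p) for w, p in zip(omega[1:], psi[1:])]
    return linear + periodic
```

With a frequency of \(2\pi / 24\) and time measured in hours, a periodic component returns the same value for timestamps exactly one day apart, which is the kind of regularity the sine activation is meant to capture.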

Next, we apply Transformer [22] to model trajectories. Formally, given a road intersection sequence input embedding \(\textbf{X} = \left\langle \textbf{x}_1, \textbf{x}_2,..., \textbf{x}_{\vert \mathcal {T}\vert } \right\rangle\) for trajectory \(\mathcal {T}\), we first use three linear layers to obtain query matrix \(Q = \textbf{X} W_q\), key matrix \(K = \textbf{X} W_k\), value matrix \(V = \textbf{X} W_v\), then we apply self-attention as follows:

where d is the dimension. We also apply multi-head attention to improve the expressiveness of the Transformer. For brevity, we omit the formula of multi-head attention.
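Assuming the self-attention step is the standard scaled dot-product attention \(\text {Softmax}(QK^{\top } / \sqrt{d})V\), a single-head version can be sketched in plain Python as below. The function names are hypothetical, and the inputs are already-projected query, key, and value matrices.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [v / total for v in exps]


def self_attention(q, k, v):
    """Single-head scaled dot-product attention.

    q, k: lists of d-dimensional vectors; v: list of value vectors.
    Each output row is a weighted average of the rows of v.
    """
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out
```

Every position attends to every other position, so a trajectory of length n costs \(\mathcal {O} (n^2)\) inside the encoder, but this cost is paid once per trajectory rather than once per trajectory pair.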

Feature Enhanced Transformer Since the road intersection ID carries only global information, modeling a trajectory solely by its sequence of road intersection ID embeddings cannot capture the internal information of the trajectory. The properties of a road intersection are not necessarily the same in different trajectories. For example, two trajectories may pass through the same road intersection \(v_i\), but their previous and next road intersections are not necessarily the same, so relying on global information alone cannot effectively provide the dynamic attributes of road intersection \(v_i\).

Inspired by TrajCL [40], we use a quadruple \(\hat{\textbf{p}}_i = (x_i, y_i, l_i, r_i)\) to encode the features for road intersection \(v_i\) within a trajectory, where \(x_i\) and \(y_i\) denote the longitude and latitude respectively, \(l_i\) is the mean length between previous road intersection \(v_{i - 1}\) and next road intersection \(v_{i + 1}\), and \(r_i\) is the radian between trajectory segments \(v_{i - 1} \rightarrow v_{i}\) and \(v_{i} \rightarrow v_{i + 1}\). Therefore, given a trajectory \(\mathcal {T}=\left\langle v_1, v_2,..., v_{\vert \mathcal {T}\vert } \right\rangle\), we can get the road intersection feature sequence \(\hat{\textbf{P}} = \left\langle \hat{\textbf{p}}_1, \hat{\textbf{p}}_2,..., \hat{\textbf{p}}_{\vert \mathcal {T}\vert } \right\rangle\). Next, we use a linear layer to transform the road intersection features into the hidden embedding \(\textbf{P}^\prime = \hat{\textbf{P}} W_5\). Therefore, the feature-enhanced input of road intersection \(v_i\) in trajectory \(\mathcal {T}\) is as follows:

where \(\textbf{p}_i^\prime \in \textbf{P}^\prime\), \(\textbf{t}_i\) is time embedding, and \(\textbf{pe}_i\) is position encoding.
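The per-point quadruple \((x_i, y_i, l_i, r_i)\) described above can be computed with a short helper. The sketch makes several assumptions that the text does not fix: coordinates are treated as planar, \(l_i\) is read as the mean of the two adjacent segment lengths, \(r_i\) is taken as the turning angle between the two segment directions, and endpoints use the single available segment with an angle of 0. The function name is hypothetical.

```python
import math


def point_features(coords):
    """Compute (x, y, mean adjacent segment length, turning angle) per point.

    coords: list of (x, y) tuples along one trajectory.
    """
    n = len(coords)
    feats = []
    for i, (x, y) in enumerate(coords):
        prev_pt = coords[i - 1] if i > 0 else None
        next_pt = coords[i + 1] if i < n - 1 else None
        lengths = [math.dist((x, y), p) for p in (prev_pt, next_pt) if p is not None]
        mean_len = sum(lengths) / len(lengths) if lengths else 0.0
        if prev_pt is None or next_pt is None:
            angle = 0.0  # assumed boundary convention
        else:
            heading_in = math.atan2(y - prev_pt[1], x - prev_pt[0])
            heading_out = math.atan2(next_pt[1] - y, next_pt[0] - x)
            diff = abs(heading_out - heading_in)
            angle = min(diff, 2 * math.pi - diff)  # fold into [0, pi]
        feats.append((x, y, mean_len, angle))
    return feats
```

Two trajectories crossing the same intersection from different directions receive different \(l_i\) and \(r_i\) values there, which is the trajectory-specific signal that the ID embedding alone cannot provide.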

Then we compute the coefficient of the road intersection feature for each trajectory as follows:

where query matrix \(Q^\prime = \textbf{P}^\prime W_q^\prime\) and key matrix \(K^\prime = \textbf{P}^\prime W_k^\prime\). Then we modify Eq. 12 as follows:

We also apply the feed-forward network similar to the vanilla transformer. Then we use mean pooling for the output of the transformer to get the final trajectory embedding.

4.3 Optimization

In practice, our goal is to find the most similar trajectory for a given trajectory rather than to compute the exact trajectory similarity score. Following previous studies [38, 39], we choose a pair-wise loss function to optimize our model, which is widely used in ranking applications. A pair-wise loss function captures relative order relationships in the dataset, which is consistent with our goal. To apply the pair-wise loss, we first need to compute similarity scores of trajectory embeddings. Suppose the trajectory embeddings learned by our model for trajectories \(\mathcal {T}_i\) and \(\mathcal {T}_j\) are \(e_i\) and \(e_j\); we first use the dot-product to compute the similarity score \(\text {Sim}(\mathcal {T}_i,\mathcal {T}_j)\):

Then given three trajectories, including anchor trajectory \(\mathcal {T}\), positive trajectory \(\mathcal {T}_{+}\), and negative trajectory \(\mathcal {T}_{-}\), we define pair-wise loss function in trajectory similarity computation as follows:

where \(\mathcal {D}^{tr}\) is the training dataset and \(\mathbb {1}(\cdot )\) is the indicator function. For a given trajectory in Eq. 17, we would need to compute its similarity to all other trajectories. This would be very time-consuming, as trajectory datasets are usually very large. To reduce the computation time during training, we randomly sample one trajectory for the given trajectory instead of traversing all trajectories.
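To illustrate how a pair-wise objective acts on dot-product scores, the sketch below uses a logistic (BPR-style) ranking loss, \(-\log \sigma (\text {Sim}(\mathcal {T}, \mathcal {T}_{+}) - \text {Sim}(\mathcal {T}, \mathcal {T}_{-}))\). This particular loss is a stand-in chosen for illustration and is not claimed to match Eq. 17, which involves an indicator function. The function names are hypothetical.

```python
import math


def dot(u, v):
    """Dot-product similarity between two embedding vectors."""
    return sum(a * b for a, b in zip(u, v))


def pairwise_loss(anchor, positive, negative):
    """Logistic pair-wise ranking loss on one (anchor, positive, negative) triple.

    The loss is small when the anchor scores the positive trajectory
    higher than the negative one, and grows as the order is violated.
    """
    margin = dot(anchor, positive) - dot(anchor, negative)
    # -log(sigmoid(margin)) written in a numerically stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
```

Only the difference between the two scores matters, so the loss optimizes relative order rather than absolute similarity values, which matches the goal of retrieving the most similar trajectory.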

5 Experiment

In this part, we conduct extensive experiments to evaluate our FEST and compare it with the state-of-the-art baselines. In particular, we introduce the experiment settings in Sect. 5.1, compare FEST with the baselines in Sect. 5.2, check our model designs in Sect. 5.3, and study hyper-parameters in Sect. 5.4.

5.1 Experiment Settings

Dataset Our model is evaluated on two real-world datasets: Beijing and New York. The details of these datasets are presented in Table 1. The Beijing dataset comprises a road network with 28,342 road intersections and 27,690 edges. We utilize the taxi driving data from the T-drive project. Taxi trajectories in the Beijing dataset are sampled every 10 min and are organized by taxi ID, and each trajectory may span several days. After segmenting by hour and discarding trajectories with fewer than 6 points, a total of 5,621,428 trajectories are obtained. The average trajectory length in the Beijing dataset is 25. For the New York dataset, the road network encompasses 95,581 road intersections and 260,855 edges. We gather 697,622,444 trips from the NYC open data, from which a subset is randomly sampled to construct the trajectory dataset. After preprocessing and excluding trajectories with fewer than 6 points, our experiments involve 10,541,288 trajectories with an average length of 38. We randomly partition both trajectory datasets into training, evaluation, and testing sets with a ratio of [0.2, 0.1, 0.7]. For both datasets, we create the ground truth based on the similarity calculated by Eq. 1, which considers both spatial and temporal information.

Baselines We compare our model with six state-of-the-art trajectory similarity computation methods, including four grid-based methods Traj2vec [41], Siamese [42], NeuTraj [43], Traj2SimVec [44] and two road network-based methods GTS+ [38], GRLSTM [20]. The details of these methods are summarized as follows:

- Traj2vec This method learns trajectory embedding by a sequence-to-sequence model, and uses mean square error as a loss function to train the model.

- Siamese Siamese is a time series learning approach based on the Siamese network. They train the model using the cross-entropy loss function. We set the backbone of their Siamese network with LSTM and use a similar setting as [43] to support trajectory similarity computation.

- NeuTraj This method revises the structure of LSTM to learn the embedding of the grid in the process of training their framework. To support our task, we replace the grid with road intersections in their framework.

- Traj2SimVec This method employs a new loss for learning the trajectory similarity by point matching. We apply their model to the road network in a similar way to learn the similarity between trajectories.

- GTS+ GTS [39] is the first deep-learning method for road network-based trajectory similarity. They apply the GNN and LSTM to learn trajectory embedding. Here, we use the extended version of GTS, which supports spatial–temporal similarity computation for road networks.

- GRLSTM This method considers the discontinuity of trajectories, uses the knowledge graph to construct new relations between road intersections, and applies LSTM to learn the trajectory similarity.

For the evaluation of spatial–temporal similarity measurement, we extend the above approaches that only support spatial similarity, i.e., Traj2vec, Siamese, NeuTraj, Traj2SimVec, and GRLSTM, in the same way: we initialize the temporal embedding with one-hot encoding and feed it into an MLP layer. Then we concatenate the output of the MLP layer with the road intersection embedding to form the input of these models.

Implementation We implement FEST using PyTorch 2.0 on Ubuntu 20.04 with an NVIDIA GeForce RTX 3090 GPU. We set the embedding size d to 128 for both our model and the baseline models, the number of multi-graph learning layers to 2, and the number of feature-enhanced Transformer layers to 3. The number of attention heads is 8. The dropout ratio is 0.1. The top-k in the traffic similarity graph is 20 for both datasets. We use Adam [45] to optimize our model. The batch size for both datasets is 128, and the number of training epochs is 200. The learning rate is 5e−4 for the Beijing dataset and 1e−3 for the New York dataset.

Performance Metric Following previous studies [39], we use the hitting ratio in the top-K list (HR@K) as the metric in our experiments to evaluate the performance of different methods. The definition of HR@K is set as follows:

where \(T^{te}\) is the test set of trajectories, \(\vert \cdot \vert\) is the set cardinality, \(L_\tau ^T@K\) is the list of the K trajectories predicted to be most similar to a given trajectory \(\tau\), and \(L_\tau ^{R}\) is the set containing the most similar trajectory in the training set for the given trajectory \(\tau\), where \(L_\tau ^{R} = \{\tau '\}\).
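Since \(L_\tau ^{R}\) contains a single trajectory, HR@K reduces to the fraction of queries whose ground-truth most similar trajectory appears among the top K predictions. A minimal sketch under that reading is given below; the function name and the dictionary-based inputs are hypothetical.

```python
def hit_ratio_at_k(pred_scores, ground_truth, k):
    """Fraction of queries whose true most similar trajectory is in the top k.

    pred_scores: dict mapping query id -> dict of candidate id -> predicted score.
    ground_truth: dict mapping query id -> id of the true most similar trajectory.
    """
    hits = 0
    for query, scores in pred_scores.items():
        # Rank candidates by predicted similarity, highest first.
        top_k = sorted(scores, key=scores.get, reverse=True)[:k]
        if ground_truth[query] in top_k:
            hits += 1
    return hits / len(pred_scores)
```

A larger K can only keep or increase the hit ratio, so HR@K values are typically reported for several cut-offs.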

5.2 Main Results

Tables 2 and 3 show the main results. Our model FEST achieves the best performance on all metrics on both real-world datasets. From the results, we make the following observations:

Firstly, compared with \(\text {GTS}^+\) and GRLSTM, the four grid-based methods, i.e., Traj2vec, Siamese, NeuTraj, and Traj2SimVec, perform poorly on average across the two datasets. On the one hand, grid-based methods fail to consider road network information, e.g., neighbor relationships, which is very important in road network trajectory representation. On the other hand, our goal is to find the most similar trajectory in the dataset rather than to estimate a similarity value, yet the loss functions of these four methods do not optimize the rank relationship between trajectories. For example, Traj2vec uses mean squared error as the loss function, which is more suitable for trajectory representation.
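The gap between regressing similarity values and preserving their order can be seen in a small worked example. The numbers below are invented for illustration and are not results from the experiments; `mse` and `ranks_correctly` are hypothetical helper names.

```python
def mse(pred, target):
    """Mean squared error between predicted and true distances."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def ranks_correctly(pred):
    """True if the true nearest candidate (index 0) is still predicted as nearest."""
    return pred[0] < pred[1]


# True distances from a query to two candidates; candidate 0 is the true nearest.
target = [0.20, 0.30]

model_a = [0.26, 0.24]   # small numeric error, but the order is flipped
model_b = [0.10, 0.50]   # larger numeric error, order preserved

for name, pred in [("A", model_a), ("B", model_b)]:
    print(name, round(mse(pred, target), 4), ranks_correctly(pred))
```

Model A has the lower mean squared error yet retrieves the wrong trajectory, whereas model B has the higher error and retrieves the right one. A retrieval metric such as HR@K rewards only the second behavior.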

Secondly, the road network-based models, i.e., \(\text {GTS}^+\) and GRLSTM, achieve the best average performance among the baselines. These two models utilize a graph neural network to learn the topology information of the road network for road intersection embedding. Therefore, the final trajectory embedding not only includes the internal information of the trajectory but also captures external information of the road network. Moreover, \(\text {GTS}^+\) performs better than GRLSTM: GRLSTM considers the discontinuity of road intersection trajectories on the road network but ignores the temporal information, leading to poor performance on spatial–temporal similarity.

Thirdly, our model achieves the best results on both datasets. We attribute the performance improvement to two aspects. First, our model uses a multi-graph learning module to enhance the road network signal. In addition to the original road network structure, the enhanced graph signals capture the content similarity between road intersections, i.e., traffic similarity and adaptive similarity, which encode spatial and implicit temporal information. These two similarity graphs also help our model capture relationships between road intersections over long distances, not just between neighbors. Second, we replace the RNN model with a more powerful sequence model, the Transformer, to learn trajectory embedding. To improve performance on spatial–temporal similarity, we explicitly encode the periodicity of time and encode road intersection features to enhance the attention mechanism, which yields a more effective trajectory embedding.

5.3 Ablation Study

To further investigate the effectiveness of each sub-module in FEST, we conduct the following ablation experiments on both datasets. Tables 4 and 5 show the results. The settings of these methods are summarized as follows:

- w/o feature We replace the feature-enhanced Transformer with a vanilla Transformer.

- w/o adaptive We remove the adaptive graph and keep the other two graphs.

- w/o traffic We remove the traffic similarity graph and keep the other two graphs.

- w/o time We remove the time encoding in Transformer.

From Tables 4 and 5, we have the following analysis:

Firstly, the traffic similarity graph improves the performance of our model significantly. On the one hand, the traffic similarity graph is constructed from the time-slot vector, which explicitly enhances the spatial information and implicitly encodes the temporal information. On the other hand, compared with conventional taxi trajectories, road intersection or trip trajectories are relatively sparse, so adjacent road intersections may be very far apart. The road network cannot capture long-distance relationships, while the traffic similarity graph can use road intersection similarities to construct long-distance relationships.
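To make the idea concrete, the sketch below builds a top-k similarity graph from per-intersection time-slot vectors. It is a simplified illustration under stated assumptions: cosine similarity is assumed as the similarity measure, the adjacency is left unweighted and directed, and the function name `traffic_similarity_graph` and the toy counts are hypothetical.

```python
import numpy as np


def traffic_similarity_graph(time_slot_counts: np.ndarray, top_k: int) -> np.ndarray:
    """Return a 0/1 adjacency matrix linking each node to its top_k most similar nodes.

    time_slot_counts has shape (num_nodes, num_slots); row i counts how often
    trajectories pass intersection i in each time slot.
    """
    norms = np.linalg.norm(time_slot_counts, axis=1, keepdims=True)
    unit = time_slot_counts / np.maximum(norms, 1e-12)   # avoid division by zero
    sim = unit @ unit.T                                  # cosine similarity (assumed measure)
    np.fill_diagonal(sim, -np.inf)                       # exclude self-loops
    neighbours = np.argsort(-sim, axis=1)[:, :top_k]     # indices of the top_k neighbours
    adj = np.zeros(sim.shape, dtype=np.int64)
    rows = np.repeat(np.arange(sim.shape[0]), top_k)
    adj[rows, neighbours.ravel()] = 1
    return adj


# Toy example: 4 intersections, 3 time slots (morning, midday, evening).
counts = np.array([
    [9.0, 1.0, 0.0],   # morning-heavy
    [8.0, 2.0, 1.0],   # morning-heavy, possibly far away on the road network
    [0.0, 1.0, 9.0],   # evening-heavy
    [1.0, 0.0, 7.0],   # evening-heavy
])
adj = traffic_similarity_graph(counts, top_k=1)
print(adj)
```

Intersections 0 and 1 are linked because their traffic profiles match, regardless of whether they are neighbors on the road network, which is how such a graph can introduce long-distance relationships.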

Secondly, the adaptive graph and road intersection features improve the performance of our model at two levels, i.e., the graph level and the sequence level. The adaptive graph can dynamically adjust the road intersection relationships on the road network. Road intersection features can incorporate the different properties of a road intersection in different trajectories, which enhances the generalization of road intersection embeddings and contributes to the effectiveness of trajectory embedding.

Thirdly, the lack of time encoding can lead to a significant decrease in performance, as the model cannot effectively capture time information without explicit time encoding, and therefore the learned trajectory representations cannot align with the spatial–temporal similarity computation task.

5.4 Hyper-parameter Study

We also conduct a hyper-parameter analysis on the number of Transformer layers and the embedding dimension to provide more insights into some components of our framework. We only report HR@10 on the Beijing dataset; the New York dataset shows the same trend. The left of Fig. 3 shows the effect of the number of Transformer layers on the performance of our model, and the right shows the effect of the embedding dimension.

For the number of Transformer layers, we find that performance first increases and then decreases as the number of layers grows. With a single layer, the model performs very poorly, indicating that its learning ability is insufficient. Performance is best when the number of Transformer layers is set to 3; as more layers are added, performance slowly decreases, suggesting overfitting.

For the number of dimensions, the performance trend is similar to that of the Transformer layers. When the dimension is 32, the model has too few parameters and cannot hold enough trajectory information. However, if the dimension is too large, e.g., 256, the model has too many parameters and suffers from overfitting.

6 Conclusion

In this paper, we study spatial–temporal road intersection trajectory similarity computation on the road network. We design two sub-modules to enhance the spatial–temporal features of road intersection trajectories, one for the graph learning module and one for the Transformer. In addition to the road network, we construct two graphs based on different similarities, i.e., a traffic similarity graph and an adaptive graph, to capture long-distance relations between road intersections and enhance the spatial–temporal information in the graph signals. A multi-graph learning module is then introduced to learn road intersection embedding from these multiple signals of the road network. To enhance the ability of the sequence model to extract spatial–temporal information, we utilize a Transformer and modify its attention mechanism with the road intersection features of the trajectory. Extensive experiments show the effectiveness of our model.

References

Lee J-G, Han J, Whang K-Y (2007) Trajectory clustering: a partition-and-group framework. In: ACM SIGMOD, pp 593–604

Piciarelli C, Micheloni C, Foresti GL (2008) Trajectory-based anomalous event detection. IEEE Trans Circuits Syst Video Technol 18(11):1544–1554

Bast H, Delling D, Goldberg A, Müller-Hannemann M, Pajor T, Sanders P, Wagner D, Werneck RF (2016) Route planning in transportation networks. In: Algorithm engineering: selected results and surveys, vol. 9220, pp 19–80

Yi B, Jagadish HV, Faloutsos C (1998) Efficient retrieval of similar time sequences under time warping. In: ICDE, pp 201–208

Chen L, Özsu MT, Oria V (2005) Robust and fast similarity search for moving object trajectories. In: SIGMOD, pp 491–502

Chen L, Ng R (2004) On the marriage of lp-norms and edit distance. In: PVLDB, pp 792–803

Shang S, Chen L, Wei Z, Jensen CS, Zheng K, Kalnis P (2017) Trajectory similarity join in spatial networks. PVLDB 10(11):1178

Shang Z, Li G, Bao Z (2018) DITA: distributed in-memory trajectory analytics. In: ACM SIGMOD, pp 725–740

Yuan H, Li G (2019) Distributed in-memory trajectory similarity search and join on road network. In: ICDE, pp 1262–1273

Yao D, Cong G, Zhang C, Bi J (2019) Computing trajectory similarity in linear time: a generic seed-guided neural metric learning approach. In: ICDE, pp 1358–1369

Yao D, Hu H, Du L, Cong G, Han S, Bi J (2022) TrajGAT: a graph-based long-term dependency modeling approach for trajectory similarity computation. In: SIGKDD, pp 2275–2285

Zhang H, Zhang X, Jiang Q, Zheng B, Sun Z, Sun W, Wang C (2020) Trajectory similarity learning with auxiliary supervision and optimal matching. In: IJCAI, pp 11–17

Han P, Wang J, Yao D, Shang S, Zhang X (2021) A graph-based approach for trajectory similarity computation in spatial networks. In: SIGKDD, pp 556–564

Fang Z, Du Y, Zhu X, Hu D, Chen L, Gao Y, Jensen CS (2022) Spatio-temporal trajectory similarity learning in road networks. In: SIGKDD, pp 347–356

Li X, Zhao K, Cong G, Jensen CS, Wei W (2018) Deep representation learning for trajectory similarity computation. In: ICDE, pp 617–628

Cho K, Merrienboer B, Gulcehre C, Bahdanau D, Bougares F, Schwenk H, Bengio Y (2014) Learning phrase representations using RNN encoder–decoder for statistical machine translation. In: EMNLP, p 1724

Yang P, Wang H, Zhang Y, Qin L, Zhang W, Lin X (2021) T3s: effective representation learning for trajectory similarity computation. In: ICDE, pp 2183–2188

Kipf TN, Welling M (2017) Semi-supervised classification with graph convolutional networks. In: ICLR

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Zhou S, Li J, Wang H, Shang S, Han P (2023) GRLSTM: trajectory similarity computation with graph-based residual LSTM. In: AAAI, vol. 37, pp 4972–4980

Wang Z, Zhang J, Feng J, Chen Z (2014) Knowledge graph embedding by translating on hyperplanes. In: AAAI, vol. 28

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. In: NIPS, pp 5998–6008

Vlachos M, Gunopulos D, Kollios G (2002) Discovering similar multidimensional trajectories. In: ICDE, pp 673–684

Ranu S, Deepak P, Telang AD, Deshpande P, Raghavan S (2015) Indexing and matching trajectories under inconsistent sampling rates. In: ICDE, pp 999–1010

Frentzos E, Gratsias K, Theodoridis Y (2006) Index-based most similar trajectory search. In: ICDE, pp 816–825

Atev S, Miller G, Papanikolopoulos NP (2010) Clustering of vehicle trajectories. IEEE Trans Intell Transp Syst 11(3):647–657

Yang P, Wang H, Lian D, Zhang Y, Qin L, Zhang W (2022) Tmn: trajectory matching networks for predicting similarity. In: ICDE, pp 1700–1713

Xie X, Philips W, Veelaert P, Aghajan H (2014) Road network inference from GPS traces using DTW algorithm. In: ITSC, pp 906–911

Koide S, Xiao C, Ishikawa Y (2020) Fast subtrajectory similarity search in road networks under weighted edit distance constraints. PVLDB 13(11):2188–2201

Wang S, Bao Z, Culpepper JS, Xie Z, Liu Q, Qin X (2018) Torch: a search engine for trajectory data. In: SIGIR, pp 535–544

Rakthanmanon T, Campana BJL, Mueen A, Batista GEAPA, Westover MB, Zhu Q, Zakaria J, Keogh EJ (2012) Searching and mining trillions of time series subsequences under dynamic time warping. In: ACM SIGKDD, pp 262–270

Chen L, Shang S, Jensen CS, Yao B, Kalnis P (2020) Parallel semantic trajectory similarity join. In: ICDE, pp 997–1008

Shang S, Chen L, Jensen CS, Wen J, Kalnis P (2017) IEEE transactions on knowledge and data engineering. IEEE Trans Knowl Data Eng 29(7):1549–1562

Shang S, Chen L, Zheng K, Jensen CS, Wei Z, Kalnis P (2019) Parallel trajectory-to-location join. IEEE Trans Knowl Data Eng 31(6):1194–1207

Han P, Shang S, Sun A, Zhao P, Zheng K, Kalnis P (2019) AUC-MF: point of interest recommendation with AUC maximization. In: ICDE, pp 1558–1561

Han P, Li Z, Liu Y, Zhao P, Li J, Wang H, Shang S (2020) Contextualized point-of-interest recommendation. In: IJCAI, pp 2484–2490

Zhao K, Zhang Y, Yin H, Wang J, Zheng K, Zhou X, Xing C (2020) Discovering subsequence patterns for next POI recommendation. In: IJCAI, pp 3216–3222

Zhou S, Han P, Yao D, Chen L, Zhang X (2023) Spatial-temporal fusion graph framework for trajectory similarity computation. World Wide Web 26(4):1501–1523

Han P, Wang J, Yao D, Shang S, Zhang X (2021) A graph-based approach for trajectory similarity computation in spatial networks. In: SIGKDD, pp 556–564

Chang Y, Qi J, Liang Y, Tanin E (2023) Contrastive trajectory similarity learning with dual-feature attention. In: ICDE, pp 2933–2945

Yao D, Zhang C, Zhu Z, Hu Q, Wang Z, Huang J, Bi J (2018) Learning deep representation for trajectory clustering. Expert Syst 35(2):e12252

Pei W, Tax DMJ, Maaten L (2016) Modeling time series similarity with siamese recurrent networks. CoRR. arXiv:1603.04713

Yao D, Cong G, Zhang C, Bi J (2019) Computing trajectory similarity in linear time: a generic seed-guided neural metric learning approach. In: ICDE, pp 1358–1369

Zhang H, Zhang X, Jiang Q, Zheng B, Sun Z, Sun W, Wang C (2020) Trajectory similarity learning with auxiliary supervision and optimal matching. In: IJCAI, pp 3209–3215

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:1412.6980

Acknowledgements

This work was supported by the National Key R&D Program of China (No. 2023YFC3305600) and National Natural Science Foundation of China (U22B2037, U21B2046).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, S., Huang, C., Wen, Y. et al. Feature Enhanced Spatial–Temporal Trajectory Similarity Computation. Data Sci. Eng. (2024). https://doi.org/10.1007/s41019-024-00255-w
