Abstract

Link prediction in knowledge hypergraphs is essential for various knowledge-based applications, including question answering and recommendation systems. However, many current approaches simply extend binary relation methods from knowledge graphs to n-ary relations, which prevents them from capturing the positional and role information of entities in n-ary tuples. To address this issue, we introduce PosKHG, a method that considers entities' positions and roles within n-ary tuples. PosKHG uses an embedding space with basis vectors to represent entities' positional and role information through a linear combination, so that entities with related roles and positions receive similar representations. Additionally, PosKHG employs a relation matrix to capture the compatibility of both kinds of information with all associated entities, and a scoring function to measure the plausibility of tuples made up of entities with specific roles and positions. PosKHG is fully expressive and efficient at prediction time. In experiments, PosKHG achieved an average MRR improvement of 4.1% over other state-of-the-art knowledge hypergraph embedding methods. Our code is available at https://anonymous.4open.science/r/PosKHG-C5B3/.



1 Introduction

In knowledge graphs, real-world knowledge is represented as triples consisting of a head entity (h), a binary relation (r), and a tail entity (t). Recently, knowledge hypergraphs have gained increasing attention as a more general and expressive way of representing knowledge, because they can model the non-binary relations that are common in real-world scenarios. Non-binary relations are pervasive in Freebase: 61% of its relations are non-binary [1], and more than one-third of its entities participate in non-binary relations [27]. Studying how knowledge hypergraphs can support downstream tasks such as link prediction and node classification is therefore important. However, because of the high cost of storing all correct tuples, knowledge hypergraphs are often incomplete, which motivates predicting the correctness of hidden tuples from the existing ones.

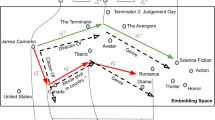

N-ary relations, which describe relationships among more than two entities, offer a more nuanced and expressive way to model complex semantics. In Fig. 1, an oval represents a tuple and a circle represents an entity. The entities in a tuple are arranged in a specific order, each occupying a unique position and fulfilling a particular role. The position is the index of an entity within the tuple. The role, which is distinct from the entity type, is determined by a particular relation at a specific position and conveys the semantic meaning of the entity in the tuple. For example, in the relation SportAward, the roles of the entities at positions 1, 2, and 3 are Award, Season, and Winner, respectively. Filling them with the entities Best Scorer, Season 07-08, and LeBron James yields the fact "LeBron James won the best scorer award in the 07-08 season." If LeBron James and Season 07-08 were swapped, the tuple would no longer be consistent with reality. This definition was first introduced by Wen et al. [27]. Together, all entities and their respective roles determine the meaning of a tuple. This example shows why roles and positions must be considered when modeling knowledge hypergraphs; nevertheless, prior work has not yet focused on using position and role information together in knowledge hypergraph modeling.

Several previous studies have addressed link prediction in knowledge hypergraphs. However, existing methods [1, 11–30] overlook positions and roles and continue to rely on binary modeling ideas borrowed from knowledge graphs. Specifically, they embed n-ary relations and entities into a low-dimensional space without considering the order of the entities and use these embeddings to assess the plausibility of tuples. For example, m-TransH [27] and RAE [32] both extend the knowledge graph model TransH [25] by projecting entities onto relation-specific hyperplanes to score tuples, but these models have limited expressivity [30, 11]. HypE [1] and GETD [30] extend the knowledge graph models SimplE [11] and TuckER [2], respectively; however, these models ignore role semantics [10]. On the other hand, NaLP [9], HINGE [18], and NeuInfer [10] do consider role information, but they rely solely on neural networks to measure tuple plausibility and ignore the effect of entity position on semantics. To the best of our knowledge, no existing model considers both positional information and role semantics in knowledge hypergraphs.

Fig. 1 An example of a knowledge hypergraph centered on LeBron James. Each relation in the knowledge hypergraph consists of entities with semantic roles assigned to specific positions. Roles may be explicit or implicit, whereas positions are always explicit. In the figure, the roles are indicated explicitly.

Therefore, we identify two requirements for representing knowledge hypergraphs fully and expressively: (1) the modeling process should account for the complex semantics of tuples in terms of roles and positions, including the semantic relationships among roles, positions, and entity compatibility; and (2) the modeling method should be expressive enough to represent all types of relations. To the best of our knowledge, no existing method fully satisfies both requirements.

In this paper, we focus on the role and position features of each tuple in knowledge hypergraphs and propose PosKHG, a fully expressive Position-aware Knowledge HyperGraph embedding model. Unlike previous knowledge hypergraph embedding methods, PosKHG introduces a latent space for positions and roles, so that entities with related roles at the same positions have similar representations. Additionally, PosKHG learns a relation matrix for each relation to capture its compatibility with all related entities, and devises a scoring function for efficient prediction. The key innovation of PosKHG is its ability to model knowledge hypergraphs in terms of both positions and roles.

The contributions of this paper are as follows:

- PosKHG is a novel knowledge hypergraph embedding model for link prediction that explicitly accounts for the roles and positions of entities in n-ary relations. It learns a latent space and a relation matrix of roles to capture semantic relatedness and compatibility.

- We prove that PosKHG is fully expressive for knowledge hypergraphs, i.e., it can model all relation patterns without restriction.

- Extensive experiments on six representative datasets demonstrate that PosKHG achieves state-of-the-art performance on knowledge hypergraph datasets and comparable performance on knowledge graph datasets.

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 introduces the preliminaries. Section 4 describes PosKHG in detail. Section 5 presents a theoretical analysis of PosKHG's full expressivity and complexity. Section 6 reports the experimental results. Finally, Sect. 7 concludes the paper.

2 Related Work

Our model is conceptually related to previous models for knowledge graphs and recent models for knowledge hypergraphs; we review these two categories in turn.

2.1 Link Prediction in Knowledge Graphs

One popular approach to modeling knowledge graphs is tensor decomposition, exemplified by RESCAL [17] and ComplEx [23]. RESCAL represents a knowledge graph as a three-way tensor over head entities, relations, and tail entities, and learns entity and relation embeddings that minimize the reconstruction error. ComplEx similarly associates each relation with a matrix over head and tail entities, which is decomposed and learned as in RESCAL. However, the main drawback of tensor decomposition methods is that they are limited to relations of a single, fixed arity, whereas PosKHG can predict relations of multiple arities simultaneously.

Translation-based methods, such as TransE [4, 24], treat each valid triple as a translation from the head entity to the tail entity through their relation. Several improvements of TransE have since been proposed, including TransH [25], which introduces relation-specific hyperplanes onto which entities are projected before translation. However, these methods are not fully expressive and cannot model every relation pattern (TransE, for instance, cannot represent symmetric relations), whereas PosKHG is fully expressive and can model any pattern of relations.

Neural network-based methods score the plausibility of triples with components such as convolutional and fully connected layers. For example, ConvKB [16] represents each triple as a three-column matrix that is fed into such layers to produce a plausibility score. Nathani et al. [15] proposed a generalized graph attention model as an encoder to capture neighborhood features and used ConvKB as the decoder. Although these methods can be effective, they often incur high time complexity. In contrast, PosKHG has linear time and space complexity.

2.2 Link Prediction in Knowledge Hypergraphs

Because binary relations oversimplify many real-world facts, recent studies have tried to represent and predict links in knowledge hypergraphs, primarily with embedding-based methods. These studies represent n-ary facts as tuples with predefined relations and generalize binary relation methods to the n-ary case.

m-TransH [27] and RAE [32] extend TransH [25], a translation embedding model for binary relations, to the n-ary case. However, these models are not fully expressive and cannot model asymmetric relations, nor do they consider the influence of roles and positions on tuple semantics. NaLP [9] and HINGE [18] represent n-ary facts as attribute-value pairs and model the associations between these attributes and values. However, these methods assume that all attributes of an n-ary fact are equally important, which is not always the case in reality. In contrast, PosKHG uses the tuple form, in which different entities can have different levels of importance, which better reflects real-world scenarios.

RAM [14] and NeuInfer [10] incorporate entity role information into embeddings and measure tuple plausibility with a tensor decomposition-based and a neural network-based method, respectively. However, these models consider only role semantics and ignore positional information. To the best of our knowledge, no existing work considers both entity role and positional semantics in knowledge hypergraph modeling. We therefore propose PosKHG, which uses both role and positional information to improve knowledge hypergraph link prediction.

3 Preliminaries

This section presents the preliminaries of the knowledge hypergraph and the link prediction task. The notations used throughout the paper are summarized in Table 1.

Definition 1

(Knowledge Hypergraph) A knowledge hypergraph is defined as \(\mathcal {H}=(\mathcal {E},\mathcal {R},\mathcal {T}_{O})\), where \(\mathcal {E}\), \(\mathcal {R}\), and \(\mathcal {T}_{O}\) are finite sets of entities, relations, and observed tuples, respectively. \(t_{i}=r(\rho _{1}^{r}: e_{1}, \rho _{2}^{r}: e_{2},..., \rho _{\alpha }^{r}: e_{\alpha })\) denotes a tuple, where \(r \in \mathcal {R}\) is a relation, each \(e_{i} \in \mathcal {E}\) is an entity, \(i\) is the position index, each \(\rho _{i}^{r}\) is the role of relation \(r\) at position \(i\), and \(\alpha\) is the arity of \(r\), a positive integer.

Having defined the knowledge hypergraph following Liu et al. [14], we now define the task of link prediction in knowledge hypergraphs.

Definition 2

(Link Prediction in Knowledge Hypergraphs) Let \(\mathcal {T}\) denote the set of all possible tuples and \(\mathcal {T}_{T}\) the set of all ground-truth tuples, so that \(\mathcal {T}_{O} \subseteq \mathcal {T}_{T} \subseteq \mathcal {T}\). The set of hidden tuples is \(\mathcal {T}_{H} = \mathcal {T} \setminus \mathcal {T}_{O}\). Given the observed tuples \(\mathcal {T}_{O}\), link prediction in knowledge hypergraphs aims to predict the labels of the hidden tuples \(\mathcal {T}_{H}\), i.e., whether each of them belongs to \(\mathcal {T}_{T}\).
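To make Definitions 1 and 2 concrete, the sketch below represents a tuple as a relation name plus its role and entity sequences in position order, and computes the hidden set as a set difference. The class `NaryTuple` and the helper `hidden_tuples` are hypothetical illustrations, not part of the released PosKHG code, and the two-tuple universe is a toy example.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class NaryTuple:
    """Hypothetical container for r(rho_1: e_1, ..., rho_alpha: e_alpha)."""
    relation: str
    roles: Tuple[str, ...]     # rho_1^r, ..., rho_alpha^r (fixed by the relation)
    entities: Tuple[str, ...]  # e_1, ..., e_alpha in position order

    def __post_init__(self) -> None:
        # Every position must carry exactly one role and one entity.
        if len(self.roles) != len(self.entities):
            raise ValueError("roles and entities must have the same length")

    @property
    def arity(self) -> int:
        return len(self.entities)


def hidden_tuples(all_tuples: FrozenSet[NaryTuple],
                  observed: FrozenSet[NaryTuple]) -> FrozenSet[NaryTuple]:
    """T_H = T minus T_O: the tuples whose labels must be predicted."""
    return all_tuples - observed


roles = ("Award", "Season", "Winner")
fact = NaryTuple("SportAward", roles, ("Best Scorer", "Season 07-08", "LeBron James"))
swapped = NaryTuple("SportAward", roles, ("Best Scorer", "LeBron James", "Season 07-08"))
print(fact.arity)  # 3
print(hidden_tuples(frozenset({fact, swapped}), frozenset({fact})) == frozenset({swapped}))  # True
```

Because the dataclass is frozen, tuples are hashable and can live in sets; swapping two entities yields a different tuple, which reflects that positions carry meaning.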

4 The PosKHG Model

As mentioned above, positions and roles are crucial elements of knowledge hypergraphs because they help identify the semantics of entities and relations. In particular, they determine the plausibility of tuples through two key aspects:

- Semantic relatedness of roles in n-ary relational tuples. For example, in the WikiPeople dataset [9], over 80% of the roles that appear in n-ary relations also appear in 2-ary relations, which highlights the importance of modeling the shared semantics of different roles.

- Compatibility among positions, roles, and entities. For example, in Fig. 1, the role “Player” and its entity “LeBron James” interact with the entities “Small Forward” and “Cavaliers” to determine the overall plausibility of the tuple. In other words, the compatibility among the elements of a tuple is crucial for accurately modeling knowledge with hypergraphs.

To address these issues, we propose PosKHG, which models knowledge hypergraphs at the level of roles and positions and captures the semantic relatedness between roles and positions through a latent space. A relation matrix represents the compatibility between roles, positions, and all relevant entities. To measure the plausibility of tuples, we use a multi-linear product, which allows for full expressiveness. The overall structure of PosKHG is depicted in Fig. 2.

4.1 Latent Space for Roles and Positions

Since one entity may occupy multiple positions and play multiple roles in a knowledge hypergraph (e.g., the entity LeBron James in Fig. 1), we adopt the multi-embedding mechanism [22], which maps each entity \(e_{i} \in \mathcal {E}\) to multiple embeddings. Let \(\textbf{e}_{i} \in \mathbb {R}^{m \times d}\) denote the entity embedding, where \(m\) is the number of embeddings per entity (the multiplicity) and \(d\) is the embedding dimension.

Fig. 2 Overview of PosKHG. Each entity embedding is combined with the corresponding role embedding to integrate role semantics, and positional semantics are then incorporated through a concatenation operation. The relation matrix is produced by combining the basis matrices of a relation. Finally, the entity embeddings, role embeddings, and relation matrices are fed into the scoring function to compute the confidence score of the tuple.

Inspired by the sharing of feature information across training examples in machine learning [26, 35, 28], we build a latent space for roles to exploit the semantic information about the positions and roles of entities. The latent space consists of \(L\) role latent vectors \(\textbf{b}_{l} \in \mathbb {R}^{d}, l=1,2,...,L\). The role embedding \(\textbf{c}_{i}^{r}\) is computed as a combination of the role latent vectors:

\[\textbf{c}_{i}^{r}=\sum _{l=1}^{L}\sigma (\textbf{w}_{i}^{r})[l]\,\textbf{b}_{l},\]

where \(\textbf{w}_{i}^{r} \in \mathbb {R}^{L}\) is the weight vector of the role latent vectors, known as the role weights. Semantic relatedness is thus parameterized implicitly by the role weights. The weight vector is normalized by the element-wise Softmax function \(\sigma\), for all \(l, l' \le L\):

\[\sigma (\textbf{w}_{i}^{r})[l]=\frac{\exp (\textbf{w}_{i}^{r}[l])}{\sum _{l'=1}^{L}\exp (\textbf{w}_{i}^{r}[l'])}.\]
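The role-embedding computation can be sketched in plain Python as follows. This is a minimal illustration, assuming that the Softmax-normalized role weights are used directly as mixing coefficients over the \(L\) role latent vectors; the function names are hypothetical, and a practical implementation would use a tensor library with batched operations.

```python
import math
from typing import List

Vector = List[float]


def softmax(w: Vector) -> Vector:
    """Element-wise Softmax, shifted by max(w) for numerical stability."""
    top = max(w)
    exps = [math.exp(x - top) for x in w]
    total = sum(exps)
    return [x / total for x in exps]


def role_embedding(w: Vector, basis: List[Vector]) -> Vector:
    """c_i^r = sum_l softmax(w)[l] * b_l, with len(w) == len(basis) == L."""
    if len(w) != len(basis):
        raise ValueError("need exactly one weight per role latent vector")
    alpha = softmax(w)
    d = len(basis[0])
    return [sum(alpha[l] * basis[l][k] for l in range(len(basis))) for k in range(d)]


# Toy example: L = 2 latent vectors in d = 3 dimensions, equal weights.
b = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
c = role_embedding([0.0, 0.0], b)
print(c)  # [0.5, 0.5, 0.0]
```

Because the weights are normalized, every role embedding is a convex combination of the shared latent vectors, which is what lets related roles end up close to each other.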

After obtaining the role embedding, PosKHG further assigns different semantics to entities at different positions. Specifically, the entity embedding is multiplied by the role embedding to obtain an embedding \(\textbf{e}_{i}' = \textbf{e}_{i} \cdot \textbf{c}_{i}^{r}\) that incorporates the role semantics. The positional semantics of the i-th position are then incorporated with the concatenation function \(\textit{cat}\), where \(\textit{cat}(\textbf{v},x)\) shifts vector \(\textbf{v}\) to the left by x steps.
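A sketch of the two steps above, under stated assumptions: we read \(\textbf{e}_{i} \cdot \textbf{c}_{i}^{r}\) as multiplying each of the m rows of the multi-embedding element-wise by the role embedding, we treat the left shift performed by cat as cyclic (realized by concatenating two slices of the vector), and we use the position index i as the shift amount. None of these choices is confirmed by the text, and the helper names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]  # m rows (the multi-embedding), each of length d


def modulate(e: Matrix, c: Vector) -> Matrix:
    """Assumed reading of e' = e . c: scale every embedding row element-wise by c."""
    return [[x * y for x, y in zip(row, c)] for row in e]


def cat(v: Vector, x: int) -> Vector:
    """Shift v to the left by x steps, assumed cyclic: concatenate v[x:] and v[:x]."""
    if not v:
        return []
    x %= len(v)
    return v[x:] + v[:x]


def add_position(e: Matrix, i: int) -> Matrix:
    """Inject position i by shifting every row (shift amount = i is an assumption)."""
    return [cat(row, i) for row in e]


e = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]  # m = 2, d = 4
c = [1.0, 0.0, 1.0, 0.0]
e_role = modulate(e, c)
print(e_role)                   # [[1.0, 0.0, 3.0, 0.0], [5.0, 0.0, 7.0, 0.0]]
print(add_position(e_role, 1))  # [[0.0, 3.0, 0.0, 1.0], [0.0, 7.0, 0.0, 5.0]]
```

A cyclic shift preserves the norm of each row, so under this reading the positional step changes only where features sit, not their magnitude.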

4.2 Relation Matrix

Relations in knowledge hypergraphs consist of entities at different positions with corresponding roles. Figure 3 illustrates the latent space for roles. To measure the degree of compatibility among the positions, roles, and all participating entities, the role at each position of a relation is associated with a learned relation matrix. For a relation \(r \in \mathcal {R}\), the relation matrix for the role at the i-th position is \(\textbf{R}_{i}^{r} \in \mathbb {R}^{\alpha \times m}\), where the j-th row \(\textbf{R}_{i}^{r}[j,:]\) denotes the compatibility with the multi-embedding of the entity at the j-th position. Using the latent space of positions and roles, the relation matrix is computed as follows for all \(i \le \alpha\):

\[\textbf{R}_{i}^{r}=\sum _{l=1}^{L}\sigma (\textbf{w}_{i}^{r})[l]\,\textbf{B}_{l},\]

where \(\textbf{B}_{l} \in \mathbb {R}^{\alpha \times m}\) is the relation basis matrix associated with the role latent vector \(\textbf{b}_{l}\) in the latent space. The entire basis matrix is also normalized by \(\sigma\). Because each basis matrix \(\textbf{B}_{l}\) is aligned with the role latent vector \(\textbf{b}_{l}\), the same role weights are used to compute both the role embeddings and the relation matrices.
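The relation matrix follows the same pattern as the role embedding but mixes \(\alpha \times m\) basis matrices instead of d-dimensional vectors. The sketch below assumes the Softmax-normalized role weights are shared between the two computations, as the alignment of \(\textbf{B}_{l}\) with \(\textbf{b}_{l}\) suggests; it omits the additional \(\sigma\)-normalization of the basis matrices, and the function name is hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(w: List[float]) -> List[float]:
    top = max(w)
    exps = [math.exp(x - top) for x in w]
    total = sum(exps)
    return [x / total for x in exps]


def relation_matrix(w: List[float], bases: List[Matrix]) -> Matrix:
    """R_i^r = sum_l softmax(w)[l] * B_l; every B_l has shape alpha x m."""
    if len(w) != len(bases):
        raise ValueError("need exactly one weight per basis matrix")
    alpha = softmax(w)
    rows, cols = len(bases[0]), len(bases[0][0])
    return [[sum(alpha[l] * bases[l][j][k] for l in range(len(bases)))
             for k in range(cols)]
            for j in range(rows)]


# Toy example: L = 2 basis matrices of shape alpha x m = 2 x 3, equal weights.
B1 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
B2 = [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]
R = relation_matrix([0.0, 0.0], [B1, B2])
print(R)  # [[0.5, 0.0, 0.5], [0.0, 0.5, 0.5]]
```

Sharing the weights means a role's embedding and its compatibility pattern move together during training, so related roles share both.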

4.3 Scoring Function

The scoring function uses a multi-linear product to compute the confidence of a knowledge hypergraph tuple; this is effective while introducing few parameters, which makes training efficient. For each tuple \(t=r(\rho _{1}^{r}: e_{1}, \rho _{2}^{r}: e_{2},..., \rho _{\alpha }^{r}: e_{\alpha })\), the score is computed as

\[\phi (t)=\sum _{i=1}^{\alpha }\left\langle \textbf{c}_{i}^{r},\textbf{R}_{i}^{r}[1,:]\textbf{e}_{1},\ldots ,\textbf{R}_{i}^{r}[\alpha ,:]\textbf{e}_{\alpha }\right\rangle ,\]

where \(\langle \cdot \rangle\) denotes the multi-linear dot product (the sum over coordinates of the element-wise product of its arguments), and \(\textbf{R}_{i}^{r}[j,:]\textbf{e}_{j}\) captures the compatibility between the role \(\rho _{i}^{r}\) and the j-th entity \(e_{j}\), i.e., the multi-embedding of \(e_{j}\) is weighted by the elements of \(\textbf{R}_{i}^{r}[j,:]\). Each summation term measures the compatibility of the entities with the role at a different position.
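The multi-linear scoring function can be sketched for a single tuple as below. It assumes the role embeddings \(\textbf{c}_{i}^{r}\) and relation matrices \(\textbf{R}_{i}^{r}\) have already been computed and uses the plain multi-embeddings \(\textbf{e}_{j}\), as in the description above; nested Python lists stand in for tensors, and all names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def weighted_entity(row: Vector, e: Matrix) -> Vector:
    """R_i^r[j,:] e_j: mix the m embedding rows of e_j with the m weights in `row`."""
    d = len(e[0])
    return [sum(row[k] * e[k][x] for k in range(len(e))) for x in range(d)]


def multilinear(vectors: List[Vector]) -> float:
    """<v_1, ..., v_n>: sum over coordinates of the product of all entries."""
    total = 0.0
    for coords in zip(*vectors):
        prod = 1.0
        for value in coords:
            prod *= value
        total += prod
    return total


def score(role_embs: List[Vector], rel_mats: List[Matrix], entities: List[Matrix]) -> float:
    """phi(t) = sum_i <c_i, R_i[1,:] e_1, ..., R_i[alpha,:] e_alpha>."""
    total = 0.0
    for c, R in zip(role_embs, rel_mats):  # one term per role/position i
        parts = [weighted_entity(R[j], entities[j]) for j in range(len(entities))]
        total += multilinear([c] + parts)
    return total


# Toy binary tuple: alpha = 2, m = 2, d = 2.
e1 = [[1.0, 0.0], [0.0, 1.0]]
e2 = [[1.0, 1.0], [0.0, 0.0]]
R = [[1.0, 0.0], [1.0, 0.0]]  # each row selects the first embedding of its entity
print(score([[1.0, 1.0], [1.0, 0.0]], [R, R], [e1, e2]))  # 2.0
```

For fixed arity \(\alpha\) and multiplicity m, the cost per tuple is proportional to \(\alpha^{2} m d\), i.e., linear in d, which matches the complexity claim in Sect. 5.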

4.4 Model Training

Knowledge hypergraphs generally provide only positive examples, so negative examples must be sampled. Based on the scoring function above, the training loss and learning objective of the model are designed as follows. For each positive tuple \(t \in \mathcal {T}_{O}\), negative samples are obtained by replacing the entity associated with the role \(\rho _{i}^{r}\), i.e., \(e_{i}\), with other entities in \(\mathcal {E}\); this strategy generalizes those used in the binary case.
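The corruption strategy can be sketched as follows: for position i, every other entity is substituted in turn. The text does not state whether corrupted tuples that happen to be observed positives are filtered out, so the sketch makes that filtering an optional argument; `corrupt` and the entity "Player B" are hypothetical, and positions are 0-based in the code.

```python
from typing import Iterable, List, Optional, Set, Tuple

# (relation, entities in position order); roles are implied by the relation.
Fact = Tuple[str, Tuple[str, ...]]


def corrupt(fact: Fact, i: int, entities: Iterable[str],
            known: Optional[Set[Fact]] = None) -> List[Fact]:
    """Replace the entity at 0-based position i with every other entity.

    If `known` is given, corrupted tuples that are known positives are skipped
    (a filtering assumption, not taken from the paper).
    """
    relation, args = fact
    negatives = []
    for e in entities:
        if e == args[i]:
            continue  # keep only genuine replacements, i.e. E minus {e_i}
        candidate = (relation, args[:i] + (e,) + args[i + 1:])
        if known is not None and candidate in known:
            continue
        negatives.append(candidate)
    return negatives


fact = ("SportAward", ("Best Scorer", "Season 07-08", "LeBron James"))
pool = ["Best Scorer", "Season 07-08", "LeBron James", "Player B"]  # "Player B" is made up
print(len(corrupt(fact, 2, pool)))  # 3
```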

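Furthermore, we adopt the instantaneous multi-class log-loss and minimize the resulting empirical risk: for each positive tuple and each position, the loss is the negative log-softmax of the positive tuple's score over the positive tuple and its negative samples at that position.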

Here, the optimization variables E, B, W, and \(\mathcal {B}\) contain all elements of \(\textbf{e}_{i}\), \(\textbf{b}_{l}\), \(\textbf{w}_{i}^{r}\), and \(\textbf{B}_{l}\), respectively. The Softmax loss encourages the single correct tuple to score higher than all of its candidate negatives.
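For one positive tuple and one position, the instantaneous multi-class log-loss is the negative log-softmax of the positive score among the candidate scores. The sketch below computes it with the log-sum-exp trick for numerical stability; the function name is hypothetical, and summing this quantity over positions and over a mini-batch gives the empirical risk described above (any regularization term is not shown).

```python
import math
from typing import Sequence


def multiclass_log_loss(pos_score: float, neg_scores: Sequence[float]) -> float:
    """-phi(t) + log(sum over t and its negatives of exp(phi)), computed stably."""
    scores = [pos_score, *neg_scores]
    top = max(scores)
    log_sum_exp = top + math.log(sum(math.exp(s - top) for s in scores))
    return log_sum_exp - pos_score


print(round(multiclass_log_loss(0.0, [0.0]), 4))  # 0.6931 (= log 2)
```

Unlike a per-negative sigmoid loss, this form normalizes over all candidates at once, so the loss only falls when the positive tuple pulls ahead of its negatives.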

Algorithm 1 outlines the training process of PosKHG. For each tuple sampled from the knowledge hypergraph, its negative samples are generated first. Next, the embeddings and relation matrices are computed, and the confidence score of the sampled tuple is calculated. Finally, PosKHG is trained with mini-batches to minimize the above empirical risk.

5 Theoretical Analysis

Table 2 summarizes the role-aware and position-aware properties, expressiveness, and the time and space complexity of existing n-ary relational approaches.

PosKHG is fully expressive: it can correctly learn any valid n-ary relation in a knowledge hypergraph without being restricted to specific relation patterns. That is, for any set of ground-truth tuples, there exists at least one assignment of embeddings under which the model correctly separates valid tuples from invalid ones. Furthermore, PosKHG has linear time and space complexity. Theorem 1 states embedding dimensions that suffice for full expressiveness, and the complexity analysis follows.

Theorem 1

For any ground truth over entities \(\mathcal {E}\) and relations \(\mathcal {R}\) of a knowledge hypergraph containing \(\eta \ge 1\) ground-truth tuples, there exists a PosKHG model with embedding dimension \(d=\eta\), multiplicity of entity embeddings \(m=\textrm{max}_{r \in \mathcal {R}} \alpha\), and latent space size \(L=\eta\) that accurately represents the ground-truth tuples.

Proof

Let \(\mathcal {T}_{T}\) be the set of all ground-truth tuples in the knowledge hypergraph, with \(\eta = \vert \mathcal {T}_{T} \vert\). Proving Theorem 1 amounts to constructing an assignment of the entity embeddings E, role latent vectors B, role weights W, and relation basis matrices \(\mathcal {B}\) of PosKHG such that, with embedding dimension \(d=\eta\), multiplicity of entity embeddings \(m=\textrm{max}_{r \in \mathcal {R}} \alpha\), and latent space size \(L=\eta\), the scoring function satisfies

\[\phi (t)={\left\{ \begin{array}{ll} \alpha , & t \in \mathcal {T}_{T},\\ 0, & t \notin \mathcal {T}_{T}. \end{array}\right. }\]

For each entity \(e \in \mathcal {E}\) with multi-embedding \(\textbf{e} \in \mathbb {R}^{m \times d}\), set \(\textbf{e}[i,j]=1\) if entity \(e\) fills the i-th role of the j-th tuple in \(\mathcal {T}_{T}\), and \(\textbf{e}[i,j]=0\) otherwise. For the latent space, the role latent vectors are set to the columns of the identity matrix, \([\textbf{b}_{1},...,\textbf{b}_{L}]=\textbf{I}_{L}\). Every relation basis matrix is set to \(\textbf{B}_{l}=[\textbf{I}_{\alpha },\textbf{0}] \in \{0,1\}^{\alpha \times m}\); since all basis matrices are identical and the normalized role weights sum to 1, \(\textbf{R}_{i}^{r}=[\textbf{I}_{\alpha },\textbf{0}]\), so \(\textbf{R}_{i}^{r}[k,:]\textbf{e}_{k}\) is the k-th row of \(\textbf{e}_{k}\). Since the columns of an identity matrix form a basis of \(\mathbb {R}^{\eta }\), the role weights \(\{\textbf{w}_{i}^{r}\}\) can be assigned so that \(\textbf{c}_{i}^{r}[j]=1\) if relation \(r\) appears in the j-th tuple of \(\mathcal {T}_{T}\), and \(\textbf{c}_{i}^{r}[j]=0\) otherwise. Then, for the j-th ground-truth tuple \(t\), each term \(\langle \textbf{c}_{i}^{r},\textbf{R}_{i}^{r}[1,:]\textbf{e}_{1},\ldots ,\textbf{R}_{i}^{r}[\alpha ,:]\textbf{e}_{\alpha }\rangle\) is 1 at coordinate j and 0 at every other coordinate. Hence each summation term equals 1, and the score of \(t\) is \(\phi (t) = \alpha > 0\).

To show that \(\phi (t) = 0\) for every false tuple, suppose for contradiction that some \(t \not \in \mathcal {T}_{T}\) has \(\phi (t) > 0\). Then there is at least one coordinate j such that \(\textbf{c}_{i}^{r}[j]=1\) and the j-th elements of \(\textbf{R}_{i}^{r}[1,:]\textbf{e}_{1},...,\textbf{R}_{i}^{r}[\alpha ,:]\textbf{e}_{\alpha }\) all equal 1. This can only happen when the entities \(e_{1},...,e_{\alpha }\), at their respective positions, and the relation r all appear in the j-th tuple of \(\mathcal {T}_{T}\), in which case \(t \in \mathcal {T}_{T}\), contradicting the assumption. Hence \(\phi (t) = 0\) whenever \(t \not \in \mathcal {T}_{T}\). \(\square\)
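The construction in the proof can be checked numerically on a toy ground truth. The sketch below hard-codes the proof's assignment: entity rows are indexed by role and columns by ground-truth tuple, \(\textbf{c}_{i}^{r}\) is set directly to the 0/1 indicator of the tuples that use r (rather than derived from Softmax-normalized weights), and \(\textbf{R}_{i}^{r}=[\textbf{I}_{\alpha },\textbf{0}]\), so that \(\textbf{R}_{i}^{r}[k,:]\textbf{e}_{k}\) reduces to row k of \(\textbf{e}_{k}\). The relation `PlaysFor` and the helper names are hypothetical.

```python
from typing import Dict, List, Tuple

Fact = Tuple[str, Tuple[str, ...]]  # (relation, entities in position order)


def build_assignment(truth: List[Fact], m: int):
    """Proof construction with d = L = eta: e[k][j] = 1 iff the entity fills role k of tuple j."""
    eta = len(truth)
    emb: Dict[str, List[List[float]]] = {}
    role_emb: Dict[str, List[float]] = {}
    for j, (relation, args) in enumerate(truth):
        for k, entity in enumerate(args):
            rows = emb.setdefault(entity, [[0.0] * eta for _ in range(m)])
            rows[k][j] = 1.0
        # c_i^r[j] = 1 iff relation r is used by tuple j (identical for every role i).
        role_emb.setdefault(relation, [0.0] * eta)[j] = 1.0
    return emb, role_emb


def score(fact: Fact, emb, role_emb) -> float:
    """phi(t) with R_i^r = [I_alpha, 0]: R_i^r[k,:] e_k is simply row k of e_k."""
    relation, args = fact
    c = role_emb[relation]
    total = 0.0
    for _ in range(len(args)):  # one summation term per role i
        term = 0.0
        for j in range(len(c)):
            prod = c[j]
            for k, entity in enumerate(args):
                prod *= emb[entity][k][j]
            term += prod
        total += term
    return total


truth = [
    ("SportAward", ("Best Scorer", "Season 07-08", "LeBron James")),
    ("PlaysFor", ("LeBron James", "Cavaliers")),  # hypothetical binary relation
]
emb, role_emb = build_assignment(truth, m=3)  # m = max arity = 3
print(score(truth[0], emb, role_emb))  # 3.0 (= alpha)
print(score(("SportAward", ("Best Scorer", "LeBron James", "Season 07-08")), emb, role_emb))  # 0.0
```

Swapping LeBron James and Season 07-08 drives the score to 0, which is exactly the positional sensitivity the introduction argues for.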

For time complexity, the scoring function is a multi-linear product, so scoring a tuple takes time linear in the embedding dimension, \(\mathcal {O}(d)\). For space complexity, since the arity of relations in knowledge hypergraphs is rarely higher than 6 (as shown in Table 3), the value assigned to m does not exceed 3. Let \(m_{\alpha }\) be the maximum arity of relations in the knowledge hypergraph, \(m_{e}\) the number of entities, and \(m_{r}\) the number of relations. The parameters for the entity embeddings, role weight vectors, role latent vectors, and relation basis matrices then total at most \(\mathcal {O}(m_{e}d+Lm_{r}m_{\alpha }+Ld+Lmm_{\alpha }) = \mathcal {O}(m_{e}d+Lm_{r}m_{\alpha })\). Thus, PosKHG remains linear in both time and space.
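The parameter count behind this bound can be tallied explicitly. The function below is a hypothetical helper that counts each parameter group under assumed shapes (entity multi-embeddings of size \(m_{e} \times m \times d\), at most \(m_{\alpha }\) role-weight vectors of length L per relation, L role latent vectors of length d, and L basis matrices of size \(m_{\alpha } \times m\)); the sizes in the example are made up and do not describe any dataset in Table 3.

```python
from typing import Dict


def poskhg_param_count(n_entities: int, n_relations: int, max_arity: int,
                       m: int, d: int, L: int) -> Dict[str, int]:
    """Illustrative upper-bound parameter count per group (shapes are assumptions)."""
    groups = {
        "entity_embeddings": n_entities * m * d,      # m_e * m * d
        "role_weights": n_relations * max_arity * L,  # L * m_r * m_alpha
        "role_latent_vectors": L * d,                 # L * d
        "basis_matrices": L * max_arity * m,          # L * m * m_alpha
    }
    groups["total"] = sum(groups.values())
    return groups


counts = poskhg_param_count(n_entities=100, n_relations=10, max_arity=3, m=2, d=50, L=5)
print(counts["total"])  # 10430
```

With m treated as a small constant, the entity term dominates and grows linearly with the number of entities, which is the sense in which the space cost stays linear.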

6 Experiments

The performance of PosKHG was evaluated on two types of benchmarks. Section 6.1 describes the experimental setups, including the datasets and baselines used. In Sect. 6.2, we present the results of experiments designed to predict hidden tuples or hidden triples.

6.1 Experiment Settings

6.1.1 Datasets

We conducted link prediction experiments on six datasets. The knowledge hypergraph dataset JF17K was proposed by Wen et al. [27], and FB-AUTO was proposed by Fatemi et al. [1]. Since JF17K has no validation set, we randomly selected 20% of its training set for validation. Four standard knowledge graph benchmarks, i.e., WN18, FB15k, WN18RR, and FB15k-237, were used for link prediction in knowledge graphs. Detailed dataset statistics are summarized in Table 3.

6.1.2 Baselines

For link prediction in knowledge hypergraphs, we compare PosKHG with state-of-the-art approaches, including RAE [32], NaLP [9], HINGE [18], NeuInfer [10], HypE [1], and RAM [14]. GETD [30] can only model knowledge hypergraphs with a single arity and is therefore not included in this comparison. For link prediction in knowledge graphs, we compare PosKHG with TransE [3], DistMult [24], ComplEx [23], SimplE [11], RotatE [21], TuckER [2], HAKE [31], DualE [5], AutoSF [34], and ComplExRP [6].

6.1.3 Evaluation Metrics

We use two evaluation metrics to compare link prediction methods: mean reciprocal rank (MRR) and Hits@K (H@K, reported in %); all results in Sect. 6.2 are rounded. Both metrics are computed by ranking each test tuple t among a set of corrupted tuples: for each tuple in the test set and each position i in the tuple, \(\vert \mathcal {E} \vert -1\) corrupted tuples are generated by replacing the entity \(e_{i}\) with each entity in \(\mathcal {E} \backslash \{e_{i}\}\).
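Given the rank of each test tuple among its corrupted versions, MRR and H@K can be computed as sketched below. The text does not specify how ties are broken or whether other known true tuples are filtered from the candidate set; the sketch assumes a tuple's rank is one plus the number of candidates with a strictly higher score, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence


def rank_of(true_score: float, corrupted_scores: Sequence[float]) -> int:
    """Rank = 1 + number of corrupted tuples scored strictly higher (tie rule assumed)."""
    return 1 + sum(1 for s in corrupted_scores if s > true_score)


def mrr_and_hits(ranks: List[int], ks=(1, 3, 10)) -> Dict[str, float]:
    """MRR as a fraction and H@K in percent, averaged over all (tuple, position) ranks."""
    n = len(ranks)
    metrics = {"MRR": sum(1.0 / r for r in ranks) / n}
    for k in ks:
        metrics[f"H@{k}"] = 100.0 * sum(1 for r in ranks if r <= k) / n
    return metrics


print(rank_of(0.9, [0.95, 0.5, 0.9]))  # 2
print(mrr_and_hits([1, 2, 4])["H@10"])  # 100.0
```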

6.2 Results

6.2.1 Link Prediction in Knowledge Hypergraphs

Table 4 shows that PosKHG improves the MRR on FB-AUTO by up to 2.6%. RAE, NaLP, and HINGE ignore positional and role semantics when modeling knowledge hypergraphs. RAE generalizes the translation model TransH to knowledge hypergraphs; however, it is not fully expressive and can only model symmetric relations, which leads to lower prediction performance than state-of-the-art models. NaLP and HINGE split tuples into a primary tuple and auxiliary key-value pair attributes, which discards the semantic information of positions and roles and thus lowers prediction performance.

HypE, a generalization of SimplE, accounts for differences in position but not in role. RAM, which considers only roles, outperforms HypE, further illustrating the importance of role semantics in link prediction. NeuInfer and RAM model knowledge hypergraphs with neural network-based and tensor decomposition-based methods, respectively, and thus capture differences in role semantics but not positional information. This lack of positional information leads to worse results than those of PosKHG, which underscores the significance of positional information for knowledge hypergraph modeling.

To demonstrate the importance of position and role information in link prediction, we conducted an ablation study that separately excludes position and role information; the resulting variants are labeled PosKHG\(\circledast\) and PosKHG\(\divideontimes\), respectively. As shown in Table 4, PosKHG\(\circledast\) still outperforms RAM, which considers only role semantics, and PosKHG\(\divideontimes\) still outperforms HypE, which considers only positional semantics. However, both variants perform worse than the full PosKHG, which supports our conclusion that both position and role information are essential for knowledge hypergraph modeling.

6.2.2 Link Prediction with Different Arities

As shown in Fig. 4, we further compared PosKHG with other models on knowledge hypergraphs with fixed arity, using JF17K-3 and JF17K-4, subsets of JF17K that contain only tuples of a specific arity. Among translational models, we include only RAE, an improved version of m-TransH. We also use the tensor decomposition model GETD and the neural network models NaLP, HINGE, and NeuInfer as baselines.

Table 5 shows the performance on knowledge hypergraph data with fixed arity. The tensor decomposition model generally outperforms the other baselines, likely owing to its strong expressiveness. PosKHG consistently achieves state-of-the-art performance on all benchmark datasets, which we attribute to its consideration of both position and role.

Table 5 presents the results of directly predicting tuples of different arities after training on the entire knowledge hypergraph dataset. The RAM model considers role semantics but not positions, while GETD, an extension of the TuckER model, only considers positions but not roles.

PosKHG performs best on relations of all arities in FB-AUTO, and on JF17K it performs best on high-arity relations. This is likely due to noise introduced by low-arity data during training for high-arity predictions. On average, PosKHG improves over RAM by 4.1% across all arities, demonstrating the importance of considering both positional and role information for link prediction in knowledge hypergraphs.

6.2.3 Link Prediction in Knowledge Graphs

As shown in Table 6, PosKHG achieves the second-best MRR and H@1 results and performs comparably on FB15k. Although PosKHG does not achieve the best performance on binary datasets, it performs similarly to models designed for binary relations, which shows that its design, which considers both position and (implicit) role, also applies to knowledge graphs.

6.2.4 Sensitivity of Hyperparameters

Figure 5 investigates the influence of critical hyperparameters on JF17K: the embedding dimensionality (d), the latent space size (L), and the multiplicity of entity embeddings (m). Because the full expressiveness of PosKHG guarantees its learning capacity, it consistently performs well when d exceeds 30 (Fig. 5a). As Fig. 5b shows, a small latent space (\(L \ge 5\)) suffices for robust performance, which confirms the effectiveness of role semantic relatedness in knowledge hypergraphs. Figure 5c shows that performance peaks at \(m=3\) or 4, where the multiplicity of entity embeddings provides the best coverage of entity semantics. Larger values of m lead to overfitting and harder optimization. Existing bilinear models also use small values of m, e.g., \(m = 1\) in DistMult and \(m = 2\) in SimplE. Therefore, an appropriate value for m is 3 to 5, whereas the optimal embedding dimensionality and latent space size depend on the scale of the dataset.

7 Conclusion

In this paper, we propose PosKHG, a link prediction model for knowledge hypergraphs that learns embeddings at both the role and position levels. By leveraging a latent space that captures the semantic relatedness of roles and positions and a relation matrix that captures entity compatibility, PosKHG achieves accurate link prediction, full expressiveness, and more general modeling of knowledge hypergraphs. Experimental results on two knowledge hypergraph datasets and four knowledge graph datasets demonstrate the superiority and robustness of PosKHG.

Availability of data and materials

Available.

References

Fatemi B, Taslakian P, Vazquez D, Poole D (2020) Knowledge hypergraphs: prediction beyond binary relations. In: IJCAI

Balazevic I, Allen C, Hospedales T (2019) TuckER: tensor factorization for knowledge graph completion. In: EMNLP, pp 5188–5197

Bordes A, Usunier N, Garcia-Duran A, Weston J, Yakhnenko O (2013) Translating embeddings for modeling multi-relational data. In: NeurIPS

Bordes A, Usunier N, Garcia-Duran A, Weston J, Yakhnenko O (2013) Translating embeddings for modeling multi-relational data. In: Proceedings of the 26th international conference on neural information processing systems, pp 2787–2795

Cao Z, Xu Q, Yang Z, Cao X, Huang Q (2021) Dual quaternion knowledge graph embeddings. In: Proceedings of the AAAI conference on artificial intelligence 35(8):6894–6902

Chen Y, Minervini P, Riedel S, Stenetorp P (2021) Relation prediction as an auxiliary training objective for improving multi-relational graph representations. arXiv preprint arXiv:2110.02834

Ding B, Wang Q, Wang B, Guo L (2018) Improving knowledge graph embedding using simple constraints. In: Proceedings of the 56th annual meeting of the association for computational linguistics, pp 110–121

Ebisu T, Ichise R (2018) TorusE: knowledge graph embedding on a lie group. In: Proceedings of the 32nd AAAI conference on artificial intelligence, pp 1819–1826

Guan S, Jin X, Wang Y, Cheng X (2019) Link prediction on n-ary relational data. In: Proceedings of the 2019 world wide web conference, pp 583–593

Guan S, Jin X, Guo J, Wang Y, Cheng X (2020) NeuInfer: knowledge inference on n-ary facts. In: Proceedings of the 58th annual meeting of the association for computational linguistics, pp 6141–6151

Kazemi SM, Poole D (2018) SimplE embedding for link prediction in knowledge graphs. In: NeurIPS

Lacroix T, Usunier N, Obozinski G (2018) Canonical tensor decomposition for knowledge base completion. In: ICML

Lin Y, Liu Z, Sun M, Liu Y, Zhu X (2015) Learning entity and relation embeddings for knowledge graph completion. In: Proceedings of the 29th AAAI conference on artificial intelligence, pp 2181–2187

Liu Y, Yao Q, Li Y (2021) Role-aware modeling for n-ary relational knowledge bases. arXiv preprint arXiv:2104.09780

Nathani D, Chauhan J, Sharma C, Kaul M (2019) Learning attention-based embeddings for relation prediction in knowledge graphs. In: Proceedings of the 57th annual meeting of the association for computational linguistics, pp 4710–4723, Florence, Italy

Nguyen DQ, Nguyen TD, Nguyen DQ, Phung D (2018) A novel embedding model for knowledge base completion based on convolutional neural network. In: Proceedings of the 16th annual conference of the north American chapter of the association for computational linguistics: human language technologies, pp 327–333

Nickel M, Tresp V, Kriegel H-P (2011) A three-way model for collective learning on multi-relational data. In: Proceedings of the 28th international conference on machine learning, pp 809–816

Rosso P, Yang D, Cudré-Mauroux P (2020) Beyond triplets: hyper-relational knowledge graph embedding for link prediction. In: Proceedings of the web conference 2020, pp 1885–1896

Peng Y, Choi B, Xu J (2021) Graph learning for combinatorial optimization: a survey of state-of-the-art. Data Sci Eng 6(2):119–141

Rossi A, Barbosa D, Firmani D, Matinata A, Merialdo P (2021) Knowledge graph embedding for link prediction: a comparative analysis. ACM Trans Knowl Discov Data 15(2):1–49

Sun Z, Deng Z-H, Nie J-Y, Tang J (2019) RotatE: knowledge graph embedding by relational rotation in complex space. In: ICLR, Article 14.

Tran HN, Takasu A (2019) Analyzing knowledge graph embedding methods from a multi-embedding interaction perspective. arXiv preprint arXiv:1903.11406

Trouillon T, Welbl J, Riedel S, Gaussier E, Bouchard G (2016) Complex embeddings for simple link prediction. In: Proceedings of the 33rd international conference on machine learning, pp 2071–2080

Wang Q, Mao Z, Wang B, Guo L (2017) Knowledge graph embedding: a survey of approaches and applications. IEEE Trans Knowl Data Eng 29(12):2724–2743

Wang Z, Zhang J, Feng J, Chen Z (2014) Knowledge graph embedding by translating on hyperplanes. In: Proceedings of the 28th AAAI conference on artificial intelligence, pp 1112–1119

Wawrzinek J, Pinto JMG, Wiehr O et al (2020) Exploiting latent semantic subspaces to derive associations for specific pharmaceutical semantics. Data Sci Eng 5(4):333–345

Wen J, Li J, Mao Y, Chen S, Zhang R (2016) On the representation and embedding of knowledge bases beyond binary relations. In: Proceedings of the twenty-fifth international joint conference on artificial intelligence, pp 1300–1307

Wu S, Zhang Y, Gao C et al (2020) GARG: anonymous recommendation of point-of-interest in mobile networks by graph convolution network. Data Sci Eng 5(4):433–447

Xiao H, Huang M, Zhu X (2016) TransG: a generative model for knowledge graph embedding. In: Proceedings of the 54th annual meeting of the association for computational linguistics, pp 2316–2325

Liu Y, Yao Q, Li Y (2020) Generalizing tensor decomposition for n-ary relational knowledge bases. In: Proceedings of the web conference 2020, pp 1104–1114

Zhang Z, Cai J, Zhang Y, Wang J (2020) Learning hierarchy-aware knowledge graph embeddings for link prediction. In: Proceedings of the AAAI conference on artificial intelligence 34(03):3065–3072

Zhang R, Li J, Mei J, Mao Y (2018) Scalable instance reconstruction in knowledge bases via relatedness affiliated embedding. In: Proceedings of the 2018 World Wide Web conference, pp 1185–1194

Zhang F, Wang X, Li Z, Li J (2021) TransRHS: a representation learning method for knowledge graphs with relation hierarchical structure. In: Proceedings of the twenty-ninth international conference on international joint conferences on artificial intelligence, pp 2987–2993

Zhang Y, Yao Q, Dai W, Chen L (2020) AutoSF: searching scoring functions for knowledge graph embedding. In: 2020 IEEE 36th international conference on data engineering (ICDE). IEEE, pp 433–444

Zhu M, Shen D, Xu L et al (2021) Scalable multi-grained cross-modal similarity query with interpretability. Data Sci Eng 6(3):280–293

Acknowledgements

This work is supported by the National Key R&D Program of China (2020AAA0108504) and National Natural Science Foundation of China (61972275).

Funding

This study was funded by the National Key R&D Program of China (2020AAA0108504) and National Natural Science Foundation of China (61972275).

Author information

Contributions

All the authors listed in this manuscript have made substantial contributions to this article and signed appropriately.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethics approval

Not applicable.

Consent to participate

All authors agreed to participate.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, Z., Wang, X., Wang, C. et al. PosKHG: A Position-Aware Knowledge Hypergraph Model for Link Prediction. Data Sci. Eng. 8, 135–145 (2023). https://doi.org/10.1007/s41019-023-00214-x

DOI: https://doi.org/10.1007/s41019-023-00214-x