Abstract

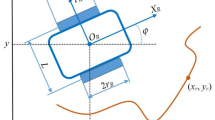

This paper proposes a novel trajectory tracking control algorithm for mobile robots that combines reinforcement learning with PID control. Q-learning and PID are used together to track the desired trajectory of the mobile robot. The proposed method can reduce the computational complexity of the Q-learning reward function and improve the tracking accuracy of the mobile robot. Simulation tests demonstrate the effectiveness of the proposed algorithm.
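For orientation, the two building blocks named in the abstract can be sketched in their standard textbook forms. This is a minimal illustration only, not the authors' algorithm: the abstract does not say how Q-learning and PID are coupled, so the sketch keeps them separate. The class `PID`, the function `q_update`, the gains, the learning rate `alpha`, the discount factor `gamma`, and the toy states and actions are all assumptions introduced here.

```python
from collections import defaultdict


class PID:
    """Textbook discrete-time PID controller (gains are illustrative assumptions)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = 0.0

    def step(self, error):
        # Accumulate the integral term and take a finite-difference derivative.
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Standard tabular Q-learning update; returns the new Q(state, action)."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Toy usage with hypothetical values: one PID step on a tracking error of 0.5,
# and one Q-learning update on an initially empty table.
pid = PID(kp=1.0, ki=0.0, kd=0.0, dt=0.1)
control = pid.step(0.5)

q_table = defaultdict(float)
new_q = q_update(q_table, state=0, action=1, reward=1.0, next_state=1, actions=[0, 1])
```

With a purely proportional gain the control output equals the error scaled by `kp`, and the first Q-learning update moves the table entry a fraction `alpha` of the way toward the observed reward.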

Change history

22 January 2020

In the article “Trajectory Tracking Control for Mobile Robots Using Reinforcement Learning and PID” by Shuti Wang, Xunhe Yin, Peng Li, Mingzhi Zhang and Xin Wang (Iranian Journal of Science and Technology, Transactions of Electrical Engineering, https://doi.org/10.1007/s40998-019-00286-4), there is an error on page 5. The erratum corrects this error.

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities under Grant 2019JBM004.

Cite this article

Wang, S., Yin, X., Li, P. et al. Trajectory Tracking Control for Mobile Robots Using Reinforcement Learning and PID. Iran J Sci Technol Trans Electr Eng 44, 1059–1068 (2020). https://doi.org/10.1007/s40998-019-00286-4

DOI: https://doi.org/10.1007/s40998-019-00286-4