Abstract

Laser powder bed fusion of metals (PBF-LB/M) is a process widely used in additive manufacturing (AM). It is highly sensitive to its process parameters directly determining the quality of the components. Hence, optimal parameters are needed to ensure the highest part quality. However, current approaches such as experimental investigation and the numerical simulation of the process are time-consuming and costly, requiring more efficient ways for parameter optimization. In this work, the use of machine learning (ML) for parameter search is investigated based on the influence of laser power and speed on simulated melt pool dimensions and experimentally determined part density. In total, four machine learning algorithms are considered. The models are trained to predict the melt pool size and part density based on the process parameters. The accuracy is evaluated based on the deviation of the prediction from the actual value. The models are implemented in python using the scikit-learn library. The results show that ML models provide generalized predictions with small errors for both the melt pool dimensions and the part density, demonstrating the potential of ML in AM. The main limitation is data collection, which is still done experimentally or simulatively. However, the results show that ML provides an opportunity for more efficient parameter optimization in PBF-LB/M.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Additive manufacturing (AM) is a rapidly growing field with a double-digit market growth for the last decade that allows the simple production of complex three-dimensional geometries [1]. Nevertheless, there are numerous challenges in order to establish the technology on a broad scale on the market and to qualify it for serial production [2]. For the transition to the production of ready-to-install components and series production, it is important to speed up production, increase the predictability and repeatability of the process, and improve quality assurance [3]. In the area of manufacturing metallic parts laser powder bed fusion of metals (PBF-LB/M) is the most commonly used process in the AM industry [4]. The PBF-LB/M process can be used to produce high quality components. The complete melting of the material enables the production of components with high density (> 99.9%) and similar or higher tensile strength than conventional castings [5].

The PBF-LB/M process is governed by a variety of thermal and physical phenomena during the build process depending e.g. on the parameters used or the orientation of the part in the build space [6]. Hence, the determination of parameter combinations that enable the production of components with the highest quality is an essential part of process development. With more than 50 parameters that influence the process and the resulting part properties a trial-and-error-approach is not practical [3].

A common approach in the literature is the experimental investigation by using design of experiments (DOE) techniques to minimize the experimental effort [7]. In AM this procedure is mainly used to investigate the influence of the process parameters (e.g. laser power or laser speed) on the part properties (e.g. surface roughness or tensile strength) and to improve the quality of the final part [8]. Nevertheless, the production of test components always requires the use of material, machine time and personnel for the pre- and post-processing of the experiments, which quickly results in high costs. Furthermore, computer based numeric process simulations are used to investigate the influence of different parameters and determine process windows that provide optimized part properties. The finite element method (FEM) is a widely used approach in the literature to create digital models and simulate AM processes [6]. Due to the high complexity of the process, PBF-LB/M simulations can usually be divided into macro-, meso- or microscale approaches. Essentially, they differ in the size scale and phenomena considered [9]. Common simulation objectives are to calculate the development of inherent strains, possible component distortions and the temperature distribution within the component. However, the main disadvantage of this approach is that there is always a trade-off between result accuracy and computational effort [10].

Another approach that has been increasingly used in the near past is machine learning (ML). ML is an essential subfield of the growing research area artificial intelligence that explores the use of algorithms to enable systems to learn from data and autonomously adapt and improve themselves using statistical and mathematical principles [11]. The goal is to create models that are able to make decisions and predictions for new, unknown instances based on certain training data [12]. Due to growing data volumes and the multitude of influencing parameters, more and more application areas for ML algorithms are emerging in AM [13]. One approach for parameter optimization in AM is to investigate direct correlations between the process parameters and the resulting part properties using ML [14, 15].

With the goal of reducing the high financial and time effort related to current approaches, the use of ML techniques is investigated in this work. ML models show great potential to find relations between process parameters and part quality, but still require a high experimental effort to generate training data [14]. Hence, this work investigates the use of simulated training data in order to generate large data sets with significantly less effort. Therefore, ML algorithms trained with a mix of experimental and simulated data are used to relate the PBF-LB/M process parameters to both the resulting melt pool dimensions (in-process data) and the final part density (post-process data). In the literature, laser power and laser speed are often considered as relevant process parameters for the PBF-LB/M process in order to investigate the influence of the process parameters on the melt pool geometry and temperature distribution [8, 10]. Some studies also consider the influence of the hatch distance [16]. However, since the simulation of a single track is used to generate the melt pool data in order to minimize the computation time (3 to 4 times faster than a double track), the parameter is neglected. The goal is to use the trained models to calculate predictions for the melt pool dimensions and the component density for unknown parameter combinations. Moreover, the capability of the investigated ML approaches for describing the correlations is compared.

2 Methodology

Figure 1 shows the procedure used within this work. The PBF-LB/M process with the widely used titanium alloy Ti-6Al-4 V is considered. The data basis is provided on the one hand by experimental data from the literature of [8] and [16] and on the other hand by simulatively generated melt pool data based on the process model of [17]. The data set of [8] contains measurements regarding the resulting melt pool dimensions depending on laser power and speed for Ti-6Al-4V and is used to validate the accuracy of the simulation model. Furthermore, the measured values for the resulting component density (33 data points) of [16] of Ti-6Al-4V in dependence of laser power and speed are used as training data for the ML model to predict the component density.

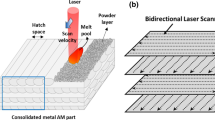

2.1 Process simulation

The FEM simulation is built in COMSOL Multiphysics 5.2a and maintained as simple as possible to minimize calculation times. As shown in Fig. 2a, a single layer of powder deposited on the base plate is considered. The model consists of a powder and bulk material phase. Due to FEM restrictions the powder layer is modelled as a homogenous material. The respective material properties for Ti-6Al-4V of [4] are used to model the powder, bulk and melt. The laser is modeled as a Gaussian distributed heat source and moves along the x-axis [17]. A track length of one millimeter is considered to ensure a continuous melt pool formation. The resulting melt pool dimensions of the simulation are evaluated at the end of the melt track and saved. Figure 2b shows an example of the evaluated melt pool dimensions length xmp, width ymp and depth zmp of a simulation which are used for the training of the ML models.

The process parameters used for the simulation are summarized in Table 1. For the generation of melt pool data, simulations are performed with varying combinations of laser power and speed. On the one hand, the 33 combinations from the work of [16] are used and, on the other hand, 100 randomly generated parameter combinations are simulated. For this purpose, different combinations are generated equally distributed in the considered power and velocity interval. In total, 133 data points with melt pool results for different process parameter combinations are generated in this way as a basis for training ML algorithms.

2.2 Machine learning models

In the context of this work, supervised learning algorithms will be focused on because the data set contains information on both the input and output values. Additionally, regression algorithms are considered since the goal is to predict numeric values. The ML models for linear regressions (LR), decision tree regressions (DTR), random forest regressions (RFR), and a multilayer perceptron (MLP) are compared [12]. After training, the models shall be able to calculate predictions for the resulting characteristics for unknown parameter combinations. Accordingly, the laser power and speed are used as input values and the melt pool dimensions and density as output values. However, the models for the melt pool and density prediction are built and trained separately, since only 33 data points from [16] are available with density information and 133 data points can be used for the melt pool prediction. Furthermore, one model per dataset is trained for each ML approach considered. The implementation of the models is done in python (version 3.8). The main libraries used are pandas for data processing and scikit-learn [18] for building and training the ML algorithms.

The evaluation of the model accuracy is done using the root mean squared error (RMSE), which is a common criterion for evaluating the accuracy of regression algorithms and is calculated according to (1) from the squared distance between the prediction of the algorithm \(\widehat{{y}_{i}}\) and the actual value \({y}_{i}\) for m data points [11].

When training the models, GridSearch is used to examine the hyperparameters of the models to ensure that they are as accurate as possible for the use case and to prevent common ML issues such as overfitting and underfitting of the algorithms [11]. The training error is calculated using tenfold-cross-validation, where the training data set is split into 10 partial data sets, each of which is used to train one model in order to estimate the variance of the model during training [12]. For the melt pool prediction model, 70% of the 133 data points containing melt pool information are used for training. The remaining 30% of the data is held back for training and then used to estimate the generalization ability of the algorithm by calculating the RMSE based on the difference between the model prediction and the simulated melt pool dimensions [11].

For density prediction, a slightly different approach is taken for estimating the generalization ability due to the small amount of data. The 33 data points that contain density information are used entirely for training the algorithm in order to give the model a sufficiently large data base. Therefore, for the estimation of the generalization ability of the models, a purely qualitative evaluation is performed. For this, the trained models are applied to the 100 randomly created parameter combinations and the resulting density is predicted. At last, the predicted values are plotted on the process map of [16] and the predicted values are compared to the experimental measurements to evaluate the algorithms capability of reproducing the dependencies between the process parameters and the resulting part density as the experiments show.

The comparison is done using the normalized hatch distance h* and the normalized energy density E* according to [16]. Equation (2) shows the calculation of h* using the laser radius rb and the hatch distance h. E*, on the other hand, is calculated according to (3) from the amount of energy absorbed by the material a, the density ρ, the specific heat capacity cP, the melting temperature Tliq and the initial temperature T0 of the material, as well as the laser power PL and the laser velocity vL. This method of calculation and representation in the process map of [16] allows linking the material properties, the process parameters and the resulting component density in one diagram.

3 Results

Initially, the simulation results are compared to the measured values of [8] regarding the accuracy of the calculated melt pool dimensions. The comparison shows that the simulation model is able to reproduce the width and depth of the melt pool with an error between 7 and 30% in the considered energy density range. The error increases significantly at extreme energy densities. Overall, the correlation between laser parameters and melt pool dimensions observed in the measured values is also correctly reproduced by the simulation, which is decisive for the evaluation for the use of ML techniques in this area.

The data set is analyzed with the goal of understanding the generated data set before the algorithms are trained, to detect outliers in the data and to identify potential correlations in the data. For this purpose, the Pearson correlation coefficients between the process parameters and the target variables are calculated. This factor describes the linear correlation between two variables with a value between -1 and 1. A value of 1 indicates a strong positive and -1 strong negative correlation between the variables under consideration.

The results for the generated data set are summarized in Table 2. Overall, higher values are evident for the correlation between the velocity and the target variables than for the laser power. Regarding the melt pool prediction, the Pearson coefficients for the laser power indicate a weak positive correlation to the melt pool dimensions. In contrast, the dependence of the melt pool on the laser speed is significantly larger. Accordingly, the coefficients for length x describe a strong positive and width y and depth z a strong negative linear correlation to the velocity. This means that with increasing speed, the melt pool length increases and the width and depth decrease. The analysis of the density data shows a similar behaviour. The correlation factor between component density and power is negligible, whereas the factor of 0.67 indicates a much stronger correlation with speed.

3.1 Melt pool prediction

The trained models for melt pool prediction are compared using the RMSE. In the scope of the work, it has been shown that by working out suitable hyperparameter combinations using GridSearch for the ML approaches, the RMSE of the models can be reduced by up to 85% (for the MLP). This illustrates the importance of this step for building ML models. In the following, the approaches are compared using the models with optimized hyperparameters. For the comparison, the melt pool width and depth are looked at primarily, as these are often considered as relevant dimensions for the investigation of critical energy densities [8].

The determined training and generalization errors for the models are shown in Table 3. The training error is averaged over the melt pool dimensions. For a detailed evaluation of the generalization ability, both the averaged error and the respective error per melt pool dimension are calculated. For all models, the training error is slightly lower than the generalization error, as expected, because ML algorithms can usually approximate known data points more accurately [11]. If the difference between the errors is very large, this may indicate that the algorithm is overfitting the training data set. However, due to the regulation of the models and the relatively small difference, overfitting can be ruled out here. Essentially, all models are able to represent the melt pool dimensions in a generalized way based on the process parameters. For all models the error is largest for predicting the melt pool length. Examination of the simulation values shows that the simulation model exhibits inconsistent and non-physical behaviour in x-direction, which could be caused by the variety of simplifications in the simulation made in order to minimize the computation time. Consequently, these values are difficult to represent by the ML approaches. However, the simulation results of the relevant values (melt pool width and depth) are robust and approximated accurately by the ML models.

The LR shows the most accurate prediction for these parameters. This can be explained by the high negative linear correlation of the quantities with the speed, which allows a good approximation by the linear approach. The error of the RFR model is overall only slightly higher than the LR with respect to the width and depth, but at the same time about 20% lower with respect to the length description. As expected, by training multiple DTR, the RFR approach is more accurate than training a single DTR, which highlights the power and flexibility of the RFR approach [12]. Overall, the RFR provides the most accurate modelling of the considered approaches for describing the process parameter to melt pool relationship.

For the RFR, the predicted values for the melt pool width and depth are visualized in Fig. 3. Each point represents one combination of laser power and speed from the training or test set for which the predictions of the model's melt pool dimensions (y-axis) are plotted against the simulated values (x-axis). The diagonal line marks the area where the prediction matches the simulated value. Consequently, the closer the points are to the diagonal, the more accurate is the prediction of the model. The plots show that the predictions of the model spread evenly and without significant outliers around the optimum line. This indicates a good approximation of the melt pool dimensions by the algorithm. Furthermore, a homogeneous distribution of the training and test data points is shown, which confirms that the model is able to transfer the trained correlations to unknown data without significant loss in accuracy (generalization capability). However, it is noticeable that the data points are slightly above the diagonal for low values of width and depth, and slightly below the diagonal for high values. Thus, the model is slightly less accurate in these fringe areas.

3.2 Density prediction

The ML models to predict the resulting part density are trained with all 33 experimentally determined density values to investigate whether the algorithms are able to correlate the resulting component density in the PBF-LB/M process with the laser parameters. The DTR approach will not be considered further in the following, as the approach is very similar to the RFR and the melt pool prediction has already demonstrated that the RFR provides more accurate approximations by training multiple DTR.

The calculated training errors are summarized in Table 4. Here, the RMSE describes the deviation of the prediction from the resulting component density in percentage points. Overall, the models provide predictions with a small training error of approximately ± 0.2 to ± 0.3 percentage points. The RFR has the largest training error and standard deviation. This may be an indicator of insufficient data, so that the algorithm is not able to detect the relationships between the process parameters and the resulting density during training. The MLP shows the best results with a roughly 30% lower RMSE compared to the other models. Overall, the RMSE with small standard deviation shows that the relatively small amount of data is sufficient to obtain reproducible and accurate results for density prediction with the MLP.

The results of the qualitative estimation of the generalization ability for the density prediction are plotted in Fig. 4 for the LR and MLP model. Here, the limits of LR become clear. The algorithm is not capable of recognizing more complex relationships in the data and approximates the relationship between E* and the density with clear boundaries, although the experimentally determined values show that relatively broad transition areas between the density categories are certainly recognizable. Furthermore, the critical energy density for lack-of-fusion pores is not represented by the predictions of the model. Finally, it is evident that no values below 98.5% are predicted for high energy densities, although several experimental measured values occur in this area. In contrast, the MLP algorithm allows the accurate mapping of the process parameter to part density relationship. The predictions show very similar distributions to the experimental data. First, the transition ranges between density categories are reflected in the models predictions, and second, the density categories are similarly distributed across the energy density as in the experimental data. Only the 99% to 99.5% range extends slightly further toward lower energy densities in the MLP predictions than in the measured data. In addition, the boundary for lack-of-fusion pores determined by [16] is reproduced by the model. Hence, to the right of the boundary, no component density above 99.5% is predicted by the model. In addition, density values lower than 98.5% are correctly predicted for high energy densities. Overall, the predictions of all models show the essential correlation that the resulting component density decreases with increasing energy density.

4 Discussion

The results of the work show that ML algorithms are generally capable of calculating predictions for the melt pool dimensions and component density for various combinations of laser power and speed with a small error.

Moreover, the potentials and limitations of the different algorithms become clear. LR is very well suited for describing linear relationships, as can be seen in the prediction of melt pool width and depth. However, the model is restricted to this range of applications and offers only limited possibilities for adapting the model to different data sets. The density prediction illustrates the limitations of the approach. As expected, the DTR is a little less accurate and less robust than the RFR. In addition, training the DTR has shown that regulation of the model is important, as the approach has a strong tendency to overfit. In principle, however, relatively accurate predictions of the melt pool dimensions are possible with both approaches. The RFR even achieves the most accurate prediction overall for the melt pool width and depth. The potentials of the MLP approach are particularly evident in the density prediction. While the other approaches do map the essential relationship, the transitions between density categories visible in the experimental data are only apparent with the MLP approach. Overall, the flexibility of the MLP approach allows the best approximation of the resulting component density. However, the application of the algorithms is limited to the considered parameter ranges of power and speed (see Table 1) as well as the used material Ti-6Al-4V. For parameter combinations outside these intervals, the calculated accuracy of the models is not ensured. To extend the process window to these ranges, further simulation and experimental data are needed.

The results shows that the trained ML models allow the prediction of melt pool dimensions and component density for varying combinations of laser power and scan speed. The prediction with the trained models for new parameter combinations is done with almost no time required. For the application of ML algorithms, mainly the data generation and preparation is time consuming. However, once the ML models are trained, they allow predictions in seconds for any combination of parameters. Thus, compared to simulative and experimental methods, ML approaches offer a way for more efficient process modeling of the PBF-LB/M process after one-time data collection, eliminating long simulation times and the fabrication of test components for each parameter combination.

Nevertheless, the work shows that ML algorithms cannot be used as a “black box” function to describe the PBF-LB/M process. Accordingly, the analysis of data and results requires a comprehensive understanding of the process and its physical boundaries in order to make correct interpretations, as shown by the evaluation of the melt pool and density prediction. Furthermore, the training of ML models requires knowledge of the algorithms in order to obtain the best possible predictions. As the training of the models shows, the right model selection is important, as well as knowledge about typical ML issues such as over- or underfitting, in order to detect and avoid them.

5 Conclusion

In this work, the use of ML algorithms for more efficient determination of individual process parameters for the PBF-LB/M process was investigated. Four different ML approaches were used to study the influence of the process parameters laser power and scan speed on the melt pool dimensions and component properties part density. Both simulatively generated and experimentally collected data were used as the data basis.

The results of the work show that the ML models provide relatively accurate predictions for both the melt pool geometry and the resulting component density. The melt pool length can be predicted with an error of ± 24.9 µm, the melt pool width of ± 0.3 µm and the melt pool depth of ± 0.8 µm. For the prediction of the component density, an error of ± 0.2 percentage points is determined and the qualitative comparison with experimental data shows that the ML models are able to correctly reproduce the resulting density in dependence of the normalized energy density.

In summary, the results demonstrate that ML algorithms can be used in the field of PBF-LB/M manufacturing to directly model relationships between the process parameters used and the resulting process characteristics and part properties. Thus, ML techniques have the potential to make the acquisition of suitable process parameters for AM more efficient than current approaches, with the generation of training data being the main limitation of the approach. Overall, however, the use of ML algorithms should rather be seen as a supplement to previous approaches, with which the PBF-LB/M process can be investigated more efficiently.

In further work, the accuracy of the models needs to be further increased and the application of ML algorithms in the AM field needs to be extended. The first can be done by the generation of further simulation and experimental data in order to enhance the data basis for the training. The second can be achieved, for example, by considering larger parameter intervals, other materials, and additional process parameters such as the hatch distance.

Finally, the application of ML techniques in industry needs to be simplified. So far, the use of ML techniques requires both computer science and AM expertise, which limits its use in the industry due to shortage of professionals combining both fields. Here, the integration of pre-trained ML models into existing or new software programs can break the barrier for the use of ML in the AM industry and fully exploit the potential of ML in AM. For example, trained ML models can be used in material research to make the development of new alloys more efficient by using ML-based predictions of appropriate process windows to reduce the experimental effort to parameter validation.

Data availability statement

The raw and processed data required to reproduce these findings are available upon request to the corresponding author.

References

Wohlers T, Campbell RI, Diegel O, Kowen J, Mostow N, Fidan I (2022) Wohlers report 2022 3D printing and additive manufacturing: global state of the industry. Wohlers Associates, ASTM International, Washington, DC

Vafadar A, Guzzomi F, Rassau A, Hayward K (2021) Advances in metal additive manufacturing: a review of common processes, industrial applications, and current challenges. Appl Sci 11(3):1213. https://doi.org/10.3390/app11031213

Spears TG, Gold SA (2016) In-process sensing in selective laser melting (SLM) additive manufacturing. Integr Mater Manuf Innov 5(1):16–40. https://doi.org/10.1186/s40192-016-0045-4

Bartsch K, Herzog D, Bossen B, Emmelmann C (2021) Material modeling of Ti–6Al–4V alloy processed by laser powder bed fusion for application in macro-scale process simulation. Mater Sci Eng A 814:141–237. https://doi.org/10.1016/j.msea.2021.141237

Lachmayer R, Lippert RB, Fahlbusch T (2016) 3D-Druck beleuchtet: Additive Manufacturing auf dem Weg in die Anwendung. Springer Vieweg, Berlin, Heidelberg

Meier C, Penny RW, Zou Y, Gibbs JS, Hart AJ (2017) Thermophysical phenomena in metal additive manufacturing by selective laser melting: fundamentals, modeling, simulation and experimentation. Annu Rev Heat Transf 20(1):241–316. https://doi.org/10.1615/AnnualRevHeatTransfer.2018019042

Elsayed M, Ghazy M, Youssef Y, Essa K (2019) Optimization of SLM process parameters for Ti6Al4V medical implants. Rapid Prototyp J 25(3):433–447. https://doi.org/10.1108/RPJ-05-2018-0112

Dilip JJS et al (2017) Influence of processing parameters on the evolution of melt pool, porosity, and microstructures in Ti-6Al-4V alloy parts fabricated by selective laser melting. Prog Addit Manuf 2(3):157–167. https://doi.org/10.1007/s40964-017-0030-2

King W, Anderson AT, Ferencz RM, Hodge NE, Kamath C, Khairallah SA (2015) Overview of modelling and simulation of metal powder bed fusion process at lawrence livermore national laboratory. Mater Sci Technol 31(8):957–968. https://doi.org/10.1179/1743284714Y.0000000728

Ansari MJ, Nguyen D-S, Park HS (2019) Investigation of SLM process in terms of temperature distribution and melting pool size: modeling and experimental approaches. Materials. https://doi.org/10.3390/ma12081272

Joshi AV (2020) Machine learning and artificial intelligence. Springer, Cham

Géron A (2019) Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow: concepts, tools, and techniques to build intelligent systems. O’Reilly, Beijing, Boston, Farnham, Sebastopol, Tokyo

Meng L et al (2020) Machine learning in additive manufacturing: a review. JOM 72(6):2363–2377. https://doi.org/10.1007/s11837-020-04155-y

Park HS, Nguyen DS, Le-Hong T, van Tran X (2022) Machine learning-based optimization of process parameters in selective laser melting for biomedical applications. J Intell Manuf 33(6):1843–1858. https://doi.org/10.1007/s10845-021-01773-4

Mehrpouya M, Gisario A, Rahimzadeh A, Nematollahi M, Baghbaderani KS, Elahinia M (2019) A prediction model for finding the optimal laser parameters in additive manufacturing of NiTi shape memory alloy. Int J Adv Manuf Technol 105(11):4691–4699. https://doi.org/10.1007/s00170-019-04596-z

Herzog D, Bartsch K, Bossen B (2020) Productivity optimization of laser powder bed fusion by hot isostatic pressing. Addit Manuf 36:101494. https://doi.org/10.1016/j.addma.2020.101494

M. Zeyn, “Simulative Untersuchung der Wärmeentwicklung innerhalb eines Hatches im SLM-Verfahren,” Master thesis, HAW, Hamburg, 2020.

Pedregosa F et al (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Acknowledgements

This research was funded by the Federal Ministry of Education and Research (BMBF) (grant number 01IS21052C).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kuehne, M., Bartsch, K., Bossen, B. et al. Predicting melt track geometry and part density in laser powder bed fusion of metals using machine learning. Prog Addit Manuf 8, 47–54 (2023). https://doi.org/10.1007/s40964-022-00387-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40964-022-00387-3