Abstract

Data contamination and excessive correlations between regressors (multicollinearity) constitute a standard and major problem in econometrics. Two techniques enable solving these problems, in separate ways: the Gini regression for the former, and the PLS (partial least squares) regression for the latter. Gini-PLS regressions are proposed in order to treat extreme values and multicollinearity simultaneously.

Similar content being viewed by others

Notes

Observations can be eliminated block by block instead of one by one, see Tenenhaus (1998), p. 77.

The rank vector is obtained by replacing the values of y by their ranks (the smallest value of y being ranked 1 and the highest n).

There are two G-correlation coefficients (which are not symmetric) \(\Gamma _{xy}:=\text {cov}(x,R(y))/\text {cov}(x,R(x))\) and \(\Gamma _{yx}:=\text {cov}(y,R(x))/\text {cov}(y,R(y))\) in the same manner as the coginis \(\text {cov}(x,R(y))\) and \(\text {cov}(y,R(x))\). The two G-correlations are equal if the distributions are exchangeable up to a linear transformation. Note that in the remainder, only one cogini is used: \(\text {cog}(x,y)=\text {cov}(x,R(y))\).

If the condition of linearity is relaxed, the parametric and non-parametric Gini techniques are not necessarily equivalent.

Note that the weight vector \(w_j\) may also be derived from the minimization of the Gini index of the residuals, i.e. by the parametric Gini regression whenever the link between y and \(x_j\) is not linear.

The rank vector is homogeneous of degree zero in \(x_j\), \(R(x_j)=R(\lambda x_j)\) for \(\lambda >0\), as well as translation invariant, \(R(x_j)=R(x_j+a_j)\) with \(a_j=(a,a,\ldots ,a)\in \mathbb {R}^{n}\). Hence the standardization of the variables \(x_j\) enables the data to be purged of measurement errors of the following form \(\tilde{x}_j = \lambda x_j + a\), as in PLS1 and Gini2-PLS1.

We set for simplicity that \(\text {cov}(x_j+u,y)-\text {cov}(x_j,y) \approx \frac{\partial \text {cov}(x_j,y)}{\partial x_j}\). On this basis we compute the derivative of the weight \(w_{1j}\) and we deduce the variation of \(VIP_{1j}\). Note that if the weight is negative the converse is obtained: \(\text {if} \ w_{1j}^{PLS1} < 0 \ \text {and if} \ \text {cov}(x_j,u) \lessgtr 0 \ \Longrightarrow \ \tilde{VIP}_{1j} \gtrless VIP_{1j}.\)

Note that if the weight is negative: \(\text {if} \ w_{1j}^{Gini2} < 0 \ \text {and if} \ \text {cov}(x_j,u) \lessgtr 0 \ \Longrightarrow \ \tilde{VIP}_{1j} \lessgtr VIP_{1j}.\)

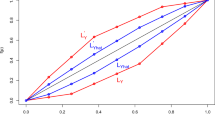

For the sake of simplicity, we only present the results for the first component \(t_1\). The results are similar for \(t_2\). Note that in all figures, the maximum value in the abscissa is 1, that is, \(1 \times 10^{4}\).

Note that the negative signs of the Gini correlations cannot systematically assess the negative correlation between two variables, see Yitzhaki (2003, p.293).

It is important to note that Gini-PLS regressions do not aim at detecting outliers. They allow for dealing with outliers without withdrawing them from the sample.

References

Bastien, P., V. Esposito Vinzi, and M. Tenenhaus. 2005. PLS generalised linear regression. Computational Statistics and Data Analysis 48: 17–46

Bry, X., C. Trottier, T. Verron, and F. Mortier. 2013. Supervised component generalized linear regression using a PLS-extension of the Fisher scoring algorithm. Journal of Multivariate Analysis 119: 47–60.

Choi, S.W. 2009. The effect of outliers on regression analysis: Regime type and foreign direct investment. Quarterly Journal of Political Science 4: 153–165.

Chung, D., and S. Keles. 2010. Sparse partial least squares classification for high dimensional data. Statistical Applications in Genetics and Molecular Biology 91, article 17.

Dixon, W.J. 1950. Analysis of extreme values. The Annals of Mathematical Statistics 2 (4): 488–506.

Durbin, J. 1954. Errors in variables. Review of the International Statistical Institute 22: 23–32.

John, G.H. 1995. Robust decision tree: Removing outliers from databases. In KDD-95 Proceeding, 174–179.

Olkin, I., and S. Yitzhaki. 1992. Gini regression analysis. International Statistical Review 602: 185–196.

Planchon, V. 2005. Traitement des valeurs aberrantes: concepts actuels et tendances générales. Biotechnologie Agronomie Société et Environnement 91: 185–196.

Russolillo, G. 2012. Non-Metric partial least squares. Electronic Journal of Statistics 6: 1641–1669.

Tenenhaus, M. 1998. La régression PLS théorie et pratique. Paris: Technip.

Schechtman, E., and S. Yitzhaki. 1999. On the proper bounds of the Gini correlation. Economics Letters 63 (2): 133–138.

Schechtman, E., and S. Yitzhaki. 2003. A family of correlation coefficients based on extended Gini. Journal of Economic Inequality 1: 129–146.

Wold, S., C. Albano, W. J. Dunn III, K. Esbensen, S. Hellberg, E. Johansson, and H. Sjöström. 1983. Pattern recognition: Finding and using regularities in multivariate data. In Proc. UFOST Conf., Food Research and Data Analysis, ed. J. Martens. Applied Science Publications: London.

Wold, S., H. Martens, and H. Wold. 1983. The multivariate calibration problem in chemistry solved by the PLS method. In Proc. Conf. Matrix Pencils, eds. A. Rnhe, and B. Kagstroem, 286–293. Berlin: Springer.

Yitzhaki, S. 2003. Gini’s mean difference: A superior measure of variability for non-normal distributions. Metron 61 (2): 285–316.

Yitzhaki, S., and E. Schechtman. 2004. The Gini instrumental variable, or the ’double instrumental variable’ estimator. Metron 52 (3): 287–313.

Yitzhaki, S., and E. Schechtman. 2013. The Gini Methodology: A Primer on a Statistical Methodology. Berlin: Springer.

Author information

Authors and Affiliations

Corresponding author

Additional information

This paper was presented at the AFSE conference in Marseille, June 2013. The authors would like to thank particularly Michel Simioni for very helpful comments about the simulation part of the paper. The authors also acknowledge Michel Tenenhaus for comments on the first draft of the paper and Ricco Rakotomalala for his advices and for sharing his Tanagra PLS codes.

Appendices

Appendix A: Proof of Proposition 2

1. Properties (o)–(vi) of PLS1: See Tenenhaus (1998).

2. Properties (o)–(vi) of Gini1-PLS1.

\({(o) {t_1} \ \bot \ \cdots \ \bot {t_h}:}\)

The proof is by mathematical induction. We follow Tenenhaus (1998, p. 101) for PLS1, except that in our case, \(\hat{U}_{(0)}:=R(X)\) [the residuals are issued from the rank vectors Eq. (7)].On the one hand, let us show that \({t_1} \ \bot \ {t_2}\):

since \(t_1^{\intercal } \hat{U}_{(1)} = \mathbf {0}\), where \(\mathbf {0}\) is the null (row) vector of size p. Suppose the following claim is true:

We have to show that [h+1] is true, i.e., \(t_{h+1}\) is orthogonal to all components \(t_1,\ldots ,t_{h}\). The relation [h] implies that \(t_{h}^{\intercal }\hat{U}_{(h)} = \mathbf {0}\), hence

According to Steps 2-h, the partial regressions imply, for all \(j=1,\ldots ,p\),

The relation (11) provides \(\hat{U}_{(h)} = \hat{U}_{(h-1)} - t_h\hat{\beta }_{(h)}^{\intercal }\), where \(t_h\hat{\beta }_{(h)}^{\intercal }\) is the \(n\times p\) matrix containing \(\hat{\beta }_{hj}t_h\) in columns, for all \(j=1,\ldots ,p\). Since [h] implies that \(t_{h-1}^{\intercal }\hat{U}_{(h-1)}=\mathbf {0}\) and that \(t_{h-1}^{\intercal } t_{h} = 0\), we get

Using [h], we find

Finally, [h] yields

(i) \({ w_\ell ^\intercal \hat{\beta }_{\ell } = 1, \ \forall \ell \in \{2,\ldots ,h\}:}\)

Let \(\hat{\beta }_h\) be the column vector whose elements are \(\hat{\beta }_{hj}\) for all \(j=1,\ldots ,p\). The components \(t_h\) are given by \(w_h^{\intercal }\hat{U}_{(h-1)}^{\intercal }=t^{\intercal }_h\), and so

For \(h=1\), we have \(w_1^{\intercal }X^{\intercal }=t^{\intercal }_1\), and so \(w_{1}^{\intercal } \hat{\beta }_{1}=w_1^{\intercal }\frac{R(X)^{\intercal } t_1}{t_1^{\intercal }t_1}\ne 1\) if \(R(X)\ne X\). For \(h > 1\), we get \(w_h^{\intercal }\hat{U}^{\intercal }_{(h)}=t^{\intercal }_h\). From expression (11), we have

and so \(w_{h}^{\intercal } \hat{\beta }_{h} = w_h^{\intercal }\frac{\hat{U}^{\intercal }_{(h)} t_h}{t_h^{\intercal }t_h} =\frac{t_h^{\intercal } t_h}{t_h^{\intercal }t_h} = 1\).

(ii) \({ w_h^{\intercal } \hat{U}^{\intercal }_{(\ell )} = \mathbf {0}, \ \forall \ell \geqslant h > 1:}\)

Relation (11), \(R(x_j)=\hat{\beta }_{1j}t_1 + \hat{\beta }_{2j}t_2 + \cdots + \hat{u}_{(h-1)j}\), yields

For \(h=\ell =1\), we get \(R(X) \equiv \hat{U}_{(0)}=t_1\hat{\beta }_{1}^{\intercal }+\hat{U}_{(1)}\), and so

For \(h=\ell >1\), using (i), we deduce from (14) that

For all \(\ell> h > 1\), expressions (i) and (13) yield

(iii) \({w_h^{\intercal } \hat{\beta }_\ell = 0, \ \forall \ell> h > 1:}\)

Due to relations (i) and (13), we get

For \(\ell> h > 1\), relation (ii) provides the result.

(iv) \({ w_h^{\intercal } w_\ell = 0, \ \forall \ell> h > 1:}\)

Relation (ii) yields \(w_h^{\intercal }\hat{U}_{(\ell )}^{\intercal }= \mathbf {0}\), for all \(\ell> h > 1\). Thus

(v) \({ t_h^{\intercal }\hat{U}_\ell = \mathbf {0}, \ \forall \ell \geqslant h \geqslant 1:}\)

(vi) \({ \hat{U}_{(h)} \ne \hat{U}_{(0)} \prod _{\ell =1}^{h} \left( \mathbb {I} - w_\ell \beta _\ell ^{\intercal }\right) , \ \forall h \geqslant 1:}\)

Let \(h = 1\), since \(\hat{U}_{(0)}\equiv R(X)\), by Eq. (14) we have

3. Properties (o)–(vi) of Gini2-PLS1.

All properties (o)–(v) are obtained in the same manner as in the Gini1-PLS1 case. As to property (vi), let \(h = 1\), since \(\hat{U}_{(0)}\equiv X\):

Appendix B

Rights and permissions

About this article

Cite this article

Mussard, S., Souissi-Benrejab, F. Gini-PLS Regressions. J. Quant. Econ. 17, 477–512 (2019). https://doi.org/10.1007/s40953-018-0132-9

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40953-018-0132-9