Abstract

The study was a pilot intervention to develop Year 5–8 students’ close reading and writing of literary texts using the T-Shape Literacy Model (Wilson and Jesson in Set Res Inf Teach 1:15–22, 2019). Students analysed text sets to explore how different authors use language to engender mood and atmosphere. The study used a single-subject design logic for repeated researcher-designed measures and a quasi-experimental, matched control group design for repeated standardised measures of reading and writing. Nine teachers and their classes participated. The schools were part of a large school improvement programme, using digital tools and pedagogy to accelerate students’ learning, in which the authors were research-practice partners. The schools all served low socio-economic status communities, and the majority of students were Māori (51%) and Pacific (28%). There was a large effect size on the overall score for the researcher-designed measure (effect size = 1.00) and for the close reading of single texts sub-score (effect size = 0.90). There was a moderate-to-high effect for students’ identification of language features (effect size = 0.75) but no significant effect on their synthesis scores. Students in the intervention significantly outperformed matched control group students in the standardised writing post-test (effect size = 0.65), but differences for the standardised reading comprehension test were not significant (effect size = 0.15). Results overall suggest the approach has promise for improving the metalinguistic knowledge, literary analysis and creative writing of younger and historically underserved groups of students.



Introduction

This paper reports the results of a teacher professional learning and development (TPLD) intervention to develop Year 5–8 students’ metalinguistic knowledge, literary analysis and creative writing. The intervention was based on the T-Shape Literacy Model (Wilson & Jesson, 2019), which proposes that students’ literacy learning can be enhanced when students read a range of texts (represented by the horizontal bar of the “T”) to explore a unifying concept in depth (represented by the vertical bar). The model was originally developed in response to a practical issue that arose in school improvement work with a cluster of primary schools serving low socio-economic communities in Aotearoa New Zealand. Teachers had responded positively to messages about increasing the focus on deeper comprehension but reported that exploring each text in more depth took longer, at the cost of the volume and variety of texts students could engage with. The T-Shaped Model proposes that this tension between depth and breadth can be reduced if teachers focus deeply on a smaller number of important aspects related to the texts. This can be done, for example, by taking a broad topic such as World War One and tightening the focus (e.g. “What does it mean to be a ‘hero’?”), or by analysing a set of literary texts with a special focus on authors’ evocation of mood and atmosphere, rather than by trying to give roughly equal weight to a wider range of literary elements in a single text. A key benefit of the model, we theorise, is that engaging with multiple examples of new knowledge in different texts will strengthen understanding.

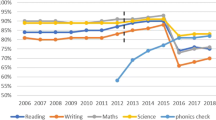

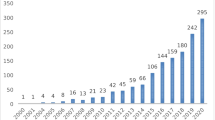

The study involved teachers from schools in a school improvement programme using digital tools and pedagogy to accelerate students’ learning. The goals of the overall digital programme were to support equity of access to technology for learning, enhanced academic outcomes, and preparation for future careers. The programme overwhelmingly involves schools serving economically poorer communities. Longitudinal and cross-sectional data show that, overall, students in the cluster have lower than average reading and writing achievement in the first year of school but make accelerated progress so that on standardised measures by Year 4 their reading is closer to but not yet at the national norm. From Year 4 onwards though, reading achievement improves at but not above the national rate, meaning that while performance relative to the national norms does not worsen, the gaps never fully close (Jesson et al., 2018).

The national context for the study is Aotearoa New Zealand, which was, in the past, commonly characterised in international comparisons of literacy as having high quality but low equity (Kirsch et al., 2002). The “low equity” aspect of that designation has persisted, but the “high quality” aspect has been increasingly called into question as our mean performance and the proportion of students represented in the highest bands relative to other nations have both decreased over time (Hood & Hughson, 2023). Still, in the most recent PIRLS round (Chamberlain et al., 2023), our Year 5 students had a mean reading score of 521, which was significantly higher than the PIRLS Scale Centrepoint of 500, the mean from the first cycle of PIRLS. Students here were also still overrepresented in the highest bands, with 11% and 41% achieving at least the “advanced” and “high” levels, compared to 7% and 36% of students internationally. However, at the other end of the achievement continuum, about twice as many students were in the very lowest band as internationally. This inequality between high and low reading achievement is more pronounced than it is in most other countries, and more strongly associated with economic advantage and disadvantage. Despite many Māori and Pacific children achieving high levels of success in such measures, their distribution curve is still to the left of other groups, meaning that overall they are under-represented in the highest bands and overrepresented in the lower bands.

These data raise important issues that we need to acknowledge. Firstly, we know that even the act of citing data from sources such as PIRLS about inequitable outcomes for Māori and Pacific children inadvertently runs the risk of reinforcing deficit discourses about the children, their whānau and their communities. We run this risk and show these data to highlight an important issue that cannot be solved if it is not acknowledged as a problem. To be clear, we see the inequities highlighted in the data as an indictment of wider schooling, social and economic systems, not of the children, their whānau or their communities. We see children as already having rich and well-developed literacies, albeit not necessarily those valued in formal school settings (Hetaraka et al., 2023). We see them, therefore, as inherently capable of achieving the highest levels of literacy, including, but not limited to, reading and writing. Māori children made up over half the students in the study. All participants in the study were from English-medium schools, which have been, and are, a key part of the colonising apparatus. This is particularly true with respect to the English curriculum, which is, by definition, delivered in, and is about, the language of the colonisers. We are very cognisant of evidence over many years showing positive benefits for Māori children learning in Te Reo Māori in Māori-medium schools. Nevertheless, the majority of Māori children still attend English-medium schools that implement the New Zealand Curriculum (Berryman et al., 2017) rather than Te Marautanga o Aotearoa, and we therefore have a duty as educators to strive for more equitable outcomes for Māori children in these English-medium schools too.

We are aware too that an intervention that focuses (mostly) on reading and writing might be seen as reinforcing a narrow view of literacies. We unashamedly do see reading and writing in English and/or Māori as vitally important and powerful literacies we want all young people to have command of. But that is not all we want. We agree with multi-literacies advocates who argue that, increasingly, to be literate requires knowledge and control of a wider variety of language modes and semiotic systems (e.g. Cazden et al., 1996) that include but are not limited to reading and writing. We also agree with those who argue children are inherently literate and already have meaning-making knowledge and abilities (Hetaraka et al., 2023) that can and should be used in classrooms as valuable “funds of knowledge” (Gutiérrez & Rogoff, 2003). We do not see valuing written literacies and valuing other literacies as incompatible positions. Indeed, for us, the fact that children have knowledge and skills for making and communicating sophisticated meaning in non-written forms (such as oral forms, carving and dance) reinforces the notion that they are perfectly capable of learning sophisticated levels of reading and writing as well. Arguing in favour of a more expansive view of literacies does not mean one does not also value reading and writing. Also, as we shall discuss later, engaging with other language modes and building on students’ existing literate funds of knowledge have powerful affordances for learning and development in reading and writing.

Many of the responses to concerns about literacy learning and teaching here and internationally have focused, understandably, on the critical early years. One reason for focusing on the later primary years in the present study is that seminal studies have identified a sizeable group of students (about 8%) who have secure foundational skills and who made normal progress in their literacy development in their earlier years of school, but who begin to fall behind their peers and/or curriculum expectations from about Grade 4 (Year 5) (Spencer & Wagner, 2018). This so-called “fourth-grade slump” (Chall, 1990) has long been evidenced here (Hattie, 2007; McNaughton, 2020) and overseas (Terry et al., 2023). This plateau in development is predicted by models of reading in which children’s acquisition of constrained skills, such as encoding and decoding, hits a ceiling, and in which curriculum and assessment foci are increasingly on unconstrained aspects such as deeper comprehension and writing for particular purposes and audiences (Paris & Hamilton, 2014; Terry et al., 2023).

Of relevance to current concerns about a national “literacy crisis” (e.g. Hood & Hughson, 2022; Johnston, 2023), which often foreground our declining performance in international assessments, is that it is actually these types of unconstrained skills that are assessed in PIRLS. Unpacking the criteria for the higher bands provides helpful insight into the reading construct used in these assessments. When reading literary texts, students who achieve the high international benchmark can: locate and identify significant actions and details embedded across the text; make inferences about relationships between intentions, actions, events, and feelings; interpret and integrate story events to give reasons for character actions, and describe character traits and feelings as they develop across the text; and recognise the use of some figurative language (e.g., metaphor, imagery). The construct used for assessing reading of information texts is comparably complex.

Local evidence from a recent round of the National Monitoring Study of Student Achievement supports the general picture of literary reading evident in PIRLS (NMSSA, 2019). Many students were able to make inferences, predictions, hypotheses, and evaluations about texts they read but struggled to support their view with specific references or evidence (NMSSA, 2021a). Year 4 and 8 students nationally had limited knowledge of language and design features of texts (e.g., figurative language, visual semiotics), seldom used appropriate meta-language, and struggled to explain how authors create effects (NMSSA, 2021b).

The aim of the intervention was to “raise the ceiling” by addressing some of these important unconstrained aspects of literacy in subject English. We use the term “subject English” to distinguish the learning area from the language. We employed a forward-looking and backward-mapping logic to do this. We began by considering what students are expected to know and do in subject English by the time they complete high school, and then examined patterns of instruction in the middle to upper primary years in terms of these future expectations. The logic is similar to expert-novice studies that have informed many fields of education. For example, disciplinary literacy scholars have often started their inquiry by exploring what, why and how professionals in their disciplines read and write (e.g. Shanahan & Shanahan, 2008). Once the practices of experts in a field are understood, the scholars have “back mapped” to identify outcomes and approaches appropriate at earlier stages of students’ apprenticeship, such as in high school history or chemistry classes. Looking forward to what literacies are expected of students in their later high school years, the most obvious feature is increased subject specialisation. Not only does the learning content in each subject become increasingly specialised, but so too do the texts students read and write, the language, structure and forms of representation employed in those texts, and the purposes for reading and writing (e.g. Shanahan & Shanahan, 2008). This is as true in subject English as it is in subjects like science (Lee & Spratley, 2010). Internationally, literary analysis is one of the most highly valued outcomes in high school English (Deane, 2020), and our national high-stakes English assessments for qualifications give considerable weight to students’ literary analysis of written, visual and oral texts, and their ability to use literary and language features in their own writing, speaking and visual presentations.
Students in the age range of the study (Year 5–8) are already expected to be able to demonstrate a “developing” (Curriculum Level 3) and “increasing” (Curriculum Level 4) understanding of how “texts are constructed for a range of purposes, audiences and situations”, how “texts can position a reader”, and how “language features are used for effect within and across texts” (Ministry of Education, 2007, Achievement Objectives fold-out chart).

Literary texts and literary ways of reading become increasingly important in subject English. Literary texts have particular affordances for developing the valued skills of making and justifying interpretations because, almost by definition, literary texts invite readers to take an interpretative stance (Goldman et al., 2015). A hallmark of literary texts is that their authors deliberately want the reader to get more out of the text than the content it explicitly communicates (Deane, 2020). Literary texts, it has been claimed, are designed to affect the reader in at least one of three ways: by making the familiar strange; by inducing a sense of recognition; and by transporting readers into an imagined world so fully that they occupy it vicariously (Miall & Kuiken, 1999). These characteristics of literary texts make them particularly effective contexts for developing students’ metalinguistic knowledge, which is a highly valued outcome in subject English.

Metalinguistic knowledge helps readers understand how authors achieve particular effects and provides writers with a repertoire of tools they can employ to achieve effects in their own writing. Close reading requires more than tacit linguistic knowledge because textual details are the main evidence base for building literary arguments. If students are to communicate their analyses of texts effectively, they need a metalanguage to identify the language features that were deployed, and the effects of those language features (Deane, 2020). Traditional teaching of metalinguistic concepts as a set of rules does not always support improvements in reading comprehension or writing (Graham et al., 2018; Schoenbach et al., 2012), but studies have shown positive effects when students are taught not only metalinguistic concepts but also how to apply their new knowledge as a tool for reading and writing (Myhill et al., 2012). Whether it is developed in the context of reading or writing or both, using metalinguistic knowledge as a tool is more important than being able “to describe a linguistic feature using grammatical terminology” (Van Lier, 1998, p. 136). For example, the ability to identify an adverb has, in itself, limited practical value for reading or writing. But awareness that adverbs can be deployed as subtle and powerful tools for characterisation, for instance, may have positive benefits for close reading and creative writing.

One contributing factor to the tapering off of students’ literacy progress evident in the student data is teaching that is not well matched to increasingly sophisticated and subject-specialised curriculum demands. There is limited evidence about actual patterns of literacy and/or subject English teaching at these year levels in this country. We do, however, have as-yet-unpublished local evidence, collected as part of the wider research-practice partnership, that supports this as one explanation for problematic patterns of student achievement. To investigate patterns of literacy teaching, we collected online data from a total of 155 “class sites” and analysed all 576 texts and 536 activities provided to students via those sites over a period of one week. We found that, broadly speaking, children had opportunities to learn about literacy but fewer opportunities to learn about subject English. The analysis showed that the majority (67%) of texts used in reading lessons were informational rather than literary texts and that very few of these (6%) were extended written texts such as chapter books. The majority of classes (56%) had at least some focus on vocabulary development, but fewer than 25% mentioned any literary elements (such as theme, setting, characterisation) and only 13% showed evidence of a focus on literary devices (such as figurative language). Most class sites provided no evidence of any teaching focus over the week related to audience and purpose, text structure or strategy instruction. Nearly half of class sites had no task over the week requiring more than a literal interpretation of the texts, and only 8% of sites included texts that required critical literacy or critical thinking. There were few opportunities observed for making links between reading and writing.

Promoting a stronger focus on building students’ metalinguistic and literary knowledge through the use of text sets rather than individual texts was the key feature of the intervention. There is a range of reasons why text sets, rather than single texts, have been promoted in literacy programmes. Firstly, reading and synthesising multiple texts is valued in higher education and society more generally. Sophisticated adult readers form a coherent representation of current events, and their opinion about those events, by drawing on a collection of print, visual, aural and multimedia texts. Students going on to university will, in most disciplines, be required from their very first semester to write essays or reports that synthesise material from multiple rather than single sources (Bråten et al., 2020). Compare-and-contrast tasks are a common form of synthesis used in schools (Van Ockenburg, 2019). In the primary years, reading linked rather than disparate texts has been claimed to have advantages for motivation because reading is more purposeful when it is in aid of developing knowledge about an interesting topic or concept over time (Guthrie et al., 2004). Text sets also have particular affordances for language and literacy learning. It is well known that teaching students the meanings of words in a particular text prior to reading it increases their ability to comprehend that particular text (Wright & Cervetti, 2017) and that having relevant topic and domain knowledge can be a better predictor of reading comprehension than general reading comprehension ability (Cervetti & Wright, 2020). Developing vocabulary and background knowledge in the context of conceptually linked texts may be more efficient because many of the words and concepts taught in relation to one text will be encountered elsewhere in the text set.

Most accounts of text sets in literacy programmes focus on the use of thematically connected texts (Reynolds, 2022). Our approach is novel in that the thread that connects the texts relates to the crafting rather than the topic of the text. Two main advantages of developing literary and metalinguistic knowledge in the context of text sets relate to transfer and multi-literacies. Transfer is the process of using knowledge acquired in one situation in some new or novel situation (Alexander & Murphy, 1999; Bransford et al., 2000). The goal of subject English instruction is not just to support students to understand the literary and linguistic features of the text at hand, but to develop transferable knowledge that can be applied to other texts in the future (e.g., to novel novels). The transfer literature suggests that revisiting the same concepts in different instantiations supports transfer, presumably because repeated exposure enables students to see the deep underlying principle that connects those instantiations, rather than the surface differences between them (Bransford et al., 2000). Text sets comprising written, visual, oral and multimodal texts of different genres have potential affordances for developing transferable knowledge about how effects are created, in memorable and engaging ways. For example, in the present study students analysed visual texts to explore how colour and lighting engendered mood before investigating the use of colour and lighting imagery in written texts.

Integrating reading and writing is another key feature of the model. Writing about texts they have read has benefits for students’ reading comprehension in general (Graham & Hebert, 2011) and for literary interpretation and analysis in particular (Deane, 2020). Therefore, as well as supporting students to use their reading of literary texts to support their creative writing, we sought to develop their analytical writing.

In designing our approach, we sought to capitalise on the affordances of the wider digital learning programme. Students in schools like these have a wider range of digital media to draw on for showing and sharing their understanding of the text(s) (Rosedale et al., 2021). Digital learning objects (DLOs) are multimodal re-representations of knowledge that use “new” media such as screencasts, podcasts and animation. Student creation of DLOs can deepen thinking and learning through a process of transduction, whereby content is transformed from one modal representation into another (Bezemer & Kress, 2008). In our project, students selected their own texts for analysis, and created and shared their close readings with their peers in the form of screencast videos and presentations. As well as having cognitive benefits, student creation of DLOs can support engagement (Kearney, 2013), motivation (Yang & Wu, 2012) and student agency (Beach & O'Brien, 2015).

We selected mood and atmosphere as an engaging context with which to explore how authors use language in particular ways to achieve particular effects. We were influenced by Oatley’s (1995) taxonomy of emotional responses to literature that distinguishes between “outside” and “inside” responses. Outside emotional responses involve aesthetic emotions such as admiration and appreciation of the literary work itself. The inside, non-aesthetic, responses are those that come from entering and occupying the world of the text, for example, vicariously experiencing the mood of a setting, or feeling empathy or sympathy or identification with characters. Our goal was to support students to explore and share their non-aesthetic affective responses to the text before analysing the texts to identify how authors had engendered that response. Mood and atmosphere was an appropriate context for this.

Our main research question was: What are the effects of the T-Shaped Literacy model on Year 5–8 students’ close reading and writing in subject-English?

Method

Design

The study was a pilot intervention study that employed repeated researcher-designed and standardised measures of reading and writing. Analyses of data from the researcher-designed measures used a single-subject design logic. A quasi-experimental, matched control group design was used for the standardised data.

Participants

Nine teachers of eight classes and co-teaching hubs from four schools participated in professional learning and development. In Aotearoa New Zealand, schools are assigned a “decile” ranking for funding purposes based on the socio-economic status of the communities they serve. Decile 1 schools serve the most economically deprived communities. One school each was Decile 1, 2, 3 and 4. Three were located in small towns in the Far North and one was a suburban school in Auckland. The schools are all part of the digital learning initiative. A total of 130 Year 5–8 students participated. Māori were the largest ethnic group (51%), followed by Pacific (28%) and New Zealand European (18%).

The Intervention

The researchers and teachers met for a total of 10 online after-school PLD sessions of about one hour each. The weekly sessions began four weeks before the teachers began teaching the six-week unit to their class and continued weekly throughout their implementation. Teachers nationally have high levels of autonomy, with no prescription of texts, specific content or activities at a system level. Consistent with this, the aim of the PLD sessions was to support teachers to select their own texts and plan their own learning activities. The sessions were divided fairly evenly between researcher-led content and activities, and opportunities for teachers to discuss and share their own ideas. In the first session, we introduced key features of the T-Shaped Literacy Model, an outline of the proposed six-week unit, and key intended learning outcomes for literary analysis and creative writing. We introduced a template designed to support students to record details each time they analysed an individual text, with the aim that these details would inform synthesis across texts later in the unit. In each session we read and analysed a short exemplar text together, with a particular focus on a few language features used to create mood and atmosphere, including: colour and lighting imagery; parts of speech (including adjectives, adverbs and concrete nouns); sensory language; pathetic fallacy; and figurative language. We emphasised that our focus was not just on helping students identify and use language features, but on helping them understand how these can be employed to achieve particular effects. We suggested that for each text, teachers first help students think about what it made them feel and only then consider how it was crafted to make them feel that way.

Vocabulary was a focus in all sessions. We emphasised the importance in each lesson of developing students’ metalinguistic knowledge. We also promoted opportunities to support students’ “magpie-ing” (collecting) of interesting words and phrases from their reading to use as resources in their own creative writing. These sessions also included time for teachers to share successes and problem-solve issues in their implementation of the units so far. We supported teachers to help their students develop DLOs in which they demonstrated their own analyses of a self-selected text for an audience of their peers. We also focused on supporting students to use their completed synthesis tables to write across-text syntheses, using exemplars, writing frames and explicit teaching about connectives. Across all sessions we modelled, and supported teachers to lead, rich classroom discussions about texts.

Checks of class sites showed that all teachers were explicit with students that they were employing a T-Shaped literacy approach; they all shared close reading and creative writing learning outcomes on the class site; and they all used multiple texts and synthesis grids. All of the teachers attended at least 9 of the 10 PLD sessions and all shared examples of their implementation in those sessions.

Measures

Standardised Measures

The standardised assessment tools used were PAT Reading Comprehension (https://www.nzcer.org.nz/tests/pat-reading-comprehension-and-vocabulary) and e-asTTle Writing (http://e-asttle.tki.org.nz/Teacher-resources/e-asTTle-writing-Background). Both are online standardised assessment tools used by all schools in the wider project at the beginning and end of each school year. The standardised tests are far transfer measures in that they are framed around broader constructs of reading and writing than those addressed in the intervention.

Researcher-Designed Measure

Two versions of a close reading assessment were developed by the researchers as a near transfer measure of student learning. To control for possible differences in the difficulty of the two versions, we randomly selected half of the students to do Version A at time 1 and Version B at time 2, while the other half did them in the reverse order.
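The counterbalancing just described can be illustrated with a short Python sketch. It is not the authors' procedure: the function name `assign_versions`, the seed and the use of the standard-library `random` module are assumptions, since the study does not report how the random split was made. With an odd number of students, the second group in this sketch is one larger.

```python
import random

def assign_versions(student_ids, seed=None):
    """Hypothetical helper: randomly split students into two halves.

    Half sit Version A at Time 1 and Version B at Time 2; the other half
    sit the two versions in the reverse order (counterbalancing).
    """
    rng = random.Random(seed)
    ids = list(student_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    schedule = {}
    for sid in ids[:half]:
        schedule[sid] = ("A", "B")  # (version at Time 1, version at Time 2)
    for sid in ids[half:]:
        schedule[sid] = ("B", "A")
    return schedule

# 130 hypothetical student IDs, matching the study's sample size
schedule = assign_versions(range(130), seed=1)
```

Because each student sits both versions, any difference in difficulty between A and B is spread evenly across pre- and post-test rather than inflating or masking gains.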

The close reading assessment had three parts. Part 1 was a multiple-choice test that required students to identify parts of speech (e.g. adverbs, concrete nouns) and figurative devices (e.g. personification, simile) in sentences. Parts 2 and 3 were in an open-response format. Part 2 involved close reading of extracts from two literary texts of about 250 words each. The extracts were taken from levelled School Journals and were checked for suitability by the teachers. Students were instructed to: “Explain at least three different ways the author used language features to create a strong sense of mood or atmosphere.” Part 3 was a synthesis activity that asked students to compare and contrast the two texts in terms of language and effects.

A research assistant was trained by the first author to apply marking rubrics to the open-response portions of the test. Each student assessment was blinded so that the scorer had no way of knowing whether it had been completed before or after the intervention.

Analysis

The standardised assessments have different norms for the beginning and end of each year level. To create a common scale for students of different year levels, and to control for normal growth, we calculated a “normdiff” score for each student by subtracting the national norm from their actual score at each time point. A student with a normdiff score of zero, regardless of their year level, achieved at exactly the same level as the national norm for that age group. Negative values indicate that their scores were below the norm and positive values that they were above it. Change in achievement was explored using paired-samples t-tests for all students who had test scores at both time points.
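The normdiff transformation and the paired-samples t-test can be sketched in Python as follows. This is an illustration, not the authors' analysis code: the function names, the norm values and the three students' scores are hypothetical. The sketch uses only the standard library and returns the t statistic and degrees of freedom; a p-value would come from the t distribution, for example via `scipy.stats.ttest_rel(after, before)`, which computes the same paired statistic.

```python
import math
import statistics

def normdiff(score, norm):
    """Score minus the national norm for the student's year level and time point.

    Zero = exactly at the norm; negative = below it; positive = above it.
    """
    return score - norm

def paired_t(before, after):
    """Paired-samples t statistic on within-student differences (after - before)."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1

# Hypothetical scores and year-level norms at each time point
t1 = [normdiff(s, n) for s, n in zip([35, 42, 30], [40, 40, 38])]  # [-5, 2, -8]
t2 = [normdiff(s, n) for s, n in zip([44, 48, 37], [45, 45, 43])]  # [-1, 3, -6]
t_stat, df = paired_t(t1, t2)  # t is about 2.65 with df = 2
```

Because each norm already reflects expected growth for that year level and time of year, a positive change in normdiff indicates progress faster than the national norm, not merely normal growth.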

We also established a matched, baseline control group to compare the standardised reading and writing gain scores of programme participants and non-participants. Separate matched comparison groups were established for the standardised reading (n = 93) and writing (n = 95) data. Each student in the intervention was matched to a non-intervention student with the same year level, ethnicity, gender and Time 1 score. If more than one control student matched a treatment student on all four criteria, a “composite” control student was created by averaging their Time 2 scores. We then conducted two-sample t-tests on normdiff gains between these two groups.
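The matching rule, including the composite control, can be illustrated with a short Python sketch. The record layout (dicts with `year`, `ethnicity`, `gender`, `t1`, `t2`, where `t1` and `t2` are normdiff scores), the function names and the example students are hypothetical. Two further assumptions: unmatched treatment students are simply dropped here, and the pooled-variance (Student's) form of the two-sample t-test is used, since the paper does not say whether a pooled or Welch test was applied. Because matched students share the same Time 1 score, averaging the controls' Time 2 scores is equivalent to averaging their gains.

```python
import math
import statistics
from collections import defaultdict

def match_controls(treatment, controls):
    """Pair each treatment student with a (possibly composite) control.

    Controls matching on year, ethnicity, gender and Time 1 score are
    averaged at Time 2 into one composite control. Returns a list of
    (treatment_gain, control_gain) tuples; unmatched students are dropped.
    """
    pool = defaultdict(list)
    for c in controls:
        pool[(c["year"], c["ethnicity"], c["gender"], c["t1"])].append(c["t2"])
    pairs = []
    for s in treatment:
        key = (s["year"], s["ethnicity"], s["gender"], s["t1"])
        if key in pool:  # membership test does not create empty entries
            composite_t2 = statistics.mean(pool[key])
            pairs.append((s["t2"] - s["t1"], composite_t2 - s["t1"]))
    return pairs

def two_sample_t(x, y):
    """Student's (pooled-variance) two-sample t statistic and degrees of freedom."""
    nx, ny = len(x), len(y)
    sp2 = ((nx - 1) * statistics.variance(x) + (ny - 1) * statistics.variance(y)) / (nx + ny - 2)
    t = (statistics.mean(x) - statistics.mean(y)) / math.sqrt(sp2 * (1 / nx + 1 / ny))
    return t, nx + ny - 2

# Hypothetical students; normdiff scores at Time 1 (t1) and Time 2 (t2)
treatment = [
    {"year": 5, "ethnicity": "Māori", "gender": "F", "t1": -4, "t2": 0},
    {"year": 6, "ethnicity": "Pacific", "gender": "M", "t1": -2, "t2": 1},
    {"year": 7, "ethnicity": "NZ European", "gender": "F", "t1": 0, "t2": 2},  # no match
]
controls = [
    {"year": 5, "ethnicity": "Māori", "gender": "F", "t1": -4, "t2": -3},
    {"year": 5, "ethnicity": "Māori", "gender": "F", "t1": -4, "t2": -1},  # composite with row above
    {"year": 6, "ethnicity": "Pacific", "gender": "M", "t1": -2, "t2": -2},
]
pairs = match_controls(treatment, controls)
t_stat, df = two_sample_t([p[0] for p in pairs], [p[1] for p in pairs])
```

Exact matching on the Time 1 score is strict; with real data, many treatment students may have no exact counterpart, which is one plausible reason the matched samples (n = 93 and n = 95) are smaller than the full intervention group.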

Data from the researcher-designed measures were analysed using a single-subject repeated-measures design, with paired t-tests conducted to compare pre- and post-test scores, disaggregated by year level, teacher, student ethnicity and gender.
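The paper does not state which effect-size formula it used. As one possibility, the Python sketch below computes a pre/post Cohen's d, defined here as the mean gain divided by the root mean square of the pre-test and post-test standard deviations, separately for each subgroup (gender in the example). The formula choice, the function names, the minimum group size of two and the example scores are all assumptions for illustration.

```python
import math
import statistics
from collections import defaultdict

def cohens_d_prepost(pre, post):
    """Mean gain divided by sqrt((SD_pre^2 + SD_post^2) / 2).

    Assumption: one of several common pre/post effect-size definitions.
    """
    sd_avg = math.sqrt((statistics.variance(pre) + statistics.variance(post)) / 2)
    return (statistics.mean(post) - statistics.mean(pre)) / sd_avg

def effect_sizes_by(records, key):
    """Group records (dicts with 'pre', 'post' and grouping fields) by `key`.

    Returns {group: effect size} for groups with at least two students,
    since a sample variance needs at least two values.
    """
    groups = defaultdict(lambda: ([], []))
    for r in records:
        pre, post = groups[r[key]]
        pre.append(r["pre"])
        post.append(r["post"])
    return {g: cohens_d_prepost(pre, post)
            for g, (pre, post) in groups.items() if len(pre) >= 2}

# Hypothetical close reading scores
records = [
    {"gender": "F", "pre": 2, "post": 6},
    {"gender": "F", "pre": 4, "post": 8},
    {"gender": "M", "pre": 3, "post": 5},
    {"gender": "M", "pre": 5, "post": 9},
]
es = effect_sizes_by(records, "gender")
```

The same helper can be called with `key` set to a year-level, teacher or ethnicity field to produce the other disaggregations; with small subgroups the resulting estimates will be imprecise.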

Results

We report the results of the near-transfer researcher-designed measure (Table 1) and the far-transfer standardised reading (Table 2) and writing (Table 3) assessments below.

Researcher-Designed Measure

Close reading scores of all groups of students were low prior to the intervention. On average, students correctly identified fewer than one third of the language features in Part 1, scored 4 out of a possible 20 marks for their analysis of single texts (Part 2), and scored 1 out of 4 for synthesis (Part 3).

Single-subject analyses of students’ pre- and post-intervention close reading scores (Table 1) showed large effect sizes for their total score (effect size = 1.00) and for their literary analysis of single texts (effect size = 0.90). There was a moderate-to-high effect size for students’ identification of language features (effect size = 0.75) but no significant effect on their synthesis scores.

Paired t-tests showed similar, significant effects for females (effect size = 0.96) and males (effect size = 1.02). Effects were also similar by ethnicity, with large effects for Māori, Pacific and Pākehā students.

Contrary to expectations, there was no linear relationship between students’ year level and their total close reading scores. Student numbers are small and there may well be confounding effects (teacher, school, socio-economic), but Year 6 students actually scored higher on average than Year 7 and 8 students in both the pre- and post-test.

There was significant variability in gain scores by teacher/classroom, with effect sizes ranging from 0.61 to 1.49. Effect sizes were large for all but one class, for which the effect was moderate. Two classes stood out with very high effect sizes of 1.49 and 1.44.

PAT Reading

Overall, treatment students made larger gains than matched control group students, with an effect size of 0.154. However, this difference in gains was not significant, and there were no significant differences in gains by gender or ethnicity (Table 2).

e-asTTle Writing

Treatment students significantly outperformed matched control group students in the standardised writing post-test (effect size = 0.65) (Table 3). On average, treatment students gained 47 e-asTTle writing score points more than the norm gain. This is educationally significant because the norm gain for students in this age group (Years 5–8) is 28 points per year, meaning treatment students made well over one year’s worth of additional progress. Gains were significant for Māori (effect size = 0.70) and Pākehā (effect size = 0.87) students but not for Pacific students (effect size = 0.50). Both genders made significant progress, with males gaining more (effect size = 1.04) than females (effect size = 0.36).
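As a rough check on the “well over one year” claim, assuming the 28-point norm gain can be treated as a constant yearly rate, the additional gain converts to years of progress as follows:

```latex
\text{additional progress} \approx \frac{47\ \text{points}}{28\ \text{points per year}} \approx 1.68\ \text{years}
```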

Discussion

The low scores for all groups at baseline and the lack of difference in baseline scores by year level are consistent with evidence from the National Monitoring Study of Student Achievement (NMSSA) that primary students have limited metalinguistic knowledge and gaps in their close reading skills. The significant gains in close reading and language feature identification scores suggest that these skills are sensitive to teaching and can improve with a deliberate focus, even over a relatively short period. This implies, in turn, that the low baseline scores are likely to be related to teaching factors at least as much as to student factors such as maturity: younger students can learn these more complex skills. There was a consistently positive trend on the researcher-designed measure for all classes and all groups of students. Although, as expected, there was significant variability by teacher, the gains were positive for all classes.

An important limitation of this pilot study is that, without a comparison group for the researcher-designed measure, it is possible that another teaching intervention focused on similar outcomes would have been as effective as, or more effective than, our T-Shaped Literacy approach. However, the cross-sectional Time 1 data, which showed no significant differences associated with year level, may suggest that the intervention was more effective than “business-as-usual” teaching. In this respect, it is important to note that the content we introduced was not, in one sense, new: curriculum expectations that students at these year levels learn the kind of content we focused on have been in place since at least 1994 (Locke, 2001). At the very least, the study confirms that if teachers teach these complex and important skills, primary students can learn them.

Whereas the data from the researcher-designed measure show gains in language feature identification and in the close reading of single texts, there were no significant effects on across-text synthesis associated with the intervention. This is disappointing given that the approach was framed around students analysing multiple texts to explore how different authors create mood and atmosphere. One possibility is that primary students do not yet have the maturity to tackle such cognitively complex tasks. However, if that were the case, we would expect older students to have had markedly higher scores at baseline and to have made bigger gains, and that was not the case. The explanations we favour relate to the professional learning opportunities and classroom teaching. The intervention may have been too short to affect synthesis skills, the learning activities we modelled may not have been the right ones, or teachers’ implementation of synthesis approaches may not have been as high quality as their implementation of other components. A future iteration of the intervention will run over a full school year and involve three units rather than one six-week unit. We hope that a more extended intervention, and refinement of synthesis teaching approaches, will produce more positive effects. Teachers reported that they felt students had improved in their synthesis and were able to compare authors’ use of language to create mood and atmosphere. Their impressions, however, were based on texts studied in class and syntheses communicated orally. It may be, then, that students need more practice and support to synthesise previously unseen texts and to communicate their syntheses in writing. The positive gains in metalinguistic knowledge and close reading may owe more to repeated practice (spending considerable time analysing a range of texts) than to the synthesis activities per se.

The treatment students did not make significantly greater gains than matched comparison group students on the PAT reading comprehension test. This is not an altogether unexpected result: most successful reading comprehension interventions have much smaller effects on standardised measures than on researcher-designed measures. For example, meta-analyses of text structure and reading strategy interventions by Hebert et al. (2016) and Okkinga et al. (2018) found small average effect sizes of 0.15 and 0.18, respectively, on standardised measures but moderate effects of 0.57 and 0.431 on researcher-designed measures. In our case, we think this is partly an issue of alignment between the aspects of reading measured by the standardised tool and those targeted by the intervention. Although metalinguistic knowledge and close reading are assessed in the standardised tool, they are only two of many aspects. In addition, our close reading lessons centred on open-ended discussion, writing and DLO creation tasks, whereas the standardised test uses a multi-choice format. The next iteration of the project may have stronger effects on standardised reading comprehension measures for two reasons: sustaining the approach over a whole year and covering three aspects of literary analysis rather than one (mood and atmosphere, narrative reliability and characterisation) is more likely to have transferable effects, and we will weave more comprehension instruction, such as cognitive strategy instruction and the teaching of text structures, into our focus.

The evidence that treatment students made significantly more progress in the standardised writing assessment than matched students is an important finding, given that this was more of a far-transfer measure than the researcher-designed measure. One possible explanation for why the far-transfer writing measure showed improvement but the reading measure did not is that the writing assessment may be more sensitive to improvements in students’ literary and metalinguistic knowledge, because its marking schemes reward the incorporation of language features and vocabulary.

Overall, the results of the pilot study are promising and we are hopeful that a more sustained intervention with attention to the aspects of student learning that did not shift as much (synthesis, reading comprehension) will have more positive outcomes.

Data availability

Not applicable.

References

Alexander, P. A., & Murphy, P. K. (1999). Nurturing the seeds of transfer: A domain-specific perspective. International Journal of Educational Research, 31(7), 561–576.

Beach, R., & O’Brien, D. (2015). Fostering students’ science inquiry through app affordances of multimodality, collaboration, interactivity, and connectivity. Reading & Writing Quarterly, 31(2), 119–134.

Berryman, M., Egan, M., & Ford, T. (2017). Examining the potential of critical and Kaupapa Māori approaches to leading education reform in New Zealand’s English-medium secondary schools. International Journal of Leadership in Education, 20(5), 525–538.

Bezemer, J., & Kress, G. (2008). Writing in multimodal texts: A social semiotic account of designs for learning. Written Communication, 25(2), 166–195.

Bransford, J., Brown, A., & Cocking, R. (2000). How people learn: Mind, brain, experience, and school. National Academy Press.

Bråten, I., Braasch, J. L., & Salmerón, L. (2020). Reading multiple and non-traditional texts: New opportunities and new challenges. In Handbook of reading research (Vol. V, pp. 79–98). Routledge.

Cazden, C., Cope, B., Fairclough, N., Gee, J., Kalantzis, M., Kress, G., Luke, A., Luke, C., Michaels, S., & Nakata, M. (1996). A pedagogy of multiliteracies: Designing social futures. Harvard Educational Review, 66(1), 60–92.

Cervetti, G. N., & Wright, T. S. (2020). The role of knowledge in understanding and learning from text. In Handbook of reading research (Vol. V). Routledge.

Chall, J. S., Jacobs, V. A., & Baldwin, L. E. (1990). The reading crisis: Why poor children fall behind. Harvard University Press.

Chamberlain, M., & Forkert, J. (2023). Reading literacy at Year 5: New Zealand’s participation in PIRLS 2021. Marking 20 years of the Progress in International Reading Literacy Study.

Deane, P. (2020). Building and justifying interpretations of texts: A key practice in the English language arts. ETS Research Report Series, 2020(1), 1–53.

Goldman, S. R., McCarthy, K. S., & Burkett, C. (2015). Interpretive inferences in literature. In Inferences during reading (Ch. 17, p. 386). Cambridge University Press.

Graham, S., Liu, X., Bartlett, B., Ng, C., Harris, K. R., Aitken, A., & Talukdar, J. (2018). Reading for writing: A meta-analysis of the impact of reading interventions on writing. Review of Educational Research, 88(2), 243–284.

Graham, S., & Hebert, M. (2011). Writing to read: A meta-analysis of the impact of writing and writing instruction on reading. Harvard Educational Review, 81(4), 710–744.

Guthrie, J. T., Wigfield, A., Barbosa, P., Perencevich, K. C., Taboada, A., Davis, M. H., & Tonks, S. (2004). Increasing reading comprehension and engagement through concept-oriented reading instruction. Journal of Educational Psychology, 96(3), 403.

Gutiérrez, K. D., & Rogoff, B. (2003). Cultural ways of learning: Individual traits or repertoires of practice. Educational Researcher, 32(5), 19–25. https://doi.org/10.3102/0013189X032005019

Hattie, J. (2007). The status of reading in New Zealand schools: The upper primary plateau problem (UP). Reading Forum NZ, 22(3), 25–39.

Hebert, M., Bohaty, J. J., Nelson, J. R., & Brown, J. (2016). The effects of text structure instruction on expository reading comprehension: A meta-analysis. Journal of Educational Psychology, 108(5), 609.

Hetaraka, M., Meiklejohn-Whiu, S., Webber, M., & Jesson, R. (2023). Tiritiria: Understanding Māori children as inherently and inherited-ly literate—Towards a conceptual position. New Zealand Journal of Educational Studies, 58(1), 59–72.

Hood, N., & Hughson, T. (2022). Now I Don't Know My ABC: The Perilous State of Literacy in Aotearoa New Zealand. Education Hub.

Hood, N., & Hughson, T. (2023). Literacy Achievement in Aotearoa New Zealand: What is the Evidence? New Zealand Journal of Educational Studies, 58(1), 153–167.

Jesson, R., McNaughton, S., Wilson, A., Zhu, T., & Cockle, V. (2018). Improving achievement using digital pedagogy: Impact of a research practice partnership in New Zealand. Journal of Research on Technology in Education, 50(3), 183–199.

Johnston, M. (2023). Save our schools: Solutions for New Zealand's education crisis. The New Zealand Initiative.

Kearney, M. (2013). Learner-generated digital video: Using ideas videos in teacher education. Journal of Technology and Teacher Education, 21(3), 321–336.

Kirsch, I., de Jong, J., Lafontaine, D., McQueen, J., Mendelovits, J., & Monseur, C. (2002). Reading for change: Performance and engagement across countries: Results from PISA 2000. OECD.

Lee, C. D., & Spratley, A. (2010). Reading in the Disciplines: The Challenges of Adolescent Literacy. Final Report from Carnegie Corporation of New York's Council on Advancing Adolescent Literacy. Carnegie Corporation of New York.

Locke, T. (2001). English teaching in New Zealand: In the frame and outside the square. Educational Studies in Language and Literature, 1, 135–148.

McNaughton, S. (2020). The literacy landscape in Aotearoa New Zealand. Office of the Prime Minister of New Zealand, Wellington.

Miall, D. S., & Kuiken, D. (1999). What is literariness? Three components of literary reading. Discourse Processes, 28(2), 121–138.

Ministry of Education. (2007). The New Zealand curriculum. Learning Media.

Myhill, D. A., Jones, S. M., Lines, H., & Watson, A. (2012). Re-thinking grammar: The impact of embedded grammar teaching on students’ writing and students’ metalinguistic understanding. Research Papers in Education, 27(2), 139–166.

NMSSA. (2019). National Monitoring Study of Student Achievement Report 22: English 2019—Key Findings. New Zealand: Educational Assessment Research Unit and New Zealand Council for Educational Research.

NMSSA. (2021a). NMSSA Report 22-IN-2: Insights for Teachers 2. NMSSA English 2019—Making Meaning. New Zealand: Educational Assessment Research Unit (EARU).

NMSSA. (2021b). NMSSA Report 22-IN-3: Insights for Teachers 3. NMSSA English 2019—Multimodal Texts and Critical Literacy. New Zealand: Educational Assessment Research Unit (EARU).

Oatley, K. (1995). A taxonomy of the emotions of literary response and a theory of identification in fictional narrative. Poetics, 23(1–2), 53–74.

Okkinga, M., van Steensel, R., van Gelderen, A. J., van Schooten, E., Sleegers, P. J., & Arends, L. R. (2018). Effectiveness of reading-strategy interventions in whole classrooms: A meta-analysis. Educational Psychology Review.

Paris, S. G., & Hamilton, E. E. (2014). The development of children’s reading comprehension. In Handbook of research on reading comprehension (pp. 56–77). Routledge.

Reynolds, D. (2022). What is an ELA text set? Surveying and integrating cognitive, critical and disciplinary lenses. English Teaching: Practice & Critique, 21(1), 98–110.

Rosedale, N. A., Jesson, R. N., & McNaughton, S. (2021). Business as usual or digital mechanisms for change?: What student DLOs reveal about doing mathematics. International Journal of Mobile and Blended Learning (IJMBL), 13(2), 17–35.

Schoenbach, R., Greenleaf, C., & Murphy, L. (2012). Reading for understanding: How Reading Apprenticeship improves disciplinary learning in secondary and college classrooms (2nd ed.). Jossey-Bass.

Shanahan, T., & Shanahan, C. (2008). Teaching disciplinary literacy to adolescents: Rethinking content-area literacy. Harvard Educational Review, 78(1), 40–59.

Spencer, M., & Wagner, R. K. (2018). The comprehension problems of children with poor reading comprehension despite adequate decoding: a meta-analysis. Review of Educational Research, 88(3), 366–400.

Terry, N. P., Gatlin-Nash, B., Webb, M. Y., Summy, S. R., & Raines, R. (2023). Revisiting the fourth-grade slump among black children: taking a closer look at oral language and reading. The Elementary School Journal, 123(3), 414–436.

Van Lier, L. (1998). The relationship between consciousness, interaction and language learning. Language Awareness, 7(2–3), 128–145. https://doi.org/10.1080/09658419808667105

Van Ockenburg, L., van Weijen, D., & Rijlaarsdam, G. (2019). Learning to write synthesis texts: A review of intervention studies. Journal of Writing Research, 10(3), 401–428.

Wilson, A., & Jesson, R. (2019). T-shaped literacy skills: An emerging research-practice hypothesis for literacy instruction. set: Research Information for Teachers, 1, 15–22.

Wright, T. S., & Cervetti, G. N. (2017). A systematic review of the research on vocabulary instruction that impacts text comprehension. Reading Research Quarterly, 52(2), 203–226.

Yang, Y.-T.C., & Wu, W.-C.I. (2012). Digital storytelling for enhancing student academic achievement, critical thinking, and learning motivation: A year-long experimental study. Computers & Education, 59(2), 339–352.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wilson, A., Rosedale, N. & Meiklejohn-Whiu, S. Piloting a T-Shaped Approach to Develop Primary Students’ Close Reading and Writing of Literary Texts. NZ J Educ Stud (2024). https://doi.org/10.1007/s40841-024-00310-0


DOI: https://doi.org/10.1007/s40841-024-00310-0