Abstract

Perseverance in R&D effort is the first fundamental step towards any kind of innovation. Using duration models, we investigate the beginning of the innovation story rather than its end. Among the drivers considered in our unconventional IO approach, we focus on heterogeneity, path dependence and market power, the latter measured as the firm-specific elasticity of demand. The Schumpeterian hypothesis emerges at the firm level. Heterogeneity at the industry level reveals Schumpeterian and Arrovian patterns, as well as U-shaped and inverted U-shaped patterns. We suggest considering the entire supply chain from a holistic perspective when evaluating mergers and innovation policies that support small firms by reducing their financial uncertainty and improving their institutional environment.



1 Introduction

Some innovations come about by chance, but most require perseverance, experiments and laboratory tests. Our intuition is that the probability of realising innovations and making them exploitable by the socio-technological system is causally related to the duration of R&D investments. Simply put, the duration of R&D effort, the primary input, underpins the innovative output. Our research question, therefore, concerns the incentives that stimulate and the barriers that hinder the survival of R&D investments. This question is relevant because the propensity and ability of firms to invest continuously in R&D are crucial for maximising profits and increasing the likelihood of innovation. Moreover, actual R&D investment is below the optimal level (Jones and Williams, 1998), persistent R&D plays a central role in sustainable green innovation (Sarpong et al., 2023), and it is the increase in research effort that makes growth possible despite declining research productivity (Bloom et al., 2020).

Our approach differs from the standard IO method, which is based on the intensive margin, measured by how much the firm invests in R&D (input in levels), and on the number of patents, new products and new processes (output). Output-based measures may be biased towards successful innovations and are not necessarily closely correlated with R&D efforts. R&D duration analysis captures the effort dimension, including the potential of failed investments, and thus complements, rather than replaces, the existing IO approach. The amount of R&D investment used as input by the standard IO method is not necessarily meaningful either, because even after a breakthrough a company must continue to invest in R&D, as it may take a long time to convert innovation into economic results (Coad and Rao, 2008). It is the ongoing experience of doing R&D that drives the innovation process by making firms progressively better (Geroski, 2005).

Rephrasing Mansfield et al. (1977), the innovation process can be seen as the product of a chain of conditional probabilities: the probability that R&D investment is made on an ongoing basis; the probability that, in the event of technical success, the resulting product or process is commercialised; and, finally, the probability that, in the event of commercialisation, the project yields a satisfactory return on investment. As the process leading to innovative outputs follows several uncertain steps, but starts with the continuous implementation of R&D (the input), we provide empirical evidence on the determinants of this crucial first move.

The standard IO method also presents an empirical problem related to the variety of proxies used to quantify innovation (see Table 1 in Del Monte and Papagni (2003)). Outcomes such as patents, new products and new processes are used, but these proxies are rather noisy (see, for example, the discussion on patents by Griliches (1990)). Evaluating the results is therefore complex: Coad and Rao (2008) and Del Monte and Papagni (2003) use principal components analysis to create a synthetic measure of innovation combining input (R&D expenditure) and output (patents), and Neves et al. (2021) propose a meta-analysis. By focusing instead on the duration of the R&D effort (the foundation of innovation input), our research question partly overcomes these measurement difficulties. The duration of R&D investment is well defined, as we can take advantage of panel data from a rich survey that asks companies each year how much they spend on R&D, market research, and product design and testing, excluding software development costs and expenditure on the education and training of workers.
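As a rough illustration of the synthetic-measure idea (a generic sketch, not the exact procedure of Coad and Rao (2008) or Del Monte and Papagni (2003)), the first principal component of two standardised proxies, here a hypothetical R&D series and a hypothetical patent count, has a closed form, so no linear-algebra library is needed. The function names are our own.

```python
import math
import statistics


def pca_innovation_index(rd, patents):
    """Hypothetical first-principal-component index of two innovation proxies.

    For two standardised variables with correlation r, the correlation matrix
    [[1, r], [r, 1]] has eigenvalues 1 + r and 1 - r, with eigenvectors
    (1, 1)/sqrt(2) and (1, -1)/sqrt(2); the first component is the one with
    the larger eigenvalue, 1 + |r|.
    """
    z_rd = _standardise(rd)
    z_pat = _standardise(patents)
    r = sum(a * b for a, b in zip(z_rd, z_pat)) / len(z_rd)  # Pearson correlation
    sign = 1.0 if r >= 0 else -1.0
    index = [(a + sign * b) / math.sqrt(2) for a, b in zip(z_rd, z_pat)]
    explained_share = (1 + abs(r)) / 2  # largest eigenvalue / trace (trace = 2)
    return index, explained_share


def _standardise(xs):
    """Centre on the mean and scale by the population standard deviation."""
    mu = statistics.fmean(xs)
    sd = statistics.pstdev(xs)
    return [(x - mu) / sd for x in xs]
```

With more than two proxies the eigenvectors lose this simple form, and a numerical eigen-decomposition of the correlation matrix would be needed instead.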

A number of determinants of R&D duration must be considered, as they jointly explain why some companies engage continuously in R&D while others invest intermittently. The process of converting an idea into a set of successful procedures or products is subject to interruptions caused by the uncertainty of the economic environment (Bloom, 2006), so macroeconomic and firm-specific uncertainty are relevant elements. The process is also costly, so financial aspects, complementarity with other investments, institutional factors and exposure to international markets may play a role. The causal effect of past R&D activities on R&D duration, due to the path-dependent nature of innovation (following the seminal contribution of Atkinson and Stiglitz (1969)), can be identified provided that unit-specific characteristics, both measurable and unmeasurable, are controlled for. Measurable characteristics, such as age, ownership type, size and technological conditions, are included as explanatory variables and analysed for their role in exposing firms to the risk of R&D investment disruption. The role of unobservable heterogeneity is investigated at both the company and the industry level. At the firm level, we compare different models for continuous and discrete duration data, some of which are better suited to handling unobservable individual effects. The company-level analysis is complemented by a heterogeneous panel analysis at the industry level, in which we emphasise sectoral disaggregation.

Considering heterogeneity at the firm and industry level, a key aspect we want to assess is the role of market power in R&D effort. At the company level, to our knowledge, the role of market power, measured in its various ways, has not been analysed within innovation duration models that control for other important determinants. An advantage of R&D duration analysis is that it identifies the causal effect of market power better than R&D output analysis does: unlike patents or new leading products, R&D effort does not contribute with certainty to an increase, even a temporary one, in market power. In fact, the R&D project could fail, be oriented toward a subset of potential products, or be radical in nature and not serve any existing market. Even if the R&D effort were driven by the desire to achieve greater market power, this achievement is only a future possibility that cannot empirically generate endogeneity due to simultaneity. Furthermore, an increase in market power depends on how the company manages to influence consumer preferences, which is more closely related to advertising (once the discovery has been made) than to R&D per se. As outlined by Griffith and Van Reenen (2022), theory can predict both positive and negative effects of competition on innovation, and the empirical evidence is also mixed. Observable indicators of market competition are endogenous outcomes of more primitive objects, such as elasticities of substitution (consumer preferences).

In an attempt to provide robust empirical evidence, we propose a new direct survey-based measure of the firm-specific elasticity of demand and discuss its role relative to the standard firm-level accounting measure (the Lerner index based on the price–cost margin) and to industry-level concentration (measured by the Herfindahl-Hirschman index and the number of firms). We also distinguish the effects of market power from those of firm size, an important distinction given the extensive research on the link between firm size and innovation (Cohen and Klepper, 1996a, b; Corsino et al., 2011; Plehn-Dujowich, 2009; Yin and Zuscovitch, 1998). Finally, we check the robustness of our results to the possible endogeneity of the alternative market power measures and of other explanatory variables.
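For reference, the textbook link between the two firm-level measures is the inverse-elasticity rule for a profit-maximising firm facing its own demand curve with price elasticity \(\varepsilon_i\). This is a standard result, not the paper's survey-based measure, and the numbers are purely illustrative:

```latex
L_i \equiv \frac{P_i - MC_i}{P_i} = \frac{1}{\lvert \varepsilon_i \rvert},
\qquad \text{e.g. } \lvert \varepsilon_i \rvert = 4 \;\Rightarrow\; L_i = 0.25 .
```

The accounting price–cost margin replaces marginal cost with average variable cost, so it coincides with \(L_i\) only when the two costs are equal (for instance, under constant marginal cost); this is one reason why the accounting and elasticity-based measures can diverge.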

At the industry level, what matters is comparing the role of market power in R&D in the aggregate and at the disaggregated sectoral level. This dual analysis makes it possible to identify possible long-run feedback effects of mergers and acquisitions on technological progress, both across sectors and along supply chains.

The rest of this article is organised as follows: Sect. 2 theoretically motivates the relevance of investigating continuity of R&D effort through the duration analysis. Section 3 describes the empirical specifications of the R&D duration models. It also presents the sample, an unbalanced longitudinal panel of Italian companies. Section 4 reports the results and reflects on their interpretation. Section 5 highlights why Italy is an interesting case for our analysis and provides implications for innovation policy and antitrust policy, and suggestions for further research.

2 Duration of R&D effort

Theoretically, smoothing R&D effort uniformly over the entire horizon is advantageous in terms of the resulting discounted profits; the rationale for this statement is presented in Appendix A1.1. Empirically, it is striking that in our data discontinuities in R&D effort, controlling for the stock of accumulated knowledge and size, produce an average reduction in profits of around 10%. Despite the importance of continuous R&D efforts, few empirical studies have directly investigated this issue through duration models.

Random-effects probit models and dynamic first-order autoregressive specifications are usually applied to R&D and innovative output; see Table 1 in Le Bas and Scellato (2014) and references therein. Discrete-time proportional hazard models are used by Máñez et al. (2015) and Triguero et al. (2014) for Spanish manufacturing firms: Triguero et al. (2014) find a positive relationship between previous innovative experience and the probability of survival in innovation, while Máñez et al. (2015) compare SMEs (between 10 and 200 employees) and large companies (more than 200 employees) and find more persistent R&D activities in SMEs operating in high-tech industries.

The richest literature is instead concerned with a topic parallel to our research question, namely whether innovation is positively associated with firm survival (see Ugur and Vivarelli (2021) for the state of the art). Interestingly, Zhang and Mohnen (2022) find that R&D has a greater marginal effect on firm survival than (product) innovation output. In this field, the role of persistent innovation is stressed by Cefis and Ciccarelli (2005), who find that the difference in profitability between innovators (defined as firms that apply for a patent) and non-innovators is greater when persistent innovators are compared with non-innovators. Bartoloni and Baussola (2009) obtain similar results, defining innovators as firms that introduced process and/or product innovations. Artés (2009) uses a random-effects model within a two-stage selection procedure and argues that market structure affects long-run R&D decisions (whether or not to conduct R&D) but not short-run ones (how much to invest once the firm decides to be innovative).

Notwithstanding the difficulties embodied in a production-function framework, innovation input and output are also found to be positively associated with productivity, both at the aggregate level (Geroski, 1989) and at the firm level (Parisi et al., 2006). A more comprehensive approach is taken by Crépon et al. (1998), who start from some “stylised facts” and build an empirical model that encompasses the whole innovation process: the decision to undertake research activity, the magnitude of that effort, the output of the innovative process and its impact on the firm’s productivity. Particularly relevant to our paper is their result that innovative output is positively affected by R&D intensity and, in turn, positively affects productivity. Other studies of the productivity benefits of R&D in a sophisticated framework are Doraszelski and Jaumandreu (2013); Mairesse and Robin (2009); Masso and Vahter (2008); Polder et al. (2009); Van Leeuwen and Klomp (2006).

Our duration model thus aims to describe the behaviour of firm-level R&D efforts in a dynamic and uncertain environment. We assume that firms investing in R&D seek to maximise their profits, devote resources to R&D, are subject to constraints, have a history of transitions before entering the current investment state (there is path dependence) and have specific individual, regional and sectoral characteristics (there are initial conditions that determine the different probabilities of being in the state we observe in the data).

The triggers for discontinuing R&D (competing risks) arrive randomly in the time interval from \(t_i\) to \(t_i+\Delta t_i\) (or in the time interval \([t_i, t_i+1)\) when time is discrete, see Sect. 3.2) with probability \(\theta _{1i}(t)\); examples are a macroeconomic shock common to all firms and firm-specific adverse events. Whenever a shock arrives, company i decides whether to interrupt or continue R&D; the probability of stopping R&D is \(\theta _{2i}(t)\) and depends on a set of constraints and incentives, as well as on the type of R&D and of firm. These factors reflect the idea that companies determine the duration of R&D not only directly, but also indirectly through other decisions. Once R&D is stopped, a company may decide to start R&D again, so we observe multiple spells, i.e. repeated transitions into and out of R&D expenditure.

We estimate the conditional probability \(\lambda _{i}(t | x_{it}, \nu _{i}) = \theta _{1i}(t) \theta _{2i}(t)\) of stopping R&D effort given that company i was still investing at \(t_i\); in words, we explain the hazard rate of R&D effort as a function of measurable time-varying and time-invariant explanatory variables (condensed into \(x_{it_i}^{\prime }\) for each company i at time \(t_i\)) and of unobservable time-invariant explanatory variables capturing unmeasurable heterogeneity (the term \(\nu _{i}\)). Note that the time periods observed may differ across firms i in the unbalanced panel; when unambiguous, we use the simpler notation t and \(x_{it}^{\prime }\). As time-varying covariates, the set \(x_{it}^{\prime }\) for the hazard rates includes financial constraints (with an expected positive effect), planned investments in physical capital and software (expected to have a negative and a positive effect, respectively), international competition (with an expected negative effect), and macroeconomic and firm-specific uncertainty (with positive effects); as time-invariant covariates, it includes technological opportunities, age and group membership (which should have a negative effect on the hazard ratio), family-type ownership (whose role is a priori ambiguous) and geographical location (in less developed areas, the hazard ratio should be higher).Footnote 1 In view of their relevance, the explanatory variables market power and persistence included in \(x_{it}^{\prime }\) are discussed in the following two subsections.
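The decomposition \(\lambda_i = \theta_{1i}\theta_{2i}\) can be checked with a small simulation. The sketch below assumes, purely for illustration, that both probabilities are constant over time and independent of each other; under these assumptions the yearly hazard is \(\theta_1\theta_2\) and spell lengths are geometric with mean \(1/(\theta_1\theta_2)\). The function names and the values \(\theta_1 = 0.5\), \(\theta_2 = 0.4\) are hypothetical.

```python
import random


def simulate_spell(theta1, theta2, rng, max_years=1_000):
    """Length in years of one hypothetical R&D spell.

    Each year a trigger (e.g. a macroeconomic or firm-specific shock) arrives
    with probability theta1; given a trigger, the firm stops R&D with
    probability theta2. Both are assumed constant and independent here.
    """
    for year in range(1, max_years + 1):
        shock = rng.random() < theta1
        if shock and rng.random() < theta2:
            return year                  # spell ends in this year
    return max_years                     # right-censored at max_years


def empirical_hazard(durations, t):
    """Share of spells ending at t among spells still active at the start of t."""
    at_risk = sum(1 for d in durations if d >= t)
    ended = sum(1 for d in durations if d == t)
    return ended / at_risk
```

With `rng = random.Random(42)` and 20,000 simulated spells, the empirical hazard in each of the first few years should be close to \(0.5 \times 0.4 = 0.2\), and the mean spell length close to 5 years, up to sampling noise.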

The term \(\nu _{i}\) captures firm-specific factors such as organisational capabilities (Dosi et al., 2000), managerial capabilities, technological opportunities (Dosi, 1997), appropriability and the unobservable type of R&D. These factors may not be captured by the measurable covariates, and omitting them would bias the estimates in panel data, as we discuss in Sect. 3.5.

2.1 Market power

One of the most crucial variables in \(x_{it}^{\prime }\) is market power. It is of particular interest to investigate its role within firm-level duration models, and to compare its effect on R&D intensity across as many disaggregated sectors as possible, in order to provide a complete picture. Indeed, the effect of market power is ambiguous in both theoretical models and empirical studies and therefore requires analysis at both the individual and the sectoral level.

Schumpeter (1943), and the literature that sprang from his work, argue that firms with greater market power have a higher incentive to innovate because they can better appropriate the returns on their R&D investment: intense market competition would imply lower post-entry rents (Hall, 2011), whereas low competitive pressure would reduce the risks associated with an innovation race. In fact, were the race lost, a firm would still enjoy oligopolistic profits. On the other hand, many authors support Arrow's (1962) thesis that competition positively affects innovative effort, as a firm that successfully introduces a new product could then become a monopolist (the “escape-competition effect”, Coscollá-Girona et al. (2015)). Conversely, firms that already enjoy market power do not need to innovate to stay in business. Moreover, a monopolist that innovates basically replaces itself as the monopolist in the market. This is the so-called “replacement effect” put forward by Arrow, as opposed to the “competitive effect” underlying the Schumpeterian hypothesis. An exhaustive account of the related theoretical debate can be found in, e.g., Tirole (1988, pp. 390–396) and Reinganum (1989), and in advanced IO textbooks such as Martin (2001).

From the perspective of the firm-level duration model, our hypothesis is that market power provides incentives to invest in long-term projects, encourages continued investment and changes a firm's sensitivity to research failures, thus enabling companies to keep investing in R&D even without success. We test alternative measures of market power in models controlling for other determinants, including firm size. Although the literature has analysed innovative outcomes (product and process innovations), the size-related mechanisms it has identified could also stimulate R&D effort. For example, the escape-competition theory might be more appropriate for large firms, as they can exploit economies of scale, effectively protect their discoveries (appropriability conditions), better withstand uncertainty, be less financially constrained, make rational R&D planning decisions and have a greater “absorptive capacity”, i.e. the ability to receive, process and utilise information from their environment (Geroski, 2005).

Indeed, the original positions adopted by Schumpeter and Arrow referred (or were taken to refer) to the impact of industry structure on innovation, whereas a significant part of the theoretical debate based on dynamic innovation races (stemming from Loury (1979) and Lee and Wilde (1980)) has focused on the different degrees of market power associated with Cournot and Bertrand behaviour for a given industry structure (see Delbono and Denicolò (1990, 1991)). In connection with this stream of literature, it is worth stressing that, in discussing the impact of competition on innovation incentives, the focus has been on the level of individual and aggregate R&D investments, i.e. R&D inputs. This also characterises the empirical analysis of our paper, as we focus on R&D efforts, which are inputs, rather than on outputs (the latter being actual innovations of any kind).

Whereas the aforementioned literature looks at monotone relationships between innovation and market power or concentration, the empirical and theoretical analysis carried out by Aghion et al. (2005) (see also Aghion et al. (2015)) singles out a concave, single-peaked relationship. This finding points to the existence of a specific industry structure that maximises aggregate R&D effort, a critical feature that should systematically attract the attention of antitrust authorities evaluating a merger proposal that might foster or compromise the pace of technological progress of an entire industry in the long run, as the debate about the Dow-DuPont case has illustrated (see Federico et al. (2017, 2018); Denicolò and Polo (2018)). We dwell specifically on this aspect after the discussion of duration and market power, showing that both monotone and non-monotone aggregate R&D curves, either U-shaped or inverted U-shaped, arise once we allow for heterogeneity across industries.
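As a minimal illustration of how an aggregate curve might be labelled monotone, U-shaped or inverted U-shaped, the sketch below fits a quadratic by ordinary least squares and checks whether its turning point lies inside the observed range. The quadratic specification, the generic competition measure `x` and the function names are our assumptions; this is neither the estimator of Aghion et al. (2005) nor the approach of Sect. 4.1.

```python
def fit_quadratic(x, y):
    """OLS fit of y = a + b*x + q*x**2 by solving the 3x3 normal equations."""
    s = [sum(xi ** k for xi in x) for k in range(5)]                    # sums of x**k
    t = [sum(yi * xi ** k for xi, yi in zip(x, y)) for k in range(3)]   # sums of y*x**k
    m = [[s[0], s[1], s[2], t[0]],
         [s[1], s[2], s[3], t[1]],
         [s[2], s[3], s[4], t[2]]]
    for col in range(3):                                # Gaussian elimination, partial pivoting
        pivot = max(range(col, 3), key=lambda row: abs(m[row][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, 3):
            factor = m[row][col] / m[col][col]
            for c in range(col, 4):
                m[row][c] -= factor * m[col][c]
    coef = [0.0, 0.0, 0.0]
    for row in (2, 1, 0):                               # back substitution
        known = sum(m[row][c] * coef[c] for c in range(row + 1, 3))
        coef[row] = (m[row][3] - known) / m[row][row]
    return coef                                         # [a, b, q]


def classify_shape(x, y, tol=1e-9):
    """Label the fitted curve over the observed range of x."""
    a, b, q = fit_quadratic(x, y)
    lo, hi = min(x), max(x)
    if abs(q) > tol:
        vertex = -b / (2 * q)                           # turning point of the parabola
        if lo < vertex < hi:
            return "inverted U" if q < 0 else "U"
    slope = b + q * (lo + hi)                           # derivative b + 2qx at the midpoint
    if abs(slope) <= tol:
        return "flat"
    return "increasing" if slope > 0 else "decreasing"
```

For example, data generated by \(y = 1 + 2x - 2x^2\) on \([0, 1]\) are labelled "inverted U", since the fitted turning point \(x = 0.5\) lies inside the range; when the turning point falls outside the range, the fitted curve is monotone there and the midpoint slope gives its direction.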

In this respect, the ensuing debate initiated by Gilbert (2006) and then developed by Cohen (2010), Shapiro (2012) and Whinston (2012) is of paramount importance. All these contributions discuss in a new light the emergence of a variety of patterns between innovation and competition or market structure, emphasising the need to focus on the policy implications for mergers. In particular, Shapiro (2012) proposes a reconciliation between the Schumpeterian and Arrovian positions through a few essential principles (contestability, appropriability and synergies), and stresses that merger policy need not depend on a specific shape of the relationship between innovation at the industry level and a specific measure of competition or concentration. Indeed, recent theoretical research (Marshall and Parra, 2019; Delbono and Lambertini, 2022) illustrates the possibility of generating virtually any effect of competition on innovation (monotone or not) from a single model, by focusing on the technological and demand parameters that may affect this relationship. This may, but need not, involve changing the number and size of firms in the industry: it does in Marshall and Parra (2019), but not in Delbono and Lambertini (2022), on which we base the sectoral disaggregated analysis presented in Sect. 4.1.

2.2 Innovation persistence

Since the well-known discussion of the economics of QWERTY (David, 1985), a stream of literature has tried to determine whether innovation persistence is “true” or “spurious”. Spurious persistence refers to continuity in R&D effort due to unobserved time-invariant firm characteristics. It is an artefact of the inability to control for individual heterogeneity, the \(\nu _{i}\) term of the conditional hazard \(\lambda _{i}(t | x_{it}, \nu _{i})\). Models can incorporate corrections for spurious persistence, which has been found to explain a significant part of the innovative process in standard IO methods (see, among others, Antonelli et al. (2013); Hecker and Ganter (2014); Lhuillery (2014); Peters (2009); Triguero and Córcoles (2013); Woerter (2014)).

Conversely, true persistence refers to the path-dependent nature of innovation, which results from the causal effect of past on present innovative activities, i.e. the manifestation of state dependence independently of unobserved individual effects. In our case, it is the effect of inter-temporal spillovers between subsequent R&D efforts that we capture through specific covariates in \(x_{it}^{\prime }\) of the conditional hazard \(\lambda _{i}(t | x_{it}, \nu _{i})\). Three main accounts of the mechanisms behind true persistence can be distinguished. First, technological knowledge is an economic good characterised by cumulability and non-exhaustibility, and is at the same time an input and an output of the knowledge-generating process (Antonelli et al., 2012). Hence, firms that have generated new technological knowledge can rely on it to generate new, additional knowledge at a lower cost. Dynamic increasing returns are likely to shape innovative activities: the larger the cumulated stock of innovation, the larger the cost savings (“learning-to-learn” and “learning-by-doing” effects). Malerba and Orsenigo (1995) propose the concept of cumulativeness at the firm level, according to which “firms continuously active in a certain technological domain accumulate knowledge and expertise”.

A second stream holds that firms need to profit successfully from their innovations in order to innovate again. According to this view, commercial success increases the probability of future innovation because it allows profits to be reallocated to new research projects (the “success-breeds-success” effect). Firms that innovate successfully are hence more likely to innovate again because of their permanently higher market power (Le Bas and Scellato, 2014). We control for this effect by including market power among our explanatory variables. The firm's innovative base is therefore an important explanatory variable in a model aimed at understanding the role of market power in R&D duration.

Third, it is worth mentioning the “sunk-costs” theory, according to which innovative activities are characterised by high set-up costs for research facilities and personnel training, and by long-term investment commitments. Once research has started, the opportunity cost of interrupting it is quite high. This implies that research and development activities also generate high entry and exit barriers (Antonelli et al., 2012).

All these arguments can be seen as complementary, rather than mutually exclusive, in explaining innovation persistence (Ruttan, 1997). They generate inter-temporal externalities and spillovers. In brief, in the words of Dosi (1988), “what the firm can hope to do technologically in the future is narrowly constrained by what it has been capable of doing in the past”.Footnote 2

3 The empirical framework

3.1 The data

The sample results from merging the Survey on Industrial and Service Firms, conducted annually by the Bank of Italy since 1984, with the Company Accounts Data Service (CADS), collected since 1982. Together they constitute an excellent database in terms of the quality and continuity of the available information and of representativeness of the Italian entrepreneurial population. Of particular interest are the questions on competitiveness and those on current (period t), past (period \(t-1\)) and prospective (the respondents’ expectations in t for \(t+1\)) investments and sales.Footnote 3 The sample covers manufacturing, energy and mining, private non-financial services and construction, and includes companies with ten or more employees (both SMEs and large firms). We therefore extend the landscape of large manufacturing firms investigated by, e.g., Piva and Vivarelli (2007) and Arrighetti et al. (2014).

R&D investment per employee, \(I^{\rm {{\tiny R\&D}}}_{it}/Em_{it}\), is at the core of our empirical analysis of R&D input duration.Footnote 4 It is worth stressing that the numerator is derived from the answer to a specific question in the Bank of Italy survey and, as such, represents gross expenditure on R&D, market research, and product design and testing, while excluding software development costs and expenditure on the education and training of workers. Both services purchased from external companies and activities carried out in-house are included, so our measure can capture what Gilbert (2006) calls the numerous, scattered and varied sources of invention. Moreover, our measure captures the innovation undertaken by small firms, which relies more heavily on the acquisition of external technologies (Bontempi and Mairesse, 2015; Conte and Vivarelli, 2005).Footnote 5

To estimate the firm-level R&D duration models we use the 1996–2012 sub-period, while the pre-estimation period 1984–1995 is used to derive the instrument set for potentially endogenous explanatory variables (see Sect. 3.5.4).Footnote 6 For the industry-level analysis, we use the entire 1984–2012 period in order to maximise the number of observations available for each sector (see Sect. 4.1). Table 1 compares, over the 1996–2012 estimation period, the full sample of 3634 firms with the 1949 firms that performed R&D in at least one year and were considered in the duration analysis. The working sample remains representative of firms’ characteristics as distributed in the full sample. Characteristics that are positively (negatively) correlated with R&D investment, and thus over- (under-) represented in the working sample, are controlled for in all estimated models, and the Heckman (1979) two-step estimator is implemented to avoid selection bias in the duration equations. Details on the sectoral distribution over the period 1984–2012 are given in Table 7 in Appendix A1.2.

3.2 Measuring duration

Our variable of interest is the duration of a firm’s innovative effort, or its “innovative spell”. This spell is calculated for each firm in our sample as the number of years in which it reports positive \(I^{\rm {{\tiny R\&D}}}_{it}/Em_{it}\). Our dependent variable is then the probability that a firm interrupts R&D investment in year \(t_i\), given that it has invested in the period \((t_i-s; t_i-1)\), \(s>0\). A distinguishing feature of duration data is that some observations may be right-censored: some spells may be incomplete and their true length unknown (this happens, for instance, for firms investing in R&D in the last observed year, 2012, as we do not know whether they also did so in 2013).Footnote 7 Let \(T_{i}^{\star }\) be a random variable measuring the firm’s innovative spell length, and let \(c_{i}\) be the censoring time (i.e. the time beyond which we do not observe the firm’s behaviour), measured from the time origin of the spell. Then the observed random variable is \(T_{i}=\min (T_{i}^{\star }, c_{i})\). An indicator variable \(d_{i}\) is also observed, equal to 1 if \(T_{i}=T_{i}^{\star }\) and 0 if \(T_{i}=c_{i}\). Suppose the random variable \(T_{i}\) has a continuous probability density function \(f(t_{i})\), where \(t_{i}\) is a realisation of \(T_{i}\). The cumulative probability (failure function) is

\[F(t_{i}) = \Pr (T_{i} \le t_{i}) = \int _{0}^{t_{i}} f(s)\,\text {d}s.\]

The survival function describes the probability that the innovative spell is at least of length \(t_{i}\):

\[S(t_{i}) = \Pr (T_{i} \ge t_{i}) = 1 - F(t_{i}).\]

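The probability that a spell that has lasted until time \(t_{i}\) will end in the next short time interval (\(\Delta t_{i}\)) is given by the hazard function

\[\lambda _{i}(t) = \lim _{\Delta t_{i} \to 0} \frac{\Pr (t_{i} \le T_{i} < t_{i}+\Delta t_{i} \mid T_{i} \ge t_{i})}{\Delta t_{i}} = \frac{f(t_{i})}{S(t_{i})},\]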

where \(f(t_{i})=\text {d}F(t_{i})/\text {d}t_{i}\). Roughly speaking, \(\lambda _{i}(t)\) is the rate at which spell i will be completed at duration \(t_i\), given that it has lasted until \(t_i\); it equals the proportional rate of decrease of the survival function, i.e. the derivative of \(-\ln S(t_{i})\). All probabilities may be computed from the hazard function; for example, \(F(t_{i}) = 1-\exp \Bigl (- \int _{0}^{t_{i}}\lambda _{i}(s)\text {d}s \Bigr )\) and \(S(t_{i}) = \exp \Bigl (- \int _{0}^{t_{i}}\lambda _{i}(s)\text {d}s \Bigr )\).
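The continuous-time identities can be verified numerically. The sketch below assumes a hypothetical hazard that declines with spell length, \(\lambda(s) = 0.5\,e^{-0.2 s}\), integrates it with the trapezoidal rule to recover \(S\) and \(F\), and can then be used to check that \(f/S\) returns the hazard. The function names are our own.

```python
import math


def cumulative_hazard(hazard, t, steps=10_000):
    """Trapezoidal approximation of the integral of hazard(s) over [0, t]."""
    h = t / steps
    total = 0.5 * (hazard(0.0) + hazard(t))
    for k in range(1, steps):
        total += hazard(k * h)
    return total * h


def survival(hazard, t):
    """S(t) = exp(-integral_0^t lambda(s) ds)."""
    return math.exp(-cumulative_hazard(hazard, t))


def failure(hazard, t):
    """F(t) = 1 - S(t)."""
    return 1.0 - survival(hazard, t)


def declining_hazard(s):
    """Hypothetical hazard that falls as the spell lengthens (illustrative only)."""
    return 0.5 * math.exp(-0.2 * s)
```

For this hazard the integral has the closed form \(2.5\,(1-e^{-0.2t})\), which the numerical result matches closely; a central finite difference of \(S\) gives \(f\), and \(f/S\) recovers \(\lambda(t)\).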

In practice, our survival times are grouped into discrete intervals of equal unit length (one year), so that \(\Delta t_{i}=1\), and there are \(I = 1, 2, \dots , I_i\) grouping points defining the \(I_i+1\) intervals in which investment in R&D could be interrupted, \([0, 1), [1, 2), \dots , [I_i-1, I_i), [I_i, \infty )\).Footnote 8 The time-aggregated hazard function can be written as \(\lambda _{i}(t) = \Pr (T_{i} \in [t_{i}, t_{i}+1) | T_{i} \ge t_{i}) = \Pr (t_{i} \le T_{i} < t_{i}+1 | T_{i} \ge t_{i}) = \frac{f(t_{i})}{S(t_{i})}\) to represent the probability that R&D spending stops between time \(t_{i}\) and time \(t_{i}+1\), given that company i is still investing at the beginning of interval \([t_{i}, t_{i}+1)\), with \(S(t_{i}) = \prod _{\tau =0}^{t_i-1}(1-\lambda _{i}(\tau ))\).
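The discrete-time relations can be checked with a few lines of code: given a sequence of yearly hazards, the survival function is the running product of \(1-\lambda_i(\tau)\), and the probability that R&D stops in \([t_i, t_i+1)\) is \(f(t_i) = \lambda_i(t_i)\,S(t_i)\). The hazards in the example and the function names are hypothetical.

```python
def discrete_survival(hazards):
    """S(t) = prod_{tau=0}^{t-1} (1 - lambda(tau)), for t = 0, 1, ..., len(hazards)."""
    surv = [1.0]                          # S(0) = 1: every spell is active at the start
    for lam in hazards:
        surv.append(surv[-1] * (1.0 - lam))
    return surv


def discrete_exit_probabilities(hazards):
    """f(t) = lambda(t) * S(t): probability that R&D stops in [t, t+1)."""
    surv = discrete_survival(hazards)
    return [lam * s for lam, s in zip(hazards, surv)]
```

With yearly hazards 0.2, 0.15 and 0.1, the survival function is 1, 0.8, 0.68 and 0.612, and the exit probabilities 0.2, 0.12 and 0.068 add up to one together with \(S(3) = 0.612\).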

Table 2 reports the survival and hazard functions, estimated by the Kaplan and Meier (1958) and the Nelson (1972)-Aalen (1978) estimators, respectively. The survival function decreases over time at a diminishing rate: at the end of the period considered, only about 16% of firms are still spending on R&D, but most exits occur early, with 49% of firms ceasing investment within the first 4 years. Equivalently, in terms of hazard rates (spell exit rates), the probability of interrupting innovative activity is higher in the first four years and then decreases once the innovation activity has lasted for a certain period (the only exception to this pattern is associated with the 2008/09 crisis, 13–14 in the table). Companies experienced multiple spells, meaning that they interrupted and restarted R&D spending several times (at most 5). The more frequently innovation is interrupted and restarted, the greater the number of spells and the lower their average duration, which in our case is just over two and a half years (2.59).Footnote 9
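A minimal sketch of the two estimators behind Table 2 follows. It treats spells as independent observations (multiple spells per firm and left censoring are ignored here, which is a simplification), takes observed spell lengths \(T_i\) in years together with the indicators \(d_i\), and counts spells censored at \(t\) as still at risk at \(t\), the usual convention. The function name and the data in the check are hypothetical.

```python
from collections import Counter


def km_nelson_aalen(durations, events):
    """Kaplan-Meier survival and Nelson-Aalen cumulative hazard for spell data.

    durations: observed spell lengths T_i; events: d_i = 1 if R&D stopped,
    0 if the spell is right-censored. Returns one row per distinct exit time:
    (t, number at risk, hazard, survival, cumulative hazard).
    """
    exits = Counter(t for t, d in zip(durations, events) if d == 1)
    surv, cum_hazard, table = 1.0, 0.0, []
    for t in sorted(exits):
        at_risk = sum(1 for x in durations if x >= t)
        hazard = exits[t] / at_risk        # spell exit rate at duration t
        surv *= 1.0 - hazard               # Kaplan-Meier product-limit step
        cum_hazard += hazard               # Nelson-Aalen increment
        table.append((t, at_risk, hazard, surv, cum_hazard))
    return table
```

For six hypothetical spells of lengths 1, 1, 2, 3, 3 and 4 years, with the second spell of length 3 and the spell of length 4 right-censored, the estimated survival falls to 2/3, 0.5 and 1/3 at durations 1, 2 and 3.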

In the last two columns of Table 2 we deal with left censoring, which occurs when we do not observe the starting date of the R&D spell (this concerns the spells of firms reporting positive R&D investment in 1996, the first year of our estimation sample; the length of such spells could be greater than what is observed).Footnote 10 As pointed out by Iceland (1997), omitting left-censored cases could lead to serious selection bias, because it would exclude from the analysis the firms with the longest innovative spells. Whereas firms that invest in R&D discontinuously (i.e. have several short spells) may be expected to re-enter the data set, firms that engage continuously in innovative activity would be ignored, leading to a downward bias in the estimated survival function. Several methods can mitigate the problem (Stevens, 1995; Iceland, 1997; Carter and Signorino, 2013). To retain all available information, we follow Stevens (1995), who suggests including left-censored spells in the analysis and adding a dummy variable indicating whether the observed spell is left-censored.

3.3 Measuring market power

As the cellophane case showed (Stocking and Mueller, 1995), measuring market power is far from trivial. What we can do is compare alternative measures in the empirical analyses at the company level (Sect. 4) and at the industry level (Sect. 4.1). The two standard proxies in the empirical IO literature are the firm-level Lerner index measuring the price–cost margin, PCM, and industry-level concentration, measured by the number of firms within each sector and by the Herfindahl-Hirschman index.Footnote 11
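For reference, the textbook versions of these proxies can be written in a few lines. The helper names are hypothetical and the sales figures are invented; the PCM used in the paper is an accounting approximation to the Lerner index, not this formula applied to observed marginal cost.

```python
def market_shares(sales):
    """Shares of total industry sales."""
    total = float(sum(sales))
    return [s / total for s in sales]

def herfindahl_hirschman(sales):
    """HHI on the 0-10,000 scale: sum of squared percentage market shares."""
    return sum((100.0 * s) ** 2 for s in market_shares(sales))

def concentration_ratio(sales, k=4):
    """CR_k: combined market share of the k largest firms."""
    return sum(sorted(market_shares(sales), reverse=True)[:k])

def lerner_index(price, marginal_cost):
    """(p - mc) / p; the PCM is an observable proxy for this quantity."""
    return (price - marginal_cost) / price

print(herfindahl_hirschman([50, 50]))            # two equal-sized firms
print(concentration_ratio([40, 30, 20, 10], 2))  # share of the two largest firms
```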

The price–cost margin is defined as (see Domowitz et al., 1986; Bontempi et al., 2010; Aghion et al., 2005):

where \(\Delta\) denotes the change.Footnote 12 This measure is widely used despite its drawbacks. As reported by Domowitz et al. (1986) and Coscollá-Girona et al. (2015), increased PCMs (or high degrees of concentration) are not necessarily symptoms of a lack of competition. Boone (2000) points out that there is no simple relationship between product market competition and market structure when firms' cost-efficiency levels are asymmetric. For instance, enhanced competition may raise the market shares of the most efficient firms at the expense of inefficient ones, increasing the concentration index (the “reallocation effect”, Boone, 2008); or concentration may rise as the most inefficient firms exit the market because of more intense competition (the “selection effect”, Boone, 2008). The latter case may also lead to an increase in the average PCM. Similarly, if weaker competitive pressure leads to higher costs, due to inefficiency or to the absence of cost-reducing innovations, the PCM will decrease (Coscollá-Girona et al., 2015). Furthermore, as pointed out by Domowitz et al. (1986), PCMs are sensitive to demand fluctuations. A further limitation that plagues empirical work is conceptual: the payroll deducted in computing the PCM includes the wages of R&D employees. A cut in R&D therefore has an almost mechanical effect on the PCM, unless the employees take over other tasks within the firm or the data allow R&D employees' wages to be separated from total payroll. Another drawback of the PCM is its high correlation (41%) with cash flow, which we use to capture internal funds and financial constraints on innovation. More generally, measures based mainly on the level of sales risk generating a mechanical empirical correlation with an input such as R&D expenditure or an output such as patents.

For consistency with Delbono and Lambertini (2022) and with the analysis disaggregated at the industry level, the second measure we use is \(share_{jt}\), given by the number of firms investing in R&D within each industry \(j = 1, \dots , 23\) in each year t, divided by the total number of firms in that industry and year. Unlike concentration measures that consider only the largest firms in an industry, \(share_{jt}\) takes into account all R&D performers inside each industry, reflecting changes in the industrial diffusion of innovation activity.Footnote 13
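Computing \(share_{jt}\) amounts to a grouped mean of an R&D indicator by industry and year. A minimal sketch, assuming one record per firm-year; the industry labels and records are made up:

```python
from collections import defaultdict

def rd_share(records):
    """share_jt: firms with positive R&D in (industry j, year t) over all firms in (j, t).

    records: iterable of (industry, year, rd_positive) tuples, one per firm-year.
    """
    counts = defaultdict(lambda: [0, 0])  # (j, t) -> [R&D performers, all firms]
    for industry, year, rd_positive in records:
        cell = counts[(industry, year)]
        cell[0] += int(bool(rd_positive))
        cell[1] += 1
    return {key: n_rd / n_all for key, (n_rd, n_all) in counts.items()}

# Hypothetical firm-year records.
records = [("food", 1996, True), ("food", 1996, False), ("food", 1996, False),
           ("chem", 1996, True), ("chem", 1996, True)]
print(rd_share(records))
```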

An important contribution of this study is the use of a third, and new, measure of market power, given by the implied demand elasticity obtained from qualitative data. In the 1996 and 2007 surveys firms were asked the following question (Bank of Italy, 2008):

Consider the following hypothetical experiment: suppose your firm raises today the price of the goods produced by 10 percent. What would be the percent change in the value of sales, assuming that your firm’s competitors leave their prices unchanged, and holding everything else constant?

This question refers directly to the firm's price elasticity of demand, since all other variables that can shift the demand curve faced by the firm are held constant in the thought experiment. Because Italian companies are small, unlisted and typically operate in well-defined industries, we expect respondents to have a clear idea when answering the survey question on demand elasticity.Footnote 14
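Because the question asks for the change in the value of sales (\(R = pq\)) rather than in quantity, converting an answer into an elasticity requires netting out the 10% price rise. The sketch below shows one possible conversion under that assumption; the function name is hypothetical, and the mapping actually used by the authors may differ.

```python
PRICE_INCREASE = 0.10  # the 10 percent price rise in the survey question

def implied_elasticity(sales_change_pct, exact=True):
    """Own-price elasticity implied by the reported % change in the VALUE of sales.

    Sales value is R = p * q, so 1 + r = (1 + dp) * (1 + g), where g is the
    implied % change in quantity. The exact version solves for g; the
    approximate version uses g ~= r - dp.
    """
    r = sales_change_pct / 100.0
    if exact:
        g = (1.0 + r) / (1.0 + PRICE_INCREASE) - 1.0
    else:
        g = r - PRICE_INCREASE
    return g / PRICE_INCREASE

# A hypothetical respondent expecting the value of sales to fall by 5 percent:
print(implied_elasticity(-5.0, exact=False))  # about -1.5
print(implied_elasticity(-5.0))               # about -1.36
```

The two conversions diverge as answers move further from zero, so the choice matters mainly for firms reporting large sales losses.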

The answers given in the two surveys differ only slightly, owing to rounding and non-significant variations in elasticity.Footnote 15 This allows us to treat elasticity as a constant characteristic of our companies, and thus as not affected by endogeneity problems in our duration models (see Sect. 3.5.4). Hence, we can use the individual averages as an estimate of the firm-specific elasticity; Table 3 shows that averaging does not change the statistical properties of the distribution. The descriptive statistics in Table 3 are consistent with some firms having market power: the mean value of \(\eta\) is \(-\)1.36, and the great majority of firms, about 87%, show an implied elasticity greater than 1 in absolute value.
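The firm-level averaging and the share of firms with elastic demand can be sketched as follows; the firm labels and elasticity values are hypothetical.

```python
from statistics import mean

def firm_elasticity(answers):
    """Average each firm's implied elasticities across survey waves (e.g. 1996 and 2007)."""
    return {firm: mean(values) for firm, values in answers.items() if values}

def share_elastic(eta_by_firm):
    """Fraction of firms with |eta| > 1 (elastic firm-specific demand)."""
    etas = list(eta_by_firm.values())
    return sum(abs(e) > 1 for e in etas) / len(etas)

# Hypothetical firms; firm B answered only one wave.
answers = {"A": [-1.5, -1.3], "B": [-0.8], "C": [-2.0, -1.8]}
eta = firm_elasticity(answers)
print(eta, share_elastic(eta))
```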

Table 4 reports some descriptive statistics on the relationship between the implied demand elasticity, R&D, PCM, firm size and age. Additional qualitative analyses of the companies across elasticity classes confirm that our new measure captures the market structure and the degree of competition (see the Appendix, Sect. A1.3).

Classes with low elasticity (in absolute value) are characterised by companies with high R&D, high PCM, large size and greater age. Firm size is positively correlated with PCM (8%), share (14%) and cash flow (7%), while it is not correlated with elasticity. This last point is important because, in our estimates, we aim to distinguish between firm size and market power.Footnote 16

3.4 Measuring persistence

To capture the factors that determine the true persistence and cumulativeness of the R&D effort, we include four drivers: the lagged logarithm of the innovative stock (\(\ln\) RD);Footnote 17 the number of previous spells in which the firm continuously invested in R&D (Spell Number); the elapsed duration of the previous spell (Time); and a dummy equal to 1 if the spell is left-censored (Left Cens.). As companies contribute multiple spells (see Sect. 3.2), including the previous event and its length among the time-varying explanatory variables helps correct for the dependence between the occurrence of one event and the hazard of subsequent events.
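A minimal sketch of how spells and the Spell Number, Time and Left Cens. covariates could be built from a firm's yearly R&D indicator. It assumes consecutive years with no panel gaps, counts Spell Number from zero (number of previous spells), and flags as left-censored the spells already in progress in the first sample year (1996 by default); the function name is hypothetical. The lagged R&D stock is not constructed here.

```python
def rd_spells(rd_by_year, sample_start=1996):
    """Split a firm's yearly R&D indicator into spells of continuous investment.

    rd_by_year: {year: True if R&D > 0}, assumed to cover consecutive years.
    Returns one dict per spell with duration covariates.
    """
    years = sorted(rd_by_year)
    last = years[-1]
    raw, start = [], None
    for year in years:
        if rd_by_year[year] and start is None:
            start = year                              # a new spell begins
        elif not rd_by_year[year] and start is not None:
            raw.append((start, year - start, 1))      # R&D stopped: completed spell
            start = None
    if start is not None:
        raw.append((start, last - start + 1, 0))      # still investing: right-censored
    spells, prev_len = [], 0
    for k, (start, length, ended) in enumerate(raw):
        spells.append({
            "start": start,
            "length": length,
            "ended": ended,
            "left_cens": int(start == sample_start),  # already investing in first sample year
            "spell_number": k,                        # number of previous spells
            "time_prev": prev_len,                    # duration of previous spell (0 if none)
        })
        prev_len = length
    return spells

# Hypothetical firm: invests 1996-97, stops in 1998, restarts in 1999.
history = {1996: True, 1997: True, 1998: False, 1999: True, 2000: True, 2001: True}
for spell in rd_spells(history):
    print(spell)
```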

3.5 Modelling duration

The next steps concern how the hazard depends on observed and unobserved explanatory variables (the shape of the hazard), the consequences of ignoring unobserved heterogeneity, and how to model the distribution of \(\nu _{i}\). As discussed above, the many available theories suggest potential covariates rather than a specific functional form for the duration model (the true form is unknown a priori). Hence, we prefer reduced-form approaches, as is common in the literature on duration analysis (Heckman and Singer, 1984a).Footnote 18 Comparing the qualitative results obtained from alternative specifications of the hazard shape and treatments of heterogeneity provides a sensitivity analysis over the model space, ensuring the transparency of our results and offering useful insights.

3.5.1 The Cox model

We use the semi-parametric Cox (1972) model as the starting point for our analysis because it is flexible and does not require any assumption about the functional form of the baseline hazard. Each firm i faces a hazard function that is common to all firms (the “baseline” hazard, \(\lambda _0\), a function of t alone) and is then modified by the set of explanatory variables \(x_{it}^{\prime }\). The relationship between the individual risk and the vector \(x_{it}^{\prime }\) depends on the estimated coefficient vector \(\beta\). The Cox model assumes that the individual risk equals the product of the “baseline” risk and the function \(\psi (\textbf{x}, \beta ) = \exp (\textbf{x}^{\prime }\beta )\):
\[\lambda _{i}(t \mid x_{it}) = \lambda _{0}(t)\exp (x_{it}^{\prime }\beta ) \tag{4}\]

In other words, the shape of the hazard function is the same for all firms, and variations in \(x_{it}^{\prime }\) simply scale it proportionally. This allows a direct interpretation of the estimated coefficients because, for the \(k^{th}\) covariate (\(k=1, 2, \dots , K\)),
\[\frac{\partial \ln \lambda _{i}(t \mid x_{it})}{\partial x_{kit}} = \beta _{k}\]

represents the constant proportional effect of an increase in the corresponding variable on the conditional probability of exiting the innovative state. This approach estimates \(\beta\) (by partial likelihood) without requiring estimation of \(\lambda _0\).
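Estimating \(\beta\) without \(\lambda_0\) is possible because the Cox partial likelihood compares, at each exit time, the exiting spells with all spells still at risk, so the baseline hazard cancels. A minimal sketch using Breslow's approximation for tied exit times (the Efron method cited in the text is a refinement not implemented here); names and data are hypothetical.

```python
import math

def cox_partial_loglik(beta, X, durations, events):
    """Cox partial log-likelihood with Breslow's approximation for tied exit times.

    beta: coefficients; X: one covariate vector per spell; durations: spell lengths;
    events: 1 if R&D stopped, 0 if right-censored. The baseline hazard never appears.
    """
    eta = [sum(b * x for b, x in zip(beta, xi)) for xi in X]  # linear predictors x'beta
    loglik = 0.0
    for t in sorted({d for d, e in zip(durations, events) if e}):
        risk_set = [j for j, d in enumerate(durations) if d >= t]
        exits = [j for j, (d, e) in enumerate(zip(durations, events)) if d == t and e]
        log_denominator = math.log(sum(math.exp(eta[j]) for j in risk_set))
        loglik += sum(eta[j] for j in exits) - len(exits) * log_denominator
    return loglik

# Hypothetical spells with one covariate.
print(cox_partial_loglik([0.5], [[1.0], [0.0], [2.0]], [1, 2, 3], [1, 1, 0]))
```

With yearly data many exits share the same t, which is exactly where the tie approximation starts to matter.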

The Cox model suffers from four potential drawbacks. First, it is a continuous-time specification and thus assumes the absence of ties. Even if the behavioural process generating the exit rates were to occur in continuous time, our data are recorded in grouped form (by year). From Table 2, the ratio of the length of the grouping intervals to the average spell length is not small (1/2.59 ≈ 0.386), suggesting that a discrete-time specification would be more appropriate. If ties are infrequent, they can be handled with the Efron (1977) method; when ties are frequent, however, asymptotic bias in both the estimated coefficients and the corresponding covariance matrix cannot be avoided. Second, the Cox model is not robust to neglected covariates, specifically unobserved heterogeneity (frailty, in biostatistics); failing to control for firm capabilities (technological opportunities, appropriability conditions, etc.) could produce a spurious association between, e.g., R&D effort and market power, as well as spurious persistence.Footnote 19 Third, it provides no information about the shape of the baseline hazard \(\lambda _0\), which is important for ascertaining whether there is negative (positive) duration dependence. Finally, the Cox model in Equation (4) assumes Proportional Hazards (PH): the effect of any explanatory variable on the hazard is constant over duration time. Should the PH assumption fail, the estimated covariate effects would be biased. The PH assumption may fail for two reasons: (1) the effect of the explanatory variables is intrinsically non-proportional; (2) unobserved individual heterogeneity is not accounted for and makes the effect depend on duration time, even if the underlying process is of the proportional hazards form (Lancaster and Nickell, 1980; Brenton et al., 2010).
Estimated results for the Cox model are in Appendix A1.4, Table 11; the PH assumption is strongly rejected (Table 12).

3.5.2 The Cloglog model

The Cloglog model assumes a complementary log-log form for the hazard, and overcomes many limitations that affect the Cox model. In fact, as well as giving a discrete time representation of an underlying continuous time proportional hazards model, it also controls for unobserved heterogeneity and gives information about the duration dependence of the hazard. The conditional likelihood of the data (a sample of N firms where independence over i is assumed) is

where \(d_i=1\) if the spell is completed (not right-censored) and 0 otherwise. The first term corresponds to completed spells and represents the conditional probability that \(T_i\) falls into the interval \([t_i, t_{i}+1)\). If firm i is censored (exits the sample) at some point inside the interval, we do not know whether it would have continued investing during the interval, and we must treat it as censored (the first term equals 1). The second term is the probability that a spell lasts at least until the interval \([t_{i}-1, t_{i})\). The last three rows are the discrete-time variant of a continuous-time PH model (Kalbfleisch and Prentice, 2002; Kiefer, 1988; Meyer, 1990). For the interval \([t_{i}, t_{i}+1)\), \(\gamma (t_{i})= \ln \left( \int _{t_i}^{t_{i}+1}\lambda _{0}(s)\text {d}s\right)\), and the time-varying covariates in \(x_{it_i}^{\prime }\) are assumed to be constant within each interval (but may vary between intervals). The baseline hazard function \(\gamma (t_i) = [\gamma (0) \gamma (1) \dots \gamma (I_i-1)]^{\prime }\) is a polynomial in time, usually chosen by the researcher, which allows a flexible definition of duration dependence. In the present work, the highest-order significant polynomial term was the first (a linear trend), as we include appropriate covariates to capture the duration dependence of the hazard (see Sect. 3.4).

Without loss of generality, we can write the log-likelihood as

where the interval hazard rate \(\lambda _i(t|x_{it}; \beta ) = 1-\exp \Bigl (-\exp (x_{it}^{\prime }\beta + \gamma (t))\Bigr )\) can be seen as a generalized linear model with the complementary log-log transformation as link function, \(\ln \Bigl [- \ln (1-\lambda _i(t|x_{it}; \beta ))\Bigr ] = x_{it}^{\prime }\beta + \gamma (t)\) (Allison, 1982; Jenkins, 1995).
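The cloglog hazard and its link are exact inverses, and with a first-order polynomial \(\gamma(t) = \gamma_0 + \gamma_1 t\) a negative \(\gamma_1\) produces hazards that fall with duration. A short sketch with hypothetical parameter values:

```python
import math

def cloglog_hazard(xb, gamma_t):
    """Interval hazard lambda = 1 - exp(-exp(x'beta + gamma(t)))."""
    return 1.0 - math.exp(-math.exp(xb + gamma_t))

def cloglog_link(lam):
    """Complementary log-log transformation ln[-ln(1 - lambda)]."""
    return math.log(-math.log(1.0 - lam))

def hazard_path(xb, g0, g1, periods):
    """Hazards for t = 0..periods-1 with a first-order polynomial gamma(t) = g0 + g1 * t."""
    return [cloglog_hazard(xb, g0 + g1 * t) for t in range(periods)]

# Hypothetical values: x'beta = 0.3, gamma(t) = -1.2 - 0.1 t.
print(hazard_path(0.3, -1.2, -0.1, 5))
```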

Since in each discrete-time interval the spell either ends or it does not, a binary choice model can be used for the probability of interrupting R&D in each period (Jenkins, 1995; Kiefer, 1988; Han and Hausman, 1990; Meyer, 1990; Lancaster, 1990; Sueyoshi, 1995). In the log-likelihood

\(1-S(t_i|x_{it}; \beta ) = F(t_i|x_{it}; \beta )\) is specified as a logit or probit model rather than a complementary log-log model. For example, a logistic hazard model with interval-specific intercepts may be consistent with an underlying continuous-time model in which the within-interval durations follow a log-logistic distribution (Sueyoshi, 1995). The log-likelihood is

where \(\dfrac{\lambda _{i}(t|x_{it}; \beta )}{1-\lambda _{i}(t|x_{it}; \beta )} = \Biggl (\dfrac{\lambda _{0}(t)}{1-\lambda _{0}(t)}\Biggr )\exp (x_{it}^{\prime }\beta )\).

It follows that \(\ln \Biggl (\dfrac{\lambda _{i}(t|x_{it}; \beta )}{1-\lambda _{i}(t|x_{it}; \beta )}\Biggr ) = \text {logit}[\lambda _{i}(t|x_{it}; \beta )] = \delta (t) + x_{it}^{\prime }\beta\), where \(\delta (t) = \text {logit}[\lambda _{0}(t)]\) can be a polynomial in time, like \(\gamma (t)\) in the Cloglog model. The Cloglog and logistic hazard models yield similar results for relatively small hazard rates: \(\text {logit}[\lambda _{i}(t|x_{it}; \beta )] = \ln (\lambda _{i}(t|x_{it}; \beta )) - \ln (1 - \lambda _{i}(t|x_{it}; \beta )) \approx \ln (\lambda _{i}(t|x_{it}; \beta ))\) for small \(\lambda _{i}(t|x_{it}; \beta )\), and likewise \(\ln [-\ln (1-\lambda _{i}(t|x_{it}; \beta ))] \approx \ln (\lambda _{i}(t|x_{it}; \beta ))\), since \(-\ln (1-\lambda ) \approx \lambda\) for small \(\lambda\).
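The small-hazard equivalence of the two links is easy to verify numerically: both transformations approach \(\ln\lambda\) as \(\lambda \to 0\) and separate as \(\lambda\) grows. The hazard values below are arbitrary.

```python
import math

def logit(p):
    return math.log(p / (1.0 - p))

def cloglog(p):
    return math.log(-math.log(1.0 - p))

for lam in (0.01, 0.05, 0.20, 0.50):
    # For small lam both transformations approach ln(lam); the gap widens as lam grows.
    print(f"{lam:.2f}  logit={logit(lam):+.4f}  cloglog={cloglog(lam):+.4f}  ln={math.log(lam):+.4f}")
```

With yearly exit rates as high as those in the first years of Table 2, the two links need not give the same answers, which is one reason for reporting both.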

Hence, Eq. (9) can be rewritten as the log-likelihood function of a binary dependent variable \(y_{it}=1\) if spell i ends in interval t, and 0 otherwise:

where the functional form for \(\lambda _i(t | x_{it}; \beta )\) can be a complementary log-log or a logistic model. Since we regard R&D investment as a continuous process observed in grouped form, we prefer the model with the Cloglog link. In general, duration data are much more informative than binary data (Rust, 1994; Van den Berg, 2001), as they preserve information about when each company stopped R&D spending and about multiple spells; moreover, the logistic model is not a PH model.
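In practice the binary representation is obtained by expanding each spell into one row per interval at risk, with \(y_{it}=1\) only in the interval in which R&D stops. A minimal sketch, assuming each spell is given as (number of intervals at risk, completed flag) with the exit occurring in the last interval of a completed spell; names are hypothetical and the hazard is passed in as a function, so either link can be plugged in.

```python
import math

def person_period(spells):
    """Expand spells (intervals_at_risk, ended) into rows (spell_id, t, y)."""
    rows = []
    for i, (length, ended) in enumerate(spells):
        for t in range(length):
            y = int(ended and t == length - 1)  # 1 only where the spell ends
            rows.append((i, t, y))
    return rows

def binary_loglik(rows, hazard):
    """sum over rows of y ln(lambda) + (1 - y) ln(1 - lambda); hazard(i, t) -> lambda."""
    ll = 0.0
    for i, t, y in rows:
        lam = hazard(i, t)
        ll += y * math.log(lam) + (1 - y) * math.log(1.0 - lam)
    return ll

# Hypothetical spells: one completed after 2 intervals, one right-censored after 3.
rows = person_period([(2, 1), (3, 0)])
print(rows)
print(binary_loglik(rows, lambda i, t: 0.2))  # constant hazard, for illustration
```

Summing over a spell's rows reproduces the duration likelihood \([\lambda/(1-\lambda)]^{d_i}\prod_t(1-\lambda)\), which is why the two formulations coincide.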

As argued in Sect. 2, many studies highlight the importance of unobserved heterogeneity (denoted by \(\nu _{i}\)) in explaining the persistence of innovation. If unobserved heterogeneity is indeed important, ignoring it leads to over- (under-) estimation of the degree of negative (positive) duration dependence (Lancaster, 1979; Kiefer, 1988). This is a selection effect: firms with high values of \(\nu\) stop R&D sooner, ceteris paribus, so the surviving population is increasingly composed of low-hazard firms and the aggregate hazard appears to decline with duration. Furthermore, the proportionate effect \(\beta _k\) of a given regressor \(x_k\) on the hazard rate is no longer constant and independent of survival time, and \(\beta _k\) is biased (Lancaster, 1979). Heterogeneity enters the underlying continuous hazard function multiplicatively:
\[\lambda _{i}(t \mid x_{it}, \nu _{i}) = \nu _{i}\,\lambda _{0}(t)\exp (x_{it}^{\prime }\beta )\]

hence, the Cloglog model becomes:
\[\ln \Bigl [-\ln \bigl (1-\lambda _{i}(t \mid x_{it}; \beta , u_{i})\bigr )\Bigr ] = x_{it}^{\prime }\beta + \gamma (t) + u_{i} \tag{12}\]

where \(u_i \equiv \ln \nu _i\) and \(\nu _{i}\) is a random variable taking positive values, with mean normalised to one and finite variance \(\sigma ^2\). In multi-spell data we do not require \(\nu _{i}\) to be distributed independently of \(x_{it}\) (Honoré, 1993).Footnote 20
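The selection effect can be illustrated without any estimation. In the sketch below, two hypothetical firm types each have a constant yearly hazard, so no firm exhibits duration dependence, yet the population hazard falls with duration because the high-hazard type is depleted first. The type hazards and weights are invented for illustration.

```python
def aggregate_hazard(hazards, weights, periods):
    """Population hazard for a mixture of firm types with constant yearly hazards.

    Each type keeps the same hazard at every duration (no true duration dependence),
    but high-hazard types exit first, so the aggregate hazard declines.
    """
    path = []
    for t in range(periods):
        surviving = [w * (1.0 - h) ** t for h, w in zip(hazards, weights)]
        path.append(sum(s * h for s, h in zip(surviving, hazards)) / sum(surviving))
    return path

# Two hypothetical types: persistent (hazard 0.1) and occasional (hazard 0.5) R&D performers.
print([round(h, 3) for h in aggregate_hazard([0.1, 0.5], [0.5, 0.5], 5)])
```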

Estimation of model (12) requires an expression for the density function that does not condition on the unobserved effects. It is therefore convenient to specify a distribution for \(\nu\) and “integrate out” the unobserved effect (i.e., to work with \(\lambda _i(t | x_{it}; \beta , \sigma ^2)\) rather than \(\lambda _i(t | x_{it}; \beta , \nu _{i})\)). We compare two assumptions: normally distributed heterogeneity \(u_i = \ln \nu _i\), and Gamma-distributed frailty \(\nu _i\) (Meyer, 1990). The former requires numerical quadrature, whereas the latter yields a closed-form expression for the hazard function with frailty; in both cases heterogeneity is summarised by a few key parameters.
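The closed form in the Gamma case follows from the Laplace transform of the Gamma distribution. Conditional on \(\nu\), a cloglog spell survives to t with probability \(\exp(-\nu H)\), where \(H = \sum_{\tau<t}\exp(x'\beta + \gamma(\tau))\). Averaging over \(\nu\) with mean 1 and variance \(\sigma^2\) gives \((1+\sigma^2 H)^{-1/\sigma^2}\). The sketch checks this against a Monte Carlo average; the parameter values are hypothetical.

```python
import math
import random

def gamma_frailty_survival(xb, gammas, sigma2):
    """Population survivor function after integrating out nu ~ Gamma(mean 1, var sigma2).

    gammas: baseline terms gamma(0), ..., gamma(t-1) for the intervals already survived.
    """
    H = sum(math.exp(xb + g) for g in gammas)
    return (1.0 + sigma2 * H) ** (-1.0 / sigma2)

def mc_survival(xb, gammas, sigma2, draws=100_000, seed=0):
    """Monte Carlo check: average exp(-nu * H) over Gamma draws with mean 1, variance sigma2."""
    rng = random.Random(seed)
    H = sum(math.exp(xb + g) for g in gammas)
    shape, scale = 1.0 / sigma2, sigma2
    return sum(math.exp(-rng.gammavariate(shape, scale) * H) for _ in range(draws)) / draws

args = (-1.0, [0.0, 0.1, 0.2], 0.5)  # hypothetical x'beta, gamma(t) path and sigma^2
print(gamma_frailty_survival(*args), mc_survival(*args))
```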

The Cloglog framework also allows unobserved heterogeneity to be incorporated non-parametrically by assuming that there are several types of firm spell (“mass points” in Heckman and Singer, 1984b). Each spell then has probabilities associated with the different mass points, which allow for different intercepts of the hazard function. For a model with \(n = 1, \dots , M\) mass points \(m_n\) with probabilities \(p_{m_n}\), the hazard in Eq. (12) becomes:
\[\ln \Bigl [-\ln \bigl (1-\lambda _{i}(t \mid x_{it}; \beta , m_{n})\bigr )\Bigr ] = x_{it}^{\prime }\beta + \gamma (t) + m_{n}, \qquad \Pr (m = m_{n}) = p_{m_n}\]

Normalising the first mass point to zero, the intercept for type-1 firms is \(\beta _0\) (corresponding to the first element of \(x_{it}^{\prime }\), which is \(\equiv 1\)), that for type-2 firms is \(\beta _0+m_2\), and so on. The log-likelihood is \(\ln L = \sum _{i=1}^{N}\ln \Bigl [\sum _{n=1}^{M} p_{m_n} L_{in}\Bigr ]\), where \(\ln L_{in} = d_i \ln \left[ 1-S_n(t_i|x_{it}; \beta , m_n)\right] + \ln \left[ S_n(t_i|x_{it}; \beta , m_n)\right]\).
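In a Heckman-Singer mixture, each firm's type-specific likelihoods \(L_{in}\) are averaged with weights \(p_{m_n}\) before taking logs; working with log-likelihoods, this is a log-sum-exp, which avoids underflow for long spells. A minimal sketch with hypothetical helper names; optimisation over \(\beta\), \(m_n\) and \(p_{m_n}\) is not shown.

```python
import math

def logsumexp(values):
    """Numerically stable ln(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def type_hazard(xb, gamma_t, mass_point):
    """Cloglog hazard for one firm type: the intercept is shifted by m_n (m_1 = 0)."""
    return 1.0 - math.exp(-math.exp(xb + gamma_t + mass_point))

def mass_point_loglik(firm_logliks, probs):
    """Sample log-likelihood sum_i ln(sum_n p_n * L_in).

    firm_logliks[i][n] holds ln L_in, firm i's log-likelihood if it were of type n;
    probs[n] holds p_{m_n} (positive, summing to one).
    """
    log_p = [math.log(p) for p in probs]
    return sum(logsumexp([lp + ll for lp, ll in zip(log_p, row)]) for row in firm_logliks)

# Hypothetical two-type example: hazards for m = (0, 0.8) at x'beta + gamma(t) = -1.
print([type_hazard(-1.0, 0.0, m) for m in (0.0, 0.8)])
print(mass_point_loglik([[math.log(0.2), math.log(0.6)]], [0.5, 0.5]))  # equals ln(0.4)
```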

Estimated results for the Cloglog models with normally distributed, Gamma-distributed and mass-points frailty are in Appendix A1.4, Tables 13, 15 and 16. The PH assumption is not rejected (Table 14).

3.5.3 The probit model

Discrete-time survival analysis often employs logit models as an alternative to semi-parametric approaches such as the Cloglog, in order to relax the PH assumption. However, because the event considered (interruption of innovative effort) occurs quite frequently and many spells last longer than one year, the logit assumption of event independence would be invalid, leading to biased estimates (Banbury and Mitchell, 1995). Hence, we prefer a random effects probit model (Antonelli et al., 2013; Hecker and Ganter, 2014; Lhuillery, 2014; Peters, 2009; Triguero and Córcoles, 2013; Triguero et al., 2014).

The expressions in the likelihood function are given by

where \(\Phi\) denotes the cumulative distribution function of the standard normal distribution. We add to the model specification the individual averages of the time-varying explanatory variables, so as to estimate the Chamberlain (1980) correlated random effects probit model in the Mundlak (1978) version. Hence, unobserved heterogeneity \(\nu _i\) is assumed to be normally distributed with mean \(\bar{x}^{\prime }_i\pi\), and \(\sigma ^2_{\upsilon }\) is the variance of the error in \(\nu _i = \bar{x}^{\prime }_i\pi +\upsilon _i\).
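A minimal sketch of the two ingredients: the Mundlak within-firm averages, and one firm's likelihood contribution in a random effects probit, with the normal effect \(\upsilon_i\) integrated out by Gauss-Hermite quadrature. The function names are hypothetical; `index` is assumed to already contain \(x_{it}^{\prime}\beta + \bar{x}_i^{\prime}\pi\), and parameter estimation is not shown.

```python
import math
import numpy as np

def norm_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def mundlak_means(x_rows):
    """Within-firm averages of the time-varying regressors (the Mundlak terms x_bar_i)."""
    n = len(x_rows)
    return [sum(col) / n for col in zip(*x_rows)]

def re_probit_firm_loglik(y, index, sigma_u, n_nodes=20):
    """Log-likelihood contribution of one firm in a random effects probit.

    y: 0/1 outcomes by period (1 = R&D interrupted); index: x_it'beta + x_bar_i'pi by period.
    upsilon ~ N(0, sigma_u^2) is integrated out by Gauss-Hermite quadrature.
    """
    nodes, weights = np.polynomial.hermite.hermgauss(n_nodes)
    total = 0.0
    for z, w in zip(nodes, weights):
        upsilon = math.sqrt(2.0) * sigma_u * z  # change of variables for N(0, sigma_u^2)
        prob = 1.0
        for y_t, idx_t in zip(y, index):
            prob *= norm_cdf((2 * y_t - 1) * (idx_t + upsilon))
        total += w * prob
    return math.log(total / math.sqrt(math.pi))

# Hypothetical firm observed for three years, interrupting R&D in the third.
print(mundlak_means([[1.0, 2.0], [3.0, 4.0]]))
print(re_probit_firm_loglik([0, 0, 1], [-0.5, -0.2, 0.1], sigma_u=1.0))
```

Setting `sigma_u` to zero collapses the integral to the pooled probit likelihood, a convenient sanity check.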

Estimated results are in the Appendix A1.4, Table 17.

3.5.4 Discussion on endogeneity

The hazard rate framework allows an investigation of the role of market structure and other controls on R&D effort that is less affected by endogeneity. Companies cannot be certain that their investments will succeed and bring, for example, greater market power, which is the channel that would generate simultaneity. We are not investigating long-run equilibria and perfect markets, but rather situations characterised by imperfections, such as sunk costs and uncertainty with its “wait and see” effect, which create rigidities in implementing R&D investments immediately and in achieving success. In other words, we estimate the duration of R&D expenditure, an “ex-ante” input indicator, which makes the endogeneity problem less relevant than when considering “ex-post” innovative output indicators.Footnote 21

To check this assumption, we adopt two different identification strategies, which nonetheless lead to robust results. Both strategies are based on the vector partition \(x^{\prime }_{it}=(x^\prime _{0i}, x^\prime _{1it}, x^\prime _{2it})\). The term \(x^\prime _{0i}\) contains time-invariant measurable firm- and industry-level characteristics which, together with the duration model that accounts for unobserved individual effects, Eq. (12), and the Mundlak (1978) approach, allow us to control for the endogeneity due to the omission of stable drivers of innovation. The term \(x^\prime _{1it}\) contains exogenous time-varying covariates such as firm-specific shocks and macroeconomic uncertainty (common to all companies). The term \(x^\prime _{2it}\) contains potentially non-exogenous time-varying explanatory variables such as cash flow, debt, size, PCM and share, export revenues, and the accumulated stock of knowledge.Footnote 22 Firms that continuously invest in R&D could obtain higher profits and greater cash flow to finance innovative expenses internally, higher demand associated with decreasing prices (e.g., due to process innovation) and/or increasing market share (e.g., due to product innovation), and a higher stock of cumulated R&D. However, we can reasonably assume that there are lags between an initial R&D effort and its uncertain and delayed outcomes. It should also be emphasised that, unlike the PCM, which is also affected by measurement and collinearity problems, the elasticity of demand has proven quite stable over our sample period and can therefore be included in the \(x^\prime _{0i}\) term: consumer preferences are not directly influenced by companies, even if they spend heavily on advertising (see Sect. 3.3).

Our first identification strategy is supported by Nickell (1996), who uses lagged market power, and by Hall et al. (1999), who show through bivariate causality regressions that sales growth clearly led R&D growth in all the countries studied. Moreover, although innovation and standard measures of market power could be simultaneously determined, the evidence from the literature testing for endogeneity is ambiguous (Gilbert, 2006). Hence, we use the vector \(x^{\prime }_{it}=(x^\prime _{0i}, x^\prime _{1it}, x^\prime _{2it-1})\) and assume that the lagged values \(x^\prime _{2it-1}\), observed before the start of the interval, do not depend on the occurrence of exit in \([t, t+1)\) or in later intervals. This corresponds to the weak exogeneity assumption in dynamic regression models, or “predictability” (Ridder and Tunali, 1999), which implies that the observable values of the covariates entering the hazard at time t are determined just before t.
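Lagging within firms should be done by (firm, year) lookup rather than by shifting rows, so that one firm's last observation is never used as the next firm's lag and missing years stay missing. A minimal sketch with a hypothetical panel layout (one dict per firm-year):

```python
def lag_within_firm(panel, var):
    """Return {(firm, year): value of var in year - 1 for the same firm, or None}."""
    lookup = {(row["firm"], row["year"]): row[var] for row in panel}
    return {(row["firm"], row["year"]): lookup.get((row["firm"], row["year"] - 1))
            for row in panel}

# Hypothetical cash-flow panel for two firms.
panel = [{"firm": "A", "year": 1997, "cf": 1.0},
         {"firm": "A", "year": 1998, "cf": 2.0},
         {"firm": "B", "year": 1998, "cf": 5.0}]
print(lag_within_firm(panel, "cf"))
```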

The second identification strategy is based on two-stage regressions (Chan, 2016). In the first stage, we regress the potentially endogenous explanatory variables \(x^\prime _{2it}\) on the included exogenous variables and on the scores of appropriate lags of the transformed endogenous variables (the instruments, \(s^{\prime }_{it}\)): \(x^\prime _{2it}=x^\prime _{0i}\gamma _{0}+x^\prime _{1it}\gamma _{1}+s^{\prime }_{it}\gamma _{2}+\epsilon _{it}\). In the second stage, we use the reduced-form residuals, \(\hat{\epsilon }_{it}=x^\prime _{2it}-x^\prime _{0i}\hat{\gamma }_{0}-x^\prime _{1it}\hat{\gamma }_{1}-s^{\prime }_{it}\hat{\gamma }_{2}\), as additional variables in the estimation of the duration models.Footnote 23

To avoid over-fitting due to the use of many instruments, we use the procedure of Bontempi and Mammi (2015) to extract the components that account for 90% of the variability of the instrument set. Let \(\textbf{z}\) be the p-column GMM-Lev-style instrument matrix (Arellano and Bover, 1995), composed of lags 14 to 24, in the pre-estimation period 1984–1995, of \(x^\prime _{2it}\) transformed into first differences to avoid correlation with unobserved heterogeneity. We extract the p ordered eigenvalues \(\lambda _1 \ge \lambda _2 \ge \dots \ge \lambda _p\ge 0\) of the covariance/correlation matrix of \(\textbf{z}\) and find the corresponding eigenvectors \(e_1, e_2, \dots , e_p\). The instruments are the principal component scores \(\textbf{s}_w = \textbf{z} e_w \text { for } w = 1, 2, \dots , p\). Writing \(\textbf{z}=[z_1 \dots z_r \dots z_p]\), with \(z_r\) the \(r^{th}\) column of the instrument matrix, the score \(s_w\) corresponding to the \(w^{th}\) component is \(s_w = e_{w1}z_1+ \dots + e_{wr}z_r + \dots +e_{wp}z_p\), where \(e_{wr}\) is the \(r^{th}\) element of the eigenvector \(e_w\). The first stage uses \(w=5\), i.e. the first five components, extracted from the variable-specific lags for cash flow, PCM and share, and from the lags of all variables together for R&D stock, debt, export earnings and size.Footnote 24 Identification based on lagged explanatory variables resembles that proposed by Macher et al. (2021), who assume that R&D expenditures do not spill over from one period to future periods. Since we use lags computed in the pre-sample period 1984–1995, we can suppose that positive temporal spillovers, if present, decrease over time and have disappeared once long lags (14 to 24) are considered, thus not invalidating our instruments.
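The two steps of this strategy (principal-component scores of the instrument matrix, then first-stage residuals used as control functions) can be sketched with NumPy. This is an illustration on simulated data, not the Bontempi and Mammi (2015) implementation: columns are centred (and standardised, i.e. the correlation matrix is used) before computing \(s_w = z e_w\), which is an assumption, and the construction of the lag-14-to-24 instrument matrix is not shown.

```python
import numpy as np

def pca_scores(z, n_components=None, var_share=0.90, standardize=True):
    """Principal-component scores s_w = z e_w of an instrument matrix z (T x p).

    If n_components is None, keep the smallest number of components whose
    eigenvalues account for at least var_share of total variance.
    """
    z = np.asarray(z, dtype=float)
    zc = z - z.mean(axis=0)
    if standardize:
        zc = zc / zc.std(axis=0, ddof=1)
    eigval, eigvec = np.linalg.eigh(np.cov(zc, rowvar=False))  # ascending order
    order = np.argsort(eigval)[::-1]                              # largest first
    eigval, eigvec = eigval[order], eigvec[:, order]
    if n_components is None:
        explained = np.cumsum(eigval) / eigval.sum()
        n_components = int(np.searchsorted(explained, var_share)) + 1
    return zc @ eigvec[:, :n_components], eigval

def first_stage_residuals(x2, exog, scores):
    """OLS of a possibly endogenous regressor on a constant, the included exogenous
    variables and the instrument scores; the residuals enter the duration model."""
    Z = np.column_stack([np.ones(len(x2)), exog, scores])
    coef, *_ = np.linalg.lstsq(Z, x2, rcond=None)
    return x2 - Z @ coef

# Simulated data (hypothetical): 200 observations, 8 instrument columns.
rng = np.random.default_rng(0)
z = rng.normal(size=(200, 8))
scores, eigval = pca_scores(z, n_components=5)
exog = rng.normal(size=(200, 2))
x2 = exog @ np.array([0.5, -0.2]) + scores[:, 0] + rng.normal(size=200)
resid = first_stage_residuals(x2, exog, scores)
print(scores.shape, resid[:3])
```

Because the scores are mutually uncorrelated, keeping only the leading components limits the instrument count without discarding most of the information in the long lags.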

4 Results

In Table 5 we compare, across columns, the estimates of our duration models using the same specification, which is our preferred one. It includes the elasticity of demand \(-\eta\), size, the EPU index of macroeconomic uncertainty, firm-specific uncertainty (the discrepancy between actual and planned investments), cumulativeness and left-censoring, and the set of control variables. We consider the fourth column of Table 5, the Cloglog model with “mass points”, the most reliable empirical specification for our data; the other estimates are reported as robustness checks.Footnote 25 The results are discussed along the following points.

Persistence of R&D. The true persistence of the innovation process, linked to cumulativeness, irreversibility and increasing returns on innovation investment, and to learning-by-doing and learning-to-learn effects, is jointly captured by the logarithm of the R&D stock and the variables Spell Number and Time. There is evidence of persistence in R&D investments: the coefficient on the lagged stock of accumulated knowledge is negative and significant. The coefficient on Time is also negative (significant in the Cox and probit models), suggesting that the longer a firm has continuously invested in R&D, the more likely it is to continue investing (negative duration dependence). This is consistent with our expectations and confirms the results of Acs et al. (2008), Hecker and Ganter (2014), Máñez et al. (2015) and Triguero et al. (2014), who found significant R&D temporal spillovers in Cox/probit models. The coefficient on the number of previous spells is significant (and negative) in the continuous-time Cox model and in the discrete log-logistic model under the assumption of normally distributed frailty: firms that have shown a discontinuous attitude towards innovation, but have had several innovation spells, are less likely to stop investing again. In contrast, the frailty models take into account the possibility of unobserved differences between firms, e.g. that some companies have high hazards (many spells) while others have low hazards (few spells). Controlling for unobserved heterogeneity eliminates what might otherwise be interpreted as a causal effect of each spell on the hazard of subsequent R&D spells. When a Heckman-type selection correction is added to check that the results are unbiased, only the lagged accumulated knowledge stock becomes statistically non-significant, while the other parameters are robust.
The inverse Mills ratio correcting for selection bias appears to be collinear with the R&D stock: an expected outcome, but an interesting one to see confirmed in the data.
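For reference, the inverse Mills ratio added in a Heckman-type correction is \(\phi(z)/\Phi(z)\), evaluated at each observation's fitted index from a first-stage selection probit (the first stage itself is not shown). A minimal sketch:

```python
import math

def inverse_mills_ratio(z):
    """phi(z) / Phi(z) for the standard normal, evaluated at a probit index z."""
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return pdf / cdf

# The ratio falls as the selection index rises (selection becomes near-certain).
print([round(inverse_mills_ratio(z), 4) for z in (-1.0, 0.0, 1.0, 2.0)])
```

Because firms with a large accumulated R&D stock are almost certainly selected into R&D, their fitted index is high and their ratio small, which is consistent with the collinearity noted above.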

Left-Censoring. The dummy variable Left Cens. is negatively associated with the probability that firms discontinue their innovative activity in all the duration models we estimated. As discussed in Sect. 3.2, this precaution mitigates the estimation bias due to left-censoring. The negative and significant coefficient confirms the preliminary hypothesis that left-censored spells are likely to be the longest; excluding such spells would therefore have resulted in a severe overestimation of the hazard function.

Market Power and size. The parameter on PCM, the standard measure of market power, is negative and significant only in our preferred model, the Cloglog with frailty captured by mass points, when instrumented with the two-stage regressions. Its value is also in line with that of the elasticity \(-\eta\). However, as outlined in Sect. 3.3, the price–cost margin suffers from multicollinearity with cash flow and size, two variables adversely affected by the inclusion of PCM in our models. The industry-level concentration index, share, is also negative and significant in the Cloglog model with mass-points frailty, although it suffers from a correlation problem with firm size.Footnote 26 Our measure of the implied demand elasticity, \(-\eta\), always shows negative coefficients, which are significant in our preferred duration models. This corroborates the Schumpeterian hypothesis of a positive relationship between market power and innovation, as well as the “success-breeds-success” hypothesis explaining firms' innovation duration. Another advantage of the elasticity \(-\eta\) is that it is not correlated with financing or firm size. Size itself has negative and significant coefficients: consistent with the observation by Hall (2011), we argue that larger firms can diversify their activities and are therefore more likely to persist in R&D investment.

Financing. We find that the firm’s cash flow has a negative and significant effect on the probability of discontinuing R&D investment, supporting the hypothesis that firms with internal funds are better able to sustain the expenses connected with research and development activities. Overall, the negative sign of cash flow (CF) and the positive one for debt (D) tend to signal the presence of liquidity constraints due to agency costs and asymmetric information particularly relevant in the R&D case (Bontempi, 2016).

Technological Opportunities. In line with the findings of Coscollá-Girona et al. (2015), Crépon et al. (1998), Duguet and Monjon (2004), Hecker and Ganter (2014), Huang (2008), Máñez et al. (2015), Peters (2009), Triguero and Córcoles (2013) and Triguero et al. (2014), technological opportunities, proxied by the dummy variables HT, MHT and MLT, have positive coefficients for the medium-high and medium-low technology classes. The dummy HT is negative, although not significant, in the Cloglog models. In general, sectoral dummies, like other individual characteristics, are relevant only in the Cox and probit models. The non-significance of HT may reflect the fact that high-technology firms make up only about 5% of the working sample; moreover, Italian firms tend to engage in R&D even in sectors that can be defined as low-R&D, an aspect investigated further at a more disaggregated level in Sect. 4.1.

Planned Investments. Although seldom significant, the variables considering firms’ planned investment in physical capital (machinery, IM) and software (IS) provide some information on the relationship between the different types of investment. While IM tends to show a negative coefficient, suggesting a kind of complementary effect with R&D effort, the coefficient of software investment is either not statistically significant or is positive, suggesting substitutability between innovative effort and IT acquisition. The difference between planned and actual investments in software, \(\Delta IS\), tends to have a positive and statistically significant parameter in the specifications containing PCM and share. This suggests that the probability of maintaining an R&D effort is negatively affected by unexpected deviations from investment plans. This effect of firm-specific uncertainty is in line with the result obtained for the variable EPU, which controls for uncertainty at the macroeconomic level (discussed below).

International Competition. Export intensity, Exp, controls for the firm’s exposure to international competition, thus adding information to that captured by the elasticity (see Table 9 in the Appendix on the location of major competitors). The coefficient is negative and generally significant.Footnote 27 The literature (Antonelli et al., 2012; Coscollá-Girona et al., 2015; Hecker and Ganter, 2014; Máñez et al., 2015; Triguero and Córcoles, 2013; Peters, 2009; Masso and Vahter, 2008) finds a strong positive relationship between exports and R&D. Lööf et al. (2015) point out that the openness of business sectors increases the likelihood that a firm accesses more information, exploits spillovers and thus increases its knowledge stock (the “learning-by-exporting” effect).

Uncertainty. All models were estimated with time-dummies as an alternative to the uncertainty index, EPU (we present the two cases for the specification with elasticity in Appendix A1.4). The coefficients of the time effects are jointly significant and negative in all specifications, capturing the 2008–2009 crisis. The same effect is more efficiently estimated by the EPU index, which also upholds the sunk cost theory and strengthens the dynamic link between current and past R&D activity.

Other Measurable Individual Characteristics. Individual characteristics such as age and ownership type (family and group) turn out to be insignificant in most of the specifications considered, apart from the Cox and probit models. If significant, group membership and the effect of ownership, captured by the dummies Group and Family, have a positive effect on the hazard rate. The dummies for the geographical area (Centre, South), taking Northern Italy as reference, represent the differences in institutional quality at the regional level. The coefficients are positive and significant, and the estimate of South is the highest.

Comparison of the effects. The estimated coefficients behave like non-standardised regression coefficients, as they depend on the metric of each independent variable (unless it is a dummy variable). We therefore rely on exponentiated coefficients (always positive), which can be interpreted as hazard ratios. Computing these for the Cloglog model with “mass points” in Table 5, the hazard ratio for the dummy South indicates that firms operating in Southern Italy have a 116% higher innovation hazard than firms located in Northern Italy. Debt increases the hazard by 193%, while internal funds, learning-by-exporting and accumulated knowledge reduce it by 93%, 49% and 8%, respectively. The hazard for companies with inelastic demand (greater market power) is 45% lower than for companies with highly elastic demand. The hazard for large firms is 19% lower than for small firms.
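The percentages above follow from the usual conversion of a coefficient \(\beta\) into a hazard ratio \(\exp(\beta)\) and a percentage change \(100(\exp(\beta)-1)\). The sketch below runs the conversion in both directions. The "implied" coefficients are back-of-the-envelope reconstructions from the rounded percentages reported in the text, assuming each percentage equals \(100(\exp(\beta)-1)\) for the relevant contrast; they are not the Table 5 estimates themselves, and the dictionary labels are our own shorthand.

```python
import math


def hazard_ratio(beta: float) -> float:
    """Exponentiated coefficient, read as a hazard ratio."""
    return math.exp(beta)


def percent_change(beta: float) -> float:
    """Percentage change in the hazard implied by beta: 100 * (exp(beta) - 1)."""
    return 100.0 * (hazard_ratio(beta) - 1.0)


def implied_beta(pct: float) -> float:
    """Coefficient consistent with a reported percentage change in the hazard."""
    return math.log(1.0 + pct / 100.0)


# Rounded percentages as reported in the text (Cloglog model with mass points).
reported = {
    "South": 116.0,
    "Debt": 193.0,
    "Internal funds": -93.0,
    "Learning-by-exporting": -49.0,
    "Accumulated knowledge": -8.0,
    "Inelastic demand": -45.0,
    "Large firms": -19.0,
}

for name, pct in reported.items():
    beta = implied_beta(pct)
    print(f"{name:22s} HR = {hazard_ratio(beta):.2f}  implied beta = {beta:+.3f}")
```

A hazard ratio above 1 (South, Debt) raises the probability of discontinuing R&D; a ratio below 1 (internal funds, market power, size) favours persistence.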

4.1 Industry structure and aggregate investment

As innovation regimes vary dramatically across industries and there is significant firm-level heterogeneity within industries, one criticism of previous studies of the relationship between market power and industry R&D is that they postulate an inverted-U pattern on the basis of aggregate data alone (Aghion et al., 2005). Before turning to the results, we briefly describe the empirical context. Estimating heterogeneity by sector requires a long time span, so we exploit the unbalanced panel of 3,971 firms over the 1984–2012 period, covering twenty-two groups of four-digit SIC codes (Aghion et al. (2005) used 311 firms covering seventeen industries over the 1973–1994 period). We have twenty manufacturing industries, one Mining/electrical/gas/water industry (prod. & distr.) and one service industry (Communication, Software, R&D). The resulting industry-level panel is unbalanced, with 588 industry-year observations (354 in Aghion et al. (2005)).Footnote 28

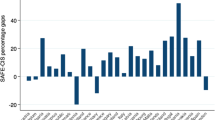

When exploring industry-level data, we revert to the standard IO method in order to remain consistent with Aghion et al. (2005), who use patent activity but state that their results are robust to the use of R&D expenditure: innovation input is measured by the total amount of R&D investment per employee within each sector j in each year t (the amount may be zero). Concentration is measured by \(share_{jt}\) as in Delbono and Lambertini (2022).Footnote 29 We estimate a quadratic function in which total R&D, at the aggregate level and by sector, is the dependent variable, and the share of R&D-performing firms within each sector and its square are the explanatory variables. The estimated results for the whole panel are displayed in the bottom-left graph of Fig. 1. These results are robust to alternative estimation methods (GMM) and model specifications (inclusion of industry and year effects, and dynamics).
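The quadratic specification, \(R\&D = a + b\,share + c\,share^2\), can be sketched as a pooled OLS fit whose turning point lies at \(share^* = -b/(2c)\): a peak when \(c<0\) (inverted U), a trough when \(c>0\) (U shape). This is a minimal illustration under stated assumptions. The data are synthetic (an exact inverted U peaking at 0.4), and the sketch uses plain least squares rather than the GMM and fixed-effects variants mentioned above.

```python
import numpy as np


def fit_quadratic(share: np.ndarray, rd: np.ndarray) -> tuple[float, float, float]:
    """OLS fit of rd = a + b*share + c*share**2; returns (a, b, c)."""
    X = np.column_stack([np.ones_like(share), share, share**2])
    coef, *_ = np.linalg.lstsq(X, rd, rcond=None)
    a, b, c = coef
    return float(a), float(b), float(c)


def turning_point(b: float, c: float) -> float:
    """Share at which the fitted curve peaks (c < 0) or bottoms out (c > 0)."""
    if c == 0:
        raise ValueError("linear fit: no turning point")
    return -b / (2.0 * c)


# Synthetic illustration only: an exact inverted U with its peak at share = 0.4.
share = np.linspace(0.1, 0.8, 8)
rd = 1.0 + 4.0 * share - 5.0 * share**2

a, b, c = fit_quadratic(share, rd)
print(a, b, c, turning_point(b, c))
```

The slope of the fitted curve, \(b + 2c\,share\), shrinks as the share rises when \(c<0\). This is the diminishing marginal effect of competition described for the aggregate panel.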

The inverted U-shaped relationship of Aghion et al. (2005) is confirmed by the Italian panel data at the aggregate level. At low levels of competition, the results indicate that competition stimulates R&D, with more competitive industries investing more in R&D. An increase in competition raises R&D more when competition is relatively low than when it is already high. Beyond the peak, R&D is expected to decrease as industries become increasingly competitive.

However, patterns are heterogeneous at the disaggregated industry level. Among the twenty-two industries, we have selected the most emblematic cases, visualised in Fig. 1 and estimated in Table 6. The relationship between R&D and competition differs across sectors, ranging from inverted U-shaped to U-shaped and passing through monotone relationships. For example, the Aerospace, Computer, Pharmaceutical, Shipbuilding, Petroleum and most Low Tech. industries such as Textile (sectors 1, 2, 3, 5, 6, 8) have an inverted U-shaped relationship; the relationship is increasing (Arrovian) in Ferrous and Non-ferrous metals (sectors 12, 13), U-shaped in Fabr. metal, Non elec. machinery, Low Tech. Food/Tobacco and Communication/Software/R&D services (sectors 16, 17, 19, 21), and decreasing (Schumpeterian) in High Tech. Elec. machinery (sector 18). Delbono and Lambertini (2022) assume that high R&D productivity is characterised by an Arrovian pattern for drastic innovations and an inverted U-shaped pattern for small innovations, while the Schumpeterian pattern characterises low R&D productivity.Footnote 30 The estimated share corresponding to maximum R&D is lower than the average effective share in Aerospace and Pharmaceutical, higher in Shipbuilding and Petroleum, and in line with it in Computer and Textile. The estimated share corresponding to minimum R&D is higher than the average effective share in Non-metal min., Fabr. metal and Non elec. machinery, and lower in Communication/Software/R&D services.
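The shape labels and the comparison of turning points with average shares can be made mechanical once the quadratic coefficients are known. The sketch below assumes a rule that is not necessarily the one behind Table 6, which also weighs statistical significance. Since the slope \(b + 2c\,s\) is linear in \(s\), the signs of the slope at the two ends of the observed share range tell whether a turning point falls inside it. The Arrovian/Schumpeterian labels assume that the x-axis measures competition, as in Fig. 1, and the tolerance `tol` for "in line" is a hypothetical choice.

```python
def classify_pattern(b: float, c: float, share_min: float, share_max: float) -> str:
    """Label the fitted curve rd = a + b*s + c*s**2 over [share_min, share_max].

    The slope b + 2*c*s is linear in s, so its signs at the two ends of the
    observed range tell whether a turning point lies inside that range.
    """
    slope_lo = b + 2.0 * c * share_min
    slope_hi = b + 2.0 * c * share_max
    if slope_lo > 0 and slope_hi < 0:
        return "inverted U"
    if slope_lo < 0 and slope_hi > 0:
        return "U-shaped"
    if slope_lo == 0 and slope_hi == 0:
        return "flat"
    if slope_lo >= 0 and slope_hi >= 0:
        return "increasing (Arrovian)"
    return "decreasing (Schumpeterian)"


def turning_point_vs_mean(b: float, c: float, mean_share: float, tol: float = 0.05) -> str:
    """Compare the turning point -b/(2c) with the average observed share.

    `tol` is a hypothetical band within which the two are called 'in line'.
    """
    s_star = -b / (2.0 * c)
    if abs(s_star - mean_share) <= tol:
        return "in line with the mean"
    return "below the mean" if s_star < mean_share else "above the mean"


# Hypothetical coefficients: inverted U peaking at 0.4, shares observed in [0.1, 0.8].
print(classify_pattern(4.0, -5.0, 0.1, 0.8))    # inverted U
print(turning_point_vs_mean(4.0, -5.0, 0.5))    # below the mean
```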

Fig. 1 Comparison between industries. The figure plots the estimated relationship between competition, on the x-axis, and R&D expenditure, on the y-axis. Each point represents an industry-year in the total plot and a year in the by-industry plots. The overlaid estimated quadratic curves are reported in Table 6 for each industry. Industries: 1-Aerospace (Fabr. transport eq., HT); 2-Computer (Fabr. elect. eq., HT); 3-Pharma (Chemicals, HT); 5-Shipbuilding (Fabr. transport eq., MLT); 6-Petroleum refining (MLT); 8-Textile/Clothing/Leather (LT); 9-Scient. instr. (Fabr. elect. eq., MHT); 11-Chemicals (MHT); 12-Fer. metal (Primary metals, MLT); 13-Non-fer. metal (Primary metals, MLT); 15-Non-met. min. (Minerals prod./glass/cement, MLT); 16-Fabr. metal (Fabr. metal/machinery/eq., MLT); 17-Non-el. mach. (Fabr. metal/machinery/eq., MHT); 18-Elec. machinery & Electronics (Fabr. elect. eq., HT/MHT); 19-Food/Tobacco (LT); 21-Communic./Software/R&D (HT)

The above findings show that the same non-monotone pattern emerges in very heterogeneous industries; therefore, any merger proposal could, and possibly should, be assessed ex ante through analogous exercises to ascertain the nature and shape of aggregate innovation efforts prior to the merger. A similar argument holds even if the pattern is monotone. More explicitly, suppose that Schumpeter is right. A horizontal merger should then be allowed if technical progress prevails over any consideration of the price effect. Yet the merging firms should not dispose of any portion of their R&D facilities in order to invoke the efficiency effect, as happened in the Dow and DuPont merger proposal.

In this respect, a quick glance at Fig. 1 suggests a few additional considerations that help justify the analysis of aggregate R&D curves at the sectoral level. Take industries 1, 12 and 17, whose curves are, respectively, concave and single-peaked (1-Aerospace), concave and monotonically increasing (12-Primary metals) and U-shaped (17-Non-electrical machinery), and consider the impact of a horizontal merger in any of these sectors along the supply chain. For instance, suppose the merger takes place in the aerospace industry and happens to drive the industry towards the peak of its own R&D curve (a fact that, in itself, might intuitively be put forward in favour of the merger). The antitrust agency evaluating the proposal should then also investigate its bearing on technical progress in the two upstream industries mentioned above, and in particular whether any firm in those sectors belongs to the same industrial group as one of the firms filing the merger proposal. In other words, a merger at any stage of the supply chain may systematically exert relevant feedback effects along the entire supply chain, and this impact should be an integral part of the merger assessment. Similar considerations apply if one replaces industry 1 with 5 (Shipbuilding), industry 12 with 13 (Non-ferrous metals) and industry 17 with 18 (Electrical and electronic machinery).

All this is clearly connected with the long-standing discussion about the vertical externality affecting vertical relations along supply chains, and the related hold-up problem, pioneered by Williamson (1971) and later elaborated upon by Grout (1984), Rogerson (1992) and MacLeod and Malcomson (1993), among others (a compact reconstruction of this debate can be found in Lambertini (2018)). Accordingly, the bearing of the intensity of competition (or industry structure) on R&D, at both the firm and the industry level, should be reinterpreted theoretically as well as empirically, with the aim of providing a comprehensive assessment that enables antitrust agencies to take the most appropriate decisions on both vertical and horizontal mergers.

Last but not least, one should also consider that virtually all of the sectors whose R&D patterns appear in Fig. 1 operate at a global level, and their performance along many dimensions, including innovation, is shaped by international inter- and intra-industry trade as well as by horizontal and vertical relations. The latter include mergers, supply and retail contracts, and the organisation of R&D activities along value chains in several forms, such as research joint ventures (RJVs), possibly complemented by some degree of open innovation, whose relevance may in turn depend on legal and institutional settings across countries. All of this calls for a number of extensions of the foregoing analysis, one of which has recently been explored by Tomàs-Porres et al. (2023). Using a sample drawn from the Spanish Technological Innovation Panel, that study shows that persistence is reinforced by trade, and the more so the larger the portion of the global economy in which a firm is actively involved. This seemingly suggests that the challenges posed by comparatively unfamiliar markets (so to speak) are a powerful incentive, a theme that would require further data, for example on the existence of innovation alliances.

5 Conclusions