Abstract

Minkowski space is shown to be globally stable as a solution to the Einstein–Vlasov system in the case when all particles have zero mass. The proof proceeds by showing that the matter must be supported in the “wave zone”, and then proving a small data semi-global existence result for the characteristic initial value problem for the massless Einstein–Vlasov system in this region. This relies on weighted estimates for the solution which, for the Vlasov part, are obtained by introducing the Sasaki metric on the mass shell and estimating Jacobi fields with respect to this metric by geometric quantities on the spacetime. The stability of Minkowski space result for the vacuum Einstein equations is then appealed to for the remaining regions.

Introduction

It is of wide interest to understand the global dynamics of isolated self-gravitating systems in general relativity. Without symmetry assumptions, problems of this form present a great challenge even for systems arising from small data. In the vacuum, where no matter is present, the global properties of small data solutions were first understood in the monumental work of Christodoulou–Klainerman [10]. They show that Minkowski space is globally stable to small perturbations of initial data, i.e. the maximal development of an asymptotically flat initial data set for the vacuum Einstein equations which is sufficiently close to that of Minkowski space is geodesically complete, possesses a complete future null infinity and asymptotically approaches Minkowski space in every direction (see also Lindblad–Rodnianski [26], Bieri [2], and also Section 1.15 where these results, along with other related works, are discussed in more detail).

In the presence of matter, progress has been confined to models described by wave equations.Footnote 1 Here collisionless matter, described by the Einstein–Vlasov system, is considered. This is a model which has been widely studied in both the physics and mathematics communities; see the review paper of Andréasson [1] for a summary of mathematical work on the system. New mathematical difficulties are present since the governing equations for the matter are now transport equations, though in the case considered here, where the particles have zero mass and hence travel through spacetime along null curves, the decay properties of the function describing the matter are compatible in a nice way with those of the spacetime metric.

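The Einstein–Vlasov system takes the form

\[ \mathrm {Ric}_{\mu \nu } - \frac{1}{2} R g_{\mu \nu } = T_{\mu \nu }, \qquad T_{\mu \nu }(x) = \int _{P_x} f p_{\mu } p_{\nu }, \tag{1} \]

\[ X(f) = 0. \tag{2} \]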

The unknown is a Lorentzian manifold \((\mathcal {M},g)\) together with a particle density function \(f:P \rightarrow [0,\infty )\), defined on a subset \(P\subset T\mathcal {M}\) of the tangent bundle of \(\mathcal {M}\) called the mass shell. The function f(x, p) describes the density of the matter at \(x\in \mathcal {M}\) with velocity \(p\in P_x \subset T_x \mathcal {M}\). Here \((x^{\mu },p^{\mu })\) denote coordinates on the tangent bundle \(T\mathcal {M}\) with \(p^{\mu }\) conjugate to \(x^{\mu }\), so that (x, p) denotes the point \(p^{\mu } \partial _{x^{\mu }} \vert _x\) in \(T\mathcal {M}\). The Ricci curvature and scalar curvature of \((\mathcal {M},g)\) are denoted Ric, R respectively. The integral in (1) is taken with respect to a natural volume form, defined later in Section 2.2. The vector field \(X \in \Gamma (TT\mathcal {M})\) is the geodesic spray, i.e. the generator of the geodesic flow, of \((\mathcal {M},g)\). The Vlasov equation (2) therefore says that, given \((x,p) \in T\mathcal {M}\), if \(\gamma _{x,p}\) denotes the unique geodesic in \(\mathcal {M}\) such that \(\gamma _{x,p}(0) = x, \dot{\gamma }_{x,p}(0) = p\), then f is constant along \((\gamma _{x,p}(s), \dot{\gamma }_{x,p}(s))\), i.e. f is preserved by the geodesic flow of \((\mathcal {M},g)\). Equation (2) is therefore equivalent to

\[ f(\exp _s(x,p)) = f(x,p), \tag{3} \]

for all \(s \in \mathbb {R}\) such that the above expression is defined, where \(\exp _s : T\mathcal {M}\rightarrow T\mathcal {M}\) is the exponential map defined by \(\exp _s(x,p) = (\gamma _{x,p}(s), \dot{\gamma }_{x,p}(s))\).

In the case considered here, where the collisionless matter has zero mass, f is supported on the mass shell

\[ P := \{ (x,p) \in T\mathcal {M} \mid p \text { is null and future directed} \}, \]

a hypersurface in \(T\mathcal {M}\). In this case one sees, by taking the trace of (1), that the scalar curvature R must vanish for any solution of (1)–(2) and the Einstein equations reduce to

\[ \mathrm {Ric}_{\mu \nu } = T_{\mu \nu }. \tag{4} \]
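The vanishing of R can be checked in one line. The following is a sketch, assuming that (1) has the form \(\mathrm {Ric}_{\mu \nu } - \frac{1}{2} R g_{\mu \nu } = \kappa T_{\mu \nu }\) with \(T_{\mu \nu }(x) = \int _{P_x} f p_{\mu } p_{\nu }\) for some nonzero constant \(\kappa \) (the symbol \(\kappa \) is introduced here only for illustration; its value plays no role), and that \(\mathcal {M}\) has dimension 4.

```latex
\begin{align*}
g^{\mu\nu} T_{\mu\nu}(x)
  &= \int_{P_x} f \, g(p,p) = 0
  && \text{since } g(p,p) = 0 \text{ for } p \in P_x, \\
g^{\mu\nu} \Big( \mathrm{Ric}_{\mu\nu} - \tfrac{1}{2} R \, g_{\mu\nu} \Big)
  &= R - 2R = -R
  && \text{since } g^{\mu\nu} g_{\mu\nu} = 4 .
\end{align*}
```

Comparing the two lines gives \(R = 0\), so the trace term drops out of the Einstein equations and the Ricci tensor is proportional to the energy momentum tensor.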

The main result is the following.

Theorem 1.1

Given a smooth asymptotically flat initial data set for the massless Einstein–Vlasov system suitably close to that of Minkowski space such that the initial particle density function is compactly supported on the mass shell, the resulting maximal development is geodesically complete and possesses a complete future null infinity. Moreover the support of the matter is confined to the region between two outgoing null hypersurfaces, and each of the Ricci coefficients, curvature components and components of the energy momentum tensor with respect to a double null frame decay towards null infinity with quantitative rates.

The proof of Theorem 1.1, after appealing to the corresponding result for the vacuum Einstein equations, quickly reduces to a semi-global problem. This reduction is outlined below and the semi-global problem treated here is stated in Theorem 1.2.

Theorem 1.1 extends a result of Dafermos [12] which establishes the above under the additional restrictive assumption of spherical symmetry. Note also the result of Rein–Rendall [29] which treats the massive case in spherical symmetry, where all of the particles have mass \(m>0\) (i.e. f is supported on the set of future pointing timelike vectors p in \(T\mathcal {M}\) such that \(g(p,p) = -m^2\)). The main idea in [12] was to show, using a bootstrap argument, that, for sufficiently late times, the matter is supported away from the centre of spherical symmetry. By Birkhoff’s Theorem the centre is therefore locally isometric to Minkowski space at these late times and the extension principle of Dafermos–Rendall [14] (see also [15]) then guarantees that the spacetime will be geodesically complete.

In these broad terms, a similar strategy is adopted here. The absence of good quantities satisfying monotonicity properties which are available in spherical symmetry, however, makes the process of controlling the support of the matter, and proving the semi-global existence result for the region where it is supported, considerably more involved. The use of Birkhoff’s Theorem and the Dafermos–Rendall extension principle also has to be replaced by the much deeper result of the stability of Minkowski space for the vacuum Einstein equations. The use of the vacuum stability result, which is in fact appealed to in two separate places, is outlined below.

The Uncoupled Problem

It is useful to first recall what happens in the uncoupled problem of the Vlasov equation on a fixed Minkowski background. Let \(v = \frac{1}{2}(t+r), u = \frac{1}{2}(t-r)\) denote standard null coordinates on Minkowski space \(\mathbb {R}^{3+1}\) (these form a well defined coordinate system on the quotient manifold \(\mathbb {R}^{3+1}/SO(3)\) away from the centre of spherical symmetry \(\{r=0\}\)) and suppose f is a solution of the Vlasov equation (2) with respect to this fixed background arising from initial data with compact support in space. From the geometry of null geodesics in Minkowski space it is clear that the projection of the support of f to the spacetime is related to the projection of the initial support of f as depicted in the Penrose diagram in Figure 1.

In particular, for sufficiently late advanced time \(v_0\) the matter will be supported away from the centre \(\{r=0\}\), and there exists a point \(q \in \mathbb {R}^{3+1}/SO(3)\), lifting to a (round) 2-sphere \(S \subset \mathbb {R}^{3+1}\), with \(r(q) >0\) such that

where \(J^-(S)\) denotes the causal past of S and \(\pi :P \rightarrow \mathcal {M}\) denotes the natural projection.
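The geometry of the uncoupled problem can be checked numerically. The following is a minimal sketch, assuming massless particles on a fixed Minkowski background that start at \(t=0\) inside a ball of radius `radius` and travel along straight lines \(x(t) = x_0 + tk\) with \(\vert k \vert = 1\); the function names are hypothetical and introduced only for this illustration. It evaluates the null coordinates \(u = \frac{1}{2}(t-r)\), \(v = \frac{1}{2}(t+r)\) along each trajectory and reports the range of u and the smallest value of r at a given time.

```python
import math
import random


def sample_initial(n, radius, seed=0):
    """Sample n pairs (x0, k): a point in the ball |x0| <= radius and a unit direction k."""
    rng = random.Random(seed)
    data = []
    while len(data) < n:
        x0 = [rng.uniform(-radius, radius) for _ in range(3)]
        if math.sqrt(sum(c * c for c in x0)) > radius:
            continue  # rejection sampling: keep only points inside the ball
        k = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in k))
        data.append((x0, [c / norm for c in k]))
    return data


def flow(x0, k, t):
    """Position at time t of the null geodesic of Minkowski space through (0, x0) with direction k."""
    return [a + t * b for a, b in zip(x0, k)]


def null_coords(t, x):
    """Return (u, v, r) with u = (t - r)/2 and v = (t + r)/2."""
    r = math.sqrt(sum(c * c for c in x))
    return 0.5 * (t - r), 0.5 * (t + r), r


def support_bounds(data, t):
    """Return (min u, max u, min r) over all sampled trajectories at time t."""
    us, rs = [], []
    for x0, k in data:
        u, _, r = null_coords(t, flow(x0, k, t))
        us.append(u)
        rs.append(r)
    return min(us), max(us), min(rs)


if __name__ == "__main__":
    particles = sample_initial(1000, radius=1.0)
    for t in (2.0, 10.0, 100.0):
        print(t, support_bounds(particles, t))
```

By the triangle inequality \(t - \vert x_0 \vert \le r \le t + \vert x_0 \vert \), so every trajectory satisfies \(\vert u \vert \le \frac{1}{2}\,\texttt{radius}\) and \(r \ge t - \texttt{radius}\): the matter stays between two outgoing cones and leaves any fixed neighbourhood of the centre, which is the picture described above.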

Initial Data and First Appeal to the Vacuum Result

Recall that initial data for the Einstein–Vlasov system (1)–(2) consists of a 3-manifold \(\Sigma \) with a Riemannian metric \(g_0\), a symmetric (0, 2) tensor K and an initial particle density function \(f_0\) satisfying the constraint equations,

for \(j=1,2,3\), where \(\mathrm {div}_0, \mathrm {tr}_0,R_0\) denote the divergence, trace and scalar curvature of \(g_0\) respectively, and \(T_{00}, T_{0j}\) denote (what will become) the 00 and 0j components of the energy momentum tensor. See [30] for a discussion of initial data for the Einstein–Vlasov system. The topology of \(\Sigma \) will here be assumed to be that of \(\mathbb {R}^3\). The issue of constructing solutions to the constraint equations (5) will not be treated here. A theorem of Choquet-Bruhat [4] guarantees that, given such an initial data set, a solution to (1)–(2) will exist locally in time.

The initial density function \(f_0\) is assumed to have compact support. It will moreover be assumed that \(f_0\) and a finite number of its derivatives are initially small. The precise condition is given in Section 5. Note the assumption of compact support for \(f_0\) is in both the spatial variable x, and in the momentum variable p. As will become evident, the compact support in space is used in a crucial way. The assumption of compact support in momentum is made for simplicity and can likely be weakened.Footnote 2

Let \(B \subset \Sigma \) be a simply connected compact set such that \(\pi (\mathrm {supp}(f \vert _{P_{\Sigma }})) \subset B\), where \(P_{\Sigma }\) denotes the mass shell over \(\Sigma \). By the domain of dependence property of the Einstein–Vlasov system the development of the complement of B in \(\Sigma \), \(D^+(\Sigma \smallsetminus B)\), will solve the vacuum Einstein equations,

\[ \mathrm {Ric}(g) = 0. \tag{6} \]

The stability of Minkowski space theorem for the vacuum Einstein equations then guarantees the stability of this region. See Klainerman–Nicolò [21] where exactly this situation is treated. In particular, provided \(g_0, K\) satisfy a smallness conditionFootnote 3 in \(\Sigma \smallsetminus B\) (i.e. they are suitably close to the \(g_0,K\) of Minkowski space), there exists a future complete, outgoing null hypersurface \(\mathcal {N}\) in this region which can be foliated by a family of 2-spheres, \(\{S_{u_0,v} \}\) parameterised by v, approaching the round 2-sphere as \(v \rightarrow \infty \). Moreover the Ricci coefficients and curvature components of the spacetime will decay to their corresponding Minkowski values and, by taking \(g_0, K\) suitably small, certain weighted quantities involving them can be made arbitrarily small on \(\mathcal {N}\). It will be assumed that \(g_0,K\) are sufficiently small so that the precise conditions stated in Theorem 5.1 are satisfied on \(\mathcal {N}\). A second appeal to a form of the stability of Minkowski space result in the vacuum (which can be shown to also follow from the Christodoulou–Klainerman Theorem [10] using upcoming work) will be made in Section 1.4 below.

Cauchy Stability

By Cauchy stability for the Einstein–Vlasov system (see Choquet-Bruhat [4] or Ringström [30]), Cauchy stability for the geodesic equations and the considerations of Section 1.1, provided the initial data on \(\Sigma \) are taken sufficiently small, there exists a 2-sphere \(S\subset \mathcal {M}\) and an incoming null hypersurface \(\underline{\mathcal {N}}\) such that \(S\subset \underline{\mathcal {N}}\), \(\mathrm {Area}(S) >0\), \(\pi (\text {supp}(f)) \cap S = \emptyset \), and

In other words, the existence of the point q in the Penrose diagram of Figure 1 is stable. It can moreover be assumed that the \(\mathcal {N}\) above and \(\underline{\mathcal {N}}\) intersect in one of the 2-spheres of the foliation of \(\mathcal {N}\),

\[ \mathcal {N} \cap \underline{\mathcal {N}} = S_{u_0,v_0}, \]

where \(v_0\) can be chosen arbitrarily large. The induced data on \(\underline{\mathcal {N}}\) can be taken to be arbitrarily small, provided they are sufficiently small on \(\Sigma \).

A Second Version of the Main Theorem and Second Appeal to the Vacuum Result

A more precise version of the main result can now be stated. A final version, Theorem 5.1, is stated in Section 5.

Theorem 1.2

Given characteristic initial data for the massless Einstein–Vlasov system (1)–(2) on an outgoing null hypersurface \(\mathcal {N}\) and an incoming null hypersurface \(\underline{\mathcal {N}}\) as aboveFootnote 4, intersecting in a 2-sphere \(S_{u_0,v_0}\) of the foliation of \(\mathcal {N}\), if \(v_0\) is sufficiently large and the characteristic initial data are sufficiently smallFootnote 5, then there exists a unique spacetime \((\mathcal {M},g)\) endowed with a double null foliation (u, v) solving the characteristic initial value problem for (1)–(2) in the region \(v_0\le v < \infty \), \(u_0 \le u \le u_f\), where \(\mathcal {N} = \{u=u_0\}\), \(\underline{\mathcal {N}} = \{ v = v_0\}\), and \(u_f\) can be chosen large so that \(f=0\) on the mass shell over any point \(x\in \mathcal {M}\) such that \(u(x) \ge u_f -1\), i.e. \(\pi (\mathrm {supp}(f)) \subset J^-(\{u=u_f - 1\})\). Moreover each of the Ricci coefficients, curvature components and components of the energy momentum tensor (with respect to a double null frame) decay towards null infinity with quantitative rates.

This is depicted in Figure 2.

Theorem 1.1 follows from Theorem 1.2 by the considerations of Section 1.2, Section 1.3, and by another application of the vacuum stability of Minkowski space result with the induced data on a hyperboloid contained between the null hypersurfaces \(\{u = u_f\}\) and \(\{u = u_f-1\}\). The problem of stability of Minkowski space for the vacuum Einstein equations (6) with hyperboloidal initial data was treated by Friedrich [17], though his result requires the initial data to be asymptotically simple. This is, in general, inconsistent with the induced data arising from Theorem 1.2.Footnote 6 Whilst a proof of the hyperboloidal stability of Minkowski space problem with initial data compatible with Theorem 1.2 can most likely be distilled from the work [10], there is currently no precise statement to appeal to. In future work it will be shown how one can alternatively appeal directly to [10] by extending the induced scattering data at null infinity and solving backwards, in the style of [13].

A precise formulation of Theorem 1.1, including an explicit statement of the norms used in the first appeal to the vacuum result in Section 1.2 and the Cauchy stability argument of Section 1.3, will not be made here. The assumptions made in Theorem 5.1, the final version of Theorem 1.2, will be given some justification at various places in the introduction however. The remainder of the paper will concern Theorem 1.2, and in the remainder of the introduction its proof will be outlined. The greatest new difficulty is in obtaining a priori control over derivatives of f. The approach taken involves introducing the induced Sasaki metric on the mass shell P and estimating certain Jacobi fields on P in terms of geometric quantities on the spacetime \((\mathcal {M},g)\). This approach is outlined in Section 1.14 below.

Note that the analogue of Theorem 1.2 for the vacuum Einstein equations (6) follows from a recent result of Li–Zhu [25].

The Bootstrap Argument

The main step in the proof of Theorem 1.2 is in obtaining global a priori estimates for all of the relevant quantities. Once they have been established there is a standard procedure for obtaining global existence, which is outlined in Section 12. The remainder of the discussion is therefore focused on obtaining the estimates.

Moreover, using a bootstrap argument, it suffices to show that if the estimates already hold in a given bootstrap region of the form \(\{ u_0 \le u \le u' \} \cap \{ v_0 \le v \le v'\}\), depicted in Figure 3, then they can be recovered in this region with better constants independently of \(u',v'\). This is extremely useful given the strongly coupled nature of the equations.

The better constants in the bootstrap argument arise from either estimating the quantities by the initial data on \(\{v=v_0\}\) and \(\{ u = u_0\}\) or by \(\frac{1}{v_0}\), and using the smallness of the initial data and the largeness of \(v_0\). Recall that, in the setting of Theorem 1.1, both the largeness of \(v_0\) and the smallness of the induced data on \(\mathcal {N} = \{ u = u_0\}\), \(\underline{\mathcal {N}} = \{ v = v_0\}\) arise by taking the asymptotically flat Cauchy data on \(\Sigma \) to be suitably small.

The Double Null Gauge

The content of the Einstein equations is captured here through the structure equations and the null Bianchi equations associated to the double null foliation (u, v). The constant u and constant v hypersurfaces are outgoing and incoming null hypersurfaces respectively, and intersect in spacelike 2-spheres which are denoted \(S_{u,v}\). This choice of gauge is made due to its success in problems which require some form of the null conditionFootnote 7 to be satisfied.Footnote 8 See, for example, [7, 9, 13, 21, 27].

The foliation defines a double null frame (see Section 2.1) in which one can decompose the Ricci coefficients, which satisfy so called null structure equations, the Weyl (or conformal) curvature tensor, whose null decomposed components satisfy the null Bianchi equations, and the energy momentum tensor (which, by the Einstein equations (4), is equal to the Ricci curvature tensor).

It is the null structure and Bianchi equations which will be used, together with the Vlasov equation (2), to estimate the solution. Following the notation of [13, 27], the null decomposed Ricci coefficients will be schematically denoted \(\Gamma \). Two examples are the outgoing shear \(\hat{\chi }\), which is a (0, 2) tensor on the spheres \(S_{u,v}\), and the renormalised outgoing expansion \(\mathrm {tr}\chi - \frac{2}{r}\), which is a function on the spacetime, renormalised using the function r so that the corresponding quantity in Minkowski space will vanish.
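As a consistency check on the renormalisation, the expansions of the standard double null foliation of Minkowski space can be computed directly. The sketch below assumes the normalisation \(e_3 = \partial _u\), \(e_4 = \partial _v\) with \(u = \frac{1}{2}(t-r)\), \(v = \frac{1}{2}(t+r)\) (so that \(r = v-u\)), and the convention that \(\chi \) and \(\underline{\chi }\) are half the Lie derivatives of the induced metric on the spheres along \(e_4\) and \(e_3\); this convention is an assumption made here for illustration, the precise definitions being those of Section 2.1. The symbol \(\mathring{\gamma }\) denotes the unit round metric.

```latex
\begin{align*}
\slashed{g} &= r^2 \mathring{\gamma}, \qquad
e_4(r) = \partial_v (v - u) = 1, \qquad
e_3(r) = \partial_u (v - u) = -1, \\
\chi &= \tfrac{1}{2} \mathcal{L}_{e_4} \slashed{g}
      = r \, e_4(r) \, \mathring{\gamma}
      = \tfrac{1}{r} \slashed{g},
\qquad \mathrm{tr}\chi = \tfrac{2}{r}, \qquad \hat{\chi} = 0, \\
\underline{\chi} &= \tfrac{1}{2} \mathcal{L}_{e_3} \slashed{g}
      = r \, e_3(r) \, \mathring{\gamma}
      = -\tfrac{1}{r} \slashed{g},
\qquad \mathrm{tr}\underline{\chi} = -\tfrac{2}{r}, \qquad \hat{\underline{\chi}} = 0 .
\end{align*}
```

Under these assumptions \(\mathrm {tr}\chi - \frac{2}{r}\), \(\mathrm {tr}\underline{\chi }+ \frac{2}{r}\) and the shears all vanish identically in Minkowski space, which is why the renormalised quantities, rather than the expansions themselves, are expected to decay.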

The null decomposed components of the Weyl curvature tensor will be schematically denoted \(\psi \) and the null decomposed components of the energy momentum tensor will be schematically denoted \(\mathcal {T}\). This schematic notation, together with the p-index notation described in Section 1.8 below, will be used to convey structural properties of the equations which are heavily exploited later.

The Schematic Form of the Equations

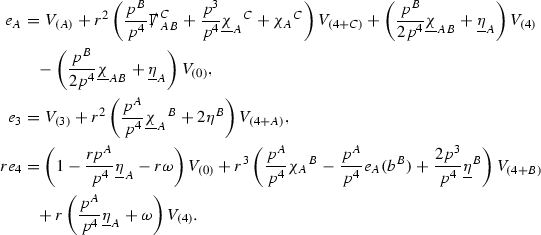

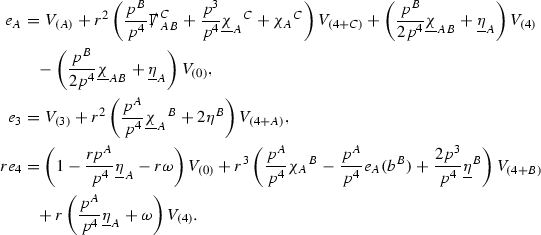

The null structure equations for the Ricci coefficients \(\Gamma \), which are stated in Section 2.5, take the following schematic form,

Here \(\slashed{\nabla }_3\) and \(\slashed{\nabla }_4\) denote the projections of the covariant derivatives in the incoming and outgoing null directions respectively to the spheres \(S_{u,v}\). The \(\frac{1}{r}\Gamma \) terms appear in the equations for the outgoing and incoming expansions \(\mathrm {tr}\chi - \frac{2}{r}, \mathrm {tr}\underline{\chi }+ \frac{2}{r}\), which are renormalised using the function r. Each \(\Gamma \) satisfies exactly one of the two forms of equations (7) and hence the \(\Gamma \) are further decomposed as \(\overset{(3)}{\Gamma }\) or \(\overset{(4)}{\Gamma }\) depending on whether they satisfy an equation in the \(\slashed{\nabla }_3\) or \(\slashed{\nabla }_4\) direction respectively. It should be noted that there are further null structure equations satisfied by the Ricci coefficients which take different forms to (7), some of which will make an appearance later.

The Weyl curvature components \(\psi \) can be further decomposed into Bianchi pairs, defined in Section 3.1, which are denoted \((\uppsi ,\uppsi ')\) (examples are \((\uppsi ,\uppsi ') = (\alpha ,\beta )\) or \((\beta ,(\rho ,\sigma ))\)). This notation is used to emphasise a special structure in the Bianchi equations, which take the form,

Here \(\slashed{\mathcal {D}}\) denotes certain angular derivative operators on the spheres of intersection of the double null foliation, and \(\nabla \mathcal {T}\) schematically denotes projected covariant derivatives of \(\mathcal {T}\) in either the 3, 4 or angular directions.

The Ricci coefficients can be estimated using transport estimates for the null structure equations (7) since derivatives of \(\Gamma \) do not appear explicitly on the right hand sides of the equations. The transport estimates are outlined below in Section 1.11 and carried out in detail in Section 10. Note that using such estimates does, however, come with a loss, namely the expected fact that angular derivatives of \(\Gamma \) live at the same level of differentiability as curvature is not recovered. This fact can be recovered through a well known elliptic procedure, which is outlined below in Section 1.12 and treated in detail in Section 11. One cannot do the same for the curvature components and the Bianchi equations (8) due to the presence of the angular derivative terms \(\slashed{\mathcal {D}}\uppsi ', \slashed{\mathcal {D}}\uppsi \) on the right hand sides. In order to obtain “good” estimates for the Bianchi equations one must exploit the special structure which, if S denotes one of the spheres of intersection of the null foliation, takes the following form,

\[ \int _S \uppsi \cdot \slashed{\mathcal {D}} \uppsi ' = - \int _S \slashed{\mathcal {D}} \uppsi \cdot \uppsi ', \]

i.e. the adjoint of the operator \(\slashed{\mathcal {D}}\) is \(-\slashed{\mathcal {D}}\). Using this structure, if one contracts the \(\slashed{\nabla }_3\) equation with \(\uppsi \) and adds the \(\slashed{\nabla }_4\) equation contracted with \(\uppsi '\), the terms involving the angular derivatives will cancel upon integration and an integration by parts yields energy estimates for the Weyl curvature components. It is through this procedure that the hyperbolicity of the Einstein equations manifests itself in the double null gauge. These energy estimates form the content of Section 9 and, again, are outlined below in Section 1.10.

We are therefore forced (at least at the highest order) to estimate the curvature components in \(L^2\). All of the estimates for the Ricci coefficients here will also be \(L^2\) based. In order to deal with the nonlinearities in the error terms of the equations, the same \(L^2\) estimates are obtained for higher order derivatives of the quantities and Sobolev inequalities are used to obtain pointwise control over lower order terms. Footnote 9 To do this, a set of differential operators \(\mathfrak {D}\) is introduced which satisfy the commutation principle of [13]. This says that the “null condition” satisfied by the equations (which is outlined below and crucial for the estimates) and the structure discussed above are preserved when the equations are commuted by \(\mathfrak {D}\), i.e. \(\mathfrak {D} \Gamma \) and \(\mathfrak {D} \psi \) satisfy similar equations to \(\Gamma \) and \(\psi \). The set of operators \(\mathfrak {D}\) is introduced in Section 3.3.

As they appear on the right hand side of the equations for \(\psi ,\Gamma \), the energy momentum tensor components \(\mathcal {T}\) are also, at the highest order, estimated in \(L^2\). These estimates are obtained by first estimating f using the Vlasov equation. It is important that the components of the energy momentum tensor, and hence also f, are estimated at one degree of differentiability greater than the Weyl curvature components \(\psi \). The main difficulty in this work is in obtaining such estimates for the derivatives of f. See Section 1.14 for an outline of the argument and Section 8 for the details.

The p-Index Notation and the Null Condition

The discussion in the previous section outlines how one can hope to close the estimates for \(\Gamma \) and \(\psi \) from the point of view of regularity. Since global estimates are required, it is also crucial that all of the error terms in the equations decay sufficiently fast in v (or equivalently, since everything takes place in the “wave zone” where \(r:= v-u+r_0\) is comparable to v, sufficiently fast in r) so that, when they appear in the estimates, they are globally integrable. For quasilinear wave equations there is an algebraic condition on the nonlinearity, known as the null condition, which guarantees this [20]. By analogy, we say the null structure and Bianchi equations “satisfy the null condition” to mean that, on the right hand sides of the equations, certain “bad” combinations of the terms do not appear. There is an excellent discussion of this in the introduction of [13]. As they are highly relevant, the main points are recalled here.

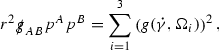

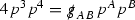

Following [13], the correct hierarchy of asymptotics in r for \(\Gamma \), \(\psi \) and \(\mathcal {T}\) is first guessed. This guess is encoded in the p-index notation. Each \(\Gamma ,\psi ,\mathcal {T}\) is labelled with a subscript p to reflect the fact that \(r^p\vert \Gamma _p \vert , r^p\vert \psi _p \vert , r^p\vert \mathcal {T}_p \vert \) are expected to be uniformly bounded.Footnote 10 Here \(\vert \cdot \vert \) denotes the norm with respect to the induced metric on the 2-spheres \(S_{u,v}\). The weighted \(L^2\) quantities which will be shown to be uniformly bounded will imply, via Sobolev inequalities, that this will be the case at lower orders.

In Theorem 5.1, the precise formulation of Theorem 1.2, it is asymptotics consistent with the p-index notation which will be assumed to hold on the initial outgoing hypersurface \(\mathcal {N} = \{ u=u_0\}\). In the context of Theorem 1.1, recall the use of the Klainerman–Nicolò [21] result in Section 1.2. The result of Klainerman–Nicolò guarantees that, provided the asymptotically flat Cauchy data on \(\Sigma \) has sufficient decay, there indeed exists an outgoing null hypersurface in the development of the data on which asymptotics consistent with the p-index notation hold.

Geometry of Null Geodesics and the Support of f

If the Ricci coefficients are assumed to have the asymptotics described in the previous section then it is straightforward to show that \(u_f\) can be chosen to have the desired property that \(f=0\) on the mass shell over any point \(x\in \mathcal {M}\) with \(u(x) \ge u_f - 1\). In fact, it can also be seen that the size of the support of f in \(P_x\), the mass shell over the point \(x\in \mathcal {M}\), will decay as \(v(x) \rightarrow \infty \). This decay is important as it is used to obtain the decay of the components of the energy momentum tensor. The argument for obtaining the decay properties of \(\mathrm {supp}(f)\) is outlined here and presented in detail in Section 7.

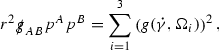

The decay of the size of the support of f in \(P_x\) can be seen by considering the decay of components of certain null geodesics. Suppose first that \(\gamma \) is a future directed null geodesic in Minkowski space emanating from a compact set in the hypersurface \(\{t=0\}\) such that the initial tangent vector \(\dot{\gamma }(0)\) is contained in a compact set in the mass shell over \(\{t=0\}\). One can show that, if

\[ \dot{\gamma }(s) = p^{\mu }(s) e_{\mu }\vert _{\gamma (s)}, \]

where \(e_1 = \partial _{\theta ^1},e_2 = \partial _{\theta ^2},e_3 = \partial _u,e_4 = \partial _v\) is the standard double null frame in Minkowski space, then the bounds,

hold uniformly along \(\gamma \) for some constant C.Footnote 11

The bounds (9) will be assumed to hold in \(\mathrm {supp}(f)\) in the mass shell over the initial hypersurface \(\{v = v_0\}\) in Theorem 5.1, the precise formulation of Theorem 1.2. In the setting of Theorem 1.1, the bounds (9) can be taken to hold on the hypersurface \(\underline{\mathcal {N}} = \{ v = v_0\}\) in view of the Cauchy stability argument of Section 1.3 and the fact that they hold globally in \(\mathrm {supp}(f)\) for the uncoupled problem of the Vlasov equation on a fixed Minkowski background.

The idea is now to propagate the bounds (9) from the initial hypersurface \(\{v=v_0\}\) into the rest of the spacetime. If \(e_1,\ldots ,e_4\) now denotes the double null frame of \((\mathcal {M},g)\) (defined in Section 2.1), one then uses the geodesic equations,

for a null geodesic \(\gamma \) with \(\dot{\gamma }(s) = p^{\mu }(s) e_{\mu }\vert _{\gamma (s)}\), a bootstrap argument and the pointwise bounds \(r^p\vert \Gamma _p \vert \le C\) to see that

The estimates (9) follow by integrating along \(\gamma \) since \(\frac{dr}{ds} \sim p^4(0)\).

Finally, to show the retarded time \(u_f\) can be chosen as desired, let u(s) denote the u coordinate of the geodesic \(\gamma \) at time s. Then

and hence \(\vert u(s) - u(0)\vert \le C\) for all \(s \in [0,\infty )\), for some constant C.
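In Minkowski space the decay of the \(e_3\) component and the boundedness of u along a null geodesic can be computed explicitly. The following is a sketch under stated assumptions: the geodesic is the straight line \(x(s) = x_0 + sk\), \(t(s) = s\), with \(\vert k \vert = 1\) (an affine parametrisation normalised so that \(\frac{dt}{ds} = 1\)), the frame is \(e_3 = \partial _u\), \(e_4 = \partial _v\) with \(u = \frac{1}{2}(t-r)\), \(v = \frac{1}{2}(t+r)\), and b denotes the impact parameter of the line. The symbols \(x_0, k, b\) are introduced here for illustration only.

```latex
\begin{align*}
r(s)^2 &= \vert x_0 \vert^2 + 2 s \, x_0 \cdot k + s^2, \qquad
x(s) \cdot k = x_0 \cdot k + s, \qquad
b^2 := r^2 - (x \cdot k)^2 = \vert x_0 \vert^2 - (x_0 \cdot k)^2, \\
p^4 &= \frac{dv}{ds} = \frac{1}{2} \Big( 1 + \frac{x \cdot k}{r} \Big), \qquad
p^3 = \frac{du}{ds} = \frac{1}{2} \Big( 1 - \frac{x \cdot k}{r} \Big), \\
p^3 &= \frac{b^2}{2 r (r + x \cdot k)} \le \frac{b^2}{2 r^2} \le \frac{\vert x_0 \vert^2}{2 r^2}
\quad \text{for } s \ge - x_0 \cdot k \text{ and } r > 0 \ (\text{so that } x \cdot k \ge 0), \\
\vert u(s) - u(0) \vert &= \frac{1}{2} \big\vert s - r(s) + r(0) \big\vert \le \vert x_0 \vert
\quad \text{since } \vert r(s) - s \vert \le \vert x_0 \vert \text{ and } r(0) = \vert x_0 \vert .
\end{align*}
```

Under these assumptions, compact spatial support of the initial data gives a uniform bound on \(r^2 p^3\) at late times, while \(\frac{1}{2} \le p^4 \le 1\) there, and the total change in u along the geodesic is bounded by the radius of the initial support.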

Global Energy Estimates for the Curvature Components

The global energy estimates for the Weyl curvature components can now be outlined. They are carried out in detail in Section 9. The Bianchi equations take the schematic form,

where c is a constant (which is different for the different \(\uppsi _{p'}'\)) and \(E_p\) is an error which will decay, according to the p-index notation, like \(\frac{1}{r^p}\). Similarly, \(E_{p' + \frac{3}{2}}\) is an error which will decay like \(\frac{1}{r^{p'+\frac{3}{2}}}\). Recall from equation (8) that the errors \(E_p\) and \(E_{p' + \frac{3}{2}}\) contain linear terms involving \(\Gamma \), nonlinear terms of the form \(\Gamma \cdot \psi \) and \(\Gamma \cdot \mathcal {T}\), and projected covariant derivatives of components of the energy momentum tensor \(\nabla \mathcal {T}\). When (10) is used to compute \(Div \left( r^w \vert \uppsi _p \vert ^2 e_3 \right) \) and \(Div \left( r^w \vert \uppsi _{p'}' \vert ^2 e_4 \right) \) and the results are summed, a cancellation occurs in the terms involving angular derivatives, as discussed in Section 1.7, and these terms can be rewritten as a spherical divergence. If the weight w is chosen correctly, a cancellationFootnote 12 also occurs in the \(c\mathrm {tr}\chi \uppsi _{p'}'\) term (which, since \(\mathrm {tr}\chi \) looks like \(\frac{2}{r}\) to leading order, cannot be included in the error \(E_{p'+ \frac{3}{2}}\)) and one is then left with,

where Div denotes the spacetime divergence and \(\mathcal {B}\) denotes a spacetime “bulk” region bounded to the past by the initial characteristic hypersurfaces, and to the future by constant v and constant u hypersurfaces. See Figure 3. Note that this procedure will generate additional error terms but they can be treated similarly to those arising from the errors in (10) and hence are omitted here. See Section 9 for the details.

If the curvature fluxes are defined as,

then by the divergence theorem, when the above identity (11) is summed over all Bianchi pairs \((\uppsi _p, \uppsi _{p'}')\), the left hand side becomes

Due to the relation between the weights \(w(\uppsi _p, \uppsi _{p'}')\) and \(p,p'\), and the bounds assumed for \(\Gamma \) and \(\mathcal {T}\) through the bootstrap argument, the right hand side of (11) can be controlled by,

for some constant C (which, of course, arises from inserting the bootstrap assumptions). It is this step where one sees the manifestation of the null condition in the Bianchi equations. Dropping the \(F^2_{u_0,u}(v)\) term on the left yields,

and hence, by the Grönwall inequality, \(F^1_{v_0,v}(u)\) can be controlled by initial data and the term \(\frac{C}{v_0}\). Returning to the inequality,

and inserting the above bounds for \(F^1_{v_0,v}(u)\), \(F^2_{u_0,u}(v)\) can now also be similarly controlled.
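The Grönwall step can be made explicit in a model form. This is a minimal sketch in which A is a hypothetical shorthand for the sum of the initial data term and \(\frac{C}{v_0}\), and the inequality for \(F^1_{v_0,v}(u)\) is assumed to have the integral form shown; the precise right hand side is the one obtained in the text.

```latex
% Model Gronwall argument. A \ge 0 is a placeholder for (initial data) + C / v_0.
% Assumed inequality, for all u_0 \le u \le u_f:
\[
  F^1_{v_0,v}(u) \le A + C \int_{u_0}^{u} F^1_{v_0,v}(u') \, du' .
\]
% Gronwall's inequality then gives
\[
  F^1_{v_0,v}(u) \le A \, e^{C (u - u_0)} \le A \, e^{C (u_f - u_0)} ,
\]
% which is a bound uniform in v, since u ranges only over [u_0, u_f].
```

The exponential factor is harmless precisely because the integration in u is over a bounded interval.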

Global Transport Estimates for the Ricci Coefficients

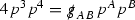

Turning now to the global estimates for the Ricci coefficients, which are treated in detail in Section 10, in the p-index notation the null structure equations take the form,

where again \(E_p\) is an error which decays, according to the p-index notation, like \(\frac{1}{r^p}\) and \(E_{p+2}\) decays like \(\frac{1}{r^{p+2}}\). Recall from equation (7) that \(E_{p}\) and \(E_{p+2}\) contain linear terms involving \(\Gamma , \psi , \mathcal {T}\), and quadratic terms of the form \(\Gamma \cdot \Gamma \). The equations in the 4 direction can be rewritten as

To estimate the \(\overset{(4)}{\Gamma }\) one then uses the identity, for a function h on \(\mathcal {M}\),

where the \(\mathrm {tr}\chi h\) term comes from the derivative of the volume form on \(S_{u,v}\), with \(h = r^{2p-2} \vert \overset{(4)}{\Gamma }_p\vert ^2\). The \(r^{-2}\) factor serves to cancel the \(\mathrm {tr}\chi \) term (which, recall, behaves like \(\frac{2}{r}\) and so is not globally integrable in v). Hence,

since the volume form is of order \(r^2\). Integrating in v from the initial hypersurface \(\{ v=v_0\}\) then gives,

Note that the error \(E_{p+2}\) is integrated over a \(u =\) constant hypersurface. These are exactly the regions on which the integrals of the Weyl curvature components were controlled in Section 1.10, and it is for this reason the curvature terms in the error \(E_{p+2}\) can be controlled in (12).

Since the volume form is of order \(r^2\), the bound (12) is consistent with \(\overset{(4)}{\Gamma }_p\) decaying like \(\frac{1}{r^p}\) and, after repeating the above with appropriate derivatives of \(\overset{(4)}{\Gamma }_p\), this pointwise decay can be obtained using Sobolev inequalities on the spheres.

It is not a coincidence that the \(\frac{p}{2}\) coefficient of \(\mathrm {tr}\chi \overset{(4)}{\Gamma }_p\) in the equation in the 4 direction is exactly that which is required to obtain \(\frac{1}{r^p}\) decay for \(\overset{(4)}{\Gamma }_p\). In fact some of the \(\overset{(4)}{\Gamma }_p\) will decay faster than this but the other null structure equations are required, along with elliptic estimates, to obtain this. It is therefore the \(\frac{p}{2}\) coefficient which determines the p index given here to the \(\overset{(4)}{\Gamma }\) as it restricts the decay which can be shown to hold using only the equations in the 4 direction. Note the difference with [13] where the authors are not constrained by this coefficient as they there integrate “backwards” from future null infinity.
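The role of the \(\frac{p}{2}\) coefficient can be seen in a scalar model. The sketch below replaces \(\mathrm {tr}\chi \) by its leading order value \(\frac{2}{r}\), replaces the derivative in the 4 direction by \(\partial _v\), and uses \(r = v - u + r_0\) so that \(\partial _v r = 1\). The scalar \(\phi \) is a hypothetical stand-in for a component of \(\overset{(4)}{\Gamma }_p\), and the pointwise bound assumed on the error E is for illustration only.

```latex
% Scalar model of the 4-direction equation: tr\chi -> 2/r, derivative -> \partial_v.
\begin{align*}
  \partial_v \phi + \frac{p}{2} \cdot \frac{2}{r} \, \phi = E
  \quad &\Longleftrightarrow \quad
  \partial_v \left( r^p \phi \right) = r^p E , \\
  r^p \vert \phi \vert (u,v)
  &\le r^p \vert \phi \vert (u,v_0)
     + \int_{v_0}^{v} r^p \vert E \vert (u,v') \, dv' .
\end{align*}
% If \vert E \vert \le C r^{-p-2}, the integrand is bounded by C r^{-2}, which is
% integrable in v on [v_0, \infty), so r^p \vert \phi \vert stays bounded.
% A coefficient q/2 with q < p would in general only give boundedness of r^q \vert \phi \vert.
```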

Turning now to the equations in the 3 direction, the \(\overset{(3)}{\Gamma }_p\) quantities are estimated using the identity,

with \(h = r^{2p-2} \vert \overset{(3)}{\Gamma }_p\vert ^2\). It does not now matter that \(\mathrm {tr}\underline{\chi }\) only decays like \(\frac{1}{r}\) since the integration in u will only be up to the finite value \(u_f\).

Suppose first that \(\overset{(3)}{\Gamma }_p\) satisfies

where \(E_{p+1}\) decays like \(\frac{1}{r^{p+1}}\) and \(E_p^0\) decays like \(\frac{1}{r^p}\) but only contains Weyl curvature, energy momentum tensor and \(\overset{(4)}{\Gamma }\) terms which have already been estimated (the energy momentum tensor estimates are outlined below as they present the greatest difficulty but in the logic of the proof are estimated first). Then,

Integrating from \(u_0\) to u and inserting the bootstrap assumptions and the previously obtained bounds for \(E_p^0\), the Grönwall inequality then gives,

where \(\varepsilon _0\) controls the size of the initial data. Note that it was important that the only error terms which have not already been estimated are of the form \(E_{p+1}\), and not \(E_p\), in order to gain the \(\frac{1}{v_0}\) smallness factor. It turns out that there is a reductive structure in the null structure equations so that, provided they are estimated in the correct order, each \(\overset{(3)}{\Gamma }\) satisfies an equation of the form (13) where \(E_p^0\) now also contains \(\overset{(3)}{\Gamma }\) terms which have been estimated previously. Hence all of the \(\overset{(3)}{\Gamma }\) can be estimated with smallness factors.

Elliptic Estimates and Ricci Coefficients at the Top Order

The procedure in Section 1.11 is used to estimate the Ricci coefficients, along with their derivatives at all but the top order, in \(L^2\) of the spheres of intersection of constant u and constant v hypersurfaces. The derivatives of Ricci coefficients at the top order are estimated only in \(L^2\) on null hypersurfaces. These estimates are obtained using elliptic equations on the spheres for some of the Ricci coefficients, coupled to transport equations for certain auxiliary quantities. This procedure is familiar from many other works (e.g. [9, 10]) and forms the content of Section 11. It should be noted that these estimates are only required here for estimating the components of the energy momentum tensor. If one were to restrict the semi-global problem of Theorem 1.2 to the case of the vacuum Einstein equations (6) then the estimates for the Ricci coefficients and curvature components could be closed with a loss (i.e. without knowing that angular derivatives of Ricci coefficients lie at the same degree of differentiability as the Weyl curvature components) as only the null structure equations of the form (7) would be used, and these elliptic estimates would not be required. See Section 1.7.

Global Estimates for the Energy Momentum Tensor Components

At the zeroth order the estimates for the energy momentum tensor components follow directly from the bounds (9), which show that the size of the region \(\mathrm {supp}(f\vert _{P_x}) \subset P_x\) on which the integral in (1) is taken is decaying as \(r(x) \rightarrow \infty \), and the fact that f is conserved along trajectories of the geodesic flow. For example, using the volume form for \(P_x\) defined in Section 2.2, if \(\sup _{\{v=v_0\}} \vert f \vert \le \varepsilon _0\),

since  . In fact, provided the derivatives of f can be estimated, the estimates for the derivatives of \(\mathcal {T}\) are obtained in exactly the same way.


Global Estimates for Derivatives of f

A fundamental new aspect of this work arises in obtaining estimates for the derivatives of f. Recall from Section 1.7 that, in order to close the bootstrap argument, it is crucial that the energy momentum tensor components \(\mathcal {T}\), and hence f, are estimated at one degree of differentiability greater than the Weyl curvature components, i.e. k derivatives of f must be estimated using only \(k-1\) derivatives of \(\psi \). Written in components with respect to the frameFootnote 13 \(e_1,e_2,e_3,e_4, \partial _{p^1},\partial _{p^2},\partial _{p^4}\) for P, the Vlasov equation (2) takes the form,

where \(\Gamma _{\nu \lambda }^{\mu }\) denote the Ricci coefficients of \(\mathcal {M}\). See (24)–(28) below. One way to estimate derivatives of f is to commute this equation with suitable vector fields and integrate along trajectories of the geodesic flow. If V denotes such a vector field, commuting will give,

where E is an error involving terms of the form \(V(\Gamma _{\nu \lambda }^{\mu })\). At first glance this seems promising as derivatives of the Ricci coefficients should live at the same level of differentiability as the Weyl curvature components \(\psi \). This is not the case for all of the \(\Gamma _{\nu \lambda }^{\mu }\) however, for example if V involves an angular derivative then \(V(\Gamma _{4A}^B)\), for \(A,B = 1,2\), will contain two angular derivatives of the vector field b. See (17) below for the definition of b and (26) for \(\Gamma _{4A}^B\). The vector field b is estimated through an equation of the form,

and hence, commuting twice with angular derivatives and using the elliptic estimates described in Section 1.12 will only give estimates for two angular derivatives of b by first order derivatives of \(\psi \) and \(\mathcal {T}\). The angular derivatives of the spherical Christoffel symbols  , see (22) below, which also appear when commuting the Vlasov equation give rise to similar issues.


Whilst it may still be the case that E as a whole (rather than each of its individual terms) can be estimated just at the level of \(\psi \), a different approach is taken here in order to see more directly that derivatives of f can be estimated at the level of \(\psi \). This approach, which is treated in detail in Section 8, is outlined now.

Consider again a vector \(V \in T_{(x,p)} P\). Recall the form of the Vlasov equation (3). Using this expression for f and the chain rule,

for any s, and hence, if \(J(s) := d \exp _s \vert _{(x,p)} V\),

If \(s<0\) is taken so that \(\pi (\exp _s(x,p)) \in \{ v = v_0\}\) then the expression (14) relates a derivative of f at (x, p) to a derivative of f on the initial hypersurface. It therefore remains to estimate the components of J(s), with respect to a suitable frame for P, uniformly in s and independently of the point (x, p).
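The chain rule computation referred to above can be sketched as follows. It uses only that f is constant along the geodesic flow, i.e. \(f = f \circ \exp _s\) for every s, together with the definition of J(s); the layout of the steps is an illustration rather than a reproduction of the displayed equations.

```latex
% f is conserved along the geodesic flow: f = f \circ \exp_s for every s.
\begin{align*}
  V f (x,p)
    &= d f \vert_{(x,p)} (V)
     = d \left( f \circ \exp_s \right) \vert_{(x,p)} (V) \\
    &= d f \vert_{\exp_s(x,p)} \left( d \exp_s \vert_{(x,p)} V \right)
     = J(s) f \left( \exp_s(x,p) \right) .
\end{align*}
```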

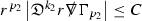

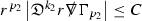

The metric g on the spacetime \(\mathcal {M}\) can be used to define a metric on the tangent bundle \(T\mathcal {M}\), known as the Sasaki metric [33], which by restriction defines a metric \(\hat{g}\) on the mass shell P. See Section 4 where this metric is introduced. With respect to this metric trajectories of the geodesic flow \(s\mapsto \exp _s(x,p)\) are geodesics in P and, for any vector \(V\in T_{(x,p)}P\), \(J(s) := d \exp _s \vert _{(x,p)} V\) is a Jacobi field along this geodesic (see Section 4). Therefore J(s) satisfies the Jacobi equation,

where \(\hat{\nabla }\) denotes the induced connection on P, and \(\hat{R}\) denotes the curvature tensor of \((P,\hat{g})\). Equation (15) is used, as a transport equation along the trajectories of the geodesic flow, to estimate the components of J. The curvature tensor \(\hat{R}\) can be expressed in terms of (vertical and horizontal lifts of) the curvature tensor R of \((\mathcal {M},g)\) along with its first order covariant derivatives \(\nabla R\). See equation (90). At first glance the presence of \(\nabla R\) again appears to be bad. On closer inspection, however, the terms involving covariant derivatives of R are always derivatives in the “correct” direction so that they can be recovered by the transport estimates, and the components of J, and hence Vf, can be estimated at the level of \(\psi \).

The above observations of course only explain how one can hope to close the estimates for \(\mathcal {T}\) from the point of view of regularity. In order to obtain global estimates for the components of J one has to use the crucial fact that, according to the p-index notation, the right hand side of the Jacobi equation \(\hat{R}(X,J)X\), when written in terms of \(\psi , \mathcal {T}, p^1, p^2, p^3,p^4\), decays sufficiently fast as to be twice globally integrable along \(s \mapsto \exp _s(x,p)\). This can be viewed as a null condition for the Jacobi equation and is brought to light through further schematic notation introduced in Section 8.2.
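To illustrate why integrability twice along the flow is the relevant condition, consider a flat model. Here \(\tau \in [0,T]\) is a hypothetical parameter along the trajectory, with \(\tau = 0\) on the initial hypersurface and \(\tau = T\) at the point (x, p); \(J^i\) are components with respect to a parallel frame; and \(F^i\) is a stand-in for the components of \(\hat{R}(X,J)X\), assumed to satisfy \(\vert F^i(\tau ) \vert \le C (1+\tau )^{-2-\delta }\) for some \(\delta > 0\). This is a sketch only: it addresses just the contribution of the forcing, and ignores both the dependence of the right hand side on J and the choice of frame made in Section 8.

```latex
% Model: \ddot{J}^i(\tau) = F^i(\tau) on [0,T], with J^i and \dot{J}^i given at \tau = T.
\begin{align*}
  J^i(\tau) &= J^i(T) - (T - \tau) \, \dot{J}^i(T)
    + \int_{\tau}^{T} \int_{\tau'}^{T} F^i(\tau'') \, d\tau'' \, d\tau' , \\
  \left\vert \int_{\tau}^{T} \int_{\tau'}^{T} F^i(\tau'') \, d\tau'' \, d\tau' \right\vert
    &\le \int_{0}^{\infty} \frac{C}{1+\delta} \, (1+\tau')^{-1-\delta} \, d\tau'
     = \frac{C}{\delta (1+\delta)} .
\end{align*}
% The bound on the double integral is independent of T and of \tau, which is the
% sense in which a forcing with this decay is "twice globally integrable".
```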

The fact that the right hand side of (15) has sufficient decay in r is perhaps not surprising. Consider for example the term

in \(\hat{R}(X,J)X\). Here \(\gamma \) is a geodesic in \(\mathcal {M}\) such that \(\exp _s(x,p) = (\gamma (s), \dot{\gamma }(s))\) and \(J^h\) is a vector field along \(\gamma \) on \(\mathcal {M}\) such that, together with another vector field \(J^v\) along \(\gamma \),

with \(\mathrm {Hor}_{(\gamma ,\dot{\gamma })}\) and \(\mathrm {Ver}_{(\gamma ,\dot{\gamma })}\) denoting horizontal and vertical lifts at \((\gamma ,\dot{\gamma })\) (defined in Section 4). The slowest decaying \(\psi \) and \(\mathcal {T}\) are those which contain the most \(e_3\) vectors. Whenever such \(\psi \) and \(\mathcal {T}\) arise in (16) however, they will typically be accompanied by \(p^3(s)\), the \(e_3\) component of \(\dot{\gamma }(s)\), which (recall from Section 1.9) has fast \(\frac{1}{r(s)^2}\) decay. Similarly the non-decaying \(p^4(s)\), the \(e_4\) component of \(\dot{\gamma }(s)\), can only appear in (16) accompanied by the \(\psi \) and \(\mathcal {T}\) which contain \(e_4\) vectors and hence have fast decay in r. In particular, potentially slowly decaying terms involving \(p^4(s)\) multiplying the \(\psi \) and \(\mathcal {T}\) which contain no \(e_4\) vectors do not arise in (16).

Finally, since Jf now itself is also conserved along \(s\mapsto \exp _s(x,p)\), second order derivatives of f can be obtained by repeating the above. If \(J_1,J_2\) denote Jacobi fields corresponding to vectors \(V_1,V_2\) at (x, p) respectively, then,

In order to control \(V_2 V_1 f (x,p)\) it is therefore necessary to estimate the \(J_2\) derivatives of the components of \(J_1\) along \(s\mapsto \exp _s(x,p)\). This is done by commuting the Jacobi equation (15) and showing that the important structure described above is preserved. The Jacobi fields which are used, and hence the vectors V used to take derivatives of f, have to be carefully chosen. They are defined in Section 8.3.

Note that this procedure can be repeated to obtain higher order derivatives of f. Whilst the pointwise bounds on \(\psi \) at lower orders mean that lower order derivatives of f can be estimated pointwise, at higher orders this procedure will generate terms involving higher order derivatives of \(\psi \) and hence higher order derivatives of \(\mathcal {T}\) must be estimated in \(L^2\) on null hypersurfaces. In fact, at the very top order, \(\mathcal {T}\) is estimated in the spacetime \(L^2\) norm.

Related Previous Stability Results in General Relativity

There are several related previous works on the stability of Minkowski space for the Einstein equations coupled to various matter models. Without simplifying symmetry assumptions, the first such work was that of Christodoulou–Klainerman [10]. They show that, given an initial data set for the vacuum Einstein equations, satisfying an appropriate asymptotic flatness condition, which is sufficiently close to that of Minkowski space, the resulting maximal development is geodesically complete, possesses a complete future null infinity, and asymptotically approaches Minkowski space with quantitative rates. The result, more fundamentally, provided the first examples of smooth, geodesically complete, asymptotically flat solutions to the vacuum Einstein equations, other than Minkowski space itself. The existence of such spacetimes is far from trivial. The proof relies on foliating the spacetimes they construct by the level sets of a so-called maximal time function, along with another, null, foliation by the level sets of an optical function. Detailed behaviour of the solutions is obtained, along with various applications including a rigorous derivation of the Bondi mass loss formula.

The proof of Christodoulou–Klainerman was generalised by Zipser [36], who showed that the analogue of their theorem holds for electromagnetic matter described by the Maxwell equations. The proof of this generalisation again relies on foliating the spacetimes by the level hypersurfaces of a maximal time and optical function.

The Christodoulou–Klainerman proof was later revisited by Klainerman–Nicolò [21] who showed the stability of the domain of dependence of the complement of a ball in a standard spacelike hypersurface in Minkowski space. Their smallness condition on initial data is similar to that of Christodoulou–Klainerman, however the Klainerman–Nicolò proof is based on a double null foliation, defined as the level hypersurfaces of two, outgoing and incoming, optical functions. The proof of Theorem 1.2 in this work is based on a similar approach and moreover, since the Klainerman–Nicolò result is appealed to in its proof, the smallness condition required in Theorem 1.1 is similar to that of [21]. See Section 1.2.

A new proof of the stability of Minkowski space for the vacuum Einstein equations using the harmonic gauge, the gauge originally used by Choquet-Bruhat [3] to prove local existence for the vacuum Einstein equations, was developed by Lindblad–Rodnianski [26]. Their proof essentially reduces to a small data global existence proof for a system of quasilinear wave equations and, despite the equations failing to satisfy the classical null condition of Klainerman [20], is relatively technically simple. The proof moreover requires a weaker asymptotic flatness condition on the data, compared to [10], and also allows for coupling to matter described by a massless scalar field. The asymptotic behaviour obtained for the solutions is less precise, however, than in [10].

The Christodoulou–Klainerman proof was returned to again by Bieri [2], who imposes a weaker asymptotic flatness condition on the initial data, in terms of decay, and is able to close the proof using fewer derivatives of the solution than [10]. The proof again, as in [10], is based on a maximal–null foliation of the spacetimes.

The stability of Minkowski space problem for the Einstein–Maxwell system, as studied by Zipser, was returned to by Loizelet [24], this time using the harmonic gauge approach of Lindblad–Rodnianski. The harmonic gauge approach was also used by Speck [34], who considers the Einstein equations coupled to a large class of electromagnetic equations, which are derivable from a Lagrangian and reduce to the Maxwell equations in an appropriate limit. A recent result of LeFloch–Ma [23] on the problem for the Einstein–Klein–Gordon system also uses the harmonic gauge approach (see also [35]).

Finally, there are more global stability results for the Einstein equations with a positive cosmological constant, for example the works of Friedrich [17], Ringström [30] and Rodnianski–Speck [31]. A more comprehensive list can be found in the introduction to the work of Hadžić–Speck [19].

Outline of the Paper

In the next section coordinates are defined on the spacetime to be constructed, and on the mass shell P. The Ricci coefficients and curvature components are introduced along with their governing equations. In Section 3 the schematic form of the quantities and equations is given. Three derivative operators are then introduced which are shown to preserve the schematic form of the equations under commutation. Some facts about the Sasaki metric are recalled in Section 4 and are used to describe certain geometric properties of the mass shell. A precise statement of Theorem 1.2 is given in Section 5, along with the statement of a bootstrap theorem. The proof of the bootstrap theorem is given in the following sections. The main estimates are obtained for the energy momentum tensor components, Weyl curvature components and lower order derivatives of Ricci coefficients in Sections 8, 9 and 10 respectively. The estimates for the Ricci coefficients at the top order are obtained in Section 11. The results of these sections rely on the Sobolev inequalities of Section 6, and the decay estimates for the size of \(\mathrm {supp}(f\vert _{P_x}) \subset P_x\) as x approaches null infinity from Section 7. The fact that the retarded time \(u_f\) can be chosen to have the desired property, stated in Theorem 1.2, is also established in Section 7. Finally, the completion of the proof of Theorem 1.2, through a last slice argument, is outlined in Section 12.

Basic Setup

Throughout this section consider a smooth spacetime \((\mathcal {M},g)\) where \(\mathcal {M} = [u_0,u'] \times [v_0,v') \times S^2\), for some \(u_0 < u' \le u_f\), \(v_0 < v' \le \infty \), is a manifold with corners and g is a smooth Lorentzian metric on \(\mathcal {M}\) such that \((\mathcal {M},g)\), together with a continuous function \(f:P\rightarrow [0,\infty )\), smooth on \(P\smallsetminus Z\), where Z denotes the zero section, satisfy the Einstein–Vlasov system (1)–(2).

Coordinates and Frames

A point in \(\mathcal {M}\) will be denoted \((u,v,\theta ^1,\theta ^2)\). It is implicitly understood that two coordinate charts are required on \(S^2\). The charts will be defined below using two coordinate charts on \(S_{u_0,v_0} = \{u = u_0\} \cap \{ v = v_0\}\). Assume u and v satisfy the Eikonal equation
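In index notation, the eikonal equation for a function on a Lorentzian manifold states that its gradient is null. For u and v it reads, in its standard form:

```latex
\[
  g^{\mu\nu} \, \partial_\mu u \, \partial_\nu u = 0 ,
  \qquad
  g^{\mu\nu} \, \partial_\mu v \, \partial_\nu v = 0 .
\]
% Where du and dv are non-vanishing, the level hypersurfaces \{u = const\}
% and \{v = const\} are therefore null hypersurfaces of (\mathcal{M}, g).
```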

Following [9, 21], define null vector fields

and the function \(\Omega \) by

Let \((\theta ^1,\theta ^2)\) be a coordinate system in some open set \(U_1\) on the initial sphere \(S_{u_0,v_0}\). These functions can be extended to define a coordinate system \((u,v,\theta ^1,\theta ^2)\) on an open subset of the spacetime as follows. Define \(\theta ^1,\theta ^2\) on \(\{ u = u_0\}\) by solving

Then extend to \(u>u_0\) by solving

This defines coordinates \((u,v,\theta ^1,\theta ^2)\) on the region \(D(U_1)\) defined to be the image of \(U_1\) under the diffeomorphisms generated by L on \(\{u=u_0\}\), then by the diffeomorphisms generated by \(\underline{L}\). Coordinates can be defined on another open subset of the spacetime by considering coordinates in another region \(U_2 \subset S_{u_0,v_0}\) and repeating the procedure. These two coordinate charts will cover the entire region of the spacetime in question provided the charts \(U_1,U_2\) cover \(S_{u_0,v_0}\). The choice of coordinates on \(U_1,U_2\) is otherwise arbitrary.

The spheres of constant u and v will be denoted \(S_{u,v}\) and the restriction of g to these spheres will be denoted \(\slashed{g}\). A vector field V on \(\mathcal {M}\) will be called an \(S_{u,v}\) vector field if \(V_x \in T_{x} S_{u(x),v(x)}\) for all \(x \in \mathcal {M}\). Similarly for (r, 0) tensors. A one form \(\xi \) is called an \(S_{u,v}\) one form if \(\xi (L) = \xi (\underline{L}) = 0\). Similarly for (0, s), and for general (r, s) tensors.

In these coordinates the metric takes the form

where b is a vector field tangent to the spheres \(S_{u,v}\), which vanishes on the initial hypersurface \(\{u=u_0\}\). Note that, due to the remaining gauge freedom, \(\Omega \) can be specified on \(\{u=u_0\}\) and \(\{v=v_0\}\). Since, in Theorem 5.1, it is assumed that \(\left| \frac{1}{\Omega ^2} - 1 \right| \) and  are small on \(\{u=u_0\}\), it is convenient to set \(\Omega =1\) on \(\{u=u_0\}\) so that they both vanish.


Integration of a function \(\phi \) on \(S_{u,v}\) is defined as

where \(\tau _1,\tau _2\) is a partition of unity subordinate to \(D_{U_1},D_{U_2}\) at u, v.
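In coordinates, a definition of this kind can be sketched as follows. The symbol \(\slashed{g}\) is assumed here to denote the induced metric on \(S_{u,v}\), and the integrals on the right are over the coordinate images of the two charts; the name \(d\mu _{S_{u,v}}\) for the area measure is also an assumption of this sketch.

```latex
% Assumed notation: \slashed{g} = induced metric on S_{u,v} (needs \usepackage{slashed}).
\[
  \int_{S_{u,v}} \phi \, d\mu_{S_{u,v}}
  = \sum_{i=1,2} \int_{\theta^1} \int_{\theta^2}
    \tau_i \, \phi \, \sqrt{\det \slashed{g}} \; d\theta^1 \, d\theta^2 ,
\]
% where, in the i-th term, \phi, \tau_i and \det \slashed{g} are expressed in
% the coordinates (\theta^1, \theta^2) of the i-th chart.
```

Because \(\tau _1 + \tau _2 = 1\), the value of the sum does not depend on the choice of partition of unity.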

Define the double null frame

and let \((p^{\mu }; \mu = 1,2,3,4)\), denote coordinates on each tangent space to \(\mathcal {M}\) conjugate to this frame, so that the coordinates \((x^{\mu },p^{\mu })\) denote the point

where \(x = (x^{\mu })\). This then gives a frame, \(\{e_{\mu }, \partial _{p^{\mu }} \mid \mu = 1,2,3,4 \}\), on \(T\mathcal {M}\). The Vlasov equation (2) written with respect to this frame takes the form

where \(\Gamma ^{\mu }_{\nu \lambda }\) are the Ricci coefficients of g with respect to the null frame (18). For f as a function on the mass shell P, this reduces to,

where \(\hat{\mu }\) now runs over 1, 2, 4, and \(\overline{p}^1,\overline{p}^2,\overline{p}^4\) denote the restriction of the coordinates \(p^1,p^2,p^4\) to P, and \(\partial _{\overline{p}^{\hat{\mu }}}\) denote the partial derivatives with respect to this restricted coordinate system. Using the mass shell relation (21) below one can easily check,

Note that Greek indices, \(\mu ,\nu ,\lambda \), etc. will always be used to sum over the values 1, 2, 3, 4, whilst capital Latin indices, A, B, C, etc. will be used to denote sums over only the spherical directions 1, 2. In Section 8 lower case Latin indices i, j, k, etc. will be used to denote summations over the values \(1,\ldots ,7\).

Remark 2.1

A seemingly more natural null frame to use on \(\mathcal {M}\) would be

Dafermos–Holzegel–Rodnianski [13] use the same “unnatural” frame for regularity issues on the event horizon. The reason for the choice here is slightly different and is related to the fact that \(\underline{\omega }\), defined below, is zero in this frame.

Null Geodesics and the Mass Shell

Recall that the mass shell \(P \subset T\mathcal {M}\) is defined to be the set of future pointing null vectors. Using the definition of the coordinates \(p^{\mu }\) and the form of the metric given in the previous section one sees that, since all of the particles have zero mass, i.e. since f is supported on P, the relation

is true in the support of f. The identity (21) is known as the mass shell relation.

The mass shell P is a 7 dimensional hypersurface in \(T\mathcal {M}\) and can be parameterised by coordinates \((u,v,\theta ^1,\theta ^2,p^1,p^2,p^4)\), with \(p^3\) defined by (21).
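The form of the mass shell relation can be sketched in any null frame with a standard normalisation. The computation below assumes \(g(e_3,e_4) = -2\), \(g(e_3,e_3) = g(e_4,e_4) = 0\), \(g(e_A,e_3) = g(e_A,e_4) = 0\), and writes \(\slashed{g}_{AB} = g(e_A,e_B)\). These normalisations are assumptions made for illustration and should be checked against the frame (18).

```latex
% p = p^\mu e_\mu with the assumed normalisation g(e_3, e_4) = -2.
\begin{align*}
  g(p,p) &= 2 \, p^3 p^4 \, g(e_3,e_4) + \slashed{g}_{AB} \, p^A p^B
          = -4 \, p^3 p^4 + \slashed{g}_{AB} \, p^A p^B , \\
  g(p,p) = 0 \quad &\Longrightarrow \quad
  p^3 = \frac{\slashed{g}_{AB} \, p^A p^B}{4 \, p^4}
  \qquad (p^4 \neq 0) .
\end{align*}
% In particular p^3 \ge 0 whenever p^4 > 0, and p^3 is small when the angular
% components p^A are small relative to p^4.
```

This makes explicit how \(p^3\) is determined by the remaining coordinates \(p^1,p^2,p^4\) on P.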

To make sense of the integral in the definition of the energy momentum tensor (1) one needs to define a suitable volume form on the mass shell, \(P_x\), over each point \(x\in \mathcal {M} \cap \{u\le u_f\}\). Since \(P_x\) is a null hypersurface it is not immediately clear how to do this. Given such an x, the metric on \(\mathcal {M}\) defines a metric on \(T_x \mathcal {M}\),

which in turn defines a volume form on \(T_x\mathcal {M}\),

A canonical one-form normal to \(P_x\) can be defined as the differential of the function \(\Lambda _x : T_x \mathcal {M} \rightarrow \mathbb {R}\) which measures the length of \(X\in T_x \mathcal {M}\),

Taking the normal \(-\frac{1}{2} d \Lambda _x\) to \(P_x\), the volume form (in the \((u,v,\theta ^1,\theta ^2,p^1,p^2,p^4)\) coordinate system) can be defined as

This is the unique volume form on \(P_x\) compatible with the normal \(-\frac{1}{2} d \Lambda _x\) in the sense that

and if \(\xi \) is another 3-form on \(P_x\) such that

then

See Section 5.6 of [32].
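How a volume form of this type arises can be sketched under the same illustrative normalisation \(g(e_3,e_4) = -2\), with \(\Lambda _x(p) = g(p,p)\) assumed and \(\slashed{g}_{AB} = g(e_A,e_B)\). The orientation convention (the order of the factors in the wedge products) and the constant c are assumptions of this sketch.

```latex
% Assumptions: \Lambda_x(p) = g(p,p) = -4 p^3 p^4 + \slashed{g}_{AB} p^A p^B, and the
% volume form on T_x\mathcal{M} in the coordinates p^\mu is
% 2 \sqrt{\det \slashed{g}} \, dp^1 \wedge dp^2 \wedge dp^3 \wedge dp^4 .
\begin{align*}
  -\tfrac{1}{2} \, d\Lambda_x
    &= 2 p^4 \, dp^3 + 2 p^3 \, dp^4 - \slashed{g}_{AB} \, p^A \, dp^B , \\
  -\tfrac{1}{2} \, d\Lambda_x \wedge
    \left( c \; dp^1 \wedge dp^2 \wedge dp^4 \right)
    &= 2 p^4 c \; dp^1 \wedge dp^2 \wedge dp^3 \wedge dp^4 .
\end{align*}
% Matching with the volume form on T_x\mathcal{M} gives c = \sqrt{\det \slashed{g}} / p^4,
% i.e. a volume form on P_x proportional to
% \frac{\sqrt{\det \slashed{g}}}{p^4} \, dp^1 \wedge dp^2 \wedge dp^4 .
```

Only the \(dp^3\) term of the normal survives the wedge product, which is why the factor \(\frac{1}{p^4}\) appears.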

The energy momentum tensor at \(x\in \mathcal {M}\) therefore takes the form

Ricci Coefficients and Curvature Components

Following the notation of [9] (see also [10, 21]), define the Ricci coefficients

The null second fundamental forms \(\chi , \underline{\chi }\) are decomposed into their trace and trace free parts

Note that due to the choice of frame, since \(e_3\) is an affine geodesic vector field, \(\underline{\omega }:= \frac{1}{2} g(\nabla _{e_3} e_4, e_3) = 0\). Also note that in this frame \(\zeta _A := \frac{1}{2} g(\nabla _{e_A} e_4, e_3) = - \underline{\eta }_A\). The Christoffel symbols of  with respect to the frame \(e_1,e_2\) are denoted


Define also the null Weyl curvature components

HereFootnote 14

is the Weyl, or conformal, curvature tensor of \((\mathcal {M},g)\) and \({}^* W\) denotes the hodge dual of W,

where \(\epsilon \) is the spacetime volume form of \((\mathcal {M},g)\).

Define the \(S_{u,v}\) (0,2)-tensor  to be the restriction of the energy momentum tensor defined in equation (1) to vector fields tangent to the spheres \(S_{u,v}\):


Similarly let  denote the \(S_{u,v}\) 1-forms defined by restricting the 1-forms \(T(e_3, \cdot ), T(e_4, \cdot )\) to vector fields tangent to the spheres \(S_{u,v}\):


Finally, let  denote the functions


The Minkowski Values

For the purpose of renormalising the null structure and Bianchi equations, define the following Minkowski values of the metric quantities using the function \(r:=v-u + r_0\), with \(r_0 >0\) a constant chosen to make sure \(r\ge \inf _{u} \mathrm {Area}(S_{u,v_0})\),

where \(\gamma \) is the round metric on the unit sphere. Similarly, define

and let  denote the spherical Christoffel symbols of the metric  , so that,

where  is the Levi-Civita connection of  . These are the only non-identically vanishing Ricci coefficients in Minkowski space. All curvature components vanish, as do all components of the energy momentum tensor.

Note that the function r in general does not have the geometric interpretation as the area radius of the spheres \(S_{u,v}\). Note also that

in the region \(u_0 \le u \le u_f, v_0 \le v < \infty \), for some constant \(C > 0\).

The Renormalised Null Structure and Bianchi Equations

The Bianchi equations,

written out in full using the table of Ricci coefficients,

take the formFootnote 15

Here for an \(S_{u,v}\) 1-form \(\xi \),  denotes the transpose of the derivative of \(\xi \),


The left Hodge-dual \({}^{*}\) is defined on \(S_{u,v}\) one forms and (0, 2) \(S_{u,v}\) tensors by

respectively. Here  denotes the volume form associated with the metric  and, for a (0, 2) \(S_{u,v}\) tensor \(\xi \),

The symmetric traceless product of two \(S_{u,v}\) one forms is defined by

and the anti-symmetric products are defined by

for two \(S_{u,v}\) one forms and \(S_{u,v}\) (0, 2) tensors respectively. Also,

for \(S_{u,v}\) (0, 2) tensors \(\xi ,\xi '\). The symmetric trace free derivative of an \(S_{u,v}\) 1-form is defined as

Finally define the  inner product of two (0, n) \(S_{u,v}\) tensors


and the norm of a (0, n) \(S_{u,v}\) tensor

The notation \(\vert \cdot \vert \) will also later be used when applied to components of \(S_{u,v}\) tensors to denote the standard absolute value on \(\mathbb {R}\) (see Section 6). It will always be clear from the context which is meant. For example, if \(\xi \) is an \(S_{u,v}\) 1-form then \(\vert \xi \vert \) denotes the \(\slashed{g}\) norm as above, whilst \(\vert \xi _A \vert \) denotes the absolute value of \(\xi (e_A)\).

The null structure equations for the Ricci coefficients and the metric quantities in the 3 direction, suitably renormalised using the Minkowski values, take the form

and in the 4 direction

Through most of the text, when referring to the null structure equations it is the above equations which are meant. The following null structure equations on the spheres will also be used in Section 11,

where K denotes the Gauss curvature of the spheres \((S_{u,v}, \slashed{g})\).

The additional propagation equations for \(\hat{\chi }, \hat{\underline{\chi }}\),

will also be used in Section 11 to derive propagation equations for the mass aspect functions \(\mu , \underline{\mu }\) defined later. Here  is the trace free part of  .

The following first variational formulas for the induced metric on the spheres will also be used,

where \(\mathcal {L}\) denotes the Lie derivative.

There are additional null structure equations but, since they will not be used here, they are omitted.

The Schematic Form of the Equations and Commutation

In this section schematic notation is introduced for the Ricci coefficients, curvature components and components of the energy momentum tensor, which is used to isolate the structure in the equations that is important for the proof of Theorem 1.2. A collection of differential operators is introduced and it is shown that this structure remains present after commuting the equations by any of the operators in the collection. This section closely follows Section 3 of [13] where this notation was introduced.

Schematic Notation

Consider the collection of Ricci coefficientsFootnote 16 which are schematically denoted \(\Gamma \),

Note that the \(\Gamma \) are normalised so that each of the corresponding quantities in Minkowski space is equal to zero. In the proof of the main result it will be shown that each \(\Gamma \) converges to zero as \(r\rightarrow \infty \) in the spacetimes considered. Each \(\Gamma \) will converge with a different rate in r and so, to describe these rates, each \(\Gamma \) is given an index, p, to encode the fact that, as will be shown in the proof of the main result, \(r^p \vert \Gamma _p \vert \) will be uniformly bounded. The p-indices are given as follows,

so that \(\Gamma _1\) schematically denotes any of the quantities  etc. It may be the case, for a particular \(\Gamma _p\), that \(\lim _{r\rightarrow \infty } r^p \vert \Gamma _p \vert \) is always zero in each of the spacetimes which are constructed here. This means that some of the Ricci coefficients will decay with faster rates than those propagated in the proof of Theorem 1.2. Some of these faster rates can be recovered a posteriori.

The notation \(\overset{(3)}{\Gamma }\) will be used to schematically denote any \(\Gamma \) for which the corresponding null structure equation of (39)–(48) it satisfies is in the \(\slashed{\nabla }_3\) direction,

Similarly, \(\overset{(4)}{\Gamma }\) will schematically denote any \(\Gamma \) for which the corresponding null structure equation of (39)–(48) is in the \(\slashed{\nabla }_4\) direction,

Finally, \(\overset{(3)}{\Gamma }_p\) will schematically denote any \(\Gamma _p\) which has also been denoted \(\overset{(3)}{\Gamma }\). So, for example, \(\hat{\underline{\chi }}\) may be schematically denoted \(\overset{(3)}{\Gamma }_1\). Similarly, \(\overset{(4)}{\Gamma }_p\) will schematically denote any \(\Gamma _p\) which has also been denoted \(\overset{(4)}{\Gamma }\).

Consider now the collection of Weyl curvature components, which are schematically denoted \(\psi \),

Each \(\psi \) is similarly given a p-index,

to encode the fact that, as again will be shown, \(r^p\vert \psi _p\vert \) is uniformly bounded in each of the spacetimes which are constructed.

When deriving energy estimates for the Bianchi equations in Section 9, a special divergence structure present in the terms involving angular derivatives is exploited. For example, the \(\slashed{\nabla }_3 \alpha \) equation is contracted with \(\alpha \) (multiplied by a suitable weight) and integrated by parts over spacetime. The \(\slashed{\nabla }_4 \beta \) equation is similarly contracted with \(\beta \) and integrated by parts. When the two resulting identities are summed, a cancellation occurs in the terms involving angular derivatives, leaving only a spherical divergence which vanishes due to the integration on the spheres. The \(\slashed{\nabla }_3 \alpha \) equation is thus paired with the \(\slashed{\nabla }_4 \beta \) equation. To highlight this structure, consider the ordered pairs,

Each of these ordered pairs will be schematically denoted \((\uppsi _p, \uppsi _{p'}')\), with the subscripts p and \(p'\) as in (56), and referred to as a Bianchi pair.
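The cancellation behind the pairing can be illustrated by a model computation for the pair \((\alpha , \beta )\). The sketch below is not the precise identity used in Section 9. It assumes that the principal parts of the two equations have the standard form shown (coefficients and signs should be checked against (29)–(38)), that \(\alpha \) is symmetric and trace free, and that \((\slashed{\nabla } \hat{\otimes } \beta )_{AB} = \slashed{\nabla }_A \beta _B + \slashed{\nabla }_B \beta _A - \slashed{g}_{AB} \slashed{\mathrm {div}} \beta \). Weights and lower order terms are suppressed and written "l.o.t.". The macro \slashed is assumed to come from the slashed package.

```latex
% Model principal parts (assumed form; weights and lower order terms suppressed).
\begin{align*}
  \slashed{\nabla}_3 \alpha &= \slashed{\nabla} \hat{\otimes} \beta + \text{l.o.t.}, &
  \slashed{\nabla}_4 \beta  &= \slashed{\mathrm{div}}\, \alpha + \text{l.o.t.}
\end{align*}
% Contract the first equation with alpha and the second with 2 beta.
% Since alpha is symmetric and trace free,
%   alpha^{AB} (\nabla \hat{\otimes} \beta)_{AB} = 2 alpha^{AB} \nabla_A \beta_B.
\begin{align*}
  \tfrac{1}{2} \slashed{\nabla}_3 \vert \alpha \vert^2 + \slashed{\nabla}_4 \vert \beta \vert^2
    &= 2 \alpha^{AB} \slashed{\nabla}_A \beta_B
       + 2 \beta^{B} \slashed{\nabla}^{A} \alpha_{AB} + \text{l.o.t.} \\
    &= 2 \slashed{\nabla}^{A} \big( \alpha_{AB} \beta^{B} \big) + \text{l.o.t.}
\end{align*}
% The last line is a pure divergence on S_{u,v}; its integral over each sphere vanishes.
```

In this model the angular derivative terms combine into a spherical divergence only after the two contracted equations are summed, which is why the equations are grouped in pairs rather than treated individually.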

The components of the energy momentum tensor are schematically denoted \(\mathcal {T}\),

and each \(\mathcal {T}\) is similarly given a p-index,

to encode the fact that \(r^p \vert \mathcal {T}_p \vert \) will be shown to be uniformly bounded.

Finally, for a given \(p\in \mathbb {R}\), let \(h_p\) denote any smooth function \(h_p : \mathcal {M} \rightarrow \mathbb {R}\), depending only on r, which behaves like \(\frac{1}{r^p}\) to infinite order, i.e. any function such that, for any \(k\in \mathbb {N}_0\), there is a constant \(C_k\) such that \(r^{k+p} \vert (\partial _v)^k h_p \vert \le C_k\), where the derivative is taken in the \((u,v,\theta ^1,\theta ^2)\) coordinate system. In addition, the tensor field  may also be denoted \(h_p\). Note that  . For example,

The Schematic Form of the Equations

Using the notation of the previous section, the null structure and Bianchi equations can be rewritten in schematic form. For example the null structure equation (40) can be rewritten,

Here and in the following, \(\Gamma _{p_1} \cdot \Gamma _{p_2}\) denotes (a constant multiple of) an arbitrary contraction between a \(\Gamma _{p_1}\) and a \(\Gamma _{p_2}\). In the estimates later, the Cauchy–Schwarz inequality \(\vert \Gamma _{p_1} \cdot \Gamma _{p_2} \vert \le C \vert \Gamma _{p_1}\vert \vert \Gamma _{p_2} \vert \) will always be used and so the precise form of the contraction will be irrelevant. Similarly for \(h_{p_1} \Gamma _{p_2}\).
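The way the p-indices add under products can be made explicit. In the sketch below, \(C_{p_1}\) and \(C_{p_2}\) are hypothetical names for the uniform bounds on \(r^{p_1} \vert \Gamma _{p_1} \vert \) and \(r^{p_2} \vert \Gamma _{p_2} \vert \), and \(C_0\) is the constant from the definition of \(h_{p_1}\) with \(k = 0\).

```latex
% Products of schematic quantities: the p-indices add.
\[
  r^{p_1 + p_2} \vert \Gamma_{p_1} \cdot \Gamma_{p_2} \vert
    \le C \big( r^{p_1} \vert \Gamma_{p_1} \vert \big) \big( r^{p_2} \vert \Gamma_{p_2} \vert \big)
    \le C \, C_{p_1} C_{p_2},
  \qquad
  r^{p_1 + p_2} \vert h_{p_1} \Gamma_{p_2} \vert
    \le C \big( r^{p_1} \vert h_{p_1} \vert \big) \big( r^{p_2} \vert \Gamma_{p_2} \vert \big)
    \le C \, C_0 \, C_{p_2}.
\]
```

So a term written \(\Gamma _{p_1} \cdot \Gamma _{p_2}\) or \(h_{p_1} \Gamma _{p_2}\) can be treated, for the purpose of counting decay, as a quantity with p-index \(p_1 + p_2\).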

Rewriting the equations in this way allows one to immediately read off the rate of decay in r of the right hand side. In the above example one sees that the left hand side is equal to a combination of terms whose overall decay is, according to the p-index notation, like \(\frac{1}{r^2}\), consistent with the fact that applying \(\slashed{\nabla }_3\) to a Ricci coefficient does not alter its r decay (see Section 3.3). Each of the null structure equations can be expressed in this way.

Proposition 3.1

(cf. Proposition 3.1 of [13]). The null structure equations (39)–(48) can be written in the following schematic form

whereFootnote 17

This proposition shows that the right hand sides of the \(\slashed{\nabla }_3 \overset{(3)}{\Gamma }_p\) equations behave like \(\frac{1}{r^p}\), whilst the right hand sides of the \(\slashed{\nabla }_4 \overset{(4)}{\Gamma }_p\) equations behave like \(\frac{1}{r^{p+2}}\). This structure will be heavily exploited and should be seen as a manifestation of the null condition present in the Einstein equations.

Remark 3.2

The term \(\frac{p}{2} \mathrm {tr}\chi \ \overset{(4)}{\Gamma }_p\) on the left hand side of equation (58) is not contained in the error since \(\mathrm {tr}\chi \) behaves like \(\frac{1}{r}\) and so this term only behaves like \(\frac{1}{r^{p+1}}\). This would thus destroy the structure of the error. It is not a problem that this term appears however since, when doing the estimates, the following renormalised form of the equation will always be used:

This can be derived by differentiating the left hand side using the product rule, substituting equation (58) and using the fact that \((\mathrm {tr}\chi _{\circ } - \mathrm {tr}\chi ) \overset{(4)}{\Gamma }_p\) can be absorbed into the error.
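The steps just described can be written out as follows. This is a sketch under stated assumptions: that \(e_4(r) = 1\) and \(\mathrm {tr}\chi _{\circ } = \frac{2}{r}\) (a normalisation consistent with \(\mathrm {tr}\chi \) behaving like \(\frac{1}{r}\)), and that equation (58) has the form \(\slashed{\nabla }_4 \overset{(4)}{\Gamma }_p + \frac{p}{2} \mathrm {tr}\chi \overset{(4)}{\Gamma }_p = E_4\), where \(E_4\) is a placeholder name for its error term. The macros \slashed and \overset are assumed to come from the slashed and amsmath packages.

```latex
% Assumptions: e_4(r) = 1 and tr chi_circ = 2/r, so that
%   e_4(r^p) = p r^{p-1} = (p/2) tr chi_circ r^p.
\begin{align*}
  \slashed{\nabla}_4 \big( r^p \overset{(4)}{\Gamma}_p \big)
    &= r^p \Big( \slashed{\nabla}_4 \overset{(4)}{\Gamma}_p
         + \tfrac{p}{2} \mathrm{tr}\chi_{\circ} \overset{(4)}{\Gamma}_p \Big)
       && \text{(product rule)} \\
    &= r^p \Big( \slashed{\nabla}_4 \overset{(4)}{\Gamma}_p
         + \tfrac{p}{2} \mathrm{tr}\chi \, \overset{(4)}{\Gamma}_p \Big)
       + \tfrac{p}{2} r^p \big( \mathrm{tr}\chi_{\circ} - \mathrm{tr}\chi \big) \overset{(4)}{\Gamma}_p \\
    &= r^p \Big( E_4
         + \tfrac{p}{2} \big( \mathrm{tr}\chi_{\circ} - \mathrm{tr}\chi \big) \overset{(4)}{\Gamma}_p \Big)
       && \text{(equation (58))}.
\end{align*}
% The last bracket is again an error term once the difference
% (tr chi_circ - tr chi) Gamma_p is absorbed into E_4.
```

Since the error behaves like \(\frac{1}{r^{p+2}}\), the right hand side of the renormalised equation behaves like \(\frac{1}{r^2}\), which is integrable in v provided r grows linearly in v.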

It is not a coincidence that the coefficient of this term is always \(\frac{p}{2}\): it is the value of this coefficient which decides the rate of decay to be propagated for each \(\overset{(4)}{\Gamma }_p\). This will be elaborated on further in Section 10. This was not the case in [13]; they have more freedom since they are integrating backwards from null infinity, rather than towards null infinity, and so can propagate stronger decay rates for some of the \(\overset{(4)}{\Gamma }_p\). These stronger rates could be recovered here using the ideas in Section 11; however, it is perhaps interesting to note that the estimates can be closed with these weaker rates.

The Bianchi equations can also be rewritten in this way.

Proposition 3.3

(cf. Proposition 3.3 of [13]). For each Bianchi pair \((\uppsi _p, \uppsi _{p'}')\), the Bianchi equations (29)–(38) can be written in the following schematic form

where \(\slashed{\mathcal {D}}\) denotes the angular operator appearing in the equation for the particular curvature component under considerationFootnote 18 and \(\gamma [\uppsi _{p'}'] = \frac{p'}{2}\) for \(\uppsi _{p'}' \ne \beta \), \(\gamma [\beta ] = 2\). The error terms take the form

where \(\mathfrak {D}\) is used to denote certain derivative operators which are introduced in Section 3.3.

When applied to \(\mathcal {T}_p\), the operators \(\mathfrak {D}\) should not alter the rate of decay, so again this schematic form allows one to easily read off the r decay rates of the errors. This structure of the errors will again be heavily exploited. The first summation in \(E_4[\uppsi _{p'}']\) can in fact always begin at \(p'+2\), except in \(E_4[\beta ]\), where the term \(\eta ^{\#} \cdot \alpha \) appears. Also the terms,

in \(E_3[\uppsi _{p}]\) can be upgraded to,

in \(E_3[\alpha ]\) and \(E_3[\beta ]\). These points are important and will be returned to in Section 9.

The Commuted Equations

As discussed in the introduction, the Ricci coefficients and curvature components will be estimated in \(L^2\) using the null structure and Bianchi equations respectivelyFootnote 19. In order to deal with the nonlinearities, some of the error terms are estimated in \(L^{\infty }\) on the spheres. These \(L^{\infty }\) bounds are obtained from \(L^2\) estimates for higher order derivatives via Sobolev inequalities. These higher order \(L^2\) estimates are obtained by commuting the null structure and Bianchi equations with suitable differential operators, showing that the structure of the equations is preserved, and then proceeding as for the zeroth order case. It is shown in this section that the structure of the equations is preserved under commutation.

It is also necessary to obtain higher order estimates for components of the energy momentum tensor in order to close the estimates for the Bianchi and null structure equations. Rather than commuting the Vlasov equation, which leads to certain difficulties, these estimates are obtained by estimating components of certain Jacobi fields on the mass shell. See Section 8.

Define the set of differential operators \(\{ \slashed{\nabla }_3, r\slashed{\nabla }_4, r\slashed{\nabla } \}\) acting on covariant \(S_{u,v}\) tensors of any orderFootnote 20, and let \(\mathfrak {D}\) denote an arbitrary element of this set. These operators are introduced because of the Commutation Principle of [13]:

Commutation Principle: Applying any of the operators \(\mathfrak {D}\) to any of the \(\Gamma , \psi , \mathcal {T}\) should not alter its rate of decay.

This will be shown to hold in \(L^2\), though until then it serves as a useful guide to interpret the structure of the commuted equations.
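As a heuristic illustration for scalar functions only, the principle can be checked directly on the model functions \(h_p\) using the bounds \(r^{k+p} \vert (\partial _v)^k h_p \vert \le C_k\) from their definition. The sketch assumes that \(\partial _v r = 1\) (as would hold if r differs from \(v - u\) by a constant) and uses \(r \partial _v\) as a stand-in for a weighted derivative in the outgoing direction. Neither assumption is taken from this section.

```latex
% Heuristic check on a scalar model function h_p, assuming \partial_v r = 1.
\begin{align*}
  \vert r \partial_v h_p \vert
    &\le r \cdot C_1 r^{-(p+1)} = C_1 r^{-p}, \\
  \vert (r \partial_v)^2 h_p \vert
    &= \vert r \, \partial_v h_p + r^2 \partial_v^2 h_p \vert
     \le (C_1 + C_2) \, r^{-p}.
\end{align*}
% Each application of r \partial_v returns a function with the same p-index.
```

An unweighted \(\partial _v\) derivative gains a power of r, so the weight r is what keeps the p-index fixed. This matches the intended reading of the principle, that applying \(\mathfrak {D}\) leaves the rate of decay unchanged.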

If \(\xi \) is an \(S_{u,v}\) tensor field, \(\mathfrak {D}^k \xi \) will be schematically used to denote any fixed k-tuple \(\mathfrak {D}_k\mathfrak {D}_{k-1} \ldots \mathfrak {D}_1 \xi \) of operators applied to \(\xi \), where each \(\mathfrak {D}_i \in \{ \slashed{\nabla }_3, r\slashed{\nabla }_4, r\slashed{\nabla } \}\).

In order to derive expressions for the commuted Bianchi equations in this schematic notation, the following commutation lemma will be used. Recall first the following lemma which relates projected covariant derivatives of a covariant \(S_{u,v}\) tensor to derivatives of its components.

Lemma 3.4

Let \(\xi \) be a (0, k) \(S_{u,v}\) tensor. Then,

and

The commutation lemma then takes the following form.

Lemma 3.5

(cf. Lemma 7.3.3 of [10] or Lemma 3.1 of [13]). If \(\xi \) is a (0, k) \(S_{u,v}\) tensor then,

and

where K is the Gauss curvature of \((S_{u,v}, \slashed{g})\).