Abstract

Fuzzy logic-based systems are nowadays commonly used for nonlinear function approximation when data are available. Their main advantage is that the resulting rules are interpretable. Nevertheless, when the data are noisy, overfitting may occur, which leads to poor accuracy and generalization ability. Prior information about the nonlinear function may improve fuzzy system performance. In this paper, the case when the function is monotonic with respect to some or all variables is considered. Sufficient conditions for the monotonicity of first-order Takagi–Sugeno fuzzy systems with raised cosine membership functions are derived. The performance of the proposed fuzzy system is tested on two benchmark datasets.


1 Introduction

For a few decades, fuzzy logic-based systems have remained a favorite tool for nonlinear regression. The main advantage of this universal approximator is the possibility of including linguistically represented knowledge about the object and, vice versa, of characterizing the identified system in words, which enhances the interpretability of the obtained model.

Unfortunately, not all prior knowledge about the system can be easily expressed in the form of If-Then rules [1]. One kind of knowledge that is often encountered in real-world applications is information about the monotonicity of the corresponding mapping. Such information can be of different origins. In nonlinear dynamical systems, it may result from physical laws describing their behaviour, e.g., the level of a liquid in a tank increases with the inlet flow. Very often monotonicity reflects natural human behaviour, e.g., the price of a flat increases with its area. Last but not least, monotonicity appears as a consequence of human observations, e.g., the risk of many diseases increases with the mass and the age of the patient.

Many papers show that incorporating prior information about monotonicity into regression or classification is beneficial. In [1, 2] it is demonstrated that preserving monotonicity suppresses the effect of noise and prevents the model from overfitting. Using information about monotonicity may increase the generalization ability of fuzzy systems, especially in the regions where the obtained data are sparse [3]. In classification problems the results of monotonic procedures are more reasonable and more interpretable [4, 5].

Ways of including the requirement of monotonicity have been considered for most machine learning-based techniques. Monotonic support vector regression (SVR) and support vector machines (SVM) were investigated in [6, 7], respectively. Pelckmans et al. [8] used duality to include monotonicity conditions in kernel regression. Properties of monotonic classification of evolutionary algorithms are examined in [9], nearest neighbour in [10] and decision trees in [11, 12] with their fusion in [13, 14]. Basic results on the monotonicity of multilayer perceptrons were stated in [15] and [16]. The applications include nonlinear dynamic system identification and control [17, 18], credit risk rating [19, 20], consumer loan evaluation [21], predicting Alzheimer’s disease progression [22], manufacturing [23] or breast cancer prediction [24] and its detection on mammograms [25].

In the case of fuzzy systems, monotonicity was first studied in [1] where Mamdani-type fuzzy systems with piecewise linear membership functions were considered. First results on monotonic Takagi–Sugeno fuzzy systems with differentiable membership functions and membership functions that are not differentiable in a finite number of points were presented by Won et al. [26] and further discussed in [27] and applied for least square identification in [28]. Since then some more results have been achieved on different inference engines [29], fuzzy-inference methods [30], defuzzification methods [2, 31], hierarchical fuzzy systems [32], fuzzy decision trees [14] or type-2 fuzzy systems [33, 34]. Monotonicity conditions for fuzzy systems with ellipsoidal antecedents were presented in [35]. Deng et al. [36] handle monotonicity of TS fuzzy systems for classification on a set of virtual samples expressed as constraints on related optimization tasks. Applications of monotonic fuzzy systems can be found in the detection of failures [37], decision making [38], thermal comfort prediction [39], assessing material recyclability [40] and classification [9, 41, 42].

Unfortunately, there are significant drawbacks when considering Takagi–Sugeno fuzzy systems for regression with the most commonly used membership functions [43, 44]. Triangular or trapezoidal membership functions produce a non-smooth output, whereas monotonicity conditions for Gaussian membership functions [26] are very conservative due to their unbounded support. Moreover, the latter conditions are applicable to membership functions with the same width only. These facts have led to increasing interest in smooth membership functions with compact support when a smooth monotone output is required [35]. Advantages of smooth fuzzy models have been shown in [45, 46].

In this paper, first-order Takagi–Sugeno fuzzy systems with raised cosine membership functions are investigated. Even though they are not commonly used within the fuzzy community, a big advantage of raised cosine functions is that they are infinitely differentiable and therefore constitute a smooth fuzzy system. The derived sufficient monotonicity conditions are quite intuitive and make it possible to use efficient and reliable numerical algorithms for solving the related optimization problems. The performance of the proposed smooth monotone fuzzy system will be compared with other monotone and non-monotone artificial intelligence-based regressors on a few commonly used benchmark datasets.

2 Problem Formulation

2.1 First-Order Takagi–Sugeno Fuzzy System

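Let us consider a first-order Takagi–Sugeno type fuzzy system [47] where \(M_j\) fuzzy sets \(A_j^1,A_j^2,\ldots ,A_j^{M_j}\) characterize each input \(x_j, j=1,\ldots ,n\). The corresponding \(M = \prod _{j=1}^n M_j\) rules covering the whole input space are in the form

\[R_\textbf{k}: \text{IF } x_1 \text{ is } A_1^{k_1} \text{ AND } \ldots \text{ AND } x_n \text{ is } A_n^{k_n} \text{ THEN } y^\textbf{k} = x^\text{T} a^\textbf{k} + a^\textbf{k}_{0}\]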

where \(x = [x_1,\ldots , x_n]^\text{T} \in U = [U_1 \times U_2 \times \cdots \times U_n], U_j = [\underline{u}_j,\overline{u}_j]\) is the input vector, y is the scalar output, \(a^\textbf{k} = [a^\textbf{k}_{1},\ldots ,a^\textbf{k}_{n}]^\text{T} \in \Re ^n\) and \(a^\textbf{k}_{0} \in \Re \) are the parameters of the linear submodels and \(\textbf{k} = (k_1,k_2,\ldots ,k_n) \in K \subset N_0^n, 1 \le k_j \le M_j\) is the multi-index. Using the product inference engine and center of gravity defuzzification the total output of the fuzzy model is determined by

\[F(x) = \frac{\sum _{\textbf{k} \in K} \left( \prod _{j=1}^{n} \mu _j^{k_j}(x_j) \right) \left( x^\text{T} a^\textbf{k} + a^\textbf{k}_{0} \right) }{\sum _{\textbf{k} \in K} \prod _{j=1}^{n} \mu _j^{k_j}(x_j)} \quad (1)\]

where \(\mu _j^{k_j}(x_j)\) are the membership functions characterizing input fuzzy sets \(A_j^{k_j},\, j=1,\dots ,n\).

2.2 Raised Cosine Membership Function

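The raised cosine membership functions \(\mu _j^{k_j}(x_j)\) investigated in this paper are defined by

\[\mu _j^{k_j}(x_j) = \begin{cases} \frac{1}{2}\left( 1 + \cos z \right) , & -\pi \le z \le \pi ,\\ 0, & \text{otherwise,} \end{cases} \quad (2)\]

where \(z = \pi \frac{x_j-c}{\sigma }\), \(c\) is the center and \(\sigma > 0\) is the half-width of the support of the fuzzy set, see Fig. 1.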
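The two definitions above can be illustrated with a minimal Python sketch. It assumes the standard raised cosine form, \(\frac{1}{2}(1+\cos z)\) on \(|z| \le \pi \) and zero elsewhere, and the usual weighted-average form of the Takagi–Sugeno output; the function names, the argument layout and the dictionary keyed by multi-index are illustrative choices, not part of the paper.

```python
import math
from itertools import product


def raised_cosine(x, c, sigma):
    """Assumed raised cosine membership: 0.5*(1+cos z) for |z| <= pi, else 0."""
    z = math.pi * (x - c) / sigma
    if abs(z) > math.pi:
        return 0.0
    return 0.5 * (1.0 + math.cos(z))


def ts_output(x, centers, widths, a, a0):
    """First-order TS output with product inference and weighted averaging.

    centers[j][k], widths[j][k]: center and half-width of fuzzy set k on input j.
    a[k], a0[k]: slope vector and offset of the rule with multi-index k (a tuple).
    """
    n = len(x)
    num = 0.0
    den = 0.0
    # Enumerate all multi-indices k = (k_1, ..., k_n), here zero-based.
    for k in product(*(range(len(centers[j])) for j in range(n))):
        w = 1.0
        for j in range(n):
            w *= raised_cosine(x[j], centers[j][k[j]], widths[j][k[j]])
        y_k = a0[k] + sum(a[k][j] * x[j] for j in range(n))
        num += w * y_k
        den += w
    if den == 0.0:
        raise ValueError("no rule fires at x; the fuzzy sets do not cover it")
    return num / den


# One input, two overlapping fuzzy sets, submodels y = x and y = 2x.
centers = [[0.0, 1.0]]
widths = [[1.0, 1.0]]
a = {(0,): [1.0], (1,): [2.0]}
a0 = {(0,): 0.0, (1,): 0.0}
print(ts_output([0.5], centers, widths, a, a0))  # approximately 0.75
```

At \(x = 0.5\) both rules fire with equal strength, so the output is the average of the two submodel values 0.5 and 1.0.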

2.3 Monotone Fuzzy System

For a monotone fuzzy system, its output is monotonic with respect to its input. For multi-input fuzzy systems investigated in this paper, the definition used in [26] is adopted.

Definition 1

(Monotonicity of fuzzy system, [26]). The mapping \(F: U \rightarrow \Re \) defined by (1) is said to be monotonically nondecreasing with respect to \(x_i\) if and only if \(x_i^1 < x_i^2\) implies

\[F(x_1,\ldots ,x_{i-1},x_i^1,x_{i+1},\ldots ,x_n) \le F(x_1,\ldots ,x_{i-1},x_i^2,x_{i+1},\ldots ,x_n)\]

for any \([x_1,\ldots ,x_{i-1},x_{i+1},\ldots ,x_n]\) such that \([x_1,\ldots ,x_{i-1},x_i^1,x_{i+1},\ldots ,x_n]^{\text{T}}\), \([x_1,\ldots ,x_{i-1},x_i^2,x_{i+1},\ldots ,x_n]^{\text{T}} \in U\). A non-increasing mapping is defined analogously.

3 Monotonicity Conditions

Since the mapping (1) with membership functions defined by (2) is differentiable at any \(x \in U\), it is monotonically non-decreasing with respect to \(x_i\), \(i=1,\ldots ,n\), if and only if the partial derivative \(\frac{\partial F}{\partial x_i} \ge 0\) for all \(x \in U\). The partial derivative can be expressed as

for all multi-indices \(\textbf{p} < \textbf{q}, \textbf{p}, \textbf{q} \in K,\) where the inequality \(\textbf{p} < \textbf{q}\) stands for \(\textbf{p} = [k_1,\ldots ,k_{i-1},p,k_{i+1},\ldots ,k_n]\), \(\textbf{q} = [k_1,\ldots ,k_{i-1},q,k_{i+1},\ldots ,k_n]\) such that \(1 \le p < q \le M_i\) (i.e. for all combinations of \((k_1,\ldots ,k_{i-1},k_{i+1},\ldots ,k_n),\) where \(k_j \in [1,M_j]\)).

Therefore the mapping (1) is non-decreasing with respect to \(x_i\) if

1. \(a^\textbf{k}_{i} > 0 \,\, \forall \textbf{k} \in K\),

2. \(x^\text{T} a^\textbf{p} + a^\textbf{p}_{0} - x^\text{T} a^\textbf{q} - a^\textbf{q}_{0} \le 0, \textbf{p} < \textbf{q}\),

3. \(\frac{d\mu _i^p (x_i)}{dx_i}\mu _i^q (x_i) - \frac{d\mu _i^q (x_i)}{dx_i}\mu _i^p (x_i) \le 0 \, \forall x_i \in U_i\) and \(1 \le p < q \le M_i\).
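The structure of the three conditions can be traced back to the quotient rule. The following is a sketch rather than the paper's own derivation: the shorthand \(w_{\mathbf{k}}\) for the firing strength and \(y_{\mathbf{k}}\) for the submodel output is introduced here for illustration, and the pairing of rules used in the original expression may differ.

```latex
\[
\begin{aligned}
w_{\mathbf{k}}(x) &= \prod_{j=1}^{n} \mu_j^{k_j}(x_j), \qquad
y_{\mathbf{k}}(x) = x^{\mathrm{T}} a^{\mathbf{k}} + a_0^{\mathbf{k}}, \qquad
F(x) = \frac{\sum_{\mathbf{k}} w_{\mathbf{k}}\, y_{\mathbf{k}}}{\sum_{\mathbf{k}} w_{\mathbf{k}}}, \\[4pt]
\frac{\partial F}{\partial x_i}
&= \frac{\sum_{\mathbf{k}} w_{\mathbf{k}}\, a_i^{\mathbf{k}}}{\sum_{\mathbf{k}} w_{\mathbf{k}}}
 + \frac{\sum_{\mathbf{k}} \sum_{\mathbf{l}}
     \frac{\partial w_{\mathbf{k}}}{\partial x_i}\, w_{\mathbf{l}}\,
     \left( y_{\mathbf{k}} - y_{\mathbf{l}} \right)}
   {\left( \sum_{\mathbf{k}} w_{\mathbf{k}} \right)^2} \\[4pt]
&= \frac{\sum_{\mathbf{k}} w_{\mathbf{k}}\, a_i^{\mathbf{k}}}{\sum_{\mathbf{k}} w_{\mathbf{k}}}
 + \frac{1}{2}\,
   \frac{\sum_{\mathbf{k}} \sum_{\mathbf{l}}
     \left( \frac{\partial w_{\mathbf{k}}}{\partial x_i}\, w_{\mathbf{l}}
          - \frac{\partial w_{\mathbf{l}}}{\partial x_i}\, w_{\mathbf{k}} \right)
     \left( y_{\mathbf{k}} - y_{\mathbf{l}} \right)}
   {\left( \sum_{\mathbf{k}} w_{\mathbf{k}} \right)^2}, \\[4pt]
\frac{\partial w_{\mathbf{k}}}{\partial x_i}\, w_{\mathbf{l}}
 - \frac{\partial w_{\mathbf{l}}}{\partial x_i}\, w_{\mathbf{k}}
&= \left( \frac{d\mu_i^{k_i}}{dx_i}\, \mu_i^{l_i}
        - \frac{d\mu_i^{l_i}}{dx_i}\, \mu_i^{k_i} \right)
   \prod_{j \ne i} \mu_j^{k_j}\, \mu_j^{l_j}.
\end{aligned}
\]
```

Since membership values are non-negative, the first term is non-negative when all \(a_i^{\mathbf{k}} \ge 0\), which is the role of condition 1. A summand of the second term vanishes when \(k_i = l_i\) and is non-negative when the bracketed membership factor and \(y_{\mathbf{k}} - y_{\mathbf{l}}\) are both non-positive, which is what conditions 3 and 2 ask for.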

Here one can see the fundamental advantage of membership functions with compact support. Conditions 2. and 3. need to be satisfied only for those \(\textbf{p} < \textbf{q}\) for which the corresponding rules fire simultaneously. Therefore they are much less restrictive than, e.g., in the case of Gaussian or sigmoidal membership functions, where those conditions need to be satisfied for all pairs \(\textbf{p},\textbf{q} \in K\).

Since the rules \(R_\textbf{p}\) and \(R_\textbf{q}\) are active at the same time if and only if \(c_i^q - \sigma _i^q < c_i^p + \sigma _i^p\), condition 2. needs to be met on the domain \(U^\textbf{pq} = \prod _{i=1}^{n} [c_i^q - \sigma _i^q,\,c_i^p + \sigma _i^p]\), which corresponds to \(2^n\) linear constraints on the coefficients \(a^\textbf{p}, a^\textbf{q}\) for each \(\textbf{p} < \textbf{q}\), generated by all combinations of lower and upper bounds of those intervals. Denote the parameter vector

Then those constraints can be expressed as
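The reduction of condition 2. to \(2^n\) vertex constraints can be sketched in Python as follows. The left-hand side of condition 2. is affine in \(x\), so its maximum over a box is attained at a vertex and checking the vertices is enough. The helper names and the row layout \([x_1,\ldots ,x_n,1]\) are assumptions made for this illustration and do not reproduce the paper's constraint matrix.

```python
from itertools import product


def vertex_rows(lower, upper):
    """Return one row [x_1, ..., x_n, 1] per vertex of the box (2^n rows)."""
    rows = []
    for vertex in product(*zip(lower, upper)):
        rows.append(list(vertex) + [1.0])
    return rows


def condition2_holds(rows, a_p, a0_p, a_q, a0_q, tol=1e-12):
    """Check x^T a_p + a0_p - x^T a_q - a0_q <= 0 at every vertex row."""
    diff = [ap - aq for ap, aq in zip(a_p, a_q)] + [a0_p - a0_q]
    return all(sum(r * d for r, d in zip(row, diff)) <= tol for row in rows)


# Overlap box [0, 1] x [0, 2] of two rules p < q (illustrative numbers).
rows = vertex_rows([0.0, 0.0], [1.0, 2.0])
print(len(rows))  # 4
print(condition2_holds(rows, [1.0, 1.0], 0.0, [2.0, 2.0], 0.0))  # True
```

In an identification procedure the same rows would be used as linear inequality constraints on the unknown coefficients rather than as an after-the-fact check.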

Fulfillment of condition 3. is guaranteed by the following theorem.

Theorem 1

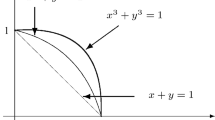

Membership functions (2) satisfy condition 3. if \(c_i^p - \sigma _i^p \le c_i^q - \sigma _i^q\) and \(c_i^p + \sigma _i^p \le c_i^q + \sigma _i^q\) for all \(1 \le p < q \le M_i\).

Proof

For the sake of simplicity, in what follows the index i is omitted. The condition is clearly satisfied whenever \(\mu ^p(x) = 0\) or \(\mu ^q(x) = 0\) and for \(x \in [c^p,c^q],\) where \(\frac{d\mu ^p(x)}{dx} \le 0\) and \(\frac{d\mu ^q(x)}{dx} \ge 0\). In the region where both membership functions are active simultaneously \((x \in U^{pq} = (c^q - \sigma ^q,\, c^p + \sigma ^p))\) the condition 3. yields

with \(z_p = \pi \frac{x-c^p}{\sigma ^p}\) and \(z_q = \pi \frac{x-c^q}{\sigma ^q}\).

Let us first check it for \(x \in (c^q - \sigma ^q,c^p),\) where \(z_p,\,z_q \in (-\pi ,\,0)\) and \(z_p > z_q\). In that interval inequality (7) is equivalent to

that can be rewritten as

Since \(z_p > z_q\) and function tangent is monotonically increasing for \(z_p, z_q \in (-\pi ,\,0)\) inequality (8) is satisfied whenever \(\sigma ^p \ge \sigma ^q\).
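The step from condition 3. to a tangent inequality can be sketched as follows. This is a reconstruction under the assumption that the membership function has the standard raised cosine form \(\mu = \frac{1}{2}(1+\cos z)\) on the support; it is not claimed to coincide with the paper's intermediate expressions or with its function f(x).

```latex
\[
\begin{aligned}
\frac{d\mu}{dx} &= -\frac{\pi}{2\sigma}\,\sin z, \\[4pt]
\frac{d\mu^p}{dx}\,\mu^q - \frac{d\mu^q}{dx}\,\mu^p \le 0
\;&\Longleftrightarrow\;
\frac{1}{\sigma^q}\,\sin z_q\,(1+\cos z_p)
 - \frac{1}{\sigma^p}\,\sin z_p\,(1+\cos z_q) \le 0 \\[4pt]
&\Longleftrightarrow\;
\sigma^p \tan\frac{z_q}{2} \;\le\; \sigma^q \tan\frac{z_p}{2},
\qquad z_p, z_q \in (-\pi,\pi),
\end{aligned}
\]
```

The last step divides by \((1+\cos z_p)(1+\cos z_q) > 0\) and uses \(\frac{\sin z}{1+\cos z} = \tan \frac{z}{2}\). For \(z_q < z_p < 0\) both tangents are negative with \(\tan \frac{z_q}{2} \le \tan \frac{z_p}{2}\), so \(\sigma ^p \ge \sigma ^q\) gives \(\sigma ^p \tan \frac{z_q}{2} \le \sigma ^q \tan \frac{z_q}{2} \le \sigma ^q \tan \frac{z_p}{2}\).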

For the case \(\sigma ^p < \sigma ^q\), let us first observe that the values of the function f(x) are decreasing with increasing parameter \(c^q\) since \(z_q\) and hence \(\tan \Big (\frac{z_q}{2}\Big )\) are decreasing and negative. Hence it is sufficient to check inequality (8) for the lowest possible value of \(c^q\), which under the conditions of Theorem 1 occurs if \(c^p - \sigma ^p = c^q - \sigma ^q\). Let us prove that in that case f(x) is a decreasing function of x. The derivative

is negative if

Because \(\sigma ^q > \sigma ^p\), \(z_p > z_q\) and \(z_p,\,z_q \in (-\pi ,0)\), and thus \( \sin \Big (\frac{z_q}{2}\Big ) < \sin \Big (\frac{z_p}{2}\Big )\), the derivative of the left-hand side of (10) gives

and therefore \(f'(x)\) is a decreasing function of x and achieves its maximum value at the minimum point of the admissible interval \(x = c^q - \sigma ^q\). Since that maximum value is negative due to \(z_q = -\pi \) and \(\cos \Big (\frac{z_p}{2}\Big ) < 0\) the inequality (10) holds and the first derivative \(f'(x)\) is decreasing. Therefore it is sufficient to check inequality (8) as well in the point \(x = c^q - \sigma ^q,\) where f(x) achieves its maximum value.

To obtain the maximum value of f(x) let us apply three times L’Hospital’s rule on the left term of (8) that gives

Therefore maximum of f(x) is equal to 0 and the inequality (8) holds for \(x \in (c^q - \sigma ^q,\,c^p)\).

Analogous reasoning can be used to confirm that the inequality (8) holds for \(x \in (c^q,\,c^p + \sigma ^p)\). \(\square \)

Theorem 1 actually defines the ordering of fuzzy sets. Even though such an ordering and the monotonicity conditions are quite natural (see Fig. 2) they do not guarantee monotonicity for arbitrarily shaped membership functions.
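The ordering required by Theorem 1 is easy to check, and condition 3. can be probed numerically on a grid. The sketch below assumes the standard raised cosine form of (2); the function names, the sampling resolution and the example parameters are illustrative only.

```python
import math


def mu(x, c, s):
    """Assumed raised cosine membership with center c and half-width s."""
    z = math.pi * (x - c) / s
    return 0.5 * (1.0 + math.cos(z)) if abs(z) <= math.pi else 0.0


def dmu(x, c, s):
    """Derivative of mu with respect to x (zero outside the support)."""
    z = math.pi * (x - c) / s
    return -0.5 * math.pi / s * math.sin(z) if abs(z) <= math.pi else 0.0


def ordered(sets):
    """Theorem 1 ordering for all p < q; sets is a list of (c, sigma) pairs."""
    return all(
        cp - sp <= cq - sq and cp + sp <= cq + sq
        for i, (cp, sp) in enumerate(sets)
        for (cq, sq) in sets[i + 1:]
    )


def max_condition3(p, q, lo, hi, steps=2001):
    """Largest sampled value of mu_p' * mu_q - mu_q' * mu_p on [lo, hi]."""
    (cp, sp), (cq, sq) = p, q
    worst = -math.inf
    for i in range(steps):
        x = lo + (hi - lo) * i / (steps - 1)
        val = dmu(x, cp, sp) * mu(x, cq, sq) - dmu(x, cq, sq) * mu(x, cp, sp)
        worst = max(worst, val)
    return worst


good = [(0.0, 1.0), (1.5, 2.0)]   # ordered, different widths
bad = [(0.0, 1.0), (0.5, 0.2)]    # second set nested inside the first
print(ordered(good), ordered(bad))
print(max_condition3(good[0], good[1], -1.0, 3.5) <= 1e-12)
print(max_condition3(bad[0], bad[1], -1.0, 1.0) > 0.0)
```

A grid check of this kind can only support the theorem on the sampled points; it is not a substitute for the proof.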

4 Monotone Fuzzy System-Based Regression

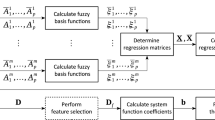

Suppose that the parameters of membership functions (2) are given such that conditions in Theorem 1 hold. Then the mapping (1) can be written as

with

and the parameter vector \(\theta \) defined by (5), where \(\otimes \) stands for the Kronecker product, \(\mu ^{\textbf{k}_j} = \prod _{i=1}^{n} \mu _i^{k_i}(x_i)\) and \(\textbf{k}_j \in K,\, j = 1,\ldots ,M\). The mapping (12) is linear with respect to the submodel parameters \(a^{\textbf{k}_j}\).

Now assume that the data set \(\{x^l,y^l;x^l \in \Re ^n,y^l \in \Re , l = 1,\ldots ,N\}\) is available. The parameters guaranteeing monotonicity and minimizing the least squares criterion are given by

where \(Z = [\Phi (x^1)^{\text{T}},\ldots ,\Phi (x^N)^{\text{T}}]^{\text{T}}\), \(\textbf{y} = [y^1,\ldots ,y^N]^{\text{T}}\).
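The linearity in the submodel parameters can be made concrete with a short Python sketch that builds one regressor row as the Kronecker product of the normalized firing strengths with \([1, x^\text{T}]\). The ordering of the entries of \(\theta \) used here (offset first, then slopes, rule by rule) is an assumption for illustration and may differ from definition (5); the constrained least squares solver itself is not shown.

```python
import math
from itertools import product


def raised_cosine(x, c, sigma):
    """Assumed raised cosine membership: 0.5*(1+cos z) for |z| <= pi, else 0."""
    z = math.pi * (x - c) / sigma
    return 0.5 * (1.0 + math.cos(z)) if abs(z) <= math.pi else 0.0


def regressor_row(x, centers, widths):
    """Row Phi(x): normalized firing strengths (x) [1, x_1, ..., x_n]."""
    n = len(x)
    weights = []
    for k in product(*(range(len(centers[j])) for j in range(n))):
        w = 1.0
        for j in range(n):
            w *= raised_cosine(x[j], centers[j][k[j]], widths[j][k[j]])
        weights.append(w)
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no rule fires at x")
    extended = [1.0] + list(x)
    # Kronecker product of the normalized weights with [1, x^T].
    return [w / total * e for w in weights for e in extended]


def predict(row, theta):
    """Model output as the inner product Phi(x) * theta."""
    return sum(r * t for r, t in zip(row, theta))


centers = [[0.0, 1.0]]
widths = [[1.0, 1.0]]
theta = [0.0, 1.0, 0.0, 2.0]  # rule 1: y = x, rule 2: y = 2x
row = regressor_row([0.5], centers, widths)
print(predict(row, theta))  # approximately 0.75
```

Stacking such rows for all training samples gives the matrix \(Z\), so the fit becomes a least squares problem with linear inequality constraints on \(\theta \).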

5 Methods Evaluation

The performance of a fuzzy system will be evaluated by its accuracy and goodness of fit, both on validation data \(\{x_\text{v}^k,y_\text{v}^k;x_\text{v}^k \in \Re ^n,y_\text{v}^k \in \Re , k = 1,\ldots ,N_\text{v}\}\).

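The accuracy of the models will be validated by the root mean square error (RMSE) between the given output \(y_\text{v}\) and the fuzzy model output \(F(x_\text{v})\) defined as

\[\text{RMSE} = \sqrt{\frac{1}{N_\text{v}}\sum _{k=1}^{N_\text{v}}\left( y_\text{v}^k - F(x_\text{v}^k)\right) ^2}.\]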

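The goodness of fit of the models will be measured by the \(R^2\) coefficient defined as

\[R^2 = 1 - \frac{\sum _{k=1}^{N_\text{v}}\left( y_\text{v}^k - F(x_\text{v}^k)\right) ^2}{\sum _{k=1}^{N_\text{v}}\left( y_\text{v}^k - \bar{y}\right) ^2},\]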

where \(\bar{y} = \frac{1}{N_\text{v}}\sum _{k=1}^{N_\text{v}}y_\text{v}^k\) is the average of the observed data.
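Both measures can be computed in a few lines of Python. The sketch uses the usual textbook definitions of RMSE and of the coefficient of determination; the function names are illustrative.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between observed and predicted outputs."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


y_v = [1.0, 2.0, 3.0]
print(rmse(y_v, y_v), r_squared(y_v, y_v))  # 0.0 1.0
```

A model that always predicts the mean of the validation outputs gets \(R^2 = 0\), which is a convenient sanity check.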

Monotonicity of a TS fuzzy model (1) non-decreasing with respect to a set of indices \(L,\, L \subset \{1,\dots ,n\}\) will be evaluated by monotonicity index \(I_\text{mon} \in [0,1]\) that is defined as

where \(\text{card}(\cdot )\) stands for cardinality of a set. The set \(X_\text{g}\) is usually generated by gridding the input set U.
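A grid-based monotonicity index can be sketched as below. The precise definition of \(I_\text{mon}\) is not reproduced here, so the code assumes one plausible reading: the fraction of grid points at which the model output does not decrease when any coordinate in \(L\) is moved to the next grid value. The function name, the forward-difference test and the tolerance are assumptions made for this illustration.

```python
from itertools import product


def monotonicity_index(f, grids, mono_idx, tol=1e-12):
    """Fraction of grid points where f is non-decreasing along mono_idx.

    grids[i] is the sorted list of distinct grid values of input i;
    mono_idx lists the (zero-based) inputs expected to be non-decreasing.
    """
    points = list(product(*grids))
    ok = 0
    for x in points:
        good = True
        for i in mono_idx:
            pos = grids[i].index(x[i])
            if pos + 1 < len(grids[i]):
                x_next = list(x)
                x_next[i] = grids[i][pos + 1]
                if f(x_next) < f(list(x)) - tol:
                    good = False
                    break
        ok += good
    return ok / len(points)


grid = [[0.0, 0.5, 1.0], [0.0, 1.0]]
print(monotonicity_index(lambda x: x[0] + x[1], grid, [0, 1]))  # 1.0
```

A value of 1 on the grid does not prove monotonicity between grid points, which is why analytical conditions such as those of Section 3 are of interest.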

6 Benchmark Datasets

6.1 Predicted Mean Vote (PMV) Dataset

The PMV dataset is related to the prediction of the thermal comfort index [48]. The thermal comfort index defined in EN ISO 7730 is used to evaluate the room climate. The predicted mean vote for thermal comfort is determined by the heat balance between the human body and its environment. The PMV index is a function of six variables, namely air temperature, radiant temperature, relative humidity, air velocity, human activity level, and clothing thermal resistance. The PMV index is calculated using a complex equation from [48] and it ranges between \(-3\) and 3. It increases with increasing air temperature and relative humidity; the remaining attributes are supposed to have no monotone impact. The dataset contains 567 samples reported in [49].

The achieved results will be compared with the monotone zero-order TS fuzzy system identification procedure described in [49]. That procedure transforms the related constrained optimization problem into a sequence of unconstrained minimization problems that are solved by the Davidon–Fletcher–Powell (DFP) algorithm. Let us note that, just like the presented method, the method in [49] (Stage E, which gives the best results) guarantees monotonicity on the whole domain.

For each simulation, the original 567 samples are randomly split into 425 samples (75 %) constituting the training set and the remaining 142 samples (25 %) constituting the testing set. That step was repeated 50 times, and average values of RMSE for the testing set were compared. All the experiments were carried out on an Intel Core i7 5600K, 3.5 GHz with 4 GB of RAM and MATLAB R2016b.
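The repeated random hold-out protocol can be sketched in Python as follows. The seed, the function name and the use of index lists are assumptions for illustration; the original experiments were run in MATLAB and their random number handling is not known.

```python
import random


def random_split(n_samples, train_fraction, rng):
    """Shuffle sample indices and cut them into training and testing parts."""
    idx = list(range(n_samples))
    rng.shuffle(idx)
    n_train = int(n_samples * train_fraction)
    return idx[:n_train], idx[n_train:]


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
splits = [random_split(567, 0.75, rng) for _ in range(50)]
train_idx, test_idx = splits[0]
print(len(train_idx), len(test_idx))  # 425 142
```

The model would be identified on each training part and its RMSE on the matching testing part averaged over the 50 repetitions.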

At first, the original monotone data are used. The average RMSE achieved on testing sets by the non-monotone zero-order TS fuzzy system (NON ZOTS, results taken from [39]), the presented monotone first-order TS fuzzy system (MON FOTS) and Stage E of the monotone zero-order TS fuzzy system proposed in [49] (MON ZOTS) for different numbers of input membership functions are compared in Table 1. Raised cosine membership functions (plotted in Fig. 3) were chosen to be similar to the triangular membership functions used in [49]. One can see that the presented monotone first-order TS fuzzy system gives similar results and that the results improve for a higher number of input fuzzy sets, which is not the case for the method in [49]. Table 1 also shows that for noise-free data the difference between monotone and non-monotone regression is quite small. A slightly higher accuracy of the presented method is not surprising since a first-order TS fuzzy system contains more parameters than a zero-order TS fuzzy system. Moreover, the average computational time reported in [49] is 401.7012 s whereas for the presented method it is 94.1 s. The presented method is significantly faster since it relies on very efficient linearly constrained least squares algorithms.

RMSE achieved on all 567 data samples for non-monotone zero-order TS fuzzy system for \(M_1 = M_2 = 5\) is 0.0774 (reported in [39]) and for Stage E of the monotone zero-order TS fuzzy system proposed in [49] is 0.0021. RMSE on the same data samples for the proposed monotone first-order TS fuzzy system is 0.0036.

Next, the original data are contaminated by noise,

where rand is a normally distributed random number with a mean of 0 and variance 1. Now non-monotone data are obtained and the same identification algorithms as in the previous case are used to create fuzzy models. A comparison of RMSE is shown in Table 2. The values show that for a small number of input fuzzy sets the presented monotone TS fuzzy system is worse compared to the monotone ZO TSFS but it gets much better for a higher number of input fuzzy sets. Quite naturally, both monotone TS fuzzy systems exhibit better results compared to non-monotone ones. The average computational time for monotone FO TSFS (\(M_1 = M_2 = 5\)) was 101.5s which is almost the same as for noise-free dataset and it is considerably less than the 1903.59s reported in [49] for monotone ZO TSFS.

In Table 3 and Table 4, the presented method using cosine membership functions was compared with triangular, Gaussian, and sigmoidal membership functions. Unfortunately, there are no conditions guaranteeing monotonicity for Gaussian and sigmoidal membership functions with different widths, therefore the same width was used for all of them. As expected, the presented method achieves better results since the conditions imposed on the linear models in the consequents are less restrictive for cosine membership functions.

The original dependency and its approximations by non-monotone and monotone TS fuzzy systems with \(M_1 = M_2 = 5\) membership functions are shown in Figs. 4, 5 and 6, respectively.

6.2 Boston Housing Dataset

In the second case study, a commonly used dataset that contains information about different houses in Boston [50] is adopted. The dataset was developed to illustrate the issues with using housing market data to measure consumer willingness to pay for clean air. The input to this model was a dataset comprising the Boston Standard Metropolitan Statistical Area, with the nitric oxide concentration (NOX) serving as a proxy for air quality. The variables in the dataset were collected in the early 1970s and come from a mixture of surveys, administrative records, and other research papers. The goal is to predict the price of a house based on 13 input attributes described in Table 5. The dataset contains 506 datapoints.

After performing a correlation analysis, the following 6 attributes are considered monotone (ordered by decreasing absolute value of the correlation coefficient): the lower the share of lower-status population (LSTAT), the higher the average number of rooms per dwelling (RM), the lower the tax rate (TAX), the lower the nitric oxide concentration (NOX), the lower the distance to the center (DIS), and the lower the crime level (CRIM), the higher the median value of the price.

Because of the strong correlation between the attributes TAX and RAD (positive), DIS and AGE (negative), and NOX and INDUS (negative), attributes RAD, AGE, and INDUS are not considered for prediction. Because of the weak correlation between the price and the attributes CHAS and B those two were disregarded as well. The remaining attributes PTRA and ZN are treated as non-monotone. Two raised cosine membership functions are used for each attribute.

The 506 datapoints were divided into 380 for the training set (75 %) and 126 for the testing set (25 %). That step was repeated 50 times and average values of RMSE and its variance and \(R^2\) were calculated. The performance is compared with the monotone multilayer perceptron (MLP, proposed in [51]) and the monotone MIN-MAX network (MM, proposed in [15]). The RMSE and \(R^2\) values for those two methods and linear regression (LR) are taken from [52]. Let us note that all considered tools guarantee monotonicity.

Table 6 shows that the best results in terms of mean RMSE and its variance were achieved for the four-layer MIN-MAX network. Nevertheless, in contrast to presented TS fuzzy systems, MIN-MAX networks do not produce smooth output. First-order TS fuzzy systems achieve better accuracy compared to monotone two-layer perceptron. As expected, all nonlinear regression approaches exhibit better predictions than linear ones.

6.3 Large Datasets

Three more benchmark datasets adopted from the machine learning repository of the University of California, Irvine (UCI) [53] were used to compare the presented algorithm with many commonly used regression models, both monotone and non-monotone. As the third tested dataset, the large Abalone dataset was used. It contains the physical measurements of abalones, which are large, edible sea snails, and the task is to predict the age of an abalone from these measurements. The age of abalone is determined by cutting the shell through the cone, staining it, and counting the number of rings through a microscope, a boring and time-consuming task. Other measurements, which are easier to obtain, are used to predict the age. Further information, such as weather patterns and location (hence food availability), may be required to solve the problem.

The dataset contains 4177 items, each of which has 7 attributes; 6 of them were handled as monotone (Length, Diameter, Height, Whole weight, Shucked weight, Viscera weight) and one as non-monotone (Shell weight).

The dataset containing 9568 data points collected from a Combined Cycle Power Plant over 6 years (2006-2011), when the power plant was set to work with full load, was used as another example. Features consist of hourly average ambient variables temperature, ambient pressure, relative humidity, and exhaust vacuum to predict the net hourly electrical energy output of the plant. The higher the temperature and the exhaust vacuum the lower the electrical energy power. Therefore the attributes temperature and exhaust vacuum were treated as monotone, the remaining ones as non-monotone. In both datasets the categorization of monotone and non-monotone attributes was taken from [52].

As the last dataset, the Auto-MPG taken from the StatLib library maintained by Carnegie Mellon University was used. The dataset contains 392 instances, each having eight attributes: number of cylinders (decreasing), displacement, horsepower, weight of the car (decreasing), acceleration, model year (increasing), and origin. The aim is to predict the city-cycle fuel consumption in miles per gallon (mpg). According to an expert who majors in automobiles and mechanical engineering fuel consumption grows with increasing model year and decreasing number of cylinders and weight of the car. The other attributes were considered to have a non-monotone impact on the predicted output.

The datasets were divided into a training set (70 % of the dataset) and a test set (the remaining 30 % of the dataset). That step was repeated 50 times for different numbers of membership functions and different widths and the average results were taken. The presented monotone first-order TS fuzzy system with cosine membership functions (MonCOS) was compared with regression models commonly used in machine learning. As non-monotone regressors, first-order TS fuzzy systems with cosine (NonCOS) and Gaussian membership functions (NonGAUSS), a non-monotone multilayer perceptron (NonMLP), and non-monotone support vector regression (NonSVR) are considered. A monotone first-order TS fuzzy system with Gaussian membership functions (MonGAUSS, [26]), a monotone multilayer perceptron (MonMLP, [51]), a monotone MIN-MAX network (MonMM, [15]) and monotone support vector regression (MonSVR, [6], which does not guarantee monotonicity) were taken into account as monotone regression models.

First, the results confirm the expected fact—taking into account monotonic dependency considerably improves any regression model performance. Second, when comparing monotone regressors there is no big difference among the models. Generally, neural networks (MLP, MIN-MAX) exhibit the best results, nevertheless their interpretability is very low. One can also see that there is almost no difference between non-monotone fuzzy systems with cosine and Gaussian membership functions. However, in the monotone case, cosine membership functions have better accuracy since the monotonicity conditions imposed on the consequent part are less restrictive.

7 Conclusion

The paper presents sufficient conditions guaranteeing the monotonicity of first-order Takagi–Sugeno fuzzy systems with raised cosine membership functions. The conditions are separated into antecedent and consequent parts. Whereas the former are satisfied by a suitable choice of the membership function parameters, the latter are expressed as linear constraints on the submodel parameters. Experiments performed on benchmark datasets confirm that using knowledge about monotonicity with respect to some or all input variables improves the performance of nonlinear regression analysis, especially in the presence of noise in the data. The main advantage of monotone TS fuzzy systems with raised cosine membership functions over other smooth functions is that the derived monotonicity conditions are feasible for membership functions with different widths and are less conservative, and therefore the performance of those systems is better. When comparing the raised cosine membership functions with triangular ones, the former produce a smooth output and the corresponding algorithm is much faster.

Data Availability

The dataset analyzed in the example in section 6.1 is available as supplemental material of the paper C. Y. Teh and Y. W. Kerk and K. M. Tay and C. P. Lim: On Modeling of Data-Driven Monotone Zero-Order TSK Fuzzy Inference Systems Using a System Identification Framework, IEEE Trans. Fuzzy Syst., 26(6), 2018, pp. 3860-3874.

The dataset analyzed in the example in section 6.2 is available from http://lib.stat.cmu.edu/datasets/boston.

The datasets analyzed in section 6.3 are available from https://archive.ics.uci.edu/ml/datasets.

Code Availability

Code used for generating the results is available from the corresponding author on reasonable request.

References

Lindskog, P., Ljung, L.: Ensuring monotonic gain characteristics in estimated models by fuzzy model structures. Automatica 36(2), 311–317 (2000)

Van Broekhoven, E., De Baets, B.: Only smooth rule bases can generate monotone Mamdani–Assilian models under center-of-gravity defuzzification. IEEE Trans. Fuzzy Syst. 17(7), 1157–1174 (2009)

Hušek, P.: On monotonicity of Takagi–Sugeno fuzzy systems with ellipsoidal regions. IEEE Trans. Fuzzy Syst. 24, 1673–1678 (2016)

Doumpos, M., Pasiouras, F.: Developing and testing models for replicating credit ratings: a multicriteria approach. Comput. Econ. 25(4), 327–341 (2005)

Li, C., Yi, J., Zhao, D.: Analysis and design of monotonic type-2 fuzzy inference systems. IEEE Int. Conf. Fuzzy Syst. 2009, 1193–1198 (2009)

Chuang, H.C., Chen, C.C., Li, S.T.: Incorporating monotonic domain knowledge in support vector learning for data mining regression problems. Neural Comput. Appl. 32, 11791–11805 (2020)

Li, S.T., Chen, C.C.: A regularized monotonic fuzzy support vector machine model for data mining with prior knowledge. IEEE Trans. Fuzzy Syst. 23(5), 1713–1727 (2015)

Pelckmans, K., Espinoza, M., Brabanter, J.D., Suykens, J., Moor, B.D.: Primal-dual monotone kernel regression. Neural Process Lett. 22(2), 171–182 (2005)

Garcia, J., AlBar, A.M., Aljohani, N.R., Cano, J.R., Garcia, S.: Hyperrectangles selection for monotonic classification by using evolutionary algorithms. Int. J. Comput. Intell. Syst. 9(1), 184–202 (2016)

Duivesteijn, W., Feelders, A.: Nearest neighbour classification with monotonicity constraints. In: ECMLPKDD’08 Proceedings of the 2008th European Conference on Machine Learning and Knowledge Discovery in Databases, vol. Part I, pp. 301–316 (2008)

Pei, S., Hu, Q., Chen, C.: Multivariate decision trees with monotonicity constraints. Knowl. Based Syst. 112, 14–25 (2016)

Pei, S., Hu, Q.: Partially monotonic decision trees. Inf. Sci. 424, 104–117 (2018)

Qian, Y., Xu, H., Liang, J., Liu, B., Wang, J.: Fusing monotonic decision trees. IEEE Trans. Knowl. Data Eng. 27(10), 2717–2728 (2015)

Wang, J., Qian, Y., Li, F., Liang, J., Ding, W.: Fusing fuzzy monotonic decision trees. IEEE Trans. Fuzzy Syst. 8(5), 887–900 (2020)

Daniels, H., Velikova, M.: Monotone and partially monotone neural networks. IEEE Trans. Neural Netw. 21(6), 906–917 (2010)

Kay, H., Ungar, L.H.: Estimating monotonic functions and their bounds. AIChE J. 46(12), 2426–2434 (2000)

Chen, S., Cowan, C.F.N., Grant, P.M.: Orthogonal least squares learning algorithm for radial basis function networks. Int. J. Control 56(2), 319–346 (1992)

Zhang, L., Li, K., Bai, E.W.: A new extension of Newton algorithm for nonlinear system modelling using RBF neural networks. IEEE Trans. Autom. Control 58, 2333–2929 (2013)

Chen, C.C., Li, S.T.: Credit rating with a monotonicity-constrained support vector machine model. Expert Syst. Appl. 41(16), 7235–7247 (2014)

Doumpos, M., Zopounidis, C.: Monotonic support vector machines for credit risk rating. New Math. Nat. Comput. 5(3), 557–570 (2009)

Li, S.T., Shiue, W., Huang, M.H.: The evaluation of consumer loans using support vector machines. Expert Syst. Appl. 30, 772–782 (2006)

Zhang, X., Yang, Y., Li, T., Zhang, Y., Wang, H., Fujita, H.: CMC: a consensus multi-view clustering model for predicting Alzheimer’s disease progression. Comput. Methods Progr. Biomed. (2020). https://doi.org/10.1016/j.cmpb.2020.105895

von Kurnatowski, M., Schmid, J., Link, P., Zache, R., Morand, L., Kraft, T., Schmidt, I., Schwientek, J., Stoll, A.: Compensating data shortages in manufacturing with monotonicity knowledge. Algorithms 14(345), 1–18 (2021)

Ryu, Y., Chandrasekaran, R., Jacob, V.: Data classification using the isotonic separation technique: application to breast cancer prediction. Expert Syst. Appl. 181, 842–854 (2007)

Daniels, H., Samulski, M.: Partially monotone networks applied to breast cancer detection on mammograms. In: 18th International Conference on Artificial Neural Networks (ICANN), LNCS 5163, pp. 917–926 (2008)

Won, J.M., Park, S.Y., Lee, J.S.: Parameter conditions for monotonic Takagi–Sugeno–Kang fuzzy system. Fuzzy Sets Syst. 132(2), 135–146 (2002)

Won, J.M., Karray, F.: Toward necessity of parametric conditions for monotonic fuzzy systems. IEEE Trans. Fuzzy Syst. 22(2), 465–468 (2014)

Koo, K., Won, J.M., Lee, J.S.: Least squares identification of monotonic fuzzy systems. In: IEEE Annual Meeting of the Fuzzy Information Processing NAFIPS’04, vol. 2, pp. 745–749. Barcelona, Spain (2004)

Teh, C.Y., Tay, K.M., Lim, C.P.: On the monotonicity property of the TSK fuzzy inference system: the necessity of the sufficient conditions and the monotonicity test. Int. J. Fuzzy Syst. 20(6), 1614–1915 (2018)

Seki, H., Ishii, H., Mizumoto, M.: On the monotonicity of fuzzy-inference methods related to T-S inference method. IEEE Trans. Fuzzy Syst. 18(3), 629–634 (2010)

Van Broekhoven, E., De Baets, B.: Monotone Mamdani–Assilian models under mean of maxima defuzzification. Fuzzy Sets Syst. 159(21), 2819–2844 (2008)

Kouikoglou, V.S., Phillis, Y.A.: On the monotonicity of hierarchical sum-product fuzzy systems. Fuzzy Sets Syst. 160(24), 3530–3538 (2009)

Kerk, Y.W., Tay, K.M., Lim, C.P.: Monotone interval fuzzy inference systems. IEEE Trans. Fuzzy Syst. 27(11), 2255–2264 (2019)

Li, C., Yi, J., Zhang, G.: On the monotonicity of interval type-2 fuzzy logic systems. IEEE Trans. Fuzzy Syst. 22(5), 1197–1212 (2014)

Hušek, P.: Monotonic smooth Takagi–Sugeno fuzzy systems with fuzzy sets with compact support. IEEE Trans. Fuzzy Syst. 27(3), 605–611 (2019)

Deng, Z., Cao, Y., Lou, Q., Choi, K.S., Wang, S.: Monotonic relation-constrained Takagi–Sugeno–Kang fuzzy system. Inf. Sci. 582, 243–257 (2022)

Jee, T.L., Tay, K.M., Lim, C.P.: A new two-stage fuzzy inference system-based approach to prioritize failures in failure mode and effect analysis. IEEE Trans. Rel. 64(3), 869–877 (2015)

Qian, X., Huang, H., Chen, X., Huang, T.: Generalized hybrid constructive learning algorithm for multioutput RBF networks. IEEE Trans. Cybern. 47(11), 3634–3648 (2017)

Li, C., Yi, J., Wang, M., Zhang, G.: Monotonic type-2 fuzzy neural network and its application to thermal comfort prediction. Neural Comput. Appl. 23(7), 1987–1998 (2013)

Kouikoglou, V.S., Phillis, Y.A.: A monotonic fuzzy system for assessing material recyclability. Fuzzy Sets Syst. 160(24), 3530–3538 (2009)

Alcalá-Fdez, J., Alcalá, R., González, S., Nojima, Y., García, S.: Evolutionary fuzzy rule-based methods for monotonic classification. IEEE Trans. Fuzzy Syst. 25(6), 1376–1390 (2017)

Wang, T., Yi, J., Li, C.: The monotonicity and convexity of unnormalized interval type-2 TSK fuzzy logic systems. In: Proceedings of IEEE International Conference on Fuzzy Systems, pp. 1–7. Barcelona, Spain (2010)

Eyoh, I.J., Umoh, U.A., Inyang, U.G., Eyoh, J.E.: Derivative-based learning of interval type-2 intuitionistic fuzzy logic systems for noisy regression problems. Int. J. Fuzzy Syst. 22(3), 1007–1019 (2020)

Yan, C., Liu, Q., Liu, J., Liu, W., Li, M., Qi, M.: Payments per claim model of outstanding claims reserve based on fuzzy linear regression. Int. J. Fuzzy Syst. 21(6), 1950–1960 (2019)

Sadjadi, E.N., Garcia, J., Lopez, J.M.M., Borzabadi, A.H., Abchouyeh, M.A.: Fuzzy model identification and self learning with smooth compositions. Int. J. Fuzzy Syst. 21(8), 2679–2693 (2019)

Sadjadi, E.N., Herrero, J.G., Molina, J.M., Moghaddam, Z.H.: On approximation properties of smooth fuzzy models. Int. J. Fuzzy Syst. 20(8), 2657–2667 (2018)

Takagi, T., Sugeno, M.: Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. Syst. Man Cybern. 15(1), 116–132 (1985)

Fanger, P.O.: Thermal Comfort: Analysis and Applications in Environmental Engineering. McGraw-Hill, New York (1970)

Teh, C.Y., Kerk, Y.W., Tay, K.M., Lim, C.P.: On modeling of data-driven monotone zero-order TSK fuzzy inference systems using a system identification framework. IEEE Trans. Fuzzy Syst. 26(6), 3860–3874 (2018)

Harrison, D., Rubinfeld, D.L.: StatLib—Datasets Archive, Carnegie Mellon University, USA. http://lib.stat.cmu.edu/datasets/boston (1980)

Zhang, H., Zhang, Z.: Feed forward networks with monotone constraints. In: IEEE International Joint Conference on Neural Networks, vol. 3, pp. 1820–1823. Washington (1999)

Minin, A., Lang, B., Daniels, H.: Comparison of universal approximators incorporating partial monotonicity by structure. Neural Netw. 23(4), 471–475 (2010)

Merz, C., Murphy, P.: UCI Machine Learning Repository. University of California, Department of Information and Computer Science, Irvine (1995)

Funding

Open access publishing supported by the National Technical Library in Prague. This work has been supported by the Grant Agency of Czech Technical University (CTU) in Prague under Grant SGS22/166/OHK3/3T/13.

Ethics declarations

Conflict of interest

The author is not aware of any conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hušek, P. Monotonic Fuzzy Systems With Goniometric Membership Functions. Int. J. Fuzzy Syst. (2024). https://doi.org/10.1007/s40815-024-01758-4
