Abstract

The massive spread of COVID-19 and the crash of China Eastern Airlines flight MU5735 have negatively affected the public’s perception of civil aviation safety, which in turn affects the progress of the civil aviation industry and economic growth. This research aims to investigate the public’s perception of China’s civil aviation safety and to offer the authorities corresponding suggestions. First, we use online comment collection and sentiment analysis techniques to construct a novel evaluation index system reflecting the public’s greatest concerns about civil aviation safety. Then, we propose two novel large-scale group decision-making (LSGDM) models for aggregating evaluations: (1) K-means clustering of evaluators with a novel distance measure, combined with unsupervised K-means clustering in a two-stage aggregation; and (2) unsupervised K-means clustering of evaluators, combined with unsupervised K-means clustering for processing evaluations in a two-stage aggregation. Finally, we compare the characteristics of the two models and use the average of their results as the final evaluation.

1 Introduction

With the rapid growth of the domestic economy in recent years, the public’s travel rate has increased significantly, and China’s air transport industry has developed by leaps and bounds. According to the civil aviation industry development statistics bulletin of China, China’s air transport volume reached 11,705.3 billion person-kilometers in 2019, before the outbreak of COVID-19. Affected by the epidemic, China’s civil aviation passenger transport volume has fluctuated considerably. However, under national policy guidance in the post-epidemic era, growth in China’s civil aviation passenger transport volume remains the expected trend [1]. In addition, in February 2022, the sustained safe flight time of China’s civil transport aviation exceeded 100 million hours, which is the best safety performance in the history of China’s civil aviation industry and the best sustained safe flight record in the history of world civil aviation [2]. However, because most official statistical bulletins describe civil aviation safety in technical terms, they are highly objective but not easily understood; moreover, the crash of China Eastern Airlines flight MU5735 and the lengthy accident investigation have aggravated citizens’ doubts about the safety of China’s civil aviation. Many panicked and questioning remarks have appeared on major online media platforms, which shows that the public lacks an objective understanding of the actual state of China’s civil aviation safety and that the public’s perception deviates to some degree from the actual safety level. Against this background, the main research motivations of this article are as follows.

(1) Investigating the public’s overall perceived degree for civil aviation safety status in China.

(2) Discovering the deviation of perceived level with different indicators.

(3) Providing suggestions on how to reduce the perception deviation with related indicators.

In this process, it is necessary to construct an evaluation index system, scientifically characterize each piece of evaluation information, and aggregate all data. The following paragraphs describe each of these three aspects.

In a large number of civil aviation safety evaluations, the evaluation indicator system is constructed based on relevant regulations [3], field research by experts from civil aviation related institutions [4], reviews of previous domestic and foreign studies [5], and so on. All these studies evaluate the civil aviation safety level on the basis of objective data, but none constructs the evaluation indicator system from the public’s perspective. Therefore, this paper uses online data collection and text analysis techniques to mine public concerns from comments and construct the evaluation indicator system accordingly.

At the same time, among the many questioning online statements about civil aviation safety, we find that many people focus on the causes of accidents, which in turn become the basis on which people decide whether to take a flight. Most individuals’ expressions in online comments and daily communication appear hesitant and ambiguous, indicating that people prefer uncertain language when expressing their inner feelings. This inspires us to model the safety perception evaluation of China’s civil aviation (SPECCA) problem with a natural language model. Many such models have been proposed, including the 2-tuple linguistic model [6], the virtual linguistic model [7], the hesitant fuzzy linguistic term set (HFLTS) [8], the extended hesitant fuzzy linguistic term set [9], and so on. Among them, the HFLTS is suitable for this research because our investigation is oriented to members of the public with different backgrounds and working environments, and the HFLTS can quantitatively model their natural language. In addition, we use hesitant 2-tuple linguistic term sets (H2TLTS) [78] composed of possibilistic 2-tuple linguistic pairs (P2TLPs) [78] to represent the aggregated information, which preserves as much information as possible during the aggregation process.

A large amount of HFLTS-based data will appear in this research. The data are intricate, but all of them reflect the public’s perceived safety level of China’s civil aviation. We therefore consider how to aggregate a large amount of information in a way that reduces distortion and captures representative information, so as to obtain the public’s current perception of the level of civil aviation safety in China. Recently, many researchers have developed information aggregation techniques, including extended hesitant fuzzy linguistic term (EHFLT) aggregation operators [9], hesitant fuzzy linguistic ordered interaction distance (HFLOID) operators [10], possibility distribution aggregation operators [11], and the application [12] and expansion [13, 14] of intuitionistic fuzzy sets. The N2S-KMC clustering algorithm paradigm proposed by Chen et al. [88] is the most relevant to this work. It divides data aggregation into a grouping process and an aggregation process. Grouping is performed by establishing an optimization model that minimizes the number of calculations needed to aggregate each group. A two-stage aggregation method is then applied to the obtained groups: an aggregation operator produces the result for each group, and simplified clustering centers are obtained by K-means clustering for H2TLTS. However, because more respondents are involved in this study, the traversal-based grouping method greatly increases the computation, and the pre-clustering process for H2TLTS after aggregation also leads to excessive computing time and memory use. We therefore further improve the aggregation method as follows:

(1) The grouping of evaluators is replaced by clustering. Two variants are proposed: (i) K-means clustering with an improved distance measure only, and (ii) unsupervised K-means clustering that additionally judges the clustering effect. The judgment of the clustering effect is based on measuring the distribution state of the data, inspired by the density canopy pre-clustering algorithm. Both variants reduce the computational effort compared with the traversal grouping approach.

(2) The clustering in the second stage is improved: an unsupervised clustering method applicable to H2TLTS is proposed by drawing on the core idea of density canopy pre-clustering and incorporating it into K-means clustering.

In summary, this paper utilizes online review collection technology and text analysis technology to explore the concerns of the public in online reviews to build an evaluation index system; after collecting evaluation information through questionnaire survey, the evaluators are clustered and then the information is aggregated using a two-stage algorithm. This study makes the following contributions:

Theory

(1) Building a civil aviation safety perception evaluation index system from the public’s perspective.

(2) Proposing a novel distance formula to measure the distance between different evaluators with their H2TLTS assessment information.

(3) Providing an unsupervised K-means clustering method to improve the clustering efficiency.

(4) Proposing two novel algorithms for aggregating LSGDM information.

Applications

(1) Utilizing the online text collection and statistical techniques to construct the index system.

(2) Exploring the degree of public’s civil aviation safety perception under different indicators, and providing direction for effective publicity guidance to enhance it.

The remainder of this paper is organized as follows: Sect. 2 reviews research on civil aviation safety perception and on information representation and aggregation, Sect. 3 introduces the preliminaries of the model, Sect. 4 describes the construction of the indicator system and the collection of data, Sect. 5 gives a detailed description of the proposed algorithms, and Sect. 6 compares the algorithms and analyzes the evaluation results.

2 Literature Review

Focusing on the research theme, this section reviews previous studies in two areas: the perceived level of safety in civil aviation and the LSGDM model.

2.1 Civil Aviation Safety Level Perception Research

As early as 1997, Williamson et al. [15] studied perceptions of and attitudes towards safety climate, with the aim of producing a measure of these perceptions and attitudes as an indicator of safety culture for the working population. Subsequently, scholarly attention to safety perception gradually increased, and the relevant research expanded to transportation [16,17,18,19], tourism [20,21,22], food safety [23], medical staff [24,25,26], and the environment [27, 28]. Studies on safety perception also exist in the field of air transportation. Specifically, Li et al. [29] studied social influences and public perception of aviation accidents and airlines, and verified that people who witnessed an accident are strongly affected. Ringle et al. [30] showed that perceived safety can be one of the critical drivers of overall customer satisfaction. Mauro [31] compared laypeople’s views of aviation safety with those of professionals, along with the factors that influence these views and the factors influenced by them. Ji et al. [32] showed that passengers’ daily acquired safety knowledge moderates the relationship between their perceptions of in-flight safety and satisfaction, and examined the relationship between security procedures and satisfaction in the same way. Lee et al. [33] examined the relationships among air passengers’ perceptions of pre-flight safety communication, attitude, subjective norm, perceived behavioral control and intentions to engage in safety communication prior to flight. Sandada and Matibiri [34] found that safety perception had a positive influence on customer loyalty in Southern Africa. Sakano et al. [35] found that travelers who lacked experience in using public transportation had lower perception levels of airport security. Shiwakoti et al. [36] studied the relationships among civil aviation passengers’ safety perceptions, demographic characteristics, service quality indicators, overall satisfaction and loyalty, using a route from Vietnam to Australia as an example, and found that airline service quality, as captured by the SERVQUAL model, had a significant impact on passengers’ safety perceptions. Ma et al. [37] found that safety perception, along with functionality, layout accessibility, and cleanliness, affects passenger satisfaction with civil transportation, which in turn affects travel intentions. Chan et al. [38] found that frontline pilots and managers can come into conflict because of their different perceptions of safety and performance.

In addition, research on civil aviation safety needs to establish an evaluation indicator system suited to each research topic. Most scholars construct it by integrating the literature with relevant documents and data from within the industry. Su and Xie [39] combined performance with physical parameters and applied them to a civil aircraft safety assessment index system. Chen et al. [40] applied system and job analysis, event tree analysis, fault tree analysis, and bow-tie analysis to establish four types of security performance indicators and proposed a new model for departmental risk assessment based on them. Zhao et al. [41] established a civil airport security evaluation indicator system based on the operational features of civil airports, covering four aspects: people, equipment, environment and management. Zhang et al. [42] constructed an evaluation index system according to systems engineering theory, covering person, equipment, environment and management. Liu [43] referred to the definition of flight operating efficiency proposed by the Performance Review Commission and considered the development status of civil aviation in China to define flight efficiency according to variables such as quality, safety, environment and economic benefits. Tang et al. [44] integrated previous studies with documents from ICAO and the Civil Aviation Administration of China (CAAC), and added content from interviews with ATC safety management experts to the indicator system used to assess the air traffic control system. Yang and Feng [45] studied the requirements that security work places on security information systems in civil aviation airports, as well as key equipment information and technical characteristics; from these, they constructed an evaluation index system for airport security information systems composed of three dimensions: hardware (host system security), software (network security) and data (data security). Bartulović and Steiner [46] examined the correlation between safety performance indicators and organizational indicators developed by an aviation training organization.

The above studies conducted safety evaluations in different fields of the civil aviation industry and constructed evaluation index systems based on relevant documents, regulations, data and experts’ suggestions. However, because of their highly specialized nature, such evaluation indicator systems are not suitable for assessing the public’s perception of the civil aviation safety level. An evaluation index system based on the public’s perceptions needs to start from the public’s actual concerns and capture their real demands in order to be reasonable.

At present, with the leap in information technology and the rapid increase in the number of Chinese netizens, more people are expressing their opinions and suggestions through online comments, and the large amount of data behind these comments contains real information about the concerns of groups in related fields. By analyzing online comments, understanding the opinions of netizens and discovering the real demands of the public, we can find the essential reasons behind a phenomenon and then propose effective improvement strategies. Deep mining and analysis techniques based on online reviews have been widely used in various research fields [47,48,49,50,51]; some of the literature is organized as follows.

In the financial sector, Cockcroft and Russell [52] reviewed research that integrates information systems, accounting and finance with big data, and outlined prospects for future research in accounting and finance. Hassani et al. [53] introduced big data analytics that can uncover meaningful information hidden in complex data, such as data mining (DM) techniques that can help the banking industry achieve better strategic management.

For customer satisfaction, Wu et al. [54] proposed a sentiment analysis method and a consensus model with a feedback mechanism by identifying the intensity of emotions in online reviews, thereby providing consumers with the best hotel options. Wu et al. [55] proposed a hotel selection decision model based on online reviews and verified the validity of the model. Luo and Xu [56] analyzed the emotional orientation of customers dining during the COVID-19 pandemic by mining online reviews of restaurants. Sharma and Shafiq [57] analyzed text comments on online forums, retail marketplace websites and social media to determine the different possible intentions of users. Chen et al. [58] used text mining to extract what consumers care most about in online shopping from Q&A systems and online reviews, and compared the features and similarities of the two kinds of mining results. Barbosa and Páramo [59] conducted a sentiment analysis of travelers’ reviews on two of the most popular online travel platforms in Mexico, aiming to understand the behavior of travel consumers who used online platforms to book luxury hotels during COVID-19.

For food science, Tao et al. [60] provided an overview of data sources, computational methods and applications of text data in the food industry by compiling extensive literature. Goldberg et al. [61] rearranged food safety hazards by mining relevant comments in online media. Correa et al. [62] studied the impact of traffic conditions on online food distribution services according to the main performance indicators. Lee et al. [63] studied the influence of information on readers’ opinions, using reports on genetically modified food as an example. Ma et al. [64] studied fresh food repurchase intention by collecting online reviews and applying partial least squares structural equation modelling. Tontini et al. [65] investigated the effect of spontaneous customer reviews on restaurant service satisfaction, where service quality includes the quality and price of food. Research on online reviews exists in other fields as well, mostly obtaining opinions to improve the quality of the research subjects or using evaluations to uncover information that benefits the industry. However, few studies have used public concern as an evaluation indicator. In this paper, the perceptions extracted through online comment analysis are applied to the construction of the evaluation index system, which makes the system more consistent with the main purpose of the survey.

2.2 Information Representation and Aggregation with LSGDM

With the development of web technology, it has become possible to survey a large number of people. The number of LSGDM participants is 11 in Zhang et al. [66] and 20 in Wu and Xu [67]. Besides the increase in the number of people surveyed, the representation and aggregation of information is the other important part of the LSGDM process. After constructing the evaluation indicator system, it is necessary to measure and aggregate the level of citizens’ perception of civil aviation security. This is of great importance to ensure that the information matches the respondents’ psychological state and that the final evaluation results are realistic and valid.

Existing studies have mainly used scales to characterize and measure evaluation information, the most widely used being Likert scales. In medical research, such scales underlie the COVID Stress Scales [68] and are used to evaluate the subjective burden and perspective of healthcare providers [69], to assess the health technology of optoelectronic biosensors for oncology [70], and so on. In equipment evaluation, the scale is used to evaluate mainstream wearable fitness devices [71], magnetic resonance imaging [72], and so on. In psychological research, related literature includes exploring fear among university students [73] and measuring the anxiety of the Korean people [74]. The Likert scale has been widely used as a common measurement tool, but it collects data in a simple form, which distorts information: the scale cannot reflect the fuzziness and hesitation in actual evaluation information that arise from each person’s different upbringing and educational experience.

With the aim of obtaining a closer approximation to the real evaluation information, many scholars have studied the quantitative transformation of evaluative natural language, including the 2-tuple linguistic model [6], the virtual linguistic model [7], the proportional 2-tuple linguistic model [75], uncertain linguistic terms (ULTS) [76], HFLTS [8], extended HFLTS (EHFLTS) [9] and novel type-1 and type-2 fuzzy envelopes of EHFLTS [77], H2TLTSs [78] and related extensions [79], a personalized individual semantic multi-attribute learning function [80], interval type-2 fuzzy sets (IT2FSs) [81], and incomplete fuzzy relations and contradictive fuzzy relations [82]. Among them, HFLTS can represent hesitancy in natural language while avoiding complex mathematical representation, which makes it suitable for the information collected in this paper.

After optimizing the conversion of the evaluation information, the information also needs to be validly aggregated within LSGDM, which is applied in many fields, such as professional knowledge and decision-making preference [83,84,85], building construction [86] and high-speed rail service evaluation [87]. Existing studies on aggregating HFLTS mainly add a possibility distribution first and then aggregate the data. The major aggregation methods are the two-stage aggregation paradigm for HFLTS possibility distributions and K-means clustering for the aggregation of HFLTS possibility distributions, both proposed by Chen et al. [78, 88]. The former simplifies the aggregated information by aggregating and clustering in two stages. The latter adds a grouping step and performs the two-stage calculation on the grouped information, which reduces the amount of calculation for each group; meanwhile, K-means clustering for H2TLTS reduces the number of aggregated data items, which helps reveal hidden information. However, as the number of evaluators increases, the grouping process becomes complex and no longer simplifies the calculation. Accordingly, we improve the model on the basis of the algorithm proposed by Chen et al. [88] to aggregate the evaluations of the large number of participants in this paper.

3 Preliminaries

The basic symbols of this paper follow Chen et al. [88] to unify with previous studies and make the article easier to understand. Examples of basic symbolic representations are shown in Table 1.

With the above notation, the following symbolic expressions can be understood more easily. In this paper, a possibility distribution \(\left( {p_{ - \tau } , \cdots ,p_{l} , \cdots ,p_{\tau } } \right)\) is represented by \({\mathbf{P}}\); the LTS is \(S = \left\{ {S_{t} \left| {t = - \tau , \cdots , - 1,0,1, \cdots ,\tau } \right.} \right\}\), which for convenience can also be written as \(S = \left\{ {S_{t} \left| {t \in \left\lfloor \tau \right\rfloor } \right.} \right\}\); \(\overline{S} = S \times [ - 0.5,0.5)\) is the set of 2-tuples derived from \(S\); and \(\Omega = \left\{ {PL_{1} ,PL_{2} , \cdots ,PL_{n} } \right\}\) is a set of P2TLPs.

The basic concepts underlying the HFLTS possibility distribution are as follows.

Daily communication contains a large number of complex language forms, so quantifying natural language is the first step in subsequent research. Language quantification has progressed from single-variable to multi-variable models, which describe natural language increasingly well. In the development of multiple linguistic variable transformation models, Rodríguez et al. [8] extended the linguistic term set (LTS), which is based on the single linguistic variable model, to the HFLTS in view of the ambiguous expressions found in natural communication. The specific concepts are as follows.

Definition 1

([8]) Let \(S = \left\{ {S_{0} ,S_{1} , \cdots ,S_{g} } \right\}\) be an LTS. An HFLTS on \(S\), denoted \({\rm H}_{S}\), is an ordered finite subset of consecutive linguistic terms of \(S\).

With further research, symmetrically distributed linguistic term sets \(S = \left\{ {S_{t} \left| {t \in \left\lfloor \tau \right\rfloor } \right.} \right\}\) have proved easier to understand in the quantitative transformation of natural language than traditional LTS, and they are used in a growing body of literature. In the set \(S\), \(S_{0}\) is designated as the median term, and the other terms are evenly and symmetrically distributed on both sides of it. Subsequently, Wu and Xu [11] studied the aggregation of more detailed uncertain linguistic information, HFLTS, by adding a possibility distribution to the model.

Definition 2

([11]) Let \(S = \left\{ {S_{t} |t \in \left\lfloor \tau \right\rfloor } \right\}\) and let \({\rm H}_{S} (\vartheta ) = \left\{ {S_{L} ,S_{L + 1} , \cdots ,S_{U} } \right\}\) be an HFLTS on \(S\). The possibility distribution for \({\rm H}_{S} (\vartheta )\) is represented by \({\mathbf{P}} = \left( {p_{ - \tau } , \cdots ,p_{l} , \cdots ,p_{\tau } } \right)\), and \(p_{l}\) is given by

\(p_{l} = \begin{cases} 0, & l = - \tau , \cdots ,L - 1, \\ \dfrac{1}{U - L + 1}, & l = L,L + 1, \cdots ,U, \\ 0, & l = U + 1, \cdots ,\tau , \end{cases}\)

where \(p_{l}\) is the possibility that the term \(S_{l}\) is the assessment under a certain indicator. The possibilities of all linguistic terms under the same indicator sum to 1, that is, \(\sum\nolimits_{l = - \tau }^{\tau } {p_{l} } = 1\), and each possibility satisfies \(0 \le p_{l} \le 1\) for \(l \in \left\lfloor \tau \right\rfloor\).
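As an illustration of Definition 2, the following minimal sketch builds the possibility distribution of an HFLTS, assuming the uniform form in which every term between \(S_{L}\) and \(S_{U}\) receives the same possibility \(1/(U - L + 1)\) and all other terms receive 0. The function name `possibility_distribution` and its parameters are hypothetical and are not taken from the cited works.

```python
from fractions import Fraction


def possibility_distribution(lower, upper, tau):
    """Return (p_{-tau}, ..., p_{tau}) for the HFLTS {S_lower, ..., S_upper}.

    Assumption: possibility is spread uniformly over the terms of the HFLTS.
    """
    if not -tau <= lower <= upper <= tau:
        raise ValueError("need -tau <= lower <= upper <= tau")
    share = Fraction(1, upper - lower + 1)
    # One entry per index l = -tau, ..., tau of the symmetric term set.
    return [share if lower <= l <= upper else Fraction(0)
            for l in range(-tau, tau + 1)]


# Example: a respondent hesitates between S_0, S_1 and S_2 on a 7-term scale.
dist = possibility_distribution(lower=0, upper=2, tau=3)
print(dist)
```

Exact fractions are used so that the entries sum to exactly 1, which makes the normalization condition easy to check.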

After information aggregation, or when some problems require a more accurate evaluation, the use of an LTS alone loses part of the evaluation information and makes the results less accurate. Therefore, Herrera and Martínez [6] proposed the 2-tuple linguistic representation model, which quantifies natural language more accurately and brings the evaluation closer to the respondent’s real psychological state.

Definition 3

([6]) Let \(\beta_{i} \in \left[ {0,g} \right]\) be the result of an aggregation. The function \(\Delta_{S}\) converts \(\beta_{i}\) to its equivalent 2-tuple linguistic information by

\(\Delta_{S} \left( {\beta_{i} } \right) = \left( {s_{i} ,\alpha_{i} } \right)\), with \(\left\{ \begin{gathered} s_{i} , \, i = round\left( {\beta_{i} } \right), \hfill \\ \alpha_{i} = \beta_{i} - i, \, \alpha_{i} \in \left[ { - 0.5,0.5} \right), \hfill \\ \end{gathered} \right.\)

where \(round\left( {\beta_{i} } \right)\) is the usual round operation.

In addition, the inverse function \(\Delta_{S}^{ - 1}\) converts a 2-tuple back to its numerical value: \(\Delta_{S}^{ - 1} \left( {s_{i} ,\alpha_{i} } \right) = i + \alpha_{i} = \beta_{i}\).
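The conversion in Definition 3 can be sketched in a few lines. This is an illustrative sketch only: the names `to_two_tuple` and `from_two_tuple` are hypothetical, and rounding is implemented as round-half-up via `math.floor(beta + 0.5)`, an assumption chosen so that the symbolic translation stays in \([-0.5, 0.5)\) (Python's built-in `round` rounds halves to the nearest even integer, which could yield 0.5).

```python
import math


def to_two_tuple(beta, g):
    """Map beta in [0, g] to (i, alpha): term index i and translation alpha."""
    if not 0 <= beta <= g:
        raise ValueError("beta must lie in [0, g]")
    i = math.floor(beta + 0.5)  # round half up, so alpha is in [-0.5, 0.5)
    return i, beta - i


def from_two_tuple(i, alpha):
    """Inverse mapping: recover the numerical value beta = i + alpha."""
    return i + alpha


index, alpha = to_two_tuple(2.8, g=6)
print(index, alpha)                  # term S_3 with a small negative translation
print(from_two_tuple(index, alpha))  # recovers 2.8 up to floating-point error
```

The same pair of functions applies to the symmetric term set after shifting the indices from \([0, g]\) to \([-\tau, \tau]\).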

Similarly, the symmetrically distributed set \(S = \left\{ {S_{t} \left| {t \in \left\lfloor \tau \right\rfloor } \right.} \right\}\) can also be used in the 2-tuple linguistic representation model. Wei and Liao [91] extend this by combining the HFLTS with the 2-tuple linguistic model, so that an HFLTS retains more information during aggregation.

Definition 4

([91]) Let \(\overline{S}\) be the 2-tuple set associated with S, defined as \(\overline{S} = S \times \left[ { - 0.5,0.5} \right)\). An ordered finite set \(\left\{ {\Delta_{{\text{S}}} (\beta_{i} )|i \in \left[ n \right]} \right\}\) of 2-tuple linguistic values, where \(\Delta_{{\text{S}}} (\beta_{i} ) \le \Delta_{{\text{S}}} (\beta_{j} )\) for any \(i \le j\), is called an H2TLTS on S.

Both LTS and HFLTS provide a basis for the quantitative conversion of language, while the 2-tuple linguistic model and H2TLTS build on them to provide a basis for computing with words. In addition, researchers have explored statistical methods for multiple hesitant fuzzy linguistic terms as a route to information aggregation. Among them, Chen et al. [78] combine the linguistic value of each term in an HFLTS with its possibility to form a pair, so that each pair can be calculated separately in the aggregation process.

Definition 5

([78]) Let \(\overline{S}\) be the 2-tuple set associated with S, defined as \(\overline{S} = S \times \left[ { - 0.5,0.5} \right)\). The pair \(\left( {\Delta_{S} \left( {\beta_{i} } \right),p_{i} } \right)\), consisting of a 2-tuple linguistic term and its possibility, is called a P2TLP. If \(\alpha_{i} = 0\), then the P2TLP \(\left( {\Delta_{S} \left( {\beta_{i} } \right),p_{i} } \right)\) becomes \(\left( {\left( {S_{{t_{i} }} ,0} \right),p_{i} } \right)\).

The evaluations of the same problem by different evaluators form a set of hesitant fuzzy linguistic terms paired with possibilities, \(\Omega = \{ PL_{1} ,PL_{2} , \cdots ,PL_{n} \}\), and an aggregation operator for the whole set is proposed by Chen et al. [78].

Definition 6

([78]) Let \(\Omega = \{ PL_{1} ,PL_{2} , \cdots ,PL_{n} \}\) be a set of P2TLPs, where \(PL_{i} = \left( {\Delta_{S} \left( {\beta_{i} } \right),p_{i} } \right)\) and \(\beta_{i} = \Delta_{S}^{ - 1} \left( {s_{i} ,\alpha_{i} } \right)\) for all \(i \in [n]\), and \({\mathbf{w}} = \left( {w_{1} ,w_{2} , \cdots ,w_{n} } \right)\) is the associated weights of \(PL_{i}\), such that \(w_{i} \in \left[ {0,1} \right]\) and \(\sum\nolimits_{i = 1}^{n} {w_{i} = 1}\). The possibilistic 2-tuple weighted average (P2TLWA) operator can be defined as:

For convenience of calculation, an H2TLTS evaluation is combined with its possibility distribution; the result is called an H2TLTS possibility distribution and serves as the basic object of the subsequent aggregation.

Definition 7

([78]) Let \(\vartheta\) be an H2TLTS with \({\mathbf{P}} = \left( {p_{ - \tau } , \cdots ,p_{l} , \cdots ,p_{\tau } } \right)\), the associated possibility distribution, in which \(p_{i} \in \left[ {0,1} \right]\) is the possibility of 2-tuple linguistic value \(\Delta_{S} \left( {\beta_{i} } \right) = \left( {S_{{t_{i} }} ,\alpha_{i} } \right)\) in \(\vartheta\). An H2TLTS possibility distribution \(\vartheta \left( {\mathbf{P}} \right)\) can be defined as.

where \({\rm T}_{i}\) is a subset of \(\left\lfloor \tau \right\rfloor\), \(\# \vartheta \left( {\mathbf{P}} \right)\) is the number of P2TLPs in \(\vartheta \left( {\mathbf{P}} \right)\), all \(PL_{i} = \left( {\Delta_{S} \left( {\beta_{i} } \right),p_{i} } \right)\) form an ordered finite set of P2TLPs, and \(p_{i}\) is the possibility of each 2-tuple linguistic value \(\Delta_{S} \left( {\beta_{i} } \right)\) such that \(\sum\nolimits_{{i \in \left[ {\# \vartheta \left( {\mathbf{P}} \right)} \right]}} {p_{i} = 1}\) and \(p_{i} \in [0,1]\).

A set of H2TLTS possibility distributions is denoted as \({\rm H}_{{\vartheta \left( {\mathbf{P}} \right)}} = \left\{ {\vartheta_{1} \left( {{\mathbf{P}}_{1} } \right),\vartheta_{2} \left( {{\mathbf{P}}_{2} } \right), \cdots ,\vartheta_{n} \left( {{\mathbf{P}}_{n} } \right)} \right\}\), in which \(\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right) = \left\{ {\left. {PL_{{t_{{j_{i} }} }} = \left( {\Delta_{S} \left( {\beta_{{_{{t_{{j_{i} }} }} }} } \right),p_{{t_{{j_{i} }} }} } \right)} \right|t_{{j_{i} }} \in {\rm T}_{{j_{i} }} ,j_{i} \in \left[ {\# \vartheta_{i} \left( {{\mathbf{P}}_{i} } \right)} \right],i \in [n]} \right\}\). The aggregation operators applied to it are the hesitant possibilistic 2-tuple linguistic weighted average (HP2TLWA) operator, which is based on P2TLWA, and the simplified possibilistic 2-tuple linguistic average (P2TLA) operator.

Definition 8

([78]) Let \({\rm H}_{{\vartheta \left( {\mathbf{P}} \right)}}\) be a set of H2TLTS possibility distributions. Let \({\mathbf{w}} = \left( {w_{1} ,w_{2} , \cdots ,w_{n} } \right)\) be the associated weights of \({\rm H}_{{\vartheta \left( {\mathbf{P}} \right)}}\), which satisfies that \(w_{i} \in \left[ {0,1} \right]\) for all \(i \in \left[ n \right]\) and \(\sum\nolimits_{i = 1}^{n} {w_{i} = 1}\). The HP2TLWA operator is defined by.

where

After the evaluations are aggregated with the HP2TLWA operator, the result contains a large number of linguistic terms and probability distribution values. The key problem is to find a few values that effectively represent the whole. Therefore, Chen et al. [78] applied a clustering algorithm to the aggregated data, so that a small amount of data can represent the whole and the patterns hidden in the data can be discovered. In the clustering process, the most important step is to define the distance, for which Chen et al. [78] further proposed a distance formula for P2TLPs.

Definition 9

([78]) Let \(S\) and \(\overline{S}\) be as before. Let \(PL_{i} = \left( {\Delta_{S} \left( {\beta_{i} } \right),p_{i} } \right)\) and \(PL_{j} = \left( {\Delta_{S} \left( {\beta_{j} } \right),p_{j} } \right)\) be arbitrary two P2TLPs, where \(\beta_{i} = \Delta_{S}^{ - 1} \left( {S_{{t_{i} }} ,\alpha_{i} } \right)\), \(\beta_{j} = \Delta_{S}^{ - 1} \left( {S_{{t_{j} }} ,\alpha_{j} } \right)\), \(p_{i} ,p_{j} \in \left[ {0,1} \right]\), \(t_{i} ,t_{j} \in \left\lfloor \tau \right\rfloor\). The distance measure between them is defined as.

The above covers the preliminaries required in this paper; the following sections introduce the construction of the indicator system and the evaluation model.

4 Excavating Citizen Attention for Civil Aviation Safety in China by Online Comments Mining

This paper constructs an evaluation index system by mining online information on people’s concerns about the safety of civil aviation in China. SPSS is then used to test the reliability of the evaluation.

4.1 Evaluation Index System Construction

With the progress of science and technology, more real-time information spreads rapidly through the Internet and triggers heated public discussion. Online communication has become a new trend in public communication, and people’s comments reflect their attitudes and views on a given event. As a mainstream online communication platform, Weibo brings together a large volume of news, reports and public comments. In this paper, we use a crawler tool, Octopus collector, to explore the public’s concerns about civil aviation safety and build a civil aviation safety evaluation indicator system from these concerns from the public’s perspective.

We first locate media reports of Chinese domestic civil aviation safety incidents in the past three years by searching four keyword pairs: “civil aviation, accident”, “aircraft, unsafe”, “flight, unsafe” and “civil aviation, unsafe”, and then collect the comments under these news items. Since most secondary comments on Weibo are specific discussions of the primary comments and many of them are unrelated to the news itself, we collect only the primary comments, 4940 in total. After filtering, 529 comments remain that discuss the causes of civil aviation safety incidents or express clearly directed concerns about the safety of air travel.

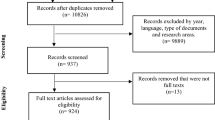

The filtered content is analyzed with the word segmentation and word frequency statistics software ROST Content Mining System Version 6.0 (ROSTCM6), and part of the valid word frequency statistics and the summarized classification results are shown in Fig. 1. In summary, there are ten concerns about civil aviation safety in total. Accordingly, the evaluation indicators are summarized in Table 2.

4.2 Evaluation Linguistic Model Construction and Data Collection

People prefer to use fuzzy language to express their opinions and attitudes in daily life, and many studies have addressed the quantification of fuzzy language. Among the many linguistic quantification models, HFLTS can efficiently represent participants’ evaluations in natural language and is simple for the public to understand, so this paper uses HFLTS to characterize the evaluation information. Accordingly, each question in the questionnaire allows one or more consecutive choices among “don’t care a lot, don’t care much, don’t care little, neutral, care little, care much, care a lot”. We assume that each term chosen by a participant is equally likely, and thus attach an equal possibility distribution to each participant’s set of linguistic terms. For details, see Definition 3 and Definition 5 in Sect. 3. In addition, the evaluation team consists of 20 people with different cultural and work backgrounds, so this study is treated as an LSGDM problem. We present the collected evaluation information with the corresponding complementary possibility distributions as follows. For simplicity, the indicator is represented by “IN” in Tables 3 and 4.

4.3 Reliability and Validity Tests of the Questionnaire

Since a response may contain more than one option, the probability distribution must be supplemented before the expected value is calculated. An example is as follows.

Example 1

The evaluation of indicator 5 (IN5) by the sixth participant in Table 3 includes three values, and the corresponding probability distribution values need to be added first, as follows.

Furthermore, the expectation of this set is \(\left( { - 3} \right) \times \frac{1}{3} + \left( { - 2} \right) \times \frac{1}{3} + \left( { - 1} \right) \times \frac{1}{3} = - 2\), that is, \(S_{ - 2}\). The other values in Table 3 are processed in the same way to obtain their expected values. The processed values are listed in Table 4, and we use them to verify the validity and reliability of the questionnaire with SPSS.
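The calculation in Example 1 can be reproduced with a few lines of Python. This is only an illustrative sketch: the function names `equal_possibility` and `expected_index` are hypothetical, and linguistic terms are represented simply by their subscripts.

```python
from fractions import Fraction


def equal_possibility(term_indices):
    """Attach the same possibility 1/k to each of the k chosen term subscripts."""
    k = len(term_indices)
    return [(t, Fraction(1, k)) for t in term_indices]


def expected_index(distribution):
    """Expected subscript of a list of (subscript, possibility) pairs."""
    return sum(t * p for t, p in distribution)


# Example 1: the sixth participant chooses S_-3, S_-2 and S_-1 for IN5.
in5 = equal_possibility([-3, -2, -1])
print(expected_index(in5))  # -2, i.e. S_-2
```

Exact fractions are used so that the three possibilities sum to exactly 1.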

4.4 Reliability Tests

Cronbach’s alpha coefficient, which takes values between 0 and 1, is the most commonly used reliability measure. An alpha below 0.6 is considered to indicate deficient internal consistency; a value greater than 0.7 but not more than 0.8 indicates considerable reliability; and a value between 0.8 and 0.9 indicates excellent reliability. We use SPSS 26 to compute the coefficient and obtain an alpha of 0.977, which is higher than 0.9, indicating that the questionnaire is reliable. The result of the reliability test is shown in Table 5.
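For readers without SPSS, the standard formula for Cronbach’s alpha, \(\alpha = \frac{k}{k - 1}\left( {1 - \frac{{\sum\nolimits_{j} {s_{j}^{2} } }}{{s_{T}^{2} }}} \right)\) with k items, item variances \(s_{j}^{2}\) and total-score variance \(s_{T}^{2}\), can be sketched as follows. The function name and the toy scores are hypothetical and are not the data of Table 4.

```python
from statistics import variance


def cronbach_alpha(scores):
    """scores: one row per respondent, one numeric column per item."""
    k = len(scores[0])
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy data: three respondents whose answers to three items move together.
toy = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
alpha = cronbach_alpha(toy)
```

Perfectly consistent items, as in the toy data, give the upper bound of 1; less consistent answers lower the coefficient.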

4.5 Validity Tests

The Kaiser–Meyer–Olkin (KMO) statistic also ranges between 0 and 1. Usually, a KMO greater than 0.7 is considered to satisfy the requirements of the investigation. Meanwhile, Bartlett’s sphericity test is used to determine whether the variables are suitable for factor analysis. The KMO calculated by SPSS is greater than 0.8, as shown in Table 6, which indicates that the investigation meets the requirement; the P value of the sphericity test (P = 0.0001) is lower than 0.05, which rejects the hypothesis that the variables are mutually uncorrelated and indicates that they are correlated to some extent.

5 Public Civil Aviation Safety Perception Evaluation Model Based on the Improved N2S-KMC

N2S-KMC was first proposed by Chen et al. [88]. However, the algorithm still has some drawbacks when applied to large group decision problems:

- First, if there are many evaluators and complex evaluation information in large group decision making, grouping with the optimization model significantly increases the running time of the algorithm.

- Second, the performance of K-means clustering relies on the selection of the initial clustering centers and the number of clusters, which affects the typicality and accuracy of the simplified results, as well as the running time of the algorithm.

Based on that, we improve the N2S-KMC as follows. First, K-means clustering with the distance measures is used to cluster evaluators instead of grouping evaluations under each criterion, which reduces the computing time; for this step, two variants are proposed: (1) unsupervised K-means clustering and (2) improved traditional K-means clustering. Second, we use the improved unsupervised K-means clustering method to address the shortcomings that appear when clustering the aggregated results, where the clustering performance is improved by automatically increasing the number of clusters. The basic symbols used throughout the algorithm are as follows.

- Assuming that the evaluation team contains n members, the x-th evaluator is denoted as \(ev_{x}\), where \(x = 1,2, \cdots ,n\).

- Assuming that the evaluation index system contains m indicators, the evaluation of the y-th indicator by the x-th evaluator is denoted as \(\vartheta_{y}^{x} \left( {{\mathbf{P}}_{y}^{x} } \right)\), which is an H2TLTS possibility distribution, where \(y = 1,2, \cdots ,m\).

- The evaluation vector of the x-th evaluator over all indicators is denoted as \({\mathbf{E}}_{x} = \left( {\vartheta_{1}^{x} \left( {{\mathbf{P}}_{1}^{x} } \right), \cdots ,\vartheta_{y}^{x} \left( {{\mathbf{P}}_{y}^{x} } \right), \cdots ,\vartheta_{m}^{x} \left( {{\mathbf{P}}_{m}^{x} } \right)} \right)^{{\text{T}}}\), and the entire assessment matrix of all evaluators is denoted as \({\mathbf{E}} = \left\{ {{\mathbf{E}}_{1} , \cdots ,{\mathbf{E}}_{x} , \cdots ,{\mathbf{E}}_{n} } \right\}\).

In addition, the distance measures applied in the improved K-means clustering are introduced below: the distance between the evaluations of a given indicator from different evaluators, and the distance between evaluators over all indicators.

As mentioned before, we use the HFLTS possibility distribution to quantify the evaluation of an indicator by a specific evaluator, and its basic unit is the P2TLP. Computing the distance between the evaluations of the same indicator from different evaluators therefore requires two steps: first obtaining the distance between two arbitrary P2TLPs, and then computing the distance between two HFLTS possibility distributions. Different HFLTS possibility distributions may contain different P2TLPs. Inspired by Xiong et al. [92], who proposed a method to measure the distance between two arbitrary HFLTS possibility distributions, we follow their procedure for supplementing the elements of HFLTS possibility distributions. Meanwhile, following Chen et al.’s [78] distance measure, a novel distance measure is defined on the supplemented HFLTS possibility distributions. In brief, each HFLTS possibility distribution is supplemented with P2TLPs that contain its absent linguistic terms with possibility value 0, so that every HFLTS possibility distribution contains the same number of P2TLPs when the distance is calculated. The definition of the novel distance measure, with the corresponding proposition and proof, is as follows.

Definition 10

Let \(\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right)\) and \(\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)\) be two arbitrary supplementary HFLTS possibility distributions, where.

and the number of P2TLPs contained in \(\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right)\) or \(\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)\) is denoted as \(\# \vartheta^{\prime}\left( {\mathbf{P}} \right)\). The distance between two arbitrary \(\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right)\) and \(\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)\) is defined as:

This distance measure has the following properties.

Proposition 1.

For two arbitrary HFLTS possibility distributions \(\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right)\) and \(\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)\), the following statements hold:

(1) Boundedness: \(0 \le D\left( {\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right),\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)} \right) \le 1\).

(2) Symmetry: \(D\left( {\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right),\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)} \right) = D\left( {\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right),\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right)} \right)\).

(3) Reflexivity: \(D\left( {\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right),\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)} \right) = 0\) if and only if \(\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right) = \vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)\).

(4) Triangle inequality: \(D\left( {\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right),\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)} \right) \le D\left( {\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right),\vartheta_{k} \left( {{\mathbf{P}}_{k} } \right)} \right) + D\left( {\vartheta_{k} \left( {{\mathbf{P}}_{k} } \right),\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)} \right)\) for an arbitrary \(\vartheta_{k} \left( {{\mathbf{P}}_{k} } \right)\).

Proof.

Boundedness, symmetry and reflexivity follow directly from the definition. The following is the proof of the triangle inequality.

For two arbitrary HFLTS possibility distributions \(\vartheta_{i} \left( {{\mathbf{P}}_{i} } \right)\) and \(\vartheta_{j} \left( {{\mathbf{P}}_{j} } \right)\), we have

Having obtained the distance between different evaluations under the same indicator, we further propose the distance measure between different evaluators over all indicators, which is the average of the distances between the two evaluators’ evaluations across the indicators.

The above provides the foundation for constructing the evaluation model, and the following section focuses on the improvement of the original algorithm combined with the new distance measure.
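The supplementation step and the evaluator-level average described above can be sketched as follows. This is a minimal illustration under stated assumptions: each possibility distribution is a dictionary from term subscript to possibility, supplementation is done over the union of the two term sets, and `indicator_distance` is a simple stand-in (half the sum of absolute possibility differences), not the distance of Definition 10. All function names are hypothetical.

```python
def supplement(dist_a, dist_b):
    """Pad both distributions with absent terms at possibility 0."""
    terms = sorted(set(dist_a) | set(dist_b))
    padded_a = {t: dist_a.get(t, 0.0) for t in terms}
    padded_b = {t: dist_b.get(t, 0.0) for t in terms}
    return padded_a, padded_b


def indicator_distance(dist_a, dist_b):
    """Stand-in distance on supplemented distributions (assumption, not Definition 10)."""
    a, b = supplement(dist_a, dist_b)
    return 0.5 * sum(abs(a[t] - b[t]) for t in a)


def evaluator_distance(eval_x, eval_z, dist=indicator_distance):
    """Average of the per-indicator distances between two evaluators."""
    return sum(dist(p, q) for p, q in zip(eval_x, eval_z)) / len(eval_x)


# Two evaluators, two indicators each (toy values).
ev1 = [{-1: 0.5, 0: 0.5}, {2: 1.0}]
ev2 = [{0: 0.5, 1: 0.5}, {2: 1.0}]
d12 = evaluator_distance(ev1, ev2)
```

Passing the per-indicator distance as a parameter keeps the averaging step independent of the specific measure, so the distance of Definition 10 could be substituted without changing `evaluator_distance`.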

5.1 Two K-Means Clustering Algorithms for Clustering Evaluators

Clustering the evaluators instead of traversing the groupings in N2S-KMC can significantly shorten the computing time of the algorithm. At the same time, the cluster centers are used to represent the corresponding clusters, which reduces the amount of data while providing representative evaluations. However, K-means clustering is sensitive to the selection of clustering centers and the number of clusters, which affects the clustering performance and computing time. Therefore, two K-means clustering algorithms are proposed in this paper: (1) K-means clustering using only the new distance measure and (2) unsupervised K-means clustering incorporating clustering effect evaluation. Each is introduced below.

5.1.1 K-Means Clustering for Evaluators Only with the Novel Distance Measure (KND)

Step 1: Determine the initial clustering centers and number of clusters. The initial number of clusters K is randomly decided, and the initial cluster centers are randomly selected from \({\mathbf{E}}\), which is represented as \({\mathbb{C}} = \left\{ {c_{1} , \cdots ,c_{t} , \cdots ,c_{K} } \right\}\) with \(c_{t} = \left\{ {c_{1}^{t} ,c_{2}^{t} , \cdots ,c_{y}^{t} , \cdots ,c_{m}^{t} } \right\}\). Each assessment vector is denoted as \({\mathbf{E}}_{x} = \left( {\vartheta_{1}^{x} \left( {{\mathbf{P}}_{1}^{x} } \right),\vartheta_{2}^{x} \left( {{\mathbf{P}}_{2}^{x} } \right), \cdots ,\vartheta_{y}^{x} \left( {{\mathbf{P}}_{y}^{x} } \right), \cdots ,\vartheta_{m}^{x} \left( {{\mathbf{P}}_{m}^{x} } \right)} \right)^{{\text{T}}}\).

Step 2: Assign the elements to the corresponding clusters according to the shortest distance. Calculate the distance between \({\mathbf{E}}_{x}\) and \(c_{t}\) with Eq. (2). Suppose

then \({\mathbf{E}}_{x}\) is allocated to the nearest cluster \(G_{e}\).

Step 3: Update the cluster centers. Calculate the cluster centers according to the clusters from Step 2 with the HP2TLWA operator. Let \(I_{y}^{{G_{t} }}\) be the set of the elements in cluster \(G_{t}\) under indicator y, where \(I_{y}^{{G_{t} }} = \left\{ {\vartheta_{y}^{1} \left( {{\mathbf{P}}_{y}^{1} } \right),\vartheta_{y}^{2} \left( {{\mathbf{P}}_{y}^{2} } \right), \cdots ,\vartheta_{y}^{{\# G_{t} }} \left( {{\mathbf{P}}_{y}^{{\# G_{t} }} } \right)} \right\}\), then the center of cluster \(G_{t}\) under indicator y is

and the center of cluster \(G_{t}\) is updated to \(c^{\prime}_{t} = \left\{ {c_{1}^{t\prime } , \cdots ,c_{y}^{t\prime } , \cdots ,c_{m}^{t\prime } } \right\}\), where \(c_{y}^{t\prime } = HP2TLWA\left( {I_{y}^{{G_{t} }} } \right)\).

Step 4: Determine iteration termination conditions. Let the threshold value be \(\varepsilon\) (\(\varepsilon > 0\)). If \(Dis \le \varepsilon\), go to the next step; otherwise, return to step 2. \(Dis\) is obtained as \(Dis = D\left( {c_{t} ,c^{\prime}_{t} } \right)\), where \(c_{t}\) and \(c^{\prime}_{t}\) are the clustering centers before and after the update respectively.

Step 5: Obtain the clusters and the corresponding centers. \({\mathbb{G}} = \left\{ {G_{1} ,G_{2} , \cdots ,G_{K} } \right\}\) is the set of clusters and \({\mathbb{C}} = \left\{ {c_{1} ,c_{2} , \cdots ,c_{t} , \cdots ,c_{K} } \right\}\) is the set of centers.
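Steps 1 to 5 can be summarized in a short sketch. To keep it self-contained, each evaluator is represented here by a plain numeric vector (for instance, expected term subscripts), the distance is a mean absolute difference and the center update is a component-wise mean. These are stand-ins chosen for illustration, not the novel distance measure or the HP2TLWA operator; both are passed as parameters so they can be replaced. The function names and the `seed` and `max_iter` arguments are hypothetical.

```python
import random


def mean_abs_distance(u, v):
    """Stand-in distance between two evaluation vectors (assumption)."""
    return sum(abs(a - b) for a, b in zip(u, v)) / len(u)


def mean_vector(vectors):
    """Stand-in for the HP2TLWA aggregation: component-wise mean (assumption)."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def knd(E, K, eps=0.1, distance=mean_abs_distance, aggregate=mean_vector,
        seed=0, max_iter=100):
    rng = random.Random(seed)
    centers = rng.sample(E, K)                      # Step 1: random initial centers
    clusters = [[] for _ in range(K)]
    for _ in range(max_iter):
        clusters = [[] for _ in range(K)]
        for x, Ex in enumerate(E):                  # Step 2: assign to nearest center
            e = min(range(K), key=lambda t: distance(Ex, centers[t]))
            clusters[e].append(x)
        new_centers = [                             # Step 3: update centers
            aggregate([E[x] for x in G]) if G else centers[t]
            for t, G in enumerate(clusters)
        ]
        dis = max(distance(c, c2) for c, c2 in zip(centers, new_centers))
        centers = new_centers
        if dis <= eps:                              # Step 4: termination test
            break
    return clusters, centers                        # Step 5


# Toy data: four evaluators, two indicators, two obvious groups.
E = [[-3, -3], [-2.8, -3], [3, 3], [3, 2.8]]
clusters, centers = knd(E, K=2)
```

An empty cluster keeps its previous center in this sketch; the source does not say how that case is handled.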

5.1.2 Unsupervised K-Means Clustering with Density Assessment (UK)

Since traditional K-means clustering requires the number of clusters and the initial cluster centers to be specified in advance, its performance is directly related to these choices. In addition, inspired by Zhang et al.’s algorithm [89] for density canopy pre-clustering, if the final generated clusters are consistent with the data distribution pattern, the clustering is appropriate. Accordingly, we propose a density-based intra-group distance measure, an inter-group distance measure, mean coefficients and extended silhouette coefficients, which are used to estimate the clustering performance and turn the algorithm into unsupervised K-means clustering. The novel concepts proposed are as follows.

Definition 11

Let \({\mathbf{E}} = \left\{ {{\mathbf{E}}_{1} ,{\mathbf{E}}_{2} , \cdots {\mathbf{E}}_{x} , \cdots ,{\mathbf{E}}_{n} } \right\}\) be an assessment matrix with n evaluators, where \({\mathbf{E}}_{x} = \left( {\vartheta_{1}^{x} \left( {{\mathbf{P}}_{1}^{x} } \right),\vartheta_{2}^{x} \left( {{\mathbf{P}}_{2}^{x} } \right), \cdots ,\vartheta_{y}^{x} \left( {{\mathbf{P}}_{y}^{x} } \right), \cdots ,\vartheta_{m}^{x} \left( {{\mathbf{P}}_{m}^{x} } \right)} \right)^{{\text{T}}}\) with m indicators. After being clustered, \({\mathbf{E}} = \left\{ {C_{1} ,C_{2} , \cdots ,C_{t} , \cdots ,C_{K} } \right\}\), where \(C_{t} = \left\{ {\left. {E_{i} } \right|i = 1,2, \cdots ,t, \cdots ,\# C_{t} } \right\}\), then the intra-class average distance with \(C_{t}\) is given by.

Definition 12

The cluster distance \(b\left( {E_{i} } \right)\) represents the distance between \(E_{i}\) and the other \(E\) with a higher density, which is calculated by.

Based on the intra-class distance and inter-class distance, we propose mean coefficient and density silhouette coefficient.

Definition 13

Let \(E_{i}\), \(E_{j}\), \(C_{t}\) be as described above. The mean coefficient, denoted by \({\mathbf{M}}\), is the sum of the differences between the average distance of all elements and the average distance of elements within the cluster.

where \(f(x) = \begin{cases} 0, & x < 0 \\ 1, & x \ge 0 \end{cases}\). \({\mathbf{M}} = 0\) indicates that the clustering is valid.

Definition 14

The density silhouette coefficient assesses the clustering performance based on data density, with \(a\left( {E_{i} } \right)\) and \(b\left( {E_{i} } \right)\) as above. It is calculated by.

The average of the density silhouette values derived by Eq. (3) is \(S = \frac{1}{n}\sum\nolimits_{i = 1}^{n} {s\left( {E_{i} } \right)}\). When \(S \ge 0.5\), the clustering result is considered appropriate.

The mean and silhouette coefficients can effectively evaluate the performance of clustering, while applying the distance measure to the improved K-means clustering algorithm makes the H2TLTS possibility distribution clustering more reasonable. The specific unsupervised clustering process is described in Algorithm 1.
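The idea of raising the number of clusters until a validity criterion is met can be sketched as follows. This is not Algorithm 1: the classical silhouette coefficient \(\left( {b - a} \right)/\max \left( {a,b} \right)\) is used as a stand-in for the density silhouette coefficient, the mean coefficient M is not checked, evaluators are plain numeric vectors, and the initial centers are picked at evenly spaced positions. All names are hypothetical.

```python
def dist(u, v):
    """Stand-in distance: mean absolute difference (assumption)."""
    return sum(abs(a - b) for a, b in zip(u, v)) / len(u)


def kmeans(points, K, iters=50):
    # Deterministic, evenly spaced initial centers (assumption).
    centers = [points[i * len(points) // K] for i in range(K)]
    labels = [0] * len(points)
    for _ in range(iters):
        labels = [min(range(K), key=lambda t: dist(p, centers[t])) for p in points]
        new = []
        for t in range(K):
            members = [p for p, l in zip(points, labels) if l == t]
            new.append([sum(c) / len(members) for c in zip(*members)]
                       if members else centers[t])
        if new == centers:
            break
        centers = new
    return labels


def average_silhouette(points, labels):
    """Classical average silhouette; singleton clusters contribute 0."""
    total = 0.0
    for i, p in enumerate(points):
        own = [dist(p, q) for j, q in enumerate(points)
               if labels[j] == labels[i] and j != i]
        if not own:
            continue
        a = sum(own) / len(own)
        b = min(
            sum(dist(p, q) for q, l in zip(points, labels) if l == t) / labels.count(t)
            for t in set(labels) if t != labels[i]
        )
        denom = max(a, b)
        total += (b - a) / denom if denom else 0.0
    return total / len(points)


def unsupervised_kmeans(points, s_min=0.5, k_start=2):
    """Increase K until the average silhouette reaches s_min."""
    for K in range(k_start, len(points)):
        labels = kmeans(points, K)
        if len(set(labels)) > 1 and average_silhouette(points, labels) >= s_min:
            return K, labels
    return len(points), list(range(len(points)))
```

Calling `unsupervised_kmeans(points)` returns the first number of clusters that passes the threshold together with the cluster label of each point, so no cluster count has to be supplied by hand.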

5.2 Distance-Entropy Weight Model Based on Clustering Centers

Information entropy, as a powerful tool to describe information uncertainty, has been widely used in information theory for information processing optimization. Entropy measures the degree of disorder in a system. At present, in the field of information fusion, entropy is mostly used as a tool for determining weights and measuring the orderliness of a system, which can effectively reduce the interference of subjective factors by measuring the amount of information contained in the system. Guan et al. [90] combined information entropy and distance to propose distance entropy: (1) by considering the evaluation information as a system, the process of evaluation information fusion is similar to the process of molecular interaction in the system; (2) the stability of the system is achieved by reducing the distance between molecules, at which point the total entropy of the fusion system is the smallest, the information of the system is the largest, and the decision is the most advantageous. This paper draws on Guan et al.’s idea [90] and applies it to the clustered evaluations to determine the weight of each evaluation indicator.

Distance entropy is obtained by measuring the distances between elements in the system and using the distance ratios to represent the probabilities of occurrence of the individuals in the system. Because the amount of evaluation information is large, this paper calculates the distance entropy using a new evaluation matrix composed of the clustering centers obtained in Sect. 5.1. Meanwhile, the distance measure between different evaluations follows Eq. (2). Details are as follows.

Definition 15

Let \({\mathbb{C}} = \left\{ {c_{1} , \cdots ,c_{t} , \cdots ,c_{K} } \right\}\) be as before, and let the optimal value for the y-th indicator be \(\vartheta_{y}^{{c^{ * } }} \left( {{\mathbf{P}}_{y}^{{c^{ * } }} } \right)\), where \(c^{ * }\) represents the clustering center corresponding to the optimal value. Then, the distance between the optimal value \(\vartheta_{y}^{{c^{ * } }} \left( {{\mathbf{P}}_{y}^{{c^{ * } }} } \right)\) and any other evaluation \(\vartheta_{y}^{{c_{t} }} \left( {{\mathbf{P}}_{y}^{{c_{t} }} } \right)\) under the same indicator is.

Definition 16

Let \(Ed_{y}\) be the distance entropy with indicator y. \(d_{{\vartheta_{y}^{{c_{t} }} \left( {{\mathbf{P}}_{y}^{{c_{t} }} } \right)}}\) is as before. The entropy calculation formula is as follows.

The ratio of each distance to the sum of all distances under an indicator is used as the possibility of the corresponding evaluation information in the information entropy, which yields the distance entropy of that indicator. Accordingly, we propose the distance-entropy weight model for clustering centers. Except for the introduction of distance entropy, the calculation process is close to that of the traditional entropy method. The process is as follows.

Step 1: Calculate the distance between all evaluations under each indicator and the optimal evaluation. The distance measure is shown in Eq. (2).

Step 2: Calculate the distance entropy of each indicator with Eq. (2).

Step 3: Normalize the distance entropy from Step 2 to generate the entropy value that characterizes the importance of the indicator, where the normalization equation is \(e_{y} = - \frac{1}{\ln m}Ed_{y}\), \(y = 1,2, \ldots ,m\).

Step 4: The objective weights \(w_{y}\) of indicator y are further obtained by normalizing \(1 - e_{y}\).
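Steps 1 to 4 can be sketched as below. The sketch rests on an assumption: the distance entropy is taken in the Shannon form \(Ed_{y} = \sum\nolimits_{t} {p_{t} \ln p_{t} }\), where \(p_{t}\) is the ratio of each distance to the sum of distances under indicator y, with \(0\ln 0\) treated as 0. The normalization by \(\ln m\) follows Step 3. The function name and the toy distances are hypothetical, and each indicator is assumed to have a positive total distance.

```python
import math


def distance_entropy_weights(D):
    """D[y][t]: distance from center t's evaluation of indicator y to the optimal one."""
    m = len(D)                                   # number of indicators
    e = []
    for row in D:
        total = sum(row)                         # assumed positive
        ps = [d / total for d in row]            # distance ratios as possibilities
        Ed = sum(p * math.log(p) for p in ps if p > 0)   # assumed Shannon form
        e.append(-Ed / math.log(m))              # Step 3: e_y = -(1 / ln m) * Ed_y
    raw = [1 - ey for ey in e]                   # Step 4: normalize 1 - e_y
    s = sum(raw)
    return [r / s for r in raw]


# Toy distances for three indicators and three cluster centers.
w = distance_entropy_weights([[1, 1, 1], [0, 0, 3], [1, 2, 0]])
```

In the toy data, the indicator whose distances are spread evenly receives almost no weight, while the indicator whose distance is concentrated on one center receives the largest weight.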

5.3 Two K-2S-KMC Evaluation Aggregation Algorithms

Clustering the evaluators simplifies the initial evaluation data (Sect. 5.1), and the weight of each indicator is calculated by the distance-entropy weight model (Sect. 5.2). Then, the simplified evaluation information needs to be aggregated, for which we follow the statistical principle-based aggregation operator (Definition 8) proposed by Chen et al. [78]. However, the aggregated data are transformed into H2TLTS expressions and their number grows explosively, which makes the results difficult for decision makers to analyze. Accordingly, the N2S-KMC algorithm introduces K-means clustering for clustering H2TLTS, but K-means clustering still has an inherent shortcoming: its performance is sensitive to the initial clustering centers and the number of clusters. This paper improves it to unsupervised K-means clustering for H2TLTS, which can output suitable clustering results without manual adjustment. The improved K-means algorithm for H2TLTS and the overall K-2S-KMC algorithm process are described below.

5.3.1 Unsupervised K-Means Clustering Algorithm for Clustering the Aggregated Data

The selection of initial clustering centers in our improved K-means clustering algorithm follows the approach in N2S-KMC: all aggregated evaluations are sorted in descending order by their evaluation semantic values, and the centers are then selected according to the identified initial number of clusters. The principle of the unsupervised K-means clustering for H2TLTS is similar to that in Sect. 5.1; the difference is that all measures of clustering performance are applied to H2TLTS. The new measure for assessing the clustering quality according to the data distribution is presented first below.

Definition 17

Let \(\Omega\) be a H2TLTS and the associated possibility distribution, where \(\Omega = \left\{ {PL_{1} ,PL_{2} ,...,PL_{n} } \right\}\), and \(PL_{i} = (\Delta_{S} (\beta_{i} ),p_{i} )\) for all \(i \in [n]\). Denote the clustered \(\Omega\) as \(\Omega = \left\{ {G_{1} ,...,G_{c} ,..,G_{N} } \right\}\), where \(G_{c} = \left\{ {\left. {PL_{{i^{*} }}^{c} } \right|i^{*} = 1,2,...,\# G_{c} } \right\}\). The elements in \(G_{c}\) are clustered by improved unsupervised K-means clustering, where \(G_{c} = G_{c,1} \cup G_{c,2} \cup ... \cup G_{c,k}\) and \(G_{c,m} = \left\{ {\left. {PL_{{i^{*} ,m}}^{c} } \right|i^{*} = 1,2,...,\# G_{c,m} ,m = 1,2,...,k} \right\}\). Then, the intra-class average distance of \(PL_{{i_{c}^{*} ,m}}^{c}\) is defined as.

Definition 18

Let \(\Omega\),\(G_{c}\),\(G_{c,m}\),\(PL_{{i_{c}^{*} ,m}}^{c}\) be as described above. The cluster distance of \(PL_{{i_{c}^{*} ,m}}^{c}\) is shown below.

The inter-class distance is also defined following Zhang et al.’s [89] idea, by comparing the density of the cluster containing the element to be measured with that of the other classes, where the density is the number of elements in the cluster.

Definition 19

Let \(\Omega\), \(G_{c}\), \(G_{c,m}\), \(PL_{{i_{c}^{*} ,m}}^{c}\) be as described above. The mean coefficient of \(G_{c}\), denoted by M, is the sum of the differences between the average distance of all elements and the average distance of elements within each class. \({\text{M}} = 0\) indicates that the clustering is valid; otherwise, it should be adjusted.

where \(f(x) = \begin{cases} 0, & x < 0 \\ 1, & x \ge 0 \end{cases}\).

Definition 20

The intra-cluster distance is obtained by Definition 17 and the inter-cluster distance is obtained by Definition 18. Thus, the improved silhouette coefficient for the cluster \(G_{c}\) is as below.

The improved silhouette coefficient of a cluster is obtained by calculating, for each point, the ratio of the difference between the intra-cluster distance and the inter-cluster distance to the maximum difference, and then taking the average. If the silhouette coefficient is greater than 0.5, the clustering is considered effective; otherwise, it should be adjusted.

Based on the above content, the specific calculation process of the improved unsupervised K-means is as follows:

It is worth noting that the distinction between UK and UKC lies in the stage of LSGDM to which each is applied: the former clusters the evaluators based on HFLTS, and the latter clusters the aggregated H2TLTS. Because of these differences between the two linguistic representation models, the distance measure and the determination of clustering centers also differ between the two clustering algorithms.

5.3.2 K-2S-KMC Evaluation Aggregation Algorithm

The K-2S-KMC algorithm is a further extension of the N2S-KMC proposed by Chen et al. [88]. Based on the detailed description of the improvements in the previous subsections, the following content mainly describes the overall flow of the K-2S-KMC algorithm. For details, see Fig. 2. For ease of expression, the algorithm that uses KND to cluster evaluators and UKC in the two stages is denoted by KND-2S-UKC, and the algorithm that uses UK to cluster evaluators and UKC in the two stages is denoted by UK-2S-UKC.

From the perspective of improving the algorithmic process, the enhanced LSGDM framework in this paper has the following advantages.

(1) The use of a clustering algorithm instead of the grouping model in the N2S-KMC algorithm greatly improves the computational efficiency.

(2) Distance entropy is used to determine the weights of the indicators, integrating the natural language distance measure into the entropy weighting process, which reflects more realistically the dispersion of data under different indicators.

(3) Using unsupervised K-means clustering to cluster the aggregated data makes the clustering results more reasonable and the process more efficient.

Both algorithms proposed in the article provide new ideas for large group decision problems.

6 Perception Evaluation of China’s Civil Aviation Safety Based on K-2S-KMC

The evaluation data are presented in Table 3. This section aggregates the evaluation values using the two algorithms proposed above, with the corresponding weights determined by the distance-entropy weight model.

6.1 The Calculation Process of the Two Algorithms

KND-2S-UKC Algorithm

The algorithm consists of two processes: clustering of evaluators with KND and 2-stage aggregation of evaluation data with 2S-UKC.

Cluster the evaluators using KND:

The KND algorithm proposed in Sect. 5.1 is used to cluster the evaluators with HFLTS. The cluster centers are initialized randomly, the distance between the evaluators and the cluster centers is calculated, and the final cluster centers are then determined using the HP2TLWA operator. After several experiments, the most appropriate number of clusters is found to be 5 with a threshold of 0.1. The clustering results are listed in Table 7. The clusters are aggregated by the following methods, and the cluster centers are used to calculate the indicator weights with the distance-entropy weight model.

Use 2S-UKC to Aggregate Evaluations:

2S-UKC consists of two stages that follow the idea of aggregating first and then clustering.

Stage 1: Aggregate the evaluation information under each indicator for the evaluators within each cluster; the number of evaluators in each cluster is listed in Table 7.

Stage 2: Normalize the weights of each cluster. The number of P2TLPs under each indicator after aggregation is counted, and the evaluation information containing more than two P2TLPs is clustered with UKC; the centers are listed in Table 8.

Repeat the Two-Stage Paradigm

The P2TLPs from different clusters are aggregated for each indicator in Stage 1.

The aggregated P2TLPs are clustered with UKC in Stage 2.

The results are listed in Table 8.
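
One pass of the aggregate-then-cluster idea for a single indicator can be sketched as below. This is only an illustration under several assumptions: P2TLPs are replaced by plain numbers, Stage 1 uses an unweighted mean, cluster weights are taken proportional to cluster size, and Stage 2 groups the aggregated values around two centers instead of running UKC. All function names are hypothetical.

```python
def stage1_aggregate(scores_by_cluster):
    """Stage 1: one aggregated score per evaluator cluster (plain mean)."""
    return [sum(scores) / len(scores) for scores in scores_by_cluster]


def normalized_weights(cluster_sizes):
    """Cluster weights proportional to size, summing to 1 (assumed rule)."""
    total = sum(cluster_sizes)
    return [size / total for size in cluster_sizes]


def stage2_cluster(values, weights, max_iter=20):
    """Stage 2: if more than two values remain, group them around two centers.

    Returns a list of (center, weight) pairs.
    """
    if len(values) <= 2:
        return list(zip(values, weights))

    centers = [min(values), max(values)]
    for _ in range(max_iter):
        groups = [[], []]
        for value, weight in zip(values, weights):
            nearest = 0 if abs(value - centers[0]) <= abs(value - centers[1]) else 1
            groups[nearest].append((value, weight))
        # Weighted mean of each group; an empty group keeps its center.
        updated = [
            sum(v * w for v, w in group) / sum(w for _, w in group) if group else center
            for group, center in zip(groups, centers)
        ]
        if updated == centers:
            break
        centers = updated

    return [
        (center, sum(w for _, w in group))
        for center, group in zip(centers, groups)
        if group
    ]


# Hypothetical scores of four evaluator clusters on one indicator.
scores_by_cluster = [[1.0, 1.0, 1.0], [1.2], [4.0, 4.0], [4.2, 3.8, 4.0, 4.0]]
aggregated = stage1_aggregate(scores_by_cluster)
weights = normalized_weights([len(s) for s in scores_by_cluster])
centers = stage2_cluster(aggregated, weights)
```

Repeating the paradigm would feed the returned centers and weights back into the same two steps.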

UK-2S-UKC Algorithm

The algorithm consists of two processes: clustering of evaluators with UK and then 2-stage aggregation of evaluation data with 2S-UKC.

Cluster the evaluators using UK:

The UK algorithm proposed in Sect. 5.1 with H2TLTS is used to cluster the evaluators. The threshold is 0.1, and M = 0 with S > 0.5; the resulting clusters and their evaluators are listed in Table 9. The clusters are aggregated by 2S-UKC, and the indicator weights are obtained from the cluster centers using the distance-entropy weight model.
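
The idea of letting the algorithm choose the number of clusters by checking the clustering effect can be sketched as follows. This is a sketch under stated assumptions rather than the UK algorithm: evaluations are numeric vectors, the clustering effect is judged with the standard mean silhouette coefficient only (the coefficient M and the improved coefficient defined in the paper are not modeled), and the centers are seeded deterministically with a farthest-point rule for reproducibility. All function names are hypothetical.

```python
def dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def kmeans(points, k, max_iter=50):
    # Deterministic farthest-point seeding keeps the sketch reproducible.
    centers = [list(points[0])]
    while len(centers) < k:
        farthest = max(points, key=lambda p: min(dist(p, c) for c in centers))
        centers.append(list(farthest))

    labels = None
    for _ in range(max_iter):
        new_labels = [
            min(range(k), key=lambda c: dist(p, centers[c])) for p in points
        ]
        if new_labels == labels:
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def mean_silhouette(points, labels):
    clusters = set(labels)
    if len(clusters) < 2:
        return -1.0
    scores = []
    for i, p in enumerate(points):
        own = [dist(p, q) for j, q in enumerate(points)
               if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        a = sum(own) / len(own)
        b = min(
            sum(dist(p, q) for q, label in zip(points, labels) if label == other)
            / labels.count(other)
            for other in clusters if other != labels[i]
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


def auto_cluster(points, min_score=0.5, max_k=None):
    """Increase k until the mean silhouette exceeds min_score."""
    max_k = max_k or len(points) - 1
    best = None
    for k in range(2, max_k + 1):
        labels = kmeans(points, k)
        score = mean_silhouette(points, labels)
        if score > min_score:
            return k, labels, score
        if best is None or score > best[2]:
            best = (k, labels, score)
    return best  # no k met the requirement: return the best attempt


# Hypothetical one-dimensional evaluations forming three groups.
points = [[0.0], [0.2], [5.0], [5.2], [10.0], [10.2]]
k, labels, score = auto_cluster(points)
```

With these sample points, two clusters split the middle group and do not reach the 0.5 requirement, so the loop moves on to three clusters.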

Use 2S-UKC to aggregate evaluations:

The process is the same as in the KND-2S-UKC algorithm: Stage 1 aggregates the evaluation data under each indicator, and Stage 2 clusters the aggregated data with UKC. The two-stage paradigm is then repeated. The results are listed in Table 10.

6.2 Comparison

This section compares the running time, space occupation and evaluation results of the two proposed models. All algorithms are run in MATLAB, which is also used to obtain the time and space comparisons.

6.2.1 Operation Time Comparison

Time complexity is the amount of computational effort required to run an algorithm. As the above figure shows, the running times of the two algorithms are almost the same, which also indicates that UK and KND take almost the same computing time when clustering the evaluators. The reason is that the running time of the K-means algorithm grows linearly, and the improved unsupervised K-means clustering only increases the number of clusters and iterates again after judging whether the clustering effect meets the requirements, so its running time also grows linearly. However, when the number of evaluators increases significantly, for example to 500, KND-2S-UKC runs faster and is more efficient.

6.2.2 Space Requirements Comparison

The space complexity of an algorithm describes the total space the algorithm uses. To illustrate the storage requirements of the different algorithms, this paper compares three aspects, as shown in Fig. 4. The physical memory occupied by a UK-2S-UKC run is about 100 MB more than that of KND-2S-UKC, which is not a significant difference. Evidently, the iterative calculation in UK does not occupy much more space than KND on this data set. Similarly, KND-2S-UKC uses only a small amount of memory when processing a large amount of data.
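
The comparisons in this section were obtained in MATLAB. For readers who want to reproduce a similar measurement elsewhere, the sketch below shows one possible way to record running time and peak memory in Python. The helper `profile` and the two workloads are hypothetical, and `tracemalloc` only tracks memory allocated by Python objects, which is not the same quantity as the physical memory discussed above.

```python
import time
import tracemalloc


def profile(fn, *args, **kwargs):
    """Run fn once and return (result, elapsed seconds, peak traced bytes)."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = fn(*args, **kwargs)
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed, peak


def workload_a(n):
    # Hypothetical stand-in for one algorithm: builds a full list.
    return sum([i * i for i in range(n)])


def workload_b(n):
    # Hypothetical stand-in for the other algorithm: streams the values.
    return sum(i * i for i in range(n))


for name, fn in (("A", workload_a), ("B", workload_b)):
    _, seconds, peak = profile(fn, 100_000)
    print(f"{name}: {seconds:.4f} s, peak {peak / 1024:.1f} KiB")
```

Tracing allocations slows the code down, so timing and memory are better measured in separate runs when precise running times matter.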

6.3 Discussion

As can be seen from Fig. 5, the results of the two algorithms follow a consistent trend over the last four indicators and have similar expectations at indicator 3 and indicator 6, but differ significantly at indicator 2. This indicates that clustering the evaluators reduces the complexity of the operations at the cost of some accuracy. In addition, the indicator weights are derived from the cluster centers, so different clustering results affect the calculated weights, which eventually leads to the differences. By comparison, UK-2S-UKC is more convenient to use because it automatically evaluates the clustering effect. To balance the strengths and weaknesses of the two algorithms, this paper takes the average of the two values for each indicator as the citizens’ perception of the current status of civil aviation safety in China.

As can be seen from Fig. 6, the participants show concern about the state of civil aviation safety on all ten indicators, which verifies that the data collected online do reflect what the public is worried about. Participants are most concerned about the skills and quality of the professionals (indicator 1) and feel some anxiety about passenger safety awareness (indicator 9). For indicators 4, 5, 7 and 10, the participants’ perceptions of safety awareness among domestic passengers, airport safety management, airline operations, and terrorist attacks are similar, and they do not show any particular concern about them. The highest level of perception is at indicator 8, followed by indicator 6, indicating that the probability of a safety event due to weather or aircraft maintenance is perceived to be lower than in other aspects. Finally, the aircraft type or route (indicator 2) and the accident investigation situation (indicator 3) cause the participants some apprehension.

The results show that the ten aspects considered in this paper are indeed what people worry about. In fact, the safety level of China’s civil aviation has been maintained at a high level, so the government should strengthen publicity on these ten aspects to guide the public to regain confidence in China’s civil aviation industry. Most importantly, the training and selection process of flight crews should be vigorously publicized, for example through a documentary made for the public. Knowledge of self-rescue and of the emergency rescue process should be promoted both during air travel and in daily life, so that passengers develop an awareness of protecting themselves and others. The process and results of accident investigations should be communicated promptly to increase the credibility of the government and dispel passengers’ concerns. A simple explanation of aircraft types, routes and daily maintenance can be shown on electronic screens in the terminal building. In addition, intelligent robots can be deployed in the airport to answer common passenger concerns.

7 Conclusions

In this paper, we collect public concerns and build an evaluation indicator system from a practical point of view, using online data collection and text analysis. We then improve the aggregation algorithm to suit the characteristics of the collected evaluation information, and finally obtain the degree of citizens’ perception of the current state of civil aviation safety in China. The work carried out is as follows.

(1) Data mining technology is used to establish the evaluation indicator system of civil aviation safety perception, and SPSS is used to verify the validity and reliability of the system.

(2) Novel distance measures are proposed to calculate the distance between different indicators and between the overall evaluations made by different participants, laying the foundation for clustering the evaluators.

(3) Two clustering algorithms are proposed to cluster the evaluators: KND follows the traditional clustering algorithm and mainly improves the distance measure, while UK uses the mean coefficient and the improved contour coefficient to adjust the clustering iteratively until a good result is achieved. We also adapt unsupervised K-means clustering so that it can be applied to H2TLTS, and redefine the formula for judging the clustering effect.

(4) KND-2S-KMC and UK-2S-KMC are proposed to aggregate the evaluation information and are compared in terms of running time and space occupation. The two running times are similar, the space occupied by UK-2S-KMC is slightly larger than that of KND-2S-KMC, and some differences exist in the final aggregation results.

(5) To make the evaluation results more realistic, this paper takes the average of the results of the two models as the final degree of citizens’ perception of civil aviation safety. We find that citizens are most worried about the professional skills and personal quality of the flight crew affecting flight safety, followed by safety accidents caused by passengers’ lack of safety awareness. We also propose corresponding measures to improve safety perception.

Note that this paper has some limitations. The evaluation indicator system is incomplete: it considers only the concerns of the online public and does not capture concerns expressed in daily communication. The number of samples collected is small and reflects the perception of only some people. In later studies, the sample size should be increased to more than one hundred so that the data are more representative of the perception level of a wider population. At the same time, the LSGDM framework may need further adjustment as the sample grows. The original method for data density metrics may become computationally costly as the data volume increases, and whether it can meet the needs remains to be investigated.

In addition, as the economy and society develop, many innovations are emerging, and research on evaluation methods in different fields is becoming more significant. Accordingly, we offer the following suggestions for evaluation studies.

(1) The evaluation index system can be constructed by applying data mining methods to the development status of different fields, in addition to integrating information from the literature.

(2) The characteristics of natural language in different scenarios need to be accurately quantified and modeled when conducting evaluation studies on different research subjects.

(3) The accuracy and efficiency of aggregation methods should be improved according to different research demands. Most existing algorithms consider local optimality; a globally optimal LSGDM method needs to be explored.

References

Civil Aviation Administration of China. Statistical Bulletin of Civil Aviation Industry Development in 2021[EB/OL]. (2022-05). [2022-09-11]. CCTV news.

The continuous safe flight time of China’s civil aviation transportation has exceeded 100 million hours [EB/OL]. (2022-02-25). [2022-09-11]. People’s daily online.

Wu, R.B., Wang, Z.Q., Wang, W.W., Zhang, Y.Y.: Airspace operation risk assessment based on IAHP and information entropy portfolio empowerment. Mod. Electron. Technol. 43(06), 51–56 (2020)

Wan, J., Xia, Z.H., Wang, J.H., Zhu, X.P.: A civil aviation flight safety risk evaluation method based on QAR overrun events. Sci. Technol. Herald 37(11), 101–108 (2019)

Qian, F.R.: Research on the analysis and evaluation of general aviation operational safety risks. Dissertation, Civil Aviation Flight Academy of China (2020)

Herrera, F., Martínez, L.: A 2-tuple fuzzy linguistic representation model for computing with words. IEEE Trans. Fuzzy Syst. 8(6), 746–752 (2000)

Xu, Z.: A method based on linguistic aggregation operators for group decision making with linguistic preference relations. Inf. Sci. 166(1–4), 19–30 (2004)

Rodríguez, R.M., Martinez, L., Herrera, F.: Hesitant fuzzy linguistic term sets for decision making. IEEE Trans. Fuzzy Syst. 20(1), 109–119 (2011)

Wang, H.: Extended hesitant fuzzy linguistic term sets and their aggregation in group decision making. Int. J. Comput. Intell. Syst. 8(1), 14–33 (2015)

Sonia, H., Nesrin, H., Habib, C.: An implementation of the Hesitant Fuzzy Linguistic Ordered Interaction Distance Operators in a Multi Attribute Group Decision Making problem. In: 2020 IEEE 2nd International Conference on Electronics, Control, Optimization and Computer Science (ICECOCS), pp. 1–4. IEEE (2020)

Wu, Z., Xu, J.: Possibility distribution-based approach for MAGDM with hesitant fuzzy linguistic information. IEEE Trans. Cybern. 46(3), 694–705 (2015)

Kumar, K., Chen, S.M.: Group decision making based on weighted distance measure of linguistic intuitionistic fuzzy sets and the TOPSIS method. Inf. Sci. 611, 660–676 (2022)

Atanassov, K.T.: New topological operator over intuitionistic fuzzy sets. J. Comput. Cogn. Eng. 1(3), 94–102 (2022)

Garg, H., Kumar, K.: Linguistic interval-valued atanassov intuitionistic fuzzy sets and their applications to group decision making problems. IEEE Trans. Fuzzy Syst. 27(12), 2302–2311 (2019)

Williamson, A.M., Feyer, A.M., Cairns, D., et al.: The development of a measure of safety climate: The role of safety perceptions and attitudes. Saf. Sci. 25(1–3), 15–27 (1997)

Márquez, L.: Safety perception in transportation choices: progress and research lines. Ingeniería y competitividad 18(2), 11–24 (2016)

Rasch, A., Moll, S., López, G., et al.: Drivers’ and cyclists’ safety perceptions in overtaking maneuvers. Transport. Res. F 84, 165–176 (2022)

Graystone, M., Mitra, R., Hess, P.M.: Gendered perceptions of cycling safety and on-street bicycle infrastructure: bridging the gap. Transp. Res. Part D 105, 103237 (2022)

Edelmann, A., Stümper, S., Petzoldt, T.: The interaction between perceived safety and perceived usefulness in automated parking as a result of safety distance. Appl. Ergon. 108, 103962 (2023)

Lai, I.K.W., Hitchcock, M., Lu, D., et al.: The influence of word of mouth on tourism destination choice: tourist–resident relationship and safety perception among Mainland Chinese tourists visiting Macau. Sustainability 10(7), 2114 (2018)

Zou, Y., Yu, Q.: Sense of safety toward tourism destinations: a social constructivist perspective. J. Destin. Mark. Manag. 24, 100708 (2022)

Cheng, Y., Fang, S., Yin, J.: The effects of community safety support on COVID-19 event strength perception, risk perception, and health tourism intention: the moderating role of risk communication. Manag. Decis. Econ. 43(2), 496–509 (2022)

Amfo, B., Ansah, I.G.K., Donkoh, S.A.: The effects of income and food safety perception on vegetable expenditure in the Tamale Metropolis, Ghana. J. Agribus. Dev. Emerg. Econ. 9(3), 276–293 (2019)

Rasmussen, K., Røislien, J., Sollid, S.J.M.: Does medical staffing influence perceived safety? An international survey on medical crew models in helicopter emergency medical services. Air Med. J. 37(1), 29–36 (2018)