Abstract

Cyber-physical empirical methods (CPEMs) make it possible to address problems that classical empirical methods alone, or models alone, cannot address in a satisfactory way. In CPEMs, the substructures are interconnected through a control system that includes sensors and actuators, which have their own dynamics. The present paper addresses how the fidelity of CPEMs, that is, the degree to which they reproduce the behaviour of the real system under study, is affected by the presence of this control system. We describe an analysis method that enables the designer of a CPEM to (1) identify the artefacts (such as biases, noise, or delays) that play a significant role for the fidelity, (2) define bounds for the parameters describing these artefacts that ensure high fidelity of the CPEM, and (3) evaluate whether probabilistic robust fidelity is achieved. The proposed method is illustrated by considering a substructured slender structure subjected to dynamic loading.

Introduction

Cyber-physical empirical methods (CPEMs) are empirical methods in which the dynamical system under study is partitioned into physical and numerical substructures. The behaviour of the physical substructures is partly unknown, while the numerical substructures are described by validated computational models. The substructures interact with each other through a control system. CPEMs therefore augment classical empirical methods with validated numerical models, to address problems that classical empirical methods alone, or models alone, cannot conveniently or reliably address. This is for example the case: (Issue 1) when the dynamical system under study is “ill-conditioned”, i.e. when it spans a wide range of characteristic spatial dimensions and/or time constants. In that case, the part of the system that does not fit in the laboratory, or whose dynamics are slow, can advantageously be replaced by a numerical model. (Issue 2) when scaling effects must be tackled. Scaling laws, used in classical empirical methods, aim at preserving, at small scale, the balance between two effects that are known to be important for the full-scale system. When other effects, disregarded by the chosen scaling law, also play an important role, so-called scaling effects occur, which may reduce the confidence in experimental results. A CPEM may alleviate this issue by isolating, in the numerical substructures, the parts of the system that cause scaling issues. (Issue 3) when component testing must be performed, that is, when the focus is on the performance of a specific uncertain substructure that interacts with the other substructures as part of a complex system.

CPEMs have been developed concurrently and applied in earthquake engineering [1], thermomechanics [2], aerospace engineering [3], and in the automotive industry to develop engines [4] and car suspensions [5], or more generally to investigate chassis dynamics and vibrations [6]. Other examples of applications of CPEMs include studies of the acoustic footprint of ships [7], of the dynamical behaviour of offshore wind turbines [8], and of complex electrical power systems [9].

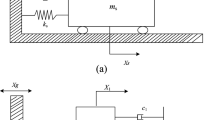

In the following, we will postulate that the performance of the system under study is quantified through Quantities of Interest (QoI) derived from the response of the system to a given load. See Fig. 1. In a substructural partition, as illustrated in Fig. 2, the power continuously exchanged between the substructures can be modeled as the product of a flow and an effort [10]. For example, in a translatory mechanical system, the flow is the linear velocity, while the effort is the force. For an electrical system, the flow is the electrical current, and the effort the voltage. Compatibility between two substructures is achieved when the sent and received flows are equal at each instant, and similarly, equilibrium refers to the consistency of the efforts.

Definition 1

The control system consists of hardware components (such as sensors, actuators, computers and network infrastructure) and software components (such as observers, controllers and allocation algorithms), which aim at ensuring compatibility and equilibrium between the substructures. See Fig. 3.

To achieve compatibility and equilibrium, a significant amount of mechanical or electrical power must in general be transferred through actuators or amplifiers, which have their own dynamics.

Let us now recall that the very purpose of empirical methods is to gain knowledge about the behaviour of some system through observation of this system. When using a CPEM, we do not observe the real system (Fig. 1), but a substructured version of it, orchestrated by a control system (Fig. 3). A legitimate question is then whether the observations generated with this setup are actually representative of those we would have obtained with the real system. Only if this is the case can new knowledge about the real system be inferred from these observations. Defining, loosely for now, the fidelity of a cyber-physical empirical setup as the degree to which the setup reproduces the behaviour of the real system under study, the following statement can then be made regarding CPEMs:

Fidelity may be jeopardized by factors related to each component of the CPEM represented in Fig. 3 (including the substructures themselves), but in the present paper we focus on the role of the control system that interconnects the substructures. In [11, Chapter 1], an example from the field of fluid mechanics (sloshing) shows how the control system can cause a severe loss of fidelity, which might go unnoticed by the experimentalist. The influence of the control system on fidelity can be studied by various approaches, the choice of which depends on (1) the nature of the control system and the way it is modelled, (2) the way the physical and numerical substructures are modelled, and (3) the choice of indicators representing the fidelity. These aspects have been reviewed in detail in [11, Chapter 1].

Our conclusions are the following. (1) Heterogeneous types of artefacts (such as delays, noise, sensor calibration errors, and actuator saturation) may occur simultaneously, leading to problems that become intractable by purely analytical methods. However, all of these artefacts should be included in a fidelity analysis if they cannot be eliminated. (2) Artefacts may be non-deterministic, such as noise and sporadic signal loss. (3) The artefacts may interact with substructures that exhibit a complex behaviour, which should be modelled properly. In the example mentioned above, the observed bifurcation phenomenon that causes the loss of fidelity can only be captured if a nonlinear sloshing model is used to describe the behaviour of the physical substructure. (4) The fidelity should be evaluated based on the actual QoI, and not based on the actuator’s performance, since there might not be continuity, in a mathematical sense, between the two quantities.

This paper gathers, in a concise and accessible way, the contributions of the author on this subject published in the PhD thesis [11] and in the journal articles [12] and [13]. These contributions are the following. (1) We provide a unified and quantitative definition of the fidelity of a CPEM, which fits a wide class of applications. (2) We present a method to systematically identify and rank the control system-induced artefacts that most jeopardize the fidelity. This information is of great operational relevance when designing a CPEM. (3) We present a framework to verify the probabilistic robust fidelity of cyber-physical empirical setups, and to derive fidelity bounds, which can be used as specifications for the control system. The proposed analysis method is non-intrusive, and thus not limited to analytical models, which allows its application to the wide class of dynamical systems represented in CPEMs. It can handle an arbitrary number and type of artefacts exhibiting parametric uncertainty. Finally, the method is computationally efficient, even for high-dimensional and high-reliability problems, as it is based on modern surrogate modelling and active learning techniques.

The paper is organized as follows. The method is described in Sections “Fidelity of a CPEM” and “Analysis”. It is then applied to study the fidelity of the active truncation of a slender structure in Section “Example: Active Truncation of a Slender Marine Structure”.

Fidelity of a CPEM

Let s denote the total number of substructures in the substructural partition. Given a duration T > 0, let τ(t) = (τ1(t),..., τs(t)) represent an exogenous excitation, with support [0, T], acting on the substructural partition. A generic way of modelling the ideal substructured system, which is suitable for most existing applications of CPEMs, is the following interconnected system:

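\[ \dot{x}_i = f_i\big(t, x_i, (u_{ij})_{j \neq i}, \tau_i\big), \qquad i \in \mathbb{N}^{*}_{s}, \tag{1} \]
\[ y_i = g_i\big(t, x_i, (u_{ij})_{j \neq i}\big), \qquad i \in \mathbb{N}^{*}_{s}, \tag{2} \]

and

\[ u_{ij} = y_j, \qquad \forall i \in \mathbb{N}^{*}_{s},\ \forall j \neq i, \tag{3} \]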

where xi is the internal state of substructure Σi, yi its output, and uij the input to Σi originating from Σj. A block diagram of this interconnected system is presented in Fig. 4 for s = 3. The yi and uij are related to the power continuously exchanged between the substructures: if yi represents a flow, then ∀j≠i, the uij represent efforts, and vice versa. The behaviour of the system described by equations (1–3) is in principle similar to the behaviour of the real system. However, it does not account for the effect of the control system.

Definition 2

An artefact Δij is a parametrized function describing the effect of the control system on the connection between the output of substructure Σj and the input of Σi.

Artefacts are not necessarily first-principles-based models of the components of the control system; rather, they model the consequences, on the exchanged signals, of including these components in the substructural partition. In other words, two components of the control system of different natures, such as a communication link and an actuator, could, as a first approximation, be modelled by the same artefact, such as a time delay.

An artefact Δij generally consists of a combination of elementary artefacts of various (heterogeneous) natures, which simultaneously affect the signal. The effect of selected elementary artefacts on a reference signal is shown in Fig. 5. More examples of elementary artefacts, together with their possible sources, are given in [11, Chapter 3]. The properties of each elementary artefact are described by one or several parameters denoted θi with support \(\mathcal {D}_{i} \subset \mathbb {R}\), as exemplified in Table 2.

The artefact Δij presented in Fig. 6 consists of five elementary artefacts and is parametrized by six values: the scaling factor, bias value, noise variance, duration of the delay, probability of occurrence of the signal loss, and inverse duration parameter of the signal loss. The effect of such a “composite” artefact on an input signal is illustrated in Fig. 7.
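To make the notion of a composite artefact concrete, the Python sketch below applies the six parameters listed for Fig. 6 to a sampled signal. It is a minimal illustration under stated assumptions, not the model used in [11]: the elementary artefacts are applied in a fixed order (scaling, bias, noise, delay, signal loss), the delay is rounded to a whole number of samples, and a signal loss is assumed to start at each time step with probability `p_loss`, to last an exponentially distributed time of mean `1 / inv_loss_duration`, and to be bridged by holding the last received value. The function name `composite_artefact` and all argument names are hypothetical.

```python
import numpy as np

def composite_artefact(y, dt, gain=1.0, bias=0.0, noise_var=0.0, delay=0.0,
                       p_loss=0.0, inv_loss_duration=1.0, rng=None):
    """Apply a hypothetical composite artefact Delta_ij to a sampled signal y.

    The six keyword arguments mirror the six parameters listed for Fig. 6.
    The order of application, the delay rounding and the signal-loss model
    are assumptions made for this sketch.
    """
    rng = np.random.default_rng() if rng is None else rng
    y = np.asarray(y, dtype=float)
    # Calibration error (scaling factor), bias and additive white Gaussian noise
    out = gain * y + bias + np.sqrt(noise_var) * rng.standard_normal(y.size)
    # Pure time delay rounded to an integer number of samples; the first
    # value is held until the delayed signal arrives
    n_delay = min(int(round(delay / dt)), y.size)
    if n_delay > 0:
        out = np.concatenate([np.full(n_delay, out[0]), out[:y.size - n_delay]])
    # Signal loss: at each step a loss starts with probability p_loss and lasts
    # an exponentially distributed time of mean 1 / inv_loss_duration; the
    # receiver meanwhile holds the last received value (zero-order hold)
    received = out.copy()
    k = 1
    while k < out.size:
        if rng.random() < p_loss:
            n_lost = max(1, int(round(rng.exponential(1.0 / inv_loss_duration) / dt)))
            end = min(out.size, k + n_lost)
            received[k:end] = received[k - 1]
            k = end
        else:
            k += 1
    return received

# Usage: a sine wave seen through a slightly miscalibrated, noisy, delayed link
t = np.arange(0.0, 10.0, 0.01)
y_meas = composite_artefact(np.sin(t), dt=0.01, gain=1.02, bias=0.05, noise_var=1e-4,
                            delay=0.1, p_loss=0.005, inv_loss_duration=5.0,
                            rng=np.random.default_rng(0))
```

With all parameters at their neutral values (unit gain, zero bias, noise, delay and loss probability), the function returns the input unchanged, which corresponds to the ideal connection of equation (3).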

The ideal interconnection represented in Fig. 4 can now be modified to model the effect of the control system on the signals, as shown in Fig. 8. Equations (1) and (2) remain valid, but relationship (3) between the output of substructure j and the input to substructure i originating from substructure j becomes:

\[ u_{ij} = \Delta_{ij}(y_j;\, \theta), \qquad \forall i \in \mathbb{N}^{*}_{s},\ \forall j \neq i, \tag{4} \]

where \(\theta \in \mathcal {D} \subset \mathbb {R}^{M}\) gathers all the θi parameters describing the elementary artefacts in all Δij. For example, if the artefact Δij presented in Fig. 6 affected each of the six interconnections in Fig. 8, θ would be of dimension M = 36 (6 interconnections × 6 parameters per artefact).

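For \(i \in \mathbb {N}^{*}_{s}\), let \(\bar {x}_{i}\) denote the state of Σi when ∀j≠i, uij ≡ yj. In that case, no artefact is present, and the CPEM behaves exactly as the emulated system (the ideal substructured system in Fig. 4). For a given \(Q\in \mathbb {N}^{*}\), let then \((\gamma _{q})_{q \in \mathbb {N}_{Q}^{*}}\) be a family of cost functions satisfying

\[ \forall q \in \mathbb{N}^{*}_{Q},\ \forall \theta \in \mathcal{D}: \quad \gamma_q(\theta) \geq 0, \quad \text{and} \quad \gamma_q(\theta) = 0 \ \text{if} \ \forall i \in \mathbb{N}^{*}_{s},\ x_i|_{\theta} \equiv \bar{x}_i. \tag{5} \]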

We will now define the fidelity, in a quantitative manner, as follows:

Definition 3

The fidelity φ is defined as

\[ \varphi(\theta) = -\log\Big(\sum_{q=1}^{Q} \gamma_q(\theta)^2\Big). \tag{6} \]

The rationale behind the proposed definition is the following. (1) Each γq function compares selected QoI derived from the states (x1, x2,..., xs)|θ with the corresponding QoI derived from \((\bar {x}_{1},\bar {x}_{2},...,\bar {x}_{s})\). If all states xi converge towards \(\bar {x}_{i}\), then all γq tend to zero, and \(\varphi \rightarrow \infty \). Fidelity therefore quantifies the capability of the CPEM to generate QoI that are similar to those of the real system when subjected to the same excitation. (2) The converse is, however, not true: high fidelity can be achieved even if some states xi which are not of interest, i.e. not included in the calculation of any γq, differ from \(\bar {x}_{i}\). This is a major difference with the concept of resilient cyber-physical systems (see e.g. [14]). A high fidelity value does not imply a correct estimation of the complete state \((x_{i})_{i\in \mathbb {N}^{*}_{s}}\) in the presence of artefacts, but rather a correct estimation of selected state-derived quantities. (3) If the setup becomes unstable because of the introduced artefacts, some γq may blow up in some domains of \(\mathcal {D}\). On the other hand, when studying high-fidelity setups, we may be interested in emphasizing the difference between small values of the γq. The logarithm in equation (6) is introduced for this reason, since it compresses large values and expands small ones. (4) A sum of squares, rather than a maximum function, is used in equation (6) to combine the cost functions γq, which preserves the smoothness properties of the functions θ↦γq(θ). Using a maximum function instead would have compromised the differentiability of φ even if the γq were smooth functions. This choice will prove convenient when analyzing the problem.
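A minimal Python sketch of the fidelity computation follows. Two choices are ours and only illustrative: each γq is taken as the root-mean-square error between a QoI time series and its artefact-free counterpart, normalized by the RMS of the latter, and the logarithm is taken in base 10. The names `gamma_nrmse` and `fidelity` are hypothetical.

```python
import math
import numpy as np

def gamma_nrmse(qoi, qoi_ref):
    """Hypothetical cost function gamma_q: RMS error normalized by the reference RMS."""
    qoi = np.asarray(qoi, dtype=float)
    qoi_ref = np.asarray(qoi_ref, dtype=float)
    return float(np.sqrt(np.mean((qoi - qoi_ref) ** 2) / np.mean(qoi_ref ** 2)))

def fidelity(qois, qois_ref):
    """phi = -log10(sum_q gamma_q^2); +inf when every gamma_q vanishes."""
    total = sum(gamma_nrmse(q, r) ** 2 for q, r in zip(qois, qois_ref))
    return math.inf if total == 0.0 else -math.log10(total)

# Usage: two QoI time series, one of them slightly biased by the artefacts
t = np.linspace(0.0, 10.0, 1001)
reference = [np.sin(t), np.cos(t)]
phi = fidelity([np.sin(t) + 0.01, np.cos(t)], reference)
```

With a base-10 logarithm, each additional unit of φ corresponds to a tenfold reduction of the summed squared errors, which makes differences between high-fidelity setups easy to read.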

Definition 3 is rather general, and must be adapted to the specific problem at hand. More specifically, the exogenous excitation τ and the functions γq that select and compare the QoI should be carefully defined. The excitation τ(t) must reflect the loads that the empirical setup will eventually be subjected to. It may for instance include impulsive loads, ramps, frequency sweeps, pink noise, or a combination of these. If nonlinear effects are significant, several excitation levels should be included. The selection of the QoI through the γq functions must be connected to the very purpose of the tests, and need not be related to the outputs yi that play an active role in the interconnection. The QoI may also be of different natures. They can be time series or, more generally, fields, as in [15]. They can also be derived quantities, such as statistical moments, parameters of extreme value distributions, or derived transfer functions. The QoI should obviously be selected in accordance with the purpose of the tests: the fidelity calculated from the extrema of a signal, for instance, conveys a very different type of information from the fidelity calculated from the complete time series.

The concepts introduced in this section enable us to define, in a quantitative manner, the fidelity φ of a CPEM. Our objective is to investigate how the control system, modelled by the artefacts Δij(θ), may deteriorate the fidelity. Even if CPEMs are developed in a controlled laboratory environment, the artefacts are subject to some uncertainty: the sensor noise variance or the interconnection delays between the substructures, for example, remain uncertain at the design stage, may vary during the execution of the experiment, and can only be quantified accurately a posteriori, i.e. when the experiment has ended. However, the amount of uncertainty on these quantities can be estimated a priori from expert judgment or dedicated surveys, and can therefore be modelled within a probabilistic framework.

Assumption 1

The artefact parameter θ is the realization of a multidimensional random variable Θ with a known, but arbitrary, distribution fΘ.

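The following research questions (RQ) are then considered. (RQ1) Which artefacts, i.e. which components of Θ, have the greatest influence on the fidelity? (RQ2) Given fΘ, what is the probability that the fidelity of the setup falls below an admissible value φadm, i.e. is probabilistic robust fidelity achieved? (RQ3) Which values of θ ensure that the fidelity is at least φadm, i.e. which bounds should be specified for the artefact parameters? Before addressing these RQ, let us first define the following sets.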

Definition 4

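The domain of failure of a CPEM is the set \(\mathcal {D}_{f}\subset \mathcal {D}\) defined by:

\[ \mathcal{D}_f = \{\theta \in \mathcal{D} : \varphi(\theta) < \varphi_{adm}\}, \tag{7} \]

where \(\varphi_{adm}\) denotes the admissible fidelity, i.e. the lowest fidelity value considered acceptable.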

Definition 5

The limit state surface \({\mathscr{L}}\subset \mathcal {D}\) is defined by

\[ \mathscr{L} = \{\theta \in \mathcal{D} : \varphi(\theta) = \varphi_{adm}\}. \tag{8} \]

We note that addressing (RQ2) and (RQ3) is equivalent to identifying \(\mathcal {D}_{f}\). Indeed, identifying \(\mathcal {D}_{f}\) immediately answers (RQ3), since the θ leading to sufficiently high fidelity are found in \(\mathcal {D}\setminus \mathcal {D}_{f}\). Then, letting \(\mathbb {I}_{\mathcal {D}_{f}}(\theta )\) be the indicator function of \(\mathcal {D}_{f}\), the probability of failure of a CPEM, involved in (RQ2), can simply be evaluated from

\[ P_f = \mathbb{P}\big[\Theta \in \mathcal{D}_f\big] = \int_{\mathcal{D}} \mathbb{I}_{\mathcal{D}_f}(\theta)\, f_{\Theta}(\theta)\, \mathrm{d}\theta. \tag{9} \]

We will discuss the choice of the value of φadm in Section “Example: Active Truncation of a Slender Marine Structure”.
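The probability of failure Pf is the expectation of an indicator function, so it can be estimated by plain Monte Carlo sampling whenever φ is cheap to evaluate. The Python sketch below assumes a hypothetical toy fidelity φ(θ) = −log10(θ1² + θ2²) with Θ standard normal in two dimensions, chosen only because the exact answer is known: with φadm = 0, failure means θ1² + θ2² > 1, whose probability for a χ² variable with two degrees of freedom is exp(−1/2) ≈ 0.607. The function names are hypothetical.

```python
import numpy as np

def phi_toy(theta):
    """Hypothetical toy fidelity: phi = -log10(theta_1^2 + theta_2^2)."""
    return -np.log10(np.sum(theta ** 2, axis=1))

def failure_probability(phi, theta, phi_adm):
    """Monte Carlo estimate of P_f = E[1_{D_f}(Theta)], with D_f = {phi < phi_adm}."""
    indicator = phi(theta) < phi_adm                  # indicator of D_f at each sample
    pf = indicator.mean()
    se = np.sqrt(pf * (1.0 - pf) / indicator.size)   # standard error of the estimate
    return pf, se

rng = np.random.default_rng(1)
theta = rng.standard_normal((200_000, 2))             # samples of Theta ~ f_Theta
pf, se = failure_probability(phi_toy, theta, phi_adm=0.0)
```

The standard error also shows why plain Monte Carlo on φ itself becomes impractical for small failure probabilities: for Pf = 10⁻⁴ and a 10% coefficient of variation, about 10⁶ evaluations of φ, i.e. co-simulations, would be needed.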

Analysis

We will address our RQ by using surrogate modelling techniques, which are particularly suitable for uncertainty quantification purposes. Surrogate models are functions that can be evaluated quickly and mimic the behaviour of a target function, here φ(θ), over its whole domain of definition, or over part of it. Due to space constraints, this paper provides only the minimum mathematical background necessary to understand the principle of the analysis. For more details on the employed techniques, the interested reader may consult [11, Chapter 2] and the references therein. The fidelity analysis method we propose is presented as a flow chart in Fig. 9 and described in the following.

Step 1: Sampling

The functions fi describing the substructure dynamics, and hence involved in the calculation of φ, may be non-analytical, or so-called black boxes. A sampling-based approach must therefore be employed. We start by generating N samples of Θ in \(\mathcal {D}\) using a space-filling sampling method such as Latin Hypercube Sampling (LHS).
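The sketch below shows plain Latin Hypercube Sampling in Python, assuming independent components of Θ whose marginals are reached through their inverse cumulative distribution functions; dependent components would require, e.g., a copula. The marginals are hypothetical placeholders, not the values of Table 2, and the helper names are ours. SciPy also provides a ready-made `scipy.stats.qmc.LatinHypercube` class (SciPy 1.7 and later).

```python
import numpy as np
from scipy import stats

def latin_hypercube(n, d, rng):
    """Plain LHS in [0, 1)^d: each of the n equal strata of every dimension holds one point."""
    u = np.empty((n, d))
    for j in range(d):
        strata = rng.permutation(n)              # random assignment of strata to samples
        u[:, j] = (strata + rng.random(n)) / n   # uniform position inside each stratum
    return u

def sample_theta(n, marginals, rng):
    """Map LHS points to independent marginals through their inverse CDFs (ppf)."""
    u = latin_hypercube(n, len(marginals), rng)
    return np.column_stack([dist.ppf(u[:, j]) for j, dist in enumerate(marginals)])

# Hypothetical marginals for three artefact parameters (placeholders, not Table 2)
marginals = [stats.uniform(loc=0.95, scale=0.10),   # gain in [0.95, 1.05]
             stats.norm(loc=0.0, scale=0.01),       # bias
             stats.expon(scale=0.02)]               # residual delay [s]
theta = sample_theta(512, marginals, np.random.default_rng(0))
```

Compared with independent random draws, every marginal is covered evenly by construction, which is what makes LHS a reasonable space-filling choice for the initial design.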

For each sample θ(i), the interconnected system including artefacts (represented in Fig. 8) is co-simulated when subjected to τ. The fidelity φ(θ(i)) is obtained by comparing the result of this co-simulation to the one without artefacts (Fig. 4), using equation (6). We let

\[ \mathcal{E} = \{\theta^{(1)}, \dots, \theta^{(N)}\}, \tag{10} \]
\[ \mathcal{F} = \{\varphi(\theta^{(1)}), \dots, \varphi(\theta^{(N)})\}. \tag{11} \]
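To illustrate Step 1 end to end, the following Python sketch builds the two sets \(\mathcal {E}\) and \(\mathcal {F}\) for a deliberately small, hypothetical problem: a linear oscillator split into a spring (Σ1) and a mass with a damper (Σ2), connected as in equations (1–3), with a calibration error and a time delay acting on the force sent from Σ1 to Σ2. The substructures are integrated with a semi-implicit Euler scheme, the QoI is the velocity of the mass, and the fidelity uses a normalized RMS error with a base-10 logarithm. Every name, parameter value and modelling choice is an assumption made for this example, and plain random sampling replaces LHS for brevity.

```python
import numpy as np

def cosimulate(theta, T=20.0, dt=0.01, m=1.0, c=0.2, k=4.0):
    """Hypothetical toy co-simulation: spring Sigma_1 coupled to mass-damper Sigma_2.

    theta = (gain, delay) describes the artefact on the force y_1 sent from
    Sigma_1 to Sigma_2. Returns the velocity of the mass (the QoI).
    """
    gain, delay = theta
    n = int(round(T / dt))
    n_delay = int(round(delay / dt))
    x1, v = 0.0, 0.0                   # spring elongation (Sigma_1), mass velocity (Sigma_2)
    y1_history = np.zeros(n)           # past outputs of Sigma_1, needed for the delay
    v_out = np.empty(n)
    for i in range(n):
        y1_history[i] = k * x1                                    # y_1 = k x_1
        y1_delayed = y1_history[i - n_delay] if i >= n_delay else 0.0
        u21 = gain * y1_delayed                                   # u_21 = Delta_21(y_1; theta)
        tau = np.sin(1.5 * i * dt)                                # exogenous excitation
        v += dt * (tau - c * v - u21) / m                         # Sigma_2: m dv/dt = tau - c v - u_21
        x1 += dt * v                                              # Sigma_1: dx_1/dt = u_12 = y_2 = v
        v_out[i] = v
    return v_out

def fidelity(v, v_ref):
    """phi = -log10(gamma^2), with gamma the normalized RMS velocity error."""
    gamma2 = np.mean((v - v_ref) ** 2) / np.mean(v_ref ** 2)
    return np.inf if gamma2 == 0.0 else -np.log10(gamma2)

v_ref = cosimulate((1.0, 0.0))         # no artefact: the emulated (ideal) system
rng = np.random.default_rng(0)
N = 64
E = np.column_stack([rng.uniform(0.9, 1.1, N),     # gain (calibration error)
                     rng.uniform(0.0, 0.1, N)])    # delay [s]
F = np.array([fidelity(cosimulate(theta), v_ref) for theta in E])
```

Since the N co-simulations are independent, the list comprehension that fills F is the natural place to parallelize in a realistic setting.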

Step 2: Sensitivity Analysis

For nonlinear functions such as φ(θ), the sensitivity to θ may be radically different in different regions of \(\mathcal {D}\). This rules out the use of local sensitivity analysis methods (considering e.g. the gradient of φ at a given point), especially when the value of θ is uncertain. The global sensitivity of φ(Θ) can however be studied by Analysis of Variance (ANOVA), i.e. by determining how much of the variance of φ(Θ) can be attributed to each component of Θ. Indeed, if fixing some components of Θ to their “true” values significantly reduces the variance of φ(Θ), then it can be concluded that the sensitivity to these components is large. If, on the contrary, a component can be left free to vary over its whole range of uncertainty without significantly affecting the variance of φ(Θ), then this component has no global influence on φ and could be fixed.

To practically perform the ANOVA, we make use of Sobol’ indices [16], which satisfy

\[ \sum_{i=1}^{M} S_i + \sum_{1 \leq i < j \leq M} S_{ij} + \dots + S_{12\dots M} = 1. \tag{12} \]

The Si are called first-order Sobol’ indices, the Sij second-order Sobol’ indices, and so on. Si measures the proportion of the variance of φ(Θ) that is due to Θi alone, and Sij describes the proportion of the variance of φ(Θ) that is due to the joint variations of Θi and Θj, but cannot be explained by individual variations of Θi or Θj alone.

Si = 0 is a necessary, but not sufficient, condition to conclude that φ is insensitive to the ith component of its input. Indeed, this component could play a significant role in interaction with another component, which would be visible by examining the higher-order Sobol’ indices.

Definition 6

The total Sobol’ index \(S_{T_{i}}\) is defined as the sum of all Sobol’ indices in equation (12) that involve the parameter i.

\(S_{T_{i}}\) quantifies the total effect of an input parameter, either alone or in combination with others. \(S_{T_{i}} = 0\) is then a necessary and sufficient condition to conclude that Θi is non-influential. Furthermore, by ranking the \(S_{T_{i}}\), the components of Θ having the greatest impact on the variations of φ can be identified. Also, by comparing each \(S_{T_{i}}\) to Si, it is possible to evaluate whether Θi influences φ alone (in the case \(S_i \approx S_{T_i}\)) or jointly with other components of Θ.
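Before PCE-based estimation became common, Sobol’ indices were estimated by Monte Carlo simulation. The Python sketch below uses two standard pick-freeze estimators (the Saltelli 2010 estimator for Si and the Jansen estimator for \(S_{T_i}\)) on the Ishigami benchmark function, whose exact indices are known: S1 ≈ 0.314, S2 ≈ 0.442, S3 = 0, ST1 ≈ 0.558, ST2 ≈ 0.442, ST3 ≈ 0.244. The third input illustrates the point made above: its first-order index is zero, yet it is influential through its interaction with the first input. This is an illustration of the definitions only, not the procedure used in the paper, which relies on PCE; the function names are hypothetical.

```python
import numpy as np

def ishigami(x, a=7.0, b=0.1):
    """Standard benchmark with known Sobol' indices; inputs uniform on [-pi, pi]^3."""
    return np.sin(x[:, 0]) + a * np.sin(x[:, 1]) ** 2 + b * x[:, 2] ** 4 * np.sin(x[:, 0])

def sobol_mc(f, d, n, rng, low=-np.pi, high=np.pi):
    """Pick-freeze Monte Carlo estimates of first-order and total Sobol' indices."""
    A = rng.uniform(low, high, (n, d))
    B = rng.uniform(low, high, (n, d))
    fA, fB = f(A), f(B)
    var = np.var(np.concatenate([fA, fB]))
    S, ST = np.empty(d), np.empty(d)
    for i in range(d):
        ABi = A.copy()
        ABi[:, i] = B[:, i]                                  # A with its i-th column taken from B
        fABi = f(ABi)
        S[i] = np.mean(fB * (fABi - fA)) / var               # Saltelli (2010) estimator
        ST[i] = 0.5 * np.mean((fA - fABi) ** 2) / var        # Jansen estimator
    return S, ST

S, ST = sobol_mc(ishigami, 3, 200_000, np.random.default_rng(0))
```

Each index costs n extra model evaluations, i.e. n(d + 2) evaluations in total here, which explains why this route was impractical for expensive co-simulations.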

When they were introduced, the Sobol’ indices were computed using Monte-Carlo simulation [17]. This proved to be quite time consuming, and higher-order Sobol’ indices were rarely accessible in practice. Later, [18] established the analytical connection between the coefficients of the Polynomial Chaos Expansion (PCE) of φ(Θ) and the Sobol’ indices. In other words, once a PCE model is established, the Sobol’ indices can be obtained at practically no cost. Thanks to this finding and to new methods to efficiently compute sparse PCEs [19], PCE has become an intermediate of choice for global sensitivity analyses. More details about this are given in [11, Chapter 2].

Returning to our problem, a PCE-based surrogate model of φ is constructed from \(\mathcal {E}\) and \(\mathcal {F}\) defined in equations (10) and (11), and the sensitivity of φ to the components of Θ can be expressed through the Sobol’ indices derived from the PCE. This answers (RQ1). Note that the expected fidelity E[φ(Θ)] and the associated uncertainty Var[φ(Θ)] are also directly linked to the coefficients of the PCE: for an orthonormal basis, the mean is the coefficient of the constant term, and the variance is the sum of the squares of the remaining coefficients.
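The connection established in [18] can be sketched in a few lines of Python, assuming independent inputs uniform on [−1, 1] and an orthonormal Legendre basis of total degree p fitted by ordinary least squares (the sparse solvers of [19] are not reproduced). Si collects the squared coefficients of the terms involving Θi only, and \(S_{T_i}\) collects those of all terms involving Θi, both divided by the variance. The test function x1 + x2 x3 and the helper names are hypothetical; the function is chosen because its indices are known exactly: S1 = ST1 = 0.75, S2 = S3 = 0 and ST2 = ST3 = 0.25.

```python
import itertools
import numpy as np
from numpy.polynomial import legendre

def psi(n, x):
    """Legendre polynomial of degree n, orthonormal for the uniform law on [-1, 1]."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0
    return np.sqrt(2 * n + 1) * legendre.legval(x, coeffs)

def fit_pce(X, y, degree):
    """Ordinary least-squares PCE on a full total-degree basis."""
    d = X.shape[1]
    alphas = [a for a in itertools.product(range(degree + 1), repeat=d) if sum(a) <= degree]
    Psi = np.column_stack([np.prod([psi(a[j], X[:, j]) for j in range(d)], axis=0)
                           for a in alphas])
    coef, *_ = np.linalg.lstsq(Psi, y, rcond=None)
    return np.array(alphas), coef

def pce_sobol(alphas, coef):
    """First-order and total Sobol' indices read off the PCE coefficients."""
    d = alphas.shape[1]
    nonconstant = alphas.sum(axis=1) > 0
    variance = np.sum(coef[nonconstant] ** 2)     # the mean is the constant-term coefficient
    S, ST = np.empty(d), np.empty(d)
    for i in range(d):
        involves_i = alphas[:, i] > 0
        only_i = involves_i & (np.delete(alphas, i, axis=1).sum(axis=1) == 0)
        S[i] = np.sum(coef[only_i] ** 2) / variance
        ST[i] = np.sum(coef[involves_i] ** 2) / variance
    return S, ST

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 3))
y = X[:, 0] + X[:, 1] * X[:, 2]                   # hypothetical test function
alphas, coef = fit_pce(X, y, degree=2)
S, ST = pce_sobol(alphas, coef)
```

Once the coefficients are known, no further model evaluation is required, in contrast to the Monte Carlo estimators.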

Step 3: Reliability Analysis

We stated at the end of Section “Fidelity of a CPEM” that (RQ2) and (RQ3) could be solved simultaneously through the identification of \(\mathcal {D}_{f}\). However, at the present stage, the information gathered on φ is insufficient for this purpose. Indeed, the sampling \(\mathcal {E}\) has so far aimed at filling the space \(\mathcal {D}\); if E[φ] were well above φadm and Var[φ] were small, none or only a few of the samples of θ in \(\mathcal {E}\) might land in \(\mathcal {D}_{f}\), i.e. yield too low fidelity.

The strategy to resolve the limit state \({\mathscr{L}}\) (the boundary of \(\mathcal {D}_{f}\), see Definition 5) is the following. We stepwise add samples of Θ, i.e. enrich \(\mathcal {E}\), in locations that are believed to be close to \({\mathscr{L}}\) (we will come back to this in the next paragraphs, since \({\mathscr{L}}\) is a priori unknown), and create a surrogate model whose accuracy near \({\mathscr{L}}\) increases step by step. Once established, this surrogate model can be interrogated to find out whether a given θ is in \(\mathcal {D}_{f}\) or not, which answers (RQ3). Furthermore, Monte-Carlo simulations using a large number of auxiliary samples can be performed, since the cost of evaluating the surrogate model is much lower than the cost of evaluating φ(θ) itself. This enables us to evaluate Pf from equation (9), verify the probabilistic robustness of the setup, and answer (RQ2).

The surrogate model used in this step is a so-called Polynomial Chaos Kriging (PCK) model. Details regarding the structure of this model and the method to determine its coefficients based on \(\mathcal {E}\) and the corresponding values of φ can be found in [20] and [13]. The key aspect of this surrogate model is that it associates with any θ not only an estimated value of φ (denoted \(\mu _{\mathcal {K}}(\theta )\)) but also an uncertainty on this value (through a variance denoted \(\sigma _{\mathcal {K}}^{2}\)).

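Once the PCK surrogate model of φ(θ) is identified based on the available samples in \(\mathcal {E}\), additional relevant samples of Θ can iteratively be generated to enrich \(\mathcal {E}\) in strategic locations, characterized by a large probability of misclassification Pm:

\[ P_m(\theta) = \Phi\left(-\frac{\lvert \mu_{\mathcal{K}}(\theta) - \varphi_{adm} \rvert}{\sigma_{\mathcal{K}}(\theta)}\right), \tag{13} \]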

where Φ is the standard normal cumulative distribution function. Pm(θ) estimates the probability that the PCK model predicts that θ is in \(\mathcal {D}_{f}\) while it is actually not, or vice versa. From equation (13), we see that large values of Pm(θ) correspond to regions of \(\mathcal {D}\) where (1) θ is close to \({\mathscr{L}}\), since φ is probably close to φadm, and/or (2) there is a large epistemic uncertainty (due to lack of data in \(\mathcal {E}\)) on the value of φ(θ).

It is in the region where Pm is large that we select \(K\in \mathbb {N}^{*}\) new samples of Θ and add them to \(\mathcal {E}\). The newly obtained set \(\mathcal {E}^{\prime }\) is used to establish a new PCK model and to generate K new samples that are added to \(\mathcal {E}^{\prime }\), and so on. Step by step, the boundary \({\mathscr{L}}\) between high- and low-fidelity regions becomes more precise, and the accuracy of Pf increases. More details on this method, called Adaptive Kriging, can be found in [21].
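The enrichment loop can be sketched as follows in Python. To keep the example self-contained, the PCK model is replaced by a plain Kriging (Gaussian process) predictor with a constant trend and fixed, hand-picked hyperparameters, the true φ is replaced by a cheap hypothetical function on [0, 1]², and one sample is added per iteration (K = 1). All names and numerical values are assumptions. The probability of failure is finally obtained by Monte Carlo simulation on the surrogate and compared with the reference obtained from the toy function itself.

```python
import numpy as np
from scipy.stats import norm

def kriging(X, y, Xq, length=0.3, nugget=1e-6):
    """Kriging predictor with a constant trend and a Gaussian correlation function.

    Fixed hyperparameters; a hypothetical stand-in for the PCK model.
    Returns the predicted mean mu_K and standard deviation sigma_K at points Xq.
    """
    def corr(A, B):
        d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-0.5 * d2 / length ** 2)
    mu0, s2 = y.mean(), y.var()               # crude estimates of trend and process variance
    L = np.linalg.cholesky(corr(X, X) + nugget * np.eye(len(X)))
    w = np.linalg.solve(L.T, np.linalg.solve(L, y - mu0))
    r = corr(Xq, X)
    v = np.linalg.solve(L, r.T)
    mean = mu0 + r @ w
    var = s2 * np.clip(1.0 - (v ** 2).sum(axis=0), 1e-12, None)
    return mean, np.sqrt(var)

def phi_toy(theta):
    """Hypothetical cheap fidelity function on [0, 1]^2 standing in for co-simulations."""
    return 3.0 - 4.0 * theta[:, 0] ** 2 - 2.0 * theta[:, 1]

phi_adm = 1.0
rng = np.random.default_rng(0)
candidates = rng.random((20_000, 2))          # Monte Carlo population of Theta (uniform here)
X = rng.random((10, 2))                        # initial experimental design
y = phi_toy(X)
for _ in range(30):                            # enrichment iterations, K = 1 sample each
    mu, sigma = kriging(X, y, candidates)
    pm = norm.cdf(-np.abs(mu - phi_adm) / sigma)   # probability of misclassification
    if pm.max() < 1e-3:                        # stop when misclassification is unlikely everywhere
        break
    new = candidates[[np.argmax(pm)]]
    X = np.vstack([X, new])
    y = np.append(y, phi_toy(new))
mu, _ = kriging(X, y, candidates)
pf_surrogate = np.mean(mu < phi_adm)           # Monte Carlo on the surrogate
pf_reference = np.mean(phi_toy(candidates) < phi_adm)
```

In a real application, the hyperparameters would be re-estimated at each iteration (e.g. by maximum likelihood), and the candidate population would be drawn from fΘ rather than from a uniform law.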

Step 4

The PCE and PCK surrogate models established in Steps 2 and 3, respectively, can also be used to perform online, or a posteriori, fidelity assessment of a test based on measured or estimated values of θ. The PCK model is a good classifier when θ is near the boundary of \(\mathcal {D}_{f}\), and is adequate if we wish to know whether sufficient fidelity has been achieved. When θ lies in a region of high probability density, the PCE model can be used to estimate the value of the achieved fidelity.

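Note also that, using the surrogate models defined above, an optimal control system design can be found as the one minimizing some cost function c(θ), representing e.g. the cost of the control system (which increases with its performance), while maximizing the fidelity, under a hard constraint on the minimum fidelity:

\[ \theta^{*} = \operatorname*{arg\,min}_{\theta \in \mathcal{D}} \big( w_1\, c(\theta) - w_2\, \varphi(\theta) \big) \quad \text{subject to} \quad \varphi(\theta) \geq \varphi_{adm}, \tag{14} \]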

where w1 and w2 are positive weights.
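A minimal Python sketch of this constrained design problem follows, using SciPy's SLSQP solver with the fidelity constraint expressed as an inequality. The two-parameter surrogate `phi_surrogate`, the cost function `cost`, the weights and φadm are hypothetical. For this convex toy problem the fidelity constraint is active at the optimum and the solution can be checked by hand: the optimality conditions give θ2 = θ1/√2, and 10θ1 + 20θ2 = 0.5 then yields θ ≈ (0.0207, 0.0146).

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical two-parameter example: theta = (noise standard deviation, residual delay [s])
def phi_surrogate(theta):
    """Stand-in for a PCE/PCK surrogate of the fidelity (not a result of the paper)."""
    return 3.0 - 10.0 * theta[0] - 20.0 * theta[1]

def cost(theta):
    """Hypothetical control-system cost: better components (smaller artefacts) cost more."""
    return 1.0 / theta[0] + 1.0 / theta[1]

w1, w2, phi_adm = 0.01, 1.0, 2.5
res = minimize(
    lambda th: w1 * cost(th) - w2 * phi_surrogate(th),   # weighted cost minus weighted fidelity
    x0=np.array([0.05, 0.05]),
    method="SLSQP",
    bounds=[(1e-3, 0.1), (1e-3, 0.1)],                   # domain D of the artefact parameters
    constraints=[{"type": "ineq",                        # SLSQP convention: fun(theta) >= 0
                  "fun": lambda th: phi_surrogate(th) - phi_adm}],
)
theta_opt = res.x
```

Because the surrogate is cheap, the same problem can be solved repeatedly for different weights to explore the trade-off between cost and fidelity.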

In the present work, the surrogate models have been established by using the UQLab software [22]. The reader is referred to [23] for the documentation of UQLab related to PCE, to [24] for sensitivity analysis, to [25] for PCK, and to [26] for rare events estimation, and Adaptive Kriging in particular.

Example: Active Truncation of a Slender Marine Structure

During the design of a floating structure (such as an oil production platform or a floating wind turbine), hydrodynamic model testing is usually performed at a scale λ < 1 to validate the numerical models used by the designer, to study specific complex physical phenomena, and eventually to perform a final verification of the design [27]. Slender marine structures such as mooring lines, risers, or power cables connect the floating structure to the sea bed. Testing a structure in ultradeep water at a typical scale λ = 1/60 would require a laboratory with a diameter of at least 100 m and a water depth of 50 m, which is much larger than any of the existing ocean basins.

Active truncation of the slender marine structures is one of the possible ways of alleviating this issue [11, Chapter 4]. The truncated part of the slender marine structure, denoted Σ1 and depicted in blue in Fig. 10, becomes a numerical substructure, simulated using the finite element method. The connection between the physical substructure (Σ2, in red) and the numerical substructure happens through a set of sensors and actuators, located at the truncation point, in other words on the floor of the hydrodynamic laboratory. In this section, we will investigate the fidelity of the active truncation setup. This will illustrate the capabilities of the generic fidelity analysis method presented in the previous section, when multiple, heterogeneous and random artefacts are involved.

We consider a simple taut polyester mooring line, as depicted in Fig. 10. The properties of the mooring line are given in Table 1. Without loss of generality, we assume that the problem is two-dimensional, and we define a direct x-z coordinate system, whose z axis is vertical and pointing upwards. The water depth is D = 1200 m. The bottom of the hydrodynamic laboratory, where sensors and actuators are installed, is located at a water depth of (1 − α)Dλ = 4 m. Active truncation is to be performed as shown in Fig. 10, with a truncation ratio α = 0.8 (proportion of the depth that is covered by the numerical model).
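As a check of the figures above, the model-scale depth of the basin floor and the corresponding full-scale lengths follow directly from D, α and λ:

```latex
\begin{aligned}
(1-\alpha)\,D\,\lambda &= (1-0.8)\times 1200\ \mathrm{m}\times\tfrac{1}{60} = \tfrac{240\ \mathrm{m}}{60} = 4\ \mathrm{m},\\
\alpha\,D &= 0.8\times 1200\ \mathrm{m} = 960\ \mathrm{m}\quad\text{(full-scale length covered by the numerical substructure)},\\
D\,\lambda &= \tfrac{1200\ \mathrm{m}}{60} = 20\ \mathrm{m}\quad\text{(basin depth needed without truncation)}.
\end{aligned}
```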

The fidelity of active truncation is evaluated by studying the response of this slender marine structure to an external excitation τ(t) with a duration T = 200 s. This load is designed to be representative, in terms of amplitude, frequency content and direction, of a severe load that can be encountered during the testing of a floating system. The dynamic part of τ(t) represents wave loads transferred from the floater to the slender structure, and is therefore applied to the top of the line. It has two components. The first, low-frequency component mainly acts axially, has an amplitude of 1 MN and a frequency content sweeping [0, 0.02] Hz. It mimics the effect of second-order difference-frequency wave loads on the floater. The superimposed wave-frequency component has an amplitude of 250 kN, a frequency content sweeping [0, 0.2] Hz, and a direction with a constant rate of change. Time series of the described top load can be seen in the upper plot of Fig. 14. This dynamic load comes in addition to the static top tension applied to the slender structure (see Table 1), and to the drag load associated with a shear current, whose velocity varies linearly throughout the water column from 0 m/s at the seabed to 0.5 m/s at the free surface.
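The following Python sketch generates a dynamic top load with the features listed above, under assumptions that are ours: both components are linear sweeps starting at 0 Hz, the low-frequency component acts along a fixed "axial" direction `axial_angle`, and the direction of the wave-frequency component rotates at a constant rate `turn_rate`. Both angles, the time step and the function name `top_load` are hypothetical, and the static top tension and current drag are not included.

```python
import numpy as np

def top_load(T=200.0, dt=0.05, axial_angle=np.deg2rad(45.0), turn_rate=np.deg2rad(0.9)):
    """Hypothetical dynamic top load tau(t) = (tau_x, tau_z) in newtons."""
    t = np.linspace(0.0, T, int(round(T / dt)) + 1)
    # Linear sweep from 0 Hz to f_max: f(t) = f_max t / T, so the phase is pi f_max t^2 / T
    lf = 1.0e6 * np.sin(np.pi * 0.02 * t ** 2 / T)      # 1 MN, sweeping [0, 0.02] Hz
    wf = 250.0e3 * np.sin(np.pi * 0.2 * t ** 2 / T)     # 250 kN, sweeping [0, 0.2] Hz
    wf_dir = axial_angle + turn_rate * t                 # direction with constant rate of change
    tau_x = lf * np.cos(axial_angle) + wf * np.cos(wf_dir)
    tau_z = lf * np.sin(axial_angle) + wf * np.sin(wf_dir)
    return t, np.column_stack([tau_x, tau_z])

t, tau = top_load()
```

Sweeping the frequency over the duration of the test exposes the setup to the whole frequency band of interest within a single run, which keeps the number of co-simulations per sample of Θ to one.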

The force components fx and fz, originating from the physical part of the line Σ2 at the truncation point are measured by two independent force sensors. An actuator prescribes the velocity (vx, vz) of the truncation point, computed from the simulation of Σ1.

We now focus on the definition of the fidelity indicator φ for the active truncation problem. Hydrodynamic model test campaigns generally focus on the behaviour of the floater and on extreme tensions in the slender marine structures, but not on their local deflection or curvature. The objective is therefore to make the interactions between the truncated slender marine structure, the (physical) floater and the (numerical) sea bottom reflect the corresponding interactions in a fully physical setup. Based on this reasoning, two fidelity indicators are suggested. Let Vx,top and Vz,top be the components of the top velocity of the slender structure when it is subjected to τ(t), and Fx,bottom and Fz,bottom the components of the force vector at its lower end. These four values are calculated by co-simulation of the substructured system, subjected to artefacts described by a parameter θ that will be defined later on. Letting \(\bar {V}_{top}\) and \(\bar {F}_{bottom}\) be their ideal counterparts, obtained by simulation of the system without artefacts, the first fidelity indicator can be written, consistently with equation (6), as

where

φ1 quantifies how well the top end of the structure responds to the prescribed external load τ, and thus how well the substructured system manages to replicate the mechanical impedance of the slender structure. φ1 is therefore important when motions of the floater are investigated.

The second fidelity indicator is defined by

where

φ2 quantifies how well the external load is transferred to the sea bottom, and is therefore more relevant when the focus is on the loads at the anchors. If both aspects are important, φ1 and φ2 could easily be combined into a single indicator. In the following, we use φ to designate either φ1 or φ2, for conciseness.

Let us now move to the artefacts describing the control system. As shown in Fig. 11, ten individual artefacts, parametrized by M = 12 parameters, are assumed to affect the setup. Each component of the force measurement is assumed to be contaminated by calibration error (gain), bias, and noise. In the acquisition process, the force signals can be delayed, or lost, before entering the numerical substructure. A signal loss artefact, affecting the output of the numerical substructure, models the fact that nonlinear iterations in the numerical substructure may not complete on time. An additional delay on the actuation side models computation and communication processes. It is assumed that some delay-compensation techniques have been applied, see e.g. [28], so that only values of the residual delay are considered here.

The artefacts and their probabilistic description are summarized in Table 2. Estimates of upper bounds, lower bounds, mean values, or standard deviations of the θi parameters were obtained from the experimental work reported in [28], and the maximum entropy principle was used to define \(f_{\Theta}(\theta)\).

Sampling and Uncertainty Propagation

As described in Section “Analysis”, the first step is to sample Θ and φ(Θ). A set \(\mathcal {E}\) of N = 512 samples of Θ is generated with the space-filling Latin Hypercube Sampling (LHS) method, and φ(θ) is evaluated by co-simulation for each sample in \(\mathcal {E}\). These co-simulations can be rather time-consuming. They involve iterations (1) to handle nonlinearities when simulating the dynamics of each substructure, and (2) to achieve equilibrium and compatibility between the substructures while accounting for the artefacts. This is detailed in [11, Chapter 4]. However, the N co-simulations are independent of each other and can therefore be performed in parallel.
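Below is a minimal Latin Hypercube Sampling sketch that uses only NumPy. Each of the M dimensions is divided into N equal-probability strata, and each stratum receives exactly one point. The order of the strata is shuffled independently for each dimension. The unit-cube samples are then mapped to the artefact parameters through the inverse CDFs of their marginals, which assumes independent components. The uniform range used for θ1 below is hypothetical and is not the distribution of Table 2. This basic variant does not optimize the space-filling property (e.g. with a maximin criterion), which the design used in the study may do.

```python
import numpy as np


def latin_hypercube(n, m, rng=None):
    """Return an (n, m) Latin Hypercube sample on the unit cube [0, 1)^m.

    Each column is split into n equal strata; every stratum receives exactly
    one point, drawn uniformly inside it, and the order of the strata is
    permuted independently for each column.
    """
    rng = np.random.default_rng(rng)
    jitter = rng.random((n, m))                       # position inside the stratum
    strata = np.column_stack([rng.permutation(n) for _ in range(m)])
    return (strata + jitter) / n


# N = 512 samples of M = 12 parameters, as for the set E.
U = latin_hypercube(512, 12, rng=0)

# Map to physical values through inverse CDFs (independent marginals assumed).
# Hypothetical example: theta_1 uniform on [-0.05, 0.05].
theta1 = -0.05 + 0.10 * U[:, 0]
```

Each row of `U` then defines one co-simulation. Since the rows are independent, they can be distributed over parallel workers.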

Figure 12 shows scatter diagrams of φ1 plotted against each component of θ, i.e. representations in “slices” of the 12-dimensional space containing θ. From this representation, it is rather difficult to identify clear trends in the behaviour of φ, or to see how interactions between components of θ influence this behaviour. This is where the Sobol’ indices prove useful.

Scatter diagrams showing the value of φ1 (fidelity indicator based on the top velocity of the line) as a function of the twelve parameters describing the artefacts. The dots correspond to the N = 512 samples of Θ obtained by Latin Hypercube Sampling (set denoted \(\mathcal {E}\) in the text). The samples leading to the highest and lowest fidelity are represented by red triangles.

The distributions of φ1 and φ2 (and of an additional indicator φ3, introduced later on), estimated from \(\mathcal {E}\), are plotted in Fig. 13. Quantile-quantile plots (not shown here) show that these distributions deviate significantly from Gaussian distributions. They have heavier tails, which makes it difficult to estimate probabilistic robustness from the sample set \(\mathcal {E}\) alone. This is where the Adaptive Kriging process comes into play.

Based on the initial set \(\mathcal {E}\), a Polynomial Chaos Expansion (PCE) of φ(Θ), denoted \(\hat {\varphi }\), is established. It consists of 58 orthogonal polynomial terms (Footnote 4) that best represent φ, in the sense that they minimize an error metric based on \(\mathcal {E}\). The values of E[φ(Θ)] and Var[φ(Θ)] estimated from the coefficients of the PCE agree with those estimated from \(\mathcal {E}\) directly, and are equal to:
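The sketch below shows how a PCE yields E[φ(Θ)] and Var[φ(Θ)] directly from its coefficients. It assumes independent inputs rescaled to be uniform on [-1, 1], so that orthonormal Legendre polynomials form the basis. With an orthonormal basis, the mean is the coefficient of the constant term, and the variance is the sum of the squares of the remaining coefficients. Unlike the 58-term expansion above, this sketch fits a full total-degree basis by ordinary least squares and performs no term selection. The function names and the toy model are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def total_degree_indices(m, p):
    """All multi-indices alpha of length m with alpha_1 + ... + alpha_m <= p."""
    if m == 1:
        return [(d,) for d in range(p + 1)]
    return [(d,) + rest for d in range(p + 1)
            for rest in total_degree_indices(m - 1, p - d)]


def orthonormal_legendre(x, n):
    """Degree-n Legendre polynomial, normalized to unit variance for x ~ U(-1, 1)."""
    coef = np.zeros(n + 1)
    coef[n] = 1.0
    return np.sqrt(2 * n + 1) * legendre.legval(x, coef)


def design_matrix(X, alphas):
    """Psi[k, j] = product over i of psi_{alpha_j[i]}(X[k, i])."""
    Psi = np.ones((X.shape[0], len(alphas)))
    for j, alpha in enumerate(alphas):
        for i, deg in enumerate(alpha):
            if deg > 0:
                Psi[:, j] *= orthonormal_legendre(X[:, i], deg)
    return Psi


def fit_pce(X, y, p):
    """Ordinary least-squares fit on the full total-degree basis of degree p."""
    alphas = total_degree_indices(X.shape[1], p)
    coeffs, *_ = np.linalg.lstsq(design_matrix(X, alphas), y, rcond=None)
    return alphas, coeffs


def pce_moments(alphas, coeffs):
    """Mean = constant-term coefficient; variance = sum of other squared coefficients."""
    const = alphas.index((0,) * len(alphas[0]))
    return coeffs[const], float(np.sum(np.delete(coeffs, const) ** 2))


# Toy model with known moments: y = x1 + x1*x2, mean 0, variance 1/3 + 1/9 = 4/9.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = X[:, 0] + X[:, 0] * X[:, 1]
alphas, coeffs = fit_pce(X, y, p=2)
mean, var = pce_moments(alphas, coeffs)
```

Because the toy model lies in the span of the basis, the fitted moments match the exact values up to round-off. For a real response, both the moments and the agreement with sample estimates from \(\mathcal {E}\) depend on the truncation of the basis.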

For illustration, Fig. 14 shows the realization of Θ leading to the median value of φ1, for which φ1 = 1.33 and φ2 = 1.62. In light of equations (21) and (22), this realization corresponds to an average fidelity if the behaviour of the top of the line is of interest, and to a rather poor fidelity if the objective is to reproduce the bottom force correctly.

Co-simulation of active truncation with a set of artefacts leading to the median value of φ1. For this realization, the measurement of fx (resp. fz) is affected by a -0.3% (resp. -3%) calibration error, a -0.012 N (resp. 0.28 N) bias, and noise with a standard deviation of 0.040 N (resp. 0.037 N), in model scale. The force measurement is delayed by 2.6 ms, and has a probability of signal loss of 7.5%, with a duration parameter of 0.47, which corresponds to frequent and short periods of signal loss. On the actuation side, the delay is 1.3 ms, and the probability of occurrence and the duration parameter of signal loss are 6.8% and 0.17, respectively. The resulting fidelity indicators are φ1 = 1.33 and φ2 = 1.62.

Note that this uncertainty propagation analysis does not, by itself, assess whether the expected fidelity of the active truncation setup at hand is sufficiently high. The minimum admissible fidelity depends on the intended use of the empirical data, and is to be assessed by the designer of the active truncation setup. Lower fidelity values might be tolerated in early-stage conceptual studies of floating systems, for instance, while high fidelity would be required for final verification tests or for the validation of numerical methods.

Sensitivity Analysis

The sensitivity analysis aims at identifying the artefacts that contribute most to the uncertainty in the fidelity, estimated in equations (21) and (22). This provides a rational course of action to reduce this uncertainty, but also to improve the expected fidelity if it is deemed too low.

In low-dimensional cases (i.e. small M), and particularly when the number of samples in \(\mathcal {E}\) is large, visual inspection of scatter diagrams such as Fig. 12 enables one to determine directly which artefact parameters affect the fidelity most. This inspection-based method becomes less reliable in high dimensions, however, as in the present case with M = 12. Sobol’ sensitivity indices can be used instead, and derived directly from \(\hat {\varphi }\). Before looking at the Sobol’ indices, recall that the absolute values of the total Sobol’ indices \(S_{T,i}\) are of secondary importance: the \(S_{T,i}\) should be compared to each other to identify the most influential artefact parameters. Furthermore, \(S_{T,i}\) can be compared to the first-order Sobol’ index \(S_i\), to understand whether the artefact parameter Θi influences the variance of φ(Θ) alone, or through interactions with one or several other parameters Θj.
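For an orthonormal PCE, the Sobol’ indices follow directly from the coefficients (see e.g. [18]). The first-order index \(S_i\) sums the squared coefficients of the terms that depend on θi only. The total index \(S_{T,i}\) sums those of all terms involving θi. Both are normalized by the total variance. The sketch below assumes that the multi-indices and coefficients are available as arrays, and the function names are hypothetical. It also computes a second-order index, which quantifies pairwise interactions such as the one between θ1 and θ2 discussed below.

```python
import numpy as np


def sobol_from_pce(alphas, coeffs):
    """First-order and total Sobol' indices of an orthonormal PCE.

    alphas: (P, M) multi-indices; coeffs: (P,) expansion coefficients.
    """
    active = np.asarray(alphas) > 0              # variables used by each term
    c2 = np.asarray(coeffs, dtype=float) ** 2
    D = c2[active.any(axis=1)].sum()             # total variance
    single = active.sum(axis=1) == 1             # terms in one variable only
    m = active.shape[1]
    S = np.array([c2[single & active[:, i]].sum() for i in range(m)]) / D
    ST = np.array([c2[active[:, i]].sum() for i in range(m)]) / D
    return S, ST


def sobol_pair_from_pce(alphas, coeffs, i, j):
    """Second-order index S_ij: terms involving exactly theta_i and theta_j."""
    active = np.asarray(alphas) > 0
    c2 = np.asarray(coeffs, dtype=float) ** 2
    D = c2[active.any(axis=1)].sum()
    pair = active[:, i] & active[:, j] & (active.sum(axis=1) == 2)
    return c2[pair].sum() / D


# Toy orthonormal PCE of phi = t1 + t1*t2 with t1, t2 ~ U(-1, 1):
# t1 = psi_1(t1)/sqrt(3) and t1*t2 = psi_1(t1)*psi_1(t2)/3.
alphas_demo = [[0, 0], [1, 0], [1, 1]]
coeffs_demo = [0.0, 1.0 / np.sqrt(3.0), 1.0 / 3.0]
S, ST = sobol_from_pce(alphas_demo, coeffs_demo)          # S = [0.75, 0], ST = [1, 0.25]
S01 = sobol_pair_from_pce(alphas_demo, coeffs_demo, 0, 1)  # 0.25
```

In the toy case, θ2 has no first-order effect but a total index of 0.25. This is exactly the kind of gap between \(S_i\) and \(S_{T,i}\) that reveals an interaction.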

Let us first outline the main conclusions that can be drawn from the total Sobol’ indices \(S_{T,i}\), represented by grey “background” bars in Fig. 15. The fidelity indicator based on the top velocity response, φ1, is very sensitive to θ9 (the duration parameter of signal loss on the force signal) and to the calibration errors of the fx and fz measurements (θ1 and θ2). φ1 is much less sensitive to the other θi, and clearly insensitive to noise (described by θ5 and θ6). Turning to the bottom force, φ2 is mostly sensitive to θ1, then to θ2 (calibration errors), and to a much lesser extent to the biases θ3 and θ4, which have comparable total Sobol’ indices. φ2 is slightly sensitive to θ9, the duration parameter for signal loss on the force measurement, and insensitive to the other θi parameters.

Physical Interpretation

We will now relate these results, obtained by our systematic and data-driven approach, to their physical causes.

It is clear from Fig. 14 that the noise affecting force measurements (parametrized by θ5 and θ6) induces a significant velocity response at the truncation point. This response is, however, filtered mechanically by hydrodynamic drag forces and, to a lesser extent, by structural damping before reaching the top and bottom of the mooring line. Therefore, noise does not significantly affect the fidelity indicators φ1 and φ2. The fact that the \(S_{T,i}\) associated with this artefact are negligible means that the corresponding parameters θ5 and θ6 (noise variances) could have been set to deterministic values (here, zero) without affecting the variance of φ.

The force sensor feeds the numerical substructure, while the actuator drives the bottom part of the physical substructure, whose response directly enters the definition of φ1 in equation (15). A natural question when looking at Fig. 15 is then why the top velocity (and hence φ1) is more sensitive to signal loss when it acts on the force sensor (duration parameter θ9) than when it acts on the velocity actuation (parameter θ12). The reason is the following. When signal loss on the velocity command occurs, the velocity of the truncation point remains constant. Signal loss on the force sensor, by contrast, may cause large variations of the truncation point’s velocity, due to an interaction with the numerical substructure (discussed in detail in [11, Chapter 4]). Both the amplitude and the duration of these perturbations increase with the characteristic duration of signal loss, which enhances their propagation to the top of the mooring line.

The fact that φ2 is more sensitive to θ1 (calibration error on the fx measurement) than to its counterpart θ2 (acting on fz) can be explained as follows. Transverse motions of the mooring line are subjected to hydrodynamic drag damping forces, while axial motions are only damped by structural damping. Transverse motions are therefore subjected to a significantly higher level of damping than axial motions. Consequently, an axial dynamic force error will be less damped than its transverse counterpart. Since the mooring line forms an angle γ = 39.2° with the x-axis at the truncation point, axial forces have an x-component larger than their z-component. A calibration error on fx (parametrized by θ1) therefore plays a greater role for φ2 than a calibration error on fz (parametrized by θ2).
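The geometric part of this argument can be checked with a short back-of-the-envelope calculation. It assumes, as the paragraph does, that the dynamic force at the truncation point is essentially axial, and it treats a calibration error as a relative gain on one measured component. For a force F(cos γ, sin γ), a gain error ε on fx adds the error vector εF(cos γ, 0), whose axial projection is εF cos²γ. The same error on fz gives εF sin²γ. This is a static, geometric estimate only and ignores the line dynamics captured by the Sobol’ analysis. The variable names and the values of ε and F are illustrative.

```python
import math

gamma = math.radians(39.2)    # angle between the line and the x-axis at the truncation point
ex, ez = math.cos(gamma), math.sin(gamma)   # components of the axial unit vector

# A relative calibration error eps on fx (resp. fz) adds an error vector
# eps*F*(ex, 0) (resp. eps*F*(0, ez)) to a purely axial force F*(ex, ez).
# Projecting that error vector onto the axial direction gives:
eps, F = 0.01, 1.0            # illustrative values only
axial_err_fx = eps * F * ex * ex
axial_err_fz = eps * F * ez * ez
ratio = axial_err_fx / axial_err_fz   # = cot(gamma)**2, roughly 1.5 for gamma = 39.2 deg
```

For the same relative error, an fx calibration error thus feeds about 1.5 times more into the weakly damped axial direction than an fz error does.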

Also, as explained earlier, total Sobol’ indices and first-order indices differ when there is a non-additive interaction between two (or more) θi in φ. The nature of this interaction can be determined by considering higher-order Sobol’ indices (not shown here). We found, for example, that the interaction between θ1 and θ2 explains ≈ 20% of the variance of φ1, and ≈ 15% of the variance of φ2. This is because, when θ1 and θ2 differ significantly from each other, not only the amplitude but also the direction of the force at the truncation point is affected. Since the stiffness and damping properties of the line are strongly anisotropic, as explained earlier, this change in direction has a significant effect on the fidelity.
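The gap between first-order and total indices can be illustrated on a hypothetical toy response that, like the mechanism described above, depends only on the mismatch between two calibration errors. The sketch uses plain Monte Carlo pick-freeze estimators rather than the PCE post-processing used in this study: a Saltelli-type estimator for \(S_i\) and Jansen's estimator for \(S_{T,i}\). The three inputs are independent and uniform on [-1, 1], and `toy_phi` and `sobol_mc` are hypothetical names. For this toy model, the exact values are S1 = S2 = 1/7 and ST,1 = ST,2 = 6/7. The interaction between the first two inputs therefore carries 5/7 of the variance, while the third input is inert.

```python
import numpy as np


def toy_phi(theta):
    """Hypothetical toy response: depends only on the mismatch between the two
    calibration errors theta[:, 0] and theta[:, 1]; theta[:, 2] has no effect."""
    return (theta[:, 1] - theta[:, 0]) ** 2


def sobol_mc(f, m, n, seed=None):
    """Pick-freeze Monte Carlo estimates of first-order (Saltelli-type) and
    total (Jansen) Sobol' indices for independent inputs uniform on [-1, 1]."""
    rng = np.random.default_rng(seed)
    A = rng.uniform(-1.0, 1.0, size=(n, m))
    B = rng.uniform(-1.0, 1.0, size=(n, m))
    fA, fB = f(A), f(B)
    var = np.var(np.concatenate([fA, fB]))
    S, ST = np.empty(m), np.empty(m)
    for i in range(m):
        ABi = A.copy()
        ABi[:, i] = B[:, i]                  # resample theta_i only
        fABi = f(ABi)
        S[i] = np.mean(fB * (fABi - fA)) / var
        ST[i] = 0.5 * np.mean((fA - fABi) ** 2) / var
    return S, ST


S, ST = sobol_mc(toy_phi, m=3, n=200_000, seed=0)
interaction_share = 1.0 - S.sum()            # exact value 5/7 for this toy model
```

Such estimators need many model evaluations: (M + 2)N in total, here 1,000,000 calls to the toy function. This is why, for expensive co-simulations, deriving the indices from a surrogate such as \(\hat {\varphi }\) is attractive.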

From Fig. 15, we also see that the biases have a noticeable influence on φ1 and φ2 (total Sobol’ indices), and that this influence is due to interactions (\(S_3 \ll S_{T,3}\) and \(S_4 \ll S_{T,4}\) in both cases). The mechanisms at play are slightly different for φ1 and φ2. The interested reader may consult [11, Chapter 5] for more details.

In this section, we have shown how the results of the systematic sensitivity study, based on PCE and Sobol’ indices, lead to the identification of the most critical artefacts for this problem. They also provide insight into the complex coupling between the control system and the mooring line dynamics. Note that time delays, which have received much attention in the CPEM literature, do not play a major role for the present setup.

Reliability Analysis

While the previous subsections addressed (RQ1), described in Section “Fidelity of a CPEM”, the present section will exemplify how probabilistic robust fidelity of the active truncation setup can be assessed (RQ2), and how the fidelity bounds of the considered setup could be established (RQ3). In this section, we will assume that the interaction of the top of the line with the floater is of interest, and consider only the fidelity indicator φ1.

As a design choice, we set the minimum admissible fidelity for this setup to φadm = 0.8, and we will consider that probabilistic robust fidelity is achieved if

From Fig. 12, we see that none of the samples of Θ in the initial set \(\mathcal {E}\) leads to a fidelity lower than φadm. However, this set contains only N = 512 samples, which is too few to assess confidently whether equation (23) holds. Furthermore, as noted when commenting on Fig. 13, the distribution of φ1 has heavy tails, so extrapolating the empirical distribution to lower quantiles is hazardous.
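To quantify why N = 512 samples are insufficient, recall that the crude Monte Carlo estimator of a probability p has a coefficient of variation of \(\sqrt{(1-p)/(Np)}\). The sketch below evaluates it for p = 3.6 × 10⁻⁴, the order of magnitude of Pf reported later in this section, used here purely for illustration. With N = 512, the expected number of samples falling below φadm is only about 0.18, and the coefficient of variation is about 2.3. Reaching 10% would require roughly 2.8 × 10⁵ co-simulations. The function names are hypothetical.

```python
import math


def mc_cov(p, n):
    """Coefficient of variation of the crude Monte Carlo estimate of probability p."""
    return math.sqrt((1.0 - p) / (n * p))


def samples_for_cov(p, target_cov):
    """Smallest n for which the crude Monte Carlo CoV is at most target_cov."""
    return math.ceil((1.0 - p) / (p * target_cov ** 2))


p_f = 3.6e-4                            # illustrative, order of magnitude of Pf
expected_hits = 512 * p_f               # about 0.18 samples expected below phi_adm
cov_512 = mc_cov(p_f, 512)              # about 2.3
n_needed = samples_for_cov(p_f, 0.10)   # roughly 2.8e5 samples
```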

The Adaptive Kriging method is applied to address this problem. At each step of the enrichment process, a batch of K = 16 new samples of Θ is added, and the corresponding K co-simulations and evaluations of φ(θ) are performed in parallel.

Starting from a subset \(\mathcal {E}^{\prime }\) of \(\mathcal {E}\) containing 256 samples of Θ, the first enrichment step is taken. In this step, the PCK surrogate model established from \(\mathcal {E}^{\prime }\) is used to evaluate, through the probability of misclassification, where φ1 may be close to φadm and/or is highly uncertain. As illustrated in Fig. 16, the regions of \(\mathcal {D}\) associated with a large Pm are found where the ratio θ2/θ1 deviates significantly from unity, where θ8 is small, and, to some extent, where θ7 and θ10 are simultaneously large. In physical terms, this corresponds to three situations. The first is a distortion of the measured force angle, and the second is long periods of signal loss on the force sensor; both have a significant influence on the fidelity, as discussed in Section “Sensitivity Analysis”. The third is significant delays in the loop, which may yield an unstable, and thus low-fidelity, system. Since the K new samples are chosen by a clustering algorithm, they are well spread over the limit-state margin \({\mathscr{L}}\). These new samples are added to \(\mathcal {E}^{\prime }\), a new PCK model is established, K new samples are selected by AK, and so on at each enrichment step.
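Below is a minimal sketch of one enrichment step, under stated assumptions. The surrogate is taken to return a Gaussian prediction with mean μ(θ) and standard deviation σ(θ). The probability of misclassification is written Pm = Φ(−|μ − φadm|/σ), a form commonly used in adaptive Kriging reliability methods. Candidates with Pm > 0.45, the threshold quoted for Fig. 16, form the margin. A plain k-means groups them into K clusters, and the candidate nearest to each centroid is retained. The functions, the candidate pool, and the synthetic μ and σ are hypothetical, and the clustering used in the actual study may differ.

```python
import math
import numpy as np


def prob_misclassification(mu, sigma, phi_adm):
    """Pm = Phi(-|mu - phi_adm| / sigma), with Phi the standard normal CDF."""
    u = np.abs(np.asarray(mu, dtype=float) - phi_adm) / np.maximum(sigma, 1e-12)
    return 0.5 * np.vectorize(math.erfc)(u / math.sqrt(2.0))   # Phi(-u)


def kmeans_centroids(points, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means; returns the k centroids."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].copy()
    for _ in range(n_iter):
        d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)
    return centers


def select_batch(candidates, mu, sigma, phi_adm, k=16, pm_threshold=0.45, seed=0):
    """Pick k well-spread candidates in the margin where Pm > pm_threshold."""
    pm = prob_misclassification(mu, sigma, phi_adm)
    margin = candidates[pm > pm_threshold]
    if len(margin) < k:                       # fallback: the k largest Pm values
        margin = candidates[np.argsort(pm)[-k:]]
    centers = kmeans_centroids(margin, k, seed=seed)
    # Keep the margin candidate closest to each centroid (duplicates are
    # possible in principle, though unlikely for well-separated clusters).
    d2 = ((margin[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return margin[d2.argmin(axis=0)]


# Hypothetical surrogate output on a pool of 12-dimensional candidates.
rng = np.random.default_rng(0)
candidates = rng.random((20_000, 12))
mu = 0.5 + candidates[:, 0]                 # hypothetical predictive mean of phi_1
sigma = np.full(len(candidates), 0.1)       # hypothetical predictive std
batch = select_batch(candidates, mu, sigma, phi_adm=0.8, k=16)
```

Pm reaches its maximum of 0.5 where μ = φadm. A threshold just below 0.5 therefore keeps only points that the current surrogate cannot confidently classify as safe or failed. Clustering then prevents the K new co-simulations from piling up in one region.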

Illustration of the first enrichment step. The grey dots represent the samples in the initial set \(\mathcal {E}\). The blue dots correspond to areas of the twelve-dimensional space with a large probability of misclassification (Pm > 0.45). The red diamonds represent the K = 16 samples selected by the clustering algorithm at this step.

The evolution of the estimated probability of failure Pf during the enrichment process is shown in Fig. 17. From the initial sample set (256 samples), Pf is estimated at 2.5 × 10⁻² > εadm. After about 50 enrichment steps, in which the accuracy of the PCK model near \({\mathscr{L}}\) is improved, Pf stabilizes at 3.6 × 10⁻⁴ < εadm, which confirms the robust fidelity of this setup. Note that even for rather low values of Pf, and even though the problem is twelve-dimensional, the total number of required enrichment steps remains of the order of 10².

Fidelity vs Local Performance Indicators

Considering exactly the same truncation setup and set of artefacts as in the previous section, we now introduce another indicator denoted φ3, which quantifies the mechanical power mismatch between the numerical and physical substructures at the truncation point. This indicator is defined as

where

and where \(f_i\) and \(v_i\) denote the force and velocity vectors at the truncation point for the numerical substructure Σ1 and the physical substructure Σ2, respectively. In other words, considering the plots on the second and third rows of Fig. 14, φ3 quantifies the mismatch between the power obtained from the red curves and that obtained from the blue curves. This type of indicator has been used in the analysis of several CPEMs in the past [11, Chapter 1].

We qualify this performance indicator φ3 as “local”, since it penalizes a loss of compatibility and equilibrium at the truncation point without considering the behaviour of other parts of the substructures. It differs considerably from φ1 and φ2: it is not based on a quantity of direct interest for the experiment, nor does it use the emulated system (without artefacts) as a reference. The indicator φ3 is therefore not a fidelity indicator according to Definition 3. However, φ3 is based on a comparison structure (normalized time integral of the squared error) similar to the one used in φ1 and φ2, which makes the comparison between these three indicators meaningful.

Using the same set \(\mathcal {E}\) as in the previous section and the same PCE-based approach, the first two moments of φ3 are estimated as

By comparing \(E[\hat {\varphi }_{3}({\Theta })]\) to \(E[\hat {\varphi }_{1}({\Theta })]\) and \(E[\hat {\varphi }_{2}({\Theta })]\), given in equations (21) and (22) for the same set of artefacts, it is seen that the error in the transfer of power between the substructures can be significantly larger (Footnote 5) than the resulting errors in the quantities of interest. Furthermore, the coefficient of variation of φ3 is 17%, while it is close to 10% for φ1 and φ2. The local indicator is therefore comparatively more sensitive to the given set of artefacts than the fidelity indicators.

The Sobol’ indices for φ3 are shown in Fig. 15, and can be compared to those for φ1 and φ2. The conclusions drawn from this sensitivity analysis are radically different from those obtained for φ1 and φ2. Indeed, while calibration errors and signal loss on the force sensors were found to be the most influential parameters for φ1 and φ2, they have an insignificant effect on φ3. Instead, φ3 is mostly affected by the artefacts acting on the velocity actuation (time delay and signal loss), which played a very minor role for φ1 and φ2.

The probability that this indicator drops below φadm = 0.8 can be estimated directly from the distribution of φ3, without the need for Adaptive Kriging, since φ3 generally takes lower values. This probability is found to be about 10%, which is significantly larger than εadm.

From this analysis, we can conclude that designing the control system based on the local indicator φ3 would have led to an over-conservative (or possibly unfeasible) design for given performance targets. Furthermore, based on the conclusions of the sensitivity analysis, the designer would have attempted to minimize the artefacts present on the velocity actuation side, which have been shown to be of marginal importance for the fidelity.

Conclusion

We studied the fidelity of cyber-physical empirical methods (CPEMs), with a focus on the control system that interconnects the substructures. An imperfect control system prevents compatibility and equilibrium between the substructures from being fulfilled, thereby altering the properties of the dynamical system under study. This jeopardizes the validity of CPEMs as empirical methods.

To investigate this issue, we formulated a generic model for CPEMs. In this model, the interconnections between the substructures were subjected to multiple, random and heterogeneous artefacts, modeling the imperfect control system, and described by a parameter θ. The effect of the artefacts on the quantities of interest for the experimentalist was quantified by a performance indicator, denoted the fidelity. It was assumed that a probabilistic description of θ was available, based on previous experience or on a dedicated survey.

Based on this problem formulation, we developed a pragmatic method that enables the designer of a CPEM to identify artefacts that play a significant role for the fidelity (RQ1). We also showed how absolute bounds on θ could be established, which guarantee sufficiently high fidelity (RQ3). Associating this result with the probabilistic description of θ enabled us to conclude whether probabilistic robust fidelity of a given CPEM was achieved (RQ2). An overview of the method was given in Fig. 9.

The developed method was illustrated by studying the fidelity of the active truncation of a slender marine structure. In this problem, the substructures had to be described by non-closed-form models, and the setup was subjected to a large number of heterogeneous artefacts (gains, biases, noise, signal losses and time delays), parametrized in a twelve-dimensional space. Strong assumptions would have been required to make this problem tractable with classical analytical methods, which would have weakened the obtained conclusions about fidelity.

Through this case study, we also showed the importance of assessing the considered CPEM through its fidelity, based on the quantities of interest for the experimentalist, rather than through local performance criteria such as the ability of the control system to transfer mechanical power between the substructures.

The computational efficiency of the method for higher-dimensional and high-reliability problems was demonstrated through this example. Global sensitivity results could be obtained with a few hundred simulations, and failure probabilities of the order of 10⁻⁴ could be estimated with fewer than 100 enrichment steps, even though θ takes values in a twelve-dimensional space.

The present fidelity analysis framework is generic and application-agnostic. Further work should target the development of guidelines suggesting relevant τ, γq, and φadm for CPEMs other than active truncation.

Notes

This reference also discusses how to handle artefacts that intrinsically involve randomness, such as noise or signal loss/jitter.

We will, for instance, show in Section “Example: Active Truncation of a Slender Marine Structure” that scrutinizing the power mismatch at the interconnection leads to erroneous conclusions.

Co-simulation of the system under study is not detailed in this paper; the interested reader may consult [11, Chap. 3 and 4].

Recall that a logarithm appears in the definition of the φi.

References

McCrum D, Williams M (2016) An overview of seismic hybrid testing of engineering structures. Eng Struct 118:240–261. https://doi.org/10.1016/j.engstruct.2016.03.039

Korzen M, Magonette G, Buchet P (1999) Mechanical loading of columns in fire tests by means of the substructuring method. Zeitschrift für Angewandte Mathematik und Mechanik 79:S617–S618

Wagg D, Neild S, Gawthrop P (2008) Real-time testing with dynamic substructuring. In: Bursi OS, Wagg D (eds) Modern testing techniques for structural systems, no. 502 in CISM international centre for mechanical sciences. Springer, Vienna, pp 293–342

Filipi Z, Fathy H, Hagena J, Knafl A, Ahlawat R, Liu J, Jung D, Assanis DN, Peng H, Stein J (2006) Engine-in-the-loop testing for evaluating hybrid propulsion concepts and transient emissions-HMMWV case study. Tech. rep., SAE Technical Paper

Misselhorn WE, Theron NJ, Els PS (2006) Investigation of hardware-in-the-loop for use in suspension development. Veh Syst Dyn 44(1):65–81. https://doi.org/10.1080/00423110500303900

Plummer AR (2006) Model-in-the-loop testing. Proceedings of the Institution of Mechanical Engineers Part I: Journal of Systems and Control Engineering 220(3):183–199. https://doi.org/10.1243/09596518JSCE207

Botelho RM, Christenson RE (2014) Mathematical framework for real-time hybrid substructuring of marine structural systems. In: Dynamics of civil structures, vol 4. Springer, pp 175–185

Sauder T, Chabaud V, Thys M, Bachynski EE, Sæther LO (2016) Real-time hybrid model testing of a braceless semi-submersible wind turbine. Part I: the hybrid approach. In: ASME 2016 35th international conference on ocean, offshore and arctic engineering

Edrington CS, Steurer M, Langston J, El-Mezyani T, Schoder K (2015) Role of power hardware in the loop in modeling and simulation for experimentation in power and energy systems. Proc IEEE 103(12):2401–2409. https://doi.org/10.1109/JPROC.2015.2460676

Gawthrop PJ, Wallace MI, Wagg DJ (2005) Bond-graph based substructuring of dynamical systems. Earthquake Engineering & Structural Dynamics. https://doi.org/10.1002/eqe.450

Sauder T (2018) Fidelity of cyber-physical empirical methods. Application to the active truncation of slender marine structures. PhD thesis, Norwegian University of Science and Technology, Trondheim

Sauder T, Marelli S, Larsen K, Sørensen AJ (2018) Active truncation of slender marine structures: influence of the control system on fidelity. Appl Ocean Res 74:154–169. https://doi.org/10.1016/j.apor.2018.02.023

Sauder T, Marelli S, Sørensen AJ (2019) Probabilistic robust design of control systems for high-fidelity cyber-physical testing. Automatica 101:111–119

Fawzi H, Tabuada P, Diggavi S (2014) Secure estimation and control for cyber-physical systems under adversarial attacks. IEEE Trans Autom Control 59(6):1454–1467. https://doi.org/10.1109/TAC.2014.2303233

Drazin PL, Govindjee S, Mosalam KM (2015) Hybrid simulation theory for continuous beams. J Eng Mech 141(7):04015005. https://doi.org/10.1061/(ASCE)EM.1943-7889.0000909

Sobol IM (1993) Sensitivity estimates for nonlinear mathematical models. Math Modell Comput Exper 1(4):407–414

Homma T, Saltelli A (1996) Importance measures in global sensitivity analysis of nonlinear models. Reliab Eng Syst Safety 52(1):1–17

Sudret B (2008) Global sensitivity analysis using polynomial chaos expansions. Reliab Eng Syst Safety 93(7):964–979. https://doi.org/10.1016/j.ress.2007.04.002

Blatman G, Sudret B (2010) An adaptive algorithm to build up sparse polynomial chaos expansions for stochastic finite element analysis. Probab Eng Mech 25(2):183–197. https://doi.org/10.1016/j.probengmech.2009.10.003

Schöbi R, Sudret B, Wiart J (2015) Polynomial-chaos-based Kriging. Int J Uncertain Quantif 5(2)

Dubourg V (2011) Adaptive surrogate models for reliability analysis and reliability-based design optimization. PhD thesis, Université Blaise Pascal, Clermont-Ferrand II

Marelli S, Sudret B (2014) UQLAB: a framework for uncertainty quantification in MATLAB. SIAM conference on uncertainty quantification (ICVRAM) USA

Marelli S, Sudret B (2017) UQLab manual – polynomial chaos expansions. Tech. Rep. UQLab-V1.0-104, Chair of Risk, Safety & Uncertainty Quantification, ETH Zurich

Marelli S, Lamas C, Sudret B, Konakli K (2017) UQLab user manual – sensitivity analysis. Tech. Rep. UQLab-V1.0-106, Chair of Risk, Safety & Uncertainty Quantification, ETH Zurich

Schöbi R, Marelli S, Sudret B (2017) UQLab user manual – PC-Kriging. Tech. Rep. UQLab-V1.0-109, Chair of Risk, Safety & Uncertainty Quantification, ETH Zurich

Marelli S, Schöbi R, Sudret B (2017) UQLab user manual – Structural Reliability. Tech. Rep. UQLab-V1.0-107, Chair of Risk, Safety & Uncertainty Quantification, ETH Zurich

Magee AR (2018) The role of model testing in the execution of deepwater projects. In: Proceedings of the offshore technology conference. Kuala Lumpur

Vilsen S, Sauder T, Sørensen AJ (2017) Real-time hybrid model testing of moored floating structures using nonlinear finite element simulations. In: Dynamics of coupled structures, conference proceedings of the society for experimental mechanics series, vol 4. Springer International Publishing, pp 79–92

Blatman G, Sudret B (2011) Adaptive sparse polynomial chaos expansion based on least angle regression. J Comput Phys 230(6):2345–2367. https://doi.org/10.1016/j.jcp.2010.12.021

Acknowledgments

This work was supported by the Research Council of Norway through the Centres of Excellence funding scheme (project number 223254 - AMOS). SINTEF Ocean is acknowledged for its support to NTNU AMOS. The corresponding author states that there is no conflict of interest.

Funding

Open Access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital - Trondheim University Hospital).

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sauder, T. Fidelity of Cyber-Physical Empirical Methods. Exp Tech 44, 669–685 (2020). https://doi.org/10.1007/s40799-020-00372-x

DOI: https://doi.org/10.1007/s40799-020-00372-x